Angle-Dependent Transport Theory-Based Ray Transfer Function for Non-Contact Diffuse Optical Tomographic Imaging

Abstract

1. Introduction

2. Material and Methods

2.1. Surface Radiation Theory

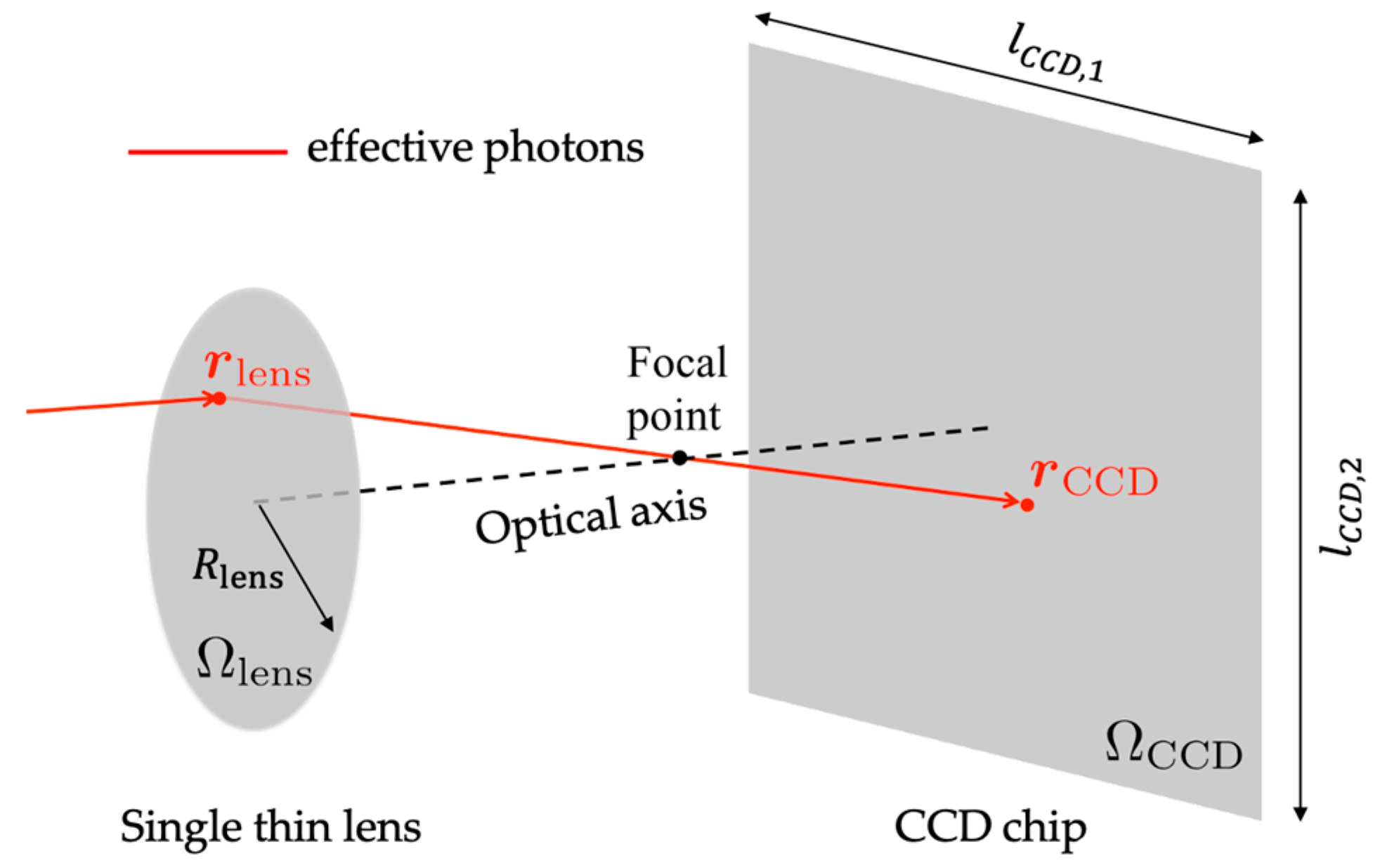

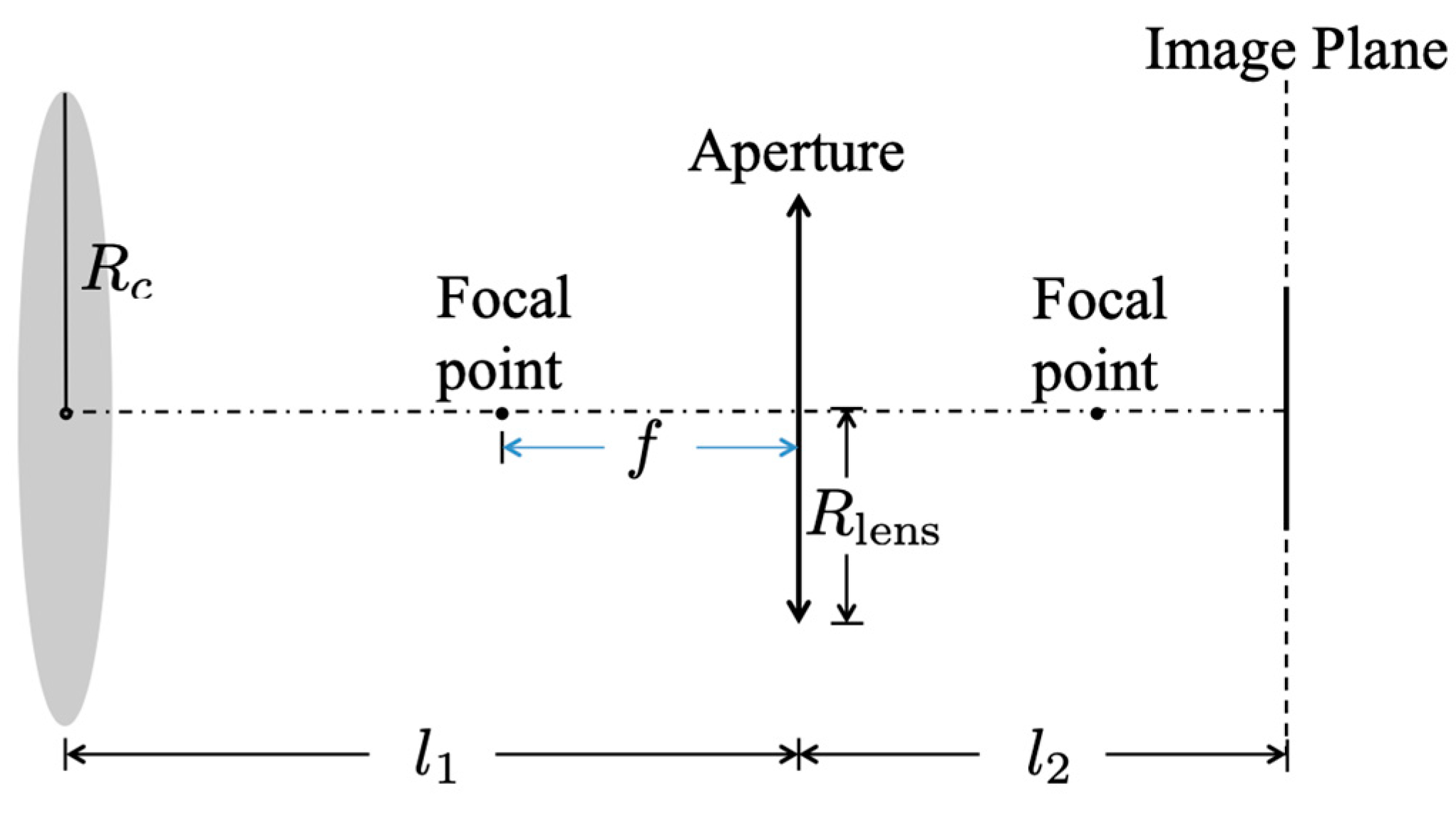

2.2. CCD Camera Acceptance Coordinates System

2.3. Light Propagation in Free Space and Coordinate Transformation

- The operator is a one-to-one and deterministic function from to . Therefore, the light propagation operator is well defined.

- The operator is locally differentiable. In other words, , which is defined as constrained on the surface triangle element , and its inverse are differentiable.

- There is no energy loss during the light’s travel from the surface to the CCD chip.
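The second property, local differentiability on a surface triangle element, can be probed numerically. The sketch below is an illustration under stated assumptions, not the operator defined in this paper: an ideal pinhole camera at the origin stands in for the real optics, the triangle is parametrized by two barycentric-style coordinates `(u, v)`, and the Jacobian is estimated by central finite differences. All function names are hypothetical.

```python
def surface_point(v0, v1, v2, u, v):
    """Point on the triangle (v0, v1, v2) at barycentric-style coordinates (u, v)."""
    return tuple(v0[i] + u * (v1[i] - v0[i]) + v * (v2[i] - v0[i]) for i in range(3))


def pinhole_project(p, focal=1.0):
    """ASSUMPTION: ideal pinhole at the origin looking along +z, image plane at z = focal."""
    return (focal * p[0] / p[2], focal * p[1] / p[2])


def jacobian_fd(v0, v1, v2, u, v, h=1e-6):
    """Central-difference estimate of d(image x, image y) / d(u, v)."""
    def f(a, b):
        return pinhole_project(surface_point(v0, v1, v2, a, b))

    fu_p, fu_m = f(u + h, v), f(u - h, v)
    fv_p, fv_m = f(u, v + h), f(u, v - h)
    return (
        ((fu_p[0] - fu_m[0]) / (2 * h), (fv_p[0] - fv_m[0]) / (2 * h)),
        ((fu_p[1] - fu_m[1]) / (2 * h), (fv_p[1] - fv_m[1]) / (2 * h)),
    )


def jacobian_det(J):
    """Determinant of a 2x2 Jacobian; nonzero means the map is locally invertible."""
    return J[0][0] * J[1][1] - J[0][1] * J[1][0]


# Hypothetical triangle lying in the plane z = 2, facing the pinhole.
tri = ((0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0))
J = jacobian_fd(*tri, 0.2, 0.3)
print(J, jacobian_det(J))
```

A nonzero determinant at a point indicates, by the inverse function theorem, that this stand-in map and its inverse are both differentiable near that point, which is the kind of condition the second property describes.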

2.4. Numerical Algorithm for Measurement Operator

| Algorithm 1: Distributed backward ray-tracing algorithm for constructing the measurement operator |
| --- |
| Get the coordinates and the pixel size. |
| for |
| for |
| Backtrack the photon with as its final status. |
| if this photon hits a triangle element on the object surface |
| ● , and of the vertices of (the control volume indices in finite element mesh) |
| ● and the area . |
| ● , define . |
| ● with perturbation method. |
| ● Find the index of the solid angle closest to the direction |
| ● . |
| ● |
| end for |
| end if |
| end for |
| end for |
| end for |
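The geometric core of Algorithm 1 (backtracking a ray, testing whether it hits a surface triangle, and picking the closest discrete solid angle) can be illustrated with a minimal Python sketch. It is not the authors' implementation: the Möller-Trumbore intersection test, the helper names (`hit_triangle`, `triangle_area`, `closest_direction_index`), and the use of barycentric weights to distribute a hit over the three vertices are assumptions made for illustration. Lens optics, pixel sub-sampling, and the perturbation step are left out.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def hit_triangle(origin, direction, v0, v1, v2, eps=1e-12):
    """Moller-Trumbore test. Returns (t, (w0, w1, w2)) for a hit, else None.

    t is the ray parameter of the hit; w0, w1, w2 are the barycentric
    weights of the hit point with respect to v0, v1, v2.
    """
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    s = sub(origin, v0)
    u = dot(s, p) / det
    q = cross(s, e1)
    v = dot(direction, q) / det
    t = dot(e2, q) / det
    if u < 0 or v < 0 or u + v > 1 or t <= eps:
        return None  # outside the triangle or behind the ray origin
    return t, (1 - u - v, u, v)


def triangle_area(v0, v1, v2):
    c = cross(sub(v1, v0), sub(v2, v0))
    return 0.5 * math.sqrt(dot(c, c))


def closest_direction_index(d, directions):
    """Index of the unit vector in `directions` with the largest cosine to d."""
    d = normalize(d)
    return max(range(len(directions)), key=lambda k: dot(d, directions[k]))


# Hypothetical data: one surface triangle and one backtracked ray.
tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
ray_origin, ray_dir = (0.25, 0.25, 1.0), (0.0, 0.0, -1.0)
ordinates = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 0.0, -1.0)]

hit = hit_triangle(ray_origin, ray_dir, *tri)
if hit is not None:
    t, weights = hit
    # Light leaves the surface opposite to the backtracking direction.
    outgoing = tuple(-c for c in ray_dir)
    k = closest_direction_index(outgoing, ordinates)
    print(t, weights, triangle_area(*tri), k)
```

For the sample ray the hit lies at ray parameter 1 with weights (0.5, 0.25, 0.25), and the outgoing direction maps to the first entry of the hypothetical `ordinates` list. In a full implementation these weights and the direction index would determine which entries of the measurement operator receive a contribution.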

3. Results and Discussion

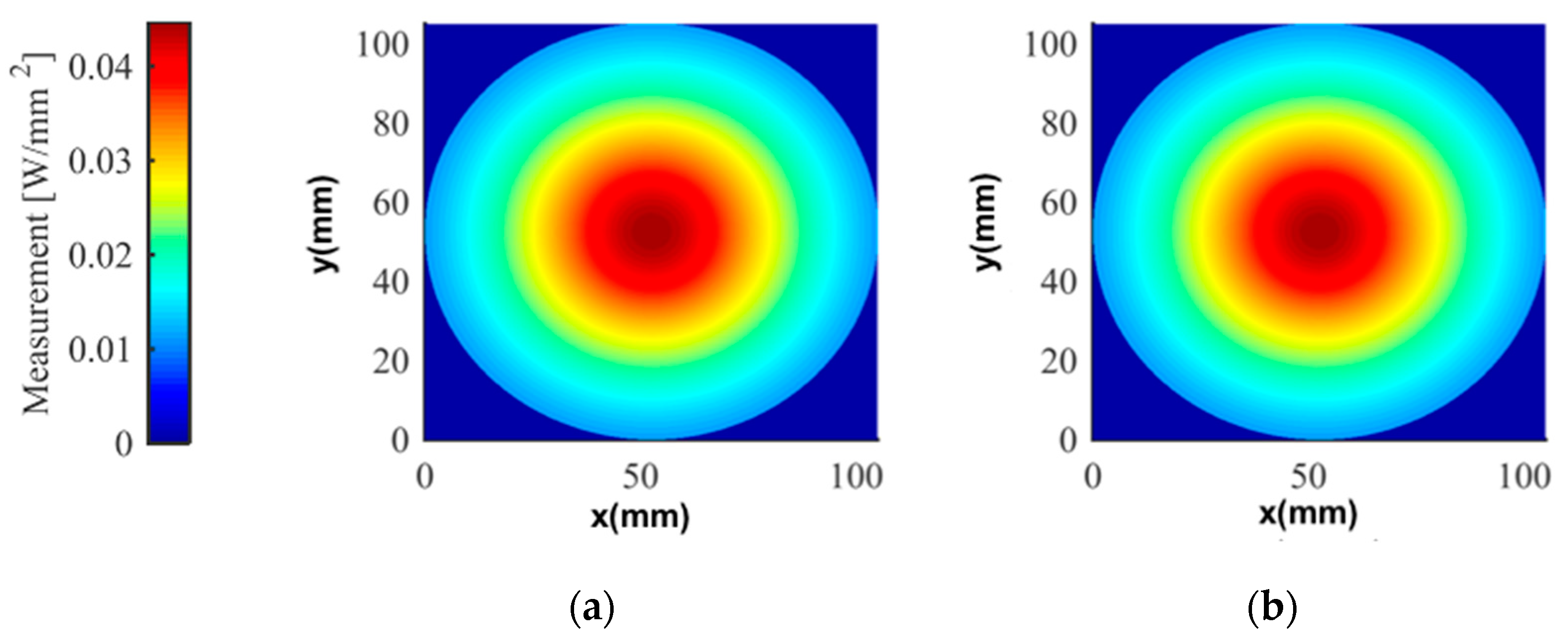

3.1. Validation through Analytic Solution

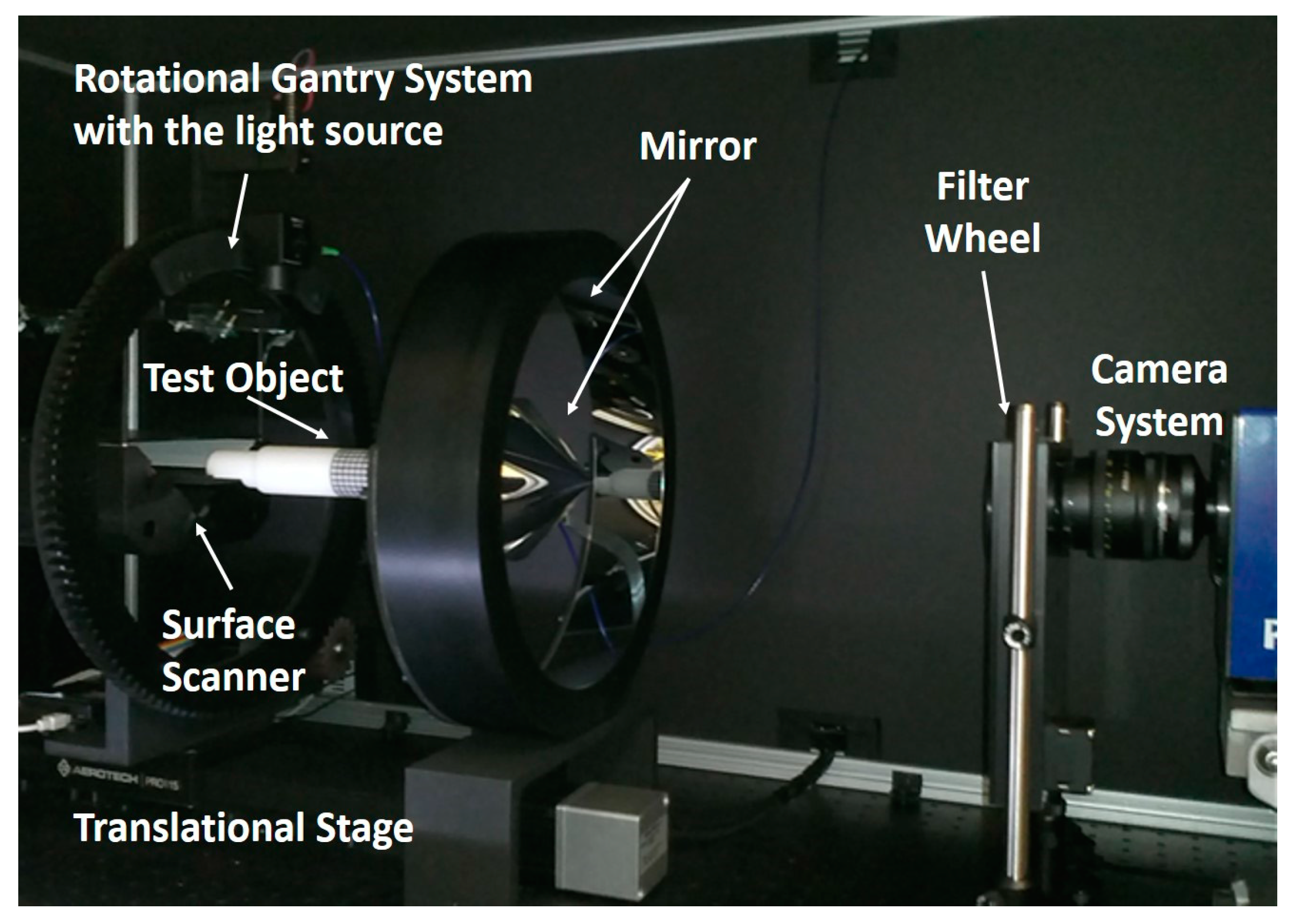

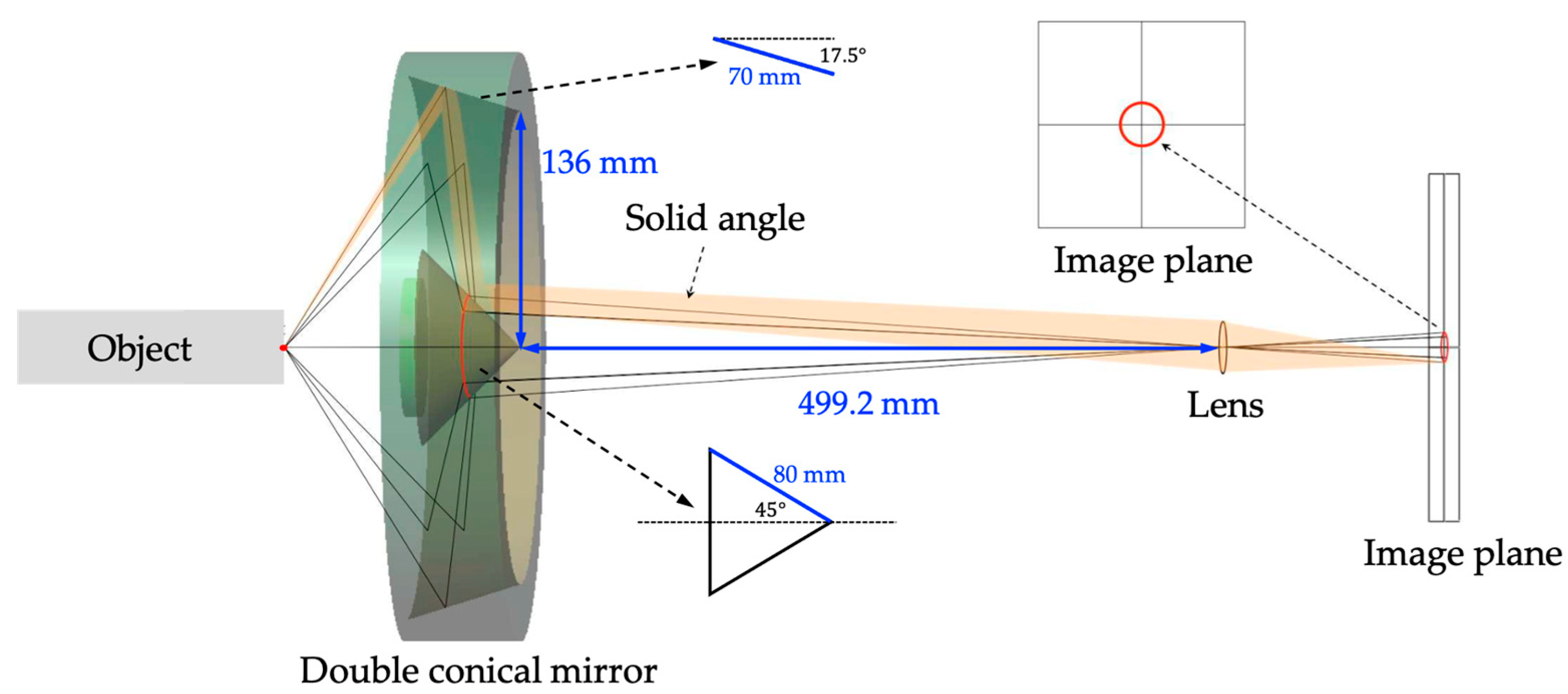

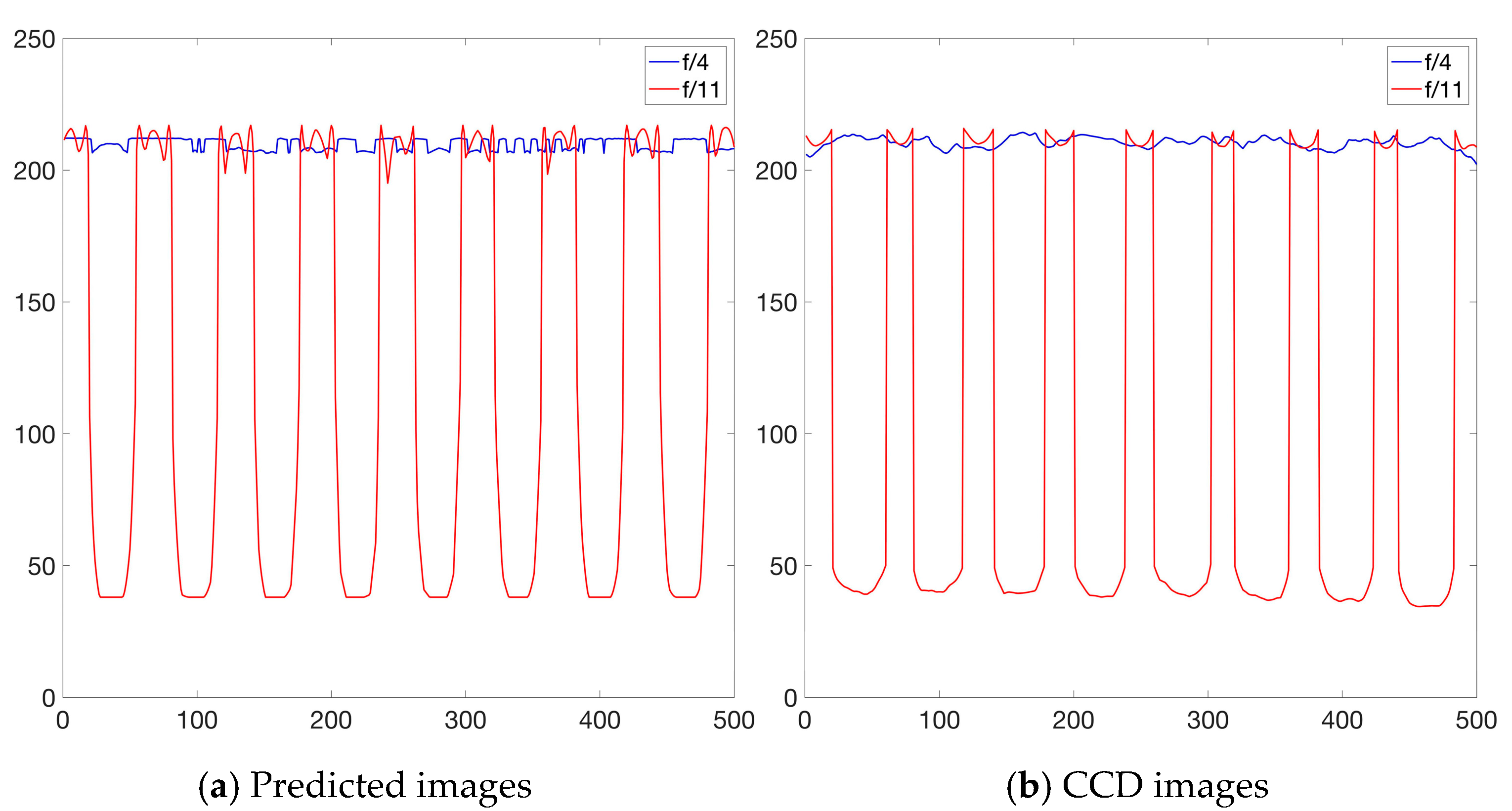

3.2. Validation through the Double Conical Mirror Non-Contact Imaging System

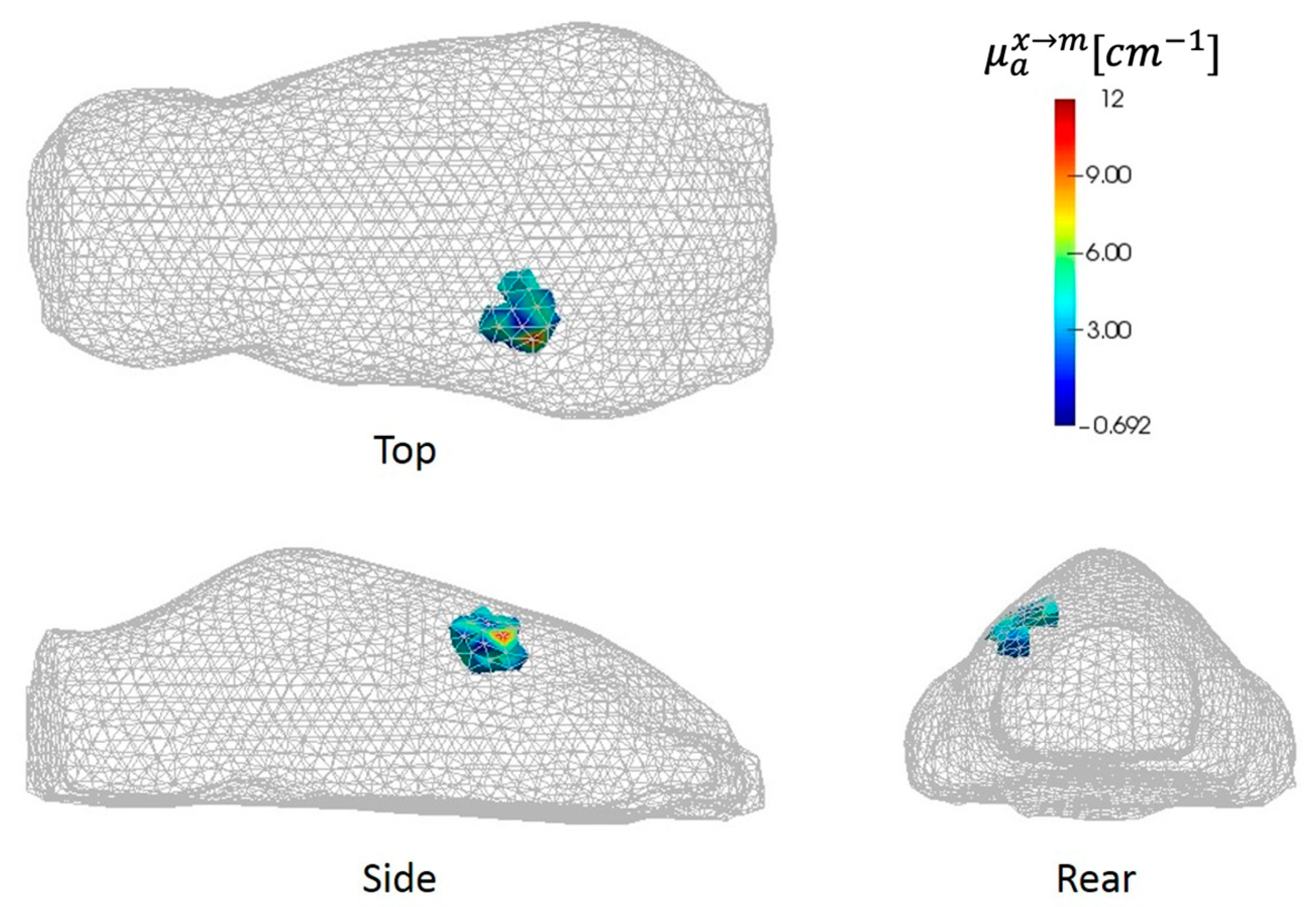

3.3. Application to Real Fluorescence Molecular Tomographic Imaging

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, S.H.; Jia, J.; Hielscher, A.H. Angle-Dependent Transport Theory-Based Ray Transfer Function for Non-Contact Diffuse Optical Tomographic Imaging. Photonics 2023, 10, 767. https://doi.org/10.3390/photonics10070767
