Recognition of Orbital Angular Momentum of Vortex Beams Based on Convolutional Neural Network and Multi-Objective Classifier

Abstract

1. Introduction

2. Designs and Methods

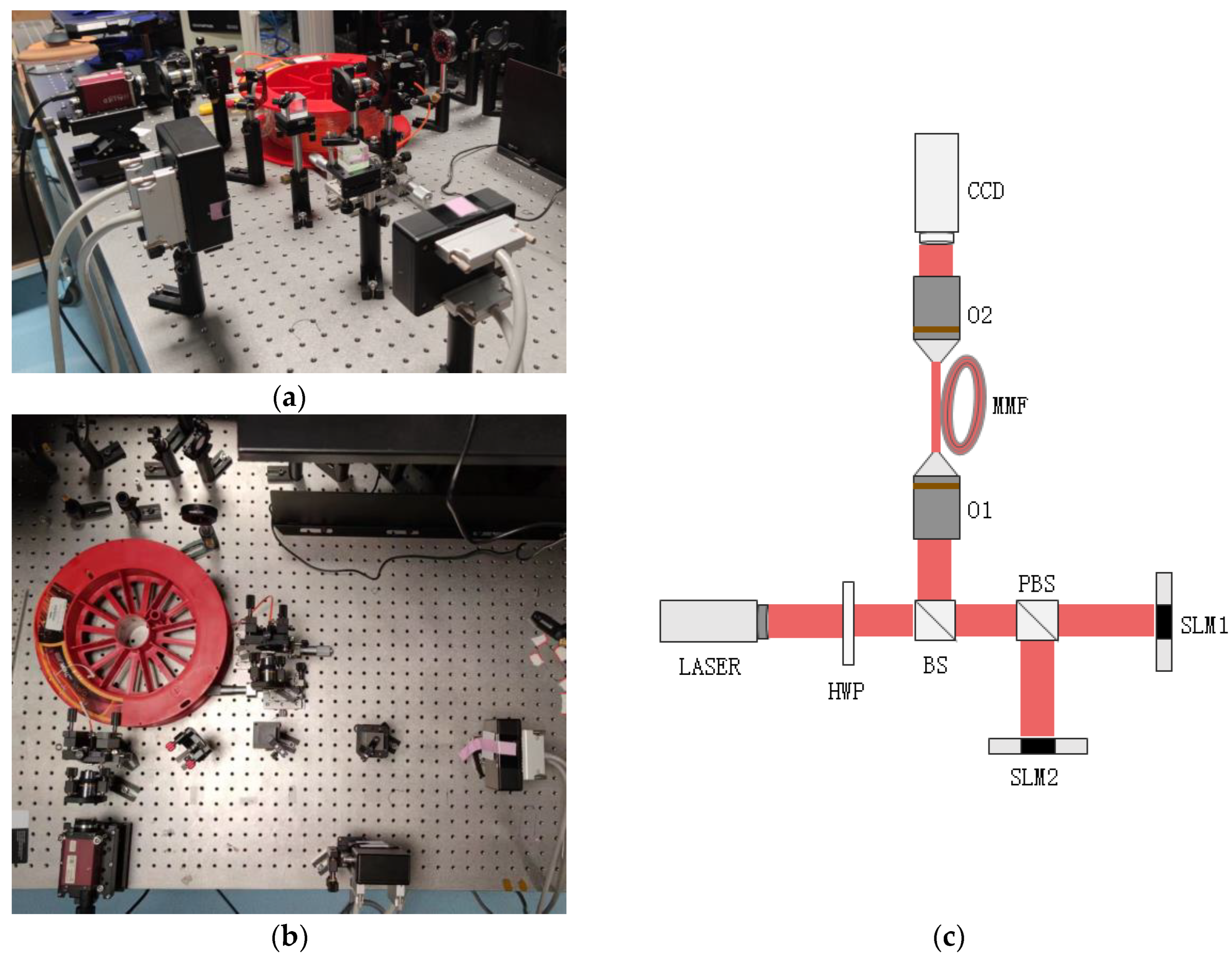

2.1. Dataset

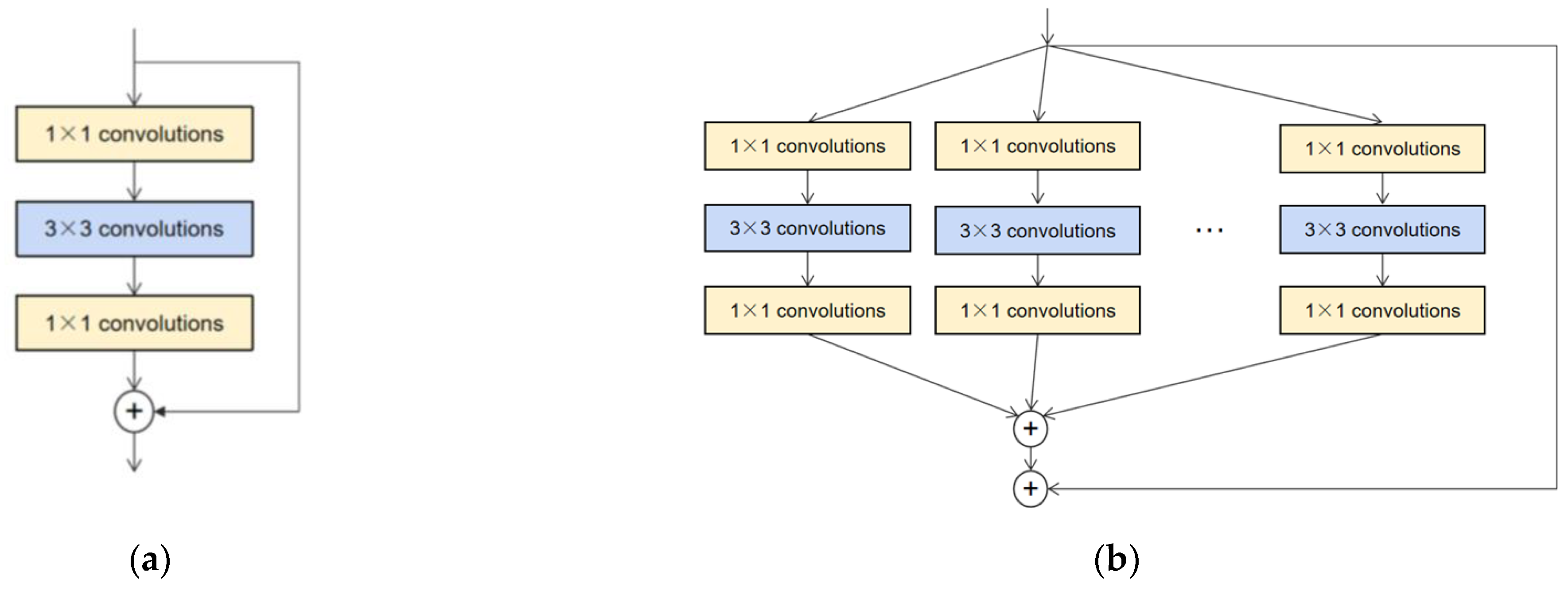

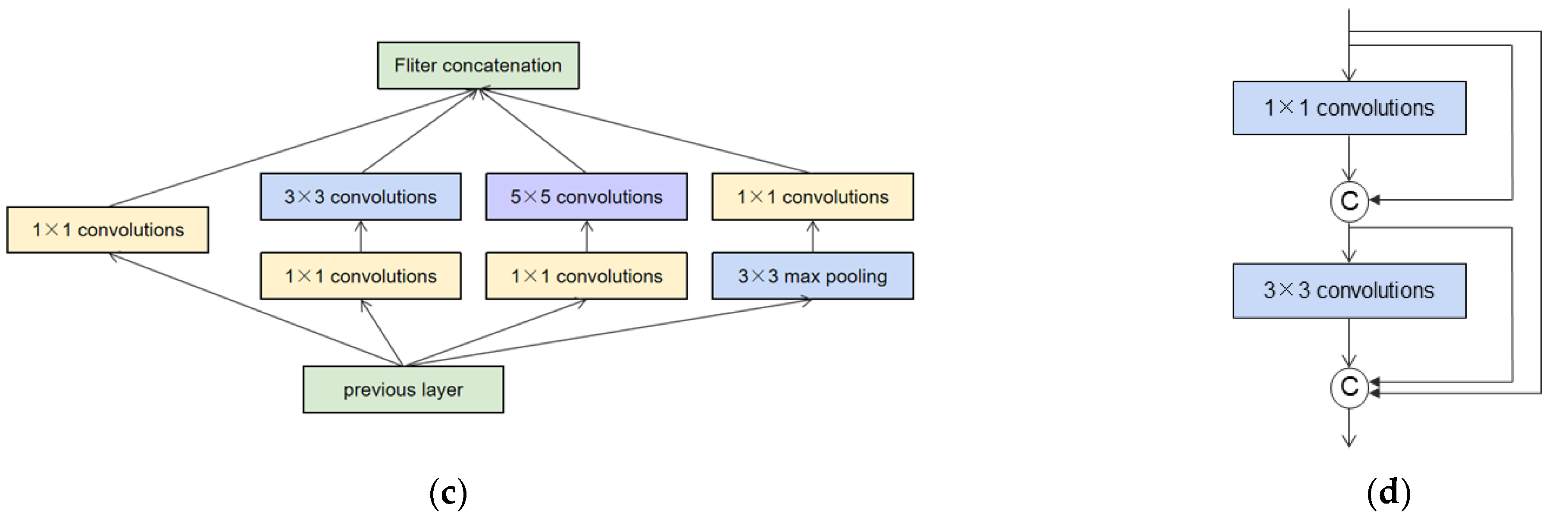

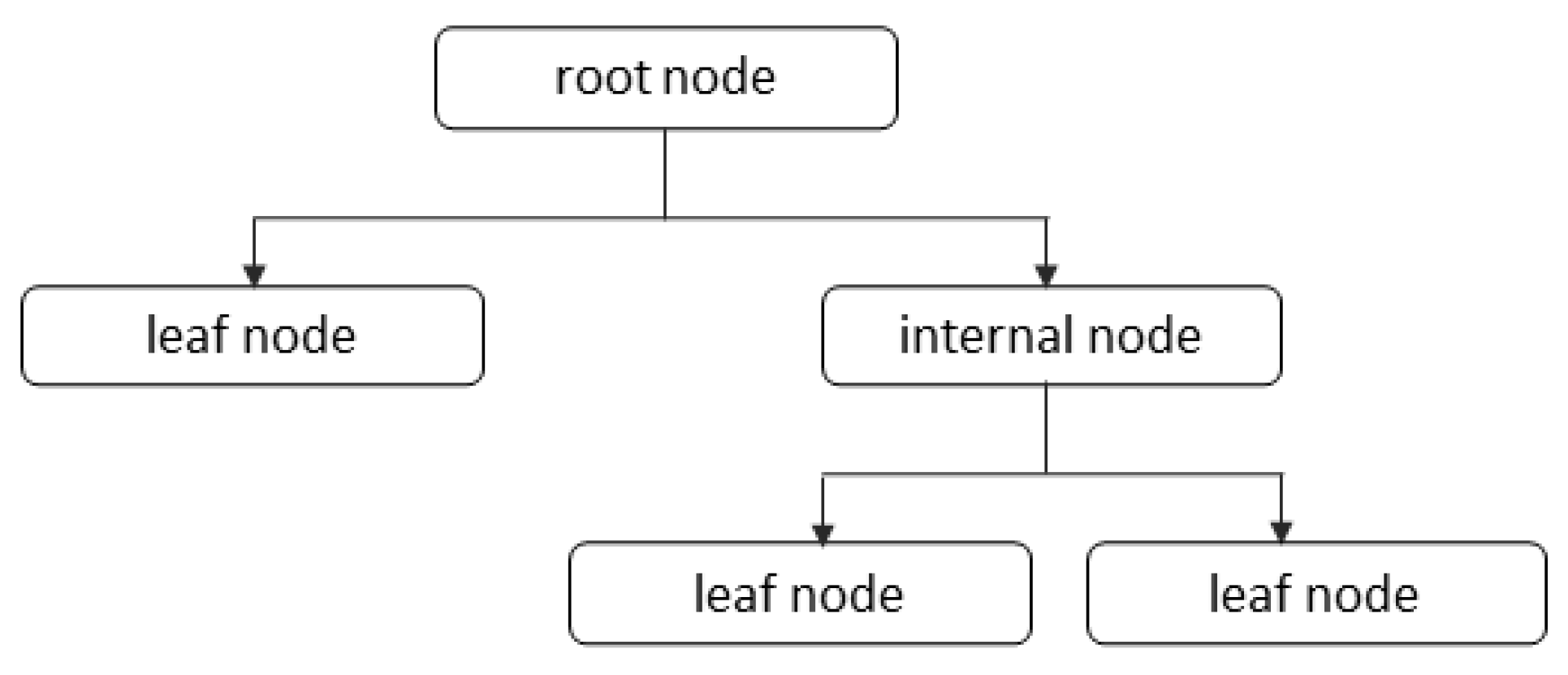

2.2. Network Structure

3. Results and Discussion

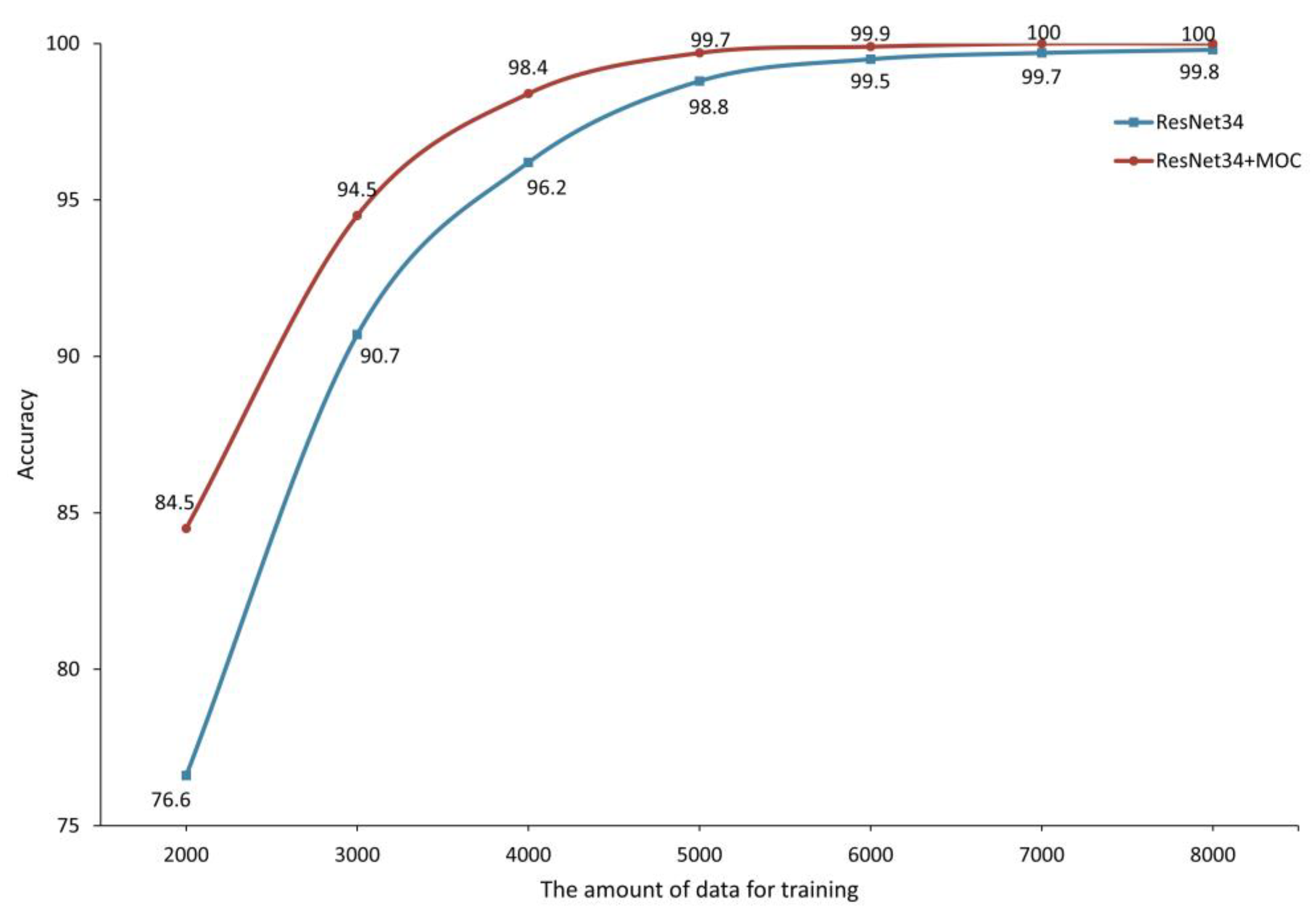

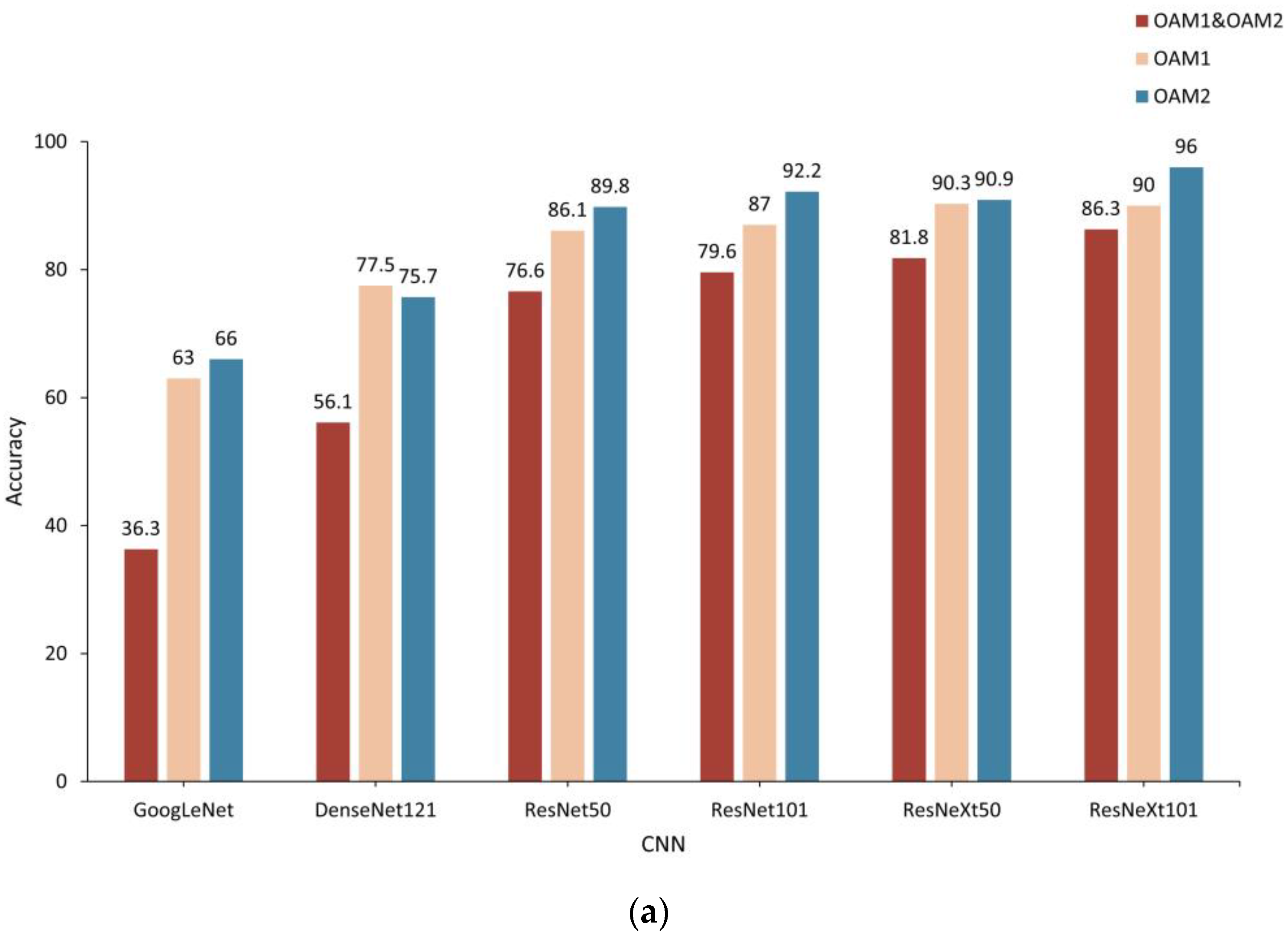

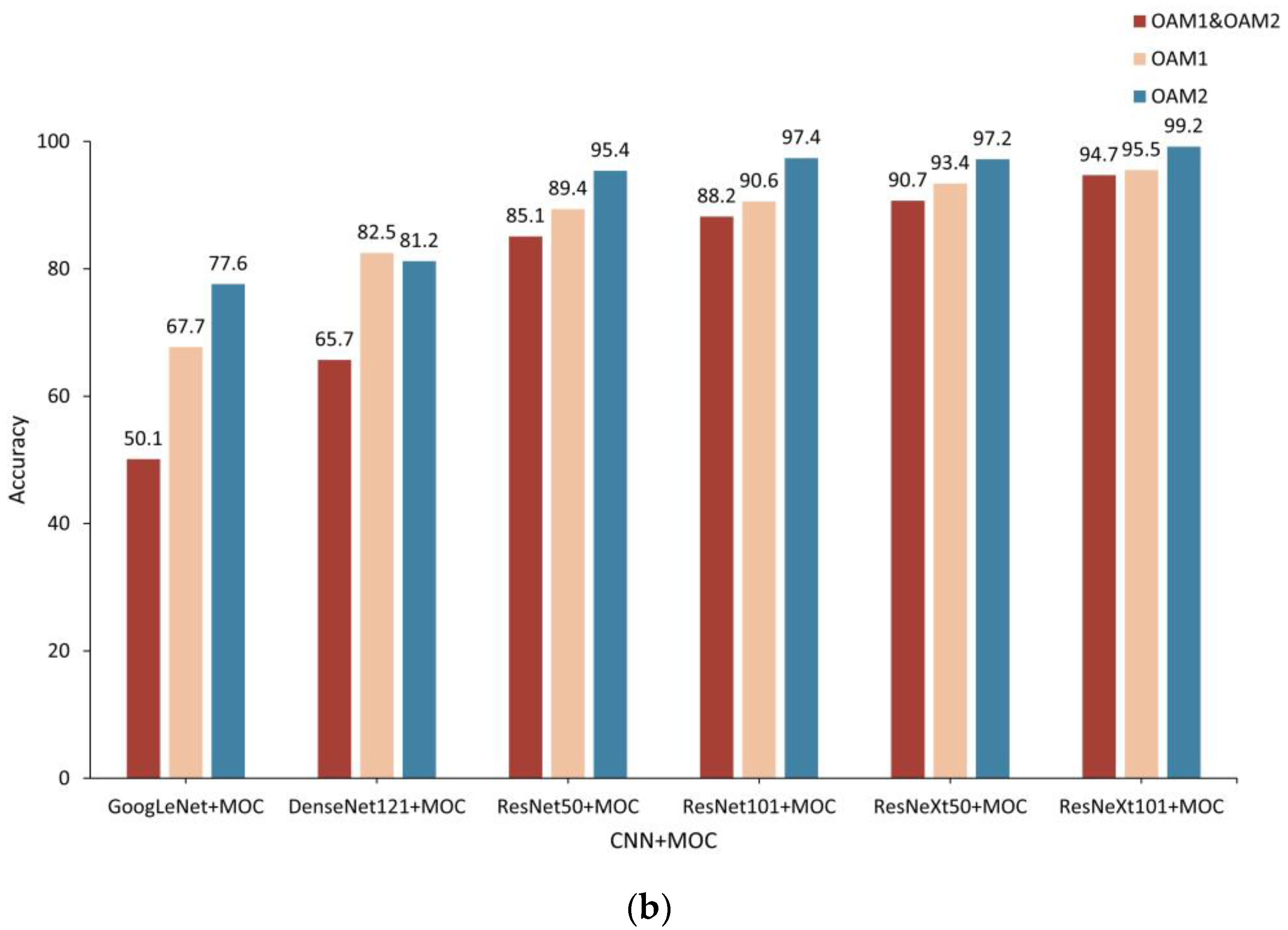

3.1. Results

3.2. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | OAM1 & OAM2 | OAM1 | OAM2 |
|---|---|---|---|
| ResNeXt101 | 87.6% | 90.2% | 97.4% |
| ResNeXt101+MOC | 96.4% | 96.8% | 99.6% |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Zhao, H.; Wu, H.; Chen, Z.; Pu, J. Recognition of Orbital Angular Momentum of Vortex Beams Based on Convolutional Neural Network and Multi-Objective Classifier. Photonics 2023, 10, 631. https://doi.org/10.3390/photonics10060631
