Abstract

Vortex beams carry orbital angular momentum (OAM), and their theoretically infinite-dimensional eigenstates can increase the capacity of optical communication and information processing in both the classical and quantum regimes. Measuring the OAM of vortex beams is therefore of great significance for vortex-beam-based optical communication. Most existing measurement methods require the beam to have a regular spiral wavefront. However, the wavefront is distorted when a vortex beam propagates through a random medium, which prevents traditional methods from recognizing the OAM accurately. Deep learning offers a way to identify the OAM of a vortex beam from a speckle field. However, deep learning–based methods usually require a large amount of data, which is difficult to obtain in some practical applications. To solve this problem, we design a framework based on a convolutional neural network (CNN) and a multi-objective classifier (MOC), by which the OAM of vortex beams can be identified with high accuracy using a small amount of data. By combining CNNs of different structures with the MOC, we achieve a highest accuracy of 96.4%, validating the feasibility of the proposed scheme.

1. Introduction

As a special beam that carries OAM and possesses a spiral wavefront, the optical vortex has important applications in many fields, such as optical manipulation [1], optical information processing [2], photonic computing [3], quantum communication [4,5,6], and free-space optical communication [7]. OAM-based optical communication has become a research hotspot in recent years. In addition to amplitude, phase, frequency, and polarization, OAM can be used as a new modulation parameter in an optical communication system. Because OAM is independent of these other physical quantities, it can be combined with other communication methods, which greatly increases the transmission capacity.

The demand for transmitting large amounts of data in the communication field is becoming increasingly urgent. OAM-based free-space optical communication has unique advantages and great prospects for future communication applications, and OAM measurement is critical for these applications. Methods such as spiral interference fringes and optical conversion have been proposed to measure the OAM of vortex beams [8,9,10,11]. However, these methods are limited to identifying the OAM of vortex beams in free space because they require a well-defined intensity pattern. With the continuous development of artificial intelligence, machine learning techniques are widely applied in many engineering fields, such as spectral analysis, computer vision, and intelligent machine control [12,13,14,15], because they can automatically learn trends and patterns in data that humans may overlook. Many OAM recognition methods based on machine learning have been proposed. In 2014, Krenn et al. first proposed using a BP-ANN model to identify the intensity maps of superimposed Laguerre-Gaussian (LG) beams, using 16 combinations with an error rate of 1.7% [16]. In 2016, Knutson et al. used the VGG16 network model to classify 110 different OAM states with a classification accuracy of 74% [17]. In 2017, Doster and Watnik showed that the AlexNet network model demultiplexes Bessel-Gaussian multiplexed beams better than the traditional method [18]. By comparison with the recognition rates of a BP-ANN, Li et al. demonstrated that machine learning methods based on the convolutional neural network are a better choice for demultiplexing LG beams [19]. These results show that pattern recognition based on machine learning overcomes the restriction of traditional methods to vortex beams in free space and offers a new solution for OAM recognition.

Optical fiber is widely used to transmit information over long distances. A specially designed optical fiber can ensure the distortionless transmission of vortex beams [20,21,22]. However, such a fiber requires a complex manufacturing process and is costly, which hinders its widespread adoption. In contrast, the manufacturing process for ordinary optical fiber is mature and low-cost. A multimode fiber (MMF) can transmit a large number of modes simultaneously, providing a solution for large-capacity data transmission. When a vortex beam is transmitted through an MMF, an irregular speckle image is generated at the distal end due to mode coupling and superposition, which hinders the recognition of the OAM [23]. A deep learning–based method has recognized the OAM from the speckle image at the distal end of the MMF [24], but it requires a large amount of data. The performance of deep learning–based methods depends strongly on the amount of data: excellent results require a large dataset, which is difficult to obtain in some practical problems. Classical machine learning algorithms can deal effectively with small-data problems and can make correct, though not necessarily optimal, decisions. To combine the advantages of both, in this study we propose a framework based on a CNN and an MOC that recognizes the OAM of a vortex beam from speckle with high accuracy using a small amount of data. We extract features with the pretrained CNN model, send the extracted features and corresponding labels to the MOC for training, and finally classify them. This method can greatly reduce the amount of data used while maintaining high recognition accuracy.

2. Designs and Methods

2.1. Dataset

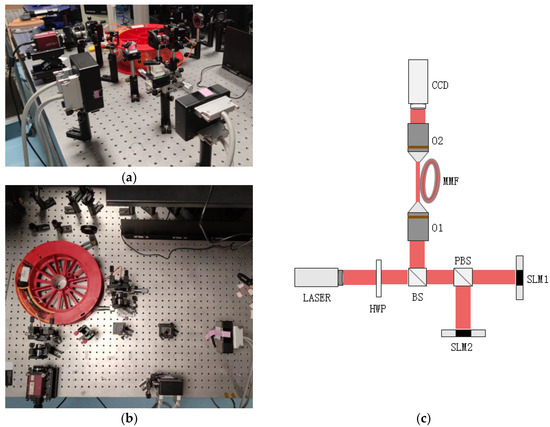

The experimental setup is shown in Figure 1. A vertically polarized laser beam (Onefive Origami-10XP, 400 fs, 1 MHz) with a wavelength of 1028 nm propagates through a half-wave plate (HWP), which rotates the polarization direction of the beam to 45° with respect to the horizontal axis. The light transmitted through the beam splitter (BS) is divided into two orthogonally polarized beams by the polarization beam splitter (PBS). Two phase-only spatial light modulators (SLM1, HAMAMATSU X13138-03 and SLM2, HAMAMATSU X13138-09) impose a helical phase on the horizontally and vertically polarized beams, respectively. The two vortex beams, with orthogonal polarization and the same or different OAM, are recombined by a PBS and coupled into an MMF (Thorlabs, M31L20, 62.5 μm, NA = 0.275, 20 m) through microscope objective lens 1 (O1). The speckle image at the distal end of the MMF is collected by microscope objective lens 2 (O2). Finally, the image is captured by a charge-coupled device (CCD, Pike F421B, AVT). The topological charges of the vortex beams generated by SLM1 and SLM2 each vary from 1 to 10.9 in steps of 0.1. As the two topological charges are varied in turn, 10,000 different speckle images are generated at the distal end of the MMF. The distribution of light intensity is shown in Figure 2.

Figure 1.

Experimental configuration for the transmission of two orthogonally polarized vortex beams in the MMF. (a) Side view; (b) top view; (c) optical path diagram.

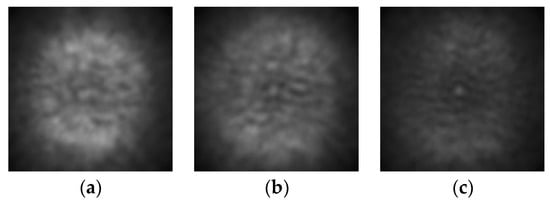

Figure 2.

The speckle images. (a) l1 = 1.0 & l2 = 1.0; (b) l1 = 5.2 & l2 = 3.7; (c) l1 = 10.9 & l2 = 10.9.
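The label space described above can be enumerated with a short sketch. It only assumes what the text states (both topological charges run from 1 to 10.9 in steps of 0.1); the function name `topological_charges` and the rounding to one decimal place are illustrative choices, not part of the original experiment code.

```python
from itertools import product


def topological_charges(start=1.0, stop=10.9, step=0.1):
    """Return the charges from start to stop (inclusive), rounded to 0.1."""
    count = round((stop - start) / step) + 1
    return [round(start + i * step, 1) for i in range(count)]


charges = topological_charges()
# Each (l1, l2) pair labels one speckle image at the distal end of the MMF.
label_pairs = list(product(charges, charges))

print(len(charges))      # number of values per beam
print(len(label_pairs))  # number of distinct label pairs
```

With 100 values per beam, the grid contains 100 × 100 = 10,000 pairs, which matches the number of recorded speckle images.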

2.2. Network Structure

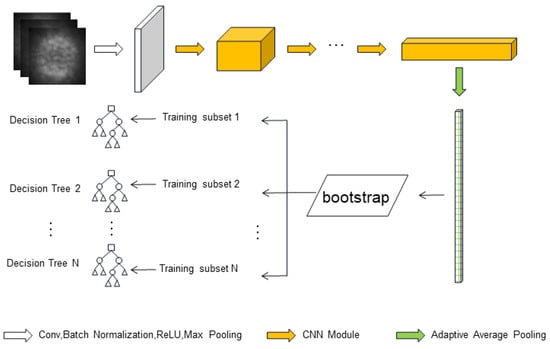

To ensure high recognition accuracy while reducing the amount of training data required, we designed an architecture based on a CNN and an MOC. The network architecture is shown in Figure 3.

Figure 3.

Overall network architecture.

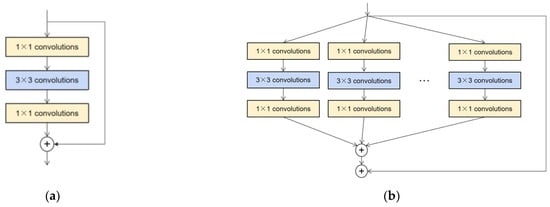

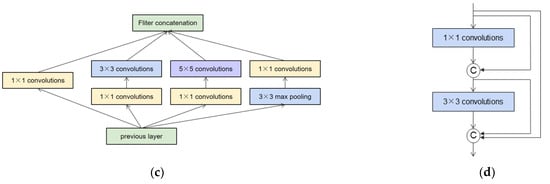

The CNN is used to extract the image features. Manual feature extraction, as used in traditional machine learning, is limited in how well it captures correlations between features and may introduce human bias, which affects the quality of the features and of the corresponding results. Therefore, we use a CNN to extract features and to learn their importance automatically through backpropagation, thus avoiding some of the problems and limitations of manual feature extraction. The feature extraction depends on the structure of the CNN. In this study, CNNs with different structures, including ResNet [25], ResNeXt [26], DenseNet [27], and GoogLeNet [28], are selected to extract features. The module structure diagrams of these networks are shown in Figure 4.

Figure 4.

The module structure of the network. (a) ResNet module; (b) ResNeXt module; (c) GoogLeNet module; (d) DenseNet module.

ResNet and ResNeXt are composed of residual structures with skip connections. These skip connections alleviate the vanishing gradient problem, so a deeper network can be built. Figure 4a,b show the ResNet module and the ResNeXt module, respectively. ResNeXt decomposes the residual module of ResNet into several uniform branches. This design makes the network structure clearer and more modular, reduces the number of hyperparameters that need to be adjusted manually, and gives better performance for the same number of parameters.

GoogLeNet is made up of several identical modules connected in series. The module structure is shown in Figure 4c. With this structure, more convolutions can be stacked within a receptive field of the same size, which helps the network learn richer features and thus improves its performance.

DenseNet is a densely connected network. Unlike ResNet and ResNeXt, which add the outputs of different layers, DenseNet concatenates them (stacking along the channel dimension). This connection mode reduces the number of parameters in the network. The feature reuse provided by the dense connections, together with the rich feature information gathered by concatenation, allows more features to be extracted with fewer convolutions. In addition, this structure makes it possible to build a deeper network and reduces the risk of overfitting by improving the flow of information and gradients through the whole network. The structure diagram is shown in Figure 4d.
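The difference between the two connection types can be illustrated with a toy example on plain Python lists. This is only a sketch of the idea: the vectors stand in for feature maps with three "channels", and the helper names `residual_add` and `dense_concat` are hypothetical.

```python
def residual_add(x, fx):
    """ResNet-style shortcut: element-wise sum, channel count unchanged."""
    return [a + b for a, b in zip(x, fx)]


def dense_concat(x, fx):
    """DenseNet-style connection: concatenate along the channel axis."""
    return x + fx


x = [1.0, 2.0, 3.0]      # toy input with 3 channels
fx = [0.5, -1.0, 0.25]   # toy output of a layer applied to x

added = residual_add(x, fx)
stacked = dense_concat(x, fx)

print(added, len(added))      # same number of channels as x
print(stacked, len(stacked))  # channels of x and fx side by side
```

Addition merges the two signals into the same three channels, whereas concatenation keeps the earlier features available unchanged for later layers, which is the feature reuse mentioned above.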

To extract features with the CNN, we use a pretrained model and finetune the network parameters with our data. The image is propagated forward through the layers, and the activation vector at the last layer is taken as the feature vector. This training approach is called transfer learning, and the pretrained model is obtained by training on ImageNet data [29]. ImageNet contains about 14 million images in total; the 1000-category subset commonly used for pretraining contains about 1.28 million training images. A model trained on such a large dataset has already learned many generally useful features, so only finetuning is needed to train our added final classification layer.

The second part of the architecture is the MOC, which can achieve high recognition accuracy with a small amount of data. Each speckle pattern is generated by the transmission of two vortex beams through the MMF, so each sample has two target values. An MOC is therefore needed to fit and predict each target with a chosen type of estimator. The estimator used in this study is the random forest ensemble learning classifier [30], whose base estimator is the decision tree [31].

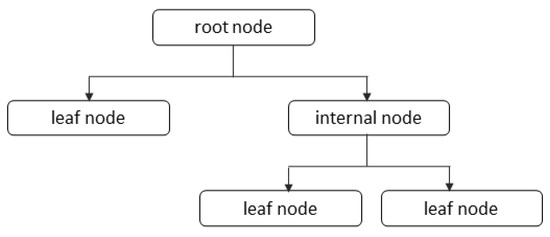

As a basic classification and regression algorithm, the decision tree has a tree structure composed of nodes and directed edges (Figure 5). A decision tree contains a root node, several internal nodes, and leaf nodes. The root node contains the full sample set, each leaf node corresponds to a decision result, and the other nodes correspond to attribute tests. The path from the root node to each leaf node corresponds to a sequence of tests. The core of the algorithm is to recursively select the optimal feature and split the data according to that feature, so as to find the best classification result for each data subset.

Figure 5.

Structure diagram of the decision tree.
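The recursive "select the optimal feature and split" step can be sketched for a single node as follows. The text does not state which splitting criterion was used, so Gini impurity is an assumption here, and the function names and toy data are purely illustrative.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0 means a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return (feature index, threshold, weighted impurity) of the best split."""
    best = (None, None, gini(labels))
    n = len(rows)
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[j] <= t]
            right = [y for r, y in zip(rows, labels) if r[j] > t]
            if not left or not right:
                continue  # a split must send samples to both children
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (j, t, score)
    return best


rows = [[0.1, 5.0], [0.2, 1.0], [0.8, 4.0], [0.9, 2.0]]
labels = ["a", "a", "b", "b"]
print(best_split(rows, labels))  # (0, 0.2, 0.0): feature 0 separates the classes
```

A full tree would apply `best_split` again to each child until the nodes are pure or a depth limit is reached.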

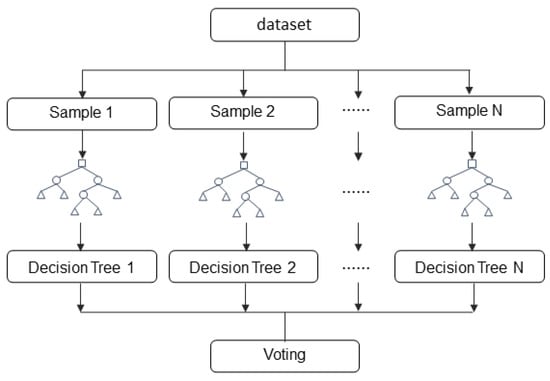

Random forest is an ensemble classification algorithm (Figure 6). Ensemble learning aims to produce a strong classifier with excellent classification performance by combining several base classifiers. Following this idea, a random forest is built from multiple decision trees. The core idea of the random forest algorithm is to resample the training set to form multiple training subsets. Each subset is used to grow one decision tree, and the final result is decided by a vote among all the decision trees.

Figure 6.

The basic flow of the random forest algorithm.
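The resample-and-vote flow, together with the idea of fitting one classifier per target, can be sketched in pure Python. This is a minimal illustration under stated assumptions, not the authors' implementation: a nearest-neighbour rule stands in for the decision tree to keep the example short, the per-split random feature selection of a real random forest is omitted, and all class names and the toy data are hypothetical.

```python
import random
from collections import Counter


def bootstrap_indices(n, rng):
    """Draw n indices with replacement: one resampled training subset."""
    return [rng.randrange(n) for _ in range(n)]


def majority_vote(predictions):
    """Return the label predicted by the largest number of base classifiers."""
    return Counter(predictions).most_common(1)[0][0]


class NearestNeighbour:
    """Hypothetical stand-in base learner (the paper uses decision trees)."""

    def fit(self, X, y):
        self.X, self.y = X, y
        return self

    def predict_one(self, x):
        dists = [sum((a - b) ** 2 for a, b in zip(x, row)) for row in self.X]
        return self.y[dists.index(min(dists))]


class BaggedEnsemble:
    """Train each base learner on a bootstrap sample, then vote."""

    def __init__(self, n_estimators=15, seed=0):
        self.n_estimators = n_estimators
        self.rng = random.Random(seed)

    def fit(self, X, y):
        self.models = []
        for _ in range(self.n_estimators):
            idx = bootstrap_indices(len(X), self.rng)
            model = NearestNeighbour().fit([X[i] for i in idx], [y[i] for i in idx])
            self.models.append(model)
        return self

    def predict_one(self, x):
        return majority_vote([m.predict_one(x) for m in self.models])


class MultiOutput:
    """Fit one independent ensemble per target column (here l1 and l2)."""

    def fit(self, X, Y):
        self.heads = [
            BaggedEnsemble(seed=k).fit(X, [row[k] for row in Y])
            for k in range(len(Y[0]))
        ]
        return self

    def predict_one(self, x):
        return tuple(head.predict_one(x) for head in self.heads)


# Toy "feature vectors": four well-separated clusters, five points each.
centres = {(0.0, 0.0): (1.0, 1.0), (0.0, 10.0): (1.0, 2.0),
           (10.0, 0.0): (2.0, 1.0), (10.0, 10.0): (2.0, 2.0)}
X, Y = [], []
for (cx, cy), pair in centres.items():
    for i in range(5):
        X.append([cx + 0.1 * i, cy - 0.1 * i])
        Y.append(pair)

model = MultiOutput().fit(X, Y)
print(model.predict_one([9.8, 0.3]))  # predicted (l1, l2) pair
```

In practice, the feature vectors would come from the CNN and the two targets would be the topological charges of the two beams; a library implementation of a multi-output wrapper around a random forest would play the role of `MultiOutput` and `BaggedEnsemble`.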

3. Results and Discussion

3.1. Results

As the two topological charges of the vortex beams are varied in turn from 1 to 10.9, 10,000 different speckle patterns are recorded by the CCD. These speckle images are randomly assigned to the training data or the test data used to train and test the network. To finetune the pretrained CNN, the input images need to be preprocessed. The preprocessing consists of center cropping and normalization, after which the data are converted into tensors for the network. The training set, validation set, and test set are processed in the same way. After the multi-objective classifier has been trained, the performance of the network is evaluated on the test data. The predictions are compared with the true labels to calculate the recognition accuracy for both OAMs together and for each single OAM. All the charts and diagrams in our study were produced with Origin, a data analysis and plotting software, and the results are as follows.
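The two kinds of accuracy mentioned above can be made concrete with a small sketch. The function name and the toy predictions are hypothetical; the only assumption taken from the text is that a sample counts as correct for "both OAMs" when the two predicted charges match the true labels simultaneously.

```python
def recognition_accuracy(true_pairs, predicted_pairs):
    """Return per-beam accuracy and joint accuracy for (l1, l2) label pairs."""
    n = len(true_pairs)
    pairs = list(zip(true_pairs, predicted_pairs))
    acc1 = sum(t[0] == p[0] for t, p in pairs) / n
    acc2 = sum(t[1] == p[1] for t, p in pairs) / n
    both = sum(t == p for t, p in pairs) / n
    return {"OAM1": acc1, "OAM2": acc2, "both": both}


true_pairs = [(1.0, 2.0), (3.5, 4.1), (5.2, 3.7), (10.9, 10.9)]
pred_pairs = [(1.0, 2.0), (3.5, 4.0), (5.1, 3.7), (10.9, 10.9)]

print(recognition_accuracy(true_pairs, pred_pairs))
# {'OAM1': 0.75, 'OAM2': 0.75, 'both': 0.5}
```

The joint accuracy can never exceed either single-beam accuracy, because a sample is only counted when both predictions are right.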

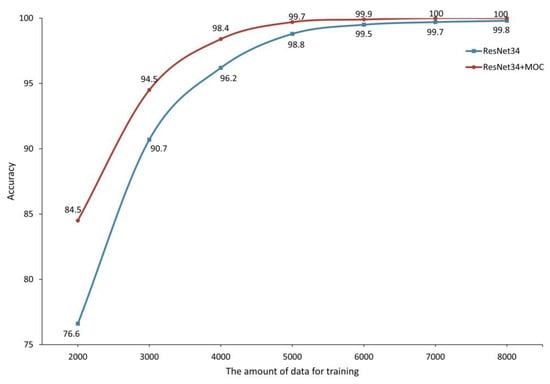

The recognition accuracy depends on the amount of data used to train the network (Figure 7). The images are cropped to 224 × 224 by center cropping. The blue and red curves correspond to the test results of ResNet34 and ResNet34+MOC, respectively. The CNN alone achieves high accuracy when a large amount of data is available, and adding the MOC improves the accuracy further. With enough training data, the accuracy of the combined network reaches 100%.

Figure 7.

Test results of trained networks with different amounts of data.
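The center cropping and normalization used for the inputs can be sketched on a plain 2D list of pixel values. The 256 × 256 original size and the 224 × 224 crop come from the text; the 8-bit pixel range and the `mean` and `std` values are placeholders, since the normalization constants are not stated.

```python
def center_crop(image, size):
    """Crop a size x size window from the middle of a 2D list of pixels."""
    height, width = len(image), len(image[0])
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def normalize(image, mean, std):
    """Scale 8-bit pixels to [0, 1], then standardize with mean and std."""
    return [[(p / 255.0 - mean) / std for p in row] for row in image]


# Synthetic 256 x 256 test image whose pixel value encodes its position.
image = [[(r + c) % 256 for c in range(256)] for r in range(256)]

cropped = center_crop(image, 224)
scaled = normalize(cropped, mean=0.5, std=0.5)  # placeholder constants

print(len(cropped), len(cropped[0]))  # 224 224
print(cropped[0][0])                  # pixel originally at row 16, column 16
```

Cropping from 256 to 224 removes a 16-pixel border on every side, so the outermost part of each speckle pattern is discarded.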

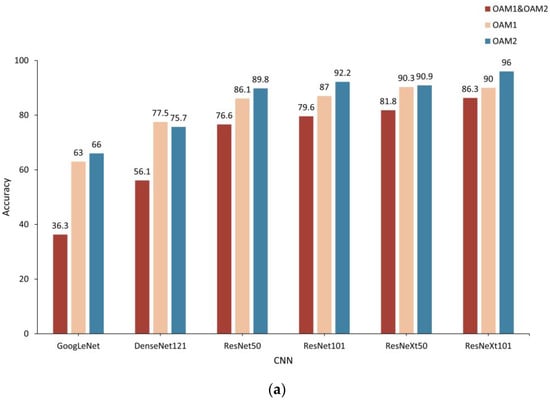

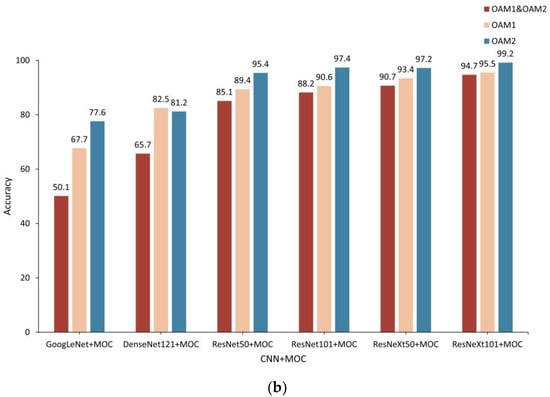

Conventionally, because a larger training set benefits the training of the network, the training set is much larger than the test set. In contrast, in this test the training data contain 2000 speckle images, while the test data contain 8000 speckle images. The images are cropped to 224 × 224 by center cropping. The CNNs used are GoogLeNet, DenseNet121, ResNet50, ResNet101, ResNeXt50, and ResNeXt101. Figure 8a shows the test results of the CNNs, and Figure 8b shows the test results of the CNN+MOC combinations. The values in red correspond to the recognition accuracy of both OAM1 and OAM2 together, and the values in beige and blue correspond to the recognition accuracy of OAM1 and OAM2, respectively.

Figure 8.

Test results of CNNs with different structures trained with 2000 images. (a) Test results of the trained CNNs; (b) test results of the trained CNN+MOC combinations.
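The unusual 2000/8000 split can be reproduced in outline with a random partition of the image indices. This is only a sketch: the helper name `split_indices` and the fixed seed are assumptions, and the text does not say how the random selection was actually implemented.

```python
import random


def split_indices(n_total, n_train, seed=0):
    """Shuffle the indices 0..n_total-1 and split them into train and test."""
    indices = list(range(n_total))
    random.Random(seed).shuffle(indices)
    return indices[:n_train], indices[n_train:]


train_idx, test_idx = split_indices(10_000, 2_000)
print(len(train_idx), len(test_idx))  # 2000 8000
```

Keeping the two index lists disjoint ensures that no speckle image used for training also appears in the test set.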

ResNeXt101, the network with the best accuracy, is then trained again with 2000 images, this time using the images at their original size of 256 × 256 as input. The test results are shown in Table 1.

Table 1.

Test results of ResNeXt101 and ResNeXt101+MOC trained with 2000 original-size images.

3.2. Discussion

With a large amount of data for training, ResNet34 can effectively learn and extract useful features. The OAM recognition accuracy is 99.8% (Figure 7). After further training by the MOC, the accuracy reaches 100%. With the continuous reduction in training data, the recognition accuracy also decreases. When the training data are reduced to 2000, ResNet34 can learn and extract useful features to a certain extent. However, due to the small amount of data, the learned features are limited, and the recognition accuracy of OAM is greatly reduced to 76.6%. After further training by the MOC, the recognition accuracy is improved, reaching 84.5%.

To further increase the recognition accuracy, we change the structure of the CNN. Both semantic information and resolution information are indispensable for learning and extracting features effectively. More abstract features can be learned by increasing the depth of the network, and features at a larger resolution can be learned by strengthening the convolution module. However, performance cannot be improved by blindly adding layers or strengthening the modules; depth and module design need to be balanced for the problem at hand. The recognition accuracies of GoogLeNet and DenseNet121 are only 36.3% and 56.1%, respectively. GoogLeNet emphasizes module function over network depth, so it fails to fully learn and extract the features and underfits. DenseNet121 attends to both network depth and module function, but with so little data it fits the training data well and generalizes poorly to the test data, that is, it overfits. Since ResNet34 already learns and extracts features well, ResNet50 and ResNet101 improve the performance by adding layers to deepen the network. ResNeXt50 and ResNeXt101 go further by optimizing the module structure on top of the deep network. ResNeXt101, with its optimized module structure and greater depth, achieves the highest recognition accuracy of 86.3%. When the extracted features and corresponding labels are then used to train the MOC, the recognition accuracy improves further to 94.7%.

Feeding the images into the network at their original size provides additional information, so a network with good feature learning and extraction capability can learn richer information. This further improves the performance of the network, and the OAM recognition accuracy reaches 96.4%.

By increasing the depth of the network and optimizing the network structure to enhance the learning and extraction of semantic and resolution information, the recognition accuracy of OAM is improved. By selecting a CNN that can effectively extract semantic and resolution information and then training the MOC on its features, the highest recognition accuracy of OAM is 94.7%. Input that contains more information improves the performance again, and the recognition accuracy exceeds 96%. Therefore, the architecture based on CNN and MOC proposed in this study can recognize the OAM of vortex beams from speckle patterns with high accuracy.

4. Conclusions

In conclusion, we propose a combined CNN and MOC method that successfully identifies the OAM of vortex beams from speckle patterns. Although a traditional CNN can recognize the OAM of vortex beams from speckle patterns, excellent performance requires a large amount of training data. To reduce this dependence, we add an MOC to the CNN. By combining CNNs of different structures with the MOC, the highest recognition accuracy reaches 96.4% even with only a small amount of data to train the network. The proposed network structure offers a solution to the problem of small data.

Author Contributions

Conceptualization, Y.Z., J.P., Z.C., H.Z. and H.W.; methodology, Y.Z., J.P. and Z.C.; software, H.Z. and H.W.; validation, Y.Z., H.Z. and H.W.; formal analysis, Y.Z.; investigation, H.Z. and H.W.; resources, Y.Z., J.P. and Z.C.; data curation, H.Z. and H.W.; writing—original draft preparation, Y.Z.; writing—review and editing, Y.Z.; visualization, H.Z. and H.W.; supervision, Y.Z., J.P. and Z.C.; project administration, Y.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript

Funding

This study was funded by the National Key Laboratory Project, grant number 2021JCJQLB055006, and by the Liaoning Provincial Education Department Scientific Research Project, grant number LJKZ0245.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to acknowledge the experimental equipment and valuable discussion provided by the Fujian Key Laboratory of Optical Transmission and Transformation, China.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Massari, M.; Ruffato, G.; Gintoli, M.; Romanato, F. Fabrication and characterization of high-quality spiral phase plates for optical applications. Appl. Opt. 2015, 54, 4077–4083.

- Gao, Y.; Jiao, S.; Fang, J.; Lei, T.; Xie, Z.; Yuan, X. Multiple-image encryption and hiding with an optical diffractive neural network. Opt. Commun. 2020, 463, 125476.

- Khoury, A.Z.; Souto Ribeiro, P.H.; Dechoum, K. Quantum theory of two-photon vector vortex beams. arXiv 2020, arXiv:2007.03883.

- Gisin, N.; Thew, R. Quantum communication. Nat. Photonics 2007, 1, 165–171.

- Gupta, M.K.; Dowling, J.P. Multiplexing OAM states in an optical fiber: Increase bandwidth of quantum communication and QKD applications. In Proceedings of the APS Division of Atomic, Molecular and Optical Physics Meeting Abstracts, Columbus, OH, USA, 8–12 June 2015; p. M5-M008.

- Hernandez-Garcia, C.; Vieira, J.; Mendonca, J.T. Generation and applications of extreme-ultraviolet vortices. Photonics 2017, 4, 28.

- Pyragaite, V.; Stabinis, A. Free-space propagation of overlapping light vortex beams. Opt. Commun. 2002, 213, 187–192.

- Berkhout, G.C.; Lavery, M.P.; Courtial, J.; Beijersbergen, M.W.; Padgett, M.J. Efficient sorting of orbital angular momentum states of light. Phys. Rev. Lett. 2010, 105, 153601.

- Lavery, M.P.; Courtial, J.; Padgett, M. Measurement of light’s orbital angular momentum. In The Angular Momentum of Light; Cambridge University Press: Cambridge, UK, 2013; p. 330.

- Padgett, M.; Arlt, J.; Simpson, N.; Allen, L. An experiment to observe the intensity and phase structure of Laguerre–Gaussian laser modes. Am. J. Phys. 1996, 64, 77–82.

- Khajavi, B.; Gonzales Ureta, J.R.; Galvez, E.J. Determining vortex-beam superpositions by shear interferometry. Photonics 2018, 5, 16.

- Azimirad, V.; Ramezanlou, M.T.; Sotubadi, S.V. A consecutive hybrid spiking-convolutional (CHSC) neural controller for sequential decision making in robots. Neurocomputing 2022, 490, 319–336.

- Nabizadeh, M.; Singh, A.; Jamali, S. Structure and dynamics of force clusters and networks in shear thickening suspensions. Phys. Rev. Lett. 2022, 129, 068001.

- Mozaffari, H.; Houmansadr, A. E2FL: Equal and equitable federated learning. arXiv 2022, arXiv:2205.10454.

- Roshani, G.H.; Hanus, R.; Khazaei, A. Density and velocity determination for single-phase flow based on radiotracer technique and neural networks. Flow Meas. Instrum. 2018, 61, 9–14.

- Krenn, M.; Fickler, R.; Fink, M.; Handsteiner, J.; Malik, M.; Scheidl, T.; Ursin, R.; Zeilinger, A. Communication with spatially modulated light through turbulent air across Vienna. New J. Phys. 2014, 16, 113028.

- Knutson, E.; Lohani, S.; Danaci, O.; Huver, S.D.; Glasser, R.T. Deep learning as a tool to distinguish between high orbital angular momentum optical modes. In Optics and Photonics for Information Processing X; SPIE: Bellingham, WA, USA, 2016; pp. 236–242.

- Doster, T.; Watnik, A.T. Machine learning approach to OAM beam demultiplexing via convolutional neural networks. Appl. Opt. 2017, 56, 3386–3396.

- Li, J.; Zhang, M.; Wang, D. Adaptive demodulator using machine learning for orbital angular momentum shift keying. IEEE Photonics Technol. Lett. 2017, 29, 1455–1458.

- Huang, H.; Milione, G.; Lavery, M.P.; Xie, G.; Ren, Y.; Cao, Y.; Ahmed, N.; An Nguyen, T.; Nolan, D.A.; Li, M.J. Mode division multiplexing using an orbital angular momentum mode sorter and MIMO-DSP over a graded-index few-mode optical fibre. Sci. Rep. 2015, 5, 14931.

- Bozinovic, N.; Golowich, S.; Kristensen, P.; Ramachandran, S. Control of orbital angular momentum of light with optical fibers. Opt. Lett. 2012, 37, 2451–2453.

- Gregg, P.; Kristensen, P.; Ramachandran, S. Conservation of orbital angular momentum in air-core optical fibers. Optica 2015, 2, 267–270.

- Chen, L.; Singh, R.K.; Dogariu, A.; Chen, Z.; Pu, J. Estimating topological charge of propagating vortex from single-shot non-imaged speckle. Chin. Opt. Lett. 2021, 19, 022603.

- Wang, Z.; Lai, X.; Huang, H.; Li, H.; Chen, Z.; Han, J.; Pu, J. Recognizing the orbital angular momentum (OAM) of vortex beams from speckle patterns. Sci. China Phys. Mech. Astron. 2022, 65, 244211.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 1492–1500.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4700–4708.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 1–9.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 22–24 June 2009; pp. 248–255.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).