Design of Dual-Focal-Plane AR-HUD Optical System Based on a Single Picture Generation Unit and Two Freeform Mirrors

Abstract

1. Introduction

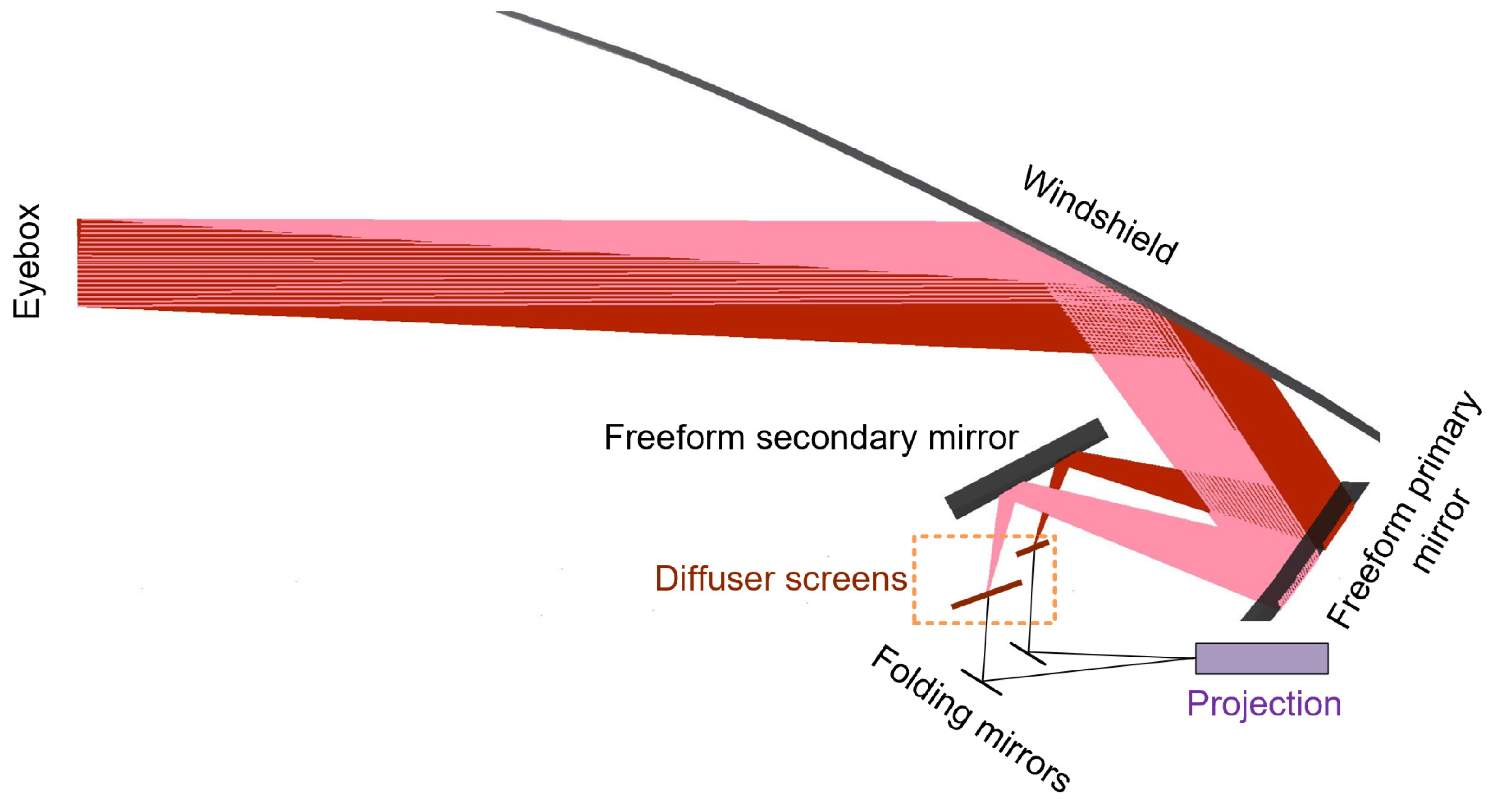

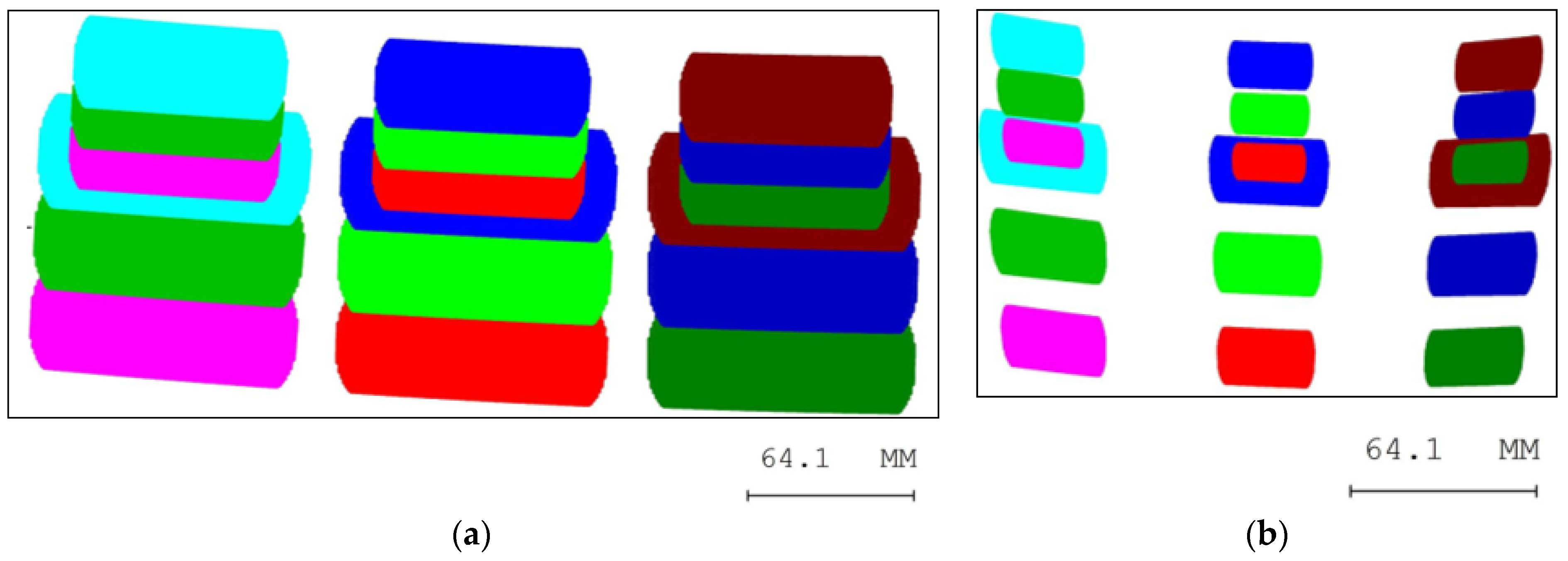

2. Dual-Focal-Plane AR-HUD Structure and Principle

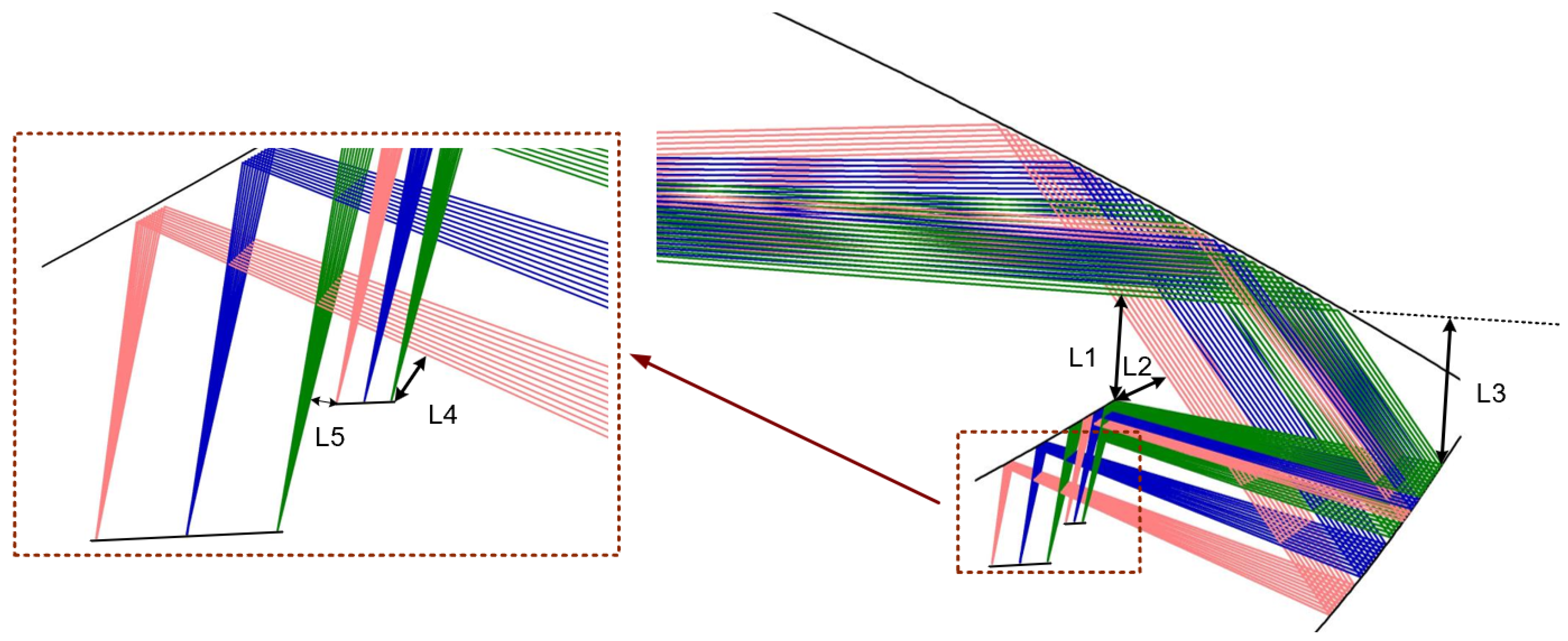

3. AR-HUD Optical System Design

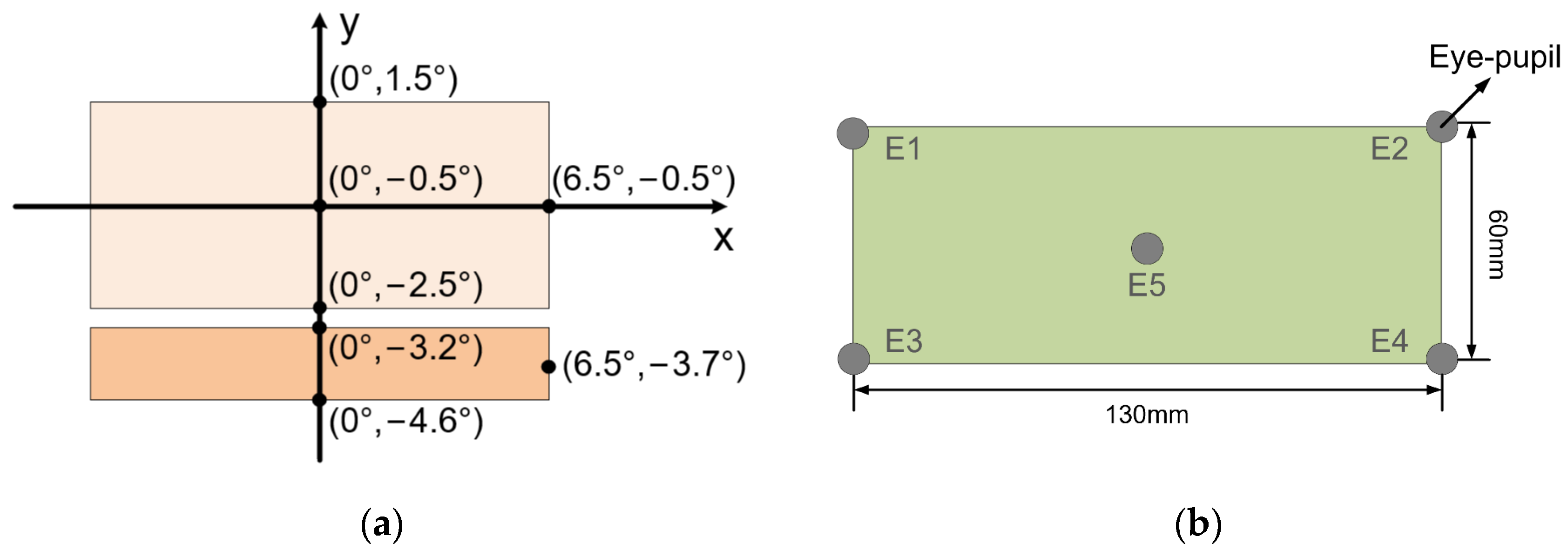

3.1. Design Considerations

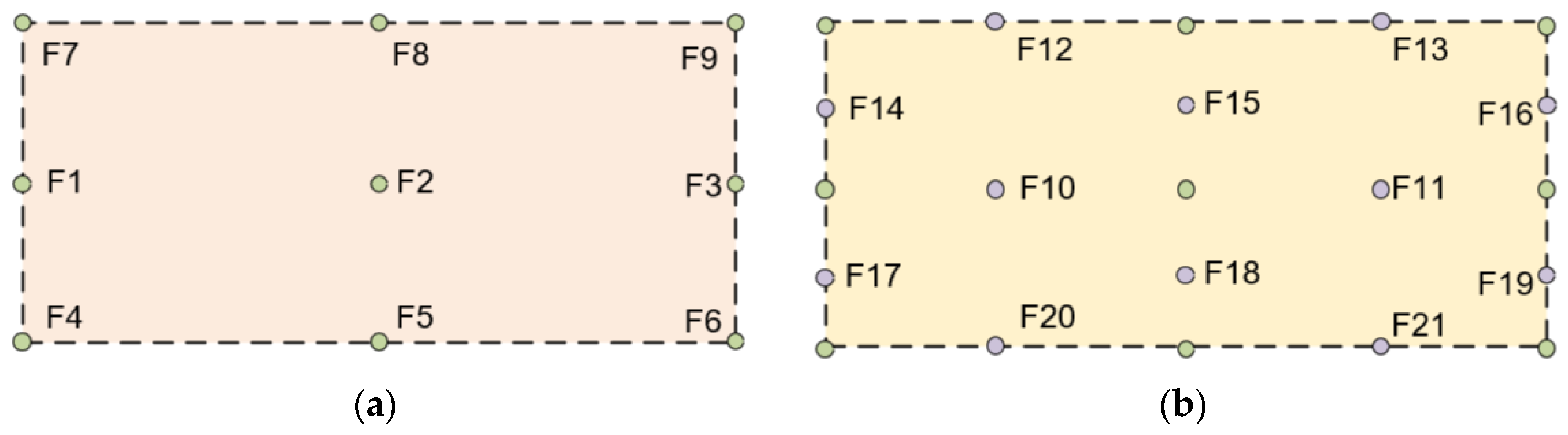

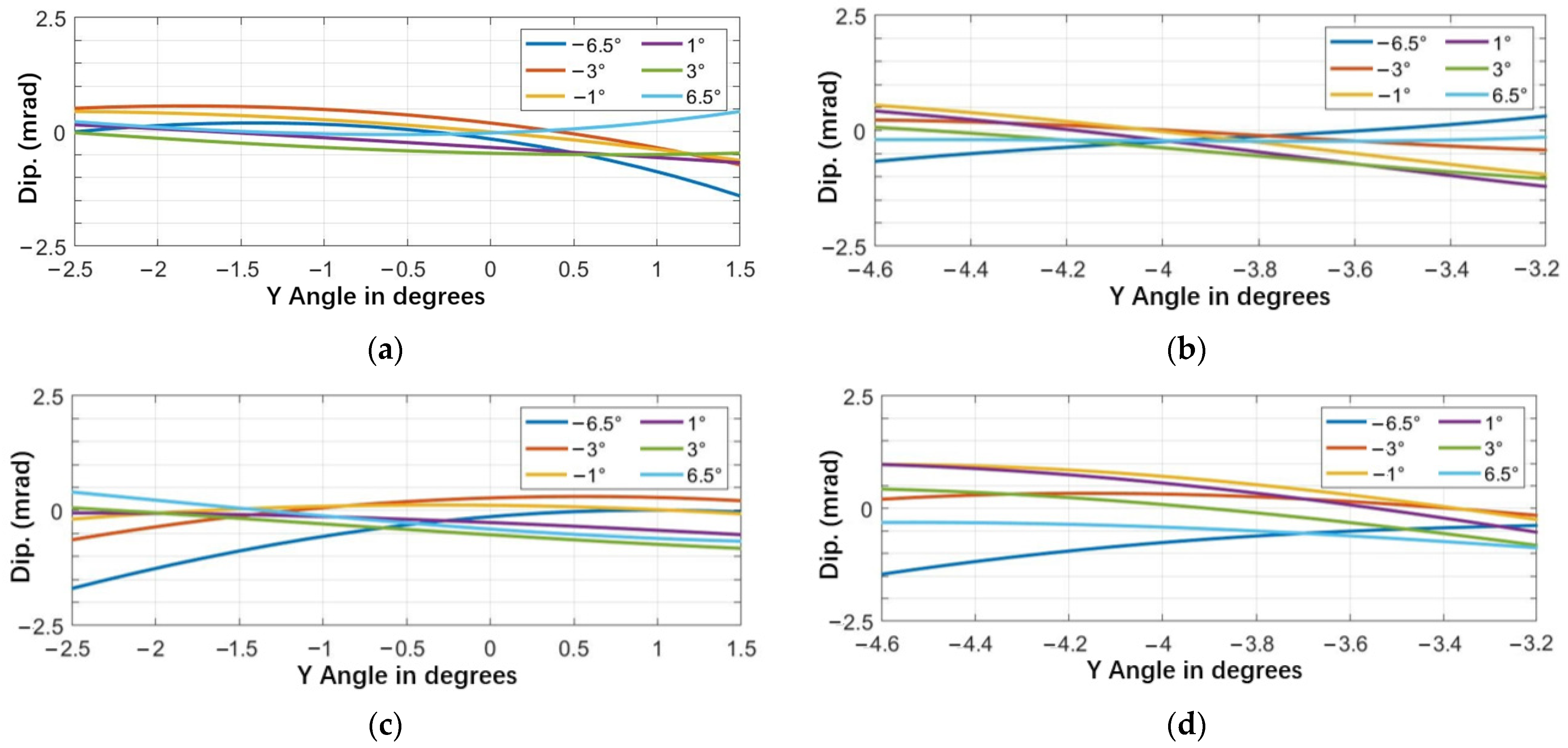

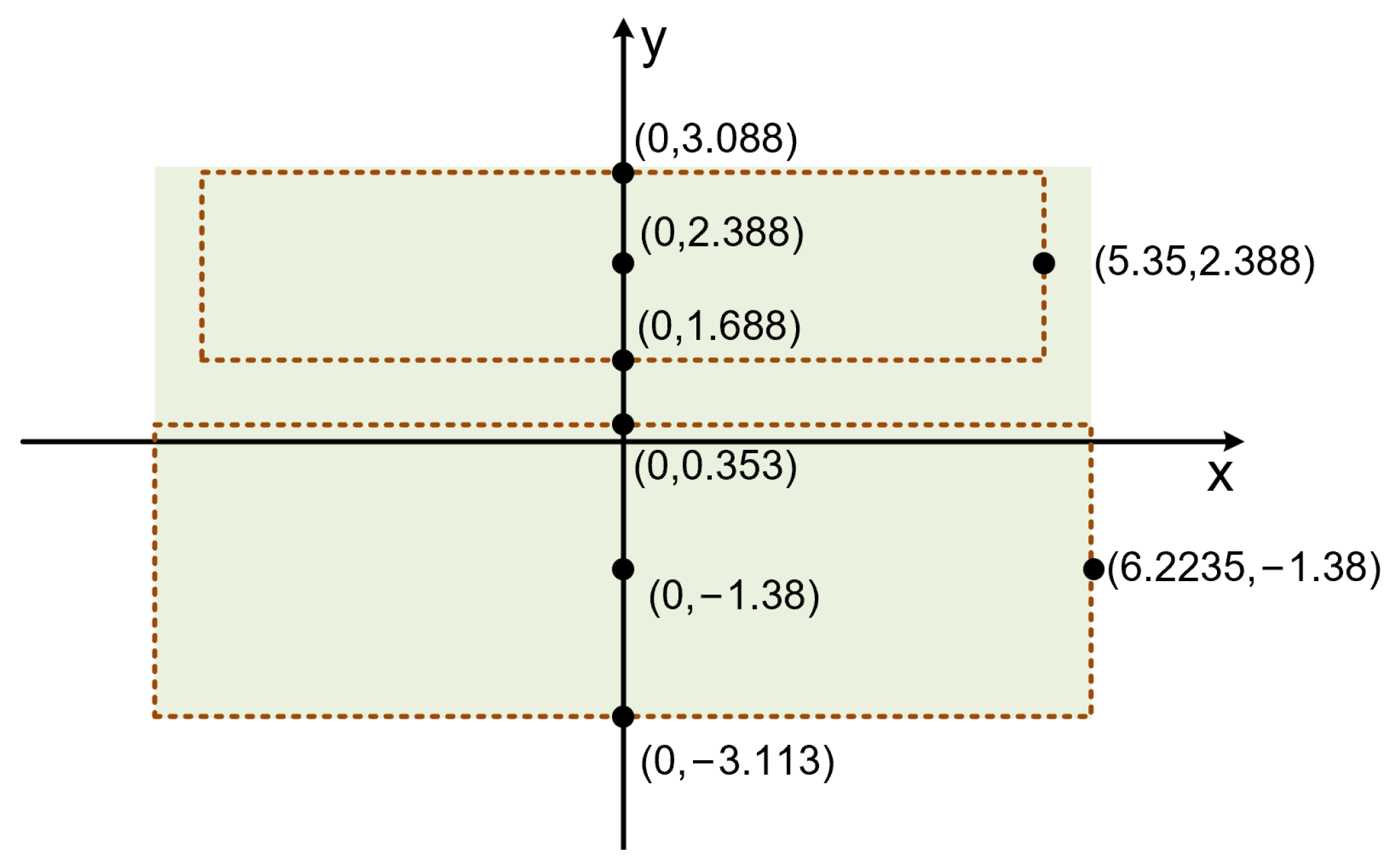

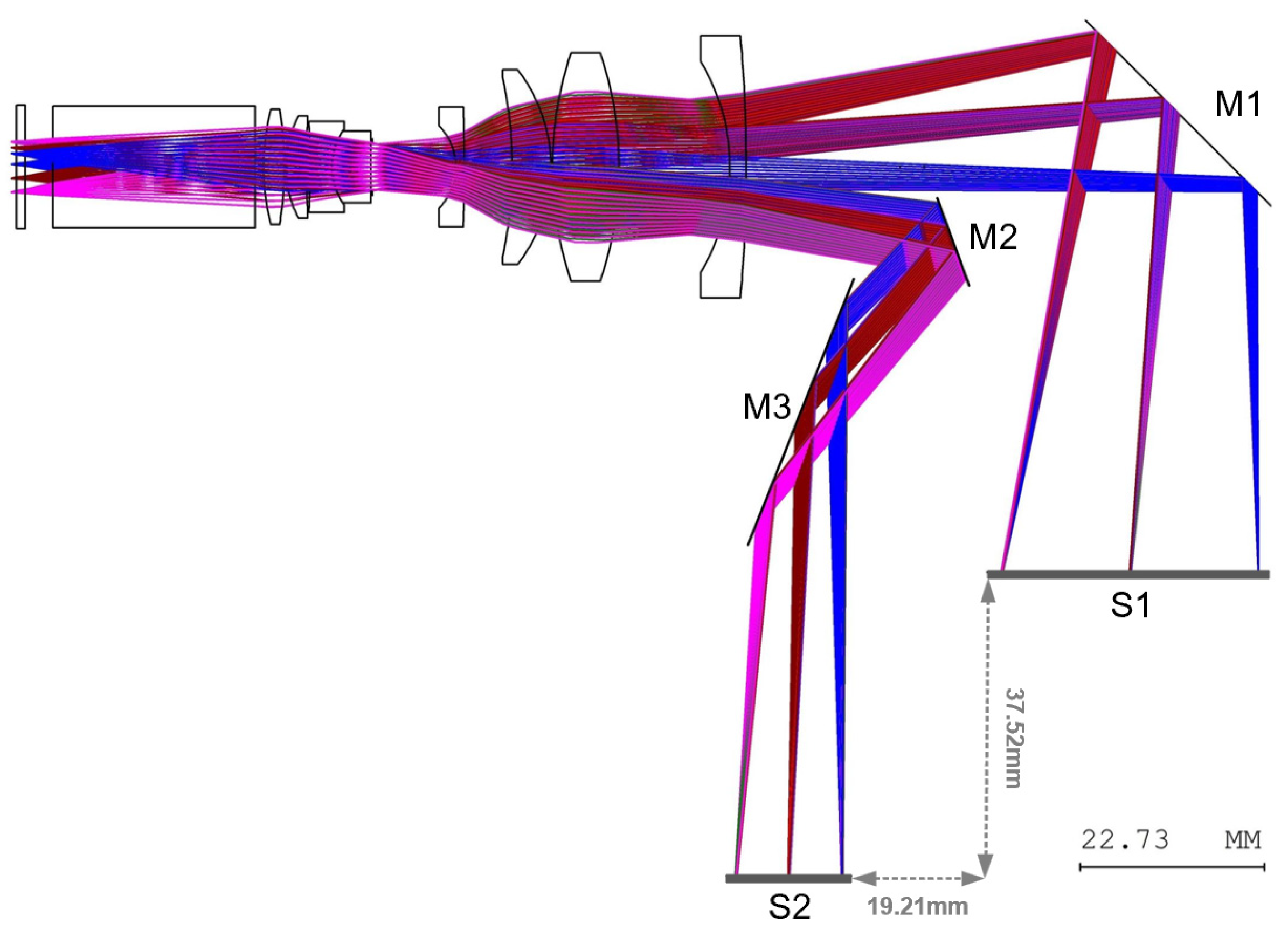

3.2. Optical Design Optimization

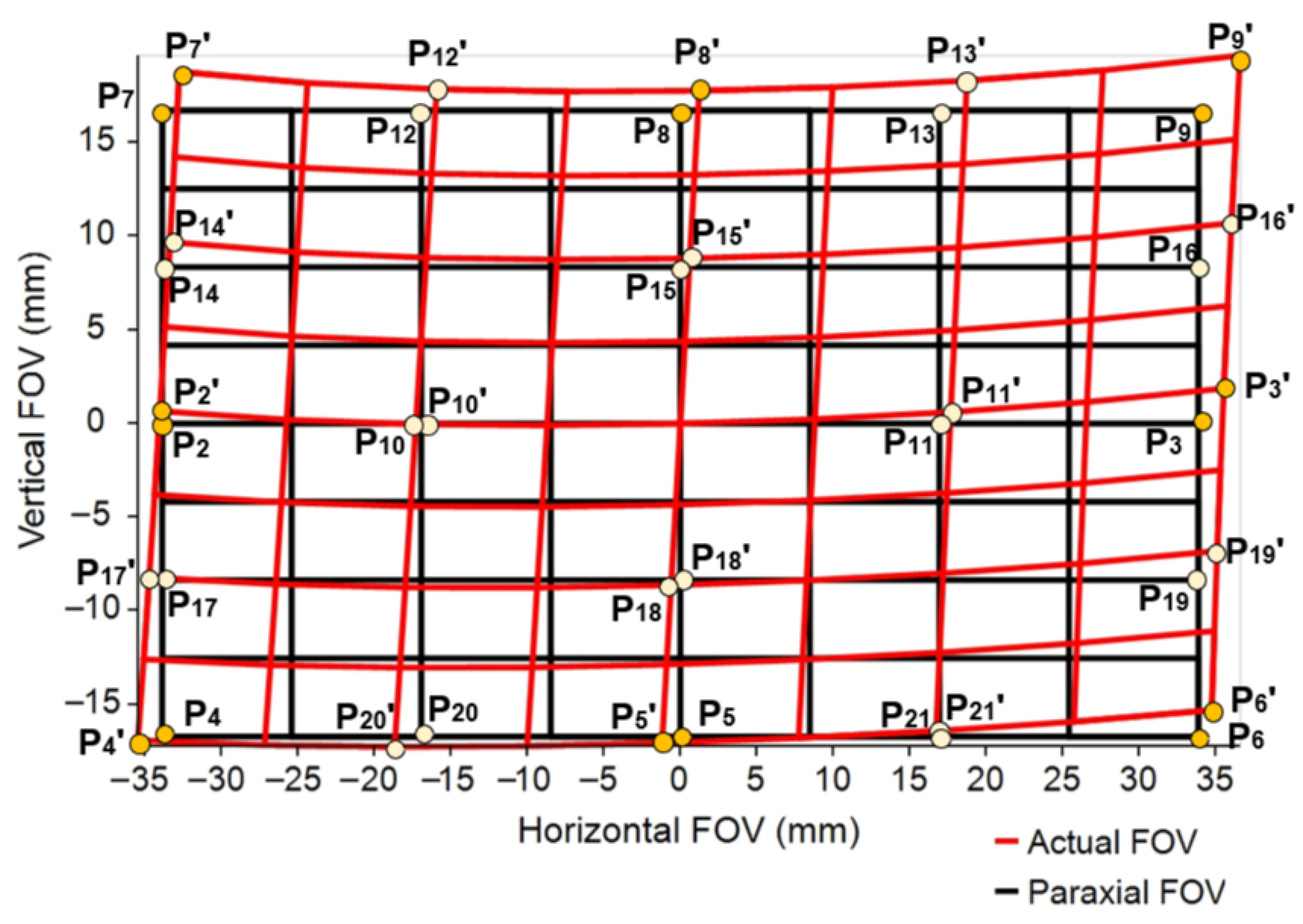

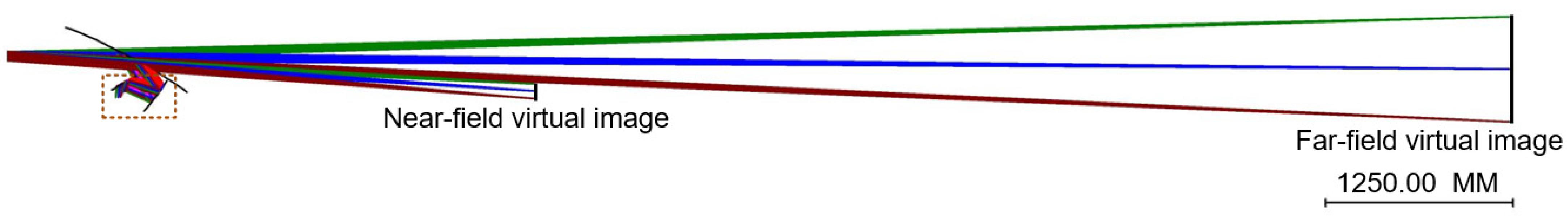

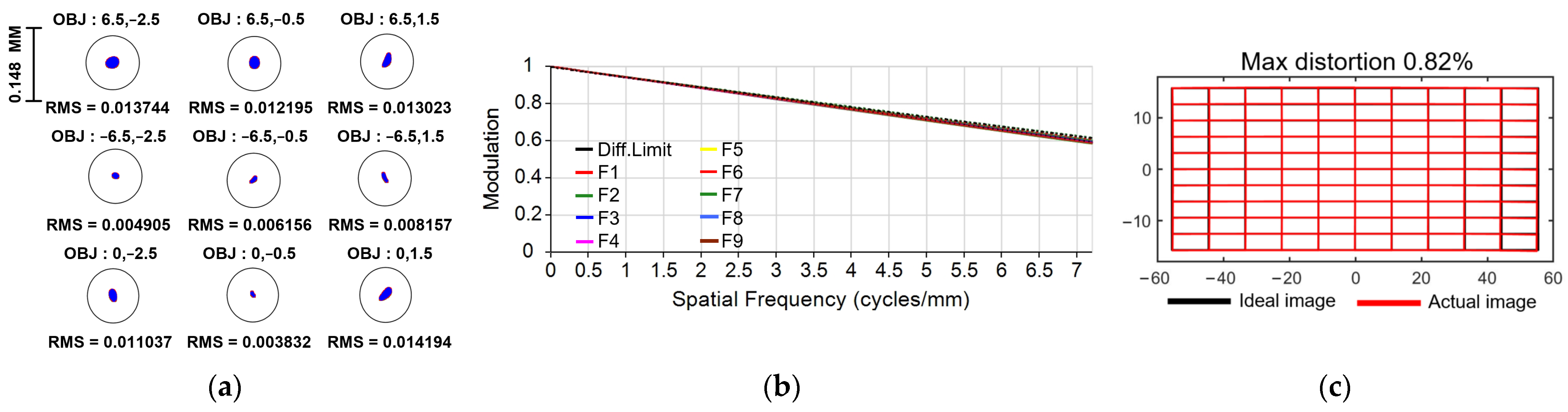

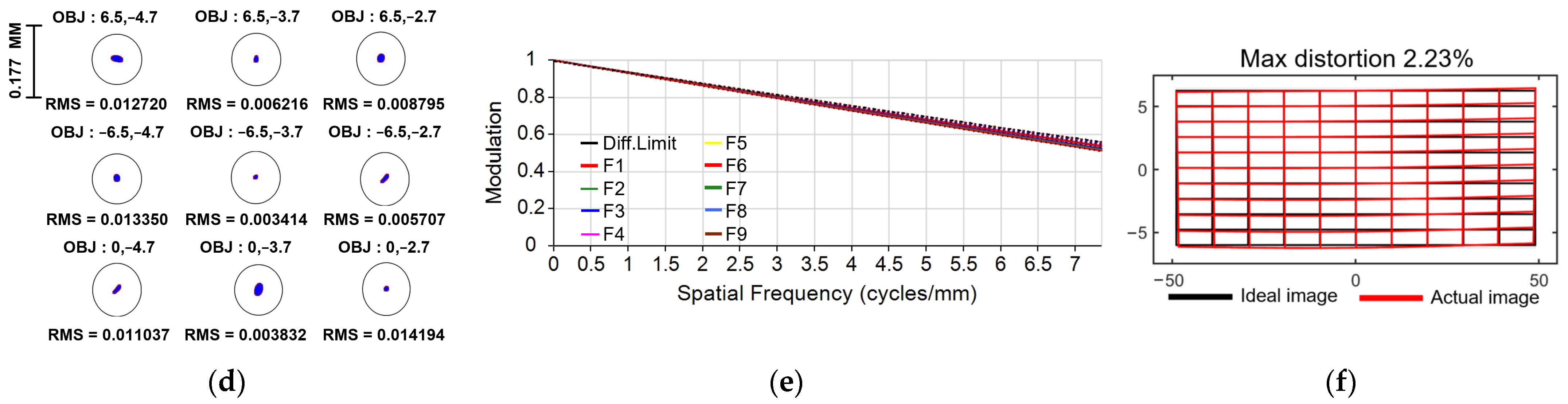

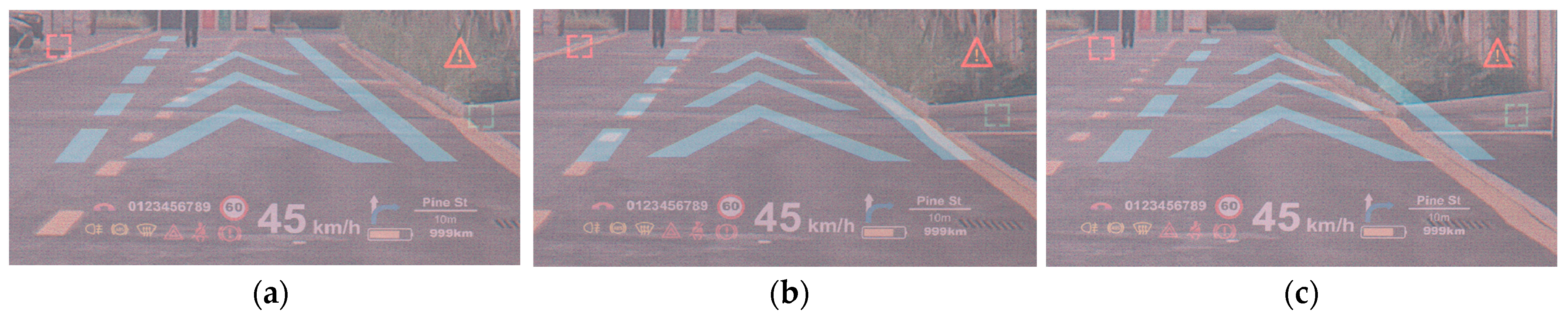

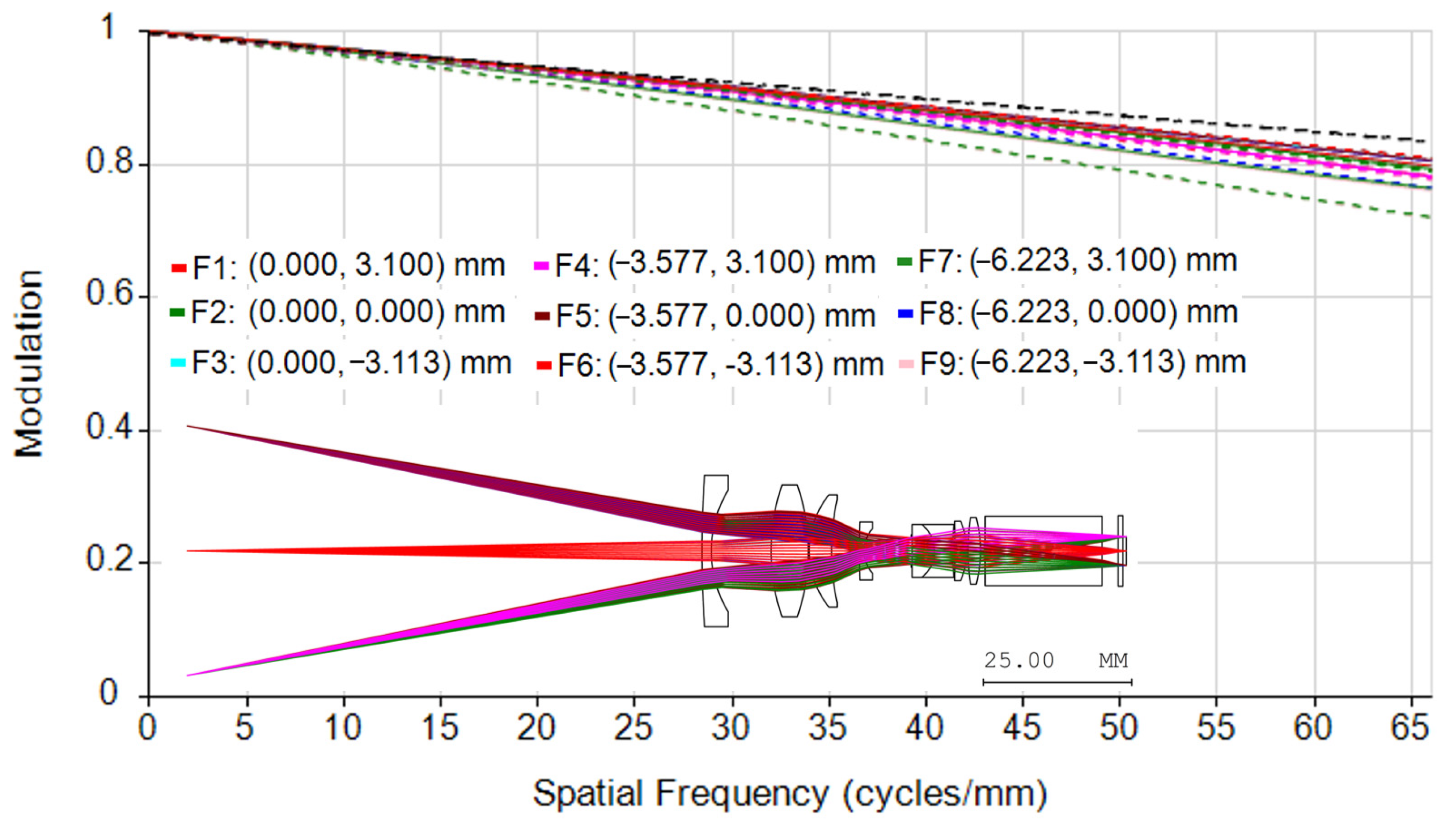

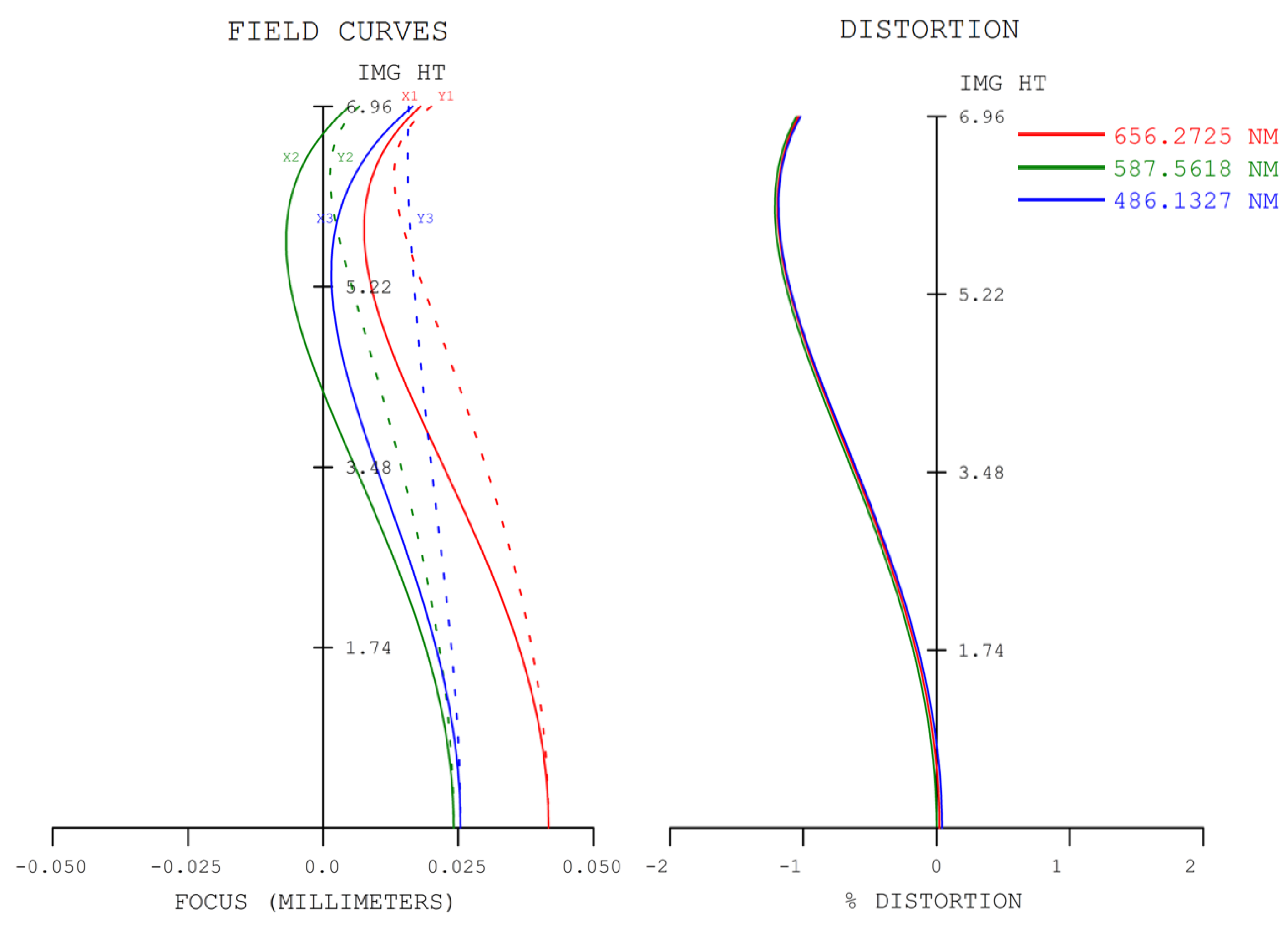

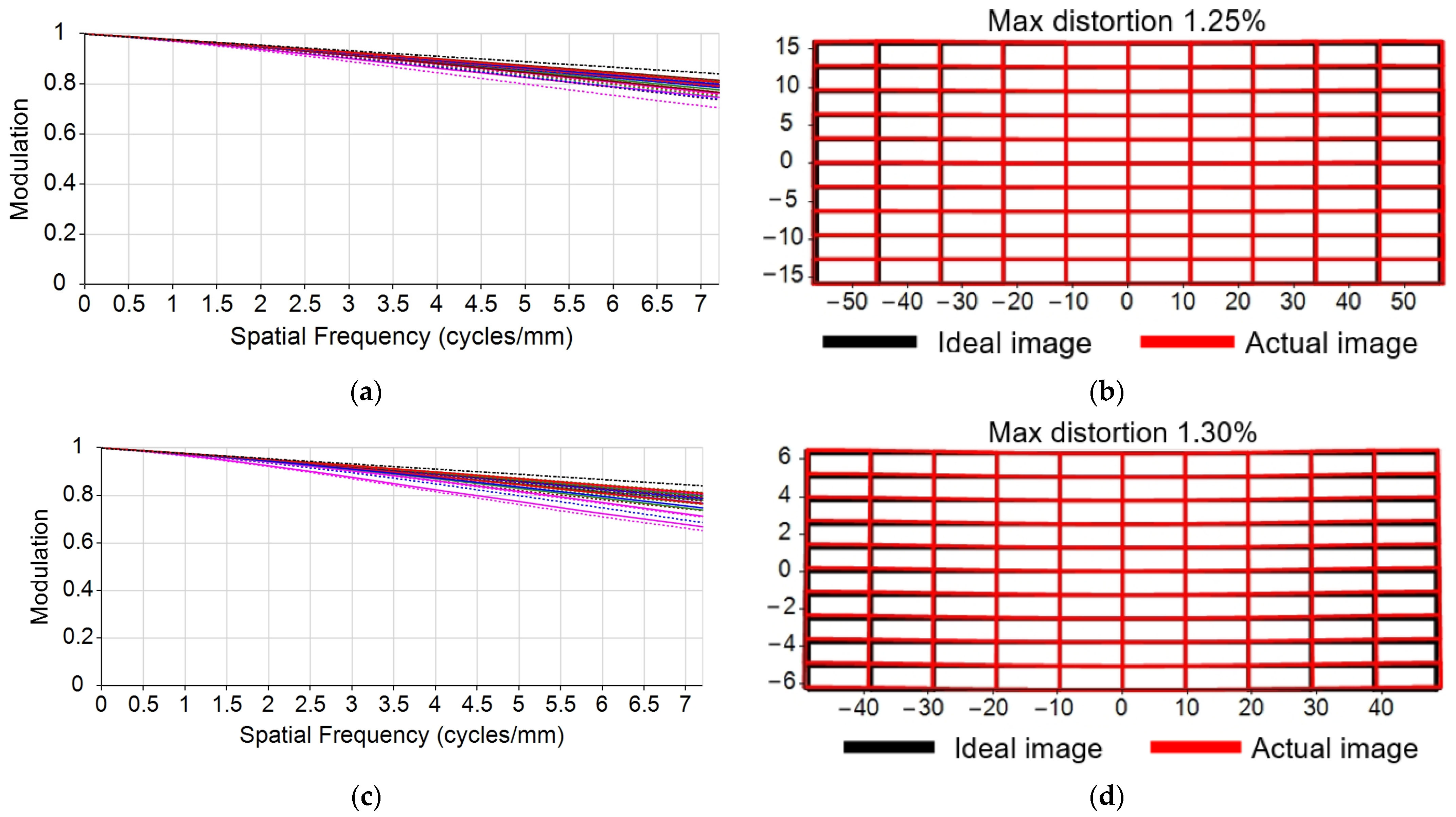

3.3. Performance Analysis

4. Projection Lens Design and Overall Evaluation

5. Discussion of Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, Y.C.; Wen, M.H. Comparison of head-up display (HUD) vs. head-down display (HDD): Driving performance of commercial vehicle operators in Taiwan. Int. J. Hum. Comput. Stud. 2004, 61, 679–697.

2. Smith, S.; Fu, S.H. The relationships between automobile head-up display presentation images and drivers’ Kansei. Displays 2011, 32, 58–68.

3. Qin, Z.; Lin, F.-C.; Huang, Y.-P.; Shieh, H.-P.D. Maximal acceptable ghost images for designing a legible windshield-type vehicle head-up display. IEEE Photonics J. 2017, 9, 7000812.

4. Horrey, W.J.; Wickens, C.D.; Alexander, A.L. The Effects of Head-Up Display Clutter and In-Vehicle Display Separation on Concurrent Driving Performance. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2003, 47, 1880–1884.

5. Mahajan, S.; Khedkar, S.B.; Kasav, S.M. Head-up display techniques in cars. Int. J. Eng. Sci. Innov. Technol. 2015, 4, 119–124.

6. Ott, P. Optic design of head-up displays with freeform surfaces specified by NURBS. In Proceedings of the Optical Design and Engineering III, Glasgow, UK, 27 September 2008; pp. 339–350.

7. Wei, S.; Fan, Z.; Zhu, Z.; Ma, D. Design of a head-up display based on freeform reflective systems for automotive applications. Appl. Opt. 2019, 58, 1675–1681.

8. Kim, K.-H.; Park, S.-C. Design of confocal off-axis two-mirror system for head-up display. Appl. Opt. 2019, 58, 677–683.

9. Ting, Z.; Jianyu, H.; Linsen, C.; Wen, Q. Status and prospect of augmented reality head up display. Laser Optoelectron. Prog. 2023, 60, 0811008–0811014.

10. Gabbard, J.L.; Fitch, G.M.; Kim, H. Behind the glass: Driver challenges and opportunities for AR automotive applications. Proc. IEEE 2014, 102, 124–136.

11. Fan, R.; Wei, S.; Ji, H.; Qian, Z.; Tan, H.; Mo, Y.; Ma, D. Automated design of freeform imaging systems for automotive heads-up display applications. Opt. Express 2023, 31, 10758–10774.

12. Lee, J.-W.; Yoon, C.-R.; Kang, J.; Park, B.-J.; Kim, K.-H. Development of lane-level guidance service in vehicle augmented reality system. In Proceedings of the 2015 17th International Conference on Advanced Communication Technology (ICACT), Pyeongchang, Republic of Korea, 1–3 July 2015; pp. 263–266.

13. Betancur, J.A.; Villa-Espinal, J.; Osorio-Gómez, G.; Cuéllar, S.; Suárez, D. Research topics and implementation trends on automotive head-up display systems. Int. J. Interact. Des. Manuf. 2018, 12, 199–214.

14. Seo, J.H.; Yoon, C.Y.; Oh, J.H.; Kang, S.B.; Yang, C.; Lee, M.R.; Han, Y.H. 59-4: A Study on Multi-depth Head-Up Display. SID 2017, 48, 883–885.

15. Foryou Multimedia Electronics Co., Ltd. Available online: http://www.adayome.com/E_detail01.html (accessed on 3 August 2023).

16. Kim, K.-H.; Park, S.-C. Optical System Design and Evaluation for an Augmented Reality Head-up Display Using Aberration and Parallax Analysis. Curr. Opt. Photonics 2021, 5, 660–671.

17. Kong, X.; Xue, C. Optical design of dual-focal-plane head-up display based on dual picture generation units. Acta Opt. Sin. 2022, 42, 1422003.

18. Başak, U.Y.; Kazempourradi, S.; Ulusoy, E.; Ürey, H. Wide field-of-view dual-focal-plane augmented reality display. In Proceedings of the Advances in Display Technologies IX, San Francisco, CA, USA, 6–7 February 2019; pp. 62–68.

19. Ma, S.; Hong, T.; Shi, B.; Li, D.; Wu, N.; Zhou, C. 57.6: Stray Light Suppression for a Dual Depth HUD System. SID 2019, 50, 635–637.

20. Shi, B.; Hong, T.; Wei, W.; Li, D.; Yang, F.; Wang, X.; Wu, N.; Zhou, C. 34.3: A Dual Depth Head Up Display System for Vehicle. SID 2018, 49, 371–374.

21. Qin, Z.; Lin, S.M.; Lu, K.T.; Chen, C.H.; Huang, Y.P. Dual-focal-plane augmented reality head-up display using a single picture generation unit and a single freeform mirror. Appl. Opt. 2019, 58, 5366–5374.

22. Liu, S.; Hua, H.; Cheng, D. A novel prototype for an optical see-through head-mounted display with addressable focus cues. IEEE Trans. Vis. Comput. Graph. 2009, 16, 381–393.

23. Li, L.; Wang, Q.-H.; Jiang, W. Liquid lens with double tunable surfaces for large power tunability and improved optical performance. J. Opt. 2011, 13, 115503.

24. Zhan, T.; Lee, Y.-H.; Tan, G.; Xiong, J.; Yin, K.; Gou, F.; Zou, J.; Zhang, N.; Zhao, D.; Yang, J. Pancharatnam–Berry optical elements for head-up and near-eye displays. JOSA B 2019, 36, D52–D65.

25. Chen, H.-S.; Wang, Y.-J.; Chen, P.-J.; Lin, Y.-H. Electrically adjustable location of a projected image in augmented reality via a liquid-crystal lens. Opt. Express 2015, 23, 28154–28162.

26. Christmas, J.; Collings, N. 75-2: Invited Paper: Realizing Automotive Holographic Head Up Displays. SID 2016, 47, 1017–1020.

27. Wakunami, K.; Hsieh, P.-Y.; Oi, R.; Senoh, T.; Sasaki, H.; Ichihashi, Y.; Okui, M.; Huang, Y.-P.; Yamamoto, K. Projection-type see-through holographic three-dimensional display. Nat. Commun. 2016, 7, 12954.

28. Teich, M.; Schuster, T.; Leister, N.; Zozgornik, S.; Fugal, J.; Wagner, T.; Zschau, E.; Häussler, R.; Stolle, H. Real-time, large-depth holographic 3D head-up display: Selected aspects. Appl. Opt. 2022, 61, B156–B163.

29. Yang, T.; Duan, Y.; Cheng, D.; Wang, Y. Freeform imaging optical system design: Theories, development, and applications. Acta Opt. Sin. 2021, 41, 0108001.

30. Gish, K.W.; Staplin, L. Human Factors Aspects of Using Head Up Displays in Automobiles: A Review of the Literature; Report DOT HS 808 320; National Highway Traffic Safety Administration, U.S. Department of Transportation: Washington, DC, USA, 1995.

31. AS8055; Minimum Performance Standard for Airborne Head up Display (HUD). SAE International: Warrendale, PA, USA, 2015.

32. SAIC Volkswagen Automotive Co., Ltd. Available online: https://www.svw-volkswagen.com/id4x/ (accessed on 18 September 2023).

| Parameter | Value |
|---|---|
| Field of view (FOV) | Far: 13° × 4°; Near: 13° × 1.4° |
| Virtual image distance (VID) | Far: 10 m; Near: 3.5 m |
| Eyebox size | 130 mm × 60 mm |
| Distortion | <5% |
| Pupil diameter | 6 mm |
| Modulation transfer function (MTF) at Nyquist frequency | >0.3 |
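The specification table fixes each virtual image by an angular FOV and a VID. As a minimal sketch, assuming the FOV is symmetric about the driver's line of sight (the table does not state this), the linear size of each image and the dioptric spacing between the two focal planes follow from size = 2 · VID · tan(FOV/2) and vergence = 1/VID. The names `SPECS`, `virtual_image_size_mm` and `vergence_diopters` are illustrative only.

```python
import math

# Design targets from the specification table: FOV in degrees, VID in metres.
SPECS = {
    "far": {"fov_h_deg": 13.0, "fov_v_deg": 4.0, "vid_m": 10.0},
    "near": {"fov_h_deg": 13.0, "fov_v_deg": 1.4, "vid_m": 3.5},
}


def virtual_image_size_mm(fov_deg: float, vid_m: float) -> float:
    """Full extent (mm) of a virtual image subtending fov_deg at vid_m metres.

    Assumes the field is symmetric about the line of sight, so the size is
    2 * VID * tan(FOV / 2).
    """
    return 2.0 * vid_m * 1000.0 * math.tan(math.radians(fov_deg) / 2.0)


def vergence_diopters(vid_m: float) -> float:
    """Vergence of an image at vid_m metres, in diopters (1/m)."""
    return 1.0 / vid_m


if __name__ == "__main__":
    for plane, s in SPECS.items():
        width = virtual_image_size_mm(s["fov_h_deg"], s["vid_m"])
        height = virtual_image_size_mm(s["fov_v_deg"], s["vid_m"])
        print(f"{plane}: {width:.0f} mm x {height:.0f} mm at {vergence_diopters(s['vid_m']):.3f} D")
    separation = vergence_diopters(SPECS["near"]["vid_m"]) - vergence_diopters(SPECS["far"]["vid_m"])
    print(f"focal-plane separation: {separation:.3f} D")
```

Run as a script, it reports roughly 2.28 m × 0.70 m for the far image and 0.80 m × 0.09 m for the near image, with the two planes about 0.19 D apart.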

| xᵐyⁿ term | PM | SM |
|---|---|---|
| x | / | −3.690 × 10⁻⁵ |
| y | / | −9.107 × 10⁻¹⁷ |
| x² | −7.694 × 10⁻⁵ | −4.278 × 10⁻⁴ |
| xy | 2.114 × 10⁻⁵ | −2.338 × 10⁻⁵ |
| y² | 2.352 × 10⁻⁴ | −3.614 × 10⁻⁵ |
| x³ | −3.691 × 10⁻⁸ | −1.816 × 10⁻⁷ |
| x²y | 1.411 × 10⁻⁷ | 1.261 × 10⁻⁶ |
| xy² | −4.745 × 10⁻⁸ | −9.538 × 10⁻⁸ |
| y³ | 2.666 × 10⁻⁷ | −9.404 × 10⁻⁷ |
| x⁴ | −1.047 × 10⁻¹⁰ | 1.749 × 10⁻¹⁰ |
| x³y | −3.501 × 10⁻¹² | 2.898 × 10⁻¹⁰ |
| x²y² | 2.373 × 10⁻¹⁰ | 1.284 × 10⁻¹⁰ |
| xy³ | 9.535 × 10⁻¹⁰ | 3.851 × 10⁻⁹ |
| y⁴ | 4.867 × 10⁻⁹ | 1.407 × 10⁻⁸ |
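The coefficient table gives only the polynomial part of each freeform mirror. A common XY-polynomial sag form is z(x, y) = c·r² / (1 + √(1 − (1 + k)·c²·r²)) + Σ A_mn·xᵐ·yⁿ, with r² = x² + y². The sketch below evaluates that form under several stated assumptions: coordinates and sag in millimetres; no normalization radius (some design programs divide x and y by one, which changes how the coefficients must be read); a flat placeholder base (c = k = 0), because the vertex curvature and conic constant are not listed here; and the fourth-order mixed term between x²y² and y⁴ read as xy³. It is not the authors' code, and all names are hypothetical.

```python
import math

# XY-polynomial coefficients A_mn (term x^m * y^n) from the coefficient table.
# Keys are (m, n). Terms marked "/" for the PM are simply omitted.
PM_COEFFS = {
    (2, 0): -7.694e-5, (1, 1): 2.114e-5, (0, 2): 2.352e-4,
    (3, 0): -3.691e-8, (2, 1): 1.411e-7, (1, 2): -4.745e-8, (0, 3): 2.666e-7,
    (4, 0): -1.047e-10, (3, 1): -3.501e-12, (2, 2): 2.373e-10,
    (1, 3): 9.535e-10,  # assumed xy^3: the fourth-order slot between x^2y^2 and y^4
    (0, 4): 4.867e-9,
}
SM_COEFFS = {
    (1, 0): -3.690e-5, (0, 1): -9.107e-17,
    (2, 0): -4.278e-4, (1, 1): -2.338e-5, (0, 2): -3.614e-5,
    (3, 0): -1.816e-7, (2, 1): 1.261e-6, (1, 2): -9.538e-8, (0, 3): -9.404e-7,
    (4, 0): 1.749e-10, (3, 1): 2.898e-10, (2, 2): 1.284e-10,
    (1, 3): 3.851e-9,  # same xy^3 assumption as for the PM
    (0, 4): 1.407e-8,
}


def conic_sag(x: float, y: float, c: float = 0.0, k: float = 0.0) -> float:
    """Base conic sag with vertex curvature c (1/mm) and conic constant k."""
    r2 = x * x + y * y
    return c * r2 / (1.0 + math.sqrt(1.0 - (1.0 + k) * c * c * r2))


def polynomial_sag(coeffs: dict, x: float, y: float) -> float:
    """Sum of A_mn * x^m * y^n over the tabulated terms (no normalization radius)."""
    return sum(a * x**m * y**n for (m, n), a in coeffs.items())


def xy_polynomial_sag(coeffs: dict, x: float, y: float, c: float = 0.0, k: float = 0.0) -> float:
    """Total sag z(x, y) = conic base + polynomial departure.

    c and k default to 0 (flat base) only as placeholders: the base curvature
    and conic constant of each mirror are not given in the table.
    """
    return conic_sag(x, y, c, k) + polynomial_sag(coeffs, x, y)


if __name__ == "__main__":
    for name, coeffs in (("PM", PM_COEFFS), ("SM", SM_COEFFS)):
        for x, y in ((0.0, 0.0), (10.0, 0.0), (0.0, 10.0)):
            print(f"{name} polynomial sag at ({x}, {y}) mm: {polynomial_sag(coeffs, x, y):.6e} mm")
```

Evaluating `polynomial_sag` over a grid of (x, y) points is one quick way to check that transcribed coefficients give a smooth surface before they are imported into a design program.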

| | Qin's [21] | Shi's [20] | Volkswagen ID4's [32] | Ours |
|---|---|---|---|---|
| FOV | Far: 10° × 3°; Near: 6° × 2° | Far: 10° × 5°; Near: 5° × 1° | Far: 9° × 4°; Near: 7° × 1° | Far: 13° × 4°; Near: 13° × 1.4° |
| VID | Far: 9 m; Near: 2.5 m | Far: 7.8 m; Near: 2.7 m | Far: 10 m; Near: 3 m | Far: 10 m; Near: 3.5 m |
| Eyebox size | 120 mm × 60 mm | 150 mm × 80 mm | / | 130 mm × 60 mm |
| Volume | 8.5 L | 30 L | 14 L | 16 L |
| PGU | Single LCD | Two LCDs | Two LCDs | Single DLP |
| Distortion | <3.05% | <3.60% | / | <3.58% |
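Two derived quantities make the comparison table easier to read: the dioptric separation between the near and far images (1/VID_near − 1/VID_far) and a rough combined angular area of the two fields (width × height, summed over both planes). The sketch below computes both from the tabulated values. `HudDesign` and the helper names are hypothetical, and the angular area is a small-angle approximation rather than a true solid angle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HudDesign:
    """One column of the comparison table; FOVs are (horizontal, vertical) in degrees."""
    name: str
    far_fov_deg: tuple
    near_fov_deg: tuple
    far_vid_m: float
    near_vid_m: float
    volume_l: float


DESIGNS = [
    HudDesign("Qin [21]", (10.0, 3.0), (6.0, 2.0), 9.0, 2.5, 8.5),
    HudDesign("Shi [20]", (10.0, 5.0), (5.0, 1.0), 7.8, 2.7, 30.0),
    HudDesign("Volkswagen ID4 [32]", (9.0, 4.0), (7.0, 1.0), 10.0, 3.0, 14.0),
    HudDesign("Ours", (13.0, 4.0), (13.0, 1.4), 10.0, 3.5, 16.0),
]


def focal_plane_separation_d(d: HudDesign) -> float:
    """Dioptric gap between the near and far virtual images: 1/VID_near - 1/VID_far."""
    return 1.0 / d.near_vid_m - 1.0 / d.far_vid_m


def combined_fov_deg2(d: HudDesign) -> float:
    """Rough angular area of both images, taken as width x height in square degrees.

    This is a small-angle approximation, not a true solid angle.
    """
    return d.far_fov_deg[0] * d.far_fov_deg[1] + d.near_fov_deg[0] * d.near_fov_deg[1]


if __name__ == "__main__":
    for d in DESIGNS:
        print(f"{d.name:<22} separation {focal_plane_separation_d(d):.3f} D, "
              f"FOV area {combined_fov_deg2(d):.1f} deg^2, volume {d.volume_l} L")
```

With the tabulated inputs, the separations come out at about 0.289 D, 0.242 D, 0.233 D and 0.186 D, and the combined areas at 42.0, 55.0, 43.0 and 70.2 deg², in table order.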

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, C.; Kong, L.; Yang, B.; Wan, X. Design of Dual-Focal-Plane AR-HUD Optical System Based on a Single Picture Generation Unit and Two Freeform Mirrors. Photonics 2023, 10, 1192. https://doi.org/10.3390/photonics10111192
