Positioning of a Photovoltaic Device on a Real Two-Dimensional Plane in Optical Wireless Power Transmission by Means of Infrared Differential Absorption Imaging

Abstract

1. Introduction

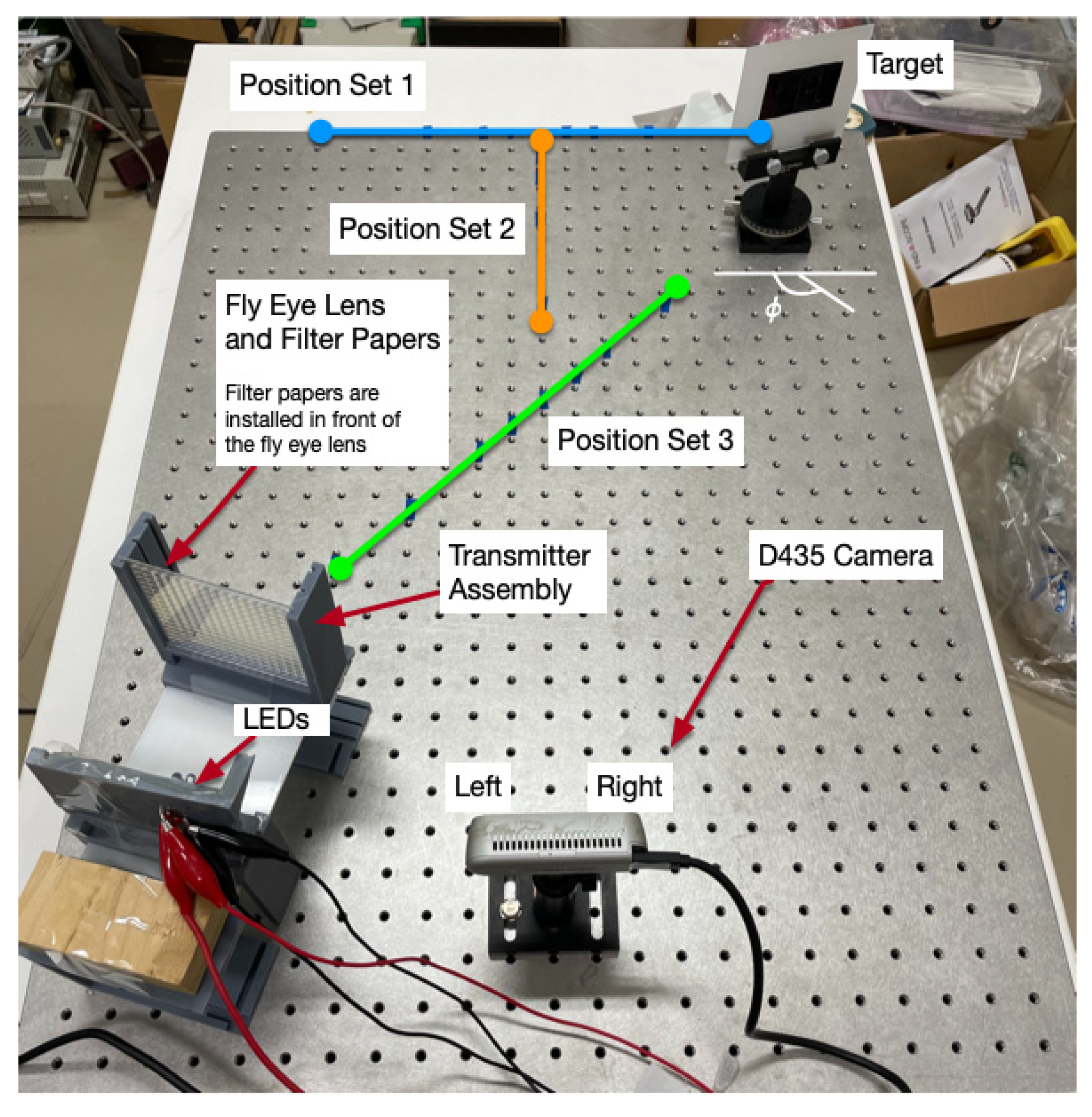

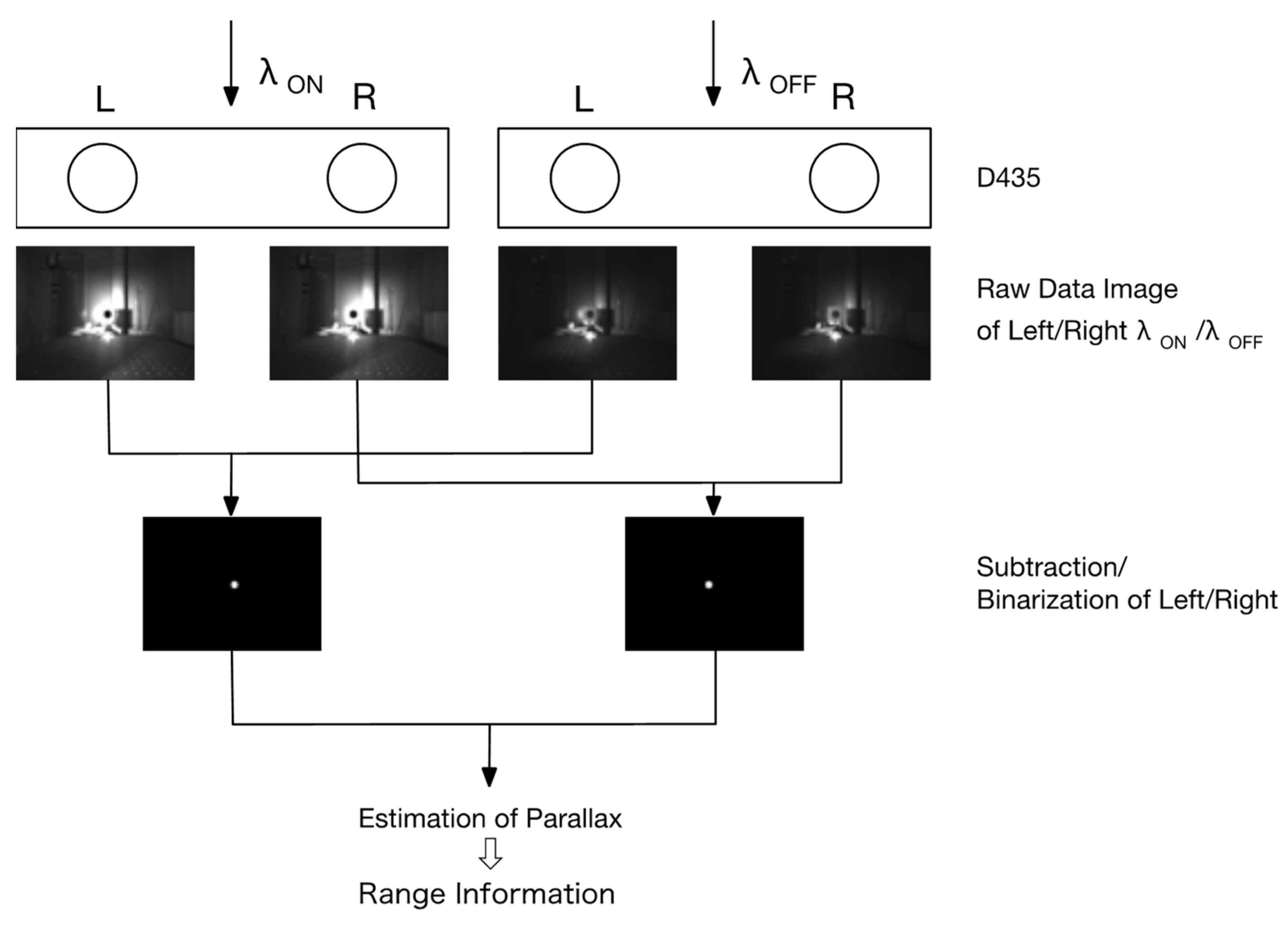

2. Experiments on a GaAs Substrate with Stereo Imagery

2.1. Experiment Configuration and Coordinate System

2.2. Integrity Measure

- First condition (C1)

- Second condition (C2)

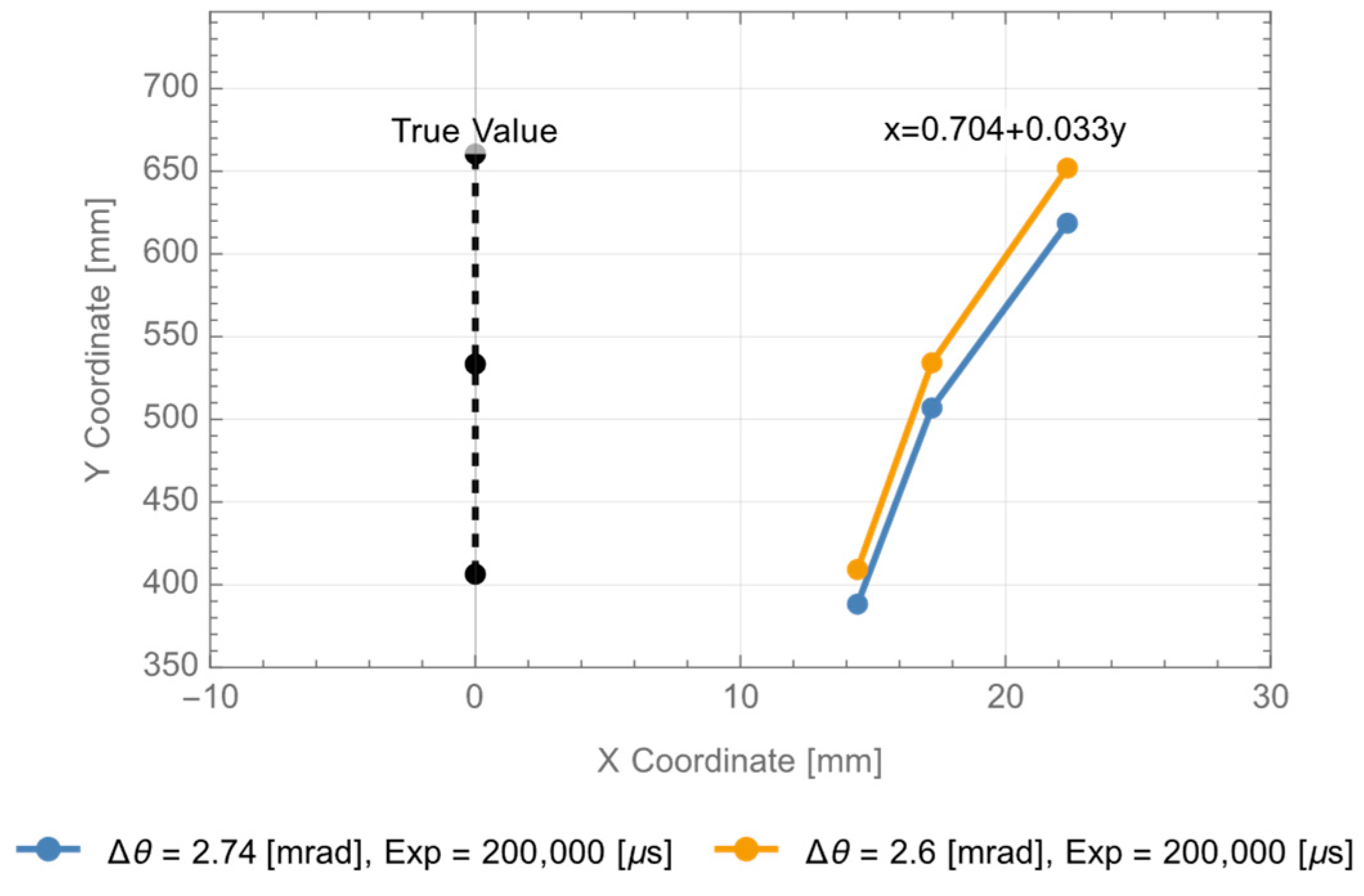

2.3. Experiments of GaAs Substrate Position Estimation

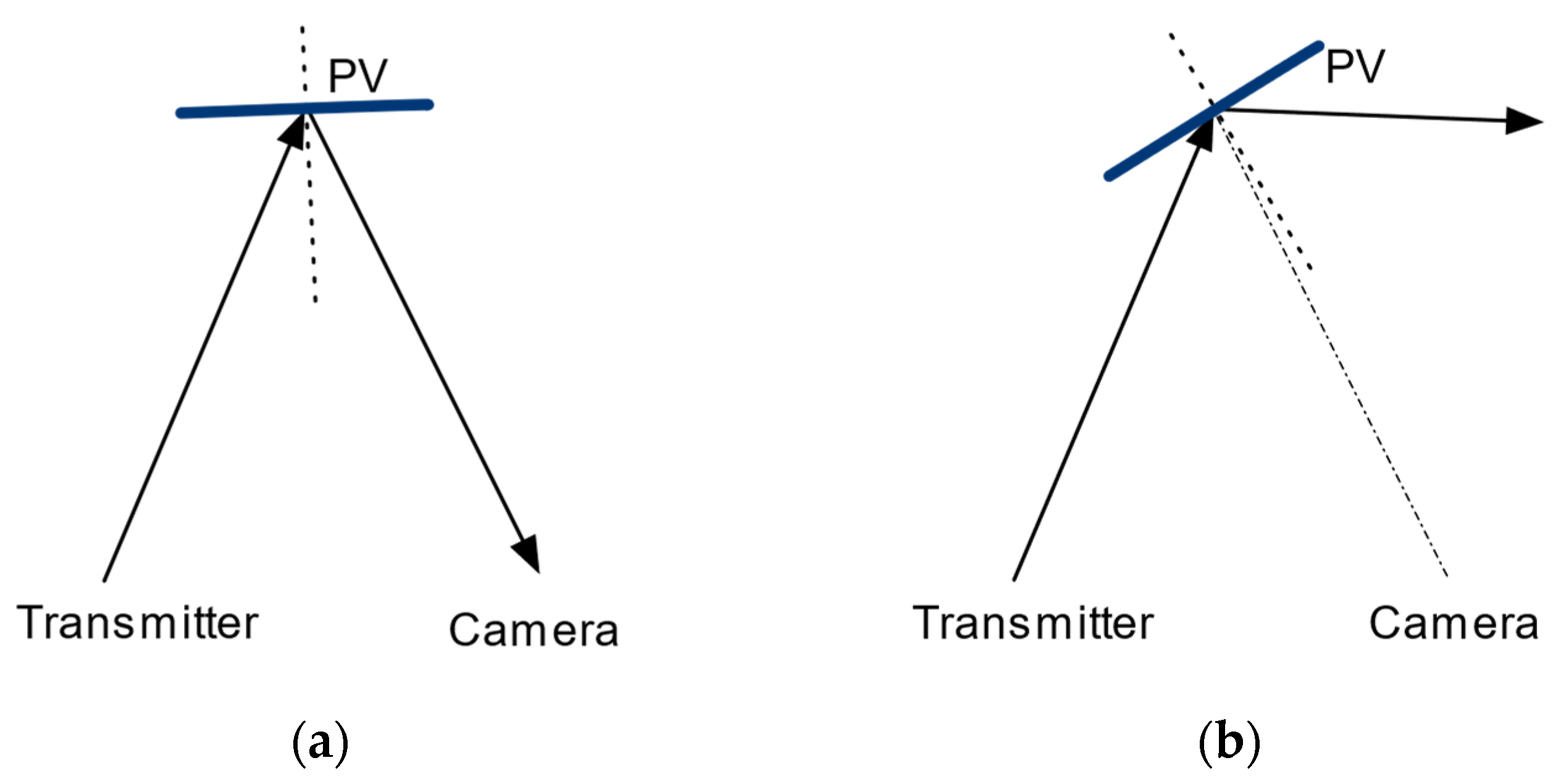

2.4. Introducing Coaxial Optics

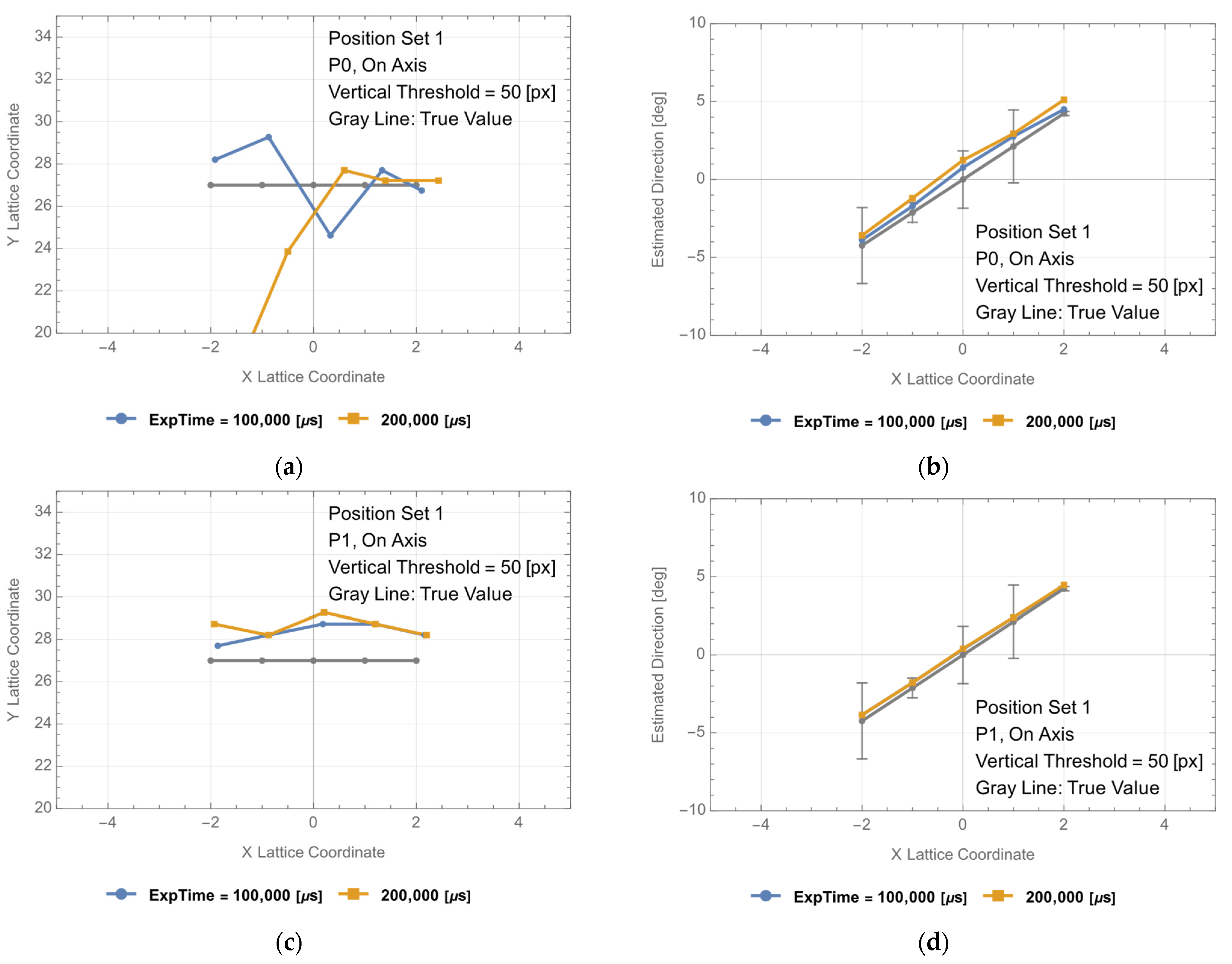

2.5. GaAs Substrate Experiments Using the Coaxial Optics

3. PV Positioning Experiments

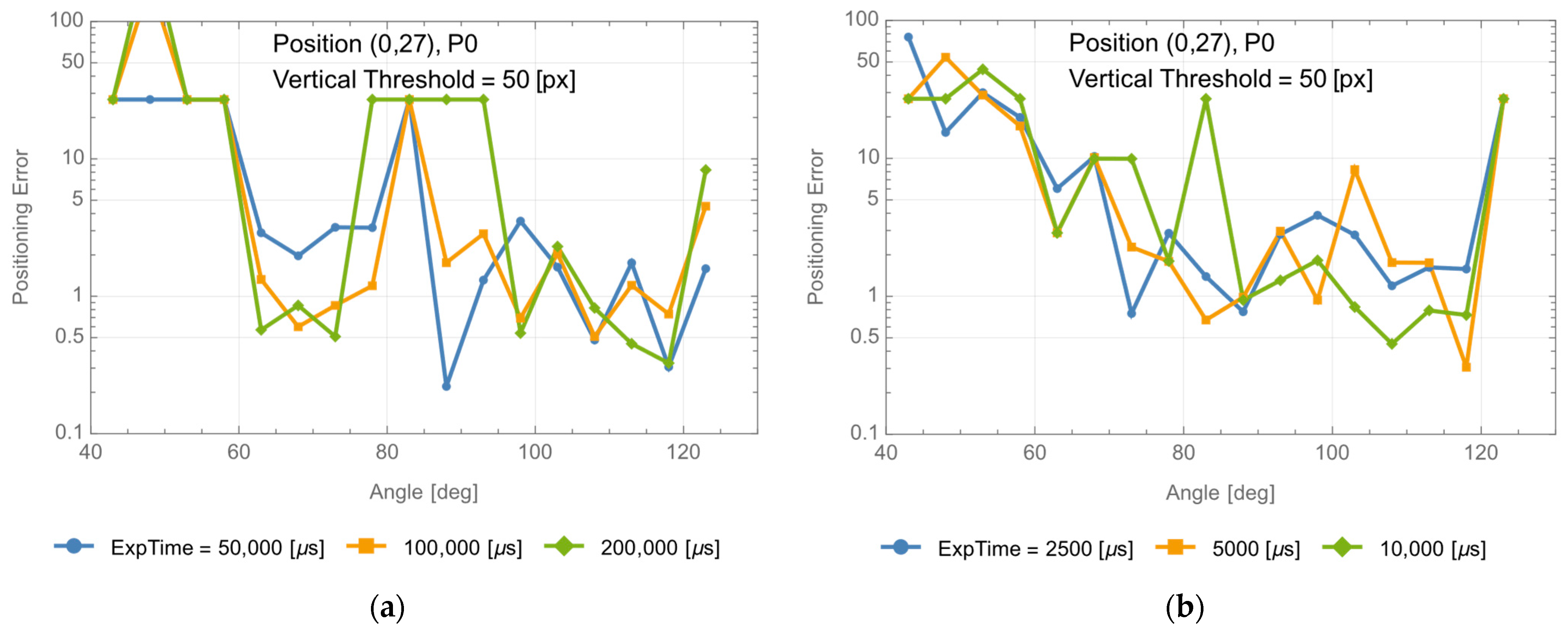

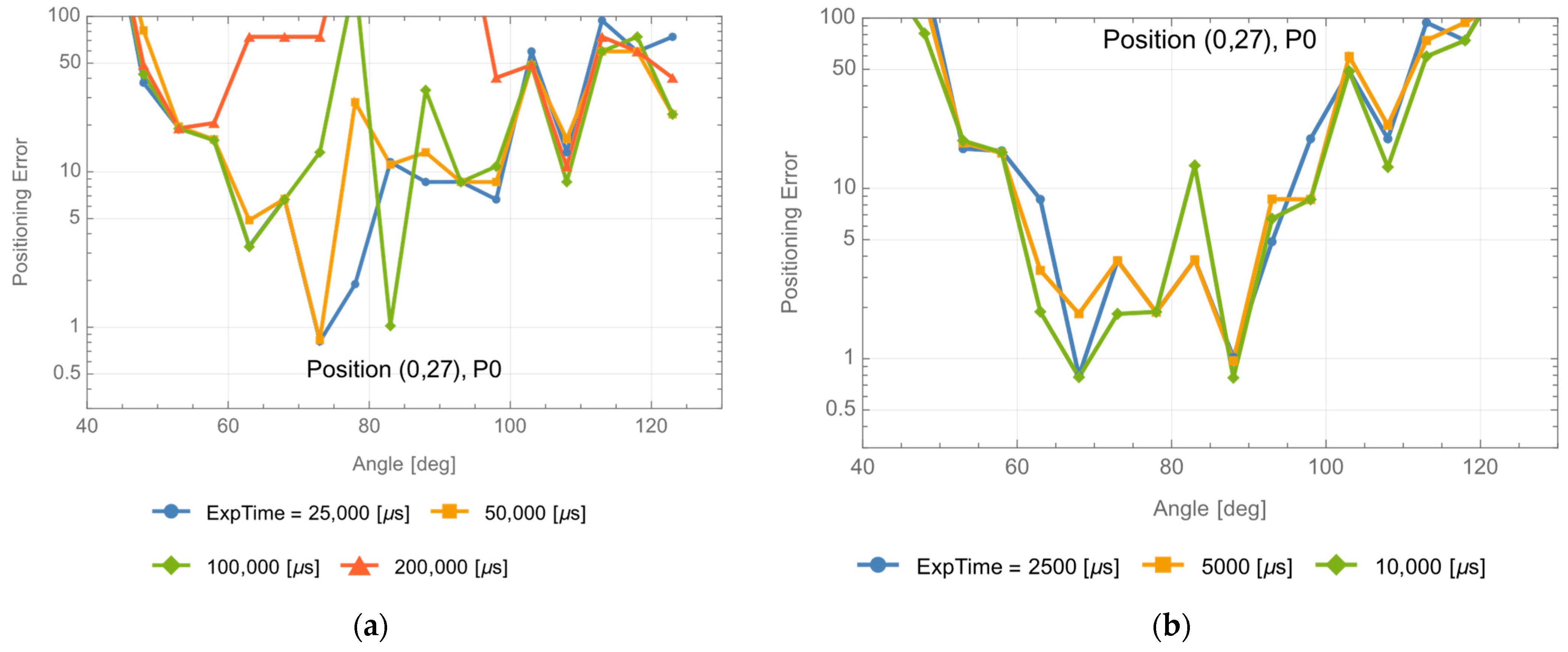

3.1. Positioning of the PV at (0, 27)

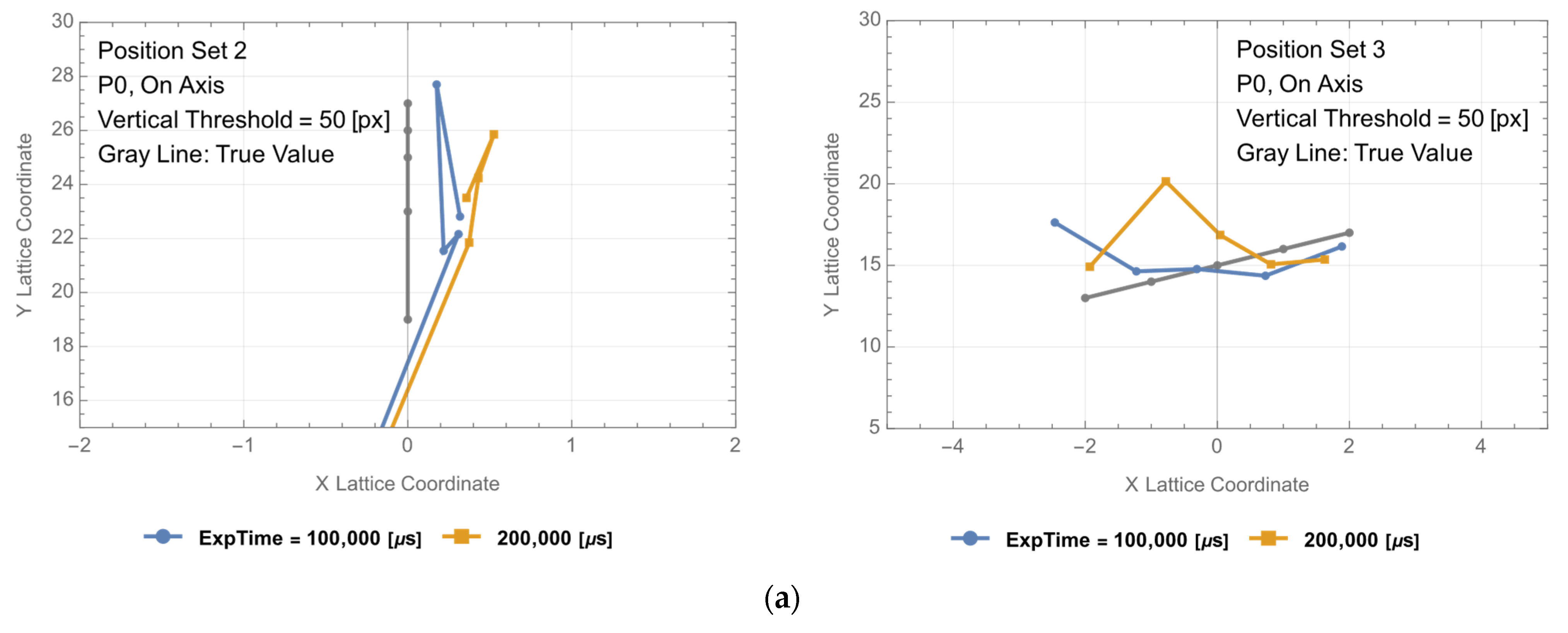

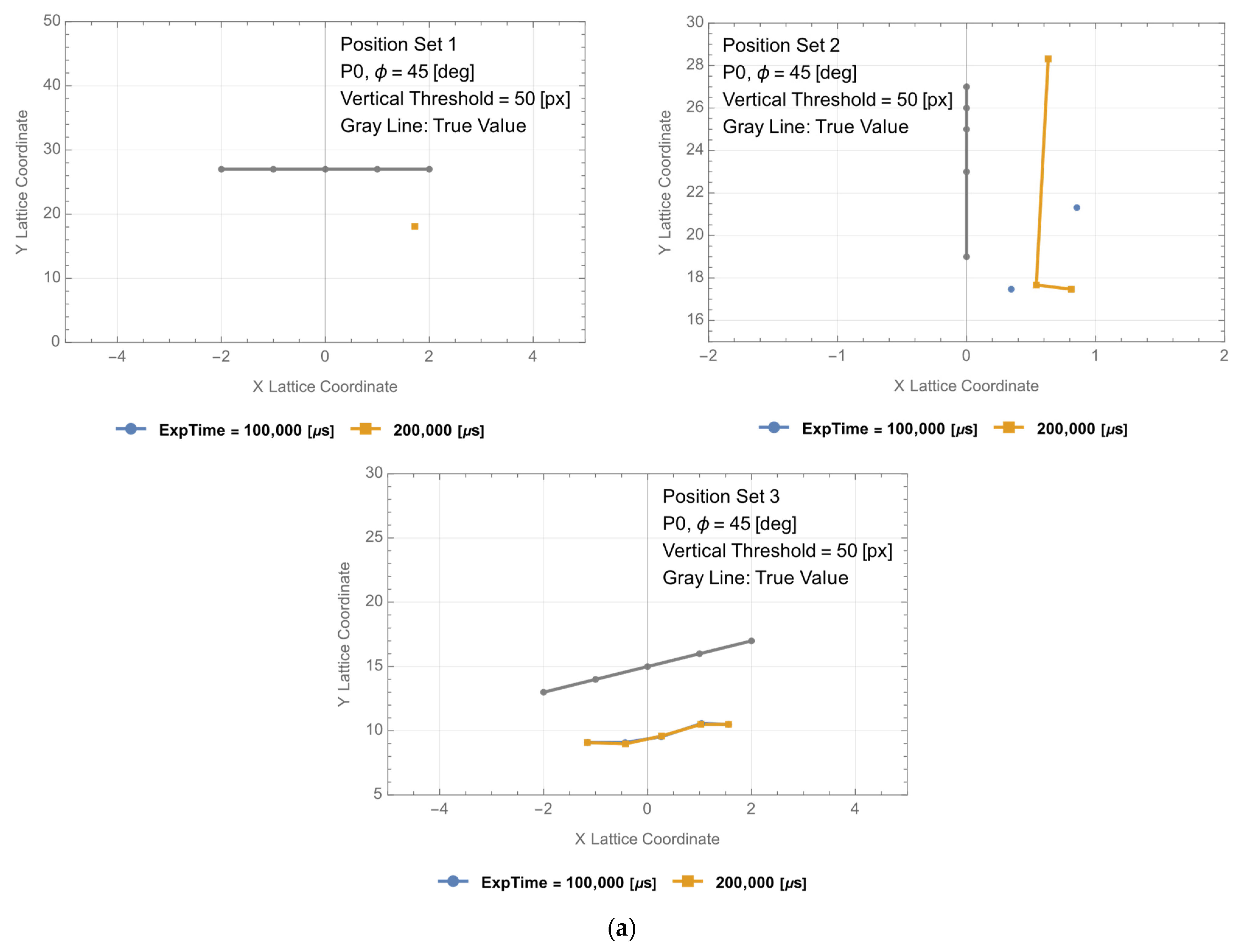

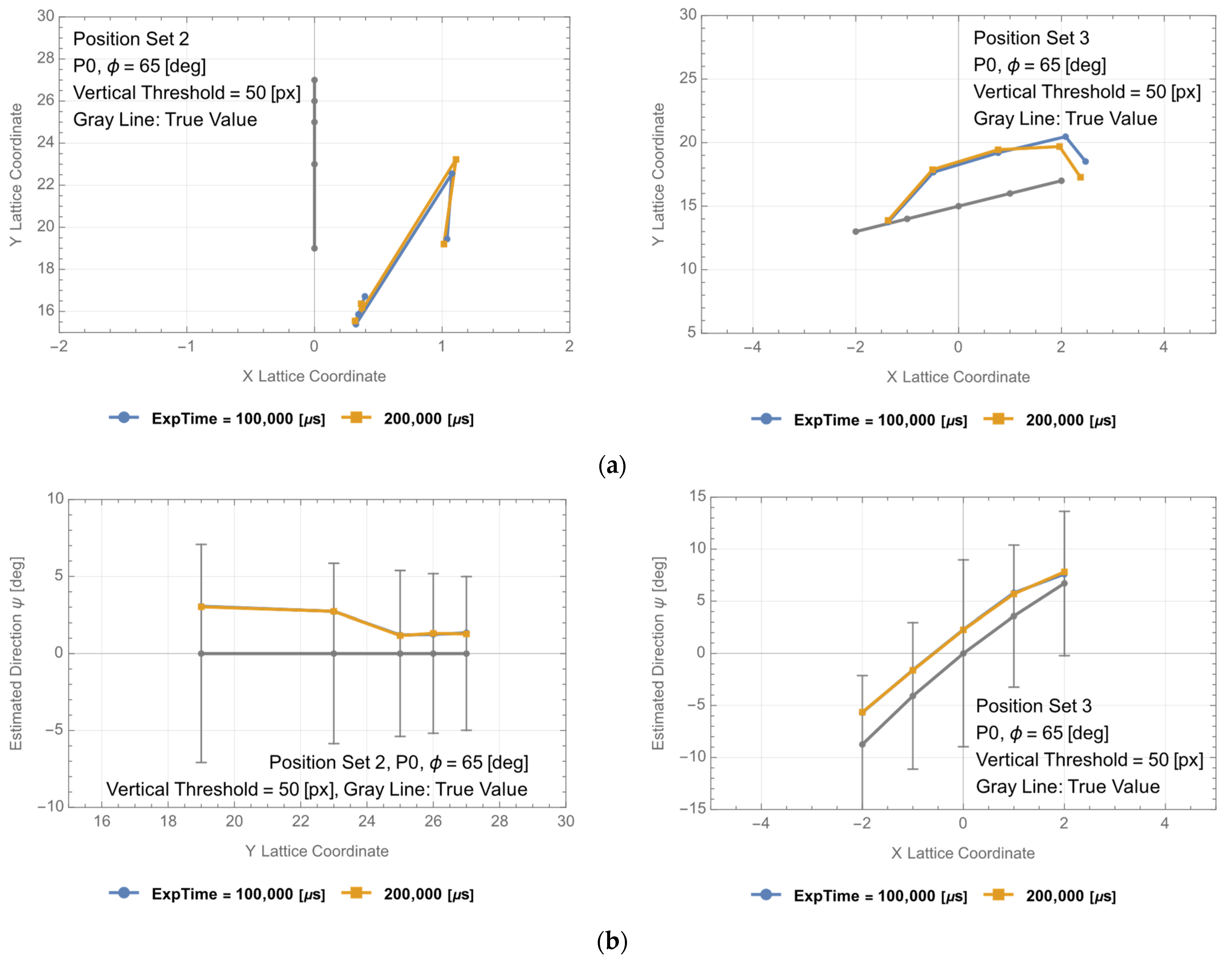

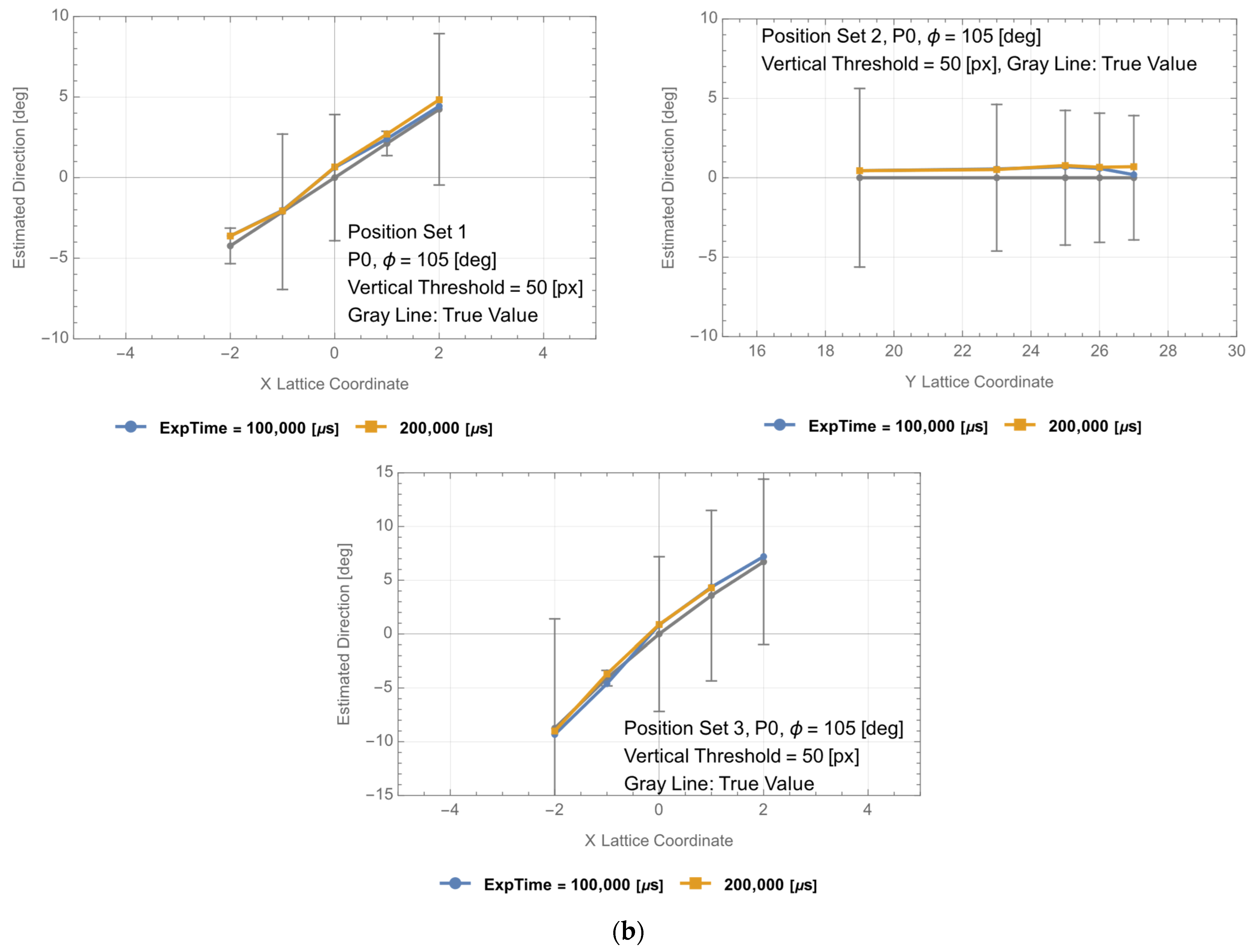

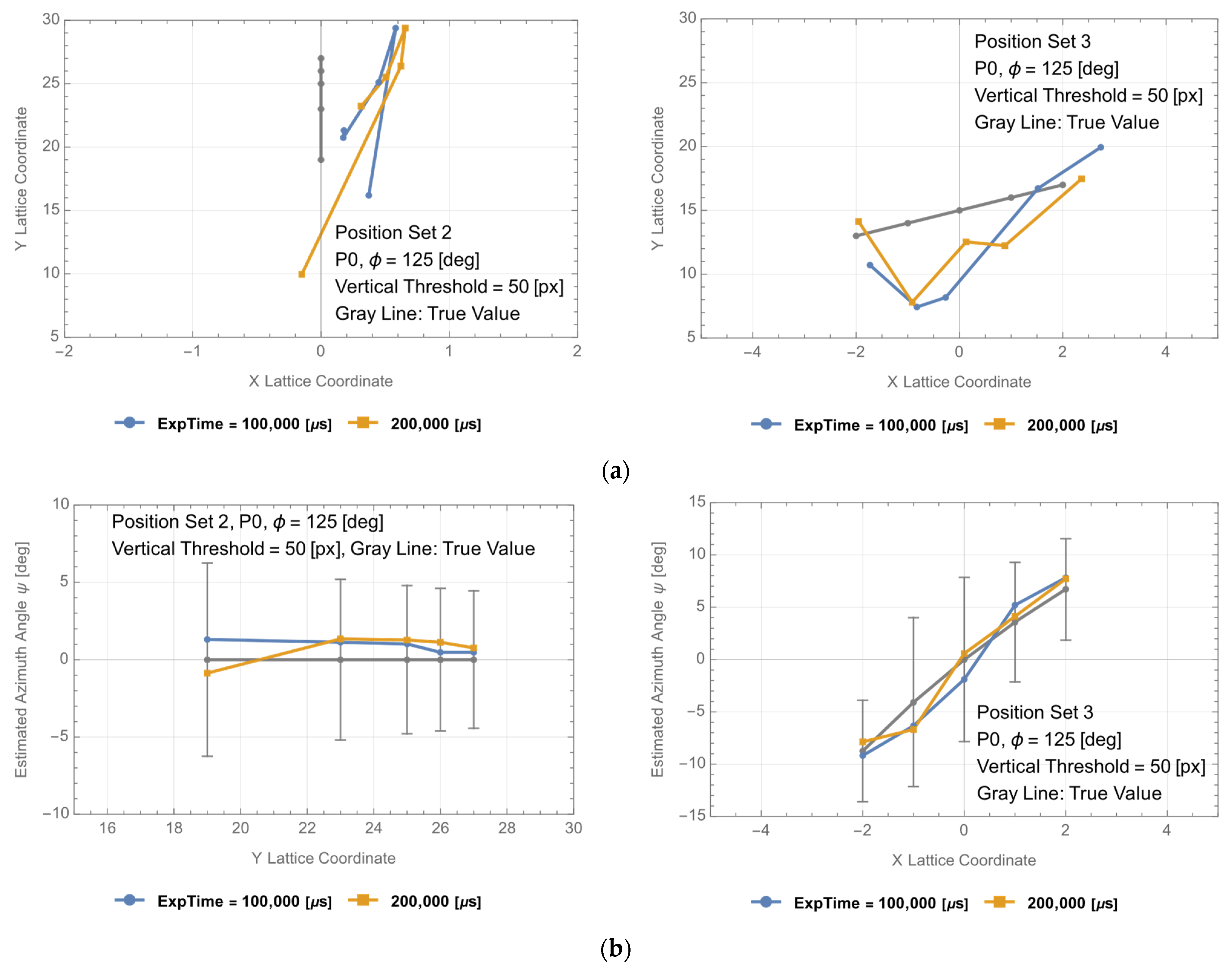

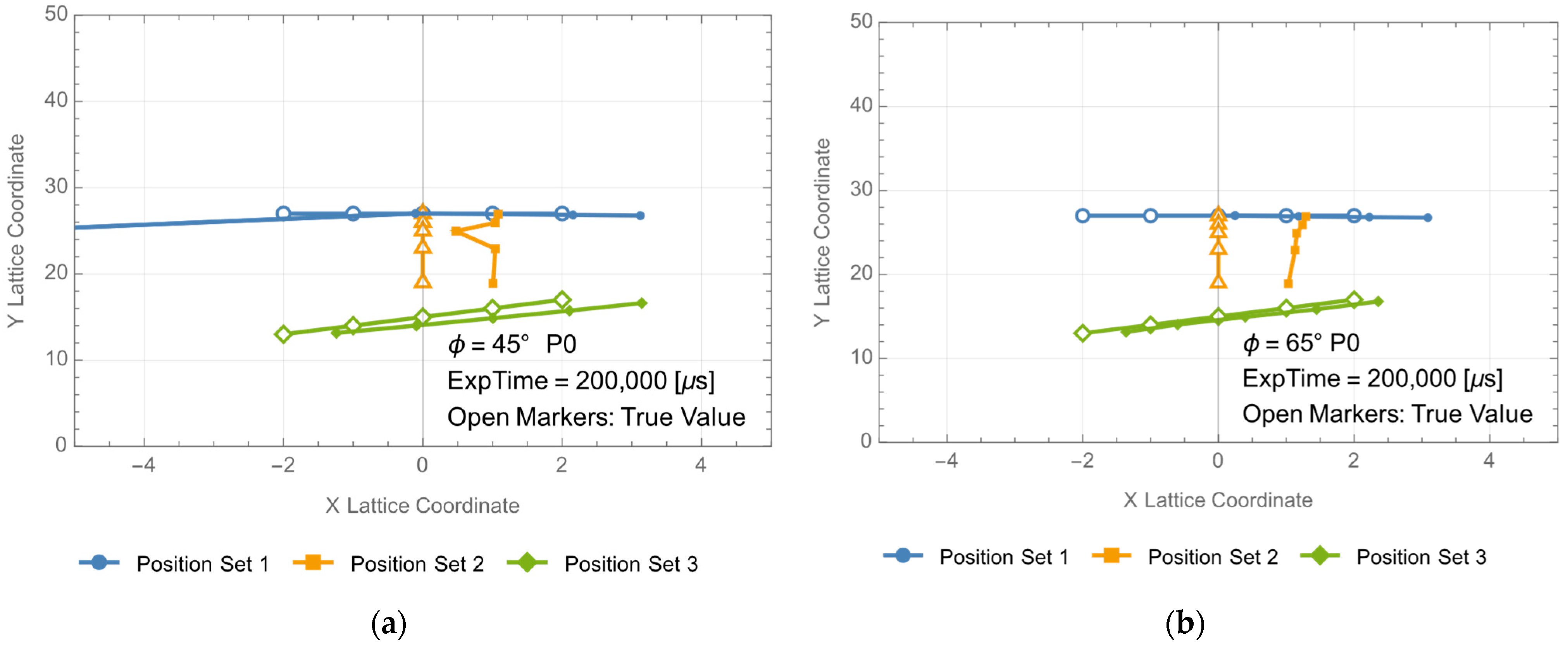

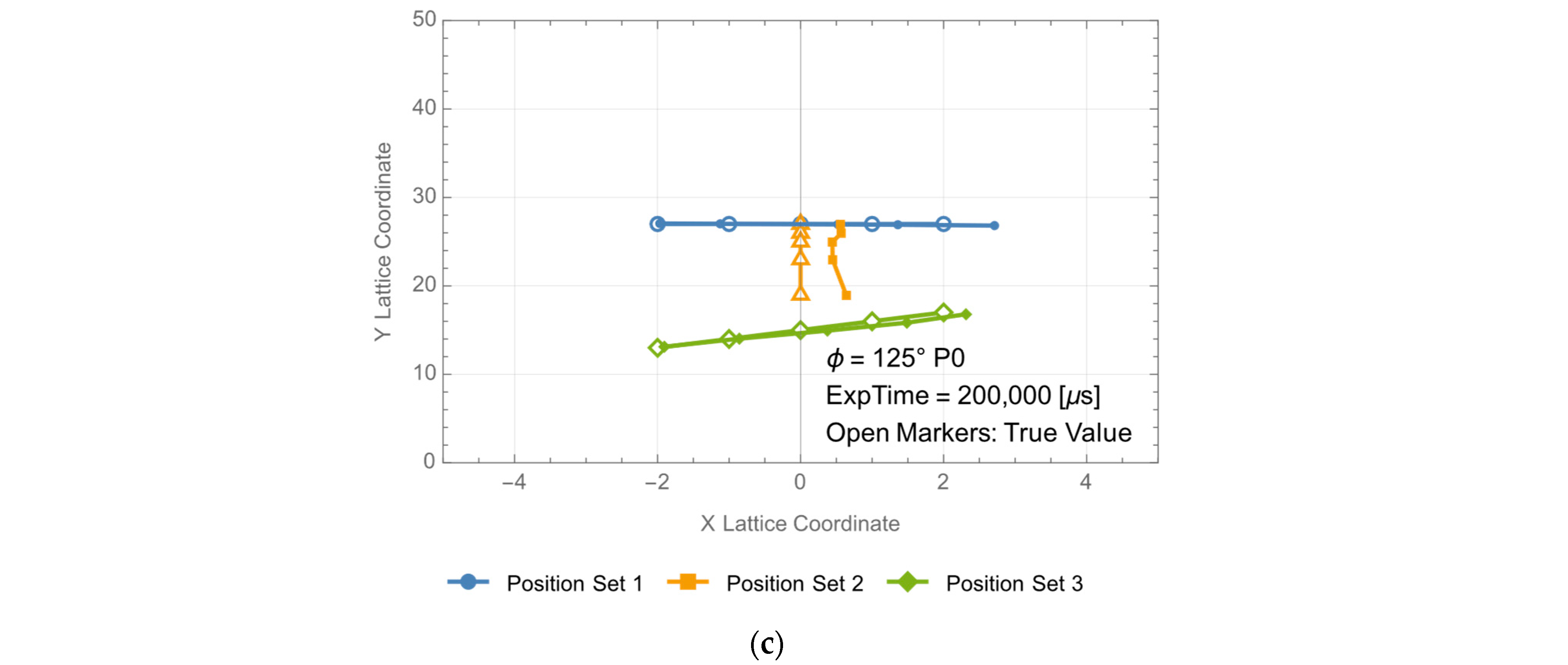

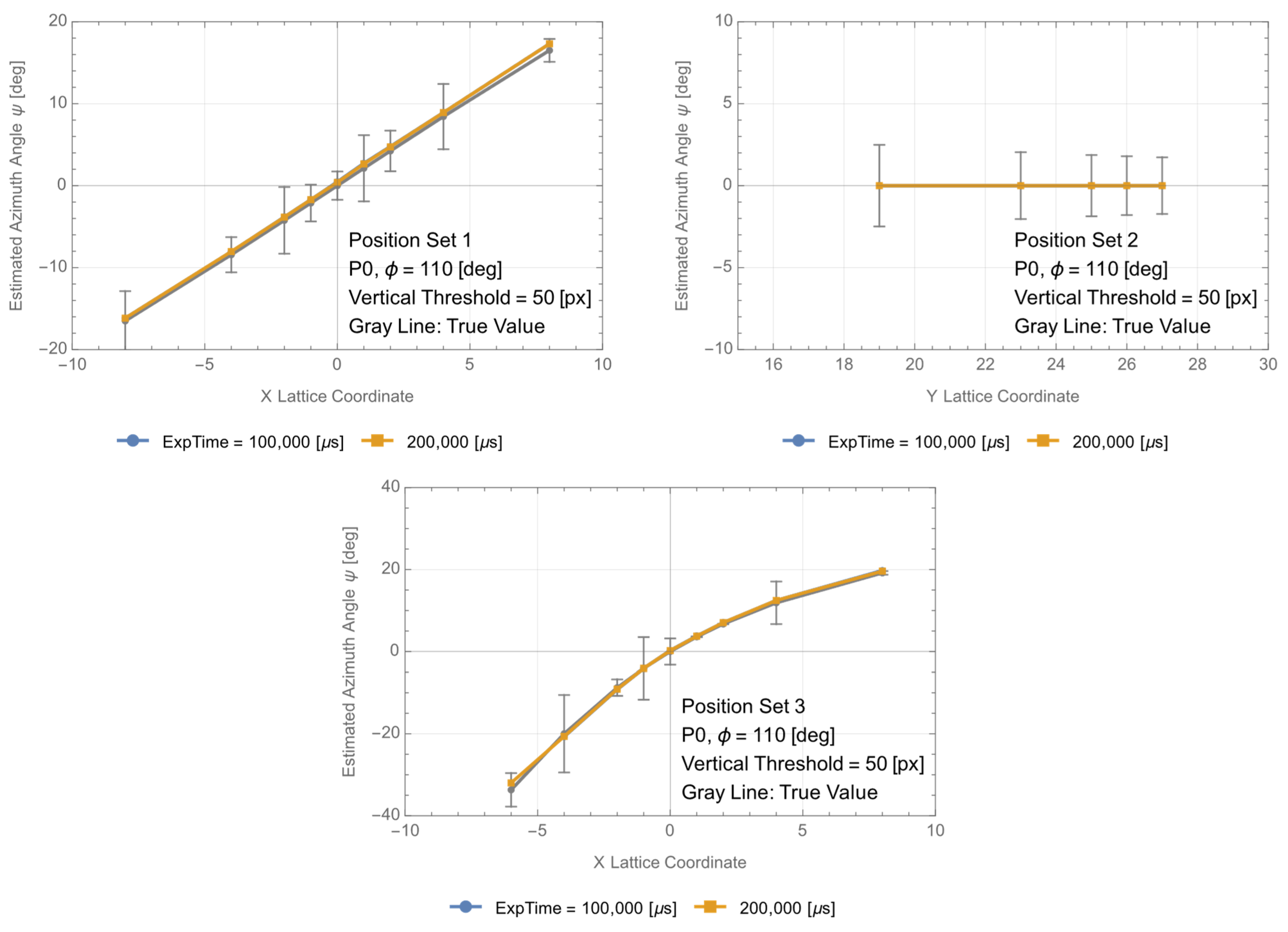

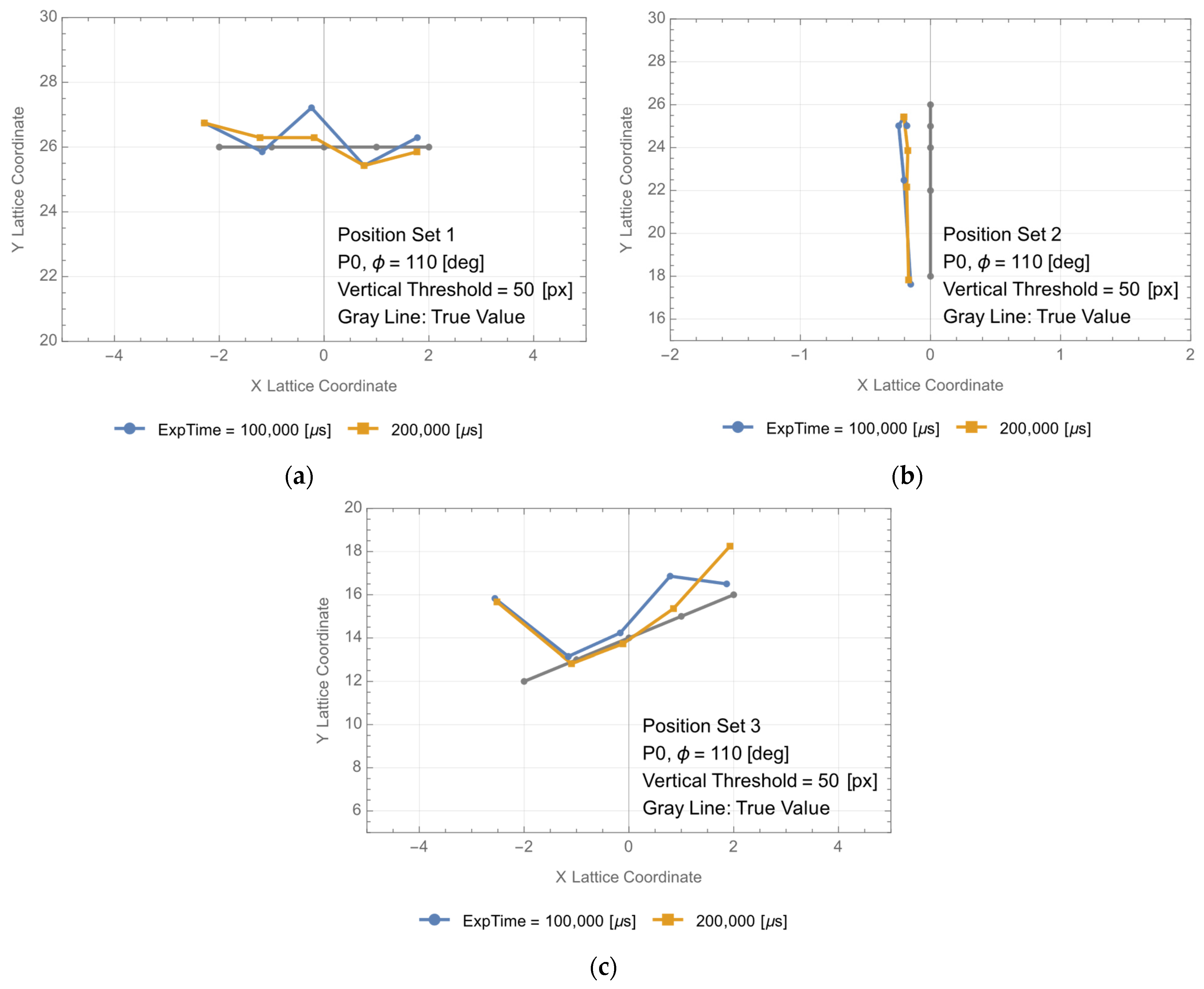

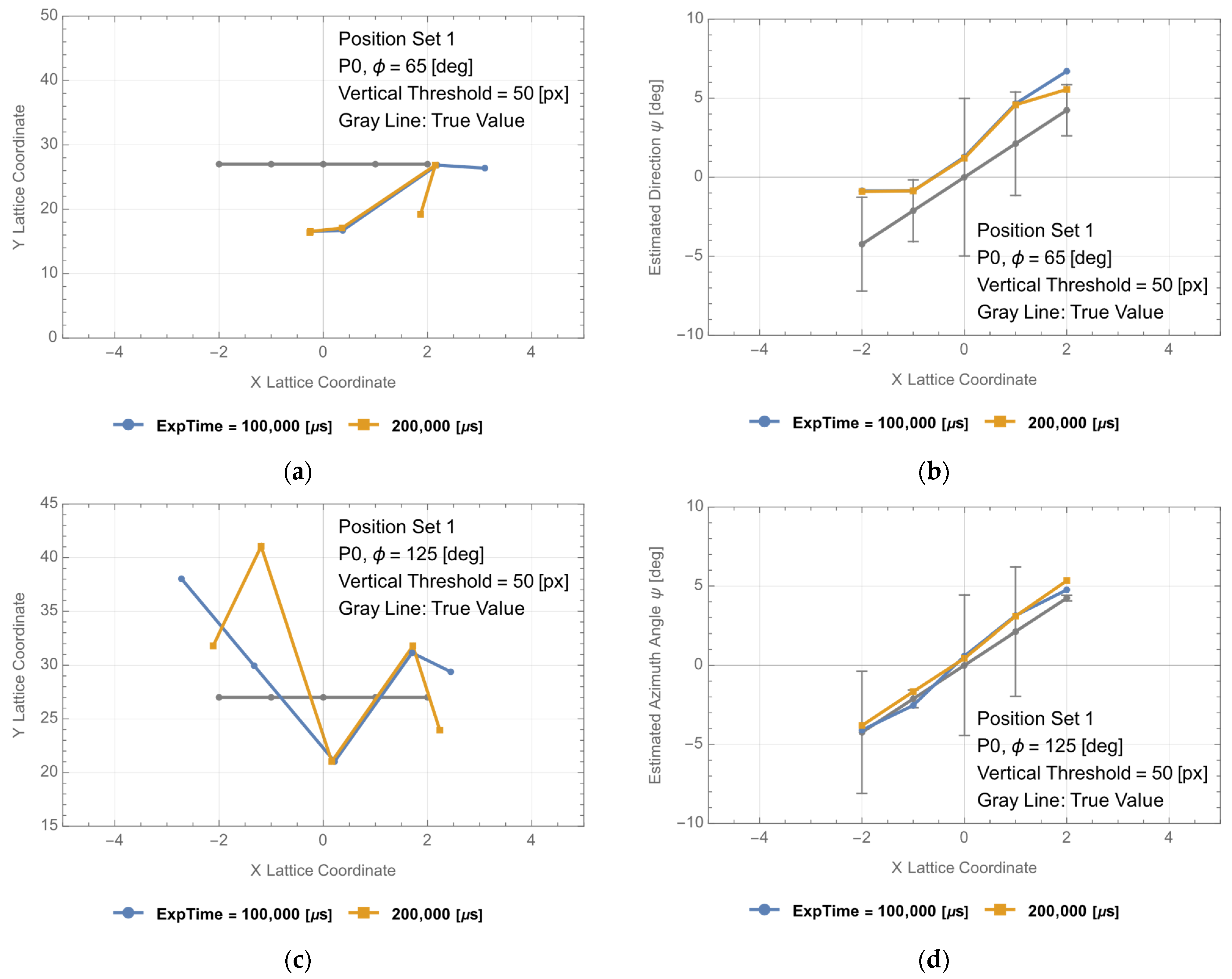

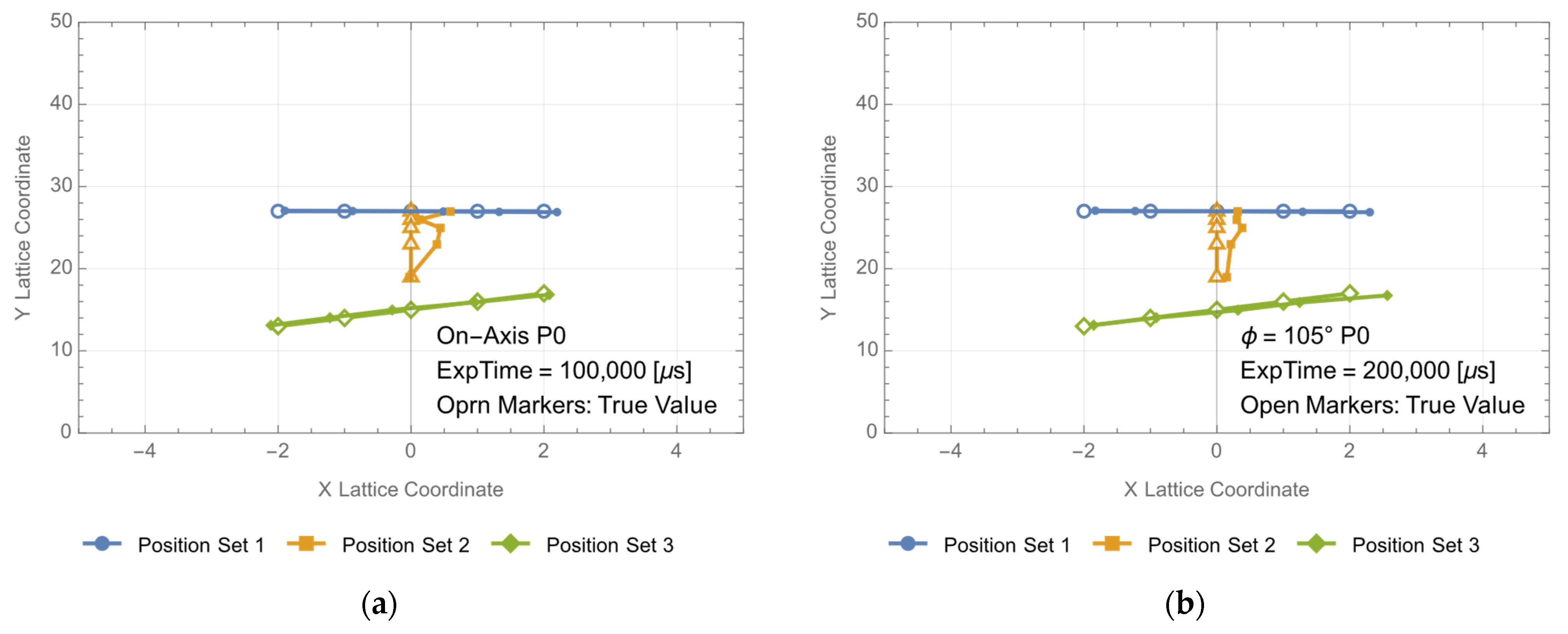

3.2. Positioning of the PV at Position Sets 1, 2, and 3

4. Discussion

4.1. GaAs Substrate

4.2. GaAs PV

4.2.1. Positioning of the PV at (0, 27)

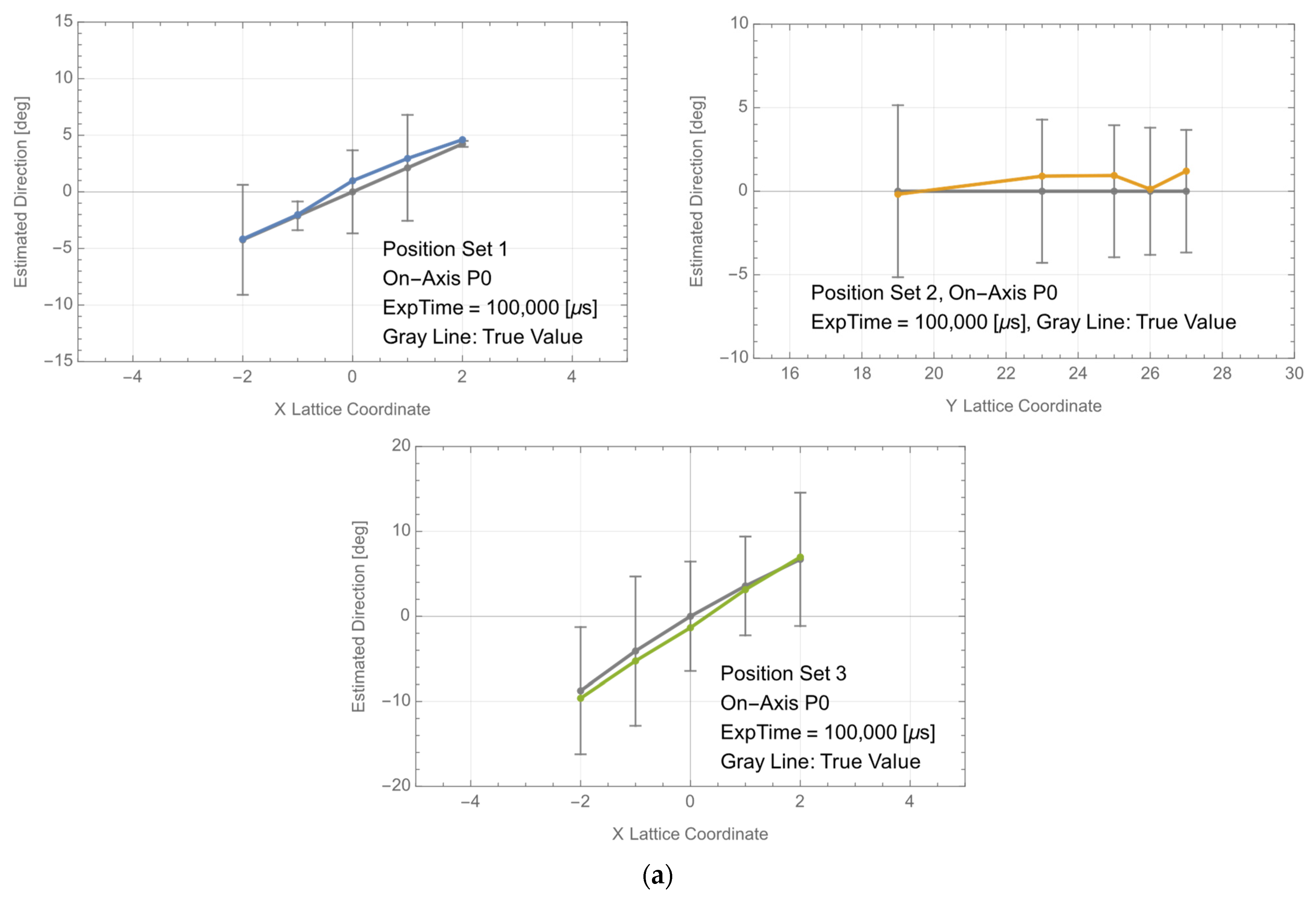

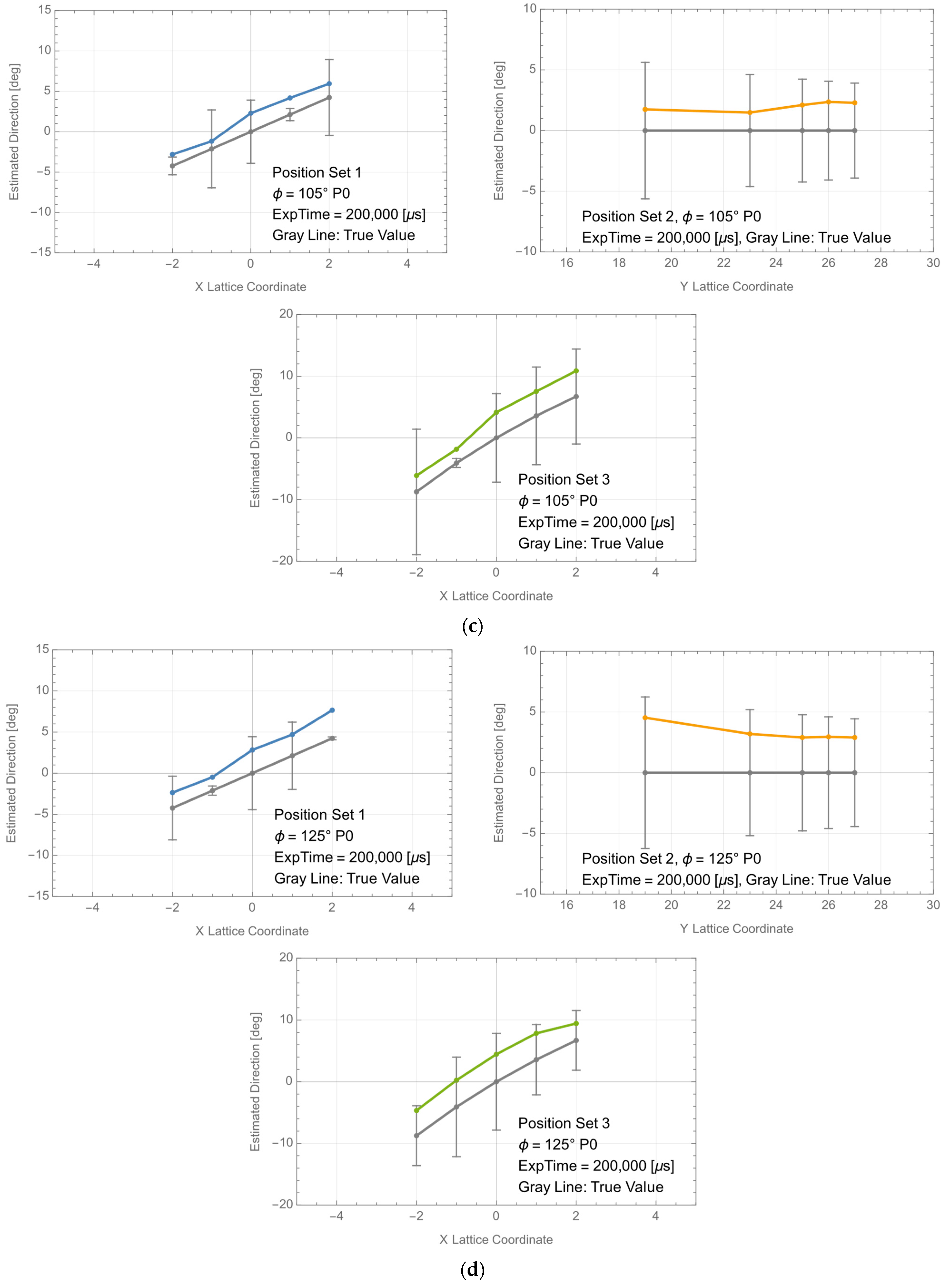

4.2.2. Positioning of the PV at Position Sets 1, 2, and 3

4.2.3. Position Estimation with External Ranging Information

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

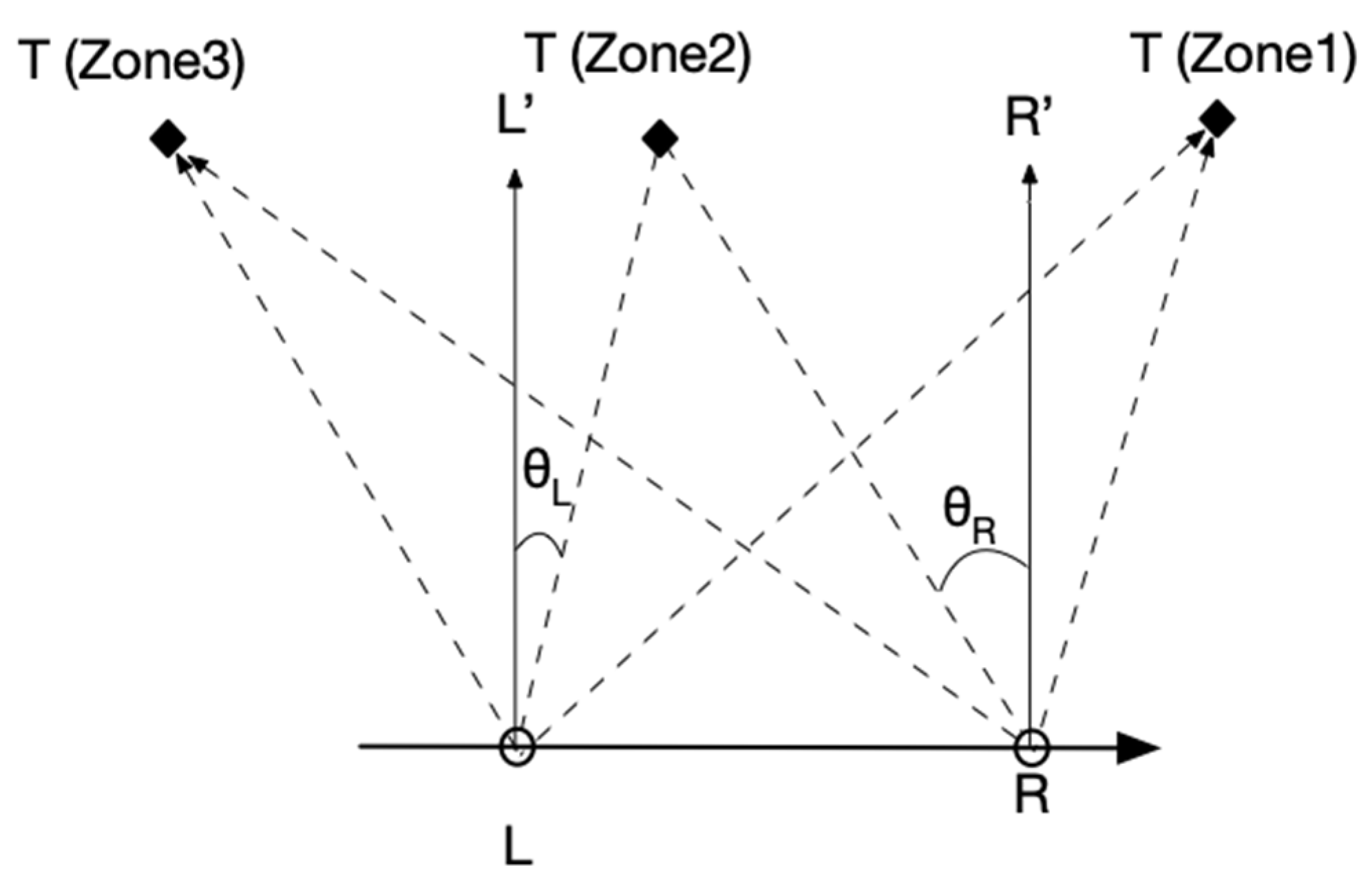

Appendix A. Mathematical Formulation of PV Positioning

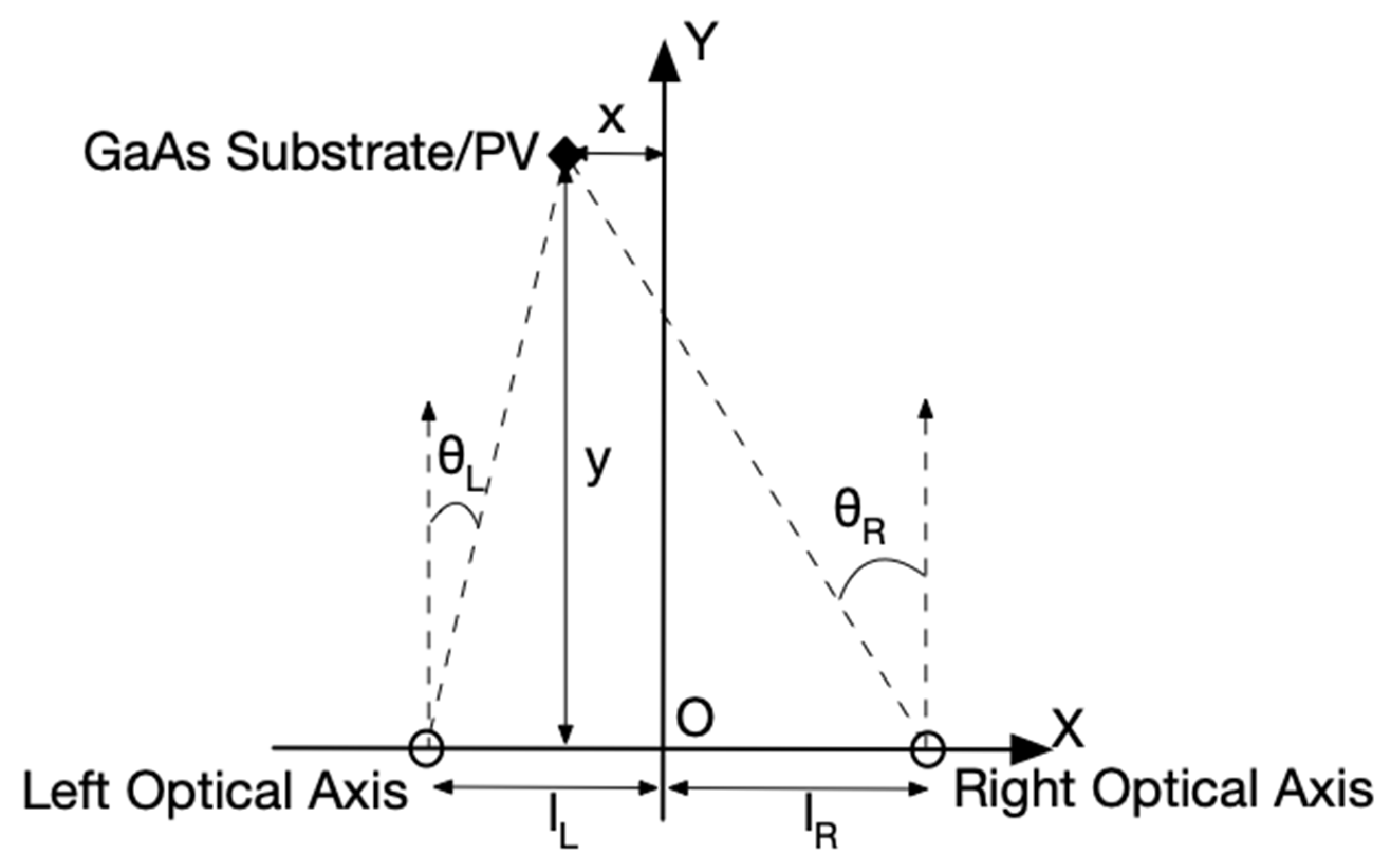

Appendix A.1. Positioning Formula with Stereo Imagery

Appendix A.2. Estimation of Pixel (px)→Angle Conversion Constant ()

| Position of the Frosted Glass | Distance (mm) | Apparent Size, X (px) | Apparent Size, Y (px) | Estimated Conversion Constant, X (mrad/px) | Estimated Conversion Constant, Y (mrad/px) |
|---|---|---|---|---|---|
| (0, 16) | 406.4 | 86 (91.5) | 88 | 2.69 | 2.80 |
| (0, 21) | 533.4 | 65 (69.1) | 67.5 | 2.71 | 2.78 |
| (0, 26) | 660.4 | 53 (56.4) | 55 | 2.68 | 2.75 |
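The arithmetic behind the table can be checked with a short sketch. It is not the authors' procedure: it assumes the small-angle relation (size ≈ distance × constant × pixels), assumes one grid unit equals 25.4 mm (which reproduces the distance column), and uses the parenthesized X values, whose meaning is not stated in this section. The function name `implied_sizes` and the constant `MM_PER_GRID_UNIT` are hypothetical.

```python
# Rows copied from the table above:
# (grid y index, distance in mm, X size in px (parenthesized value),
#  Y size in px, X constant in mrad/px, Y constant in mrad/px)
ROWS = [
    (16, 406.4, 91.5, 88.0, 2.69, 2.80),
    (21, 533.4, 69.1, 67.5, 2.71, 2.78),
    (26, 660.4, 56.4, 55.0, 2.68, 2.75),
]

# Assumption: one grid unit is one inch (25.4 mm).
MM_PER_GRID_UNIT = 25.4


def implied_sizes(rows=ROWS):
    """Return the target width and height (mm) implied by each table row.

    Uses the small-angle approximation:
        size_mm = distance_mm * (constant_rad_per_px * size_px)
    """
    widths, heights = [], []
    for grid_y, dist_mm, px_x, px_y, k_x, k_y in rows:
        # The distance column should equal the grid index times the pitch.
        assert abs(grid_y * MM_PER_GRID_UNIT - dist_mm) < 1e-6
        widths.append(dist_mm * px_x * k_x * 1e-3)   # mrad -> rad
        heights.append(dist_mm * px_y * k_y * 1e-3)
    return widths, heights


if __name__ == "__main__":
    w, h = implied_sizes()
    for row, wi, hi in zip(ROWS, w, h):
        print(f"(0, {row[0]}): width ~ {wi:.1f} mm, height ~ {hi:.1f} mm")
```

Under these assumptions the implied width and height stay close to 100 mm at all three distances, so the tabulated constants are mutually consistent with a target of fixed physical size.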

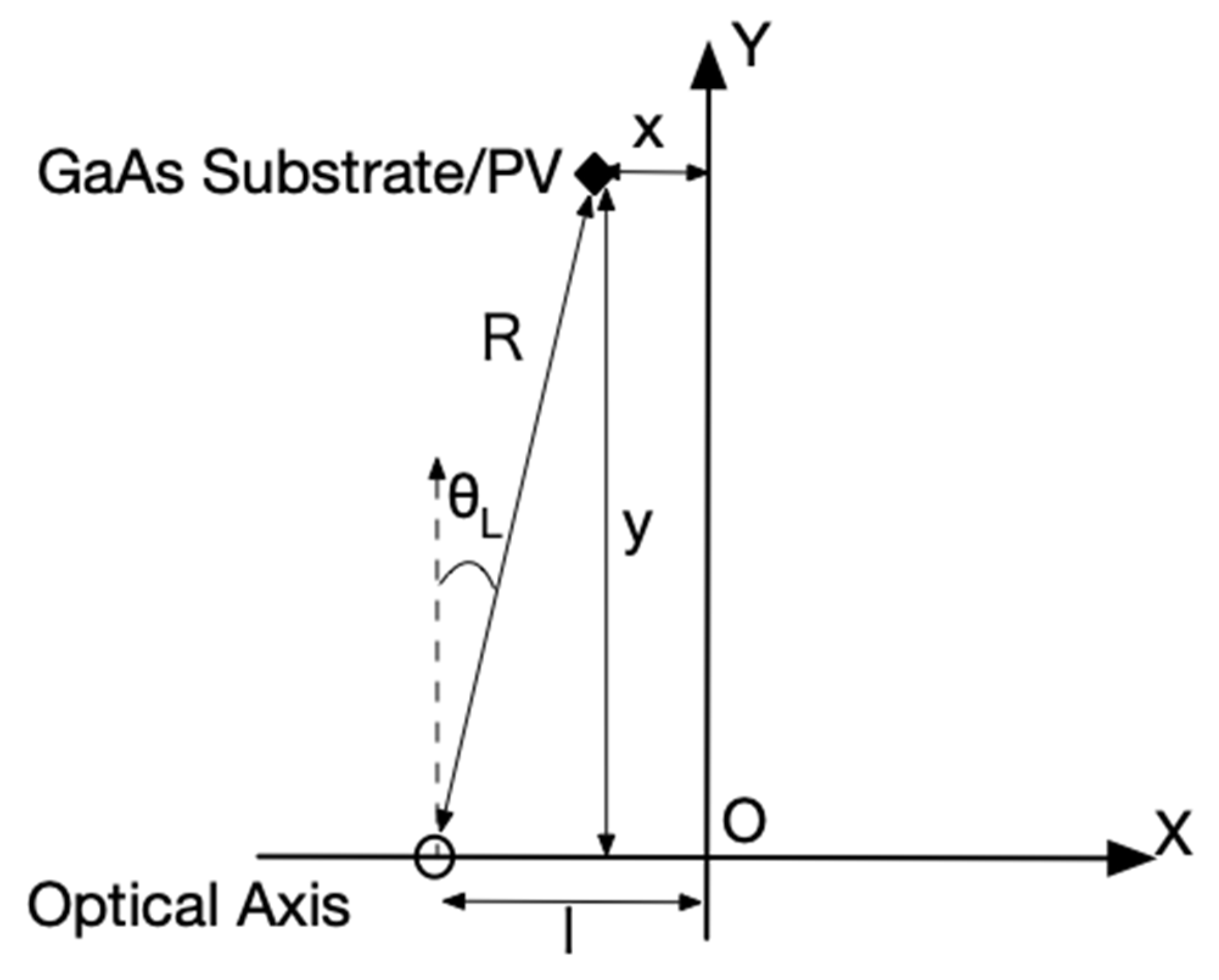

Appendix A.3. Positioning Formula without Stereo Imagery

Appendix B. Position and Direction Determination of the GaAs PV (On-Axis and Off-Axis for = 45 deg, 65 deg, 105 deg, and 125 deg)

Appendix B.1. On-Axis

Appendix B.2. Off-Axis

Appendix C. Position Determination Based on Range Estimation by the Apparent Size of the Target Combined with Direction Estimation

Appendix C.1. On-Axis

Appendix C.2. Off-Axis

Appendix D. Position Determination with External Ranging Information


| Transmitter Assembly | |
|---|---|
| LED power | 2 mW × 2 (850 nm and 940 nm) |
| Beam divergence | 85 deg (full angle) |
| Filter paper transmittance | 50%/paper (typical) |
| Target Assembly | |
| GaAs substrate | 2-inch diameter |
| GaAs PV | 6 cm × 4 cm |
| Distance from the camera assembly | 660 mm (typical) |
| Attitude angle | 43~123 deg (typical) |
| Camera Assembly | |
| Camera | D435 × 2 |
| Exposure time | 25, 50, 100, 250, 500, 1000, 2500, 5000, 10,000, 25,000, 50,000, 100,000 and 200,000 |
| Image size | 640 × 480 px |

| | | |
|---|---|---|
| pass filter | 0.54 | 0 |
| pass filter | 0 | 0.27 |

| | | |
|---|---|---|
| stereo imagery | 1 to 3 deg | 0.47 to 1.41 |
| apparent size measurement | 2 to 5 deg | 9.94 to 2.35 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Asaba, K.; Miyamoto, T. Positioning of a Photovoltaic Device on a Real Two-Dimensional Plane in Optical Wireless Power Transmission by Means of Infrared Differential Absorption Imaging. Photonics 2023, 10, 1111. https://doi.org/10.3390/photonics10101111