A Data Generation Method for Image Flare Removal Based on Similarity and Centrosymmetric Effect

Abstract

1. Introduction

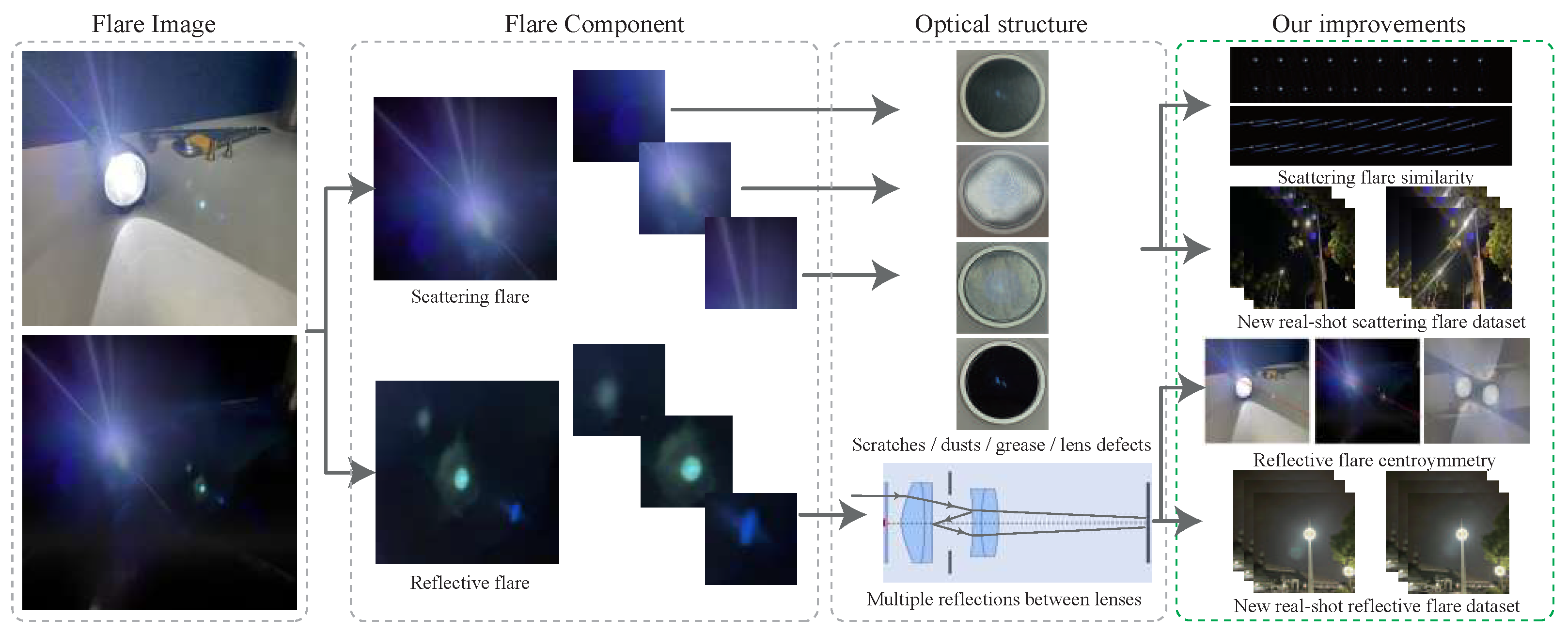

- Based on the principles of Fourier optics, we propose the similarity effect of scattering flare and validate it through both theoretical analysis and optical experiments.

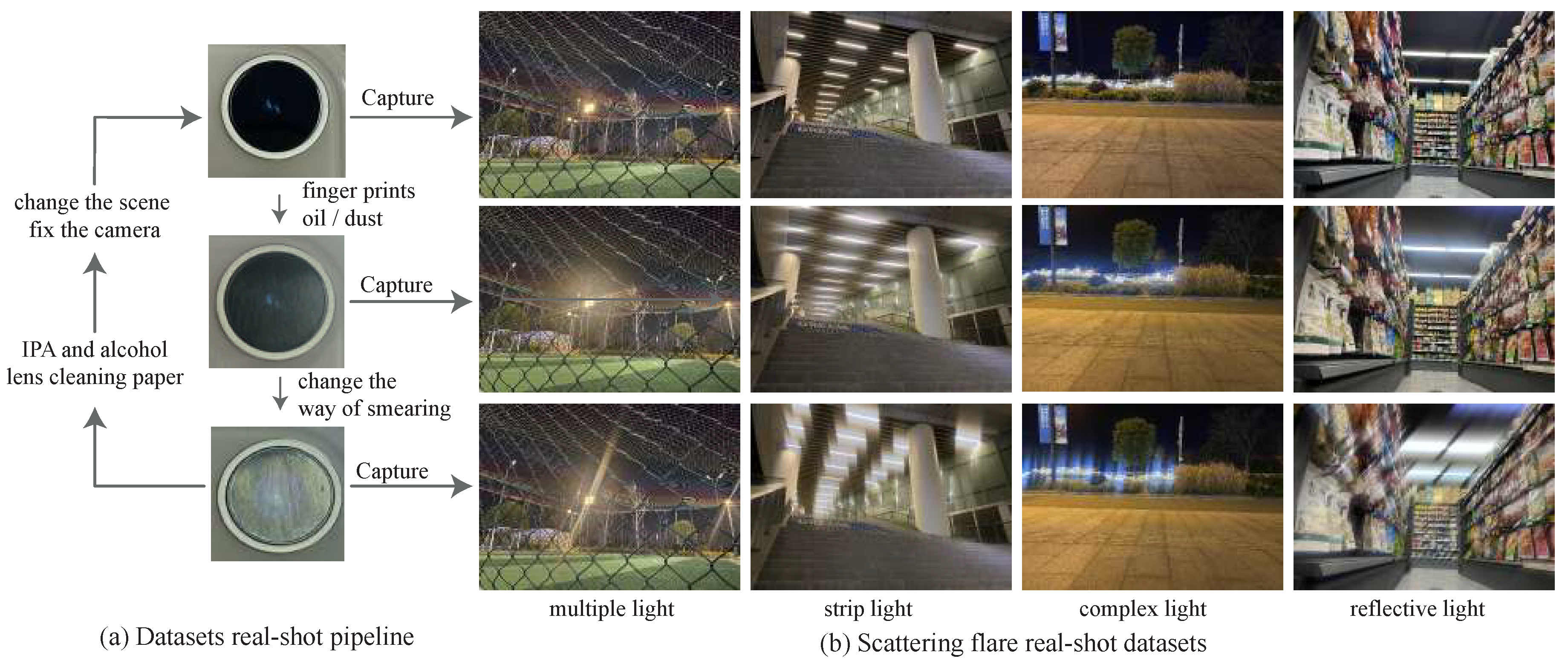

- Based on the similarity effect of scattering flare, we propose a real-world scattering flare dataset with multiple light sources.

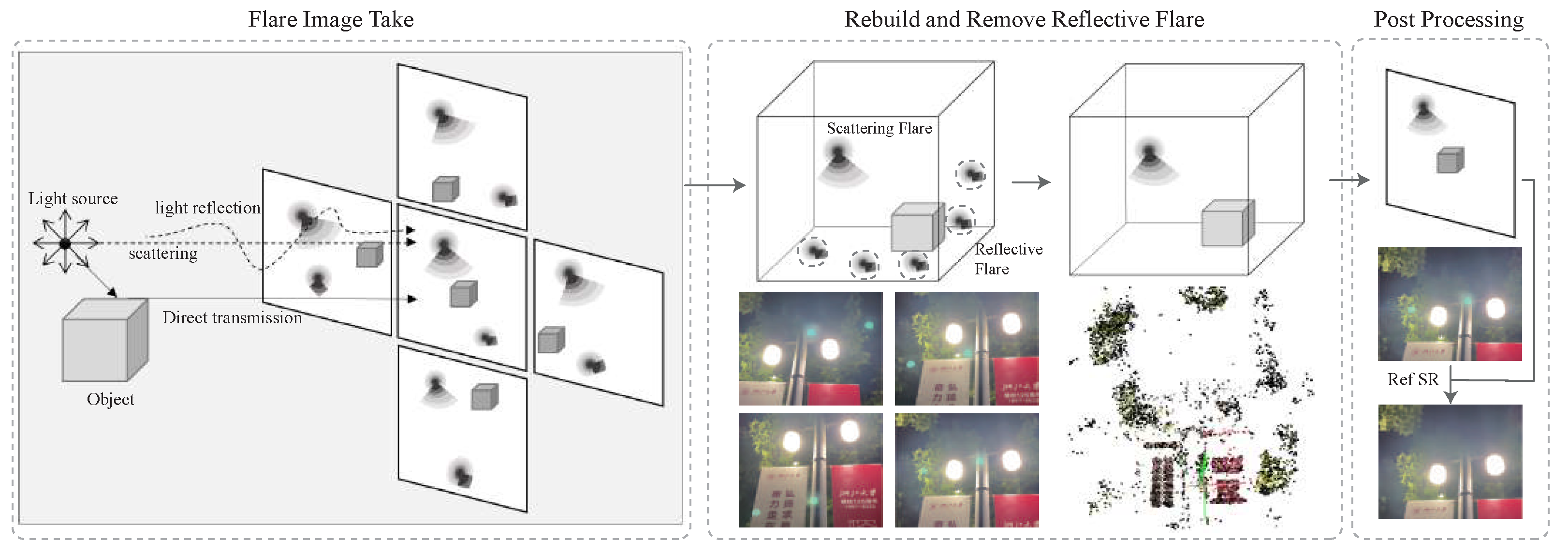

- Based on the principles of optical lens design, we propose the centrosymmetric effect of reflective flare.

- Based on the centrosymmetric effect of reflective flare, we propose a real-world reflective flare removal dataset built with three-dimensional reconstruction.

- We apply the similarity effect of scattering flare and the centrosymmetric effect of reflective flare to the generation of simulated data, so that networks trained on these data remove flare more effectively.
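The similarity effect can be illustrated with the standard incoherent imaging model of Fourier optics: the intensity point spread function (PSF) is the squared magnitude of the Fourier transform of the pupil function, and, if the system is assumed to be approximately shift-invariant, every point light source in the frame is blurred by that same PSF. Oil or dust on the pupil plane changes the pupil function and therefore the PSF, but all light sources still share it. The 1-D sketch below is an illustration under these assumptions only; the grid size, pupil values, and function names (`dft`, `intensity_psf`, `render`) are hypothetical and not taken from the paper, and the circular convolution ignores field-dependent aberrations.

```python
import cmath
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform (adequate for a toy grid)."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(-2j * cmath.pi * k * m / n) for k in range(n))
        for m in range(n)
    ]


def intensity_psf(pupil: Sequence[complex]) -> List[float]:
    """Incoherent PSF: |F{pupil}|^2, normalized so the energy sums to 1."""
    psf = [abs(v) ** 2 for v in dft(pupil)]
    total = sum(psf)
    return [p / total for p in psf]


def render(scene: Sequence[float], psf: Sequence[float]) -> List[float]:
    """Blur every point source with the SAME PSF (circular convolution)."""
    n = len(psf)
    image = [0.0] * n
    for pos, amp in enumerate(scene):
        if amp:
            for k in range(n):
                image[(pos + k) % n] += amp * psf[k]
    return image


# Hypothetical pupil: an open aperture of 8 samples, partly blocked by "dust"
# and given a phase error by "oil".
N = 32
pupil = [1.0 + 0j if k < 8 else 0j for k in range(N)]
pupil[3] = 0.2                          # dust: local attenuation
pupil[5] = 0.8 * cmath.exp(1j * 1.5)    # oil: attenuation plus phase shift
psf = intensity_psf(pupil)

# Two light sources of different brightness in one frame.
scene = [0.0] * N
scene[4], scene[20] = 1.0, 3.0
image = render(scene, psf)
```

In this model the flare around each source is the same PSF, shifted to the source position and scaled by its brightness; changing the pupil (for example, cleaning the glass) changes the PSF for all sources at once.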

2. Related Work

2.1. Single Image Flare Removal

2.2. NeRF-Based View Rendering

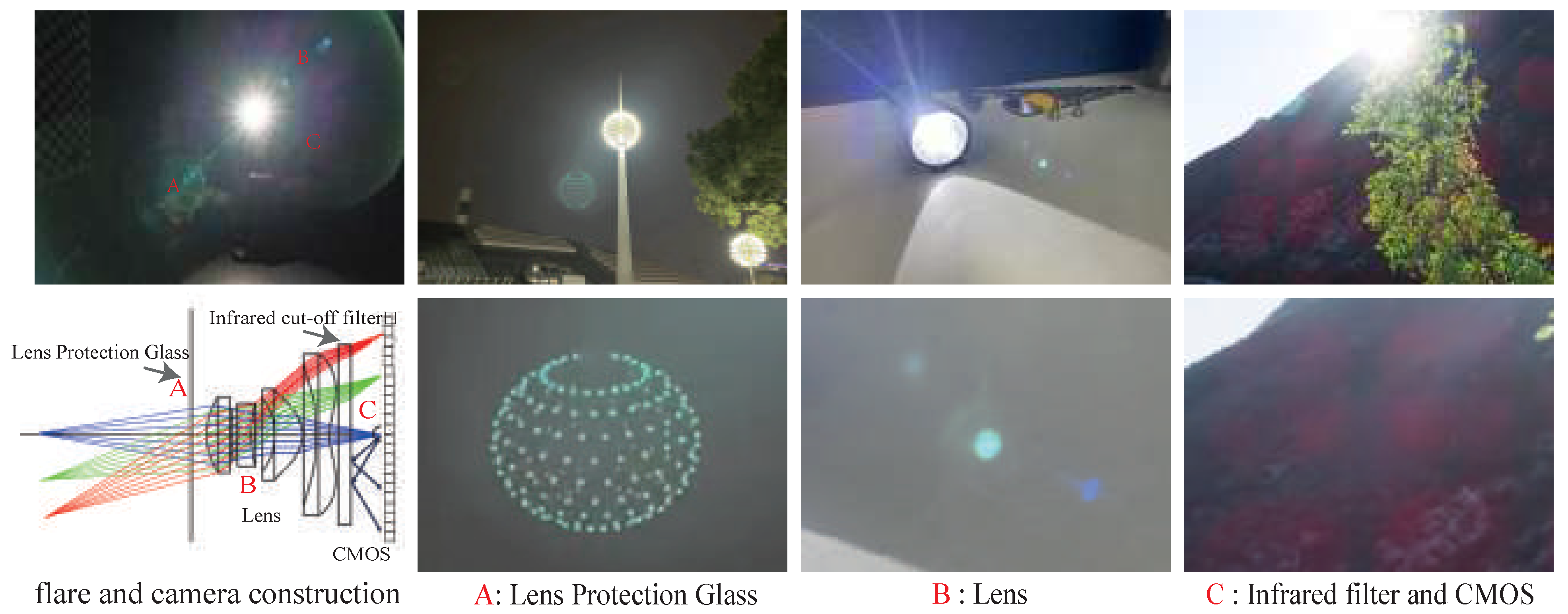

3. Physics of Lens Flare

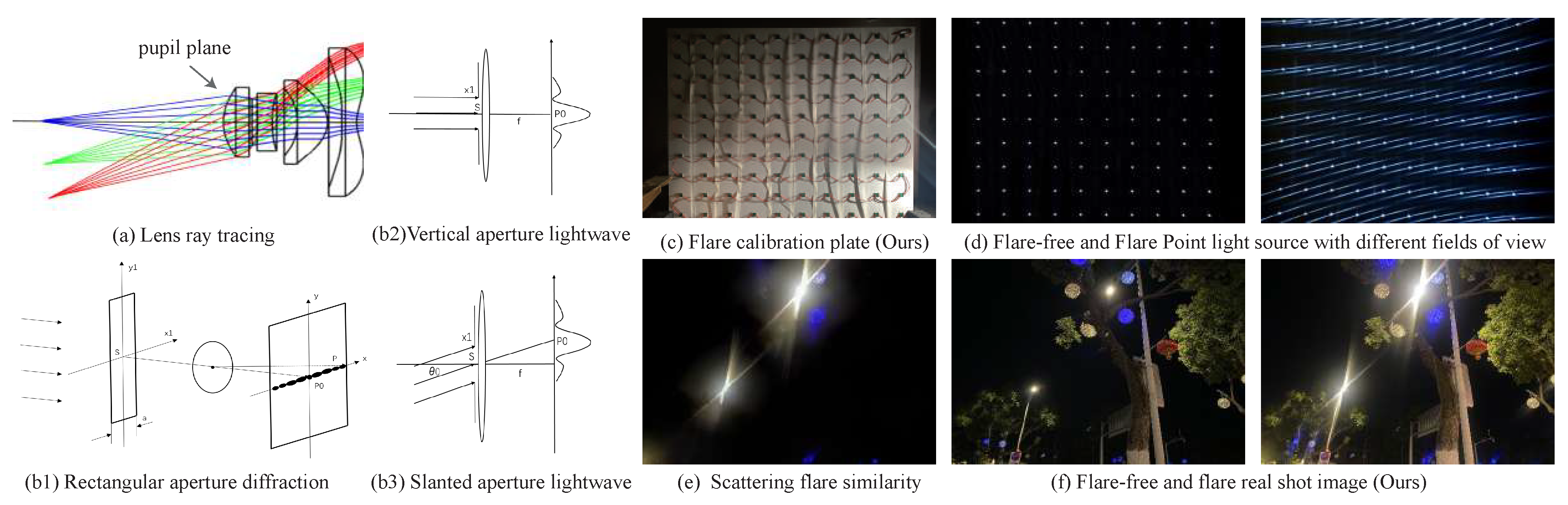

3.1. Scattering Flare

3.2. Reflective Flare

4. Proposed Method

4.1. Scattering Flare Similarity

4.2. Scattering Flare Datasets

- (1) Wipe the protective glass with isopropyl alcohol (IPA) and a cleaning cloth.

- (2) Find a suitable shooting position where a light source in the scene produces flare, and hold the camera steady.

- (3) Capture the ground-truth image.

- (4) Spread oil and dust onto the protective glass to degrade the pupil plane.

- (5) Capture the flare image produced by the degraded pupil plane.

- (6) Repeat steps (4) and (5) to obtain multiple sets of scattering flare images; then return to step (1), clean the protective glass, and start the process again.
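Steps (1)–(6) yield, for each cleaning cycle, one clean ground-truth shot followed by several flare shots of the same scene. The sketch below shows one way such captures could be organized into training pairs. The `CaptureCycle` structure, the `build_pairs` helper, and the flare-layer estimate (the non-negative difference between a flare shot and its ground truth, which assumes linear intensities, an unchanged scene, and identical exposure settings) are hypothetical illustrations, not a procedure stated in the paper.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Image = List[float]  # hypothetical: a flattened image in linear intensity


@dataclass
class CaptureCycle:
    """One pass through steps (1)-(6): a clean GT shot, then flare shots."""
    ground_truth: Image
    flare_shots: List[Image] = field(default_factory=list)


def flare_layer(flare_shot: Image, ground_truth: Image) -> Image:
    """Estimate the additive flare as the difference to the GT, clipped at 0."""
    return [max(f - g, 0.0) for f, g in zip(flare_shot, ground_truth)]


def build_pairs(cycles: List[CaptureCycle]) -> List[Tuple[Image, Image, Image]]:
    """Pair every flare shot with the GT captured in the same cleaning cycle."""
    pairs = []
    for cycle in cycles:
        for shot in cycle.flare_shots:
            pairs.append((shot, cycle.ground_truth, flare_layer(shot, cycle.ground_truth)))
    return pairs


cycles = [
    CaptureCycle([0.1, 0.5, 1.0], [[0.3, 0.5, 1.0], [0.2, 0.9, 1.0]]),
    CaptureCycle([0.0, 0.2], [[0.4, 0.1]]),
]
pairs = build_pairs(cycles)  # 3 (input, ground truth, flare) triples
```

Pairing only within a cycle keeps every flare shot matched to the ground truth taken with the same freshly cleaned protective glass.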

4.3. Reflective Flare Centrosymmetry

4.4. Reflective Flare Datasets

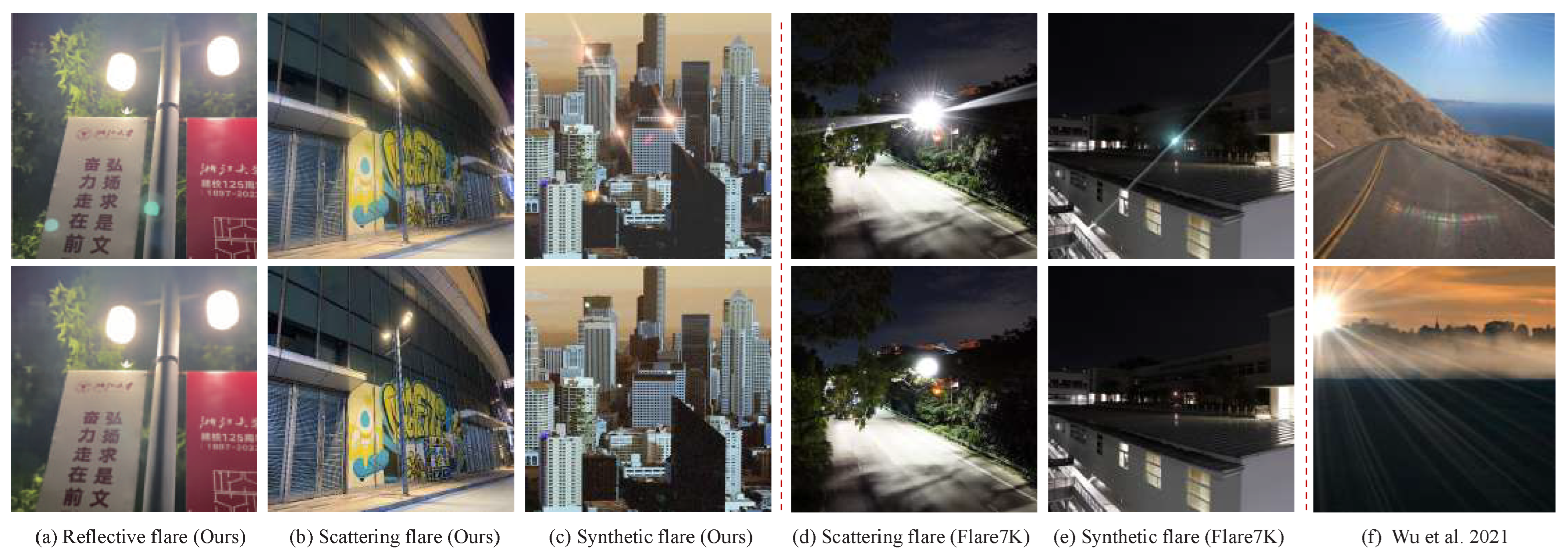

4.5. Comparison with Existing Flare Dataset

5. Experimental Results

5.1. Simulation Data Evaluation

- Base: scattering flare added in the same way as Flare7K.

- R: scattering and reflective flare added in the same way as Flare7K.

- RP: scattering flare plus reflective flare placed according to the centrosymmetric effect.

- MR: scattering flare based on the similarity effect, plus reflective flare.

- MRP: scattering flare based on the similarity effect, plus reflective flare based on the centrosymmetric effect.

- w/o L: the ground-truth image contains no light source; the flare should be removed first, before the light source is added back.
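The five training-data variants differ only in how flare is composited onto a clean image. The sketch below expresses them as flags under assumptions made for this illustration, not taken from the paper: images are small nested lists in linear intensity; scattering flare is drawn from a bank of templates; the similarity effect is modeled by reusing one template for every light source in an image, while the other variants draw an independent template per source; the centrosymmetric effect is modeled by placing the reflective flare (ghost) at the point mirrored through the frame center, taken as the optical center; and non-centrosymmetric variants place the ghost at a random position. All names (`synthesize`, `stamp`, `mirror_point`, `VARIANTS`) are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

Grid = List[List[float]]  # hypothetical: H x W image in linear intensity


def blank(h: int, w: int) -> Grid:
    return [[0.0] * w for _ in range(h)]


def stamp(img: Grid, tmpl: Grid, cx: int, cy: int, gain: float) -> None:
    """Add gain * tmpl centered at (cx, cy), cropping at the image border."""
    h, w = len(img), len(img[0])
    th, tw = len(tmpl), len(tmpl[0])
    for ty in range(th):
        for tx in range(tw):
            y, x = cy + ty - th // 2, cx + tx - tw // 2
            if 0 <= y < h and 0 <= x < w:
                img[y][x] += gain * tmpl[ty][tx]


def mirror_point(x: int, y: int, w: int, h: int) -> Tuple[int, int]:
    """Centrosymmetric position about the frame center (assumed optical center)."""
    return w - 1 - x, h - 1 - y


def synthesize(clean: Grid, lights: Sequence[Tuple[int, int, float]],
               scatter_bank: Sequence[Grid], ghost: Grid, *, reflective: bool,
               similarity: bool, centrosym: bool, rng: random.Random) -> Grid:
    """Composite scattering (and optionally reflective) flare onto clean."""
    h, w = len(clean), len(clean[0])
    flare = blank(h, w)
    shared = rng.choice(scatter_bank)  # reused for every source if similarity=True
    for x, y, gain in lights:
        tmpl = shared if similarity else rng.choice(scatter_bank)
        stamp(flare, tmpl, x, y, gain)
        if reflective:
            if centrosym:
                gx, gy = mirror_point(x, y, w, h)
            else:
                gx, gy = rng.randrange(w), rng.randrange(h)
            stamp(flare, ghost, gx, gy, gain)
    # Additive compositing, clipped at an assumed saturation level of 1.0.
    return [[min(c + f, 1.0) for c, f in zip(rc, rf)] for rc, rf in zip(clean, flare)]


VARIANTS: Dict[str, Dict[str, bool]] = {
    "base": dict(reflective=False, similarity=False, centrosym=False),
    "R": dict(reflective=True, similarity=False, centrosym=False),
    "RP": dict(reflective=True, similarity=False, centrosym=True),
    "MR": dict(reflective=True, similarity=True, centrosym=False),
    "MRP": dict(reflective=True, similarity=True, centrosym=True),
}

# Usage (hypothetical values): one light source, MRP-style compositing.
rng = random.Random(0)
tmpl = [[0.0, 0.1, 0.0], [0.1, 1.0, 0.1], [0.0, 0.1, 0.0]]
out = synthesize(blank(9, 9), [(1, 2, 0.5)], [tmpl], [[0.5]], rng=rng, **VARIANTS["MRP"])
```

Here the light source at (1, 2) in a 9 × 9 frame receives its ghost at the mirrored position (7, 6); with similarity enabled, all light sources in one image share the same scattering template.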

5.2. Real Shot Data Evaluation

5.3. Dataset Performance Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Crocherie, A.; Pond, J.; Gomez, F.D.; Channon, K.; Fantoni, F. Micro to macro scale simulation coupling for stray light analysis. Opt. Express 2021, 29, 37639–37652.

- [2] Dai, Y.; Li, C.; Zhou, S.; Feng, R.; Loy, C.C. Flare7K: A Phenomenological Nighttime Flare Removal Dataset. Adv. Neural Inf. Process. Syst. 2022, 35, 3926–3937.

- [3] Wu, Y.; He, Q.; Xue, T.; Garg, R.; Chen, J.; Veeraraghavan, A.; Barron, J.T. How to train neural networks for flare removal. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2239–2247.

- [4] Fu, Y.; Liu, Y.; Li, J.; Luo, D.; Lv, S.; Jv, Y.; Xie, L. Uformer: A unet based dilated complex & real dual-path conformer network for simultaneous speech enhancement and dereverberation. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 7417–7421.

- [5] Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- [6] Talvala, E.V.; Adams, A.; Horowitz, M.; Levoy, M. Veiling glare in high dynamic range imaging. ACM Trans. Graph. (TOG) 2007, 26, 37-es.

- [7] Sassoon, E.; Schechner, Y.Y.; Treibitz, T. Flare in interference-based hyperspectral cameras. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10174–10182.

- [8] Reinhard, E.; Ward, G.; Pattanaik, S.N.; Debevec, P.E.; Heidrich, W. High Dynamic Range Imaging: Acquisition, Display, and Image-Based Lighting; Morgan Kaufmann: Burlington, MA, USA, 2010.

- [9] Asha, C.; Bhat, S.K.; Nayak, D.; Bhat, C. Auto removal of bright spot from images captured against flashing light source. In Proceedings of the 2019 IEEE International Conference on Distributed Computing, VLSI, Electrical Circuits and Robotics (DISCOVER), Manipal, India, 11–12 August 2019; pp. 1–6.

- [10] Vitoria, P.; Ballester, C. Automatic Flare Spot Artifact Detection and Removal in Photographs. J. Math. Imaging Vis. 2019, 61, 515–533.

- [11] Qiao, X.; Hancke, G.P.; Lau, R. Light Source Guided Single-Image Flare Removal From Unpaired Data. In Proceedings of the International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

- [12] Sun, Q.; Tseng, E.; Fu, Q.; Heidrich, W.; Heide, F. Learning Rank-1 Diffractive Optics for Single-Shot High Dynamic Range Imaging. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- [13] Feng, R.; Li, C.; Chen, H.; Li, S.; Loy, C.C.; Gu, J. Removing diffraction image artifacts in under-display camera via dynamic skip connection network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 662–671.

- [14] Song, Y.; He, Z.; Qian, H.; Du, X. Vision Transformers for Single Image Dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

- [15] Li, C.; Yang, Y.; He, K.; Lin, S.; Hopcroft, J.E. Single Image Reflection Removal Through Cascaded Refinement. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- [16] Gu, J.; Ramamoorthi, R.; Belhumeur, P.; Nayar, S. Removing image artifacts due to dirty camera lenses and thin occluders. In ACM SIGGRAPH Asia 2009 Papers; ACM: New York, NY, USA, 2009; pp. 1–10.

- [17] Dai, Y.; Li, C.; Zhou, S.; Feng, R.; Luo, Y.; Loy, C.C. Flare7K++: Mixing Synthetic and Real Datasets for Nighttime Flare Removal and Beyond. arXiv 2023, arXiv:2306.04236.

- [18] Dai, Y.; Li, C.; Zhou, S.; Feng, R.; Zhu, Q.; Sun, Q.; Sun, W.; Loy, C.C.; Gu, J.; Liu, S.; et al. MIPI 2023 Challenge on Nighttime Flare Removal: Methods and Results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2852–2862.

- [19] Lan, F.; Chen, C.W. Tackling Scattering and Reflective Flare in Mobile Camera Systems: A Raw Image Dataset for Enhanced Flare Removal. arXiv 2023, arXiv:2307.14180.

- [20] Dai, Y.; Luo, Y.; Zhou, S.; Li, C.; Loy, C.C. Nighttime smartphone reflective flare removal using optical center symmetry prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 20783–20791.

- [21] Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ren, N. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2022, 65, 99–106.

- [22] Zhang, K.; Riegler, G.; Snavely, N.; Koltun, V. NeRF++: Analyzing and improving neural radiance fields. arXiv 2020, arXiv:2010.07492.

- [23] Yu, A.; Ye, V.; Tancik, M.; Kanazawa, A. pixelNeRF: Neural radiance fields from one or few images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4578–4587.

- [24] Müller, T.; Evans, A.; Schied, C.; Keller, A. Instant Neural Graphics Primitives with a Multiresolution Hash Encoding. ACM Trans. Graph. 2022, 41, 1–15.

- [25] Tancik, M.; Casser, V.; Yan, X.; Pradhan, S.; Mildenhall, B.; Srinivasan, P.P.; Barron, J.T.; Kretzschmar, H. Block-NeRF: Scalable Large Scene Neural View Synthesis. arXiv 2022, arXiv:2202.05263.

- [26] Turki, H.; Ramanan, D.; Satyanarayanan, M. Mega-NeRF: Scalable construction of large-scale NeRFs for virtual fly-throughs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12922–12931.

- [27] Martin-Brualla, R.; Radwan, N.; Sajjadi, M.S.; Barron, J.T.; Dosovitskiy, A.; Duckworth, D. NeRF in the Wild: Neural radiance fields for unconstrained photo collections. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7210–7219.

- [28] Mildenhall, B.; Hedman, P.; Martin-Brualla, R.; Srinivasan, P.P.; Barron, J.T. NeRF in the Dark: High dynamic range view synthesis from noisy raw images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16190–16199.

- [29] Hullin, M.; Eisemann, E.; Seidel, H.P.; Lee, S. Physically-based real-time lens flare rendering. In ACM SIGGRAPH 2011 Papers, Proceedings of the SIGGRAPH ’11: Special Interest Group on Computer Graphics and Interactive Techniques Conference, Vancouver, BC, Canada, 7–11 August 2011; ACM: New York, NY, USA, 2011; pp. 1–10.

- [30] Lee, S.; Eisemann, E. Practical real-time lens-flare rendering. In Computer Graphics Forum; Wiley Online Library: New York, NY, USA, 2013; Volume 32, pp. 1–6.

- [31] Zhang, X.; Ng, R.; Chen, Q. Single image reflection separation with perceptual losses. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4786–4794.

- [32] Rabbani, M.; Jones, P.W. Digital Image Compression Techniques; SPIE Press: Bellingham, WA, USA, 1991; Volume 7.

- [33] Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

| Dataset | Simulation: Scattered | Simulation: Similarity | Simulation: Reflected | Simulation: Centrosymmetry | Real (Benchmark): Type | Real (Benchmark): Scattered | Real (Benchmark): Similarity | Real (Benchmark): Reflected | Real (Benchmark): Centrosymmetry |
|---|---|---|---|---|---|---|---|---|---|
| Wu et al. [3] | 2000 | 2000 | ✓ | 20 | 20 | ||||
| Flare7K | 7000 | 7000 | 100 | ||||||
| Ours | 5000 × 5 | ✓ | 5000 × 4 | ✓ | 500 | ✓ | 2000 | ✓ | |

| Dataset (no light source in GT) | base w/o L | R w/o L | RP w/o L | MR w/o L | MRP w/o L |
|---|---|---|---|---|---|
| Restormer (PSNR) | 24.04 (2.92%) | 23.45 (10.2%) | 24.15 (16.2%) | 24.29 (0.00%) | 23.91 (4.47%) |
| Restormer (SSIM) | 0.905 (4.40%) | 0.898 (12.1%) | 0.909 (0.00%) | 0.907 (2.20%) | 0.906 (3.30%) |
| Uformer (PSNR) | 24.39 (6.41%) | 24.69 (2.80%) | 23.13 (23.0%) | 24.47 (5.44%) | 24.93 (0.00%) |
| Uformer (SSIM) | 0.887 (24.2%) | 0.909 (0.00%) | 0.854 (60.4%) | 0.897 (13.2%) | 0.902 (7.70%) |
| Increase scattering flare | ✓ | ✓ | |||
| Reflective flare in input | ✓ | ✓ | ✓ | ✓ | |
| Scattering flare similarity | ✓ | ||||
| Reflective flare centrosymmetry | ✓ | ✓ | ✓ | ||

| Dataset (light source in GT) | base | R | RP | MR | MRP |
|---|---|---|---|---|---|
| Restormer (PSNR) | 24.15 (12.7%) | 24.38 (9.77%) | 24.43 (9.14%) | 24.45 (8.89%) | 25.19 (0.00%) |
| Restormer (SSIM) | 0.907 (5.68%) | 0.902 (11.3%) | 0.912 (0.00%) | 0.909 (3.41%) | 0.912 (0.00%) |
| Uformer (PSNR) | 24.85 (2.21%) | 24.88 (1.86%) | 24.92 (1.39%) | 24.99 (0.58%) | 25.04 (0.00%) |
| Uformer (SSIM) | 0.910 (25.0%) | 0.913 (20.8%) | 0.918 (13.9%) | 0.917 (15.3%) | 0.928 (0.00%) |
| Scattering flare | ✓ | ✓ | ✓ | ✓ | ✓ |
| Reflective flare | ✓ | ✓ | ✓ | ✓ | |
| Scattering flare similarity | ✓ | ✓ | |||
| Reflective flare centrosymmetry | ✓ | ✓ | |||

| Test set | base | R | RP | MR | MRP |
|---|---|---|---|---|---|
| Flare7K synthetic (PSNR) | 24.52 (22.6%) | 25.46 (10.0%) | 24.72 (19.8%) | 25.76 (6.29%) | 26.29 (0.00%) |
| Flare7K synthetic (SSIM) | 0.942 (7.41%) | 0.944 (3.70%) | 0.943 (5.56%) | 0.946 (0.00%) | 0.936 (18.5%) |
| Flare7K real (PSNR) | 24.15 (12.7%) | 24.38 (9.77%) | 24.43 (9.14%) | 24.45 (8.89%) | 25.19 (0.00%) |
| Flare7K real (SSIM) | 0.907 (5.68%) | 0.902 (11.4%) | 0.912 (0.00%) | 0.909 (3.41%) | 0.912 (0.00%) |
| Wu’s real (PSNR) | 21.07 (36.3%) | 21.52 (29.4%) | 23.25 (6.05%) | 23.37 (4.59%) | 23.76 (0.00%) |
| Wu’s real (SSIM) | 0.890 (6.80%) | 0.892 (4.85%) | 0.896 (0.97%) | 0.890 (6.80%) | 0.897 (0.00%) |
| Scattering flare real (ours) (PSNR) | 24.75 (6.66%) | 24.82 (5.80%) | 24.79 (6.17%) | 25.09 (2.56%) | 25.31 (0.00%) |
| Scattering flare real (ours) (SSIM) | 0.916 (6.33%) | 0.917 (5.06%) | 0.919 (2.53%) | 0.921 (0.00%) | 0.914 (8.86%) |
| Reflective flare real (ours) (PSNR) | 33.92 (14.6%) | 33.76 (16.7%) | 34.16 (11.4%) | 27.19 (149%) | 35.10 (0.00%) |
| Reflective flare real (ours) (SSIM) | 0.983 (30.8%) | 0.983 (30.8%) | 0.984 (23.1%) | 0.980 (53.9%) | 0.987 (0.00%) |
| Reflective flare real (ours) (LPIPS) | 0.1412 (18.6%) | 0.1384 (16.2%) | 0.1292 (8.48%) | 0.1569 (31.7%) | 0.1191 (0.00%) |
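The parenthesized percentages in the tables can be reproduced, for the entries checked here, as the relative error increase with respect to the best entry in the same row: the RMSE increase implied by the PSNR gap, the relative increase of the SSIM deficit 1 − SSIM, and the relative increase of LPIPS. This convention is inferred from the numbers, not stated in the text, and the helper names below are hypothetical.

```python
def psnr_gap_pct(psnr: float, best_psnr: float) -> float:
    """RMSE increase (%) implied by a PSNR gap: 10**(gap_dB / 20) - 1."""
    return (10 ** ((best_psnr - psnr) / 20) - 1) * 100


def ssim_gap_pct(ssim: float, best_ssim: float) -> float:
    """Increase (%) of the SSIM deficit 1 - SSIM relative to the best entry."""
    return ((1 - ssim) / (1 - best_ssim) - 1) * 100


def lpips_gap_pct(lpips: float, best_lpips: float) -> float:
    """Increase (%) of LPIPS (lower is better) relative to the best entry."""
    return (lpips / best_lpips - 1) * 100


# Restormer, no light source in GT: base w/o L vs. the best entries
# (MR w/o L for PSNR, RP w/o L for SSIM).
print(f"{psnr_gap_pct(24.04, 24.29):.2f}%")     # 2.92%
print(f"{ssim_gap_pct(0.905, 0.909):.2f}%")     # 4.40%
# Reflective flare real (ours), LPIPS: base vs. MRP.
print(f"{lpips_gap_pct(0.1412, 0.1191):.1f}%")  # 18.6%
```

Under this reading, 0.00% marks the best result in each row, and a larger percentage means a larger error relative to that best result. The SSIM form is undefined when the best SSIM equals 1.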

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, Z.; Feng, H.; Xu, Z.; Chen, Y. A Data Generation Method for Image Flare Removal Based on Similarity and Centrosymmetric Effect. Photonics 2023, 10, 1072. https://doi.org/10.3390/photonics10101072

Jin Z, Feng H, Xu Z, Chen Y. A Data Generation Method for Image Flare Removal Based on Similarity and Centrosymmetric Effect. Photonics. 2023; 10(10):1072. https://doi.org/10.3390/photonics10101072

Chicago/Turabian Style: Jin, Zheyan, Huajun Feng, Zhihai Xu, and Yueting Chen. 2023. "A Data Generation Method for Image Flare Removal Based on Similarity and Centrosymmetric Effect" Photonics 10, no. 10: 1072. https://doi.org/10.3390/photonics10101072

APA Style: Jin, Z., Feng, H., Xu, Z., & Chen, Y. (2023). A Data Generation Method for Image Flare Removal Based on Similarity and Centrosymmetric Effect. Photonics, 10(10), 1072. https://doi.org/10.3390/photonics10101072