1. Introduction

The Traveling Salesman Problem (TSP) naturally occurs in different engineering disciplines. The basic problem formulation is simple: a person has a map of cities to visit, must visit each city exactly once before returning to the starting position, while minimizing the total distance traveled.

Efficient solutions to routing and combinatorial optimization problems such as the TSP underpin a wide range of applications in engineering design, robotics, logistics, and smart manufacturing [1,2,3,4]. By improving the robustness and convergence characteristics of evolutionary operators, and thereby shortening total traveling distances, the analysis presented here contributes to the development of optimization frameworks that support sustainable technological systems and intelligent decision-making.

Efficient TSP solvers have broader societal relevance, as they align with several United Nations Sustainable Development Goals (SDGs) [5]. Their impact extends beyond computational optimization into real-world sustainability challenges:

SDG 3 (Good Health and Well-Being): Efficient routing contributes indirectly to improved public health by reducing vehicle emissions, noise pollution, and traffic congestion.

SDG 9 (Industry, Innovation, and Infrastructure): Optimized routing enhances the efficiency of industrial and manufacturing systems, including automated guided vehicles (AGVs), robotic manipulators, and production logistics.

SDG 11 (Sustainable Cities and Communities): In urban contexts, improved routing algorithms are applied to public transport scheduling, postal delivery, and waste collection.

SDG 12 (Responsible Consumption and Production): Reduced travel distances translate directly into lower resource and energy consumption per delivered product.

SDG 13 (Climate Action): Transportation is a significant contributor to greenhouse gas emissions. Even modest improvements in route efficiency, when applied across vehicle fleets, yield substantial reductions in CO2 output.

Collectively, these outcomes demonstrate how algorithmic intelligence contributes to sustainable and resilient engineering solutions—bridging computational optimization with environmental and societal impact.

Despite the simplicity of the problem formulation, the solution to this problem is mathematically complex, since the problem is NP-hard [6]. No polynomial-time exact algorithm is known, and the computational effort of finding an exact optimal solution by brute-force enumeration grows factorially with the number of cities, n!. As a consequence, brute-force approaches become infeasible even for moderately sized problem instances. Assuming 2 × 10⁹ ops/s, the time t for solving a 10-city instance is on the order of microseconds, for a 15-city instance seconds, and for 20 cities it is already measured in years. Alternative approaches to solving this problem are therefore an important and active field of research, and reported results show that even advanced solvers struggle to scale well beyond tens of cities [7,8]. A well-known improvement over brute force is the Held–Karp dynamic programming algorithm [9], which substantially reduces the search effort compared to enumerating all possible tours. However, the time and memory required to store intermediate states in dynamic programming tables grow exponentially, which typically limits this algorithm to smaller instances of around 20–25 cities on standard hardware [10,11,12]. Thus, although considerably more efficient than brute force, such exact approaches remain limited to small problem sizes.
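The orders of magnitude quoted for brute force can be checked with a short calculation. The sketch below is only an illustration under two assumptions that the text does not state: each tour costs a single operation, and a symmetric instance has (n − 1)!/2 distinct tours (fixed start city, direction ignored). The function name and constants are hypothetical.

```python
import math

OPS_PER_SECOND = 2e9                    # rate quoted in the text
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_seconds(n_cities, ops_per_second=OPS_PER_SECOND):
    """Time to enumerate every distinct tour of a symmetric TSP.

    Assumptions (not from the paper): one operation per tour and
    (n - 1)! / 2 distinct tours.
    """
    distinct_tours = math.factorial(n_cities - 1) // 2
    return distinct_tours / ops_per_second


if __name__ == "__main__":
    for n in (10, 15, 20, 25):
        seconds = brute_force_seconds(n)
        years = seconds / SECONDS_PER_YEAR
        print(f"n = {n:2d}: {seconds:.3e} s ({years:.3e} years)")
```

Under these assumptions, the 10-city case falls in the tens of microseconds, the 15-city case in the tens of seconds, and the 20-city case at roughly one year, which is consistent with the scale described above. Counting n! tours instead shifts each figure upward but does not change the conclusion.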

This limitation motivates the development of advanced heuristic and metaheuristic approaches for solving the TSP and related combinatorial optimization problems, as well as general high-dimensional optimization problems [13]. Among the most widely studied are Evolutionary Algorithms (EA) [14], Particle Swarm Optimization (PSO) [15], Ant Colony Optimization (ACO) [16], and Artificial Bee Colony (ABC) [17] algorithms, as well as combinations of these algorithms [18]. In [19], the authors use rule-based mechanisms for the PSO algorithm to successfully solve problems with hundreds of nodes. A hybrid evolutionary algorithm is successfully used to solve multiple TSP and mTSP problems in [20]. The ACO algorithm is successfully applied to the TSP with parameter tuning in [21,22]. Another swarm algorithm, ABC with local heuristic mechanisms, is successfully used for a variety of TSP instances of up to 64 cities in [23]. All these methods share similar characteristics: they employ nature-inspired algorithms based on the social behavior of biological organisms to solve a complex problem. Moreover, all of them are so-called population-based algorithms, meaning that they operate on populations of potential solutions, rather than on a single solution, to explore the search space in a parallel manner [24].

Recent studies also highlight the importance of proper operator selection within evolutionary algorithms [25]. However, these studies usually report either small TSP instances or single-run results without rigorous statistical evaluation. As a result, the fundamental question of how canonical crossover operators differ in convergence behavior, solution quality, and computational efficiency under controlled conditions remains insufficiently addressed.

These algorithms are heuristic, meaning they do not formally guarantee an optimal solution [26]. Besides problem size, the quality of the solution depends on the algorithm’s parameters. For evolutionary algorithms (EAs), and in particular genetic algorithms (GAs), which are used in this study, these parameters are the crossover operator and its probability, the mutation operator and its probability, and general settings such as population size and selection mechanism. When applied to the TSP, the computational effort of a GA is mainly determined by the number of generations (or iterations) it executes, the population size (number of candidate solutions evaluated per generation), and the cost of evaluating a single tour. In the symmetric TSP, this evaluation simply adds the distances between consecutive cities, so the work for processing a candidate solution grows proportionally with the number of cities. If population size and number of generations are fixed, or increase slowly, the overall computational effort increases roughly proportionally with the number of cities. This represents a significant improvement compared to exhaustive or exact methods, whose requirements grow factorially or exponentially and become infeasible for moderately sized instances.
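The linear cost of evaluating one candidate can be made concrete with a minimal sketch. It assumes a precomputed distance matrix and cities labelled 0 to n − 1; the function names and the four-point example are illustrative and not taken from the authors' implementation.

```python
import math


def euclidean_matrix(points):
    """Full pairwise distance matrix for a list of (x, y) points."""
    return [[math.dist(p, q) for q in points] for p in points]


def tour_length(tour, dist):
    """Length of a closed tour: one matrix lookup per city, so O(n)."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


# Unit square: walking the perimeter is shorter than crossing the diagonals.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
dist = euclidean_matrix(square)
perimeter = tour_length([0, 1, 2, 3], dist)
crossing = tour_length([0, 2, 1, 3], dist)
```

Building the matrix costs O(n²) once per instance, whereas each of the many fitness evaluations performed during the search costs only O(n).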

The important tradeoff is that heuristic algorithms, including GAs, cannot guarantee global optimality. EAs are therefore applicable to large TSP instances, where exact methods are computationally infeasible, but there is no guarantee that the absolute shortest route will be found. In practice, even if the absolute shortest route is not found, a solution significantly better than the starting point will almost certainly be found. Comparing the solution at the end of the evolutionary search to the best individual from the initial population gives a clear and measurable indication of the improvement the algorithm has made, even though the result may not be the best possible solution. In engineering applications, such tradeoffs are often entirely acceptable, since high-quality solutions can be obtained with realistic effort compared to the high cost of searching for the absolute optimum.

Despite extensive research on the Traveling Salesman Problem (TSP) and the broad use of evolutionary and other algorithms for its solution, a clear understanding of how different crossover operators affect convergence, solution quality, and computational efficiency under controlled conditions is still largely lacking.

In this paper, we present a statistically controlled comparison of five canonical crossover operators across multiple datasets of different sizes, using 30 independent GA runs and nonparametric statistical testing (the Friedman test, and Wilcoxon with Holm correction). The analysis confirms measurable performance differences at the raw level, although after multiplicity correction, only AEX remains consistently distinguishable from the other operators. In addition, we demonstrate practical relevance by optimizing a real route for 50 and 100 Croatian cities and quantifying associated impacts on energy use and CO2 emissions. The findings provide a rigorous comparative evaluation of crossover operators and highlight their broader relevance in the context of the UN Sustainable Development Goals.

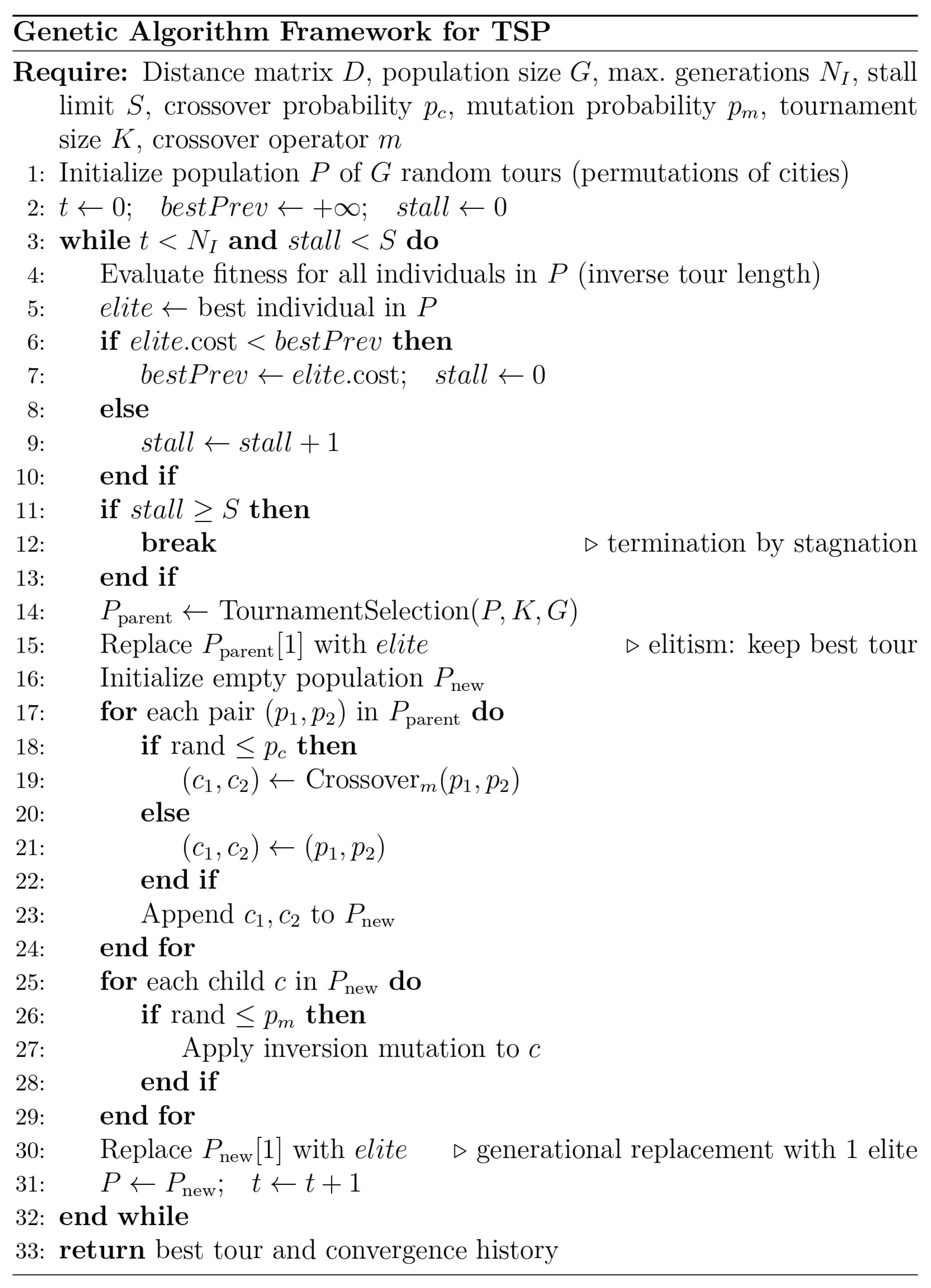

3. Implementation of Genetic Algorithm

An initial parameter sweep is performed on a randomly generated TSP map with 50 uniformly distributed nodes. The motivation for this preliminary step is to identify favorable combinations of mutation probability, crossover probability, and population size across all crossover operators. Such values, if they exist, would later be used as a fixed baseline for comparative testing of the five crossover operators. The main algorithmic procedure of the genetic algorithm used in this study is given in Figure 1.

The genetic algorithm (GA) employs tournament selection of size k = 3 with one elite member, which ensures that the best fitness in the population never worsens from one generation to the next. For the exploratory sweep step, an exhaustive search is performed over a grid of crossover probability pc, mutation probability pm, and population size G.

A step of 0.1 was used for both the crossover and mutation sweep grids. For crossover, an additional value of 0.95 (half a step above 0.9) was included to also test very high crossover rates. Population sizes were discretized and evaluated in the set G ∈ {50, 100, 150, 200}.

The algorithm was run for at most NI = 5000 generations (hard limit), with an early stopping criterion (stall limit) that terminates a run if no improvement occurred over the last S = 500 generations. Each configuration was repeated 10 times to increase the representativeness of the results. This setup is computationally very demanding, as the total number of runs grows rapidly due to the combinatorial expansion of tested parameter configurations. For this reason, population sizes greater than 200 individuals were not considered, as they would be computationally prohibitive within the scope of this study. Moreover, as shown in Section 4, a population of 200 individuals already provides sufficient diversity for the best-performing operators to reach near-optimal solutions within an acceptable runtime.
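The selection, elitism, and stopping rules described above can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: the mutation operator is not specified in the text, so a swap mutation is assumed, and `clone_first_parent` is a hypothetical placeholder for whichever crossover operator is under test.

```python
import random


def tournament_select(population, lengths, k, rng):
    """Return the shortest tour among k randomly drawn individuals."""
    contenders = rng.sample(range(len(population)), k)
    return population[min(contenders, key=lambda i: lengths[i])]


def swap_mutation(tour, rng):
    """Assumed mutation: exchange two randomly chosen cities."""
    i, j = rng.sample(range(len(tour)), 2)
    tour = tour[:]
    tour[i], tour[j] = tour[j], tour[i]
    return tour


def clone_first_parent(a, b, rng):
    """Placeholder standing in for PMX, OX, CX, ERX, or AEX."""
    return a[:]


def run_ga(dist, crossover, mutate, pop_size=200, pc=0.7, pm=0.4,
           max_generations=5000, stall_limit=500, k=3, seed=1):
    rng = random.Random(seed)
    n = len(dist)

    def tour_length(t):
        return sum(dist[t[i]][t[(i + 1) % n]] for i in range(n))

    population = [rng.sample(range(n), n) for _ in range(pop_size)]
    lengths = [tour_length(t) for t in population]
    best, stall = min(lengths), 0
    history = [best]
    for _ in range(max_generations):
        elite = population[lengths.index(min(lengths))]
        offspring = [elite[:]]          # one elite survives unchanged
        while len(offspring) < pop_size:
            a = tournament_select(population, lengths, k, rng)
            b = tournament_select(population, lengths, k, rng)
            child = crossover(a, b, rng) if rng.random() < pc else a[:]
            if rng.random() < pm:
                child = mutate(child, rng)
            offspring.append(child)
        population = offspring
        lengths = [tour_length(t) for t in population]
        current = min(lengths)
        stall = 0 if current < best else stall + 1
        best = min(best, current)
        history.append(best)
        if stall >= stall_limit:        # early stop: no recent improvement
            break
    winner = lengths.index(min(lengths))
    return population[winner], lengths[winner], history
```

Because the elite is copied unchanged into every new generation, the recorded best tour length can only stay the same or decrease, which is the monotonic behavior the text attributes to elitism.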

Table 1 summarizes the results of the parameter sweep step, reporting for each operator the combination of parameters with the lowest average tour length across the entire search space. These optimal configurations are also given in

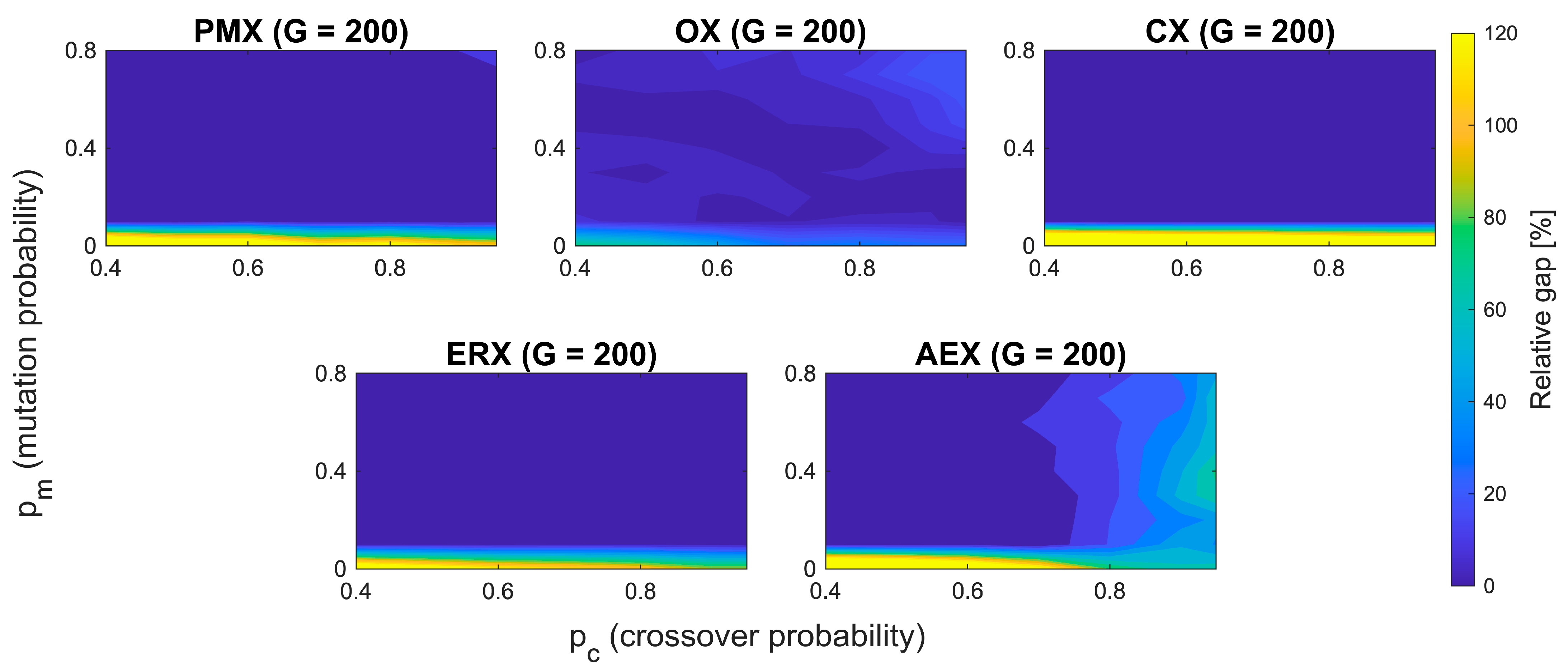

Figure 2.

Complete parameter landscape plots with top 10% contour regions are provided in Figures S1–S5. For consistency, only the best-performing configurations per operator are given in Table 1. All the operators show a decreasing optimality gap with increasing population size, except for PMX, which shows similar results for population sizes of 150 and 200. All the operators except AEX show a stable basin of performance across a relatively large range of mutation and crossover probabilities (large top 10% contour regions in Figures S1–S5). AEX, by contrast, behaves differently. First, it shows low sensitivity to population size, with the optimality gap decreasing only marginally as the population increases. Second, sharp boundaries are present, causing sudden changes from good to bad performance, for example around a crossover probability of ~0.85, where a slight increase in pc degrades performance. The same holds for mutation probability, where a horizontal flat segment around pm ~ 0.1 indicates high sensitivity at this value. Compared to the other operators, AEX shows much more unpredictable behavior and sensitivity, which leads to fragility with respect to both parameters pm and pc.

To quantify the sensitivity of the AEX operator under its best achievable performance, the shortest tour length across the four defined population sizes (50, 100, 150, and 200) was identified for each of the 7 × 9 (pc, pm) parameter combinations. The standard deviation was then computed across the resulting 63 best values. For AEX, the calculated variability is approximately 3.27 times larger than the corresponding value for OX. In terms of variance, this indicates that AEX is about an order of magnitude (≈ 10.7 times) less stable than OX, confirming its fragility to parameter settings even in its best configurations. Based on these insights, a shared region is identified that provides consistently good performance across all the operators.
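The sensitivity measure described above can be sketched in a few lines. The data layout (a dictionary keyed by pc, pm, and population size) and the use of the sample standard deviation are assumptions for illustration, since the text does not state either.

```python
import statistics


def best_case_spread(mean_lengths):
    """Standard deviation of the best tour length per (pc, pm) cell.

    mean_lengths maps (pc, pm, population_size) -> tour length.
    For each (pc, pm) pair the minimum over population sizes is kept,
    then the spread of those minima is returned (sample std, assumed).
    """
    best = {}
    for (pc, pm, _pop), value in mean_lengths.items():
        key = (pc, pm)
        best[key] = min(value, best.get(key, float("inf")))
    return statistics.stdev(list(best.values()))


# Converting the reported ratio of standard deviations to a variance ratio.
STD_RATIO_AEX_TO_OX = 3.27            # value quoted in the text
variance_ratio = STD_RATIO_AEX_TO_OX ** 2
```

Squaring the reported ratio of 3.27 gives a variance ratio of about 10.7, which is where the order-of-magnitude statement comes from.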

Although AEX shows fragmented behavior, the remaining operators (PMX, OX, CX, ERX) all share a broad and stable region pm ≈ [0.4, 0.7] and pc ≈ [0.6, 0.9]. To ensure comparability across operators and to avoid regions that cause clearly unstable behavior in AEX, a common set of parameters is adopted: pm = 0.4, pc = 0.7, and G = 200. For every operator, this parameter combination lies within its own top 10% performance region. This means that each operator is evaluated under settings close to its own best-performing region, without biasing the comparison toward any operator. The relative gap is calculated as follows:

$$\mathrm{gap}(p_c, p_m, G) = \frac{\bar{L}(p_c, p_m, G) - L_{\min}}{L_{\min}}$$

where $\bar{L}(p_c, p_m, G)$ is the mean best route length obtained over ten independent runs for a given combination of pc and pm, and population size G. Lmin is the minimum mean tour length achieved across all tested parameter combinations for the same crossover operator and population size (not the exact TSP route optimum). Runtime was measured, and it scales approximately linearly with population size. Averaged across all combinations of pm and pc and all crossover operators, the mean runtime per run was approximately 0.92 s (G = 50), 1.81 s (G = 100), 2.68 s (G = 150), and 3.13 s (G = 200). Detailed run times are given in the following sections per operator and per map.
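As a small illustration of the relative gap defined in this section, the sketch below computes it for every (pc, pm) cell of one operator at one population size. The dictionary layout and the toy numbers are hypothetical, and the gap is expressed as a fraction; whether the authors scale it to percent is not stated.

```python
def relative_gaps(mean_lengths):
    """Relative gap of each configuration to the best mean tour length.

    mean_lengths maps (pc, pm) -> mean best tour length for a single
    operator and a single population size. L_min is the smallest of
    these means, not the true TSP optimum.
    """
    l_min = min(mean_lengths.values())
    return {cfg: (value - l_min) / l_min for cfg, value in mean_lengths.items()}


# Hypothetical sweep results for illustration only.
toy_sweep = {(0.7, 0.4): 600.0, (0.8, 0.4): 630.0, (0.9, 0.1): 720.0}
gaps = relative_gaps(toy_sweep)
```

By construction, the best configuration has a gap of exactly zero, so the measure ranks configurations within one operator but does not compare operators against the true optimum.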

Operators CX, PMX, and OX are the fastest because they rely on simple positional and mapping operations. ERX is slower since it must maintain adjacency lists during recombination, but it remains competitive with CX, PMX, and OX in terms of run time. AEX is significantly slower, typically 2 to 4 times slower than the other operators across all experiments. This underperformance, both in terms of solution quality and run time efficiency, can be linked to its alternating edge construction mechanism. AEX switches between the parents’ edges at every step and thus frequently proposes edges that lead to cities already included in the partially constructed tour. This repeatedly triggers the fallback procedure, which replaces the proposed edge with the lowest-indexed unvisited city. As a result, a large fraction of edges in the offspring are not inherited from the parents but are inserted as the lowest-indexed unvisited city. This has a disruptive effect: it repeatedly destroys useful information from the parents, hinders the exploitation of high-quality links, and brings excessive randomness into the offspring. As a consequence, this operator shows higher variance, slower convergence, and less efficient runtime than the other four operators.
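The alternating-edge mechanism and its fallback can be sketched as below. This follows the verbal description in this section (alternate between the parents' successor edges, and fall back to the lowest-indexed unvisited city on a conflict); the starting city, the order of alternation, and the function name are assumptions, and cities are assumed to be labelled 0 to n − 1.

```python
def aex_crossover(parent_a, parent_b):
    """Alternating edges crossover with a lowest-index fallback.

    Returns the child tour and the number of fallback insertions,
    i.e. edges that were NOT inherited from either parent.
    """
    n = len(parent_a)
    # Successor lookup for each parent: city -> next city in that tour.
    successors = [
        {parent[i]: parent[(i + 1) % n] for i in range(n)}
        for parent in (parent_a, parent_b)
    ]
    child = [parent_a[0]]
    visited = {parent_a[0]}
    fallbacks = 0
    for step in range(n - 1):
        # Alternate A, B, A, ... when proposing the next edge.
        proposal = successors[step % 2][child[-1]]
        if proposal in visited:
            # Conflict: take the lowest-indexed unvisited city instead.
            proposal = min(c for c in range(n) if c not in visited)
            fallbacks += 1
        child.append(proposal)
        visited.add(proposal)
    return child, fallbacks


child, fallbacks = aex_crossover([0, 1, 2, 3, 4], [0, 2, 4, 1, 3])
```

Counting the fallback insertions per offspring gives a direct measure of how much parental edge information is lost, which is the effect the paragraph above holds responsible for the weak performance of AEX.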

4. Results

To provide a consistent and reproducible experimental environment, each crossover operator was first evaluated on three standard benchmark problems from TSPLIB: berlin52, kroA100, and pr124. These maps present different layouts and impose potentially different requirements on the optimization algorithm. The berlin52 instance [40] is built upon 52 actual locations in the city of Berlin, with a geometric layout forming several local groupings; kroA100 [41] is a highly irregular synthetic instance with mixed densities; and pr124 has a striped structure with linear elongated clusters. All the following experiments use the same GA configuration identified in the sweep step: G = 200, pc = 0.7, pm = 0.4, NI = 5000, and stall limit S = 500.

Random seed was fixed with MATLAB’s rng(1) to ensure reproducibility and repeatability of results. This enables other researchers to reproduce the same experimental conditions and validate the findings. All simulations were executed on a workstation equipped with an Apple M1 Silicon processor (8-core CPU, 3.2 GHz maximum clock frequency, 8 GB unified RAM) running macOS 15.6.1. The algorithms were implemented in MATLAB Version 24.1.0.253703 (R2024a), utilizing the Optimization Toolbox (v24.1) for the mixed-integer linear programming solver (intlinprog) and the Statistics and Machine Learning Toolbox (v24.1) for hypothesis testing.

The relative optimality gap is calculated to compare the solutions found by each operator to the known optimal solution from TSPLIB. In the case of randomly generated maps, for smaller problems, the intlinprog solver built into MATLAB can be used to calculate optimal solutions. This solver implements a proprietary mixed-integer linear programming (MILP) engine using branch-and-bound with linear programming (LP) relaxations. It is guaranteed to return a globally optimal solution only when the optimality gap is closed, i.e., when AbsoluteGap = U − L ≤ AbsoluteGapTolerance, where U and L are the solver’s upper and lower bounds. In our experiment, AbsoluteGapTolerance was set to 10⁻⁶, and the Croatia 50-city case reached a final optimality gap of 0, confirming that the returned tour is globally optimal. Larger instances (typically ≥ 100 cities) become computationally intractable for MILP under standard constraints and are therefore not solved exactly in this study.

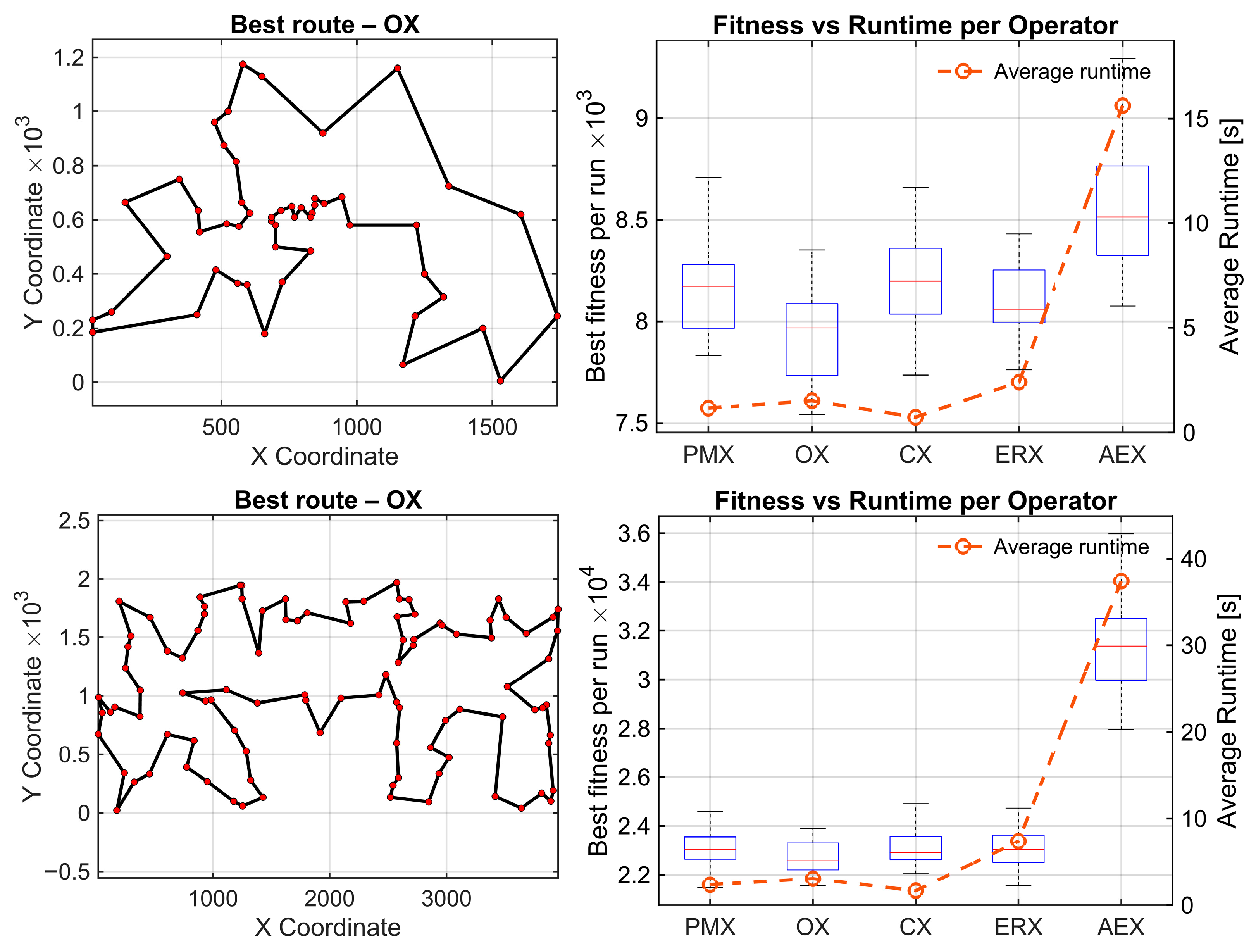

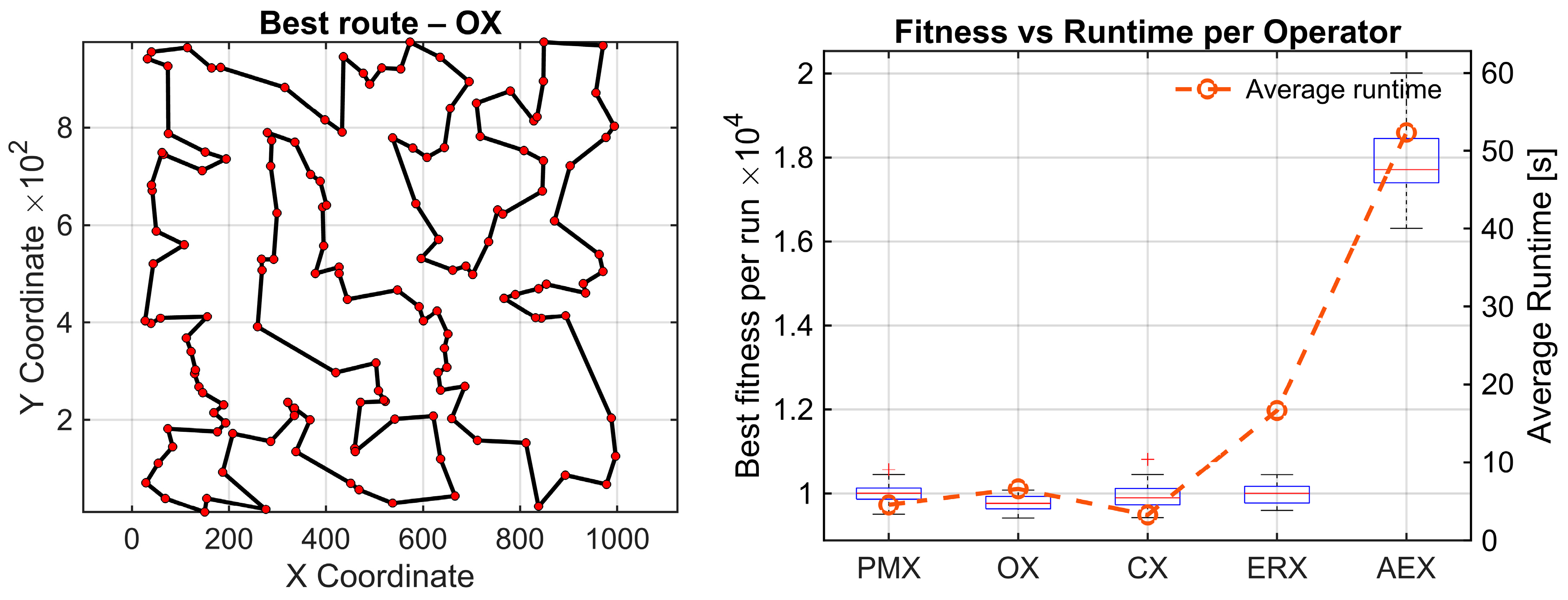

Results for these three benchmark maps are presented in Figure 3. On the left side, the best route found by the statistically best-performing operator for each benchmark is shown. The right side shows box plots summarizing the distribution of shortest routes across 30 independent runs for each crossover operator. The average runtime of each operator is also given. This is the mean wall-clock time required to execute one complete evolutionary run (all generations), averaged over 30 independent runs. To investigate the statistical significance of differences between the results of the operators, statistical analysis is performed using the Friedman test and Wilcoxon post-hoc pairwise comparisons with Holm correction. The use of the Friedman test is validated by performing the Shapiro–Wilk test on paired performance differences for berlin52.tsp. Normality is violated in most comparisons (9 of 10 pairs).

Based on these results, the parametric ANOVA assumptions are not met, so the Friedman test followed by the Holm-corrected Wilcoxon signed-rank test is appropriate. The algorithm runs for up to 5000 generations and evaluates each of the five operators 30 times to obtain statistically representative samples.

To formally compare the crossover operators, the Friedman test was applied to the berlin52, kroA100, and pr124 benchmarks (n = 52, 100, and 124 nodes, respectively). For each map and each operator, the final tour length obtained at the end of the GA run served as the performance metric. Lower tour lengths indicate better solution quality. The Friedman test does not operate on raw values but on ranks. Thus, for each benchmark, the operators were ranked from best to worst based on their tour lengths, with Rank 1 corresponding to the best (shortest) route and Rank 5 to the worst (longest). These rank matrices constitute the input to the Friedman test.
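The ranking step and the resulting statistics can be sketched in plain Python. The sketch assumes that each of the 30 runs forms one block in which the five operators are ranked, and it omits the tie correction that statistical packages usually apply, so it is an illustration rather than a replacement for a library routine.

```python
def rank_row(values):
    """Rank one block (1 = shortest tour); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1          # mean of positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def friedman(lengths):
    """lengths[block][operator] -> (chi2, Kendall's W, mean ranks)."""
    n, k = len(lengths), len(lengths[0])
    rank_rows = [rank_row(row) for row in lengths]
    rank_sums = [sum(r[j] for r in rank_rows) for j in range(k)]
    chi2 = (12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums)
            - 3.0 * n * (k + 1))
    kendall_w = chi2 / (n * (k - 1))
    return chi2, kendall_w, [s / n for s in rank_sums]


def kendall_w_from_chi2(chi2, n_blocks, k_operators):
    """Effect size implied by a reported Friedman statistic."""
    return chi2 / (n_blocks * (k_operators - 1))
```

With 30 blocks and 5 operators, a Friedman statistic of 52.81 corresponds to W = 52.81 / 120 ≈ 0.440, so the relation used in `kendall_w_from_chi2` is consistent with the effect sizes reported in this section.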

For all the examples, the number of degrees of freedom equals 4. On berlin52, the Friedman test detected a highly significant overall performance difference among the five crossover operators (χ²(4) = 52.81, p = 9.3 × 10⁻¹¹), with a medium–strong effect size (Kendall’s W = 0.440). OX achieved the best average rank (1.60), while AEX was consistently the worst (4.50). Raw Wilcoxon signed-rank tests indicate several moderate differences among the best-performing operators (e.g., PMX–OX: p = 7.15 × 10⁻⁴, OX–CX: p = 4.44 × 10⁻⁵, OX–ERX: p = 7.27 × 10⁻³, CX–ERX: p = 0.0368). All comparisons against AEX show extremely strong raw effects, with p-values between 1.73 × 10⁻⁶ and 4.86 × 10⁻⁵. After applying Holm’s step-down correction, all AEX-related comparisons remain statistically significant (pHolm between 1.73 × 10⁻⁵ and 3.26 × 10⁻⁴). On this dataset, the PMX–OX, OX–CX, and OX–ERX comparisons also remain significant. The remaining mid-range comparisons (PMX–CX, PMX–ERX, CX–ERX) become nonsignificant.

For kroA100, the Friedman test again reports highly significant overall differences (χ²(4) = 62.95, p = 6.95 × 10⁻¹³), with a strong effect size (Kendall’s W = 0.525). Average ranks show that the four stronger operators form a group (OX = 2.12, ERX = 2.50, CX = 2.60, PMX = 2.78), whereas AEX is clearly the weakest (avg. rank = 5.00). Raw Wilcoxon results reveal moderate p-values among the stronger operators (e.g., PMX–OX: 0.035, OX–CX: 0.047, OX–ERX: 0.082), while all comparisons involving AEX report extremely small p-values (1.73 × 10⁻⁶ in every AEX-related pair). After applying Holm correction, all AEX-related comparisons remain statistically significant (pHolm ≈ 1.73 × 10⁻⁵), confirming that AEX performs significantly worse than the remaining operators. None of the pairwise comparisons among PMX, OX, CX, and ERX remains statistically significant after correction. Consequently, AEX is the only operator that clearly differs from the rest, whereas no statistically reliable differences appear among PMX, OX, CX, and ERX.
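The effect of Holm's step-down correction on these ten pairwise comparisons can be sketched as follows. The raw p-values for the AEX pairs and for PMX–OX, OX–CX, and OX–ERX are taken from the text; the three values for PMX–CX, PMX–ERX, and CX–ERX are not reported for kroA100, so the 0.5 entries are hypothetical placeholders.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: adjusted values never decrease.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


raw = {
    "PMX-AEX": 1.73e-6, "OX-AEX": 1.73e-6,
    "CX-AEX": 1.73e-6, "ERX-AEX": 1.73e-6,
    "PMX-OX": 0.035, "OX-CX": 0.047, "OX-ERX": 0.082,
    "PMX-CX": 0.5, "PMX-ERX": 0.5, "CX-ERX": 0.5,   # hypothetical values
}
adjusted = dict(zip(raw, holm_adjust(list(raw.values()))))
significant = [pair for pair, p in adjusted.items() if p < 0.05]
```

The smallest raw value is multiplied by the number of comparisons (10), which reproduces pHolm ≈ 1.73 × 10⁻⁵ for the AEX pairs, while a raw value such as 0.035 is multiplied by 6 in this arrangement and no longer falls below 0.05.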

On pr124, the Friedman test once more confirms significant operator differences (χ²(4) = 61.95, p = 1.13 × 10⁻¹²) with a strong effect (Kendall’s W = 0.516). Average ranks again show a compact group of four strong operators (OX = 2.23, CX = 2.40, ERX = 2.60, PMX = 2.77) and a clearly inferior AEX (5.00).

Raw Wilcoxon comparisons among the stronger operators yield only mild or nonsignificant p-values (e.g., PMX–OX: 0.213, OX–ERX: 0.131, CX–ERX: 0.572), whereas all AEX-related comparisons again produce identical extremely small values (p = 1.73 × 10⁻⁶). After Holm correction, the four AEX-related comparisons remain significant (pHolm ≈ 1.73 × 10⁻⁵). All other pairs remain nonsignificant, meaning that these operators form a compact, high-performing group and cannot be statistically separated on the pr124 dataset.

Performance results on the three TSPLIB benchmarks are summarized in Table 2, which shows that OX achieves the lowest average route lengths, whereas AEX consistently yields the largest optimality gaps and longest runtimes.

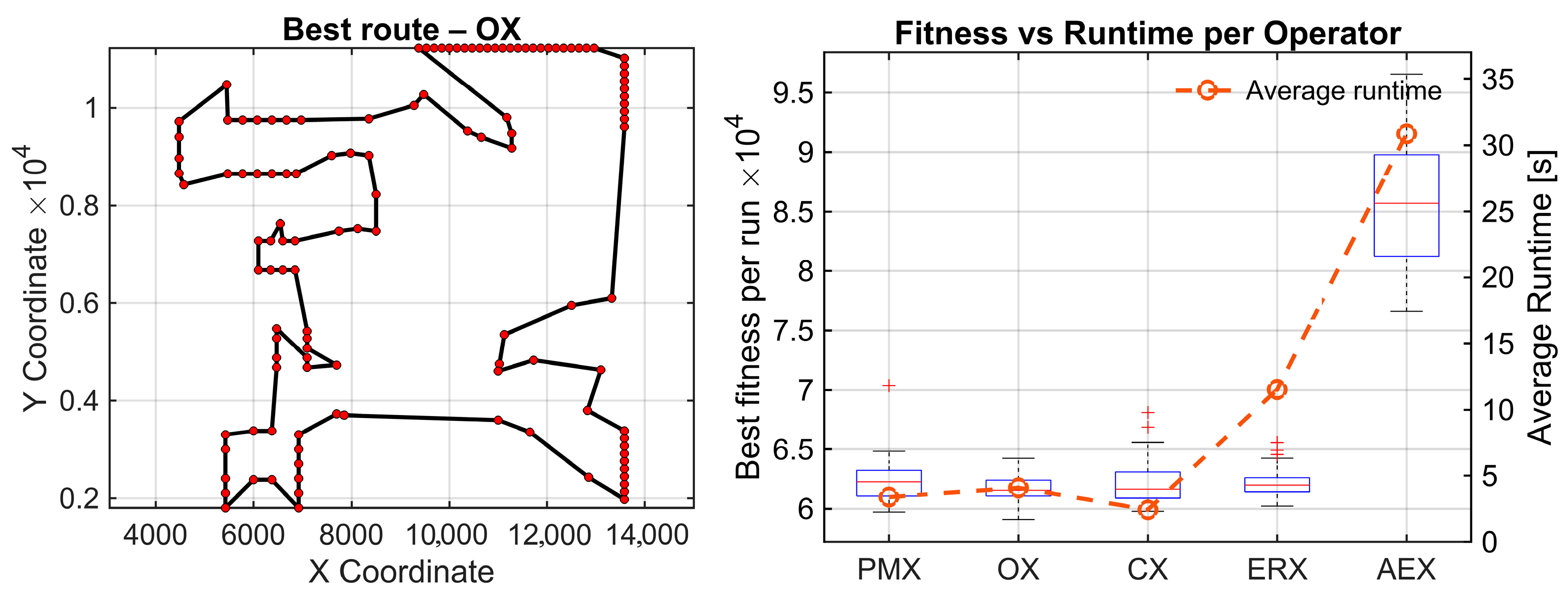

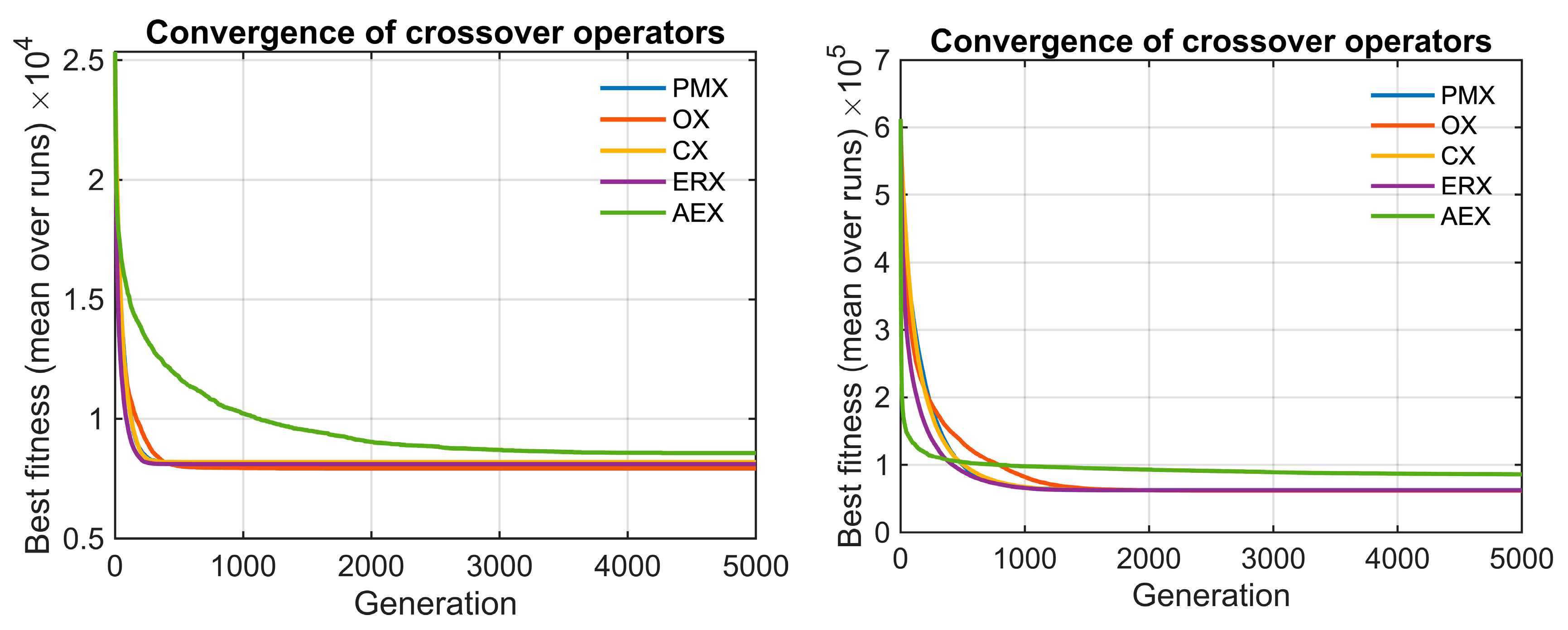

The convergence behavior of the crossover operators is presented in

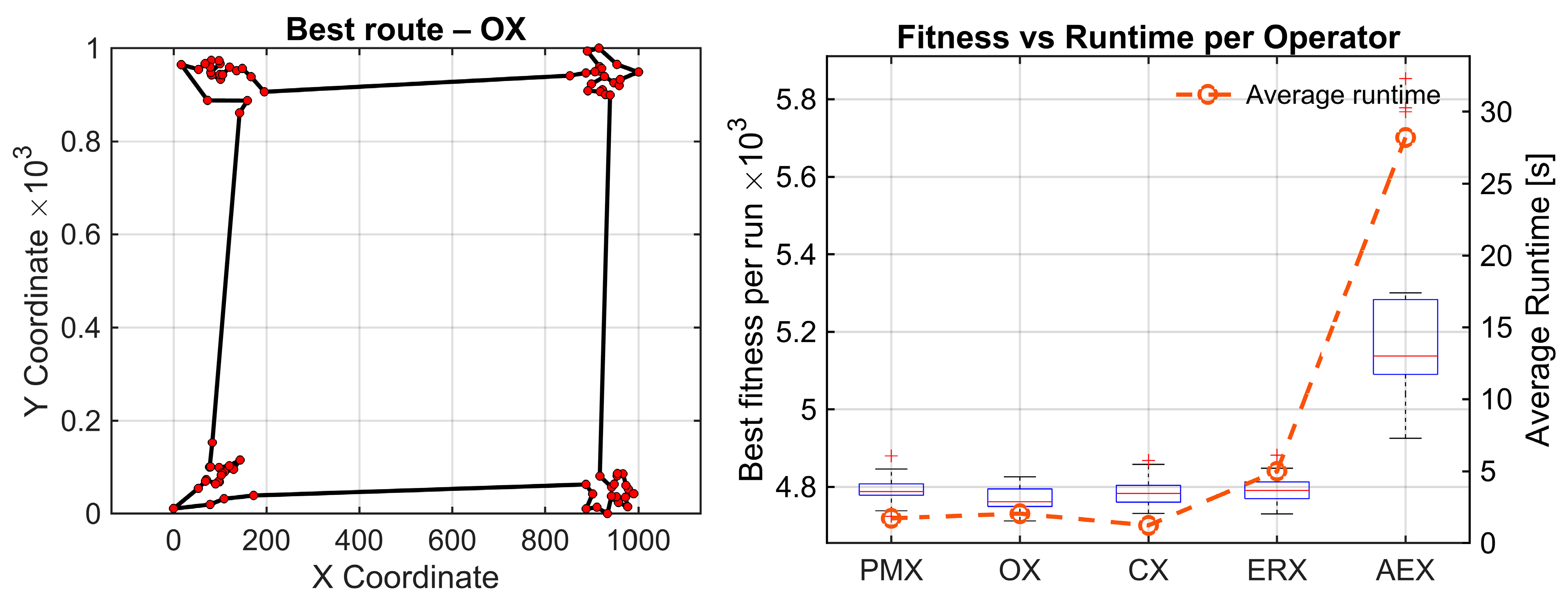

Figure 4 for berlin52 and pr124 instances. AEX consistently stagnates and fails to improve over generations, while the remaining operators converge very fast toward near-optimal solution quality. To verify whether similar performance patterns exist also under different spatial structures and problem sizes, we additionally evaluated the operators on two synthetically generated maps. We created two instances: C4x20.tsp, consisting of four clusters with 20 nodes in each, and random150.tsp with 150 uniformly distributed nodes. Parameters of the genetic algorithm were kept identical to those used on previously described benchmarks.

The clustered C4x20 instance again produced a highly significant Friedman test (χ²(4) = 63.91, p = 4.36 × 10⁻¹³), with a strong effect size (Kendall’s W = 0.533). The average ranks show a compact cluster of strong operators (OX = 2.03, ERX = 2.58, CX = 2.67, PMX = 2.72), while AEX is clearly inferior with a rank of 5.00. The Holm-corrected Wilcoxon pairwise comparisons indicate that none of the top four operators differ significantly from one another.

The only reliable signal is that AEX is consistently inferior: the raw p-values of all comparisons between AEX and every other operator are extremely small, and effect sizes are large (r = −0.87). All AEX-related differences remain significant after Holm correction (pHolm ≈ 1.73 × 10⁻⁵), confirming its inferiority.
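The identical values reported for the AEX comparisons have a simple possible explanation, offered here as an assumption rather than something the text states: if AEX is worse in all 30 paired runs, the signed-rank statistic takes its extreme value, and the normal approximation (without continuity correction) always yields the same z, p, and r. The sketch below computes that limiting case.

```python
import math


def signed_rank_extreme(n_pairs):
    """Wilcoxon signed-rank test when all differences share one sign.

    Uses the normal approximation without ties or continuity
    correction (an assumption about how the values were computed).
    Returns (z, two-sided p, effect size r = z / sqrt(n)).
    """
    w = 0.0                                       # smaller rank sum is zero
    mean = n_pairs * (n_pairs + 1) / 4
    sd = math.sqrt(n_pairs * (n_pairs + 1) * (2 * n_pairs + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))          # two-sided tail probability
    r = z / math.sqrt(n_pairs)
    return z, p, r


z, p, r = signed_rank_extreme(30)
```

For 30 pairs this gives p close to 1.73 × 10⁻⁶ and r close to −0.87, matching the figures quoted for the AEX comparisons, which suggests that these results sit at the floor of what the test can report for this sample size.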

The random150 instance yields the strongest statistical contrast of all the analyzed datasets. The Friedman test reports χ²(4) = 67.81, p = 6.57 × 10⁻¹⁴, with the highest effect size observed in this study (Kendall’s W = 0.565). Again, four operators form a tight top group (OX = 1.87, CX = 2.43, ERX = 2.83, PMX = 2.87), whereas AEX remains the clear outlier with an average rank of 5.00. All comparisons related to AEX remain statistically significant after Holm correction (pHolm ≈ 1.73 × 10⁻⁵) with very large effect sizes (r = −0.87). This confirms that AEX is inferior to all other operators on this instance. For this dataset, significant differences remain after Holm correction between OX–PMX (effect size r = 0.60) and OX–ERX (r = 0.55). Other differences remain nonsignificant. The results of these experiments are given in Figure 5.

Based on this strict statistical interpretation, the combined evidence across the datasets analyzed in this study allows us to identify OX as the best-performing operator. This conclusion is based on the global pattern of average ranks and the consistency of performance across a complete set of heterogeneous maps. On every dataset, OX attains the best, or second-best, average rank. The other operators (PMX, CX, and ERX) alternate between strong and moderate performance, indicating no stable dominance. Thus, although the Holm-corrected post-hoc tests do not statistically confirm that OX outperforms PMX, CX, or ERX on most datasets, the practical evidence and ranking stability consistently point to OX as the most reliable and best-performing operator across the analyzed benchmark instances. Effect sizes are used to further clarify the practical importance of the statistical results. Comparisons of OX vs. PMX/CX/ERX show medium effect sizes across datasets, consistently in favor of OX. Comparison of OX vs. AEX shows very large effects uniformly over all datasets. Comparisons among PMX, CX, and ERX yield small effects, indicating negligible practical differences among the operators that form the middle-performing group.

AEX is the only operator that is inferior to all the others. Its average ranks are consistently the worst (5.00 on every dataset except berlin52, where it is 4.50), and the paired Wilcoxon tests yield very small p-values and large effect sizes, indicating a large and systematic performance deficit. After applying the Holm correction, all AEX-related comparisons remain highly significant, confirming that AEX is inferior to all other crossover operators across all datasets. All statistical results are provided in Tables S2–S21.

To apply the presented algorithm to a real-world case, two original datasets for Croatia were created: instances of 50 and 100 Croatian cities, respectively. These are fully TSPLIB-compliant datasets provided in the EUC_2D and GEO coordinate systems and generated to sample cities uniformly from all regions of Croatia. The benchmark datasets used in this research have been deposited in the Zenodo repository and are freely accessible under the MIT License: https://doi.org/10.5281/zenodo.17587540.

For each city, geographic coordinates (latitude and longitude) were obtained from publicly available open data sets. Since the coordinates are given in decimal degrees on the World Geodetic System 1984 (WGS84) datum, the haversine formula is used to convert them to pairwise distances that account for Earth’s curvature. For conversion details, please refer to Equations (S1)–(S4).
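For readers without access to the supplementary equations, the haversine conversion can be sketched as follows. This is the standard textbook form, not necessarily identical to Equations (S1)–(S4); the Earth radius of 6371 km and the two approximate city coordinates are assumptions used only for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean Earth radius (assumed value)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to guard against rounding slightly above 1 for antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def distance_matrix(coords):
    """Symmetric matrix of pairwise distances for (lat, lon) tuples."""
    return [[haversine_km(*p, *q) for q in coords] for p in coords]


# Approximate coordinates, for illustration only.
zagreb = (45.815, 15.982)
split = (43.508, 16.440)
d_zagreb_split = haversine_km(*zagreb, *split)
```

The resulting matrix is symmetric with a zero diagonal, so it can be passed directly to a symmetric TSP solver.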

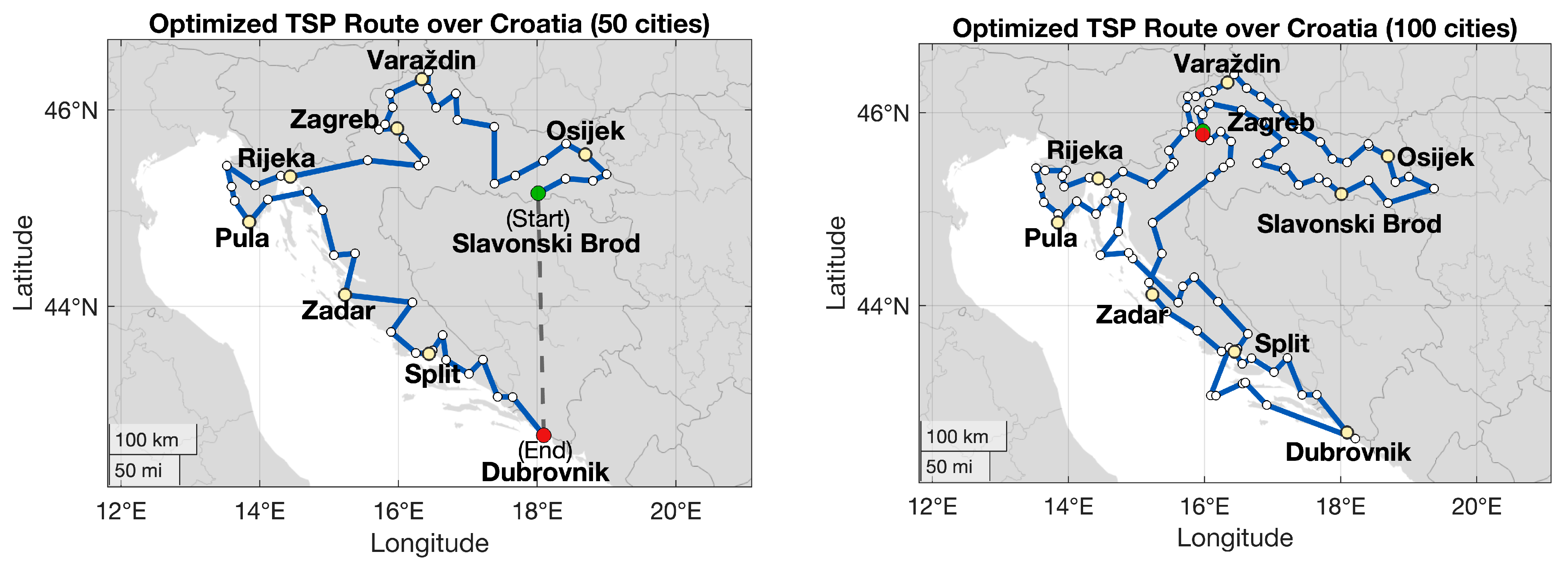

The resulting distance matrix was then used as input to the TSP algorithm using solely the OX operator, which was identified as the best-performing operator on the tested benchmark sets. The algorithm was run for 5000 generations and successfully found the optimal route presented on the left side of Figure 6. The length of this route is ~1822 km, which is confirmed by the intlinprog function to be optimal. The same algorithm with identical parameters is then applied to the 100-city map of Croatia, as illustrated on the right side. Although the resulting route (~2465 km) is not guaranteed to be globally optimal, it represents a substantial improvement compared to the initial random population solution and demonstrates the scalability and practical utility of evolutionary crossover operators for realistic routing scenarios. This result also motivates further exploration of potential environmental benefits enabled by route optimization techniques.

To illustrate the environmental impact of optimized and unoptimized routes, transparent simplifications are adopted. Distances are treated as piecewise linear segments without considering the actual road layout, curvature, elevation differences, or traffic conditions. This allows us to assess differences that are attributable only to routing efficiency, which is a standard approach in exploratory sustainability assessments.

Three vehicle types are analyzed: (1) a petrol passenger car, (2) a diesel passenger car, and (3) a battery electric vehicle (BEV). For petrol and diesel, we use the Tier 1 CO2 emission factors reported in the EMEP/EEA Air Pollutant Emission Inventory Guidebook [42] (Figure S6) combined with the typical fuel-consumption values for passenger cars (Figure S7; 61.9 g/km for petrol and 56.8 g/km for diesel). Using the corresponding fuel densities (0.750 kg/L for petrol and 0.840 kg/L for diesel), these values convert to volumetric fuel consumption of 8.25 L/100 km for petrol and 6.76 L/100 km for diesel, and to distance-based CO2 intensities of 196 g/km for petrol and 181 g/km for diesel. For the BEV, we assume an energy use of 21 kWh/100 km [43], representative of mid-sized BEVs, and apply the 2024 Croatian grid emission factor of 165 g CO2/kWh [44], yielding a BEV carbon intensity of 34.7 g/km. Details on the conversion are provided in Equations (S5)–(S19) in the Supplementary Materials.
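The unit conversions in this paragraph can be reproduced with a few lines of arithmetic. The sketch uses only the numbers quoted in the text; the function and constant names are hypothetical, and the CO2 emission factors per unit of fuel are not reproduced here because the text does not state them.

```python
FUEL_G_PER_KM = {"petrol": 61.9, "diesel": 56.8}        # from the text
DENSITY_KG_PER_L = {"petrol": 0.750, "diesel": 0.840}   # from the text


def litres_per_100km(fuel):
    """Convert mass-based fuel use (g/km) to volumetric use (L/100 km)."""
    grams_per_litre = DENSITY_KG_PER_L[fuel] * 1000.0
    return FUEL_G_PER_KM[fuel] / grams_per_litre * 100.0


def bev_g_co2_per_km(kwh_per_100km=21.0, grid_g_co2_per_kwh=165.0):
    """Carbon intensity of a BEV from energy use and grid emission factor."""
    return kwh_per_100km / 100.0 * grid_g_co2_per_kwh


petrol_l = litres_per_100km("petrol")
diesel_l = litres_per_100km("diesel")
bev_intensity = bev_g_co2_per_km()
```

The results round to 8.25 L/100 km, 6.76 L/100 km, and about 34.7 g CO2/km, in agreement with the values stated above.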

Under these assumptions, the optimal 1822 km route results in approximately 357 kg CO2 for petrol, 329 kg CO2 for diesel, and 63 kg CO2 for the BEV. Because energy use per kilometer is assumed to be constant, emissions scale linearly with route length. Therefore, a route that is 5.91 times longer produces emissions approximately 5.91 times higher. The same proportionality applies to the 100-city instance.
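Because the per-kilometre intensities are constant, route emissions follow from a single multiplication. The sketch below uses the rounded intensities quoted in the text, so its results can differ from the reported totals by about a kilogram; the names are illustrative.

```python
INTENSITY_G_CO2_PER_KM = {"petrol": 196.0, "diesel": 181.0, "bev": 34.7}


def route_emissions_kg(route_km, vehicle):
    """CO2 emitted along a route, assuming constant per-km intensity."""
    return route_km * INTENSITY_G_CO2_PER_KM[vehicle] / 1000.0


optimal_km = 1822.0
emissions = {v: route_emissions_kg(optimal_km, v) for v in INTENSITY_G_CO2_PER_KM}

# Linear scaling: a route 5.91 times longer emits 5.91 times more.
longer = route_emissions_kg(optimal_km * 5.91, "petrol")
scaling = longer / emissions["petrol"]
```

With these rounded inputs, the petrol and BEV totals come out at about 357 kg and 63 kg, and the diesel total at just under 330 kg, close to the 329 kg quoted above.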

5. Discussion

The extensive evaluation across multiple datasets provides a coherent picture of how canonical operators behave within the GA framework used across the TSP instances in this study. The general pattern that emerges is that OX, PMX, CX, and ERX form a group of well-performing operators, while AEX is isolated as an underperformer. This is reflected in both the average ranks and the Wilcoxon statistics, where AEX shows an extremely large performance deficit relative to all the other operators.

An important outcome of this study is the distinction between raw performance differences and statistically reliable differences after multiplicity correction. On most datasets, several raw pairwise comparisons among the best-performing operators yield moderately small p-values, but they do not survive Holm correction, which is a consequence of the conservative nature of the procedure. However, on the berlin52 and random150 datasets, performance gaps among the strongest operators are large enough to remain statistically significant after Holm correction. In other words, sufficiently large effects remain detectable even after correction. The only consistent pattern across all datasets is related to AEX. Comparisons of AEX with every other operator show extremely small p-values with very large effect sizes, both before and after Holm correction. This allows us to mark AEX as the inferior operator across all instances.

Another important observation is that the operators behave stably across problem sizes and spatial structures. Although effect sizes (Kendall’s W) increase slightly for larger maps, the separation pattern does not change: OX maintains the most favorable average rank, AEX remains inferior, and PMX/CX/ERX fluctuate within a narrow performance window. This suggests that the operators’ structural properties, rather than the TSP instance, are responsible for their relative effectiveness. OX benefits from its ability to preserve relative orderings of cities, which appears to generalize well across different geometries. AEX’s alternating-edge mechanism systematically destroys too many useful adjacencies, which places it at the worst rank.
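The order-preserving property attributed to OX can be illustrated with a minimal sketch of the classic order crossover. Which OX variant the authors implemented is not stated, so this textbook form, the explicit cut points, and the function names are assumptions.

```python
import random


def order_crossover(parent_a, parent_b, i, j):
    """Classic OX: keep parent_a[i..j] in place, then fill the remaining
    positions with the other cities in the order they appear in parent_b,
    starting just after the second cut point and wrapping around."""
    n = len(parent_a)
    child = [None] * n
    child[i:j + 1] = parent_a[i:j + 1]
    kept = set(parent_a[i:j + 1])
    # Cities of parent_b read cyclically from position j + 1.
    donors = [parent_b[(j + 1 + t) % n] for t in range(n)]
    fill = [city for city in donors if city not in kept]
    for t, city in enumerate(fill):
        child[(j + 1 + t) % n] = city
    return child


def random_order_crossover(parent_a, parent_b, rng):
    """Same operator with randomly drawn cut points."""
    i, j = sorted(rng.sample(range(len(parent_a)), 2))
    return order_crossover(parent_a, parent_b, i, j)


child = order_crossover([1, 2, 3, 4, 5, 6, 7, 8, 9],
                        [9, 3, 7, 8, 2, 6, 5, 1, 4], 3, 6)
```

The child always inherits a contiguous block of one parent unchanged and the relative order of the remaining cities from the other parent, so no city ever has to be inserted arbitrarily, in contrast to the fallback insertions of AEX.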

Average rank stability across all datasets makes OX the most reliable choice. Even if Holm-corrected tests cannot declare it statistically superior to PMX, ERX, or CX on most datasets, the empirical evidence consistently places OX at or near the top across all experiments. AEX is, in contrast, both statistically and practically inferior.

Finally, the application to real Croatian routing instances illustrates how algorithmic improvements translate into real-world sustainability impacts. Even modest reductions in route length lead to meaningful reductions in fuel consumption, travel time, and CO2 emissions. This demonstrates the broader societal relevance of efficient evolutionary operators and links combinatorial optimization to multiple United Nations Sustainable Development Goals.