Abstract

Our work targets automated analysis to quantify the growth dynamics of a population of bacilliform bacteria. We propose an innovative approach to frame-sequence tracking of deformable-cell motion by the automated minimization of a new, specific cost functional. This minimization is implemented by dedicated Boltzmann machines (stochastic recurrent neural networks). Automated detection of cell divisions is handled similarly by successive minimizations of two cost functions, alternating the identification of children pairs and parent identification. We validate the proposed automatic cell tracking algorithm using (i) recordings of simulated cell colonies that closely mimic the growth dynamics of E. coli in microfluidic traps and (ii) real data. On a batch of 1100 simulated image frames, cell registration accuracies per frame ranged from 94.5% to 100%, with a high average. Our initial tests using experimental image sequences (i.e., real data) of E. coli colonies also yield convincing results, with a registration accuracy ranging from 90% to 100%.

Keywords:

stochastic neural networks; cell tracking; microscopy image analysis; detection-and-association methods

MSC:

62H35; 62M45

1. Introduction

Technology advances have led to increasing magnitudes of data generation with increasing levels of precision [1,2]. However, data generation presently far outpaces data analysis and drives the requirement for analyzing such large-scale data sets with automated tools [3,4,5]. The main goal of the present work is to develop computational methods for an automated analysis of microscopy image sequences of colonies of E. coli growing in a single layer. Such recordings can be obtained from colonies growing in microfluidic devices, and they provide a detailed view of individual cell-growth dynamics as well as population-level, inter-cellular mechanical and chemical interactions [6,7,8].

However, understanding both variability and lineage-based correlations in cellular response to environmental factors and signals from other cells requires tracking large numbers of individual cells across many generations. This can be challenging, as large cell numbers tightly packed in microfluidic devices can compromise spatial resolution, and toxicity effects can place limits on the temporal resolution of the recordings [9,10]. One approach to better understand and control the behavior of these bacterial colonies is to develop computational methods that capture the dynamics of gene networks within single cells [6,11,12]. For these methods to have a practical impact, one ultimately has to fit the models to the data, which allows us to infer hidden parameters (i.e., characteristics of the behavior of cells that cannot be measured directly). Image analysis and pattern recognition techniques for biological imaging data [13,14,15], like the methods developed in the present work, can be used to track lineages and thus automatically infer how gene expression varies over time. These methods can serve as an indispensable tool to extract information to fit and validate both coarse and detailed models of bacterial populations, thus allowing us to infer model parameters from recordings.

Here, we describe an algorithm that provides quantitative information about the population dynamics, including the life cycle and lineage of cells within a population, from recordings of cells growing in a mono-layer. A typical sequence of frames of cells growing in a microfluidic trap is shown in Figure 1. We describe the design and validation of algorithms for tracking individual cells in sequences of such images [11,16,17]. After segmentation of individual image frames to identify each cell, tracking individual cells from frame to frame is a combinatorial problem. To solve this problem, we take into account the unknown cell growth, cell motion, and cell divisions that occur between frames. Segmentation and tracking are complicated by imaging noise and artifacts, overlap of bacteria, similarity of important cell characteristics across the population (shape, length, and diameter), tight packing of bacteria, and large interframe durations, which result in significant cell motion and up to a 30% increase in individual cell volume.

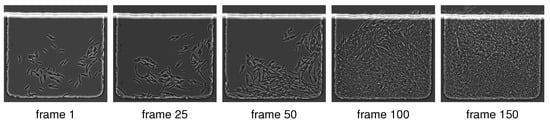

Figure 1.

Typical microscopy image sequence. We show five frames out of a total of 150 frames of an image sequence showing the dynamics of E. coli in a microfluidic device [18] (real laboratory image data). These cells are about 1 μm in diameter and on average 3 μm in length, and they divide about every 30 min. The original images exported from the microscope have a resolution of 0.11 μm/pixel. We report results for these real datasets in Section 4.

1.1. Related Work

The present work focuses on tracking E. coli in a time series of images. A comparison of different cell-tracking algorithms can be found in [19,20]. Multi-object tracking in video sequences and object recognition in time series of images is a challenging task that arises in numerous applications in computer vision [21,22]. In (biomedical) image processing, motion tracking is often referred to as “image registration” [19,23,24,25,26] or “optical flow” [27,28,29,30]. Typical solutions used in the defense industry, for instance, track small numbers of fast moving targets by image sequence analysis at pixel levels and use sophisticated reconstruction of the optical flow, combined with real time segmentation, and quick combinatoric exploration at each image frame. Initially, we did implement several well-known algorithms for reconstruction of the optical flow, but the results we obtained were not satisfactory due to long interframe times and high noise levels. Moreover, we are not interested in tracking individual pixels but rather cells (i.e., rod-shaped, deformable shapes), while recognizing events of cell division and recording cell lineage. Consequently, we decided to first segment each image frame to isolate each cell, and then to match cells between successive frames.

As for the problem at hand, one approach proposed in prior work to simplify the tracking task is to make the experimental setup more rigid by confining individual cell lineages to small tubes; the associated microfluidic device is called a “mother machine” [31,32,33,34,35,36]. The microfluidic devices we consider here yield more complicated data as cells are allowed to move and multiply freely in two dimensions (constrained to a mono-layer). We refer to Figure 1 for a typical sequence of experimental images considered in the present work.

Turning to methods that work on more complex biological cell imaging data, we can distinguish different classes of tracking methods. “Model-based evolution methods” operate on the image intensities directly. They rely on particle filters [37,38,39] or active contour models [40,41,42,43,44]. These methods work well if the cells are not tightly packed. However, they may lead to erroneous results if the cells are close together, the inter-cellular boundaries are blurry, or the cells move significantly. Our work belongs to another class—the so called “detection-and-association methods” [45,46,47,48,49,50,51,52,53,54,55], which first detect cells in each frame and then solve the tracking problem/association task across successive frames. (We note that the segmentation and tracking of cells does not necessarily need to be implemented in two distinct steps. In many image sequence analyses, implementing these two steps jointly can be beneficial [37,49,54,56,57,58]. However, for the clarity of exposition and easier implementation of our new tracking technique, we present these steps separately.) Doing so necessitates the segmentation of cells within individual frames. We refer to [59] for an overview of cell segmentation approaches. Deep learning strategies have been widely used for this task [5,50,54,58,60,61,62,63,64,65]. We consider a framework based on convolutional neural networks (CNNs). Others have also used CNNs for cell segmentation [62,64,66,67,68]. We omit a detailed discussion of our segmentation approach within the main body of this paper, as we do not view it as our main contribution (see Section 1.2). However, the interested reader is referred to Appendix D for some insights. To solve the tracking problem after the cell detection, many of the methods cited above use hand-crafted association scores based on the proximity of the cells and shape similarity measures [46,48,51,54]. We follow this approach here. 
We note that we not only consider local association scores between cells but also include measures for the integrity of a cell’s neighborhood (i.e., “context information”).

Our method is tailored for tracking cells in tightly packed colonies of rod-shaped E. coli bacteria. This problem has been considered previously [5,45,49,52]. However, we are not aware of any large-scale datasets that provide ground truth tracking data for these types of recordings, but note that there are community efforts for providing a framework for testing cell tracking algorithms [20,69] (see, e.g., [37,70]). (Cell tracking challenge: http://celltrackingchallenge.net (accessed on 15 December 2021).) Works that consider these data are for example [37,54,57,58,62,67]. The cells in this dataset have significantly different characteristics compared to those considered in the present work. As we describe below, our approach is based on distinct characteristics of the bacteria cells and, consequently, does not directly apply to these data. Therefore, we have developed our own validation and calibration framework (see Section 2.1).

Standard graph matching algorithms (see, e.g., [71]) do not directly apply to our problem. Indeed, a fundamental complication is that cells can divide between successive images. Hence, each assignment from one frame to its successor is not a one-to-one mapping but a one-to-many correspondence. More advanced graph matching strategies are described in [72,73]. Graph-based matching strategies for cell-tracking that are somewhat related to our approach are described in [70,74,75,76,77]. Like the methods mentioned above, they consider various association scores for tracking. Individual cells are represented as nodes, and neighbors are connected through edges. Our approach also introduces cost terms for structural matching of local neighborhoods by specific scoring for single nodes, pairs of nodes, and triplets of nodes, after a (modified) Delaunay triangulation. By using a graph-like structure, cell divisions can be identified by detecting changes in the topology of the graph [75,76]. We tested a similar strategy, but came to the conclusion that we cannot reliably construct neighborhood networks between frames for which topology changes only occur due to cell division; the main issue we observed is that the significant motion of cells between frames can introduce additional topology changes in our neighborhood structure. Consequently, we decided to relax these assumptions.

Refs. [71,78,79,80] implement multi-target tracking in videos by stochastic models based on random finite set densities and variants thereof. The fit to the data is based on Gibbs sampling to maximize the posterior likelihood. A key challenge of these approaches is the estimation of an adequate finite number of Gibbs sampling iterations when one computes posterior distributions. Most Gibbs samplers are ergodic Markov chains on finite but huge state spaces, so that their natural exponential rate of convergence is not a practically reassuring feature.

As mentioned above, some recent works jointly solve the tracking and segmentation problem [37,49,54,56,57,58]. Contrary to observations we have made in our data, these approaches rely (with the exception of [49]) on the fact that the tracking problem is inherent to the segmentation problem (“tracking-by-detection methods” [54]; see also [5]). That is, the key assumption made by many of these algorithms is that cells belonging to the same lineage overlap across frames (see also [47]). In this case, cell-overlap can serve as a good proxy for cell-tracking [54]. We note that in our data we cannot guarantee that the frame rate is sufficiently high for this assumption to hold. Refs. [56,57,67] exploited machine learning techniques for segmentation and motion tracking. One key challenge here is to provide adequate training data for these methods to be successful. Here, we describe simulation-based techniques that can be extended to produce training data, which we use for parameter tuning [12,81].

The works that are most similar to ours are [45,49,52]. They perform a local search to identify the best cell-tracking candidates across frames. One key difference across these works is the matching criteria. Moreover, Refs. [45,49] employ a local greedy search, whereas we consider stochastic neural network dynamics for optimization. Ref. [52] constructs score matrices within a score-based neighborhood tracking method; an integer programming method is used to generate frame-to-frame correspondences between cells and the lineage map. Other approaches that consider linear programming to maximize an association score function for cell tracking can be found in [47,54,82].

As mentioned in the abstract, we obtain a tracking accuracy that ranges from 90% to 100%. Overall, our method is competitive with existing approaches. For example, Ref. [45] reports a tracking accuracy of up to 97% for data that are similar to ours, while Ref. [74] reports a tracking accuracy (spatial, temporal, and cell division detection) on the order of 95% (between about 93% and 98%). The authors of [74] also report results for their prior approach [75], with an accuracy on the order of 90% (ranging from about 87% to 92%). Accuracies reported in [77] range from about 92% to 97%. This work also includes a comparison to one of their earlier approaches [76], with an accuracy of up to 85%, or 89% if the datasets are pre-aligned. We note that the data considered in [74,75,76,77] are quite different from ours. Refs. [37,54,57,58,70] consider the data from the cell tracking challenge [20,69] to evaluate the performance of their methods. As in the previously mentioned work, these data are again quite different from ours. To evaluate the performance of these methods, the so-called acyclic oriented graph matching measure [83] is considered. We refer to the webpage of the cell tracking challenge for details on the evaluation metrics (see http://celltrackingchallenge.net/evaluation-methodology, accessed on 15 December 2021). Based on these, the reported tracking scores are between 0.873 and 0.902 [37], 0.901 and 1.00 [70], 0.950 and 0.987 [54], 0.788 and 0.982 [58], and 0.765 and 0.915 [57], depending on the considered dataset.

1.2. Contributions

For image segmentation, we first apply two well-known, powerful variational segmentation algorithms to generate a large training set of correctly delineated single cells. We can then train a CNN dedicated to segmenting out each single cell. Using a CNN significantly reduces the runtime of our computational framework for cell identification. The frame-to-frame tracking of individual cells in tightly packed colonies is a significantly more challenging task, and is hence the main topic discussed in the present work. We develop a set of innovative automatic cell tracking algorithms based on the successive minimization of three dedicated cost functionals. For each pair of successive image frames, minimizing these cost functionals over all potential cell registration mappings poses significant computational and mathematical challenges. Standard gradient descent algorithms are inefficient for these discrete and highly combinatorial minimization problems. Instead, we implement the stochastic neural network dynamics of Boltzmann machines (BMs), with architectures and energy functions tailored to effectively solve our combinatorial tracking problem. Our major contributions are: (i) The design of a multi-stage cell tracking algorithm that starts with a parent–children pairing step, followed by removal of identified parent–children triplets, and concludes with a cell-to-cell registration step. (ii) The design of dedicated BM architectures with several energy functions, which are minimized by the true parent–children pairing and by the true cell-to-cell registration, respectively. Energy minimizations are then implemented by simulating the BM stochastic dynamics. (iii) The development of automatic algorithms for the estimation of unknown weight parameters of our BM energy functions, using convex-concave programming tools [84,85,86]. (iv) The evaluation of our methodology on synthetic and real image sequences of cell colonies. 
The massive effort involved in human expert annotation of cell colony recordings limits the availability of “ground truth tracking” data for dense bacterial colonies. Therefore, we first validated the accuracy of our cell tracking algorithms on recordings of simulated cell colonies, generated by the dedicated cell colony simulation software [12,81]. This provided us with ground truth frame-by-frame registration for cell lineages, enabling us to validate our methodology.

1.3. Outline

In Section 2, we describe the synthetic image sequences (see Section 2.1) and experimental data (see Section 2.2) of cell colonies considered as benchmarks for our cell tracking algorithms. In Section 2.3, we describe key cell characteristics considered in our tracking methodology to define metrics that enter our cost functionals. Our tracking approach is developed in greater detail in Section 3. We define valid cell registration mappings between successive image frames in Section 3.1. We outline how to automatically calibrate the weights of our various penalty terms in Section 3.2. Our algorithms for pairing parent cells with their children and for cell-to-cell registration are developed in Sections 3.3 to 3.9. We present our main validation results on long image sequences (time series of images) in Section 4 and conclude with Section 5.

2. Datasets

Below, we introduce the datasets used to evaluate the performance of the proposed methodology. The synthetic data are described in Section 2.1. The experimental data (real imaging data) are described in Section 2.2.

2.1. Synthetic Videos of Simulated Cell Colonies

To validate our cell tracking algorithms, we consider simulated image sequences of dense cell populations. We refer to [12,81] for a detailed description of this mathematical model and its implementation. (The code for generating the synthetic data has been released at https://github.com/jwinkle/eQ, accessed on 15 December 2021.) The simulated cell colony dynamics are driven by an agent-based model [12,81], which emulates live colonies of growing, moving, and dividing rod-like E. coli cells in a 2D microfluidic trap environment. Between two successive frames J, , cells are allowed to move until they nearly bump into each other, and to grow at multiplicative rate denoted with an average value of per minute.

The cells are modeled as 2D spherocylinders of constant 1 μm width. Each cell grows exponentially in length with a doubling time of 20 min. To prevent division synchronization across the population when a mother cell of length divides, the two daughter cells are assigned random birth lengths and , where is a random number sampled independently at each division from a uniform distribution on . Consequently, a bacterial cell b of length divides into two cells and , their lengths , satisfy and , , is a random number. The cells have a length of approximately 2 μm after division and 4 μm right before division. We refer to [81] for additional details. The simulation keeps track of cell lineage, cell size, and cell location (among other parameters). The main output of each such simulation considered here is a binary image sequence of the cell colony with a fixed interframe duration. Each such synthetic image sequence is used as the sole input to our cell tracking algorithm. The remaining meta-data generated by the simulations are only used as ground truth to evaluate the performance of our tracking algorithms.
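The growth and division rules above can be illustrated with a minimal sketch. The doubling time of 20 min is taken from the text; the function names and the half-width `split_halfwidth` of the interval for the random split fraction are hypothetical placeholders, and the sketch assumes that the two daughter lengths add up to the mother length.

```python
import random

DOUBLING_TIME_MIN = 20.0  # doubling time stated in the text


def growth_factor(minutes, doubling_time=DOUBLING_TIME_MIN):
    """Multiplicative length increase after `minutes` of exponential growth."""
    return 2.0 ** (minutes / doubling_time)


def divide(mother_length, split_halfwidth=0.05, rng=random):
    """Split a mother cell into two daughters (illustrative assumption).

    The split fraction is drawn uniformly from
    [0.5 - split_halfwidth, 0.5 + split_halfwidth]; `split_halfwidth`
    is a hypothetical value, not one taken from the paper.
    """
    alpha = rng.uniform(0.5 - split_halfwidth, 0.5 + split_halfwidth)
    return alpha * mother_length, (1.0 - alpha) * mother_length


if __name__ == "__main__":
    for dt in (1, 2, 3, 6):  # example interframe durations in minutes
        print(f"{dt} min: length grows by a factor {growth_factor(dt):.3f}")
    print(divide(4.0))
```

Under this idealized model, the growth between two frames depends only on the interframe duration; in the simulations, crowding makes the effective growth rate vary from cell to cell.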

We consider several benchmark datasets of synthetic image sequences of simulated cell colonies of different complexity. We refer to these benchmarks as BENCH1 (500 frames), BENCH2 (300 frames), BENCH3 (300 frames), and BENCH6 (100 frames), with an interframe duration of 1, 2, 3 and 6 min, respectively. Notice that there is no explicit noise on the growth rate. However, due to the crowding of cells, the growth rate will vary from cell to cell. The generated binary images are of size pixels. We summarize these benchmarks in Table 1. The associated image sequences involve between 100 and 500 frames. In Figure 2, we display an example of two simulated consecutive frames separated by 1 min. To simplify our presentation and validation tests, we control our simulations to make sure that cells will not exit the region of interest from one frame to the next, and we exclude cells that are only partially visible in the current frames.

Table 1.

Benchmark datasets. To test the tracking software, we consider simulated data. We have generated data of varying complexity with different interframe durations. We note that we also consider these data to train our algorithms for tracking cells. We report the label for each dataset, the interframe duration, as well as the number of frames generated. We set the cell growth factor to per min. We refer to the text for details about how these data have been generated.

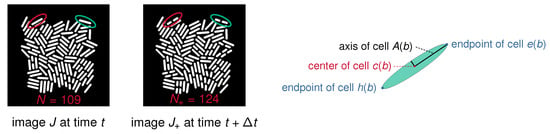

Figure 2.

Simulated data and cell characteristics considered in the proposed algorithm. (Left): Two successive images generated by dynamic simulation for a colony of rod-shaped bacteria. The left image J displays cells at time t. At time with min, cells have moved and grown, and some have divided. These cells are displayed in image , which contains cells. We highlight two cells that have undergone a division between the frames (red and green ellipses). (Right): Geometry of a rod shaped bacterium. We consider different quantities of interest in the proposed algorithm. These include the center of a cell, the two end points and , and the long axis , respectively.

2.2. Laboratory Image Sequences (Real Biological Data)

We also verify the performance of our approach on real datasets of E. coli bacteria. These bacteria are about 1 μm in diameter and on average 3 μm in length, and they divide about every 30 min. The original images exported from the microscope have a resolution of 0.11 μm/pixel. The microscopy experimental data were obtained using JS006 [87] (BW25113 araC lacI) E. coli strains containing a plasmid constitutively expressing yellow or cyan fluorescent protein (sfyfp or sfcfp) for identification. The plasmid also contains an ampicillin resistance gene and a p15A origin. These cells were grown overnight in LB medium with 100 μg/mL ampicillin for 18 h. These cultures were diluted 1/1000 in the morning into 50 mL of fresh LB with 100 μg/mL ampicillin and grown for 3 h until they reached an OD600 of about 0.3. The cells were then concentrated by centrifuging 30 mL of culture at 2000× g for 5 min and then resuspending in 10 mL of fresh LB. The concentrated culture was loaded into a hallway microfluidic device prewarmed and flushed with 0.1% (v/v) Tween-20 [88]. In the microfluidic device, the cells were provided with continuous fresh LB with 100 μg/mL ampicillin and 0.075% (v/v) Tween-20. The microfluidic device was placed onto a 60× oil objective and imaged every 6 min at phase contrast, YFP, and CFP filter settings using an inverted fluorescence microscope. We show a representative dataset in Figure 1.

2.3. Cell Characteristics

Next, we discuss characteristics of the E. coli bacteria important for our tracking algorithm.

Cell Geometry. In accordance with the dynamics of bacterial colonies in microfluidic traps, the dynamic simulation software generates colonies of rod-shaped bacteria. Cell shapes can be approximated by long and thin ellipsoids, which are geometrically well identified by their center, their long axis, and the two endpoints of this long axis. The center is the centroid of all pixels belonging to cell b. The long axis of cell b is computed by principal component analysis (PCA). The endpoints and of cell b are the first and last cell pixels closest to ; see Figure 2 (right) for a schematic illustration.
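As an illustration of these geometric descriptors, the following sketch computes the centroid, the PCA long axis, and two endpoints from the pixel coordinates of one segmented cell. It is a simplified reading of the description above: the function name is hypothetical, and the endpoints are taken as the extreme projections of the cell pixels onto the long axis rather than as actual cell pixels.

```python
import math


def cell_geometry(pixels):
    """Return (center, unit long-axis direction, endpoint_1, endpoint_2).

    `pixels` is a list of (x, y) coordinates belonging to one segmented cell.
    """
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n

    # Entries of the 2x2 covariance matrix of the pixel coordinates.
    sxx = sum((x - cx) ** 2 for x, _ in pixels) / n
    syy = sum((y - cy) ** 2 for _, y in pixels) / n
    sxy = sum((x - cx) * (y - cy) for x, y in pixels) / n

    # Orientation of the leading eigenvector of a symmetric 2x2 matrix
    # (first principal component).
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    ux, uy = math.cos(theta), math.sin(theta)

    # Project each pixel onto the long axis; the extreme projections define
    # the two endpoints, placed on the axis through the centroid.
    proj = [(x - cx) * ux + (y - cy) * uy for x, y in pixels]
    t_min, t_max = min(proj), max(proj)
    end_1 = (cx + t_min * ux, cy + t_min * uy)
    end_2 = (cx + t_max * ux, cy + t_max * uy)
    return (cx, cy), (ux, uy), end_1, end_2


if __name__ == "__main__":
    # A horizontal 10 x 3 pixel rod.
    rod = [(x, y) for x in range(10) for y in range(3)]
    print(cell_geometry(rod))
```

For a 2D point cloud, the closed-form angle avoids a general eigenvalue solver; a library PCA routine would give the same axis up to sign.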

Cell Neighbors. For each image frame J, denote as the set of fully visible cells in J, and by the number of these cells. Let V be the set of all cell centers with . Denote the Delaunay triangulation [89] of the finite planar set V with N vertices. We say that two cells , in B are neighbors if they verify the following three conditions: (i) are connected by the edge of one triangle in . (ii) The edge does not intersect any other cell in B. (iii) Their centers verify , where is a user defined parameter.

For the synthetic images of size that we considered (see Section 2.1), we take pixels. We write for short, whenever , are neighbors (i.e., satisfy the three conditions identified above).

Cell Motion. Let J, denote two successive images (i.e., frames). Denote , as the associated sets of cells. Superpose temporarily the images J and so that they then have the same center pixel. Any cell , which does not divide in the interframe , becomes a cell in image . The “motion vector” of cell b from frame J to is then defined by . If the cell b does divide between J and , denote as the last position reached by cell b at the time of cell division, and define similarly the motion . In our experimental recordings of real bacterial colonies with interframe duration 6 min, there is a fixed number such that for all cells for all pairs J, . In particular, we observed that, for real image sequences, pixels is an adequate choice. Consequently, we select pixels for all simulated image sequences of BENCH6. For BENCH1, we select pixels, again based on a comparison with real experimental recordings. Overall, the meta-parameter w is assumed to be a fixed number and to be known, since is an observable upper bound for the cell motion norm for a particular image sequence of a lab experiment.

Target Window. Recall that J, are temporarily superposed. Let be a square window of width w, with the same center as cell b. The target window is the set of all cells in having their centers in . Since , the cell must belong to the target window .
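The target window can be sketched as a simple filter on the cell centers of the second frame. In this sketch, the function names are hypothetical, the motion vector is assumed to be the difference between the center of the image cell and the center of the original cell, and both frames are assumed to be already superposed in a common coordinate system.

```python
def target_window(center_b, centers_next, w):
    """Indices of cells in the next frame whose centers lie in the window.

    The window is the square of width `w` centred at `center_b`;
    `centers_next` lists the (x, y) centers of the cells in the next frame.
    """
    half = w / 2.0
    bx, by = center_b
    return [
        j
        for j, (x, y) in enumerate(centers_next)
        if abs(x - bx) <= half and abs(y - by) <= half
    ]


def motion_vector(center_b, center_image_of_b):
    """Displacement of a non-dividing cell between the two frames
    (assumed definition: new center minus old center)."""
    return (center_image_of_b[0] - center_b[0],
            center_image_of_b[1] - center_b[1])


if __name__ == "__main__":
    centers_next = [(10.0, 10.0), (14.0, 12.0), (40.0, 40.0)]
    print(target_window((11.0, 11.0), centers_next, w=10.0))
```

If the Euclidean norm of the motion vector is at most w/2, each coordinate of the displacement is at most w/2 in absolute value, so the image of the cell necessarily has its center inside the square window.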

3. Methodology

3.1. Registration Mappings

Next, we discuss our assumptions on a valid registration mapping that establishes cell-to-cell correspondences between two frames. Let $J$, $J_+$ denote two successive images, with cell sets $B$ and $B_+$, respectively. As above, we let $N = \mathrm{card}(B)$ and $N_+ = \mathrm{card}(B_+)$. Our goal is to track each cell from $J$ to $J_+$. For each cell $b \in B$, there exist three possible evolutions between $J$ and $J_+$:

- Case 1: Cell $b$ did not divide in the interframe $J \to J_+$, and has become a cell $f(b) \in B_+$; that is, $b$ has grown and moved during the interframe time interval.

- Case 2: Cell $b$ divided between $J$ and $J_+$, and generated two children cells $b_1, b_2 \in B_+$; we then denote $f(b) = (b_1, b_2)$.

- Case 3: Cell $b$ disappeared in the interframe $J \to J_+$, so that $f(b)$ is not defined.

To simplify our exposition, we ignore Case 3. We discuss Case 3 in greater detail in the conclusions in Section 5. Consequently, a valid (true) registration mapping $f$ will take values in the set $B_+ \cup (B_+ \times B_+)$.
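One way to represent such a registration mapping in code is a dictionary that sends each cell of the first frame either to a single cell of the second frame (Case 1) or to a pair of children (Case 2). The sketch below is illustrative only: the function name and the integer cell labels are hypothetical, and the requirement that no cell of the second frame is used twice is an assumption motivated by the fact that each cell has a single parent.

```python
def is_valid_registration(f, cells, cells_next):
    """Check a candidate registration mapping.

    `f` maps every cell of `cells` to one cell (Case 1) or to a tuple of two
    distinct children (Case 2) of `cells_next`; no cell of `cells_next` may
    be used twice. Case 3 (disappearance) is not allowed here.
    """
    used = set()
    for b in cells:
        if b not in f:
            return False  # every cell must have an image
        image = f[b]
        targets = image if isinstance(image, tuple) else (image,)
        if len(targets) not in (1, 2) or len(set(targets)) != len(targets):
            return False
        for t in targets:
            if t not in cells_next or t in used:
                return False
            used.add(t)
    return True


if __name__ == "__main__":
    cells, cells_next = {0, 1}, {0, 1, 2}
    print(is_valid_registration({0: 0, 1: (1, 2)}, cells, cells_next))
    print(is_valid_registration({0: 0, 1: (0, 2)}, cells, cells_next))
```

The first call describes one cell that persists and one that divides; the second reuses a cell of the second frame and is therefore rejected.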

3.2. Calibration of Cost Function Weights

With the notation we introduced, fix any two finite sets A, . Let be the set of all mappings g: . Fix m penalty functions , . Let be the ground truth mapping we want to discover through minimization in g of some given cost function defined by the linear combination of the penalty functions , the contributions of which are controlled by the cost function weights . In this section, we present a generic weight calibration algorithm, extending a technique introduced and applied in [90,91] for Markov random fields based image analysis.

The cost function must perform well (with the same weights) for hundreds of pairs of (synthetic) images J, . We consider one such synthetic pair for which the ground truth registration mapping is known, and use it to compute an adequate set of weights, which will then be used on all other synthetic pairs J, . Notice that, for experimental recordings of real cell colonies, no ground truth registration mappings f are available. In this case, f should be replaced by a set of user constructed, correct partial mappings defined on small subsets of A. The proposed weight calibration algorithm will also work in those situations.

We now show how knowing one ground truth mapping f can be used to derive the best feasible weights ensuring that f should be a plausible minimizer of the cost functional over . Let be the vector of m penalties for any mapping . Let be the weight vectors. Then, . Replacing g by another mapping induces the penalty changes and the cost change . Now, fix any known ground truth mapping . We want f to be a minimizer of COST, so we should have for all modifications .
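The requirement that the ground truth mapping be a minimizer can be checked numerically once the penalty vectors are available. The sketch below assumes, as described above, that the cost is the inner product of a weight vector with a penalty vector; the function names and the toy numbers are hypothetical.

```python
def cost(weights, penalties):
    """Linear cost: inner product of the weight and penalty vectors."""
    return sum(lam * pen for lam, pen in zip(weights, penalties))


def cost_change(weights, pen_old, pen_new):
    """Cost change induced by replacing one mapping by another."""
    delta = [new - old for old, new in zip(pen_old, pen_new)]
    return cost(weights, delta)


def violated_modifications(weights, pen_truth, pen_modified_list):
    """Indices of modified mappings that are cheaper than the ground truth."""
    return [
        j
        for j, pen_mod in enumerate(pen_modified_list)
        if cost_change(weights, pen_truth, pen_mod) < 0.0
    ]


if __name__ == "__main__":
    weights = [2.0, 1.0]                   # toy weight vector
    pen_truth = [1.0, 3.0]                 # penalties of the ground truth
    pen_modified = [[2.0, 3.5], [0.5, 3.5]]  # penalties of two modifications
    print(violated_modifications(weights, pen_truth, pen_modified))
```

With these toy values, the second modification lowers the cost, so the weights would have to be adjusted (or a slack would have to absorb the violation) for the ground truth to remain a minimizer.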

For each , select an arbitrary (where is the target window for cell a; see Section 2.3), to define a new mapping from A to by , and for all . Since f is a minimizer of COST, this single point modification must generate the following cost increase

Denote the vector . Then, the positive vector , , should verify the set of linear constraints for all . There may be too many such linear constraints. Consequently, we relax these constraints by introducing a vector , , of slack variables indexed by all the . (In optimization, slack variables are introduced as additional unknowns to transform inequality constraints to an equality constraint and a non-negativity constraint on the slack variables.) We require the unknown positive vector and the slack variables vector y to verify the system of linear constraints:

where . The normalizing constant 1000 can be arbitrarily changed by rescaling. We seek high positive values for and small -norm for the slack variable vector y. Thus, we will seek two vectors and solving the following convex-concave minimization problem, where is a user selected (large) meta parameter:

subject to (1), where we denote for arbitrary x. To numerically solve the constrained minimization problem (2), we use the libraries CVXPY and DCCP (disciplined convex-concave programming) [84,85,86]. DCCP is a package for convex-concave programming designed to solve non-convex problems. (DCCP can be downloaded at https://github.com/cvxgrp/dccp, last accessed on 20 January 2022.) It can handle objective functions and constraints with any known curvature as defined by the rules of disciplined convex programming [92]. We give examples of numerically computed weight vectors below. The computing time was less than 30 s for the data that we have prepared. For simplicity, we only considered one-step changes in our computations, which makes the overlap penalty weak. To increase the accuracy of the model, it is possible to consider a larger number of samples (i.e., multi-step changes). Note that the solutions of (2) are not unique, even after normalization by rescaling.

3.3. Cell Divisions and Parent–Children Short Lineages

Next, we discuss how we tackle the assignment problem when cells divide.

3.3.1. Cell Divisions

We now outline a cost function based methodology to detect cell divisions. The first step will be to seek the most likely parent for each potential pair of children cells. Fix two successive synthetic image frames J, with short interframe time equal to 1 minute. Their cell sets B, have cardinality N and , respectively. For our synthetic image sequences, all cells still exist in —either as whole cells or after dividing into two children cells, and no new cell enters the field of view during the interframe . This forces , and implies that the number of cell divisions occurring in this interframe verifies . Each children pair is born from a single parent . Thus, the set of all such true children pairs must then verify

For our video recordings of actual cell populations, during any interframe, we may have cells exiting the field of view and cells entering it, so that may be of the order of . To take this into account, we relax the constraint in (3) as follows:

where is a fixed bound estimated from our experiments. For simplicity, we have restricted our methodology to the situation where and are always 0. However, even in that case, there was a computational advantage to using the slightly relaxed constraint (4) with .

3.3.2. Most Likely Parent Cell for a Given Children Pair

For successive images J, with 1 min interframe, define the set of plausible children pairs by

where the threshold is user selected and fixed for the whole benchmark set BENCH1 of synthetic image sequences.

To evaluate if a pair of cells can qualify as a pair of children generated by division of a parent cell , we now quantify the “geometric distortion” between b and . Cell division of b into occurs with small motions of , . During the short interframe duration, the initial centers , of , in image J move by at most pixels each (see Section 2.3), and their initial distance to the center c of b is roughly at most , where is the long axis of cell b. Hence, the centers c, , of b, , should verify the constraint

Define the set of potential short lineages as the set of all triplets with , , verifying the preceding constraint (6). For each potential lineage , define three terms penalizing the geometric distortions between a parent and a pair of children by the following formulas, where we denote c, , , the centers of cells b, , and A, , their long axes, respectively: (i) center distortion , (ii) size distortion , and (iii) angle distortion

Here, angle denotes “angles between non-oriented straight lines,” with a range from 0 to . Introduce three positive weights , , (to be estimated), and for every short lineage define its distortion cost by

For each plausible pair of children , we will compute the most likely parent cell as the cell minimizing in (7) over all , as summarized by the formula

To force this minimization to yield a reliable estimate of for most true pairs of children , we calibrate the weights , by the algorithm outlined in Section 3.2, using as “ground truth set” a fairly small set of visually identified true short lineages . For fixed , the set of potential parent cells has very small size due to the constraint (6). Hence, brute force minimization of the functional in (7) over all such that , is a fast computation for each in . The distortion minimizing yields the most likely parent cell . The brute force minimization in b of is still a greedy minimization in the sense that other soft constraints introduced further on are not taken into consideration during this preliminary fast computation of .
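As an illustration of the brute-force search for the most likely parent, the following Python sketch scores every candidate parent by a weighted distortion cost and keeps the minimizer. It is a sketch under stated assumptions, not the implementation used in our experiments: cells are represented by the two endpoints of their long axis, the three distortion terms are simple stand-ins that may differ from the definitions entering (7), and the names `distortion`, `most_likely_parent`, and the parameter `radius` (a proxy for constraint (6)) are hypothetical.

```python
import math

def center(cell):
    """Midpoint of a cell given by the endpoints of its long axis."""
    (x1, y1), (x2, y2) = cell
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)

def axis(cell):
    """Long-axis vector of a cell."""
    (x1, y1), (x2, y2) = cell
    return (x2 - x1, y2 - y1)

def line_angle(u, v):
    """Angle between the non-oriented lines carrying u and v (in [0, pi/2])."""
    cos = abs(u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v))
    return math.acos(min(1.0, cos))

def distortion(parent, child1, child2, weights):
    """Weighted distortion cost of a short lineage (assumed functional forms)."""
    w_cen, w_siz, w_ang = weights
    c, c1, c2 = center(parent), center(child1), center(child2)
    a, a1, a2 = axis(parent), axis(child1), axis(child2)
    # Assumption: the parent center should sit near the midpoint of the children centers.
    cen = math.dist(c, ((c1[0] + c2[0]) / 2.0, (c1[1] + c2[1]) / 2.0))
    # Assumption: the children lengths should add up to the parent length.
    siz = abs(math.hypot(*a) - math.hypot(*a1) - math.hypot(*a2))
    # Assumption: both children should stay roughly aligned with the parent.
    ang = line_angle(a, a1) + line_angle(a, a2)
    return w_cen * cen + w_siz * siz + w_ang * ang

def most_likely_parent(child1, child2, cells_B, weights, radius):
    """Return (index, cost) of the distortion-minimizing parent, or None."""
    c1, c2 = center(child1), center(child2)
    best = None
    for idx, b in enumerate(cells_B):
        c = center(b)
        # Proxy for constraint (6): both children centers must be close to c.
        if math.dist(c, c1) > radius or math.dist(c, c2) > radius:
            continue
        cost = distortion(b, child1, child2, weights)
        if best is None or cost < best[1]:
            best = (idx, cost)
    return best
```

Because the proximity filter leaves only a handful of candidates per children pair, the loop over `cells_B` stays cheap even without any spatial indexing.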

3.3.3. Penalties to Enforce Adequate Parent–Children Links

Any true pair of children cells should belong to , but must also verify lineage and geometric constraints which we now enforce via several penalties. Note that the new penalties introduced here are fully distinct from the three penalties specified above to define .

“Lineage” Penalty. Valid children pairs should be correctly matchable with their most likely parent cell (see (8)). Thus, we define the “lineage” penalty by

Notice that the computation of is quite fast.

“Gap” Penalty. Denote as the set of two endpoints of any cell b. For any pair , define endpoints and the gap penalty by

with .

“Dev” Penalty. For rod-shaped bacteria, a true pair of just born children must have a small and roughly aligned cells and . For , we quantify the deviation from alignment as follows. Let , be the closest endpoints of , (see (9)). Let be the straight line linking the centers , of , . Let , be the distances from , to the line . Then, set

“Ratio” Penalty. True children pairs must have nearly equal lengths. Thus, for with lengths , , we define the length ratio penalty by

“Rank” Penalty. Let be the minimum cell length over all cells in . In , children pairs just born during interframe must have lengths , close to . Thus, for , we define the rank penalty by

Given two successive images J, , we seek the set of true children pairs in , which is an unknown subset of . In Section 3.5 below, we replace X by its indicator function z and we build a cost function which should be nearly minimized when z is close to the indicator of . A key term of will be a weighted linear combination of the penalty functions . Since these penalties are different from those introduced in Section 3.3.2, we estimate their weights in the cost function by the algorithm outlined in Section 3.2. The minimization of will be implemented by simulations of a BM with energy function . We present these stochastic neural networks in the next section.
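The endpoint-based "gap" and "dev" penalties can be sketched in a few lines of Python. This is only a hedged illustration: cells are again represented by the two endpoints of their long axis, the gap is taken to be the distance between the closest endpoints, and the deviation is assumed to be the plain sum of the two point-to-line distances; the exact normalizations of the penalties defined above may differ. All function names are hypothetical.

```python
import math
from itertools import product

def midpoint(cell):
    """Center of a cell given by the endpoints of its long axis."""
    (x1, y1), (x2, y2) = cell
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)

def closest_endpoints(cell1, cell2):
    """Pair (x1, x2) of endpoints, one per cell, at minimal distance."""
    return min(product(cell1, cell2), key=lambda p: math.dist(p[0], p[1]))

def gap_penalty(cell1, cell2):
    """Distance between the closest endpoints of the two cells."""
    x1, x2 = closest_endpoints(cell1, cell2)
    return math.dist(x1, x2)

def dist_point_to_line(p, a, b):
    """Distance from point p to the straight line through a and b (a != b)."""
    cross = (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])
    return abs(cross) / math.dist(a, b)

def dev_penalty(cell1, cell2):
    """Assumed form: sum of distances from the closest endpoints to the
    line linking the two cell centers."""
    c1, c2 = midpoint(cell1), midpoint(cell2)
    x1, x2 = closest_endpoints(cell1, cell2)
    return dist_point_to_line(x1, c1, c2) + dist_point_to_line(x2, c1, c2)
```

For two collinear cells that nearly touch, both penalties are small; a lateral shift of one cell increases both.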

3.4. Generic Boltzmann Machines (BMs)

Minimization of our main cost functionals is a heavily combinatorial task, since the unknown variable is a mapping between two finite sets of sizes ranging from 80 to 120. To handle these minimizations, we use BMs originally introduced by Hinton et al. (see [93,94]). Indeed, these recurrent stochastic neural networks can efficiently emulate some forms of simulated annealing.

Each BM implemented here is a network of N stochastic neurons . In the BM context, the time is discretized and represents the number of steps in a Markov chain, where the successive updates of the BM configuration are analogous to the steps of a Gibbs sampler. The configuration of the whole network at time t is defined by the random states of all neurons . Each belongs to a fixed finite set . Hence, belongs to the configurations set .

Neuron interactivity is specified by a finite set of cliques. Each clique K is a subset of . During configuration updates , neurons may interact only if they are in the same clique. Here, all cliques K are of small sizes 1, or 2, or 3.

For each clique K, one specifies an energy function defined for all , with depending only on the such that . The full energy of configuration z is then defined by

The BM stochastic dynamics is driven by the energy function , and by a fixed decreasing sequence of virtual temperatures , tending slowly to 0 as . Here, we use standard temperature schemes of the form with fixed and slow decay rate .

We have implemented the classical “asynchronous” BM dynamics. At each time t, only one random neuron may modify its state, after reading the states of all neurons belonging to cliques containing . A much faster alternative, implementable on GPUs, is the “synchronous” BM dynamics, where at each time t roughly 50% of all neurons may simultaneously modify their states (see [95,96,97]). The detailed BM dynamics are presented in the appendix (see Appendix A).

When the virtual temperatures decrease slowly enough to 0, the energy converges in probability to a local minimum of the BM energy over all configurations .
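The asynchronous dynamics described above can be illustrated by a minimal Python sketch. It is written under simplifying assumptions and is not our production code: the temperature follows a geometric scheme `temp0 * decay ** epoch` (one plausible instance of a slowly decreasing scheme), the temperature is lowered once per epoch rather than at every step, and the full energy is recomputed at each update instead of only the clique energies containing the updated neuron. The function name `anneal_async` and its parameters are hypothetical.

```python
import math
import random

def anneal_async(states, energy, z0, temp0=10.0, decay=0.95, epochs=200, seed=0):
    """Asynchronous single-site Gibbs updates with a decreasing temperature.

    states[j] : list of admissible states for neuron j
    energy(z) : full energy of the configuration z (a list)
    z0        : initial configuration
    """
    rng = random.Random(seed)
    z = list(z0)
    n = len(z)
    for epoch in range(epochs):
        temp = temp0 * decay ** epoch          # assumed geometric cooling
        for _ in range(n):                     # one epoch = n single-site updates
            j = rng.randrange(n)               # pick one random neuron
            energies = []
            for s in states[j]:                # energy for each candidate state
                z[j] = s
                energies.append(energy(z))
            e_min = min(energies)
            # Gibbs weights; subtracting e_min keeps the exponent non-positive.
            weights = [math.exp(-(e - e_min) / temp) for e in energies]
            z[j] = rng.choices(states[j], weights=weights, k=1)[0]
    return z
```

At high temperature the sampled state is nearly uniform over the admissible states, while at low temperature each update almost surely selects the locally best state, which is what lets the chain settle into a low-energy configuration.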

3.5. Optimized Set of Parent–Children Triplets

Next, we formulate the search for bona fide parent–children triplets as an optimization problem. For brevity, this outline is restricted to situations where (3) holds, as is the case for our synthetic image data. Simple modifications extend this approach to the relaxed constraint (4), which we used for lab videos of live cell populations. Fix successive images J, with a positive number of cell divisions . Denote the set of m plausible children pairs in . The penalties lin, gap, dev, ratio, and rank defined above for all pairs determine five numerical vectors , , , , in with coordinates , , , , .

We now define a binary BM constituted by m binary stochastic neurons , . At time , each has a random binary-valued state or 0. The random configuration of this BM belongs to the configuration space of all binary vectors . Let be the set of all subsets of . Each configuration is the indicator function of a subset of . We view each as a possible estimate for the unknown set of true children pairs . For each potential estimate of , the “lack of quality” of the estimate will be penalized by the energy function of our binary BM. We now specify the energy for all by combining the penalty terms introduced above. Note that the penalty terms introduced in Section 3.3.2 are quite different from those introduced in Section 3.3.3. No cell in can be assigned to more than one parent in B. To enforce this constraint, define the symmetric binary matrix by (i) if and the two pairs , have one cell in common, (ii) if and the two pairs , have no cell in common, (iii) for all j.
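The conflict matrix Q defined by rules (i) to (iii) is straightforward to build. The short Python sketch below is illustrative only; it assumes that each plausible children pair is stored as a tuple of two cell identifiers, and the helper names `conflict_matrix` and `conflict_penalty` are hypothetical.

```python
def conflict_matrix(pairs):
    """Symmetric 0/1 matrix: Q[j][k] = 1 iff j != k and the pairs share a cell."""
    m = len(pairs)
    Q = [[0] * m for _ in range(m)]
    for j in range(m):
        for k in range(j + 1, m):
            if set(pairs[j]) & set(pairs[k]):
                Q[j][k] = Q[k][j] = 1
    return Q

def conflict_penalty(z, Q):
    """Quadratic form <z, Qz>; it vanishes iff no two selected pairs share a cell."""
    m = len(z)
    return sum(z[j] * Q[j][k] * z[k] for j in range(m) for k in range(m))
```

For example, with the pairs (1, 2), (2, 3), and (4, 5), selecting the first two pairs yields a positive penalty because cell 2 would belong to two children pairs, whereas selecting the first and third pairs yields zero.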

The quadratic penalty is non-negative for , and must be zero if . Introduce six positive weight parameters to be selected further on , . Define the vector as a weighted linear combination of the penalty vectors , , , ,

For any configuration , the BM energy is defined by the quadratic function

We already know that the unknown set of true children pairs must have cardinality . Thus, we seek a configuration minimizing the energy under the rigid constraint . Let be the vector with all its coordinates equal to 1. The constraint on z can be reformulated as . We want the unknown to be close to the solution of the constrained minimization problem

To force this minimization to yield a reliable estimate of , we calibrate the six weights

by the algorithm in Section 3.2. Denote the set of all such that . To minimize under the constraint , fix a slowly decreasing temperature scheme as in Section 3.4. We need to force the BM stochastic configurations to remain in . Then, for large time step t, the will converge in probability to a configuration approximately minimizing under the constraint .

Start with any . Assume that, for , one has already dynamically generated BM configurations . Then, randomly select two sites j, k such that and . Compute a virtual configuration Y by setting , , and for all sites i different from j and k. Compute the energy change , and the probability , where . Then, randomly select or with respective probabilities and . Clearly, this forces .
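The cardinality-preserving dynamics just described can be sketched as follows. This is a hedged illustration rather than our exact procedure: the energy is assumed to have the form `<V, z> + lam * <z, Qz>`, the acceptance probability is assumed to be of Metropolis type, `min(1, exp(-dE / Temp))`, and the temperature is assumed to decay geometrically. The names `swap_anneal`, `lam`, `temp0`, and `decay` are hypothetical.

```python
import math
import random

def energy(z, V, Q, lam):
    """Assumed quadratic energy <V, z> + lam * <z, Qz>."""
    m = len(z)
    lin = sum(V[i] * z[i] for i in range(m))
    quad = sum(z[j] * Q[j][k] * z[k] for j in range(m) for k in range(m))
    return lin + lam * quad

def swap_anneal(V, Q, div, lam=10.0, temp0=5.0, decay=0.995, steps=2000, seed=0):
    """Minimize the energy over binary vectors with exactly `div` ones."""
    rng = random.Random(seed)
    m = len(V)
    z = [1] * div + [0] * (m - div)
    rng.shuffle(z)                               # arbitrary admissible start
    e = energy(z, V, Q, lam)
    for t in range(steps):
        temp = temp0 * decay ** t                # assumed geometric cooling
        ones = [i for i in range(m) if z[i] == 1]
        zeros = [i for i in range(m) if z[i] == 0]
        if not ones or not zeros:
            break                                # no swap is possible
        j, k = rng.choice(ones), rng.choice(zeros)
        y = list(z)
        y[j], y[k] = 0, 1                        # virtual configuration Y
        e_y = energy(y, V, Q, lam)
        delta = e_y - e
        p = 1.0 if delta <= 0 else math.exp(-delta / temp)
        if rng.random() < p:                     # accept Y with probability p
            z, e = y, e_y
    return z, e
```

Since every move exchanges one selected site with one unselected site, the number of ones never changes, so the constraint on the number of cell divisions holds at every step without any additional penalty term.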

3.6. Performance of Automatic Children Pairing on Synthetic Videos

In the following subsections, we provide experimental results for pairing children and parent cells.

3.6.1. Children Pairing: Fast BM Simulations

For , one can reduce the computational cost for BM dynamics simulations by pre-computing and storing the symmetric binary matrix Q, as well as the m-dimensional vectors , , , , and their linear combination V. A priori reduction of m significantly reduces the computing times, and can be implemented by trimming away the pairs for which the penalties , , , , and are all larger than predetermined empirical thresholds. We performed a study on 100 successive (synthetic) images. We show scatter plots for the most informative penalty terms in Figure 3. These plots allow us to determine adequate thresholds for the penalty terms. We observed that, for the synthetic and real data we considered, the trimming of , , and reduced the percentage of invalid children pairs by 95%, thereby drastically reducing the combinatorial complexity of the problem.

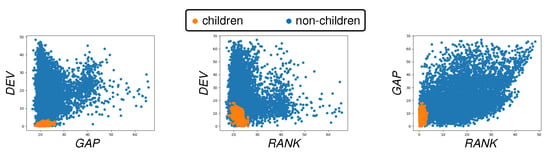

Figure 3.

Scatter plots for tandems of the penalty terms , , and . We mark in orange the true children pairs and in blue invalid children pairs. These plots allow us to identify appropriate empirical thresholds to trim the (considered synthetic) data in order to reduce the computational complexity of the parent–children pairing.

The quadratic energy function is the sum of clique energies involving only cliques of cardinality 1 and 2. For any clique of cardinality 1, with , one has . For any clique of cardinality 2, with , one has . A key computational step when generating is to evaluate the energy change when one flips the binary values and by the new value for a fixed single site i. This step is quite fast since it uses only the numbers , , and , , where is the row of the matrix Q.
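To see why a single-site update is cheap, the following Python sketch computes the energy change of one flip from the entry of V at that site and the corresponding row of Q only. It assumes an energy of the form `<V, z> + lam * <z, Qz>` with Q symmetric and zero on the diagonal; under this assumption, flipping site i changes the energy by `(1 - 2 z_i) * (V_i + 2 * lam * sum_k Q_ik z_k)`. The function names and the weight `lam` are hypothetical.

```python
def energy(z, V, Q, lam):
    """Assumed quadratic energy <V, z> + lam * <z, Qz>."""
    m = len(z)
    lin = sum(V[i] * z[i] for i in range(m))
    quad = sum(z[j] * Q[j][k] * z[k] for j in range(m) for k in range(m))
    return lin + lam * quad

def flip_delta(z, i, V, Q, lam):
    """Energy change when z[i] is replaced by 1 - z[i].

    Only V[i] and row i of Q are read, so the cost is O(m) instead of the
    O(m^2) needed to re-evaluate the full quadratic form.
    """
    field = V[i] + 2 * lam * sum(Q[i][k] * z[k] for k in range(len(z)))
    return (1 - 2 * z[i]) * field
```

The factor 2 in front of `lam` accounts for the two symmetric entries of Q that involve site i in the quadratic form.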

3.6.2. Children Pairing: Implementation on Synthetic Videos

We have implemented our children pairing algorithms on synthetic image sequences having 100 to 500 image frames with 1 min interframe (benchmark set BENCH1; see Section 2.1). The cell motion bound per interframe was defined by pixels. The parameter that defines the sets of plausible children pairs (see (5)) was set at pixels.

The known true cell registrations indicated that, in our typical BENCH1 image sequence, the successive sets had average cardinalities of 120, while the number of true children pairs per roughly ranged from 2 to 6 with a median of 4. The size of the reduced configuration space per image frame thus ranged from to with a median of .

Our weights estimation technique introduced in Section 3.2 yields the weights

and

for the penalties introduced in Section 4. To reduce the computing time for hundreds of BM energy minimizations on the BENCH1 image sequences, we excluded obviously invalid children pairs in each set by simultaneously thresholding the penalty terms. The BM temperature scheme was , with the number of epochs capped at 5000. The average CPU time for BM energy minimization dedicated to optimized children pairing was about 30 s per frame (we provide hardware specifications in Appendix B).

3.6.3. Parent–Children Matching: Accuracy on Synthetic Videos

For each successive image pair J, , with cells B, of cardinality , our parent–children matching algorithm computes a set of short lineages , where the cell is expected to be the parent of cells . Recall that provides the number of cell divisions during the interframe . The number of correctly reconstructed short lineages is obtained by direct comparison to the known ground truth registration . For each frame J, we define the pcp-accuracy of our parent–children pairing algorithm as the ratio .
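The pcp-accuracy is a simple ratio, as the following Python sketch shows. The representation of a short lineage as a triplet (parent, child1, child2) of cell identifiers and the function name `pcp_accuracy` are assumptions made for illustration; the order of the two children is ignored when comparing with the ground truth.

```python
def pcp_accuracy(predicted, truth):
    """Fraction of true short lineages recovered by the algorithm.

    predicted, truth : iterables of (parent, child1, child2) triplets.
    """
    def normalize(triplet):
        parent, c1, c2 = triplet
        return (parent, frozenset((c1, c2)))   # children are unordered

    true_set = {normalize(t) for t in truth}
    if not true_set:
        raise ValueError("pcp-accuracy is undefined when there is no cell division")
    correct = len(true_set & {normalize(t) for t in predicted})
    return correct / len(true_set)
```

For instance, if three out of four true lineages are recovered in a frame, the function returns 0.75 for that frame.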

We have tested our parent–children matching algorithm on three long synthetic image sequences BENCH1 (500 frames), BENCH2 (300 frames), and BENCH3 (300 frames), with respective interframes of 1, 2, and 3 min. For each frame , we computed the pcp-accuracy between and .

We report the accuracies of our parent–children pairing algorithms in Table 2. For BENCH1, all 500 pcp-accuracies reached 100%. For BENCH2, pcp-accuracies reach 100% for 298 frames out of 300, and for the remaining two frames, accuracies were still high at 93% and 96%. For BENCH3, where interframe duration was longest (3 min), the 300 pcp-accuracies decreased slightly but still averaged 99%, and never fell below 90%.

Table 2.

Accuracies of parent–children pairing algorithm. We applied our parent–children pairing algorithm to three long synthetic image sequences BENCH1 (500 frames), BENCH2 (300 frames), and BENCH3 (300 frames), with interframe intervals of 1, 2, 3 min, respectively. The table summarizes the resulting pcp-accuracies. Note that pcp-accuracies are practically always at 100%. For BENCH2, pcp-accuracies are 100% for 298 frames out of 300, and for the remaining two frames, accuracies were still high at 93% and 96%. For BENCH3, the average pcp-accuracy for the 3 min interframe is 99%.

3.7. Reduction to Registrations with No Cell Division

Fix successive frames and their cell sets B, . We seek the unknown registration mapping , where iff cell b did not divide during the interframe and iff cell b divided into during the interframe.

If , we know that the number of cell divisions during the interframe should be . We then apply the parent–children matching algorithm outlined above to compute a set of short lineages with , and . For each , the cell b is computed by as the parent cell of the two children cells .

For each , eliminate from B the parent cell, b, and eliminate from the two children cells , . We are left with two residual sets, and having the same cardinality, . Assuming that our set of short lineages is correctly determined, the cells should not divide in the interframe , and hence have a single (still unknown) registration . Thus, the still unknown part of the registration f is a bijection from to .

Let and . For each , the cell b divides into the unique pair of cells, such that . Hence, we can set for all . Thus, the remaining problem to solve is to compute the bijective registration . We have reduced the registration discovery to a new problem, where no cell divisions occur in the interframe duration. In what follows, we present our algorithm to solve this registration problem.
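The reduction step amounts to plain set operations, as in the following Python sketch. The data layout (lists of cell identifiers, lineages as (parent, child1, child2) triplets) and the function name `reduce_registration` are assumptions made for illustration.

```python
def reduce_registration(cells_B, cells_Bplus, lineages):
    """Remove identified parents and children; return the residual problem.

    Returns (red_B, red_Bplus, partial), where `partial` maps each dividing
    cell to its pair of children and the two residual lists must be matched
    by a registration without cell division.
    """
    parents = {b for b, _, _ in lineages}
    children = {c for _, c1, c2 in lineages for c in (c1, c2)}
    red_B = [b for b in cells_B if b not in parents]
    red_Bplus = [b for b in cells_Bplus if b not in children]
    if len(red_B) != len(red_Bplus):
        raise ValueError("residual cell sets must have the same cardinality")
    partial = {b: (c1, c2) for b, c1, c2 in lineages}
    return red_B, red_Bplus, partial
```

The cardinality check mirrors the counting argument above: each division removes one cell from the first frame and two cells from the second, so the residual sets have equal size whenever the number of divisions is correct.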

3.8. Automatic Cell Registration after Reduction to Cases with No Cell Division

As indicated above, we can explicitly reduce the generic cell tracking problem to a problem where there is no cell division. We consider images J, with associated cell sets B, such that . Hence, there are no cell divisions in the interframe and the map f of this reduced problem is (in principle) a bijection with . In Figure 4, we show two typical successive images we use for testing with no cell division generated by the simulation software [12,81] (see Section 2.1).

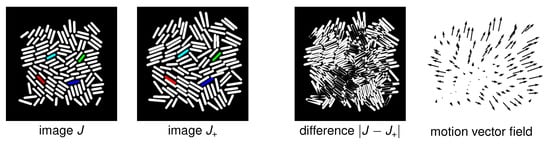

Figure 4.

Simulated cell dynamics. From left to right, two successive simulated images J and with an interframe time of six minutes and no cell division, their image difference , and the associated motion vectors. For the image J and we color four pairs of cells in , which should be matched by the true cell registration mapping. Notice that the motion for an interframe time of six minutes is significant. We can observe that, even without considering cell division, we can no longer assume that corresponding cells in frame J and overlap.

3.8.1. The Set of Many-to-One Cell Registrations

We have reduced the registration search to a situation where, during the interframe , no cell has divided, no cell has disappeared, and no cell has suddenly emerged in without originating from B. The unknown registration should then in principle be injective and onto. However, for computational efficiency, we will temporarily relax the bijectivity constraint on f. We will seek f in the set of all many-to-one mappings such that for each , the cell is in the target window (see Section 2.3).

3.8.2. Registration Cost Functional

To design a cost functional , which should be roughly minimized when is very close to the true registration from B to , we linearly combine penalties , , , weighted by unknown positive weights , , , , to write, for all registrations ,

We specify the individual terms that appear in (11) below. Ideally, the minimizer of over all is close to the unknown true registration mapping . To enforce a good approximation of this situation, we first estimate efficient positive weights by applying our calibration algorithm (see Section 3.2). The actual minimization of over all is then implemented by a BM described in Section 3.9.

Cell Matching Likelihood: . Here, we extend a pseudo-likelihood approach used to estimate parameters in Markov random field modeling by Gibbs distributions (see [98]). Recall that is the known average cell growth rate. For any cells , , the geometric quality of the matching relies on three main characteristics: (i) motion of the cell center , (ii) angle between the long axes and , (iii) cell length ratio . Thus, for all and in the target window , define (i) Kinetic energy: . (ii) Distortion of cell length: . (iii) Rotation angle: is the geometric angle between the straight lines carrying and .

Fix , and let run through the whole target window . The finite set of values thus reached by the kinetic penalties has two smallest values , . Define , which is a list of “low” kinetic penalty values. Repeat this procedure for the penalties and to similarly define a of “low” distortion penalty values, and a of “low” rotation penalty values.

The three sets , , can be viewed as three random samples of size , respectively, generated by three unknown probability distributions , , . We approximate these three probabilities by their empirical cumulative distribution functions , , , which can be readily computed. We now use the right tails of these three CDFs to compute separate probabilistic evaluations of how likely the matching of cell with cell is. For any fixed mapping , and any , set . Compute the three penalties , , , and define three associated “likelihoods” for the matching :

High values of the penalties , , thus will yield three small likelihoods for the matching . With this, we can define a “joint likelihood” evaluating how likely is the matching :

Note that higher values of correspond to a better geometric quality for the matching of b with . To avoid vanishingly small likelihoods, whenever , we replace it by . Then, for any mapping , we define its likelihood by the finite product

The product of these N likelihoods is typically very small, since can be large. Thus, we evaluate the geometric matching quality of the mapping f via the averaged log-likelihood of f, namely,

Good registrations should yield small values for the criterion .
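The construction of the likelihood-based criterion can be illustrated by the following Python sketch. It rests on several assumptions that should be read as such: each right-tail likelihood is taken to be the empirical fraction of "low" sample values that are at least as large as the observed penalty, the joint likelihood is taken to be the product of the three tail likelihoods, the floor value `1e-6` is a placeholder, and the criterion is the negative averaged log-likelihood (so that good registrations yield small values). All names are hypothetical.

```python
import math
from bisect import bisect_left

def right_tail(sample_sorted, x):
    """Empirical right tail P(X >= x) from a sorted sample."""
    n = len(sample_sorted)
    return (n - bisect_left(sample_sorted, x)) / n

def joint_likelihood(pen, samples, floor=1e-6):
    """Product of the three tail likelihoods, bounded below by `floor`.

    pen     : dict with keys "kin", "dis", "rot" (penalties of one matching)
    samples : dict with the same keys, each a sorted list of "low" values
    """
    lik = 1.0
    for name in ("kin", "dis", "rot"):
        lik *= right_tail(samples[name], pen[name])
    return max(lik, floor)

def avg_neg_log_likelihood(penalties, samples, floor=1e-6):
    """Negative averaged log-likelihood of a mapping (smaller is better)."""
    logs = [math.log(joint_likelihood(p, samples, floor)) for p in penalties]
    return -sum(logs) / len(logs)
```

Averaging the logarithms rather than multiplying the likelihoods avoids numerical underflow when the number of cells is large.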

Overlap: . We expect bona fide cell registrations to be bijections. Consequently, we want to penalize mappings f which are many-to-one. We say that two distinct cells do overlap for the mapping if . The total number of overlapping pairs for f defines the overlap penalty:

Neighbor Stability: . Let . Denote as the set of all neighbors for cell in B (i.e., ; see Section 2.3). For bona fide registrations , and for most pairs of neighbors in B, we expect and to remain neighbors in . Consequently, we penalize the lack of “neighbors stability” for f by

Neighbor Flip: . Fix any mapping , any cell and any two neighbors , of b in B. Let , , . Let c, , and d, , be the centers of cells b, , and z, , . Let be the oriented angle between and , and let be the angle between and , respectively. We say that the mapping f has flipped cells , around b, and we set if , are both neighbors of z, and the two angles , have opposite signs. In all other cases, we set .

For any registration , define the flip penalty for f by

where is the neighborhood of cell b in B. In Figure 5, we illustrate an example of an unwanted cell flip.

Figure 5.

Illustration of an undesirable flip for the mapping f. The cells and are neighbors of , and mapped by f on neighbors of , as should be expected for bona fide cell registrations. However, for this mapping f, we have above above , whereas, for the original cells, we had above above . Our cost function penalizes flips of this nature.
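The overlap, neighbor stability, and flip terms can be sketched in Python as follows. The sketch makes simplifying assumptions: a mapping is a dictionary from cells of the first frame to cells of the second frame, neighborhoods are dictionaries of sets, the stability count is left unnormalized, and the sign of an oriented angle is read off the sign of a two-dimensional cross product. The function names are hypothetical.

```python
from collections import Counter

def overlap_penalty(f):
    """Number of unordered pairs of distinct cells mapped to the same cell."""
    counts = Counter(f.values())
    return sum(c * (c - 1) // 2 for c in counts.values())

def stability_penalty(f, nbrs_B, nbrs_Bplus):
    """Number of neighbor pairs in B whose images are not neighbors in B+."""
    seen, bad = set(), 0
    for b, neighbors in nbrs_B.items():
        for n in neighbors:
            key = frozenset((b, n))
            if key in seen:
                continue                      # count each pair only once
            seen.add(key)
            if f[n] not in nbrs_Bplus[f[b]]:
                bad += 1
    return bad

def cross(o, p, q):
    """z-component of (p - o) x (q - o); its sign is the sign of the
    oriented angle from (p - o) to (q - o)."""
    return (p[0] - o[0]) * (q[1] - o[1]) - (p[1] - o[1]) * (q[0] - o[0])

def flip_indicator(f, b, b1, b2, ctr_B, ctr_Bplus, nbrs_Bplus):
    """1 if f flips the neighbors b1, b2 around b, else 0."""
    z, z1, z2 = f[b], f[b1], f[b2]
    if z1 not in nbrs_Bplus[z] or z2 not in nbrs_Bplus[z]:
        return 0                              # images must both be neighbors of z
    before = cross(ctr_B[b], ctr_B[b1], ctr_B[b2])
    after = cross(ctr_Bplus[z], ctr_Bplus[z1], ctr_Bplus[z2])
    return 1 if before * after < 0 else 0     # opposite orientation signs
```

Summing `flip_indicator` over all cells and all pairs of their neighbors gives an (unnormalized) flip penalty for the mapping.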

3.9. BM Minimization of Registration Cost Function

In what follows, we define the optimization problem for the registration of cells from one frame to another (i.e., cell tracking), as well as associated methodology and parameter estimates.

3.9.1. BM Minimization of over

Let B, be two successive sets of cells. As outlined above, we have reduced the problem to one in which we can assume that , so that there is no cell division during the interframe. Write . For short, denote instead of the target window of cell . We seek to minimize over all registrations . Let be a BM with sites and stochastic neurons . At time t, the random state of will be some cell belonging to the target window and the random configuration of the whole belongs to the configurations set .

To any configuration , we associate a unique cell registration defined by for all j, denoted by . This determines a bijection from onto . The inverse of will be called , and is defined by , when for all j.

3.9.2. BM Energy Function

We now define the energy function of our BM for all . Denote . Since is a bijection from to , we must have

Our goal is to minimize , and we know that BM simulations should roughly minimize over all . Thus, we define the BM energy function by forcing

for any registration mapping , which—due to the preceding subsection—is equivalent to

for all configurations . The next subsection will explicitly express the energy in terms of cliques of neurons. Due to (13) and (14), we have

For large time t, the BM stochastic configuration tends with high probability to concentrate on configurations , which roughly minimize . The random registration will belong to and verify , so that . Consequently, for large t—with high probability—the random mapping will have a value of the cost functional close to .

3.9.3. Cliques of Interactive Neurons

The BM energy function just defined turns out to involve only three sets of small cliques: (i) is the set of all singletons , with . (ii) is the set of all pairs such that cells and are neighbors in B. (iii) is the set of all triplets such that cells and are both neighbors of in B. Denote as the set of all cliques for our BM.

Cliques in . For each clique in , and each , define its energy by

where LIK is given by (12). Set for K in . For all , define the energy by

which implies that the registration verifies .

Cliques in . For all , all cliques in , define the clique energies and by and

where and are the numbers of neighbors in B for cells and , respectively. Set for K in . Define the two energy functions

which implies that verifies and .

Cliques in . For each clique in , define the clique energy by

where is any registration mapping , , onto , , . The indicator FLIP was defined in Section 3.8.2. Set for K in . Define the energy

which implies that verifies .

Finally, define the clique energy for all by the linear combination

Summing this relation over all yields

Define then the final BM energy function by

For any , the associated registration verifies , , . By weighted linear combination of these equalities, and, due to (15), we obtain for all configurations , when or, equivalently, when .

3.9.4. Test Set of 100 Synthetic Image Pairs

As shown above, the minimization of over all registrations is equivalent to seeking BM configurations with minimal energy . We have implemented this minimization of by the long-term asynchronous dynamics of the BM just defined. This algorithm was designed for the registration of image pairs exhibiting no cell division, and was, therefore, implemented after the automatic reduction of the generic registration problem, as indicated earlier. We have tested this specialized registration algorithm on a set of 100 pairs of successive images of simulated cell colonies exhibiting no cell divisions. These 100 image pairs were extracted from the benchmark set BENCH6 of synthetic image sequences described in Section 2.1. The 100 pairs of cell sets B, had sizes ranging from 80 to 100 cells. For each test pair B, , each target window typically contained 30 to 40 cells. The set of configurations had huge cardinality ranging from to . However, the average number of neighbors of a cell was around 4 to 5.

3.9.5. Implementation of BM Minimization for

The numbers , , of cliques in , , have the following rough ranges , , and . For , denote the numbers of non-zero values for when z runs through and K runs through all cliques of cardinality k. One easily checks the rough upper bounds ; ; . Hence, to automatically register B to , one could pre-compute and store all the possible values of for all cliques and all the configurations . This accelerates the key computing steps of the asynchronous BM dynamics, namely, for the evaluation of energy change , when configurations z and differ at only one site . Indeed, the single site modification affects only the energy values for the very small number of cliques K, which contain the site j. In our benchmark sets of synthetic images, one had for all . Hence, the computation of was fast since it requires retrieving at most 24 pairs of pre-computed , , and evaluating the 24 differences . Another practical acceleration step is to replace the ubiquitous computations of probabilities by simply testing the value against 100 precomputed logarithmic thresholds.

In our implementation of the asynchronous BM dynamics, we used virtual temperature schemes such as with . The BM simulation was stopped when the stochastic energy had remained roughly stable during the last N steps. Since all target windows had cardinality smaller than 40, the initial configuration was computed via

where the likelihoods LIK were defined by (12).

3.9.6. Weight Calibration

For the pair of successive synthetic images J, displayed in Figure 4, we have cells. The ground truth registration f is known by construction; we used it to apply the weight calibration described in Section 3.2. We set the meta-parameter to and obtained the vector of weights

These weights are kept fixed for all the 100 pairs of images taken from the set BENCH6. The determined weights are used in the cost function defined above. This correctly parametrized the BM energy function . We then simulated the BM stochastic dynamics to minimize the BM energy .

3.9.7. BM Simulations

We launched 100 simulations of the asynchronous BM dynamics, one for each pair of successive images in our test set of 100 image pairs taken from BENCH6. For each such pair, the ground truth mapping was known by construction and the stochastic minimization of the BM energy generated an estimated cell registration . For each pair B, in the considered set of 100 image pairs, the accuracy of this automatically computed registration was evaluated by the percentage of cells such that . When , our BM has N stochastic neurons, and the asynchronous BM dynamics proceeds by successive epochs. Each epoch is a sequence of N single-site updates of the BM configuration. For each one of our 100 simulations of BM asynchronous dynamics, the number of epochs ranged from 250 to 450.
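The registration accuracy used here is the percentage of cells on which the estimated and the true registrations agree. A minimal Python sketch, assuming that both registrations are stored as dictionaries keyed by the cells of the first frame (the function name is hypothetical), reads:

```python
def registration_accuracy(f_hat, f_true):
    """Percentage of cells b with f_hat(b) equal to the ground truth f_true(b)."""
    if not f_true:
        raise ValueError("ground truth registration is empty")
    correct = sum(1 for b, target in f_true.items() if f_hat.get(b) == target)
    return 100.0 * correct / len(f_true)
```

A cell missing from the estimated registration is counted as an error, so the score is always relative to the full set of ground truth cells.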

The average computing time was about eight seconds per epoch, which entailed a computing time ranging from 30 to 50 min for each one of our 100 automatic registrations reported here (we specify the hardware used to carry out these computations in Appendix B). Each image contains about 100 to 150 cells. Consequently, the runtime for the algorithm is approximately 20 s per cell for our prototype implementation. We note that this is only a rough estimate. The runtime depends on several factors, such as the number of cells in an image; the number of mother and daughter cells (i.e., how many cells divide); the size of the neighborhood of each individual cell (window size); and the weights used in the cost function (which affect the number of epochs). We note that the temperature scheme has not been optimized yet, so that these computing times are upper bounds. Earlier studies of synchronous BMs [99,100,101,102] indicate that the same energy minimizations on GPUs could provide a computational speedup by a factor ranging between 30 and 50. We report registration accuracies in Table 3. For each pair of images in the considered set of 100 image pairs, the accuracy of automatic registration was larger than 94.5%. The overall average registration accuracy was quite high at 99%.

Table 3.

Registration accuracy for synthetic image sequence BENCH6. We consider 100 pairs of consecutive synthetic images taken from the benchmark dataset BENCH6. Automatic registration was implemented by BM minimization of the cost function , which was parametrized by the vector of optimized weights in (17). The average registration accuracy was 99%.

4. Results

In this section, we report results for the registration for cell dynamics involving growth, motion, and cell divisions.

4.1. Tests of Cell Registration Algorithms on Synthetic Data

We now consider more generic long synthetic image sequences of simulated cell colonies, with a small interframe duration of one minute. We still impose the mild constraint that no cell is lost between two successive images. The main difference with the earlier benchmark of 100 image pairs from BENCH6 is that cells are allowed to freely divide during interframes, as well as to grow and to move. For the full implementation on 100 pairs of successive images, we first execute the parent–children pairing and remove the identified parent–children triplets; we can then apply our cell registration algorithm to the reduced cell sets. Our image sequence contained 760 true parent–children triplets, which we automatically identified with an accuracy of 100%. As outlined earlier, we removed all these identified cell triplets and then applied our tracking algorithm. This left us with a total of 12,631 cells (spread over 100 frames). Full automatic registration was then implemented with an accuracy higher than 99.5%.

4.2. Tests of Cell Registration Algorithms on Laboratory Image Sequences

To test our cell tracking algorithm on pairs of consecutive images extracted from recorded image sequences of bacterial colonies (real data), we had to automatically delineate all individual cells in each image. Representative frames of these data are shown in Figure 1, and the data are described in more detail in Section 2.2. We only briefly outline the overall segmentation approach, so as not to distract from our main contribution, the cell tracking algorithm. We use the watershed algorithm [103] (also used, e.g., in [76]) to segment each frame into image segments containing a single cell each. These regions are over-segmentations of the individual cells; we only know that each region contains one bacterial cell b. An additional step is therefore necessary to delineate individual cells. We first apply ad hoc nonlinear filters to remove minor segmentation artifacts. In a second step, we identify the contour of each single cell b by applying the Mumford–Shah algorithm [104] within the image segment containing b. Since this procedure is quite time-consuming for large images, we used it to produce a training set of delineated individual cells, on which we trained a CNN for image segmentation. Once trained, this CNN substantially reduces the runtime of the cell segmentation/delineation procedure. We show the resulting segmentations in Figure 6. Additional information on our approach to segmenting individual bacterial cells is provided in Appendix D.

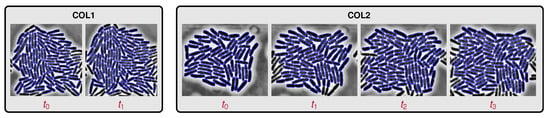

Figure 6.

Segmentation results for experimental recordings of live cell colonies. We show two short image sequence extracts, COL1 (left) and COL2 (right). The interframe duration is six minutes. The extract COL1 has only two successive image frames. The extract COL2 has four successive image frames. We automatically compute four cell registrations, one for each pair of successive images in COL1 and COL2.

After each cell has been identified (i.e., segmented out) in each pair J, of successive images, we transform J, into binary images, where cells appear in white on a black background. For each resulting pair B, of successive sets of cells, we apply the parent–children pairing algorithm outlined in Section 3.3 to identify all the short lineages. For the two successive images in COL1, the discovered short lineages are shown in Figure 7 (left pair of images). Here, color designates the cell triplet algorithmically identified: parent cell in image J and its two children in image . We then remove each identified “parent” from B and its two children from . This yields the reduced cell sets and . We can then apply our tracking algorithm (see Section 3.7) dedicated to situations where cells do not divide during the interframe.
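The reduction step described above, which removes detected parents from the first frame and their children from the second frame before registration, can be sketched as follows. This is a minimal illustration rather than the implementation used for the reported results: cells are represented by hypothetical string labels, and the function name `reduce_cell_sets` is an assumption.

```python
def reduce_cell_sets(cells_first, cells_second, triplets):
    """Drop parents from the first frame and children from the second frame.

    `triplets` is a list of (parent, child_1, child_2) labels, where the
    parent belongs to the first frame and both children to the second.
    """
    parents = {parent for parent, _, _ in triplets}
    children = {child for _, c1, c2 in triplets for child in (c1, c2)}
    reduced_first = [cell for cell in cells_first if cell not in parents]
    reduced_second = [cell for cell in cells_second if cell not in children]
    return reduced_first, reduced_second


# Toy example with hypothetical labels: cell "b" divides into "b1" and "b2".
first_frame = ["a", "b", "c"]
second_frame = ["a_next", "b1", "b2", "c_next"]
reduced_first, reduced_second = reduce_cell_sets(
    first_frame, second_frame, [("b", "b1", "b2")]
)
print(reduced_first, reduced_second)
```

When no cell is lost between frames and all divisions are detected correctly, the two reduced sets have the same size, which is the setting the registration step assumes.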

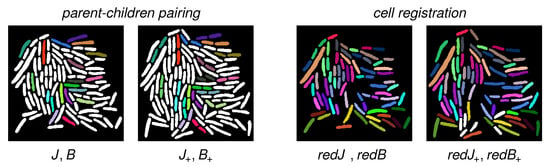

Figure 7.

Cell tracking results for the pair COL1 of successive images J, shown in Figure 6. The interframe duration is six minutes. (Left): Results for parent–children pairing on COL1. Automatically detected parent–children triplets are displayed in the same color. (Right): Computed registration. The removal of the automatically detected parent–children triplets (see left column) generates the reduced cell sets and . Automatic registration of and is again displayed via identical color for the registered cell pairs . Mismatches are mostly due to previous errors in parent–children pairing (see Figure 8 for a more detailed assessment).

For image sequences of live cell colonies, we had to re-calibrate most of our weight parameters. The weight parameters used for these image sequences are summarized in Table 4.

Table 4.

Cost function weights for parent–children pairing in the COL1 images displayed in Figure 6.

The BM temperature scheme was , with the number of epochs capped at 5000. We illustrate our COL1 automatic registration results in Figure 7 (right pair of images). Here, if cell has been automatically registered onto cell , b, share the same color. The cells colored in white in are cells which the registration algorithm did not succeed in matching to some cell in . These errors can essentially be attributed to errors in the parent–children pairing step. By visual inspection, we have determined that there are 14 true parent–children triplets in the successive images of COL1. Our parent–children pairing algorithm did correctly identify 11 of these 14 triplets. To check further the performance of our registration algorithm on live images, we also report automatic registration results for “manually prepared” true versions of and , obtained by removing “manually” the true parent–children triplets determined by visual inspection. For the short image sequence COL2, results are displayed in Figure 8.

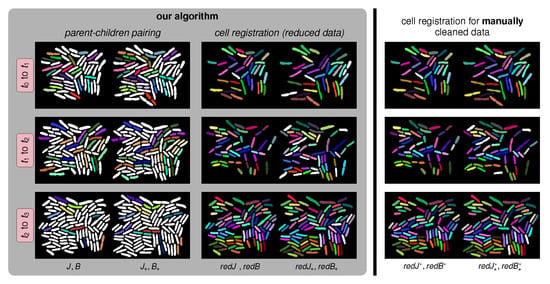

Figure 8.

Cell tracking results for the short image sequence COL2 in Figure 6. The interframe duration for COL2 is six minutes. COL2 involves four successive images , . In our figure, each one of the three rows displays the automatic cell registration results between images and for . We report the accuracies of parent–children pairing and of the registration in Table 5. (Left column): Results for parent–children pairing. Each parent–children triplet is identified by the same color for each parent cell and its two children. (Middle column): Display of the automatically computed registration after removing the parent–children triplets already identified in order to generate two reduced sets and of cells. Again, the same color is used for each pair of automatically registered cells. The white cells in are cells which could not be registered to some cell in . (Right column): To differentiate between errors induced during automatic identification of and errors generated by automatic registration between and , we manually removed all “true” parent–children triplets and then applied our registration algorithm to this “cleaned” (reduced) cell sets and .

The display setup is the same: The left column shows the results of automatic parent–children pairing. The middle column illustrates the computed registration after automatic removal of the automatically identified parent–children triplets. The third column displays the computed registration after “manually” removing the true parent–children triplets determined by visual inspection. Note that the overall matching accuracy can be improved if we reduce errors in the parent–children pairing. We report quantitative accuracies in Table 5. For parent–children pairing, accuracy ranges between 70% and 79%. For pure registration after correct parent–children pairing, accuracy ranges between 90% and 100%.

Table 5.

Cell tracking accuracy for the short image sequence COL2 in Figure 6 with an interframe of six minutes. We report the ratio of correctly predicted cell matches over the total number of true cell matches and the associated percentages. The accuracy results quantify four distinct percentages of correct detections (i) for parent cells in image J, (ii) for children cells in image , (iii) for parent–children triplets, and (iv) for registered pairs of cells .

| Task | Ratio | Accuracy | Ratio | Accuracy | Ratio | Accuracy |
|---|---|---|---|---|---|---|
| correctly detected parents | 15/19 | 79% | 20/21 | 95% | 7/10 | 70% |
| correctly detected children | 35/38 | 92% | 32/42 | 76% | 14/20 | 70% |
| correct parent–children triplets | 15/19 | 79% | 16/21 | 76% | 7/10 | 70% |
| correctly registered cell pairs | 36/36 | 100% | 44/49 | 90% | 76/80 | 95% |
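The percentages in Table 5 can be recomputed directly from the reported ratios. The short sketch below does so with rounding to the nearest integer; the dictionary layout and the helper name `percent` are illustrative choices, and the ratios are copied from the table.

```python
# Ratios (correct, total) copied from Table 5, one tuple per image pair of COL2.
table5 = {
    "correctly detected parents": [(15, 19), (20, 21), (7, 10)],
    "correctly detected children": [(35, 38), (32, 42), (14, 20)],
    "correct parent-children triplets": [(15, 19), (16, 21), (7, 10)],
    "correctly registered cell pairs": [(36, 36), (44, 49), (76, 80)],
}


def percent(correct, total):
    """Accuracy in percent, rounded to the nearest integer."""
    return round(100 * correct / total)


percentages = {
    task: [percent(correct, total) for correct, total in ratios]
    for task, ratios in table5.items()
}

for task, values in percentages.items():
    print(f"{task}: " + ", ".join(f"{v}%" for v in values))
```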

5. Conclusions and Future Work

We have developed a methodology for automatic cell tracking in recordings of dense bacterial colonies growing in a monolayer. We have also validated our approach using synthetic data from agent-based simulations, as well as experimental recordings of E. coli colonies growing in microfluidic traps. Our next goal is to streamline our implementation for systematic cell registration on experimentally acquired recordings of such cell colonies, to enable automated quantitative analysis and modeling of cell population dynamics and lineages.

Our cell tracking algorithm faces a number of challenges: inherent imaging artifacts such as noise or intensity drifts, cell overlaps, similarity of cell shape characteristics across the population, tight packing of cells, somewhat large interframe times, cell growth combined with cell motion, and cell divisions, to name just a few. Overall, the cell tracking problem has combinatorial complexity, and for large frames it is beyond the practical patience of human experts. We tackle these challenges with a two-stage algorithm that first identifies parent–children triplets and subsequently computes cell registration from one frame to the next, after reducing the two original cell sets by automatic removal of the identified parent–children triplets. Our algorithms specify innovative cost functions dedicated to these registration challenges. These cost functions have combinatorial complexity. To discover good registrations, we minimize these cost functions numerically by intensive stochastic simulations of specifically structured BMs. We have validated the potential of our approach by reporting promising results obtained on long synthetic image sequences of simulated cell colonies (which naturally provide a ground truth for cell registration from one frame to the next). We have also successfully tested our algorithms on experimental recordings of live bacterial colonies.

The choice of adequate cost functions to drive each major cost optimization step in our multi-step cell tracking algorithms is essential for obtaining good tracking. Selecting the proper formulation had a strong impact on actual tracking accuracy. Our cost functions are fundamentally nonlinear, which entails additional complications. We introduced a set of meta-parameters for each cost function, and proposed an original learning algorithm to automatically identify good ranges for these meta-parameters.

Our BMs are focused on stochastic minimization of dedicated cost functions. An interesting feature of BMs that we will explore in future work is the simplicity of their natural massive parallelization for fast stochastic minimization [90]. This allows us to mitigate the slow convergence typically observed for Gibbs samplers on discrete state spaces with high cardinality. Parallelized BMs implement a form of massively parallel simulated annealing. Sequential simulated annealing has been explored by physicists [105,106,107,108] seeking to minimize spin-glass energies. For these clique-based energies, reaching global minima requires infeasible CPU times, and much faster parallel simulated annealing yields only good local minima, via a sophisticated but still greedy stochastic search. Parallel stochasticity favors ending in rather stable local minima, which in turn enforces low sensitivity to small changes in energy parameters. Robustness to small changes in the coefficients of our cost functions is a desirable feature, since our algorithmic calibration of cost coefficients focuses on computing good ranges for these meta-parameters. We do not aim for global minima, which is generally a very elusive search, because computing speed and scalability are important features in our problem. Recall the established results of Huber [109] showing that optimal estimators of the mean for a Gaussian distribution lose efficiency very quickly when the Gaussian data are slightly perturbed.
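To make the idea of annealing toward good local minima concrete, the following toy sketch runs sequential simulated annealing on a small matching problem. It is not the BM implementation used in this work: it swaps in plain Metropolis acceptance with a geometric cooling schedule, and the cost (sum of squared centroid distances), the function names, and all parameter values are assumptions chosen for illustration only.

```python
import math
import random


def registration_cost(perm, src, dst):
    """Sum of squared centroid distances for the matching i -> perm[i]."""
    return sum(
        (src[i][0] - dst[j][0]) ** 2 + (src[i][1] - dst[j][1]) ** 2
        for i, j in enumerate(perm)
    )


def anneal(src, dst, epochs=2000, t0=1.0, decay=0.995, seed=0):
    """Metropolis annealing over permutations (needs at least two cells)."""
    rng = random.Random(seed)
    n = len(src)
    perm = list(range(n))
    rng.shuffle(perm)
    cost = registration_cost(perm, src, dst)
    best, best_cost = perm[:], cost
    temp = t0
    for _ in range(epochs):
        i, j = rng.sample(range(n), 2)
        perm[i], perm[j] = perm[j], perm[i]      # propose swapping two targets
        new_cost = registration_cost(perm, src, dst)
        delta = new_cost - cost
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            cost = new_cost                       # accept the proposal
            if cost < best_cost:
                best, best_cost = perm[:], cost
        else:
            perm[i], perm[j] = perm[j], perm[i]  # reject: undo the swap
        temp *= decay                             # geometric cooling (assumed)
    return best, best_cost


# Toy data: six well-separated centroids, each shifted by (1, 0) in the next frame.
src = [(0, 0), (10, 0), (20, 0), (0, 10), (10, 10), (20, 10)]
dst = [(x + 1, y) for x, y in src]
best, best_cost = anneal(src, dst)
print(best, best_cost)
```

For this toy data the identity matching is optimal with a cost of 6, so the returned cost can never fall below that value; whether the search reaches it depends on the seed and the cooling schedule, which mirrors the point that annealing targets good, stable minima rather than guaranteed global ones.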

In future work, we will further improve the stability and accuracy of our cell registration algorithms by exploring natural modifications of our cost functions. In the present work, we have not yet explicitly considered the case of cells vanishing between successive frames. This is a critical issue that can occur due to cells exiting or entering the field of view as well as due to errors in cell segmentation. The problem is somewhat controlled and/or mitigated in our experimental setup, where we expect cells to enter or vanish close to a precisely positioned trap edge and/or near frame boundaries. Since we intend to track lineages, each frame-to-frame error of this type may be problematic, and it will be instrumental for our future work to address these issues.

Linking parents to children involves an optimization distinct from the final optimization of frame-to-frame registrations. This separation reduced computing time without degrading the quality of our benchmark results. However, in future work, one could attempt to iterate this sequence of two optimizations in order to reach a better minimum.

We note that our algorithm can be applied to experimental setups in which the frame rate of the video recordings is not fixed. This requires an adaptive parameter selection that depends on the frame rate, which can be implemented with a simple rescaling procedure. However, for larger interframe times, more errors will impact tracking results. Indeed, large interframe durations intensify fluctuations in key parameters of cell dynamics and increase the range of cell displacement, which imposes searches in larger cell neighborhoods for cell pairing and increases combinatorial complexity.