An Autoencoder Gated Recurrent Unit for Remaining Useful Life Prediction

Abstract

1. Introduction

2. Literature Review

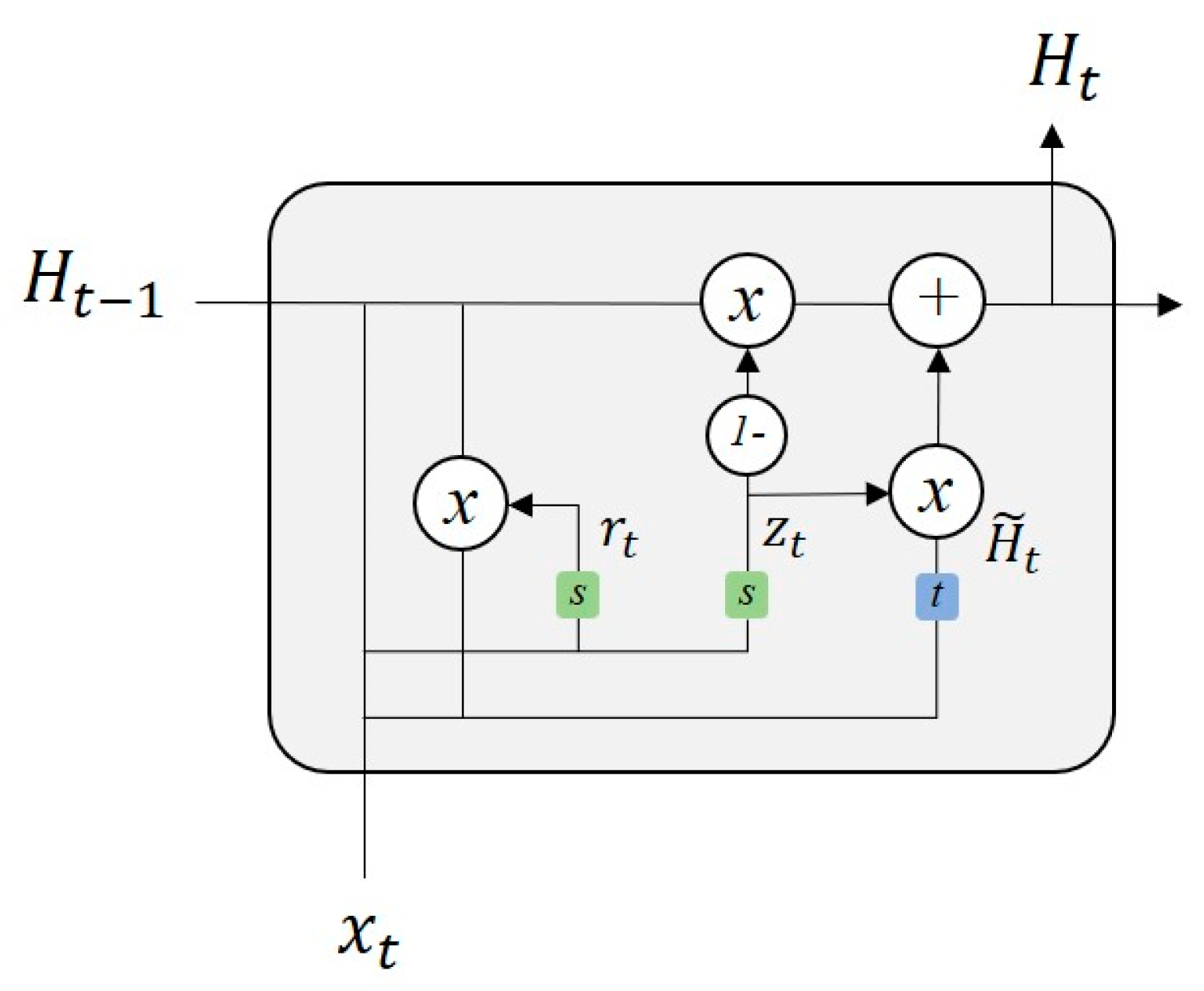

2.1. Recurrent Neural Networks

2.2. RNN Applications in Time Series Forecasting

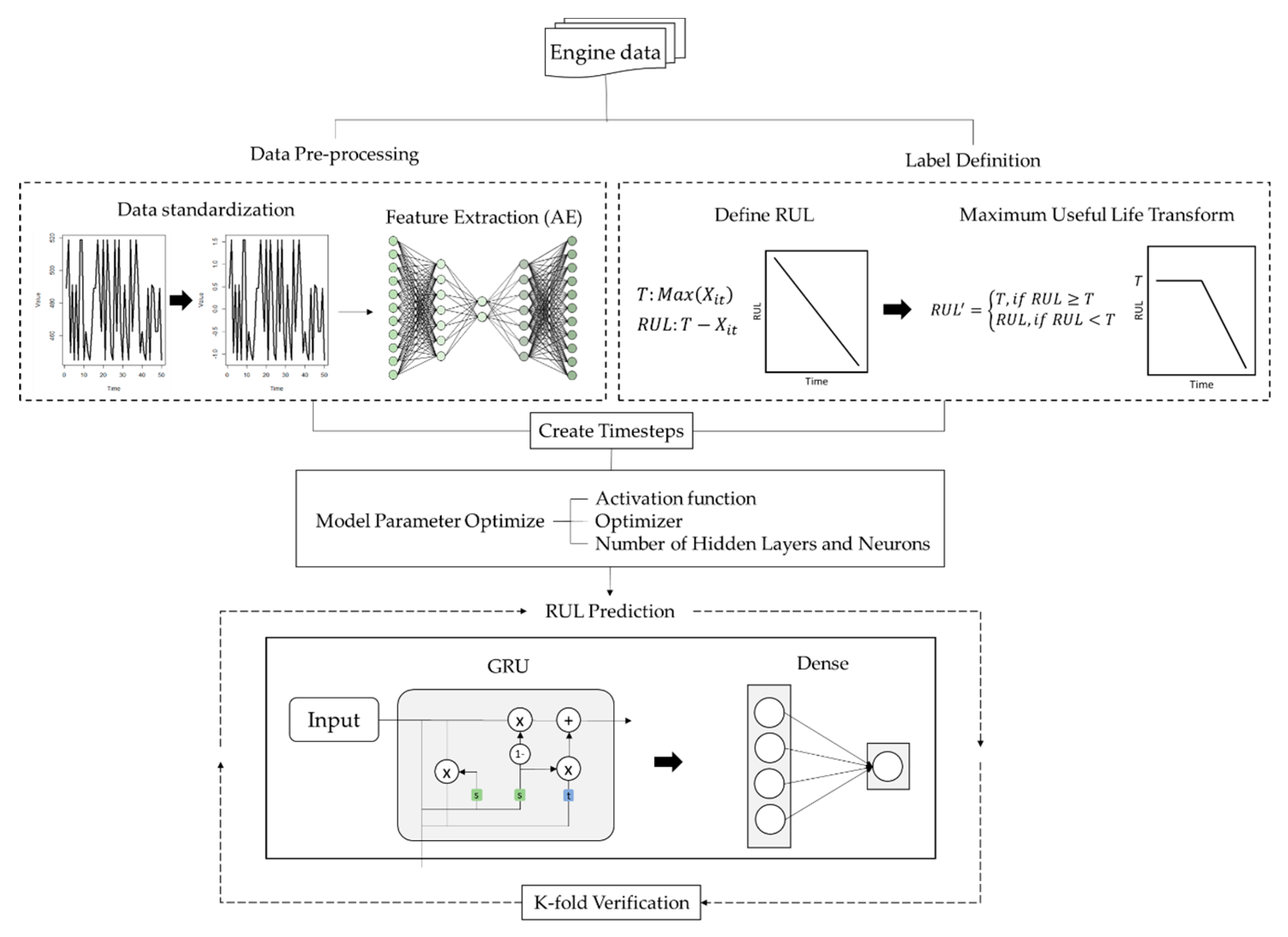

3. Research Framework

3.1. Data Pre-Processing

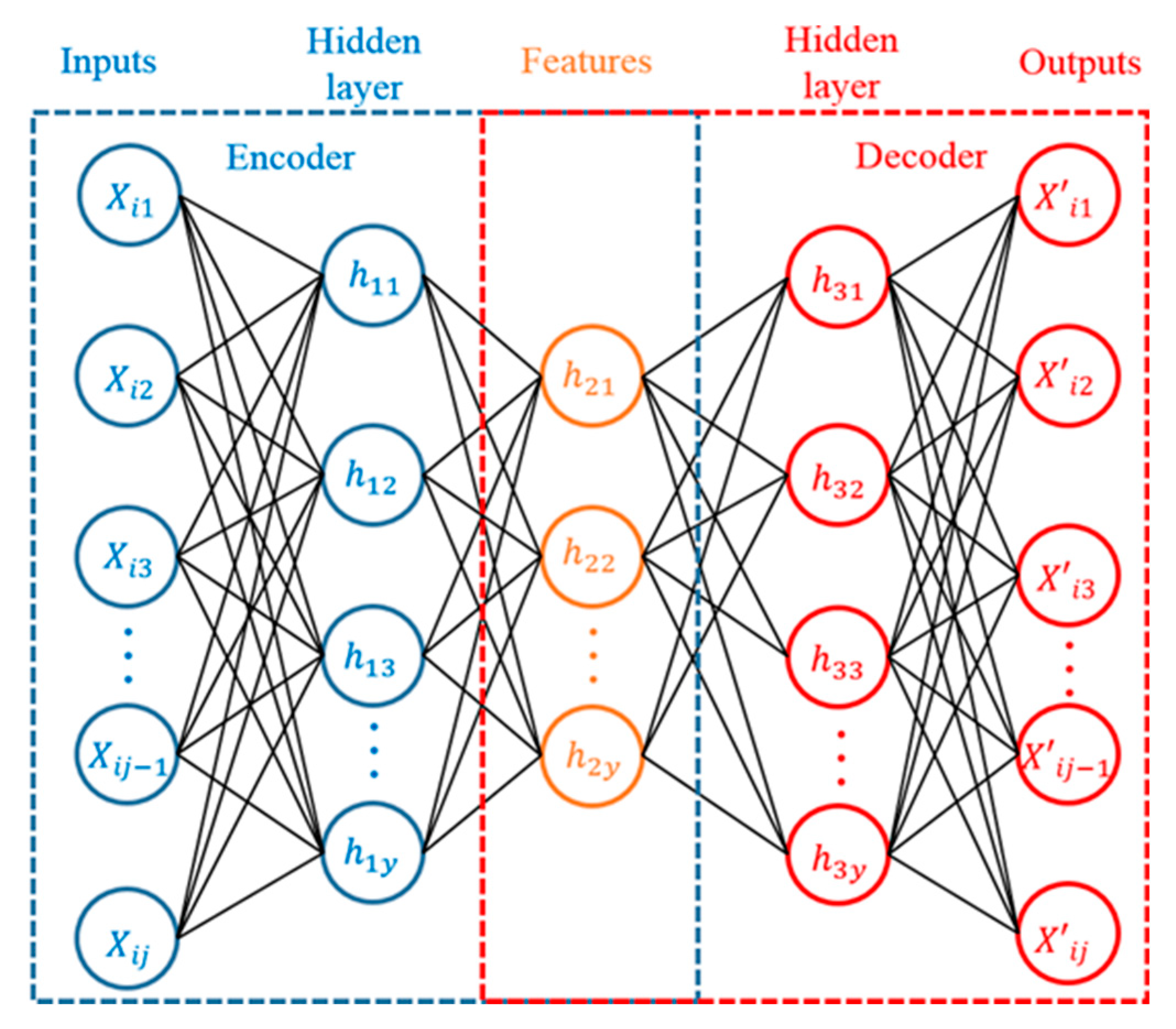

3.1.1. Feature Extraction

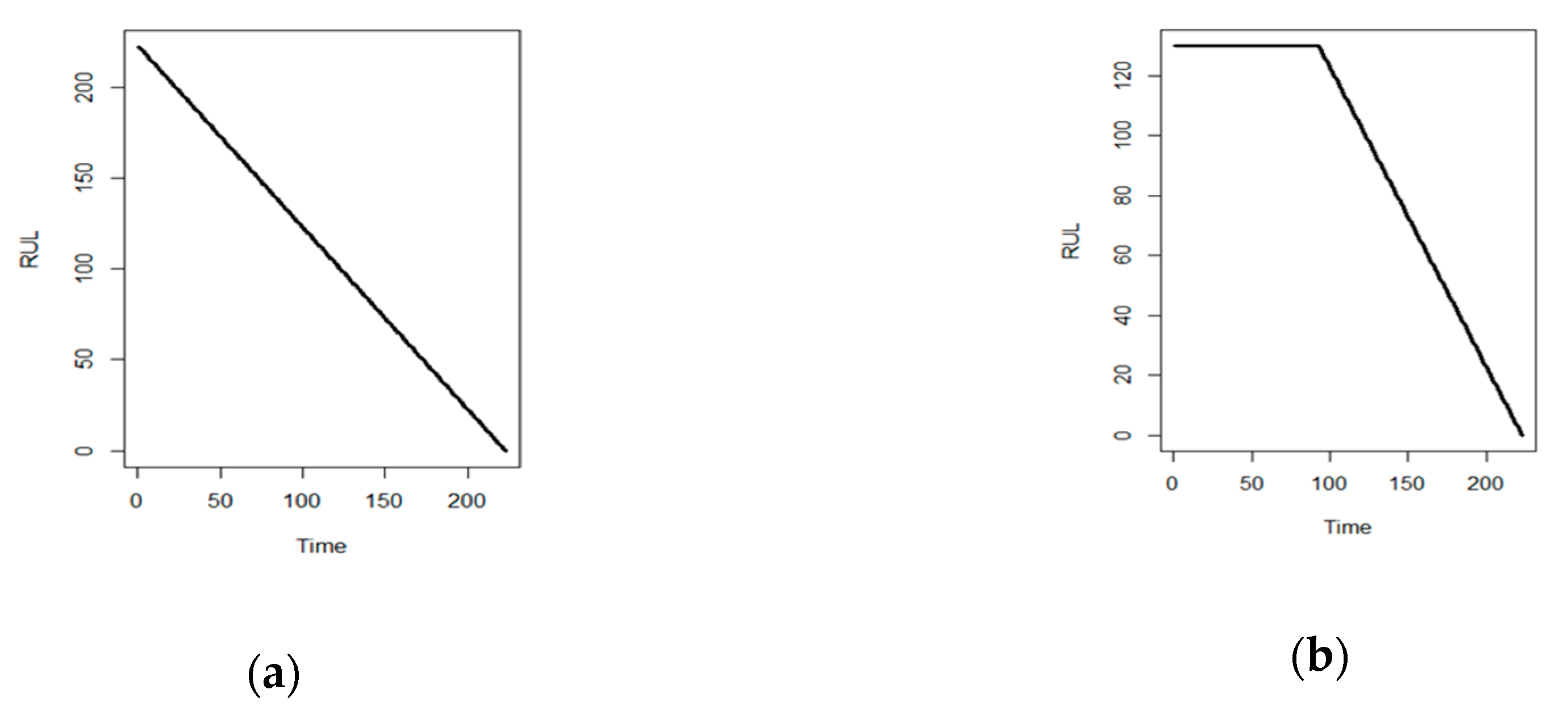

3.1.2. Maximum RUL Transformation

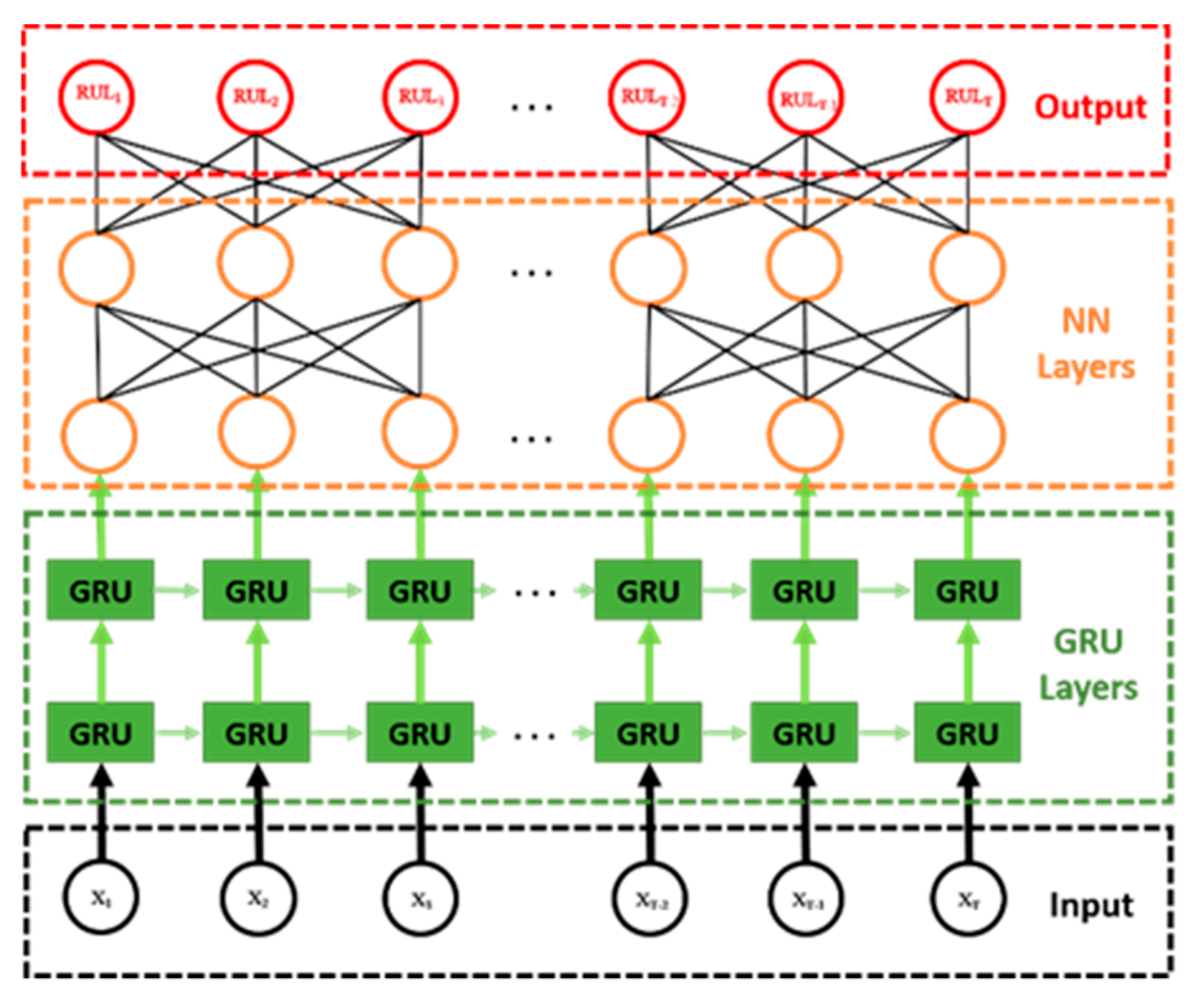

3.2. RUL Prediction Model

4. Result Evaluation

4.1. Data Collection

4.2. Hyperparameters Setting

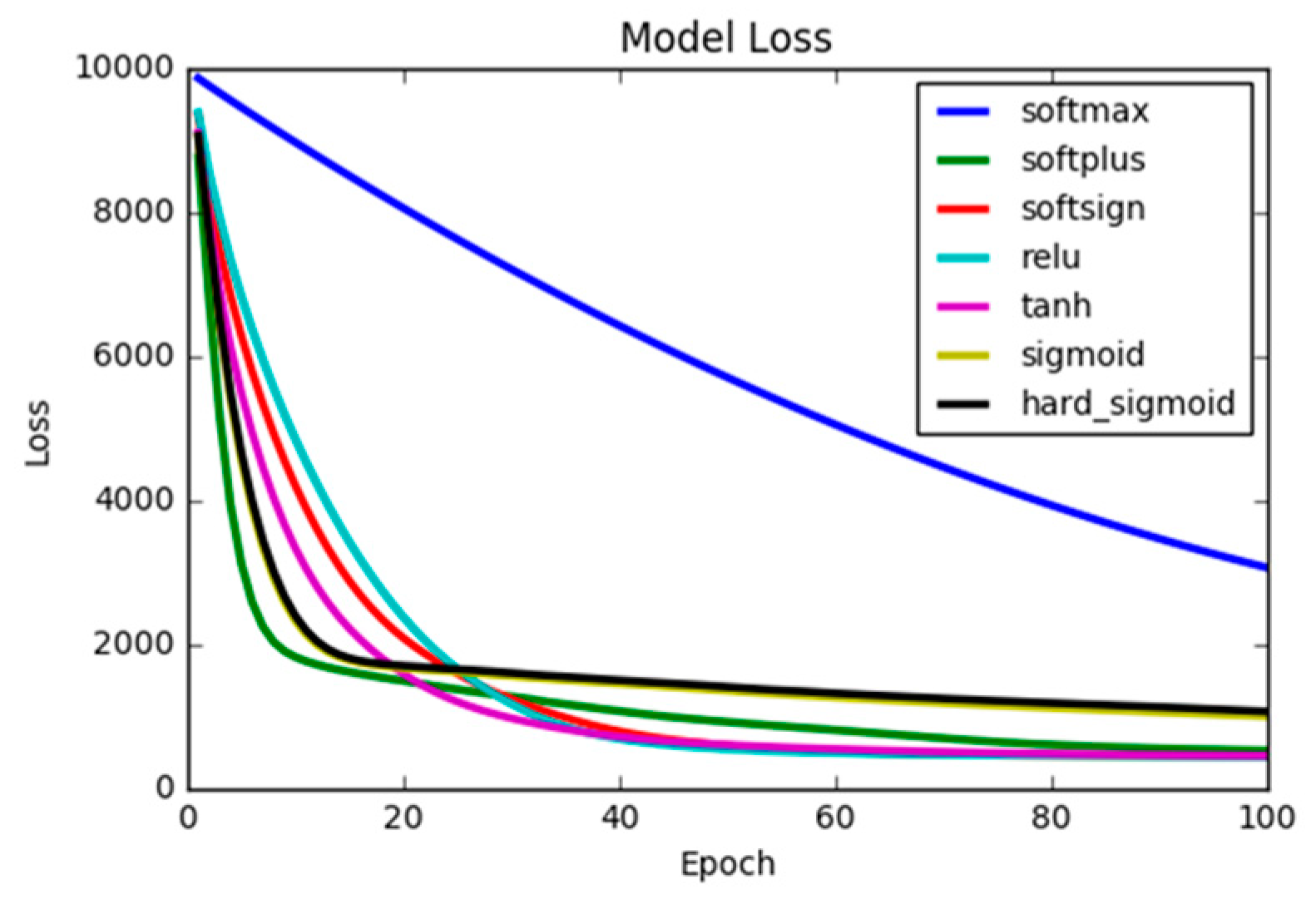

4.2.1. Activation Function

- Epochs: 100;

- Optimizer: Adam;

- Batch_size: 128;

- Hidden layer, number of neurons: 1, 50;

- Inputs: 24.

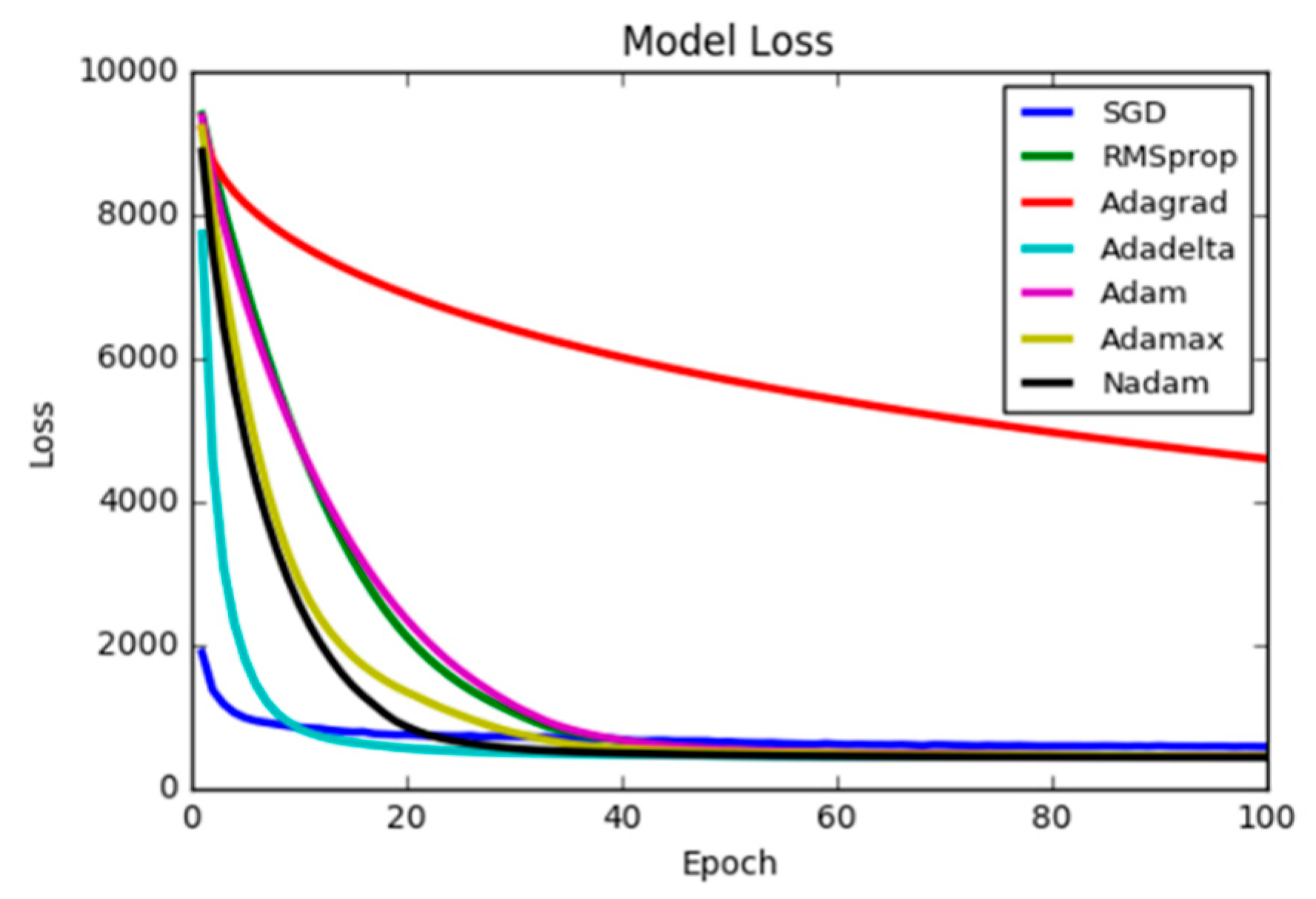

4.2.2. Optimizer

- Epochs: 100;

- Activation: relu;

- Batch_size: 128;

- Hidden layer, number of neurons: 1, 50;

- Inputs: 24.
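
The two settings lists above each hold every hyperparameter fixed except the one under study. A minimal sketch of that setup follows, assuming a plain Python dataclass as the container. The names `TrainingConfig` and `sweep` are hypothetical, and the candidate values passed to `sweep` are placeholders, since the lists name only `relu` and Adam.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TrainingConfig:
    """Fixed settings taken from the lists above (container name is hypothetical)."""
    epochs: int = 100
    batch_size: int = 128
    hidden_layers: int = 1
    neurons: int = 50
    n_inputs: int = 24
    activation: str = "relu"   # fixed during the optimizer experiment
    optimizer: str = "adam"    # fixed during the activation experiment


def sweep(base, field, candidates):
    """Return one config per candidate, changing only `field`."""
    return [replace(base, **{field: value}) for value in candidates]


base = TrainingConfig()

# Placeholder candidates: the source does not list which values were compared.
activation_trials = sweep(base, "activation", ["relu", "tanh"])
optimizer_trials = sweep(base, "optimizer", ["adam", "sgd"])

for cfg in optimizer_trials:
    print(cfg)
```

Freezing the dataclass keeps the baseline immutable, so each trial differs from it in exactly one field.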

4.2.3. Number of Hidden Layers and Neurons

4.3. Model Evaluation

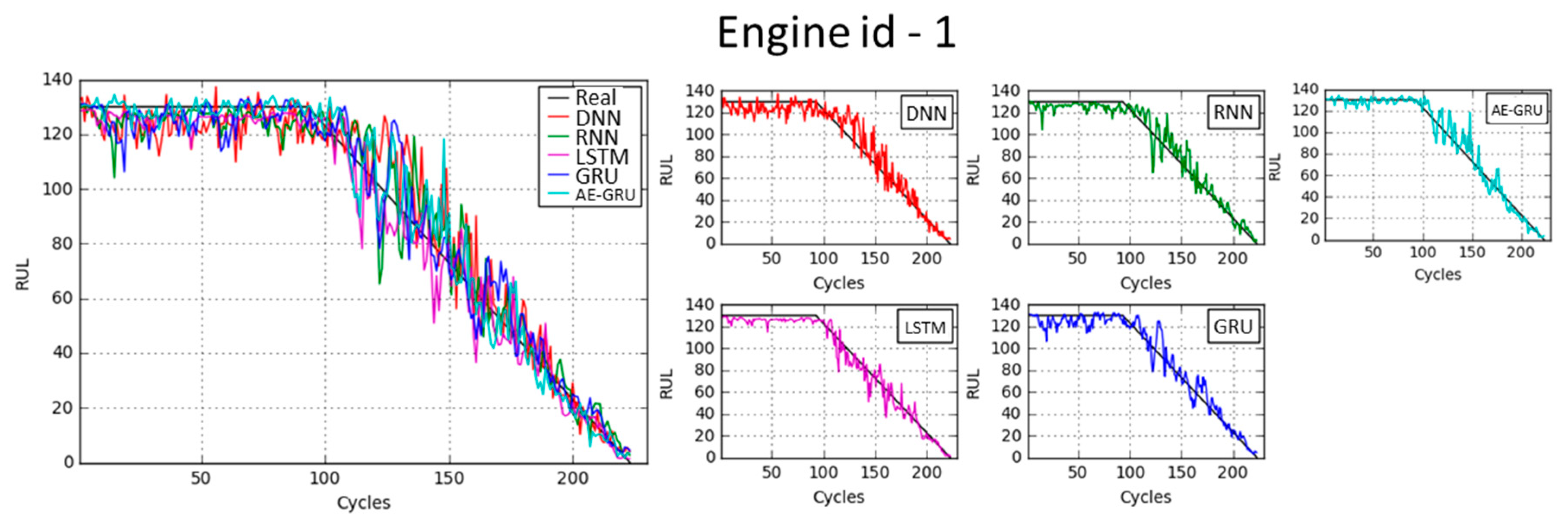

4.3.1. Model Comparison

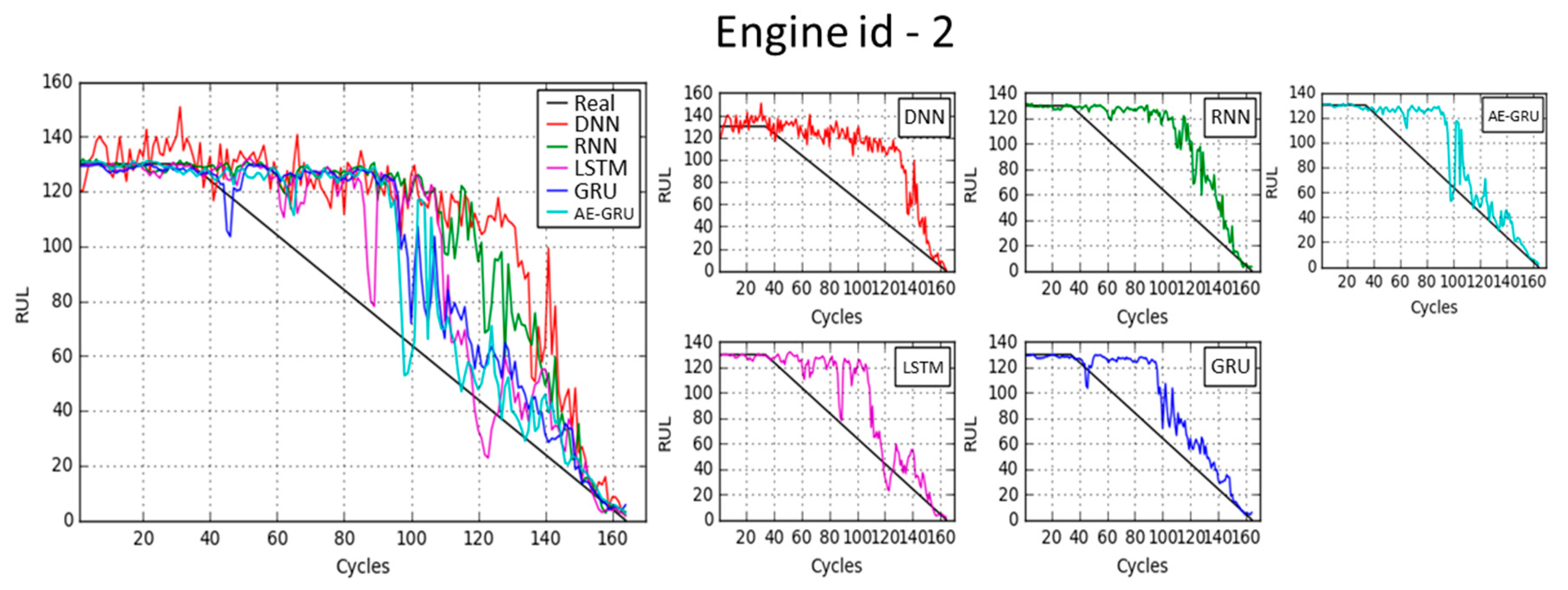

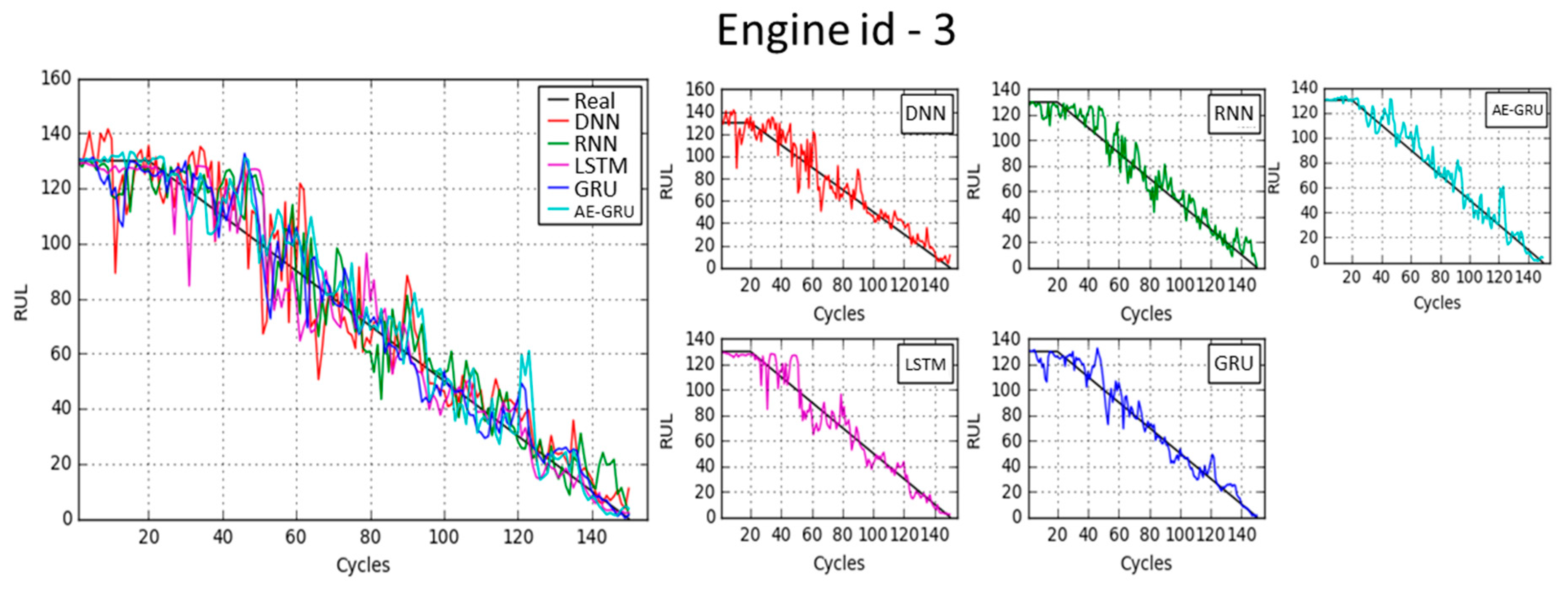

4.3.2. RUL Prediction

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Engine | Usage Time | Parameter 1 | Parameter 2 | Parameter 24 | RUL |
|---|---|---|---|---|---|
| 1 | 1 | 10.0047 | 0.2501 | 17.1735 | 222 |
| 1 | 2 | 0.0015 | 0.0003 | 23.3619 | 221 |
| … | … | … | … | … | … |
| 1 | 223 | 34.9992 | 0.84 | 8.6695 | 0 |
| … | … | … | … | … | … |
| 218 | 132 | 35.007 | 0.8419 | 8.6761 | 1 |
| 218 | 133 | 25.007 | 0.6216 | 8.512 | 0 |
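
In the table above, the RUL column equals each engine's last recorded usage time minus the current usage time (engine 1 runs for 223 cycles, so cycle 1 has RUL 222 and cycle 223 has RUL 0). The sketch below reproduces that labelling in plain Python. The function name `add_rul_labels` and the tuple layout are assumptions made for illustration, not the authors' code.

```python
from collections import defaultdict


def add_rul_labels(rows):
    """Attach a RUL label to each (engine, usage_time) pair.

    RUL = last observed usage time of that engine - current usage time.
    """
    last_cycle = defaultdict(int)
    for engine, usage_time in rows:
        last_cycle[engine] = max(last_cycle[engine], usage_time)
    return [
        (engine, usage_time, last_cycle[engine] - usage_time)
        for engine, usage_time in rows
    ]


# The (engine, usage time) pairs shown in the table; elided rows are omitted.
rows = [(1, 1), (1, 2), (1, 223), (218, 132), (218, 133)]
labelled = add_rul_labels(rows)

for engine, usage_time, rul in labelled:
    print(engine, usage_time, rul)
```

Because the elided rows are not included, the result is only correct here because the last cycle of each engine (223 and 133) is among the rows supplied.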

| Networks | Epochs | Training Time | RMSE |
|---|---|---|---|
| G(32,32)N(8,8) | 33 | 98.4 s | 20.18 |
| G(64,64)N(8,8) | 20 | 89.3 s | 20.24 |
| G(32,64)N(8,8) | 19 | 76.8 s | 20.37 |
| G(32,64)N(16,16) | 28 | 103.7 s | 20.24 |
| G(96,96)N(8,8) | 25 | 150.3 s | 20.20 |
| G(96,96)N(16,16) | 24 | 151.4 s | 20.18 |

| Networks | Epochs | Training Time | RMSE |
|---|---|---|---|
| G(32,32)N(8,8) | 21 | 52.0 s | 20.32 |
| G(64,64)N(8,8) | 24 | 75.8 s | 20.25 |
| G(32,64)N(8,8) | 23 | 63.8 s | 20.33 |
| G(32,64)N(16,16) | 24 | 66.7 s | 20.36 |
| G(96,96)N(8,8) | 15 | 80.1 s | 20.44 |
| G(96,96)N(16,16) | 14 | 78.3 s | 20.44 |

| Networks | Epochs | Training Time | RMSE |
|---|---|---|---|
| G(32,32)N(8,8) | 42 | 60.4 s | 20.16 |
| G(64,64)N(8,8) | 34 | 70.1 s | 20.07* |
| G(32,64)N(8,8) | 33 | 60.3 s | 20.20 |
| G(32,64)N(16,16) | 52 | 85.6 s | 20.19 |
| G(96,96)N(8,8) | 42 | 119.4 s | 20.15 |
| G(96,96)N(16,16) | 36 | 115.7 s | 20.12 |
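
The network tables above rank configurations by RMSE. As a reminder of what that column measures, here is a minimal sketch of the standard root-mean-square error between true and predicted RUL values. The function name `rmse` and the sample numbers are illustrative assumptions, and the authors' exact evaluation procedure is not shown in this section.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error: sqrt(mean((y_true - y_pred)^2))."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up RUL values for illustration only (not data from the tables).
true_rul = [100, 80, 60, 40]
predicted_rul = [102, 78, 62, 38]
print(rmse(true_rul, predicted_rul))
```

Squaring the errors penalises large misses more heavily than small ones, which matters when a badly wrong RUL estimate is costlier than several slightly wrong ones.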

| Input | 15 | 10 | 5 |
|---|---|---|---|
| Loss | 7.7 × 10⁻⁶ | 2.6 × 10⁻⁵ | 3.0 × 10⁻⁵ |

| | DNN (24 inputs) | RNN (24 inputs) | LSTM (24 inputs) | GRU (24 inputs) | AE-GRU (15 inputs) |
|---|---|---|---|---|---|
| 1 | 18.57 | 18.06 | 17.80 | 17.68 | 17.64 |
| 2 | 18.76 | 18.22 | 18.00 | 17.88 | 17.70 |
| 3 | 18.73 | 18.47 | 18.19 | 18.03 | 17.98 |
| 4 | 19.11 | 18.44 | 18.45 | 18.16 | 18.20 |
| 5 | 19.12 | 18.43 | 18.34 | 18.12 | 18.20 |
| Average | 18.86 | 18.32 | 18.16 | 17.96 | 17.94 |

| | DNN (24 inputs) | RNN (24 inputs) | LSTM (24 inputs) | GRU (24 inputs) | AE-GRU (15 inputs) |
|---|---|---|---|---|---|
| 1 | 19.21 | 15.08 | 11.20 | 10.31 | 10.39 |
| 2 | 19.21 | 14.26 | 11.52 | 11.05 | 10.02 |
| 3 | 19.07 | 15.86 | 10.78 | 10.75 | 10.70 |
| 4 | 19.77 | 15.07 | 11.49 | 11.33 | 10.69 |
| 5 | 19.69 | 17.27 | 10.81 | 10.54 | 11.31 |
| Average | 19.39 | 15.51 | 11.16 | 10.79 | 10.62 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lu, Y.-W.; Hsu, C.-Y.; Huang, K.-C. An Autoencoder Gated Recurrent Unit for Remaining Useful Life Prediction. Processes 2020, 8, 1155. https://doi.org/10.3390/pr8091155
