Innovative Flow Pattern Identification in Oil–Water Two-Phase Flow via Kolmogorov–Arnold Networks: A Comparative Study with MLP

Abstract

1. Introduction

2. Method

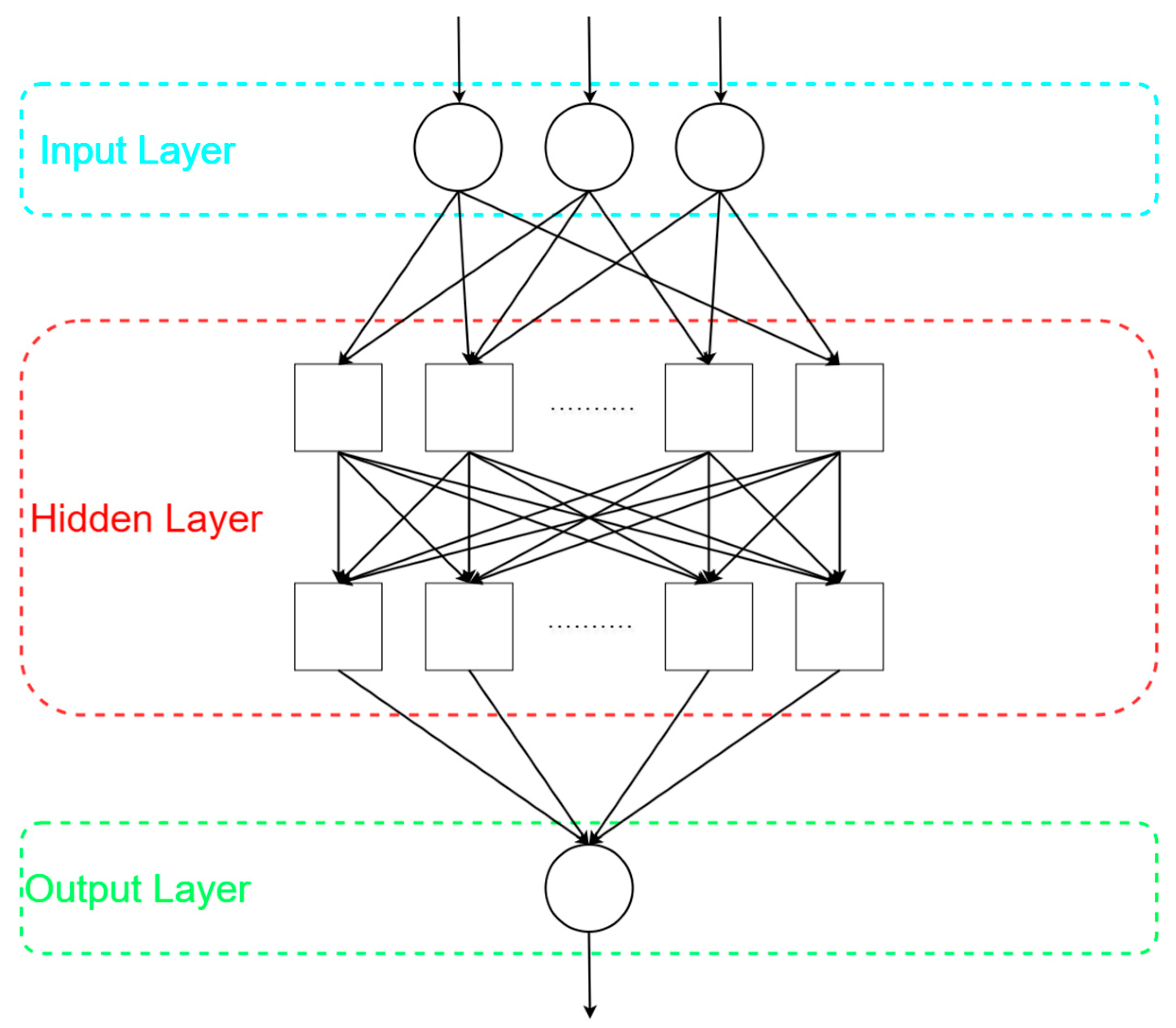

2.1. Multi-Layer Perceptron

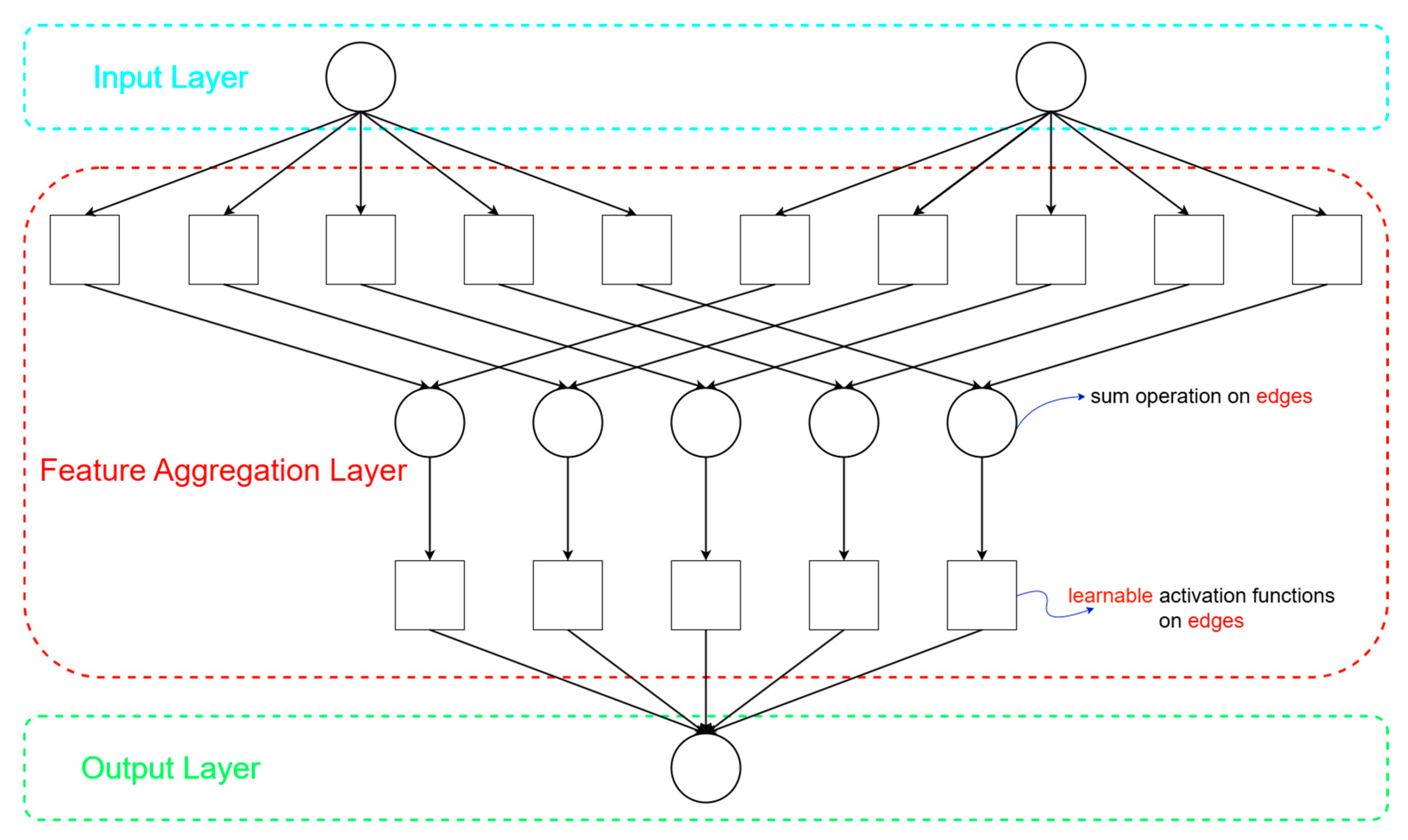

2.2. Kolmogorov–Arnold Network

3. Experimental Work

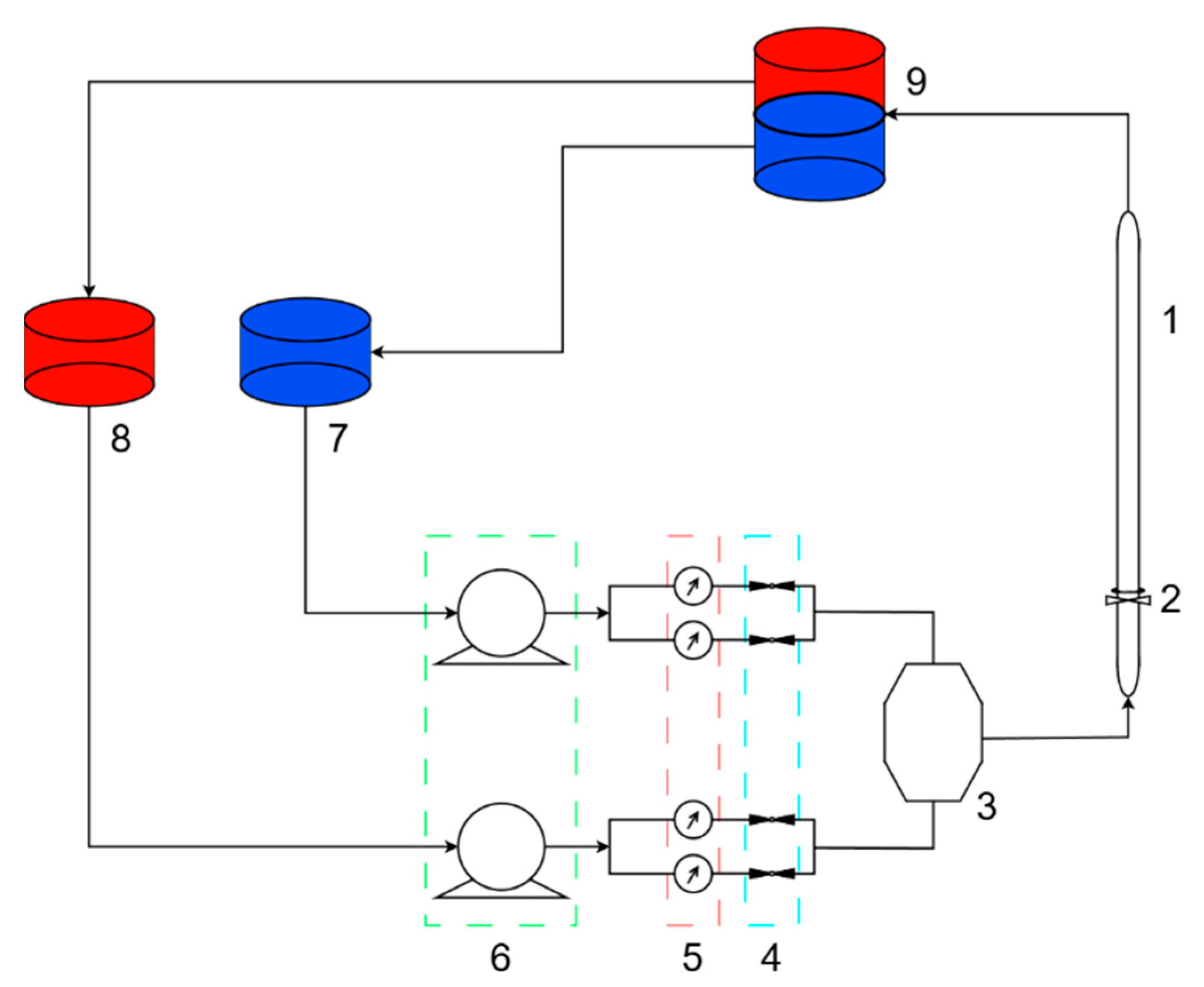

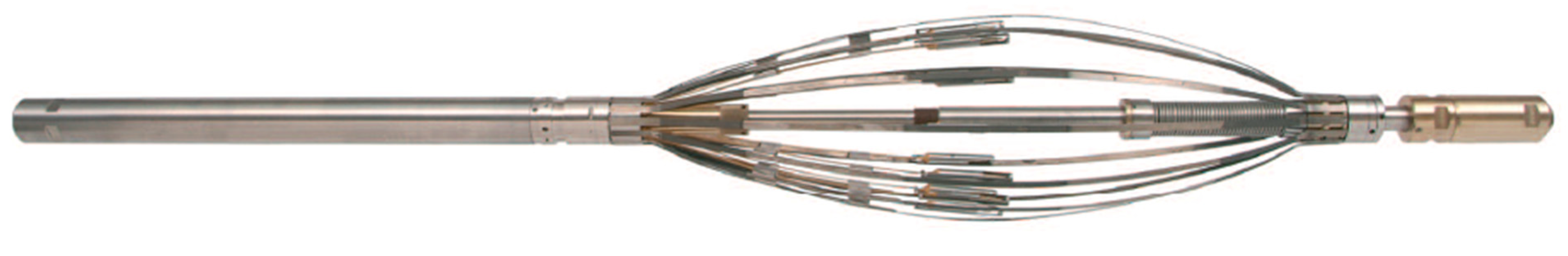

3.1. Experimental Device

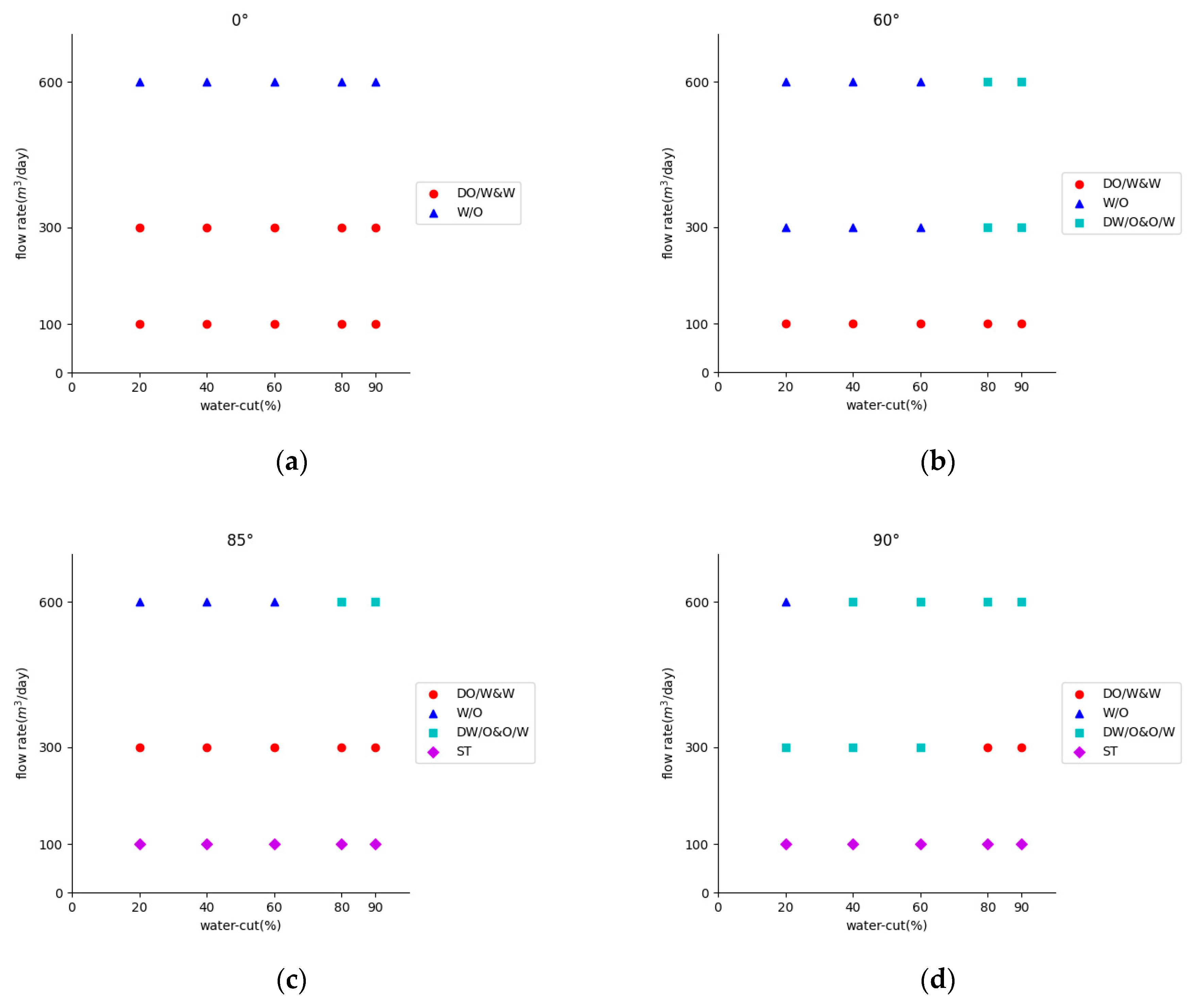

3.2. Experimental Design

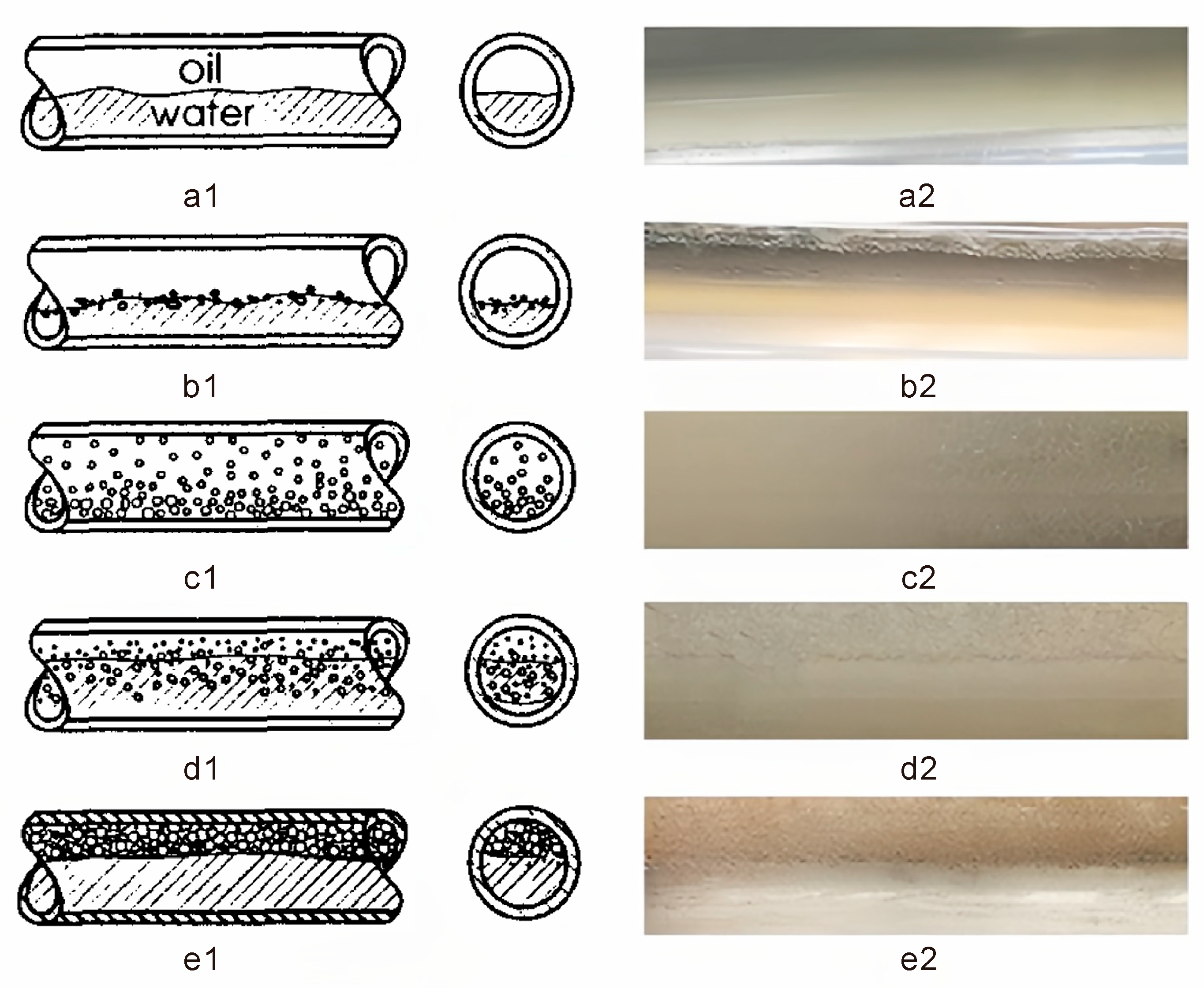

- SS (a1,a2): The oil and water flow in distinct layers with a smooth interface.

- ST & MI (b1,b2): The oil and water flow in layers, but there is some mixing at the interface.

- W/O (c1,c2): The water is dispersed in the oil phase.

- DW/O & O/W (d1,d2): The upper layer is water dispersed in oil, and the lower layer is oil dispersed in water.

- DO/W & W (e1,e2): The upper layer is oil dispersed in water, and the lower layer is water.

3.3. Experimental Data Processing

4. Network Training

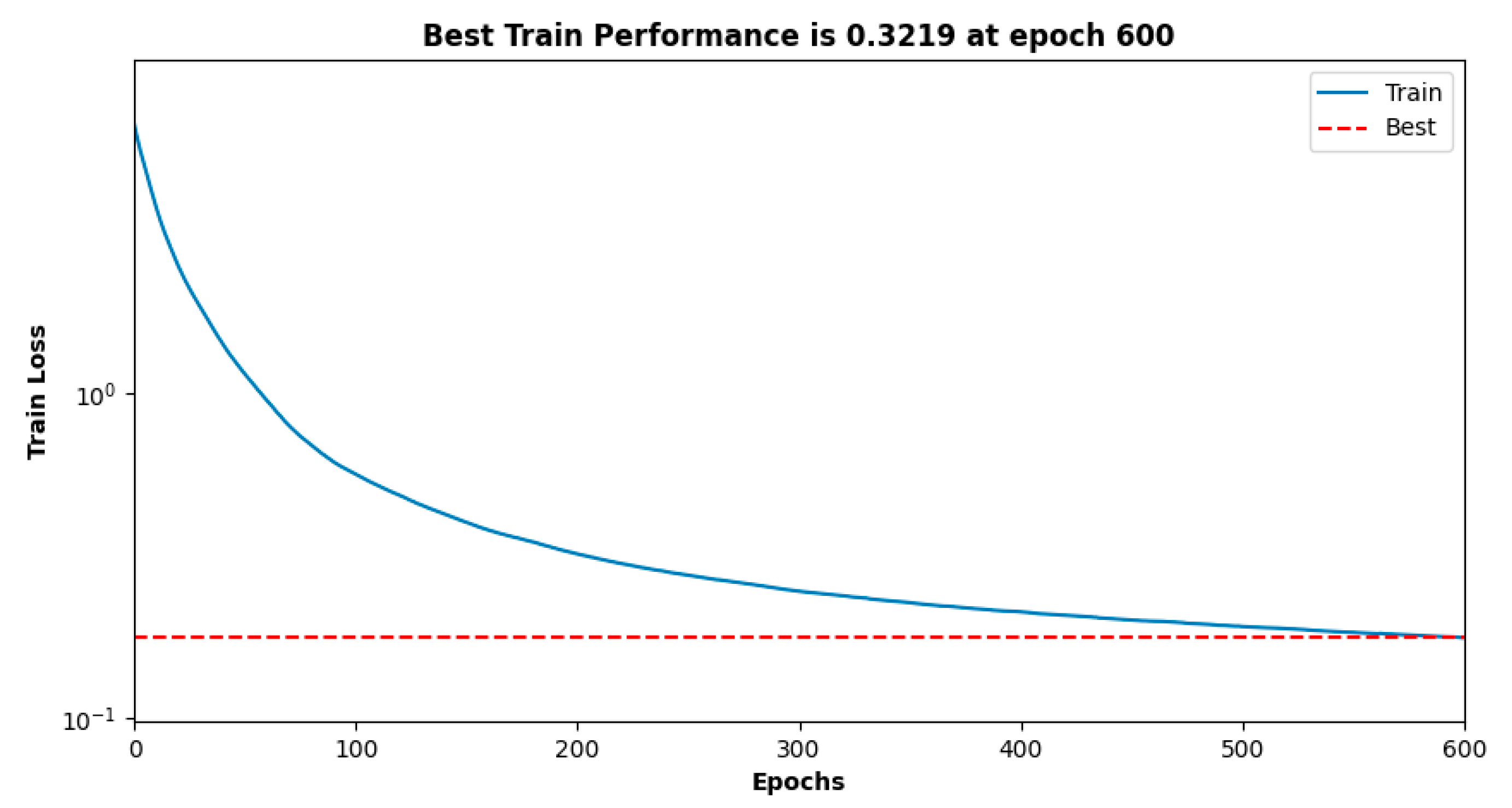

4.1. Implementation Steps of MLP

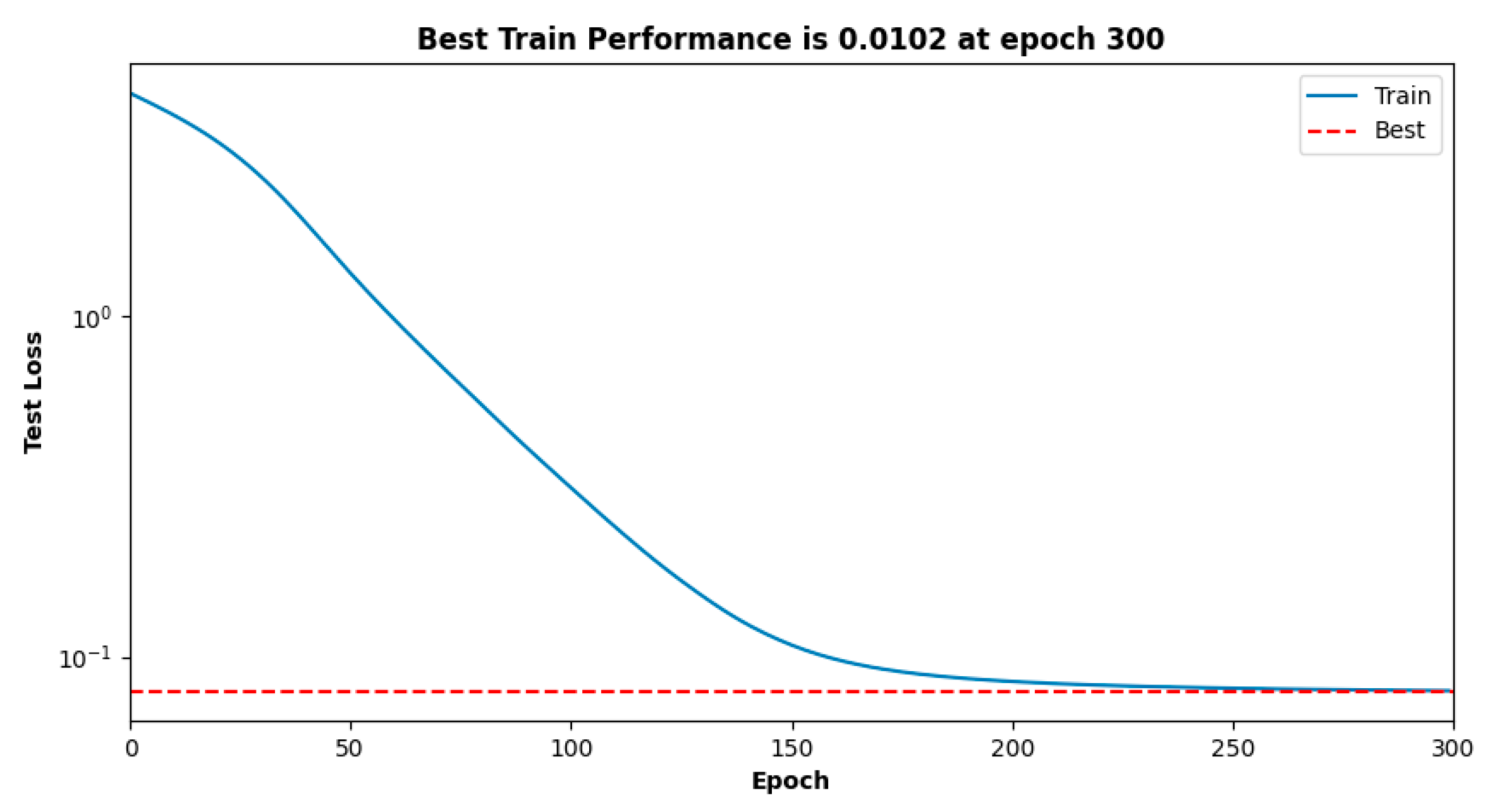

4.2. Implementation Steps of KAN

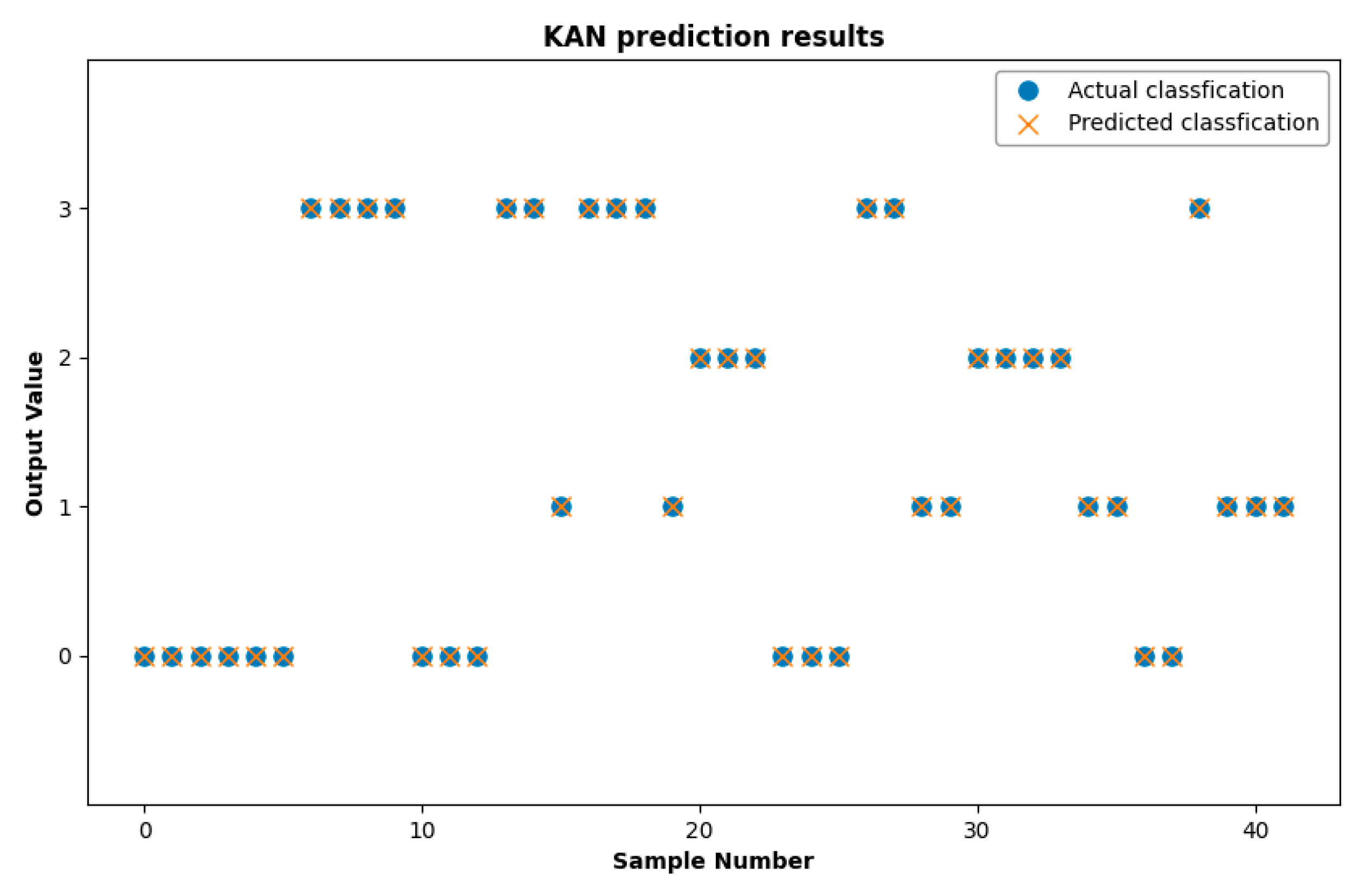

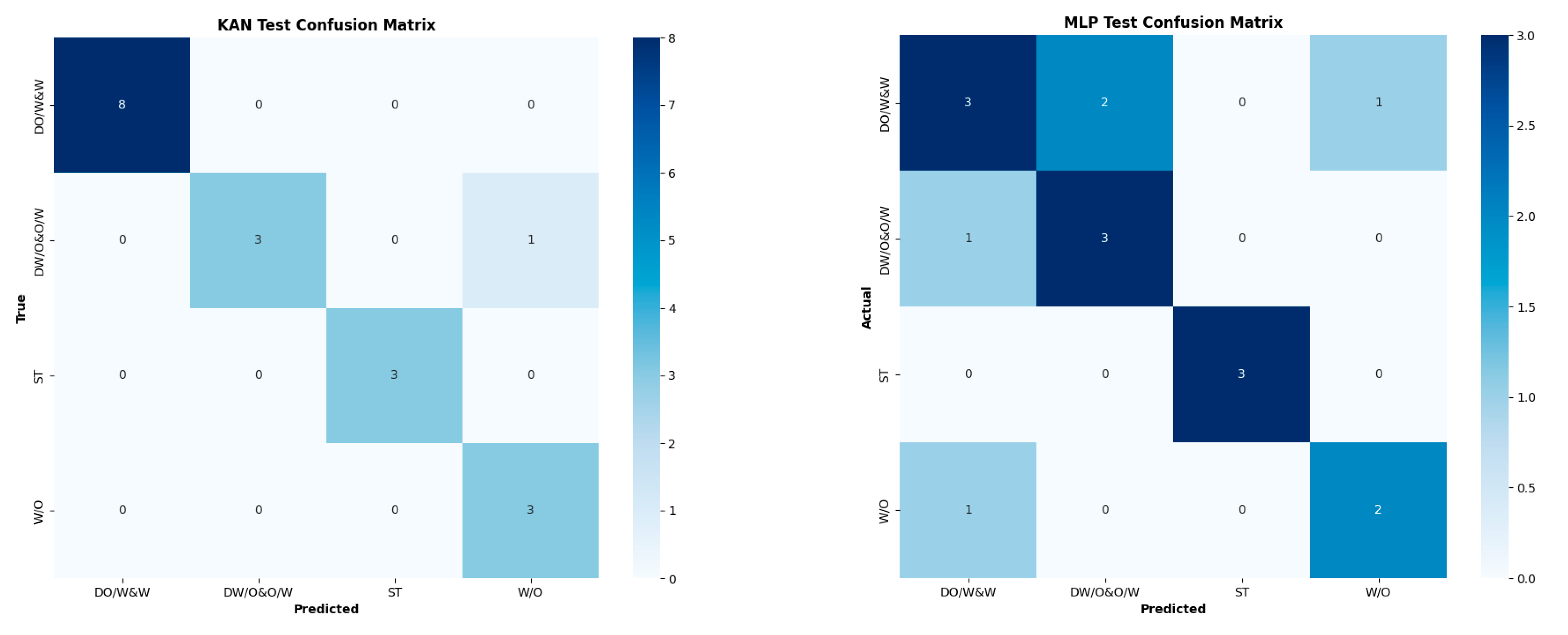

5. Results and Discussion

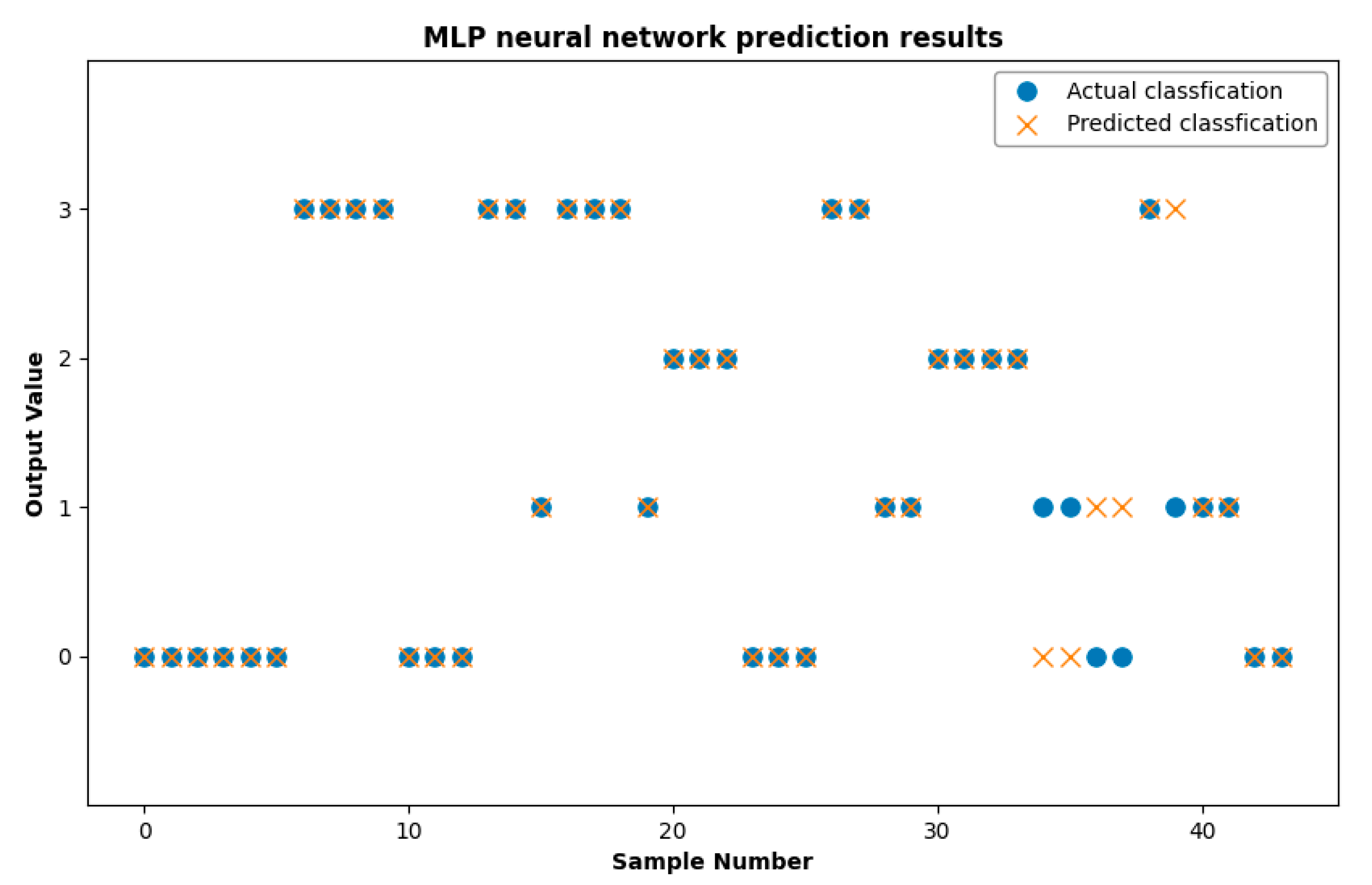

Comparison of the KAN Model with the MLP Neural Network

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| KAN | Kolmogorov–Arnold Networks |
| MLP | Multi-Layer Perceptron |
| SS | Smooth Stratified Flow |
| W/O | Water in Oil Emulsion |
| ST & MI | Stratified Flow with Mixing at the Interface |
| DO/W | Dispersion of Oil in Water and Water |
| DW/O&O/W | Dispersions of Water in Oil and Oil in Water |

| Flow Pattern | Numeric Label |
|---|---|
| W/O | 0 |
| ST | 1 |
| DO/W | 2 |
| DW/O&O/W | 3 |
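The encoding in the table above can be expressed as a small lookup. The following is a minimal Python sketch, not the authors' code: the names `FLOW_PATTERN_TO_NUM`, `ALIASES`, `encode`, and `decode` are hypothetical, and treating the label `DO/W&W` (as written in the results tables) as the same class as `DO/W` is an assumption.

```python
# Integer codes copied from the flow-pattern table; all names here are illustrative.
FLOW_PATTERN_TO_NUM = {
    "W/O": 0,
    "ST": 1,
    "DO/W": 2,
    "DW/O&O/W": 3,
}

# Reverse lookup, used to turn predicted class indices back into pattern names.
NUM_TO_FLOW_PATTERN = {num: name for name, num in FLOW_PATTERN_TO_NUM.items()}

# Assumption: "DO/W&W" in the results tables denotes the class listed as "DO/W".
ALIASES = {"DO/W&W": "DO/W"}


def encode(patterns):
    """Map flow-pattern names to integer class labels."""
    return [FLOW_PATTERN_TO_NUM[ALIASES.get(p, p)] for p in patterns]


def decode(nums):
    """Map integer class labels back to flow-pattern names."""
    return [NUM_TO_FLOW_PATTERN[n] for n in nums]


print(encode(["ST", "DO/W&W", "W/O"]))  # [1, 2, 0]
```

An unknown pattern name raises `KeyError`, which may be preferable to silently assigning a default class when preparing training labels.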

| Well Angle (°) | Flow Rate (m³/day) | Water Cut (%) | Oil Density (g/cm³) | Water Density (g/cm³) | Actual Pattern | Predicted Pattern |
|---|---|---|---|---|---|---|
| 0 | 100 | 80 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 0 | 100 | 90 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 0 | 300 | 20 | 0.8263 | 0.9884 | DO/W&W | DW/O&O/W |
| 0 | 300 | 40 | 0.8263 | 0.9884 | DO/W&W | DW/O&O/W |
| 0 | 600 | 20 | 0.8263 | 0.9884 | W/O | W/O |
| 60 | 100 | 20 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 60 | 100 | 40 | 0.8263 | 0.9884 | DO/W&W | W/O |
| 60 | 300 | 60 | 0.8263 | 0.9884 | W/O | W/O |
| 60 | 300 | 80 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&O/W |
| 60 | 600 | 90 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&O/W |
| 85 | 100 | 20 | 0.8263 | 0.9884 | ST | ST |
| 85 | 100 | 90 | 0.8263 | 0.9884 | ST | ST |
| 85 | 300 | 80 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 85 | 300 | 90 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 85 | 600 | 60 | 0.8263 | 0.9884 | W/O | DO/W&W |
| 90 | 100 | 80 | 0.8263 | 0.9884 | ST | ST |
| 90 | 300 | 40 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&W |
| 90 | 600 | 80 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&O/W |

| Well Angle (°) | Flow Rate (m³/day) | Water Cut (%) | Oil Density (g/cm³) | Water Density (g/cm³) | Actual Pattern | Predicted Pattern |
|---|---|---|---|---|---|---|
| 0 | 100 | 80 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 0 | 100 | 90 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 0 | 300 | 20 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 0 | 300 | 40 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 0 | 600 | 20 | 0.8263 | 0.9884 | W/O | W/O |
| 60 | 100 | 20 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 60 | 100 | 40 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 60 | 300 | 60 | 0.8263 | 0.9884 | W/O | W/O |
| 60 | 300 | 80 | 0.8263 | 0.9884 | DW/O&O/W | W/O |
| 60 | 600 | 90 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&O/W |
| 85 | 100 | 20 | 0.8263 | 0.9884 | ST | ST |
| 85 | 100 | 90 | 0.8263 | 0.9884 | ST | ST |
| 85 | 300 | 80 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 85 | 300 | 90 | 0.8263 | 0.9884 | DO/W&W | DO/W&W |
| 85 | 600 | 60 | 0.8263 | 0.9884 | W/O | W/O |
| 90 | 100 | 80 | 0.8263 | 0.9884 | ST | ST |
| 90 | 300 | 40 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&O/W |
| 90 | 600 | 80 | 0.8263 | 0.9884 | DW/O&O/W | DW/O&O/W |
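The agreement between the Actual Pattern and Predicted Pattern columns in the table directly above can be summarized as an accuracy figure. The sketch below is illustrative only: the `rows` list is transcribed by hand from those two columns, the variable names are hypothetical, and the calculation is a plain exact-match count rather than the authors' own evaluation code.

```python
from collections import Counter

# (actual, predicted) pairs transcribed row by row from the table directly above.
rows = [
    ("DO/W&W", "DO/W&W"),
    ("DO/W&W", "DO/W&W"),
    ("DO/W&W", "DO/W&W"),
    ("DO/W&W", "DO/W&W"),
    ("W/O", "W/O"),
    ("DO/W&W", "DO/W&W"),
    ("DO/W&W", "DO/W&W"),
    ("W/O", "W/O"),
    ("DW/O&O/W", "W/O"),
    ("DW/O&O/W", "DW/O&O/W"),
    ("ST", "ST"),
    ("ST", "ST"),
    ("DO/W&W", "DO/W&W"),
    ("DO/W&W", "DO/W&W"),
    ("W/O", "W/O"),
    ("ST", "ST"),
    ("DW/O&O/W", "DW/O&O/W"),
    ("DW/O&O/W", "DW/O&O/W"),
]

# Overall accuracy: fraction of rows where the predicted label equals the actual one.
correct = sum(actual == predicted for actual, predicted in rows)
accuracy = correct / len(rows)

# Per-class breakdown: how many samples of each actual pattern were recovered.
per_class_total = Counter(actual for actual, _ in rows)
per_class_correct = Counter(actual for actual, predicted in rows if actual == predicted)

print(f"{correct}/{len(rows)} correct, accuracy = {accuracy:.3f}")
for pattern, total in per_class_total.items():
    print(f"{pattern}: {per_class_correct[pattern]}/{total}")
```

With only 18 test rows, a single misclassification shifts the accuracy by about 5.6 percentage points, so the per-class counts are worth reading alongside the overall figure.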

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ouyang, M.; Guo, H.; Yu, L.; Peng, W.; Sun, Y.; Li, A.; Wang, D.; Guo, Y. Innovative Flow Pattern Identification in Oil–Water Two-Phase Flow via Kolmogorov–Arnold Networks: A Comparative Study with MLP. Processes 2025, 13, 2562. https://doi.org/10.3390/pr13082562

Ouyang M, Guo H, Yu L, Peng W, Sun Y, Li A, Wang D, Guo Y. Innovative Flow Pattern Identification in Oil–Water Two-Phase Flow via Kolmogorov–Arnold Networks: A Comparative Study with MLP. Processes. 2025; 13(8):2562. https://doi.org/10.3390/pr13082562

Chicago/Turabian Style: Ouyang, Mingyu, Haimin Guo, Liangliang Yu, Wenfeng Peng, Yongtuo Sun, Ao Li, Dudu Wang, and Yuqing Guo. 2025. "Innovative Flow Pattern Identification in Oil–Water Two-Phase Flow via Kolmogorov–Arnold Networks: A Comparative Study with MLP" Processes 13, no. 8: 2562. https://doi.org/10.3390/pr13082562

APA Style: Ouyang, M., Guo, H., Yu, L., Peng, W., Sun, Y., Li, A., Wang, D., & Guo, Y. (2025). Innovative Flow Pattern Identification in Oil–Water Two-Phase Flow via Kolmogorov–Arnold Networks: A Comparative Study with MLP. Processes, 13(8), 2562. https://doi.org/10.3390/pr13082562