1. Introduction

Since fossil fuels are non-renewable and their consumption leads to significant environmental pollution, there is an urgent need to replace them with renewable and environmentally friendly energy sources, such as wind energy. Wind power generation offers several advantages: it is pollution-free, renewable, and economically viable. In recent years, China’s electricity demand and installed power generation capacity have continued to grow, accelerating the nation’s transition to clean energy. As a result, the scale of renewable energy, particularly wind power, has expanded rapidly. By the end of December 2024, China’s cumulative installed power generation capacity reached approximately 3.35 billion kilowatts, representing a year-on-year increase of 14.6%. Of this total, wind power accounted for around 520 million kilowatts, an 18.0% increase compared to the previous year [1]. As both total and wind-specific installed capacities continue to rise, wind power forecasting plays an increasingly vital role in power system management and economic operations. However, the high volatility and unpredictability of wind speed pose considerable challenges to power system stability and market efficiency. Therefore, accurate wind power forecasting is crucial for grid scheduling, maintaining system reliability, and improving the economic returns for wind farm operators [2]. From the perspective of modeling approaches, wind power forecasting methods are generally classified into three categories: physical models, time series models, and artificial intelligence-based models [3].

The physical modeling approach to wind power forecasting primarily relies on Numerical Weather Prediction (NWP) techniques [4]. This method uses real-time meteorological observations and terrain features as initial boundary conditions to construct a three-dimensional atmospheric model. By solving fluid dynamics and thermodynamic equations, it simulates the spatial and temporal distribution of wind speed and direction at the hub height of wind turbines. These simulated meteorological parameters are then converted into theoretical power output using the specific power curve of a given wind farm. Reference [5] introduced an integrated approach that combines the outputs of three different meteorological models. By analyzing the covariance matrix of prediction deviations and errors from individual models, optimal weights are determined to enhance forecasting accuracy. Reference [6] proposed a short-term wind speed forecasting method that precomputes discrete boundary conditions and utilizes a flow field characteristics database for interpolation, thereby estimating wind speed and direction under specific scenarios. To address the limitations of low-resolution NWP data, Reference [7] analyzed the influence of terrain and wake effects on wind flow using fluid mechanics principles, leading to more accurate predictions. Despite their strengths, physical models face several application limitations in wind power forecasting. They are highly sensitive to the accuracy of input data, particularly meteorological and terrain parameters, and are vulnerable to environmental disturbances. Moreover, these models typically have limited generalizability when applied to different sites. From a computational perspective, solving the underlying fluid mechanics equations requires substantial computing resources and time, making this approach computationally intensive. Additionally, forecasting errors tend to accumulate over longer prediction horizons, which reduces the reliability of physical models in short-term forecasting tasks. However, physical modeling methods exhibit better performance in medium- to long-term forecasting scenarios, particularly in strategic applications such as site selection, wind resource assessment, and long-term energy planning [8, 9].

In recent years, research has increasingly focused on the practical demands of power load forecasting and renewable energy modeling, conducting in-depth analyses of the applicability of traditional modeling approaches in complex scenarios. Reference [10] reported that, in short-term load forecasting experiments during the Easter holiday in Italy, the accuracy of traditional statistical models dropped significantly due to increased volatility during the holiday period, making them inadequate for capturing abrupt fluctuations in demand. Increment (2021) further pointed out that as the forecasting horizon and input data complexity increase, the linear modeling capability of conventional time series methods gradually falls short of the precision requirements in modern energy systems, necessitating the adoption of more expressive machine learning approaches. Reference [11] also emphasized that in hourly load estimation under a 100% renewable energy scenario, the non-stationary and intermittent nature of wind and solar power poses serious challenges to classical time series models, thus highlighting the urgent need for high-resolution nonlinear modeling frameworks to enhance model adaptability and robustness.

Time series forecasting models predict future trends by analyzing the temporal patterns embedded in historical data. Among these methods, the persistence model is the simplest and most straightforward strategy, which assumes that the value observed at the current time step will remain unchanged in the next. More sophisticated models include the Autoregressive (AR) model [12], Moving Average (MA) model [13], Autoregressive Moving Average (ARMA) model [14], and Autoregressive Integrated Moving Average (ARIMA) model [15]. Each of these methods employs distinct mathematical formulations to capture the dynamics of time series data and improve forecasting accuracy. For instance, Reference [16] applied time series analysis techniques to develop a wind speed prediction model for wind farms. Further, studies have shown that employing an improved ARMA model can significantly enhance the accuracy of short- and medium-term wind power forecasts [17]. However, traditional time series models have several intrinsic limitations. First, these methods typically assume that the input data are stationary. When applied to inherently non-stationary wind power data, their predictive accuracy tends to deteriorate significantly. Second, while these models are effective in capturing linear dependencies, they struggle to model the complex nonlinear interactions that often exist in real-world wind power data. Third, such models are generally better suited for static environments and may underperform in scenarios where data exhibit dynamic and evolving patterns. Notably, when applied to time series with complex characteristics, the limitations of traditional single-model approaches in feature extraction and representation become increasingly pronounced, making it difficult to meet the growing demands for high-accuracy wind power forecasting.
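As a minimal illustration of the persistence model described above, the following sketch repeats the last observed value over the forecast horizon. The function names and the sample values are hypothetical and are not taken from the dataset used in this paper.

```python
def persistence_forecast(history, horizon=1):
    """Forecast `horizon` steps ahead by repeating the last observed value."""
    if not history:
        raise ValueError("history must contain at least one observation")
    return [history[-1]] * horizon


def one_step_persistence(series):
    """One-step-ahead persistence forecasts for series[1:].

    The forecast for step t is simply the observation at step t - 1.
    """
    return list(series[:-1])


# Hypothetical wind power readings (MW) at 15 min intervals.
power = [12.0, 15.5, 14.0, 9.5]
print(persistence_forecast(power, horizon=3))  # [9.5, 9.5, 9.5]
print(one_step_persistence(power))             # [12.0, 15.5, 14.0]
```

Because it needs no training, this baseline is a convenient reference point against which more elaborate models can be judged.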

Single-model forecasting approaches often struggle to fully capture the complex dynamic characteristics of wind power data, which exhibit strong nonlinearity and multi-scale fluctuations, thereby limiting their predictive performance. To address this limitation, researchers in recent years have increasingly turned to multi-model fusion strategies, which integrate the structural advantages of different models. This approach has gradually become the mainstream trend in wind power forecasting research. Combined models not only significantly improve prediction accuracy but also enhance model stability and generalization ability. For example, Reference [18] proposed an improved artificial neural network model that incorporates particle swarm optimization (PSO) and genetic algorithm (GA) for joint parameter optimization, effectively improving convergence speed and forecast accuracy. Reference [19] developed a deep neural network that combines wavelet transform with a Transformer architecture, demonstrating excellent multivariate time series modeling capabilities and achieving high-precision prediction of wind speed and power. Moreover, Reference [20] proposed a hybrid forecasting system that integrates LSTM and Transformer frameworks to jointly model the power generation process of wind, solar, and battery storage systems, thereby significantly improving the prediction reliability and practical applicability of renewable energy systems.

With the continuous advancement of artificial intelligence (AI) technologies, a wide range of statistical methods based on intelligent learning have emerged, among which machine learning has seen particularly extensive application. As a key branch of AI, machine learning models can adaptively learn from existing data to optimize decision-making and predict future or unseen scenarios, thereby enhancing both accuracy and generalizability [21]. Commonly used machine learning algorithms in wind power and wind speed forecasting include regression models, Support Vector Machines (SVMs), Random Forests (RFs), Bayesian Additive Regression Trees (BARTs), and K-Nearest Neighbors (KNNs). These algorithms have demonstrated strong capabilities in capturing complex patterns in meteorological and power generation data. In recent years, the rapid development of deep learning has driven significant breakthroughs in artificial intelligence, particularly in domains such as speech recognition and computer vision. This technological wave has also profoundly influenced the field of wind power forecasting. For example, Reference [22] proposed a hybrid method combining Empirical Mode Decomposition (EMD) with Artificial Neural Networks (ANN) for wind power prediction. Reference [23] applied an improved Variational Mode Decomposition (VMD) to preprocess wind power data and employed a Long Short-Term Memory (LSTM) network for forecasting, significantly enhancing prediction accuracy. These studies introduced innovative data processing and modeling strategies aimed at improving both the precision and robustness of wind power forecasting. Furthermore, Reference [24] developed a hybrid LSTM–SARIMA model for ultra-short-term wind power forecasting, integrating LSTM with the Seasonal Autoregressive Integrated Moving Average (SARIMA) model. This approach accounts for both meteorological and seasonal variations, effectively capturing wind power fluctuations and improving upon the limitations of traditional models. Reference [25] presented a comprehensive energy load forecasting framework based on hybrid mode decomposition and deep learning. The framework adopts a multi-stage decomposition strategy: initially, Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (CEEMDAN) is applied to disaggregate the electricity, cooling, and heating load data into intrinsic mode components. For components exhibiting significant residual non-stationarity, VMD is subsequently used for secondary refinement.

Existing research has demonstrated that decomposing historical wind power time series prior to forecasting can significantly improve prediction accuracy. Modal decomposition techniques enable the extraction of intrinsic components from complex signals, helping to uncover latent patterns and improve model performance. These techniques are particularly well-suited to nonlinear and non-stationary signals, offering benefits such as noise reduction, enhanced data preprocessing, and improved predictive capabilities. Moreover, modal decomposition facilitates a deeper understanding of signal characteristics, thereby enhancing the quality of time series analysis. For these reasons, it has been widely adopted in wind power forecasting and related fields. Compared with conventional forecasting methods, decomposition-based hybrid models offer a relatively simple structure, high computational efficiency, and strong adaptability. Such models can be effectively applied to various forecasting scenarios, including point forecasts, multi-step forecasts, and day-ahead predictions. However, a notable limitation of current decomposition-prediction models is the lack of clear guidance on determining the appropriate length of the time series segment to be decomposed. The choice of time window length can significantly influence the quality of decomposition and, consequently, the accuracy of the final forecast. Despite its importance, this issue remains insufficiently addressed in existing studies. Therefore, there is an urgent need for further research to explore and optimize time series segmentation strategies in the context of decomposition-based wind power forecasting.

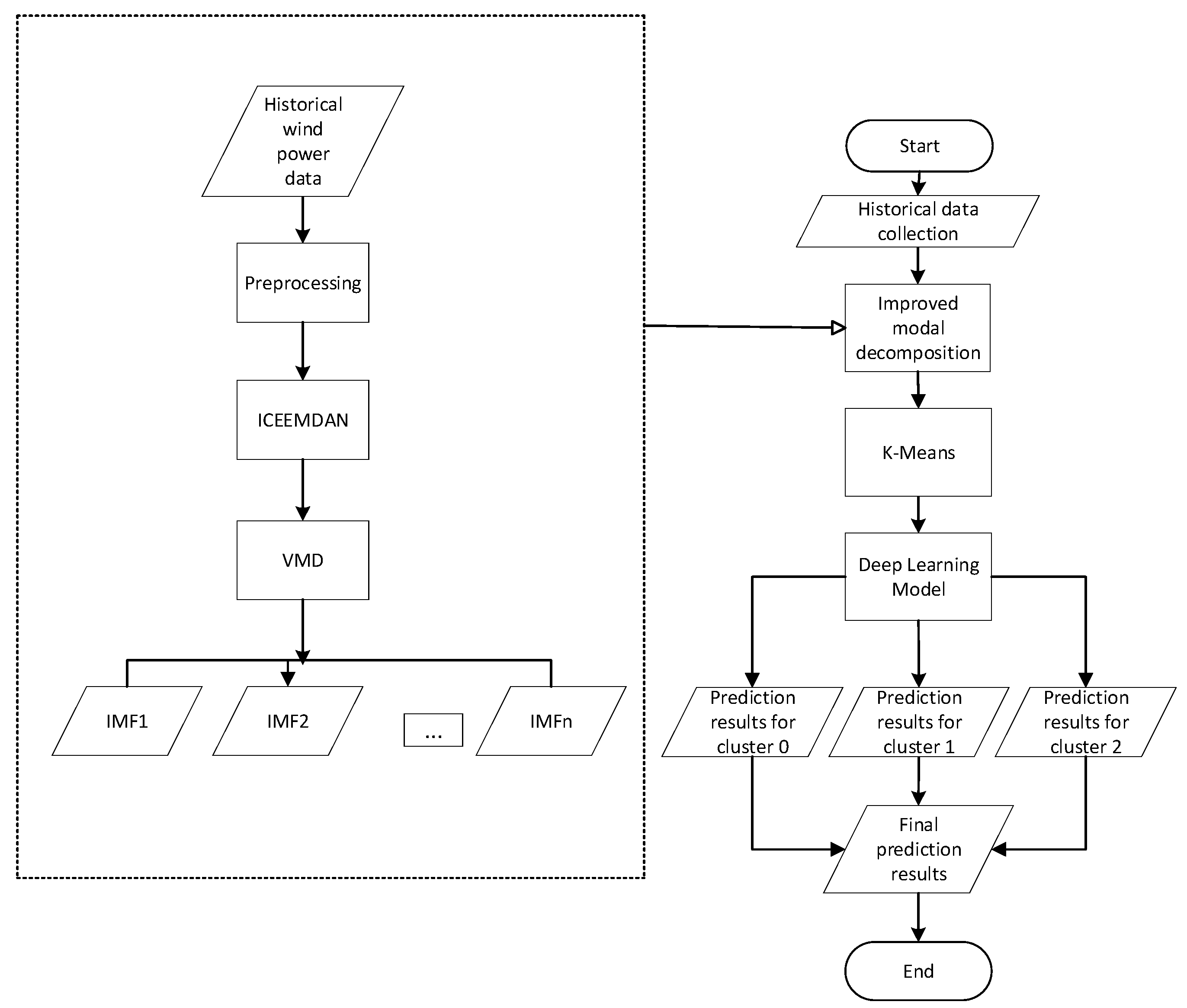

Based on the above background, this paper proposes a short-term wind power forecasting method that integrates improved modal decomposition with deep learning, aiming to address the challenge of determining the appropriate length of time series for decomposition. The main contributions of this study are summarized as follows:

(1) A novel forecasting framework is proposed that combines improved modal decomposition with deep learning to enhance short-term wind power prediction. The method adopts a multi-stage processing strategy for historical wind power data to improve forecasting accuracy.

(2) Time series segmentation is performed prior to modal decomposition using the Pruned Exact Linear Time (PELT) method, enabling precise determination of the optimal time series length. A two-stage modal decomposition process is then applied: the first stage reduces noise and complexity, while the second targets high-frequency oscillatory components to further refine the decomposition and simplify prediction.

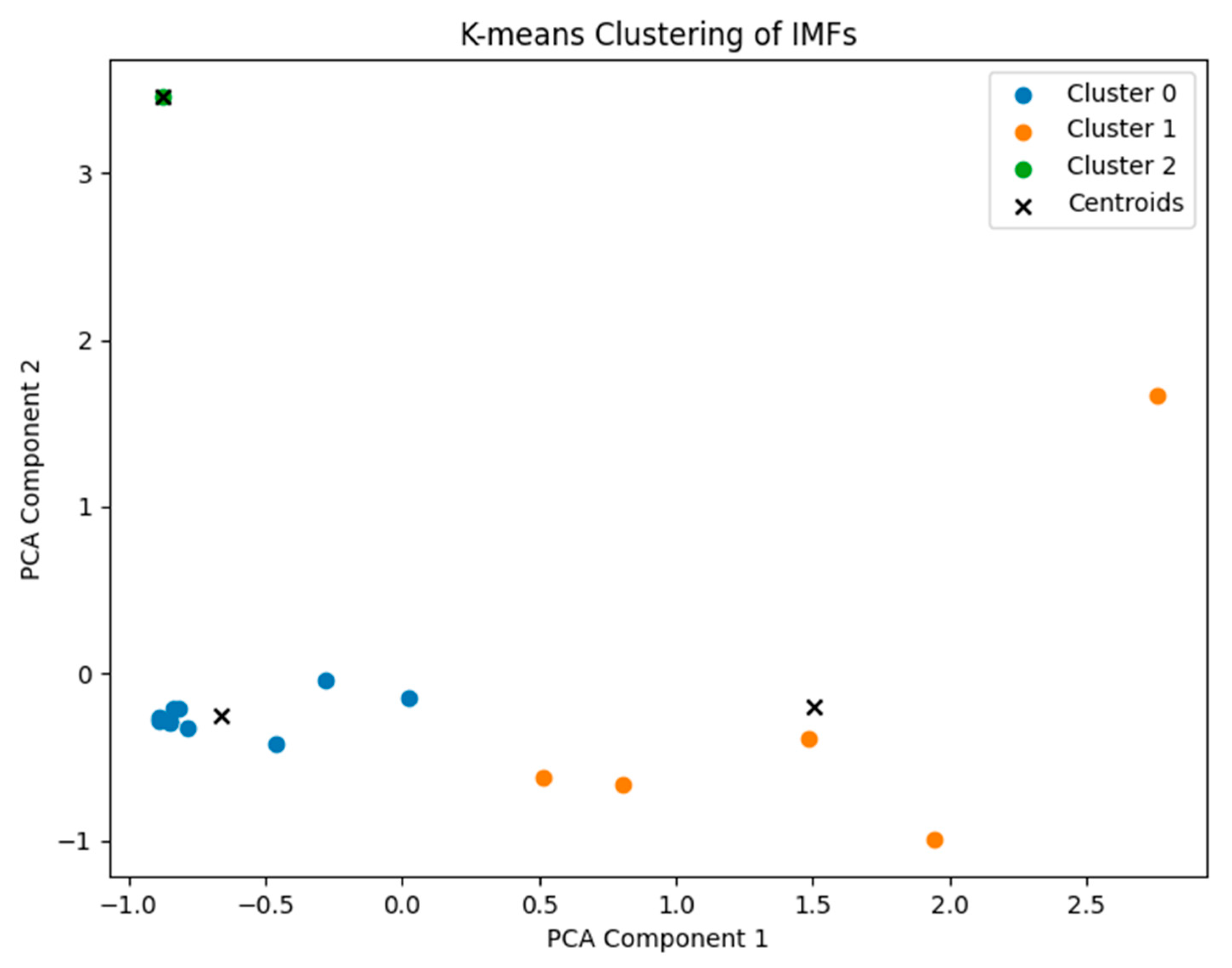

(3) K-means clustering is employed after decomposition to group similar modal components. This not only reduces computational cost but also leverages shared characteristics among components, improving efficiency and prediction performance.

3. Results

This paper uses the historical wind power generation data of a wind farm from 1 January 2014 to 28 February 2015, with a sampling interval of 15 min. First, PELT is used to segment the wind power time series. Then, two segmented time series are selected. Finally, one time series is used to verify the effectiveness of PELT; the other time series is used to verify the effectiveness of two-mode decompositions and K-means.

3.1. Experiment Details

To ensure the reproducibility of the experimental process and the transparency of methodological design, this study provides a detailed account of the key parameter configurations for the ICEEMDAN, VMD, and LSTM modules within the proposed forecasting framework.

In the ICEEMDAN decomposition stage, the noise amplitude coefficient was set to to control the intensity of the added white noise in each iteration. This choice ensures controlled perturbation while enhancing decomposition stability. A total of 100 realizations of independent white noise were introduced for ensemble averaging, which increases the robustness of the extracted IMFs. The sifting process in EMD was limited to a maximum of 50 iterations to balance decomposition accuracy and computational efficiency. The extraction process terminated when the standard deviation of the residual dropped below 0.1% of the total signal energy. Up to 10 intrinsic mode functions (IMFs) were adaptively extracted, based on the energy threshold and complexity of the input signal.

The selection of the IMF number (up to 10) was not fixed arbitrarily but followed an energy convergence criterion, ensuring that only the physically meaningful modes were retained while avoiding over-decomposition. This makes the ICEEMDAN configuration particularly robust for non-stationary signals like wind power.

Subsequently, the residual component from ICEEMDAN was further processed by Variational Mode Decomposition (VMD) to improve the selectivity and sparsity of the frequency bands. The number of modes in VMD was set to based on spectral analysis and entropy evaluation of the ICEEMDAN residual. This number was selected to ensure that the major frequency components are isolated while avoiding excessive decomposition, which could lead to redundant information. The penalty parameter controlling the bandwidth of each mode was set to to ensure a good trade-off between detail preservation and smoothness. This value falls within the range recommended in previous literature for wind power signals. The convergence tolerance was , and the maximum number of iterations was 500. The initial center frequencies were automatically initialized with uniform spacing to cover the major spectral components of the signal. This VMD configuration ensures that modal overlap is minimized and components are compact in the frequency domain.

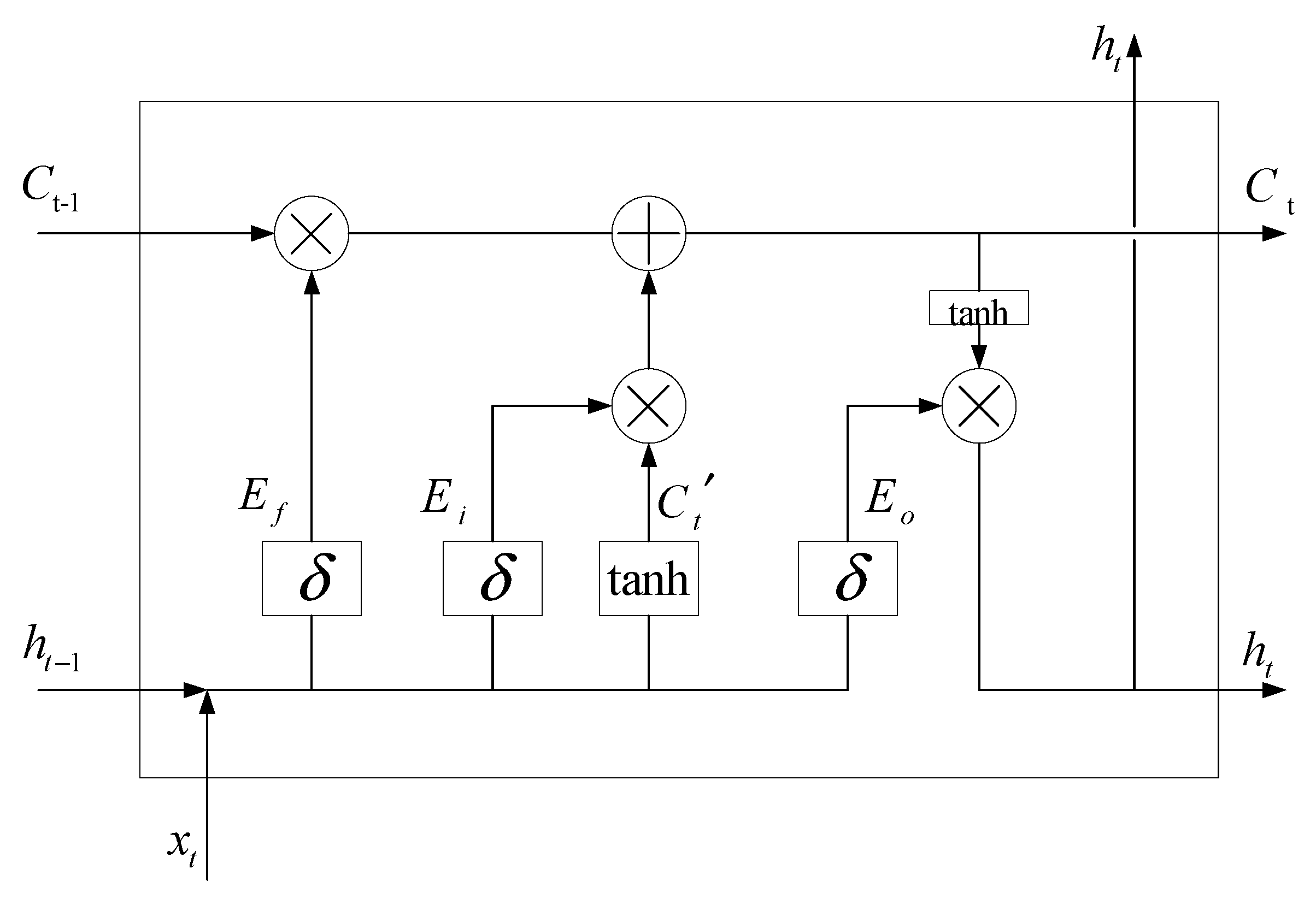

For the final forecasting stage, a two-layer stacked Long Short-Term Memory (LSTM) network was constructed. Each layer contained 64 hidden units, which were sufficient to capture the deep temporal dynamics of the time series with moderate model complexity. The layer depth and hidden unit size were chosen through cross-validation to achieve the best trade-off between accuracy and computational cost. To mitigate overfitting, a dropout strategy was employed between LSTM layers with a dropout rate of 0.2. The Adam optimizer was used to adaptively adjust the learning rate, initialized at 0.001. The model was trained using mean squared error (MSE) as the loss function, with a mini-batch size of 32. Early stopping was also employed to avoid overfitting, with a maximum of 100 training epochs. Training was terminated early if no significant improvement was observed in the validation error over a predefined number of epochs. This training protocol has been proven effective for moderate-scale time series forecasting tasks.
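The training settings listed above can be collected in a single configuration, and the early-stopping rule can be sketched independently of any deep learning library. In the sketch below, the values in `LSTM_CONFIG` come from the text, whereas `patience`, `min_delta`, and the simulated validation losses are assumptions introduced only for illustration, since the text does not state the predefined number of epochs or the improvement threshold.

```python
# Hyperparameters stated in the text.
LSTM_CONFIG = {
    "num_layers": 2,
    "hidden_units": 64,
    "dropout": 0.2,
    "optimizer": "adam",
    "learning_rate": 0.001,
    "loss": "mse",
    "batch_size": 32,
    "max_epochs": 100,
}


class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` epochs.

    `patience` and `min_delta` are hypothetical values, not from the paper.
    """

    def __init__(self, patience=10, min_delta=1e-4):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run_training(val_losses, max_epochs=LSTM_CONFIG["max_epochs"], patience=10):
    """Replay a sequence of validation losses; return (last epoch run, best loss)."""
    stopper = EarlyStopping(patience=patience)
    epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if stopper.step(loss):
            break
    return epoch, stopper.best


# Simulated validation curve: improves for three epochs, then plateaus.
simulated = [1.0, 0.8, 0.7] + [0.7] * 20
print(run_training(simulated, patience=5))
```

In a real training loop, `step` would be called once per epoch with the measured validation MSE, and the weights from the best epoch would typically be restored afterwards.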

3.2. PELT-Mean&Variance Segmentation

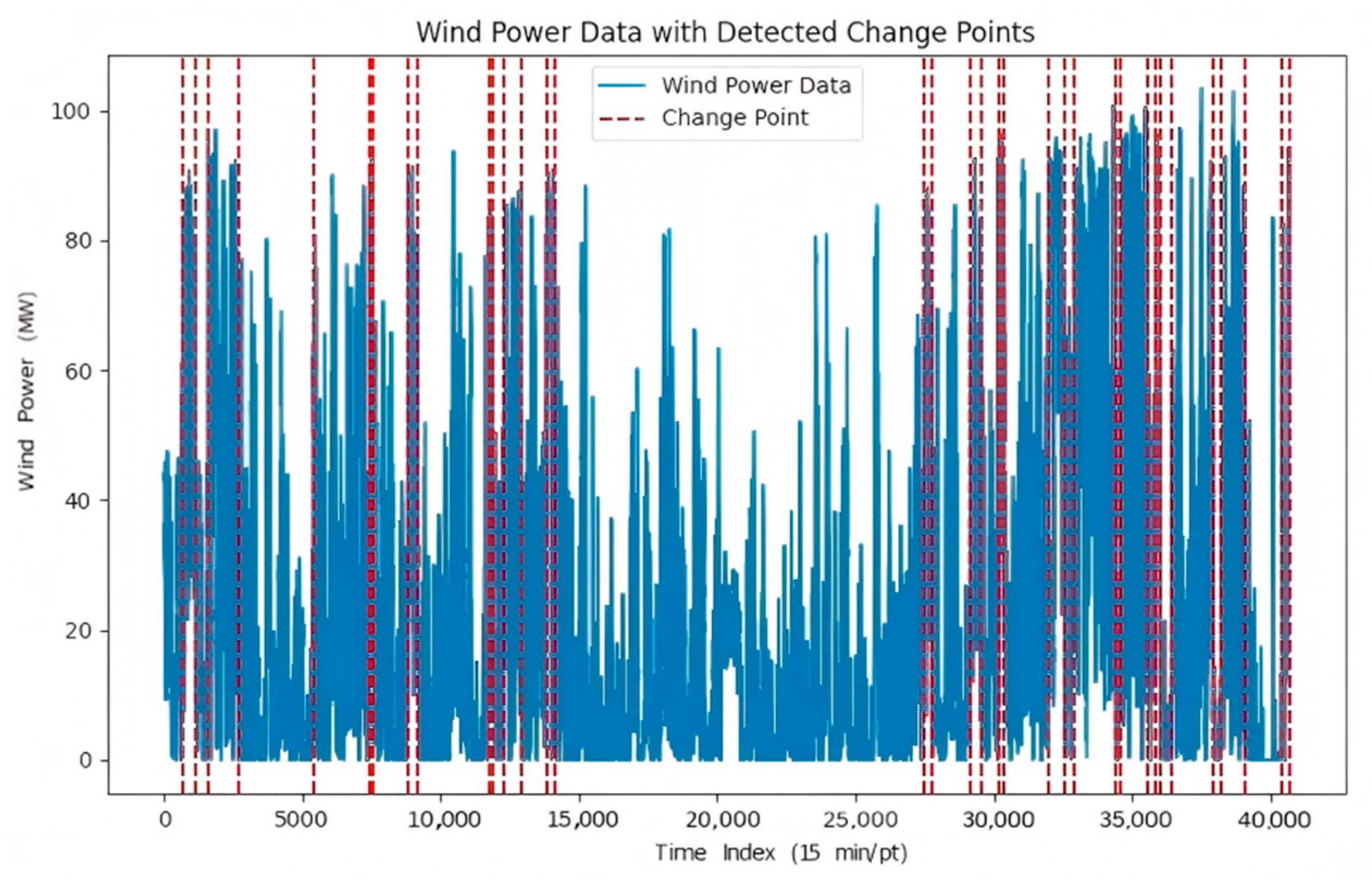

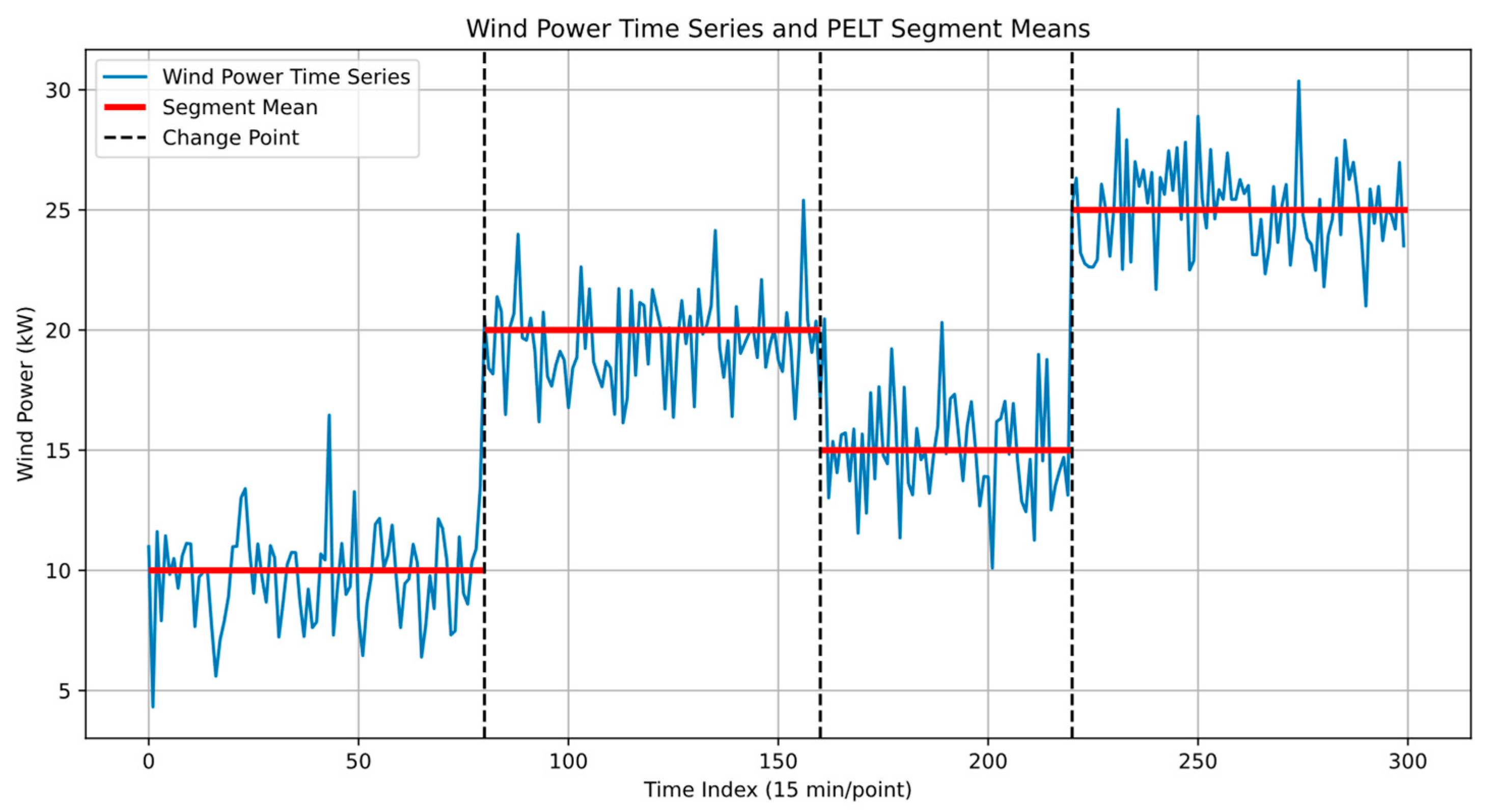

PELT-Mean&Variance is used to segment historical wind power data to improve prediction accuracy. The segmentation is shown in Figure 4.

Figure 5 shows that the simulated data is successfully divided into four segments with distinctly different statistical characteristics by the PELT algorithm. The segment means and change points accurately reflect the structural changes in the time series. The bar chart of segment variances reveals the differences in the amplitude of fluctuations within each segment, illustrating the dynamic variation characteristics of wind power. This illustrative figure effectively demonstrates the capability of the PELT method in capturing statistical changes in wind power data and achieving reasonable segmentation.

The figure displays the wind power time series (blue line) along with the segment means detected by the PELT algorithm (red horizontal lines). Vertical dashed lines indicate the detected change points dividing the time series into statistically distinct segments. The horizontal axis represents the time index with each unit corresponding to a 15 min interval, and the vertical axis indicates wind power in kilowatts (kW). This visualization illustrates how the PELT method effectively captures the structural changes and segments the wind power data accordingly.

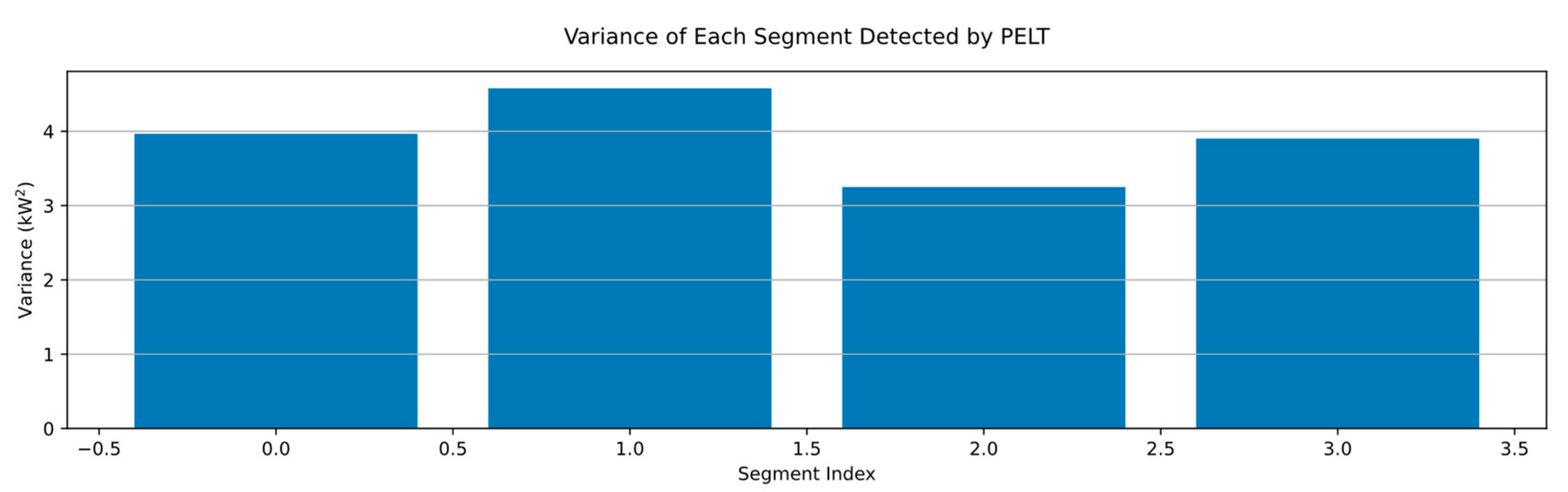

Figure 6 shows the variance within each segment identified by the PELT algorithm. The horizontal axis corresponds to the segment index, while the vertical axis indicates the variance value measured in kilowatts squared (kW²). The differences in variance highlight the dynamic fluctuations of wind power within each segment, demonstrating the capability of PELT to detect not only mean shifts but also changes in data volatility.
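To make the mean-and-variance segmentation concrete, the following is a simplified, self-contained sketch of PELT with a Gaussian cost that responds to changes in both mean and variance. It is not the implementation used in this study: the penalty value, the minimum segment length `min_size`, and the synthetic signal are all assumptions chosen for illustration.

```python
import math
import random


def pelt_mean_var(x, penalty, min_size=10):
    """Return change-point indices (segment end positions, excluding len(x))."""
    n = len(x)
    # Prefix sums give each segment's mean and variance in O(1).
    s1, s2 = [0.0], [0.0]
    for v in x:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def cost(a, b):
        """Twice the Gaussian negative log-likelihood of x[a:b] at its MLE."""
        m = b - a
        mean = (s1[b] - s1[a]) / m
        var = max((s2[b] - s2[a]) / m - mean * mean, 1e-8)
        return m * (math.log(2.0 * math.pi * var) + 1.0)

    best = [math.inf] * (n + 1)   # best[t]: optimal penalized cost of x[:t]
    best[0] = -penalty
    prev = [0] * (n + 1)          # prev[t]: start of the last segment ending at t
    candidates = [0]
    for t in range(min_size, n + 1):
        scores = [best[s] + cost(s, t) for s in candidates]
        j = min(range(len(scores)), key=scores.__getitem__)
        best[t] = scores[j] + penalty
        prev[t] = candidates[j]
        # Pruning step: drop starts that can no longer be optimal.
        candidates = [s for s, sc in zip(candidates, scores) if sc <= best[t]]
        new_start = t + 1 - min_size
        if new_start >= min_size:
            candidates.append(new_start)

    cps, t = [], n
    while t > 0:
        t = prev[t]
        if t > 0:
            cps.append(t)
    return cps[::-1]


def segment_stats(x, cps):
    """Return (start, end, mean, variance) for each segment."""
    bounds = [0] + list(cps) + [len(x)]
    stats = []
    for a, b in zip(bounds[:-1], bounds[1:]):
        seg = x[a:b]
        mean = sum(seg) / len(seg)
        var = sum((v - mean) ** 2 for v in seg) / len(seg)
        stats.append((a, b, mean, var))
    return stats


# Synthetic signal: a mean shift at index 100 and a variance shift at index 200.
rng = random.Random(0)
signal = ([rng.gauss(0.0, 1.0) for _ in range(100)]
          + [rng.gauss(5.0, 1.0) for _ in range(100)]
          + [rng.gauss(5.0, 4.0) for _ in range(100)])
change_points = pelt_mean_var(signal, penalty=3.0 * math.log(len(signal)))
for start, end, mean, var in segment_stats(signal, change_points):
    print(f"[{start:3d}, {end:3d})  mean={mean:6.2f}  var={var:6.2f}")
```

A larger penalty yields fewer change points, so in practice the penalty is a tuning choice that controls how finely the series is segmented.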

Since the wind speed in different time periods has different characteristics, the corresponding power curves also have different characteristics. In order to facilitate subsequent predictions, the change points that are too close to each other are removed. Two sets of data are selected for subsequent comparative experiments; one set is the data from 25 February to 15 March, and the other set is the data from 13 October to 10 November.

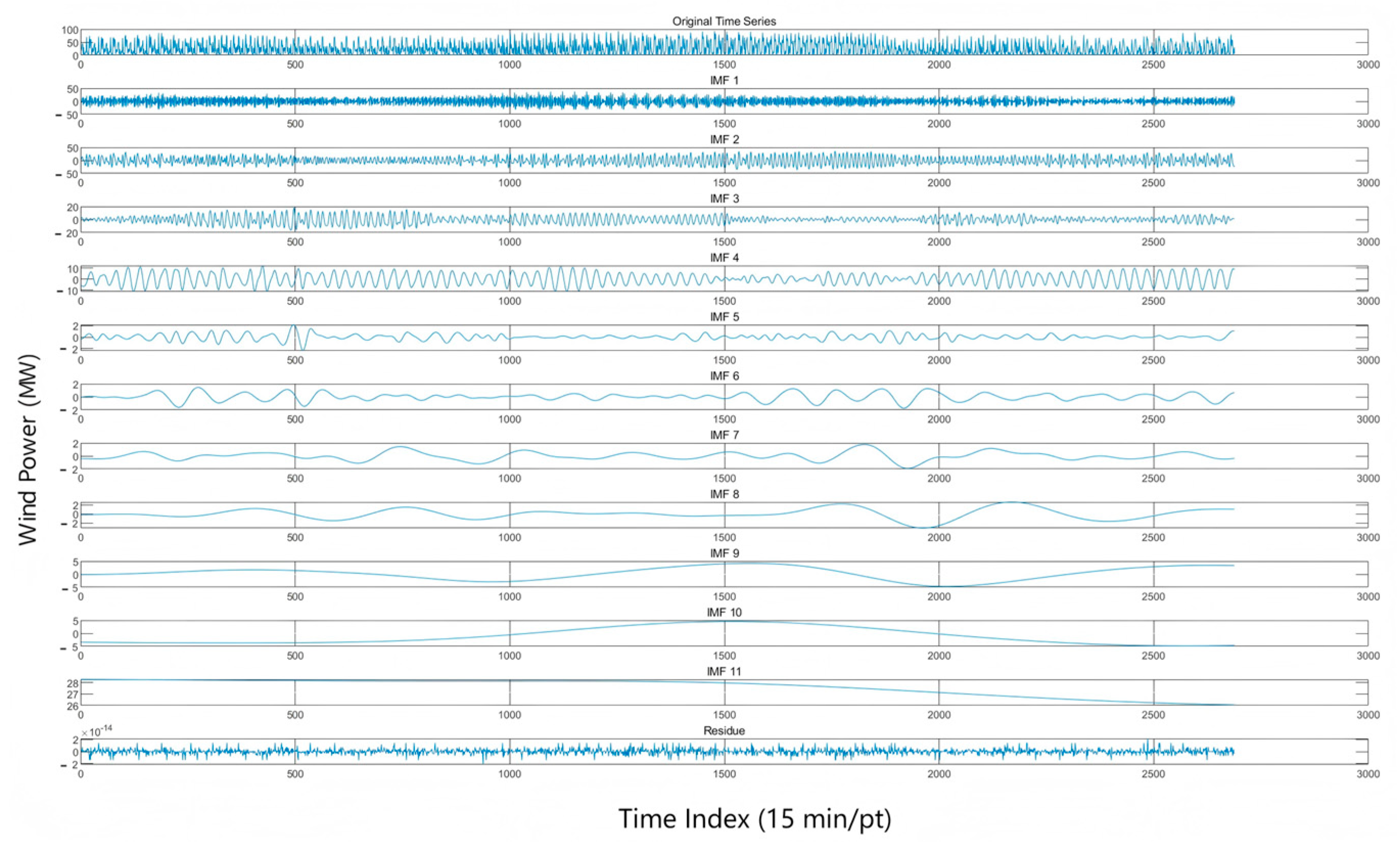

3.3. Two-Mode Decomposition

As mentioned above, only one group of segmented data is selected to verify the effectiveness of two-mode decomposition and K-means. Here, two-mode decomposition is not performed on multiple groups of time series. The results of the two-mode decomposition of data from 13 October to 10 November are shown in Figure 7:

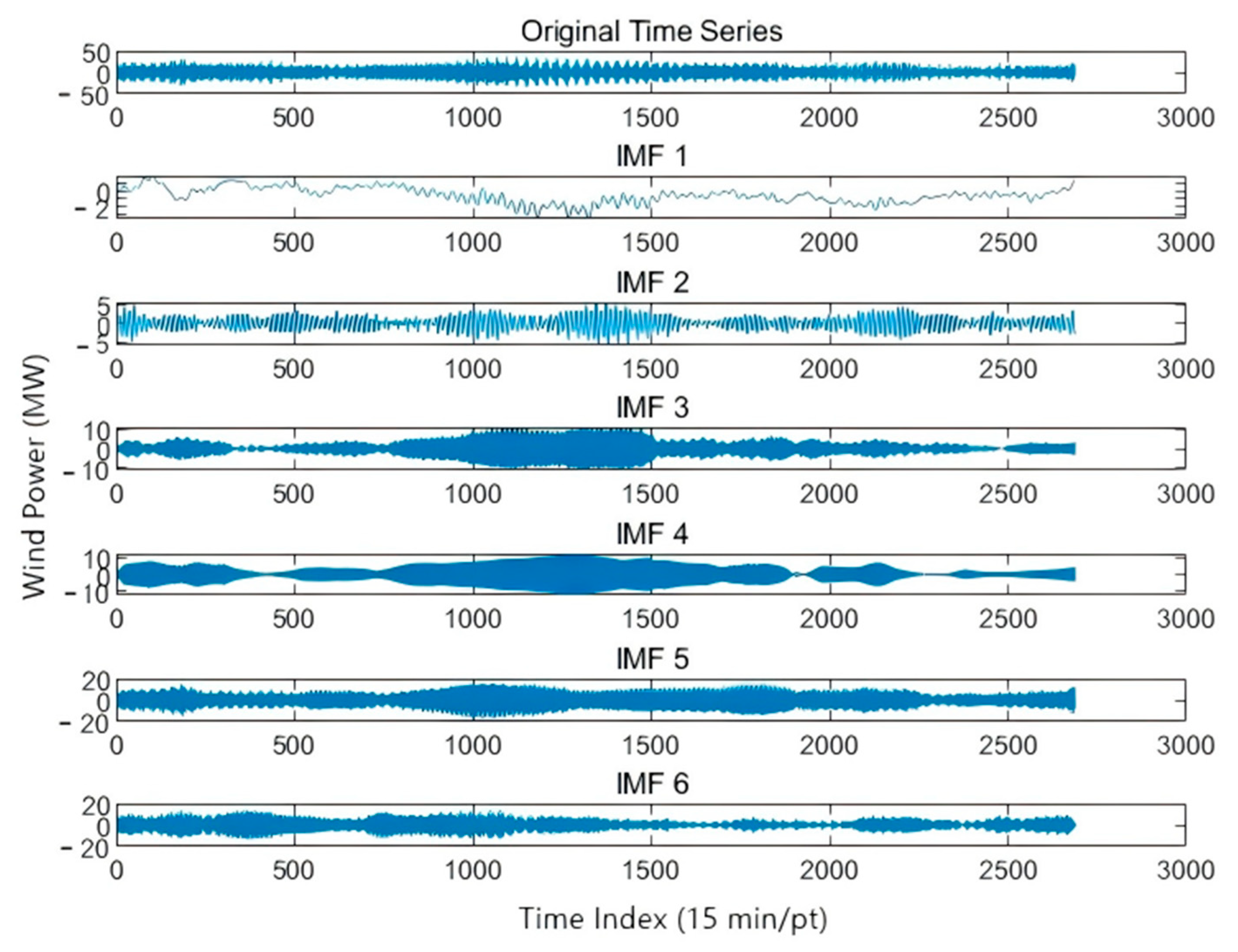

In order to avoid mode aliasing and fully decompose the sequence, the high-frequency components are further decomposed using VMD, which offers stronger frequency resolution. Figure 8 shows the result of the VMD decomposition of IMF1 in the above figure:

3.4. Evaluation Indicators

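This paper uses the root mean square error (RMSE) and the mean absolute error (MAE) to evaluate the prediction accuracy. The expressions are as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|$$

where $y_i$ is the measured wind power, $\hat{y}_i$ is the predicted wind power, and $N$ is the number of forecast points. The smaller the RMSE and MAE values are, the better the fit and the higher the accuracy.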
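For reference, the two indicators can be computed as in the following sketch, which uses the standard definitions of RMSE and MAE. The function names and sample values are illustrative and are not taken from the experiments.

```python
import math


def rmse(actual, predicted):
    """Root mean square error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical measured and predicted wind power (MW).
measured = [10.0, 12.0, 14.0]
forecast = [11.0, 12.0, 12.0]
print(f"RMSE = {rmse(measured, forecast):.3f} MW, MAE = {mae(measured, forecast):.3f} MW")
```

Because RMSE squares the errors before averaging, it penalizes occasional large deviations more heavily than MAE, which is why the two are usually reported together.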

3.5. Comparative Analysis

3.5.1. PELT Validation

To verify the effectiveness of the PELT algorithm in improving wind power forecasting accuracy, this study selects three historical wind power time series of different lengths as input to LSTM models, aiming to predict wind power for 16 March and evaluate the performance differences. The first dataset corresponds to the time interval automatically determined by the PELT algorithm, spanning from 25 February to 15 March (a total of 20 days), which is treated as the optimal segmented sample. The second dataset is a shorter time period, from 6 March to 15 March (10 days), used to evaluate the performance with a limited historical sequence. The third dataset covers a longer period, from 16 February to 15 March (28 days), intended to examine whether excessive sequence length introduces redundancy. All three datasets are used to train LSTM models for wind power prediction on 16 March, and the prediction accuracy is compared using common error metrics, as shown in Table 1.

The results in Table 1 demonstrate that the model based on PELT-segmented data (PELT-LSTM) outperforms the other two configurations in terms of both RMSE and MAE. Specifically, compared with the short-sequence model, the RMSE and MAE are reduced by 2.31 MW and 1.72 MW, respectively; compared with the long-sequence model, they are reduced by 0.87 MW and 0.98 MW, respectively.

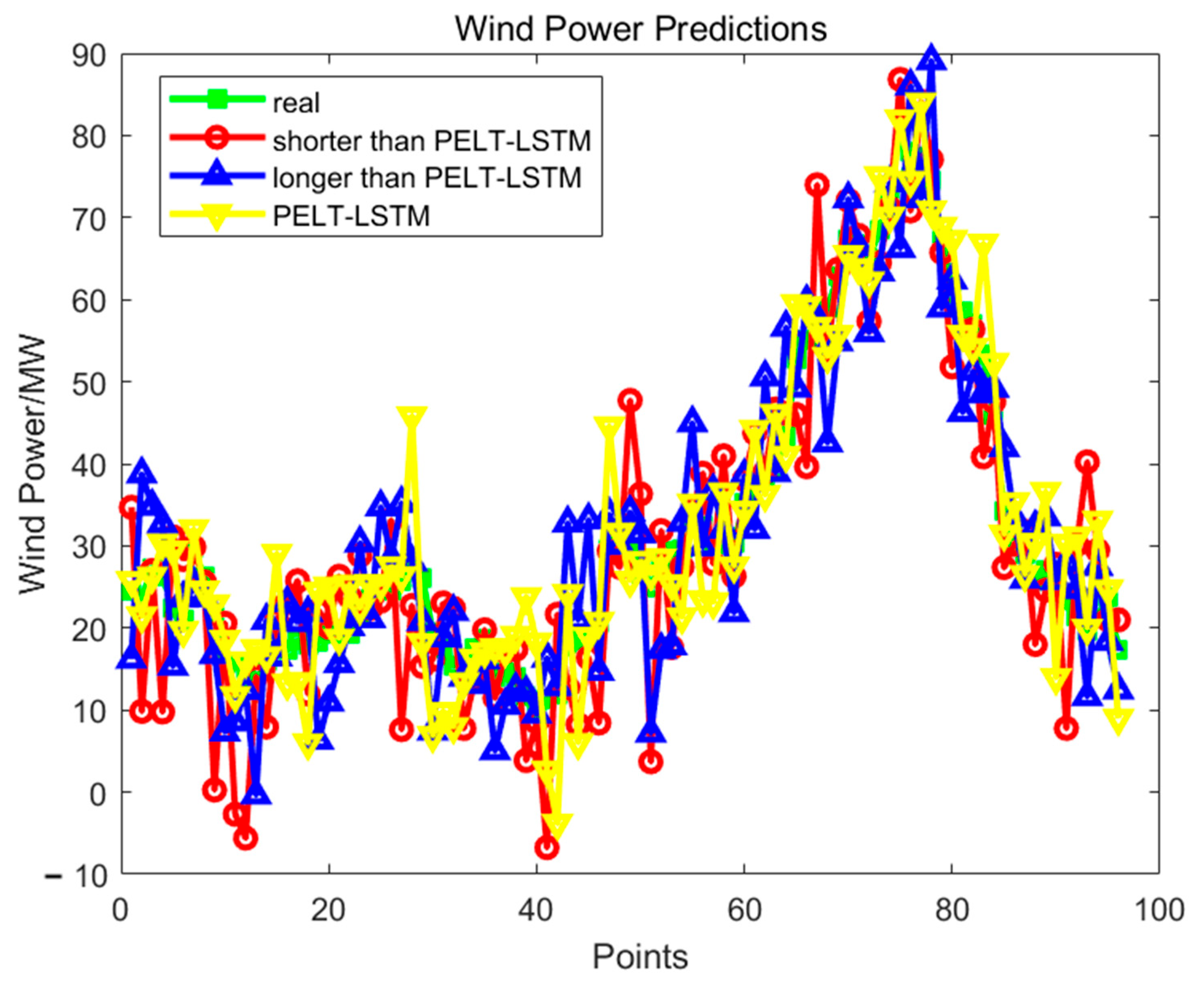

Figure 9 further illustrates the prediction curves of the three models on the target day. In this figure, the x-axis labeled “Points” represents the time steps on 16 March, indicating the temporal resolution of the forecast output. If the data is sampled hourly, there are 24 points; if sampled every 15 min, there are 96 points. The y-axis represents the predicted wind power values at each time step. It can be observed that the prediction curve of the PELT-LSTM model aligns more closely with the actual measurements, especially in regions of rapid fluctuation, showing superior fitting performance.

3.5.2. Verification of the Effectiveness of Two Modal Decompositions and Clustering

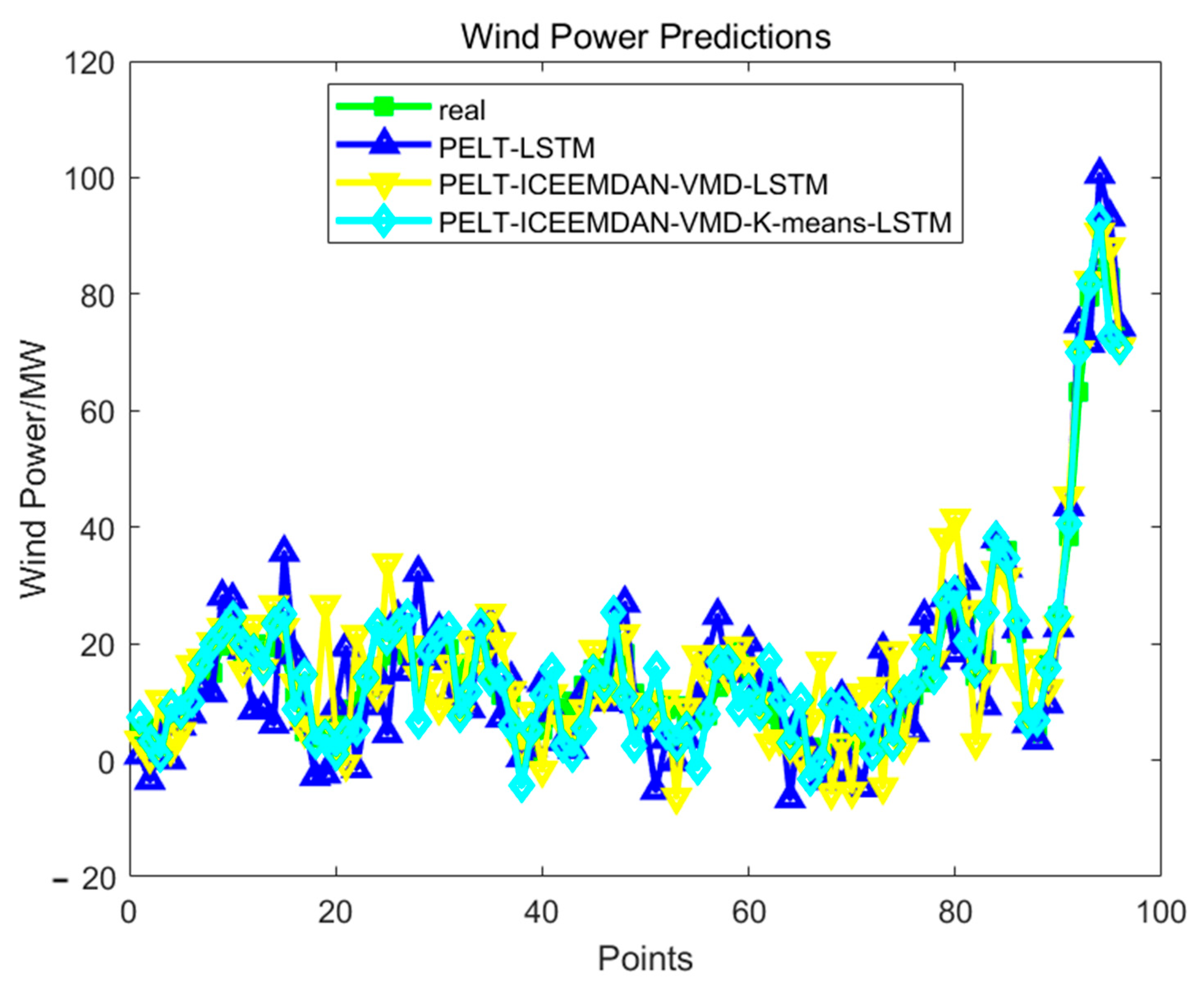

To validate the effectiveness of the proposed two-stage modal decomposition and clustering strategy in improving wind power forecasting accuracy, this study employs the same dataset obtained through PELT segmentation, covering the period from 13 October to 9 November, totaling 28 days of historical wind power data. As shown in Figure 10, three sequence processing strategies are designed based on this dataset: (1) using the raw time series without any modal decomposition (PELT-LSTM); (2) applying two sequential decomposition methods, ICEEMDAN followed by VMD (PELT-ICEEMDAN-VMD-LSTM); and (3) incorporating K-means clustering after the two-stage decomposition to further reduce redundancy (PELT-ICEEMDAN-VMD-K-means-LSTM). All three strategies are integrated with LSTM to build forecasting models for the target day. The forecasting results and associated error metrics are presented in Table 2. Compared with the baseline (raw sequence), the introduction of two-stage decomposition alone reduces RMSE and MAE by approximately 3.19% and 4.14%, respectively. When further combined with K-means clustering, the errors drop significantly, with RMSE and MAE reduced by about 28.45% and 27.38%. These results strongly support the proposed framework’s ability to capture critical patterns in wind power time series and significantly enhance prediction performance.

To verify the effectiveness of K-means clustering and explore the impact of the number of clusters K on model prediction performance, this paper introduced K-means clustering after ICEEMDAN-VMD decomposition and designed comparative experiments with different values of K. After clustering, the resulting cluster components were fed into an LSTM network for training and prediction. The performance metrics are shown in Table 3.

Table 3 shows that when the number of clusters is set to K = 3, the model performs optimally, with the lowest prediction error (RMSE = 4.93 MW, MAE = 4.03 MW). Prediction accuracy decreases when the number of clusters is less than or greater than 3. This suggests that too few clusters lead to inadequate separation of the modal components, making it difficult to capture feature differences, whereas too many clusters may introduce redundant noise, reduce stability, and lead to overfitting.

The experimental results verify the effectiveness of the clustering enhancement mechanism proposed in this paper; that is, through a reasonable number of clustering operations, the model’s ability to express modal information can be significantly improved, thereby reducing the model complexity while maintaining information integrity, and ultimately achieving higher wind power prediction accuracy.
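The clustering step can be illustrated with a small, self-contained sketch. The text does not specify which features describe each modal component or how components within a cluster are combined, so the sketch assumes a single feature (the zero-crossing rate as a proxy for frequency content) and aggregation by summation. The functions `zero_crossing_rate`, `kmeans_1d`, and `aggregate`, and the synthetic sinusoidal components, are all hypothetical.

```python
import math


def zero_crossing_rate(component):
    """Fraction of consecutive sample pairs whose mean-removed values change sign."""
    mean = sum(component) / len(component)
    centered = [v - mean for v in component]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if a * b < 0)
    return crossings / (len(component) - 1)


def kmeans_1d(values, k, iters=100):
    """Plain K-means on scalar features with a deterministic, evenly spaced init."""
    order = sorted(values)
    centers = [order[(2 * i + 1) * len(order) // (2 * k)] for i in range(k)]
    labels = None
    for _ in range(iters):
        new_labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        if new_labels == labels:
            break
        labels = new_labels
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:
                centers[c] = sum(members) / len(members)
    return labels


def aggregate(components, labels, k):
    """Sum the components assigned to each cluster into one series per cluster."""
    n = len(components[0])
    groups = [[0.0] * n for _ in range(k)]
    for comp, lab in zip(components, labels):
        for i, v in enumerate(comp):
            groups[lab][i] += v
    return groups


# Synthetic stand-ins for decomposed modes: two fast, two medium, two slow.
n = 400
cycles = [40, 44, 10, 11, 1, 1.5]
components = [[math.sin(2 * math.pi * c * i / n + 0.3) for i in range(n)] for c in cycles]
features = [zero_crossing_rate(c) for c in components]
labels = kmeans_1d(features, k=3)
lstm_inputs = aggregate(components, labels, k=3)
print(labels, len(lstm_inputs))
```

With K = 3, the six synthetic components collapse into three aggregated series, which mirrors how clustering reduces the number of inputs passed to the forecasting network.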

To evaluate the contribution of each module within the forecasting framework, this study conducts a systematic performance assessment across different model combinations, as shown in Table 4. The experimental results demonstrate that the progressive integration of processing modules significantly enhances the accuracy of wind power forecasting, reflecting a well-structured, hierarchical improvement.

First, the Baseline-LSTM model directly utilizes the raw wind power time series without addressing its inherent nonlinearity and nonstationarity, resulting in relatively high prediction errors (RMSE = 6.12 MW, MAE = 5.42 MW). Upon incorporating the PELT change-point detection method, the resulting PELT-LSTM model achieves slight improvements by segmenting the sequence, which reduces local volatility and enhances robustness, indicating the effectiveness of structural change awareness.

Further, the ICEEMDAN-LSTM model decomposes the original sequence into multiple Intrinsic Mode Functions (IMFs), effectively extracting dynamic features across different scales. This improves the model’s ability to learn from nonstationary signals, with errors reduced to RMSE = 5.38 MW and MAE = 4.58 MW. To further refine high-frequency component representation, the ICEEMDAN-VMD-LSTM model applies VMD to decompose IMF1 obtained from ICEEMDAN, enhancing spectral separation between modes and yielding improved performance (RMSE = 5.01 MW, MAE = 4.29 MW).

Finally, the proposed full model, referred to as Ours (PELT + ICEEMDAN + VMD + K-means + LSTM), introduces a K-means clustering step to aggregate redundant modes before feeding them into the LSTM network. This operation reduces input dimensionality, increases representational efficiency, and improves training stability. Under this configuration, the model achieves the best performance, with RMSE reduced to 4.93 MW and MAE to 4.03 MW—representing reductions of 1.6% and 6.1%, respectively, compared to the ICEEMDAN-VMD-LSTM model without clustering. This confirms the significant role of clustering in enhancing model generalization and compressing redundant inputs.

Furthermore, Figure 9 presents the prediction curves of the three methods for the target day. In the figure, the x-axis labeled “Points” represents the time steps across the entire day of 10 November, reflecting the temporal resolution of the model’s forecast output. If the wind power data are sampled hourly, the x-axis includes 24 points; if sampled every 15 min, it includes 96 points. The y-axis shows the predicted wind power values at each time step. It is evident that the model with two-stage modal decomposition (PELT-ICEEMDAN-VMD-LSTM) achieves a modest improvement over the baseline PELT-LSTM model, reducing RMSE by 0.23 MW and MAE by 0.22 MW. On this basis, further applying K-means clustering to the decomposed components yields a more substantial enhancement in prediction accuracy, with RMSE and MAE decreasing by 1.74 MW and 1.29 MW, respectively. This result indicates that the clustering step helps the model better exploit structural differences among modal components.

To comprehensively evaluate the impact of different time series lengths on wind power forecasting performance, this study conducted two sets of experiments from the perspectives of input window length selection and signal preprocessing strategy. In Section 3.5.1 (PELT validation), three representative historical wind power sequences were selected as model inputs: a short sequence from 6 March to 15 March (10 days), a PELT-segmented sequence from 25 February to 15 March (20 days), and a long sequence from 16 February to 15 March (28 days). All three datasets were used to train LSTM models to forecast wind power for 16 March. The results demonstrated that the model based on PELT-segmented data (PELT-LSTM) achieved the best accuracy in terms of both RMSE and MAE.

This finding indicates that a sequence that is too short may fail to capture sufficient temporal patterns, leading to underfitting, while an excessively long sequence may introduce redundant or noisy information that degrades model performance. In contrast, using the PELT algorithm to identify structurally stable segments helps determine a more appropriate time window for training, thereby enhancing the representativeness of the input data and improving forecasting accuracy. This demonstrates that choosing a proper historical sequence length is critical for model performance, and the PELT algorithm provides an adaptive, data-driven approach to optimal window selection.

In Section 3.5.2 (modal decomposition and clustering validation), the input sequence length was fixed at 28 days (13 October to 9 November) for all models in order to isolate the impact of different preprocessing strategies. Three approaches were compared: no decomposition, two-stage modal decomposition (ICEEMDAN followed by VMD), and two-stage decomposition with K-means clustering. The results showed that while modal decomposition alone led to moderate improvements in forecasting accuracy, incorporating K-means clustering further enhanced performance significantly. This confirms the effectiveness of combining modal decomposition and clustering in capturing structural characteristics and reducing model complexity.

3.5.3. Comparative Study of the Proposed Model and State-of-the-Art Methods

As shown in Table 5, the proposed PELT-ICEEMDAN-VMD-LSTM model demonstrates clear superiority in short-term wind power forecasting. Specifically, it achieves the lowest RMSE of 4.93 MW, representing a 44.8% reduction compared to ARIMA (8.93 MW), and a 27.2% improvement over GRU (6.77 MW). In terms of MAE, our model achieves 4.03 MW, which is significantly lower than all baseline models.
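The relative improvements quoted above follow from the usual percentage-reduction formula, (baseline − proposed) / baseline × 100. The short check below reproduces them from the RMSE values given in the text; the helper name `pct_reduction` is introduced here for illustration only.

```python
def pct_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


proposed_rmse = 4.93  # MW, proposed model
for name, baseline_rmse in [("ARIMA", 8.93), ("GRU", 6.77)]:
    print(f"{name}: {pct_reduction(baseline_rmse, proposed_rmse):.1f}% lower RMSE")
# ARIMA: 44.8% lower RMSE
# GRU: 27.2% lower RMSE
```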

While deep learning models, such as CNN-LSTM and BiLSTM, offer moderate improvements by learning temporal dependencies, they struggle to fully capture complex non-stationary patterns inherent in wind power data. Models incorporating decomposition techniques (e.g., EMD-LSTM, VMD-LSTM, and ICEEMDAN-LSTM) enhance signal representation, yet they often lack adaptive segmentation or synergistic mode coordination, limiting their predictive performance.

In contrast, the proposed method effectively integrates PELT-based segmentation to identify structural changes in the time series, applies dual-mode decomposition—ICEEMDAN for adaptive denoising and VMD for sparse frequency localization—and, finally, employs LSTM for deep temporal modeling. This multi-stage collaborative framework results in the best overall performance in both RMSE and MAE metrics, confirming its robustness and practical superiority in wind power forecasting tasks.

As shown in Table 6, our model, PELT-ICEEMDAN-VMD-LSTM, not only achieves the lowest mean RMSE (4.93 MW) but also exhibits the smallest standard deviation (0.10) and the narrowest 95% confidence interval ([4.83, 5.04]) among all compared models. This clearly indicates that our model provides superior prediction accuracy and stability across multiple independent forecasting trials.
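The text does not state how many trials were run or how the confidence intervals were constructed. As one common option, the sketch below computes a mean, a sample standard deviation, and a Student-t 95% interval from a handful of trial RMSE values. The trial values are invented for illustration and are not the experimental results; the lookup table holds standard two-sided 95% t critical values for small degrees of freedom.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t, indexed by degrees of freedom.
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
             6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def mean_std_ci95(values):
    """Return (mean, sample std, (lower, upper)) using a t-based 95% interval."""
    n = len(values)
    if n - 1 not in T_CRIT_95:
        raise ValueError("this sketch supports 2 to 10 trials")
    mean = statistics.mean(values)
    std = statistics.stdev(values)
    half_width = T_CRIT_95[n - 1] * std / math.sqrt(n)
    return mean, std, (mean - half_width, mean + half_width)


# Hypothetical RMSE values (MW) from six independent trials.
trial_rmse = [4.80, 4.88, 4.93, 4.95, 5.00, 5.05]
mean, std, (low, high) = mean_std_ci95(trial_rmse)
print(f"mean={mean:.2f}  std={std:.2f}  95% CI=[{low:.2f}, {high:.2f}]")
```

A narrower interval at a comparable mean indicates that the model's error is less sensitive to the randomness between trials, which is the sense in which stability is compared in Table 6.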

In contrast, conventional methods, such as ARIMA and SVR, yield higher RMSE values (8.91 and 7.62, respectively), with wider confidence intervals and greater variability, suggesting limited reliability. While advanced deep learning models like GRU and BiLSTM show moderate improvements in mean RMSE, their prediction stability remains suboptimal due to relatively wider confidence intervals.

Even decomposition-based models, such as ICEEMDAN-LSTM, despite achieving a lower RMSE (5.36), still exhibit a higher standard deviation (0.11) and a broader confidence interval ([5.25, 5.47]) than our model. These findings underscore the effectiveness of combining adaptive segmentation (PELT) with dual-mode decomposition (ICEEMDAN and VMD), which enables our model to maintain both high forecasting accuracy and low variability, thereby ensuring better generalization performance in wind power prediction tasks.