1. Introduction

Substations are pivotal components of power grids, functioning as essential nodes for voltage transformation, current distribution, and power management [1]. They play a critical role in ensuring the stability and efficiency of electricity transmission and distribution [2]. In modern power systems, the reliable operation of substations is indispensable in preventing power outages, equipment failures, and disruptions to critical infrastructure [3]. However, the increasing complexity of substation configurations, the diversity of the installed equipment, and the growing demand for uninterrupted power supply have introduced new challenges in their operation and maintenance [4,5]. Substation equipment is prone to various types of defects, such as insulator cracks, corrosion, loose connections, overheating, and oil leaks [6]. These anomalies, if not detected and addressed promptly, can escalate into major failures, causing significant economic losses and threatening grid stability. Traditional methods for inspecting and maintaining substation equipment primarily rely on manual inspections conducted by skilled personnel [7]. While manual inspections are still widely used, they suffer from several limitations, including high labor costs, time inefficiency, and subjective evaluations [8]. Moreover, the increasing scale and automation of power systems have made manual inspections insufficient to meet the growing demand for efficient and accurate equipment monitoring [9]. Thus, intelligent substation inspections using computer vision and deep learning are gaining traction.

The advent of computer vision and deep learning has brought about new possibilities for automating substation equipment inspection [10,11]. Numerous studies have explored deep learning techniques for fault detection, achieving remarkable progress in image-based anomaly detection [12]. For example, convolutional neural networks (CNNs) have been employed for insulator crack detection [13], and advanced object detection frameworks such as Faster R-CNN [14], YOLO [15], and SSD [16] have been adapted for defect localization. More recently, transformer-based detection models, such as DETR and Deformable DETR [17], have demonstrated superior performance on generic object detection benchmarks. However, despite these advancements, several challenges remain unresolved in the domain of substation defect detection.

Substation defect datasets are often highly imbalanced, with very few samples of rare but critical fault types. Furthermore, due to data confidentiality and security concerns, acquiring large, annotated datasets from substations can be difficult. This scarcity of labeled data limits the applicability of data-hungry deep learning models, resulting in suboptimal performance.

The definition of “defect” or “abnormality” is often context dependent and varies between substations. Factors such as the equipment age, model, operational environment, and maintenance history can influence what is considered a defect. For instance, a crack on a newer insulator may be deemed critical, while a similar crack on older equipment might be considered acceptable due to different tolerance levels. This variability poses challenges for designing generalizable defect detection models. While various deep learning models have been proposed for defect detection, effectively enhancing the subtle and diverse anomaly features in complex substation environments remains a significant hurdle. Challenges such as the low visual distinctiveness of certain defects (e.g., incipient cracks, slight corrosion), the high variability in defect appearance, and the influence of operational context on defect definition all complicate the task of designing robust feature enhancement mechanisms. Existing generic feature enhancement techniques, including standard attention modules, often struggle to adapt to these specificities, sometimes amplifying irrelevant dominant features or failing to capture context-dependent anomaly cues. Integrating multi-modal information, such as text, images, audio, video, structured data, etc., into deep learning frameworks remains an underexplored area.

To address this, we propose the Language-Guided Enhanced Anomaly Power Equipment Detection Network (LEAD-Net), a novel framework built upon the DETR (Detection Transformer) architecture. Specifically, unlike most existing methods, which rely solely on image annotations during training, LEAD-Net leverages textual descriptions of equipment conditions as auxiliary guidance during its training phase. This approach helps the network to learn intricate target feature information, thereby enhancing its defect detection performance. A key component of our framework is the Language-Guided Anomaly Feature Enhancement Module (LAFEM), which dynamically adjusts channel attention in the image encoder based on these text-derived features. This overcomes the limitations of standard channel attention, which can be biased towards dominant, non-anomalous features, allowing LAFEM to focus on the image channels most relevant to text-described anomalies, thus improving detection accuracy. Crucially, during the inference (testing) phase, LEAD-Net operates solely on image data and does not require any additional text input. This distinguishes LEAD-Net from other multi-modal models by requiring fewer inputs during testing, increasing its practical applicability and real-world deployment value in substation environments.

To operationalize this semantic-guided feature enhancement, LEAD-Net uses textual descriptions only to guide the learning of enhanced anomaly features during training and remains fully image-driven during inference. This keeps the framework feasible for real-world deployment in substation environments, where contemporaneous text input may not be available.

The remainder of this study is organized as follows:

Section 2 provides a review of the research background for anomaly defect detection in power systems.

Section 3 describes the architecture of LEAD-Net and details the design of LAFEM.

Section 4 presents the experimental setup, results, and analysis.

Section 5 shows the ablation experiment and analysis of the innovation module.

Section 6 concludes the study with a discussion of future research directions.

3. Proposed Method

3.1. Framework

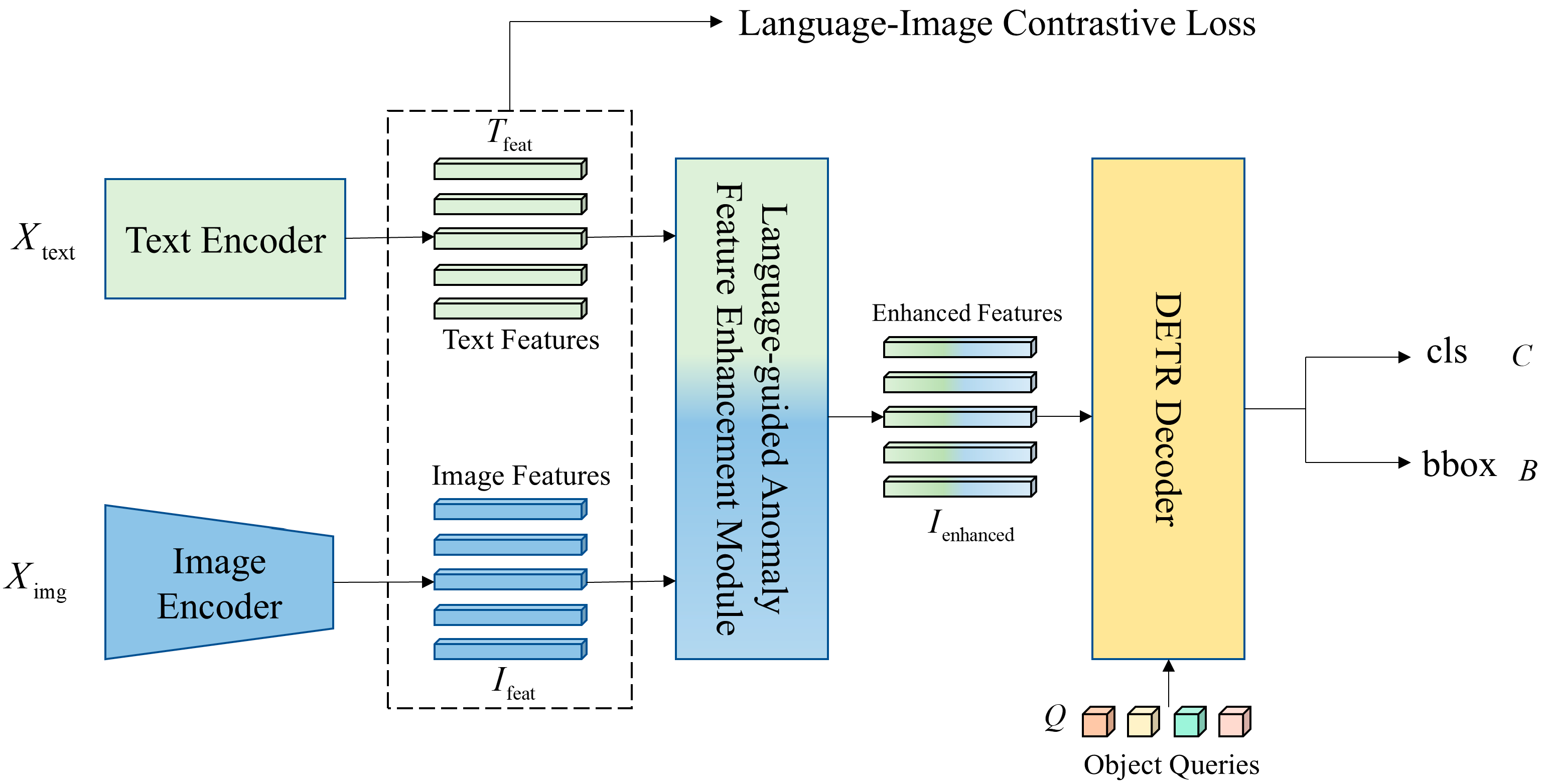

The proposed Language-Guided Enhanced Anomaly Power Equipment Detection Network (LEAD-Net) is illustrated in Figure 1. LEAD-Net builds upon the DETR framework and extends its capabilities by introducing a novel text-guided learning mechanism to address key challenges in detecting anomalies in substation equipment. During the training phase, LEAD-Net incorporates textual information, such as maintenance records and inspection reports, as auxiliary guidance to enhance the network’s ability to learn subtle anomaly features. This integration of multi-modal information enables LEAD-Net to overcome the limitations of vision-only approaches, such as difficulties in detecting rare or subtle anomalies. Importantly, during the inference phase, LEAD-Net operates solely on image data, ensuring its practicality in real-world applications where textual descriptions may not always be available.

LEAD-Net consists of three main components: an image encoder, a text encoder, and the LAFEM module. The image encoder employs a CNN-Transformer architecture identical to the standard DETR framework, enabling the network to extract both local and global contextual information from substation equipment images. This ensures robust feature representation of both coarse and fine-grained visual patterns. Simultaneously, textual descriptions are processed by a BERT-based text encoder, which generates semantic feature representations. These textual features provide critical contextual information about equipment conditions and defects, which are leveraged during training to guide anomaly detection. LAFEM serves as the core innovation of LEAD-Net, refining image feature representations by dynamically incorporating text-derived guidance. This module ensures that anomaly-related features are effectively emphasized, addressing the limitations of traditional attention mechanisms that often fail to prioritize subtle anomalies in complex visual data.

In the training phase, LEAD-Net extracts features from images using an image encoder. This process can be described as follows:

$$F_I = E_I(I)$$

where $F_I$ represents the extracted image features, and $I$ is the input image. Here, $E_I$ serves as the image feature extractor. In LEAD-Net, the image encoder employs the same CNN-Transformer architecture as DETR, allowing it to capture both local and global contextual information.

Additionally, the textual input is processed by a text feature extractor to generate corresponding textual features. This process is defined as:

$$F_T = E_T(T)$$

where $F_T$ denotes the extracted textual features, and $T$ is the input text. The text encoder $E_T$ in LEAD-Net adopts BERT as the feature extractor, which is well-suited for capturing semantic representations from textual inputs.

Channel attention enhances feature representation by emphasizing informative channels. However, in power equipment anomaly detection, unguided attention can prioritize dominant components over subtle anomalies, hindering accuracy. We address this with the Language-Guided Anomaly Feature Enhancement Module (LAFEM), detailed in Section 3.2, which uses text to guide channel attention toward anomaly-related features. Traditional attention mechanisms, such as SE-Net, often prioritize dominant visual features in an image, inadvertently suppressing subtle anomaly patterns that are crucial for effective defect detection. This is particularly problematic in substation environments, where many anomalies, such as small cracks, discoloration, or surface corrosion, are visually inconspicuous and can be easily overshadowed by larger, non-anomalous regions. LAFEM overcomes this limitation by dynamically guiding attention based on textual features. Specifically, LAFEM projects textual features into the same feature space as the image features and computes cross-modal attention to emphasize the image channels most relevant to the described anomalies.

The enhanced features $F_E$ generated by LAFEM are passed through the DETR decoder, which predicts the categories, positions, and dimensions of the detected anomalies. This process is expressed as:

$$(\hat{C}, \hat{B}) = \mathrm{Decoder}(F_E)$$

where $\hat{C}$ represents the predicted anomaly categories (e.g., cracks, corrosion), and $\hat{B}$ denotes the bounding box parameters, including the center coordinates and dimensions. The DETR decoder, following the standard DETR structure, is trained with a set-based (bipartite) matching loss and therefore predicts the final anomaly detections directly, without requiring post-processing steps such as non-maximum suppression (NMS). This end-to-end detection process keeps the pipeline simple and efficient.
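The set-based matching used by DETR-style decoders can be illustrated with a minimal sketch. It assumes a simplified cost (negative class probability plus a weighted L1 box distance; the standard DETR cost also includes a generalized IoU term, omitted here) and uses a brute-force search over permutations instead of the Hungarian algorithm, so it is only practical for a handful of boxes. The function name `match_predictions`, the weight `box_weight`, and the toy numbers are hypothetical.

```python
from itertools import permutations

def l1(box_a, box_b):
    """L1 distance between two (cx, cy, w, h) boxes."""
    return sum(abs(a - b) for a, b in zip(box_a, box_b))

def match_predictions(pred_probs, pred_boxes, gt_labels, gt_boxes, box_weight=5.0):
    """Return the lowest-cost one-to-one assignment of ground-truth objects to
    predictions as a list of (gt_index, pred_index) pairs.

    Assumed cost: -p(gt class) + box_weight * L1(box). Brute force, so only
    suitable for tiny examples.
    """
    n_pred, n_gt = len(pred_boxes), len(gt_boxes)
    best_cost, best_assign = float("inf"), None
    # Try every ordered choice of n_gt distinct predictions.
    for perm in permutations(range(n_pred), n_gt):
        cost = 0.0
        for gt_idx, pred_idx in enumerate(perm):
            cost += -pred_probs[pred_idx][gt_labels[gt_idx]]
            cost += box_weight * l1(pred_boxes[pred_idx], gt_boxes[gt_idx])
        if cost < best_cost:
            best_cost, best_assign = cost, list(enumerate(perm))
    return best_assign

# Toy example: three queries, two ground-truth defects (class 0 and class 1).
pred_probs = [[0.1, 0.8], [0.7, 0.2], [0.3, 0.3]]
pred_boxes = [(0.70, 0.70, 0.20, 0.20), (0.30, 0.30, 0.10, 0.10), (0.50, 0.50, 0.40, 0.40)]
gt_labels = [0, 1]
gt_boxes = [(0.30, 0.32, 0.10, 0.10), (0.70, 0.68, 0.20, 0.22)]
matches = match_predictions(pred_probs, pred_boxes, gt_labels, gt_boxes)
print(matches)
```

In this toy case the third query is left unmatched; in a DETR-style loss such queries are supervised toward a "no object" class, which is why no NMS step is needed afterwards.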

LEAD-Net operates differently during training and inference, ensuring both robustness and practicality. In the training phase, both image and textual inputs are utilized. The image encoder extracts visual features, while the text encoder generates semantic features from the accompanying textual descriptions. LAFEM combines these features to dynamically guide the network’s attention toward anomaly-related patterns. During the inference phase, the textual input is removed, and the network relies solely on image data. This decoupling of textual guidance during training and inference makes LEAD-Net practical for deployment in real-world substation environments, where textual descriptions may not always be available.

In summary, LEAD-Net introduces a novel approach to anomaly detection by integrating textual guidance into the DETR framework. The multi-modal learning process enables the network to effectively capture subtle and rare anomaly features that vision-only methods often miss. LAFEM plays a central role in this process by dynamically refining channel attention based on text-derived information, ensuring that anomaly-related features are emphasized while suppressing dominant but less relevant patterns. With its ability to operate solely on image data during inference, LEAD-Net strikes a balance between enhanced detection abilities and practical applicability, making it a promising solution for intelligent substation equipment inspection.

3.2. LAFEM Module

The LAFEM module is the core innovation in LEAD-Net, designed to overcome the inherent limitations of traditional channel attention mechanisms in visual anomaly detection. Standard attention mechanisms are often biased toward dominant, non-anomalous features, especially in complex substation equipment images, where anomalies such as small cracks or surface discoloration may be visually subtle. LAFEM addresses this issue by dynamically guiding channel attention using textual features, enabling the network to amplify responses to anomaly-related features described in the accompanying text. By embedding text-guided contextual information into the image feature space, LAFEM significantly enhances the network’s ability to detect subtle and rare anomalies.

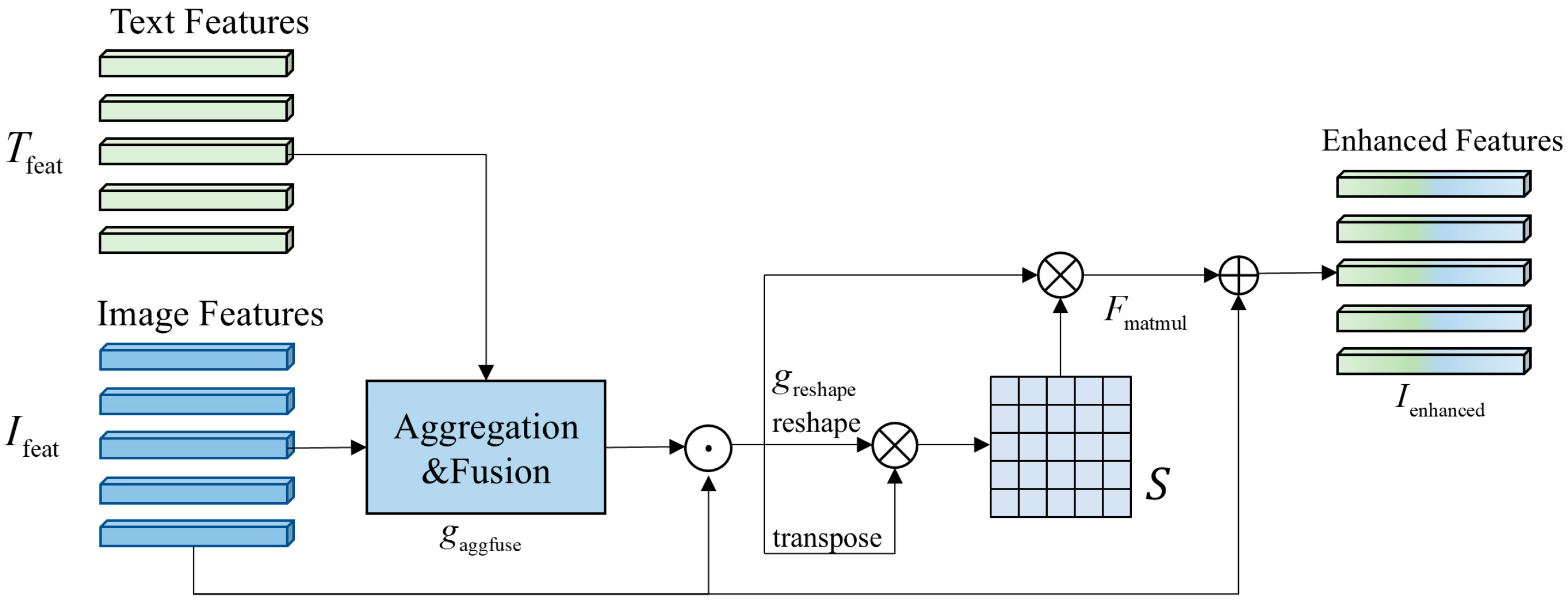

As shown in Figure 2, the architecture of LAFEM consists of three key stages: (1) the aggregation of textual features, (2) the aggregation of image features, and (3) the generation of channel guidance to refine image feature representations. During the training phase, textual features $F_T$, extracted using a BERT-based text encoder, are aggregated through a global pooling operation to distill the most salient semantic information. This process can be described as:

$$F_T^{a} = \mathrm{MLP}(\mathrm{GAP}(F_T))$$

where $F_T^{a}$ represents the aggregated textual features, $\mathrm{GAP}$ denotes global average pooling, and $\mathrm{MLP}$ is a multi-layer perceptron. This aggregation ensures that the textual information is condensed into a compact representation, retaining only the most relevant semantic cues related to potential anomalies. The resulting feature vector $F_T^{a}$ provides a high-level semantic summary of the textual description, ready for integration with visual features.
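As a rough illustration of this text aggregation step, the sketch below pools token embeddings with global average pooling and then applies a small two-layer perceptron. The order of the two operations, the ReLU activation, the layer sizes, and all weights are assumptions made for the example; only the use of pooling and an MLP comes from the description above.

```python
def global_average_pool(token_feats):
    """Average T token embeddings (each of size D) into a single D-vector."""
    n_tokens = len(token_feats)
    dim = len(token_feats[0])
    return [sum(tok[d] for tok in token_feats) / n_tokens for d in range(dim)]

def mlp(vec, w1, b1, w2, b2):
    """Two-layer perceptron with ReLU; each weight matrix is a list of rows."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, vec)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(w2, b2)]

# Two tokens with 2-dimensional embeddings (BERT-base would use 768).
tokens = [[1.0, 3.0], [3.0, 5.0]]
pooled = global_average_pool(tokens)  # [2.0, 4.0]

# Hypothetical weights mapping 2 -> 2 -> 3, so the summary has one value
# per image channel in a 3-channel toy feature map.
w1, b1 = [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0]
w2, b2 = [[1.0, 1.0], [0.5, 0.0], [0.0, 2.0]], [0.0, 0.0, 1.0]
text_summary = mlp(pooled, w1, b1, w2, b2)
print(text_summary)
```

Projecting the pooled text vector to the image channel dimension is what allows it to be compared with, or added to, per-channel image statistics in a later fusion step.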

Similarly, LAFEM aggregates the image features $F_I$, extracted from the CNN-Transformer-based image encoder, to derive channel-wise feature embeddings. The aggregation process for image features is defined as:

$$F_I^{a} = \mathrm{Conv}_{1 \times 1}(F_I)$$

where $F_I^{a}$ denotes the aggregated image features and $\mathrm{Conv}_{1 \times 1}$ is a 1 × 1 convolution. This operation reduces the dimensionality of the image feature map while retaining its spatial structure, facilitating the subsequent fusion of textual and image features. The output provides a spatially aware yet channel-condensed representation of the image features, summarizing their content for cross-modal interactions.

Once the textual features

and image features

are aggregated, LAFEM combines them to generate channel guidance. This fusion process is performed by computing a weighted combination of the two modalities, embedding the textual semantic information into the image feature space. The combined features

are expressed as:

where

denotes the image features embedded with channel guidance. This operation first fuses the aggregated text and image features to derive channel-wise attention weights, which are then applied in an element-wise manner to the original image features. This process effectively re-weights the image features based on the combined textual and visual cues, enhancing anomaly-relevant information.

The enhanced features

amplify the response of anomaly-related channels, allowing the network to focus on features relevant to power equipment anomalies. This enhancement process can be described as:

where

represents the reshaped version of

, and

denotes the enhanced features. Here,

refers to matrix multiplication, and

is the self-attention weight calculated based on channel correlations.

Through this process, LAFEM utilizes textual features during training to guide the network’s focus on anomaly information while reducing overfitting to non-anomalous regions of the equipment.

During the testing phase, since textual features are not available, LAFEM generates channel guidance solely from image features. This simplifies the formulation to:

LAFEM introduces a novel mechanism for text-guided channel attention, addressing the limitations of standard attention mechanisms in visual anomaly detection. By dynamically fusing textual and image features during training, LAFEM enables the network to focus on subtle, text-described anomalies while reducing overfitting to dominant, non-anomalous regions. The ability to operate effectively in both the training and testing phases makes LAFEM a versatile and practical component of LEAD-Net, significantly contributing to its overall performance in substation equipment defect detection.
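The idea of text-guided channel re-weighting with an image-only fallback can be sketched in a few lines of plain Python. This is not the LAFEM formulation itself: the additive fusion, the sigmoid gate, the pooled image descriptor, and the function name `channel_guidance` are all assumptions chosen to keep the example small, and the text vector is assumed to be already projected to the channel dimension.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_guidance(image_feats, text_vec=None):
    """Re-weight image channels with optional text guidance.

    image_feats: list of C channels, each a flat list of spatial activations.
    text_vec:    list of C values (projected text features), or None at
                 inference time when no text is available.
    Returns (weights, enhanced_feats).
    """
    # One descriptor per image channel via global average pooling.
    desc = [sum(ch) / len(ch) for ch in image_feats]
    # Assumed fusion: add the text descriptor when it is available.
    if text_vec is not None:
        desc = [d + t for d, t in zip(desc, text_vec)]
    weights = [sigmoid(d) for d in desc]
    # Element-wise re-weighting of the original channels.
    enhanced = [[w * v for v in ch] for w, ch in zip(weights, image_feats)]
    return weights, enhanced

# Channel 0: dominant background response; channel 1: weak anomaly response.
feats = [[2.0, 2.0, 2.0, 2.0], [0.0, 0.4, 0.0, 0.0]]
text = [-1.0, 3.0]  # hypothetical projected text features favouring channel 1

w_train, enhanced_train = channel_guidance(feats, text)  # training: image + text
w_test, enhanced_test = channel_guidance(feats)          # inference: image only
print(w_train, w_test)
```

With the toy text vector, the weak anomaly channel receives the larger weight during training, whereas the image-only path falls back to image statistics alone. The function does not model learning: in a trained network the benefit at test time would come from encoder weights that were optimized under text guidance.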

4. Experiments and Analysis

4.1. Dataset and Setup

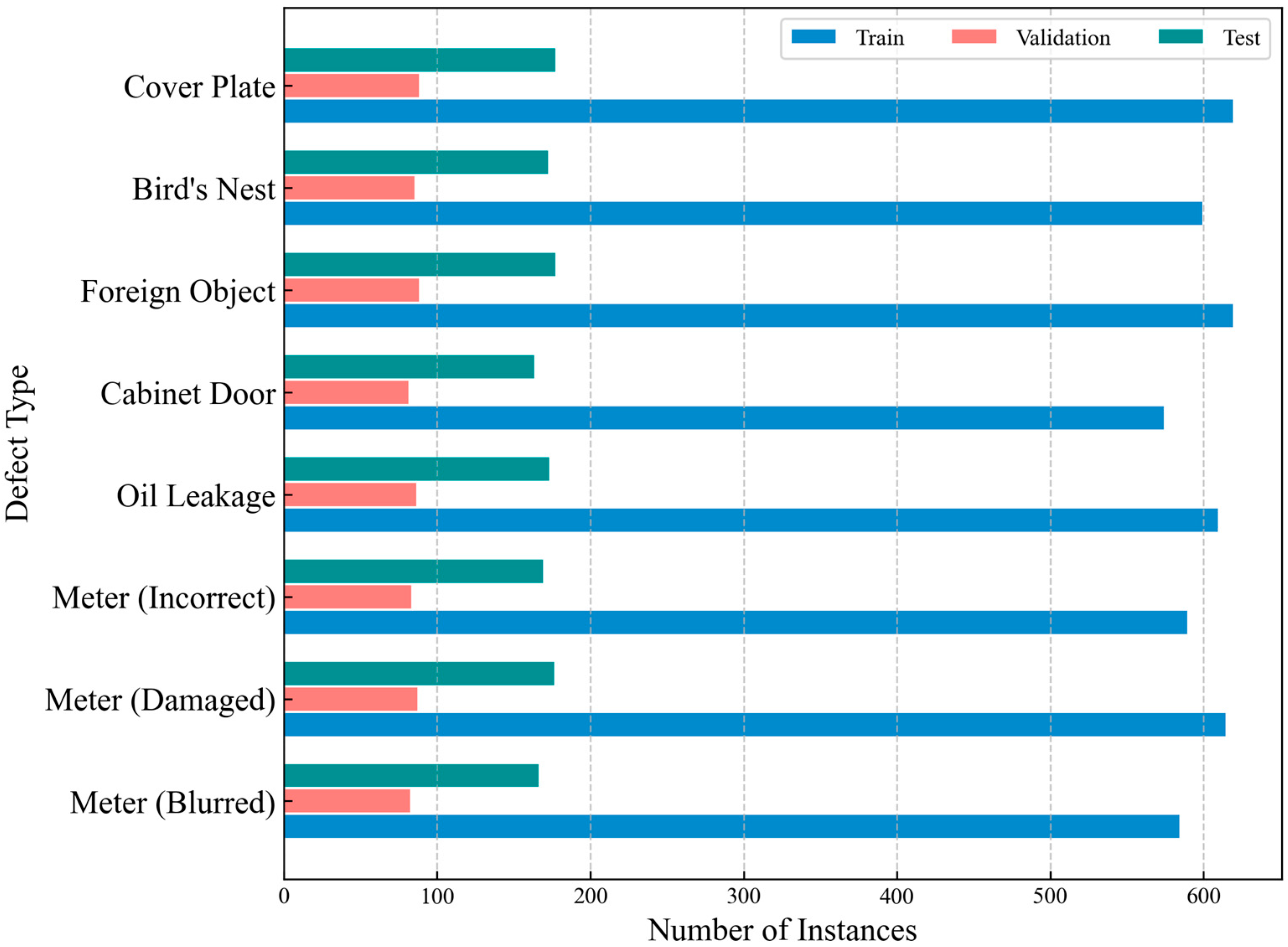

To evaluate the performance and robustness of our proposed LEAD-Net framework, we conducted experiments on a real-world substation equipment defect dataset, meticulously collected from a major power grid company in China. This dataset was curated to encapsulate the complexities and challenges of practical substation equipment inspections. It comprises a total of 8307 high-quality image–text pairs, where each image corresponds to a visible-light photograph of substation equipment, accompanied by a relevant textual description. The dataset was carefully designed to provide a comprehensive benchmark for anomaly detection, encompassing a diverse range of scenarios and conditions.

The dataset includes images with diverse original resolutions and aspect ratios. To ensure a uniform input for our deep learning model and facilitate efficient batch processing, all images were subjected to a preprocessing pipeline involving resizing and padding to a fixed input size of 800 × 1333 before being utilized for training and inference. In the experiment, we adopted random flipping, random scaling and cropping, random color jitter, and random rotation as methods for data augmentation.
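The resize-and-pad step can be made concrete with a small helper that computes the resized dimensions and the padding needed to reach the fixed canvas. The sketch assumes that 800 is the height and 1333 the width, that the aspect ratio is preserved, and that padding is added on the bottom and right only; none of these details are stated above, and `resize_and_pad_dims` is a hypothetical name.

```python
def resize_and_pad_dims(height, width, target_h=800, target_w=1333):
    """Compute the resized size and padding for an aspect-preserving fit.

    Returns (new_h, new_w, pad_bottom, pad_right).
    """
    # Largest scale at which both sides still fit inside the canvas.
    scale = min(target_h / height, target_w / width)
    new_h = min(target_h, round(height * scale))
    new_w = min(target_w, round(width * scale))
    return new_h, new_w, target_h - new_h, target_w - new_w

landscape = resize_and_pad_dims(1080, 1920)  # wide photo: width is the limit
portrait = resize_and_pad_dims(4000, 3000)   # tall photo: height is the limit
print(landscape, portrait)
```

Bounding box annotations would need to be multiplied by the same scale factor so that they stay aligned with the resized image.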

The visible defects captured in the images span multiple categories, including cracks, discoloration, rust, corrosion, insulation damage, loose components, and other signs of wear and tear. The dataset also includes images of normal equipment without defects, ensuring a balanced representation of anomalous and non-anomalous samples.

The textual descriptions paired with each image complement the visual data by providing additional semantic context related to the equipment’s operational state, potential defects, and maintenance history. These descriptions were meticulously compiled from multiple sources, including inspection reports, maintenance logs, equipment manuals, and annotations provided by experienced substation inspectors.

The dataset focuses on eight distinct types of equipment defects, providing a fine-grained categorization of anomalies. To ensure consistency and facilitate text-guided learning, we established a standardized textual annotation protocol for each defect type.

Table 1 provides representative examples of these annotations, showcasing the format and level of detail used to describe each anomaly, along with corresponding example images. The text annotations are concise and precise, focusing on the visual characteristics. For instance, a blurred meter dial is consistently described as “This meter has blurred dial”, while an oil leakage is denoted as “This equipment has oil leakage/oil stain on the ground”.

Figure 3 presents the distribution, by train/validation/test, of examples of each of the eight defect types. This carefully curated dataset, with its standardized textual annotations, is crucial for training and evaluating the text-guided feature enhancement capabilities of LEAD-Net.

The experiments were conducted using PyTorch (version 2.1.0) with Python 3.12 and CUDA 12.6. DETR served as the base architecture, and the textual features were extracted using BERT-base. The model was trained with the AdamW optimizer (learning rate $10^{-4}$, weight decay $10^{-4}$) under a cosine annealing schedule. Training ran for 50 epochs with a batch size of 16 on two NVIDIA A6000 GPUs.
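For reference, a cosine annealing schedule with the stated base learning rate and epoch count can be written in closed form. The sketch assumes a minimum learning rate of zero, one update per epoch, and no warm-up or restarts, none of which are specified above; it uses the same closed-form expression as a standard cosine annealing scheduler whose period equals the total number of epochs.

```python
import math

def cosine_lr(epoch, total_epochs=50, base_lr=1e-4, min_lr=0.0):
    """Cosine-annealed learning rate at a given epoch (no warm-up, no restarts)."""
    progress = epoch / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Learning rate every 10 epochs, from the first epoch to the last.
schedule = [cosine_lr(e) for e in range(0, 51, 10)]
for epoch, lr in zip(range(0, 51, 10), schedule):
    print(f"epoch {epoch:2d}: lr = {lr:.2e}")
```

The rate starts at the base value, passes through half of it at the midpoint, and decays smoothly to the minimum at the final epoch.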

We evaluate the detection performance using average precision (AP) and mean average precision (mAP). The AP is calculated for each class, and the mAP is computed by averaging the AP values across all classes. The formula for AP is given by:

$$\mathrm{AP} = \sum_{k} P(k)\,\Delta r(k)$$

where $P(k)$ is the precision at the $k$-th threshold, and $\Delta r(k)$ is the change in recall from the $(k-1)$-th threshold to the $k$-th threshold. The mAP is then given by:

$$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_i$$

where $N$ is the number of classes and $\mathrm{AP}_i$ is the AP of the $i$-th class.
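The AP and mAP definitions (precision weighted by the change in recall, then averaged over classes) can be sketched as follows. The example applies the sum directly without precision interpolation, assumes the recall before the first threshold is zero, and uses made-up precision/recall points; evaluation toolkits often add interpolation, and which variant was used here is not stated.

```python
def average_precision(precisions, recalls):
    """AP = sum_k P(k) * (r(k) - r(k-1)), with r(0) = 0.

    precisions[k] and recalls[k] are measured at the k-th threshold, ordered
    so that recall is non-decreasing.
    """
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap

def mean_average_precision(ap_per_class):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

# Toy precision/recall points for two hypothetical classes.
ap_a = average_precision([1.0, 0.5, 0.5], [0.25, 0.5, 1.0])
ap_b = average_precision([1.0, 0.75], [0.5, 1.0])
m_ap = mean_average_precision([ap_a, ap_b])
print(ap_a, ap_b, m_ap)
```

Because mAP is an unweighted mean, a rare defect class influences the final score as much as a frequent one.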

4.2. Comparative Experiments

Table 2 presents a detailed performance comparison between the proposed LEAD-Net and several state-of-the-art object detection algorithms, including Faster R-CNN, YOLOv9, DETR, and Deformable DETR. The evaluation metrics include average precision (AP) for eight distinct defect categories and the overall mean average precision (mAP) across all categories. LEAD-Net achieves the highest mAP of 79.51%, surpassing all baseline models, which include Faster R-CNN (68.79%), YOLOv9 (75.88%), DETR (72.64%), and Deformable DETR (77.94%). This improvement underscores the effectiveness of our proposed method, which integrates a text-guided feature enhancement mechanism during training to optimize anomaly detection in complex substation equipment images.

A closer examination of the results reveals that LEAD-Net exhibits balanced and robust performance across all defect categories, achieving the highest AP scores in IR (incorrect reading), OLS (oil leakage/stain), and DMC (damaged/missing cover), with AP values of 82.80%, 80.30%, and 84.00%, respectively. These scores exceed those of the other methods, demonstrating the model’s ability to detect subtle and context-dependent anomalies with high precision. Notably, YOLOv9 and Deformable DETR also perform well in these categories; however, LEAD-Net’s ability to outperform these strong baselines by 3.63 and 1.57 percentage points in mAP, respectively, highlights its superior feature extraction and anomaly localization capabilities.

This superior performance can be attributed to the Language-Guided Anomaly Feature Enhancement Module (LAFEM), which uses textual descriptions during training to guide the network’s attention toward anomaly-relevant features. This mechanism helps the model focus on subtle defect patterns that are otherwise overshadowed by dominant, non-anomalous features, which is a common limitation in traditional object detection algorithms. For instance, defects such as oil stains or cracked covers can often blend with the background or normal surface textures, making them harder to detect without additional semantic guidance. This is reflected in the notably high AP scores achieved by LEAD-Net in categories such as OLS (80.30%) and DMC (84.00%), which involve challenging visual characteristics, demonstrating LAFEM’s effectiveness in highlighting subtle visual cues.

While LEAD-Net achieves exceptional results in most categories, certain challenges remain in categories such as FO (foreign object), where the AP score is slightly lower at 72.20%. Although this score is still higher than the corresponding results from other algorithms (e.g., YOLOv9 achieves 66.20%, Deformable DETR achieves 70.20%), it indicates potential room for improvement. The slightly lower performance in this category can likely be attributed to the inherent ambiguity in the visual features of foreign objects, which may vary significantly in shape, size, and appearance. Addressing these issues through enhanced data augmentation strategies or category-specific feature refinement could further boost performance in future work.

Another interesting observation is LEAD-Net’s performance in categories such as ADC (abnormal door closure) and BN (bird’s nest), where it achieves AP scores of 84.30% and 79.10%, respectively. These categories often involve irregular shapes or non-standard equipment states, making anomaly detection more challenging. The results highlight LEAD-Net’s ability to generalize well across diverse and complex defect types, ensuring robust performance even in non-standard scenarios. The strong performance in ADC (84.30%) and BN (79.10%) suggests that LEAD-Net effectively leverages both visual and textual context to identify anomalies defined by relative positioning or unusual configurations.

YOLOv9 and Deformable DETR stand out among the baseline algorithms, achieving competitive mAP values of 75.88% and 77.94%, respectively. These models are well-known for their efficiency and ability to capture spatial relationships, with Deformable DETR providing additional flexibility in handling object deformations. However, LEAD-Net’s integration of multi-modal learning, particularly its use of textual guidance during training, gives it a clear edge. By leveraging textual information to refine channel attention and enhance anomaly-related features, LEAD-Net introduces a significant advancement over these baselines, particularly in scenarios where defects are context-dependent or visually subtle. The consistent performance gain across categories, culminating in the highest overall mAP, validates the efficacy of incorporating semantic guidance for object detection in complex industrial settings.

The consistent improvement of LEAD-Net across all defect categories validates its effectiveness in real-world substation inspection scenarios. The incorporation of textual features as guidance during training addresses a critical limitation in existing object detection algorithms, which often struggle with subtle defects or low inter-class variance. Moreover, the use of transformer-based architectures, such as DETR, provides LEAD-Net with the ability to model long-range dependencies and global context, further improving its performance in complex images with dense equipment layouts.

5. Ablation Study

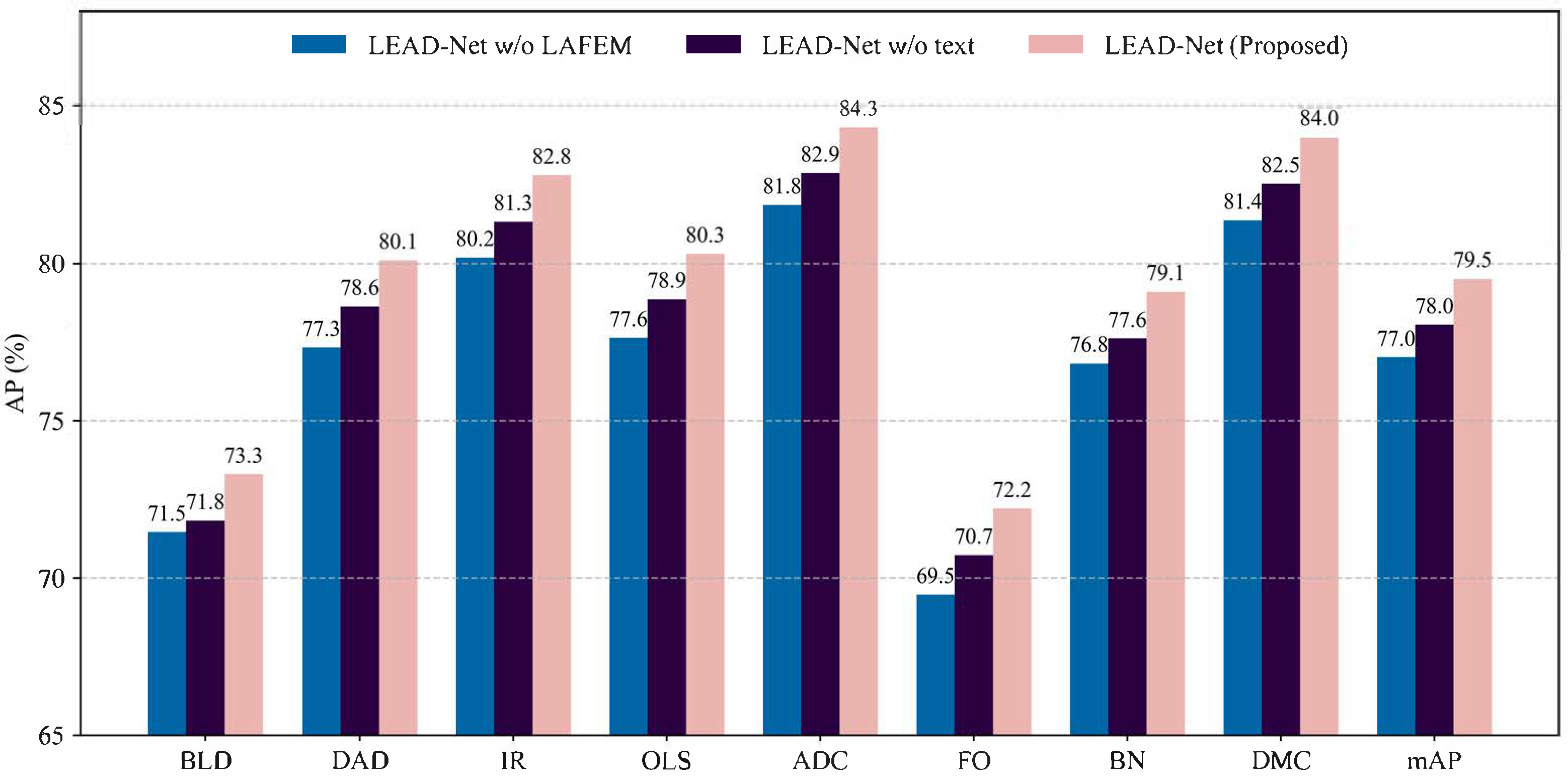

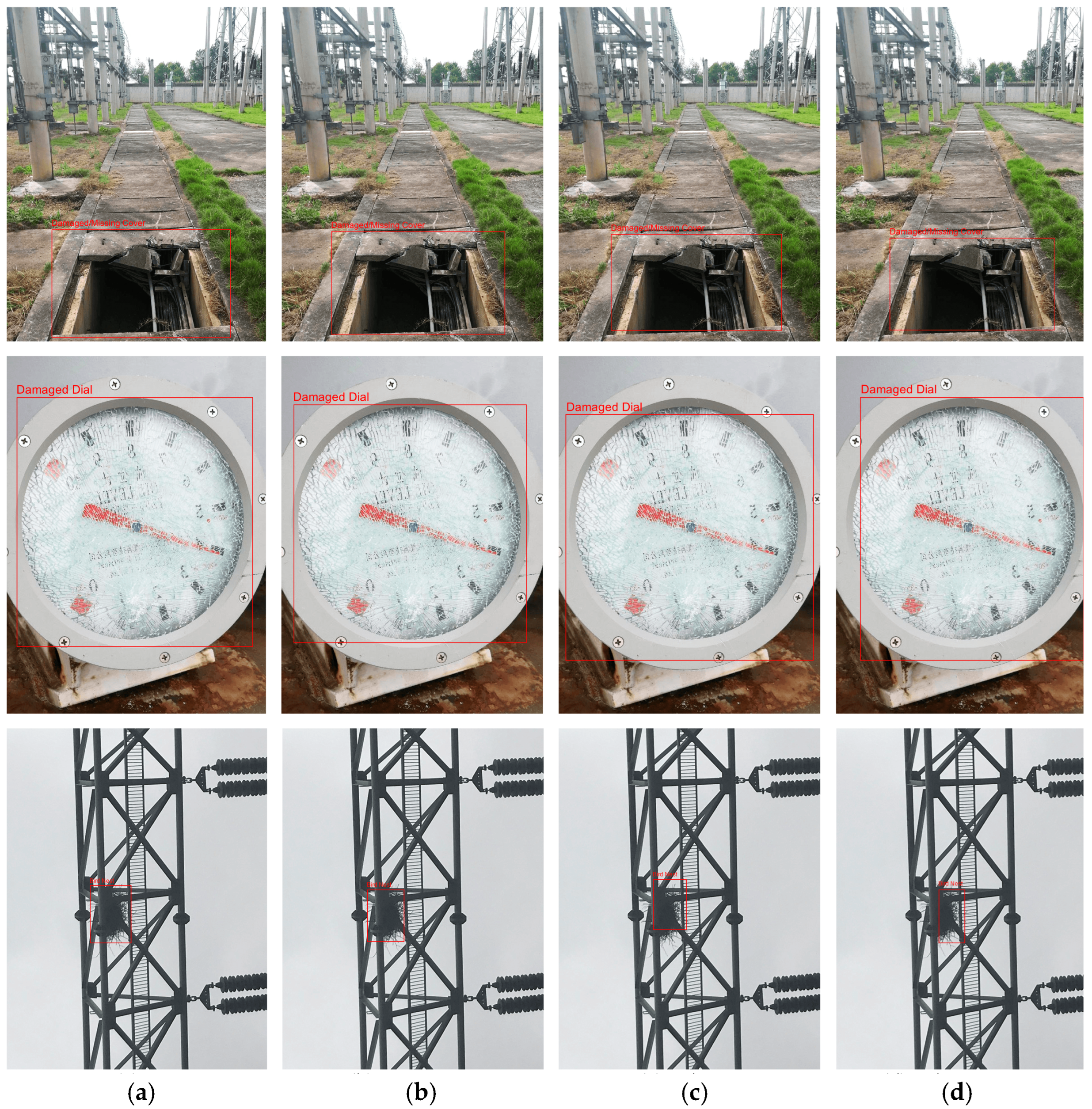

Figure 4 and Table 3 present the results of the ablation study, which evaluates the contributions of the LAFEM module and the training-time text-guided feature mechanism to the overall performance of LEAD-Net. The study systematically removes these components to quantify their individual impacts on the model’s ability to detect anomalies across various defect categories. The results provide valuable insights into the effectiveness and synergy of these components in improving the robustness and accuracy of LEAD-Net.

The removal of LAFEM leads to a substantial 2.5 percentage point decrease in mAP (from 79.51% to 77.01%), demonstrating its critical role in enhancing the detection of both structural and visually subtle anomalies. As shown in Figure 4, the absence of LAFEM has the most pronounced effect on categories including FO (foreign object) and DMC (damaged/missing cover), where AP scores drop by 2.7 and 2.6 percentage points, respectively. These categories often involve structural irregularities or abnormalities with inconsistent visual patterns, making them particularly challenging to detect. LAFEM addresses these challenges by dynamically refining channel attention and amplifying anomaly-specific features, enabling the model to focus on subtle defects that may otherwise be overlooked.

Interestingly, even categories such as IR (incorrect reading) and OLS (oil leakage/stain), which generally exhibit more consistent visual characteristics, experience performance degradation without LAFEM. This further underscores its general importance in learning robust and discriminative features across a wide range of defect types. The significant mAP drop upon removing LAFEM highlights its fundamental role in feature refinement and anomaly localization accuracy across diverse visual characteristics.

While text input is not used during inference, the removal of training-time text guidance results in a 1.47 percentage point mAP reduction (from 79.51% to 78.04%), highlighting the successful knowledge transfer from the textual modality to the visual features during training. As seen in Figure 4, categories such as ADC (abnormal door closure) and OLS are most affected, with AP drops of approximately 1.4 percentage points each. These categories often require contextual understanding, where defects are defined by the relationships between different visual elements rather than standalone features. The textual descriptions during training provide crucial semantic cues to guide the model in learning these nuanced, context-dependent relationships, ultimately enhancing its ability to detect such anomalies during inference. The performance degradation without text guidance confirms its value in providing essential semantic context during training, particularly benefiting categories that are reliant on relational information.

For example, in the ADC category, text guidance might describe a “partially closed door” or “misaligned hinges”, which directs the model’s attention to specific spatial relationships and edge patterns, enabling better feature learning. The degradation in performance when text guidance is removed suggests that visual features alone may not sufficiently capture such contextual anomalies without the semantic grounding provided by text.

The highest performance is achieved when both LAFEM and text guidance are applied together, yielding a mAP of 79.51%. This demonstrates the strong synergy between these components, as LAFEM amplifies the anomaly-relevant features identified through textual guidance during training. The complementary nature of these mechanisms ensures that LEAD-Net is both structurally aware (via LAFEM) and contextually grounded (via text guidance), making it highly effective in handling a diverse range of anomalies.

The results also reveal that LAFEM contributes more significantly to overall performance than text guidance when evaluated individually. This is evident from the larger mAP drop (2.5 vs. 1.47 percentage points) upon its removal. However, the persistent impact of text guidance on inference-time performance highlights its critical role in enabling effective modality transfer, where knowledge from the textual modality enhances the learning of visual features.
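The mAP differences quoted in this comparison follow directly from the reported values, as the short check below shows. It uses only the three mAP figures stated in the text; the variable names are illustrative.

```python
# mAP values (%) quoted in the ablation discussion.
map_full = 79.51
map_without = {"LAFEM": 77.01, "text guidance": 78.04}

# Absolute drop in percentage points when each component is removed.
drops = {name: round(map_full - value, 2) for name, value in map_without.items()}
for name, drop in drops.items():
    print(f"without {name}: -{drop:.2f} percentage points")
```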

Figure 5 provides a visual comparison of defect detection performance under different configurations of LEAD-Net, illustrating the impact of removing LAFEM and training-time text guidance. These qualitative results complement the quantitative findings by vividly demonstrating how each component contributes to more accurate and precise defect detection.

From Figure 5, it is evident that LAFEM plays a critical role in refining features and improving localization precision for defect detection. Without LAFEM (column d), the detection results show significant localization errors. For example, in the top image, the bounding box for the “damaged/missing cover” is excessively large, covering a substantial portion of non-defective areas. Similarly, in the middle image, the bounding box for the “damaged dial” is misaligned, extending beyond the actual damaged region. The absence of LAFEM hinders the model’s ability to suppress irrelevant features and focus on defect-specific areas, leading to imprecise and overly broad detections. This highlights LAFEM’s importance in enhancing the detection of structural anomalies, such as missing or damaged components.

Training-time text guidance (column c) contributes significantly to the model’s understanding of semantic context. Without text guidance, the model’s performance improves compared to when LAFEM is absent, but it still falls short of optimal precision. For instance, in the top image, the bounding box for the “damaged/missing cover” is slightly smaller than in column d but remains misaligned, capturing portions of non-defective areas. In the middle image, the “damaged dial” is detected with reasonable accuracy; however, the model struggles with more context-dependent defects, where semantic relationships (e.g., “partially missing” or “misaligned”) are critical for accurate detection. These results indicate that text guidance provides meaningful semantic cues during training, enabling the model to better capture contextual relationships and achieve superior performance during inference.

The complete LEAD-Net model (column b), which incorporates both LAFEM and text guidance, achieves the best detection performance. The bounding boxes are tightly aligned with the ground truth annotations (column a) across all examples, demonstrating the model’s robustness in detecting both subtle defects (e.g., damaged dials) and structural anomalies (e.g., damaged powerline components). LAFEM enhances feature refinement and precise localization, while text guidance provides global semantic context, ensuring the model can handle both visually subtle and contextually complex defects. The synergy between these components addresses the dual challenges of feature precision and context awareness, making LEAD-Net highly effective for real-world substation equipment inspection tasks.

6. Conclusions

This study presents LEAD-Net, a novel and effective framework for substation equipment defect detection. Unlike traditional methods, LEAD-Net leverages training-time text guidance, in the form of defect descriptions, to enhance anomaly detection accuracy. The core innovation, the Language-Guided Anomaly Feature Enhancement Module (LAFEM), uses these descriptions to refine channel attention within the image encoder. This enables the network to focus on subtle visual cues related to anomalies that might otherwise be missed, addressing a key limitation of existing approaches. A crucial advantage of LEAD-Net is its ability to operate without text input during inference, ensuring practicality for real-world deployment and minimizing computational overhead. Experimental results on a challenging real-world dataset demonstrate LEAD-Net’s superior performance compared to state-of-the-art object detection methods, including Faster R-CNN, YOLOv9, DETR, and Deformable DETR.

Comprehensive ablation studies further validated the individual and combined contributions of both LAFEM and the training-time text guidance, emphasizing the synergistic benefits of this approach. Future work will focus on expanding the range of textual information, exploring methods to further enhance LAFEM, and investigating the deployment of LEAD-Net on edge computing devices for real-time inspection. The presented methods have shown significant potential for related anomaly detection tasks, paving the way for more advanced and practical defect detection solutions in industrial applications.