The Voltage Regulation of Boost Converters via a Hybrid DQN-PI Control Strategy Under Large-Signal Disturbances

Abstract

1. Introduction

2. Problem Formulation and DQN Revisit

2.1. Problem Formulation

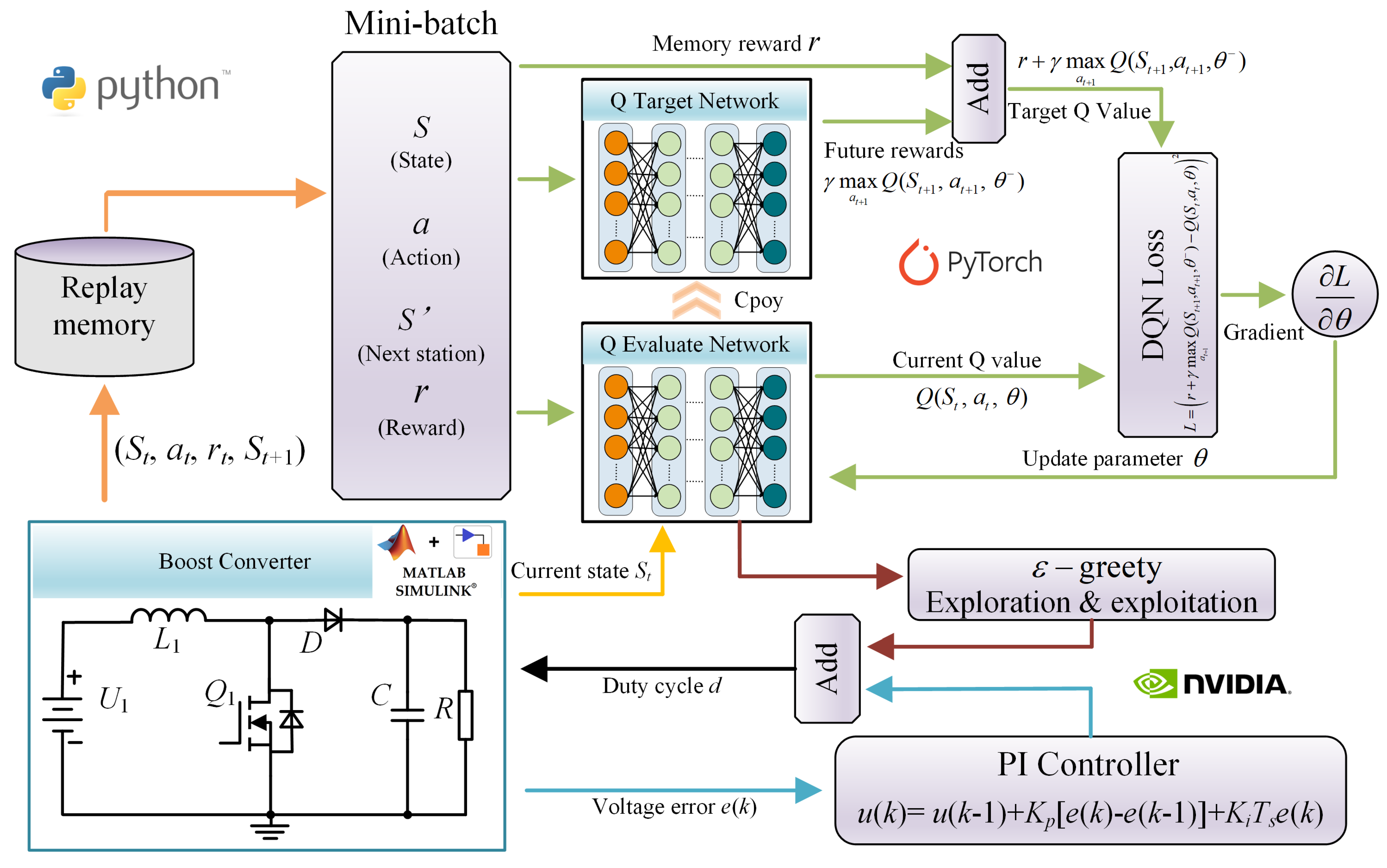

2.2. DQN Algorithm Revisit

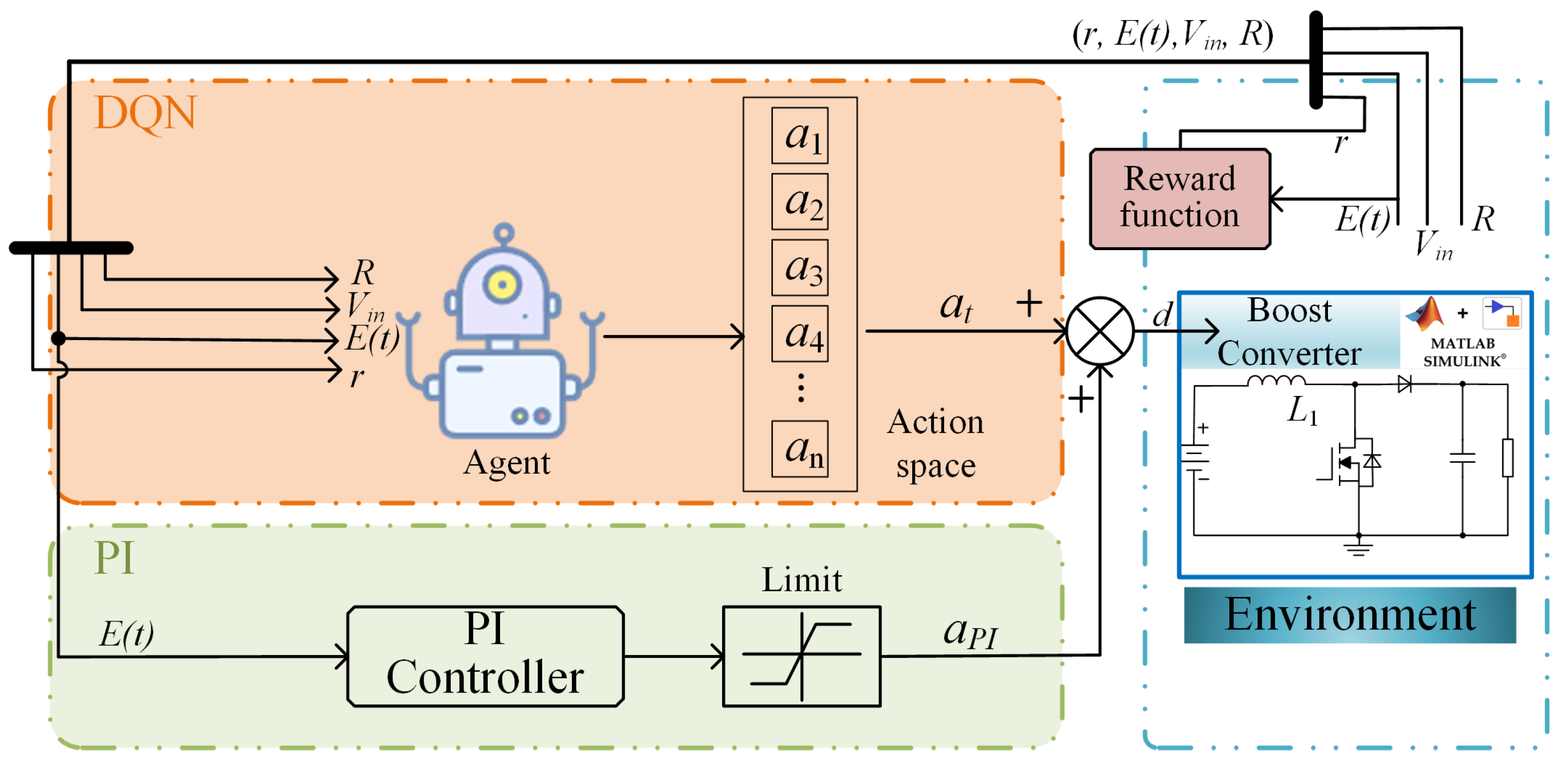

3. Control System Design

3.1. State Space

3.2. Action Space

3.3. Reward Function

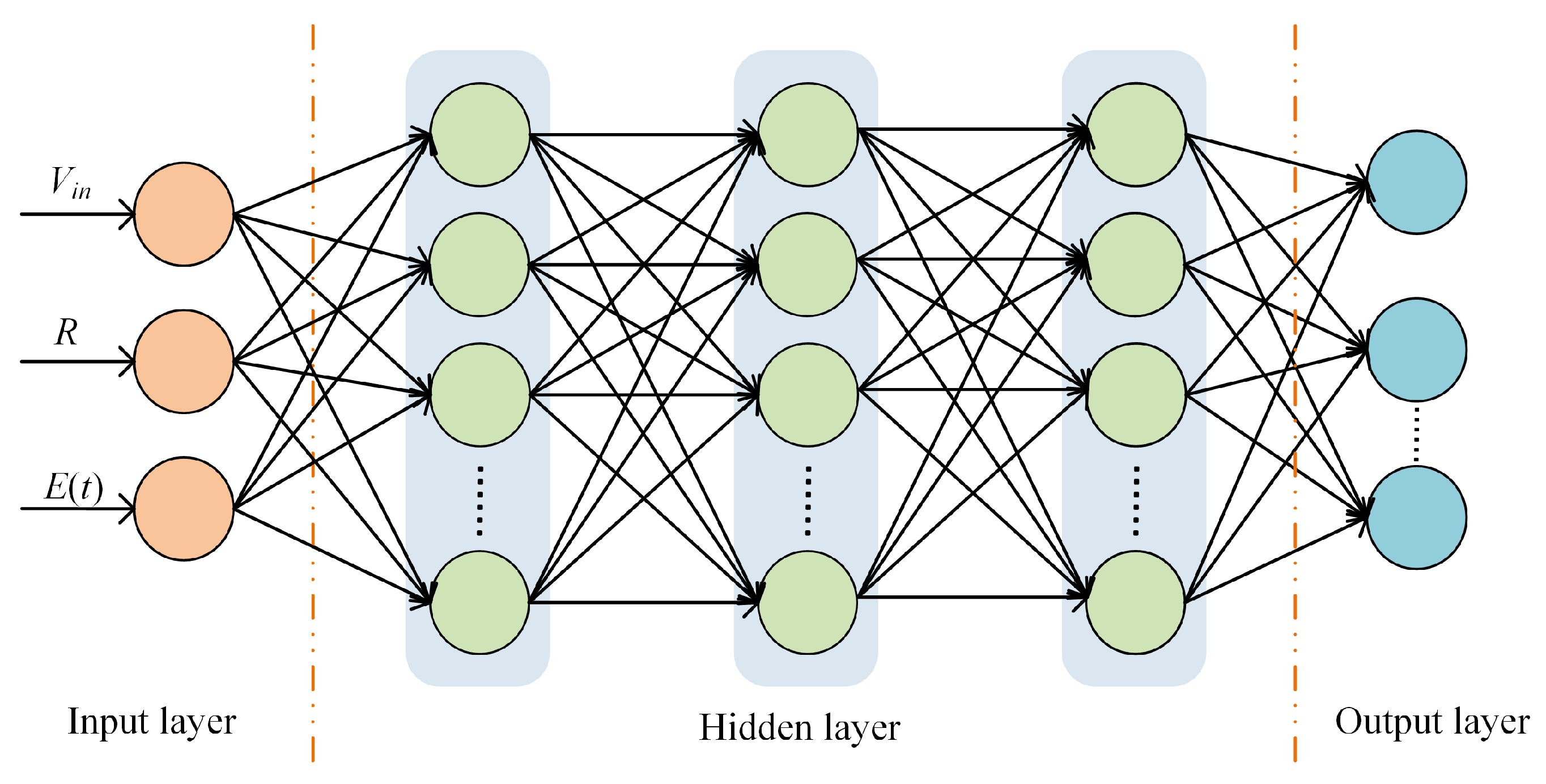

3.4. DNN Design

3.5. PI Controller Design

4. Simulation Verification

4.1. Simulation Configuration

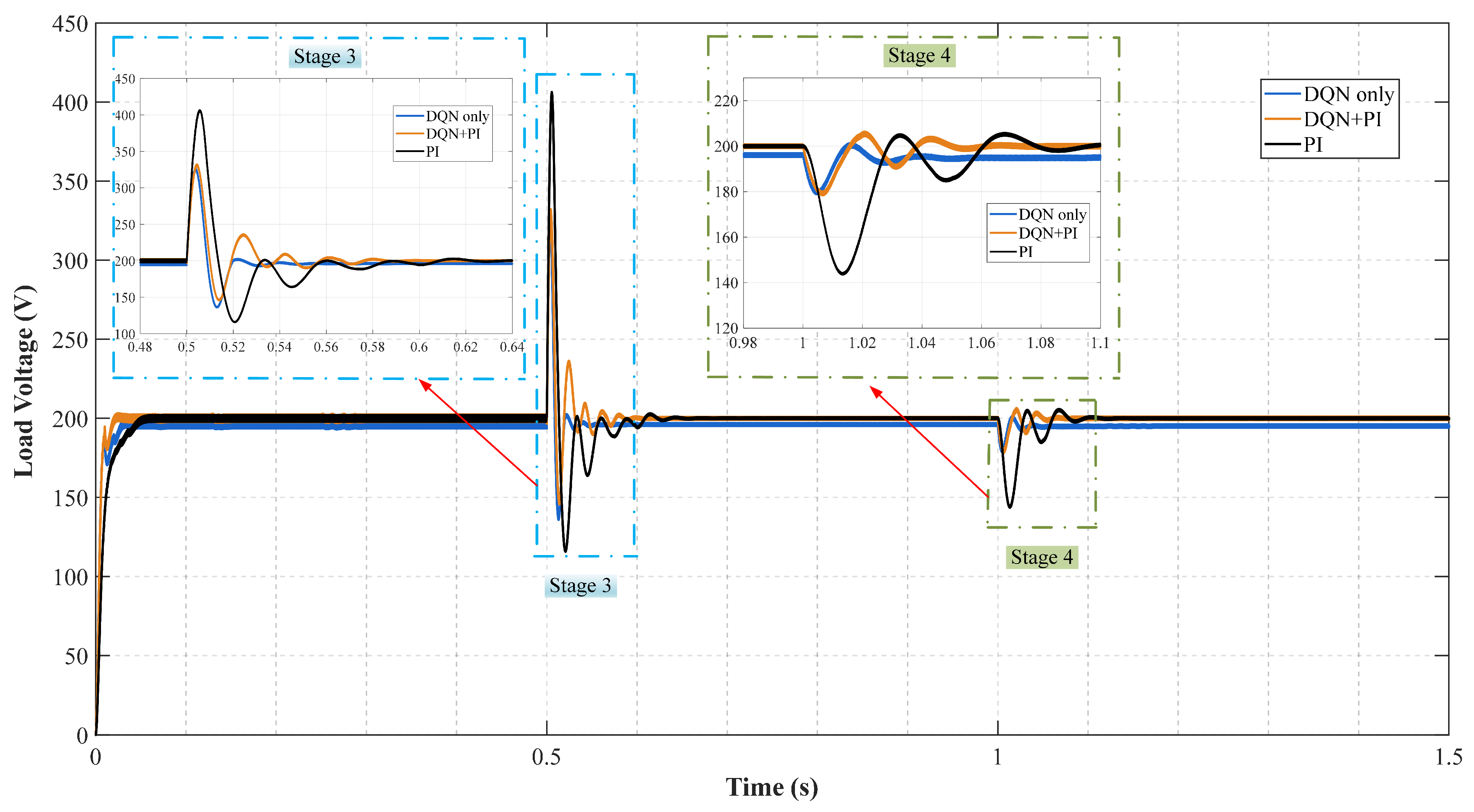

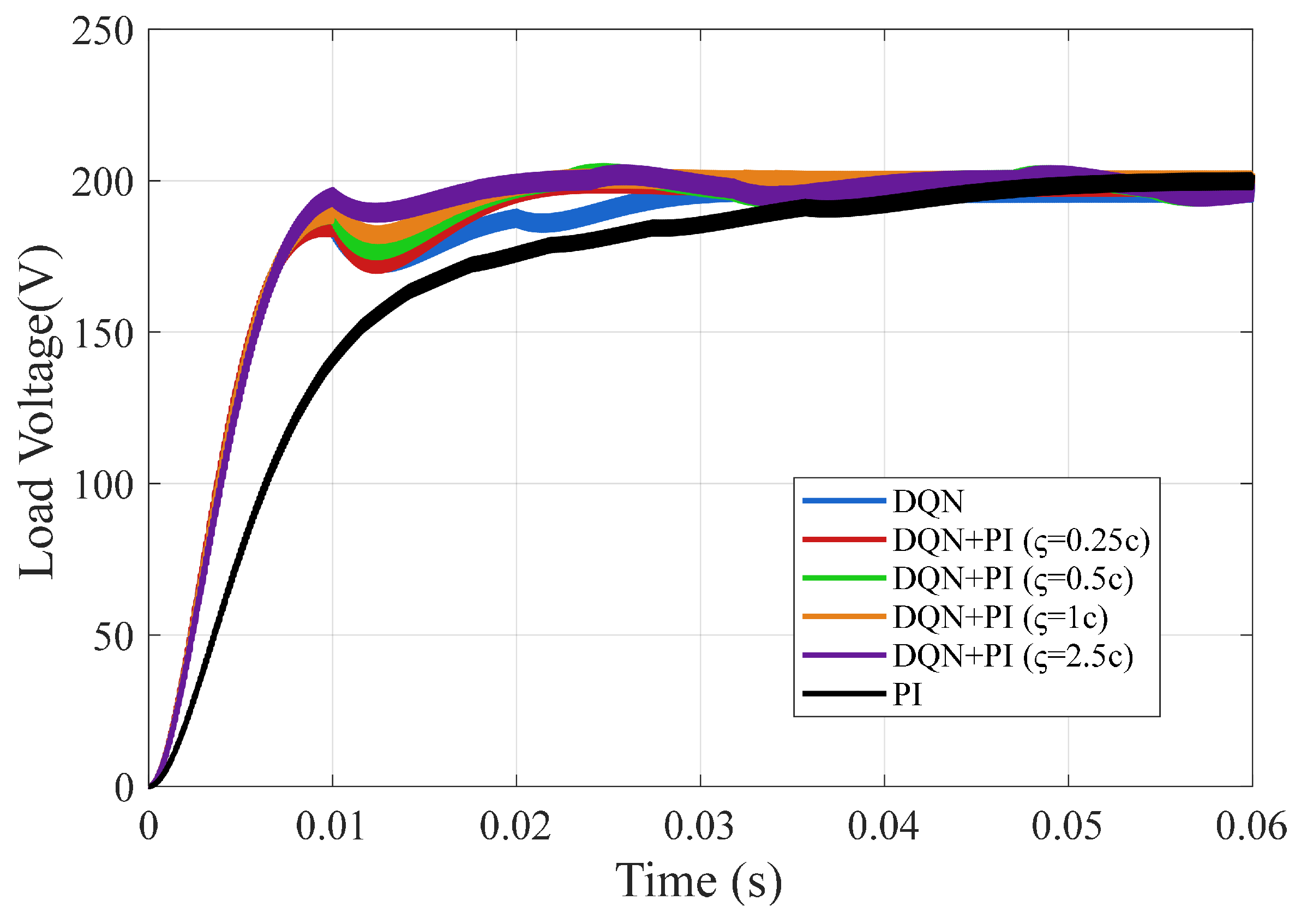

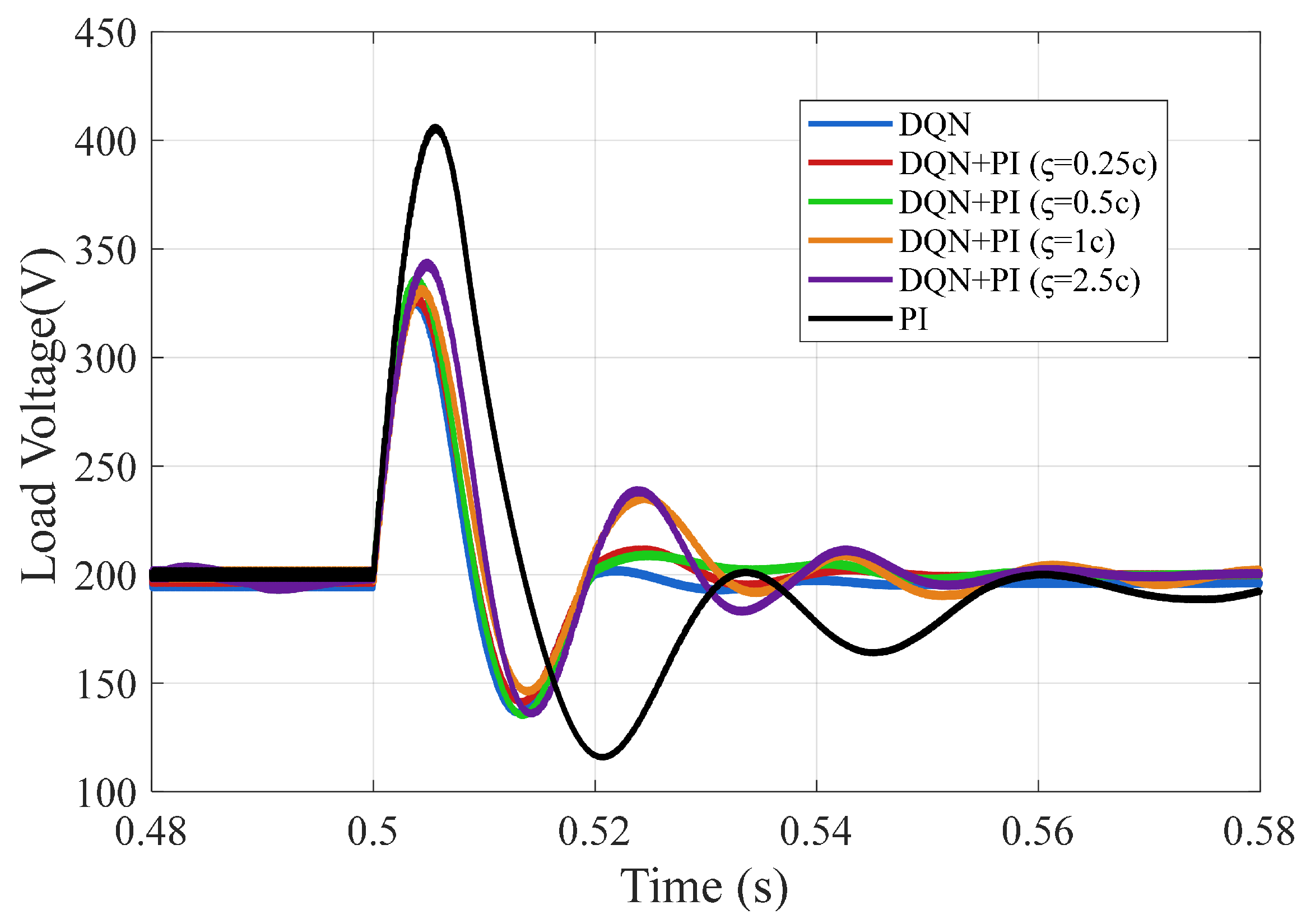

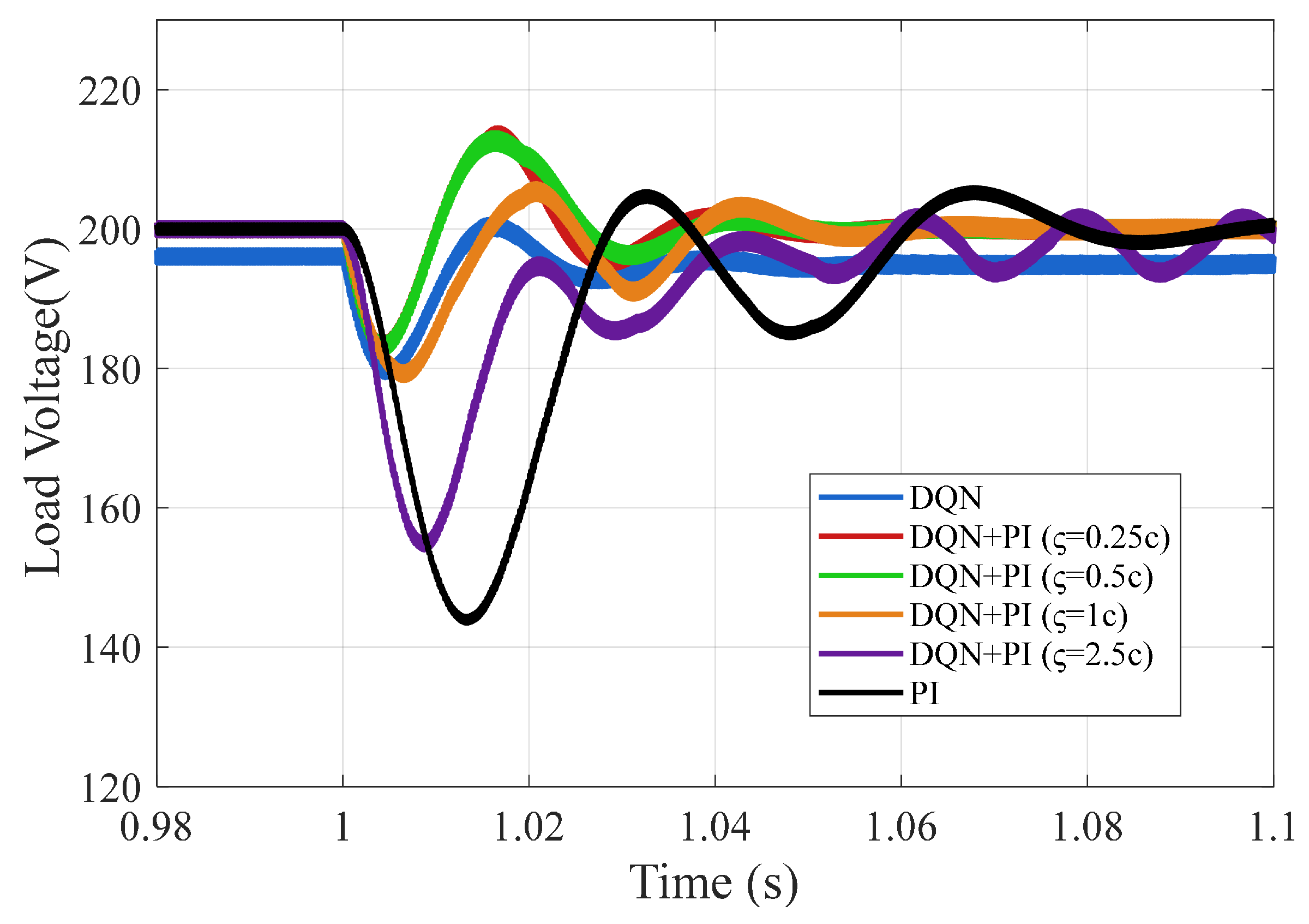

4.2. Comparative Performance Evaluation of PI, DQN, and DQN+PI Controllers

- Load Step Disturbance: The load resistance was abruptly increased by 200%, that is, to three times its initial value.

- Input Voltage Drop Disturbance: The input voltage was abruptly reduced by 20%.

4.3. Impact of PI Output Saturation Limit on System

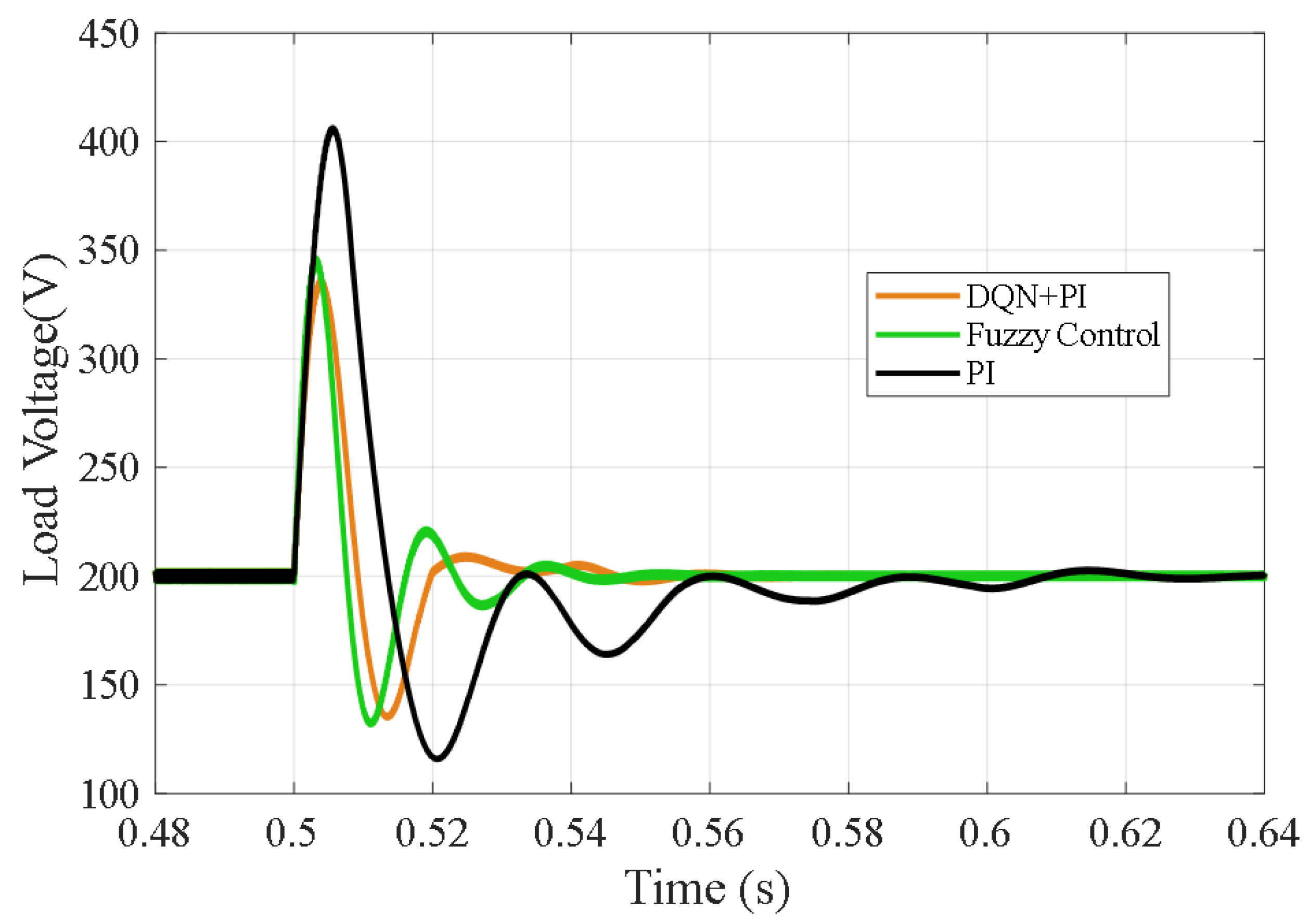

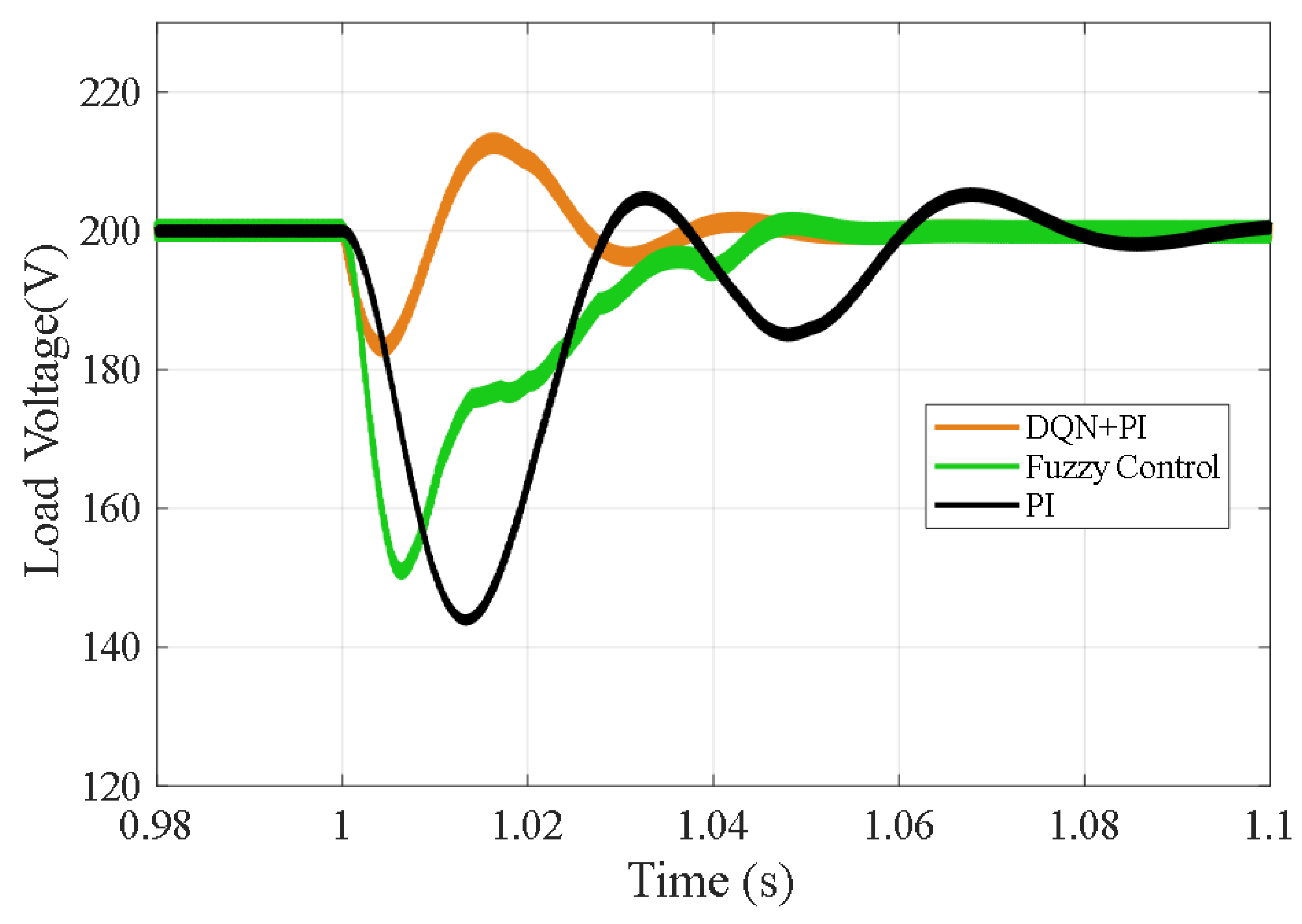

4.4. Performance Comparison with PI and Fuzzy Control

- Phase 1: Input Signal Preprocessing. The controller receives the instantaneous voltage error and its rate of change as input variables. These signals are normalized through gain blocks (Gain1 and Gain2) to ensure compatibility with the fuzzy inference system’s universe of discourse. Two limiters are used to constrain the input ranges with predefined bounds before feeding them into the fuzzy controller.

- Phase 2: Fuzzy Controller. The fuzzy controller, consisting of fuzzification, rule evaluation, and defuzzification stages, was implemented using MATLAB’s Fuzzy Logic Toolbox. Both input and output variables are defined using seven triangular membership functions, covering the linguistic range from Negative Big (NB) to Positive Big (PB). The rule base, constructed based on the system dynamics, is summarized in Table 4.

- Phase 3: Output Signal Processing. The fuzzy controller produces a duty cycle adjustment signal, which is scaled using a gain block (Gain3) to tailor its magnitude. The final PWM duty cycle applied to the boost converter is determined from this scaled adjustment.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Definition | Value |
|---|---|---|
| | Input voltage | 100 V |
| | Reference output voltage | 200 V |
| L | Inductance | 10 mH |
| C | Capacitance | 470 µF |
| f | Switching frequency | 10 kHz |
| | Resistance | 5–20 Ω |
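
As a quick consistency check on the circuit parameters above, the following minimal sketch applies the textbook ideal boost relations (lossless converter in continuous conduction mode, which is an assumption and not stated in the source). The function names are illustrative only.

```python
# Ideal boost converter relations (ASSUMPTION: lossless, continuous conduction).
V_IN = 100.0               # input voltage, V (from the table)
V_REF = 200.0              # reference output voltage, V (from the table)
L_IND = 10e-3              # inductance, H (from the table)
F_SW = 10e3                # switching frequency, Hz (from the table)
R_MIN, R_MAX = 5.0, 20.0   # load resistance range, ohm (from the table)


def ideal_duty(v_in: float, v_out: float) -> float:
    """Steady-state duty ratio from V_out = V_in / (1 - D)."""
    return 1.0 - v_in / v_out


def inductor_ripple(v_in: float, duty: float, inductance: float, f_sw: float) -> float:
    """Peak-to-peak inductor current ripple: V_in * D / (L * f_sw)."""
    return v_in * duty / (inductance * f_sw)


duty = ideal_duty(V_IN, V_REF)
ripple = inductor_ripple(V_IN, duty, L_IND, F_SW)
i_out_max = V_REF / R_MIN  # heaviest load in the listed range
i_out_min = V_REF / R_MAX  # lightest load in the listed range

print(f"ideal duty ratio      : {duty:.2f}")
print(f"inductor ripple (p-p) : {ripple:.2f} A")
print(f"output current range  : {i_out_min:.0f} to {i_out_max:.0f} A")
```

Under these assumptions the ideal duty ratio for a 100 V to 200 V conversion is 0.5, which agrees with the nominal duty ratio listed among the DQN parameters.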

| Parameter | Definition | Value |
|---|---|---|
| | Learning rate | |
| | Discount factor | 0.98 |
| | Exploration rate | 0.05 |
| | Reward function parameters | 10, 5, −15 |
| D | Nominal duty ratio | 0.5 |
| | Fluctuation range | 0.1 |
| c | Minimum step | 0.02 |
| B | Mini-batch size | 64 |
| M | Replay memory size | 5000 |
| N | Training episodes | 300 |
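
To make the roles of these hyperparameters concrete, here is a minimal sketch of how they might fit together in a generic DQN agent. It assumes the discrete action set is the nominal duty ratio plus or minus the fluctuation range, sampled at the minimum step; that reading of the table, and all function names, are assumptions rather than the authors' implementation. The learning rate is omitted because its value is not given above.

```python
import random
from collections import deque

GAMMA = 0.98        # discount factor (from the table)
EPSILON = 0.05      # exploration rate (from the table)
D_NOMINAL = 0.5     # nominal duty ratio (from the table)
D_RANGE = 0.1       # fluctuation range (from the table)
D_STEP = 0.02       # minimum step (from the table)
BATCH_SIZE = 64     # mini-batch size (from the table)
MEMORY_SIZE = 5000  # replay memory size (from the table)


def build_action_set(nominal, span, step):
    """ASSUMPTION: actions are duty ratios nominal - span ... nominal + span."""
    n_side = round(span / step)
    return [round(nominal + k * step, 10) for k in range(-n_side, n_side + 1)]


def epsilon_greedy(q_values, epsilon, rng):
    """Return a random action index with probability epsilon, else the argmax."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


def td_target(reward, next_q_values, done, gamma=GAMMA):
    """Standard one-step DQN target: r + gamma * max_a' Q(s', a')."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


ACTIONS = build_action_set(D_NOMINAL, D_RANGE, D_STEP)
replay = deque(maxlen=MEMORY_SIZE)  # oldest transitions are dropped when full

rng = random.Random(0)
q_example = [0.0] * len(ACTIONS)    # placeholder Q-values, not trained output
idx = epsilon_greedy(q_example, EPSILON, rng)
replay.append((None, idx, 0.0, None, False))  # (state, action, reward, next_state, done)
if len(replay) >= BATCH_SIZE:
    batch = rng.sample(list(replay), BATCH_SIZE)

print(len(ACTIONS), ACTIONS[0], ACTIONS[-1])
```

With this interpretation the agent chooses among 11 duty ratios between 0.4 and 0.6. A bounded `deque` is one simple way to get the first-in, first-out behaviour of a fixed-size replay memory.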

| Controller | Load Step: Settling Time | Load Step: Overshoot | Voltage Drop: Settling Time | Voltage Drop: Overshoot |
|---|---|---|---|---|
| PI | 0.14 s | 103% | 0.12 s | 28% |
| DQN | 0.051 s | 62% | 0.055 s | 10.5% |
| DQN+PI | 0.085 s | 65% | 0.065 s | 10.5% |
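
The relative improvements implied by this table can be computed directly. The short sketch below uses only the values listed above; the dictionary keys and the helper name `reduction_pct` are illustrative choices, not notation from the source.

```python
# Settling times in seconds and overshoot in percent, copied from the table.
RESULTS = {
    "PI":     {"load_ts": 0.14,  "load_os": 103.0, "vin_ts": 0.12,  "vin_os": 28.0},
    "DQN":    {"load_ts": 0.051, "load_os": 62.0,  "vin_ts": 0.055, "vin_os": 10.5},
    "DQN+PI": {"load_ts": 0.085, "load_os": 65.0,  "vin_ts": 0.065, "vin_os": 10.5},
}


def reduction_pct(baseline: float, value: float) -> float:
    """Relative reduction of `value` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - value) / baseline


for name in ("DQN", "DQN+PI"):
    for metric in ("load_ts", "load_os", "vin_ts", "vin_os"):
        gain = reduction_pct(RESULTS["PI"][metric], RESULTS[name][metric])
        print(f"{name:7s} {metric:8s} reduced by {gain:5.1f}% relative to PI")
```

For the tabulated values, DQN+PI shortens the settling time relative to PI by about 39.3% after the load step and about 45.8% after the input voltage drop, and reduces the voltage-drop overshoot by 62.5%.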

| | NB | NM | NS | ZE | PS | PM | PB |
|---|---|---|---|---|---|---|---|
| NB | NB | NB | NM | NM | NS | ZE | ZE |
| NM | NB | NM | NM | NS | ZE | ZE | PS |
| NS | NM | NM | NS | ZE | PS | PM | PM |
| ZE | NM | NS | ZE | ZE | ZE | PS | PM |
| PS | NS | ZE | PS | PM | PM | PM | PB |
| PM | ZE | PS | PM | PM | PM | PB | PB |
| PB | ZE | PS | PM | PM | PB | PB | PB |
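
The rule base above can be exercised with a small inference routine. The source implements the fuzzy controller with MATLAB's Fuzzy Logic Toolbox; the Python sketch below is not that implementation. It assumes that rows index the error label and columns the change-of-error label, that all variables share a normalized universe of [-1, 1] with evenly spaced triangular sets, that rules are combined with `min`, and that defuzzification is a weighted average of the output set peaks (a simplification of centroid defuzzification).

```python
LABELS = ["NB", "NM", "NS", "ZE", "PS", "PM", "PB"]

# Rule table copied from the source.
# ASSUMPTION: key = error label, list position = change-of-error label.
RULE_ROWS = {
    "NB": ["NB", "NB", "NM", "NM", "NS", "ZE", "ZE"],
    "NM": ["NB", "NM", "NM", "NS", "ZE", "ZE", "PS"],
    "NS": ["NM", "NM", "NS", "ZE", "PS", "PM", "PM"],
    "ZE": ["NM", "NS", "ZE", "ZE", "ZE", "PS", "PM"],
    "PS": ["NS", "ZE", "PS", "PM", "PM", "PM", "PB"],
    "PM": ["ZE", "PS", "PM", "PM", "PM", "PB", "PB"],
    "PB": ["ZE", "PS", "PM", "PM", "PB", "PB", "PB"],
}


def memberships(x: float) -> list:
    """Membership degrees of x in the seven triangular sets.

    ASSUMPTION: peaks at k/3 for k = -3..3 on a normalized universe [-1, 1].
    The clamp plays the role of the input limiter.
    """
    x = max(-1.0, min(1.0, x))
    return [max(0.0, 1.0 - abs(3.0 * x - k)) for k in range(-3, 4)]


def fuzzy_output(error: float, d_error: float) -> float:
    """Normalized duty-cycle adjustment for normalized error inputs."""
    mu_e = memberships(error)
    mu_de = memberships(d_error)
    num = 0.0
    den = 0.0
    for i, row_label in enumerate(LABELS):
        for j, out_label in enumerate(RULE_ROWS[row_label]):
            weight = min(mu_e[i], mu_de[j])           # rule firing strength
            peak = (LABELS.index(out_label) - 3) / 3  # peak of the output set
            num += weight * peak
            den += weight
    return num / den if den > 0.0 else 0.0


print(fuzzy_output(0.0, 0.0))
print(fuzzy_output(0.5, -0.2))
```

The returned value is still normalized; in the structure described in Section 4.4 it would be scaled by the output gain before being applied to the duty cycle.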


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nie, P.; Wu, Y.; Wang, Z.; Xu, S.; Hashimoto, S.; Kawaguchi, T. The Voltage Regulation of Boost Converters via a Hybrid DQN-PI Control Strategy Under Large-Signal Disturbances. Processes 2025, 13, 2229. https://doi.org/10.3390/pr13072229
