Abstract

With the integration of high proportions of renewable energy and power electronic devices, the spatial–temporal correlation scale of emerging distribution services has increased sharply, and the demand for collaboration between distribution areas is growing steadily. Currently, distribution systems rely heavily on cloud master stations to synchronize state across feeders for such services. However, this approach struggles to adapt to the diverse delay-sensitive characteristics anticipated in future large-scale integration. Therefore, a novel edge-side state synchronization method for cross-feeder operations in distribution systems, tailored to diverse delay-sensitive services, is proposed. Firstly, the traditional integrated hierarchical vertical network structure is extended to construct “edge–cloud–edge” and “edge–edge” dual data transmission channels for nodes in different distribution areas. Secondly, considering the characteristics of each channel, the expected synchronization delays of differentiated services are calculated, and an optimization problem is formulated with the objective of maximizing the minimum expected synchronization delay redundancy rate. Finally, an iterative variable weighting method is designed to solve this optimization problem. Simulation analysis shows that the proposed algorithm can better adapt to the high-concurrency, differentiated state synchronization demands of diverse delay-sensitive services across distribution areas, supporting the flexible, intelligent, and digital transformation of distribution networks.

1. Introduction

With the large-scale integration of renewable energy and power electronic equipment, the operating mechanism of the distribution system has changed significantly. Academia and industry have therefore jointly put forward the concept of a new-type distribution system to characterize the new form and features that the distribution system will take on under the “dual-carbon” goal, and the corresponding services are called new-type distribution services. The scale of spatiotemporal correlations in new-type distribution services has increased sharply. Taking transformer districts as typical regional planning units of distribution networks, the demand for cross-regional collaboration is growing steadily and exhibits diverse delay sensitivity. This manifests as differentiated deterministic synchronization requirements for the states of cross-regional collaborative services in new-type distribution systems, such as low-voltage flexible DC interconnection [1,2]. However, at the current stage, distribution systems rely heavily on cloud master stations to support low-voltage cross-regional collaborative services, such as energy exchange between transformer districts, which makes it difficult to adapt to the growing service scale. How to fully exploit the collaborative potential of heterogeneous resources in different regions has become the key to supporting large-scale cross-regional collaborative services [3].

The effectiveness of cross-station state synchronization for multiple delay-sensitive services depends mainly on two aspects: network architecture design and delay optimization. Network architecture design refers to the data transmission network constructed by integrating heterogeneous communication technologies and nodes. Delay optimization refers to reducing the time required for cross-station transmission of the relevant service data. Work on these two aspects is reviewed below.

In terms of network architecture design, the literature [4,5] conducted grid planning for distribution networks with the objectives of minimizing annual comprehensive costs and minimizing the life-cycle cost, respectively. The literature [6] established a multi-objective planning model for distribution networks by considering grid security, power supply reliability, and load coverage. The literature [7] established a grid planning model for distribution networks that included microgrids. The literature [8] considered the impact of load forecasting uncertainty on distribution network grid planning. The literature [9] adopted a genetic algorithm to optimize the planning of distribution network grids. However, most of the existing design methods for distribution communication network grids adopt an integrated hierarchical vertical model, where cross-transformer district nodes cannot communicate directly, and the cloud master station is the only hub to support cross-regional collaborative businesses, making it difficult to adapt to the increasing business scale in the future.

In addition, end-to-end communication delay is an important indicator for ensuring the deterministic synchronization of cross-regional collaborative business states. With the goal of optimizing end-to-end data transmission delay, in terms of joint optimization of relevant heterogeneous resources, the literature [10] proposed a convex optimization-based joint allocation algorithm for power and bandwidth in heterogeneous wireless networks, which maximizes the total system capacity under the constraints of meeting user minimum rates and fairness. The literature [11] modeled the joint optimization problem of power and bandwidth as a min–max fractional stochastic programming. The literature [12] proposed a resource allocation algorithm for cellular-assisted device-to-device communication in advanced metering infrastructure for smart grids. The literature [13] proposed a knowledge-assisted domain adversarial neural network-based resource allocation strategy aimed at reducing the number of underperforming base stations by dynamically allocating wireless resources to meet real-time mobile traffic demands. However, most of the above methods are based on a single data channel and rarely involve the many-to-many matching problem of processing concurrent businesses on multiple available channels.

Based on the above analysis, summarized in Table 1, an edge-side cross-area state synchronization method for new-type distribution systems adapted to multiple delay-sensitive services (SA-MDS) is proposed. The main contributions are as follows:

Table 1.

Difference between the proposed algorithm and previous work.

Firstly, the traditional integrated hierarchical vertical grid is evolved to build “edge–cloud–edge” and “edge–edge” dual data transmission channels for cross-transformer district nodes.

Secondly, the expected synchronization delays of differentiated services are calculated considering the characteristics of the different channels, and an optimization problem is established with the goal of maximizing the minimum expected synchronization delay redundancy rate. In this way, large-scale cross-regional collaboration needs are met with a deterministic support proportion of 100% while using fewer resources than the related work.

Finally, an iterative variable weighting method is designed to solve it, reasonably allocating data transmission priorities, channels, and computing resources. Simulation results show that the proposed SA-MDS can better adapt to the high-concurrency differentiated cross-transformer district state synchronization requirements of multiple delay-sensitive businesses.

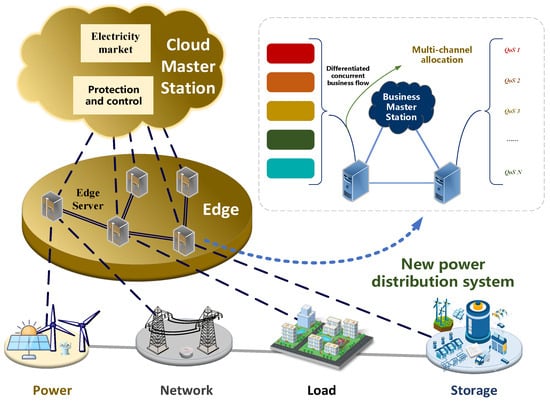

2. System Model

To better adapt to large-scale cross-regional collaborative services and to account for the diverse delay-sensitive characteristics in distribution systems (i.e., the varying data transmission delay requirements of different services), we propose to incrementally deploy “edge-to-edge” direct data transmission channels for cross-substation nodes on top of the traditional integrated hierarchical vertical network architecture [12], as shown in Figure 1. The cloud–edge collaborative precise data interconnection system model for cross-regional edge substations consists of the following elements: a business cloud master station, which is assumed to transparently forward data uploaded from one edge substation to an edge substation in another substation area; a set of edge substations, each with its own identifier; a set of channels comprising the “edge-to-cloud-to-edge” and “edge-to-edge” channels; and a set of differentiated concurrent services, each with its own identifier (written k in the remainder of the paper). The main abbreviations and symbols are shown in Table 2.

Figure 1.

System model diagram.

Table 2.

Definition of symbols and abbreviations.

The system model primarily targets substation areas whose power sources and loads have complementary or interrelated temporal and spatial characteristics. Typical services include energy mutual support among substation areas, fault section isolation, and intelligent self-healing in distribution networks. It is assumed that the status data of such services must be synchronized between edge substations in different substation areas, with varying delay requirements. Edge substations in different substation areas can synchronize status data through either the “edge-to-cloud-to-edge” or the “edge-to-edge” channel, involving processes such as queue scheduling [13], data compression [14], and channel allocation [15]. Compared with the traditional integrated hierarchical vertical network architecture, this model introduces additional “edge-to-edge” channels that support direct data connection between edge substations, complementing the traditional single “edge-to-cloud-to-edge” channel to serve services with tighter delay requirements. With the proposed architecture, as the number of edge nodes grows, each edge-side node gains the ability to communicate locally in a self-organized, multi-hop manner. Although the actual environment may interfere with edge-to-edge communication because of the performance limits of the communication technology, the proposed dual-channel architecture can adaptively adjust the cross-station synchronization scheme for multi-service data to meet the corresponding latency requirements as far as possible.

3. Edge-Side Cross-Substation Area Business Status Synchronization Method

Based on the aforementioned system model, the objective is to achieve deterministic synchronization of cross-substation area status for diverse delay-sensitive business operations. We derive relevant formulas to quantitatively characterize the expected delay in synchronizing a given business status across different channels. Based on this, we design and solve corresponding optimization problems, as detailed below:

3.1. Calculation of Expected Synchronization Delay

According to the system model, the state data processing flow includes data compression, which is related to the allocated computing resources for the business operation [16,17,18]. The corresponding compression time calculation method is given by the following Formula (1):

In Formula (1), the time required to compress business data packet k at the sending-side edge substation is expressed in terms of three quantities: the initial data size of packet k, the number of CPU cycles required to process one unit of data, and the computing frequency allocated to compressing packet k.
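As a sketch of the relation described above, the compression time can be written as follows. The symbol names are assumptions chosen for illustration and are not necessarily the notation of Table 2: $T_k^{\mathrm{cmp}}$ is the compression time, $D_k$ the initial data size, $c$ the CPU cycles per unit of data, and $f_k$ the allocated frequency.

```latex
T_k^{\mathrm{cmp}} = \frac{D_k \, c}{f_k}
```

For instance, with the hypothetical values $D_k = 1000$ bits, $c = 1000$ cycles per bit, and $f_k = 1$ GHz, this reading gives $T_k^{\mathrm{cmp}} = 10^{6} / 10^{9}\ \mathrm{s} = 1$ ms.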

Taking into account the data compression time, the end-to-end expected synchronization delay of a service depends on the data transmission channel that supports it and is given by Formulas (2) and (3). Specifically, if the “edge-to-edge” channel is used, the expected delay is given by Formula (2):

If the “edge-to-cloud-to-edge” channel is adopted, the expected delay is given by Formula (3).

The terms in Formulas (2) and (3) are defined as follows. The queuing time is the time that the compressed business data packet k waits in the channel queue at the sending-side edge substation, which equals the sum of the transmission times of all business data packets ahead of it. The compressed data size is the size of the corresponding business status data after compression. The “edge-to-edge” transmission time is the time required for the sending-side edge substation to transmit the compressed packet k directly to the receiving-side edge substation, and it depends on the channel bandwidth between the two edge substations. The decompression time is the time required to decompress packet k after it arrives at the receiving-side edge substation. Assuming that the receiving-side edge substation can allocate the same amount of computing resources to the packet as the sending-side edge substation, based on the accompanying information, the decompression time is simplified to a fixed proportion of the compression time, given by a decompression coefficient. The uplink transmission time is the time required for the sending-side edge substation to transmit the compressed packet k to the cloud master station, and it depends on the channel bandwidth from the sending-side edge substation to the cloud master station. The forwarding time is the time required for the cloud platform to transparently forward packet k, and it depends on the amount of business data pending forwarding when packet k arrives at the cloud platform. The downlink transmission time is the time required for the cloud master station to transmit the compressed packet k to the receiving-side edge substation, and it depends on the channel bandwidth from the cloud master station to the receiving-side edge substation.
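The delay terms described above can be combined in a short Python sketch. This is an illustration under stated assumptions rather than the paper's exact Formulas (2) and (3): all names are hypothetical, each transmission time is taken as compressed size divided by bandwidth, and the cloud forwarding time is modeled as pending backlog divided by an assumed forwarding rate (`cloud_forward_bps`), which the text does not specify.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    size_bits: float        # initial data size of the packet
    compressed_bits: float  # data size after compression
    cpu_hz: float           # frequency allocated to compressing this packet
    queue_wait_s: float     # waiting time in the sending-side channel queue


def compression_time(p: Packet, cycles_per_bit: float) -> float:
    """Compression time: data size x cycles per unit of data / frequency."""
    return p.size_bits * cycles_per_bit / p.cpu_hz


def delay_edge_edge(p: Packet, cycles_per_bit: float, decomp_coeff: float,
                    bw_edge_edge_bps: float) -> float:
    """Expected delay over the direct edge-to-edge channel."""
    t_cmp = compression_time(p, cycles_per_bit)
    t_tx = p.compressed_bits / bw_edge_edge_bps
    # Decompression is a fixed proportion of the compression time.
    return t_cmp + p.queue_wait_s + t_tx + decomp_coeff * t_cmp


def delay_edge_cloud_edge(p: Packet, cycles_per_bit: float, decomp_coeff: float,
                          bw_up_bps: float, bw_down_bps: float,
                          cloud_backlog_bits: float,
                          cloud_forward_bps: float) -> float:
    """Expected delay over the edge-to-cloud-to-edge channel."""
    t_cmp = compression_time(p, cycles_per_bit)
    t_up = p.compressed_bits / bw_up_bps
    # Assumption: forwarding time = pending backlog / forwarding rate.
    t_fwd = cloud_backlog_bits / cloud_forward_bps
    t_down = p.compressed_bits / bw_down_bps
    return t_cmp + p.queue_wait_s + t_up + t_fwd + t_down + decomp_coeff * t_cmp
```

Under this reading, the relayed channel always adds the forwarding time and a second transmission hop, so with equal bandwidths and an empty queue the direct channel is never slower.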

3.2. Mathematical Model Description

The optimization problem formulated, with the objective of maximizing the minimum redundancy rate of the expected synchronization delay for concurrent differentiated services, and taking synchronization priority, available transmission channels, and computing resources as control variables, is expressed as (4) and (5):

Here, the expected synchronization delay redundancy rate for service k is calculated through Formulas (1)–(4) from the allocated priority, channel, and computing resources, and from the synchronization delay requirement of service k. As indicated by Formula (4), a larger redundancy rate signifies better service quality from the perspective of synchronization delay. Constraints C1 and C2 state that the computing resources allocated to the services are bounded by the total limit determined by the performance capacity of the source-side edge substation. Constraints C3 and C4 specify that the system assigns one channel, either “edge-to-cloud-to-edge” or “edge-to-edge”, to each service within the same operational cycle. Constraint C5 gives the range of the synchronization priority assigned by the source-side edge substation to each service [19,20,21,22].
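One way to write down the structure described above is sketched below. The notation is an assumption made for illustration: $\eta_k$ is the redundancy rate, $T_k$ the expected synchronization delay, $T_k^{\mathrm{req}}$ the delay requirement, $p_k$ the priority, $x_k$ a binary channel indicator, $f_k$ the allocated computing resources, $F$ the total computing resources, and $K$ the number of concurrent services. The redundancy rate is taken to be the relative slack against the requirement, which matches the statement later in the text that a negative value means a timeout.

```latex
\eta_k = \frac{T_k^{\mathrm{req}} - T_k}{T_k^{\mathrm{req}}}, \qquad
\max_{\{p_k,\, x_k,\, f_k\}} \; \min_{k} \; \eta_k
\quad \text{s.t.} \quad
f_k > 0, \;\; \sum_{k} f_k \le F, \;\;
x_k \in \{0, 1\}, \;\; p_k \in \{1, \dots, K\}
```

As a quick check with hypothetical numbers, a service with a 100 ms requirement and an 80 ms expected delay has $\eta_k = 0.2$, while a 120 ms expected delay gives $\eta_k = -0.2$, i.e., a timeout.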

3.3. Solving Strategies of the Problem

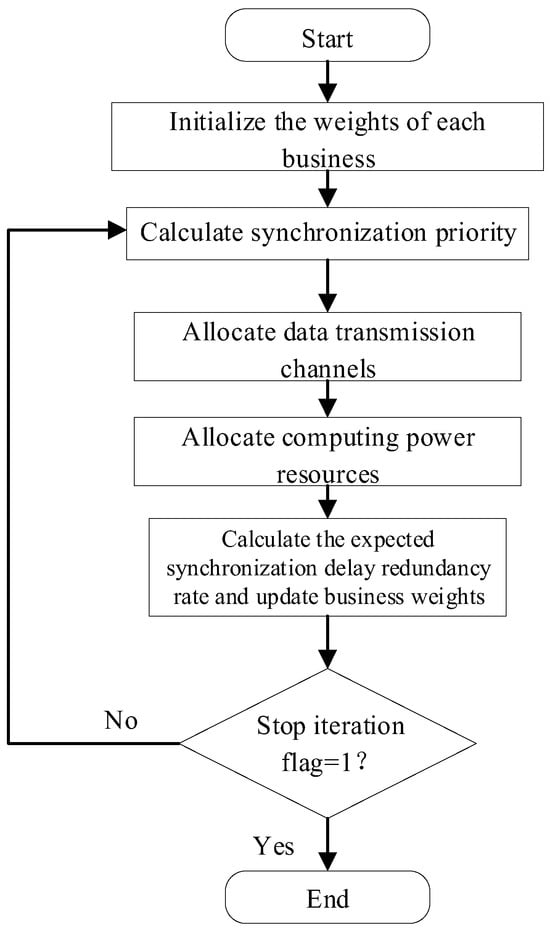

Considering the differences in delay requirements among concurrent services targeted by the optimization problem OP, an adaptive and joint allocation method for heterogeneous resources based on an iterative variable weighting mechanism is designed to efficiently solve the aforementioned problem [23,24,25]. The solving process is illustrated in Figure 2, with the specific steps as follows:

Figure 2.

Flowchart of solution.

Step 1: Considering the synchronization delay requirements of different services, initialize the weights of each service based on Equation (6). Services with tighter synchronization delay requirements are assigned higher initial weights and therefore higher initial priorities.

Step 2: Calculate the synchronization priorities of services at the source-side edge substation based on their respective weights. Taking service k as an example, its priority calculation method is as follows:

Here, the priority of service k is its rank among all services to be synchronized, and the service holding the first rank is transmitted first. The rank is determined by the set of other concurrent services to be synchronized whose weights are higher than that of service k, and by the number of services in this set. When the delay requirement of service k is the tightest, this set is empty, i.e., it contains zero services, and service k is transmitted first.
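The ranking rule in Step 2 can be sketched in a few lines of Python. This assumes that rank 1 means "transmitted first" and that a service's rank is one plus the number of other services with a larger weight; the function name and the dictionary layout are hypothetical.

```python
def synchronization_priorities(weights: dict) -> dict:
    """Rank services by weight: the largest weight gets priority 1.

    Assumption: priority = 1 + number of other services with a larger weight,
    so services with equal weights share the same priority.
    """
    return {
        k: 1 + sum(1 for j, w in weights.items() if j != k and w > weights[k])
        for k in weights
    }
```

With this convention, the service with the largest weight has no service ranked above it and is sent first.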

Step 3: Allocate data transmission channels to each service based on their synchronization priorities. Taking service k as an example, its available channel calculation method is as follows:

Here, the indicator in Equation (8) marks whether the “edge-to-edge” channel is in an idle state. According to Equation (8), when data transmission has already commenced for all other concurrent services with higher synchronization priorities than service k, and either the “edge-to-cloud-to-edge” or the “edge-to-edge” channel is idle, the “edge-to-edge” channel is allocated to service k preferentially. Otherwise, the “edge-to-cloud-to-edge” channel is allocated to it.
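A minimal sketch of the channel rule in Step 3 follows. It encodes one reading of the description, namely that the direct channel is chosen only when every higher-priority service has already started transmitting and the “edge-to-edge” channel itself is idle; the function and argument names are hypothetical.

```python
def allocate_channel(higher_priority_started: bool, edge_edge_idle: bool) -> str:
    """Pick a channel for one service.

    Assumption: prefer the direct channel when it is idle and no
    higher-priority service is still waiting; otherwise use the cloud relay.
    """
    if higher_priority_started and edge_edge_idle:
        return "edge-edge"
    return "edge-cloud-edge"
```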

Step 4: Allocate computing resources to each service based on their respective weights to minimize cross-regional end-to-end data transmission volume. Taking service k as an example, its available computing resource calculation method is as follows:

Here, the two quantities in Equation (9) are the computing resources allocated to service k and the total computing resources available at the sending-side edge substation.
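A simple way to realize a weight-based split of computing resources is a proportional share, sketched below. The proportional form is an assumption for illustration, since the exact expression of Equation (9) is not reproduced here; the names are hypothetical.

```python
def allocate_compute(weights: dict, total_hz: float) -> dict:
    """Split the sender's total computing resources in proportion to weights.

    Assumption: f_k = total * w_k / sum(w); weights must be positive.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return {k: total_hz * w / total_weight for k, w in weights.items()}
```

By construction the shares add up to the total, so the capacity limit of the sending-side edge substation is respected.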

Step 5: Based on the synchronization priorities, transmission channels, and computing resources allocated to each service, calculate the corresponding expected synchronization delay redundancy ratio using Formulas (1)–(4). Then, update the weights of each service according to their respective expected synchronization delay redundancy ratios using Equation (10). Taking service k as an example, its weight updating method is as follows:

According to the above formula, when the initial weight allocation is unreasonable, resulting in a negative expected synchronization delay redundancy ratio for certain services (i.e., the service experiences a timeout), their weights will be automatically increased in the next round of the variable weighting process, and vice versa.
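The behavior described above (weights rise after a timeout and fall when there is slack) can be reproduced with a simple multiplicative rule. The rule below is a hypothetical stand-in, not the paper's Equation (10): each weight is scaled by one minus its redundancy ratio, which equals the ratio of expected delay to required delay under the relative-slack definition of the redundancy ratio.

```python
def update_weights(weights: dict, redundancy: dict) -> dict:
    """Scale each weight by (1 - redundancy ratio).

    A negative ratio (timeout) gives a factor above 1 and raises the weight;
    a positive ratio (slack) gives a factor below 1 and lowers it.
    """
    return {k: w * (1.0 - redundancy[k]) for k, w in weights.items()}
```

Because a redundancy ratio defined as relative slack is always below 1 for a positive delay, the factor stays positive and the weights never change sign.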

Step 6: Repeat steps 2–5 until the stopping conditions specified in Equations (11) and (12) are met. Iteration ceases when the stop-iteration flag is true. This flag depends on the current iteration count and the maximum number of iterations: weight updates are halted once the iteration count exceeds the maximum. It also depends on a negative-gain flag: iteration halts immediately if the minimum expected synchronization delay redundancy ratio over the services shows no gain over a preset number of the most recent iterations. The superscript on the redundancy ratio indicates the corresponding iteration number. At this point, the corresponding resource allocation scheme is output and implemented.
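The overall loop with its two stopping conditions can be sketched as follows. This is an illustrative skeleton under assumptions, not the authors' implementation: `evaluate` and `update` are caller-supplied placeholders for Steps 2–5, `patience` stands for the number of recent iterations without gain, and the toy model at the end (two services sharing one processor) is invented purely to exercise the loop.

```python
def iterate_weights(weights, evaluate, update, max_iters=50, patience=5, tol=1e-12):
    """Iterate until max_iters is reached or the minimum ratio stops improving."""
    best_min = float("-inf")
    best_weights = dict(weights)
    stale = 0        # consecutive iterations without gain
    iterations = 0
    while iterations < max_iters and stale < patience:
        iterations += 1
        ratios = evaluate(weights)           # redundancy ratio per service
        current_min = min(ratios.values())
        if current_min > best_min + tol:
            best_min, best_weights, stale = current_min, dict(weights), 0
        else:
            stale += 1
        weights = update(weights, ratios)    # variable weighting step
    return best_weights, best_min, iterations


# Toy model (hypothetical): delay = work / share of a unit-speed processor.
work = {"a": 1.0, "b": 3.0}
required = {"a": 5.0, "b": 5.0}


def evaluate(w):
    total = sum(w.values())
    return {k: (required[k] - work[k] / (w[k] / total)) / required[k] for k in w}


def update(w, ratios):
    return {k: w[k] * (1.0 - ratios[k]) for k in w}


best_w, best_min, n_iters = iterate_weights({"a": 1.0, "b": 1.0}, evaluate, update)
```

In the toy model, equal initial weights leave service b with a negative ratio; the weighting step then shifts resources toward b until both services have the same positive slack, after which the no-gain condition stops the loop.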

4. Case Study

A comparative analysis between the proposed SA-MDS and related algorithms was conducted in a simulated environment based on Python 2021.2, involving two edge substations and one cloud master station. The edge substations are responsible for handling corresponding multi-element delay-sensitive services within their respective districts. The operational process is detailed in the second paragraph of the system model section, and specific simulation parameters are shown in Table 3. The names and principles of the compared algorithms are as follows:

Table 3.

Simulation parameters and their values.

1. Single Channel Average Resource Allocation algorithm (SCAR-A): This algorithm employs the same network configuration as SA-MDS but only provides a single “edge-to-cloud-to-edge” channel for transmitting differentiated services. On this basis, it equally allocates channel and computing resources to all services.

2. Dual-Channel Average Resource Allocation algorithm (DCAR-A): This algorithm also adopts the same network configuration as SA-MDS and equally allocates channel and computing resources to all services.

Algorithm Performance Analysis

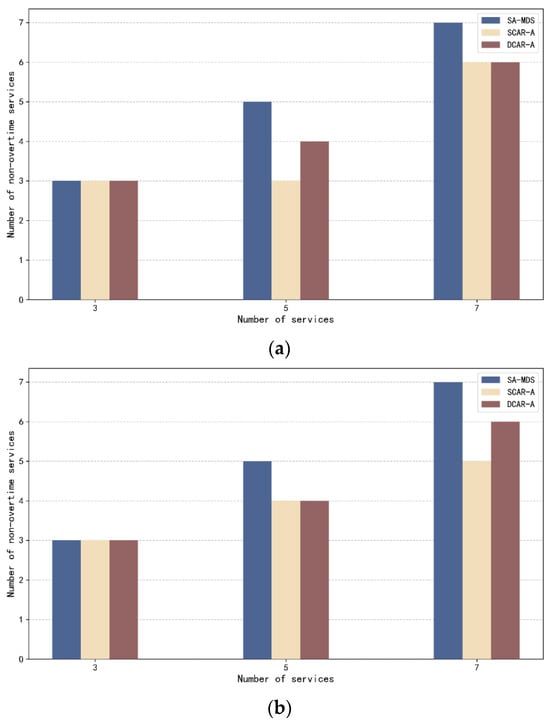

Firstly, we analyze the impact of the number of services on algorithm performance. For generality, a transmission is considered successful if it satisfies the delay requirement. Figure 3 shows the performance of the algorithms with different numbers of services (3, 5, and 7) when the service packet size is 1500 bits and 1000 bits. In Figure 3a, when the number of services is 3, all three algorithms complete three services, i.e., all services are handled successfully. As the number of services increases, the number of successful transmissions achieved by SCAR-A and DCAR-A deteriorates. In Figure 3b, after the service packet size is changed, SA-MDS maintains stable performance across the different numbers of services, and its number of successful transmissions always equals the number of services, whereas the number of services completed by SCAR-A and DCAR-A decreases markedly as the data volume and the number of services increase.

Figure 3.

Number of non-overtime services for different numbers of services. (a) Edge-to-cloud channel bandwidth = 10 Mbps, packet size = 1500 bits. (b) Edge-to-cloud channel bandwidth = 10 Mbps, packet size = 1000 bits.

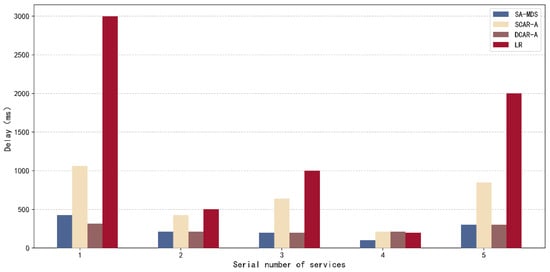

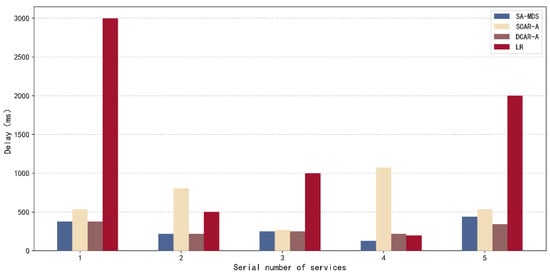

As shown in Figure 4, with a computing power of 1 GHz, edge–edge and edge–cloud bandwidths of 10 Mbps, a packet size of 1000 bits, and five services, the delay of SA-MDS for each service is relatively stable, fluctuates little between neighboring service numbers, and stays close to the corresponding delay requirement. The delay of SCAR-A fluctuates drastically and is generally high; for example, the delay of service 5 reaches 849 ms, which is more than 200% of the corresponding delay under SA-MDS. Compared with the delay requirements, all services under SA-MDS finish within their requirements, while SCAR-A and DCAR-A cannot effectively meet them. It can be seen that SA-MDS has better performance and applicability and can provide more reliable support for delay-sensitive services.

Figure 4.

Comparison of transmission delay for different algorithmic services.

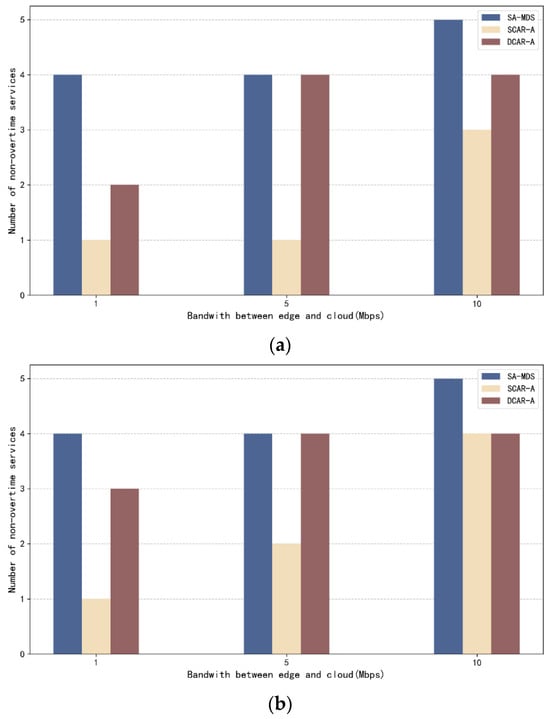

Then, we analyze the impact of the edge–cloud bandwidth on algorithm performance. Figure 5 shows the number of successfully transmitted services for the different algorithms under different edge–cloud channel bandwidths (1 Mbps, 5 Mbps, and 10 Mbps) when the service packet size is 1500 bits and 1000 bits, respectively, with five concurrent services. In Figure 5a, SA-MDS maintains better transmission performance across the bandwidths and adapts best to bandwidth changes; SCAR-A performs poorly at low bandwidth but improves greatly at high bandwidth; and DCAR-A performs poorly. Comparing Figure 5a with Figure 5b, SA-MDS still has a clear advantage at high bandwidth; SCAR-A improves at low bandwidth and remains stable at high bandwidth; and DCAR-A is almost unaffected by the data volume, with stable performance but limited improvement. On the whole, SA-MDS performs best under the different data volume and bandwidth conditions.

Figure 5.

Number of non-overtime services under different edge–cloud channel bandwidths. (a) Number of services = 5, packet size = 1500 bits. (b) Number of services = 5, packet size = 1000 bits.

As shown in Figure 6, with a computing power of 1 GHz, an edge–edge bandwidth of 10 Mbps, an edge–cloud bandwidth of 8 Mbps, and a packet size of 1000 bits, SA-MDS achieves better delay performance for every service number: its delay stays within the delay requirement and fluctuates relatively smoothly. The delay of SCAR-A for service 4 is more than 500% of the delay requirement, and its delay fluctuation is relatively large. Compared with Figure 4, after the bandwidth of the edge–cloud channel is changed, the delay of SA-MDS is more stable and lower, and closer to the delay requirement; the delay of SCAR-A fluctuates more and is high overall; the delay of DCAR-A is similar to that of SA-MDS for some service numbers but fluctuates at service numbers 4 and 5, making it less stable than SA-MDS.

Figure 6.

Comparison of transmission delay for different algorithmic services.

5. Summary and Outlook

For the new-type power distribution system, a novel edge-side cross-district state synchronization method for power distribution systems that accommodates multi-element delay-sensitive services is proposed. This method dynamically allocates the cross-domain end-to-end transmission process of differentiated concurrent services to two transmission channels: “edge-to-cloud-to-edge” and “edge-to-edge.” By reasonably and jointly deploying heterogeneous resources such as communication and computing power, it effectively addresses the challenge of deterministic state synchronization for cross-regional collaborative services from the perspective of delay, thereby meeting their large-scale cross-regional collaboration needs with a proportion of deterministic support of 100% based on fewer resources compared with the related work. The aim is to facilitate the flexible, intelligent, and digital transformation of the power distribution grid. In the future, we will build on this work to further coordinate the data acquisition process of wireless sensor networks and strive to achieve the whole life cycle delay control of business data.

Author Contributions

Conceptualization, Y.Z., L.L., C.W. and L.Y.; methodology, Y.Z., L.L., C.W. and L.Y.; software, Y.Z., L.L., C.W. and L.Y.; investigation, Y.Z., L.L., C.W. and L.Y.; writing—original draft preparation, Y.Z., L.L., C.W. and L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by Project Funding from State Grid Jibei Electric Power Co., Ltd. (No. SGJBJY00SJJS2400031).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

Authors Yi Zhou, Li Li and Lin Yang were employed by the State Grid Jibei Electric Power Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Yu, Y.; Wang, T.; Xie, M.; Xie, M.; Zhang, D.; Sun, W.; Zhang, J. Research and application of intelligent distributed distribution network protection based on a 5G network. Power Syst. Prot. Control 2021, 49, 16–23.

- Chen, J.; Sun, Z.; Zeng, Z.; Sui, L.; Wang, L.; Yang, C. Design of Communication Network Architecture for “Power+5G” Fusion Technology. Microcomput. Appl. 2023, 39, 212–215.

- Yao, P.; Fan, Q.; Cao, Y. Research on communication technology of smart distribution network. Sci. Technol. Innov. Appl. 2023, 13, 189–192.

- Zhou, L.; Wang, X.; Ding, X.; Yan, Z.; Li, S. Application of genetic algorithm/tabu search combination algorithm in distribution network structure planning. Power Syst. Technol. 1999, 23, 35–37.

- Su, H.; Zhang, J.; Liang, Z.; Niu, S. Multi-stage Planning Optimization for Power Distribution Network Based on LCC and Improved PSO. Proc. CSEE 2013, 33, 118–125.

- Peng, X.; Lin, L.; Weng, Y.; Lin, Z.; Liu, Y. Decision-making Method for Anti-disaster Distribution Network Backbone Upgrade Based on Fuzzy Comprehensive Evaluation and Comprehensive Weights. Autom. Electr. Power Syst. 2015, 39, 172–178.

- Fan, P.; Zhang, L.; Xiong, H.; Zhang, H.; Wang, D. Distribution network planning containing micro-grid based on improved bacterial colony chemotaxis algorithm. Power Syst. Prot. Control 2012, 40, 12–18.

- Li, Z.; Wang, G.; Chen, Z.; Wang, L. Flexible network planning considering islanding scheme for distribution systems with distributed generators. Autom. Electr. Power Syst. 2013, 37, 42–47.

- Yan, W.; Wang, L. Distribution network structure planning based on improved immune genetic algorithm. Chongqing Univ. (Nat. Sci. Ed.) 2007, 30, 28–31.

- Miao, J.; Hu, Z.; Yang, K.; Wang, C.; Tian, H. Joint Power and Bandwidth Allocation Algorithm with QoS Support in Heterogeneous Wireless Networks. IEEE Commun. Lett. 2012, 16, 479–481.

- Xu, L.; Zhuang, W. Energy-Efficient Cross-Layer Resource Allocation for Heterogeneous Wireless Access. IEEE Trans. Wirel. Commun. 2018, 17, 4819–4829.

- Liu, K.; Sheng, W.; Zhao, P.; Ye, X. Distributed Control Strategy of Photovoltaic Cross-district Based on Consistency Algorithm. High Volt. Eng. 2021, 47, 4431–4439.

- Yang, M.; Wu, C.; Shen, C.; Qiu, Y. Research on Queue Scheduling Strategy on IEEE 802.1 Based TSN Switch. ACTA Electron. Sin. 2022, 50, 2090–2095.

- Yao, J.; Yu, Z.; Kong, D.; Xin, P.; Fu, Z.; Zhang, S.; Zhou, Z. Intelligent Resource Allocation Method of Communication, Sensing, and Computing Considering Service Adaptability in Distribution Grid. Power Syst. Technol. 2025, 1–13.

- Chen, Z.; Mi, Y.; Du, G.; Xu, C.; Liu, G.; Li, G. Flow Distribution Performance Research on Solar Filed of Molten Salt Parabolic trough Solar Power Plants. Acta Energiae Solaris Sin. 2023, 44, 516–524.

- Al-Kadhim, H.M.; Al-Raweshidy, H.S. Energy efficient data compression in cloud based IoT. IEEE Sens. J. 2021, 21, 12212–12219.

- Yuan, L.; Liu, T.; He, Y.; Zhang, H.; Shu, H. Power quality data compression method based on lightning search algorithm and atomic decomposition. J. Electron. Meas. Instrum. 2022, 36, 98–108.

- Yang, T.; Li, D.; Cai, S.; Yang, F. Power Data Compression and Encryption Method for User Privacy Protection. Proc. CSEE 2022, 42, 58–69.

- Zhou, Z.; Wang, Z.; Liao, H.; Wang, Y.; Zhang, H. 5G cloud-edge-end collaboration framework and resource scheduling method in power internet of things. Power Syst. Technol. 2022, 46, 1641–1651.

- Zhang, X.; Liu, D.; Li, B.; Cai, W.; Zhao, G. Discussion on the functional architecture system design and innovation mode of intelligent power internet of things. Power Syst. Technol. 2022, 46, 1633–1640.

- Li, H.; Assis, K.D.R.; Yan, S.; Simeonidou, D. DRL based long-term resource planning for task offloading policies in multiserver edge computing networks. IEEE Trans. Netw. Serv. Manag. 2022, 19, 4151–4164.

- Wang, Z.; Xue, G.; Qian, S.; Li, M. CampEdge: Distributed computation offloading strategy under large-scale AP-based edge computing system for IoT applications. IEEE Internet Things J. 2021, 8, 6733–6745.

- Liu, Z.; Li, B.; Yang, M.; Yan, Z. Adaptive channel reservation multiple access protocol based on differentiated traffic guarantee. J. Northwestern Polytech. Univ. 2024, 42, 84–91.

- Song, Y.; Kong, P.-Y.; Kim, Y.; Baek, S.; Choi, Y. Cellular-Assisted D2D Communications for Advanced Metering Infrastructure in Smart Gird. IEEE Syst. J. 2019, 13, 1347–1358.

- Chen, Y.; Zheng, Y.; Xu, J.; Lin, H.; Cheng, P.; Ding, M. Knowledge-Assisted Resource Allocation With Domain Adversarial Neural Networks. IEEE Trans. Netw. Serv. Manag. 2024, 21, 6493–6504.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).