Abstract

With increasing digitization worldwide, machine learning has become a crucial tool in industrial design. This study proposes a novel machine learning-guided optimization approach for the structural design of protective helmets. Four algorithms were compared: random forest (RF), support vector machine (SVM), eXtreme gradient boosting (XGB), and multilayer perceptron (MLP), with hyperparameters determined by ten-fold cross-validation and grid search. The RF model achieved the best predictive performance and therefore provides a reliable framework for guiding structural optimization. SHapley Additive exPlanations (SHAP) analysis of the input features shows that three structures, namely the transverse curvature at the foremost point of the forehead, the elevation angle of the helmet forehead bottom edge, and the maximum curvature along the longitudinal centerline of the forehead, contribute most for both optimization targets (head angular velocity and the Brain Injury Criterion, BrIC). This work provides an objective approach to the structural optimization of protective helmets and further promotes the use of machine learning in industrial design.

1. Introduction

Artificial intelligence has been one of the most active areas of global scientific research in recent years and has driven significant developments in many fields. As industrial technologies advance and market competition intensifies, an increasing number of enterprises are paying attention to the quality and efficiency of product design. Machine learning, a branch of artificial intelligence, can provide efficient, accurate, and innovative solutions for industrial design [1,2,3]. It can extract patterns and structures from large datasets and subsequently apply them to novel designs [4,5,6]. In industrial design, machine learning has been deployed for tasks such as data processing, analysis, classification, and prediction, providing precise and efficient support to the field [7,8]. The defining characteristic of machine learning is that it gives computers the ability to learn and adapt from data: by analyzing and generalizing from large amounts of data, it extracts patterns and regularities, which are then used for prediction and decision making [9]. The advantage of this approach is that it can handle complex problems even when manually coding a solution is difficult [10,11,12,13,14]. Building on this capability, machine learning has matured and achieved many significant breakthroughs. For example, in image recognition it can accurately identify objects and faces, while in natural language processing it can automatically understand and translate human language [15,16,17,18]. With further technological advances, machine learning is expected to achieve more significant breakthroughs, bringing additional convenience and innovation to humanity.

On the battlefield, soldiers may suffer a range of injuries, including penetrating injuries, impact injuries, blunt force trauma, burns, and radiation injuries. Among these, impact injuries are the most common and often unavoidable. Therefore, improving protection against impact can significantly reduce the likelihood of soldiers being harmed in combat. Given the critical importance of the head, safeguarding it from impact is paramount. The structural design of the protective helmet is therefore a crucial aspect of protective equipment design, and a well-designed structure can significantly enhance soldiers' ability to cope with hazardous situations on the battlefield. In actual combat, protective helmets primarily safeguard the head from external threats such as bullets, artillery, and explosions. Hence, modern helmet designs must provide excellent ballistic resistance and protective capability while minimizing weight to preserve wearer mobility [19,20,21,22,23]. In addition, functional modules such as communication, night vision, and environmental sensing are integrated into protective helmets, providing soldiers with additional support and convenience [24,25,26]. Despite considerable progress in helmet design over the years, challenges remain [27,28,29]. In the event of an impact, an inappropriate helmet structure may lead to severe brain injury for the wearer [30,31,32]. Further research is therefore needed to design helmet structures that comply with ergonomics and improve safety during impacts. In the past, helmet designs relied on simulation software to assess stamping performance or on pressure testing after manufacturing [33,34], and optimizing protective performance has often depended on the designer's experience.
The emergence of machine learning provides new solutions to this problem. Machine learning methods can analyze large volumes of head injury data and yield in-depth insight into the protective performance of helmets under various threats. Such analysis gives designers valuable information for improving the helmet structure to enhance its ballistic performance, protective performance, and comfort.

In this study, a machine learning method for optimizing the structural design of protective helmets is proposed. By analyzing the impact severity associated with different helmet shapes during impact, the method explores how the helmet structure can be optimized. Machine learning was used to analyze the relationships among helmet structural parameters, head angular velocity during impact, and injury severity. This analysis identified helmet structures that are significantly linked to impact-induced injury, offering scientific guidance for helmet designers. Random forests (RFs), eXtreme gradient boosting (XGB), support vector machines (SVMs), and multilayer perceptrons (MLPs) were used to analyze the impact data. Potential head and brain injuries under impact were evaluated using two criteria: the Brain Injury Criterion (BrIC) and the angular velocity of the head center of mass. The influence of various helmet structures on brain injury during impact was then analyzed with these models, and the results were used for reverse optimization of the helmet structure. This enables helmet designers to design helmets efficiently according to specific requirements, providing soldiers with stronger protection and improving their survival and combat effectiveness on the battlefield.

2. Research Methods

2.1. Experimental Design and Data Acquisition

This study primarily uses machine learning to analyze helmet structural data and predict the resulting angular velocity or injury severity, with the goal of establishing a relationship between helmet structure, angular velocity, and injury severity. Because the data collection process shapes all subsequent experimental procedures, a testing platform consisting of sensors and a head dummy model was developed to measure the angular velocity and injury severity resulting from helmet impacts. Kistler 9712B5000 force sensors (Kistler Group, Winterthur, Switzerland) were used with a data acquisition frequency of 100 kHz and a channel frequency class (CFC) filter of 5000; these sensors have a measurement range of up to 22 kN, and seven units were deployed during testing. Acceleration was measured with Kistler M0064C series accelerometers (Kistler Group, Winterthur, Switzerland), sampled at 20 kHz with a measurement range of ±2000 g. Angular velocity was measured with DTS ARS PRO-8K angular velocity sensors (DTS, Seal Beach, CA, USA), sampled at 20 kHz. The headforms used in this research were sourced from Biokinetics and Associates Ltd., conform to ISO specifications, and have a circumference of 575 mm.

In terms of experimental setup, the deployment of related equipment was conducted with reference to the methodology described in Philippens, M. M. G. M., Anctil, B., & Markwardt, K. C. (2014) [35] in their article “Results of a round robin ballistic load sensing headform test series”. A reference plane was established as the benchmark for subsequent experiments, with fixtures employed to stabilize testing distances and angles, thereby minimizing potential experimental variables. During the tests, an impact device was used to subject the helmeted head dummy to impacts, with thin membranes placed at regular intervals between the impactor and the helmet to verify whether the impact reached the helmet. Building on this experimental framework, different helmet types were systematically varied for data collection. Prior to testing, advanced scanning techniques were utilized to model helmet parameters in detail, providing a foundation for subsequent simulations and determining the structural parameters of the helmets used in the experiments.

2.2. Machine Learning Framework

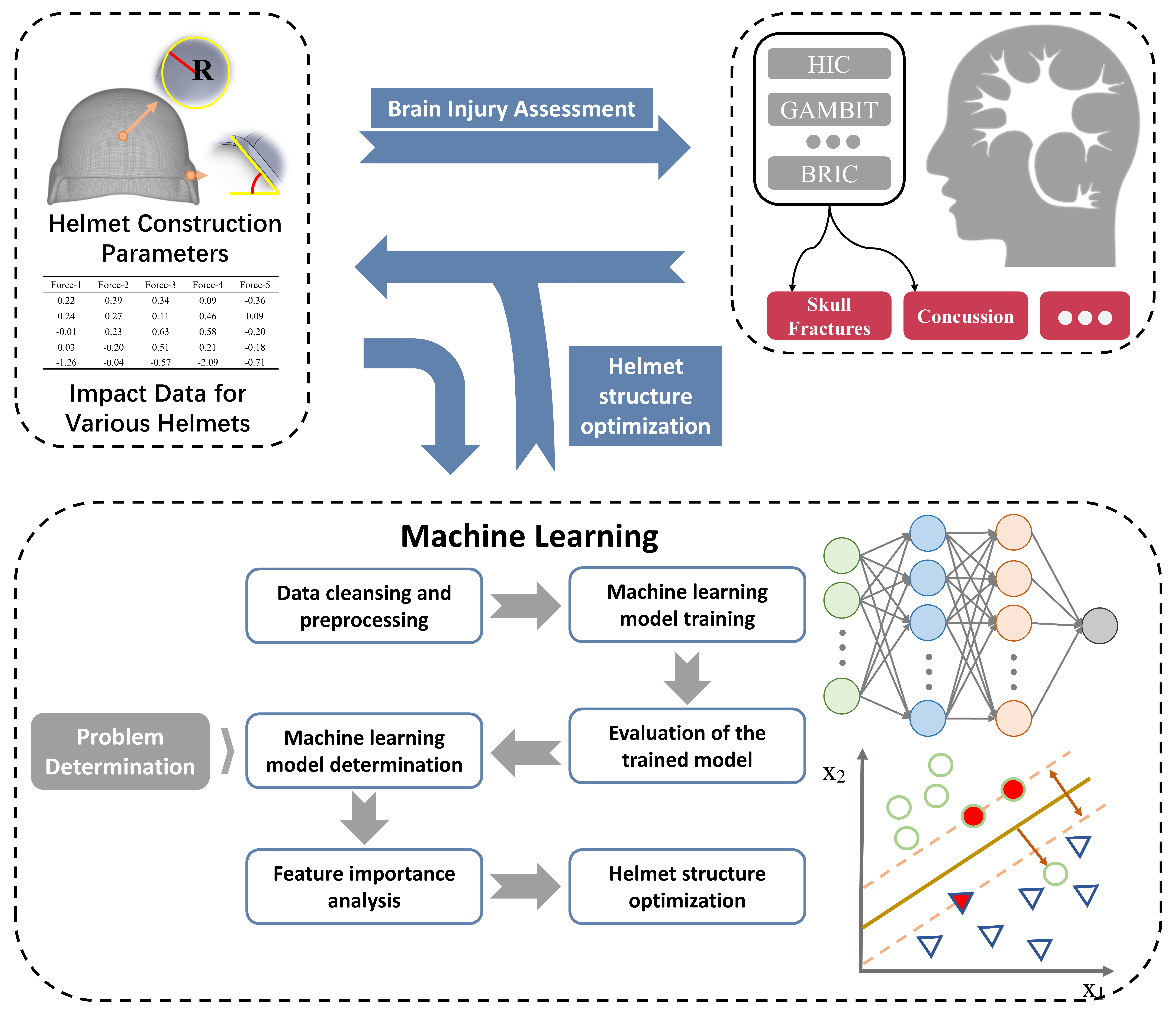

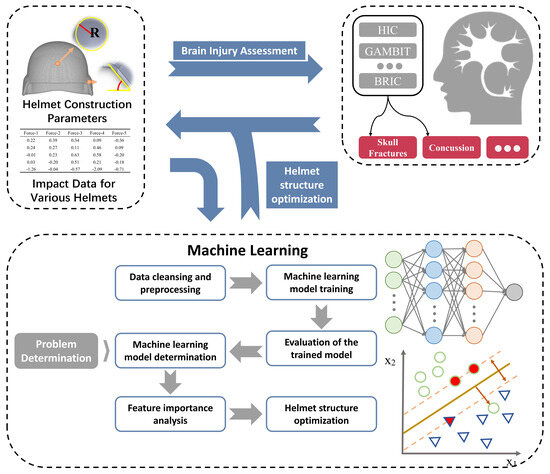

The application of machine learning to the optimization of protective helmet structures relies on its strong data processing capability. The modeling data for helmet structures and the corresponding protective-effect data are processed to learn a nonlinear mapping from structural parameters to protective effect. An importance analysis of the input features then identifies the structural features that most influence the helmet's response to impact, and these features are used for subsequent structural optimization. In this way, machine learning guides the structural optimization of an impact-resistant helmet. The complete framework of the machine learning model for helmet design optimization is illustrated in Figure 1.

Figure 1.

The technological pathway for utilizing machine learning in the optimization of protective helmet structures.

The basic process of solving a problem with machine learning typically involves the following steps. First, the problem must be clearly defined and its type determined, such as classification, regression, or clustering [36,37]. Next, data are collected and prepared, ensuring their quality and completeness [38,39]. During this stage, the data should be explored to understand their characteristics, structure, and quality, and cleaned of missing values, outliers, duplicates, and similar issues [40,41,42]. Feature engineering follows: selecting and constructing appropriate features can improve model performance, and this step includes feature selection, feature transformation, and dimensionality reduction [43,44,45]. In this study, to avoid an excessively high-dimensional feature space when processing structural data, certain structural features were simplified and only the most representative ones were retained. After feature processing, the dataset is split into training, validation, and test sets. The training set is used for model training, the validation set for tuning hyperparameters and evaluating model performance, and the test set for the final evaluation of the models' generalization performance. An appropriate machine learning algorithm is then selected based on the characteristics of the problem and trained on the training set, which typically involves choosing a suitable loss function and tuning hyperparameters. After training, the model is evaluated on the test set to examine its generalization ability and predictive performance.
Because this study mainly uses regression algorithms, the evaluation metrics include Mean Squared Error (MSE), Root Mean Squared Error (RMSE), R-squared, and the correlation coefficient [46,47,48]. If the model performs poorly, adjustments are made based on its performance on the validation set, such as tuning the model's hyperparameters or revising the feature selection [49,50,51,52]. Finally, the best-performing model is applied to the prediction of helmet protective performance. After deployment, the model can be continuously monitored and further optimized to ensure its effectiveness and reliability in practical applications.
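As an illustration only, the four regression metrics named above can be computed as in the minimal NumPy sketch below. The function name `regression_metrics` is hypothetical; scikit-learn's `mean_squared_error` and `r2_score` return the same MSE and R-squared values.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, R-squared and Pearson r for a set of regression predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred
    mse = float(np.mean(residuals ** 2))
    rmse = float(np.sqrt(mse))
    # Coefficient of determination: R^2 = 1 - SS_res / SS_tot
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    r2 = 1.0 - ss_res / ss_tot
    # Pearson correlation between measured and predicted values
    r = float(np.corrcoef(y_true, y_pred)[0, 1])
    return {"MSE": mse, "RMSE": rmse, "R2": r2, "r": r}


metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8])
```

Note that R-squared and the correlation coefficient are not interchangeable: a model with a constant bias can have a high correlation but a lower R-squared, which is why reporting both is informative.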

2.3. Brain Injury Assessment Methods

From a biomechanical perspective, traumatic brain injury is considered the consequence of a series of complex interactions among the scalp, skull, meninges, and brain and the physical environment. Head acceleration, angular velocity, pressure, and the stress and strain experienced by the head serve as criteria for determining the severity of traumatic brain injury [53,54]. Common assessment criteria include the Head Injury Criterion (HIC), the Generalized Acceleration Model for Brain Injury Threshold (GAMBIT), the Brain Injury Criterion (BrIC), and other standards [55,56,57,58]. These criteria can be divided into two categories: the first is based on linear acceleration and attributes traumatic brain injury to the pressure gradient within the skull caused by pure linear acceleration, while the second is based on rotational motion and attributes injury under impact mainly to shear stress resulting from differential motion between the skull and the brain. Current research has shown that rotational acceleration contributes more significantly than linear acceleration to concussion, diffuse axonal injury, and subdural hematoma. Moreover, nearly every known type of head injury can be induced by rotational acceleration. BrIC is a typical criterion that uses angular velocity to quantify the extent of injury. Therefore, in this paper, BrIC and the raw angular velocity are used as the basis for helmet structural optimization.

BrIC was proposed by Takhounts et al. [59] in 2011 on the basis of experimental studies with 50th percentile anthropomorphic test dummies. In developing this criterion, the researchers first derived a brain injury criterion from the dynamic response of the SIMon finite element head model, using a large body of animal experimental data. They then established the relationship between head loading and the dynamic response of the brain finite element model through dummy collision experiments. On this basis, the BrIC2011 criteria were proposed for different dummy models [60]. A similar approach was later used to study diffuse brain injury in American football players after collisions, leading to the BrIC2013 standard [61]. The formula of BrIC is shown in Equation (1):

$$\mathrm{BrIC}=\sqrt{\left(\frac{\omega_x}{\omega_{xC}}\right)^{2}+\left(\frac{\omega_y}{\omega_{yC}}\right)^{2}+\left(\frac{\omega_z}{\omega_{zC}}\right)^{2}} \qquad (1)$$

where $\omega_x$, $\omega_y$, and $\omega_z$ are the peak angular velocity components of the head about the x, y, and z axes, and $\omega_{xC}$, $\omega_{yC}$, and $\omega_{zC}$ are the corresponding critical angular velocities, set to 66.25, 56.45, and 42.87 rad/s, respectively. The values of $\omega_x$, $\omega_y$, and $\omega_z$ are obtained from sensor measurements. Although BrIC can be used as a criterion for assessing brain injury, it should be noted that linear and angular velocity can each cause brain injury in experimental animals, and collecting data on such severe head injuries is extremely costly. As a result, real-world data rarely reach the injury threshold values.
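A minimal sketch of Equation (1) is given below, assuming the three inputs are sampled angular velocity time histories in rad/s about the x, y, and z axes and that the peak absolute value of each trace is used. The function name `bric` is hypothetical and not part of the study.

```python
import math

# Critical angular velocities (rad/s) about the x, y and z axes, as quoted in the text.
OMEGA_CRIT = (66.25, 56.45, 42.87)


def bric(omega_x, omega_y, omega_z, crit=OMEGA_CRIT):
    """BrIC per Equation (1), using the peak absolute value of each angular-velocity trace."""
    peaks = [max(abs(w) for w in trace) for trace in (omega_x, omega_y, omega_z)]
    return math.sqrt(sum((peak / c) ** 2 for peak, c in zip(peaks, crit)))


# Usage: three sampled angular-velocity traces in rad/s (made-up illustrative numbers).
example = bric([0.0, 12.0, 30.0, 8.0], [0.0, -20.0, -5.0, 0.0], [0.0, 4.0, 10.0, 2.0])
```

Because each term is normalized by its critical value, BrIC equals 1.0 when a single axis reaches its critical angular velocity and the other two are zero.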

The Abbreviated Injury Scale (AIS) [62,63] is currently the most commonly used anatomical injury severity scoring system in trauma assessment. Previous studies have reported that a BrIC of 0.605 corresponds to an 8.08% probability of AIS2+ injury, whereas a BrIC of 0.854 corresponds to a 64.6% probability of AIS2+ injury and a 25.8% probability of AIS4+ injury. AIS2+ injuries already include skull vault fractures and memory loss, while AIS4+ injuries include prolonged unconsciousness, neurological disorders, depressed skull fractures, meningeal rupture, and tissue damage. Predicted BrIC values therefore reflect more intuitively the likelihood and severity of injury under real-world impacts, providing better guidance for helmet designers optimizing the helmet structure.

3. Results and Discussion

3.1. Data Preprocessing

This study digitized the collected helmet structure data and investigated the protective performance of helmet structures in frontal impacts. Common protective helmets were subjected to impact experiments, during which data such as internal pressure and rotational angular velocity were recorded. The experimental data were then used to calculate injury severity with the BrIC method. The structural features of the helmets were digitized to generate structural data for model training, enabling analysis of the relationship between helmet design and injury severity. In this process, 24 structural parameters were extracted, including helmet mass, helmet size, maximum curvature in different directions, and their corresponding positions. Details of the structural parameters are provided in the Supplemental Information. These parameters were derived from the structural considerations necessary for helmet design and measured using a standardized measurement approach. Because liners from different manufacturers vary, defining liner structural parameters would further expand the feature space and increase model complexity; therefore, all helmets were tested with the same liner type. Combining the structural parameters with the corresponding impact responses yielded a dataset of 1200 data points for training the machine learning models.
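The step of combining structural parameters with impact responses can be sketched with pandas, assuming one table of per-helmet structural parameters and one table of per-impact responses linked by a helmet identifier. All column names and values below are hypothetical placeholders, not measurements from this study.

```python
import pandas as pd

# Hypothetical per-helmet table: one row per helmet design (placeholder values).
structure = pd.DataFrame({
    "helmet_id": ["A", "B"],
    "mass": [1.0, 2.0],
    "St10": [0.1, 0.2],
})

# Hypothetical per-impact table: one row per impact test (placeholder values).
impacts = pd.DataFrame({
    "helmet_id": ["A", "A", "B"],
    "omega_peak": [10.0, 11.0, 20.0],
    "BrIC": [0.1, 0.2, 0.3],
})

# Attach each helmet's structural parameters to every impact record of that helmet;
# validate= raises an error if a helmet_id is duplicated in the structure table.
dataset = impacts.merge(structure, on="helmet_id", how="left", validate="many_to_one")

X = dataset.drop(columns=["helmet_id", "omega_peak", "BrIC"])  # model inputs
y_omega = dataset["omega_peak"]  # task 1 target: angular velocity
y_bric = dataset["BrIC"]         # task 2 target: injury criterion
```

Keeping the structural table at one row per helmet and merging afterwards avoids copying the same structural values by hand into every impact record.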

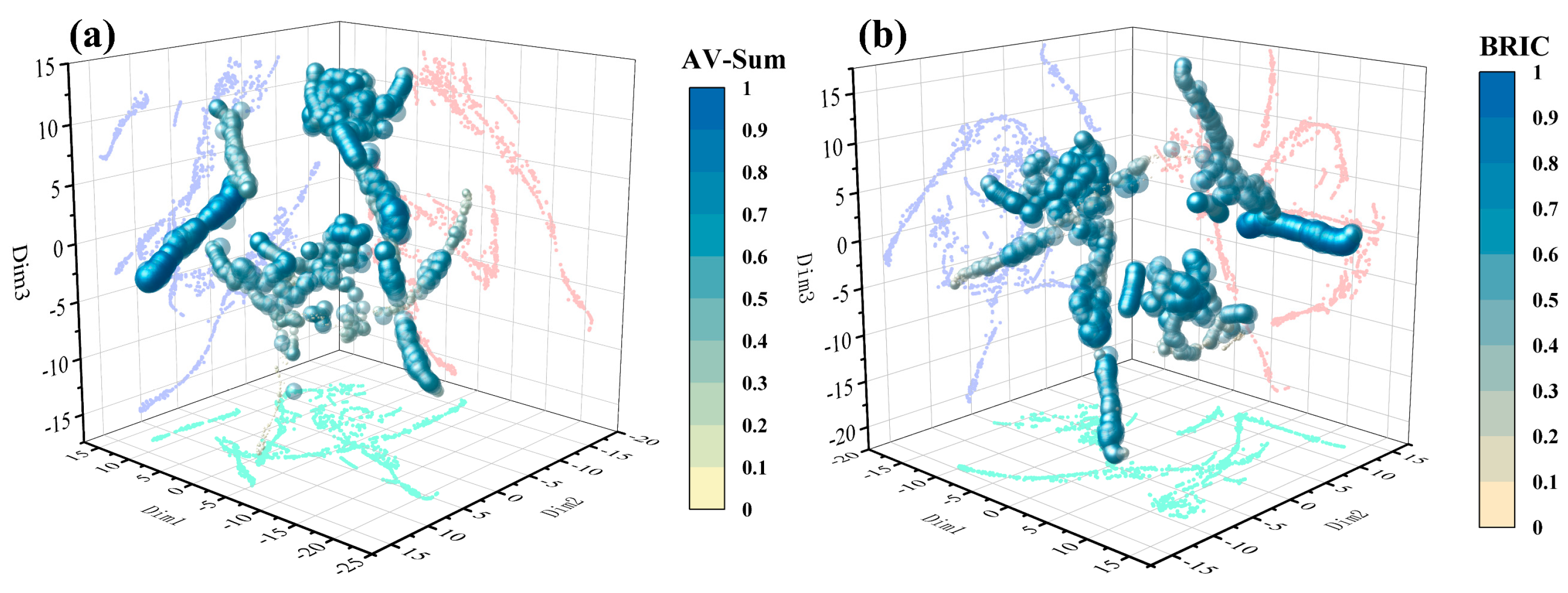

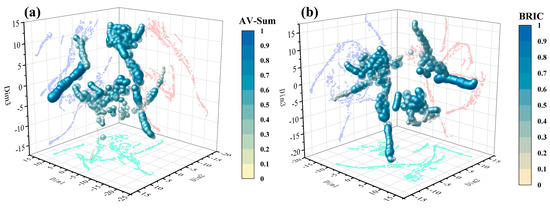

Data preprocessing transforms features, for example through logarithmic transformation, standardization, or normalization, to better align the data with model assumptions and thereby improve the training speed and accuracy of the model. Dimensionality reduction can also be applied during preprocessing to reduce the number of features in high-dimensional data [64,65] and improve computational efficiency. In this work, the collected data were visualized after dimensionality reduction, and the results are presented in Figure 2. The resultant angular velocity data of the head model obtained in the experiment were normalized before visualization, and the data for the BrIC criterion were calculated from the experimental measurements. The visualization shows that the data for both prediction targets are relatively scattered, without noticeable clustering, although some outliers remain. Therefore, Local Outlier Factor (LOF) [66], a density-based anomaly detection method, was used to identify outliers. The points flagged by LOF were then examined, and outliers were removed only where no key information would be lost, so that individual hard-to-fit points would not degrade the overall performance of the model.
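The preprocessing above can be sketched with scikit-learn. This is an illustration under assumptions: the study does not name its dimensionality reduction method, so PCA is used here only as a stand-in, and applying LOF to the standardized features and target jointly, with `n_neighbors=20`, is our choice rather than the study's. The manual review of flagged points described above is not reproduced.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.neighbors import LocalOutlierFactor
from sklearn.preprocessing import StandardScaler


def preprocess(X, y, n_neighbors=20, contamination="auto"):
    """Standardize, project to 2-D for plotting, and drop points flagged by LOF."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Assumption: detect outliers in the joint (features, target) space.
    Z = StandardScaler().fit_transform(np.column_stack([X, y]))
    coords_2d = PCA(n_components=2).fit_transform(Z)  # stand-in for the visualization step
    lof = LocalOutlierFactor(n_neighbors=n_neighbors, contamination=contamination)
    labels = lof.fit_predict(Z)  # -1 = outlier, 1 = inlier
    keep = labels == 1
    return X[keep], y[keep], coords_2d, keep
```

In practice the `keep` mask would be inspected point by point before removal, in line with the study's requirement that no key information be lost.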

Figure 2.

(a) Visualization results of the angular velocity dataset at the center of mass of the helmet; (b) BrIC brain injury assessment data.

3.2. Construction of Angular Velocity Prediction Model

3.2.1. Construction of Prediction Model

After visualizing and preprocessing, the data were used to construct machine learning models. In head injury assessment methods such as GAMBIT and BrIC, the angular velocity at the center of mass of the head under the helmet is considered an important parameter. Therefore, investigation of the relationship between helmet structure and angular velocity after impact can be an important basis for the optimization of helmet structure.

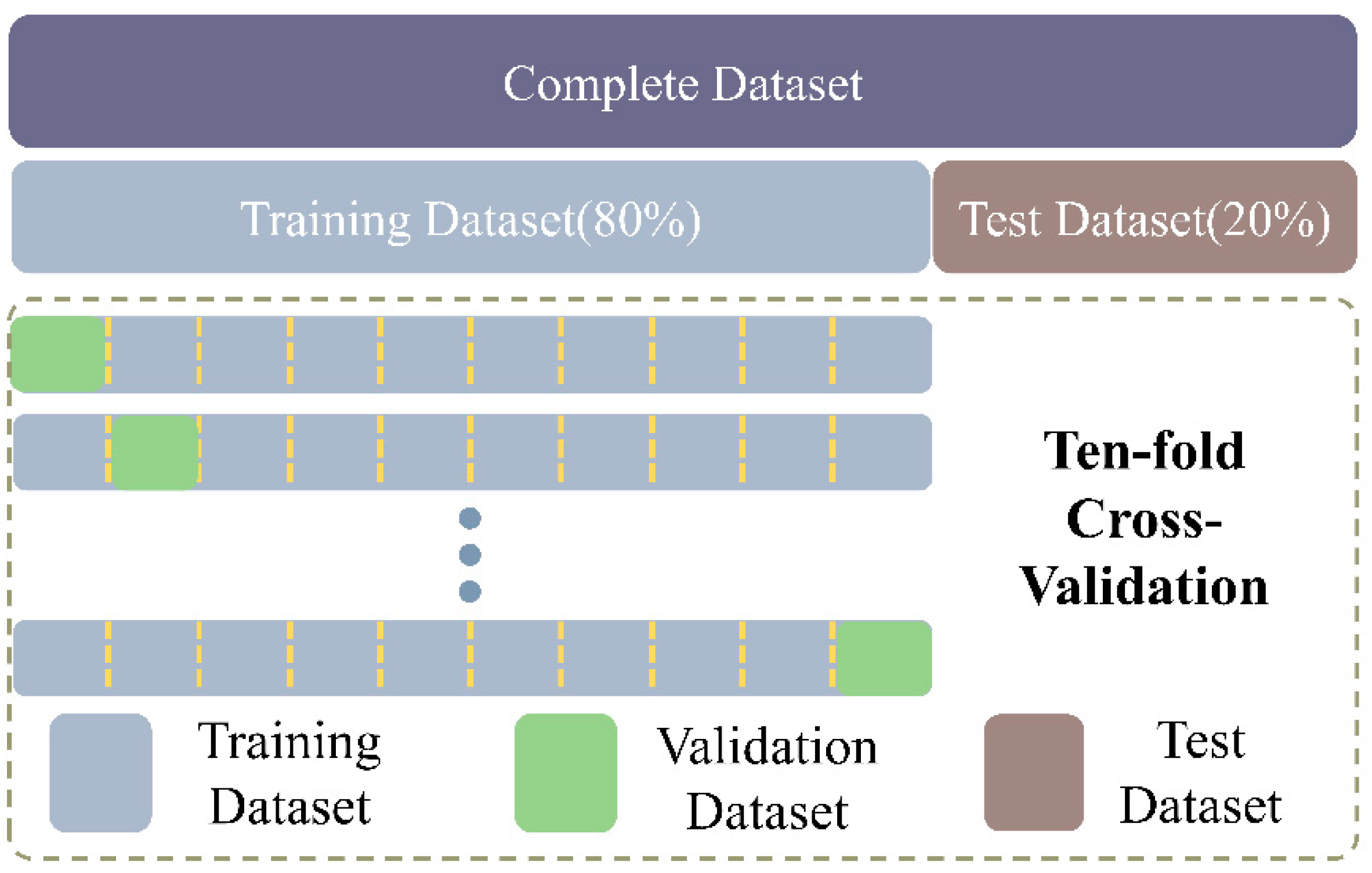

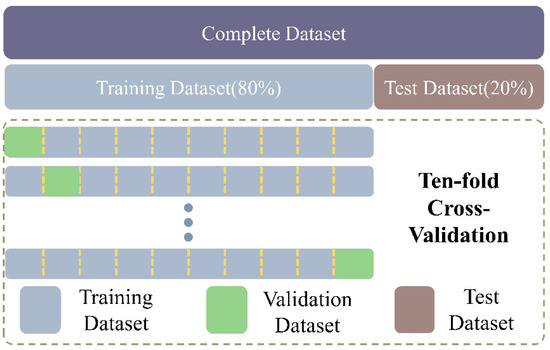

Before training, the fitting task of the model must be defined. Because angular velocity is a continuous variable in the dataset, regression models were used. SVM is a supervised learning algorithm that finds a maximum-margin hyperplane for classification tasks; with suitable kernel functions it also performs well in regression. Random Forest (RF) and Extreme Gradient Boosting (XGBoost) are both decision tree-based ensembles, but they combine trees differently: RF trains trees independently on bootstrap samples and averages their predictions, whereas XGBoost builds trees sequentially, each fitting the residual errors (gradients of the loss) of the current ensemble, and sums their outputs. The Multilayer Perceptron (MLP) is a prediction model based on a fully connected neural network. SVM, RF, XGB, and MLP are commonly used for regression and are widely reported to perform well on small datasets; given the limited size of the dataset in this study, these four models were used to model it. Accordingly, regression metrics (MSE, RMSE, R-squared, and correlation) were used to evaluate the trained models. The original dataset was split as shown in Figure 3: 80% was allocated for model training, and the remaining 20% formed the test set. On the training data, models were trained with ten-fold cross-validation [67], which divides the training data into ten equally sized subsets; in each of ten iterations, nine subsets are used for training and the remaining one for validation, with a different validation subset each time. Comparing the results of the ten iterations to evaluate the model reduces the randomness of the evaluation.
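The split and cross-validation protocol can be sketched as follows, under stated assumptions: the model settings are placeholders rather than the tuned values behind Table 1, the helper names are hypothetical, and if the `xgboost` package is unavailable the sketch substitutes scikit-learn's `GradientBoostingRegressor`, which is a different gradient boosting implementation.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.model_selection import KFold, cross_val_score, train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR


def candidate_models(seed=0):
    """The four model families compared in the study, with placeholder settings."""
    try:
        from xgboost import XGBRegressor
        boosted = XGBRegressor(n_estimators=200, random_state=seed)
    except ImportError:
        # Stand-in (not XGBoost): scikit-learn's own gradient-boosted trees.
        boosted = GradientBoostingRegressor(n_estimators=200, random_state=seed)
    return {
        "RF": RandomForestRegressor(n_estimators=200, random_state=seed),
        "XGB": boosted,
        # Kernel and neural models are scale-sensitive, so standardize inputs first.
        "SVM": make_pipeline(StandardScaler(), SVR(kernel="rbf")),
        "MLP": make_pipeline(
            StandardScaler(),
            MLPRegressor(hidden_layer_sizes=(32,) * 5, max_iter=500, random_state=seed),
        ),
    }


def compare_with_cv(X, y, seed=0):
    """80/20 hold-out split, then mean 10-fold CV R^2 of each model on the 80% part."""
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=seed)
    cv = KFold(n_splits=10, shuffle=True, random_state=seed)
    scores = {
        name: float(np.mean(cross_val_score(model, X_tr, y_tr, cv=cv, scoring="r2")))
        for name, model in candidate_models(seed).items()
    }
    return scores, (X_tr, X_te, y_tr, y_te)
```

The 20% test split is returned untouched so that it is used only once, for the final comparison after cross-validation.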

Figure 3.

Schematic diagram of model training data allocation.

During training, the hyperparameters of the four models were tuned by combining ten-fold cross-validation with grid search. Before the grid search, the number of trees in the RF and XGB models was determined using generalization error as the evaluation criterion. A seven-layer MLP architecture was used, comprising input and output layers and five hidden layers, and the number of neurons in the hidden layers was included in the parameter search. Two prediction tasks were defined on the same dataset, and the four models were trained for each: the first task predicts angular velocity, while the second bypasses angular velocity and directly relates the input features to injury.
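One way to implement the two tuning stages is sketched below for RF. Out-of-bag (OOB) error is assumed as the generalization-error estimate for choosing the number of trees, since the text does not state which estimate was used, and the parameter grid is a hypothetical placeholder. The same `GridSearchCV` pattern would apply to an MLP pipeline by searching `mlpregressor__hidden_layer_sizes` over tuples of length five.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, KFold


def pick_n_trees(X, y, candidates=(50, 100, 200, 400), seed=0):
    """Pick the forest size with the lowest out-of-bag MSE (a generalization-error estimate)."""
    oob_mse = {}
    for n in candidates:
        rf = RandomForestRegressor(n_estimators=n, oob_score=True, random_state=seed).fit(X, y)
        oob_mse[n] = float(np.mean((np.asarray(y) - rf.oob_prediction_) ** 2))
    return min(oob_mse, key=oob_mse.get), oob_mse


def grid_search_rf(X, y, n_trees, seed=0):
    """Tune the remaining RF hyperparameters with a 10-fold cross-validated grid search."""
    param_grid = {  # placeholder grid; the search ranges used in the study are not reported
        "max_depth": [None, 5],
        "min_samples_leaf": [1, 4],
    }
    search = GridSearchCV(
        RandomForestRegressor(n_estimators=n_trees, random_state=seed),
        param_grid,
        cv=KFold(n_splits=10, shuffle=True, random_state=seed),
        scoring="neg_mean_squared_error",
    )
    return search.fit(X, y)  # best_params_ and best_estimator_ hold the result
```

Fixing the number of trees first keeps the grid small, since every extra grid dimension multiplies the number of ten-fold fits.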

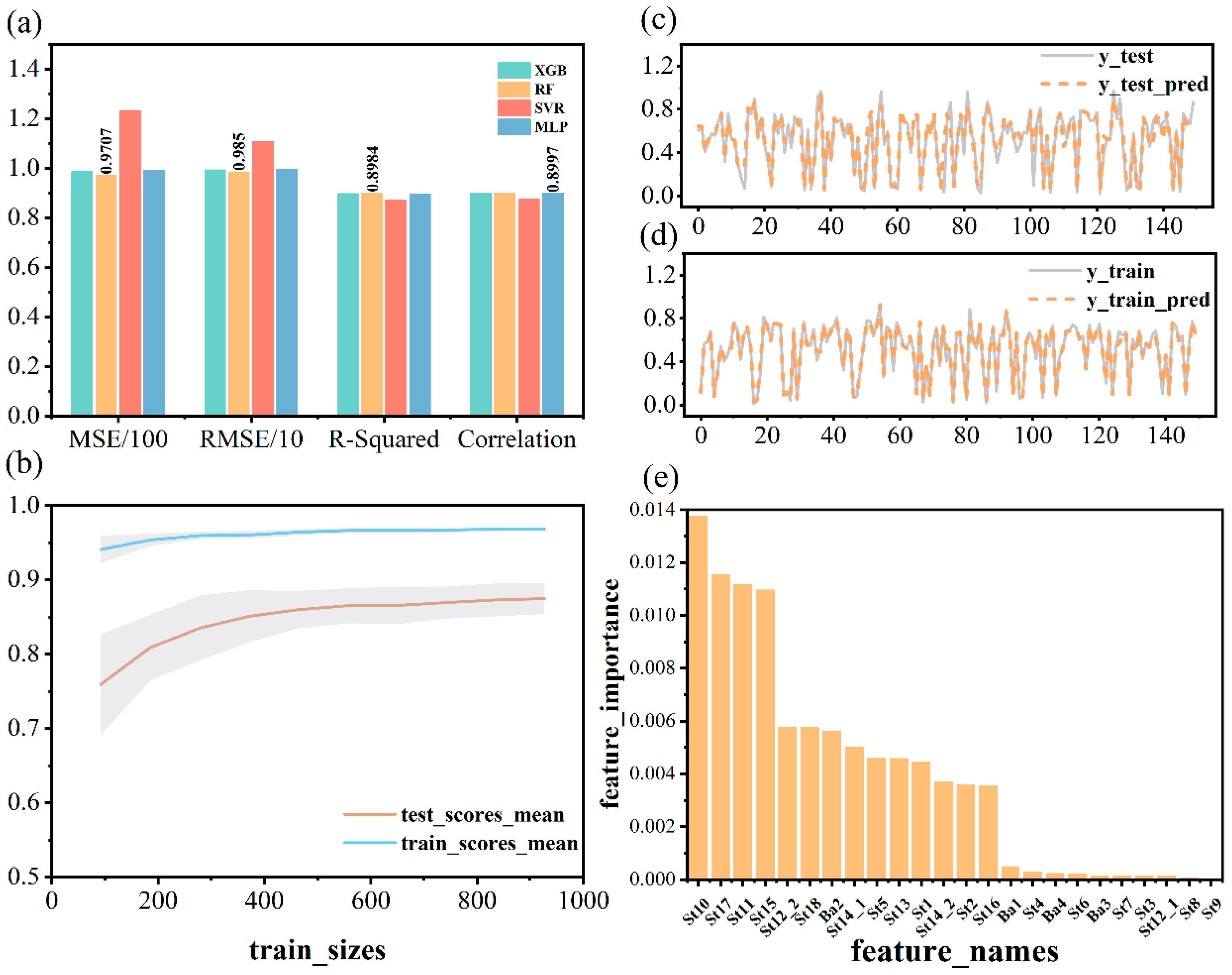

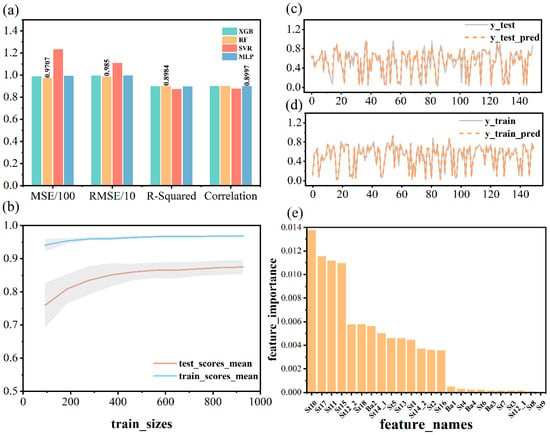

3.2.2. Model Evaluation and Analysis

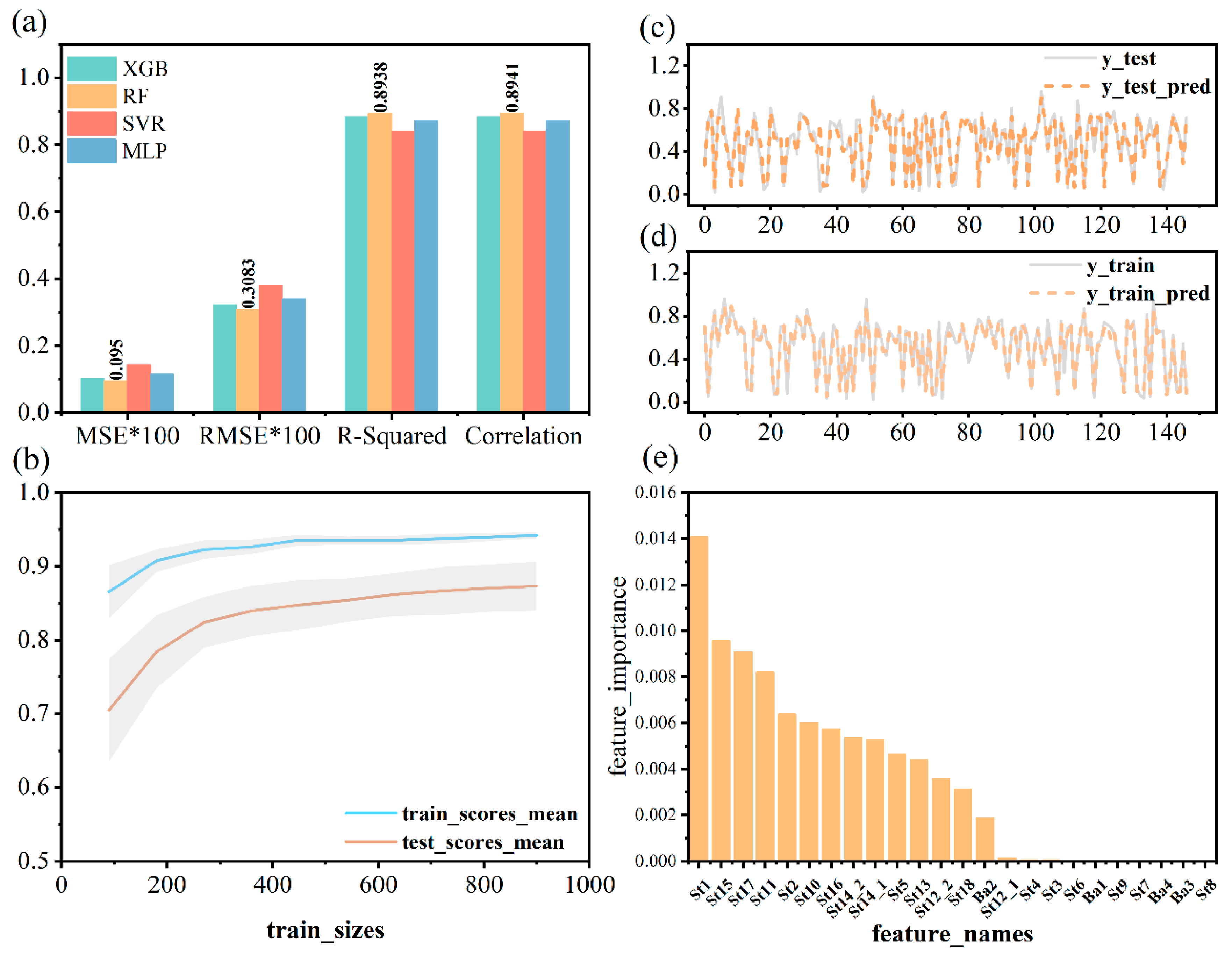

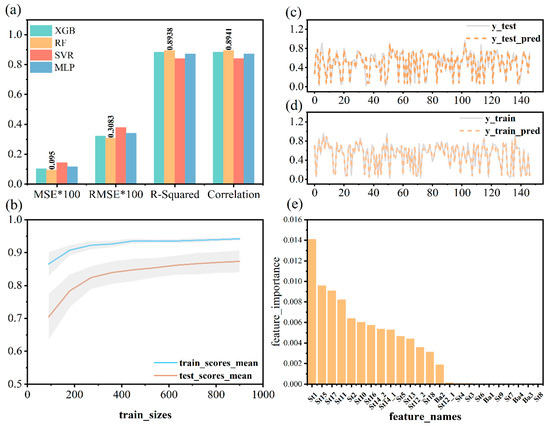

Table 1 presents the results obtained after determining the hyperparameters of each model, showing each model's best fitting performance under these parameters. The RF model performed best, with an R-squared of 0.898 and an RMSE of 9.85, followed by the XGB model, with an R-squared of 0.896 and an RMSE of 9.93. Figure 4a compares the scaled MSE and RMSE of the four models; the tree-based models fit the dataset well, and RF performed best among them. The learning curve of the RF model (Figure 4b) shows that it converges rapidly and exhibits neither overfitting nor underfitting. The RF model was therefore considered the most reliable and was used for the subsequent analysis of helmet structure importance. Figure 4c,d compare the predicted and measured values for the first 150 data points of the test and training sets; the closer the two curves overlap, the better the fit. Prediction accuracy on the training set is higher than on the test set, consistent with the training status indicated by the RF learning curve.
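A learning curve such as Figure 4b can be produced with scikit-learn's `learning_curve`. The sketch below uses assumed settings (10-fold CV, five training-set sizes, R-squared as the score) and a hypothetical helper name; it is not the study's plotting code.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import KFold, learning_curve


def rf_learning_curve(X, y, n_trees=100, seed=0):
    """Mean training and validation R^2 of an RF at increasing training-set sizes."""
    sizes, train_scores, val_scores = learning_curve(
        RandomForestRegressor(n_estimators=n_trees, random_state=seed),
        X,
        y,
        train_sizes=np.linspace(0.1, 1.0, 5),
        cv=KFold(n_splits=10, shuffle=True, random_state=seed),
        scoring="r2",
    )
    # Average over the 10 folds; plot both curves against `sizes`.
    return sizes, train_scores.mean(axis=1), val_scores.mean(axis=1)
```

A validation curve that levels off as more data are added suggests convergence. For random forests the training score is nearly always high, so the size of the remaining train/validation gap is the more informative signal of overfitting.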

Table 1.

Performance of the centroid angular velocity prediction model.

Figure 4.

(a) Horizontal comparison chart of each model evaluation index using dataset training and evaluation. (b) RF learning curve, where the abscissa is the amount of data used for training and the ordinate is the score. (c) Performance of the RF model on the test set. (d) RF performance on training set. (e) Ranking of input feature contribution based on SHAP machine learning model interpretation method.

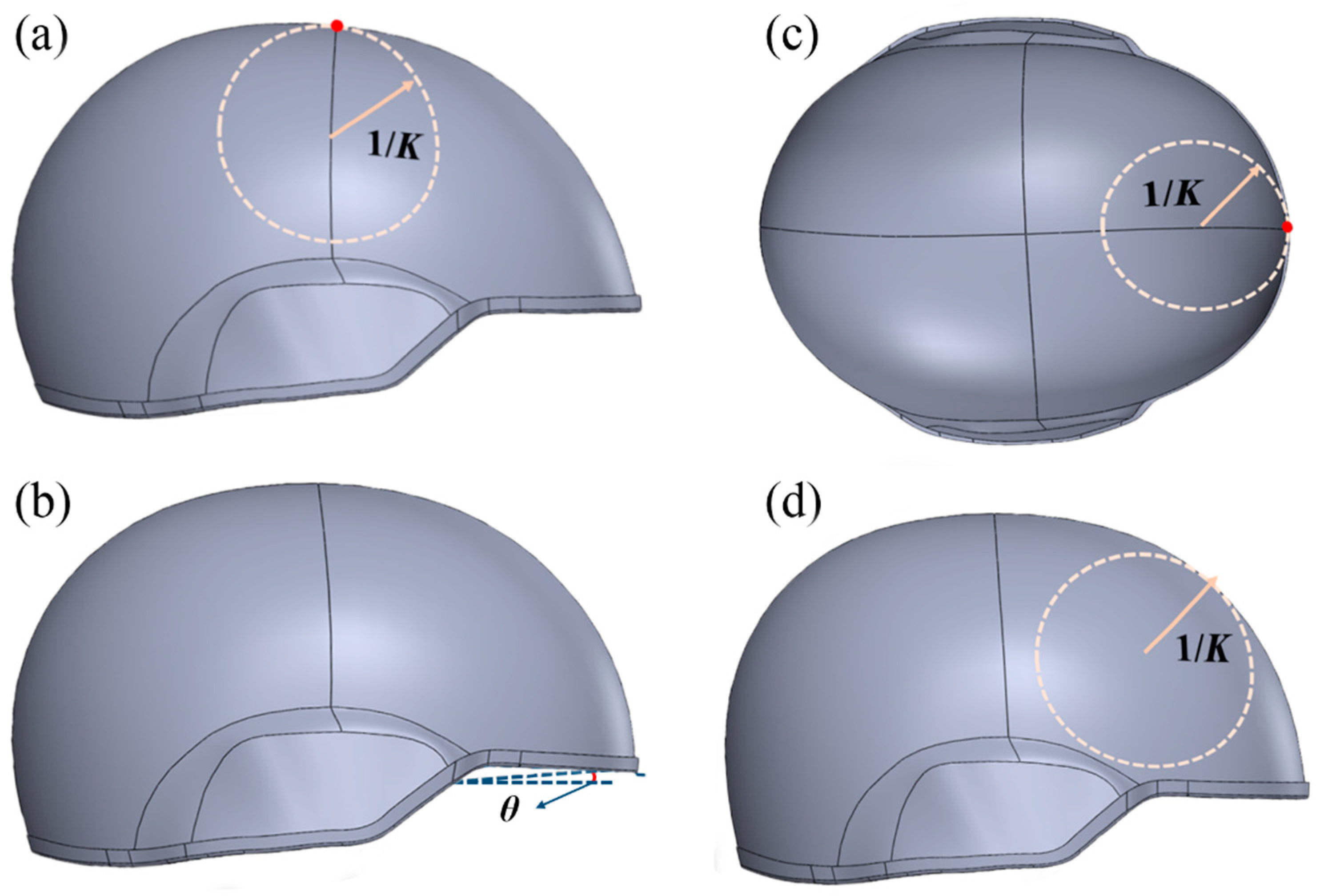

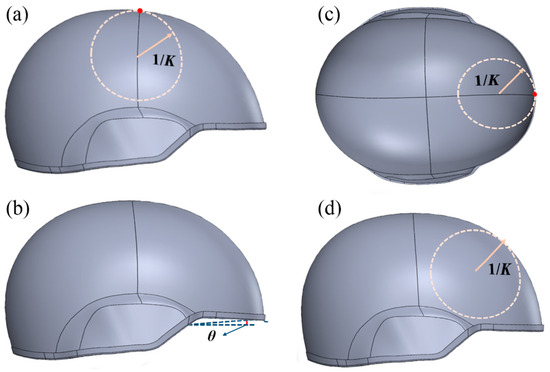

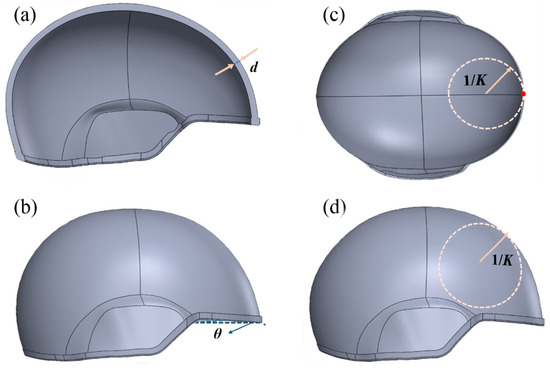

Based on the RF model, SHapley Additive exPlanations (SHAP) [68,69], a model interpretation method based on the Shapley value from cooperative game theory, was used to evaluate the importance of the input features. This identifies the helmet structural features that most strongly influence the model's predictions and thus guides helmet structure optimization. The feature contributions obtained with SHAP are shown in Figure 4e. Among the 24 structural features, the top four, St10, St17, St11, and St15, contribute substantially more than the others. They correspond, respectively, to the longitudinal curvature of the top of the helmet, the transverse curvature at the foremost point of the forehead, the elevation angle of the helmet forehead bottom edge, and the maximum curvature along the longitudinal centerline of the forehead; these structures are shown in Figure 5. The interpretability analysis therefore indicates that these four structures have a significant impact on the angular velocity of the head center of mass when the helmet is subjected to frontal impact. By adjusting them, designers can optimize helmets with the angular velocity at the head center of mass as the optimization target, reducing the angular velocity experienced by the wearer's head during impact and alleviating the risk of head injury for soldiers subjected to impacts on the battlefield.
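A global ranking such as Figure 4e is commonly obtained by averaging the absolute SHAP values of each feature over all samples. The sketch below assumes the third-party `shap` package and its `TreeExplainer` for the fitted RF model; the helper names are hypothetical, and the ranking function itself needs only NumPy.

```python
import numpy as np


def rank_by_mean_abs_shap(shap_values, feature_names):
    """Global importance: mean |SHAP| per feature, sorted from largest to smallest."""
    importance = np.abs(np.asarray(shap_values, dtype=float)).mean(axis=0)
    order = np.argsort(importance)[::-1]
    return [(feature_names[i], float(importance[i])) for i in order]


def shap_ranking(fitted_forest, X, feature_names):
    """Compute SHAP values for a fitted tree ensemble, then rank the features."""
    import shap  # third-party package; imported lazily so the helper above works without it

    explainer = shap.TreeExplainer(fitted_forest)
    values = explainer.shap_values(X)  # shape (n_samples, n_features) for a single-output regressor
    return rank_by_mean_abs_shap(values, feature_names)
```

Taking the absolute value before averaging matters: a feature that pushes some predictions up and others down would otherwise appear unimportant.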

Figure 5.

(a) Longitudinal curvature of the top of the helmet; (b) elevation angle of the helmet forehead bottom edge; (c) transverse curvature at the foremost point of the forehead; (d) maximum curvature along the longitudinal centerline of the forehead.

3.3. Brain Injury Prediction Model Based on BrIC

Result Analysis

Predicting the severity of head injury under helmet-wearing conditions directly with the BrIC criterion is more intuitive. Following the same model construction approach, RF, XGB, SVM, and MLP were selected as the target models. Ten-fold cross-validation and grid search were again used to tune the hyperparameters, and the best-performing model was selected to guide helmet structure optimization.

As in the previous section, the dataset was split into training, validation, and test sets: the validation set was used to tune model hyperparameters, and the test set for the final model evaluation. The evaluation criteria were MSE, RMSE, R-squared, and the correlation coefficient. After the model parameters were determined by grid search and ten-fold cross-validation, the models were trained and evaluated on the test set. As shown in Table 2, the RF model again performed best, with an R-squared of 0.8938 and an RMSE of 0.003, followed by the XGB model, with an R-squared of 0.8837 and an RMSE of 0.0032. The RF model therefore performs best on the current dataset and was used for the subsequent evaluation of helmet structure. Figure 6a confirms that RF outperforms the other models across the evaluation metrics, particularly R-squared and RMSE. The stability of the selected model was then examined with its learning curve (Figure 6b): under the current hyperparameters, performance stabilizes as the number of training samples increases, indicating that the model converges on the dataset. The RF model's performance on both the test and training sets indicates a good fit, with no obvious signs of overfitting.

Table 2.

Performance evaluation of the prediction model based on the BrIC assessment criteria.

Figure 6.

(a) Horizontal comparison chart of each model evaluation index using dataset training and evaluation. (b) RF learning curve, where the abscissa is the amount of data used for training and the ordinate is the score. (c) Performance of the RF model on the test set. (d) RF performance on training set. (e) Input feature contribution ranking.

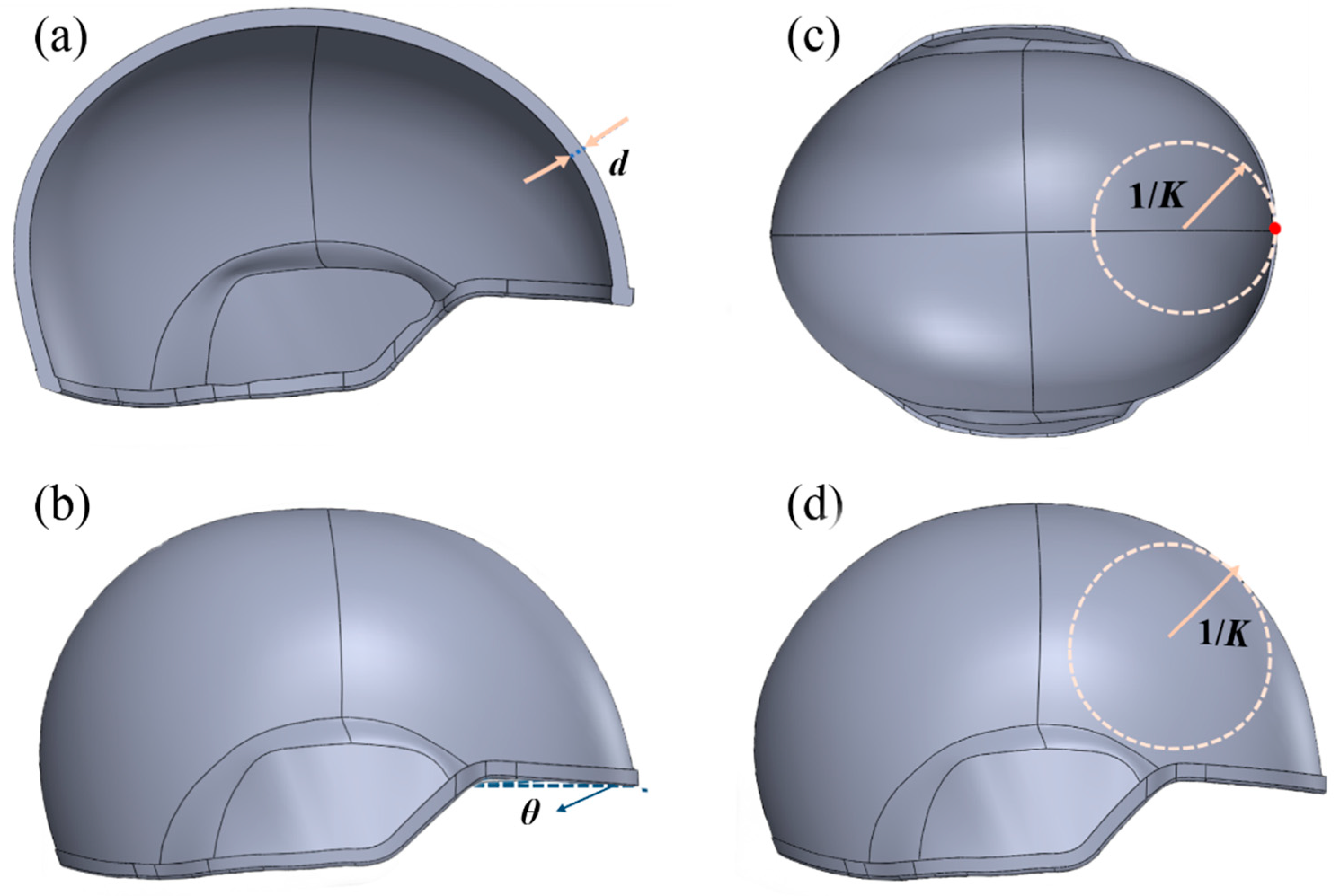

Figure 6c,d show the model predictions on the training and test sets. The close overlap between the predicted and measured curves for the first 150 data points shows that the model performs well on both sets. The finalized model was then used for feature importance assessment, with the results shown in Figure 6e. The top four features have markedly higher importance, indicating that optimizing the corresponding helmet structures would most strongly influence the predicted BrIC. According to the helmet structure definitions, the structures with significant impact in the BrIC assessment are the helmet thickness, the transverse curvature at the foremost point of the forehead, the elevation angle of the helmet forehead bottom edge, and the maximum curvature along the longitudinal centerline of the forehead; each is illustrated in Figure 7. Compared with the angular velocity model, three of the 24 structures, namely the transverse curvature at the foremost point of the forehead, the elevation angle of the helmet forehead bottom edge, and the maximum curvature along the longitudinal centerline of the forehead, have high contribution values in both models. Therefore, whether the target is the angular velocity of the head center of mass or BrIC, these three structures should be given priority, which can help helmet designers optimize the helmet structure more effectively.
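The comparison of the two rankings reduces to intersecting the two top-four lists. The short sketch below uses the structure names reported in this section, listed in the order the text gives them; the helper name `shared_priorities` is hypothetical.

```python
# Top-four structures reported for each prediction target (names as used in the text).
top_angular_velocity = [
    "longitudinal curvature of the top of the helmet",
    "transverse curvature at the foremost point of the forehead",
    "elevation angle of the helmet forehead bottom edge",
    "maximum curvature along the longitudinal centerline of the forehead",
]
top_bric = [
    "helmet thickness",
    "transverse curvature at the foremost point of the forehead",
    "elevation angle of the helmet forehead bottom edge",
    "maximum curvature along the longitudinal centerline of the forehead",
]


def shared_priorities(*rankings):
    """Features present in every top-k list, kept in the order of the first list."""
    common = set(rankings[0]).intersection(*rankings[1:])
    return [feature for feature in rankings[0] if feature in common]


priorities = shared_priorities(top_angular_velocity, top_bric)
```

The helper accepts any number of rankings, so further injury criteria could be added to the comparison without changing the code.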

Figure 7.

(a) Helmet thickness; (b) the elevation angle of the lowest point of the helmet front; (c) the transverse curvature at the foremost point of the forehead; (d) the maximum curvature along the longitudinal centerline of the forehead.

3.4. Discussion

This study applies machine learning to the optimization of helmet structure design, using helmet structural parameters as model inputs and injury metrics calculated under specific test conditions as model outputs, thereby establishing a relationship between helmet structure and potential injury from frontal impact. By analyzing how the input structural parameters affect injury severity, the relative importance of the various helmet features for design optimization can be identified. Although this approach offers useful guidance, the study has several limitations:

Insufficient Detail in Input Structural Description: The helmet structure was digitized using only a subset of key structural parameters. Although these partially capture the helmet geometry, some detailed structural information was inevitably omitted, which may limit the predictive performance of the trained models.

Uniform Use of Helmet Padding in Data Collection: To ensure consistency during data acquisition, the same helmet padding was used in all tests. However, different padding materials and configurations could affect helmet performance differently. Incorporating diverse padding options would expand the model's input features, since additional characteristics such as padding material and structure would also need to be described. Nevertheless, for the structural optimization studied here, the current approach remains suitable.
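As a hedged illustration of how padding information might be added to the inputs, the sketch below one-hot encodes a categorical padding material and appends it, together with a numeric padding thickness, to a vector of structural parameters. The study itself used a single padding configuration; the function names, the material list (`EPS`, `EPP`, `PU`) and all numeric values here are hypothetical placeholders.

```python
from typing import List, Sequence


def one_hot(value: str, categories: Sequence[str]) -> List[float]:
    """Encode a categorical value as a 0/1 vector over a fixed category list."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if c == value else 0.0 for c in categories]


def build_features(structure: Sequence[float], material: str, thickness_mm: float,
                   materials: Sequence[str]) -> List[float]:
    """Structural parameters + one-hot padding material + padding thickness."""
    return list(structure) + one_hot(material, materials) + [float(thickness_mm)]


# Hypothetical category list and values, for illustration only.
MATERIALS = ["EPS", "EPP", "PU"]
x = build_features([12.5, 0.8, 31.0], "EPP", 20, MATERIALS)
print(x)  # [12.5, 0.8, 31.0, 0.0, 1.0, 0.0, 20.0]
```

One-hot encoding avoids imposing an artificial order on unordered material categories, at the cost of one extra input column per material, which further increases the amount of data needed for training.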

Omission of Linear Acceleration in the Model: Only angular velocity was considered as a factor influencing impact outcomes, which inevitably introduces limitations. Many existing injury assessment methods incorporate both linear acceleration and angular velocity, and this is an important direction for future expansion and refinement of the research.
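To make this limitation concrete: BrIC, as defined by Takhounts et al., depends only on the peak angular velocities about the three head axes, each normalized by a direction-specific critical value, so it contains no linear acceleration term. The sketch below computes it under that definition. The default critical values (66.25, 56.45 and 42.87 rad/s) are those commonly quoted from the 2013 SAE paper and are an assumption here, not necessarily the values used in this study; the angular velocity histories are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Assumed critical angular velocities (rad/s) about x, y, z, as commonly quoted
# from Takhounts et al. (2013); substitute the values a given study specifies.
DEFAULT_CRITICAL: Tuple[float, float, float] = (66.25, 56.45, 42.87)


def peak_abs(series: Sequence[float]) -> float:
    """Maximum absolute value of an angular velocity time history."""
    return max(abs(v) for v in series)


def bric(wx: Sequence[float], wy: Sequence[float], wz: Sequence[float],
         critical: Tuple[float, float, float] = DEFAULT_CRITICAL) -> float:
    """BrIC = sqrt(sum over axes of (max|w_i| / w_iC)^2).

    Only angular velocity enters the criterion; linear acceleration is ignored.
    """
    peaks = (peak_abs(wx), peak_abs(wy), peak_abs(wz))
    return math.sqrt(sum((p / c) ** 2 for p, c in zip(peaks, critical)))


# Hypothetical angular velocity histories (rad/s) sampled during an impact.
wx = [0.0, 10.0, 33.125, 20.0]
wy = [0.0, -5.0, -28.225, -10.0]
wz = [0.0, 2.0, 0.0, -1.0]
print(round(bric(wx, wy, wz), 3))  # 0.709
```

A criterion that also accounted for linear acceleration would need the translational kinematics as an additional input, which is the extension the limitation above points to.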

High Data Acquisition Costs: The data were obtained from laboratory experiments, in which data acquisition typically involves significant costs. Such costs restrict the development of machine learning applications in design research. Simulation-based virtual testing, such as modeling helmet structures in software and generating data through finite element analysis, could serve as an alternative approach in future work.

4. Conclusions

This research proposed a novel machine learning-guided optimization approach for the structural design of protective helmets. Models were built on the collected datasets using the RF, SVM, XGB, and MLP algorithms, and ten-fold cross-validation combined with grid search was used to determine the optimal hyperparameters of each model, yielding the best model for guiding structural optimization. For predicting angular velocity, the RF model performed best, achieving a test set RMSE of 9.85 and an R-squared value of 0.8984, indicating a good fit. Consequently, structural design guided by the RF model is expected to be more reliable when the objective is the angular velocity of the head's center of mass. For predicting BrIC injury, the RF model again performed best, with an RMSE of 0.003 and an R-squared value of 0.8938. Feature contribution analysis of the two optimal models indicated that three structures, namely the transverse curvature at the foremost point of the forehead, the helmet forehead bottom edge elevation angle, and the maximum curvature along the longitudinal centerline of the forehead, contributed strongly in both models. Therefore, whether the optimization target is angular velocity or BrIC injury, helmet design should prioritize these three structures, reducing the risk of ineffective designs arising from designers' subjective judgments.
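The tuning and evaluation workflow summarized above (ten-fold cross-validation with an exhaustive grid search, followed by test set RMSE and R-squared) can be sketched in plain Python. This is a minimal illustration under stated assumptions: the study tuned RF, SVM, XGB and MLP models, whereas here a simple k-nearest-neighbours regressor (a swapped-in stand-in, not the authors' model) keeps the example dependency-free, its neighbour count `k` plays the role of the hyperparameter grid, and the data are synthetic.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Point = Sequence[float]


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error between observed and predicted values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, R^2 = 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def knn_predict(train_x: Sequence[Point], train_y: Sequence[float], x: Point, k: int) -> float:
    """Stand-in regressor (not RF): mean target of the k nearest training points."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))
    nearest = order[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)


def k_fold_indices(n: int, folds: int, seed: int = 0) -> List[List[int]]:
    """Shuffle indices 0..n-1 and deal them into `folds` nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::folds] for f in range(folds)]


def grid_search_cv(x: Sequence[Point], y: Sequence[float], grid: Sequence[int],
                   folds: int = 10) -> Tuple[int, Dict[int, float]]:
    """Exhaustive grid search scored by mean validation RMSE over the folds."""
    splits = k_fold_indices(len(x), folds)
    scores: Dict[int, float] = {}
    for k in grid:
        fold_rmse = []
        for val in splits:
            held_out = set(val)
            tr = [i for i in range(len(x)) if i not in held_out]
            tr_x, tr_y = [x[i] for i in tr], [y[i] for i in tr]
            preds = [knn_predict(tr_x, tr_y, x[j], k) for j in val]
            fold_rmse.append(rmse([y[j] for j in val], preds))
        scores[k] = sum(fold_rmse) / len(fold_rmse)
    best = min(scores, key=scores.get)  # lowest mean validation RMSE wins
    return best, scores


# Hypothetical synthetic data (not the study's measurements): y = 2*x0 - x1 + noise.
rng = random.Random(42)
X = [[rng.uniform(0, 10), rng.uniform(0, 10)] for _ in range(60)]
Y = [2 * a - b + rng.gauss(0, 0.5) for a, b in X]

# Tune on the first 48 points with ten-fold CV, then score the held-out 12.
train_X, test_X, train_Y, test_Y = X[:48], X[48:], Y[:48], Y[48:]
best_k, cv_scores = grid_search_cv(train_X, train_Y, grid=[1, 3, 5, 9])
test_pred = [knn_predict(train_X, train_Y, xi, best_k) for xi in test_X]
print("best k:", best_k)
print("test RMSE:", round(rmse(test_Y, test_pred), 3))
print("test R^2:", round(r_squared(test_Y, test_pred), 3))
```

Keeping the test set out of the cross-validation loop matters: the hyperparameter is chosen on validation folds only, so the reported test RMSE and R-squared are not inflated by the tuning itself.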

Machine learning for helmet design can provide designers with objective design recommendations, but caution is essential in its use. As a data-driven research approach, the quality of data determines the reliability of the model. Inherent limitations in the data can potentially reduce the generalizability of the model. Therefore, future research should focus on continuously enriching the available training data to further enhance model performance.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/pr13030877/s1, Table S1: Identifier structure comparison table.

Author Contributions

Methodology, Y.C. and H.J.; software, Y.C., J.W., P.L. and Y.W.; validation, J.W. and P.L.; investigation, Y.C., J.W. and P.L.; data curation, Y.C. and J.W.; writing—original draft preparation, Y.C., H.J. and Y.K.; writing—review and editing, H.J., B.L., T.M., X.H., W.L. and Y.K.; supervision, H.J., T.M. and Y.K.; funding acquisition, H.J. and B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the National Natural Science Foundation of China (No. 51902276, 62005234), the Natural Science Foundation of Hunan Province (No. 2019JJ50583, 2023JJ30585, 2023JJ30596), and the Scientific Research Fund of Hunan Provincial Education Department (No. 21B0111, 21B0136).

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Cascarano, A.; Mur-Petit, J.; Hernández-González, J.; Camacho, M.; de Toro Eadie, N.; Gkontra, P.; Chadeau-Hyam, M.; Vitrià, J.; Lekadir, K. Machine and Deep Learning for Longitudinal Biomedical Data: A Review of Methods and Applications. Artif. Intell. Rev. 2023, 56, 1711–1771.

- Li, F.; Yigitcanlar, T.; Nepal, M.; Nguyen, K.; Dur, F. Machine Learning and Remote Sensing Integration for Leveraging Urban Sustainability: A Review and Framework. Sustain. Cities Soc. 2023, 96, 104653.

- Osman, A.I.; Zhang, Y.; Lai, Z.Y.; Rashwan, A.K.; Farghali, M.; Ahmed, A.A.; Liu, Y.; Fang, B.; Chen, Z.; Al-Fatesh, A.; et al. Machine Learning and Computational Chemistry to Improve Biochar Fertilizers: A Review. Environ. Chem. Lett. 2023, 21, 3159–3244.

- Pichler, M.; Hartig, F. Machine Learning and Deep Learning—A Review for Ecologists. Methods Ecol. Evol. 2023, 14, 994–1016.

- Zhang, L.; Wen, J.; Li, Y.; Chen, J.; Ye, Y.; Fu, Y.; Livingood, W. A Review of Machine Learning in Building Load Prediction. Appl. Energy 2021, 285, 116452.

- Flach, P.A. On the State of the Art in Machine Learning: A Personal Review. Artif. Intell. 2001, 131, 199–222.

- Dong, X.; Thanou, D.; Toni, L.; Bronstein, M.; Frossard, P. Graph Signal Processing for Machine Learning: A Review and New Perspectives. IEEE Signal Process. Mag. 2020, 37, 117–127.

- Koushik, A.N.P.; Manoj, M.; Nezamuddin, N. Machine Learning Applications in Activity-Travel Behaviour Research: A Review. Transp. Rev. 2020, 40, 288–311.

- Reel, P.S.; Reel, S.; Pearson, E.; Trucco, E.; Jefferson, E. Using Machine Learning Approaches for Multi-Omics Data Analysis: A Review. Biotechnol. Adv. 2021, 49, 107739.

- Gao, P.; Zhang, H.; Yu, J.; Lin, J.; Wang, X.; Yang, M.; Kong, F. Secure Cloud-Aided Object Recognition on Hyperspectral Remote Sensing Images. IEEE Internet Things J. 2021, 8, 3287–3299.

- Li, B.; Xiong, W.; Wu, O.; Hu, W.; Maybank, S.; Yan, S. Horror Image Recognition Based on Context-Aware Multi-Instance Learning. IEEE Trans. Image Process. 2015, 24, 5193–5205.

- Adlung, L.; Cohen, Y.; Mor, U.; Elinav, E. Machine Learning in Clinical Decision Making. Med 2021, 2, 642–665.

- Myszczynska, M.A.; Ojamies, P.N.; Lacoste, A.M.B.; Neil, D.; Saffari, A.; Mead, R.; Hautbergue, G.M.; Holbrook, J.D.; Ferraiuolo, L. Applications of Machine Learning to Diagnosis and Treatment of Neurodegenerative Diseases. Nat. Rev. Neurol. 2020, 16, 440–456.

- Jung, Y.H.; Hong, S.K.; Wang, H.S.; Han, J.H.; Pham, T.X.; Park, H.; Kim, J.; Kang, S.; Yoo, C.D.; Lee, K.J. Flexible Piezoelectric Acoustic Sensors and Machine Learning for Speech Processing. Adv. Mater. 2020, 32, 1904020.

- Liu, D.; Dai, W.; Zhang, H.; Jin, X.; Cao, J.; Kong, W. Brain-Machine Coupled Learning Method for Facial Emotion Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10703–10717.

- Machado, G.R.; Silva, E.; Goldschmidt, R.R. Adversarial Machine Learning in Image Classification: A Survey Toward the Defender’s Perspective. ACM Comput. Surv. 2021, 55, 1–38.

- Piris, Y.; Gay, A.-C. Customer Satisfaction and Natural Language Processing. J. Bus. Res. 2021, 124, 264–271.

- Zhao, L.; Alhoshan, W.; Ferrari, A.; Letsholo, K.J.; Ajagbe, M.A.; Chioasca, E.-V.; Batista-Navarro, R.T. Natural Language Processing for Requirements Engineering: A Systematic Mapping Study. ACM Comput. Surv. 2021, 54, 1–41.

- Carr, D.J.; Lewis, E.; Mahoney, P. UK Military Helmet Design and Test Methods. BMJ Mil. Health 2020, 166, 342–346.

- Hamouda, A.; Sohaimi, R.; Zaidi, A.; Abdullah, S. Materials and Design Issues for Military Helmets. In Advances in Military Textiles and Personal Equipment; Elsevier: Amsterdam, The Netherlands, 2012; pp. 103–138.

- Misra, A.; Srivastava, R.; Sarma, A. Design and Development of Customized Helmet for Military Personnel. In Proceedings of the International Conference on Production and Industrial Engineering, Online, 10–12 March 2023; Springer: Berlin, Germany, 2023; pp. 143–150.

- Natsa, S.; Akindapo, J.; Garba, D. Development of a Military Helmet Using Coconut Fiber Reinforced Polymer Matrix Composite. Eur. J. Eng. Technol. 2015, 3, 2056–5860.

- Sone, J.Y.; Kondziolka, D.; Huang, J.H.; Samadani, U. Helmet Efficacy against Concussion and Traumatic Brain Injury: A Review. J. Neurosurg. 2017, 126, 768–781.

- Kulkarni, S.G.; Gao, X.-L.; Horner, S.; Zheng, J.Q.; David, N. Ballistic Helmets–Their Design, Materials, and Performance Against Traumatic Brain Injury. Compos. Struct. 2013, 101, 313–331.

- McIver, K.G. Engineering Better Protective Headgear for Sport and Military Applications; Purdue University: West Lafayette, IN, USA, 2019.

- Wang, J.J.; Triplett, D.J. Multioctave Broadband Body-Wearable Helmet and Vest Antennas. In 2007 IEEE Antennas and Propagation Society International Symposium; IEEE: Piscataway, NJ, USA, 2007; pp. 4172–4175.

- Leng, B.; Ruan, D.; Tse, K.M. Recent Bicycle Helmet Designs and Directions for Future Research: A Comprehensive Review from Material and Structural Mechanics Aspects. Int. J. Impact Eng. 2022, 168, 104317.

- Chang, L.; Guo, Y.; Huang, X.; Xia, Y.; Cai, Z. Experimental Study on the Protective Performance of Bulletproof Plate and Padding Materials Under Ballistic Impact. Mater. Des. 2021, 207, 109841.

- Huang, X.; Zheng, Q.; Chang, L.; Cai, Z. Study on Protective Performance and Gradient Optimization of Helmet Foam Liner Under Bullet Impact. Sci. Rep. 2022, 12, 16061.

- Li, Y.; Fan, H.; Gao, X.-L. Ballistic Helmets: Recent Advances in Materials, Protection Mechanisms, Performance, and Head Injury Mitigation. Compos. Part B Eng. 2022, 238, 109890.

- Żochowski, P.; Cegła, M.; Berent, J.; Grygoruk, R.; Szlązak, K.; Smędra, A. Experimental and Numerical Study on Failure Mechanisms of Bone Simulants Subjected to Projectile Impact. Int. J. Numer. Methods Biomed. Eng. 2023, 39, e3687.

- Lee, J.M. Mandatory Helmet Legislation as a Policy Tool for Reducing Motorcycle Fatalities: Pinpointing the Efficacy of Universal Helmet Laws. Accid. Anal. Prev. 2018, 111, 173–183.

- Bottlang, M.; DiGiacomo, G.; Tsai, S.; Madey, S. Effect of Helmet Design on Impact Performance of Industrial Safety Helmets. Heliyon 2022, 8, e09962.

- Palomar, M.; Lozano-Mínguez, E.; Rodríguez-Millán, M.; Miguélez, M.H.; Giner, E. Relevant Factors in the Design of Composite Ballistic Helmets. Compos. Struct. 2018, 201, 49–61.

- Philippens, M.M.G.M.; Anctil, B.; Markwardt, K.C. Results of a Round Robin Ballistic Load Sensing Headform Test Series. In Proceedings of the Personal Armour Systems Symposium, Cambridge, UK, 8–12 September 2014.

- Ahuja, R.; Chug, A.; Gupta, S.; Ahuja, P.; Kohli, S. Classification and Clustering Algorithms of Machine Learning with Their Applications. In Nature-Inspired Computation in Data Mining and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 225–248.

- Kotsiantis, S.B.; Zaharakis, I.D.; Pintelas, P.E. Machine Learning: A Review of Classification and Combining Techniques. Artif. Intell. Rev. 2006, 26, 159–190.

- Sluijterman, L.; Cator, E.; Heskes, T. How to Evaluate Uncertainty Estimates in Machine Learning for Regression? Neural Netw. 2024, 173, 106203.

- Yildiz, B.; Bilbao, J.I.; Sproul, A.B. A Review and Analysis of Regression and Machine Learning Models on Commercial Building Electricity Load Forecasting. Renew. Sustain. Energy Rev. 2017, 73, 1104–1122.

- Chen, Y.; Ji, H.; Lu, M.; Liu, B.; Zhao, Y.; Ou, Y.; Wang, Y.; Tao, J.; Zou, T.; Huang, Y.; et al. Machine Learning Guided Hydrothermal Synthesis of Thermochromic VO2 Nanoparticles. Ceram. Int. 2023, 49, 30794–30800.

- Xu, P.; Ji, X.; Li, M.; Lu, W. Small Data Machine Learning in Materials Science. npj Comput. Mater. 2023, 9, 42.

- Zelaya, C.V.G. Towards Explaining the Effects of Data Preprocessing on Machine Learning. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE), Macao, China, 8–12 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2086–2090.

- Kotsiantis, S.B.; Kanellopoulos, D.; Pintelas, P.E. Data Preprocessing for Supervised Leaning. Int. J. Comput. Sci. 2006, 1, 111–117.

- Tao, S.; Sun, C.; Fu, S.; Wang, Y.; Ma, R.; Han, Z.; Sun, Y.; Li, Y.; Wei, G.; Zhang, X.; et al. Battery Cross-Operation-Condition Lifetime Prediction Via Interpretable Feature Engineering Assisted Adaptive Machine Learning. ACS Energy Lett. 2023, 8, 3269–3279.

- Wei, J.; Chu, X.; Sun, X.-Y.; Xu, K.; Deng, H.-X.; Chen, J.; Wei, Z.; Lei, M. Machine Learning in Materials Science. InfoMat 2019, 1, 338–358.

- Zheng, A.; Casari, A. Feature Engineering for Machine Learning: Principles and Techniques for Data Scientists; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2018.

- Chicco, D.; Warrens, M.J.; Jurman, G. The Coefficient of Determination R-Squared Is More Informative Than SMAPE, MAE, MAPE, MSE and RMSE in Regression Analysis Evaluation. PeerJ Comput. Sci. 2021, 7, e623.

- Gao, J. R-Squared (R2)–How Much Variation Is Explained? Res. Methods Med. Health Sci. 2024, 5, 104–109.

- Wang, A.; Xu, J.; Tu, R.; Saleh, M.; Hatzopoulou, M. Potential of Machine Learning for Prediction of Traffic Related Air Pollution. Transp. Res. Part D Transp. Environ. 2020, 88, 102599.

- Bottou, L.; Curtis, F.E.; Nocedal, J. Optimization Methods for Large-Scale Machine Learning. SIAM Rev. 2018, 60, 223–311.

- Sra, S.; Nowozin, S.; Wright, S.J. Optimization for Machine Learning; MIT Press: Cambridge, MA, USA, 2012.

- Sun, S.; Cao, Z.; Zhu, H.; Zhao, J. A Survey of Optimization Methods from a Machine Learning Perspective. IEEE Trans. Cybern. 2019, 50, 3668–3681.

- Yang, L.; Shami, A. On Hyperparameter Optimization of Machine Learning Algorithms: Theory and Practice. Neurocomputing 2020, 415, 295–316.

- Blennow, K.; Brody, D.L.; Kochanek, P.M.; Levin, H.; McKee, A.; Ribbers, G.M.; Yaffe, K.; Zetterberg, H. Traumatic Brain Injuries. Nat. Rev. Dis. Primers 2016, 2, 16084.

- Ma, X.; Aravind, A.; Pfister, B.J.; Chandra, N.; Haorah, J. Animal Models of Traumatic Brain Injury and Assessment of Injury Severity. Mol. Neurobiol. 2019, 56, 5332–5345.

- Marjoux, D.; Baumgartner, D.; Deck, C.; Willinger, R. Head Injury Prediction Capability of the HIC, HIP, SIMon and ULP Criteria. Accid. Anal. Prev. 2008, 40, 1135–1148.

- Shuaeib, F.M.; Hamouda, A.M.S.; Radin Umar, R.S.; Hamdan, M.M.; Hashmi, M.S.J. Motorcycle Helmet: Part I. Biomechanics and Computational Issues. J. Mater. Process. Technol. 2002, 123, 406–421.

- Newman, J.A.; Shewchenko, N. A Proposed New Biomechanical Head Injury Assessment Function—The Maximum Power Index; The Stapp Association: Ann Arbor, MI, USA, 2000.

- Newman, J.A. A Generalized Acceleration Model for Brain Injury Threshold (GAMBIT). In Proceedings of the International IRCOBI Conference, Zurich, Switzerland, 2–4 September 1986.

- McLean, A.J. Brain Injury without Head Impact? J. Neurotrauma 1995, 12, 621–625.

- Takhounts, E.G.; Hasija, V.; Ridella, S.A.; Rowson, S.; Duma, S.M. Kinematic Rotational Brain Injury Criterion (BRIC). In Proceedings of the 22nd Enhanced Safety of Vehicles Conference, Washington, DC, USA, 13–16 June 2011; pp. 1–10.

- Takhounts, E.G.; Craig, M.J.; Moorhouse, K.; McFadden, J.; Hasija, V. Development of Brain Injury Criteria (BrIC); SAE Technical Paper; SAE International: Warrendale, PA, USA, 2013.

- Civil, I.D.; Schwab, C.W. The Abbreviated Injury Scale, 1985 Revision: A Condensed Chart for Clinical Use. J. Trauma 1988, 28, 87–90.

- Belkina, A.C.; Ciccolella, C.O.; Anno, R.; Halpert, R.; Spidlen, J.; Snyder-Cappione, J.E. Automated Optimized Parameters for t-Distributed Stochastic Neighbor Embedding Improve Visualization and Analysis of Large Datasets. Nat. Commun. 2019, 10, 5415.

- Bro, R.; Smilde, A.K. Principal Component Analysis. Anal. Methods 2014, 6, 2812–2831.

- Cheng, Z.; Zou, C.; Dong, J. Outlier Detection Using Isolation Forest and Local Outlier Factor. In Proceedings of the Conference on Research in Adaptive and Convergent Systems, Chongqing, China, 24–27 September 2019; pp. 161–168.

- van der Gaag, M.; Hoffman, T.; Remijsen, M.; Hijman, R.; de Haan, L.; van Meijel, B.; van Harten, P.N.; Valmaggia, L.; De Hert, M.; Cuijpers, A. The Five-Factor Model of the Positive and Negative Syndrome Scale II: A Ten-Fold Cross-Validation of a Revised Model. Schizophr. Res. 2006, 85, 280–287.

- Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. arXiv 2017, arXiv:1705.07874.

- Mangalathu, S.; Hwang, S.-H.; Jeon, J.-S. Failure Mode and Effects Analysis of RC Members Based on Machine-Learning-Based Shapley Additive Explanations (SHAP) Approach. Eng. Struct. 2020, 219, 110927.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).