1. Introduction

Cracks in concrete are considered a significant flaw when inspecting civil engineering projects. From an engineering perspective, fractures affect not only the stability and longevity of engineering constructions but also the durability of concrete [1]; small or large cracks can spread slowly and eventually cause the collapse or destruction of the structure. Currently, the primary approaches to detecting crack-like flaws are simple instrumental measurements and visual inspection. The latter, however, is an arduous operation. Moreover, significant misdetections and omissions may occur in some problem regions [2]. Manual crack detection is not ideal for large-scale inspection since it frequently suffers from heavy workloads, complex structures, and inconsistent evaluation standards. Compared with manual inspection, machine vision inspection is efficient, safe, and reliable because it requires no contact with the object. Traditional machine vision methods have been extensively used to solve industrial problems, including object inspection [3], material contour measurement [4], and distance measurement [5]. For example, multi-vision measurement methods can be used to accurately measure the surface deformation and full-field strain values of steel pipe concrete columns [6]. Exponential functional density clustering models can perform better than the clustering and deep learning (DL) methods for indoor object extraction tasks [7]. Despite these considerable achievements, conventional vision technologies still require expert analysis and fine-tuning for their application, making them inappropriate for complex problems. With the continuous innovation and development of digital imaging, the combination of digital image processing methods and DL for engineering structural defect detection has become a new research direction in crack inspection technology in recent years. In this approach, an unmanned aerial vehicle [8] or a wall-climbing robot [9] carrying the relevant equipment captures a large amount of image data from the surface of an engineering structure, and DL algorithms then identify the target defects autonomously rather than relying on human experience. In recent years, DL algorithms have made significant strides in the field of computer vision [10]. According to recent research, convolutional neural networks (CNNs) can be used for classification [11], localization [12], and segmentation [13] in crack detection. For example, researchers can augment digital image data with generative adversarial networks (GANs) and combine them with improved visual geometry group (VGG) networks to achieve crack classification [14]. In terms of crack width measurement, a new method based on backbone dual-scale features can improve detection automation [15]. These studies increasingly concentrate on employing new DL methods, with successful outcomes. In certain studies on new cement repair materials, DL-based fracture segmentation algorithms have even been employed to evaluate the material performance [16].

Early segmentation algorithms were mostly conventional methods such as grey-scale segmentation and conditional random fields, although it is very challenging to describe complicated classes using only grey-level information. DL gained popularity in semantic segmentation with the introduction of the first DL-based semantic segmentation model, FCN [17], which extends end-to-end convolutional networks to semantic segmentation. To increase the efficiency of training with minimal datasets, the image segmentation algorithm U-Net [18] was proposed for medical image segmentation. The encoder–decoder concept proposed by SegNet [19] is crucial to modern segmentation algorithms. Two primary optimization techniques, atrous convolution and enlarged convolution kernels, are used to expand the receptive field of feature extraction. The first is to give the network atrous (dilated) convolution [20]. DeepLab v1 [21], DeepLab v2 [22], DeepLab v3 [23], DeepLab v3+ [24], and DenseASPP [25] are further algorithms based on this concept. The second is to enlarge the convolution kernel to create a wider effective receptive field [26]. Additionally, other approaches [27] make use of this concept to optimize feature extraction and employ large-kernel pooling layers to gather this information and capture the entire image. In recent years, a self-attention semantic segmentation model [28] that employs a local-to-global approach has been proposed for medical image segmentation. The success of the Swin transformer in image recognition shows the potential of transformers in vision. The main goal of these algorithms is to give the network a wider receptive field so that it can gather more global information. However, some segmentation domains, such as crack segmentation, do not need global information.
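The two ways of widening the receptive field mentioned above can be compared with a small calculation. The sketch below uses the standard relation for a dilated kernel, k_eff = k + (k - 1)(d - 1), and assumes stride-1 layers; the function names are illustrative and do not come from any of the cited works.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span covered along one axis by a dilated (atrous) kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def receptive_field(layers) -> int:
    """Receptive field of a stack of stride-1 convolution layers.

    `layers` is a list of (kernel_size, dilation) pairs, listed in the
    order in which the layers are applied.
    """
    rf = 1
    for kernel_size, dilation in layers:
        # Each stride-1 layer widens the field by (effective kernel - 1).
        rf += effective_kernel_size(kernel_size, dilation) - 1
    return rf


# Two plain 3x3 layers versus two 3x3 layers with dilations 2 and 4,
# and a single enlarged 7x7 kernel for comparison.
plain = receptive_field([(3, 1), (3, 1)])
atrous = receptive_field([(3, 2), (3, 4)])
large_kernel = receptive_field([(7, 1)])
```

With the same number of weights per layer, the dilated stack covers a much wider window than the plain stack, whereas the enlarged kernel gets its wider window by adding weights.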

Existing crack segmentation approaches can be divided into two primary groups: those that apply semantic segmentation models from other domains, and those that combine multiple networks into a dual network for crack detection. For a crack dataset with a small amount of data, Carr et al. [29] proposed a structure with a feature pyramid core and an underlying feedforward ResNet. Yang et al. [30] proposed a pyramid and hierarchical improvement network for pavement crack detection, while Jiang et al. [31] proposed a DL-based hybrid extended convolutional block network for crack detection at the pixel level. Other effective segmentation approaches [32,33,34,35,36,37,38] likewise mostly rely on the concepts of SegNet and U-Net to complete the task. Despite the excellent performance of these crack segmentation models, no studies show how the parameters of these networks affect the outcomes, for example, how much local information the network collects when extracting features from the crack image, where the skip connections are located in the encoder–decoder structure, and how many channels are used in the feature transfer.

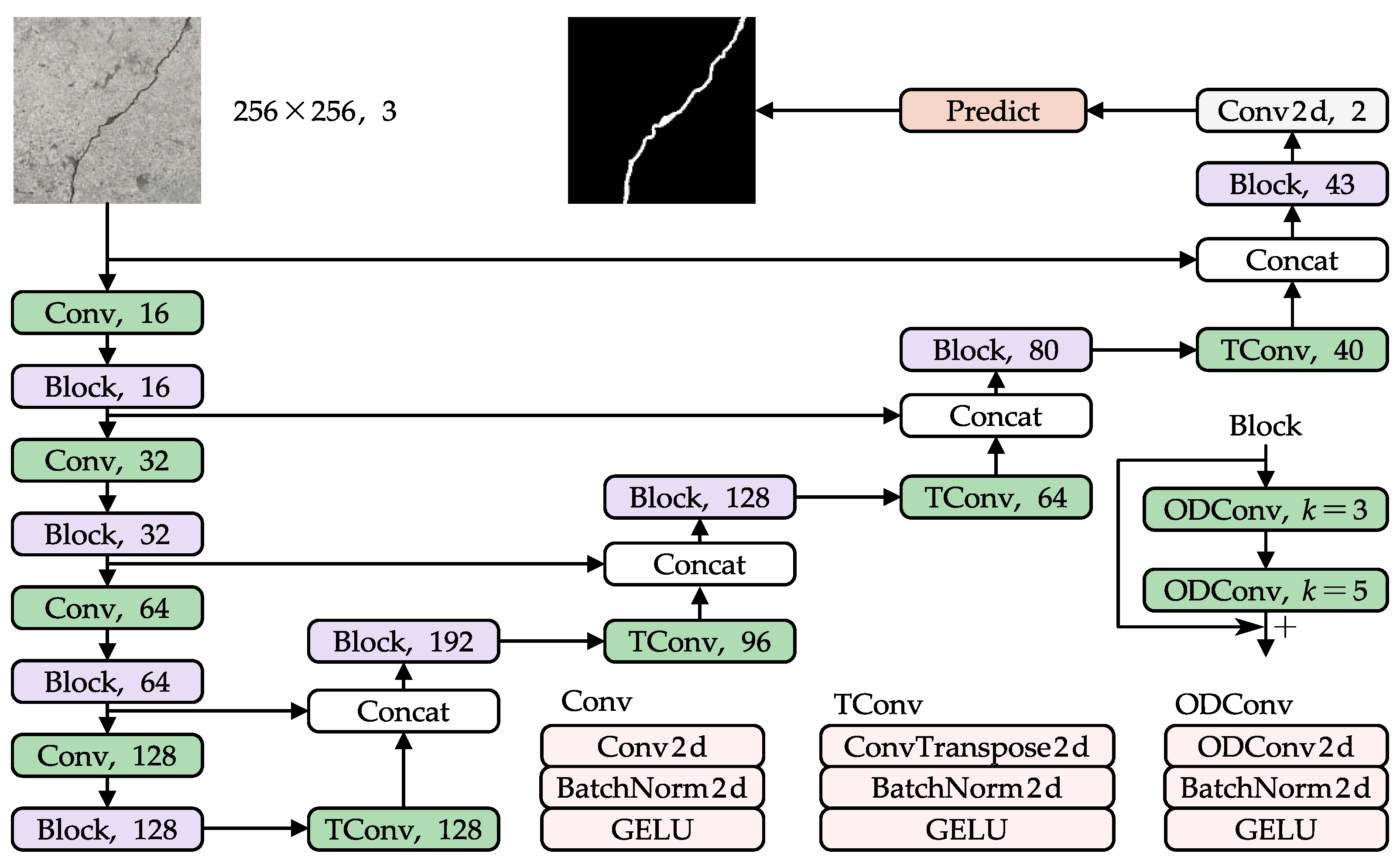

A pixel-level crack segmentation network (CCSN) is proposed as a solution to this issue and as a means to identify a network structure appropriate for crack segmentation. The network uses a residual network built on a feature pyramid to realize Omni-Dimensional Dynamic Convolution in the network’s fundamental block using a residual network built on a feature pyramid. The loss calculation employs a mix of the dice coefficient [

39] and focal loss [

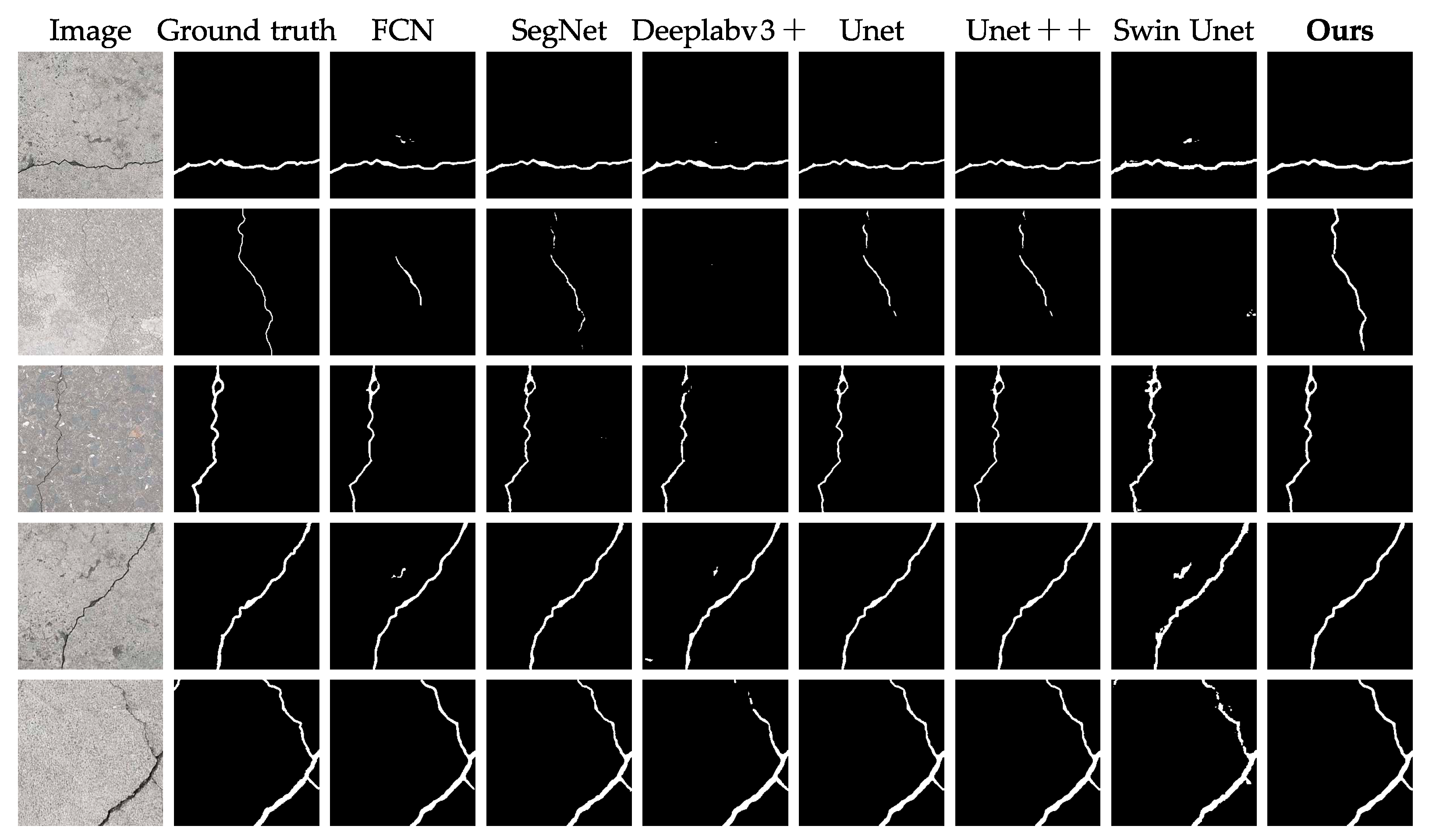

40] to handle the issue of sample imbalance. The created dataset supports the claim that CCSN performs better than networks such as SegNet, DeeplabV3+, and Swin-unet. The performance of these networks is shown in

Figure 1.

The main contributions of this research are summarized as follows:

- 1) First, a CNN structure for crack segmentation is proposed by combining residual networks and Omni-Dimensional Dynamic Convolution. Its performance under various convolution kernels, channel numbers, connection schemes, and loss functions is thoroughly investigated to find a relatively stable and high-quality structure.
- 2) Then, mIoU, mPA, and accuracy are used as the primary evaluation metrics and various loss functions are used to target binary classification and sample imbalance.
- 3) Finally, a dataset for concrete cracks with distinct environments and different orientations is created and utilized for training and validation.

The remainder of this study is arranged as follows. Section 2 focuses on the work related to the method. Section 3 describes the proposed crack segmentation method in detail. Section 4 analyzes the performance of the method under different datasets. Finally, Section 5 draws the conclusion of this paper.

4. Experiments and Results

This section presents the experimental results of the proposed method, including its performance under different network parameters, connection strategies, and losses. The network is also compared with other networks of the same type. To further verify the effectiveness of the method, a public crack dataset is also used for validation.

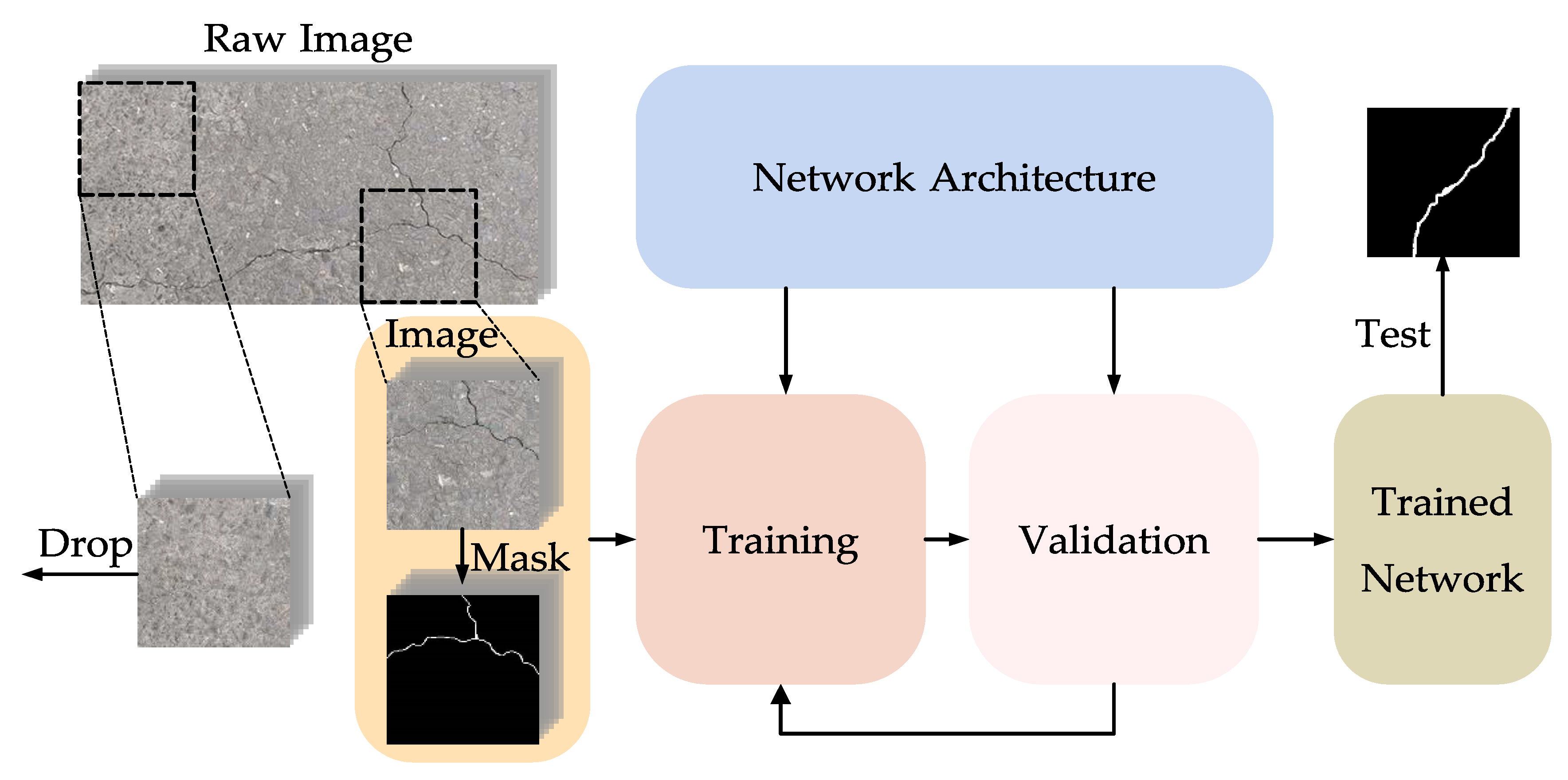

4.1. Training Configuration

The experiments were conducted on an AMD Ryzen 7 5800H processor with 16 GB of RAM and an NVIDIA GeForce RTX 3060 Laptop GPU with 6 GB of memory. The DL framework is PyTorch, and Adam with a momentum of 0.9 was chosen as the optimizer during training. The initial learning rate and minimum learning rate were set to and , respectively, and the learning rate followed a cosine decay schedule. Due to memory constraints, the number of worker threads and the batch size were both set to 4, and 50 epochs were used for training. The training process was analyzed using different sets of hyperparameters to select the best validated model configuration. The dataset was partitioned into 80% (1600 images) for training, 10% (200 images) for validation, and 10% (200 images) for testing. All images used in the experiment were resized to 256 × 256.
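As an illustration of the cosine learning-rate decay mentioned above, the following sketch shows one common form of the schedule over the 50 training epochs. The exact formula used in the experiments is not given here, so this form is an assumption, and `LR_INIT` and `LR_MIN` are placeholder values, not the settings used in the experiments.

```python
import math

TOTAL_EPOCHS = 50   # from the training configuration above
LR_INIT = 1e-3      # placeholder value (assumption)
LR_MIN = 1e-5       # placeholder value (assumption)


def cosine_lr(epoch: int, total_epochs: int, lr_init: float, lr_min: float) -> float:
    """Learning rate for a 0-based epoch under a cosine decay schedule."""
    # Progress runs from 0.0 at the first epoch to 1.0 at the last one.
    progress = epoch / max(1, total_epochs - 1)
    return lr_min + 0.5 * (lr_init - lr_min) * (1.0 + math.cos(math.pi * progress))


schedule = [cosine_lr(e, TOTAL_EPOCHS, LR_INIT, LR_MIN) for e in range(TOTAL_EPOCHS)]
```

The schedule starts at the initial rate, decays slowly at first, fastest around the middle of training, and flattens out at the minimum rate. In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR` (with its `T_max` and `eta_min` arguments) provides a schedule of this shape.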

4.2. Evaluation Metric

To evaluate this crack segmentation network, it was trained with a variety of parameters, losses, and architectures and compared with other networks. Since the crack dataset is class-imbalanced, if the mean accuracy alone were used as the evaluation metric, the accuracy on crack pixels would be masked by the accuracy on background pixels and the results could not be observed well. The mIoU is therefore used as the main evaluation metric to assess the performance of the method, and metrics such as precision (P), recall (R), F1-score, accuracy, and mPA (mean pixel accuracy) are also compared. These evaluation metrics can be derived from a confusion matrix. The confusion matrix is shown in Table 2.

The evaluation metrics for single class are shown as follows:

Precision indicates the percentage of correctly predicted pixels out of all pixels predicted by the model as positive. Recall indicates the percentage of truly positive pixels that are correctly predicted. mPA indicates the mean of the precision over all classes. Accuracy indicates the number of correctly predicted pixels as a percentage of all pixels. Finally, mIoU indicates the mean of the IoU over all classes.
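The metrics described above can be sketched from the four confusion-matrix counts. The example below assumes the standard definitions of precision, recall, F1-score, accuracy, and IoU for a binary task with crack as the positive class; the function names and the toy labels are illustrative only.

```python
def binary_confusion(pred, target):
    """Count TP, FP, FN, TN over flattened 0/1 pixel labels (1 = crack)."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, target):
        if p == 1 and t == 1:
            tp += 1
        elif p == 1 and t == 0:
            fp += 1
        elif p == 0 and t == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _ratio(num, den):
    """Safe division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def segmentation_metrics(tp, fp, fn, tn):
    """Standard metrics for the crack class, plus mIoU over both classes."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    f1 = _ratio(2 * precision * recall, precision + recall)
    accuracy = _ratio(tp + tn, tp + fp + fn + tn)
    iou_crack = _ratio(tp, tp + fp + fn)
    # For the background class, the roles of TP and TN are swapped.
    iou_background = _ratio(tn, tn + fp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
        "iou_crack": iou_crack,
        "miou": (iou_crack + iou_background) / 2,
    }


# Toy example: 10 pixels, 2 of which are crack pixels.
pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
target = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
metrics = segmentation_metrics(*binary_confusion(pred, target))
```

In this toy case the accuracy is 0.8 even though only one of the two crack pixels is found and the crack IoU is 1/3, which illustrates why accuracy alone hides poor crack predictions on an imbalanced dataset.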

4.3. Network Results

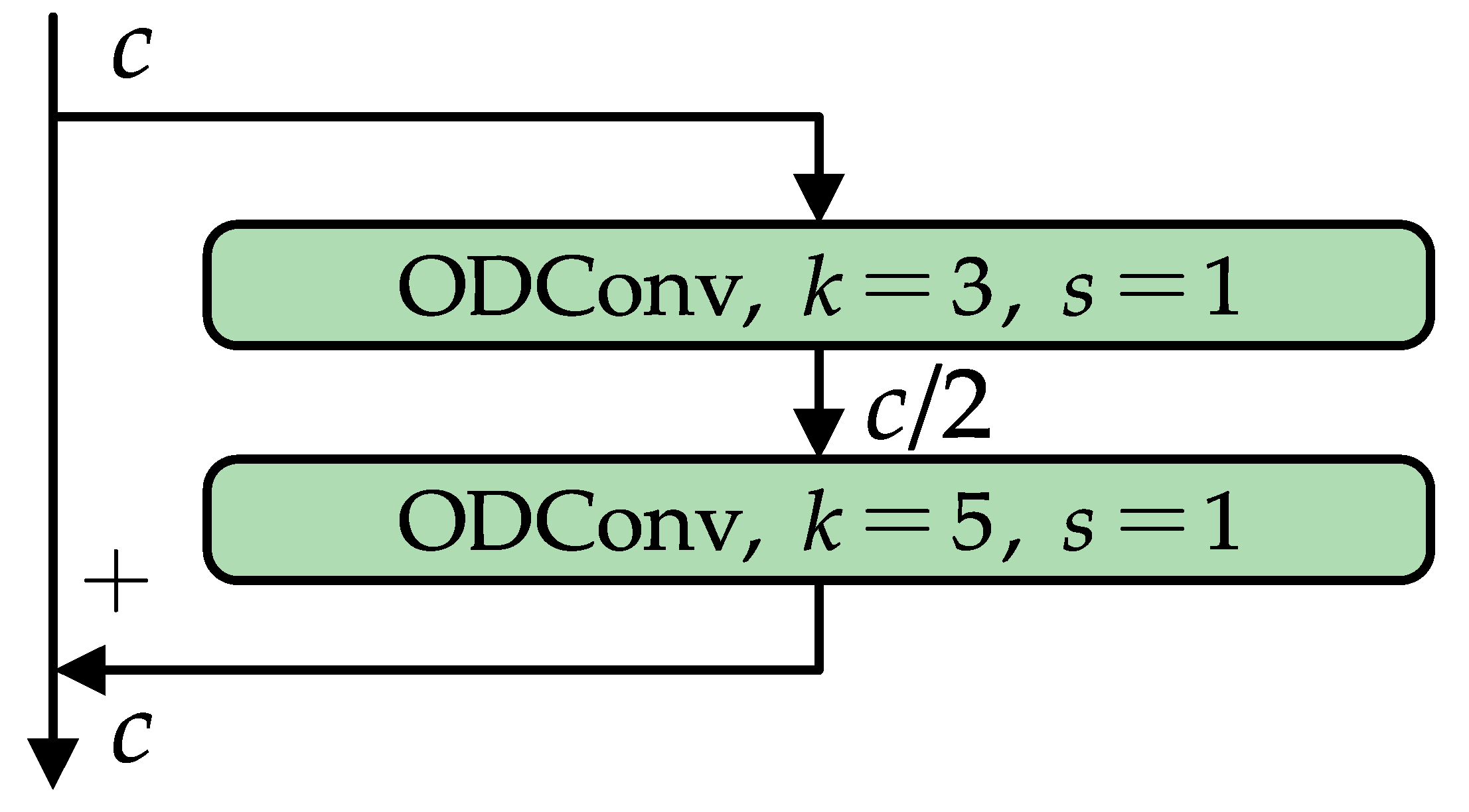

4.3.1. Block

For crack pixels, it has not been investigated how large a window should be used during feature extraction to capture the relationship with the surrounding pixels. To find parameters suitable for crack segmentation, this paper, similar to ConvNeXt and with a guaranteed number of parameters, explores different kernel sizes for the two ODConvs in the block.

The structure of the block is shown in Figure 6, with the kernel sizes of the two ODConvs set in the order of network connections. To verify the advantages of ODConv, the results are also compared with a block using normal convolutional operations; the comparison results are shown in Table 3. The best performance is obtained when the first and second kernel sizes are set to 3 and 5, respectively.

To verify the superiority of the method, the block in this paper is also compared with ResBlock, MobileNetV3 [50], and ConvNeXt. The experiments were performed in the same environment, with only the block replaced by the corresponding method. The experimental results are shown in Table 4. The block proposed in this paper is notably superior to the alternatives.

4.3.2. Connection Strategy

The feature fusion at different scales of the network is based on feature pyramid networks. Even for the same network structure, different connection modes may not increase the number of parameters, but they lead to different results. In this paper, four different feature fusion patterns are investigated, as shown in Figure 7. The results in Table 5 show that connection type (a) achieves better results in this method.

4.3.3. Pyramid Structure

The width (number of channels) of the feature maps in the decoder is shown in Figure 8, where the numbers indicate the number of channels. A total of 128 channels were input to the decoder section, and 64, 32, 16, and 3 channels were incorporated into the feature fusion phase from the encoder section when the feature dimension was transformed. The whole network constitutes a feature pyramid. This part identifies a feature pyramid suitable for this network by studying how the pyramid widths change.

In this paper, four trends of feature width are compared and validated, as shown in Table 6, where width indicates the number of channels per TConv output since the features are transmitted along in the direction. The results show that widths of 128, 96, 64, and 40, in this order, perform best.

4.4. Loss Results

The loss functions are described in detail in the previous sections. This section examines the performance of different loss functions on our network, as shown in Table 7. The results show that the proposed loss, which combines focal loss with the dice coefficient, outperforms the other loss functions.
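A minimal sketch of a loss that combines focal loss with a dice term is given below. It assumes the standard binary forms of both losses, common default values for `alpha` and `gamma`, and an unweighted sum controlled by a hypothetical `dice_weight`; the exact formulation and weighting used in this paper may differ. Plain Python lists of per-pixel crack probabilities stand in for tensors.

```python
import math


def dice_loss(probs, targets, smooth=1.0):
    """1 - dice coefficient between predicted probabilities and 0/1 labels."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def focal_loss(probs, targets, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss; alpha and gamma are common defaults (assumed)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)       # avoid log(0)
        p_t = p if t == 1 else 1.0 - p        # probability of the true class
        alpha_t = alpha if t == 1 else 1.0 - alpha
        # (1 - p_t)^gamma down-weights pixels that are already easy.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


def combined_loss(probs, targets, dice_weight=1.0):
    """Focal loss plus a weighted dice term (the weighting is an assumption)."""
    return focal_loss(probs, targets) + dice_weight * dice_loss(probs, targets)


# One crack pixel among three background pixels.
targets = [1, 0, 0, 0]
good = combined_loss([0.9, 0.1, 0.1, 0.1], targets)
bad = combined_loss([0.1, 0.9, 0.1, 0.1], targets)
```

The focal term reduces the contribution of the many easy background pixels, while the dice term depends on the overlap with the crack region rather than on the pixel count, which is why the pair is often used together on imbalanced segmentation data.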

4.5. Other Network Results

4.5.1. Results on CCD

To validate the effectiveness of the proposed method, it is compared with other methods, including the classical algorithms FCN, SegNet, Unet, and DeepLabv3+. In addition, the method is compared with Swin-unet, which uses a pure transformer, to further demonstrate its advantages in the crack segmentation task.

The experimental results are shown in Table 8, where U-shaped networks and encoder–decoder structures perform better on the crack segmentation task, while algorithms such as DeepLabv3+ and Swin-unet show disappointing performances. The method proposed in this paper outperforms the other methods in terms of accuracy, F1-score, mIoU, and mPA. Samples of crack detection using different networks are shown in Figure 9. In the second test sample, for example, fine (only one pixel wide) and blurred cracks pose a challenge to detection, but our method detects the whole crack more completely and comes closest to the ground-truth image.

4.5.2. Results on BCL Dataset

To further support the method in this paper, the Bridge Crack Library (BCL) [51] dataset, published on Harvard Dataverse in 2020, was used to validate the network, as shown in Table 9. From a random sample of 2000 images from the BCL dataset, 80% were used for training, 10% for validation, and 10% for testing, so that the experiments use the same setup as for the CCD. The experimental results show that the proposed method achieves an accuracy of 98.89%, an mIoU of 82.9%, and an mPA of 90.47% on the BCL dataset, which are higher than those of the other networks.

4.6. Computational Comparison

The computational complexity of the proposed CCSN network was evaluated against other networks (FCN, SegNet, DeepLabv3+, Unet, Unet++, Swin-unet). The practical performance of the method was evaluated by comparing the number of parameters, floating point operations (FLOPs), memory storage, and FPS of these networks. The computational complexity is shown in Table 10. The training criteria, including the training datasets, test datasets, and hyperparameters, were set identically for all networks. The proposed CCSN network consists of 4.28 million learnable parameters, with only 7.17 GFLOPs and 9.61 MB of storage, all of which are much lower than those of the other networks. In addition, the training time of this method in the experiment is 65.67 min, which is shorter than that of the other methods. In terms of FPS, the proposed network outperforms DeepLabv3+ and Unet++ and is slightly slower than SegNet, Unet, and Swin-unet. However, it still leads on the overall evaluation metrics.