Using Random Ordering in User Experience Testing to Predict Final User Satisfaction

Abstract

1. Introduction

2. Literature Review

2.1. UX Evaluation Methods

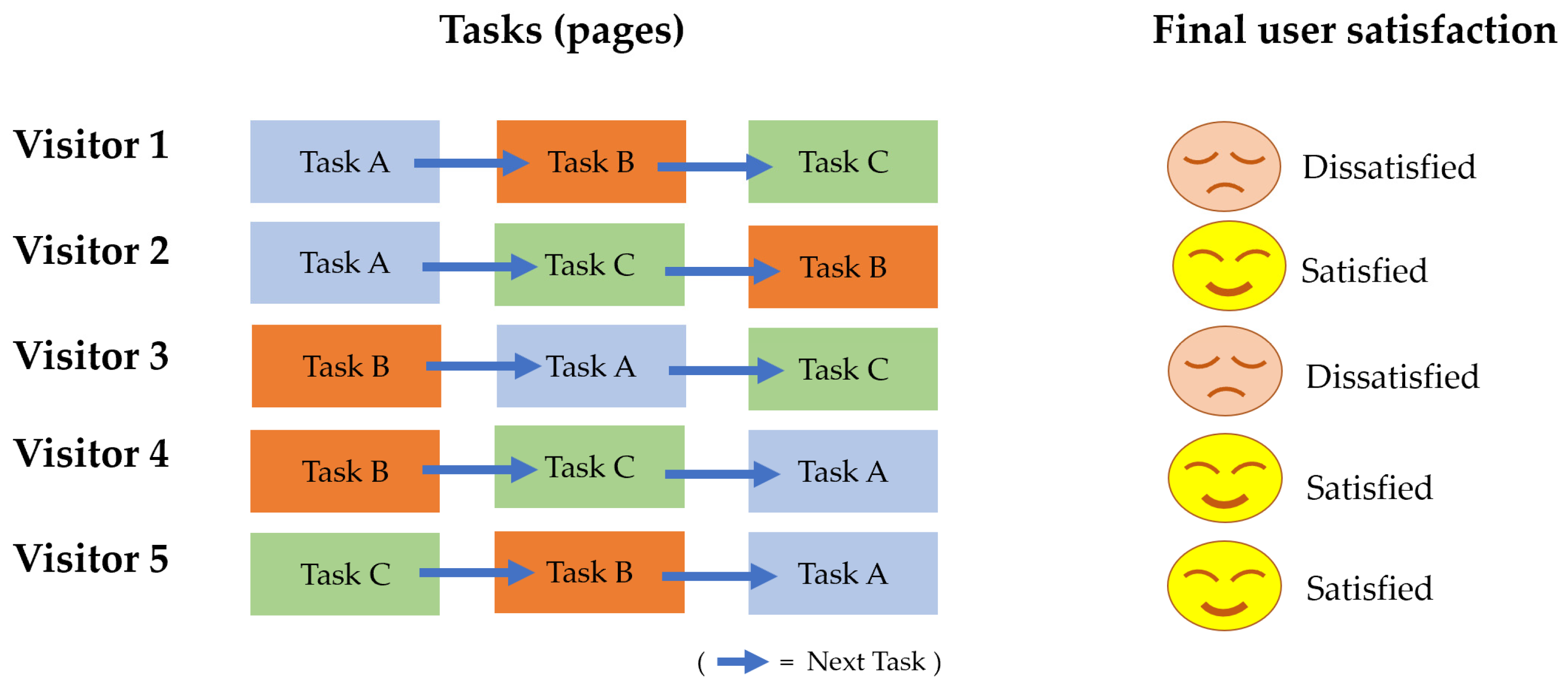

2.2. Order Effect in UX

2.3. Machine Learning

2.4. Sampling Techniques

3. Materials and Methods

Proposed Framework

4. Experiments

4.1. Preliminary Experiments

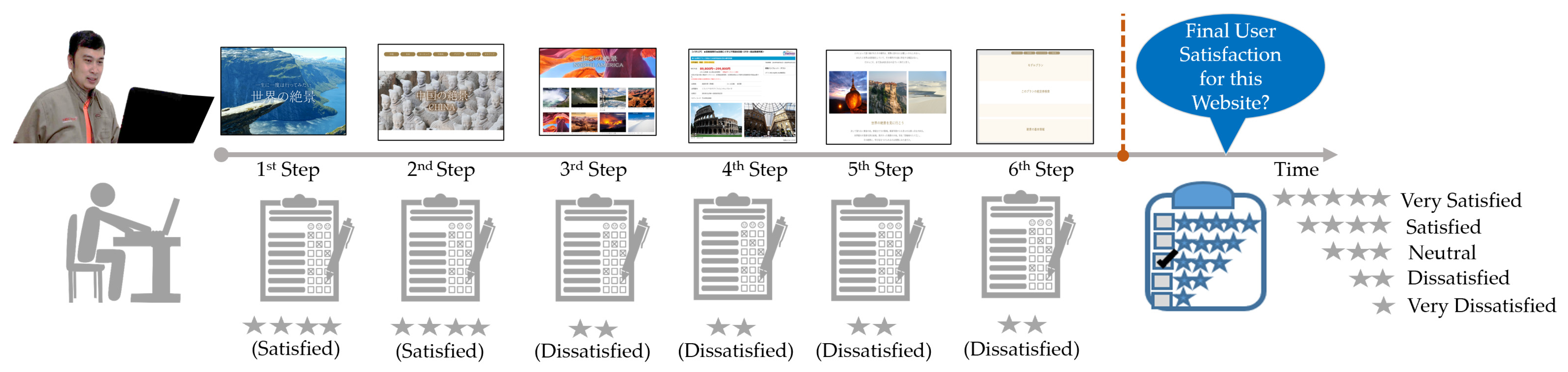

4.1.1. Preliminary Experiment I: Travel Agency Website

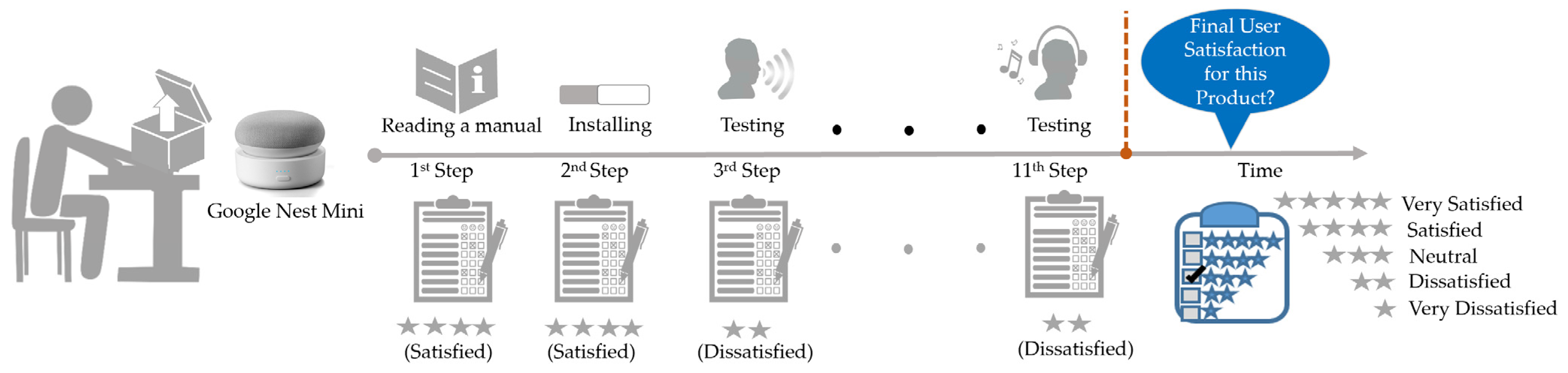

4.1.2. Preliminary Experiment II: Google Nest Mini

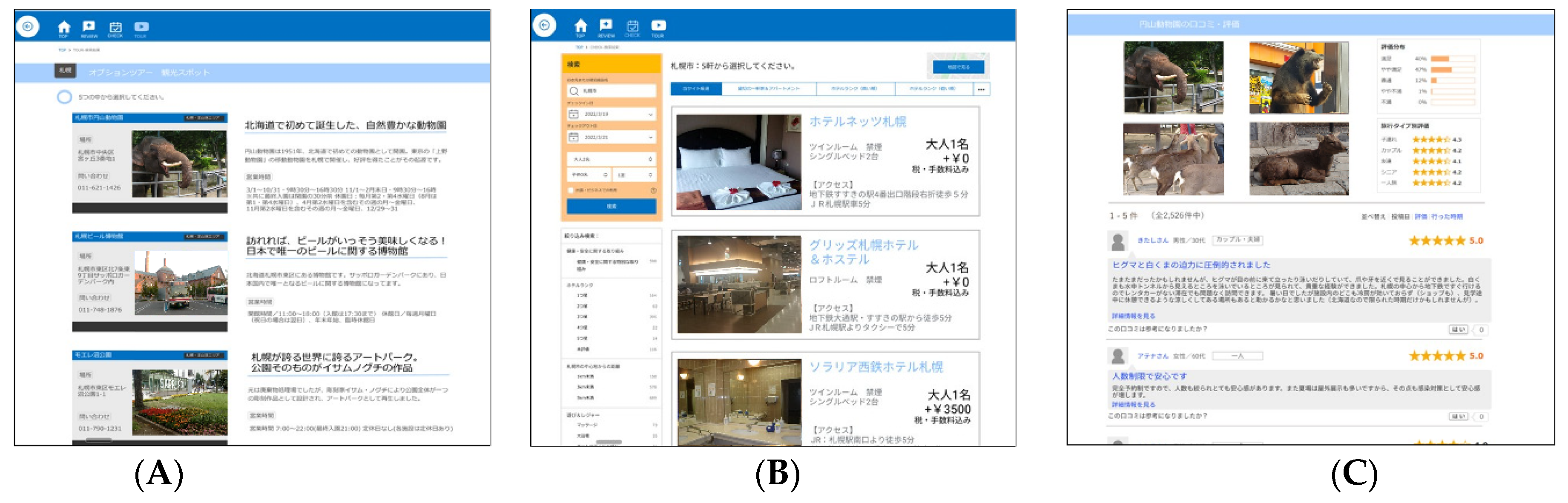

4.2. Main Experiment

4.2.1. Dataset Structure

4.2.2. Building Classification Models

4.2.3. Model Evaluation

5. Results and Discussion

5.1. Accounting for Actual Task Order in Randomly Ordered UX

5.2. Machine Learning Algorithms

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Leave-One-Out Cross-Validation (LOOCV) | Dataset W1 (Shuffled Order of Tasks) | Dataset W2 (Actual Order of Tasks) |
|---|---|---|
| Cross-validation accuracy without oversampling | 0.48 | 0.72 |
| Cross-validation accuracy with oversampling (SMOTEN) | 0.58 | 0.90 |

| Leave-One-Out Cross-Validation (LOOCV) | Dataset P1 (Shuffled Order of Tasks) | Dataset P2 (Actual Order of Tasks) |
|---|---|---|
| Cross-validation accuracy without oversampling | 0.56 | 0.60 |
| Cross-validation accuracy with oversampling (SMOTEN) | 0.64 | 0.76 |
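The two tables above report leave-one-out cross-validation (LOOCV) accuracy with and without oversampling. The following is a minimal sketch of that evaluation loop, not the authors' implementation. It rests on several assumptions: the classifier is a hypothetical 3-nearest-neighbour vote over Hamming distance, plain random oversampling stands in for SMOTEN, and the oversampling is applied only to the training fold so that the held-out sample never influences the balanced training set.

```python
import random
from collections import Counter


def hamming(a, b):
    """Number of positions at which two categorical rating vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def predict_knn(train_X, train_y, x, k=3):
    """Majority vote among the k training samples closest to x (hypothetical classifier)."""
    nearest = sorted(range(len(train_X)), key=lambda i: hamming(train_X[i], x))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def oversample(X, y, rng):
    """Random oversampling (a stand-in for SMOTEN): duplicate samples of the
    smaller classes until every class is as large as the largest one."""
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        pool = [x for x, lab in zip(X, y) if lab == label]
        for _ in range(target - n):
            X_out.append(rng.choice(pool))
            y_out.append(label)
    return X_out, y_out


def loocv_accuracy(X, y, balance=False, seed=0):
    """Hold out each sample once, train on the rest, and average the hits."""
    rng = random.Random(seed)
    correct = 0
    for i in range(len(X)):
        train_X = X[:i] + X[i + 1:]
        train_y = y[:i] + y[i + 1:]
        if balance:
            # Balance the training fold only; the held-out sample is untouched.
            train_X, train_y = oversample(train_X, train_y, rng)
        correct += predict_knn(train_X, train_y, X[i]) == y[i]
    return correct / len(X)
```

Calling `loocv_accuracy(X, y)` and `loocv_accuracy(X, y, balance=True)` on the same data gives the two figures that correspond to the "without oversampling" and "with oversampling" rows, here with `X` as a list of rating tuples and `y` as a list of satisfaction labels.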

| Main Task A: Finding a Tour | Main Task B: Finding a Hotel | Main Task C: Reviewing Information |
|---|---|---|
| Subtask A1: view tours | Subtask B1: view hotels | Subtask C1: read trip reviews |
| Subtask A2: read tour details | Subtask B2: read hotel details | Subtask C2: read tour reviews |
| Subtask A3: compare and book a tour | Subtask B3: compare and book a hotel | Subtask C3: read hotel reviews |
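To illustrate how a random task order could be generated from the task list above and how the resulting ratings might be arranged, here is a small sketch. Everything beyond the task and subtask labels is an assumption made for illustration: the shuffling granularity (main tasks are permuted while subtasks stay in sequence), the seeding scheme, and the helper names `random_task_order` and `features_by_task` are hypothetical and are not taken from the study protocol.

```python
import random

# Task structure taken from the table above.
MAIN_TASKS = {
    "A": ["A1", "A2", "A3"],
    "B": ["B1", "B2", "B3"],
    "C": ["C1", "C2", "C3"],
}


def random_task_order(participant_id, seed=0):
    """Return the subtask sequence shown to one participant.

    Assumption: main tasks are permuted at random, and the subtasks of each
    main task are kept together in their listed order.
    """
    rng = random.Random(f"{seed}-{participant_id}")  # reproducible per participant
    mains = list(MAIN_TASKS)
    rng.shuffle(mains)
    return [sub for main in mains for sub in MAIN_TASKS[main]]


def features_by_task(order, ratings):
    """Rearrange ratings recorded in presentation order into the fixed
    task order A1..C3, so that each column always refers to the same subtask."""
    by_task = dict(zip(order, ratings))
    return [by_task[sub] for main in sorted(MAIN_TASKS) for sub in MAIN_TASKS[main]]


order = random_task_order(participant_id=7)
ratings = [3, 4, 2, 5, 5, 4, 1, 2, 3]          # hypothetical ratings, one per step
by_position = ratings                           # columns follow the order experienced
by_identity = features_by_task(order, ratings)  # columns follow the task labels
```

The two vectors hold the same nine ratings in different arrangements, one indexed by when a rating was given and one by which subtask it belongs to. The sketch makes no claim about which arrangement matches the shuffled-order and actual-order datasets in the results tables.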

| Evaluation | Score | Dataset | Random Forest | KNN | SVM Poly | SVM Linear | SVM RBF | SVM Sigmoid | AdaBoost |
|---|---|---|---|---|---|---|---|---|---|
| LOOCV | Cross-validation accuracy | Dataset I | 0.68 | 0.61 | 0.68 | 0.60 | 0.75 | 0.46 | 0.61 |
| LOOCV | Cross-validation accuracy | Dataset II | 0.70 | 0.71 | 0.76 | 0.76 | 0.76 | 0.61 | 0.70 |
| Training/test split (80/20) | Accuracy | Dataset I | 0.83 | 0.90 | 0.93 | 0.83 | 0.93 | 0.57 | 0.87 |
| Training/test split (80/20) | Accuracy | Dataset II | 0.83 | 0.83 | 0.97 | 0.83 | 0.93 | 0.70 | 0.73 |
| Training/test split (80/20) | Precision | Dataset I | 0.85 | 0.92 | 0.93 | 0.87 | 0.94 | 0.54 | 0.89 |
| Training/test split (80/20) | Precision | Dataset II | 0.88 | 0.85 | 0.97 | 0.88 | 0.94 | 0.84 | 0.80 |
| Training/test split (80/20) | Recall | Dataset I | 0.83 | 0.90 | 0.93 | 0.83 | 0.93 | 0.57 | 0.87 |
| Training/test split (80/20) | Recall | Dataset II | 0.85 | 0.83 | 0.97 | 0.83 | 0.93 | 0.70 | 0.73 |
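In nearly every row of the table above, recall equals accuracy. That pattern is what a support-weighted average over classes would produce, because weighted recall reduces algebraically to overall accuracy. The table does not state which averaging was used, so the sketch below assumes support weighting purely for illustration; the function name `weighted_scores` and the example labels are hypothetical.

```python
from collections import Counter


def weighted_scores(y_true, y_pred):
    """Return (accuracy, weighted precision, weighted recall).

    Each class contributes its per-class score weighted by its share of the
    true labels (its support). A class that is never predicted contributes a
    precision of 0.
    """
    n = len(y_true)
    support = Counter(y_true)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    precision = recall = 0.0
    for label, count in support.items():
        true_pos = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        weight = count / n
        precision += weight * (true_pos / predicted if predicted else 0.0)
        # weight * (true_pos / count) simplifies to true_pos / n, so summing
        # over classes yields the overall accuracy.
        recall += weight * (true_pos / count)
    return accuracy, precision, recall


# Hypothetical three-level satisfaction labels.
y_true = ["high", "high", "high", "low", "low", "mid"]
y_pred = ["high", "high", "low", "low", "low", "high"]
acc, prec, rec = weighted_scores(y_true, y_pred)
```

Under this assumption, a reported recall that differs from the accuracy in the same column would point to rounding or to a different averaging scheme, which is worth checking before comparing models on recall alone.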

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Koonsanit, K.; Hiruma, D.; Yem, V.; Nishiuchi, N. Using Random Ordering in User Experience Testing to Predict Final User Satisfaction. Informatics 2022, 9, 85. https://doi.org/10.3390/informatics9040085
