Decision Tree Modeling for Osteoporosis Screening in Postmenopausal Thai Women

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.2. Experimental Design

2.3. Data Preparation

2.3.1. Missing Data Handling

2.3.2. Training and Testing Data

2.3.3. Imbalanced Data Handling

2.4. DT Algorithms

2.4.1. CART Algorithm

2.4.2. QUEST Algorithm

2.4.3. CHAID Algorithm

2.4.4. C4.5 Algorithm

2.5. Model Construction and Selection

- Accuracy = (TP + TN)/(TP + TN + FP + FN)

- Sensitivity = TP/(TP + FN)

- Specificity = TN/(TN + FP)

- Positive predictive value (PPV) = TP/(TP + FP)

- Negative predictive value (NPV) = TN/(TN + FN)
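The five measures above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `ConfusionCounts` class and `screening_metrics` function are hypothetical names, and the abnormal group is assumed to be the positive class. The example counts (TP = 24, TN = 4, FP = 5, FN = 2) were tallied from the CHAID column of the 35-case blind-test table in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Confusion-matrix counts, with 'abnormal' assumed to be the positive class."""
    tp: int  # abnormal cases predicted abnormal
    tn: int  # normal cases predicted normal
    fp: int  # normal cases predicted abnormal
    fn: int  # abnormal cases predicted normal


def screening_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Apply the five formulas listed above to one set of counts."""
    return {
        "accuracy": (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn),
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "ppv": c.tp / (c.tp + c.fp),
        "npv": c.tn / (c.tn + c.fn),
    }


# Counts tallied from the CHAID column of the blind-test table (35 cases).
chaid_blind = ConfusionCounts(tp=24, tn=4, fp=5, fn=2)
metrics = screening_metrics(chaid_blind)

for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts the sketch gives 0.800, 0.923, 0.444, 0.828 and 0.667, which agree with the CHAID row of the blind-test performance table. The function does not guard against empty denominators, so a model that never predicts one of the classes would need extra handling.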

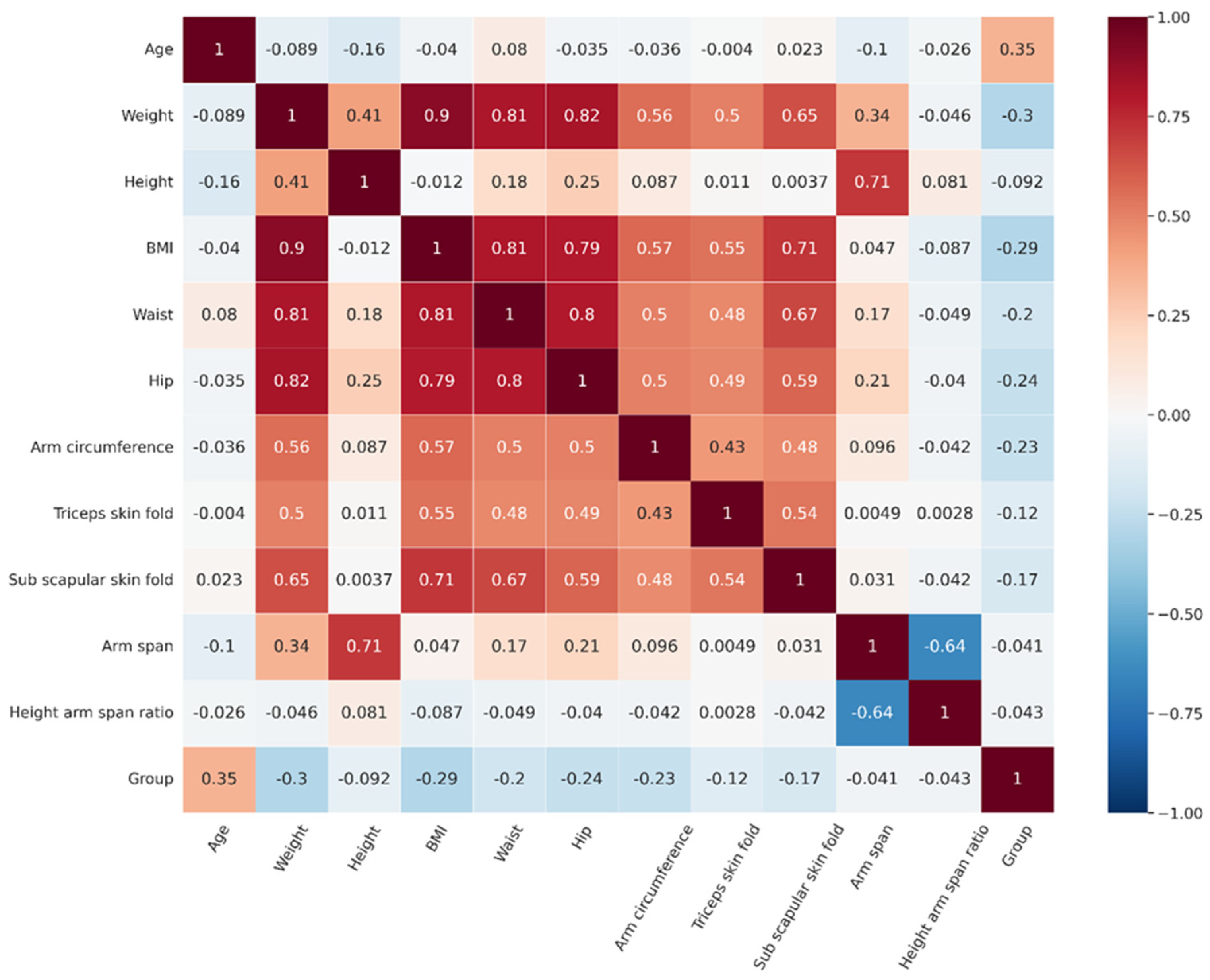

2.6. Statistical Analysis

3. Experimental Results

3.1. General Characteristics and the Difference in Parameters between the Normal and Abnormal Groups

3.2. Performance of Four Decision Tree Algorithms and the Selected Model

3.3. Blind Data Testing, Final Model Selection and Rule Extraction

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Parameters | Normal Group (BMD T-Score ≥ −1.0 SD) (n = 90): Missing Values, n | Normal Group: Missing Values, % | Abnormal Group (BMD T-Score < −1.0 SD) (n = 266): Missing Values, n | Abnormal Group: Missing Values, % |
|---|---|---|---|---|
| Age (years) | 0 | 0.0 | 0 | 0.0 |
| Weight (kg) | 0 | 0.0 | 0 | 0.0 |
| Height (cm) | 0 | 0.0 | 0 | 0.0 |
| Body mass index (kg/m²) | 2 | 2.2 | 0 | 0.0 |
| Waist (cm) | 2 | 2.2 | 4 | 1.5 |
| Hip (cm) | 2 | 2.2 | 5 | 1.9 |
| Arm circumference (cm) | 2 | 2.2 | 0 | 0.0 |
| Triceps skinfold (mm) | 2 | 2.2 | 2 | 0.8 |
| Subscapular skinfold (mm) | 2 | 2.2 | 2 | 0.8 |
| Arm span (cm) | 2 | 2.2 | 1 | 0.4 |
| Height arm span ratio | 2 | 2.2 | 1 | 0.4 |

| DT Algorithm | Measure Used to Select Input Variable | Hyperparameter | Value |
|---|---|---|---|
| CART | Gini index | Maximum tree depth | 5 |
| | | Minimum number of cases: parent node | 100 |
| | | Minimum number of cases: child node | 50 |
| QUEST | F/Chi-square test | Maximum tree depth | 5 |
| | | Minimum number of cases: parent node | 100 |
| | | Minimum number of cases: child node | 50 |
| CHAID | Chi-square test | Significance level for split | 0.05 |
| | | Significance level for merge | 0.05 |
| | | Maximum tree depth | 2 |
| | | Minimum number of cases: parent node | 100 |
| | | Minimum number of cases: child node | 50 |
| C4.5 (J48) | Entropy/information gain | Confidence factor | 0.25 |
| | | Minimum number of objects | 20 |
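Two of the split measures named in the table, the Gini index (CART) and entropy/information gain (C4.5), can be illustrated with a short sketch. It is an illustration under stated assumptions, not the software the authors used. The root-node class counts (90 normal, 266 abnormal) are the group sizes reported in this article before any resampling. The candidate split counts are invented purely to show the arithmetic, and the function names are hypothetical. The F/Chi-square tests used by QUEST and CHAID are not covered.

```python
import math


def gini_impurity(counts):
    """Gini index of a node: 1 - sum(p_k^2) over the class proportions p_k."""
    total = sum(counts)
    return 1.0 - sum((n / total) ** 2 for n in counts)


def entropy(counts):
    """Shannon entropy (in bits) of a node's class distribution."""
    total = sum(counts)
    return -sum((n / total) * math.log2(n / total) for n in counts if n > 0)


def weighted_impurity(children, impurity):
    """Impurity after a split: child impurities weighted by child size."""
    total = sum(sum(child) for child in children)
    return sum(sum(child) / total * impurity(child) for child in children)


# Root node: [normal, abnormal] group sizes reported in the article.
root = [90, 266]

# Hypothetical candidate split; these child counts are made up for illustration.
left, right = [60, 80], [30, 186]

gini_decrease = gini_impurity(root) - weighted_impurity([left, right], gini_impurity)
info_gain = entropy(root) - weighted_impurity([left, right], entropy)

print(f"root Gini = {gini_impurity(root):.4f}, decrease = {gini_decrease:.4f}")
print(f"root entropy = {entropy(root):.4f}, information gain = {info_gain:.4f}")
```

A tree builder would evaluate such a quantity for every candidate split and keep the one with the largest impurity decrease, subject to the depth and minimum-case limits in the table.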

| Parameters | Normal Group (T-Score ≥ −1.0 SD) (n = 90): Mean (SD) | Normal Group: Median (IQR) | Abnormal Group (T-Score < −1.0 SD) (n = 266): Mean (SD) | Abnormal Group: Median (IQR) | p-Value |
|---|---|---|---|---|---|
| Age, years | 52.80 (6.95) | 53.00 (8.00) | 58.67 (6.39) | 59.00 (9.00) | <0.001 a |
| Weight, kg | 61.84 (10.32) | 59.50 (13.60) | 55.18 (8.46) | 54.50 (9.60) | <0.001 b |
| Height, cm | 156.09 (5.43) | 156.00 (8.00) | 154.90 (5.25) | 155.00 (7.00) | 0.111 b |
| Body mass index, kg/m² | 25.42 (3.98) | 24.93 (6.18) | 22.98 (3.14) | 22.60 (3.92) | <0.001 b |
| Waist, cm | 82.02 (9.61) | 81.00 (14.00) | 77.65 (8.70) | 77.00 (10.00) | <0.001 b |
| Hip, cm | 99.69 (7.64) | 99.75 (9.80) | 95.37 (7.10) | 95.00 (6.50) | <0.001 b |
| Arm circumference, cm | 29.65 (7.51) | 28.25 (5.50) | 27.13 (2.86) | 27.00 (4.00) | <0.001 b |
| Triceps skinfold, mm | 23.24 (5.66) | 24.00 (9.00) | 21.34 (6.64) | 21.00 (7.00) | 0.003 b |
| Subscapular skinfold, mm | 27.50 (6.90) | 28.00 (9.50) | 24.88 (6.81) | 24.00 (10.00) | 0.003 b |
| Arm span, cm | 159.00 (7.26) | 158.00 (10.80) | 158.46 (7.18) | 158.00 (9.50) | 0.768 b |
| Height arm span ratio | 0.98 (0.03) | 0.98 (0.04) | 0.98 (0.00) | 0.98 (0.04) | 0.617 b |

| Model | Accuracy, Mean (SD) | Sensitivity, Mean (SD) | Specificity, Mean (SD) | AUC, Mean (SD) |
|---|---|---|---|---|
| CART | 0.699 (0.045) | 0.709 (0.106) | 0.646 (0.133) | 0.686 (0.027) |
| QUEST | 0.662 (0.042) | 0.653 (0.123) | 0.688 (0.137) | 0.670 (0.061) |
| CHAID | 0.665 (0.078) | 0.661 (0.123) | 0.676 (0.101) | 0.716 (0.060) |
| C4.5 | 0.677 (0.047) | 0.710 (0.072) | 0.584 (0.113) | 0.672 (0.071) |
| p-value | 0.433 | 0.591 | 0.243 | 0.156 |

| Representative Algorithm | Accuracy | Sensitivity | Specificity | AUC |
|---|---|---|---|---|
| CART | 0.784 | 0.847 | 0.600 | 0.702 |
| QUEST | 0.722 | 0.667 | 0.880 | 0.773 |
| CHAID | 0.804 | 0.875 | 0.600 | 0.735 |
| C4.5 | 0.711 | 0.764 | 0.560 | 0.639 |

| No. | Age | Weight | BMI | Diagnosis | CART | QUEST | CHAID | C4.5 | OSTA | KKOS |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 40 | 65.0 | 28.89 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 47 | 62.0 | 24.84 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 47 | 49.0 | 20.66 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |
| 4 | 49 | 54.9 | 22.27 | 1 | 1 | 0 | 1 | 1 | 0 | 0 |
| 5 | 50 | 48.9 | 18.40 | 1 | 1 | 0 | 1 | 1 | 0 | 1 |
| 6 | 50 | 54.5 | 21.29 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |
| 7 | 52 | 48.7 | 20.94 | 1 | 1 | 0 | 1 | 1 | 0 | 1 |
| 8 | 52 | 55.5 | 21.15 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |
| 9 | 53 | 57.0 | 25.33 | 1 | 0 | 0 | 1 | 0 | 0 | 0 |
| 10 | 54 | 51.3 | 21.35 | 1 | 1 | 0 | 1 | 1 | 0 | 0 |
| 11 | 54 | 56.0 | 22.43 | 1 | 1 | 0 | 1 | 1 | 0 | 0 |
| 12 | 54 | 48.0 | 18.75 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |
| 13 | 54 | 59.8 | 25.55 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 14 | 55 | 51.7 | 23.29 | 1 | 1 | 0 | 1 | 1 | 0 | 1 |
| 15 | 56 | 48.8 | 19.80 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |
| 16 | 56 | 68.0 | 29.43 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 17 | 57 | 62.0 | 25.81 | 1 | 0 | 1 | 0 | 0 | 0 | 0 |
| 18 | 58 | 38.1 | 15.46 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 19 | 58 | 54.8 | 22.81 | 1 | 1 | 1 | 1 | 1 | 0 | 1 |
| 20 | 58 | 55.0 | 22.03 | 1 | 1 | 1 | 1 | 1 | 0 | 0 |
| 21 | 58 | 57.5 | 23.03 | 1 | 0 | 1 | 1 | 0 | 0 | 0 |
| 22 | 58 | 58.5 | 23.73 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 23 | 59 | 49.5 | 22.75 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 24 | 59 | 46.1 | 20.49 | 0 | 1 | 1 | 1 | 1 | 1 | 1 |
| 25 | 60 | 95.0 | 33.26 | 1 | 1 | 1 | 1 | 0 | 0 | 0 |
| 26 | 62 | 51.8 | 20.49 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 27 | 62 | 62.0 | 26.14 | 0 | 1 | 1 | 1 | 1 | 0 | 0 |
| 28 | 63 | 48.0 | 19.98 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 29 | 64 | 61.0 | 24.75 | 1 | 1 | 1 | 1 | 1 | 0 | 0 |
| 30 | 65 | 61.2 | 27.20 | 1 | 1 | 1 | 1 | 1 | 0 | 0 |
| 31 | 65 | 42.5 | 19.40 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 32 | 68 | 51.4 | 23.47 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 33 | 68 | 57.0 | 23.12 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 34 | 69 | 45.8 | 20.91 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 35 | 70 | 55.2 | 24.53 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| Model | Accuracy | Sensitivity | Specificity | PPV | NPV | No. of Variables | Features in the Model |
|---|---|---|---|---|---|---|---|
| CART | 0.743 | 0.846 | 0.444 | 0.815 | 0.500 | 2 | age, weight |
| QUEST | 0.629 | 0.615 | 0.667 | 0.842 | 0.375 | 1 | age |
| CHAID | 0.800 | 0.923 | 0.444 | 0.828 | 0.667 | 2 | age, weight |
| C4.5 | 0.714 | 0.808 | 0.444 | 0.808 | 0.444 | 3 | age, weight, BMI |
| OSTA | 0.543 | 0.423 | 0.889 | 0.917 | 0.348 | 2 | age, weight |
| KKOS | 0.657 | 0.577 | 0.889 | 0.938 | 0.421 | 2 | age, weight |

| Rule | Length | Condition (age in years, weight in kg) | Prediction |
|---|---|---|---|
| 1 | 1 | Age > 58 | 1, Abnormal |
| 2 | 2 | Age ≤ 58 and weight > 57.8 | 0, Normal |
| 3 | 2 | Age ≤ 58 and weight ≤ 57.8 | 1, Abnormal |
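The three rules above translate directly into a small screening function. The sketch below is illustrative only: `predict_abnormal` and `BLIND_CASES` are hypothetical names, and age is assumed to be in years and weight in kilograms, as in the other tables. The case list copies the age, weight, diagnosis and CHAID columns of the 35-case blind-test table. On those rows the rules reproduce the CHAID column exactly, which suggests, although this excerpt does not say so explicitly, that the rules were extracted from the CHAID tree.

```python
def predict_abnormal(age_years: float, weight_kg: float) -> int:
    """Return 1 (abnormal) or 0 (normal) according to the three extracted rules."""
    if age_years > 58:       # Rule 1
        return 1
    if weight_kg > 57.8:     # Rule 2 (age <= 58)
        return 0
    return 1                 # Rule 3 (age <= 58 and weight <= 57.8)


# (age, weight, diagnosis, CHAID prediction) copied from the blind-test table.
BLIND_CASES = [
    (40, 65.0, 0, 0), (47, 62.0, 1, 0), (47, 49.0, 0, 1), (49, 54.9, 1, 1),
    (50, 48.9, 1, 1), (50, 54.5, 0, 1), (52, 48.7, 1, 1), (52, 55.5, 0, 1),
    (53, 57.0, 1, 1), (54, 51.3, 1, 1), (54, 56.0, 1, 1), (54, 48.0, 1, 1),
    (54, 59.8, 0, 0), (55, 51.7, 1, 1), (56, 48.8, 1, 1), (56, 68.0, 0, 0),
    (57, 62.0, 1, 0), (58, 38.1, 1, 1), (58, 54.8, 1, 1), (58, 55.0, 1, 1),
    (58, 57.5, 1, 1), (58, 58.5, 0, 0), (59, 49.5, 1, 1), (59, 46.1, 0, 1),
    (60, 95.0, 1, 1), (62, 51.8, 1, 1), (62, 62.0, 0, 1), (63, 48.0, 1, 1),
    (64, 61.0, 1, 1), (65, 61.2, 1, 1), (65, 42.5, 1, 1), (68, 51.4, 1, 1),
    (68, 57.0, 1, 1), (69, 45.8, 1, 1), (70, 55.2, 1, 1),
]

predictions = [predict_abnormal(age, weight) for age, weight, _, _ in BLIND_CASES]

# Do the rules agree with the tabulated CHAID predictions on every case?
matches_chaid = all(p == chaid for p, (_, _, _, chaid) in zip(predictions, BLIND_CASES))

# How many rule-based predictions agree with the recorded diagnosis?
correct = sum(p == diagnosis for p, (_, _, diagnosis, _) in zip(predictions, BLIND_CASES))

print(f"matches CHAID column: {matches_chaid}")
print(f"accuracy: {correct}/{len(BLIND_CASES)} = {correct / len(BLIND_CASES):.3f}")
```

The rules classify 28 of the 35 blind cases correctly, matching the 0.800 accuracy reported for CHAID in the blind-test performance table.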

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Makond, B.; Pornsawad, P.; Thawnashom, K. Decision Tree Modeling for Osteoporosis Screening in Postmenopausal Thai Women. Informatics 2022, 9, 83. https://doi.org/10.3390/informatics9040083
