Abstract

Immersive technologies have been shown to significantly improve learning, as they can simplify and simulate complicated concepts in various fields. However, there is a lack of studies that analyze recent evidence-based immersive learning experiences applied in classroom settings or offered to the public. This study presents a systematic review of 42 papers to understand, compare, and reflect on recent attempts to integrate immersive technologies into education using seven dimensions: application field, the technology used, educational role, pedagogical strategies, interaction techniques, evaluation methods, and challenges. The results show that most studies covered STEM (science, technology, engineering, math) topics and mostly used head-mounted display (HMD) virtual reality in addition to marker-based augmented reality, while mixed reality was represented in only two studies. Further, the studies mostly used a form of active learning and highlighted touch and hardware-based interactions enabling viewpoint and selection tasks. Moreover, the studies used experiments, questionnaires, and evaluation studies to evaluate the immersive experiences. The evaluations show improved performance and engagement but also point to various usability issues. Finally, we discuss implications and future research directions, and compare our findings with related review studies.

1. Introduction

Immersive technologies create distinct artificial experiences by blurring the line between the real and virtual worlds [1]. These technologies, including virtual reality (VR), augmented reality (AR), and mixed reality (MR), have recently become prevalent in various domains, including marketing [2], healthcare [3], entertainment [4], and education [5]. In fact, immersive technologies are expected to generate more than USD 12 billion in revenue in 2023 [6].

The incorporation of immersive technologies in education is on the rise, as they help students visualize abstract concepts and engage them with realistic experiences [7]. Further, immersive technologies help students develop special skills that are much harder to attain with traditional pedagogical resources [8]. Indeed, immersive technologies have been shown to improve participation [9] and amplify engagement [10]. Innovative education based on immersive technologies is particularly crucial for Generation Z students, who prefer learning from the internet over traditional means [11].

Existing literature reviews have sought to summarize current efforts to apply immersive technologies in education. For example, Radianti et al. [12] and Pellas et al. [13] presented the learning theories and pedagogical strategies applied in immersive learning experiences, while Akçayır and Akçayır [8] highlighted the motivations and benefits of immersive technologies in education. Moreover, Bacca et al. [14] and Quintero et al. [15] discussed the role of immersive technologies in educational inclusion. Santos et al. [16] and Radianti et al. [12] illustrated the design methods of immersive systems in education. Lastly, Luo et al. [17] investigated the evaluation methods of immersive systems in education.

Although these reviews contributed to the body of knowledge, their primary focus was on using immersive technologies to improve learning outcomes [12], identifying the advantages and obstacles of applying immersive technologies in education [8,14,18], and determining the types of immersive technologies used in education [19]. Moreover, the existing studies focused on a specific type of immersive technology (e.g., AR, VR) or a specific level of education (e.g., K-12, higher education), and hardly covered interaction techniques or how immersive affordances can be useful in education.

Given the extensive research on immersive technologies in education, it is crucial to systematically review the literature to illuminate seven key elements: application field, types of technology, the role of technology in education, pedagogical strategies, interaction styles, evaluation methods, and challenges.

By systematically examining 42 articles illustrating immersive learning experiences (ILEs), our work presents: (1) an extensive analysis of the approaches and interaction styles of immersive technologies used to enhance learning, (2) an illustration of the role of immersive technologies and their educational affordances, (3) a detailed presentation of the evaluation methods utilized to support the validity of the ILEs, and (4) a discussion of the challenges, implications, and future research directions related to ILEs. This research will benefit the human-computer interaction (HCI) community, educators, and researchers involved in immersive learning research.

This article is structured as follows: Section 2 highlights background information about immersive technologies, while Section 3 examines the related work. Section 4 illustrates the methodology, and Section 5 explains the results. Section 6 discusses the findings, Section 7 presents the study’s limitations, and Section 8 concludes the study.

2. Background

This section gives an overview of the immersive technologies covered in this study. It also illustrates the interaction techniques employed in immersive lessons. Finally, the section introduces an educational model (SAMR) used in this study to define the role of immersive technologies in education.

2.1. Immersive Learning

Definitions of immersive learning vary, as authors mean different things when using the term [20]. For instance, some authors define immersive learning as learning enabled by the use of immersive technologies [21]. However, some researchers argue for distinguishing the technology from the effect it creates [22]. The term immersion describes both the technological elements of a medium and the response emerging from the combination of the human perceptual and motor systems. To that effect, Dengel and Mägdefrau [23] divided immersive learning into use and supply sides. The use side focuses on learning processes moderated by the feeling of presence, while the supply side is concerned with the educational medium. By concentrating on the impact of immersion on learning and perceptual processes rather than on technological features, immersive learning becomes timeless and independent of technological advances [20]. As such, immersive learning facilitates learning using technological affordances that induce a sense of presence (the feeling of being there), co-presence (the feeling of being there together), and the building of identity (connecting the visual representation to the self) [22,24].

Several frameworks and models have been introduced to discuss how immersive affordances can be useful in education. For instance, a framework for the use of immersive virtual reality (iVR) technologies based on the cognitive theory of multimedia learning (CTML) [25] is used to identify the objective and subjective factors of presence. The objective factors relate to the immersive technology, while the subjective factors consist of motivational, emotional, and cognitive aspects. The cognitive affective model of immersive learning (CAMIL) is another model, introduced to help understand how to use immersive technology in learning environments based on cognitive and affective factors, including interest, motivation, self-efficacy, cognitive load, and self-regulation [26]. It describes how these factors lead to the acquisition of factual, conceptual, and procedural knowledge. Immersive technologies can enrich teaching and learning environments; however, they are technology-driven and lack instructional concepts.

2.2. Virtual Reality (VR)

In general, VR can be defined as the combination of hardware and software that creates an artificially simulated experience akin to or different from the real world [27]. The concept of VR can be traced back to early novels, before it was introduced as a technology [28]. Ivan Sutherland is thought to be the first to introduce VR as a computer technology, in his PhD thesis [29], in which he contributed a man–machine graphical communication system called Sketchpad [30]. VR was later popularized by Jaron Lanier, who founded VPL Research [31]. Subsequently, researchers studied and closely examined the technology. Over time, communities from several fields, including engineering, physics, and chemistry, contributed to the evolution of the technology [28].

Table 1 shows an overview of existing VR systems. In terms of immersion, VR experiences can be partially or fully immersive. Partially immersive VR systems give participants the feeling of being in a simulated reality while they remain connected to their physical environment. In contrast, full immersion gives users a more realistic feeling of the artificial environment, complete with sound and sight [32]. Further, fully immersive VR systems supply a 3D locus in a large field of view [33].

Table 1.

An overview of VR systems.

Partially immersive VR systems use surface projection. A wall projector does not require participants to wear goggles, but they wear tracking gloves that allow them to interact with the system [34]. ImmersaDesk requires users to wear specific goggles so that they can view the projected content in 3D. Each of the participant's eyes views the same scene from a slightly different perspective [35].

Fully immersive VR systems can be based on a head-mounted display (HMD), a room with projection screen walls, or a room for vehicle simulation. HMDs are binocular head-based devices that participants wear on their heads and that deliver auditory and visual feedback. HMDs feature a large field of view because they provide a separate screen for each eye [36]. HMDs also track head position, allowing for feedback and interactivity.

Room-based VR systems allow users to experience virtual reality in a room. The CAVE automatic virtual environment (CAVE) is a darkened-room environment covered with wall-sized displays, motion-tracking technology, and computer graphics to provide a full-body experience of a virtual reality environment [37].

Another type of room-based VR system is the vehicle simulator. For example, vehicle simulators are used to train users to react to emergencies and dangerous situations associated with driving vehicles on mine sites [28]. Flight simulators are another example, used to reduce the risks of flight testing and the restrictions of designing new aircraft [38].

Some authors consider non-immersive devices such as monitor-based systems to be part of VR systems [39] as they provide mental immersion [28]. However, in our study, we only include learning experiences based on partially and fully immersive VR systems.

2.3. Augmented Reality (AR)

AR systems blend virtual content with real imagery [45]. Because this blending happens in real time as the user interacts with the system, AR can enhance interaction with the real world by illustrating concepts and principles in situ. There are various taxonomies of AR that consider educational aspects [19], input/output [46], popular uses [47], and technology used [48]. Table 2 shows various examples of AR systems found in the existing taxonomies. This study does not cover AR systems that augment other senses, such as touch, smell, and taste [46].

Table 2.

An overview of AR systems.

AR systems can be marker-based or markerless. Marker-based AR systems depend on the positioning of fiducial markers (e.g., QR codes, bar codes) that are detected by the camera to trigger an AR experience [49]. The markers may be printed on a piece of paper. Users scan the marker using a handheld device or an HMD, which initiates imagery for them to view [47]. Alternatively, the marker may be a physical object. For example, Aurasma could augment the appearance of real-world banknotes by showing entertaining and patriotic animations [48].

Markerless AR systems rely on natural features rather than fiducial markers for tracking [50]. Tracking systems strive to provide accuracy, ergonomic comfort, and calibration [51]; thus, the user experience is essential to the evaluation of markerless AR systems. Examples of markerless AR systems include location-, projection-, and superimposition-based systems. Location-based AR uses the global positioning system (GPS), a gyroscope, and an accelerometer to provide data based on the user's location [52]. Google Maps uses location-based AR to provide directions to users as well as information about points of interest. Projection-based AR uses projection technology to enhance 3D objects and environments in the physical world by projecting imagery onto their surfaces [53]. A notable example is the storyteller sandbox at the D23 Expo, an interactive environment that projects imagery onto the surface of a table filled with sand [54]. Superimposition-based AR partially or fully replaces the view of an object with an augmented view of the same object [55]. This type of AR is often used in the medical field to superimpose imagery that guides surgeons, for example, showing the drill stop during dental implant surgery [56].

2.4. Mixed Reality (MR)

The scientific community has established a clear distinction between VR and AR: VR allows users to manipulate digital objects in an artificial environment, while AR alters the user's visual perception but still allows for interaction with the physical world [61]. MR is an emerging immersive technology that is gaining ground. However, there is no consensus on what constitutes MR [62]. In fact, many do not distinguish between MR and AR, while others consider MR a superset of AR [63]. For the purposes of our study, we follow the definition introduced in [62], which states that MR takes AR further by allowing users to walk into and manipulate virtual objects shown in the real world. Large technology corporations are increasingly driving this new technology. A popular example of MR is Microsoft HoloLens [64], an HMD that uses spatial mapping to place virtual objects in the surrounding space and to support embodied interaction with those objects. A more affordable MR kit is Zapbox, which combines a headset with a regular phone to create an MR experience [65].

2.5. Interaction Techniques of Immersive Technologies

Immersive technologies enable tasks to be conducted in a real or virtual 3D spatial environment, making interaction harder to implement than in other fields of human–computer interaction [66]. Because immersive technologies demand unconventional device setups, strategies, and metaphors, as well as a vast range of input and output methods, they open up a plethora of interaction possibilities [67].

In this study, we define the interaction techniques in immersive learning experiences based on a classification in a recent review study [68]. The interaction techniques can be defined on the input and task levels. In terms of input, the interaction can be hand-based using hand gestures, speech-based using voice commands, head-based using gaze, orientation, or head gestures, and hardware-based using specific controllers. In terms of task, the interaction allows for pointing, selection, translation, scaling, menu-based selection, rotation, or abstract functionality (e.g., edit, add, delete, etc.).
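The two-level classification above can be represented as a small data structure when coding studies. The Python sketch below is illustrative only: the names `InputModality`, `Task`, `Interaction`, and `tally_tasks` are hypothetical and not taken from [68]. It encodes the input and task categories listed above and counts how often each task is enabled across a set of coded interaction techniques.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class InputModality(Enum):
    """Input level of the classification."""
    HAND = "hand gestures"
    SPEECH = "voice commands"
    HEAD = "gaze, orientation, or head gestures"
    HARDWARE = "specific controllers"


class Task(Enum):
    """Task level of the classification."""
    POINTING = "pointing"
    SELECTION = "selection"
    TRANSLATION = "translation"
    SCALING = "scaling"
    MENU = "menu-based selection"
    ROTATION = "rotation"
    ABSTRACT = "abstract functionality (e.g., edit, add, delete)"


@dataclass(frozen=True)
class Interaction:
    """One coded interaction technique: an input modality and the tasks it enables."""
    modality: InputModality
    tasks: frozenset[Task]


def tally_tasks(interactions: list[Interaction]) -> Counter:
    """Count how many coded interactions enable each task."""
    return Counter(task for item in interactions for task in item.tasks)


# Hypothetical coding of two interaction techniques from a single study.
coded = [
    Interaction(InputModality.HARDWARE, frozenset({Task.SELECTION, Task.TRANSLATION})),
    Interaction(InputModality.HEAD, frozenset({Task.POINTING})),
]
print(tally_tasks(coded))
```

Keeping input and task as separate fields mirrors the two levels of the classification, so the same coded data can be tallied by modality or by task.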

2.6. The SAMR Model

The substitution augmentation modification redefinition (SAMR) model by Puentedura [69,70] was developed to examine how technology is infused into instructional activities. The SAMR model allows educators to reflect on and evaluate their technology integration practices as they attempt to create powerful learning experiences. Hamilton et al. [71] suggested that context, such as appropriate learning outcomes and students' needs and expectations, should be considered an implicit aspect of the SAMR model. In addition, the teaching and learning process should be the central focus when choosing the appropriate technology for the students' needs. The first two steps in the SAMR model involve technology as an enhancement tool, while the last two steps involve technology as a transformation tool. In some circumstances, moving from enhancement to transformation can take time as educators practice, reflect, and learn how to choose the appropriate tool.

Substitution is the first step in the enhancement level of technology integration, where technology acts as a direct substitute for an analog tool with no functional change. An example of substitution is using math games to practice the basic operations of addition, subtraction, multiplication, or division instead of paper and pencil. Another example is using an e-reader instead of a textbook without any added functionality.

Augmentation is the next step in the enhancement level. Technology still acts as a direct substitute, but with functional improvement: it adds functionality that would not otherwise be possible. An example of this step is game-based learning in which players learn basic programming concepts by playing a card game and solving problems.

Modification is the first step in the transformation level, where the task is significantly redesigned and the lesson changes definitively. Modification demands more reflection and teacher facilitation. An example of modification is a virtual laboratory or simulator that helps students test ideas and observe results.

Redefinition is the second step in the transformation level and the final step of the SAMR model, where a clear transformation and depth of learning occur. In redefinition, technology allows for the creation of new tasks that were previously inconceivable. An example of redefinition is the use of immersive technology to scrutinize concepts that cannot easily be imagined without the technology, for instance, using interactive online learning tools to understand the complexity of the human body and to explore and examine how its different systems (e.g., tissues and organs) function together properly.
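The ordering of the four SAMR steps and their grouping into the enhancement and transformation levels can be sketched as a small Python enumeration. The three yes/no criteria in `classify` are our simplification of the step descriptions above, not part of Puentedura's model, and all names are hypothetical. As Hamilton et al. [71] note, real classification also depends on context.

```python
from enum import Enum


class Tier(Enum):
    """The two levels into which the SAMR steps are grouped."""
    ENHANCEMENT = "enhancement"
    TRANSFORMATION = "transformation"


class SAMR(Enum):
    """SAMR steps in order; each value is (step number, tier)."""
    SUBSTITUTION = (1, Tier.ENHANCEMENT)
    AUGMENTATION = (2, Tier.ENHANCEMENT)
    MODIFICATION = (3, Tier.TRANSFORMATION)
    REDEFINITION = (4, Tier.TRANSFORMATION)

    @property
    def step(self) -> int:
        return self.value[0]

    @property
    def tier(self) -> Tier:
        return self.value[1]


def classify(functional_improvement: bool, task_redesign: bool, new_task: bool) -> SAMR:
    """Hypothetical rubric that tests the criteria from the highest step down.

    new_task: enables tasks that were previously inconceivable (redefinition)
    task_redesign: significantly redesigns the learning task (modification)
    functional_improvement: adds functionality beyond the analog tool (augmentation)
    """
    if new_task:
        return SAMR.REDEFINITION
    if task_redesign:
        return SAMR.MODIFICATION
    if functional_improvement:
        return SAMR.AUGMENTATION
    return SAMR.SUBSTITUTION


# Examples from the text: an e-reader used like a textbook, and a virtual laboratory.
e_reader = classify(functional_improvement=False, task_redesign=False, new_task=False)
virtual_lab = classify(functional_improvement=True, task_redesign=True, new_task=False)
print(e_reader.name, e_reader.tier.value)
print(virtual_lab.name, virtual_lab.tier.value)
```

The rubric checks the highest step first because each step builds on the one before it. A learning experience that redesigns the task is classified as modification even if it also adds functionality.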

3. Related Work

In the past decade, several studies have reviewed existing immersive learning experiences in education. Table 3 shows an overview of the areas the studies covered. The studies focused on the types of immersive technologies used [8,19], applications [18,19], the learning theories and pedagogy underpinning the learning experiences [12,13,17,18,72], the motivations, benefits, and challenges of the technology [8,13,14,15,18], the role of the technology in education [14,15], design methods [12,13,16,17], and evaluation methods [13,14,16,17].

Table 3.

Areas that existing review studies focused on.

Kesim and Ozarslan [19] provided an overview of the types of immersive systems used in education. For example, HMDs can be video or optical see-through systems, while hand-held devices allow 3D models to be projected onto the real world. Additionally, Akçayır and Akçayır [8] noted that desktop computers can also be used to provide AR learning experiences.

Kesim and Ozarslan [19] discussed applications of AR systems in education, such as the enablement of real-world and collaborative tasks. Moreover, Kavanagh et al. [18] cited other applications such as simulation and training.

Radianti et al. [12] gave an overview of various educational domains in which virtual reality systems were used. Examples include engineering, computer science, astronomy, biology, art-science, and more.

In terms of the learning theories and pedagogical principles underpinning the learning experiences, Radianti et al. [12] cited experiential learning and game-based learning, among others. Other pedagogical principles include collaborative learning [18], activity-based learning [13], architectural pedagogy [72], and scaffolding [17].

Concerning the motivations and benefits of immersive technologies in education, review studies cited improved learning performance [8], encouragement of active learning [18], increased students’ motivation [13], the facilitation of social learning [72], and the promotion of imagination [17].

Challenges to implementing immersive technologies in education include cost, lack of usability, cognitive overload [8], insufficient realism [18], and being limited to a specific field [14].

Quintero et al. [15] discussed the role of immersive technology in educational inclusion, for instance, AR systems have been used to support the education of children with various disabilities (e.g., learning, psychological, visual, etc.). On the other hand, Bacca et al. [14] did not find evidence of AR educational applications addressing the special needs of students.

Concerning the design strategies used in immersive educational experiences, Santos et al. [16] mentioned design strategies that allow for exploration and ensure immersion. Other review studies cited design strategies that support collaboration, discovery [13], and realistic surroundings [12].

Santos et al. [16] presented an overview of evaluation methods used to substantiate immersive learning experiences, such as experiments and usability studies. Other studies cited qualitative exploratory studies [14], mixed methods [13], and interviews [17].

Although these studies have contributed to the literature, they mainly focused on immersive technologies as a learning aid and covered one type of immersive technology (e.g., AR or VR). This study reviews educational immersive learning experiences using seven dimensions: field, type of technology, the role of immersive technology in education, pedagogical strategies, evidence for effectiveness, interaction techniques, and limitations.

Table 4 shows a comparison between this study and the related reviews. In terms of field of application, two studies partially covered the application fields, while three others fully covered them. However, this study covers the application field in more detail as it lists the field and discusses the subfield and level of education.

Table 4.

Comparison between this work and relevant studies.

Concerning the type of immersive technology, two review studies partially covered that dimension. For instance, Kesim and Ozarslan [19] provided a brief overview of AR systems used in education. Three other review studies gave full details on educational immersive technologies. For instance, Luo et al. [17] listed the percentages of studies using various types of VR systems in education. However, this study uses a more comprehensive taxonomy of VR systems [28] and a classification of AR systems elicited from various current studies.

Several studies partially highlighted the role of immersive technologies in assisting education. Notably, Radianti et al. [12] listed categories where VR can assist an educational environment, for example, by facilitating role management and screen sharing. Pellas et al. [13] highlighted the fact that VR enhances interaction and collaboration. This study uses the SAMR model [69,70] to assess how the immersive technologies were used to support learning.

In terms of the design principles, Santos et al. [16] discussed factors affecting the design of immersive learning experiences, while Luo et al. [17] listed pedagogical strategies underpinning the learning experiences such as collaborative and inquiry-based learning, and scaffolding. Pellas et al. [13] highlighted field trips and role play among others as instructional design techniques. Like the existing studies, this study identifies pedagogical strategies employed in immersive learning environments.

The coverage of interaction techniques was rather limited in the existing review studies. For instance, Kavanagh et al. [18] presented methods of interacting with HMDs. On the other hand, Pellas et al. [13] presented more hardware-level details of interaction such as the detection of head movement. This study classifies the studies based on the task-level interaction techniques presented in the Background section.

Concerning the evidence of effectiveness, four studies briefly discussed the evaluation methods used to substantiate the immersive learning experiences, while three studies covered the evaluation methods in more depth. For instance, Asad et al. [72] cited the type of evaluation method and the findings. This study attempts to cover this dimension in rich detail, including evaluation method, evidence for significance, and main findings.

Regarding challenges in using the technology, three studies covered this issue in varying detail. The studies mentioned several challenges, such as lack of usability (Akçayır and Akçayır [8], Santos et al. [16]) and lack of engagement (Kavanagh et al. [18]). This study builds on the work performed by these studies and identifies challenges in using several types of immersive technologies. To conclude, Table 4 shows gaps that this study aims at bridging to reflect on immersive learning experiences in the literature.

4. Methodology

We analyzed the literature associated with immersive learning environments to provide context for new attempts and methods and to identify new avenues for further research. This study follows the PRISMA guidelines [73]. We used PRISMA to identify, select, and critically assess research, thereby lowering bias, enhancing the quality of the study, and making it better founded. The study process consists of: (1) defining the review protocol, including the research questions, the mechanism to answer them, the search plan, and the inclusion and exclusion criteria; (2) conducting the study by selecting the articles, evaluating their quality, and analyzing the results; and (3) communicating the findings.

4.1. Research Questions

Based on the limitations of the existing related review studies, we developed seven research questions:

RQ1—In what fields are the immersive learning experiences applied?

RQ2—What type of immersive technologies are used in learning experiences?

RQ3—What role do immersive technologies play in supporting students’ learning?

RQ4—What are the pedagogical strategies used to support the immersive learning experiences?

RQ5—What are the interaction styles implemented by the immersive learning experiences?

RQ6—What empirical evidence substantiates the validity of the immersive learning experiences?

RQ7—What are the challenges of applying the immersive learning environments?

The first research question examines the application domains where immersive technologies are used, while the second question presents the types of immersive technologies used for education. The third question explores the role of immersive technologies in assisting education. The SAMR model [69,70] is used to classify the immersive technologies with regard to the four levels of the model (substitution, augmentation, modification, and redefinition). The fourth question discusses the pedagogical approaches used in immersive learning environments. The fifth question examines the interaction techniques used to support the immersive learning systems. The sixth question investigates the evaluation methods used to support the validity of the immersive learning systems. Finally, the seventh question identifies the challenges reported in the application of immersive learning systems.

4.2. Search Process

We searched the following libraries for articles from the period 2011–2021: Scopus, ACM Digital Library, IEEE Xplore, and SpringerLink. We analyzed our objectives, research questions, and the existing related literature reviews to pinpoint keywords for the search string of this study. We then refined the keywords and the search string iteratively until we reached encouraging results. We used the search keywords “Immersive Technologies” and “Education.” Initially, we experimented with various search strings. For instance, we used keywords correlated with “Immersive Technologies” such as “Virtual Reality”, “VR”, “Augmented Reality”, “AR”, “Mixed Reality”, and “MR”. However, this resulted in an excessive number of search results across the search engines (e.g., 60,000+ results on Scopus), and we observed that many results were irrelevant to the purpose of the study. Consequently, we decided to use only “Immersive Technologies” to obtain a manageable number of search results. Note that Scopus (the main search library in our survey) does not differentiate between plural and singular keywords, so “Technologies” and “Technology” are treated as the same. Moreover, a multiple-word phrase such as “Immersive Technologies” is not treated as one search term but as two (i.e., “Immersive” AND “technologies”). Lastly, we included keywords correlated with “Education” such as “Learning,” “Learner,” “Teaching,” “Teacher,” and “Student.” Consequently, combinations such as “Immersive Learning” and “Learning Technology” can also be considered possible search permutations in Scopus.

Based on this logic, we defined the search string using Boolean operators as follows:

(“Immersive Technologies”) AND (“Education” OR “Learning” OR “Learner” OR “Teaching” OR “Teacher” OR “Student”).
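To make the structure of this query explicit, the Python sketch below assembles it from two keyword groups, using OR within a group and AND between groups. The helper names are hypothetical. The `matches` function is a naive case-insensitive substring check that only illustrates the Boolean logic. It does not reproduce how Scopus or the other libraries tokenize terms or handle plurals.

```python
TECH_TERMS = ["Immersive Technologies"]
EDU_TERMS = ["Education", "Learning", "Learner", "Teaching", "Teacher", "Student"]


def or_group(terms: list[str]) -> str:
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups: list[str]) -> str:
    """Join keyword groups with AND, so every group must be satisfied."""
    return " AND ".join(or_group(g) for g in groups)


def matches(text: str, *groups: list[str]) -> bool:
    """Naive local check (NOT library search semantics): the text must contain
    at least one term from every group, ignoring case."""
    lowered = text.lower()
    return all(any(t.lower() in lowered for t in g) for g in groups)


query = build_query(TECH_TERMS, EDU_TERMS)
print(query)
print(matches("Immersive technologies for student engagement", TECH_TERMS, EDU_TERMS))
```

Adding a synonym to either list widens the search without changing the overall AND/OR structure.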

We assessed the articles we found using the inclusion and exclusion criteria (shown in Table 5), allowing us to incorporate only the relevant articles. Moreover, we excluded posters, technical reports, and PhD theses, as they are not peer reviewed.

Table 5.

The inclusion and exclusion criteria.

The search query was executed in the selected libraries to start the inclusion and exclusion process. The initial search returned 702 articles. We imported the articles' metadata, including title, abstract, keywords, and article type, into Rayyan [74], a collaborative tool that enables reviewing, including, excluding, and searching for articles.

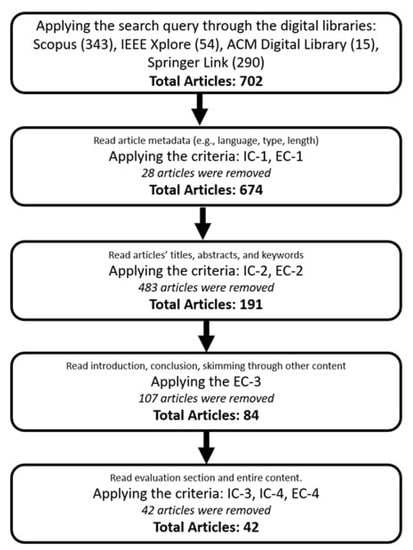

All the authors participated in selecting the articles. To ensure reliability and consistency in our decisions, the authors worked in two pairs, so that each author could audit the exclusion and selection decisions of their partner. The article selection procedure was conducted as follows (Figure 1):

Figure 1.

The process of article selection.

- We read the articles' metadata and applied criteria IC-1 and EC-1, which reduced the number of articles to 674.

- We applied the criteria IC-2 and EC-2 by reading the title, abstract, and keywords of the articles, thereby reducing the articles to 191.

- We excluded the articles irrelevant to the research questions and applied criterion EC-3, thus reducing the articles to 84.

- Finally, we carefully read the full text of the articles while applying IC-3 and IC-4. Further, we applied EC-4, thereby excluding the articles that had little to no empirical evaluation. Consequently, the number of articles was reduced to 42. Table A1 shows the selected articles.
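The screening arithmetic in the steps above can be checked with a short Python sketch. The stage labels are our shorthand for each step, and the counts are the reported ones. The function computes how many articles each stage removed, which corresponds to the exclusion counts a PRISMA flow diagram would show.

```python
# Article counts remaining after each screening stage, as reported above.
STAGES = [
    ("Initial search results", 702),
    ("IC-1/EC-1 on metadata", 674),
    ("IC-2/EC-2 on title, abstract, and keywords", 191),
    ("EC-3 and relevance to the research questions", 84),
    ("IC-3/IC-4/EC-4 on the full text", 42),
]


def funnel(stages):
    """Yield (label, remaining, removed), where removed is the drop from the previous stage."""
    previous = None
    for label, remaining in stages:
        removed = 0 if previous is None else previous - remaining
        yield label, remaining, removed
        previous = remaining


initial = STAGES[0][1]
for label, remaining, removed in funnel(STAGES):
    print(f"{label:<48} {remaining:>4}  (-{removed}; {100 * remaining / initial:.1f}% of initial)")
```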

5. Results

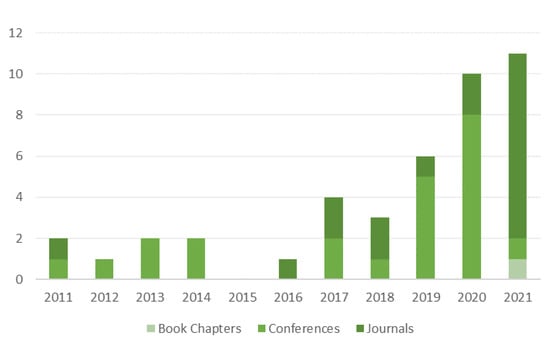

A timeline of the number and type of articles is shown in Figure 2. Slightly more than half of the articles (23; 54.8%) were conference papers, while 42.9% (18) were published in journals, and only one article (2.4%) was a book chapter. Notably, 81.0% (34) of the articles were published after 2016. The journal articles appeared in diverse venues, such as Applied Sciences (one article), Sustainability (one article), IEEE Access (two articles), and Computers & Education (one article). According to the Scimago Journal and Country Rank [75], the journal articles were ranked Q1 (12 articles), Q2 (4 articles), and Q3 (2 articles).

Figure 2.

A timeline of the selected articles.

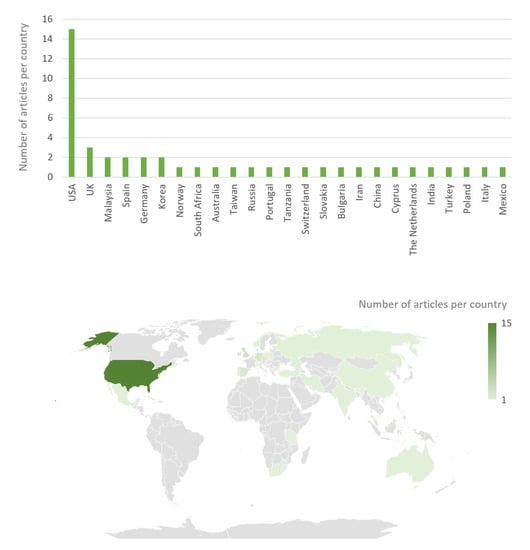

Figure 3 depicts the geographical distribution of the countries of the authors' institutions. The largest number of articles was written by authors from North American universities (16 articles), closely followed by European universities (15 articles). The remaining articles are from Asian (10 articles), African (1 article), and Australian (1 article) universities.

Figure 3.

Geographical distribution of the authors’ institutions’ country.

5.1. RQ1—In What Fields Are the Immersive Learning Experiences Applied?

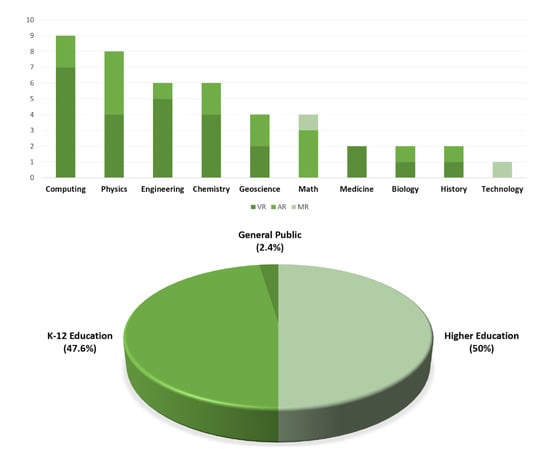

Figure 4 (top) depicts the fields in which the ILEs were applied. Nine (21.4%) articles used immersive technologies to teach computing, including artificial intelligence (AI), programming, and robotics; seven of these used VR and only two used AR. Seven (16.6%) articles taught physics topics: three used VR, three used AR, and, interestingly, one used both AR and VR. Six (14.2%) articles taught engineering topics such as construction management and electrical engineering; apart from one article using AR, these engineering articles used VR.

Figure 4.

Application fields and education level of the immersive learning experiences.

In general, science subjects were strongly represented (45.2%), including physics (16.6%; seven articles), chemistry (14.2%; six articles), geoscience (9.5%; four articles), and biology (4.7%; two articles). The topics covered ranged from astronomy, thermodynamics, and topology to periodic tables and insects. Math topics such as geometry and mathematical operations were covered in four articles (9.5%). The remaining subjects are medicine (4.7%; two articles) and technology, history, and education (one article each).
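The percentages in this section are counts out of the 42 selected articles. As a quick check on the science total, the Python sketch below sums the four science subjects and expresses each count as a share of the corpus; the helper name `share` is our own.

```python
TOTAL_ARTICLES = 42

# Article counts per science subject, as reported in the text.
science = {"physics": 7, "chemistry": 6, "geoscience": 4, "biology": 2}


def share(count, total=TOTAL_ARTICLES):
    """Percentage of the corpus, rounded to one decimal place."""
    return round(100 * count / total, 1)


science_total = sum(science.values())
print(science_total, share(science_total))  # 19 45.2
for subject, count in science.items():
    print(subject, count, share(count))
```

The four subjects account for 19 articles, matching the 45.2% figure. Rounded conventionally, the individual shares come to 16.7%, 14.3%, 9.5%, and 4.8%; the values in the text appear to be truncated rather than rounded.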

Concerning the level of education, half of the ILEs (50%; 21 articles) were taught in higher education settings, mostly in undergraduate courses; only two ILEs were designed for graduate-level courses. A total of 47.6% (20) of the articles reported ILEs in K-12 settings, spanning primary, middle, and secondary schools.

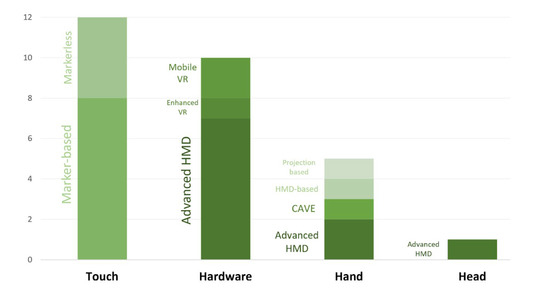

5.2. RQ2—What Types of Immersive Technologies Are Used in Learning Experiences?

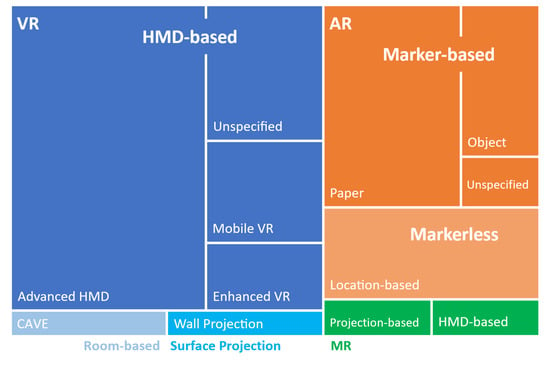

An overview of the immersive technologies as well as the devices used to implement the ILEs are shown in Figure 5 and Figure 6, respectively. By far, most of the selected articles (24 articles, 57.1%) used VR in the ILEs, while fourteen (33.3%) articles described AR-based learning experiences, and only two (4.8%) presented MR experiences. Further, two articles combined AR and VR learning experiences.

Figure 5.

An overview of the immersive technologies used in the ILEs.

Figure 6.

An overview of the devices used in the ILEs.

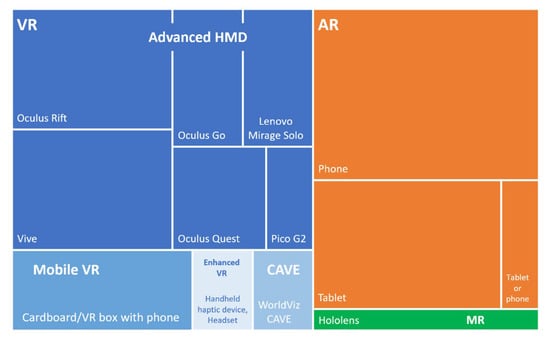

Apart from one article, the VR-based ILEs were fully immersive and mostly based on advanced HMDs (15 articles). These included the Vive [43] (four articles), Oculus Rift [41] (four articles), Lenovo Mirage Solo [76] (two articles), Oculus Go [77] (two articles), Oculus Quest [42] (two articles), and Pico G2 [78] (one article). To cite a few examples, Chiou et al. [79] used the Vive to teach students about wind turbine engineering, as the device provided visual and motion stimuli. By contrast, Theart et al. [80] used the Oculus Rift to let students visualize the topologies of the human brain. Reeves et al. [81] used the Lenovo Mirage Solo [76] to teach chemistry in undergraduate courses, while Santos Garduño et al. [82] used the Oculus Go to teach the same subject to high school students. Nersesian et al. [83] employed the Oculus Quest to teach middle-school students the binary system. Finally, Erofeeva and Klowait [84] reported using the Pico G2 to teach the assembly of electric circuits.

Only three articles used mobile VR, in which students place their phones inside a VR box [85] or a cardboard viewer [40] to view a VR experience. Such devices are an inexpensive alternative to advanced HMDs and enhanced VR, but they lack the tracking features of advanced HMDs. As an example, Truchly et al. [86] used a VR box to teach computer networking concepts to secondary school students.

Two articles used enhanced VR, in which HMDs were combined with additional sensors. For instance, in medicine, Stone [87] used a binocular viewer and a two-stylus haptic system to help students view bones and to convey the sensations and sound effects of drilling through bone of varying density.

The remaining four articles did not articulate sufficient details about their HMD-based systems.

Concerning AR systems, eleven (26.2%) articles used marker-based AR, while five (11.9%) used markerless AR. The two types do not differ in terms of devices, as both can run on a tablet or a smartphone.

Among the marker-based systems, seven (16.6%) articles used paper-based markers, where students scan a marker printed on paper to view visuals and additional information. As a notable example, Restivo et al. (2016) taught students the components of DC circuits by having them scan paper-based symbols representing electric components (e.g., battery, switch). In object-based marker systems, by contrast, the marker is a physical object rather than a symbol or a QR code. As an example, Lindner et al. (2019) presented an AR application teaching astronomy to children, which turns 2D pictures of Earth into a spinning 3D Earth in the smartphone’s camera view.

Regarding markerless AR, all five articles presented location-based AR systems. For example, Bursztyn et al. (2017) presented an application that lets students play a game in a 100-m-long playing field representing the Grand Canyon. The application shows location-based information such as geological time, structures, and hydrological processes.

Concerning MR ILEs, only two (4.7%) articles utilized MR for education. One article (Salman et al. [88]) employed projection-based MR utilizing a tabletop projector and depth camera to teach math to children. The other article (Wu et al. [89]) used HMD-based MR in the form of a Microsoft HoloLens headset [64] to teach how electromagnetic waves are transmitted. Students could visualize and interact with the information in their environment.

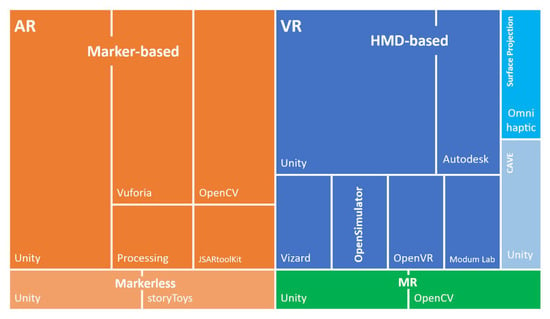

Figure 7 shows an overview of the software tools used in the ILEs. Unity [90] was used to implement 13 (30.9%) ILEs (VR: six articles, AR: six articles, and MR: one article). Unity is a cross-platform game engine that can also be used to create 3D experiences for most immersive technology devices. Two other notable tools, used by four (9.5%) and three (7.1%) articles, respectively, are Vuforia [91], an AR toolkit, and OpenCV [92], a computer vision library; both help developers place virtual objects in real-world physical environments. Other tools include Autodesk [93] software, which designers and engineers use to create 3D content and which can also produce immersive content; Processing [94], a general-purpose graphics programming environment; JSAR toolkit [95], a web-based tool for creating AR experiences; Vizard [96], a VR tool for researchers; OpenSimulator [97], a tool for creating 3D virtual environments compatible with immersive technologies; OpenVR [98], an interface that makes VR accessible on VR hardware regardless of the vendor; Modum Lab [99], a tool with ready-made components usable in ILEs; StoryToys [100], a readily available educational AR application; and Omni haptic [101], a tool for integrating haptics into immersive experiences.

Figure 7.

An overview of the software tools used to develop the ILEs.

5.3. RQ3—What Role Do Immersive Technologies Play in Supporting Students’ Learning?

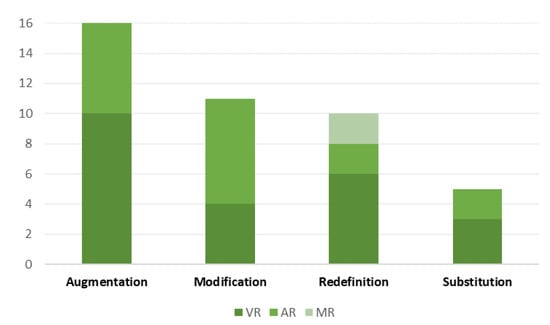

Figure 8 depicts the number of studies at each level of the SAMR model. To ensure objective ratings, two raters classified each ILE reported in the articles according to the SAMR model. Cohen’s kappa coefficient [102] was 0.74, indicating substantial agreement, and the remaining disagreements were discussed and reconciled. Sixteen (38.1%) studies were classified at the augmentation level (ten used VR and six used AR), followed by eleven (26.1%) at the modification level (seven used AR and four used VR), ten (23.8%) at the redefinition level (six used VR, two used AR, and two used MR), and finally five (11.9%) at the substitution level (three used VR and two used AR).

Figure 8.

The role of immersive technology based on the SAMR model.
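The agreement statistic reported above, Cohen’s kappa, is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e the agreement expected by chance from each rater’s label frequencies. The Python sketch below computes it for two raters; the ten SAMR ratings are hypothetical and are not the review’s actual rating data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty list of items")
    n = len(rater_a)
    # Observed agreement p_o: share of items both raters labelled identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: products of each rater's marginal label proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical SAMR ratings (S, A, M, R) of ten ILEs; not the review's data.
rater_1 = ["S", "A", "A", "M", "R", "A", "M", "R", "S", "A"]
rater_2 = ["S", "A", "M", "M", "R", "A", "M", "A", "S", "A"]
print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.72
```

In this toy example the raters agree on 8 of 10 items (p_o = 0.8) and chance agreement is 0.28, so κ ≈ 0.72: lower than raw agreement because some matches would be expected by chance alone.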

By technology type, VR ILEs were mostly in the augmentation level (10 studies, 23.81%), followed by the redefinition level (6 studies, 14.29%), then the modification level (4 studies, 9.52%), and the substitution level (3 studies, 7.14%). On the other hand, the AR ILEs occurred in the following order: modification (seven studies, 16.67%), augmentation (six studies, 14.29%), redefinition (two studies, 4.76%), and substitution (two studies, 4.76%). Lastly, the MR ILEs appeared only in studies categorized in the redefinition level (two studies, 4.76%).
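The per-technology counts above can be arranged as a small level-by-technology table. The following Python sketch encodes the reported numbers and sums each SAMR level across technologies; the dictionary layout is our own choice.

```python
# Studies per SAMR level and technology, as reported in the text.
LEVELS = ["substitution", "augmentation", "modification", "redefinition"]
samr = {
    "VR": {"substitution": 3, "augmentation": 10, "modification": 4, "redefinition": 6},
    "AR": {"substitution": 2, "augmentation": 6, "modification": 7, "redefinition": 2},
    "MR": {"substitution": 0, "augmentation": 0, "modification": 0, "redefinition": 2},
}


def level_totals(table):
    """Sum each SAMR level across all technologies."""
    return {level: sum(row[level] for row in table.values()) for level in LEVELS}


totals = level_totals(samr)
print(totals)
print(sum(totals.values()))  # 42
```

Summed across technologies, the levels give 5 (substitution), 16 (augmentation), 11 (modification), and 10 (redefinition) studies, 42 in total, which matches the overall SAMR distribution.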

To cite some of the studies at the redefinition level, Hunvik and Lindseth [103], Chiou et al. [79], Cecil et al. [104], and Wei et al. [105] used VR applications to engage students and support their learning of complex scientific phenomena through realistic graphics and interactions that students can hardly experience in everyday life. Other studies combined VR and AR, such as Remolar et al. [106], who described a large-scale immersive system deployed at the Museum of Science and Industry in Tampa to facilitate informal learning, where learners use the movement and positioning of their entire bodies to enact their understanding of complex concepts. As another example, Salman et al. [88] evaluated an initial tangible MR setup consisting of a small tabletop projector and a depth camera, observing children’s interactions with it to guide the development of non-symbolic math training.

At the modification level, AR applications were used more often than VR, and students were engaged in discussions to reflect on and improve their work when needed. For instance, Lindner et al. [107] used an AR application to demonstrate concepts through 3D visualization and animation, helping students understand complex topics and motivating them. Sarkar et al. [108] reported that students could recall and visualize an angle, identify its type, and mark it by drawing on a 3D object. Moreover, Kreienbühl et al. [109] used AR on a tablet to show tangible electricity building blocks for constructing a working electric circuit.

At the augmentation and substitution levels, VR applications dominated. At the augmentation level, these tools fostered an active learning environment, resulting in deeper understanding and transferable knowledge and skills. For example, Woźniak et al. [110] used mobile VR (Google Cardboard glasses [40]) and an interactive tool to raise students’ interest in STEM and improve their achievement. In another study, Hu-Au and Okita [111] used a VR-based chemistry laboratory instead of a real-life (RL) one. Students who learned in the VR lab scored higher than those who learned in the RL lab and were better able to elaborate and reflect on general chemistry content and laboratory safety knowledge.

At the substitution level, the technology substitutes for other learning activities without functional change, with the aim of motivating students and enhancing learning. For example, Garri et al. [112] presented ARMat, an AR application that teaches addition, subtraction, multiplication, and division to sixth-year elementary school children. Another study (Nersesian et al. [113]) compared monitor-based and VR-based educational technologies as supplemental learning environments to traditional classroom instruction using lectures, textbooks, and physical labs.

5.4. RQ4—What Are the Pedagogical Strategies Used to Support the Immersive Learning Experiences?

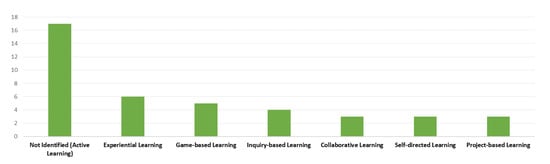

As shown in Figure 9, the largest group of studies (17 studies; 41%) did not specify the pedagogical strategies used. Nevertheless, they described aspects of active learning focused on student-centered methods, in which students engage in doing things and thinking about what they are doing. Examples include [82,84,103,110].

Figure 9.

An analysis of the pedagogical approaches.

Other studies (six studies; 15%) reported using an experiential learning approach focused on learning by doing with immersive technologies. In addition, three studies (7%) combined collaborative learning with experiential learning. For example, Nordin et al. [114] and Reeves et al. [81] highlighted the use of experiential learning based on Kolb’s model alongside collaborative learning.

Five studies (12%) reported using game-based learning (e.g., Masso and Grace [115] and Nersesian et al. [83]), in which students engaged in deep analysis to solve complex problems and overcome challenges. One of these studies (Nersesian et al. [83]) also used project-based learning.

Four studies (10%) reported using inquiry-based learning, in which students are at the center of the learning process, take the lead in their own learning by posing and answering questions, and carry out several investigations. In addition, three other studies (7%) reported using self-directed learning, referring to the inquiry-based learning process while using immersive technology.

Three studies (7%) highlighted project-based learning as a pedagogical approach with immersive technology, in which students, guided by a driving question, solve problems to construct and present an end product.

5.5. RQ5—What Are the Interaction Styles Implemented by the Immersive Learning Experiences?

Figure 10 shows an overview of the interaction input of the ILEs. The AR-based ILEs relied on handheld phones or tablets, and thus, regardless of the type of used AR (marker-based vs. markerless), the interaction was touch based.

Figure 10.

An overview of the interaction input of the ILEs.

The VR ILEs used a variety of devices and thus varied in input. Seven articles (16.6%) using advanced HMDs relied on hardware input such as controllers. For instance, Georgiou et al. [116] reported using VR controllers. Similarly, Santos Garduño et al. [82] noted that students interacted with a VR application (Mel Chemistry) that requires VR controllers. Two articles (4.7%) using mobile VR relied on hardware input, as students interacted solely through the VR box (e.g., Peltekova et al. [117]). Concerning enhanced VR, one article (Stone [87]) used hardware input through a stylus-like haptic system.

Other VR ILEs used hand movements as interaction input. For example, Safari Bazargani et al. [118], using an advanced HMD (Vive [43]), reported that students drew objects as part of a topology-related learning activity. Similarly, the article using a CAVE (a room-sized, projection-based VR environment) noted that students drew connections between various brain sections (de Back et al. [119]). Finally, one VR ILE (Theart et al. [80]) used head movements tracked with infrared cameras as input; the authors reported that the head movements updated the immersive environment.

The two articles describing MR ILEs used hand-based interactions. Salman et al. [88] described placing objects by hand as part of learning math, while Wu et al. [89] highlighted the use of hands to select items with the Microsoft HoloLens, a hand-tracking headset.

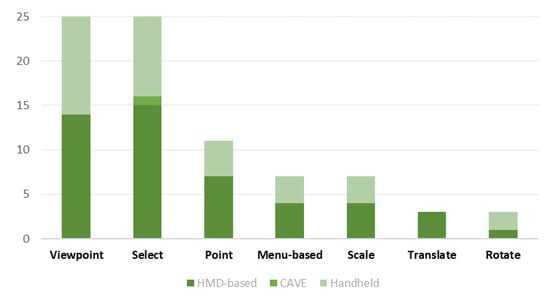

Figure 11 shows an overview of the task-based interaction techniques highlighted in the selected studies. The most common interaction styles were viewpoint and select (25 articles each, 59.5%). For instance, 14 (33.3%) HMD-based ILEs provided viewpoint interaction, in which students zoom and pan within the immersive environment to discover relevant knowledge and features. Examples can be found in [82,116,120]. Likewise, 11 AR ILEs running on handheld devices (phones and tablets) allowed users to zoom and pan the environment. Notable examples can be found in [114,121,122].

Figure 11.

An overview of the task-based interaction techniques.

Fifteen (35.7%) HMD-based ILEs featured a select interaction allowing students to initiate or confirm an interaction. Examples of select interactions can be found in [83,104,123]. Furthermore, a CAVE ILE let students select and activate individual parts of the brain (de Back et al. [119]). Similarly, nine (21.4%) AR ILEs incorporated a select interaction. For example, Sarkar et al. [108] explained that students could select a geometric object and manipulate it, and Garri et al. [112] noted that students could select a specific learning setting.

Seven (16.6%) HMD-based ILEs allowed students to perform point interactions to search for interactive elements within the environment. Examples of point interactions can be found in [111,113]. Further, four (9.5%) AR systems using handheld devices provided point interactions. As a notable example, Lin et al. [124] highlighted an application allowing students to find interactive elements (for instance, a fruit edible by an insect) and manipulate them.

Four (9.5%) HMD-based articles featured a menu-based interaction where a set of commands, utilities, and tabs are shown to the students. As an example, Georgiou et al. [116] illustrated an application where students select a planet from a menu allowing the student to virtually travel to the planet. Three (7.1%) handheld-based articles featured a menu interaction. For instance, Masso and Grace [115] illustrated an AR application to teach students math where a menu of commands is highlighted to teach students how to play a math game.

Three (7.1%) HMD-based articles incorporated a translate interaction allowing students to move or relocate an element. For instance, Hunvik and Lindseth [103] presented an application teaching neural networks, where students learn neural network notation by placing neurons.

Only one HMD-based article (Theart et al. [80]) featured a rotate interaction allowing students to change the orientation of an interactive element, in this case, colocalized voxels. Two (4.7%) AR-based articles utilized a rotate interaction. As an example, Rossano et al. [125] illustrated an application to teach geometry where students can rotate 3D shapes.

5.6. RQ6—What Empirical Evidence Substantiates the Validity of the Immersive Learning Experiences?

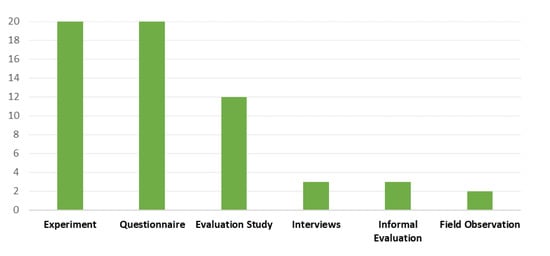

The selected articles used various evaluation methods to assess the effectiveness of the ILEs. Some researchers combined several assessment methods, presumably to strengthen their findings. We group the evaluation methods into experiments, questionnaires, evaluation studies, interviews, field observations, and informal evaluations.

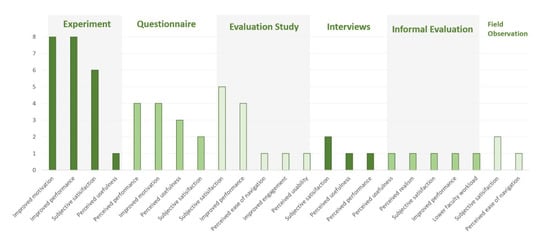

Figure 12 shows an overview of the evaluation methods used to support the validity of the ILEs. The most common forms of evaluation were experiments and questionnaires (20 articles each, 44.4%). Several articles used evaluation studies (13 articles, 28.9%), while only a few used qualitative methods such as interviews (3 articles, 6.7%), field observations (3 articles, 6.7%), and informal evaluation (2 articles, 4.4%).

Figure 12.

Evaluation methods used in the ILEs.

Experiments: An experiment is a scientific test conducted under controlled conditions [126] where one factor is changed at a time, while the others remain constant. Experiments include a hypothesis, a variable that researchers can control, and other measurable variables.

Studies evaluated with experiments point to improved motivation and performance, high subjective satisfaction, and perceived usefulness (Figure 13). The experiments involved students taking pre-tests and post-tests, and the results were reported as statistically significant. The number of participants ranged from 20 to 654 students. The experiments were often combined with questionnaires at the end to triangulate the data. To cite notable examples, Bursztyn et al. [127] found that AR helped students complete modules faster and increased their motivation, but was not a major driver of improved performance. Truchly et al. [86] likewise showed increased motivation as a benefit of applying VR in education, and students also found it entertaining. However, some students were uncomfortable with the VR headset.

Figure 13.

Findings of evaluation methods used in the ILEs.
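The pre-test/post-test experiments described above compare each student’s scores before and after the intervention. The reviewed articles do not all state which statistical test they used; a paired t-test is one common choice for this design. The Python sketch below computes the paired t statistic and its degrees of freedom with the standard library; the scores are hypothetical, not data from any reviewed study. For a p-value, `scipy.stats.ttest_rel(post, pre)` returns the same statistic together with a p-value.

```python
import math
import statistics


def paired_t(pre, post):
    """Paired t statistic and degrees of freedom for pre/post scores of the same students."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired scores")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference: sample standard deviation / sqrt(n).
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    if se == 0:
        raise ValueError("t is undefined when all differences are identical")
    return mean_diff / se, len(diffs) - 1


# Hypothetical scores for five students; not data from any reviewed study.
pre = [50, 55, 60, 45, 70]
post = [58, 60, 66, 50, 75]
t, df = paired_t(pre, post)
print(f"t = {t:.2f}, df = {df}")
```

Pairing matters here: each student serves as their own control, so the test is based on the spread of individual gains rather than on the spread of raw scores.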

Some articles (e.g., [108,116,124]) reported improved performance as a result of applying immersive technologies in a class setting. For example, Sarkar et al. [108] reported that AR systems made the students more confident and helped them apply the concepts better, leading to improved performance. Georgiou et al. [116] reported that VR supported a deeper understanding of the materials as it helped students develop hands-on skills. Lin et al. [124] reported that students had improved imagination with AR compared to the traditional approach.

Various experiments showed high subjective satisfaction. For instance, Rossano et al. [125] reported that students felt comfortable and satisfied with their performance after engaging with the AR learning system, while Remolar et al. [106] noted that students found the VR-based educational game fun and novel. Lee et al. [128] reported that students found the VR-based ILE satisfying and the HMDs comfortable enough.

Questionnaire: A questionnaire is a data collection method based on a set of questions [129]. Questionnaires can be administered in several ways, including online, in person, or by mail.

Only eight articles used questionnaires alone to evaluate the ILEs. The results point to high perceived performance, improved motivation, high perceived usefulness, and subjective satisfaction (Figure 13). To cite a few examples, Stigall and Sharma [130] reported that students thought the VR system helped them learn programming principles better, while Lindner et al. [107] found that students were more motivated and engaged with the AR system. Concerning perceived usefulness, Chiou et al. [79] noted that students found the VR learning system useful for learning. Arntz et al. [131] reported that students found the AR learning system satisfactory as it increased their interest, curiosity, and expectations.

Evaluation Studies: The articles that used evaluation studies to assess the ILEs recruited fewer students to perform tasks, and their results, although not statistically significant, are worth reporting. In general, the findings reveal subjective satisfaction, improved performance and engagement, ease of navigation, and usability. To illustrate, Wei et al. [105] reported positive interaction and flexibility with the VR learning platform. Concerning performance, McCaffery et al. [132] indicated that students found the AR learning system used to teach internet routing valuable, helpful with the course materials, and easy to navigate. Woźniak et al. [110] conducted a System Usability Scale (SUS) evaluation, which showed high perceived usability of the immersive system used to teach chemistry to children.
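The SUS evaluation mentioned above yields a score from 0 to 100 per respondent. The Python sketch below implements the standard SUS scoring rule: each of the ten items is rated from 1 to 5; odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5. The response vectors are hypothetical; the article does not report the raw SUS data.

```python
def sus_score(responses):
    """Score one System Usability Scale questionnaire (ten items, each rated 1-5)."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs exactly ten responses on a 1-5 scale")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded; even items are negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


# Hypothetical responses from one participant; not data from the reviewed study.
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

A study-level SUS result is typically the mean of these per-participant scores.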

Interviews: Only four articles used interviews as a form of evaluation. The findings of the interviews point to subjective satisfaction and perceived usefulness and performance. To cite an example, Sajjadi et al. [133] conducted interviews showing that students found a VR-based game engaging, but the results were inconclusive. As another example, Reeves et al. [81] reported that students found the VR experience to improve their learning as it helps them learn from their mistakes without the fear of embarrassment.

Informal Evaluation: Three articles used informal evaluation to assess the ILEs, asking students questions verbally in the classroom and collecting their feedback. Although less reliable than formal methods, this approach still provides useful indications. In general, the informal evaluations point to subjective satisfaction and perceived performance, usefulness, and realism. For example, Stone [87] shows that students considered the VR system useful for training. However, despite its highly realistic simulation, the prototype suffered from hyper-fidelity (the inclusion of excessive sensory detail). As another example, Cherner et al. [123] noted that students felt comfortable using the VR system for learning physics and appreciated being able to learn at their own pace and time.

Field Observations: Only two articles used field observations to assess the ILEs, with the authors taking notes while observing students interacting with the ILEs. For instance, Nordin et al. [114] observed students engaging with a mobile AR system for robotics education; according to the authors, the students found the system engaging and satisfactory.

5.7. RQ7—What Are the Challenges of Applying the Immersive Learning Environments?

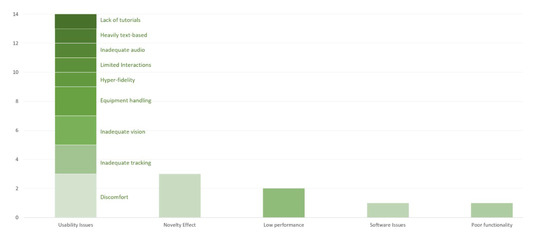

Several challenges and limitations hamper the application of immersive technologies in educational settings. The challenges are shown in Figure 14.

Figure 14.

An overview of the challenges experienced in the ILEs.

Students experienced several usability problems listed as follows:

- Discomfort: Three studies ([83,86,128]) reported that students felt uncomfortable wearing VR HMDs, especially if worn for a long time.

- Inadequate tracking: Theart et al. [80] indicated that students reported inaccuracies in the VR-based hand tracking system, which led to frustration and fatigue. Similarly, Kreienbühl et al. [109] noted that students experienced issues with tracking objects which hindered learning.

- Lack of tutorials: Masso and Grace [115] highlighted that students experienced difficulties understanding how to operate the AR-based game without a tutorial.

- Inadequate vision: Two studies highlighted vision problems that students experienced with immersive technology headsets. Erofeeva and Klowait [84] reported breakdowns of visibility in the classroom, so that students could not see each other, which impeded collaboration. Nersesian et al. [83] noted that students reported blurry vision as well as disorientation caused by the VR HMDs.

- Difficulty with handling the equipment: Two studies reported that students struggled to operate immersive technologies. Hu-Au and Okita [111] stated that some students had difficulty handling the VR equipment, leading to a preference for traditional learning methods. Batra et al. [134] reported that some students could not fit their smartphones inside the VR headsets.

- Heavily text-based: Hunvik and Lindseth [103] stated that students found the amount of text in the learning experience too high and would have preferred more immersive materials in its place.

- Inadequate audio: Salman et al. [88] reported that the audio feedback given to assist students with an MR immersive system was insufficient to guide the students.

- Hyper-fidelity: Stone [87] noted that students found the VR system used in medical education burdened with hyper-fidelity, that is, excessive sensory data.

- Limited interaction: Lee et al. [128] stated that students used the HMDs for a long time while only simple interaction techniques, such as pointing, were available.

Three articles [106,116,131] reported that potential benefits such as improved learning and positive perception of immersive technologies could be due to a novelty effect as the students had never previously experienced the immersive technologies. As such, future studies are recommended to extend the duration of their evaluation studies.

Two articles [80,103] highlighted poor system performance as a significant stumbling block to the success of the ILEs; in particular, the applications responded slowly to students’ interactions.

Chiou et al. [79] reported that students spent considerable time on file compatibility issues with Unity, a game engine.

Lindner et al. [107] reported that two main features in the AR system used to teach astronomy did not work properly, which significantly affected learning.

6. Discussion and Future Research Directions

The purpose of this work was to conduct a systematic review of immersive learning experiences to understand their fields of application, the types of immersive technologies used, the role of these technologies in students’ learning, pedagogical strategies, interaction styles, empirical evidence, and challenges. Seven research questions were formulated in line with these objectives.

- RQ1 examined the fields in which the immersive learning experiences were applied. Our findings show that computing is the most targeted single field, followed by science subjects such as physics, chemistry, and geoscience, as well as engineering and math. Other topics include medicine, history, and technology. Our results are somewhat akin to those of Luo et al. [17] and Radianti et al. [12], where basic and social sciences, engineering, and computing are highly represented. In comparison, Kavanagh et al. [18] found that most articles focused on health-related and general education topics and fewer on science and engineering topics.

- RQ2 examined the types of immersive technologies used in educational settings. The results show that more than half of the articles used VR, a third used AR, and only two articles used MR. VR was mostly HMD-based (in particular, advanced HMDs), AR experiences were mostly marker-based and ran on phones and tablets, and MR used projection and HMDs. Concerning VR, our results differ from those of Luo et al. [17], who identified desktop computers as the most preferred VR devices; desktop-based VR is considered non-immersive, and this study excludes such systems. Similar to our findings, Luo et al. [17] identified advanced HMDs and mobile VR as forms of VR in educational settings. Concerning AR, Akçayır and Akçayır [8] focused on the devices used to create AR experiences rather than the types of AR technologies (e.g., marker-based, markerless). However, our findings are similar to theirs in that mobile phones are widely used to create AR experiences. Since MR is an emerging technology in education, to our knowledge no review study has covered educational MR, which makes our MR findings unique.

- RQ3 investigated the role of immersive technology using the SAMR model, based on teachers’ actions in developing students’ higher-order thinking skills. The findings show that the MR-based studies were classified at the redefinition level. Most VR-based studies were classified at the augmentation level, followed by the redefinition level, while the AR-based studies were mostly categorized at the modification level, followed by augmentation. No related review studies investigated the role of technology using the SAMR model. However, a previous systematic review by Blundell et al. [135] stated that the SAMR model is mostly used to categorize educational practices with digital technologies based on teachers’ and students’ actions.

- RQ4 examined the pedagogical approaches used with immersive technology. The results show that the largest group of studies did not identify a specific pedagogical approach; however, these studies showed evidence of active learning. Other pedagogies mentioned were experiential learning, game-based learning, and inquiry-based learning, while self-directed, project-based, and collaborative learning were each used by three studies. Our results are similar to those of Radianti et al. [12], in that most studies on immersive technology did not mention a pedagogical approach, followed by studies that used experiential learning. In contrast, Kavanagh et al. [18] pointed out that most researchers using VR ILEs relied on collaboration and gamification.

- RQ5 identified the interaction techniques used in the immersive learning experiences. In terms of input, touch-based interaction (mostly in AR) was the most reported, followed by hardware-based (mostly advanced HMDs), hand-based, and head-based interaction. Concerning task-based interaction techniques, viewpoint and select interactions were the most described, followed by pointing, scaling, translating, and rotating. To our knowledge, these findings are unique, as the related review studies did not attempt to classify immersive interaction techniques based on existing frameworks. However, Luo et al. [17] found that most VR systems offered minimal interaction, while a few featured rich interaction that allowed extensive exploration of the environment. Pellas et al. [13] concentrated on the features of advanced HMDs that allow sophisticated tracking of head and hand movements.

- RQ6 examined the empirical evidence supporting the validity of the immersive learning environments. Our findings show that the ILEs were evaluated mostly through experiments, questionnaires, and evaluation studies, while a few were also evaluated through interviews, informal evaluation, and field observations. The evaluations showed improved motivation, performance, perceived usefulness, and subjective satisfaction. Our findings resemble those of Asad et al. [72], who reported similar evaluation methods such as experiments, interviews, and questionnaires. In comparison, Luo et al. [17] reported that questionnaires were the most-used evaluation method, followed by tests, observations, and interviews.

- RQ7 presented the reported challenges of applying immersive learning environments. Most of the challenges were related to usability and ergonomics, such as discomfort; inadequate tracking, vision, and audio; difficulty handling the equipment; and a lack of tutorials. Other challenges included low performance, software compatibility issues, and the novelty effect. Our findings are similar to those of Akçayır and Akçayır [8], who reported usability issues such as difficulty of use and cognitive load, but who also reported other issues, such as some teachers’ lack of proficiency in using the technology. Kavanagh et al. [18] reported similar usability issues, in addition to overhead and perceived usefulness issues.

To set the ground for future research and implementation of ILEs, we highlight several areas that should be considered when designing and implementing ILEs:

- Limited Topics: By far, most of the topics presented in the selected studies were related to STEM (science, technology, engineering, and math). While it is natural for such topics to be visualized and illustrated with immersive technologies, future researchers and educators should venture beyond STEM and explore how immersive technologies could be impactful in non-STEM contexts such as the arts, humanities, and language learning.

- End-user development (EUD) of the ILEs: EUD is a set of tools and activities allowing non-professional developers to write software programs [136], enabling many more people to engage in software development [137]. Most studies presented programmatic tools such as Unity and Vuforia for building ILEs, and such tools are accessible mainly to developers. A few articles used existing immersive applications or relied on paid off-the-shelf components such as Modum Lab; however, this limits the range of possibilities and increases the cost of ILEs. A few commercial tools do allow non-developers to build immersive experiences, such as VeeRA [138] and Varwin [139], but they tend to be limited to creating immersive 360-degree videos. As such, future research could experiment with existing EUD tools that allow the implementation of ILEs and evaluate their usability and appropriateness in the educational context.

- Development Framework: Although immersive technologies have been in circulation for decades, the literature lacks guidance to help educators identify educational contexts that these technologies could enhance. There is likewise a lack of guidance to help educators select and deploy immersive technologies and interaction styles appropriate for a given educational context. A notable recent effort in this direction is a framework devised by An et al. [140] that assists K-12 educators with the design and analysis of teaching augmentation. While promising, the framework is geared towards the K-12 curriculum and focuses on assisting teachers in their teaching rather than learners in their learning. Another significant effort is the work of Dunleavy [141], who described general principles for designing AR learning experiences. These design principles are useful for leveraging the unique affordances of AR; however, they are not grounded in pedagogical learning theories, and they do not accommodate the affordances of MR. As such, future research could focus on developing a conceptual framework to help educators identify contexts for implementing immersive learning experiences, along with guidance on deployment and integration into classroom settings.

- Usability principles: Usability reflects how easy a user interface is to use, and usability principles can act as guidelines for designing user interfaces. For example, Shneiderman et al. highlighted eight golden rules of interface design [142], and Joyce extended the 10 general usability heuristics defined by Nielsen [143] to accommodate VR experiences [144]. Nevertheless, most studies did not explicitly apply usability heuristics, and their evaluations revealed several usability issues. As such, we argue that designing ILEs with usability principles in mind is crucial to avoid such issues. Further, we recommend that future researchers assess the usability of ILEs during the design process.

7. Study Limitations

Several factors may affect the findings of this study. (1) Our search was restricted to articles published between January 2011 and December 2021. This restriction was essential to enable the authors to realistically begin the analysis of the selected papers; consequently, the study may have missed important articles published after this period. (2) The search was conducted in four digital libraries: IEEE Xplore, Scopus, ACM, and SpringerLink. Accordingly, our study may have missed relevant papers available in other libraries. (3) Our search string used only the plural form “Immersive Technologies” (as opposed to “Immersive Technology”), which may have caused us to miss relevant articles in IEEE Xplore, SpringerLink, and ACM. However, since Scopus indexes many IEEE, ACM, and Springer articles and does not distinguish between plural and singular forms in a search string, many articles containing the singular term could have already been retrieved through Scopus. (4) Our search string did not contain relevant keywords such as “Media”, which could have helped us find more suitable articles. However, a search on several libraries combining the keyword “Immersive Media” with “Education” did not yield many results (e.g., 33 results on Scopus and 15 results on IEEE Xplore), and most results were irrelevant. (5) We may have missed significant articles published in countries other than those reported in this study; for instance, a manual search on Google Scholar could have helped find relevant articles published in specific countries. (6) Four researchers with different levels of research experience contributed to this study, which may have resulted in inaccuracies in article classification. To mitigate this risk, we cross-checked the work performed by each author to ensure accurate classification, and uncertainties were discussed and resolved in research meetings. Finally, (7) in assessing and excluding articles, we may have been biased against certain articles that are still relevant, such as technical reports or papers without adequate empirical evidence.

8. Conclusions

This study illustrated how various educational immersive experiences empower learners. It analyzed 42 immersive learning experiences proposed in the literature, evaluating each along seven dimensions: educational field, type of immersive technology, role of technology in education, pedagogical strategies, interaction techniques, evaluation methods, and challenges.

The results show that STEM topics were amongst the most covered, with a few non-STEM topics such as history. Concerning the type of immersive technology, HMD-based VR was highly represented, while AR experiences were mostly marker-based and delivered on handheld devices. Interestingly, only two studies utilized MR for education. Concerning the SAMR model, most studies operated at the augmentation level, followed by modification, redefinition, and substitution. In terms of pedagogical strategies, most articles did not explicitly mention a pedagogical strategy but used a form of active learning, followed by diverse strategies including experiential, game-based, inquiry-based, collaborative, self-directed, and project-based learning. Regarding interaction techniques, touch was the most used input, mostly in AR experiences, followed by hardware-based, hand-based, and head-based input. The interactions enabled various tasks, mostly viewpoint and select, in addition to point, menu-based, scale, translate, and rotate. Regarding evaluation methods, experiments, questionnaires, and evaluation studies were amongst the most used. The evaluations showed improved performance, engagement, and subjective satisfaction. The challenges point to various usability issues, in addition to the novelty effect and low performance.

Future studies should consider designing immersive learning experiences for topics beyond STEM, such as the arts and humanities. Further, future researchers should experiment with existing end-user development tools for implementing immersive learning experiences and evaluate their usability and appropriateness in the educational context. Moreover, future research could focus on developing a conceptual framework that helps educators identify contexts for implementing immersive learning experiences, along with guidance on deployment and integration into classroom settings. Finally, future researchers should assess the usability of immersive learning experiences during the design process.

Author Contributions

Conceptualization, M.A.K. and A.E.; methodology, M.A.K., A.E. and S.F.; validation, M.A.K. and A.E.; formal analysis, M.A.K., A.E., S.F. and A.A.; investigation, M.A.K., A.E., S.F. and A.A.; data curation, M.A.K., A.E., S.F. and A.A.; writing—original draft preparation, M.A.K.; writing—review and editing, M.A.K., A.E., S.F. and A.A.; visualization, M.A.K. and A.E.; supervision, M.A.K.; project administration, M.A.K.; funding acquisition, M.A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Policy Research Incentive Program 2022 at Zayed University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The selected articles in the study.

| ID | Article | Reference |
|---|---|---|
| A1 | (Stone, 2011) | [87] |
| A2 | (Hunvik and Lindseth, 2021) | [103] |
| A3 | (Arntz et al., 2020) | [131] |
| A4 | (Nordin et al., 2020) | [114] |
| A5 | (Sajjadi et al., 2020) | [133] |
| A6 | (Chiou et al., 2020) | [79] |
| A7 | (Bursztyn et al., 2017) | [121] |
| A8 | (Tims et al., 2012) | [145] |
| A9 | (Batra et al., 2020) | [134] |
| A10 | (Majid and Majid, 2018) | [146] |
| A11 | (Rossano et al., 2020) | [125] |
| A12 | (Cecil et al., 2013) | [104] |
| A13 | (Theart et al., 2017) | [80] |
| A14 | (Cherner et al., 2019) | [123] |
| A15 | (Wei et al., 2013) | [105] |
| A16 | (McCaffery et al., 2014) | [132] |
| A17 | (Lin et al., 2018) | [124] |
| A18 | (Erofeeva and Klowait, 2021) | [84] |
| A19 | (Masso and Grace, 2011) | [115] |
| A20 | (Garri et al., 2020) | [112] |
| A21 | (Lindner et al., 2019) | [107] |
| A22 | (Bursztyn et al., 2017) | [127] |
| A23 | (Restivo et al., 2014) | [122] |
| A24 | (Nersesian et al., 2019) | [113] |
| A25 | (Nersesian et al., 2020) | [83] |
| A26 | (Kreienbühl et al., 2020) | [109] |
| A27 | (Truchly et al., 2018) | [86] |
| A28 | (Sarkar et al., 2019) | [108] |
| A29 | (Stigall and Sharma, 2017) | [130] |
| A30 | (Peltekova et al., 2019) | [117] |
| A31 | (Woźniak et al., 2020) | [110] |
| A32 | (Salman et al., 2019) | [88] |
| A33 | (Wu et al., 2021) | [89] |
| A34 | (Safari Bazargani et al., 2021) | [118] |
| A35 | (Georgiou et al., 2021) | [116] |
| A36 | (de Back et al., 2021) | [119] |
| A37 | (Reeves et al., 2021) | [81] |
| A38 | (Hu-Au and Okita, 2021) | [111] |
| A39 | (Shojaei et al., 2021) | [120] |
| A40 | (Remolar et al., 2021) | [106] |
| A41 | (Santos Garduño et al., 2021) | [82] |
| A42 | (Lee et al., 2021) | [128] |

References

- Lee, H.-G.; Chung, S.; Lee, W.-H. Presence in virtual golf simulators: The effects of presence on perceived enjoyment, perceived value, and behavioral intention. New Media Soc. 2013, 15, 930–946.

- Huang, T.-L.; Liao, S.-L. Creating e-shopping multisensory flow experience through augmented-reality interactive technology. Internet Res. 2017, 27, 449–475.

- Zhao, M.Y.; Ong, S.K.; Nee, A.Y.C. An Augmented Reality-Assisted Therapeutic Healthcare Exercise System Based on Bare-Hand Interaction. Int. J. Hum.–Comput. Interact. 2016, 32, 708–721.