Abstract

Hyperscanning is a technique that simultaneously records the neural activity of two or more people. This is done using one of several neuroimaging methods, such as electroencephalography (EEG), functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy (fNIRS). In recent years, there has been a dramatic rise in the use of hyperscanning to monitor social interactions between two or more people. Similarly, there has been an increase in the use of virtual reality (VR) for collaboration, and in the frequency of social interactions carried out in virtual environments (VE). In light of this, it is important to understand how interactions function within VEs, and how their quality can be enhanced. In this paper, we present some of the work that has been undertaken in the field of social neuroscience, with a special emphasis on hyperscanning. We also cover the literature from the human–computer interaction domain that addresses remote collaboration. Finally, we present a way forward in which these two research domains can be combined to explore how monitoring the neural activity of a group of participants in a VE could enhance collaboration among them.

1. Introduction

This paper provides a review of hyperscanning studies in social neuroscience. We explore the growth of the field over the years, and examine the study paradigms used to analyse the concept of inter-brain synchrony using hyperscanning. We then present the case for using the hyperscanning technique in collaborative VEs. The paper concludes with potential directions for research in multi-person social neuroscience in collaborative VEs.

Social interactions are an innate human need that helps ensure psychological well-being [1,2]. They rely on a host of implicit and explicit cues that people convey while engaging in activities together. These cues range from subtle ones, such as eye gaze [3] and micro-expressions [4], to more explicit cues, like hand gestures [5] and outward displays of emotion, such as laughing [6] and crying [7]. These aspects have been studied in great detail over the years in order to understand the mechanisms that underpin social interactions. With varying degrees of success, researchers have been able to demonstrate the roles that these cues play in social interaction [3,5,6,7,8,9]. However, there is no consensus as to how these social cues integrate with each other when viewed on a larger “interaction scale”. To address this issue, researchers have begun looking at the origin of these cues: the brain.

Social neuroscience is an emerging field that integrates neuroscience and social psychology to better understand how humans communicate with each other [10]. Social psychology, which focuses mainly on behavioural aspects, is concerned with the individual psyche in the context of relations with other people. Social psychologists have collaborated with neuroscientists to better understand the neural mechanisms underlying human interaction (considering both neural and behavioural aspects) [10]. As a result, social neuroscience has become increasingly important for explaining the mechanisms that underpin social interaction. Studies have been devised to mimic real-world social interactions, often with the neural data of only one of the participants being recorded [11,12]. Unfortunately, this method does not provide a complete picture of social exchanges. Analysis of neural data from only one participant does not always correlate with the behavioural observations recorded for one or both participants, which makes it extremely difficult to understand fully how social interactions work at a deeper level [13].

An interest in “two-person neuroscience” [13,14] has been around for a long time [15]. Technological developments and the increasing affordability of high-quality devices capable of monitoring neural activity have made advances in this area of research possible. This paper reviews the literature detailing the work that has been carried out in this area. Specifically, we look at research that has addressed social interaction, cooperation, and collaboration between two people. However, it must be made clear that we have not surveyed all of the literature that is categorised under these terms; our focus has been the literature that deals with hyperscanning. For greater detail on the methodology used to filter publications for the purpose of this review, refer to Section 2. Following this, we list some data acquisition and analysis techniques that have been used by researchers. A case is then presented for adopting social neuroscience experimental techniques in human–computer interaction (HCI) research, particularly virtual reality (VR)-based computer-supported collaborative work (CSCW).

Recent advances in technology have resulted in VR and augmented reality (AR) establishing a foothold in the consumer market. Research in the HCI domain has demonstrated the usefulness of these technologies to mediate remote collaboration [16,17,18,19]. Collaboration in virtual environments (VE) has been shown to be nearly as effective as that in face-to-face situations in the real world [16,18,19]. The advantage of VEs is that interaction techniques in such environments can be tailored in order to implement optimal interactions between the collaborators and the environment [20].

Several VR-based collaborative platforms, such as AltSpace VR (https://altvr.com/) and Mozilla Hubs (https://hubs.mozilla.com/), now allow people from all around the world to interact in a shared VE. While these platforms enable inter-personal interactions that are, in many ways, superior to those on existing video conferencing platforms (such as Skype and Google Meet), they lack an essential trait: the communication of implicit cues. A large body of research demonstrates that these cues form the foundations of inter-personal interactions. Furthermore, the precursors to the physical manifestations of these cues lie in the brain. For a detailed overview of the role implicit cues play in social cognition and their relation to its explicit processes, see the review in [21].

Given these advances in social neuroscience and HCI, it appears logical that combining the insights gained from these two domains could benefit us in numerous ways. In this literature review, we cover some of the work that has been done in the social neuroscience domain using a relatively new neural monitoring technique called hyperscanning. We then present our case for the use of hyperscanning in VR. Based on the literature reviewed here, the paper attempts to lay out potential directions that HCI research can take towards developing interfaces that not only mediate communication between individuals in VEs, but also help create VEs that promote collaboration.

Overall, we reviewed 34 papers published between 2002 and 2020. Although we observed a dramatic increase in the use of hyperscanning as a technique to study social interactions, we limited the studies reviewed to those that fell within the task types listed by Wang et al. [22]. These task types were chosen because they represent a broad spectrum of tasks, ranging from the seemingly abstract tasks derived from current experimental psychology methodologies to ecologically valid tasks designed to explore how social interactions work in the real world. A broader, but equally useful, classification of tasks was also described by Difei et al. [23]. This review is important because it presents work undertaken in two seemingly disparate fields that are, in our opinion, two sides of the same coin. As technology evolves, it is important for us to study the effects it can have on inter-personal interactions. Understanding social interactions in VEs from a behavioural and neuroscientific perspective stands to revolutionise the manner in which we interact and communicate with each other in VEs in the future. This paper presents ongoing research in the two fields and proposes directions in which it could be steered in order to achieve the ultimate goal of empathic tele-existence in collaborative VEs.

The rest of the paper is organized as follows: A methodology section details the inclusion criteria and the number of papers that were chosen for the review; a background section then covers existing work in the hyperscanning domain, with two subsections detailing the study paradigms and evaluation methods—subjective and objective—that have been used to date. This is followed by a section where we make the case for using hyperscanning in VEs, particularly in collaborative environments. Following this, we conclude with our views on the current state of the art, as well as potential directions for future research in the area.

2. Methodology

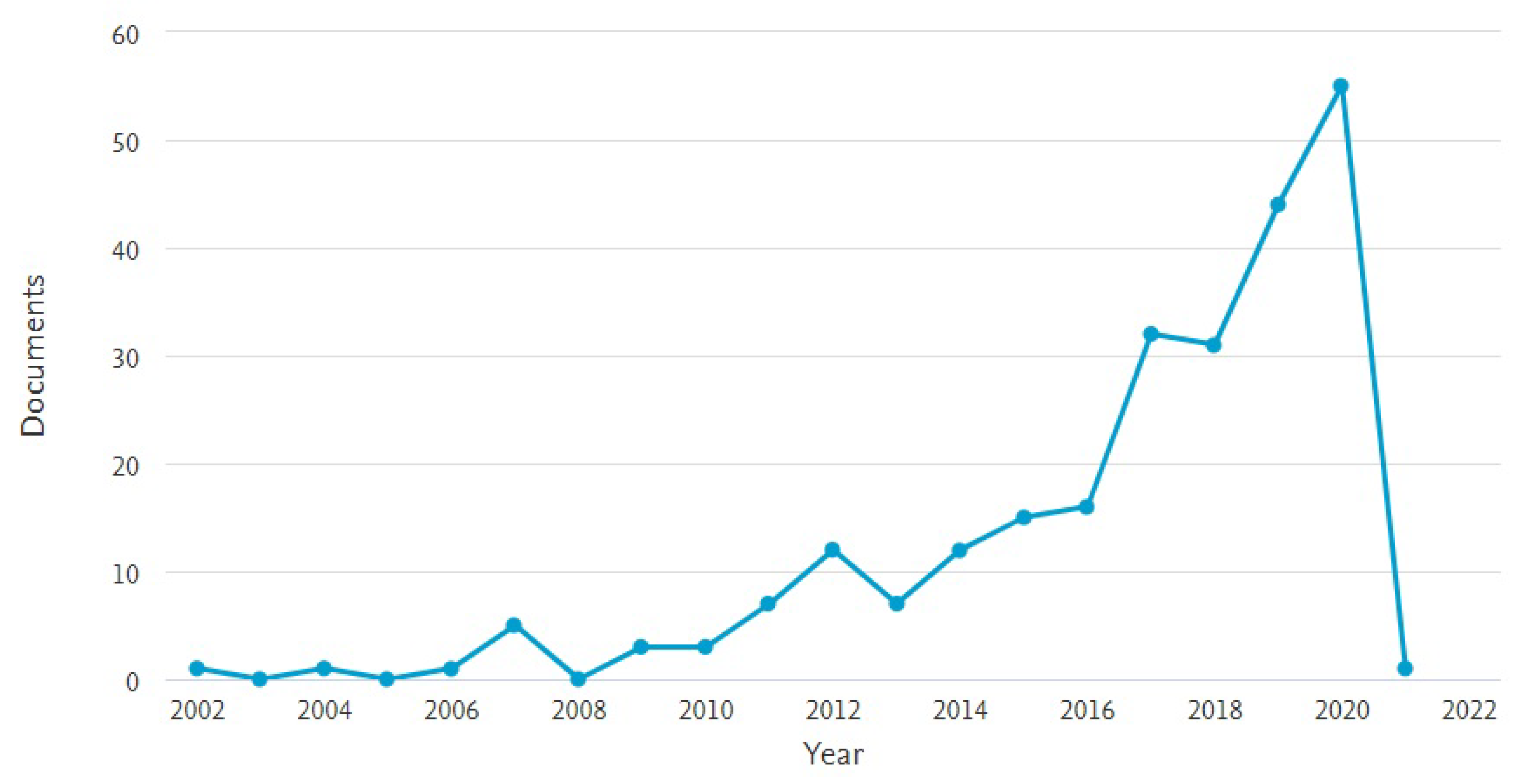

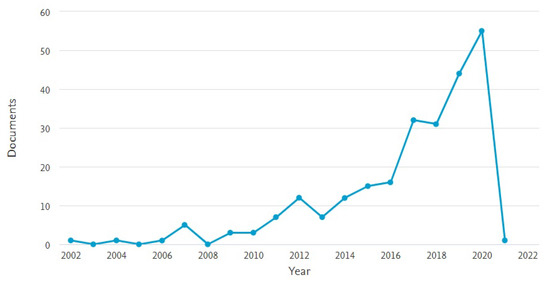

The field of multi-person social interaction is a rapidly expanding research area. The availability of relatively low-cost, high-quality equipment, alongside a rapidly evolving set of experimental paradigms, has contributed to its growth. In recent years, there has been a push towards recording the neural activity of two or more people simultaneously, a technique labelled hyperscanning [24]. While not entirely new [15], it has now begun to gain ground as a means of studying social interaction [25]. A search on Scopus (https://www.scopus.com/ accessed on 6 November 2020) for the term “hyperscanning” returned a total of 246 documents containing the term. Notably, the last decade has seen a significant uptake in social neuroscience research using the hyperscanning method, as shown in Figure 1. The year 2020 alone has seen 55 publications on the subject of hyperscanning.

Figure 1.

Search results for hyperscanning on Scopus.

The hyperscanning technique has played an integral role in acquiring neural data from multiple participants simultaneously during a given experimental task. Given that several studies now use hyperscanning to explore a range of social interaction types, it was imperative that we set inclusion criteria for the purpose of this review. Broadly, the inclusion criteria were determined by the task-based paradigms listed by Wang et al. [22] in their review of the field of hyperscanning. Consequently, studies that utilised the following neuro-imaging methods and study paradigms were included:

- Neuro-imaging methods: Electroencephalography (EEG), functional Near-infrared Spectroscopy (fNIRS), and functional Magnetic Resonance Imaging (fMRI).

- Study Paradigms: Imitation tasks, co-ordination tasks, eye contact/gaze-based tasks, co-operation and competition tasks, and ecologically valid/natural scenarios. A detailed description of each of the study paradigms can be found in Section 3.1.

We opted to review literature that falls within these relatively strict criteria because we believed the experimental paradigms used in these studies could be easily adapted to VR-based studies. A more detailed explanation can be found towards the end of Section 3.1. As later sections of this review reveal, there appears to be a complete lack of implementation of the hyperscanning technique in VR.

Using these inclusion criteria, we reviewed 34 papers published between 2002 and 2020. Of these, 21 papers used EEG, 6 used fMRI, and 7 used fNIRS to capture data from two or more interacting participants. These papers were obtained from searches conducted primarily on Scopus and Google Scholar. While Scopus limits the search to academic publications, Google Scholar helped us widen the scope of the search and identify any publications that the Scopus database might have missed; in particular, Google Scholar was mainly used to find non-academic publications, such as patents, that would not be listed by Scopus. Given the relatively nascent stage of research in the area, Google Scholar did not surface anything that was not already covered by Scopus. The search terms used were “hyperscanning”, “hyperscanning + Virtual Reality”, “Inter-brain connectivity”, “Inter-brain synchrony”, and “Flow”. However, the search term “hyperscanning” provided the most relevant results across Scopus and Google Scholar, so the majority of the papers reviewed in this article were obtained from the search results for this term.

3. Background

The three primary methods of monitoring neural activity are functional magnetic resonance imaging (fMRI), electroencephalography (EEG), and functional near-infrared spectroscopy (fNIRS). A primary driver of hyperscanning studies has been the high quality of the signals that these devices can now capture, and the relatively low price at which they are available, particularly in the case of EEG and fNIRS. Table 1 lists some of the hyperscanning studies that have been carried out using these methods.

Table 1.

A list of hyperscanning studies based on the neuroimaging methods used. The studies listed in the table reflect only a subset of those used for the purpose of the literature review.

These studies demonstrate that the hyperscanning technique has been validated by many researchers using different neuroimaging methods. Each of these methods has its advantages and disadvantages. For example, while fMRI provides good spatial resolution [26], it lacks temporal resolution [27] and is very expensive to operate. Studies conducted with fMRI also lack ecological validity, given the conditions under which they must be carried out [22]. EEG and fNIRS, on the other hand, offer significantly better temporal resolution [26,28], but cannot monitor neural activity in deep brain structures. They also lack the spatial resolution afforded by fMRI. However, these two techniques, EEG more so than fNIRS, have been used extensively over the last decade or so to carry out hyperscanning studies. The quality of the equipment and the cost at which it is available have enabled the exponential growth that the field of neuroscience in general, and social neuroscience in particular, has witnessed. An added advantage is the portability and ease of use that EEG and fNIRS offer [26]. Unlike an fMRI setup, both EEG and fNIRS require relatively little space and can easily be carried to a testing site if required.

Given the different methods devised to measure neural activity, researchers have also come up with a range of experimental paradigms that have explored the concept of inter-brain synchrony using the hyperscanning methodology. The next subsection provides an overview of the different study paradigms that have been adopted by researchers over the years. It must be noted that some of these, like the economic exchange task, have been adopted from existing concepts, such as the Prisoner’s Dilemma [29,30].

3.1. Study Paradigms

Taking into account the relative strengths and weaknesses of the methods listed earlier, researchers have devised a range of methodological approaches to study social interaction using neural activity [49]. These range from studies of specific aspects of social interaction, such as gaze in some fMRI studies [46], to economic exchange games in fNIRS hyperscanning studies [48] and ecologically valid experiments using EEG [36,39]. These study paradigms can be broadly divided into six groups [22]:

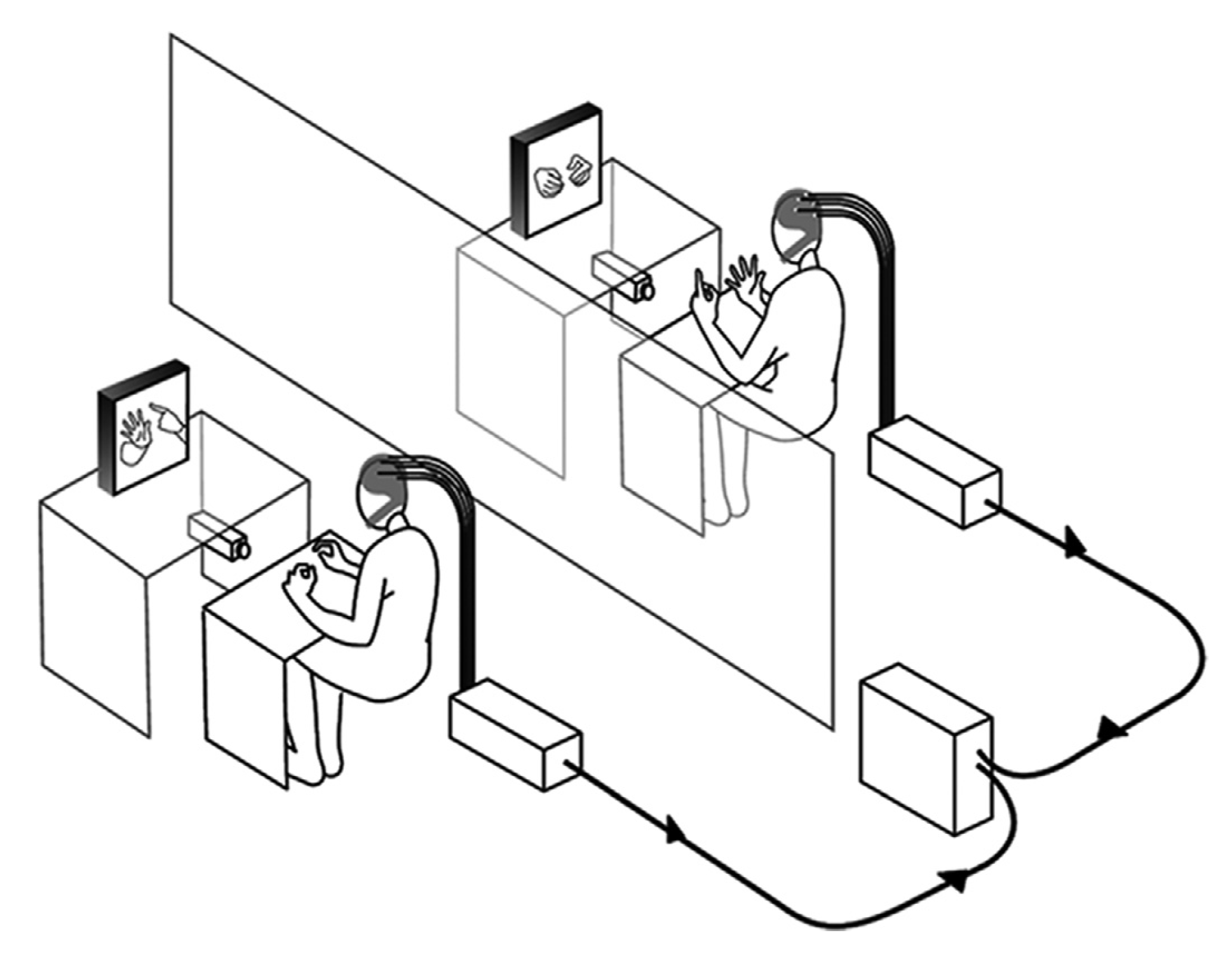

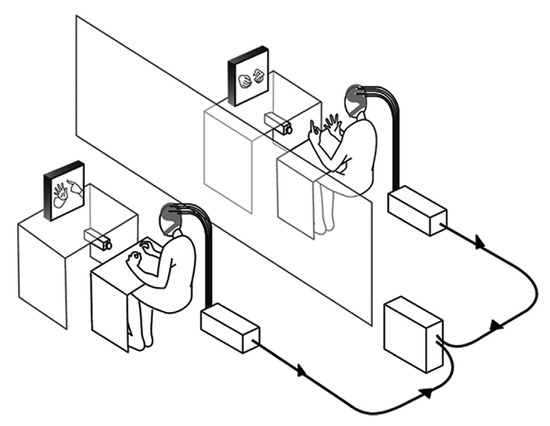

- Imitation tasks: These tasks require one participant to imitate the other’s movement or behaviour. They are designed to assess how movements or behaviours are “taken on board” by the participant attempting to imitate them. Results from studies using this paradigm suggest a clear correlation of neural activity, or inter-brain synchrony, between the person performing the task and the one imitating it [34], especially in cases where the imitation is “mirrored” [33,34]. Participant pairs that do not perform well on such a task do not appear to exhibit the same level of inter-brain synchrony. Figure 2 shows an imitation task used by Delaherche et al. [34] to study inter-brain synchrony using the hyperscanning method.

Figure 2. An imitation task, as described by [34].

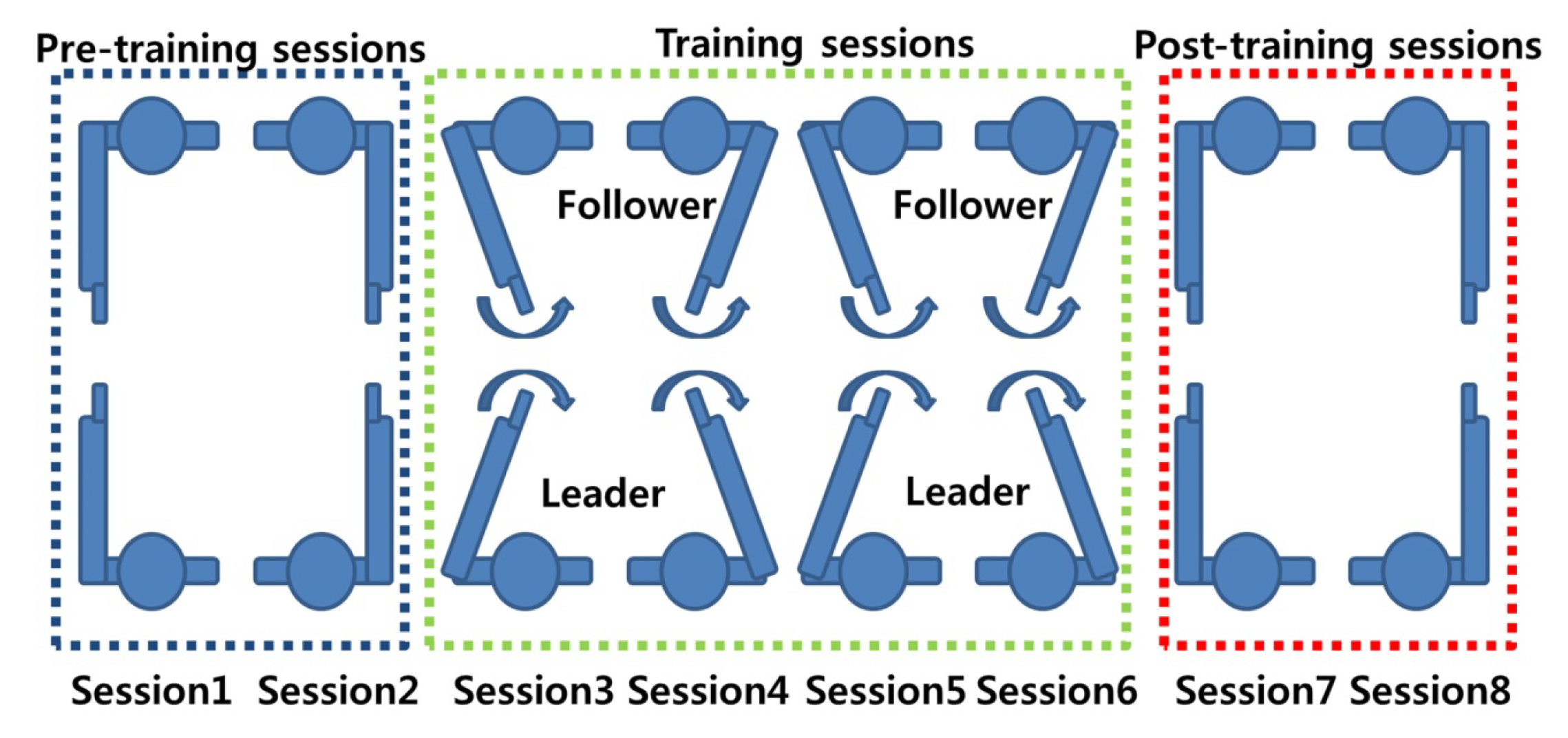

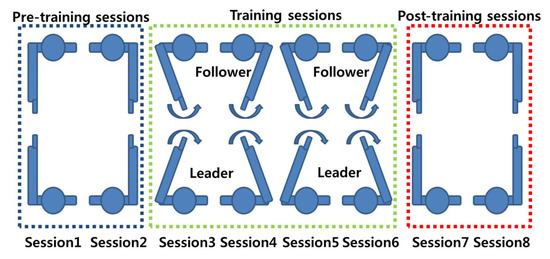

- Co-ordination tasks: Co-ordination tasks require participants to act in a synchronised manner. These tasks attempt to mimic the behavioural synchronisation that is commonly seen in daily life. For example, two people walking together may unconsciously fall into step, even though their intrinsic gait cycles differ [33]. It must be noted that, in some of the references listed in this paper, there is little difference between imitation and co-ordination tasks. For example, in the experiment by Yun et al. [33], both co-ordination and imitation are intrinsic parts of the design (Figure 3). While only the results from the co-ordination tasks (Sessions 1, 2, 7, and 8) were analysed for inter-brain synchrony, it is the imitation task (training sessions/social interaction) that is said to help induce synchrony between the two brains.

Figure 3. A co-ordination task, as described by [33].

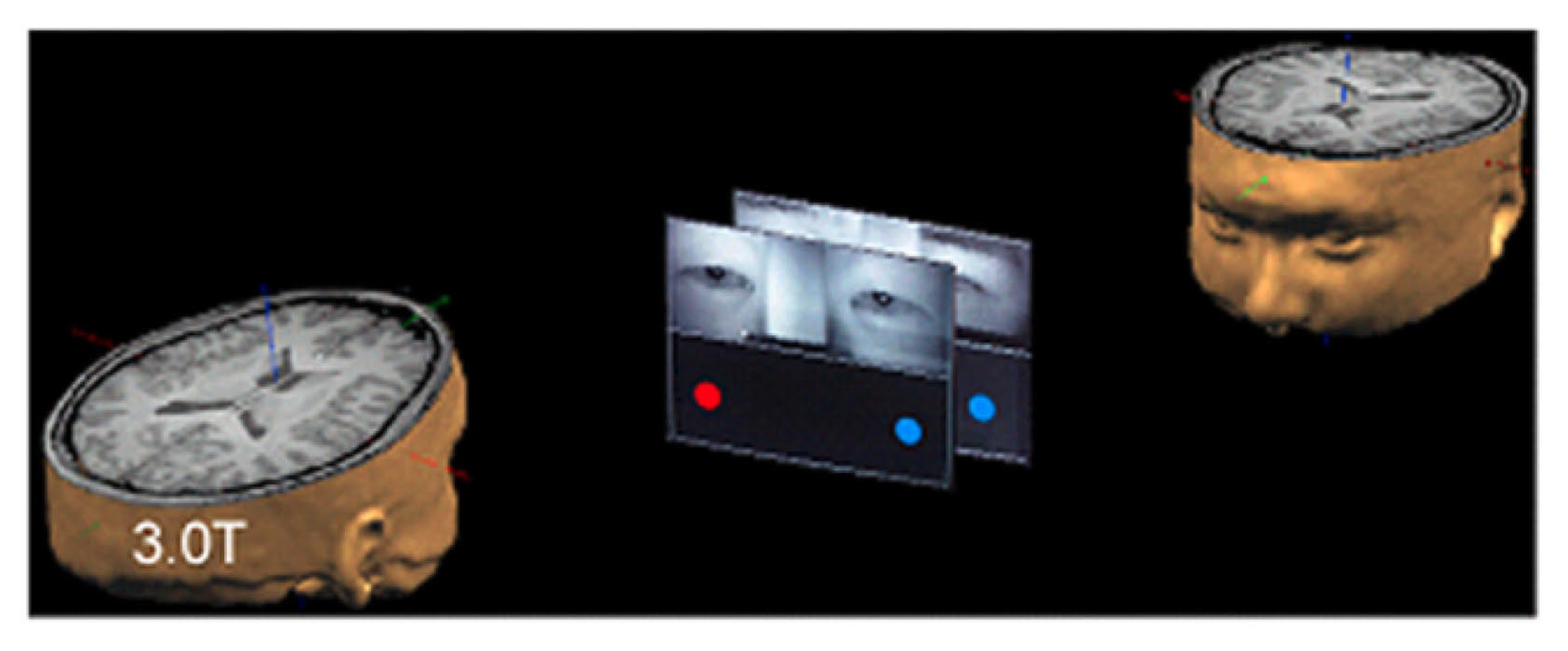

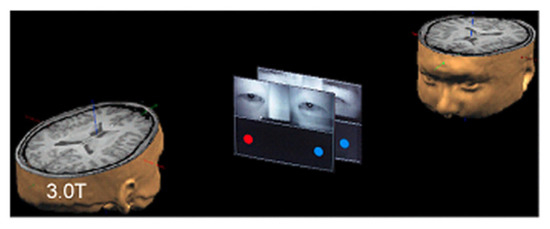

- Eye contact/gaze-based tasks: Studies that have employed this experimental paradigm require participants to look at each other and/or follow the gaze of the other participant. Mutual gaze, or eye contact, offers critical cues for social interaction and communication. The information exchanged through eye contact therefore offers an ideal basis for studying the underlying neural mechanisms via hyperscanning. Several studies have demonstrated that the extent of inter-brain synchrony between people can be gauged by studying mutual eye gaze exchanges [27,43,46]. Figure 4 shows a gaze-based task used by Saito et al. [43] to study the neural correlates of joint attention using linked fMRI scanners.

Figure 4. An eye-gaze-based study paradigm, as described by [43].

- Economic exchange tasks: Economic exchange tasks have also been used to study social interaction using the hyperscanning technique. These tasks generally involve one participant offering a certain amount of “money”, out of a known total, to the other participant, who is free to accept or reject the offer. Studies have demonstrated that offers considered “fair” or equitable are generally associated with correlated neural activity between the members of a dyad, especially when they are able to view each other’s faces [48]. An interesting variation on the game was demonstrated by Ciaramidaro et al. [42], who empirically demonstrated the existence of empathy between a “punisher” and a player in an economic exchange game involving a triad.

- Cooperation and competition tasks: Cooperation and competition form an intrinsic part of human life. People often need to work together to achieve a common goal, across all spheres of life. Similarly, we sometimes find ourselves competing against one or more other people in order to achieve a goal. Both these behaviours have been studied using hyperscanning in a bid to unearth the workings of social interactions. Studies using this paradigm have demonstrated that inter-brain synchrony is more likely to occur when participants are cooperating towards a common goal than when they are competing against each other [35]. Interestingly, that study also reports that cooperation in a “virtual environment” evokes a greater level of inter-brain synchrony than cooperation in the real world.

- Ecologically valid/natural scenarios: Of all the hyperscanning experimental paradigms, this is arguably the most interesting, as it places participants in real-world scenarios to study social interaction via neuroimaging techniques. The neuroimaging technique most commonly employed in these studies appears to be EEG, possibly owing to the improvements in quality and portability, and the relatively low cost, of EEG headsets. The decreasing costs have also allowed relatively large-scale studies to be conducted while still obtaining good-quality data, as demonstrated by Dikker et al. [37], who captured data from 12 EEG systems. Other researchers have also employed this experimental paradigm in scenarios ranging from card game play [31] and music performance [50] to piloting an aircraft [36], as shown in Figure 5.

Figure 5. A real-world study paradigm, as demonstrated by [36].


All the study paradigms that we have looked at have been used and validated by several researchers, and each has its pros and cons. While ecologically valid/natural scenarios seem to be the best way to study social interaction, they are not ideal for teasing out individual components of social interaction, such as mutual gaze, facial expressions, and gestures. It can also be argued that some of the experimental paradigms listed in this section overlap in terms of the activities they involve. For example, imitation and coordination tasks can be grouped together. There is also a case for labelling a coordination task as cooperative, given that participants are required to coordinate their movements towards a common end goal. How an experimental task is classified is therefore largely a matter of the researcher’s judgement. From the perspective of applying these experimental paradigms in VR, we believe that movement co-ordination tasks will translate well to VEs. Similarly, gaze-based tasks also stand to demonstrate measurable results in VEs. This is because, in our opinion, these two tasks form the basis of human–human interactions. If these two tasks translate well to VEs and demonstrate measurable inter-brain synchrony between participants via hyperscanning, we can reasonably expect more natural, real-world-like interactions to demonstrate similar effects. It is important that future studies follow these basic experimental paradigms in order to explore the neural correlates of the individual components that constitute social interaction. Results from such studies will aid the interpretation of neural activity observed in ecologically valid experimental paradigms. They will also allow us to directly compare results from real-world studies with those conducted in VEs, and help formulate efficient strategies for studying social interaction from a neurological standpoint. Table 2 lists some studies that utilise these experimental paradigms.

Table 2.

A list of hyperscanning studies based on the study paradigm. The studies listed in the table reflect only a subset of those used for the purpose of the literature review.

While study paradigms have had to evolve to keep pace with the direction of research, data acquisition and analysis techniques have evolved too. Because hyperscanning is a relatively nascent field, several methods are used to analyse the data obtained, drawing on both subjective and objective measures. Both types of measure play an important role in analysing and interpreting neural and behavioural activity. Neural recordings provide concrete information about the activation of different parts of the brain during a task, while traditional behavioural observations give us insights into the “visible” aspects of human beings undertaking an activity. Social neuroscience allows us to correlate these two forms of data in an attempt to unpack the neural underpinnings of the behaviours that we observe among interacting human beings. The next subsection provides a brief overview of the data gathering and analysis techniques that have been used in hyperscanning studies to date.

3.2. Data Acquisition and Analysis

An important consideration in hyperscanning studies is how the acquired data are analysed. Researchers mainly collect two types of data during hyperscanning studies:

- Objective Data: This refers to the neural recordings of participants made using any of the neuroimaging techniques described earlier.

- Subjective Data: This includes questionnaires, such as the Positive and Negative Affect Schedule (PANAS) [51] and the Self-Assessment Manikin (SAM) [52], that are administered as part of the study. These questionnaires provide the researcher with measures of “emotion” and other subjective states of the participants, as well as important information regarding each participant’s mental state during the activity.
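As a hedged illustration of how a questionnaire such as PANAS [51] is commonly scored, the sketch below assumes the standard 20-item form, in which participants rate 10 positive-affect and 10 negative-affect words on a 1 to 5 scale and each subscale score is the sum of its 10 items (range 10 to 50). The function name `score_panas` and the dictionary input format are hypothetical choices for this example, not part of any published toolkit.

```python
# Hypothetical sketch: scoring the 20-item PANAS into its two subscales.
# Assumes each item is rated from 1 to 5 and each subscale sums 10 items.

POSITIVE_ITEMS = (
    "interested", "excited", "strong", "enthusiastic", "proud",
    "alert", "inspired", "determined", "attentive", "active",
)
NEGATIVE_ITEMS = (
    "distressed", "upset", "guilty", "scared", "hostile",
    "irritable", "ashamed", "nervous", "jittery", "afraid",
)


def score_panas(responses):
    """Return positive- and negative-affect totals for one participant.

    `responses` maps each item word to an integer rating from 1 to 5.
    Raises ValueError on a missing item or an out-of-range rating.
    """
    for item in POSITIVE_ITEMS + NEGATIVE_ITEMS:
        if item not in responses:
            raise ValueError(f"missing PANAS item: {item}")
        if not 1 <= responses[item] <= 5:
            raise ValueError(f"rating out of range for {item}: {responses[item]}")
    return {
        "positive_affect": sum(responses[i] for i in POSITIVE_ITEMS),
        "negative_affect": sum(responses[i] for i in NEGATIVE_ITEMS),
    }
```

For instance, a participant who answers 3 to every item scores 30 on both subscales; pre- and post-task scores computed this way could then be set alongside the neural data for the same session.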

In addition to the data-gathering techniques listed above, researchers have also recorded behavioural observations of participants. When all this information is considered together, a holistic picture of social interaction can be built. This allows researchers to correlate behavioural observations with mental states and neural activity, making it easier to identify patterns (in behaviour, mental states, and brain activity) that correspond to a range of social activities. For the purpose of this review, we will first look at some of the objective analysis methods that have been employed by researchers in hyperscanning studies.

3.2.1. Objective Data

In the case of social neuroscience, the data collected using any of the neuroimaging methods mentioned earlier are considered objective data. The recorded neural activity can be analysed using a range of techniques. Hyperscanning is a relatively new field in neuroscience, and the methods used to analyse the data it produces are constantly evolving. Nearly all of the methods used for hyperscanning data analysis are based on comparisons between the recorded neural activity of two or more people. However, a handful also seek to measure the direction of information flow between brains [25,27,32]. It is imperative that the methods used for data analysis are not susceptible to detecting spurious connections between brains [53]. This is a significant concern, since two or more people exposed to the same stimulus simultaneously are likely to show similar neural responses even in the absence of any interaction. The methods used to analyse objective data must take this phenomenon into account when evaluating such data sets. The rest of this section briefly covers some of the analysis techniques that are currently used to analyse data from hyperscanning studies.
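One common safeguard against the shared-stimulus problem, offered here as a general illustration rather than as the procedure of any study reviewed, is to compare the observed inter-brain synchrony with surrogate values obtained after randomly re-pairing one participant's trials. Phase that is locked to the stimulus in both participants survives this shuffle, so only synchrony exceeding the surrogate distribution is treated as evidence of interaction. The minimal sketch below applies the idea to the phase locking value (PLV) described later in this section; the function names, the one-phase-per-trial input, and the default of 200 surrogates are assumptions for illustration.

```python
import cmath
import random


def plv(phases_a, phases_b):
    """Phase locking value across trials: |mean of exp(i * (phase_a - phase_b))|."""
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("phase lists must be non-empty and of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)


def surrogate_p_value(phases_a, phases_b, n_surrogates=200, seed=0):
    """Compare the observed PLV with PLVs from randomly re-paired trials.

    phases_a / phases_b hold one phase (radians) per trial, taken at the same
    time point, for one electrode of participant A and one of participant B.
    Returns (observed_plv, p_value), where p_value is the share of surrogates
    whose PLV is at least the observed one, with the usual +1 correction.
    """
    observed = plv(phases_a, phases_b)
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    exceed = 0
    for _ in range(n_surrogates):
        shuffled = list(phases_b)
        rng.shuffle(shuffled)  # breaks the trial-by-trial pairing of A and B
        if plv(phases_a, shuffled) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_surrogates + 1)
```

If both participants' phases are identical on every trial (a purely stimulus-locked response), every surrogate reproduces the observed PLV and the p-value is 1.0, whereas a consistent trial-by-trial phase relationship between the two participants yields a small p-value.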

- Partial Directed Coherence (PDC): PDC was introduced by [54] as a means to describe the relationship between multivariate time series data. The PDC from y to x is defined as:

$$\pi_{x \leftarrow y}(f) = \frac{\bar{A}_{xy}(f)}{\sqrt{\bar{\mathbf{a}}_{y}^{H}(f)\,\bar{\mathbf{a}}_{y}(f)}}$$

where $\bar{A}_{xy}(f)$ is an element of $\bar{\mathbf{A}}(f)$, which is the Fourier transform of the multivariate auto-regressive (MVAR) model coefficients, $\mathbf{A}(t)$, of the time series, and $\bar{\mathbf{a}}_{y}(f)$ is the $y$-th column of $\bar{\mathbf{A}}(f)$. With this method, the relationship between the data sets is expressed as the direction of information flow between the brains. PDC is based on multivariate auto-regressive modelling and Granger causality. The analysis technique appears to lend itself to studies where one person’s behaviour drives another’s.
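The PDC definition can be made concrete with a short sketch. It assumes that an MVAR model has already been fitted to the stacked channels of both participants (model fitting is outside its scope) and uses the common convention $\bar{\mathbf{A}}(f) = \mathbf{I} - \sum_{r=1}^{p} \mathbf{A}_r e^{-\mathrm{i}2\pi f r / f_s}$ for the frequency-domain coefficients. The function name `pdc`, its arguments, and the toy coefficients used to check it are hypothetical.

```python
import cmath
import math


def pdc(coeffs, freq, fs):
    """Magnitude of partial directed coherence at a single frequency.

    coeffs: [A_1, ..., A_p], already-fitted coefficient matrices (n x n nested
            lists) of the MVAR model x(t) = sum_r A_r x(t - r) + e(t).
    freq:   frequency of interest in Hz.
    fs:     sampling rate in Hz.
    Returns an n x n nested list whose entry [i][j] is |PDC| from channel j to i.
    """
    n = len(coeffs[0])
    # Frequency-domain coefficients: Abar(f) = I - sum_r A_r * exp(-i*2*pi*f*r/fs)
    abar = [[complex(1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    for r, a_r in enumerate(coeffs, start=1):
        phase = cmath.exp(-2j * math.pi * freq * r / fs)
        for i in range(n):
            for j in range(n):
                abar[i][j] -= a_r[i][j] * phase
    # Column norms sqrt(a_j^H a_j), used to normalise each source channel j
    norms = [math.sqrt(sum(abs(abar[k][j]) ** 2 for k in range(n))) for j in range(n)]
    return [[abs(abar[i][j]) / norms[j] for j in range(n)] for i in range(n)]
```

In a toy first-order model where channel 0 (x) is driven by channel 1 (y) but not vice versa, for example `pdc([[[0.5, 0.4], [0.0, 0.5]]], freq=10.0, fs=100.0)`, the entry from y to x is non-zero while the entry from x to y is exactly zero. Because each column is normalised by its own norm, the squared PDC values leaving any channel sum to 1, which is why PDC is often read as the share of a source channel's outflow directed to each target.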

- Phase Locking Value (PLV): PLV, as defined by [55], is:

$$\mathrm{PLV}_{ij} = \frac{1}{N}\left|\sum_{n=1}^{N} e^{\mathrm{i}\left(\phi_i(n) - \phi_j(n)\right)}\right|$$

where N represents the total number of epochs (a specified time window based on which data are segregated into equal parts for analysis), and $\phi_i(n)$ and $\phi_j(n)$ represent the phase of the signals for electrodes i and j in epoch n. The phase difference between the electrodes is given by $\phi_i(n) - \phi_j(n)$. For the inter-brain synchrony analysis, the PLV for each pair of electrodes i and j, where i belongs to one subject and j to the other, is computed. The PLV varies between 0 and 1, with 1 indicating perfect inter-brain synchrony and 0 indicating no phase synchrony between the two signals.

This is a frequently used method for demonstrating brain-to-brain coupling between socially interacting individuals. PLV uses the instantaneous phases of the two signals at a given point in the time series to determine connectivity between the two individuals. Given that PLV is based on the phase difference across trials, it is only suitable for event-related paradigms [53].
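A minimal sketch of the inter-brain PLV computation follows. It assumes that the instantaneous phase of each electrode has already been extracted for every epoch at the time point of interest (for example, from a band-pass filtered signal via the Hilbert transform, a common but not the only choice) and evaluates the PLV definition for every pair of electrodes in which i belongs to one subject and j to the other. The function names and the dictionary layout keyed by electrode label are illustrative assumptions.

```python
import cmath


def plv(phi_i, phi_j):
    """PLV between electrodes i and j from their per-epoch phases (radians)."""
    if len(phi_i) != len(phi_j) or not phi_i:
        raise ValueError("phase lists must be non-empty and of equal length")
    n_epochs = len(phi_i)
    # Sum the unit phasors of the phase difference, then take the mean magnitude
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phi_i, phi_j))
    return abs(total) / n_epochs


def inter_brain_plv(phases_s1, phases_s2):
    """PLV for every pair (electrode of subject 1, electrode of subject 2).

    phases_s1 / phases_s2 map electrode labels (e.g. "Fz") to per-epoch phase lists.
    Returns a dict keyed by (label_in_s1, label_in_s2).
    """
    return {
        (e1, e2): plv(p1, p2)
        for e1, p1 in phases_s1.items()
        for e2, p2 in phases_s2.items()
    }
```

A constant phase difference across epochs gives a PLV of 1, while phase differences spread evenly around the circle cancel out and give a PLV of 0.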


- Amplitude and Power Relation: The most frequently used approach to studying inter-brain synchrony between socially interacting individuals has been to examine changes in EEG amplitude or power. These amplitude and/or power changes are estimated relative to events in the task, and co-variation of these markers between participants is taken as a demonstration of inter-brain synchrony. This is, however, weak evidence of neural coupling among socially interacting individuals: while such co-variation is suggestive of inter-brain synchrony, it is by no means conclusive [53].
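The co-variation idea can be illustrated with a short sketch. It assumes that band power has already been estimated for each participant in a baseline window and a task window on every trial, expresses each trial's event-related change relative to baseline (one common convention, similar to the percentage change used in ERD/ERS analyses), and correlates the two participants' changes across trials with Pearson's r. The function names are hypothetical. As the text cautions, a high correlation here is suggestive rather than conclusive evidence of inter-brain synchrony.

```python
import math


def relative_power_change(baseline, task):
    """Per-trial event-related power change as a fraction of baseline power."""
    if len(baseline) != len(task):
        raise ValueError("baseline and task must have one value per trial")
    return [(t - b) / b for b, t in zip(baseline, task)]


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def power_covariation(base_a, task_a, base_b, task_b):
    """Correlate participants A and B's event-related power changes across trials."""
    return pearson_r(relative_power_change(base_a, task_a),
                     relative_power_change(base_b, task_b))
```

Note that two participants responding to the same event can show correlated power changes without any interaction between them, which is one reason this measure is considered weak on its own.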

It must be noted that some hyperscanning studies make use of two or more of the methods described above in order to analyse the data from different aspects. Given that current methods are constantly evolving, it is prudent to adopt such an approach to compare the results that one obtains from these methods.

While the three methods listed above constitute a large part of the analysis techniques applied in hyperscanning research, researchers have also suggested other methods for analysing hyperscanning data. The Circular Correlation Coefficient (CCorr) [56] and Kraskov Mutual Information (KMI) [57] have been shown to be robust when analysing hyperscanning data [53]. CCorr in particular has been shown to be a good alternative to PLV. Rather than relying on the consistency of the phase difference between two signals, CCorr measures the covariance of their phases, each taken relative to its own circular mean, in order to determine whether they are related.
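The circular correlation coefficient can be sketched as follows. This version centres each phase series on its own circular mean and correlates the sines of the deviations, a standard formulation for circular data; whether it matches every detail of [56] is an assumption. The function names and inputs (phase series in radians, for example the instantaneous phases of one electrode per participant) are illustrative.

```python
import math


def circular_mean(angles):
    """Mean direction of a sequence of angles in radians."""
    return math.atan2(sum(math.sin(a) for a in angles),
                      sum(math.cos(a) for a in angles))


def circular_correlation(phi_1, phi_2):
    """Circular correlation coefficient between two phase series (radians).

    Each series is centred on its own circular mean; the sines of the
    deviations are then correlated, giving a value between -1 and 1.
    """
    if len(phi_1) != len(phi_2) or not phi_1:
        raise ValueError("phase series must be non-empty and of equal length")
    m1, m2 = circular_mean(phi_1), circular_mean(phi_2)
    s1 = [math.sin(a - m1) for a in phi_1]
    s2 = [math.sin(b - m2) for b in phi_2]
    num = sum(x * y for x, y in zip(s1, s2))
    den = math.sqrt(sum(x * x for x in s1) * sum(y * y for y in s2))
    if den == 0.0:
        raise ValueError("a phase series has no spread around its circular mean")
    return num / den
```

A constant phase offset between the two series yields a coefficient of 1, and a mirrored series (each phase negated) yields -1.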

In this section, we have briefly covered some of the most popular methods used to analyse data obtained from hyperscanning studies. Table 3 lists some studies and the analysis methods they used. Given the recent rise in hyperscanning studies, it is likely that these techniques will evolve significantly in the near future. The next subsection covers some of the subjective data-gathering and analysis tools used to obtain a holistic picture of hyperscanning studies.

Table 3.

A list of the analysis techniques used for analysing neural (objective) data. PLI = Phase Locking Index, PLV = Phase Locking Value, SI = Synchronization Index, TF = Time-Frequency analysis, WTC = Wavelet Transform Coherence. The studies listed in the table reflect only a subset of those used for the purpose of the literature review.

3.2.2. Subjective Data

To evaluate social connectedness and presence during collaborative tasks or communication, researchers use a range of questionnaires designed to help participants convey these “feelings”. These serve as a means of quantifying experiences that, as yet, we cannot accurately measure. Such subjective measures help relate the objective data collected to the “experience” that participants report via these tools. Studies involving collaborative tasks in VEs regularly make use of presence questionnaires, such as those developed by Witmer and Singer [68] and Kort et al. [69]. These kinds of questionnaires help researchers determine how participants in a virtual environment are affected by each other’s presence, and which factors affect the sense of presence and connectedness.

For example, in a remote collaboration study conducted by Gupta et al. [70], participants were asked to answer a set of 11 questions designed to assess their experience of collaborating with another person in a VE. The questions asked participants to rate the level of connectedness they felt with their collaborator, the level of focus they believed they gave their collaborator and received from them, and the assistance they believed they provided to, or received from, their collaborator. These questions were adapted from a set used by Kim et al. [71] in a similar study. In both cases, the goal was to develop a VE and interaction techniques with the potential to enhance the collaborative experience by promoting connectedness and a mutual exchange of information, both implicit and explicit.

In the social neuroscience domain, subjective assessment has primarily consisted of behavioural observation of subjects [72], either in real time [44] or from video recordings [41] analysed at a later stage. These observations are then related to the objective data. An important aspect missing from such observations is the input of the participants themselves. It is important to gain insights from participants regarding their perceptions of the environment and the “feelings” that the interaction elicits in them, as this helps explain how the objective and observational data relate to participant feedback. This form of subjective assessment is now being incorporated into social neuroscience studies.

For example, Hu et al. [73] used a set of pre- and post-experiment questionnaires to explore participants’ feelings about each other and about the environment in which they interacted. In the pre-experiment questionnaire, participants were asked about their partner’s likability and attractiveness, and how much they trust other people in real life. After the experiment, participants rated the pleasantness of the tasks they had performed, their satisfaction with them, and the likability and cooperativeness of their partner on a 7-point Likert scale. Similarly, Pönkänen et al. [11] used the Situational Self-Awareness Scale developed by Govern and Marsh [74] to investigate subjective ratings of self-awareness in gaze-based studies, particularly when this awareness is linked to one’s perception of oneself in another’s eyes.

The last decade and a half has seen a concerted effort by researchers to develop questionnaires that attempt to capture what are generally thought of as “intangible qualities” of interaction. Social presence [69], social connectedness [75], and affect [76] rank highly among the seemingly intangible qualities that researchers are seeking to quantify in order to understand the systems underlying social interaction. The work covered in this subsection shows that subjective evaluations can provide a wealth of information about an individual’s state of mind before, during, and after a task. Gathering these data allows researchers to construct a better picture of the environment and the interactions from a subject’s perspective. Adding neuroimaging makes it possible to identify neural activity that may be representative of the mental states and/or emotions that participants report during their interactions. Together with the objective methods described earlier in the paper, subjective measures form a powerful combination for unravelling the workings of social interactions.
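As an illustration of how subjective and objective measures might be related, the sketch below correlates a per-dyad synchrony value (for example, a PLV averaged over a task) with each dyad’s mean rating on a 7-point Likert item. Spearman’s rank correlation is used here because Likert ratings are ordinal. This choice, the function names, and the toy numbers are assumptions for illustration only; they are not data or methods from the studies reviewed.

```python
import numpy as np
from scipy.stats import spearmanr


def dyad_rating(scores_a, scores_b):
    """Mean of two partners' 7-point Likert ratings for each dyad."""
    scores = np.column_stack([scores_a, scores_b])
    if scores.min() < 1 or scores.max() > 7:
        raise ValueError("Likert scores must lie in 1..7")
    return scores.mean(axis=1)


def synchrony_vs_rating(synchrony, ratings):
    """Rank correlation between per-dyad synchrony and per-dyad ratings."""
    rho, p_value = spearmanr(synchrony, ratings)
    return rho, p_value


# Hypothetical toy values for six dyads (not real data).
synchrony = np.array([0.21, 0.35, 0.28, 0.52, 0.44, 0.61])
connectedness = dyad_rating([3, 4, 4, 6, 5, 7], [4, 5, 4, 6, 6, 6])
rho, p_value = synchrony_vs_rating(synchrony, connectedness)
print(f"Spearman rho = {rho:.2f} (p = {p_value:.3f}, n = {synchrony.size} dyads)")
```

With only a handful of dyads, such a correlation is fragile, so the number of dyads and any correction for testing many channels and frequency bands would matter in a real analysis.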

4. The Case for Using Hyperscanning in Virtual Environments

To the best of our knowledge, this paper has examined an extensive portion of the existing literature on hyperscanning and its use as a means to understand social interactions. All the studies we have looked at demonstrated that neural correlates of social interaction can be identified while two or more people interact. One of the most important features of these studies is that they were run with participants carrying out tasks in the real world. For the purpose of this section, we refer to the real world as anything that is not a VE. Every study we have looked at, barring those in [31,37,42], involves two participants. This points clearly to the current interest in the two-person neuroscience discussed earlier in this paper, and we expect this interest to gradually extend to multi-person social interactions. In light of recent global events and the resulting increase in the adoption of video conferencing technologies like Zoom (https://zoom.us/) and Google Meet (https://apps.google.com/meet/), studying multi-person interactions in a VE has acquired greater importance. We believe that large-scale social interaction in VEs is set to grow rapidly with the availability of low-cost VR headsets, and current research in VEs reflects this trend [77].

VR is a technology that has been around for at least five decades. In recent years, it has matured enough to be made available commercially. As VR has grown, it has come to be used across a wide range of application domains, such as entertainment, therapy [78,79], medicine [80], construction [81,82], and training [83], to name a few. One of its key strengths is its ability to immerse users in a computer-generated environment, which has allowed developers to create many different VEs, be it for entertainment or training, that a user can inhabit.

In recent years, VR has been used as a tool for remote collaboration by a wider section of society. Commercially or freely available tools, such as AltSpace VR (https://altvr.com/), and Hubs (https://hubs.mozilla.com/) and Spoke (https://hubs.mozilla.com/spoke) by Mozilla, now allow users to create, meet, and collaborate in VEs. Users are now able to work together in real time in collaborative virtual workspaces, despite being geographically separated. Because some of today’s VR/AR headsets come equipped with eye-tracking, users are able to share implicit social cues, such as gaze, with their collaborators. Despite these hardware advances in the VR/AR space, and the progress made on interactivity within a VE (both with the VE itself and with other users), a major drawback remains: these systems cannot convey implicit cues, such as subtle hand gestures and eye contact, in a convincing manner. While some means exist to convey some of these implicit cues in a VE, they fail to capture the subtle nuances that underlie implicit communication. In real-world, face-to-face conversations, these implicit cues can inform people of each other’s intentions, emotions, mental states, and so forth.

In an attempt to help improve the communication between people in a collaborative VE, researchers have begun to incorporate physiological sensors that measure heart rate (HR) and galvanic skin response (GSR), among others [84,85,86,87]. Among these physiological sensors, the electroencephalograph (EEG) appears to have become popular among researchers looking for new ways to interact with and convey mental states in a VE [88,89,90,91]. This increased interest in using the EEG in a VE has been encouraged by the availability of high-quality EEG headsets at a low price point. Manufacturers like OpenBCI (https://openbci.com/) and Emotiv (https://www.emotiv.com/) provide EEG hardware and software tools at a price point that makes such technology easily accessible.

This availability of lower-cost equipment has also spurred the growth of neurological research that studies social interaction, with EEG headsets at the forefront of this uptake in social neuroscience research. Two-person neuroscience in particular has seen a significant increase in the number of studies being conducted. While researchers have long been interested in how the brain works in a social setting, until recently studying this was not possible, given how cumbersome and expensive monitoring neural activity was. The emergence of low-cost, high-quality EEG headsets appears to have addressed these issues. Another important advance has been the development of tools and techniques that allow data to be analysed in greater detail than was previously possible; the previous section listed some of the subjective and objective data analysis techniques used by researchers. These advances have led researchers to develop new study paradigms suited to monitoring neural activity from two or more people simultaneously. One of the primary motivations for studying social interactions between people has been to explore the concepts of social co-operation, inter-brain synchrony, and flow. For example, Babiloni et al. [31] demonstrated that co-operative, team-based game-play with the common goal of beating an opponent elicits similar neural activity among members of the same team. The study also demonstrated a marked difference in neural activity between the individuals of two competing teams. Notably, the study paradigm adopted by Babiloni et al. for this experiment was a popular card game. This is a good example of how, given advances in technology, the process of social interaction can be studied in realistic environments.
Another compelling example of studying social interaction in a real-world scenario of critical importance is described by Toppi et al. [36]. In this study, the neural activity and interactions of a pilot and co-pilot were studied during a simulated flight in an industry-standard flight simulator. The results demonstrate that the pilot and co-pilot exhibit a dense network of connections between their brains when involved in co-operative tasks that are vital to the safety of the flight and the completion of the mission. Conversely, the number of connections, and hence inter-brain synchrony, decreased drastically when the pilots were involved in tasks that did not require them to co-operate.

Researchers have also explored the concept of flow [92], as demonstrated by Shehata et al. [93]. The flow state, or being “in the zone”, is said to be a psychological phenomenon that develops when there is a balance between an individual’s skills and the challenge of the task, together with clear goals and immediate feedback [94]. As with the studies described earlier, the flow state is believed to be achieved when a group of people reach high task engagement in the pursuit of a common goal. Shehata et al. demonstrated that people engaged in a co-operative task that they complete successfully with a high level of accuracy both report experiencing flow and show a level of brain activity linked with the flow state.

We have seen how the availability of high-quality neural activity monitoring at a relatively low price point has driven the growth of social neuroscience. Researchers are now able to simultaneously monitor the neural activity of two or more people in real-world scenarios, demonstrating how the brain functions in such environments. This also lends greater credibility to the results obtained from such studies, given that they are run in ecologically valid settings.

One of the most important research trends to emerge from the availability of affordable VR/AR and EEG hardware has been the rise of the Brain–Computer Interface (BCI), and its use in VEs [95] to facilitate interaction between a human and a computer. There are numerous examples of an EEG headset being used as a BCI in VR and AR [89,91,96,97,98]. BCIs can translate a user’s intentions into actions in the virtual world [96,99], and they have been implemented across a variety of use cases in VEs. For example, Chin et al. [100] demonstrated the use of a BCI based on Motor Imagery (MI) as a means to move a virtual hand. The applications for such an interface range from implementing interactions within a VE to the rehabilitation of stroke patients with impaired upper or lower limb function. Similarly, VR has been used to train paraplegic and quadriplegic users of powered wheelchairs to control the wheelchair with a BCI [101]. Another interesting application of the BCI has been communication between users in a VE. Kerous et al. [98] used the P300 speller as a means to implement communication between two people in a VE. The P300 speller relies on the evoked potential recorded approximately 300 ms after the onset of a stimulus of interest [102]. In most cases, this is achieved using the “oddball paradigm”, in which the stimulus of interest is seen or heard less frequently than the other stimuli [103]. The rarity of the target stimulus results in a “spike” (event-related potential) in neural activity when the target stimulus is observed [103,104]. For a detailed overview of the P300 paradigm, see [105,106]. While arguably a slow and inefficient method [102,107], it demonstrates the possibility of using a BCI to facilitate communication between participants in a VE.
Additionally, BCIs have been shown to be capable of distinguishing emotions [108,109] and cognitive load, which can be used to dynamically alter the VE to suit the user’s needs.
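The averaging logic behind the oddball paradigm can be illustrated with synthetic data. The sketch below assumes a single EEG channel sampled at 250 Hz, a 20% target rate, and a P300 modelled as a Gaussian positive deflection peaking at 300 ms; all of these values, and the function names, are hypothetical. It shows why averaging epochs time-locked to stimulus onset reveals the event-related potential: the evoked response is the same on every target trial, while uncorrelated background activity shrinks roughly as 1/sqrt(N). It is not a P300 speller, which additionally needs a classifier to decide which flashed item the user attended to.

```python
import numpy as np

FS = 250                        # sampling rate in Hz (assumed)
N_SAMPLES = 200                 # 0.8 s epoch after stimulus onset (assumed)
T = np.arange(N_SAMPLES) / FS   # epoch time axis in seconds


def simulate_epochs(n_epochs, is_target, rng, noise_uv=5.0):
    """Synthetic single-channel epochs (n_epochs x N_SAMPLES), in microvolts."""
    epochs = rng.normal(0.0, noise_uv, size=(n_epochs, N_SAMPLES))
    if is_target:
        # Hypothetical P300: 5 uV Gaussian deflection peaking 300 ms after onset.
        epochs += 5.0 * np.exp(-((T - 0.3) ** 2) / (2 * 0.05 ** 2))
    return epochs


def erp(epochs):
    """Average across epochs time-locked to stimulus onset."""
    return epochs.mean(axis=0)


rng = np.random.default_rng(42)
# Oddball design: rare targets (20%) among frequent non-targets (80%).
targets = simulate_epochs(40, True, rng)
nontargets = simulate_epochs(160, False, rng)

difference_wave = erp(targets) - erp(nontargets)
peak_latency_s = T[np.argmax(difference_wave)]
window = (T >= 0.25) & (T <= 0.35)
p300_amplitude = difference_wave[window].mean()
print(f"peak latency {peak_latency_s * 1000:.0f} ms, "
      f"mean 250-350 ms amplitude {p300_amplitude:.2f} uV")
```

A single target epoch here is dominated by noise; the deflection only becomes clear in the average, which is one reason P300-based communication needs repeated stimulus presentations and is comparatively slow.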

Here, we have covered some of the applications of BCIs that are currently being researched. As we can see, there are many ways in which BCIs can be used in VEs to affect interactions, monitor neural states, and even augment communication. An important implication of the studies on BCIs in VEs is that they provide evidence that EEG sensors can be used together with VR HMDs. Furthermore, studies such as the one conducted by Bernal et al. [110] demonstrate that EEG sensors can even be built into an HMD, providing better fit and comfort. Commercial versions of such HMDs, or EEG headsets that can be retrofitted to them, are now being manufactured by companies like Looxid Labs (https://looxidlabs.com/) and Neurable (https://www.neurable.com/).

5. Practical Implications for Using Hyperscanning in Virtual Environments

While EEG has been used in a number of VR studies, there appear to be no studies, barring one [111], that employ hyperscanning in VR. Employing this technique in VR could greatly benefit the study of interactions among users in a multi-user VE. It could also help formulate design strategies that promote inter-brain synchrony in VEs to facilitate better interaction among users. For example, in VR-based training scenarios, equipping the trainer and trainee with EEG sensors would allow the trainer to monitor whether the trainee’s neural activity matches their own. If the trainee is distracted or inattentive, their neural activity would be expected not only to reflect this state, but also to fall out of sync with that of the trainer. Such a tool could greatly benefit users in a remote collaboration setting. From the viewpoint of VR, a notable aspect of the medium is its ability to provide varying visual perspectives, such as first-person or third-person views. In the scenario described above, these changes in visual perspective can be studied to explore how they affect inter-brain synchrony. It is this facet of VR that we believe will be the most useful in enhancing collaboration, and that can be studied using the hyperscanning technique.
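A minimal sketch of the trainer–trainee monitoring idea follows. It assumes that phase time series from one channel per person are already available and that a sliding-window PLV with a fixed threshold is an adequate proxy for being “in sync”. The window length, step, threshold of 0.5, the synthetic session, and the function names are all hypothetical choices for illustration; in practice a threshold would need to be calibrated, for example against surrogate (shuffled) data.

```python
import numpy as np


def windowed_plv(phase_a, phase_b, win, step):
    """PLV between two phase series in sliding windows of `win` samples."""
    starts = np.arange(0, len(phase_a) - win + 1, step)
    values = np.array([
        np.abs(np.mean(np.exp(1j * (phase_a[s:s + win] - phase_b[s:s + win]))))
        for s in starts
    ])
    return starts, values


def flag_low_synchrony(values, threshold=0.5):
    """Hypothetical rule: mark windows whose PLV falls below a fixed threshold."""
    return values < threshold


# Synthetic 20 s session at 250 Hz: the trainee follows the trainer's 10 Hz
# phase for the first half, then the phases become unrelated ("distracted").
fs = 250
t = np.arange(20 * fs) / fs
rng = np.random.default_rng(1)
trainer_phase = np.angle(np.exp(1j * 2 * np.pi * 10 * t))
trainee_phase = trainer_phase + rng.normal(0.0, 0.3, t.size)
half = t.size // 2
trainee_phase[half:] = rng.uniform(-np.pi, np.pi, t.size - half)

starts, values = windowed_plv(trainer_phase, trainee_phase, win=2 * fs, step=fs)
for s, v, low in zip(starts, values, flag_low_synchrony(values)):
    print(f"{s / fs:5.1f} s  PLV={v:.2f}  {'LOW' if low else ''}")
```

Windows that straddle the change point take intermediate values, so a real system would need to trade window length (stability of the estimate) against how quickly it reacts to a loss of synchrony.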

It is important to note that hyperscanning, unlike a BCI, is a tool to identify inter-brain synchrony among participants. The information provided by such a system can be used proactively and dynamically to alter interaction strategies, engaging user attention and promoting inter-brain synchrony for efficient task completion. These changes can be implemented either automatically by a computer, as in the case of a BCI, or by the users themselves.

6. Conclusions

Hyperscanning is a rapidly evolving technique used by social neuroscientists to study social interactions between two or more people. Popularly labelled as two-person neuroscience, it aims to unravel the neural underpinnings of social interactions by providing empirical evidence. This primarily consists of recorded neural data that are analysed using a host of techniques. These analyses are also buttressed by several subjective measures and traditional observation-based methods. These emerging techniques demonstrate that there is a concerted effort in the scientific community to understand how social interaction between two or more people functions at a neural level. Understanding this will help corroborate behavioural observations made by scientists during such studies and provide a holistic understanding of social interactions. Given the plethora of devices that have now democratised access to a VE, it is important that we also understand how social interactions work in such environments.

Using the themes of social interactions and VEs as our guiding beacons, this paper has covered the literature relevant to two-person and multi-person social interaction from the perspective of social neuroscience and the rise of VEs. The literature on multi-person social neuroscience, particularly that centred on hyperscanning, has demonstrated that hyperscanning is an effective method for monitoring neural activity during social interactions between two or more people. Of great importance is the fact that EEG devices now give researchers the ability to monitor neural activity in real-world scenarios, as some of the studies we have reviewed demonstrate. We have also covered how VR, as a medium, is starting to gain mainstream popularity, and how EEG and VR have been fused in the form of a BCI. This is of particular importance, as it demonstrates that two seemingly disparate disciplines can be brought together to bring about better interaction in VEs. More importantly, the use of BCIs in VEs suggests that hyperscanning in collaborative VEs is not only feasible, but also desirable, given that such environments are gaining popularity, as evidenced by the growing use of platforms such as AltSpace VR and Mozilla Hubs.

These two emerging trends—two-person neuroscience and collaborative VEs—warrant an investigation into how they can be combined in order to improve social interactions in collaborative VEs. There are numerous use cases for a system that combines the two. For example, in a training scenario, a trainer can alter the content being delivered to the trainee based on a number of parameters, such as inter-brain connectivity, cognitive load, attentiveness, and so forth. The hyperscanning technique can be used to assess inter-brain connectivity in such a scenario to help a trainer make decisions in a dynamic fashion that can enhance the manner in which the training programme is delivered.

In order to enable such interactions, we must first explore and identify the neural correlates that underlie social exchanges in VEs. The results from such studies can be used to help design VEs that promote inter-brain synchrony and help participants achieve a state of flow in order to complete tasks quickly and efficiently. Given that, to the best of our knowledge, there appears to be only one study that uses hyperscanning to examine social interactions in VEs [111], we propose to undertake such an experiment as a means to establish a methodological approach for future investigations into the subject. This preliminary experiment will aim to replicate an existing hyperscanning study carried out in the real world. The experiment will be carried out both in the real world, as in the original study, and in a VE. We believe the results from such a study will help us identify the differences, if any, between the neural activity recorded in the real world and that recorded in a VE. Establishing these fundamental parameters early will help steer investigations into this topic in the right direction. In addition, it would be useful to carry out hyperscanning studies in VEs that examine other aspects of social interaction, such as gaze and hand gestures. Such studies will help us build a clearer picture of how multi-person social interactions function in VEs, and how we can build virtual collaborative spaces that promote neural synchrony between their users.

Author Contributions

Conceptualization, M.B.; methodology, A.D. and A.B.; writing—original draft preparation, A.B.; writing—review and editing, A.B., A.D., I.G., A.F.H., G.L. and M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

Two of the authors of this paper, A.D. and M.B., are editors of the Informatics special issue “Emotion, Cognition, and Empathy in Extended Reality Applications”, to which this review is being submitted.

Glossary of Terms

| Abbreviation | Term |
| --- | --- |
| AR | Augmented Reality |
| BCI | Brain–Computer Interface |
| CCorr | Circular Correlation Coefficient |
| CSCW | Computer-Supported Cooperative Work |
| EEG | Electroencephalograph |
| fMRI | Functional Magnetic Resonance Imaging |
| fNIRS | Functional Near-Infrared Spectroscopy |
| GSR | Galvanic Skin Response |
| HCI | Human-Computer Interaction |
| HMD | Head-Mounted Display |
| HR | Heart Rate |
| KMI | Kraskov Mutual Information |
| MI | Motor Imagery |
| PANAS | Positive and Negative Affect Schedule |
| PDC | Partial Directed Coherence |
| PLI | Phase Locking Index |
| PLV | Phase Locking Value |
| SAM | Self-Assessment Manikin |
| SI | Synchronization Index |
| TF | Time-Frequency Analysis |
| VE | Virtual Environment |
| VR | Virtual Reality |
| WTC | Wavelet Transform Coherence |

References

- Brandão, T.; Matias, M.; Ferreira, T.; Vieira, J.; Schulz, M.S.; Matos, P.M. Attachment, emotion regulation, and well-being in couples: Intrapersonal and interpersonal associations. J. Personal. 2020, 88, 748–761. [Google Scholar] [CrossRef] [PubMed]

- Karreman, A.; Vingerhoets, A.J. Attachment and well-being: The mediating role of emotion regulation and resilience. Personal. Individ. Differ. 2012, 53, 821–826. [Google Scholar] [CrossRef]

- Caruana, N.; Mcarthur, G.; Woolgar, A.; Brock, J. Simulating social interactions for the experimental investigation of joint attention. Neurosci. Biobehav. Rev. 2017, 74, 115–125. [Google Scholar] [CrossRef] [PubMed]

- Mojzisch, A.; Schilbach, L.; Helmert, J.R.; Pannasch, S.; Velichkovsky, B.M.; Vogeley, K. The effects of self-involvement on attention, arousal, and facial expression during social interaction with virtual others: A psychophysiological study. Soc. Neurosci. 2006, 1, 184–195. [Google Scholar] [CrossRef] [PubMed]

- Innocenti, A.; De Stefani, E.; Bernardi, N.F.; Campione, G.C.; Gentilucci, M. Gaze direction and request gesture in social interactions. PLoS ONE 2012, 7, e36390. [Google Scholar] [CrossRef]

- Treger, S.; Sprecher, S.; Erber, R. Laughing and liking: Exploring the interpersonal effects of humor use in initial social interactions. Eur. J. Soc. Psychol. 2013, 43, 532–543. [Google Scholar] [CrossRef]

- Holm Kvist, M. Children’s crying in play conflicts: A locus for moral and emotional socialization. In Research on Children and Social Interaction; Equinox Publishing Ltd.: Sheffield, UK, 2018; Volume 2. [Google Scholar]

- Graham, R.; Labar, K.S. Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia 2012, 50, 553–566. [Google Scholar] [CrossRef][Green Version]

- Ishii, R.; Nakano, Y.I.; Nishida, T. Gaze awareness in conversational agents: Estimating a user’s conversational engagement from eye gaze. ACM Trans. Interact. Intell. Syst. 2013, 3, 1–25. [Google Scholar] [CrossRef]

- Cacioppo, J.T.; Berntson, G.G.; Adolphs, R.; Carter, C.S.; McClintock, M.K.; Meaney, M.J.; Schacter, D.L.; Sternberg, E.M.; Suomi, S.; Taylor, S.E. Foundations in Social Neuroscience; MIT Press: Cambridge, MA, USA, 2002. [Google Scholar]

- Pönkänen, L.M.; Peltola, M.J.; Hietanen, J.K. The observer observed: Frontal EEG asymmetry and autonomic responses differentiate between another person’s direct and averted gaze when the face is seen live. Int. J. Psychophysiol. 2011, 82, 180–187. [Google Scholar] [CrossRef]

- Pönkänen, L.M.; Hietanen, J.K. Eye contact with neutral and smiling faces: Effects on autonomic responses and frontal EEG asymmetry. Front. Hum. Neurosci. 2012, 6, 122. [Google Scholar] [CrossRef]

- Hasson, U.; Ghazanfar, A.A.; Galantucci, B.; Garrod, S.; Keysers, C. Brain-to-brain coupling: A mechanism for creating and sharing a social world. Trends Cogn. Sci. 2012, 16, 114–121. [Google Scholar] [CrossRef] [PubMed]

- Hari, R.; Kujala, M.V. Brain basis of human social interaction: From concepts to brain imaging. Physiol. Rev. 2009, 89, 453–479. [Google Scholar] [CrossRef] [PubMed]

- Duane, T.D.; Behrendt, T. Extrasensory electroencephalographic induction between identical twins. Science 1965, 150, 367. [Google Scholar] [CrossRef]

- Billinghurst, M.; Kato, H. Collaborative augmented reality. Commun. ACM 2002, 45, 64–70. [Google Scholar] [CrossRef]

- Kim, S.; Billinghurst, M.; Lee, G. The effect of collaboration styles and view independence on video-mediated remote collaboration. Comput. Support. Coop. Work CSCW 2018, 27, 569–607. [Google Scholar] [CrossRef]

- Yang, J.; Sasikumar, P.; Bai, H.; Barde, A.; Sörös, G.; Billinghurst, M. The effects of spatial auditory and visual cues on mixed reality remote collaboration. J. Multimodal User Interfaces 2020, 14, 337–352. [Google Scholar] [CrossRef]

- Bai, H.; Sasikumar, P.; Yang, J.; Billinghurst, M. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13. [Google Scholar]

- Piumsomboon, T.; Day, A.; Ens, B.; Lee, Y.; Lee, G.; Billinghurst, M. Exploring enhancements for remote mixed reality collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–5. [Google Scholar]

- Frith, C.D.; Frith, U. Implicit and explicit processes in social cognition. Neuron 2008, 60, 503–510. [Google Scholar] [CrossRef] [PubMed]

- Wang, M.Y.; Luan, P.; Zhang, J.; Xiang, Y.T.; Niu, H.; Yuan, Z. Concurrent mapping of brain activation from multiple subjects during social interaction by hyperscanning: A mini-review. Quant. Imaging Med. Surg. 2018, 8, 819. [Google Scholar] [CrossRef]

- Liu, D.; Liu, S.; Liu, X.; Zhang, C.; Li, A.; Jin, C.; Chen, Y.; Wang, H.; Zhang, X. Interactive brain activity: Review and progress on EEG-based hyperscanning in social interactions. Front. Psychol. 2018, 9, 1862. [Google Scholar] [CrossRef]

- Montague, P.R.; Berns, G.S.; Cohen, J.D.; McClure, S.M.; Pagnoni, G.; Dhamala, M.; Wiest, M.C.; Karpov, I.; King, R.D.; Apple, N.; et al. Hyperscanning: Simultaneous fMRI during Linked Social Interactions. NeuroImage 2002, 16, 1159–1164. [Google Scholar] [CrossRef]

- Scholkmann, F.; Holper, L.; Wolf, U.; Wolf, M. A new methodical approach in neuroscience: Assessing inter-personal brain coupling using functional near-infrared imaging (fNIRI) hyperscanning. Front. Hum. Neurosci. 2013, 7, 813. [Google Scholar] [CrossRef] [PubMed]

- Koike, T.; Tanabe, H.C.; Sadato, N. hyperscanning neuroimaging technique to reveal the “two-in-one” system in social interactions. Neurosci. Res. 2015, 90, 25–32. [Google Scholar] [CrossRef]

- Bilek, E.; Ruf, M.; Schäfer, A.; Akdeniz, C.; Calhoun, V.D.; Schmahl, C.; Demanuele, C.; Tost, H.; Kirsch, P.; Meyer-Lindenberg, A. Information flow between interacting human brains: Identification, validation, and relationship to social expertise. Proc. Natl. Acad. Sci. USA 2015, 112, 5207–5212. [Google Scholar] [CrossRef] [PubMed]

- Babiloni, F.; Astolfi, L. Social neuroscience and hyperscanning techniques: Past, present and future. Neurosci. Biobehav. Rev. 2014, 44, 76–93. [Google Scholar] [CrossRef] [PubMed]

- Rapoport, A.; Chammah, A.M.; Orwant, C.J. Prisoner’s Dilemma: A Study in Conflict and Cooperation; University of Michigan Press: Ann Arbor, MI, USA, 1965; Volume 165. [Google Scholar]

- Axelrod, R. Effective choice in the prisoner’s dilemma. J. Confl. Resolut. 1980, 24, 3–25. [Google Scholar] [CrossRef]

- Babiloni, F.; Cincotti, F.; Mattia, D.; Mattiocco, M.; Fallani, F.D.V.; Tocci, A.; Bianchi, L.; Marciani, M.G.; Astolfi, L. Hypermethods for EEG hyperscanning. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 31 August–3 September 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 3666–3669. [Google Scholar]

- Yun, K.; Chung, D.; Jeong, J. Emotional interactions in human decision making using EEG hyperscanning. In Proceedings of the International Conference of Cognitive Science, Seoul, Korea, 27–29 July 2008; p. 4. [Google Scholar]

- Yun, K.; Watanabe, K.; Shimojo, S. Interpersonal body and neural synchronization as a marker of implicit social interaction. Sci. Rep. 2012, 2, 959. [Google Scholar] [CrossRef] [PubMed]

- Delaherche, E.; Dumas, G.; Nadel, J.; Chetouani, M. Automatic measure of imitation during social interaction: A behavioral and hyperscanning-EEG benchmark. Pattern Recognit. Lett. 2015, 66, 118–126. [Google Scholar] [CrossRef]

- Sinha, N.; Maszczyk, T.; Wanxuan, Z.; Tan, J.; Dauwels, J. EEG hyperscanning study of inter-brain synchrony during cooperative and competitive interaction. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 4813–4818.

- Toppi, J.; Borghini, G.; Petti, M.; He, E.J.; De Giusti, V.; He, B.; Astolfi, L.; Babiloni, F. Investigating cooperative behavior in ecological settings: An EEG hyperscanning study. PLoS ONE 2016, 11, e0154236.

- Dikker, S.; Wan, L.; Davidesco, I.; Kaggen, L.; Oostrik, M.; McClintock, J.; Rowland, J.; Michalareas, G.; Van Bavel, J.J.; Ding, M.; et al. Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Curr. Biol. 2017, 27, 1375–1380.

- Pérez, A.; Carreiras, M.; Duñabeitia, J.A. Brain-to-brain entrainment: EEG interbrain synchronization while speaking and listening. Sci. Rep. 2017, 7, 1–12.

- Sciaraffa, N.; Borghini, G.; Aricò, P.; Di Flumeri, G.; Colosimo, A.; Bezerianos, A.; Thakor, N.V.; Babiloni, F. Brain interaction during cooperation: Evaluating local properties of multiple-brain network. Brain Sci. 2017, 7, 90.

- Szymanski, C.; Pesquita, A.; Brennan, A.A.; Perdikis, D.; Enns, J.T.; Brick, T.R.; Müller, V.; Lindenberger, U. Teams on the same wavelength perform better: Inter-brain phase synchronization constitutes a neural substrate for social facilitation. Neuroimage 2017, 152, 425–436.

- Zhang, J.; Zhou, Z. Multiple Human EEG Synchronous Analysis in Group Interaction-Prediction Model for Group Involvement and Individual Leadership. In International Conference on Augmented Cognition; Springer: Cham, Switzerland, 2017; pp. 99–108.

- Ciaramidaro, A.; Toppi, J.; Casper, C.; Freitag, C.; Siniatchkin, M.; Astolfi, L. Multiple-brain connectivity during third party punishment: An EEG hyperscanning study. Sci. Rep. 2018, 8, 1–13.

- Saito, D.N.; Tanabe, H.C.; Izuma, K.; Hayashi, M.J.; Morito, Y.; Komeda, H.; Uchiyama, H.; Kosaka, H.; Okazawa, H.; Fujibayashi, Y.; et al. “Stay tuned”: Inter-individual neural synchronization during mutual gaze and joint attention. Front. Integr. Neurosci. 2010, 4, 127.

- Stephens, G.J.; Silbert, L.J.; Hasson, U. Speaker–listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. USA 2010, 107, 14425–14430.

- Dikker, S.; Silbert, L.J.; Hasson, U.; Zevin, J.D. On the same wavelength: Predictable language enhances speaker–listener brain-to-brain synchrony in posterior superior temporal gyrus. J. Neurosci. 2014, 34, 6267–6272.

- Koike, T.; Tanabe, H.C.; Okazaki, S.; Nakagawa, E.; Sasaki, A.T.; Shimada, K.; Sugawara, S.K.; Takahashi, H.K.; Yoshihara, K.; Bosch-Bayard, J.; et al. Neural substrates of shared attention as social memory: A hyperscanning functional magnetic resonance imaging study. Neuroimage 2016, 125, 401–412.

- Nozawa, T.; Sasaki, Y.; Sakaki, K.; Yokoyama, R.; Kawashima, R. Interpersonal frontopolar neural synchronization in group communication: An exploration toward fNIRS hyperscanning of natural interactions. Neuroimage 2016, 133, 484–497.

- Tang, H.; Mai, X.; Wang, S.; Zhu, C.; Krueger, F.; Liu, C. Interpersonal brain synchronization in the right temporo-parietal junction during face-to-face economic exchange. Soc. Cogn. Affect. Neurosci. 2016, 11, 23–32.

- Liu, T.; Pelowski, M. Clarifying the interaction types in two-person neuroscience research. Front. Hum. Neurosci. 2014, 8, 276.

- Acquadro, M.A.; Congedo, M.; De Ridder, D. Music performance as an experimental approach to hyperscanning studies. Front. Hum. Neurosci. 2016, 10, 242.

- Watson, D.; Clark, L.A. The PANAS-X: Manual for the Positive and Negative Affect Schedule-Expanded Form. Available online: https://ir.uiowa.edu/cgi/viewcontent.cgi?article=1011&context=psychology_pubs (accessed on 23 November 2020).

- Bradley, M.M.; Lang, P.J. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 1994, 25, 49–59.

- Burgess, A.P. On the interpretation of synchronization in EEG hyperscanning studies: A cautionary note. Front. Hum. Neurosci. 2013, 7, 881.

- Baccalá, L.A.; Sameshima, K. Partial directed coherence: A new concept in neural structure determination. Biol. Cybern. 2001, 84, 463–474.

- Lachaux, J.P.; Rodriguez, E.; Martinerie, J.; Varela, F.J. Measuring phase synchrony in brain signals. Hum. Brain Mapp. 1999, 8, 194–208.

- Jammalamadaka, S.R.; Sengupta, A. Topics in Circular Statistics; World Scientific: Singapore, 2001; Volume 5.

- Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating mutual information. Phys. Rev. E 2004, 69, 066138.

- Tognoli, E.; Lagarde, J.; DeGuzman, G.C.; Kelso, J.A.S. The phi complex as a neuromarker of human social coordination. Proc. Natl. Acad. Sci. USA 2007, 104, 8190–8195.

- Mu, Y.; Han, S.; Gelfand, M.J. The role of gamma interbrain synchrony in social coordination when humans face territorial threats. Soc. Cogn. Affect. Neurosci. 2017, 12, 1614–1623.

- Kawasaki, M.; Kitajo, K.; Yamaguchi, Y. Sensory-motor synchronization in the brain corresponds to behavioral synchronization between individuals. Neuropsychologia 2018, 119, 59–67.

- Konvalinka, I.; Bauer, M.; Stahlhut, C.; Hansen, L.K.; Roepstorff, A.; Frith, C.D. Frontal alpha oscillations distinguish leaders from followers: Multivariate decoding of mutually interacting brains. Neuroimage 2014, 94, 79–88.

- Ménoret, M.; Varnet, L.; Fargier, R.; Cheylus, A.; Curie, A.; des Portes, V.; Nazir, T.A.; Paulignan, Y. Neural correlates of non-verbal social interactions: A dual-EEG study. Neuropsychologia 2014, 55, 85–97.

- Reindl, V.; Gerloff, C.; Scharke, W.; Konrad, K. Brain-to-brain synchrony in parent-child dyads and the relationship with emotion regulation revealed by fNIRS-based hyperscanning. NeuroImage 2018, 178, 493–502.

- Cui, X.; Bryant, D.M.; Reiss, A.L. NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. NeuroImage 2012, 59, 2430–2437.

- Pan, Y.; Cheng, X.; Zhang, Z.; Li, X.; Hu, Y. Cooperation in lovers: An fNIRS-based hyperscanning study. Hum. Brain Mapp. 2017, 38, 831–841.

- Holper, L.; Scholkmann, F.; Wolf, M. Between-brain connectivity during imitation measured by fNIRS. NeuroImage 2012, 63, 212–222.

- Hirsch, J.; Zhang, X.; Noah, J.A.; Ono, Y. Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. NeuroImage 2017, 157, 314–330.

- Witmer, B.G.; Singer, M.J. Measuring presence in virtual environments: A presence questionnaire. Presence 1998, 7, 225–240.

- De Kort, Y.A.; IJsselsteijn, W.A.; Poels, K. Digital games as social presence technology: Development of the Social Presence in Gaming Questionnaire (SPGQ). In Proceedings of PRESENCE 2007; pp. 195–203.

- Gupta, K.; Lee, G.A.; Billinghurst, M. Do you see what I see? The effect of gaze tracking on task space remote collaboration. IEEE Trans. Vis. Comput. Graph. 2016, 22, 2413–2422.

- Kim, S.; Lee, G.; Sakata, N.; Billinghurst, M. Improving co-presence with augmented visual communication cues for sharing experience through video conference. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 83–92.

- Singer, T.; Lamm, C. The social neuroscience of empathy. Ann. N. Y. Acad. Sci. 2009, 1156, 81–96.

- Hu, Y.; Pan, Y.; Shi, X.; Cai, Q.; Li, X.; Cheng, X. Inter-brain synchrony and cooperation context in interactive decision making. Biol. Psychol. 2018, 133, 54–62.

- Govern, J.M.; Marsch, L.A. Development and validation of the situational self-awareness scale. Conscious. Cogn. 2001, 10, 366–378.

- Van Bel, D.T.; Smolders, K.; IJsselsteijn, W.A.; de Kort, Y. Social connectedness: Concept and measurement. Intell. Environ. 2009, 2, 67–74.

- Yarosh, S.; Markopoulos, P.; Abowd, G.D. Towards a questionnaire for measuring affective benefits and costs of communication technologies. In Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work & Social Computing, Baltimore, MD, USA, 15–19 February 2014; pp. 84–96.

- Ens, B.; Lanir, J.; Tang, A.; Bateman, S.; Lee, G.; Piumsomboon, T.; Billinghurst, M. Revisiting collaboration through mixed reality: The evolution of groupware. Int. J. Hum. Comput. Stud. 2019, 131, 81–98.

- Klinger, E.; Bouchard, S.; Légeron, P.; Roy, S.; Lauer, F.; Chemin, I.; Nugues, P. Virtual reality therapy versus cognitive behavior therapy for social phobia: A preliminary controlled study. Cyberpsychol. Behav. 2005, 8, 76–88.

- Matamala-Gomez, M.; Donegan, T.; Bottiroli, S.; Sandrini, G.; Sanchez-Vives, M.V.; Tassorelli, C. Immersive virtual reality and virtual embodiment for pain relief. Front. Hum. Neurosci. 2019, 13, 279.

- Joda, T.; Gallucci, G.; Wismeijer, D.; Zitzmann, N. Augmented and virtual reality in dental medicine: A systematic review. Comput. Biol. Med. 2019, 108, 93–100.

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. From BIM to extended reality in AEC industry. Autom. Constr. 2020, 116, 103254.

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. BIM/MR-Lean Construction Project Delivery Management System. In Proceedings of the 2019 IEEE Technology & Engineering Management Conference (TEMSCON), Atlanta, GA, USA, 12–14 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. Virtual reality for design and construction education environment. In AEI 2019: Integrated Building Solutions—The National Agenda; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 193–203.

- Masai, K.; Kunze, K.; Sugimoto, M.; Billinghurst, M. Empathy Glasses. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, CHI EA’16, San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1257–1263.

- Dey, A.; Piumsomboon, T.; Lee, Y.; Billinghurst, M. Effects of Sharing Physiological States of Players in a Collaborative Virtual Reality Gameplay. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, CHI’17, Denver, CO, USA, 6–11 May 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 4045–4056.

- Dey, A.; Chen, H.; Zhuang, C.; Billinghurst, M.; Lindeman, R.W. Effects of Sharing Real-Time Multi-Sensory Heart Rate Feedback in Different Immersive Collaborative Virtual Environments. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; pp. 165–173.

- Dey, A.; Chen, H.; Hayati, A.; Billinghurst, M.; Lindeman, R.W. Sharing Manipulated Heart Rate Feedback in Collaborative Virtual Environments. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 14–18 October 2019; pp. 248–257.

- Dey, A.; Chatburn, A.; Billinghurst, M. Exploration of an EEG-Based Cognitively Adaptive Training System in Virtual Reality. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 220–226.

- Salminen, M.; Järvelä, S.; Ruonala, A.; Timonen, J.; Mannermaa, K.; Ravaja, N.; Jacucci, G. Bio-adaptive social VR to evoke affective interdependence: DYNECOM. In Proceedings of the 23rd International Conference on Intelligent User Interfaces, Tokyo, Japan, 7–11 March 2018; pp. 73–77.

- Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Scilingo, E.P.; Alcañiz, M.; Valenza, G. Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors. Sci. Rep. 2018, 8, 1–15.

- Jantz, J.; Molnar, A.; Alcaide, R. A brain-computer interface for extended reality interfaces. In ACM SIGGRAPH 2017 VR Village; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–2.

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 1990.

- Shehata, M.; Cheng, M.; Leung, A.; Tsuchiya, N.; Wu, D.A.; Tseng, C.H.; Nakauchi, S.; Shimojo, S. Team Flow Is a Unique Brain State Associated with Enhanced Information Integration and Neural Synchrony. 2020. Available online: https://authors.library.caltech.edu/104079/ (accessed on 23 November 2020).

- Nakamura, J.; Csikszentmihalyi, M. The Concept of Flow. In Flow and the Foundations of Positive Psychology; Springer: Dordrecht, The Netherlands, 2014; pp. 239–263.

- Bohil, C.J.; Alicea, B.; Biocca, F.A. Virtual reality in neuroscience research and therapy. Nat. Rev. Neurosci. 2011, 12, 752–762.

- Lotte, F.; Faller, J.; Guger, C.; Renard, Y.; Pfurtscheller, G.; Lécuyer, A.; Leeb, R. Combining BCI with virtual reality: Towards new applications and improved BCI. In Towards Practical Brain-Computer Interfaces; Springer: Berlin/Heidelberg, Germany, 2012; pp. 197–220.

- Lenhardt, A.; Ritter, H. An augmented-reality based brain-computer interface for robot control. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2010; pp. 58–65.

- Kerous, B.; Liarokapis, F. BrainChat - A Collaborative Augmented Reality Brain Interface for Message Communication. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 279–283.

- Vourvopoulos, A.; Liarokapis, F. Evaluation of commercial brain–computer interfaces in real and virtual world environment: A pilot study. Comput. Electr. Eng. 2014, 40, 714–729.

- Chin, Z.Y.; Ang, K.K.; Wang, C.; Guan, C. Online performance evaluation of motor imagery BCI with augmented-reality virtual hand feedback. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 3341–3344.

- Naves, E.L.; Bastos, T.F.; Bourhis, G.; Silva, Y.M.L.R.; Silva, V.J.; Lucena, V.F. Virtual and augmented reality environment for remote training of wheelchairs users: Social, mobile, and wearable technologies applied to rehabilitation. In Proceedings of the 2016 IEEE 18th International Conference on e-Health Networking, Applications and Services (Healthcom), Munich, Germany, 14–16 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–4.

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

- Nijboer, F.; Sellers, E.; Mellinger, J.; Jordan, M.A.; Matuz, T.; Furdea, A.; Halder, S.; Mochty, U.; Krusienski, D.; Vaughan, T.; et al. A P300-based brain–computer interface for people with amyotrophic lateral sclerosis. Clin. Neurophysiol. 2008, 119, 1909–1916.

- Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523.

- Pritchard, W.S. Psychophysiology of P300. Psychol. Bull. 1981, 89, 506.

- Fabiani, M.; Gratton, G.; Karis, D.; Donchin, E. Definition, identification, and reliability of measurement of the P300 component of the event-related brain potential. Adv. Psychophysiol. 1987, 2, 78.