This section reports the results of the experimental study in two sub-sections: (1) performance results, which include a statistical analysis comparing how the treatment group performed on the tasks relative to the control group; and (2) response to VINCENT, which covers the participants’ feelings towards completing the tasks. All participants’ comments reported in this section are verbatim.

To statistically evaluate the quantitative results, we used two tests: Mann–Whitney U and Chi-square. Mann–Whitney U tests compare differences between ordinal/continuous variables of two independent groups that have non-normally distributed data. For this study, we used Mann–Whitney U tests to examine whether there were significant differences between the two groups with regard to the number of completed tasks, the performance scores on the tasks, and the perceived easiness of and confidence in performing the tasks. Chi-square tests, on the other hand, compare the distribution of nominal variables for independent groups. For this study, we used Chi-square tests to examine whether there were significant differences between the two groups with regard to tasks/subtasks that had binary (right or wrong) results. It is important to note that not every participant was able to complete all 10 tasks. Therefore, the results tables report the sample size for each task, reflecting how many of the 17 participants in each group completed it.
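To make the two tests concrete, the following is a minimal sketch rather than the analysis code used in the study. It assumes a normal approximation with tie correction (and no continuity correction) for the Mann–Whitney U test, and a Pearson Chi-square test without continuity correction on a 2×2 table. The function names and all numbers are hypothetical and are not the study's data.

```python
import math
from collections import Counter
from itertools import product


def mann_whitney_u(x, y):
    """Return (U for x, two-sided p) via the normal approximation with tie correction."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # U counts the pairs in which the x value exceeds the y value; ties count as half.
    u = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a, b in product(x, y))
    # Tied values shrink the variance of U under the null hypothesis.
    tie_term = sum(t**3 - t for t in Counter(list(x) + list(y)).values())
    variance = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return u, 1.0  # all observations identical: no evidence of a difference
    z = (u - n1 * n2 / 2.0) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail probability
    return u, p


def chi_square_2x2(table):
    """Return (chi-square statistic, p) for a 2x2 table of counts (1 degree of freedom)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square tail equals a two-sided normal tail.
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p


# Hypothetical numbers of completed tasks (out of 10) for two small groups.
treatment_completed = [9, 10, 8, 9, 10, 9]
control_completed = [6, 7, 5, 8, 7, 6]
u_stat, u_p = mann_whitney_u(treatment_completed, control_completed)
print(f"Mann-Whitney U = {u_stat}, p = {u_p:.4f}")

# Hypothetical right/wrong counts on one binary task: rows are groups,
# columns are (correct, incorrect).
binary_counts = [[9, 1], [4, 6]]
chi2_stat, chi2_p = chi_square_2x2(binary_counts)
print(f"Chi-square = {chi2_stat:.3f}, p = {chi2_p:.4f}")
```

For small samples, or when exact p-values matter, an established statistics package would be the safer choice; the approximations here are only meant to show what each test compares.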

4.1. Performance Results

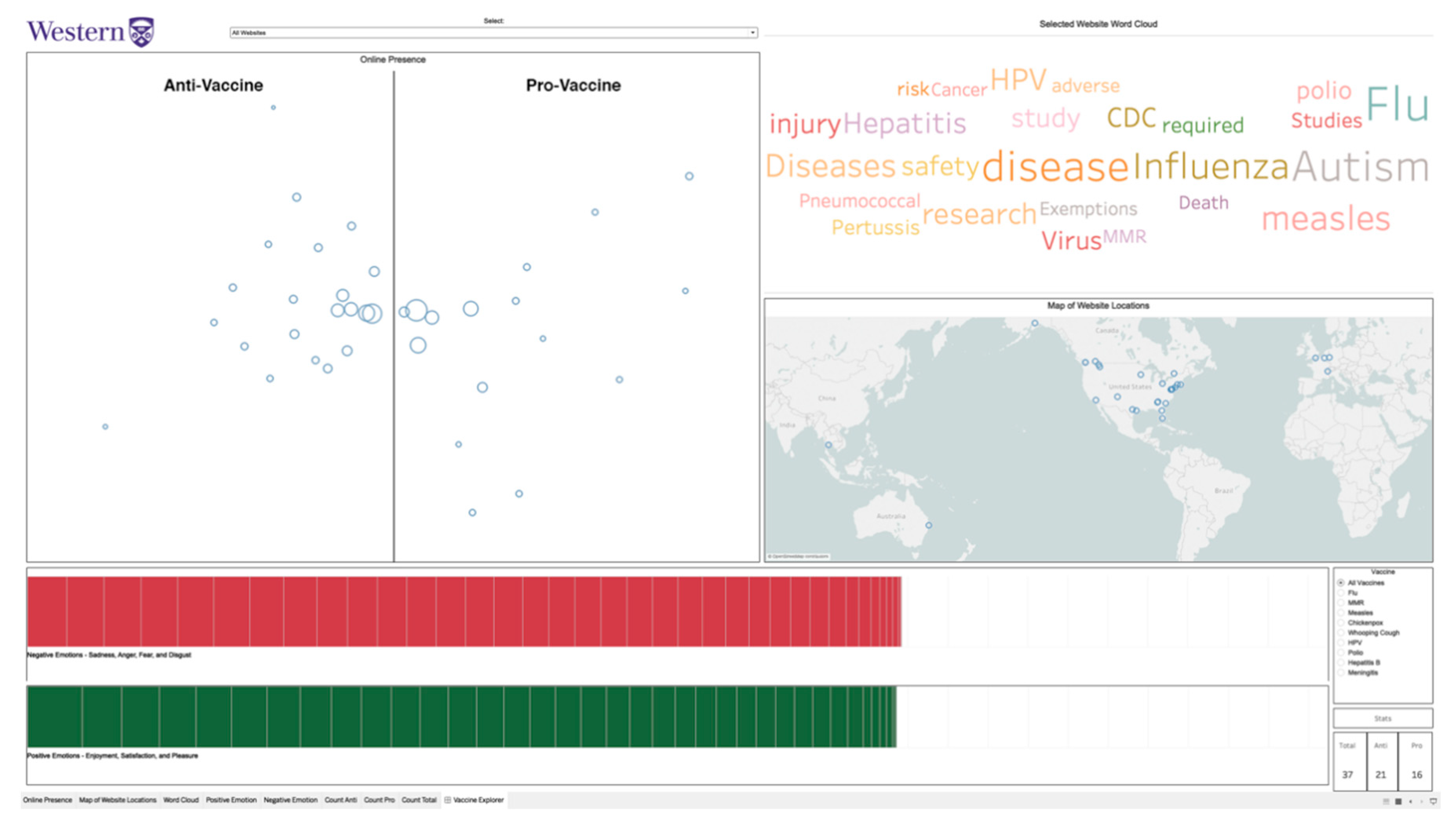

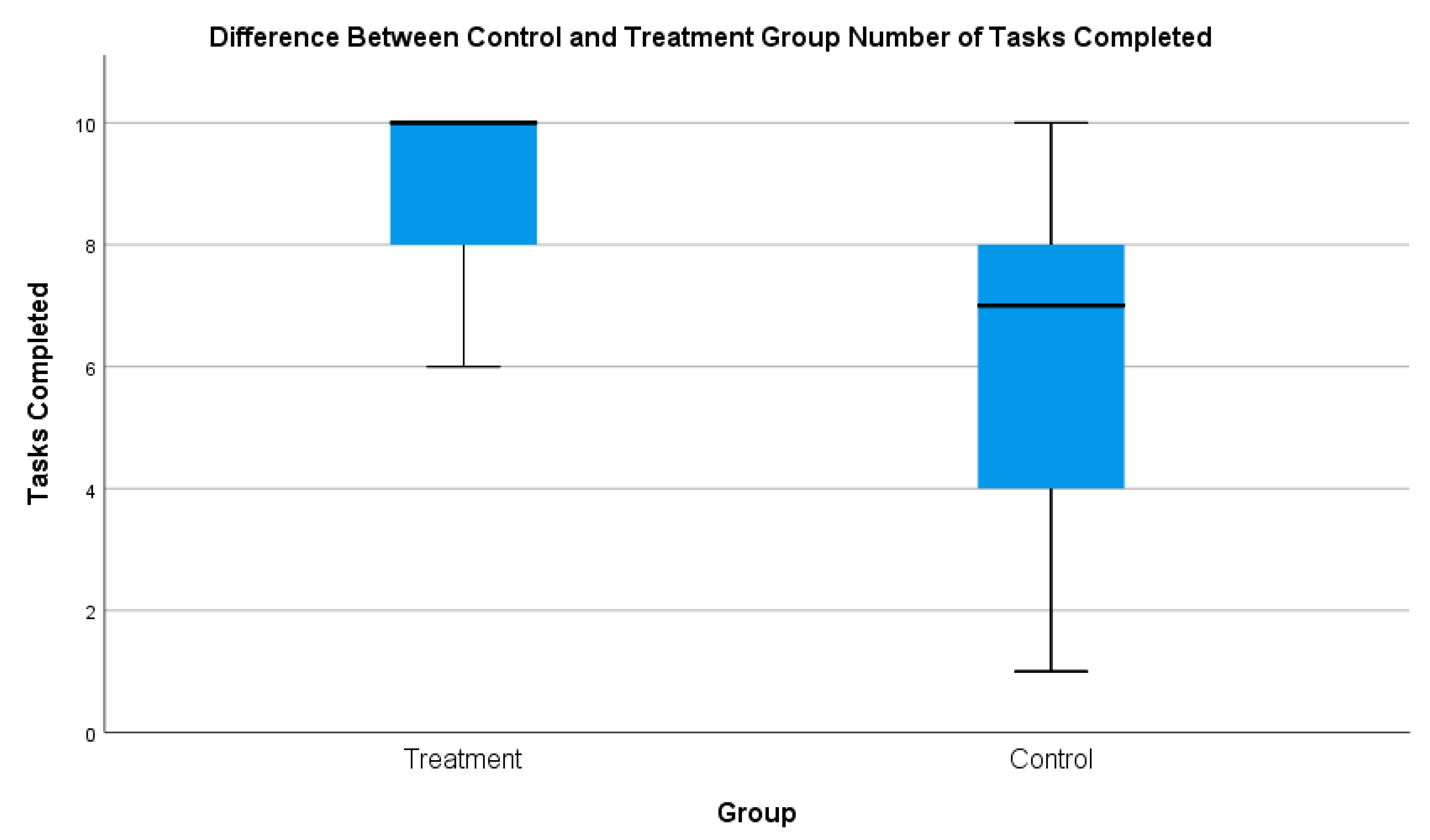

The control group was included to determine by comparison whether VINCENT influenced participants’ ability to investigate the online vaccine debate. With regard to completing the tasks, at a descriptive level, the treatment group was able to complete far more than the control group (see Figure 2). The mean number of completed tasks for the treatment group was 8.9/10, while for the control group it was 6.7/10.

With regard to the average score (for only the completed tasks), at a descriptive level, the treatment group greatly outperformed the control group (see Figure 3). Every participant in the treatment group outperformed every participant in the control group.

When the results of the two groups were compared using the Mann–Whitney U test, a significant difference was observed. Table 1 presents the overall statistical analysis of the two groups. Overall, the treatment group completed significantly more tasks and, on the tasks they did complete, was significantly more effective than the control group.

In Section 4.1.1 and Section 4.1.2, we discuss the results from the webometrics-based tasks, which helped users assess the websites’ online presence (Table 2) and geographic locations (Table 3). Tasks that utilized primarily online presence included Tasks 1, 2, and 4, while tasks that utilized primarily geographic locations included Tasks 3 and 10. Then, in Section 4.1.3 and Section 4.1.4, we discuss the results from the NLP-based tasks, which helped users assess websites’ focus (Table 4) and emotion in the website text when discussing specific vaccines or vaccines in general (Table 5). Tasks that utilized primarily focus included Tasks 5 and 6, while tasks that utilized primarily emotion included Tasks 7–9.

4.2. Response to VINCENT

Overall, the treatment group responded much more positively to the tasks than the control group. At a descriptive level, the treatment group found the tasks much easier to complete (see Figure 4). The median response for how easy participants found the tasks was easy for the treatment group and somewhat difficult for the control group.

As well, at a descriptive level, the treatment group was much more confident in their responses (see Figure 5). The majority of responses from the treatment group ranged from extremely confident to somewhat confident, while for the control group, the majority of responses were somewhat confident to somewhat not confident.
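Because ease and confidence ratings are ordinal, they are summarized with medians rather than means. The following is a minimal sketch of how such a median could be computed so that the result is always an actual scale label. The seven-point `EASE_SCALE` and the sample responses are assumptions made for illustration; they are not the study's instrument or data.

```python
from statistics import median_low

# Hypothetical ordinal scale, ordered from hardest to easiest (an assumption).
EASE_SCALE = [
    "very difficult",
    "difficult",
    "somewhat difficult",
    "neutral",
    "somewhat easy",
    "easy",
    "very easy",
]


def ordinal_median(responses, scale):
    """Return the median label of ordinal responses.

    Labels are mapped to their rank on the scale. median_low guarantees the
    result is an observed rank (never a value halfway between two labels).
    """
    ranks = [scale.index(response) for response in responses]
    return scale[median_low(ranks)]


# Hypothetical responses from five participants.
sample_responses = ["easy", "very easy", "somewhat easy", "easy", "neutral"]
print(ordinal_median(sample_responses, EASE_SCALE))
```

Using the lower of the two middle values for even-sized samples is one convention among several; reporting both middle labels is another reasonable choice.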

Integrating the webometrics and NLP components made sense to the treatment group, as they responded that they found it easy to connect the information across multiple visualizations. This further indicates that the system was usable as a whole, and not just with regard to its individual components, as will be described subsequently.

The treatment group found most of the tasks to be straightforward with the use of VINCENT. They had to identify what the task was asking them to find, search the VAS for the corresponding information, analyze the information (if required), and develop/identify the appropriate response. Tasks became challenging when the system did not match participants’ mental model of how the interaction should work, or when the task required them to go beyond simply finding the information in the system and evaluate the information presented to them in further detail.

A comparison of the two groups’ responses to the tasks revealed a significant difference (Table 6). The treatment group found the tasks to be much easier to complete and was much more confident in their responses than the control group.

Two main observations from the interview sessions helped explain, in a general sense, these responses. First, the amount of time participants had to complete the tasks was an important factor. VINCENT helped the participants deal with the vast amount of information quickly. Using the system, the treatment group could easily and rapidly find the information they needed to complete the tasks, while the control group, by contrast, found it very time-consuming to go through the websites and get the information they needed.

Participant 8: “I didn’t have enough time … if it was 10 websites, obviously, I would have done way better than 37 websites. I would have been able to look over them all in depth. However, 37 websites were a lot and I had to look through every single one”

Participant 12: “I just found it pretty challenging overall just because the amount of websites I was given and the fact that I had to analyze them beyond just the first page or the domain”

Participant 2: “The tasks that required me to go through all the websites and determine are they pro-vaccine or anti-vaccine (among other things) … it was super hard.”

Second, VINCENT made it easier (and in some cases, possible) for participants to analyze and evaluate the information required to complete the tasks. The system offloaded much of the analysis and allowed the treatment group to visualize the data from the websites in ways that helped them to easily see patterns and make judgments about the data. One treatment group participant highlighted this sentiment.

Participant 9: “I was confident (in my responses) because … I would read the question and straight after I looked at the visualization … (and would) find what I was looking for. The terminology of the question was right there in the visualization. So, it was not like I had to do any further research. (The task) asked something, I clicked it, I hovered, I did some maneuvering around and navigating around the visualization and it was right there. Nothing was hidden, everything was just there.”

We will discuss the response to the various components of VINCENT in the following sub-sections. In Section 4.2.1 and Section 4.2.2, we discuss the user response to the system with regard to the webometrics-based tasks, which helped users assess websites’ online presence (Table 7) and geographic location (Table 8). These included Tasks 1, 2, 3, 4, and 10. In Section 4.2.3 and Section 4.2.4, we discuss the user response to the system with regard to the NLP-based tasks, which helped users assess websites’ focus (Table 9) and website text emotion when discussing specific vaccines or vaccines in general (Table 10). These included Tasks 5–9.

4.2.1. Online Presence

The treatment group found Task 1 to be significantly easier to complete than the control group. The treatment group quickly understood how to read the online presence map to get the information they needed. The control group did poorly on this task, with the majority responding incorrectly that the set of websites had more pro-vaccine than anti-vaccine websites. To complete this task, the control group had to find ways to investigate the set of websites quickly and effectively. The control group cited several reasons they struggled to do this, including that there were too many websites they had to assess, and they could not find appropriate identifying factors or indicators of what makes a website pro-vaccine or anti-vaccine.

Participant 8: “I would determine if they were pro- or not pro- by their layout or their about section … It was kind of hard to keep track of every website within the time frame.”

Participant 12: “The first task, I found pretty challenging because there were over 30 websites and the titles themselves, some of them didn’t really give away whether they were pro- or anti-vaccine. So, I had to basically click through all of them and then, even on the cover page, I was sometimes not even sure. Then so I would have to explore the website and that took a really long time.”

Participant 12: “Sometimes it was clear from the outside what the bias was, for instance the title of the webpage often communicated what the stance was, but that can be misleading. The quality of the webpage, a lot of the anti-vaccine websites looked like they were hastily put together whereas the pro-vaccine websites were usually government organizations and often times that was a hint, but ultimately it is the words that count.”

After seeing VINCENT, the control group discussed how the system would have helped them complete the task and saw how their original perceptions were inaccurate. As well, the treatment group discussed how they felt they would have fared on the task without the system. Some factors they observed that made the task more difficult for the control group included pre-existing biases, difficulty quickly judging websites, and the juxtaposition of the websites affecting their determination of a website’s stance (i.e., a very anti-vaccine website next to a somewhat anti-vaccine website made the latter appear less anti-vaccine).

Participant 8: “So, I thought (Australian Vaccination-Risks Network) was pro-vaccination but really it is anti-vaccination, I didn’t get the whole vibe or the whole message of it being anti-vaccination … I guess I just didn’t go as in depth as other websites … This system would have helped since it not only marked it as anti-vaccination but I could see (for example) what negative emotions it had”

Participant 20: “I don’t think I’d make as objective a decision (without the system) on which websites are anti- or pro- as with (the) system, but also going back to back one website may seem more pro- or anti-vaccine because it was just after another type of website. If I had looked at a really anti-vaccine website and then looked at another anti-vaccine website but it was more mild, I may have personally put it in pro-vaccine category because of my own personal experience.”

Participant 26: “(Without the tool I would look at) how I trusted the name of the website … I know what government websites would be called, I know I can trust them in general, and I would put it as a pro-vaccine website … whereas something like Vaxxter, I’d be instantly questionable and think it’s anti-vaccine … it’s not a real word, its playing on catchphrasiness and that is a common thing with dubious websites, but then there is one, at the same time, called GAVI vaccine alliance … which if you were to ask me right of the bat if its pro- or anti, I’d say its anti- … but … I actually found out its a pro- one, so (my assumptions) don’t (always) work.”

The treatment group also found Task 2 to be significantly easier than the control group did. The treatment group quickly understood that the size of the circles reflected the online presence of each website and was able to identify the two websites of interest quickly and easily. The control group struggled with the task and found it more difficult to complete because they did not identify successful ways to judge online presence quickly. They tended to depend on superficial aspects of the website in an attempt to make these determinations, including the look of the website, the content, or the amount of built-in interaction.

Participant 2: “Online presence for me was the quality of the content and representation … If I’m going through a website that has nothing in it and is just 1 page, that is for me, I don’t think that is going to have much attention or online presence than a website that has a blog and different authors write in it and it is, for example, interactive, you can go and comment and different posts etc. …”

Participant 8: “If I were a mother, I would choose websites that were the most family related. So, in that sense, I would choose those ones as the ones that had the strongest online presence”

The treatment group found Task 4 to be slightly easier than the control group did. However, no significant difference was observed between the two groups’ results. This was a task that required going beyond just finding information on VINCENT, requiring participants to compare and analyze the information. Some participants in the treatment group highlighted the reasons they felt the task was challenging even with VINCENT, ranging from uncertainty about how to read the online presence map to being distracted by the amount of information they needed to assess and report.

Participant 6: “The horizontal axis on the (online presence map) I roughly interpret as the farther left it is the more anti-vaccine it is, the farther right it is the more pro-vaccine it is … But I don’t know what the vertical axis is telling but it seems like it is a really useful amount of real estate. If it has the opportunity to tell me something, that’d be fantastic … Because I was putting myself in a mental state of ‘what does the vertical axis mean’, that took a lot of time for me to figure out what I thought was going on”

Participant 9: “I wish I could keep both Xs on the map to help with compare and contrast”

Participant 5: “I didn’t feel super confident about this because I think I went off in too much detail talking about all the differences and maybe the negative and positive emotions thing tripped me up…”

Participant 20: “Because there is so much information here, I wrote -as you can see here- a lot. And I feel like for me it was more difficult because I wanted to write more and there wasn’t enough time to do so.”

The control group found Task 4 easier to complete than they had found Tasks 1 or 2. An important reason they found it easier than the previous tasks was that, instead of looking through all of the websites, this task only required them to focus on and compare two websites at a time. In the eyes of the control group, this was much more manageable and gave them an opportunity to look more closely at the information they had to assess.

Participant 3: “To me it was an easy task to complete in terms of the other tasks because I was only comparing 2 websites at a time”

Participant 12: “With the specific websites, I was prompted to look at one aspect of them and since it was only about 2 at a time it was easier to remember what I looked at on the first website and then compare that to the next one versus (looking at all the website). I had already forgotten what I looked at 2 websites ago and since I wasn’t looking for anything specific it was sort of overall the feel of the website, that was a lot harder”

4.2.2. Geographic Locations

The treatment group found Task 3 significantly easier to complete than the control group. With VINCENT, participants understood how the map worked and how to locate the websites to uncover the information. Participants reinforced this finding in the interviews, explaining how the map made sense to them and that it was easy for them to see and evaluate the information.

Participant 26: “There are visual spaces in the app that are definitely more approachable … For example, the (geographic) map, most people have a mental model of how a map works, so they see the map and they see locations dotted on a map and they can easily approach this and get instant context”

Participant 3: “I would have (felt it was) the easiest and had the highest confidence (in my response with the system) because even just seeing here, you can see there are 6 countries and I would immediately be able to get the information I needed.”

The control group struggled to find the information they needed. A common strategy was to check whether the name of the website or the top-level domain (e.g., .uk, .au) gave any indication of the geographic location. One participant (Participant 2) had a computer science background and mentioned using these skills for this task, specifically using WHOIS to help locate the websites. But even this method was only somewhat effective, as they were limited in time and could only search the websites that they suspected of being located outside of North America.

Participant 2: “One task … (asked me to determine) which country is this website coming from. So, in order to do that, I looked up some of the websites from WHOIS. Some of the websites didn’t include their address or postal code in their about page or any other page, so I had to look up online to see from which country is this website coming from.”

Participant 8: “I looked at the URL. I think .com is North America, so there are some that are .eu or .uk so I thought those would be Europe our United Kingdom. So that is what I put as the answer … This question would have been easier (with the system) with the system because the map shows where the website is located.”

Participant 3: “For example, looking at the locations, the best I could do was try and look at the ending of the URL, the domain, and then go to that website and see if I could find out anything about where it was from.”
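The top-level-domain strategy described by the control group can be sketched as follows. This is an illustration of the heuristic only, not part of VINCENT; the function name, the small lookup table, and the example hostnames are hypothetical. The sketch also shows why the heuristic is limited: generic domains such as .com are not tied to any region, and a country-code domain does not guarantee where a website is actually located.

```python
from urllib.parse import urlparse

# Small illustrative subset of country-code top-level domains (hypothetical table).
CCTLD_TO_COUNTRY = {
    "uk": "United Kingdom",
    "au": "Australia",
    "ca": "Canada",
    "nz": "New Zealand",
}


def guess_country_from_url(url):
    """Guess a country from the URL's top-level domain, or return None.

    None means the domain carries no country hint (e.g., .com or .org),
    which is the common case and the main weakness of this heuristic.
    """
    if "//" not in url:
        url = "//" + url  # lets urlparse treat a bare hostname as the network location
    host = urlparse(url).hostname or ""
    tld = host.rsplit(".", 1)[-1].lower()
    return CCTLD_TO_COUNTRY.get(tld)


# Hypothetical URLs used only to exercise the heuristic.
for example_url in ["https://www.example.org.au/about", "example.co.uk", "https://example.com"]:
    print(example_url, "->", guess_country_from_url(example_url))
```

A WHOIS lookup, as used by Participant 2, can add registrant information where the domain alone is uninformative, but it requires a separate query per website.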

The treatment group also found Task 10 to be significantly easier to complete than the control group. In general, the treatment group seemed to understand what they were looking for and how to interact with the system to get that information. One aspect of this task that both the treatment and control groups had difficulty with was some of the geographic terminology used. “Midwestern USA” specifically seemed to cause confusion amongst participants, some of whom were unsure what area the term referred to. Participants said they would have benefited from having geographic regional labels added to the map to help them keep track of and identify the various regions.

Participant 20: “I would have kind of recognized western North America is here, eastern North America is here, Europe, but Midwestern USA, I don’t know what that means … If you had those questions and the labels were on the map (it would have helped)”

Participant 9: “I felt like the majority of the websites I was looking at fell in both (Midwest and Western USA) so I couldn’t specify which one”

One treatment group participant highlighted why VINCENT was useful for this task or any other task that required examining groups of websites by their geographic locations. With the system, the user can quickly put websites together based on geography and see if any relationships exist between this and vaccine position, focus, or emotions regarding vaccines.

Participant 6: “I think of geographical terms … so I want to know what these clusters of websites have in common because of their geographical proximities and simply by highlighting them there happens to be in the pacific northwest a strong anti-vaccine tendency at least from the sample we have available, which is interesting to me”