Explainable AI for Clinical Decision Support Systems: Literature Review, Key Gaps, and Research Synthesis

Abstract

1. Introduction

2. Background

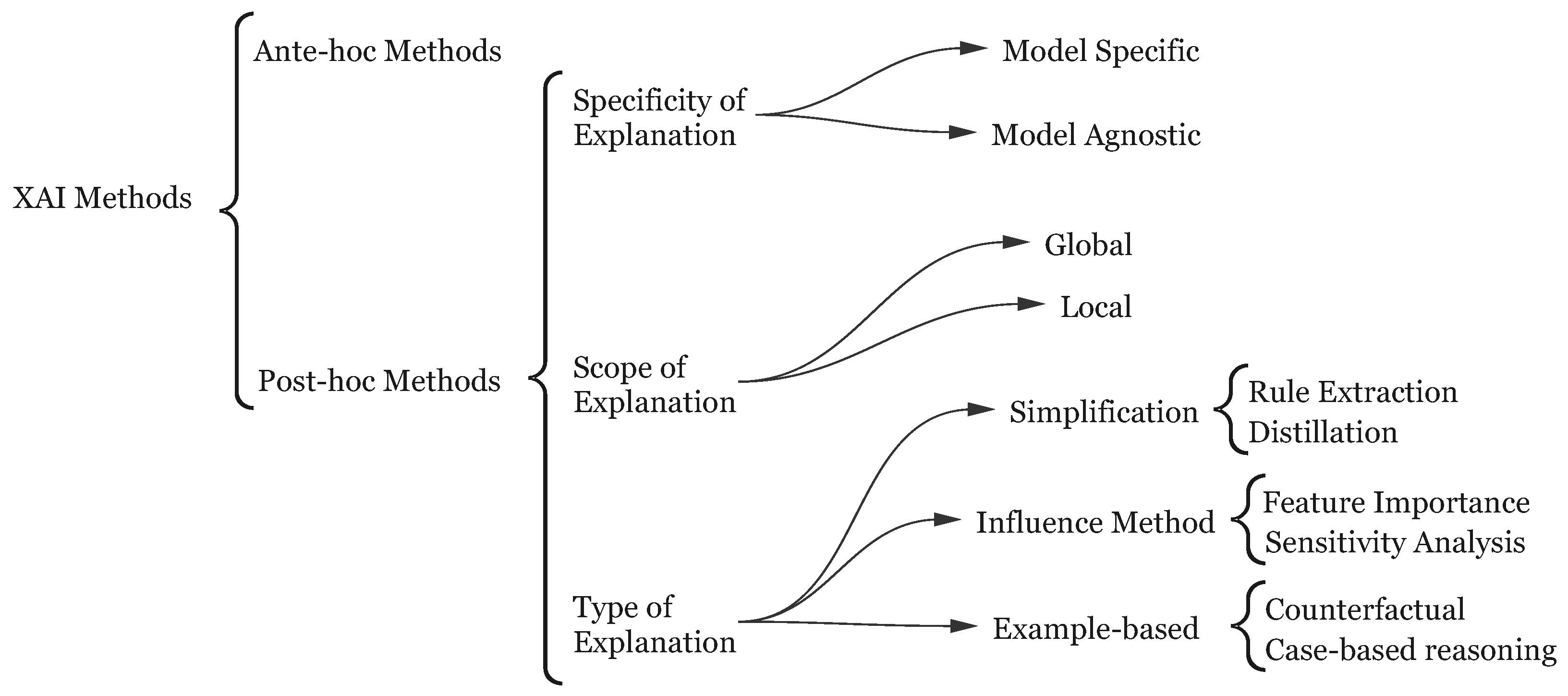

2.1. Explainable AI

2.2. Joint Cognitive Systems

3. Review Methodology

4. Literature Review: State of XAI in CDSS

4.1. Addressing Gaps in User-Centered XAI-CDSS Evaluation

4.2. Understanding Clinician Trust, Acceptance, and Interaction

4.3. Advancements in XAI Evaluation Methodologies

4.4. Frameworks for Responsible and Trustworthy XAI-CDSS

| Theme | Key Findings/Trends in Recent Literature | Representative Citations |
|---|---|---|
| 1. User-Centeredness and Contextual Needs Analysis | Persistent gap between technical XAI and clinical usability; lack of understanding/transparency hinders adoption; need for deep contextual understanding, workflow integration, and early user involvement (co-design); meaningful transparency beyond basic XAI required. | Ayorinde et al. [51], Turri et al. [57], Panigutti et al. [56], Nasarian et al. [72], Aziz et al. [55], Amann et al. [52], Pierce et al. [53] |
| 2. Clinician Trust, Acceptance, and Interaction | Trust is complex, multi-faceted (cognitive/affective), and inconsistently measured; XAI impact on trust is nuanced (depends on quality, type, and context); disconnect between stated trust and behavioral reliance as measured by weight of advice (WoA); nuanced interaction patterns (“Negotiation”); risks of over-reliance and under-reliance; trust calibration is the goal. | Rosenbacek et al. [59], Sivaraman et al. [62], Laxar et al. [61], Gomez et al. [60], Micocci et al. [58] |
| 3. Evaluation Methodologies | Critique of traditional metrics (accuracy and simple surveys); need for robust, behavioral, and user-centered evaluation; emergence of behavioral testing frameworks (e.g., Zeno); novel methods for scalable human assessment (e.g., games with a purpose, GWAPs); increased focus on evaluating the explanation itself (strategies, types, and formats). | Cabrera et al. [69], Morrison et al. [64], Rosenbacek et al. [59], Aziz et al. [55], Sivaraman et al. [62], Laxar et al. [61] |
| 4. Frameworks for Responsible and Trustworthy AI | Proliferation of frameworks guiding development (resilience and responsible collaboration); shared emphasis on user needs, trust, evaluation, and ethics; move towards principled, structured approaches beyond ad hoc XAI application. | Sáez et al. [71], Nasarian et al. [72], Panigutti et al. [56], Cabrera et al. [69] |

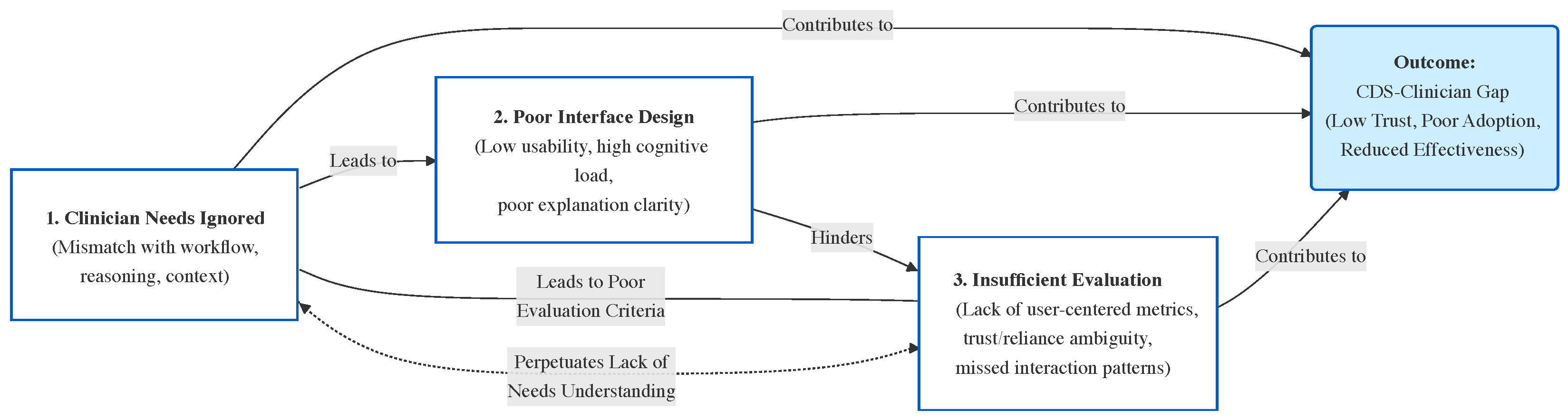

4.5. The CDS-Clinician Gap

- Clinicians’ needs and abilities: Our review (Section 4) indicates that existing XAI efforts in CDSS rarely select explanation methods based on the characteristics of end-users. This is a missed opportunity, because different XAI methods could be tailored to different users’ reasoning processes and preferences. The main goal of XAI is to explain the model to users, and users are unlikely to trust a model whose outputs they do not understand. However, studies show that many XAI methods are designed according to developers’ intuition of what constitutes a ‘good’ explanation rather than actual insight into users’ needs [73]. It is useful here to consider how explanations are defined in fields like philosophy and psychology: an explanation is often viewed as a conversation or interaction intended to transfer knowledge, which implies that the explainer must build on the receiver’s existing understanding. Individuals reason differently and perceive information in varied ways, so accommodating the user’s mode of reasoning is crucial to designing useful, effective, and practical XAI. Systems designed without regard for end-users risk being ones that clinicians do not want to use. Likewise, an explanation mechanism designed in isolation from the decisions it is meant to inform will not provide effective support. Clinical decision-making is highly information-intensive and, especially under difficult conditions, cognitively demanding. As discussed in the background, distributed cognition theory posits that cognitive activities involve both the mind and the external environment. Designing effective explanations therefore requires taking into account users’ reasoning processes and how they use external aids.

- Interface Design: A gap often exists between how information is represented by a CDSS and how humans conceptualize that information. Many CDSS interfaces fail to address this mismatch, leading to ineffective communication of information and a poor user experience. Most current interfaces rely on static representations of data (e.g., tables or fixed graphs), which force users to mentally bridge gaps in the presented information. This weak coupling between the system and user increases cognitive load, making it harder for clinicians to derive meaningful insights. Dynamic and interactive interfaces could help by allowing users to explore data in ways that align with their mental models and clinical tasks. However, such capabilities are largely underdeveloped in today’s CDSS. For example, a clinician trying to investigate the underlying drivers of a cluster of patients may struggle if the system only provides static visualizations that cannot be filtered or tailored, or if the clinician cannot incorporate additional contextual data. The lack of interactivity limits the clinician’s ability to iteratively refine their understanding or adapt the system’s outputs to their specific needs. The concept of coupling, that is, the degree of interconnection between the user and the interface, is particularly relevant here. Weak coupling, characterized by static and disconnected representations, forces clinicians to infer relationships or trends manually, increasing the likelihood of errors. Strong coupling, on the other hand, involves dynamic, reciprocal interactions that allow users to engage with the system more intuitively and effectively. Despite its importance, the idea of strengthening this user–interface coupling is rarely discussed or prioritized in current XAI-CDSS design. Additionally, the visual design of many CDSS interfaces fails to balance clarity and complexity. Overloaded interfaces with excessive information or poorly designed visualizations can overwhelm users, whereas overly simplified displays risk omitting critical details. These interface shortcomings further exacerbate the clinician–CDSS gap, reducing user trust and hindering adoption.

- Evaluation of XAI Methods: The evaluation of XAI methods within CDSS is another underdeveloped area. In many cases, it remains unknown to what degree the provided AI explanations are understandable or useful to end-users. There is a clear need to evaluate the interpretability and explainability of XAI methods to ensure their usability and effectiveness. Despite a growing body of XAI research, relatively few studies have focused on evaluating these explanation methods in practice or assessing their influence on decision-making. In our survey of the literature, only a handful of papers included formal user evaluations of their XAI components, and just one study explicitly investigated whether providing explanations actually helped users trust the system or use the model’s output in their decisions [68]. This highlights a pressing need for systematic evaluation to validate, compare, and quantify different explanation approaches in terms of user comprehension and decision impact [11]. Without such evaluation, developers may not know which explanations truly aid clinicians or how to improve them.

5. Proposed Framework

- User-Centered Design Approach: Engaging end-users (healthcare professionals such as doctors and nurses, as well as patients and other stakeholders when appropriate) is a cornerstone of the XAI development process. Early and continuous involvement of these users ensures the system aligns with their diverse needs, expectations, and cognitive abilities. Techniques like participatory design, structured interviews, and focus groups help reveal how users interact with CDSS and what they require from explanations. Healthcare providers vary in their expertise and familiarity with AI models. For example, technically proficient clinicians may prefer detailed, model-specific explanations (such as feature importance graphs or counterfactual examples), while others might find simplified visualizations like heatmaps or plain-language summaries more helpful. Liang and Sedig emphasize that interactive tools tailored to users’ cognitive processes can significantly improve reasoning and decision-making performance [74]. Patients, who typically lack technical expertise, might benefit from explanations that focus on the clinical implications of the system’s outputs, helping them understand how recommendations impact their care. By involving end-users from the beginning, developers can create XAI systems that foster trust, are usable, and fit into real-world workflows. This participatory approach boosts decision support, ensures explanations resonate with users, and ultimately improves adoption and patient care outcomes.

- Customizable Explanation Level: Offering explanations at varying levels of granularity is critical to address the diverse expertise and preferences of users. Non-experts (such as patients or clinicians not deeply familiar with AI) may benefit from simplified explanations that highlight key clinical takeaways, whereas experienced medical professionals often require more detailed insights to support their reasoning. For example, a CDSS designed for radiologists might include heatmaps on medical images to highlight regions of interest, directly tying the AI model’s output to specific visual evidence. In contrast, primary care physicians might prefer plain-language summaries emphasizing the most relevant factors influencing a model’s prediction (for instance, a risk score based on patient history). Liang and Sedig underscore the importance of adapting information to users’ cognitive styles in order to improve comprehension and reduce cognitive load during decision-making tasks [74]. Gadanidis et al. [75] similarly argue that enabling users to customize the level of detail in explanations not only enhances usability but also empowers them to engage with the system at a depth aligned with their individual needs and expertise, making explanations more actionable and meaningful. By tailoring the depth and complexity of explanations to each user, XAI systems can better support decision processes, ensure interpretability, and foster trust in AI-driven recommendations. This adaptability also makes the system more inclusive, effectively serving a broader range of stakeholders.

- User Profiling: Creating detailed user profiles based on factors like experience level, domain specialty, and preferred interaction style is vital for personalizing XAI explanations. Understanding users’ backgrounds and their familiarity with AI enables developers to ensure explanations align with their needs and cognitive preferences. For instance, novice users might benefit from intuitive, step-by-step explanations that draw attention to the key elements of a decision, whereas advanced users might prefer complex visualizations or interactive tools that allow deeper exploration and customization of information. Tailoring explanations to fit users’ mental models (their internal understanding of how a process works) is particularly important. For example, a clinician with a diagnostic reasoning mindset might prefer explanations structured around causal relationships and decision pathways, enabling them to map the CDSS’s reasoning to their own diagnostic process. In contrast, a clinician focused on patient monitoring might prioritize trend-based visualizations or alerts tied to threshold values. By considering such user profile factors, the system can present explanations in a form that each user finds intuitive and valuable.

- Interactivity: Facilitate immediate exploration of the model’s behavior using interactive visualization tools. Users should be able to perform ‘what-if’ analyses by adjusting input variables and observing how the model’s predictions change. For example, a clinician could modify patient parameters (like age or lab results) to see how the recommendation from the model would differ. Such hands-on capabilities give users valuable insight into the model’s reasoning and enable clinicians to validate or challenge recommendations through real-time experimentation—ultimately fostering greater trust.

- Multi-modal Explanations: Present information in multiple formats (for example, heatmaps, line graphs, bar charts, and text summaries) to convey different aspects of the model’s reasoning. Different modalities can cater to different user preferences and cognitive styles. For instance, one clinician might prefer a graphical visualization of feature importance, while another might find a textual explanation more accessible. Providing explanations in various formats ensures that a wider range of users can engage effectively with the XAI system.

- Interactive Explanations: Allow users to control and customize the explanation outputs. Users might adjust parameters of the explanation (for example, changing the threshold for what constitutes a ‘high’ feature importance) or explore alternative decision pathways suggested by the model. Enabling such user control fosters a sense of ownership of the AI-assisted decision process. For example, a clinician could interactively explore why the AI recommended a certain treatment by manipulating elements of a visualization; this deeper engagement can lead to more informed and confident use of the CDSS.

- User Satisfaction Surveys: User satisfaction is a key metric for evaluating XAI systems. Conduct surveys and interviews with end-users (clinicians and potentially patients for patient-facing tools) to gather feedback on the clarity, usefulness, and trustworthiness of the AI’s explanations. Regularly collecting this feedback ensures the system evolves in response to user expectations and helps pinpoint areas for improvement. These iterative feedback loops are especially valuable; over successive versions, explanations can be refined to better meet user needs, thereby enhancing usability and adoption.

- Diagnostic Accuracy with Explanations: Perform comparative assessments to measure clinicians’ diagnostic accuracy or decision outcomes when using the CDSS with versus without the AI explanations. This helps determine whether the presence of explanations positively influences decision-making processes and clinical outcomes. Importantly, it can also verify that adding explanations does not inadvertently degrade the model’s predictive performance or the clinician’s speed and accuracy. By linking explanations to tangible improvements (such as increased diagnostic confidence, better decision accuracy, or reduced errors), this evaluation underscores the practical benefits of integrating XAI into healthcare workflows.

- Comprehensibility Metrics: Comprehensibility is critical; explanations must be understandable to be actionable. Use quantifiable metrics to assess whether explanations are accessible to their intended audience. For example, apply readability scores like Flesch–Kincaid [62] to textual explanations to ensure they are pitched at an appropriate level (perhaps simpler for patients, more technical for expert users). For visual explanations, one might use measures of complexity or conduct comprehension quizzes. Ensuring that explanations are easily understood by users with varying expertise levels fosters effective communication between the XAI system and its users, promoting trust and adoption.

- Uncertainty Quantification: Incorporate evaluation of how well the system communicates uncertainty in its predictions. It is essential to inform users about the reliability or confidence of the AI’s recommendations. Provide metrics or visual cues indicating uncertainty (for instance, confidence intervals or probability estimates) and then assess whether users appropriately factor this uncertainty into their decisions. Highlighting low-confidence predictions or cases where the model is extrapolating beyond the data can prompt users to be cautious or seek additional information. Transparent communication of uncertainty can improve decision-making and help prevent over-reliance on the AI.

- Bias and Fairness Analysis: Evaluate the system for biases and fairness to ensure equitable outcomes across diverse patient groups. Assess both the training data and model outputs for any bias that could disproportionately affect certain populations (e.g., under-performance on minority groups). Strategies to mitigate identified biases might include diversifying training data or adjusting the algorithm. Regularly auditing the system’s recommendations for fairness and documenting these evaluations helps prevent discriminatory practices and promotes impartial decision support.

- Continuous Improvement and User Feedback: Integrate continuous feedback mechanisms so the XAI system can adapt over time to changing clinical contexts and user needs. For example, implement a way for clinicians to flag when an explanation was not helpful or when the system made an unexpected suggestion. Such feedback can drive updates: improving the model, refining explanations, or adjusting the interface. This ensures the system remains up-to-date with medical knowledge and evolving user expectations. Regular updates based on real-world feedback not only improve the system’s utility but also demonstrate a commitment to transparency and accountability, further reinforcing user trust.
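The ‘what-if’ analysis described under Interactivity can be illustrated with a small sketch. It assumes a toy logistic risk model; the coefficients, feature names, and the helpers `predict_risk` and `what_if` are hypothetical and are not drawn from any system discussed in this review.

```python
import math

# Hypothetical coefficients for a toy logistic risk model (illustrative only).
COEFFICIENTS = {"age": 0.03, "lactate": 0.45, "heart_rate": 0.01}
INTERCEPT = -5.0


def predict_risk(patient: dict) -> float:
    """Return the toy model's risk estimate (between 0 and 1) for one patient."""
    z = INTERCEPT + sum(COEFFICIENTS[name] * patient[name] for name in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-z))


def what_if(patient: dict, feature: str, new_value: float) -> dict:
    """Change one input, re-run the model, and report how the prediction moves."""
    modified = {**patient, feature: new_value}  # copy, so the original record is untouched
    baseline = predict_risk(patient)
    updated = predict_risk(modified)
    return {
        "feature": feature,
        "baseline": baseline,
        "updated": updated,
        "change": updated - baseline,
    }


patient = {"age": 64, "lactate": 2.1, "heart_rate": 98}
result = what_if(patient, "lactate", 4.0)
print(f"Risk {result['baseline']:.3f} -> {result['updated']:.3f} "
      f"(change {result['change']:+.3f})")
```

In an interactive interface, the same comparison would typically be recomputed each time the clinician moves a slider or edits a field, so that the effect of each change is visible immediately.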
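The readability check mentioned under Comprehensibility Metrics can be sketched as follows. The Flesch–Kincaid grade level is computed as 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The syllable counter here is a rough vowel-group heuristic introduced only for illustration, so the scores are approximate, and both example sentences are invented.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count runs of vowels (a heuristic, not a dictionary lookup)."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Treat a trailing silent 'e' as non-syllabic when the word has other vowel groups.
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate Flesch-Kincaid grade level of an English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Two invented explanations of the same prediction, pitched at different audiences.
plain = "Your risk is high. We suggest a blood test."
technical = ("Elevated serum lactate concentration substantially increased "
             "the predicted probability of septic deterioration.")

print(f"Plain-language grade: {flesch_kincaid_grade(plain):.1f}")
print(f"Technical grade:      {flesch_kincaid_grade(technical):.1f}")
```

A score of this kind only reflects sentence and word length; it says nothing about whether the clinical content is correct or relevant, so it complements rather than replaces comprehension testing with users.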
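The cues described under Uncertainty Quantification (low-confidence predictions and extrapolation beyond the data) can be sketched as a simple check. This is a minimal illustration under stated assumptions: the training ranges, the probability band treated as low confidence, and the `assess_uncertainty` helper are all hypothetical, and a predicted probability near 0.5 is only a crude proxy for model uncertainty.

```python
from dataclasses import dataclass

# Hypothetical training ranges and confidence band; all values are illustrative.
TRAINING_RANGES = {"age": (18, 90), "lactate": (0.5, 10.0)}
UNCERTAIN_BAND = (0.35, 0.65)  # probabilities in this band are treated as low confidence


@dataclass
class UncertaintyReport:
    probability: float
    low_confidence: bool
    out_of_range: list  # names of inputs that fall outside the training ranges


def assess_uncertainty(probability: float, patient: dict) -> UncertaintyReport:
    """Flag predictions that are near the decision boundary or rely on unseen input ranges."""
    band_low, band_high = UNCERTAIN_BAND
    out_of_range = [
        name
        for name, (minimum, maximum) in TRAINING_RANGES.items()
        if name in patient and not minimum <= patient[name] <= maximum
    ]
    return UncertaintyReport(
        probability=probability,
        low_confidence=band_low <= probability <= band_high,
        out_of_range=out_of_range,
    )


report = assess_uncertainty(0.52, {"age": 95, "lactate": 2.0})
if report.low_confidence or report.out_of_range:
    print("Caution:", report)
```

Whether clinicians actually adjust their decisions when such a warning appears is the behavioral question that the evaluation would then need to measure.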
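The subgroup audit described under Bias and Fairness Analysis can be sketched by comparing sensitivity (recall) across patient groups. The records below are synthetic, the group labels are placeholders, and recall gap is only one of several possible fairness measures; the function names are hypothetical.

```python
from collections import defaultdict


def recall_by_group(records):
    """Compute recall per group from (group, true_label, predicted_label) tuples with 0/1 labels."""
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, truth, prediction in records:
        if truth == 1:
            positives[group] += 1
            if prediction == 1:
                true_positives[group] += 1
    return {group: true_positives[group] / positives[group] for group in positives}


def recall_gap(records):
    """Largest difference in recall between any two groups."""
    recalls = recall_by_group(records)
    return max(recalls.values()) - min(recalls.values())


# Synthetic audit data: (group, true outcome, model prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]

print(recall_by_group(records))
print(f"Recall gap: {recall_gap(records):.2f}")
```

A large gap would indicate that the model misses true cases more often in one group, which is the kind of under-performance the audit is meant to surface and document.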

6. Case Study: Applying the User-Centered XAI Framework

6.1. Case Study Selection and Rationale

- High Relevance: This study directly investigates clinician interaction, acceptance, and trust—including ‘negotiation’ behaviors—with an interpretable AI-CDSS for sepsis treatment [62].

- Illustrates Framework Phases: It provides empirical data on context/user needs (Phase 1), interface/explanation design (Phase 2), and a mixed-methods evaluation examining user interactions (Phase 3).

- Highlights Key Gaps: The research explicitly surfaces unmet user needs, interface design issues, and evaluation shortcomings—validating the need for our structured approach.

- Detailed Reporting: The article provides clear details on methodology, interface (‘AI Clinician Explorer’), the XAI technique (SHAP), and user feedback—enabling a comprehensive re-analysis through our framework.

6.2. Applying the Three-Phase Framework to the Case Study

- Context: The Sivaraman study is set in the ICU for sepsis management—a high-stakes, time-pressured domain [62]. Such environments demand extremely high trustworthiness from any CDSS. Phase 1 of our framework would emphasize deep contextual understanding before beginning design.

- User Needs Identification: Through think-aloud protocols and interviews, several clinician needs not fully addressed by the AI system were identified:

  - Desire for validation evidence to justify trusting the recommendations.

  - Inclusion of ‘gestalt’/bedside context.

  - Alignment with clinical workflow (discrepancy between AI’s discrete intervals and clinicians’ continuous management).

  - Clearer explanations, especially for non-standard recommendations.

  Transparency of the underlying model was also a concern (echoed in Laxar et al. [61]). In the Sivaraman study, these surfaced after deployment; Phase 1 would advocate for uncovering such needs before design, via ethnography, contextual inquiry, and participatory workshops.

- XAI Method Selection: The system used SHAP (feature attribution), but the publication does not document clinician involvement in this choice. Our framework would have called for XAI method selection based on user reasoning processes, potentially identifying, for example, the value of example-based explanations if clinicians favored case-based reasoning (as in Gomez et al. [60]). A purely technical or developer-driven selection risks persistent mismatches.

- Phase 1 Summary: Many gaps could have been identified by a proactive, user-centered needs analysis and method selection, aligning the explanation approach, data, and interface with clinician reasoning and practice before deployment, not after.

- Interface Design: The ‘AI Clinician Explorer’ included patient trajectory visualization, navigation controls, and an explanation panel for recommendations [62]. This tool is central to Phase 2 of our framework.

- Explanation Presentation: Three interface conditions were tested: (1) Text-Only, (2) Feature Importance (SHAP bar chart), and (3) Alternative Treatments (lists with historical frequency) [62]. Comparing multiple formats fits Phase 2’s recommendation to iteratively prototype and evaluate different presentation solutions.

- Design Rationale and Feedback: SHAP-based visualizations improved confidence in the AI, suggesting increased transparency, but ‘Alternative Treatments’ received mixed reactions (some found them confusing or less helpful). Critically, a mismatch remained: clinicians managed sepsis as a continuous process, but the AI provided discrete recommendations. Phase 2 in our framework emphasizes co-design, real-world simulation, and deep integration into clinical workflow, which might have addressed this issue earlier.

- Phase 2 Summary: The case demonstrates the difficulty of bridging AI logic and clinical workflow. Despite thoughtful design, full alignment with real-world tasks and needs may require more simulation, prototyping, and continuous user feedback, as prescribed by Phase 2.

- Evaluation Methodology: Sivaraman et al. used a mixed-methods approach: 24 clinicians applied the tool to real cases with both quantitative (concordance and confidence ratings) and qualitative (think-aloud and interviews) outcomes [62]. Coding interaction patterns revealed a ‘negotiation’ mode, neither blind trust nor rejection—capturing nuances a binary approach would miss.

- Key Findings and Limitations: SHAP explanations increased confidence but not concordance with recommendations. Qualitative insights (negotiation behavior) highlight the value of behavioral evaluation advocated in Phase 3. As with Laxar et al. [61], actual reliance and self-reported trust may diverge—suggesting the importance of multifaceted metrics.

- Trust and Acceptance Factors: Trust was driven more by evidence of external validation than interface features or explanation types. Phase 3 advises not only measuring outcomes but probing underlying reasons for acceptance, trust, or skepticism—aiding iterative improvement.

- Iterative Refinement: Gaps identified (need for validation, holistic context, and workflow integration) would, under our framework, feed back to Phase 1 and Phase 2—closing the design loop. Solutions might include adding evidence summaries or continuous integration into the workflow.

- Phase 3 Summary: The case shows how mixed-method evaluation uncovers real barriers and opportunities for improvement and highlights the importance of iterative design refinement driven by real-world user feedback.

6.3. Discussion: Illustrating the Framework’s Value

- The ‘negotiation’ interaction pattern demonstrates the limitations of binary or technical evaluation metrics and justifies our framework’s Phase 3 call for nuanced, mixed-method, behavioral evaluation.

- User needs such as system validation, workflow integration, and holistic context—surfaced post hoc—could have shaped better decisions had they been proactively addressed in Phases 1 and 2.

- Evaluation challenges like the trust–validation paradox [62] reinforce that assessment must be reflective and continuous, not a one-time event.

- Findings from Laxar et al. (difference between self-reported trust and real reliance) and Gomez et al. (explanation type and reliance) further strengthen the need for user-aligned method selection and interface design—and for complex, multi-modal evaluation.
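The gap between self-reported trust and actual reliance is easier to see with a behavioral measure such as the weight of advice (WoA) listed in the Section 4 summary table. The sketch below assumes the definition common in the advice-taking literature, WoA = (final − initial) / (advice − initial); the exact operationalization used in the cited studies may differ, and the numbers are invented.

```python
def weight_of_advice(initial: float, advice: float, final: float):
    """Fraction of the distance toward the AI's advice that the user actually moved.

    Returns None when the advice equals the initial judgment, because the
    measure is undefined in that case.
    """
    if advice == initial:
        return None
    return (final - initial) / (advice - initial)


# Invented example: a clinician first estimates a dose of 40, the AI suggests 60,
# and the clinician settles on 55.
woa = weight_of_advice(initial=40, advice=60, final=55)
print(f"WoA = {woa:.2f}")
```

Under this definition, a value near 0 means the advice was ignored and a value near 1 means it was adopted in full, so a clinician can report high trust on a survey while showing a low WoA in practice.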

7. Limitations and Conclusions

Considerations for Data Modality and XAI Complexity

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| XAI | Explainable Artificial Intelligence |
| CDSS | Clinical Decision Support Systems |
| ML | Machine Learning |
| EHR | Electronic Health Records |

References

- Berner, E.S.; La Lande, T.J. Overview of clinical decision support systems. In Clinical Decision Support Systems: Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2016; pp. 1–17.

- Kubben, P.; Dumontier, M.; Dekker, A. Fundamentals of Clinical Data Science; Springer: New York, NY, USA, 2019.

- Shamout, F.; Zhu, T.; Clifton, D.A. Machine learning for clinical outcome prediction. IEEE Rev. Biomed. Eng. 2020, 14, 116–126.

- Montani, S.; Striani, M. Artificial intelligence in clinical decision support: A focused literature survey. Yearb. Med. Inform. 2019, 28, 120–127.

- Gilpin, L.H.; Bau, D.; Yuan, B.Z.; Bajwa, A.; Specter, M.; Kagal, L. Explaining explanations: An overview of interpretability of machine learning. In Proceedings of the 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), Turin, Italy, 1–3 October 2018; IEEE: New York, NY, USA, 2018; pp. 80–89.

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Tonekaboni, S.; Joshi, S.; McCradden, M.D.; Goldenberg, A. What clinicians want: Contextualizing explainable machine learning for clinical end use. In Proceedings of the Machine Learning for Healthcare Conference, Ann Arbor, MI, USA, 9–10 August 2019; PMLR: Cambridge, MA, USA, 2019; pp. 359–380.

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Tjoa, E.; Guan, C. A survey on explainable artificial intelligence (XAI): Toward medical XAI. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4793–4813.

- Bunn, J. Working in contexts for which transparency is important: A recordkeeping view of explainable artificial intelligence (XAI). Rec. Manag. J. 2020, 30, 143–153.

- Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A survey of methods for explaining black box models. ACM Comput. Surv. (CSUR) 2018, 51, 1–42.

- Tan, S.; Caruana, R.; Hooker, G.; Lou, Y. Distill-and-compare: Auditing black-box models using transparent model distillation. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 2–3 February 2018; pp. 303–310.

- Xu, K.; Park, D.H.; Yi, C.; Sutton, C. Interpreting deep classifier by visual distillation of dark knowledge. arXiv 2018, arXiv:1803.04042.

- Che, Z.; Purushotham, S.; Khemani, R.; Liu, Y. Distilling knowledge from deep networks with applications to healthcare domain. arXiv 2015, arXiv:1512.03542.

- Tickle, A.B.; Andrews, R.; Golea, M.; Diederich, J. The truth will come to light: Directions and challenges in extracting the knowledge embedded within trained artificial neural networks. IEEE Trans. Neural Netw. 1998, 9, 1057–1068.

- Su, C.T.; Chen, Y.C. Rule extraction algorithm from support vector machines and its application to credit screening. Soft Comput. 2012, 16, 645–658.

- De Fortuny, E.J.; Martens, D. Active learning-based pedagogical rule extraction. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 2664–2677.

- Bologna, G.; Hayashi, Y. A rule extraction study from SVM on sentiment analysis. Big Data Cogn. Comput. 2018, 2, 6.

- Hailesilassie, T. Rule extraction algorithm for deep neural networks: A review. arXiv 2016, arXiv:1610.05267.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Magesh, P.R.; Myloth, R.D.; Tom, R.J. An explainable machine learning model for early detection of Parkinson’s disease using LIME on DaTSCAN imagery. Comput. Biol. Med. 2020, 126, 104041.

- Zhang, Y.; Wallace, B. A sensitivity analysis of (and practitioners’ guide to) convolutional neural networks for sentence classification. arXiv 2015, arXiv:1510.03820.

- Hooker, S.; Erhan, D.; Kindermans, P.J.; Kim, B. A benchmark for interpretability methods in deep neural networks. Adv. Neural Inf. Process. Syst. 2019, 32.

- Hara, S.; Ikeno, K.; Soma, T.; Maehara, T. Maximally invariant data perturbation as explanation. arXiv 2018, arXiv:1806.07004.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30.

- Štrumbelj, E.; Kononenko, I. Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 2014, 41, 647–665.

- Fong, R.C.; Vedaldi, A. Interpretable explanations of black boxes by meaningful perturbation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3429–3437.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; PMLR: Cambridge, MA, USA, 2015; pp. 2048–2057.

- Montavon, G.; Samek, W.; Müller, K.R. Methods for interpreting and understanding deep neural networks. Digit. Signal Process. 2018, 73, 1–15.

- Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.R.; Samek, W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS ONE 2015, 10, e0130140. [Google Scholar] [CrossRef] [PubMed]

- Samek, W.; Montavon, G.; Binder, A.; Lapuschkin, S.; Müller, K.R. Interpreting the predictions of complex ML models by layer-wise relevance propagation. arXiv 2016, arXiv:1611.08191. [Google Scholar] [CrossRef]

- Nguyen, A.; Dosovitskiy, A.; Yosinski, J.; Brox, T.; Clune, J. Synthesizing the preferred inputs for neurons in neural networks via deep generator networks. Adv. Neural Inf. Process. Syst. 2016, 29. [Google Scholar]

- Bien, J.; Tibshirani, R. Prototype selection for interpretable classification. arXiv 2011, arXiv:1202.5933. [Google Scholar] [CrossRef]

- Sharma, S.; Henderson, J.; Ghosh, J. Certifai: Counterfactual explanations for robustness, transparency, interpretability, and fairness of artificial intelligence models. arXiv 2019, arXiv:1905.07857. [Google Scholar] [CrossRef]

- Mothilal, R.K.; Sharma, A.; Tan, C. Explaining machine learning classifiers through diverse counterfactual explanations. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 607–617. [Google Scholar]

- Yuan, X.; He, P.; Zhu, Q.; Li, X. Adversarial examples: Attacks and defenses for deep learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2805–2824. [Google Scholar] [CrossRef]

- Lamy, J.B.; Sekar, B.; Guezennec, G.; Bouaud, J.; Séroussi, B. Explainable artificial intelligence for breast cancer: A visual case-based reasoning approach. Artif. Intell. Med. 2019, 94, 42–53. [Google Scholar] [CrossRef]

- Sedig, K.; Parsons, P. Interaction design for complex cognitive activities with visual representations: A pattern-based approach. AIS Trans. Hum. Comput. Interact. 2013, 5, 84–133. [Google Scholar] [CrossRef]

- Sedig, K.; Naimi, A.; Haggerty, N. Aligning information technologies with evidence-based health-care activities: A design and evaluation framework. Hum. Technol. Interdiscip. J. Humans ICT Environ. 2017, 13, 180–215. [Google Scholar] [CrossRef]

- Parsons, P.; Sedig, K. Distribution of information processing while performing complex cognitive activities with visualization tools. In Handbook of Human Centric Visualization; Springer: New York, NY, USA, 2013; pp. 693–715. [Google Scholar]

- Lamy, J.B.; Sedki, K.; Tsopra, R. Explainable decision support through the learning and visualization of preferences from a formal ontology of antibiotic treatments. J. Biomed. Inform. 2020, 104, 103407. [Google Scholar] [CrossRef] [PubMed]

- Choi, E.; Bahadori, M.T.; Sun, J.; Kulas, J.; Schuetz, A.; Stewart, W. Retain: An interpretable predictive model for healthcare using reverse time attention mechanism. Adv. Neural Inf. Process. Syst. 2016, 29. [Google Scholar]

- Kyrimi, E.; Mossadegh, S.; Tai, N.; Marsh, W. An incremental explanation of inference in Bayesian networks for increasing model trustworthiness and supporting clinical decision making. Artif. Intell. Med. 2020, 103, 101812. [Google Scholar] [CrossRef] [PubMed]

- Ming, Y.; Qu, H.; Bertini, E. Rulematrix: Visualizing and understanding classifiers with rules. IEEE Trans. Vis. Comput. Graph. 2018, 25, 342–352. [Google Scholar] [CrossRef]

- Che, Z.; Purushotham, S.; Khemani, R.; Liu, Y. Interpretable deep models for ICU outcome prediction. In Proceedings of the AMIA Annual Symposium Proceedings, Washington, DC, USA, 4–8 November 2017; Volume 2016, p. 371. [Google Scholar]

- Yang, Y.; Tresp, V.; Wunderle, M.; Fasching, P.A. Explaining therapy predictions with layer-wise relevance propagation in neural networks. In Proceedings of the 2018 IEEE International Conference on Healthcare Informatics (ICHI), New York, NY, USA, 4–7 June 2018; IEEE: New York, NY, USA, 2018; pp. 152–162. [Google Scholar]

- Böhle, M.; Eitel, F.; Weygandt, M.; Ritter, K. Layer-wise relevance propagation for explaining deep neural network decisions in MRI-based Alzheimer’s disease classification. Front. Aging Neurosci. 2019, 11, 194. [Google Scholar] [CrossRef]

- Slijepcevic, D.; Horst, F.; Lapuschkin, S.; Raberger, A.M.; Zeppelzauer, M.; Samek, W.; Breiteneder, C.; Schöllhorn, W.I.; Horsak, B. On the explanation of machine learning predictions in clinical gait analysis. arXiv 2020, arXiv:1912.07737. [Google Scholar] [CrossRef]

- Šajnović, U.; Vošner, H.B.; Završnik, J.; Žlahtič, B.; Kokol, P. Internet of things and big data analytics in preventive healthcare: A synthetic review. Electronics 2024, 13, 3642. [Google Scholar] [CrossRef]

- Ayorinde, A.; Mensah, D.O.; Walsh, J.; Ghosh, I.; Ibrahim, S.A.; Hogg, J.; Peek, N.; Griffiths, F. Health Care Professionals’ Experience of Using AI: Systematic Review With Narrative Synthesis. J. Med. Internet Res. 2024, 26, e55766. [Google Scholar] [CrossRef]

- Amann, J.; Vetter, D.; Blomberg, S.N.; Christensen, H.C.; Coffee, M.; Gerke, S.; Gilbert, T.K.; Hagendorff, T.; Holm, S.; Livne, M.; et al. To explain or not to explain? Artificial intelligence explainability in clinical decision support systems. PLoS Digit. Health 2022, 1, e0000016. [Google Scholar] [CrossRef]

- Pierce, R.L.; Van Biesen, W.; Van Cauwenberge, D.; Decruyenaere, J.; Sterckx, S. Explainability in medicine in an era of AI-based clinical decision support systems. Front. Genet. 2022, 13, 903600. [Google Scholar] [CrossRef]

- Kim, S.Y.; Kim, D.H.; Kim, M.J.; Ko, H.J.; Jeong, O.R. XAI-Based Clinical Decision Support Systems: A Systematic Review. Appl. Sci. 2024, 14, 6638. [Google Scholar] [CrossRef]

- Aziz, N.A.; Manzoor, A.; Mazhar Qureshi, M.D.; Qureshi, M.A.; Rashwan, W. Explainable AI in Healthcare: Systematic Review of Clinical Decision Support Systems. medRxiv 2024. [Google Scholar] [CrossRef]

- Panigutti, C.; Beretta, A.; Fadda, D.; Giannotti, F.; Pedreschi, D.; Perotti, A.; Rinzivillo, S. Co-design of human-centered, explainable AI for clinical decision support. ACM Trans. Interact. Intell. Syst. 2023, 13, 1–35. [Google Scholar] [CrossRef]

- Turri, V.; Morrison, K.; Robinson, K.M.; Abidi, C.; Perer, A.; Forlizzi, J.; Dzombak, R. Transparency in the Wild: Navigating Transparency in a Deployed AI System to Broaden Need-Finding Approaches. In Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency, Rio de Janeiro, Brazil, 3–6 June 2024; pp. 1494–1514. [Google Scholar]

- Micocci, M.; Borsci, S.; Thakerar, V.; Walne, S.; Manshadi, Y.; Edridge, F.; Mullarkey, D.; Buckle, P.; Hanna, G.B. Attitudes towards trusting artificial intelligence insights and factors to prevent the passive adherence of GPs: A pilot study. J. Clin. Med. 2021, 10, 3101. [Google Scholar] [CrossRef]

- Rosenbacke, R.; Melhus, Å.; McKee, M.; Stuckler, D. How Explainable Artificial Intelligence Can Increase or Decrease Clinicians’ Trust in AI Applications in Health Care: Systematic Review. JMIR AI 2024, 3, e53207. [Google Scholar] [CrossRef]

- Gomez, C.; Smith, B.L.; Zayas, A.; Unberath, M.; Canares, T. Explainable AI decision support improves accuracy during telehealth strep throat screening. Commun. Med. 2024, 4, 149. [Google Scholar] [CrossRef]

- Laxar, D.; Eitenberger, M.; Maleczek, M.; Kaider, A.; Hammerle, F.P.; Kimberger, O. The influence of explainable vs non-explainable clinical decision support systems on rapid triage decisions: A mixed methods study. BMC Med. 2023, 21, 359. [Google Scholar] [CrossRef]

- Sivaraman, V.; Bukowski, L.A.; Levin, J.; Kahn, J.M.; Perer, A. Ignore, trust, or negotiate: Understanding clinician acceptance of AI-based treatment recommendations in health care. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–18. [Google Scholar]

- Sivaraman, V.; Morrison, K.; Epperson, W.; Perer, A. Over-Relying on Reliance: Towards Realistic Evaluations of AI-Based Clinical Decision Support. arXiv 2025, arXiv:2504.07423. [Google Scholar]

- Morrison, K.; Jain, M.; Hammer, J.; Perer, A. Eye into AI: Evaluating the Interpretability of Explainable AI Techniques through a Game with a Purpose. Proc. ACM Hum. Comput. Interact. 2023, 7, 1–22. [Google Scholar] [CrossRef]

- Morrison, K.; Shin, D.; Holstein, K.; Perer, A. Evaluating the impact of human explanation strategies on human-AI visual decision-making. Proc. ACM Hum. Comput. Interact. 2023, 7, 1–37. [Google Scholar] [CrossRef]

- Katuwal, G.J.; Chen, R. Machine learning model interpretability for precision medicine. arXiv 2016, arXiv:1610.09045. [Google Scholar] [CrossRef]

- Giordano, C.; Brennan, M.; Mohamed, B.; Rashidi, P.; Modave, F.; Tighe, P. Accessing artificial intelligence for clinical decision-making. Front. Digit. Health 2021, 3, 645232. [Google Scholar] [CrossRef]

- Kwon, B.C.; Choi, M.J.; Kim, J.T.; Choi, E.; Kim, Y.B.; Kwon, S.; Sun, J.; Choo, J. Retainvis: Visual analytics with interpretable and interactive recurrent neural networks on electronic medical records. IEEE Trans. Vis. Comput. Graph. 2018, 25, 299–309. [Google Scholar] [CrossRef] [PubMed]

- Cabrera, Á.A.; Fu, E.; Bertucci, D.; Holstein, K.; Talwalkar, A.; Hong, J.I.; Perer, A. Zeno: An interactive framework for behavioral evaluation of machine learning. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–14. [Google Scholar]

- Zihni, E.; Madai, V.I.; Livne, M.; Galinovic, I.; Khalil, A.A.; Fiebach, J.B.; Frey, D. Opening the black box of artificial intelligence for clinical decision support: A study predicting stroke outcome. PLoS ONE 2020, 15, e0231166. [Google Scholar] [CrossRef] [PubMed]

- Sáez, C.; Ferri, P.; García-Gómez, J.M. Resilient artificial intelligence in health: Synthesis and research agenda toward next-generation trustworthy clinical decision support. J. Med. Internet Res. 2024, 26, e50295. [Google Scholar] [CrossRef] [PubMed]

- Nasarian, E.; Alizadehsani, R.; Acharya, U.R.; Tsui, K.L. Designing interpretable ML system to enhance trust in healthcare: A systematic review to proposed responsible clinician-AI-collaboration framework. Inf. Fusion 2024, 108, 102412. [Google Scholar] [CrossRef]

- Miller, T. Explanation in artificial intelligence: Insights from the social sciences. Artif. Intell. 2019, 267, 1–38. [Google Scholar] [CrossRef]

- Sedig, K.; Liang, H.N. On the design of interactive visual representations: Fitness of interaction. In Proceedings of the EdMedia+ Innovate Learning, Vancouver, BC, Canada, 25–29 June 2007; Association for the Advancement of Computing in Education (AACE): Waynesville, NC, USA, 2007; pp. 999–1006. [Google Scholar]

- Gadanidis, G.; Sedig, K.; Liang, H.N. Designing online mathematical investigation. J. Comput. Math. Sci. Teach. 2004, 23, 275–298. [Google Scholar]

- Xie, Y.; Gao, G.; Chen, X. Outlining the design space of explainable intelligent systems for medical diagnosis. arXiv 2019, arXiv:1902.06019. [Google Scholar] [CrossRef]

- Tory, M. User studies in visualization: A reflection on methods. In Handbook of Human Centric Visualization; Springer: New York, NY, USA, 2013; pp. 411–426. [Google Scholar]

- Parsons, P.; Sedig, K. Adjustable properties of visual representations: Improving the quality of human-information interaction. J. Assoc. Inf. Sci. Technol. 2014, 65, 455–482. [Google Scholar] [CrossRef]

- Sedig, K.; Parsons, P.; Dittmer, M.; Haworth, R. Human-centered interactivity of visualization tools: Micro-and macro-level considerations. In Handbook of Human Centric Visualization; Springer: New York, NY, USA, 2014; pp. 717–743. [Google Scholar]

- Hundhausen, C.D. Evaluating visualization environments: Cognitive, social, and cultural perspectives. In Handbook of Human Centric Visualization; Springer: New York, NY, USA, 2013; pp. 115–145. [Google Scholar]

- Freitas, C.M.; Pimenta, M.S.; Scapin, D.L. User-centered evaluation of information visualization techniques: Making the HCI-InfoVis connection explicit. In Handbook of Human Centric Visualization; Springer: New York, NY, USA, 2014; pp. 315–336. [Google Scholar]

- Abbas, Q.; Jeong, W.; Lee, S.W. Explainable AI in Clinical Decision Support Systems: A Meta-Analysis of Methods, Applications, and Usability Challenges. Healthcare 2025, 13, 2154. [Google Scholar] [CrossRef]

- Zytek, A.; Liu, D.; Vaithianathan, R.; Veeramachaneni, K. Sibyl: Understanding and addressing the usability challenges of machine learning in high-stakes decision making. IEEE Trans. Vis. Comput. Graph. 2021, 28, 1161–1171. [Google Scholar] [CrossRef]

- Gambetti, A.; Han, Q.; Shen, H.; Soares, C. A Survey on Human-Centered Evaluation of Explainable AI Methods in Clinical Decision Support Systems. arXiv 2025, arXiv:2502.09849. [Google Scholar] [CrossRef]

| XAI Keyword | CDSS Keyword |
|---|---|
| Explainable AI | Clinical Decision Support |
| Interpretable AI | Clinical Decision Support Systems |
| Transparent AI | Healthcare Decision Support |
| Accountable AI | Medical Decision Support Systems |
| Interpretable Machine Learning | Medicine |
| Explainable Machine Learning | Clinical Decision Support Tools |
| Black Box | Treatment Recommendations |
| Interpretable Algorithm | Clinical Prediction |
| Explainable Algorithm | Clinical Decision-Making |
| Model Explanation | Patient Outcomes |
| XAI | CDSS |
| Transparent Machine Learning | Healthcare |
| Real-world XAI in Medicine | Disease Diagnosis |
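
A two-column keyword list of this kind is commonly turned into a database query by joining the terms within each column with OR and joining the two groups with AND. The sketch below assumes that combination rule and a generic quoted-phrase syntax; the helper name `build_query` is hypothetical, only three terms per column are shown for brevity, and the exact search strings used in the review are not reproduced here.

```python
def build_query(xai_terms, cdss_terms):
    """Combine two keyword groups as (A1 OR A2 ...) AND (B1 OR B2 ...)."""

    def group(terms):
        # Quote each term so multi-word phrases are matched as phrases.
        return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"

    return f"{group(xai_terms)} AND {group(cdss_terms)}"


# A subset of the keywords from the table above (assumed pairing rule: every
# XAI term may co-occur with every CDSS term).
xai_terms = ["Explainable AI", "Interpretable Machine Learning", "XAI"]
cdss_terms = ["Clinical Decision Support", "Treatment Recommendations", "CDSS"]

query = build_query(xai_terms, cdss_terms)
print(query)
```

Individual databases differ in their field tags and phrase syntax, so a query built this way would normally need adapting per database.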

| Framework Phase | Aspect | Case Study Details (Sivaraman et al.) | Connection to Framework Principles/Goals |
|---|---|---|---|
| Phase 1: User-Centered XAI Method Selection | Context | High-stakes ICU environment; sepsis treatment (uncertainty, variability). | Aligns with Phase 1 goal of understanding the specific clinical setting. |
| Phase 1: User-Centered XAI Method Selection | User Needs Analysis | Needs identified post hoc via evaluation: validation evidence for trust, inclusion of bedside/gestalt info, workflow alignment, explanation for deviations. Lack of model transparency also noted in other contexts (Laxar et al. [61]). | Demonstrates importance of proactive Phase 1 needs analysis (interviews, observation) rather than discovering needs late. |
| Phase 1: User-Centered XAI Method Selection | XAI Method Selection | SHAP used for feature explanation; no documented user input in selection. Contrast with Gomez et al. [60], who found higher trust for example-based explanations aligning with clinical reasoning. | Phase 1 emphasizes selecting methods based on identified user needs and reasoning styles, not just technical availability. |
| Phase 2: User-Centered Interface Design | Interface Design | ‘AI Clinician Explorer’ interface with trajectory views, controls, recommendation panel. | Exemplifies the design artifact created in Phase 2. |
| Phase 2: User-Centered Interface Design | Explanation Presentation | Tested: Text-Only; Feature Explanation (SHAP chart); Alternative Treatments (AI-ranked actions + historical frequency). | Demonstrates testing multiple explanation formats as part of iterative Phase 2 refinement. |
| Phase 2: User-Centered Interface Design | Design Feedback | Feature Explanation increased confidence; Alternative Treatments had mixed reception; mismatch between AI’s discrete outputs and clinical workflow noted. Context dependence also seen in the time-pressure study by Laxar et al. [61]. | Phase 2 requires translating Phase 1 needs into usable interfaces via iterative design and testing; feedback pinpoints areas for improvement. |
| Phase 3: Evaluation and Iterative Refinement | Evaluation Method | Mixed-methods: 24 clinicians, real cases, think-aloud, interviews, quantitative ratings, concordance analysis, qualitative coding for interaction patterns. | Exemplifies robust Phase 3 evaluation combining quantitative and qualitative insights. |
| Phase 3: Evaluation and Iterative Refinement | Key Findings and Limitations | Explanations boosted confidence but not binary concordance; ‘Negotiation’ pattern dominant; trust linked to external validation; gaps identified (data, workflow). Disconnect between reliance (WoA) and trust seen elsewhere (Laxar et al. [61]). | Demonstrates Phase 3 goal of evaluating beyond simple metrics and understanding complex behaviors (‘Negotiation’). |
| Phase 3: Evaluation and Iterative Refinement | Trust Calibration and Iterative Refinement | Identified the ‘chicken-and-egg’ problem of validation ↔ trust. Contrasting risks of over-reliance (Micocci et al. [58]) and under-reliance (Gomez et al. [60]) noted. | Phase 3 aims for appropriate trust calibration. Findings feed back into refining Phases 1 and 2. |
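
The behavioral reliance measure abbreviated as WoA (weight of advice) in the table is commonly defined in the judge-advisor literature as the fraction of the distance between a person's initial estimate and the advice that their final estimate covers. The sketch below assumes that standard definition; the cited studies may operationalize it differently, the function name is hypothetical, and the numbers are made up for illustration.

```python
def weight_of_advice(initial, advice, final):
    """Return WoA = (final - initial) / (advice - initial).

    A value of 0 means the advice was ignored and 1 means it was adopted
    in full. Returns None when the advice equals the initial estimate,
    because the ratio is undefined in that case.
    """
    if advice == initial:
        return None
    return (final - initial) / (advice - initial)


# Hypothetical case: a clinician first estimates 40, the system suggests 60,
# and the clinician settles on 55 after seeing the suggestion.
woa = weight_of_advice(initial=40.0, advice=60.0, final=55.0)
print(woa)  # 0.75
```

Because WoA is computed from what a clinician actually does, it can diverge from questionnaire-based trust ratings, which is the disconnect the table refers to.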

| Attribute | Image-Based CDSS | Structured EHR-Based CDSS |
|---|---|---|
| Data Modality | Continuous, spatial, high-dimensional (e.g., pixels in an MRI). | Discrete, tabular, categorical/numerical features (e.g., lab values, diagnosis codes). |
| Primary User Question | ‘Where did the model focus?’ | ‘Which factors influenced the prediction and by how much?’ |
| Common Explanation Type | Spatial Attribution (Visual Overlays like heatmaps). | Feature Attribution (Contribution plots, lists, e.g., SHAP). |
| Primary User Task | Visual correlation and anatomical plausibility assessment. | Logical review and clinical pathway validation. |
| Key User-Centered Challenge | Interpreting spatial ambiguity, avoiding visual confirmation bias, and assessing the fidelity of the visual explanation [82]. | Managing feature overload, understanding complex feature interactions, and assessing the plausibility of abstract feature importance values [83]. |
| Potential for Misinterpretation | Trusting a visually plausible but mechanistically flawed localization. | Dismissing a counter-intuitive but statistically correct feature importance. |
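
To make the structured-EHR question ‘Which factors influenced the prediction and by how much?’ concrete, the sketch below computes exact Shapley values, the quantity that SHAP approximates, for a toy three-feature risk score. Everything here is an illustrative assumption: the scoring function, feature names, patient values, and baseline are hypothetical, and the code does not use or reproduce the `shap` library's API.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values by enumerating all feature coalitions.

    Features outside a coalition are replaced by their baseline value.
    The cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_risk(z):
    """Hypothetical risk score with a lactate x heart-rate interaction."""
    lactate, heart_rate, age = z
    return (0.5 * lactate + 0.01 * heart_rate + 0.002 * age
            + 0.005 * lactate * heart_rate)


x = [4.0, 120.0, 70.0]        # hypothetical patient
baseline = [1.0, 80.0, 60.0]  # hypothetical reference patient
phi = shapley_values(toy_risk, x, baseline)
for name, contribution in zip(["lactate", "heart_rate", "age"], phi):
    print(f"{name}: {contribution:+.3f}")
```

The contributions sum to the difference between the patient's score and the baseline score. The interaction term is split between lactate and heart rate, which illustrates why abstract importance values can be hard to judge for plausibility when features interact.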

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Salimparsa, M.; Sedig, K.; Lizotte, D.J.; Abdullah, S.S.; Chalabianloo, N.; Muanda, F.T. Explainable AI for Clinical Decision Support Systems: Literature Review, Key Gaps, and Research Synthesis. Informatics 2025, 12, 119. https://doi.org/10.3390/informatics12040119