Theoretical Models for Acceptance of Human Implantable Technologies: A Narrative Review

Abstract

1. Introduction

2. Related Work

2.1. Embeddables’ Qualities

2.2. Theoretical Models of Technology Acceptance

3. Study

3.1. Materials and Methods

3.2. Analysis

4. Results

4.1. Types of Embeddables

- Subcutaneous microchips (SM): tiny integrated circuits, about the size of a grain of rice, usually encased inside transponders and placed underneath the skin. Five articles specifically explored the acceptance of subcutaneous microchip implants.

- Capacity-enhancing nanoimplants: nanotechnology-based implants that can be integrated into the human body to augment certain abilities or functions. These implants are typically designed at the nanoscale, which allows precise manipulation of, and interaction with, biological systems.

- Neural implants: devices implanted in the brain to improve an individual’s memory performance. Two studies investigated people’s acceptance of neural implants for memory and performance enhancement.

- Cyborg technologies: "cyborg", a portmanteau of "cybernetic organism", describes people enhanced with both organic and digital (implantable or insideable) body parts. Cyborg technologies are any embeddable technologies used by healthy individuals to enhance innate human capabilities.

4.2. Technology Acceptance Model

4.2.1. Emphasis on Perceived Usefulness and Ease of Use

4.2.2. Incorporation of Perceived Trust and Health Concerns

4.2.3. Consideration of Privacy and Security

4.2.4. Exploration of Demographics and Individual Factors

4.2.5. Limitations and Opportunities

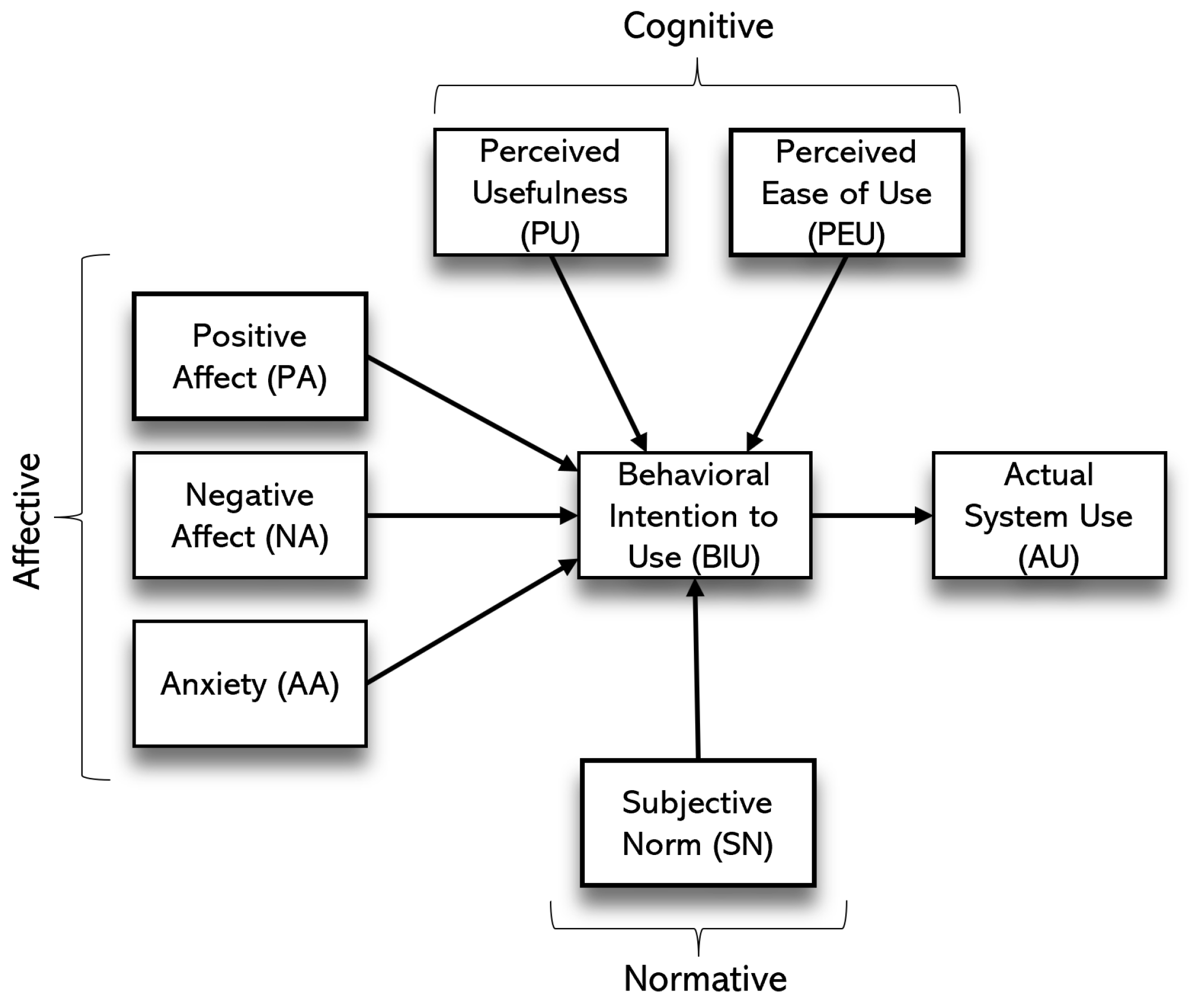

4.3. Cognitive–Affective–Normative Model

4.3.1. Embracing Emotional Responses

4.3.2. Incorporating Social Influence

4.3.3. Ethical Considerations

4.3.4. Limitations and Opportunities

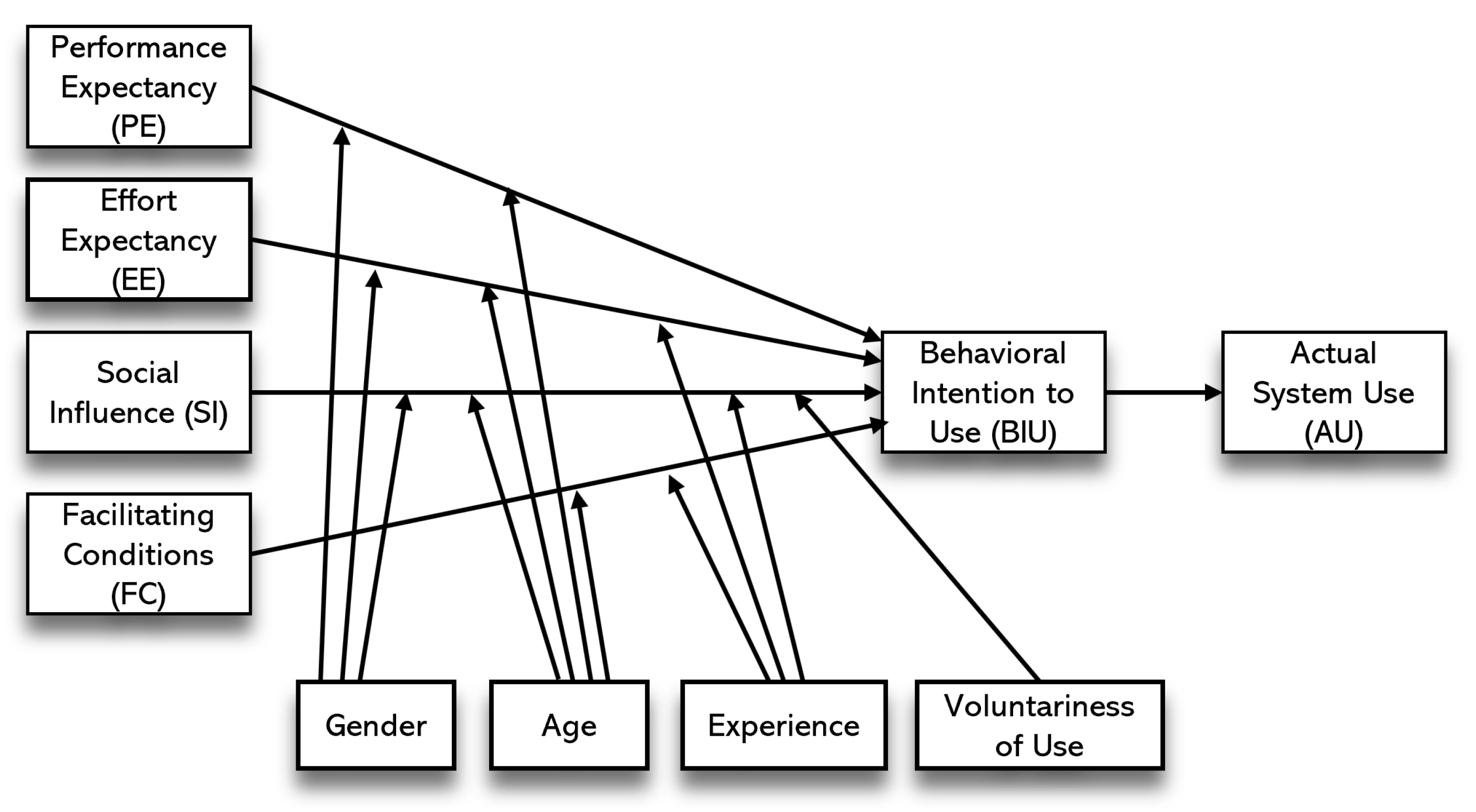

4.4. Unified Theory of Acceptance and Use of Technology

4.4.1. UTAUT Modification for Implantable Technology

4.4.2. Limitations and Opportunities

4.5. Psychological Constructs

4.5.1. Technology Anxiety and Privacy Concerns

4.5.2. Personality Dimensions

4.5.3. Ethical Awareness and Cultural Considerations

4.5.4. Motivation and Trust

4.5.5. Perfectionism and Locus of Control

4.5.6. Implicit Psychosocial Drivers

4.5.7. Limitations and Opportunities

5. Discussion

5.1. Principal Findings

5.2. Research Implications

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gangadharbatla, H. Biohacking: An exploratory study to understand the factors influencing the adoption of embedded technologies within the human body. Heliyon 2020, 6, e03931.

2. Licklider, J.C. Man-computer symbiosis. IRE Trans. Hum. Factors Electron. 1960, HFE-1, 4–11.

3. Bardini, T. Bootstrapping: Douglas Engelbart, Coevolution, and the Origins of Personal Computing; Stanford University Press: Redwood City, CA, USA, 2000.

4. Weiser, M. The computer for the 21st century. ACM SIGMOBILE Mob. Comput. Commun. Rev. 1999, 3, 3–11.

5. Goodman, A. Embeddables: The Next Evolution of Wearable Tech. In Designing for Emerging Technologies: UX for Genomics, Robotics, and the Internet of Things; Follett, J., Ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2014; Chapter 8; pp. 205–224.

6. Alomary, A.; Woollard, J. How is technology accepted by users? A review of technology acceptance models and theories. In Proceedings of the 5th International Conference on 4E, London, UK, 8–12 June 2015.

7. Taherdoost, H. A review of technology acceptance and adoption models and theories. Procedia Manuf. 2018, 22, 960–967.

8. Werber, B.; Baggia, A.; Žnidaršič, A. Factors affecting the intentions to use RFID subcutaneous microchip implants for healthcare purposes. Organizacija 2018, 51, 121–133.

9. Mohamed, M.A. Modeling of Subcutaneous Implantable Microchip Intention of Use. In Proceedings of the International Conference on Intelligent Human Systems Integration, Modena, Italy, 19–21 February 2020; pp. 842–847.

10. Shafeie, S.; Chaudhry, B.M.; Mohamed, M. Modeling Subcutaneous Microchip Implant Acceptance in the General Population: A Cross-Sectional Survey about Concerns and Expectations. Informatics 2022, 9, 24.

11. Olarte-Pascual, C.; Pelegrín-Borondo, J.; Reinares-Lara, E.; Arias-Oliva, M. From wearable to insideable: Is ethical judgment key to the acceptance of human capacity-enhancing intelligent technologies? Comput. Hum. Behav. 2021, 114, 106559.

12. Žnidaršič, A.; Werber, B.; Baggia, A.; Vovk, M.; Bevanda, V.; Zakonnik, L. The Intention to Use Microchip Implants Model Extensions after the Pandemics. In Proceedings of the 16th International Symposium on Operational Research in Slovenia, Bled, Slovenia, 22–24 September 2021; pp. 247–252.

13. Sparks, H. Pentagon Develops Implant that could Help Detect COVID under Your Skin; New York Post: New York, NY, USA, 2022.

14. Hart, R. Elon Musk’s Neuralink Wants to Put Chips in Our Brains: How It Works and Who Else Is Doing It; Forbes: Jersey City, NJ, USA, 2023.

15. Warwick, K. Implants; Springer: Berlin/Heidelberg, Germany, 2014.

16. Murata, K.; Adams, A.A.; Fukuta, Y.; Orito, Y.; Arias-Oliva, M.; Pelegrín-Borondo, J. From a Science Fiction to the Reality: Cyborg Ethics in Japan. Orbit J. 2017, 1, 1–15.

17. Heersmink, R. The Philosophy of Human-Technology Relations. Philos. Technol. 2018, 31, 305–319.

18. Hansson, S.O. Implantable Computers: The Next Step in Computer Evolution? Ethics Inf. Technol. 2005, 7, 115–126.

19. Gray, C.H. Cyborg Citizen: Politics in the Posthuman Age; Routledge: London, UK, 2001.

20. Grunwald, A. Nano- and Information Technology: Ethical Aspects. Int. J. Technol. Assess. Health Care 2004, 20, 15–23.

21. Foster, K.R.; Jaeger, J. Ethical Implications of Implantable Radiofrequency Identification (RFID) Tags in Humans. Am. J. Bioeth. 2005, 5, 6–7.

22. Thomsen, N. Technology Acceptance for Hearing Aids: An Analysis of Adoption and Innovation; Aalborg University: Copenhagen, Denmark, 2021.

23. Pommer, B.; Zechner, W.; Watzak, G.; Ulm, C.; Watzek, G.; Tepper, G. Progress and trends in patients’ mindset on dental implants. II: Implant acceptance, patient-perceived costs and patient satisfaction. Clin. Oral Implant. Res. 2011, 22, 106–112.

24. Venkatesh, V.; Thong, J.Y.; Xu, X. Unified Theory of Acceptance and Use of Technology: A Synthesis and the Road Ahead. J. Assoc. Inf. Syst. 2016, 17, 328–376.

25. Ibáñez-Sánchez, S.; Orus, C.; Flavian, C. Augmented reality filters on social media. Analyzing the drivers of playability based on uses and gratifications theory. Psychol. Mark. 2022, 39, 559–578.

26. Falgoust, G.; Winterlind, E.; Moon, P.; Parker, A.; Zinzow, H.; Madathil, K.C. Applying the uses and gratifications theory to identify motivational factors behind young adult’s participation in viral social media challenges on TikTok. Hum. Factors Healthc. 2022, 2, 100014.

27. Ajzen, I.; Kruglanski, A.W. Reasoned action in the service of goal pursuit. Psychol. Rev. 2019, 126, 774.

28. Go, H.; Kang, M.; Suh, S.C. Machine learning of robots in tourism and hospitality: Interactive technology acceptance model (iTAM): Cutting edge. Tour. Rev. 2020, 75, 625–636.

29. Alfadda, H.A.; Mahdi, H.S. Measuring students’ use of zoom application in language course based on the technology acceptance model (TAM). J. Psycholinguist. Res. 2021, 50, 883–900.

30. Zhou, J.; Fan, T. Understanding the factors influencing patient E-health literacy in online health communities (OHCs): A social cognitive theory perspective. Int. J. Environ. Res. Public Health 2019, 16, 2455.

31. Chen, C.C.; Tu, H.Y. The effect of digital game-based learning on learning motivation and performance under social cognitive theory and entrepreneurial thinking. Front. Psychol. 2021, 12, 750711.

32. Pousada García, T.; Garabal-Barbeira, J.; Porto Trillo, P.; Vilar Figueira, O.; Novo Díaz, C.; Pereira Loureiro, J. A framework for a new approach to empower users through low-cost and do-it-yourself assistive technology. Int. J. Environ. Res. Public Health 2021, 18, 3039.

33. Jader, A.M.A. Factors Affecting the Behavioral Intention to Adopt Web-Based Recruitment in Human Resources Departments in Telecommunication Companies in Iraq. Al-Anbar Univ. J. Econ. Adm. Sci. 2022, 14, 404–416.

34. Zheng, K.; Kumar, J.; Kunasekaran, P.; Valeri, M. Role of smart technology use behaviour in enhancing tourist revisit intention: The theory of planned behaviour perspective. Eur. J. Innov. Manag. 2022, ahead of print.

35. Choe, J.Y.; Kim, J.J.; Hwang, J. Innovative robotic restaurants in Korea: Merging a technology acceptance model and theory of planned behaviour. Asian J. Technol. Innov. 2022, 30, 466–489.

36. Li, L. A critical review of technology acceptance literature. Ref. Res. Pap. 2010, 4, 2010.

37. Yuen, K.F.; Wong, Y.D.; Ma, F.; Wang, X. The determinants of public acceptance of autonomous vehicles: An innovation diffusion perspective. J. Clean. Prod. 2020, 270, 121904.

38. Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manag. Sci. 2000, 46, 186–204.

39. Olushola, T.; Abiola, J. The efficacy of technology acceptance model: A review of applicable theoretical models in information technology researches. J. Res. Bus. Manag. 2017, 4, 70–83.

40. Van der Heijden, H. User acceptance of hedonic information systems. MIS Q. 2004, 28, 695–704.

41. Liao, Y.K.; Wu, W.Y.; Le, T.Q.; Phung, T.T.T. The integration of the technology acceptance model and value-based adoption model to study the adoption of e-learning: The moderating role of e-WOM. Sustainability 2022, 14, 815.

42. Venkatesh, V.; Bala, H. Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 2008, 39, 273–315.

43. Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

44. Chang, A. UTAUT and UTAUT 2: A review and agenda for future research. Winners 2012, 13, 10–114.

45. Lowry, P.B.; Gaskin, J.; Twyman, N.; Hammer, B.; Roberts, T. Taking ‘fun and games’ seriously: Proposing the hedonic-motivation system adoption model (HMSAM). J. Assoc. Inf. Syst. 2012, 14, 617–671.

46. Hu, L.; Filieri, R.; Acikgoz, F.; Zollo, L.; Rialti, R. The effect of utilitarian and hedonic motivations on mobile shopping outcomes. A cross-cultural analysis. Int. J. Consum. Stud. 2023, 47, 751–766.

47. Bagozzi, R.P.; Baumgartner, J.; Yi, Y. An investigation into the role of intentions as mediators of the attitude-behavior relationship. J. Econ. Psychol. 1989, 10, 35–62.

48. Eagly, A.H.; Chaiken, S. The Psychology of Attitudes; Harcourt Brace Jovanovich College Publishers: New York, NY, USA, 1993.

49. Mallat, N. Exploring consumer adoption of mobile payments: A qualitative study. J. Strateg. Inf. Syst. 2007, 16, 413–432.

50. Kim, Y.; Park, Y.; Choi, J. A study on the adoption of IoT smart home service: Using Value-based Adoption Model. Total Qual. Manag. Bus. Excell. 2017, 28, 1149–1165.

51. Demiris, G.; Oliver, D.P.; Washington, K.T. Defining and analyzing the problem. In Behavioral Intervention Research in Hospice and Palliative Care: Building an Evidence Base; Academic Press: Cambridge, MA, USA, 2019; pp. 27–39.

52. Reinares-Lara, E.; Olarte-Pascual, C.; Pelegrín-Borondo, J.; Pino, G. Nanoimplants that enhance human capabilities: A cognitive-affective approach to assess individuals’ acceptance of this controversial technology. Psychol. Mark. 2016, 33, 704–712.

53. Pelegrin-Borondo, J.; Reinares-Lara, E.; Olarte-Pascual, C. Assessing the acceptance of technological implants (the cyborg): Evidences and challenges. Comput. Hum. Behav. 2017, 70, 104–112.

54. Reinares-Lara, E.; Olarte-Pascual, C.; Pelegrín-Borondo, J. Do you want to be a cyborg? The moderating effect of ethics on neural implant acceptance. Comput. Hum. Behav. 2018, 85, 43–53.

55. Dragović, M. Factors Affecting RFID Subcutaneous Microchips Usage. In Proceedings of the Sinteza 2019-International Scientific Conference on Information Technology and Data Related Research; Singidunum University: Belgrade, Serbia, 2019; pp. 235–243.

56. Murata, K.; Arias-Oliva, M.; Pelegrín-Borondo, J. Cross-cultural study about cyborg market acceptance: Japan versus Spain. Eur. Res. Manag. Bus. Econ. 2019, 25, 129–137.

57. Boella, N.; Gîrju, D.; Gurviciute, I. To Chip or Not to Chip? Determinants of Human RFID Implant Adoption by Potential Consumers in Sweden & the Influence of the Widespread Adoption of RFID Implants on the Marketing Mix. Master’s Thesis, Lund University, Lund, Sweden, 2019.

58. Gauttier, S. ‘I’ve got you under my skin’: The role of ethical consideration in the (non-) acceptance of insideables in the workplace. Technol. Soc. 2019, 56, 93–108.

59. Pelegrín-Borondo, J.; Arias-Oliva, M.; Murata, K.; Souto-Romero, M. Does ethical judgment determine the decision to become a cyborg? J. Bus. Ethics 2020, 161, 5–17.

60. Žnidaršič, A.; Baggia, A.; Pavlíček, A.; Fischer, J.; Rostański, M.; Werber, B. Are we Ready to Use Microchip Implants? An International Cross-sectional Study. Organizacija 2021, 54, 275–292.

61. Sabogal-Alfaro, G.; Mejía-Perdigón, M.A.; Cataldo, A.; Carvajal, K. Determinants of the intention to use non-medical insertable digital devices: The case of Chile and Colombia. Telemat. Inform. 2021, 60, 101576.

62. Arias-Oliva, M.; Pelegrín-Borondo, J.; Murata, K.; Gauttier, S. Conventional vs. disruptive products: A wearables and insideables acceptance analysis: Understanding emerging technological products. Technol. Anal. Strateg. Manag. 2021, 1–13.

63. Chebolu, R.D. Exploring Factors of Acceptance of Chip Implants in the Human Body. Bachelor’s Thesis, University of Central Florida, Orlando, FL, USA, 2021.

64. Ahadzadeh, A.S.; Wu, S.L.; Lee, K.F.; Ong, F.S.; Deng, R. My perfectionism drives me to be a cyborg: Moderating role of internal locus of control on propensity towards memory implant. Behav. Inf. Technol. 2023, 1–14.

65. Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

66. Boughzala, I. How Generation Y Perceives Social Networking Applications in Corporate Environments. In Integrating Social Media into Business Practice, Applications, Management, and Models; IGI Global: Hershey, PA, USA, 2014; pp. 162–179.

67. Pelegrín-Borondo, J.; Reinares-Lara, E.; Olarte-Pascual, C.; Garcia-Sierra, M. Assessing the moderating effect of the end user in consumer behavior: The acceptance of technological implants to increase innate human capacities. Front. Psychol. 2016, 7, 132.

68. Arias-Oliva, M.; Pelegrín-Borondo, J. Cyborg Acceptance in Healthcare Services: Theoretical Framework. In Paradigm Shifts in ICT Ethics: Proceedings of the ETHICOMP* 2020; Universidad de La Rioja: Logroño, Spain, 2020; pp. 50–55.

69. Nguyen, N.T.; Biderman, M.D. Studying ethical judgments and behavioral intentions using structural equations: Evidence from the multidimensional ethics scale. J. Bus. Ethics 2008, 83, 627–640.

70. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

71. Pramatari, K.; Theotokis, A. Consumer acceptance of RFID-enabled services: A model of multiple attitudes, perceived system characteristics and individual traits. Eur. J. Inf. Syst. 2009, 18, 541–552.

72. Perakslis, C.; Michael, K.; Michael, M.; Gable, R. Perceived barriers for implanting microchips in humans: A transnational study. In Proceedings of the 2014 IEEE Conference on Norbert Wiener in the 21st Century (21CW), Boston, MA, USA, 24–26 June 2014; pp. 1–8.

73. Giger, J.C.; Gaspar, R. A look into future risks: A psychosocial theoretical framework for investigating the intention to practice body hacking. Hum. Behav. Emerg. Technol. 2019, 1, 306–316.

74. Wolbring, G.; Diep, L.; Yumakulov, S.; Ball, N.; Yergens, D. Social robots, brain machine interfaces and neuro/cognitive enhancers: Three emerging science and technology products through the lens of technology acceptance theories, models and frameworks. Technologies 2013, 1, 3–25.

75. Salovaara, A.; Tamminen, S. Acceptance or appropriation? A design-oriented critique of technology acceptance models. In Future Interaction Design II; Springer: Berlin/Heidelberg, Germany, 2009; pp. 157–173.

76. Holden, H.; Rada, R. Understanding the influence of perceived usability and technology self-efficacy on teachers’ technology acceptance. J. Res. Technol. Educ. 2011, 43, 343–367.

77. Card, S.K.; Moran, T.P.; Newell, A. The Psychology of Human-Computer Interaction; CRC Press: Boca Raton, FL, USA, 2018.

78. Martinez, A.P.; Scherer, M.J. Matching Person & Technology (MPT) Model for Technology Selection as well as Determination of Usability and Benefit from Use; Department of Physical Medicine & Rehabilitation, University of Rochester Medical Center: Rochester, NY, USA, 2018; p. 1 3140.

79. Kim, H.W.; Chan, H.C.; Gupta, S. Value-based adoption of mobile internet: An empirical investigation. Decis. Support Syst. 2007, 43, 111–126.

80. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

| Theory/Model | Year | Application Examples |
|---|---|---|
| Uses and gratifications theory (UGT) | 1973 | [25,26] |
| Theory of reasoned action (TRA) | 1975 | [27] |
| Technology acceptance model (TAM) | 1986 | [28,29] |
| Social cognitive theory (SCT) | 1986 | [30,31] |
| Matching person and technology model (MPTM) | 1989 | [32] |
| Model of PC utilization (MPCU) | 1991 | [33] |
| Theory of planned behavior (TPB) | 1991 | [34,35] |
| Motivation model (MM) | 1992 | [36] |
| Combined TAM–TPB | 1995 | [36] |
| Innovation diffusion theory (IDT) | 1995 | [37] |
| Extension of TAM (TAM 2) | 2000 | [38] |
| Unified theory of acceptance and use of technology (UTAUT) | 2003 | [39] |
| Hedonic system adoption model (HSAM) | 2004 | [40] |
| Value-based adoption model (VAM) | 2007 | [41] |
| Technology acceptance model 3 (TAM 3) | 2008 | [42] |
| Extended UTAUT (UTAUT 2) | 2012 | [43,44] |
| Hedonic-motivation system adoption model (HMSAM) | 2013 | [45,46] |

| Study (Year) | Theory/Model | Technology | Population (Size) | Findings |
|---|---|---|---|---|
| [52] (2016) | Extended TAM (cognitive + affective) | Capacity-enhancing nanoimplants | >16 years (n = 600) | The model explained 65.9% of the variance in attitude and 58.4% of the variance in the intention to undergo implantation, with a predictive power of 0.53 for attitude and 0.57 for intention. |
| [53] (2017) | CAN | Capacity-enhancing insideables (being a cyborg) | >16 years (n = 600) | CAN explains 73.9% of the variance in responses and has a predictive power of 0.7160. Affective and normative factors have the greatest influence on acceptance, and positive emotions have the greatest impact. |
| [54] (2018) | CAN | Neural implants | ≥18 years (n = 900) | Ethics moderates the intention to use implants. |
| [8] (2018) | Extended TAM | SM | Unselected population (n = 531) | Therapeutic uses are more acceptable (44%) than enhancement uses (22–35%). |
| [55] (2019) | TAM 2 | SM | Undergraduate students (n = 100) | Lack of trust is a barrier to adoption. |
| [56] (2019) | Ethical awareness, innovativeness, perceived risk | Capacity-enhancing insideables (being a cyborg) | University students in Japan (n = 300) and Spain (n = 286) | Ethical awareness strongly influences cyborg technology acceptance, innovativeness plays a lesser role, and perceived risk has no significant impact. Culture does not affect the results. |
| [57] (2019) | Extended UTAUT 2 | SM | Potential customers (n = 22) and marketing companies | Widespread adoption of the technology would affect marketing activities (the marketing mix). |
| [58] (2019) | Refined TAM | Insideables | Employees in the workplace | Constructs for acceptance and nonacceptance are proposed based on a case study analysis. |
| [1] (2020) | TAM, self-efficacy, IDT, social exchange theory | Embeddables | Online survey, 18–86 years (n = 1063) | Self-efficacy, perceived risk, and privacy concerns explain the adoption of embeddables. |
| [59] (2020) | Extended TAM (using MES) | Capacity-enhancing insideables (being a cyborg) | Online survey of higher education students (n = 1563) | Ethical dimensions explain 48% of the variance in the intention to use cyborg technologies. Egoism is the most influential dimension and contractualism the least. |
| [60] (2021) | Extended TAM | SM | Unselected population (n = 804) | Perceived trust influences privacy and technology safety. Health concerns reduce perceived usefulness. |
| [61] (2021) | UTAUT 2 | Insertables | Undergraduate students (n = 672), Colombia and Chile | Hedonic motivation, social influence (SI), habit, and performance expectancy positively influence use intention in both countries. Habit mediates the relationship between SI and intention. Effort expectancy is significant in Chile. |
| [62] (2021) | UTAUT 2 | Insideables and wearables | Higher education students (n = 1563) | Performance expectancy more strongly influences the adoption of wearables, while social influence plays a greater role in the adoption of insideables. |
| [63] (2021) | Self-determination theory | Implantables | Undergraduate students (n = 111) | Trust in technology and high motivation correlate with technology use; personality traits do not. |
| [10] (2022) | Extended TAM | SM | General public, 18–80 years (n = 179) | Additional determinants of acceptance are proposed. |
| [64] (2023) | Perfectionism and locus of control | Memory implants | University students (n = 686) | Perfectionism traits are positively related to the intention to use memory implants, and internal locus of control (LOC) acts as a moderator. |

| | TAM | CAN | UTAUT |
|---|---|---|---|
| Direct determinants of BIU | Perceived usefulness, perceived ease of use, attitude | Perceived ease of use, perceived usefulness, positive affect, negative affect, anxiety, subjective norms | Performance expectancy, effort expectancy, social influence, facilitating conditions |
| Additional determinants | Perceived trust, privacy concerns, perceived awareness, perceived choice, financial burden, ethical concerns, innovativeness, perceived risk, technology self-efficacy | Ethical concerns | Hedonic motivation, price value, habits, functionality, health concerns, invasiveness, privacy concerns, safety concerns |
| Moderating variables | Age, gender | Culture | Age, experience, gender, culture |
| External variables | Health concerns, misinformation/fake news, conspiracy theory beliefs | - | - |

| Category | Determinant | Definition | Equivalent Concepts | Source |
|---|---|---|---|---|
| | Effort expectancy | The degree of a person’s belief that they will be able to use the technology with ease. | Perceived ease of use | TAM, UTAUT, CAN, [8,10,55,57] |
| | Performance expectancy | The degree of a person’s belief that the technology will augment their work performance. | Perceived usefulness | TAM, UTAUT, CAN, [8,10,55,57] |
| Cognitive | Health concerns | The degree of a person’s belief that using the technology would have a negative impact on their health. | Health | [8,10,55,57] |
| | Perceived trust | The degree of a person’s belief that the technology would be free from harm. | Safety, perceived risk | [1,8,55,57] |
| | Self-efficacy | The degree of an individual’s belief in their ability to carry out a specific task or reach their goals. | - | [1] |
| Affective | Negative affect | The degree to which an individual harbors negative emotions toward an innovation. | Invasiveness | CAN, [57] |
| | Positive affect | The degree to which an individual harbors positive emotions toward an innovation. | - | CAN |
| | Anxiety | The degree to which an individual feels uneasiness, distress, or dread at the idea of being implanted. | Perceived pain | CAN, [1,8,10,55] |
| | *** Hedonism | The amount of fun and pleasure that an individual believes they can derive from using the innovation. | - | UTAUT 2, [57] |
| | Facilitating conditions | The degree to which an individual believes that organizational and technical infrastructure is ready to support the use of an innovation. | Perceived awareness, relatedness | UTAUT, [10,63] |
| Normative | Social influence | The degree of an individual’s belief that family and friends would support their decision to use an innovation. | Subjective (social) norm | UTAUT, CAN, [57] |
| | *** Price value | The degree of an individual’s belief that the value of an innovation justifies its cost. | Financial burden, social exchange theory | UTAUT 2, [10,57,63] |
| Behavioral | Experience and habits | The extent to which people perform behaviors automatically as a result of learning. | - | UTAUT 2, [57] |
| | Motivation | The degree of an individual’s desire to do something to achieve a certain goal. | - | [1] |
| Ethical | Ethical judgment | A subjective process used to decide whether an action is morally correct. | - | [54] |
| | Perceived choice | A subjective process that helps an individual decide whether society preserves their right to choose to be implanted. | Autonomy | [10,63] |
| | Privacy | The ability of a new system to safeguard an individual’s private information. | Technology expectancy | [1,10,57] |
| Technical | Functionality | The amount of useful functionality offered by the innovation that aligns with an individual’s goals. | Technology expectancy, IDT | [1,10,57] |
| | *** Design | The technology’s physical attributes that align with an individual’s goals. | Technology expectancy | [10] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chaudhry, B.M.; Shafeie, S.; Mohamed, M. Theoretical Models for Acceptance of Human Implantable Technologies: A Narrative Review. Informatics 2023, 10, 69. https://doi.org/10.3390/informatics10030069
