A Machine Learning Python-Based Search Engine Optimization Audit Software

Abstract

1. Introduction

2. Search Engine Optimization Overview

2.1. Title Tag

2.2. SEO-Friendly URL

2.3. Alternative Tags and Image Optimization

2.4. Link HREF Alternative Title Tags

2.5. Meta Tags

2.6. Heading Tags

2.7. Minified Static Files

2.8. Sitemap and RSS Feed

2.9. Robots.txt

2.10. Responsive and Mobile-Friendly Design

2.11. Webpage Speed and Loading Time

2.12. SSL Certificates and HTTPS

2.13. Accelerated Mobile Pages (AMP)

2.14. Structured Data

2.15. Open Graph Protocol (OGP)

2.16. Off-Page SEO Techniques

3. Materials and Methods

- Identification of on-page and off-page SEO techniques;

- Development of functions that detect each SEO technique in the source code of a webpage;

- Creation of classes containing SEO techniques;

- Integration of the software with a third-party API for fetching SERPs;

- Integration of the software with third-party APIs for gathering data related to speed, responsive design, and Domain Authority;

- Generation of a dataset from live websites with measurements for Domain Authority, Linking Domains, and Backlinks;

- Training of the Random Forest Regression model to predict Linking Domains and Backlinks based on Domain Authority;

- Evaluation of the model’s results;

- Selection of a high-traffic relevant keyword;

- Collection of ranking data for websites ranking on the first page of SERPs for the specific keyword;

- Competitor analysis using the SEO audit tool;

- Assessment of a live e-commerce site before implementing SEO techniques and keyword targeting;

- Application of the SEO techniques suggested by the software audit tool to the target e-commerce site;

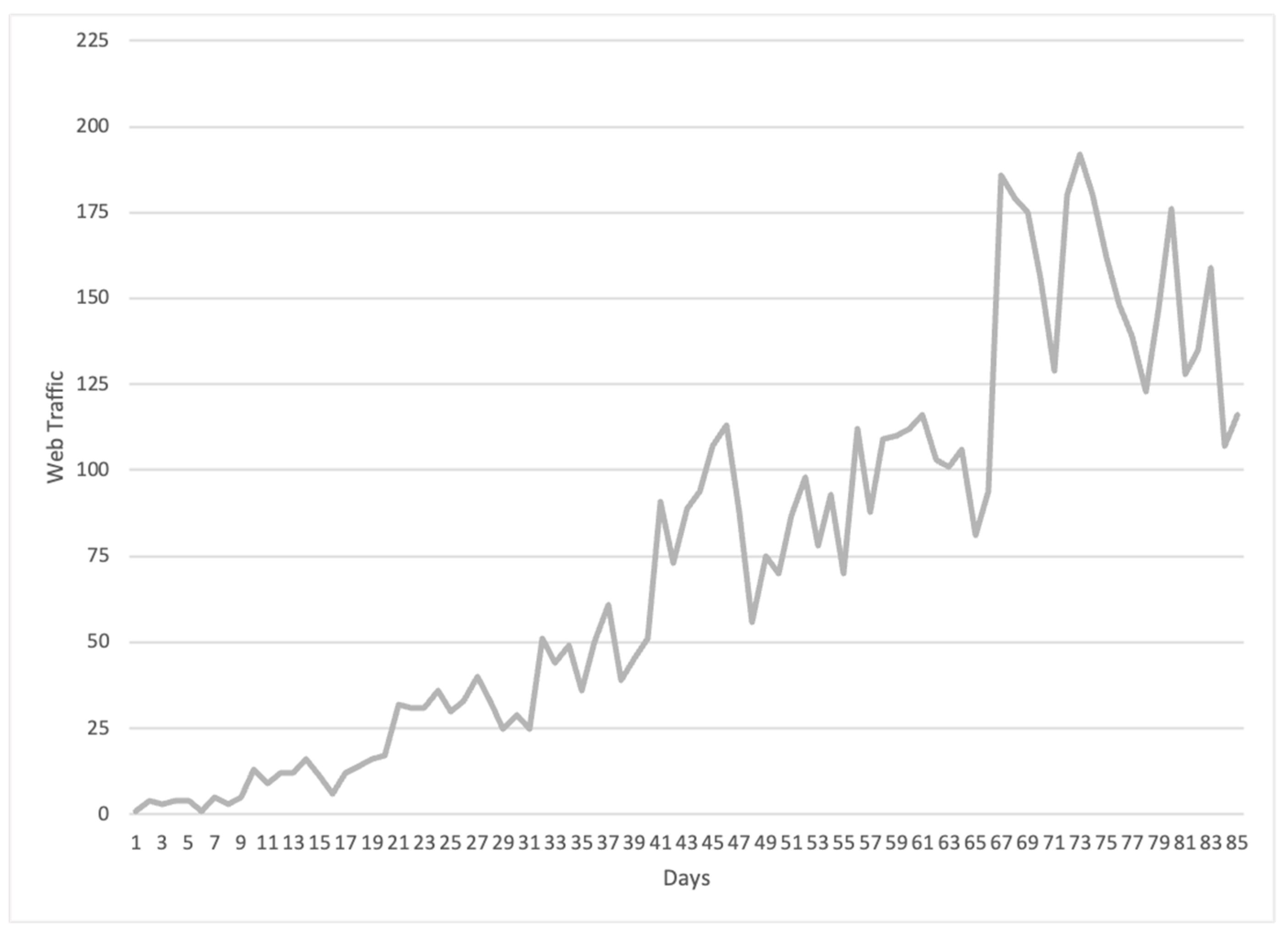

- Collection of ranking data after 85 days of implementing SEO techniques on the targeted e-commerce site;

- Evaluation of traffic results from Google Analytics for the e-commerce site.

3.1. SEO Tool Functionality and APIs

3.1.1. Retrieve Search Engine Results Pages from Google (SERPs)

3.1.2. SEO Techniques Methods and Python Packages

- The requests library is used to make API requests, and the json library converts the JSON responses into Python dictionaries.

- The urllib.parse library is used to parse the URL in the get_robots(URL) function.

- The re library provides the regular expressions used in the code.

- The csv library is used to save the results dictionary to a CSV file.

- The BeautifulSoup library is used for web scraping, allowing the code to extract data from the HTML source of each webpage.

- Finally, the Mozscape library is used to obtain a website’s Domain Authority.
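As an illustration of how urllib.parse can support a get_robots(URL)-style check, the minimal sketch below derives the robots.txt location from any page URL on the same host. The helper names robots_url and has_robots are hypothetical, and the HTTP request is passed in as a function so that the logic runs offline; the tool's actual get_robots implementation may differ.

```python
from typing import Callable
from urllib.parse import urlparse, urlunparse


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL at the root of the page's host."""
    parts = urlparse(page_url)
    # robots.txt always lives at the root path, so keep only scheme and host.
    return urlunparse((parts.scheme, parts.netloc, "/robots.txt", "", "", ""))


def has_robots(page_url: str, fetch_status: Callable[[str], int]) -> bool:
    """Hypothetical check: True if the robots.txt URL answers with HTTP 200.

    fetch_status is injected for offline testing; in practice it could wrap
    requests.get(url, timeout=10).status_code.
    """
    return fetch_status(robots_url(page_url)) == 200
```

For example, robots_url("https://www.example.com/shop/item?id=3#top") yields "https://www.example.com/robots.txt", regardless of the path, query, or fragment of the audited page.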

3.1.3. External APIs

- Mobile-Friendly Test Tool API, developed by Google, is a web-based service that checks a URL for mobile-usability issues. The API assesses the page against responsive design techniques and identifies any problems that could affect users visiting it on a mobile device. The results are returned as a list, allowing targeted optimizations that improve the website’s mobile-friendliness [30].

- PageSpeed Insights API, developed by Google, is a web-based tool that measures the performance of a given web page. It returns an analysis of the page that includes metrics related to page speed, accessibility, and SEO, identifies potential performance issues, and suggests how to address them. This allows website owners to make informed, specific speed optimizations that improve user experience and overall page performance [53].

- Mozscape API, developed by Moz, is a web-based service that provides metrics about a website’s authority and link profile. The API takes a website’s URL as input and returns a range of metrics, including Domain Authority. Domain Authority is a proprietary Moz metric that measures the strength of a website’s overall link profile; it is calculated by an algorithm that considers factors such as the quality and quantity of inbound links, and it is intended for comparing a website’s authority with that of its competitors. The Mozscape API therefore helps website owners and SEO professionals assess their websites and work toward better search engine rankings [47].

- Google SERP API, developed by ZenSerp, is a web-based service for scraping Google search results. It rotates IP addresses to prevent detection and blocking by Google and returns the search results in a structured JSON format, which simplifies data analysis for SEO professionals and website owners who want to understand and improve their rankings [52].

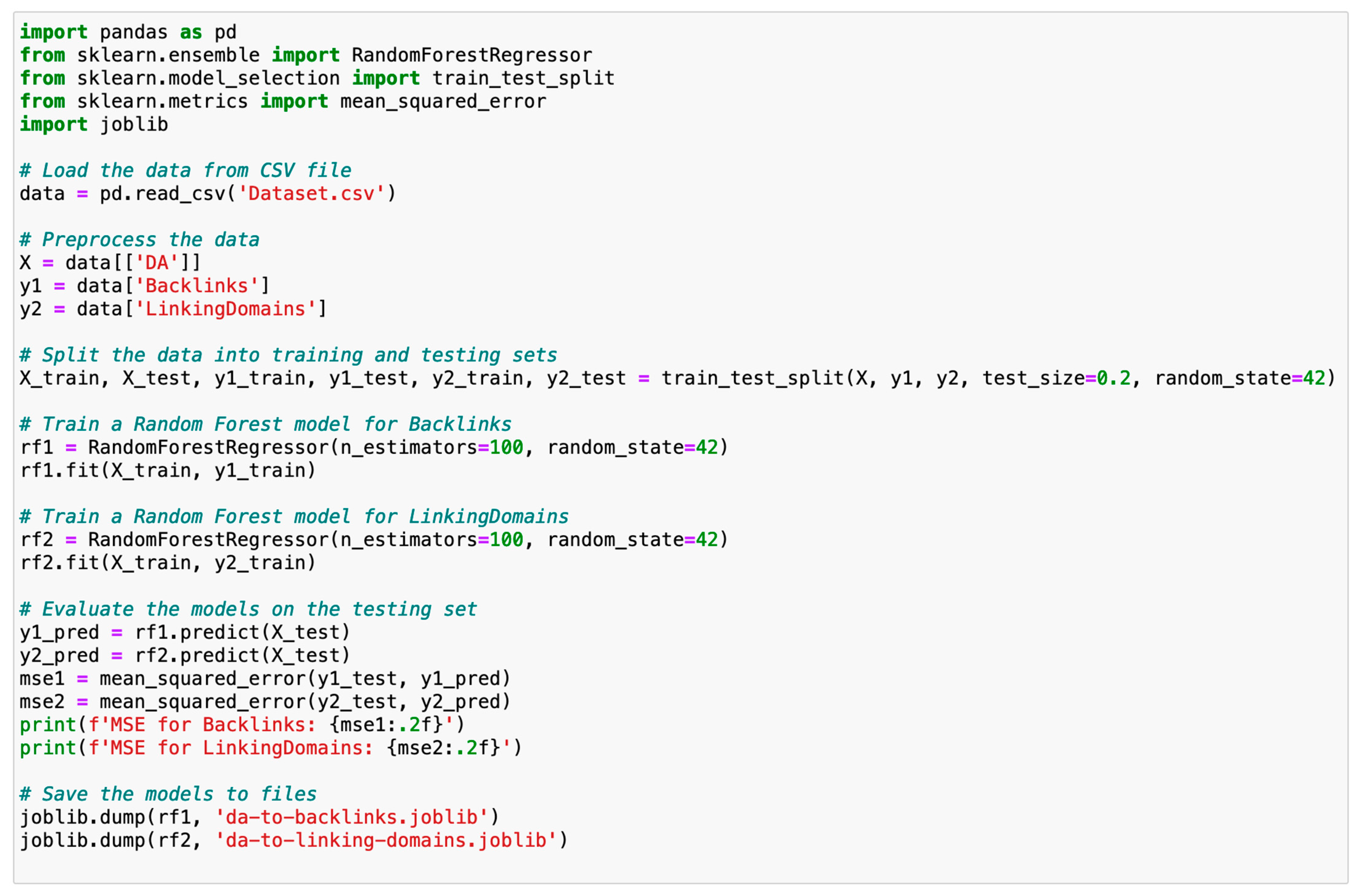
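Because the SERP API returns its results as JSON, extracting the competitor URLs reduces to filtering and ordering a list. The sketch below assumes a ZenSerp-style payload with a top-level "organic" list whose ordinary web results carry "position" and "url" keys; that shape, and the helper name organic_urls, are assumptions for illustration rather than the provider's documented schema or the tool's get_organic_serps code.

```python
import json
from typing import Any, Optional


def organic_urls(serp_json: str, limit: Optional[int] = None) -> list[str]:
    """Return organic result URLs in ranking order from a SERP API JSON payload.

    Assumed payload shape (hypothetical, ZenSerp-style): a top-level "organic"
    list whose ordinary web results carry "position" and "url" keys. Items
    without a "url" (e.g. question boxes) are skipped.
    """
    data: dict[str, Any] = json.loads(serp_json)
    results = [
        item for item in data.get("organic", [])
        if isinstance(item, dict) and item.get("url")
    ]
    # Order by the reported position; items without one go last.
    results.sort(key=lambda item: item.get("position", float("inf")))
    urls = [item["url"] for item in results]
    return urls if limit is None else urls[:limit]
```

In practice the payload would come from an authenticated HTTP request to the provider; the exact endpoint and parameters should be taken from its documentation. Counting the returned URLs also makes the limitation discussed in Section 5.5 visible: when ads, product listings, or rich results crowd the first page, the list can hold fewer than eight entries.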

3.2. Machine Learning

3.2.1. Model Training

- Pandas is a widely used Python library with an extensive set of functions for manipulating and analyzing data, including data frames and series for structured data processing [54]. The software uses pandas to read the data from a CSV file, preprocess it, and create new data frames holding the independent and dependent variables.

- Scikit-learn is a Python machine learning library that offers a diverse set of tools for data analysis and modeling [55]. In this software, scikit-learn is used to train and evaluate the Random Forest Regression models.

- RandomForestRegressor is the scikit-learn class that implements the Random Forest Regression algorithm [55]. The code uses it to train Random Forest Regression models that predict the dependent variables, Backlinks and LinkingDomains, from the independent variable, DA. Random Forest is an ensemble method that combines many decision trees into a single, more robust and accurate model. Random forests can be used for both regression and classification; the regression variant suits the prediction of numerical metrics such as backlinks and linking domains. Because each tree is trained on a different random sample of the data and the trees’ predictions are averaged, random forests are less prone to overfitting than individual decision trees and less likely to learn noise in the data. Their predictions are therefore more stable and less sensitive to fluctuations in the dataset, which is important when working with real-world data.

- train_test_split is a scikit-learn function that partitions the dataset into training and testing sets [55]. It randomly splits the data into two subsets: one used to train the machine learning model and the other used to test its performance.

- The mean_squared_error function in scikit-learn calculates the mean squared error (MSE), i.e., the average squared difference between the actual and predicted values of the dependent variable; a lower MSE indicates a better fit of the model to the data [55]. In the presented software, it is used to evaluate the trained Random Forest Regression models on the testing data.

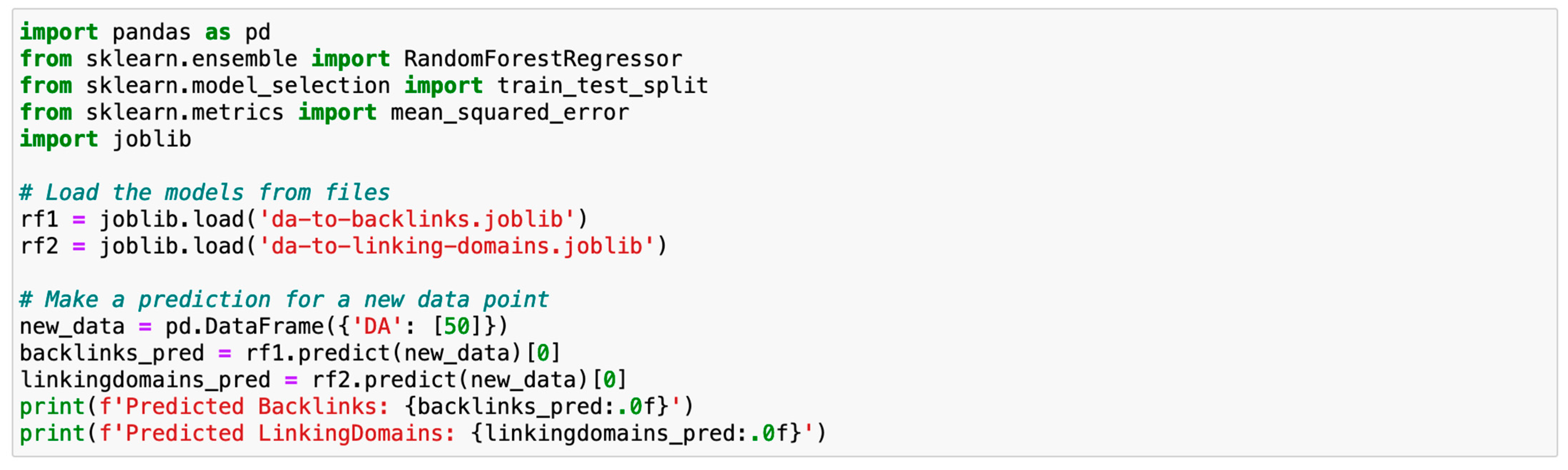

- Joblib is a Python library that provides tools for the efficient serialization and deserialization of Python objects [56]. In this software, it is used to store the trained models on disk and load them later to make predictions on new data. Stored as files, the trained models can be shared and reused in other applications without retraining, which is especially useful for models that are computationally expensive to train.

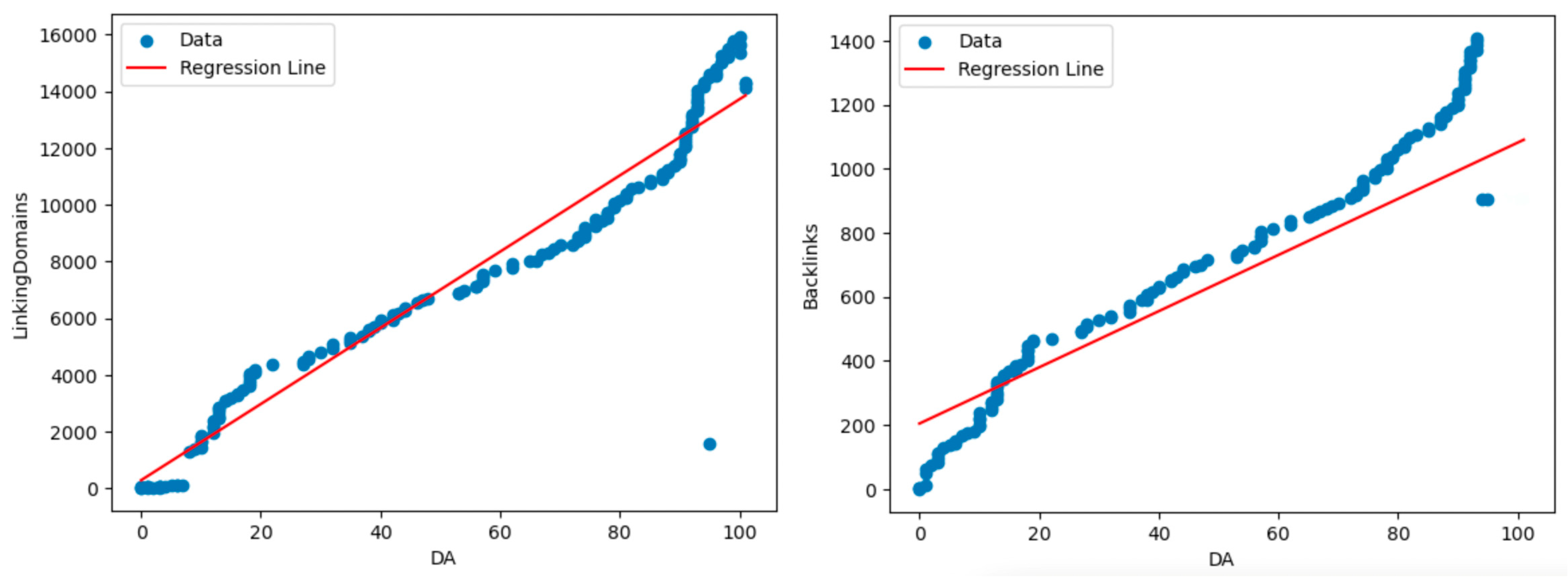

- The results of the regression analysis provide insight into the relationship between the DA and LinkingDomains variables. The p-values associated with the coefficients indicate the statistical significance of each variable in the model. The p-value for “DA” is approximately 8.85 × 10⁻⁹⁸, indicating an extremely high level of statistical significance and a strong relationship between “DA” and “LinkingDomains.” The R-squared value of 0.93 means that approximately 93% of the variation in “LinkingDomains” is accounted for by its linear relationship with “DA,” so the regression model fits the data closely and supports its use for prediction.

- The regression analysis of the relationship between Domain Authority (DA) and Backlinks also yields significant results. The Ordinary Least Squares (OLS) regression model has an R-squared value of 0.630, implying that approximately 63% of the variability in Backlinks is explained by Domain Authority, a weaker fit than for LinkingDomains. The F-statistic of 282.7, with a corresponding p-value of 1.10 × 10⁻³⁷, shows that the model as a whole is statistically significant, although about 37% of the variance in Backlinks remains unexplained.
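The training and evaluation steps described above can be sketched with the same scikit-learn and joblib calls the text names (train_test_split, RandomForestRegressor, mean_squared_error, joblib.dump/load). The study's CSV of DA, Backlinks, and LinkingDomains is not reproduced here, so the data below are synthetic, and the helper train_and_evaluate, its parameters, and the file name are illustrative assumptions. The R-squared is computed with a plain LinearRegression as a stand-in for an OLS summary; the p-values and F-statistic quoted above would require a statistics package such as statsmodels, which is not shown.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def train_and_evaluate(da: np.ndarray, target: np.ndarray, model_path: str) -> dict:
    """Train a Random Forest on DA -> target, report test MSE, and persist the model."""
    X = da.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix
    X_train, X_test, y_train, y_test = train_test_split(
        X, target, test_size=0.2, random_state=42
    )
    forest = RandomForestRegressor(n_estimators=100, random_state=42)
    forest.fit(X_train, y_train)
    mse = mean_squared_error(y_test, forest.predict(X_test))

    # In-sample R-squared of a straight-line fit, the quantity an OLS summary reports.
    r2_linear = LinearRegression().fit(X, target).score(X, target)

    joblib.dump(forest, model_path)  # serialize so later predictions need no retraining
    return {"mse": mse, "r2_linear": r2_linear}


# Synthetic stand-in data (hypothetical), not the study's measurements.
rng = np.random.default_rng(0)
da = rng.integers(1, 60, size=200).astype(float)
linking_domains = 4.0 * da + rng.normal(0.0, 10.0, size=200)

model_path = os.path.join(tempfile.mkdtemp(), "linking_domains_rf.joblib")
metrics = train_and_evaluate(da, linking_domains, model_path)

reloaded = joblib.load(model_path)
predicted = reloaded.predict(np.array([[25.0]]))  # predicted LinkingDomains for DA 25
```

With DA as the only feature, each tree can only learn a step function of DA, so the forest's predictions plateau between observed DA values; this is one reason a larger, more varied sample would help, as Section 5.5 notes.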

3.2.2. Predictions Based on DA

4. Results

5. Discussion

5.1. Importance of Accessibility and Affordability

5.2. Integration of Traditional Programming and Machine Learning

5.3. Micro-Level Insights and Tailored Guidance

5.4. Competitor Analysis and SEO Methodologies

5.5. Limitations and Future Implications

- The tool retrieves data for websites ranking on the first page of search results. If that page contains many Google Ads or Google product listings, or a substantial number of rich results (structured data), fewer than eight web pages may appear in the organic search results.

- The tool relies on web scraping to access each competitor’s website and identify the SEO techniques it employs. If a competitor’s website is unavailable at the time of scraping, or its firewall blocks the tool, no results are displayed for that website.

- The tool relies on the SERP API to gather ranking data, and the data are retrieved from the location of the API’s web server. Search results and rankings may therefore differ slightly from those observed by users in other locations.

- The accuracy of the model’s predictions presented in Section 3.2 could be improved with a larger and more representative sample of websites.

- No user interface (UI) has been developed, so users can view the results only in the terminal or in Excel format.

- Although the software is straightforward to use, running it requires some familiarity with executing Python code.

- As open-source software, it can be customized and extended with additional features, such as a Usability and Security Testing function for predicting Click-Through Rate [60].

- Data privacy: the software runs locally on the user’s computer and stores its results there; only the keywords and URLs sent to the third-party APIs described in Section 3.1.3 leave the user’s system.

- Input validation is basic: user-entered data is assumed to be safe, so no extensive validation is applied to the input.

- Enhanced predictive accuracy: a larger dataset would improve forecasts of the most effective numbers of backlinks and linking domains, contributing to a more successful SEO strategy.

- Discovery of new techniques: unsupervised machine learning could be used to uncover SEO techniques applied by well-established websites that have not yet been publicly documented.

- Adaptation to evolving algorithms: because search engine algorithms change constantly, a robust dataset would let the software adapt and keep its recommended SEO strategies effective.

- Semantic SEO: leveraging semantic search principles to produce content aligned with user intent and context can enhance search visibility.
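The basic input validation mentioned among the limitations could look roughly like the hypothetical helper below, which rejects an empty keyword or a target URL that is not an absolute http(s) address. The function name validate_inputs and the specific checks are assumptions; the tool's own validation is not described in detail.

```python
from urllib.parse import urlparse


def validate_inputs(keyword: str, url: str) -> tuple[str, str]:
    """Hypothetical basic checks on the keyword and target URL entered by the user.

    Raises ValueError for obviously invalid input; no deeper sanitization is attempted.
    """
    keyword = keyword.strip()
    if not keyword:
        raise ValueError("keyword must not be empty")
    parts = urlparse(url.strip())
    # Require an absolute http(s) URL so that scraping and API calls have a host to target.
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {url!r}")
    return keyword, parts.geturl()
```

Checks of this kind catch typing mistakes cheaply while remaining consistent with the assumption that user input is trusted.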

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Method | SEO Technique | Description |
|---|---|---|
| perform_seo_checks | - | The method uses the requests library to obtain the website’s source code and BeautifulSoup with html.parser to parse it. It then calls all the methods that detect SEO techniques and returns their results. |
| get_organic_serps | - | The method uses the ZenSerp application programming interface (API) to retrieve the list of websites on the first page of search results for a given keyword query. |
| get_image_alt | Image Alternative Attribute | The method identifies and flags images from a provided list that are missing the alternative (alt) attribute. |
| get_links_title | Link Title Attribute | The method identifies links from a provided list that are missing the title attribute. |
| get_h_text | Heading 1 and 2 Tags | The method detects and counts the H1 and H2 tags in the source code and then searches these tags for the target keyword. |
| get_title_text | Title Tag | The method checks whether the title tag is present in the source code and whether it contains the target keyword. |
| get_meta_description | Meta Description | The method checks whether the meta description is present in the source code and whether it contains the target keyword. |
| get_meta_opengraph | Open Graph | The method checks whether Open Graph tags are present in the source code and whether they contain the target keyword. |
| get_meta_responsive | Responsive Tag | The method checks whether the viewport tag is present in the source code and whether it contains the target keyword. |
| get_style_list | Minified CSS | The method identifies the stylesheets in the source code and checks whether they are minified. |
| get_script_list | Minified JS | The method identifies the scripts in the source code and checks whether they are minified. |
| get_sitemap | Sitemap | The method identifies the XML sitemap present in the source code. |
| get_rss | RSS | The method identifies the RSS feed present in the source code. |
| get_json_ld | JSON-LD structured data | The method identifies JSON-LD structured data in the source code. |
| get_item_type_flag | Microdata structured data | The method identifies Microdata in the source code. |
| get_rdfa_flag | RDFa structured data | The method identifies RDFa in the source code. |
| get_inline_css_flag | In-line CSS | The method detects in-line CSS in the source code. |
| get_robots | Robots.txt | The method verifies that a robots.txt file exists in the root path of the website. |
| get_gzip | GZip | The method checks the response headers for gzip Content-Encoding. |
| get_web_ssl | SSL Certificates | The method determines whether the webpage is served with a Secure Sockets Layer (SSL) certificate, which ensures that the connection between the user’s browser and the website is encrypted and secure. |
| seo_friendly_url | SEO-Friendly URL | The method examines whether the provided URL follows SEO best practices and guidelines. |
| get_speed | Loading Time | The method uses the Lighthouse API to measure the webpage’s loading time. |
| get_responsive_test | Responsive Design | The method uses the mobileFriendlyTest API to determine whether a webpage is responsive, i.e., renders suitably on different devices and screen sizes. |
| get_da | Domain Authority | The method uses the Moz API to obtain the Domain Authority (DA) of a website. |
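Several of the detection methods in the table above amount to a single pass over the page's HTML. The sketch below approximates a few of them (missing image alt attributes, links without a title attribute, keyword in the title tag, JSON-LD, the viewport tag, and gzip Content-Encoding). It uses Python's standard-library html.parser in place of BeautifulSoup so that it has no third-party dependencies. The class and function names (SeoSignalParser, audit_html, uses_gzip) are hypothetical, and the checks only loosely mirror the tool's methods.

```python
from html.parser import HTMLParser
from typing import Optional


class SeoSignalParser(HTMLParser):
    """Collects a few on-page SEO signals while streaming through raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.images_missing_alt: list[str] = []
        self.links_missing_title: list[str] = []
        self.title_text = ""
        self.has_json_ld = False
        self.has_viewport = False
        self._in_title = False

    def handle_starttag(self, tag: str, attrs: list[tuple[str, Optional[str]]]) -> None:
        a = dict(attrs)
        if tag == "img" and not (a.get("alt") or "").strip():
            # Missing or blank alt attribute: record the image source.
            self.images_missing_alt.append(a.get("src") or "")
        elif tag == "a" and a.get("href") and not (a.get("title") or "").strip():
            self.links_missing_title.append(a["href"])
        elif tag == "title":
            self._in_title = True
        elif tag == "script" and (a.get("type") or "").lower() == "application/ld+json":
            self.has_json_ld = True
        elif tag == "meta" and (a.get("name") or "").lower() == "viewport":
            self.has_viewport = True

    def handle_endtag(self, tag: str) -> None:
        if tag == "title":
            self._in_title = False

    def handle_data(self, data: str) -> None:
        if self._in_title:
            self.title_text += data


def audit_html(html: str, keyword: str) -> dict:
    """Run the parser over a page and summarize the signals it found."""
    parser = SeoSignalParser()
    parser.feed(html)
    parser.close()
    return {
        "images_alt": parser.images_missing_alt,
        "links_title": parser.links_missing_title,
        "title_has_keyword": keyword.lower() in parser.title_text.lower(),
        "json_ld": parser.has_json_ld,
        "responsive": parser.has_viewport,
    }


def uses_gzip(headers: dict[str, str]) -> bool:
    """True if the Content-Encoding response header (matched case-insensitively) mentions gzip."""
    encoding = next((v for k, v in headers.items() if k.lower() == "content-encoding"), "")
    return "gzip" in encoding.lower()
```

The HTML string would normally come from the HTTP response that perform_seo_checks already fetches, and uses_gzip would receive that response's headers.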

Appendix B

References

1. Roumeliotis, K.I.; Tselikas, N.D. An effective SEO techniques and technologies guide-map. J. Web Eng. 2022, 21, 1603–1650.

2. Roumeliotis, K.I.; Tselikas, N.D.; Nasiopoulos, D.K. Airlines’ Sustainability Study Based on Search Engine Optimization Techniques and Technologies. Sustainability 2022, 14, 11225.

3. Matošević, G.; Dobša, J.; Mladenić, D. Using Machine Learning for Web Page Classification in Search Engine Optimization. Future Internet 2021, 13, 9.

4. Webmaster Guidelines, Google Search Central, Google Developers. Available online: https://developers.google.com/search/docs/advanced/guidelines/webmaster-guidelines (accessed on 12 May 2023).

5. Sakas, D.P.; Reklitis, D.P. The Impact of Organic Traffic of Crowdsourcing Platforms on Airlines’ Website Traffic and User Engagement. Sustainability 2021, 13, 8850.

6. Luh, C.-J.; Yang, S.-A.; Huang, T.-L.D. Estimating Google’s search engine ranking function from a search engine optimization perspective. Online Inf. Rev. 2016, 40, 239–255.

7. Bing Webmaster Guidelines. Available online: https://www.bing.com/webmasters/help/webmaster-guidelines-30fba23a (accessed on 8 August 2023).

8. Iqbal, M.; Khalid, M. Search Engine Optimization (SEO): A Study of important key factors in achieving a better Search Engine Result Page (SERP) Position. Sukkur IBA J. Comput. Math. Sci. SJCMS 2022, 6, 1–15.

9. Ziakis, C.; Vlachopoulou, M.; Kyrkoudis, T.; Karagkiozidou, M. Important Factors for Improving Google Search Rank. Future Internet 2019, 11, 32.

10. Saura, J.R.; Reyes-Menendez, A.; Van Nostrand, C. Does SEO Matter for Startups? Identifying Insights from UGC Twitter Communities. Informatics 2020, 7, 47.

11. Patil, V.M.; Patil, A.V. SEO: On-Page + Off-Page Analysis. In Proceedings of the International Conference on Information, Communication, Engineering and Technology (ICICET), Pune, India, 29–31 August 2018.

12. Santos Gonçalves, T.; Ivars-Nicolás, B.; Martínez-Cano, F.J. Mobile Applications Accessibility: An Evaluation of the Local Portuguese Press. Informatics 2021, 8, 52.

13. Roumeliotis, K.I.; Tselikas, N.D. Evaluating Progressive Web App Accessibility for People with Disabilities. Network 2022, 2, 350–369.

14. Kumar, G.; Paul, R.K. Literature Review on On-Page & Off-Page SEO for Ranking Purpose. United Int. J. Res. Technol. UIJRT 2020, 1, 30–34.

15. Wang, F.; Li, Y.; Zhang, Y. An empirical study on the search engine optimization technique and its outcomes. In Proceedings of the 2nd International Conference on Artificial Intelligence, Management Science and Electronic Commerce (AIMSEC), Zhengzhou, China, 8–10 August 2011.

16. (Meta) Title Tags + Title Length Checker [2021 SEO] – Moz. Available online: https://moz.com/learn/seo/title-tag (accessed on 12 May 2023).

17. Van, T.L.; Minh, D.P.; Le Dinh, T. Identification of paths and parameters in RESTful URLs for the detection of web Attacks. In Proceedings of the 4th NAFOSTED Conference on Information and Computer Science, Hanoi, Vietnam, 24–25 November 2017.

18. Rovira, C.; Codina, L.; Lopezosa, C. Language Bias in the Google Scholar Ranking Algorithm. Future Internet 2021, 13, 31.

19. Roumeliotis, K.I.; Tselikas, N.D. Search Engine Optimization Techniques: The Story of an Old-Fashioned Website. In Proceedings of the Business Intelligence and Modelling, IC-BIM 2019, Paris, France, 12–14 September 2019; Springer Book Series in Business and Economics; Springer: Cham, Switzerland, 2019.

20. URL Structure [2021 SEO] – Moz SEO Learning Center. Available online: https://moz.com/learn/seo/url (accessed on 12 May 2023).

21. An Image Format for the Web | WebP | Google Developers. Available online: https://developers.google.com/speed/webp (accessed on 5 May 2022).

22. Zhou, H.; Qin, S.; Liu, J.; Chen, J. Study on Website Search Engine Optimization. In Proceedings of the International Conference on Computer Science and Service System, Nanjing, China, 11–13 August 2012.

23. Zhang, S.; Cabage, N. Does SEO Matter? Increasing Classroom Blog Visibility through Search Engine Optimization. In Proceedings of the 47th Hawaii International Conference on System Sciences, Wailea, HI, USA, 7–10 January 2013.

24. All Standards and Drafts – W3C. Available online: https://www.w3.org/TR/ (accessed on 12 May 2023).

25. Shroff, P.H.; Chaudhary, S.R. Critical rendering path optimizations to reduce the web page loading time. In Proceedings of the 2nd International Conference for Convergence in Technology (I2CT), Mumbai, India, 7–9 April 2017.

26. Tran, H.; Tran, N.; Nguyen, S.; Nguyen, H.; Nguyen, T.N. Recovering Variable Names for Minified Code with Usage Contexts. In Proceedings of the IEEE/ACM 41st International Conference on Software Engineering (ICSE), Montreal, QC, Canada, 25–31 May 2019.

27. Ma, D. Offering RSS Feeds: Does It Help to Gain Competitive Advantage? In Proceedings of the 42nd Hawaii International Conference on System Sciences, Waikoloa, HI, USA, 5–8 January 2009.

28. Gudivada, V.N.; Rao, D.; Paris, J. Understanding Search-Engine Optimization. Computer 2015, 48, 43–52.

29. Percentage of Mobile Device Website Traffic Worldwide. Available online: https://www.statista.com/statistics/277125/share-of-website-traffic-coming-from-mobile-devices/ (accessed on 8 August 2023).

30. Mobile-Friendly Test Tool. Available online: https://search.google.com/test/mobile-friendly (accessed on 12 May 2023).

31. MdSaidul, H.; Abeer, A.; Angelika, M.; Prasad, P.W.C.; Amr, E. Comprehensive Search Engine Optimization Model for Commercial Websites: Surgeon’s Website in Sydney. J. Softw. 2018, 13, 43–56.

32. Xilogianni, C.; Doukas, F.-R.; Drivas, I.C.; Kouis, D. Speed Matters: What to Prioritize in Optimization for Faster Websites. Analytics 2022, 1, 175–192.

33. Kaur, S.; Kaur, K.; Kaur, P. An Empirical Performance Evaluation of Universities Website. Int. J. Comput. Appl. 2016, 146, 10–16.

34. Google Lighthouse. Available online: https://developers.google.com/web/tools/lighthouse (accessed on 12 May 2023).

35. Pingdom Website Speed Test. Available online: https://tools.pingdom.com/ (accessed on 12 May 2023).

36. Google Chrome Help. Available online: https://support.google.com/chrome/answer/95617?hl=en (accessed on 12 May 2023).

37. Jun, B.; Bustamante, F.; Whang, S.; Bischof, Z. AMP up your Mobile Web Experience: Characterizing the Impact of Google’s Accelerated Mobile Project. In Proceedings of the MobiCom’19: The 25th Annual International Conference on Mobile Computing and Networking, Los Cabos, Mexico, 21–25 October 2019.

38. Roumeliotis, K.I.; Tselikas, N.D. Accelerated Mobile Pages: A Comparative Study. In Proceedings of the Business Intelligence and Modelling IC-BIM 2019, Paris, France, 12–14 September 2019; Springer Book Series in Business and Economics; Springer: Cham, Switzerland, 2019.

39. Start Building Websites with AMP. Available online: https://amp.dev/documentation/ (accessed on 12 May 2023).

40. Phokeer, A.; Chavula, J.; Johnson, D.; Densmore, M.; Tyson, G.; Sathiaseelan, A.; Feamster, N. On the potential of Google AMP to promote local content in developing regions. In Proceedings of the 11th International Conference on Communication Systems & Networks (COMSNETS), Bengaluru, India, 7–11 January 2019; pp. 80–87.

41. Welcome to Schema.org. Available online: https://schema.org/ (accessed on 12 May 2023).

42. Guha, R.; Brickley, D.; MacBeth, S. Schema.org: Evolution of Structured Data on the Web: Big data makes common schemas even more necessary. Queue 2015, 13, 10–37.

43. Navarrete, R.; Lujan-Mora, S. Microdata with Schema vocabulary: Improvement search results visualization of open eductional resources. In Proceedings of the 13th Iberian Conference on Information Systems and Technologies (CISTI), Caceres, Spain, 13–16 June 2018.

44. Navarrete, R.; Luján-Mora, S. Use of embedded markup for semantic annotations in e-government and e-education websites. In Proceedings of the Fourth International Conference on eDemocracy & eGovernment (ICEDEG), Quito, Ecuador, 19–21 April 2017.

45. The Open Graph Protocol. Available online: https://ogp.me/ (accessed on 12 May 2023).

46. Brin, S.; Page, L. Reprint of: The anatomy of a large-scale hypertextual web search engine. Comput. Netw. 2012, 56, 3825–3833.

47. Mozscape API. Available online: https://moz.com/products/api (accessed on 12 May 2023).

48. Vyas, C. Evaluating state tourism websites using search engine optimization tools. Tour. Manag. 2019, 73, 64–70.

49. Mavridis, T.; Symeonidis, A.L. Identifying valid search engine ranking factors in a web 2.0 and web 3.0 context for building efficient Seo Mechanisms. Eng. Appl. Artif. Intell. 2015, 41, 75–91.

50. SEO Audit Software. Available online: https://github.com/rkonstadinos/python-based-seo-audit-tool (accessed on 12 May 2023).

51. Free Proxy Python Package. Available online: https://pypi.org/project/free-proxy/ (accessed on 18 August 2023).

52. ZenSerp API. Available online: https://zenserp.com/ (accessed on 12 May 2023).

53. Pagespeedapi Runpagespeed. Available online: https://developers.google.com/speed/docs/insights/v4/reference/pagespeedapi/runpagespeed (accessed on 12 May 2023).

54. McKinney, W. Data Structures for Statistical Computing in Python. Proc. Python Sci. Conf. 2010, 9, 56–61.

55. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Vanderplas, J. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

56. Joblib Development Team. Joblib: Running Python Functions as Pipeline Jobs. 2019. Available online: https://joblib.readthedocs.io/en/latest/ (accessed on 12 May 2023).

57. Seositecheckup. Available online: https://seositecheckup.com/ (accessed on 18 August 2023).

58. Seobility. Available online: https://www.seobility.net/en/seocheck/ (accessed on 18 August 2023).

59. Ahrefs Backlink Checker. Available online: https://ahrefs.com/backlink-checker (accessed on 18 August 2023).

60. Damaševičius, R.; Zailskaitė-Jakštė, L. Usability and Security Testing of Online Links: A Framework for Click-Through Rate Prediction Using Deep Learning. Electronics 2022, 11, 400.

| SEO Techniques/Page | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | U |
|---|---|---|---|---|---|---|---|---|---|
| position | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 0 |
| amp (Section 2.13.) |  |  |  |  |  |  |  |  |  |
| images_alt (Section 2.3.) | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |  |  |
| links_title (Section 2.4.) | ✓ | ✓ |  |  |  |  |  |  |  |
| heading1 (Section 2.6.) |  |  |  |  |  |  |  |  |  |
| heading2 (Section 2.6.) |  |  |  |  |  |  |  |  |  |
| title (Section 2.1.) |  |  |  |  |  |  |  |  |  |
| meta_description (Section 2.5.) |  |  |  |  |  |  |  |  |  |
| opengraph (Section 2.15.) | ✓ | ✓ | ✓ |  |  |  |  |  |  |
| style (Section 2.7.) | ✓ | ✓ |  |  |  |  |  |  |  |
| sitemap (Section 2.8.) |  |  |  |  |  |  |  |  |  |
| rss (Section 2.8.) |  |  |  |  |  |  |  |  |  |
| script (Section 2.7.) | ✓ | ✓ |  |  |  |  |  |  |  |
| json_ld (Section 2.14.) | ✓ | ✓ | ✓ |  |  |  |  |  |  |
| inline_css | ✓ | ✓ |  |  |  |  |  |  |  |
| microdata (Section 2.14.) | ✓ |  |  |  |  |  |  |  |  |
| rdfa (Section 2.14.) |  |  |  |  |  |  |  |  |  |
| robots (Section 2.9.) | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |  |  |
| gzip (Section 2.11.) | ✓ | ✓ | ✓ | ✓ |  |  |  |  |  |
| web_ssl (Section 2.12.) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |  |
| seo_friendly_url (Section 2.2.) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| speed (Section 2.11.) | 2.9 s | 1.4 s | 1.5 s | 0.4 s | 3.8 s | 1.7 s | 1.9 s | 1.3 s | 2.8 s |
| responsive (Section 2.10.) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| da (Section 2.16.) | 10 | 10 | 20 | 16 | 19 | 5 | 18 | 23 | 4 |
| backlinks (Section 2.16.) | 1191 | 1191 | 17,324 | 2908 | 17,324 | 6 | 3261 | 6054 | 4 |
| linking_domains (Section 2.16.) | 84 | 84 | 211 | 119 | 211 | 4 | 214 | 183 | 3 |

| Missing SEO Technique | Comments | SEO Rules |
|---|---|---|
| gzip | It is suggested to use the [gzip] SEO technique, which the majority of the competition have also applied. |  |
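The gzip suggestion above rests on comparing the audited page with its competitors. A rule of that kind could be sketched as follows: a technique is suggested when the audited page lacks it but more than a given share of competitors apply it. The function name suggest_missing, its dictionary inputs, and the default threshold of one half (a strict majority) are assumptions for illustration, not the tool's documented logic.

```python
def suggest_missing(
    user_page: dict[str, bool],
    competitors: list[dict[str, bool]],
    min_share: float = 0.5,
) -> list[str]:
    """Return techniques absent from the user's page but applied by more than min_share of competitors."""
    if not competitors:
        return []
    suggestions = []
    for technique, applied in user_page.items():
        if applied:
            continue  # already implemented on the audited page
        adopters = sum(1 for page in competitors if page.get(technique, False))
        if adopters / len(competitors) > min_share:
            suggestions.append(technique)
    return suggestions
```

Raising min_share makes the recommendations more conservative, while lowering it surfaces techniques that only a minority of competitors use.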

| Missing SEO Technique | Comments | Data | SEO Rules |
|---|---|---|---|
| links_title | The links on the WebPage are missing title tags. It is recommended to add title tags to all links for better user experience and SEO optimization. | {'/service/xondriki-polisi': '', 'product/bazo-130-mL-451': '', 'product/bazaki-me-fello-524': '', 'product/bazaki-me-fello': '', 'product/bazo-130mL-396': '', 'product/lampada-gamou-f12cm-20-cm': '', 'product/pasxalini-lampada-strogguli-krakele-mob': '', 'product/pasxalini-lampada-strogguli-krakele-prasini': '', 'product/lampada-gamou-tetragoni-masif-12cm12cm-22cm': '', 'product/parafinelaio': '', 'product/pasxalini-lampada-plake-xusti-sapio-milo': '', 'product/ekklisiastiko-keri-n1': '', 'product/kormos-apo-keri-f9cm95cm-455': '', 'product/parafini': '', 'product/keri-citronella-n1': '', 'product/premium-futika-keria-me-kapaki': '', 'product/potiri-vintage': '', 'product/potiri-stroggulo': '', 'product/kormos-apo-keri-9cm-9-cm-15cm': '', 'product/bazaki-me-fello-323': '', 'product/antipagotiko-keri': '', 'product/pasxalini-lampada-strogguli-mob': '', 'product/pasxalini-lampada-plake-krakele-prasini': '', 'product/lampada-baptisis-roz': '', 'product/akatergasto-prosanamma': '', 'product/pasxalini-lampada-strogguli-xusti-galazia': '', 'product/premium-futika-keria-me-kapaki-418': '', 'product/pasxalini-lampada-plake-xusti-galazia': '', 'product/pasxalini-lampada-plake-galazia': '', 'product/lampada-baptisis-galazia': '', 'product/set-kormon-apo-keri-339': '', 'product/ekklisiastiko-keri-n1-500gr': '', 'product/potiri-funky-xalkino': '', 'product/potiri-koukounara': ''} |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Roumeliotis, K.I.; Tselikas, N.D. A Machine Learning Python-Based Search Engine Optimization Audit Software. Informatics 2023, 10, 68. https://doi.org/10.3390/informatics10030068
