1. Introduction and Motivation

According to the Insurance Information Institute (

https://www.iii.org/publications/2021-insurance-fact-book/life-health-financial-data/payouts (accessed on 3 March 2021)), the life insurance industry paid a total of nearly

$76 billion as death benefits in 2019. Life insurance is in the business of providing a benefit in the event of premature death, something that is understandably difficult to predict with certainty. Claims arising from mortality are not surprisingly the largest cash flow item that affects both the income statement and the balance sheet of a life insurer. Life insurance contracts are generally considered long term, where the promised benefit could be unused for an extended period of time before being realized. In effect, not only do life insurers pay out death claims in aggregate on a periodic basis; they are also obligated to have sufficient assets set aside as reserves to fulfill this long term obligation. See

Dickson et al. (

2013).

Every life insurer must have in place a systematic process of tracking and monitoring its death claims experience. This tracking and monitoring system is an important risk management tool. It should involve not only identifying statistically significant deviations of actual to expected experience, but also be able to understand and explain the effects of patterns. Such deviations might be considered normal patterns of deviation that are anomalies for short durations, while of more considerable importance are deviations considered to follow a trend for longer durations.

Prior to sale, insurance companies exercise underwriting to identify the degrees of mortality risk to applicants. As a consequence, there is a selection effect on the underlying mortality of life insurance policyholders; normally, the mortality of policyholders is considered better than the general population. However, this mortality selection wears off over time, and in spite of this selection, it is undeniably important for a life insurance company to have a monitoring system.

Vadiveloo et al. (

2014) listed some of these benefits and we reiterate their importance again as follows:

A tracking and monitoring system is a risk management tool that can assist insurers to take the actions necessary to mitigate the economic impact of mortality deviations.

It is a tool for improved understanding of the emergence of death claims experience, thereby helping an insurer in product design, underwriting, marketing, pricing, reserving, and financial planning.

It provides a proactive tool for dealing with regulators, credit analysts, investors, and rating agencies who may be interested in reasons for any volatility in earnings as a result of death claim fluctuations.

A better understanding of the company’s emergence of death claims experience helps to improve its claims predictive models.

The results of a tracking and monitoring system provide the company a benchmark for its death claims experience that can be relatively compared with that of other companies in the industry.

Despite these apparent benefits, some insurers may still not have a systematic process of tracking and monitoring death claims. Such a process clearly requires a meticulous investigation of historical death claims experience. In this article, we explore the use of data clustering to examine and understand how actual death claims differ from expected ones. By naturally subdividing the policyholders into clusters, this process of exploration through data clustering will provide us a better understanding of the characteristics of the life insurance portfolio according to their historical claims experience. This can be important in the early stage of monitoring death claims and subsequent management of portfolio risks for the life insurer.

As information stored in data grows rapidly in the modern world, several industries, including the insurance industry, have started to implement practices to analyze datasets and to draw meaningful results for more effective decision making. The magnitude and scale of information from these datasets continue to increase at a rapid pace, and so does the ease of access. Data analytics have become an important function in every organization, and how to deal with huge data sets has become an important issue. In many instances, information comes in unstructured forms so that unsupervised learning methods are instituted for preliminary investigation and examination.

The most commonly used unsupervised learning technique is cluster analysis. It involves partitioning observations into groups or clusters where observations within each cluster are optimally similar, while at the same time, observations between clusters are optimally dissimilar. Among many clustering algorithms developed in the past few decades, the

k-means clustering algorithm (

MacQueen (

1967)) is perhaps the simplest, most straightforward, and most popular method that efficiently partitions the data set into

k clusters. With

k initial arbitrarily centroid set, the

k-means algorithm finds the locally optimal solutions by gradually minimizing the clustering error calculated according to numerical attributes. While the technique has been applied in several disciplines, (

Thiprungsri and Vasarhelyi (

2011);

Sfyridis and Agnolucci (

2020);

Jang et al. (

2019)), there is less related work in life insurance.

Devale and Kulkarni (

2012) suggests the use of

k-means to identify population segments to increase customer base. Different methods of clustering were employed to select representative policies when building predictive models in the valuation of large portfolios of variable annuity contracts (

Gan (

2013);

Gan and Valdez (

2016,

2020)).

For practical implementation, the algorithm has drawbacks that present challenges with our life insurance dataset: (i) it is particularly sensitive to the initial cluster assignment which is randomly picked, and (ii) it is unable to handle categorical attributes. While the

k-prototype clustering is lesser known, it provides the advantage of being able to handle mixed data types, including numerical and categorical attributes. For numerical attributes, the distance measure used may still be based on Euclidean. For categorical attributes, the distance measure used is based on the number of matching categories. The

k-prototype algorithm is also regarded as more efficient than other clustering methods (

Gan et al. (

2007)). For instance, in hierarchical clustering, the optimization requires repeated calculations of very high-dimensional distance matrices.

This paper extends the use of the

k-prototype algorithm proposed by

Huang (

1997) to provide insights into and draw inferences from a real-life dataset of death claims experience obtained from a portfolio of contracts of a life insurance company. The

k-prototype algorithm has been applied in marketing for segmenting customers to better understand product demands

Hsu and Chen (

2007) and in medical statistics for understanding hospital care practices

Najjar et al. (

2014). This algorithm integrates the procedures of

k-means and

k-modes to efficiently cluster datasets that contain, as said earlier, numerical and categorical variables; the nature of our data, however, contains a geospatial variable. The

k-means can only handle numerical attributes while the

k-modes can only handle categorical attributes. We therefore improve the

k-prototype clustering by adding a distance measurement to the cost function so that it can also deal with the geodetic distance between latitude–longitude spatial data points. The latitude is a numerical measure of the distance of a location from far north or south of the equator; longitude is a numerical measure of the distance of a location from east-west of the “meridians”. Some work related to geospatial data clustering can be found in the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) (

Ester et al. 1996) and in ontology (

Wang et al. 2010). The addition of spatial data points in clustering gives us the following advantages: (i) we are able to make full use of available information in our dataset; (ii) we can implicitly account for possible neighborhood effect of mortality; and (iii) we provide additional novelty and insights into the applications.

Our empirical data have been drawn from the life insurance portfolio of a major insurer and contain observations of approximately 1.14 million policies with a total insured amount of over 650 billion dollars. Using our empirical data, we applied the

k-prototype algorithm that ultimately yielded three optimal clusters determined using the concept of gap statistics. Shown to be an effective method for determining the optimal number of clusters, the gap statistic is based on evaluating “the change in within-cluster dispersion with that expected under an appropriate reference null distribution” (

Tibshirani et al. 2001).

To provide further insights into the death claims experience of our life insurance data set, we compared the aggregated actual to expected deaths for each of the optimal clusters. For a life insurance contract, it is most sensible to measure the magnitude of deaths based on the face amount, and thus, we computed the ratio of the aggregated actual face amounts of those who died to the face amounts of expected deaths for each optimal cluster. Under some mild regularity conditions, necessary to prove normality, we were able to construct statistical confidence intervals of the ratio on each of the clusters, thereby allowing us to draw inferences as to the significant statistical deviations of the mortality experience for each of the optimal clusters. We provide details of the proofs for the asymptotic development of these confidence intervals in

Appendix A. Each cluster showed different patterns of mortality deviation and we can deduce the dominant characteristics of the policies from this cluster-based analysis. The motivation was to assist the life insurance company in gaining some better understanding of potential favorable and unfavorable clusters.

The rest of this paper is organized as follows. In

Section 2, we briefly describe the real data set from an insurance company, including the data elements and the preprocessing of the data in preparation of cluster analysis. In

Section 3, we provide details of the

k-prototype clustering algorithm and discuss how the balance weight parameter is estimated and how to choose the optimal number of clusters. In

Section 4, we present the clustering results together with interpretation. In

Section 5, we discuss their implications and applications to monitoring the company’s death claims experience. We conclude in

Section 6.

2. Empirical Data

We illustrate

k-prototype clustering algorithm based on the data set we obtained from an insurance company. This data set contains 1,137,857 life insurance policies issued in the third quarter of 2014. Each policy is described by 8 attributes with 5 categorical and 2 numerical data elements, and longitude-latitude coordinates.

Table 1 shows the description and basic summary statistics of each variable.

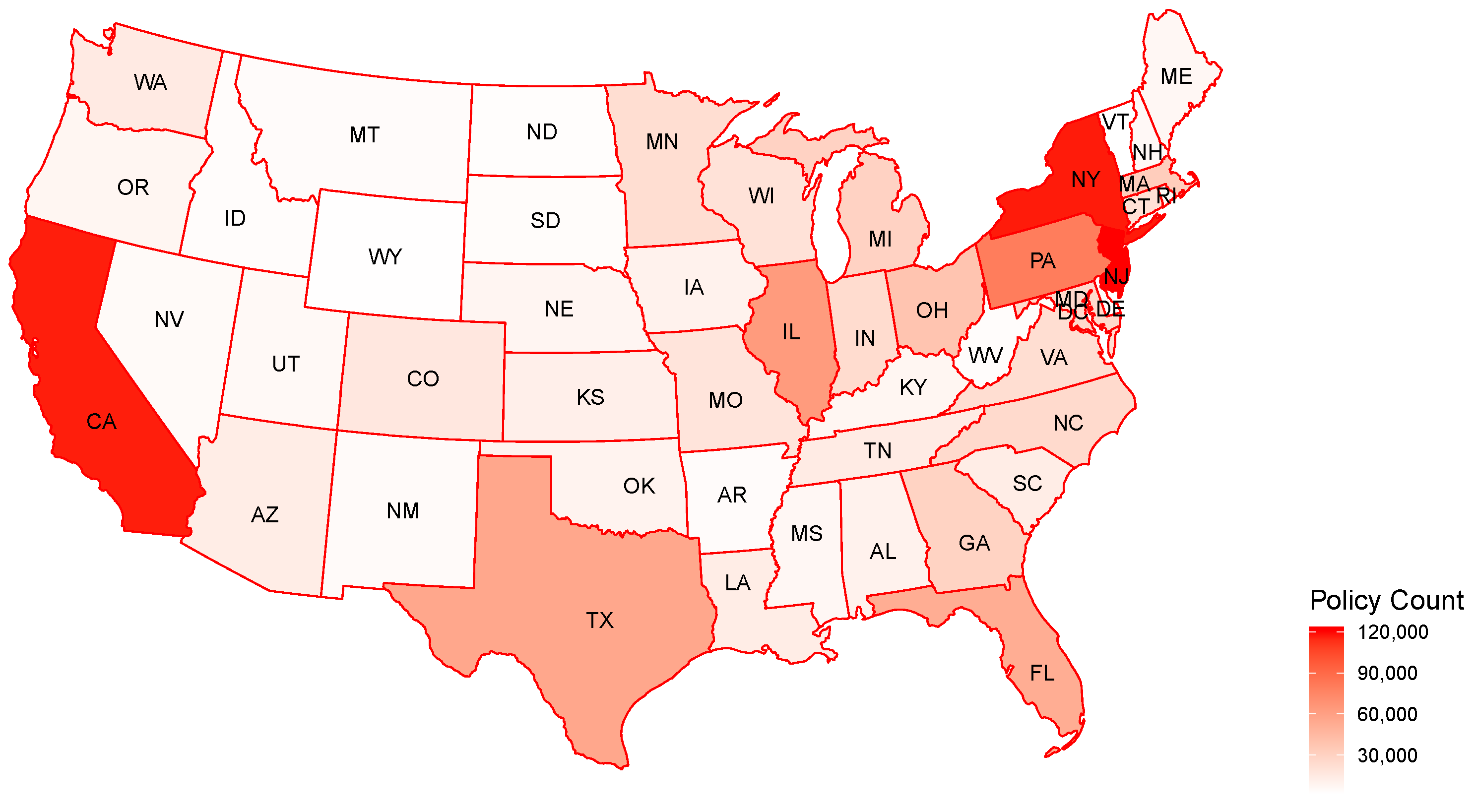

Figure 1 provides a visualization of the distribution of the policies across the states. We only kept the policies issued in the continental United States, and therefore, excluded the policies issued in Alaska, Hawaii, and Guam. First, the frequency the latter policies observed from these states is not materially plentiful. Second, since those states or territories are outside the mainland United States, geodetic measurements are distorted and clustering results may become less meaningful. The saturated color indicates a high frequency of the policy distributed in a particular state. The distribution of the policy count is highly skewed, with New York, New Jersey, California, and Pennsylvania having significantly more insureds than other states. The spatial attributes are represented by latitude and longitude coordinate pairs.

The insured’s sex indicator, gender, is also a discrete variable with 2 levels, female and male, with the number of males being almost twice that of females. Smoker status indicates the insured’s smoking status with nonsmokers and the remaining smokers. The variable underwriting type reflects two types of underwriting: of the policies were fully underwritten at issue while the remaining were term conversions. Term conversions refer to those policies originally with a fixed maturity (or term) that were converted into permanent policies at a later date, without any additional underwriting. The variable “substandard indicator” indicates whether policy has been issued as substandard or not. Substandard policies are issued after an underwriting is performed that have expected mortality worse than standard policies. Substandard policies come with an extra premium. In our dataset, there are about policies considered substandard and the remaining are standard. The variable plan has three levels: the term insurance plan (term), universal life with secondary guarantees (ULS), and variable life with secondary guarantees (VLS).

In our dataset, there are two continuous variables. The variable “issue age” refers to the policyholder’s age at the time of issuing; the range of issue ages is from as young as a newborn to as old as 90 years, with an average of about 44 years old. The variable “face amount” refers to the sum insured, either fixed at policy issuing or accumulated to this level at the most recent time of valuation. As is common with data clustering, we standardized these two continuous variables by rescaling the values in order to be in the range of

. The general formula used in our normalization is

where

x is the original value and

is the standardized (or normalized) value. This method is usually more robust than other normalization formulas. However, for the variable face amount, we found few extreme values that may be further distorting the spread or range of possible values. To fix this additional concern, we take the logarithm of the original values before applying the normalization formula:

3. Data Clustering Algorithms

Data clustering refers to the process of dividing a set of objects into homogeneous groups or clusters (

Gan 2011;

Gan et al. 2007) using some similarity criterion. Objects in the same cluster are more similar to each other than to objects from other clusters. Data clustering is an unsupervised learning process and is often used as a preliminary step for data analytics. In bioinformatics, for example, data clustering is used to identify the patterns hidden in gene expression data (

MacCuish and MacCuish 2010). In big data analytics, data clustering is used to produce a good quality of clusters or summaries for big data to address the storage and analytical issues (

Fahad et al. 2014). In actuarial science, data clustering is also used to select representative insurance policies from a large pool of policies in order to build predictive models (

Gan 2013;

Gan and Lin 2015;

Gan and Valdez 2016).

Figure 2 shows a typical clustering process described in

Jain et al. (

1999). The clustering process consists of five major steps: pattern representation, dissimilarity measure definition, clustering, data abstraction, and output assessment. In the pattern representation step, the task is to determine the number and type of the attributes of the objects to be clustered. In this step, we may extract, select, and transform features to identify the most effective subset of the original attributes to use in clustering. In the dissimilarity measure definition step, we select a distance measure that is appropriate to the data domain. In the clustering step, we apply a clustering algorithm to divide the data into a number of meaningful clusters. In the data abstraction step, we extract one or more prototypes from each cluster to help comprehend the clustering results. In the final step, we use some criteria to assess the clustering results.

Clustering algorithms can be divided into two categories: partitional and hierarchical clustering algorithms. A partitional clustering algorithm divides a dataset into a single partition; a hierarchical clustering algorithm divides a dataset into a sequence of nested partitions. In general, partitional algorithms are more efficient than hierarchical algorithms because the latter usually require calculating the pairwise distances between all the data points.

3.1. The k-Prototype Algorithm

The

k-prototype algorithm (

Huang 1998) is an extension of the well-known

k-means algorithm for clustering mixed type data. In the

k-prototype algorithm, the prototype is the center of a cluster, just as the mean is the center of a cluster in the

k-means algorithm.

To describe the

k-prototype algorithm, let

,

denote a dataset containing

n observations. Each observation is described by

d variables, including

numerical variables,

categorical variables, and

spatial variables. Without loss of generality, we assume that the first

variables are numerical, the remaining

variables are categorical, and the last two variables are spatial. Then the dissimilarity measure between two points

and

used by the

k-prototype algorithm is defined as follows:

where

and

are balancing weights with respect to numerical attributes that are used to avoid favoring types of variables other than numerical,

is the simple-matching distance defined as

and

returns the spatial distance between two points with latitude–longitude coordinates using great circle distance (WGS84 ellipsoid) methods. We have

,

and the radius of the Earth

r = 6,378,137 m from WGS84 axis (

Carter 2002):

where

f is the flattening of the Earth (use

according to WGS84). WGS84 is the common system of reference coordinate used by the Global Positioning System (GPS), and is also the standard set by the U.S. Department of Defense for a global reference system for geospatial information. In the absence of detailed location for each policy, we used the latitude–longitude coordinates of the capital city within the state.

The

k-prototype algorithm aims to minimize the following objective (cost) function:

where

is an

partition matrix,

is a set of prototypes, and

k is the desired number of clusters. The

k-prototype algorithm employs an iterative process to minimize this objective function. The algorithm starts with

k initial prototypes selected randomly from the dataset. Given the set of prototypes

Z, the algorithm then updates the partition matrix as follows:

Given the partition matrix

U, the algorithm updates the prototypes as follows:

where

and

. When

is calculated, we exclude the previous spatial prototype. The numerical components of the prototype of a cluster are updated to the means, the categorical components are updated to the modes, and the new spatial prototype is the coordinate closest to the previous one.

Algorithm 1 shows the pseudo-code of the

k-prototype algorithm. A major advantage of the

k-prototype algorithm is that it is easy to implement and efficient for large datasets. A drawback of the algorithm is that it is sensitive to the initial prototypes, especially when

k is large.

| Algorithm 1: Pseudo-code of the k-prototype algorithm. |

![Risks 09 00047 i001 Risks 09 00047 i001]() |

3.2. Determining the Parameters and

The cost function in Equation (

2) can be further rewritten as:

where

and the inner term

is the total cost when

X is assigned to cluster

l. Note that we can subdivide these measurements into

that represent the total cost from the numerical, categorical, and spatial attributes, respectively.

It is easy to show that the total cost

is minimized by individually minimizing

,

, and

(

Huang (

1997)).

can be minimized through Equations (

4a).

, the total cost from categorical attributes of

X, can be rewritten as

where

is the set of all unique levels of the

jth categorical attribute of

X and

denotes the probability that the

jth categorical attribute of prototype

occurs given cluster

l.

and

are chosen to prevent over-emphasizing either categorical or spatial with respect to numerical attributes and thereby are dependent on the distributions of those numerical attributes (

Huang (

1997)). In the R package

Szepannek (

2017), the value of

is suggested to be the ratio of average of variance of numerical variables to the average concentration of categorical variables:

where

is the frequency of the

kth level of the

jth categorical variable. See also,

Szepannek (

2019). For each categorical variable, we consider it to have a distribution with a probability of each level to be the frequency of this level. For example, the categorical data element

plan has three levels: term, universal life with secondary guarantees (ULS), and variable life with secondary guarantees (VLS). Then the concentration of plan can be measured by Gini impurity:

. Therefore, under the condition that all the variables are independent, the total Gini impurity for categorical variables is

, since

. The average of the total variance for the numerical variables

can be considered to be the estimate of the population variance. Subsequently,

becomes a reasonable estimate and is easy to calculate.

Similarly, , where the concentration of spatial attributes is estimated by the variance of the Great Circle distances between and the center of the total longitude-latitude coordinates.

3.3. Determining the Optimal Number of Clusters

As alluded to in

Section 1, the gap statistic is used to determine the optimal number of clusters. Data

,

consist of

d features measured on

n independent observations.

denotes the distance, defined in Equation (

1), between observations

i and

j. Suppose that we have partitioned the data into

k clusters

and

. Let

be the sum of the pairwise distance for all points within cluster

l and set

The idea of the approach is to standardize the comparison of

with its expectation under an appropriate null reference distribution of the data. We define

where

denotes the average

of the samples

generated from the reference distribution with predefined

k. The gap statistic can be calculated by the following steps:

Set ;

Run k-prototype algorithm and calculate under each for the original data ;

For each

, generate a reference data set

with sample size

n. Run the clustering algorithm under the candidate

k values and compute

and

;

Define , where

; and

Choose the optimal number of clusters as the smallest k such that .

This estimate is broadly applicable to any clustering method and distance measure

. We use

and randomly draw

of the data set using stratified sampling to keep the same proportion of each attribute. The gap and the quantity

against the number of clusters

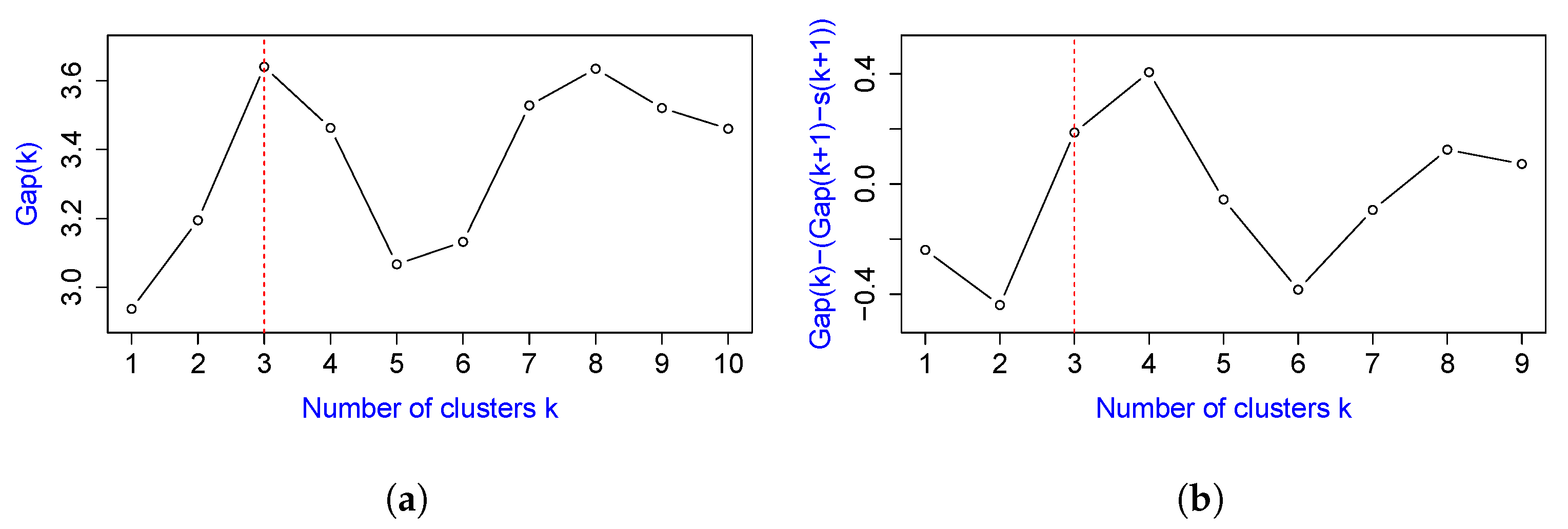

k are shown in

Figure 3. The gap statistic clearly peaks at

and the criteria for choosing

k displayed in the right panel. The correct

is the smallest for which the quantity

becomes positive.

There is the possible drawback of the highly sensitivity of the initial choice of prototypes. In order to minimize the impact, we run the k-prototype algorithm with correct starting with 20 different initializations and then choose the one with the smallest total sum squared errors.

5. Discussion

We now discuss how the clustering results in the previous section can be applied as a risk management tool for mortality monitoring. In particular, we compare these clusters with respect to their deviations of actual to expected mortality. It is a typical practice in the life insurance industry that when analyzing and understanding such deviations, we compare the actual to expected (A/E) death experiences.

To illustrate how we made the comparison, consider one particular cluster containing

n policies. We computed the actual number of deaths for this entire cluster by adding up all the face amounts of those who died during the quarter. Let

be the face amount of policyholder

i in this particular cluster. Thus, the aggregated actual face amount among those who died is equal to

where

indicates the policyholder died and the aggregated expected face amount is

where the expected mortality rate,

, is based on the latest 2015 valuation basic table (VBT), using smoker-distinct and ALB (age-last-birthday) (

https://www.soa.org/resources/experience-studies/2015/2015-valuation-basic-tables/ (accessed on 3 March 2021)). The measure of deviation,

R, is then defined to be

Clearly, a ratio indicates better than expected mortality, and indicates worse than expected mortality.

The death indicator

is a Bernoulli distributed random variable with parameter

which represents the probability of death, or loosely speaking, the mortality rate. For large

n, i.e., as

, the ratio

R converges in distribution to a normal random variable with mean 1 and variance

. The details of proofs for this convergence are provided in

Appendix A.

Based on the results of this convergence, it allowed us to construct 90% and 95% confidence intervals of the ratio

R or the A/E of mortality. We display

Figure 6a,b, which depict the differences in the A/E ratio for the three different clusters, based on 90% and 95% confidence intervals, respectively.

Based on this company’s claims experience, these figures provide some good news overall. The observed A/E ratios for all clusters are all below 1, which as said earlier, indicates that the actual mortality is better than expected for all three clusters. There are some peculiar observations that we can draw from the clusters:

Cluster 1 has the most favorable A/E ratio among all the cluster—significantly less than 1 at the 10% significance level, with moderate variability. This can be explained reasonably by this dominant feature compared with other clusters: Its gender make-up of all females in the entire portfolio. Females live longer than males on the average. There is a larger percentage of variable life plans, and slightly fewer smokers, term conversion, and substandard policies than clusters 2 and 3. In addition, the violin plots for the numerical attributes show that the youngest group with smallest amount of insurance coverage belongs to this cluster. We expect this youngest group to have generally low mortality rates. Geographically, the insureds in this cluster are mostly distributed in the northeastern regions, such as New Jersey, New York, Rhode Island, and New Hampshire. It may be noted that policyholders tend to come from this region where the people typically have better incomes with better employer-provided health insurance.

Cluster 2 has the A/E ratio of 0.68—not significantly less than 1 at both 5% and 10% significance levels; it has the largest variability of this ratio among all clusters. Cluster 2 has, therefore, the most unfavorable A/E ratio from a statistical perspective. The characteristics of this cluster can be captured by these dominant features: (i) Its gender make-up is a mix of males and females, with more males than females. (ii) It has the largest proportions of smokers, term conversion underwriting type policies, and substandard policies. (iii) When it comes to plan type though, 91% of them have universal life contracts and no term policies. (iv) With respect to age at issuing and amount of insurance coverage, this cluster has the largest proportion of elderly people and therefore, has lower face amounts. All these dominating features help explain a generally worse mortality and larger variability of deviations. For example, the older group has a higher mortality rate than the younger group, and along with the largest proportion of smokers, this explains the compounded mortality. To some extent, with the largest proportions of term conversion underwriting types and substandard policies, they reasonably indicate more inferior mortality experience.

Cluster 3 has the A/E ratio that is most significantly less than 1, even though it has the worst A/E ratio among all the clusters. The characteristics can be captured by some dominating features in the cluster: male policyholders dominate this cluster and it has the smallest proportions of smokers and term conversion underwriting type policies among ALL three clusters. More than 90% of the policyholders purchased Term plans and most of them have larger face amounts than other clusters. The policyholders in this cluster are more often middle aged compared to other clusters according to the violin plots. The policyholders are more geographically scattered in Arkansas, Alabama, Mississippi, Tennessee, and Oregon. We generally know that smokers’ mortality is worse than non-smokers. Relatively younger age groups have a lower mortality rate than other age groups. Term plans generally have fixed terms and are more subject to frequent underwriting. The small variability can be explained by having more policies giving enough information, and hence, much more predictable mortality.

6. Conclusions

This paper has presented the concept of the k-prototype algorithm for clustering datasets with variables that are of mixed type. Here, our empirical data consist of a large portfolio of life insurance contracts that contain numerical, categorical, and spatial attributes. With clustering, the goal is to subdivide the large portfolio into different groups (or clusters), with members of the same cluster that are more similar to each other in some form than those in other clusters. The concept of similarity presents an additional challenge when objects in the portfolio are of mixed type. We constructed the k-prototype algorithm by minimizing the cost function so that: (i) for numerical attributes, similarity is based on the Euclidean distance, (ii) for categorical attributes, similarity is based on simple-matching distance, and (iii) for spatial attributes, similarity is based on a distance measure, as proposed in WGS84. Based on the gap statistics, we found that our portfolio yielded three optimally unique clusters. We have described and summarized the peculiar characteristics in each cluster.

More importantly, as a guide to practitioners wishing to perform a similar study, we demonstrated how these resulting clusters can then be used to compare and monitor actual to expected death claims experience. Each cluster has lower actual to expected death claims but with differing variabilities, and each optimal cluster showed patterns of mortality deviation for which we are able to deduce the dominant characteristics of the policies within a cluster. We also found that the additional information drawn from the spatial nature of the policies contributed to an explanation of the deviation of mortality experience from what was expected. The results may be helpful for decision making because of an improved understanding of potential favorable and unfavorable clusters. We hope that this paper stimulates further work in this area, particularly in life insurance portfolios with richer and more informative sets of feature variables to enhance the explainability of the results. With each cluster used as a label to the observations, a follow-up study will be done to implement supervised learning to improve understanding of risk classification of life insurance policies. More advanced techniques for clustering, e.g.,

Ahmad and Khan (

2019), can also be used as part of future work.