Abstract

The aim of this project is to develop a stochastic simulation machine that generates individual claims histories of non-life insurance claims. This simulation machine is based on neural networks to incorporate individual claims feature information. We provide a fully calibrated stochastic scenario generator that is based on real non-life insurance data. This stochastic simulation machine allows everyone to simulate their own synthetic insurance portfolio of individual claims histories and back-test their preferred claims reserving method.

1. Introduction

The aim of this project is to develop a stochastic simulation machine that generates individual claims histories of non-life insurance claims. These individual claims histories should depend on individual claims feature information such as the line of business concerned, the claims code involved or the age of the injured. This feature information should influence the reporting delay of the individual claim, the claim amount paid, its individual cash flow pattern as well as its settlement delay. The resulting (simulated) individual claims histories should be as ‘realistic’ as possible so that they may reflect a real insurance claims portfolio. These simulated claims then allow us to back-test classical aggregate claims reserving methods—such as the chain-ladder method—as well as to develop new claims reserving methods which are based on individual claims histories. The latter has become increasingly popular in actuarial science, see Antonio and Plat (2014), Hiabu et al. (2016), Jessen et al. (2011), Martínez-Miranda et al. (2015), Pigeon et al. (2013), Taylor et al. (2008), Verrall and Wüthrich (2016) and Wüthrich (2018a) for recent developments. A main shortcoming in this field of research is that there is no publicly available individual claims history data. Therefore, there is no possibility to back-test the proposed individual claims reserving methods. For this reason, we believe that this project is very beneficial to the actuarial community because it provides a common ground and publicly available (synthetic) data for research in the field of individual claims reserving.

This paper is divided into four sections. In this first section we describe the general idea of the simulation machine as well as the chosen data used for model calibration. In Section 2 we describe the design of our individual claims history simulation machine using neural networks. Section 3 focuses on the calibration of these neural networks. In Section 4 we carry out a use test by comparing the real data to the synthetically generated data in a chain-ladder claims reserving analysis. Appendix A presents descriptive statistics of the real data. Since the real insurance portfolio is confidential, we also design an algorithm to generate synthetic insurance portfolios of a similar structure as the real one, see Appendix B. Finally, in Appendix C we provide sensitivity plots of selected neural networks.

1.1. Description of the Simulation Machine

The simulation machine is programmed in the language R. The corresponding .zip-folder can be downloaded from the website:

https://people.math.ethz.ch/~wmario/simulation.html

This .zip-folder contains all parameters, a file readme.pdf which describes the use of our R-functions, as well as the two R-files Functions.V1 and Simulation.Machine.V1. The first R-file Functions.V1 contains the two R-functions Feature.Generation and Simulation.Machine. The former is used to generate synthetic insurance portfolios (this is described in more detail in Appendix B) and the latter to simulate the corresponding individual claims histories (this is described in the main body of this manuscript). The R-file Simulation.Machine.V1 demonstrates the use of these two R-functions, also providing a short chain-ladder claims reserving analysis.

1.2. Procedure of Developing the Simulation Machine

In recent years, neural networks have become increasingly popular in all fields of machine learning. They have proved to be very powerful tools in classification and regression problems. Their drawbacks are that they are rather difficult to calibrate and, once calibrated, they act almost like black boxes between inputs and outputs. Of course, this is a major disadvantage in interpretation and getting deeper insight. However, the missing interpretation is not necessarily a disadvantage in our project because it implies—in back-testing other methods—that the true data generating mechanism cannot easily be guessed.

To construct our individual claims history simulation machine, we design a neural network architecture. This architecture is calibrated to real insurance data consisting of 9,977,298 individual claims that have occurred between 1994 and 2005. For each of these individual claims, we have full information of 12 years of claims development as well as the relevant feature information. Together with a portfolio generating algorithm (see Appendix B), one can then use the calibrated simulation machine to simulate as many individual claims development histories as desired.

1.3. The Chosen Data

The chosen data has been preprocessed to correct wrong entries, for instance, an accident date that is later than the reporting date. Moreover, we have dropped claims with missing feature components, for instance, if the age of the injured was missing. However, only a negligible number of claims had to be dropped, and this does not distort the general calibration. The final (cleaned) data set consists of 9,977,298 individual claims histories. The following feature information is available for each individual claim:

- the claims number ClNr, which serves as a distinct claims identifier;

- the line of business LoB, which is categorical with labels in $\{1, \ldots, 4\}$;

- the claims code cc, which is categorical with labels in and denotes the labor sector of the injured;

- the accident year AY, which is in $\{1994, \ldots, 2005\}$;

- the accident quarter AQ, which is in $\{1, \ldots, 4\}$;

- the age of the injured age (in 5 years age buckets), which is in ;

- the injured part inj_part, which is categorical with labels in and denotes the part of the body injured;

- the reporting year RY, which is in .

Not all values in the label range of inj_part are needed for the labeling of its categorical classes. In fact, only 46 different values are attained, but for simplicity, we have decided to keep the original labeling received from the insurance company. 46 different labels may still seem to be a lot, and a preliminary classification could reduce this number. Here we refrain from doing so because each label has sufficient volume.

For all claims , we are given the individual claims cash flow , where is the payment for claim i in calendar year —and where denotes the accident year of claim i. Note that we only consider yearly payments, i.e., multiple payments and recovery payments within calendar year are aggregated into a single, annual payment . This single, annual payment can either be positive or negative, depending on having either more claim payments or more recovery payments in that year. The sum over all yearly payments of a given claim i has to be non-negative because recoveries cannot exceed payments (this is always the case in the considered data). Remark that our simulation machine will allow for recoveries.
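The yearly aggregation described above can be illustrated with a short sketch. It assumes that development years are indexed 0 to 11 relative to the accident year and that recoveries are recorded as negative amounts; the function name `yearly_cash_flow` and the example figures are hypothetical and not part of the published simulation machine.

```python
def yearly_cash_flow(accident_year, payments, n_dev_years=12):
    """Aggregate (calendar_year, amount) pairs into one payment per development year.

    Recoveries enter as negative amounts, so a yearly total may be negative.
    Assumption: development year = calendar year - accident year, in 0..11.
    """
    cash_flow = [0.0] * n_dev_years
    for calendar_year, amount in payments:
        dev_year = calendar_year - accident_year
        if not 0 <= dev_year < n_dev_years:
            raise ValueError("payment outside the observed development period")
        cash_flow[dev_year] += amount
    return cash_flow


# Two payments in 1998, and a payment plus a larger recovery in 2000.
example = yearly_cash_flow(
    1998, [(1998, 500.0), (1998, 250.0), (2000, 400.0), (2000, -600.0)]
)
```

In this toy example the third yearly payment is negative, while the sum over all years stays non-negative, as required of the real data.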

Finally, for claims , we are given the claim status process determining whether claim i is open or closed at the end of each accounting year. More precisely, if , claim i is open at the end of accounting year , and if , claim i is closed at the end of that accounting year. Our simulation machine also allows for re-opening of claims, which is quite common in our real data. More description of the data is given in Appendix A.

2. Design of the Simulation Machine Using Neural Networks

In this section we describe the architecture of our individual claims history simulation machine. It consists of eight modeling steps: (1) reporting delay T simulation; (2) payment indicator Z simulation; (3) number of payments K simulation; (4) total claim size Y simulation; (5) number of recovery payments simulation; (6) recovery size simulation; (7) cash flow simulation and (8) claim status simulation. Each of these eight modeling steps is based on one or several feed-forward neural networks. We introduce the precise setup of such a neural network in Section 2.1 for the simulation of the reporting delay T. Before that, we present a global overview of the architecture of our simulation machine. Afterwards, in Sections 2.1–2.8, each single step is described in detail.

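To start with, we define the initial feature space consisting of the original six feature components as

$$\mathcal{X}_1 = \{(\text{LoB}, \text{cc}, \text{AY}, \text{AQ}, \text{age}, \text{inj\_part})\}. \tag{1}$$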

Observe that we drop the claims number ClNr because it does not have explanatory power. Apart from these six feature values, the only other model-dependent input parameters of our simulation machine are the standard deviations for the total individual claim sizes and the total individual recoveries, see Section 2.4 and Section 2.6 below. During the simulation procedure, not all of the subsequent steps (1)–(8) may be necessary—e.g., if we do not have any payments, then there is no need to simulate the claim size or the cash flow pattern. We briefly describe the eight modeling steps (1)–(8).

(1) In the first step, we use the initial feature space to model the reporting delay T indicating the annualized difference between the reporting year and the accident year.

(2) For the second step, we extend the initial feature space by including the additional information of the reporting delay T, i.e., we set

We use to model the payment indicator Z determining whether we have a payment or not.

(3) For the third step, we set and model the number of (yearly) payments K.

(4) In the fourth step, we extend the feature space by including the additional information of the number of payments K, i.e., we set

which is used to model the total individual claim size Y.

(5) In the fifth step, we model the number of recovery payments . We therefore work on the extended feature space

(6) In the sixth step, we model the total individual recovery . To this end, we set . We understand the total individual claim size Y to be net of recovery . Thus, the total payment from the insurance company to the insured is , paid in yearly payments. The total recovery from the insured to the insurance company is , paid in yearly payments.

(7) In the seventh step, the task is to generate the cash flows . Therefore, we have to split the total gross claim amount into positive payments and the total recovery into negative payments and distribute these K payments among the 12 development years. For this modeling step, we use different feature spaces , all being a subset of

see Section 2.7 below for more details.

(8) In the last step, we model the claim status process , where we use the feature space

Each of these eight modeling steps (1)–(8) consists of one or even multiple feature-response problems, for which we design neural networks. In the end, the full individual claims history simulation machine consists of 35 neural networks. We are going to describe this neural network architecture in more detail next. We remark that some of these networks are rather similar. Therefore, we present the first neural network in full detail, and for the remaining neural networks we focus on the differences to the previous ones.

2.1. Reporting Delay Modeling

To model the reporting delay, we work with the initial feature space given in (1). Let $n = 9{,}977{,}298$ be the number of individual claims in our data. We consider the (annualized) reporting delays $T_i$, for $i = 1, \ldots, n$, given by

$$T_i = \text{RY}_i - \text{AY}_i,$$

where $\text{AY}_i$ is the accident year and $\text{RY}_i$ the reporting year of claim $i$. For confidentiality reasons, we have only received data on a yearly time scale (with the additional information of the accident quarter AQ). A more accurate modeling would use a finer time scale.

The three feature components LoB, cc and inj_part are categorical. For neural network modeling, we need to transform these categorical feature components to continuous ones. This could be done by dummy coding, but we prefer the following version because it leads to fewer parameters. We replace, for instance, the claims code cc by the sample mean of the reporting delay restricted to the corresponding feature label, i.e., for claims code $a$, we set

$$a \mapsto \frac{\sum_{i=1}^{n} T_i \, \mathbb{1}_{\{\text{cc}_i = a\}}}{\sum_{i=1}^{n} \mathbb{1}_{\{\text{cc}_i = a\}}}, \tag{6}$$

where $\text{cc}_i$ are the observed claims codes. By slight abuse of notation, we obtain a 6-dimensional feature space where we may assume that all feature components are continuous. Such feature pre-processing as in (6) will be necessary throughout this section for the components LoB, cc and inj_part: we just replace $T$ in (6) by the respective response variable. Note that from now on this will be done without any further reference.
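The replacement of a categorical label by the sample mean of the response can be sketched as follows. This is only an illustration of the idea; the function name `mean_encode` and the toy data are hypothetical and do not come from the published R code.

```python
from collections import defaultdict


def mean_encode(labels, responses):
    """Map each categorical label to the sample mean of the response on that label."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for label, response in zip(labels, responses):
        totals[label] += response
        counts[label] += 1
    return {label: totals[label] / counts[label] for label in totals}


# Toy data (hypothetical): claims codes and observed reporting delays.
claims_codes = [3, 3, 7, 7, 7, 12]
reporting_delays = [0, 2, 1, 1, 4, 0]

encoding = mean_encode(claims_codes, reporting_delays)
encoded_cc = [encoding[code] for code in claims_codes]
```

Compared with dummy coding, each categorical component contributes a single continuous input neuron instead of one neuron per label, which is where the saving in parameters comes from.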

The above procedure equips us with the data

with being the observed features and the observed responses. For an insurance claim with feature , the corresponding reporting delay is modeled by a categorical distribution

This requires that we model probability functions of the form

satisfying normalization , for all . We design a neural network for the modeling of these probability functions and we estimate the corresponding network parameters from the observations .

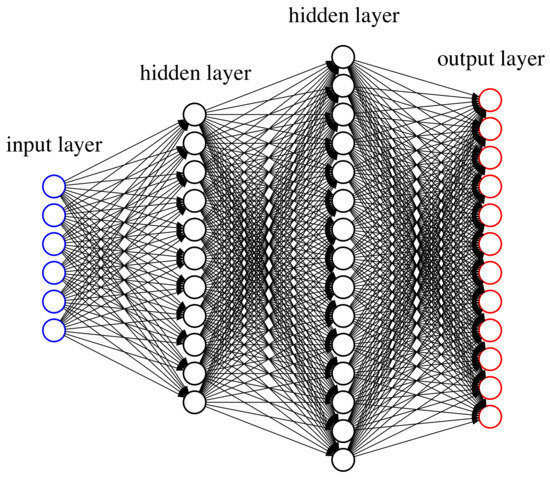

We choose a classical feed-forward neural network with multiple layers. Each layer consists of several neurons, and weights connect all neurons of a given layer to all neurons of the next layer. Moreover, we use a non-linear activation function to pass the signals from one layer to the next. The first layer—consisting of the components of a feature —is called input layer (blue circles in Figure 1). In our case, we have neurons in this input layer. The last layer is called output layer (red circles in Figure 1) and it contains the categorical probabilities . In between these two layers, we choose two hidden layers having and hidden neurons, respectively (black circles in Figure 1 with and ).

Figure 1.

Deep neural network with two hidden layers: the first column (blue circles) illustrates the dimensional feature vector (input layer), the second column gives the first hidden layer with neurons, the third column gives the second hidden layer with neurons and the fourth column gives the output layer (red circle) with 12 neurons.

More formally, we choose the hidden neurons in the first hidden layer as follows

for given weights and for the hyperbolic tangent activation function

$$\phi(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}.$$

This is a centered version of the sigmoid activation function, with range $(-1, 1)$. Moreover, we have $\phi' = 1 - \phi^2$, which is a useful property in the gradient descent method described in Section 3, below.

The activation is then propagated in an analogous fashion to the hidden neurons in the second hidden layer, that is, we set

For the 12 neurons in the output layer, we use the multinomial logistic regression assumption

with regression functions for all given by

for given weights . We define the network parameter of all involved parameters by

The classification model for the tuples is now fully defined and there remains the calibration of the network parameter and the choice of the hyperparameters and . Assume for the moment that and are given. In order to fit to our data , we aim to minimize a given loss function . Therefore, we assume that are drawn independently from the joint distribution of . The corresponding deviance statistics loss function of the categorical distribution of our data is then given by

The optimal network parameter is found by minimizing this deviance statistics loss function. We come back to this problem in Section 3.2.1, below. Since for different hyperparameters and we get different network structures, every pair corresponds to a separate model. The choice of appropriate hyperparameters and is discussed in Section 3.3, below.
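To make the network of this section concrete, the following sketch evaluates a feed-forward network with two tanh hidden layers and a multinomial logistic (softmax) output layer, together with a deviance-type loss. It makes several assumptions: each weight row stores the intercept first, the deviance is written as minus two times the categorical log-likelihood, and the reporting delays are labeled 0 to 11. All function names are hypothetical.

```python
import math


def dense(inputs, weights):
    """Affine map: one weight row per neuron, row[0] being the intercept."""
    return [row[0] + sum(w * z for w, z in zip(row[1:], inputs)) for row in weights]


def forward(x, w1, w2, w_out):
    """Categorical probabilities for feature x from two tanh hidden layers."""
    z1 = [math.tanh(a) for a in dense(x, w1)]
    z2 = [math.tanh(a) for a in dense(z1, w2)]
    scores = dense(z2, w_out)
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def deviance_loss(features, responses, w1, w2, w_out):
    """Minus two times the log-likelihood of the observed categories."""
    return -2.0 * sum(
        math.log(forward(x, w1, w2, w_out)[t]) for x, t in zip(features, responses)
    )
```

With all weights set to zero, the network returns the uniform distribution on the 12 categories, which gives a simple sanity check before any calibration is attempted.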

After the calibration of , and to our data , we can simulate the reporting delay of a claim with given feature by using the resulting categorical distribution given by (7). This simulated value will then allow us to go to the next modeling step (2), see (2).
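Once the categorical probabilities are available, simulating the reporting delay is a single draw from that distribution. A minimal sketch, assuming the 12 categories are labeled 0 to 11 and using the hypothetical function name `simulate_reporting_delay`:

```python
import random


def simulate_reporting_delay(probabilities, rng):
    """Draw one reporting delay from the given categorical probabilities."""
    delays = list(range(len(probabilities)))
    return rng.choices(delays, weights=probabilities, k=1)[0]


rng = random.Random(2018)  # fixed seed for reproducibility
# Hypothetical probabilities: most claims are reported in the accident year.
example_probabilities = [0.85, 0.10, 0.03, 0.01, 0.01] + [0.0] * 7
simulated_delay = simulate_reporting_delay(example_probabilities, rng)
```

The simulated value is then appended to the feature vector before the next modeling step is run.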

We close this first part with the following remark: Our choice to work with two hidden layers may seem arbitrary since we could also have chosen more hidden layers or just one of them. From a theoretical point of view, one hidden layer would be sufficient to approximate a vast collection of regression functions to any desired degree of accuracy, provided that we have sufficiently many hidden neurons in that layer, see Cybenko (1989) and Hornik et al. (1989). However, these models with large-scale numbers of hidden neurons are known to be difficult to calibrate, and it is often more efficient to use fewer neurons but more hidden layers to get an appropriate complexity in the regression function.

2.2. Payment Indicator Modeling

In our real data, we observe that roughly 29% of all claims can be settled without any payment. For this reason, we model the claim sizes by compound distributions. First, we model a payment indicator Z that determines whether we have a payment or not. Then, conditionally on having a payment, we determine the exact number of payments K. Finally, we model the total individual claim size Y for claims with at least one payment.

In order to model the payment indicator, we work with the dimensional feature space introduced in (2). Let and be the observed features, where this time the reporting delay T is also included. For all , we define the number of payments and the payments indicator by

This provides us with the data

For a claim with feature , the corresponding payment indicator is a Bernoulli random variable with

for a given (but unknown) probability function

Note that this Bernoulli model is a special case of the categorical model of Section 2.1. Therefore, it can be calibrated completely analogously, as described above. However, we emphasize that instead of working with two probability functions and for the two categories , we set , which implies . Moreover, the multinomial probabilities (7) simplify to the binomial case

with regression function

for a neural network with two hidden layers and network parameter given by

Finally, the corresponding deviance statistics loss function to be minimized is given by

From this calibrated model, we simulate the payment indicator , which then allows us to go to the next modeling step. If this indicator is equal to one, we move to step (3), see Section 2.3; if this indicator is equal to zero, we directly go to step (8), see Section 2.8.

2.3. Number of Payments Modeling

We use the dimensional feature space to model the number of payments, conditioned on the event that the payment indicator Z is equal to one. We define to be the number of claims with payment indicator equal to one and order the claims appropriately in i such that for all . Then, we define the number of payments as in (9), for all . This gives us the data

For a claim with feature and payment indicator , we could now proceed as in Section 2.1 in order to model the number of payments . However, the claims with are so dominant in the data that a good calibration of the categorical model (7) becomes difficult. For this reason, we choose a different approach: in a first step, we model the events and , conditioned on , and, in a second step, we consider the conditional distribution of , given . In particular, in the first step we have a Bernoulli classification problem that is modeled completely analogously to Section 2.2, only replacing the data by

The case is then modeled analogously to the categorical case of Section 2.1, with 11 categories and data only considering the claims with more than one payment.

The simulation of the number of payments for a claim with feature , reporting delay T and payment indicator needs more care than the corresponding task in Section 2.1: here we have the restriction . If , then we automatically need to have . For and if the first neural network leads to , then the categorical conditional distribution for , given , can only take the values . For this reason, instead of using the original conditional probabilities resulting from the second neural network, we use in that case the modified conditional probabilities , for , given by
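One natural way to respect such a restriction is to discard the infeasible categories and renormalize the remaining probabilities. The sketch below implements this under the assumption that a claim with reporting delay T can have at most 12 − T yearly payments; whether this coincides exactly with the modification used in the simulation machine is not verified here, and the function name is hypothetical.

```python
def restrict_probabilities(probabilities, values, max_value):
    """Keep only categories with value <= max_value and renormalize to sum one."""
    kept = {v: p for v, p in zip(values, probabilities) if v <= max_value}
    total = sum(kept.values())
    if total <= 0.0:
        raise ValueError("no feasible category has positive probability")
    return {v: p / total for v, p in kept.items()}


# Eleven categories K = 2, ..., 12 with hypothetical uniform probabilities.
values = list(range(2, 13))
probabilities = [1.0 / 11.0] * 11

# Reporting delay T = 9 leaves at most 12 - 9 = 3 yearly payments.
modified = restrict_probabilities(probabilities, values, max_value=12 - 9)
```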

2.4. Total Individual Claim Size Modeling

For the modeling of the total individual claim size, we add the number of payments K to the previous feature space and work with given in (3). Let and consider the same ordering of the claims as in Section 2.3. Then, we define the total individual claim size of claim i as

for all . In particular, the total individual claim size is always to be understood net of recoveries. This leads us to the data

For a claim with feature and payment indicator , we model the total individual claim size with a log-normal distribution. We therefore choose a regression function

of type (10) for a neural network with two hidden layers. This regression function is used to model the mean parameter of the total individual claim sizes, i.e., we make the model assumption

for given variance parameter . This choice implies

The density of then motivates the choice of the square loss function (deviance statistics loss function)

with network parameter . The optimal model for the total individual claim size is then found by minimizing the loss function (14), which does not depend on .

This calibrated model together with the input parameter can be used to simulate the total individual claim size from (13). Note that the expected claim amount is increasing in , as we have
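As a small illustration of the log-normal assumption, the sketch below simulates a claim size and evaluates the log-normal mean $\exp(\mu + \sigma^2/2)$, which makes the dependence on the standard deviation explicit. The notation `mu` (network output on the log scale) and `sigma` (standard deviation parameter), as well as the function names, are assumptions made for this example.

```python
import math
import random


def simulate_claim_size(mu, sigma, rng):
    """Draw a log-normal claim size: the logarithm is Gaussian with mean mu."""
    return math.exp(rng.gauss(mu, sigma))


def expected_claim_size(mu, sigma):
    """Mean of the log-normal distribution with parameters mu and sigma."""
    return math.exp(mu + 0.5 * sigma ** 2)


rng = random.Random(2018)
claim = simulate_claim_size(mu=7.0, sigma=1.0, rng=rng)
```

For fixed `mu`, a larger `sigma` raises the expected claim amount, so the choice of this input parameter affects the overall level of the simulated portfolio and not only its dispersion.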

2.5. Number of Recovery Payments Modeling

For the modeling of the number of recovery payments, we use the dimensional feature space introduced in (4). Furthermore, we only consider claims i with , because recoveries may only happen if we have at least one positive payment. We define to be the number of claims with more than one payment and order the claims appropriately in i such that for all . Then, we define the number of recovery payments of claim i as

for all . In particular, for all observed claims i with more than two recovery payments, we set . This reduces combinatorial complexity in simulations (without much loss of accuracy) and provides us with the data

For a claim with feature and payments, the corresponding number of recovery payments , conditioned on the event , is a categorical random variable taking values in , i.e., we are in the same setup as in Section 2.1—with only three categorical classes. Thus, the calibration is done analogously.

This model then allows us to simulate the number of recovery payments . Note that also this simulation step needs additional care: if , then we can have at most one recovery payment. Thus, we have to apply a similar modification as given in (12) in this case.

2.6. Total Individual Recovery Size Modeling

The modeling of the total individual recovery size is based on the feature space , given in (4), and we restrict to claims with . The number of these claims is denoted by . Appropriate ordering provides us with the total individual recovery of claim i as

for all . This gives us the data

The remaining part is completely analogous to Section 2.4, we only need to replace the standard deviation parameter by a given .

2.7. Cash Flow Pattern Modeling

The modeling of the cash flow pattern is more involved, and we need to distinguish different cases. This distinction is done according to the total number of payments , the number of positive payments as well as the number of recovery payments .

2.7.1. Cash Flow for Single Payments

The simplest case is the one of having exactly one payment . In this case, we consider the payment delay after the reporting date. We define to be the number of claims with exactly one payment and order the claims appropriately in i such that for all . Then, we define the payment delay of claim i as

for all . In other words, we simply subtract the reporting year from the year in which the unique payment occurs. This provides us with the data

with being the observed features, where we use

as dimensional feature space. For a claim with feature and payment, the corresponding payment delay is a categorical random variable assuming values in . Similarly as for the number of payments, the claims with are rather dominant. Therefore, we apply the same two-step modeling approach as in Section 2.3.

This calibrated model then allows us to simulate the payment delay . For given reporting delay T, we have the restriction , which is treated in the same way as in (12). Finally, the cash flow is given by with
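For a claim with a single payment, the cash flow consists of one non-zero entry. A minimal sketch, assuming development years are indexed 0 to 11 from the accident year, so that the payment falls in development year T plus the payment delay; the function name is hypothetical.

```python
def single_payment_cash_flow(total_claim_size, reporting_delay, payment_delay,
                             n_dev_years=12):
    """Place the full claim amount in development year T + payment delay."""
    dev_year = reporting_delay + payment_delay
    if not 0 <= dev_year < n_dev_years:
        raise ValueError("payment falls outside the development period")
    cash_flow = [0.0] * n_dev_years
    cash_flow[dev_year] = total_claim_size
    return cash_flow


# Claim reported two years after the accident and paid one year later.
cash_flow = single_payment_cash_flow(1200.0, reporting_delay=2, payment_delay=1)
```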

2.7.2. Cash Flow for Two Payments

Now we consider claims with exactly two payments. Here we distinguish further between the two cases: (a) both payments are positive, and (b) one payment is positive and the other one negative.

(a) Two Positive Payments

We first consider the case where both payments are positive, i.e., and . In this case, we have to model the time points of the two payments as well as the split of the total individual claim size to the two payments. For both models, we use the dimensional feature space , see (16). We define to be the number of claims with exactly two positive payments and no recovery and order them appropriately in i such that and for all . The time points and of the two payments are given by

for all . Then, we modify the two-dimensional vector to a one-dimensional categorical variable by setting

for all . This leads us to the data

Note that this response is categorical with $\binom{12}{2} = 66$ possible values. That is, we are in the same setup as in Section 2.1, with 66 different classes. Once again, the calibration is done in an analogous fashion as above.
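The reduction of an ordered pair of payment years to a single class label can be done with any bijection between pairs and class indices. The sketch below uses lexicographic enumeration with years labeled 0 to 11; this particular mapping is an assumption chosen for illustration and need not match the one used in the simulation machine.

```python
from itertools import combinations

# All ordered pairs (first year, second year) with first < second, years 0..11.
PAIRS = list(combinations(range(12), 2))
PAIR_TO_CLASS = {pair: index for index, pair in enumerate(PAIRS)}


def encode_pair(first_year, second_year):
    """Class label of a pair of payment years."""
    return PAIR_TO_CLASS[(first_year, second_year)]


def decode_class(label):
    """Pair of payment years belonging to a class label."""
    return PAIRS[label]
```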

Next, we model the split of the total individual claim size for claims with . Let , , see (16), and define the proportion of the total individual claim size that is paid in the first payment by

for all . This gives us the data

For a claim with feature and , the corresponding proportion of its total individual claim size Y that is paid in the first payment is for simplicity modeled by a deterministic function . Note that one could easily randomize using a Dirichlet distribution. However, at this modeling stage, the resulting differences would be of smaller magnitude. Hence, we directly fit the proportion function

Similarly to the calibration in Section 2.2, we assume a regression function of type (10) for a neural network with two hidden layers. Then, for the output layer, we use

and as loss function the cross entropy function, see also (11),

where is the network parameter containing all the weights of the neural network.
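The fit of the proportion function can be sketched with a logistic output and a cross entropy loss in which the observed proportions act as soft targets. The exact scaling of the loss in the original formula is not reproduced here; the form below is the standard binary cross entropy, and the function names are hypothetical.

```python
import math


def sigmoid(score):
    """Logistic function mapping a network score to a proportion in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))


def cross_entropy(proportions, scores):
    """Binary cross entropy between observed proportions and fitted ones."""
    loss = 0.0
    for p, s in zip(proportions, scores):
        fitted = sigmoid(s)
        loss -= p * math.log(fitted) + (1.0 - p) * math.log(1.0 - fitted)
    return loss
```

For an observed proportion of 0.9, a positive score yields a smaller loss than a negative one, as expected.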

From this model, we can then simulate the cash flow for a claim with . First, we simulate . If , we have and . If , we have

The cash flow is given by with

(b) One Positive Payment, One Recovery Payment

Now we focus on the case where we have positive and negative payment. Here we only have to model the time points of the two payments, since we know the total individual claim size as well as the total individual recovery and we assume that the positive payment precedes the recovery payment. The modeling of the time points of the two payments is done as above, except that this time we use the dimensional feature space

where we include the information of the total individual recovery . Moreover, we define to be the number of claims with exactly one positive payment and one recovery payment and order the claims appropriately in i such that and for all . This provides us with the data

with defined as in (17). The rest is done as above. We obtain the cash flow with

Note that we again have combinatorial complexity of $\binom{12}{2} = 66$ for the time points of the two payments. Since data is sparse, for this calibration we restrict to the 35 most frequent distribution patterns. More details on this restriction are provided in the next section.

2.7.3. Cash Flow for More than Two Payments

On the one hand, the models for the cash flows in the case of more than two payments depend on the exact number of payments K. On the other hand, they also depend on the respective numbers of positive payments and negative payments . If we have zero or one recovery payment (), then we need to model (a) the time points where the K payments occur and (b) the proportions of the total gross claim amount paid in the positive payments. If , then there are no recovery payments and, thus, . If , the recovery payment is always set at the end. In the case of recovery payments, in addition to (a) and (b), we use another neural network to model (c) the proportions of the total individual recovery paid in the two recovery payments. The time point of the first recovery payment is for simplicity assumed to be uniformly distributed on the set of time points of the 2nd up to the -st payment. The second recovery payment is always set at the end. The time point of the first payment is excluded for recovery in our model since we first require a positive payment before a recovery is possible. The three neural networks considered in this modeling part are outlined below in (a)–(c). Afterwards, we can model the cash flow for claims with payments, see item (d) below.

(a) Distribution of the K Payments

If we have $K = 12$ payments, then the distribution of these payments to the 12 development years is trivial, as we have a payment in every development year. Since the model is essentially the same in all other cases, we present here the case $K = 6$ as illustration.

For the modeling of the distribution of the payments to the development years, we slightly simplify our feature space by dropping the categorical feature components cc and inj_part. Moreover, we simplify the feature LoB with its four categorical classes: since the lines of business one and four as well as the lines of business two and three behave very similarly w.r.t. the cash flow patterns, we merge these lines of business in order to get more volume (and less complexity). We denote these simplified lines of business by . Thus, we work with the dimensional feature space

Let be the number of claims with exactly six payments and order the claims appropriately in i such that for all . The time points of the six payments are given by

for all and . Then, we use the following binary representation

for all , for the time points of the six payments. This leads us to the data

where and for some set . Since there are possibilities to distribute the payments to the 12 development years, we have distribution patterns. To reduce complexity (in view of sparse data), we only allow for the most frequently observed distributions of the payments to the development years. For , we work with 21 different patterns, which cover of all claims with . We denote the set containing these 21 patterns by . See Table 1 for an overview, for each , of the number of possible different patterns, the number of allowed different patterns and the percentage of all claims covered with this choice of allowed distribution patterns.

Table 1. Number of possible and allowed distribution patterns for payments.

Note that for , we allow for all the 12 possible distribution patterns. Going back to the case , we denote by the number of claims with exactly payments and with a distribution of these six payments to the 12 development years contained in the set . Then, we modify the data accordingly to by only considering the relevant observations in . This provides us with a classification problem similar to the one in Section 2.1—with classes.
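The restriction to the most frequently observed distribution patterns can be sketched as follows. The binary representation marks the development years (labeled 0 to 11 here, an assumption) in which a payment occurs. The function names and the toy observations are hypothetical.

```python
from collections import Counter
from math import comb


def to_binary_pattern(payment_years, n_dev_years=12):
    """Binary vector with a 1 in every development year that has a payment."""
    years = set(payment_years)
    return tuple(1 if j in years else 0 for j in range(n_dev_years))


def most_frequent_patterns(patterns, n_allowed):
    """Return the n_allowed most frequent patterns and the share of claims covered."""
    counts = Counter(patterns)
    allowed = [pattern for pattern, _ in counts.most_common(n_allowed)]
    covered = sum(counts[pattern] for pattern in allowed) / len(patterns)
    return allowed, covered


# Number of ways to place six payments in 12 development years.
n_possible = comb(12, 6)

# Toy observations (hypothetical) with three distinct patterns.
a = to_binary_pattern([0, 1, 2, 3, 4, 5])
b = to_binary_pattern([0, 1, 2, 3, 4, 6])
c = to_binary_pattern([1, 2, 3, 4, 5, 6])
allowed, covered = most_frequent_patterns([a, a, a, b, b, c], n_allowed=2)
```

Claims whose pattern is not in the allowed set are dropped from the calibration data, which turns the task into a classification problem with as many classes as allowed patterns.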

(b) Proportions of the Positive Payments

If the number of positive payments is equal to one, then the amount paid in this unique positive payment is given by the total gross claim amount . That is, we do not need to model the proportions of the positive payments. Since the model is basically the same in all other cases , we present here the case as illustration.

As in the previous part, we use the dimensional feature space . Let be the number of claims with exactly six positive payments and order the claims appropriately in i such that for all . We define

for all and , to be the time points of the six positive payments. Then, we can define

to be the proportion of the total gross claim amount that is paid in the k-th positive, annual payment, for all and . This equips us with the data

For a claim with feature and positive payments, the corresponding proportions of the total gross claim amount that are paid in the six positive payments are for simplicity assumed to be deterministic. Note that we could randomize these proportions by simulating from a Dirichlet distribution, but—as in Section 2.7.2—we refrain from doing so. Hence, we consider the proportion functions

for all , with normalization , for all . We use the same model assumptions as in (7) by setting for

for appropriate regression functions resulting as output layer from a neural network with two hidden layers. As in (19), we consider the cross entropy loss function

where is the corresponding network parameter. This model is calibrated as described in Section 2.1. Remark that if , the model (20) simplifies to the binomial case, see (18).
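As a minimal sketch of this modeling step, the following Python code maps six real-valued outputs to proportions that sum to one and evaluates a cross entropy against observed proportions. It assumes a softmax form for the proportion functions; the numbers are hypothetical, and the exact form and constants of the loss in the text may differ.

```python
import math


def softmax(scores):
    """Map real-valued network outputs to proportions that sum to one."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(observed, predicted):
    """Cross entropy between observed and predicted proportions of one claim."""
    return -sum(o * math.log(p) for o, p in zip(observed, predicted) if o > 0)


scores = [0.4, 0.1, -0.2, 0.0, -0.5, -1.0]       # hypothetical network outputs
observed = [0.35, 0.25, 0.15, 0.10, 0.10, 0.05]  # hypothetical observed proportions
predicted = softmax(scores)
loss = cross_entropy(observed, predicted)
print(predicted, loss)
```

The loss is smallest when the predicted proportions coincide with the observed ones, which is what the calibration exploits.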

(c) Proportions of the Recovery Payments if

In the case of recovery payments, we need to model the proportion of the total individual recovery that is paid in the first recovery payment. For this, we work with the dimensional feature space

We denote by the number of claims with exactly two recovery payments and order the claims appropriately in i such that for all . Recall that we set for all claims i with two or more recovery payments, see (15). Moreover, we add all the amounts of the recovery payments done after the second recovery payment to the second one. Let

denote the time point of the first recovery payment, for all . Then, the proportion of the total individual recovery that is paid in the first recovery payment is given by

for all . This provides us with the data

The remaining modeling part is then done completely analogously to the second part of the two positive payments case (a) in Section 2.7.2.

(d) Cash Flow Modeling

Finally, using the three neural network models outlined above, we can simulate the cash flow for a claim with more than two payments and with feature , see (5). We illustrate the case . Note that we only allow for cash flow patterns in that are compatible with the reporting delay T. We start by describing the case . In this case, there is no difficulty and we directly simulate the cash flow pattern . This provides us six payments in the time points

For reporting delay , the set of potential cash flow patterns becomes smaller because some of them have to be dropped to remain compatible with . For this reason, we simulate with probability a pattern from , and with probability the six time points are drawn in a uniform manner from the remaining possible time points in . For , the potential subset of patterns in becomes (almost) empty. For this reason, we simply simulate uniformly from the compatible configurations in .

Having the six time points for the payments, we distinguish the three different cases :

Case : we calculate the proportions according to point (b) above and we receive the cash flow with

Case : we have five positive payments with proportions modeled according to point (b) above. This provides the cash flow with

Case : we have four positive payments with proportions according to point (b) above and two negative payments with proportions and according to point (c) above. The time point of the first recovery is simulated uniformly from the set of time points . Note that the time point is reserved for the first positive payment and the time point for the second recovery payment. We write for the time points of the four positive payments. Summarizing, we get the cash flow with

Of course, if and , we do not need to simulate the proportions of the positive payments, as there is only one positive payment, which occurs in the beginning. Similarly, if , we do not need to simulate the time points of the payments, since there is a payment in every development year.
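The assembly of a cash flow with six positive payments can be sketched as follows. This is a simplified illustration under stated assumptions: a pattern is taken to be compatible if no payment precedes the reporting delay, the network-based pattern probabilities are replaced by a uniform draw over the compatible allowed patterns, and all names and numbers are hypothetical.

```python
import random

N_YEARS = 12  # development years 0, ..., 11


def compatible(pattern, reporting_delay):
    """Assumption: a pattern is compatible if no payment precedes reporting."""
    return min(pattern) >= reporting_delay


def sample_time_points(allowed_patterns, reporting_delay, k, rng):
    """Draw an allowed compatible pattern, else k time points uniformly."""
    candidates = [p for p in allowed_patterns if compatible(p, reporting_delay)]
    if candidates:
        return rng.choice(candidates)
    years = range(reporting_delay, N_YEARS)
    return tuple(sorted(rng.sample(years, k)))


def cash_flow(time_points, proportions, gross_amount):
    """Place proportion * amount at each payment time point, zero elsewhere."""
    flow = [0.0] * N_YEARS
    for t, p in zip(time_points, proportions):
        flow[t] = p * gross_amount
    return flow


rng = random.Random(1)
allowed = [(0, 1, 2, 3, 4, 5), (1, 2, 3, 4, 5, 6)]  # hypothetical allowed patterns
proportions = [0.35, 0.25, 0.15, 0.10, 0.10, 0.05]  # hypothetical, sum to one
points = sample_time_points(allowed, reporting_delay=4, k=6, rng=rng)
flow = cash_flow(points, proportions, gross_amount=1000.0)
print(points, flow)
```

With a reporting delay of four, none of the toy patterns is compatible, so the six time points are drawn uniformly from the remaining development years.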

2.8. Claim Status Modeling

Finally, we design the model for the claim status process which indicates whether a claim is open or closed at the end of each accounting year. This process modeling will also allow for re-opening. Similarly to the payments, we do not model the status of a claim or its changes within an accounting year, but only focus on its status at the end of each accounting year. The modeling procedure of the claim status uses two neural networks, which are described below.

We remark that the closing date information was of lower quality in our data set compared to all other information. For instance, some of the dates have been modified retrospectively which, of course, destroys the time series aspect. For this reason, we have decided to model this process in a cruder form that nevertheless retains predictive power.

2.8.1. Re-Opening Indicator

We start by modeling the occurrence of a re-opening, i.e., whether a claim gets re-opened after having been closed at an earlier date. We use the dimensional feature space

where we do not consider the exact payment amounts, but the simplified version

for all . Let denote the number of claims i for which we have the full information . For the ease of data processing, we set for all development years before claims reporting . Then, we can define the re-opening indicator as

for all . In particular, if , then claim i has at least one re-opening, and if , then claim i has not been re-opened. This leads us to the data

where . For a given feature , the corresponding re-opening indicator is a Bernoulli random variable. Thus, model calibration is done analogously to Section 2.2 with, however, a neural network with only one hidden layer.

2.8.2. Closing Delay Indicator for Claims without a Re-Opening

For claims without a re-opening, we model the closing delay indicator determining whether the closing occurs in the same year as the last payment or if the closing occurs later. In case of no payments (), we replace the year of the last payment by the reporting year. We use the same dimensional feature space as for the re-opening indicator and set , see (21). Let be the number of claims without a re-opening and order them appropriately in i such that for all . Then, we define the closing delay indicator as

for all . Hence, we have if the closing occurs in a later year compared to the year of the last payment (or in a later year compared to the claims reporting year in case there is no payment) and otherwise. This leads us to the data

For a claim with feature , the corresponding closing delay indicator is again a Bernoulli random variable. Therefore, model calibration is done analogously to Section 2.2. Similarly as for the re-opening indicator, we use a neural network with only one hidden layer.

2.8.3. Simulation of the Claim Status

Based on the feature space , we first simulate the re-opening indicator leading to the two cases (i) and (ii) described below. Note that before a claim is reported—for ease of data processing—we simply set its status to open (this has no further relevance).

(i) Case (without re-opening): for the given feature , we calculate the closing delay probability using the neural network of Section 2.8.2. The closing delay is then sampled from a categorical distribution on with probabilities

The resulting closing delay is added to the year of the last payment (or to the reporting year if there is no payment). If this sum exceeds the value 11, the claim is still open at the end of the last modeled development year. This provides the claim status process with

(ii) Case (with re-opening): if we have at least one payment for the considered claim, then the first settlement time is simulated from a uniform distribution on the set

The second settlement time is simulated from a uniform distribution on the set

In particular, the first settlement arrives between the reporting year and the year of the last payment. Then, the claim gets re-opened in the year following the first settlement. The second settlement, if there is one, arrives between two years after the year of the last payment and the last modeled development year. In case the second settlement arrives after the last modeled development year, we simply cannot observe it and the claim is still open at the end of the last modeled development year. In case the first settlement happens in the last modeled development year, we do not even observe the re-opening.

If the claim does not have any payment, we set for the first settlement time. In particular, the claim gets closed for the first time in the same year as it is reported. The second settlement time is simulated from a uniform distribution on the set .

This leads to the claim status process with
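The re-opening case (ii) for a claim with at least one payment can be sketched in Python as follows. The coding of the status (1 for open, 0 for closed) and the upper bound `horizon` of the second settlement set are assumptions made for this illustration only; the prose above fixes only that the second settlement may fall after the last modeled development year.

```python
import random

LAST_YEAR = 11  # last modeled development year


def simulate_status_with_reopening(reporting_delay, last_payment_year, horizon, rng):
    """Return (status, first, second); status[j] is 1 if open at end of year j."""
    # First settlement: between the reporting year and the year of the last payment.
    first = rng.randint(reporting_delay, last_payment_year)
    # Second settlement: at least two years after the last payment (assumed bound).
    second = rng.randint(last_payment_year + 2, horizon)
    status = [1] * (LAST_YEAR + 1)   # open by default, also before reporting
    status[first] = 0                # closed at the end of the first settlement year
    for j in range(second, LAST_YEAR + 1):
        status[j] = 0                # closed from the second settlement onwards
    return status, first, second


rng = random.Random(0)
status, first, second = simulate_status_with_reopening(
    reporting_delay=1, last_payment_year=4, horizon=13, rng=rng
)
print(first, second, status)
```

If the second settlement falls beyond year 11, the loop is empty and the claim remains open at the end of the last modeled development year.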

3. Model Calibration Using Momentum-Based Gradient Descent

In Section 2 we have introduced several neural networks that need to be calibrated to the data. This calibration involves the choice of the numbers of hidden neurons and as well as the choice of the corresponding network parameter . We first focus on the network parameter for given and .

3.1. Gradient Descent Methods

State-of-the-art for finding the optimal network parameter w.r.t. a given differentiable loss function is the gradient descent method (GDM). The GDM locally improves the loss in an iterative way. Consider the Taylor approximation of around , then

as . The locally optimal move points into the direction of the negative gradient . If we choose a learning rate into that direction, we obtain a local loss decrease

for small. Iterative application of these locally optimal moves—with tempered learning rates—will converge ideally to the (local) minimum of the loss function. Note that (a) it is possible to end up in saddle points; (b) different starting points of this algorithm should be explored to see whether we converge to different (local) minima resp. saddle points and (c) the speed of convergence should be fine-tuned. An improved version of the GDM is the so-called momentum-based GDM introduced in Rumelhart et al. (1986). Consider a velocity vector v with the same dimensions as and initialize , corresponding to zero velocity in the beginning. Then, in every iteration step of the GDM, we are building up velocity to achieve a faster convergence. In formulas, this provides

where is the momentum coefficient controlling how fast velocity is built up. By choosing , we get the original GDM without a velocity vector, see (23). Fine-tuning may lead to faster convergence. We refer to the relevant literature for more on this topic.
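A minimal Python sketch of the momentum-based update on a toy quadratic loss may help to fix ideas. The toy loss, the learning rate and the momentum coefficient are our own illustrative choices; setting the momentum coefficient to zero recovers the plain gradient descent step.

```python
def momentum_gradient_descent(grad, theta0, learning_rate, momentum, n_steps):
    """Iterate v <- momentum * v - learning_rate * grad, theta <- theta + v."""
    theta = list(theta0)
    velocity = [0.0] * len(theta)  # zero velocity in the beginning
    for _ in range(n_steps):
        g = grad(theta)
        velocity = [momentum * v - learning_rate * gi for v, gi in zip(velocity, g)]
        theta = [t + v for t, v in zip(theta, velocity)]
    return theta


def quadratic_grad(theta):
    """Gradient of the toy loss (theta_0 - 1)^2 + 10 * (theta_1 + 2)^2."""
    return [2.0 * (theta[0] - 1.0), 20.0 * (theta[1] + 2.0)]


plain = momentum_gradient_descent(quadratic_grad, [0.0, 0.0], 0.01, 0.0, 200)
fast = momentum_gradient_descent(quadratic_grad, [0.0, 0.0], 0.01, 0.9, 200)
print(plain, fast)
```

On this toy problem the flat direction of the loss is approached slowly by the plain method, while the accumulated velocity brings the momentum variant much closer to the minimum at (1, -2) within the same number of steps.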

3.2. Gradients of the Loss Functions Involved

In Section 2 we have met three different model types of neural networks:

- categorical case with more than two categorical classes;

- Bernoulli case with exactly two categorical classes;

- log-normal case.

In order to apply the momentum-based GDMs, we need to calculate the gradients of the corresponding loss functions of these three model types. As illustrations, we choose the reporting delay T for the categorical case, the payment indicator Z for the Bernoulli case and the total individual claim size Y for the log-normal case.

3.2.1. Categorical Case (with More than Two Categorical Classes)

The loss function for the modeling of the reporting delay T is given in (8). The gradient can be calculated as

We have for the last gradients

for all and . Collecting all terms, we conclude

There remains to calculate the gradients , for all and . This is done using the back-propagation algorithm, which in today’s form goes back to Werbos (1982).
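The structure of this gradient can be made explicit under the assumption that the class probabilities are a softmax of the output-layer regression functions. The symbols $\mu_t$, $\pi_t$, $y_t$ and $\theta$ below are our own shorthand for the regression functions, the class probabilities, the observed class indicators of a single claim and the network parameter; they need not match the notation of (7) and (8), and any constant factor of the loss is dropped.

```latex
\begin{align*}
\pi_t &= \frac{\exp(\mu_t)}{\sum_{s} \exp(\mu_s)}, \qquad
\ell = -\sum_{t} y_t \log \pi_t, \qquad \sum_{t} y_t = 1, \\
\frac{\partial \ell}{\partial \mu_s}
&= -\sum_{t} y_t \left( \mathbb{1}_{\{t = s\}} - \pi_s \right)
 = \pi_s - y_s, \\
\nabla_{\theta}\, \ell
&= \sum_{s} \left( \pi_s - y_s \right) \nabla_{\theta}\, \mu_s .
\end{align*}
```

The first factor depends only on predicted and observed classes, so the remaining work lies in the gradients of the regression functions with respect to the network parameter.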

3.2.2. Bernoulli Case (Two Categorical Classes)

We calculate the gradient for the modeling of the payment indicator Z with corresponding loss function given in (11). We get as in the categorical case above

with gradient

for all . Collecting all terms, we obtain

We again apply back-propagation to calculate the gradient , for all .
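The following Python sketch checks numerically that, for a logistic link, the derivative of the negative Bernoulli log-likelihood with respect to the network output equals the predicted probability minus the observed indicator. The logistic link and the omission of constant factors are assumptions of this illustration and may differ from the exact form of (11).

```python
import math


def sigmoid(mu):
    """Logistic function mapping the network output to a probability."""
    return 1.0 / (1.0 + math.exp(-mu))


def bernoulli_loss(mu, z):
    """Negative Bernoulli log-likelihood for indicator z and network output mu."""
    p = sigmoid(mu)
    return -(z * math.log(p) + (1 - z) * math.log(1.0 - p))


def analytic_gradient(mu, z):
    """Derivative of the loss with respect to mu: probability minus indicator."""
    return sigmoid(mu) - z


def numeric_gradient(mu, z, h=1e-6):
    """Central finite difference approximation of the same derivative."""
    return (bernoulli_loss(mu + h, z) - bernoulli_loss(mu - h, z)) / (2.0 * h)


for mu in (-2.0, 0.0, 1.5):
    for z in (0, 1):
        print(mu, z, analytic_gradient(mu, z), numeric_gradient(mu, z))
```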

3.2.3. Log-Normal Case

Finally, the loss function for the modeling of the total individual claim size Y is given in (14). Hence, for the gradient , we have

where the last gradient , for all , is again calculated using back-propagation.

3.3. Choice of the Numbers of Hidden Neurons

For each modeling step of our simulation machine, we still need to determine the optimal neural network in terms of the numbers and of hidden neurons. These hyperparameters are determined by splitting the original data set into a training set and a validation set, where for each calibration we choose at random 90% of the data for the training set. The training set is then used to fit the models for the different choices of hyperparameters and by minimizing the corresponding (training) in-sample losses of the functions . This is done as described in the previous sections—for given and . The hyperparameter choices and —and model choices, respectively—are then done by choosing the model with the smallest (validation) out-of-sample loss on the validation set.
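The selection procedure can be sketched in Python as follows. To keep the example self-contained, a simple piecewise constant regression with q bins stands in for a neural network with q hidden neurons; this stand-in, the toy data and all function names are our own assumptions.

```python
import random


def split_indices(n, train_share, rng):
    """Randomly split the indices 0, ..., n-1 into training and validation sets."""
    idx = list(range(n))
    rng.shuffle(idx)
    n_train = int(round(train_share * n))
    return idx[:n_train], idx[n_train:]


def fit_bins(q, train):
    """Stand-in model: mean response in each of q equal bins of [0, 1)."""
    sums, counts = [0.0] * q, [0] * q
    for x, y in train:
        b = min(int(x * q), q - 1)
        sums[b] += y
        counts[b] += 1
    overall = sum(y for _, y in train) / len(train)
    return [s / c if c > 0 else overall for s, c in zip(sums, counts)]


def mse(model, data):
    """Mean squared error of the stand-in model on a data set."""
    q = len(model)
    return sum((y - model[min(int(x * q), q - 1)]) ** 2 for x, y in data) / len(data)


def select_model(candidates, fit, loss, data, rng, train_share=0.9):
    """Fit every candidate on the training set; keep the best on validation."""
    train_idx, valid_idx = split_indices(len(data), train_share, rng)
    train = [data[i] for i in train_idx]
    valid = [data[i] for i in valid_idx]
    best = None
    for hyper in candidates:
        model = fit(hyper, train)
        out_of_sample = loss(model, valid)
        if best is None or out_of_sample < best[1]:
            best = (hyper, out_of_sample, model)
    return best


rng = random.Random(42)
data = [(x, x * x + rng.gauss(0.0, 0.05)) for x in (rng.random() for _ in range(1000))]
best_q, best_loss, _ = select_model([1, 2, 4, 8, 16], fit_bins, mse, data, rng)
print(best_q, best_loss)
```

Only the validation loss enters the comparison, so a candidate that merely overfits the training set is not rewarded.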

4. Chain-Ladder Analysis

In this section we use the calibrated stochastic simulation machine to perform a small claims reserving analysis. We generate data from the simulation machine and compare it to the real data. For both data sets, we analyze the resulting claims reporting patterns and the corresponding claims cash flow patterns. For claims reportings, we separate the individual claims by accident year and reporting delays . For claims cash flows, we separate the individual claims again by accident year and aggregate the corresponding payments over the development delays . The reported claims and the claims payments that are available by the end of accounting year 2005 then provide the so-called upper claims reserving triangles. These triangles of reported claims of real and simulated data are shown in Table 2 and Table 3, the triangles of cumulative claims payments of real and simulated data are given in Table 4 and Table 5. At a first glance, these triangles show that the simulated data looks very similar to the real data, with a slightly bigger similarity for claims reportings than for claims cash flows.

Table 2.

Triangle of reported claims of the real data.

Table 3.

Triangle of reported claims of the simulated data.

Table 4.

Triangle of cumulative claims payments (in 10,000 CHF) of the real data.

Table 5.

Triangle of cumulative claims payments (in 10,000 CHF) of the simulated data.

These data sets can be used to perform a chain-ladder (CL) claims reserving analysis. We therefore use Mack’s chain-ladder model; for details we refer to Mack (1993). We calculate the chain-ladder reserves for both the real and the simulated data, and we also calculate Mack’s square-rooted conditional mean square error of prediction.
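For readers less familiar with the method, the chain-ladder point predictions can be sketched in a few lines of Python. The triangle below is made-up toy data, not taken from Tables 2 to 5, and Mack's prediction error is not computed in this sketch.

```python
def chain_ladder(triangle):
    """triangle[i] holds the observed cumulative values of accident year i."""
    n = len(triangle)
    factors = []
    for j in range(n - 1):
        # Use all accident years for which both columns j and j + 1 are observed.
        rows = [row for row in triangle if len(row) > j + 1]
        factors.append(sum(r[j + 1] for r in rows) / sum(r[j] for r in rows))
    reserves = []
    for row in triangle:
        ultimate = row[-1]
        for j in range(len(row) - 1, n - 1):
            ultimate *= factors[j]  # develop the last observation to ultimate
        reserves.append(ultimate - row[-1])
    return factors, reserves


toy_triangle = [
    [100.0, 150.0, 165.0],
    [110.0, 165.0],
    [120.0],
]
factors, reserves = chain_ladder(toy_triangle)
print(factors, reserves, sum(reserves))
```

Applied to a triangle of reported claim counts, the same calculation yields predicted numbers of late reported claims instead of payment reserves.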

We start the analysis on the claims reportings. Using the chain-ladder method, we predict the number of incurred but not yet reported (IBNYR) claims. These are the predicted numbers of late reported claims in the lower triangles in Table 2 and Table 3. The resulting predictions are provided in the 2nd and 5th columns of Table 6. We observe a high similarity between the results on the real and the simulated data. In particular, for all the individual accident years, the chain-ladder predicted numbers of IBNYR claims of the real data and the simulated data are very close to each other. Aggregating over all accident years, the chain-ladder predicted number of the total IBNYR claims is only 0.2% higher for the simulated data compared to the real data. This similarity largely carries over to the prediction uncertainty analysis illustrated by the columns for Mack’s square-rooted conditional mean square error of prediction in Table 6. Indeed, comparing the real and the simulated data, we see that this prediction error is of similar magnitude for most accident years. Only for accident years 2003 and 2004 does it seem notably higher for the real data. From this, we conclude that, at least from a chain-ladder reserving point of view, our stochastic simulation machine provides very reasonable claims reporting patterns.

Table 6.

Chain-ladder predicted numbers of incurred but not yet reported (IBNYR) claims and Mack’s square-rooted conditional mean square error of prediction for the real and the simulated data.

Finally, Table 7 shows the results of the chain-ladder analysis for claims payments. Columns 2 and 5 of that table provide the chain-ladder reserves. These are the payment predictions for the cash flows paid after accounting year 2005 and complete the lower triangles in Table 4 and Table 5. Here, too, we see high similarities between the real data and the simulated data analysis: the corresponding total chain-ladder reserves as well as the corresponding reserves for most of the individual accident years are rather close to each other. In particular, the total chain-ladder reserves are only higher for the simulated data. We only observe slightly shorter cash flow patterns in the simulated data, which partially carries over to the prediction uncertainties illustrated by the columns for Mack’s square-rooted conditional mean square error of prediction in Table 7.

Table 7.

Chain-ladder reserves for claims payments (in 10,000 CHF) and Mack’s square-rooted conditional mean square error of prediction for the real and the simulated data.

5. Conclusions

We have developed a stochastic simulation machine that generates individual claims histories of non-life insurance claims. This simulation machine is based on neural networks which have been calibrated to real non-life insurance data. The inputs of the simulation machine are a portfolio of non-life insurance claims, for which we want to simulate the corresponding individual claims histories, and the two variance parameters (for the total individual claim size, see (13)) and (for the total individual recovery, see Section 2.6). Together with a portfolio generating algorithm, see Appendix B, one can use this simulation machine to simulate as many individual claims histories as desired. In a chain-ladder analysis we have seen that the simulation machine leads to reasonable results, at least from a chain-ladder reserving point of view. Therefore, our simulation machine may serve as a stochastic scenario generator for individual claims histories, which provides a common ground for research in this area; we also refer to the study in Wüthrich (2018b).

Acknowledgments

Our greatest thanks go to Suva, Peter Blum and Olivier Steiger for providing data, their insights and for their immense support.

Author Contributions

Both authors contributed equally to this work.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Descriptive Statistics of the Chosen Data Set

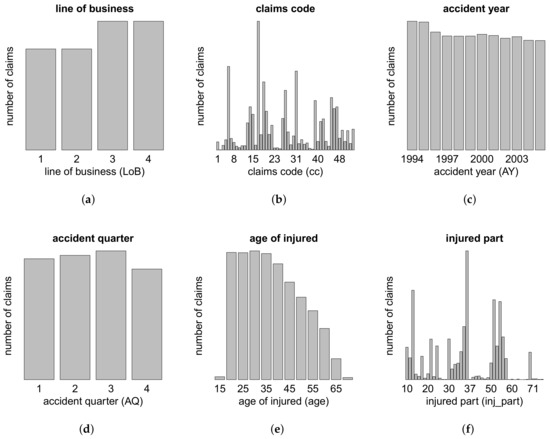

In this appendix we provide descriptive statistics of the data used to calibrate the individual claims history simulation machine. For confidentiality reasons, we can only show aggregate statistics of the claims portfolio, see Figure A1, Figure A2, Figure A3 and Figure A4 below.

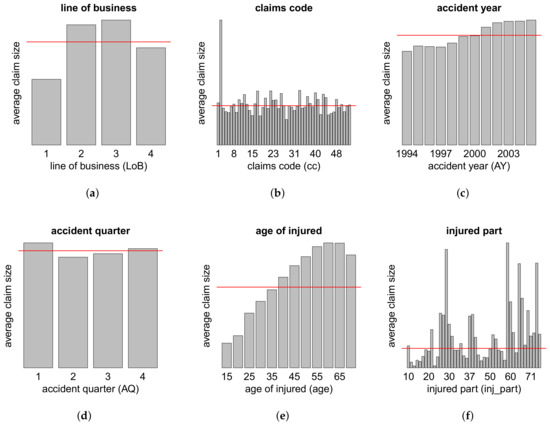

Figure A1.

Portfolio distributions w.r.t. the features (a) LoB; (b) cc; (c) AY; (d) AQ; (e) age and (f) inj_part.

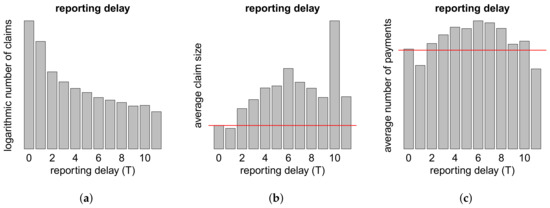

Figure A2.

(a) Logarithmic number of claims; (b) average claim size and (c) average number of payments w.r.t. the reporting delay T; the red lines show the averages.

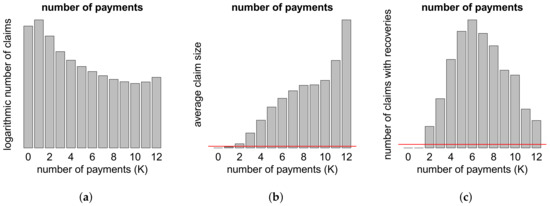

Figure A3.

(a) Logarithmic number of claims; (b) average claim size and (c) number of claims with recoveries w.r.t. the number of payments K; the red lines show the averages.

Figure A4.

Average claim size w.r.t. the features (a) LoB; (b) cc; (c) AY; (d) AQ; (e) age and (f) inj_part; the red lines show the averages.

Appendix B. Procedure of Generating a Synthetic Portfolio

In order to use the stochastic simulation machine derived above, we require a portfolio of features , see (1). Therefore, we need an additional scenario generator that simulates reasonable synthetic portfolios. In this appendix we describe the design of our portfolio scenario generator which provides portfolios similar in structure to the original portfolio.

Our algorithm of synthetic portfolio generation uses the following input parameters:

- expected total number of claims;

- categorical distribution for the allocation of the claims to the four lines of business;

- growth parameters for the numbers of claims in the 12 accident years for each of the four lines of business.

In a first step, we use these parameters to simulate the total number of claims and allocate them to the lines of business LoB and the accident years AY. We start by simulating according to

To determine the distribution of the claims among the 12 accident years within each line of business , we simulate from a normal distribution according to

Then, we define the weights and

for all and . Finally, we set

for all and , to be the expected number of claims in line of business l with accident year j. Conditionally given , we simulate the number of claims in line of business l with accident year j from a Poisson distribution according to

for all and . Note that we have , which justifies the above modeling choices.
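The allocation step can be sketched in Python as follows. The functional form of the accident year weights (proportional to the exponential of cumulated normally distributed growth rates), the parameter values and the function names are assumptions made for this illustration; the text only fixes that the expected numbers of claims per line of business and accident year are built from normalized weights and then used as Poisson means.

```python
import math
import random

N_LOB, N_AY = 4, 12  # lines of business and accident years


def accident_year_weights(growth_mean, growth_sd, rng):
    """Assumed form: weights proportional to exp of cumulated normal growth rates."""
    level, raw = 0.0, []
    for _ in range(N_AY):
        raw.append(math.exp(level))
        level += rng.gauss(growth_mean, growth_sd)
    total = sum(raw)
    return [r / total for r in raw]


def expected_counts(total_expected, lob_probs, weights):
    """Expected number of claims per line of business l and accident year j."""
    return [[total_expected * p * w for w in weights[l]] for l, p in enumerate(lob_probs)]


def poisson(lam, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    threshold, k, prod = math.exp(-lam), 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


rng = random.Random(7)
lob_probs = [0.4, 0.3, 0.2, 0.1]  # hypothetical allocation to the lines of business
weights = [accident_year_weights(0.02, 0.01, rng) for _ in range(N_LOB)]
lam = expected_counts(200.0, lob_probs, weights)
counts = [[poisson(l_j, rng) for l_j in row] for row in lam]
print(sum(map(sum, lam)), sum(map(sum, counts)))
```

Because both the allocation probabilities and the weights are normalized, the Poisson means add up to the expected total number of claims.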

After having simulated the number of claims for each line of business l and accident year j, we need to equip these claims with the remaining feature components cc, AQ, age and inj_part. This is achieved by choosing a multivariate distribution having a Gaussian copula and appropriate marginal densities. These densities and the covariance parameters of the Gaussian copula have been estimated from the real data. For the explicit parametrization, we refer to the R-function Feature.Generation in our simulation package.
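The idea of a Gaussian copula with given marginals can be illustrated with a two-dimensional Python sketch. It is not the parametrization used in Feature.Generation; the correlation, the marginal distributions and the function names are hypothetical.

```python
import math
import random
from bisect import bisect_right


def normal_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def quantile_discrete(u, values, probs):
    """Generalized inverse of a discrete marginal distribution at level u."""
    cum, acc = [], 0.0
    for p in probs:
        acc += p
        cum.append(acc)
    idx = min(bisect_right(cum, u), len(values) - 1)
    return values[idx]


def sample_uniform_pair(rho, rng):
    """Two dependent uniforms obtained from correlated standard normals."""
    z1 = rng.gauss(0.0, 1.0)
    z2 = rho * z1 + math.sqrt(1.0 - rho ** 2) * rng.gauss(0.0, 1.0)
    return normal_cdf(z1), normal_cdf(z2)


rng = random.Random(3)
quarters, quarter_probs = [1, 2, 3, 4], [0.25, 0.25, 0.25, 0.25]  # hypothetical
ages, age_probs = [20, 35, 50, 65], [0.2, 0.4, 0.3, 0.1]          # hypothetical
u1, u2 = sample_uniform_pair(rho=0.3, rng=rng)
feature = (quantile_discrete(u1, quarters, quarter_probs),
           quantile_discrete(u2, ages, age_probs))
print(feature)
```

The dependence enters only through the correlated normals, while each feature component keeps its own marginal distribution.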

Appendix C. Sensitivities of Selected Neural Networks

In this final appendix we consider 11 selected neural networks of our simulation machine and present the impact on the response variable of the respective most influential features. For each neural network considered, we use the corresponding calibration data set, fix a feature component (e.g., the accident quarter AQ) and vary its value over its entire domain (e.g., $\{1, 2, 3, 4\}$ for the accident quarter AQ) to analyze the sensitivities in this feature component.
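This procedure can be sketched in Python as follows. Averaging the predictions over the calibration data is our reading of the procedure, and the prediction function below is a made-up stand-in for a calibrated neural network.

```python
def sensitivity(predict, data, component, domain):
    """Average prediction when one feature component is set to each domain value."""
    result = {}
    for value in domain:
        total = 0.0
        for x in data:
            modified = dict(x)           # copy, so the data set stays untouched
            modified[component] = value  # overwrite the chosen component
            total += predict(modified)
        result[value] = total / len(data)
    return result


def toy_predict(x):
    """Hypothetical stand-in for a network output, linear in AQ and age."""
    return 0.1 * x["AQ"] + 0.01 * x["age"]


toy_data = [
    {"AQ": 1, "age": 30},
    {"AQ": 3, "age": 50},
    {"AQ": 4, "age": 40},
]
curve = sensitivity(toy_predict, toy_data, "AQ", [1, 2, 3, 4])
print(curve)
```

All other feature components keep their observed values, so the resulting curve isolates the effect of the varied component on the response.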

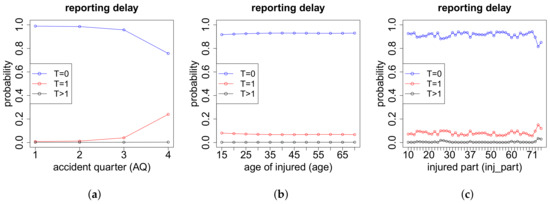

In Figure A5 we analyze the reporting delay T as a function of the features AQ, age and inj_part. Not surprisingly, the accident quarter has the biggest influence, because a claim occurring in December is likely to be reported only in the next accounting year.

Figure A5.

Reporting delay T w.r.t. the features (a) AQ; (b) age and (c) inj_part.

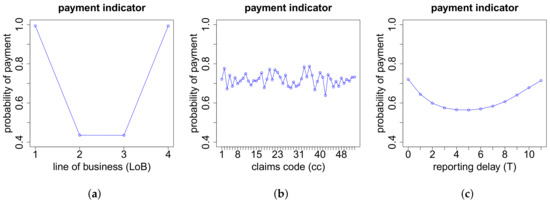

Figure A6 tells us that claims in lines of business one and four almost always have a payment. In contrast, we expect only roughly half of the claims in lines of business two and three to have a payment. Furthermore, the claims code cc causes some variation in the probability of having a payment, and claims with either a small or a large reporting delay T have a higher probability of having a payment than claims with a medium reporting delay.

Figure A6.

Payment indicator Z w.r.t. the features (a) LoB; (b) cc and (c) reporting delay T.

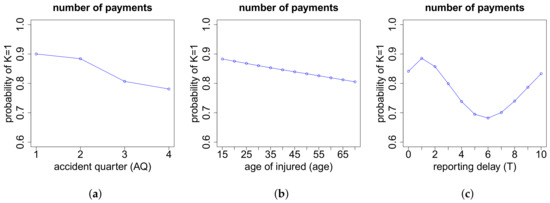

Recall that in determining the number of payments K, we use two neural networks, where in the first one we model whether we have $K = 1$ or $K > 1$ payments. According to Figure A7, claims that occur later in a year tend to have a higher probability of having more than one payment. The same holds true with increasing age of the injured. In passing from reporting delay to , the probability of having only one payment increases. But then we observe a sinusoidal shape in that probability as a function of T.

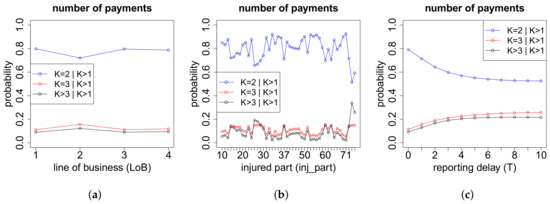

The second neural network used to determine the number of payments K models the distribution of K, conditioned on $K > 1$. As we see in Figure A8, claims in line of business two tend to have more payments than claims in other lines of business, and both inj_part and reporting delay T heavily influence the number of payments.

Figure A7.

Indicator whether we have $K = 1$ or $K > 1$ payments w.r.t. the features (a) AQ; (b) age and (c) reporting delay T.

Figure A8.

Conditional distribution of the number of payments K, given $K > 1$, w.r.t. the features (a) LoB; (b) inj_part and (c) reporting delay T.

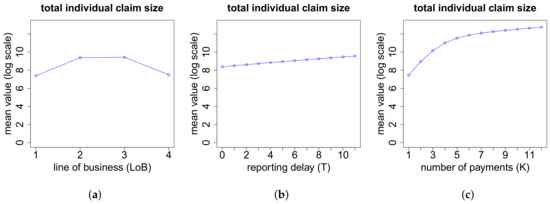

In Figure A9 we present sensitivities for the expected total individual claim size Y on the log scale. The main drivers here are the line of business LoB and the number of payments K.

Figure A9.

Total individual claim size Y (on log scale) w.r.t. the features (a) LoB; (b) reporting delay T and (c) number of payments K.

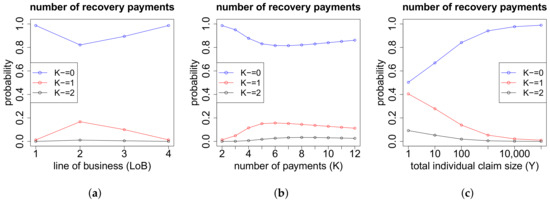

Figure A10 tells us that claims in lines of business one and four almost never have a recovery. Moreover, the probability of having at least one recovery payment first increases with the number of payments K but then slightly decreases again. Finally, up to of the claims with a small total individual claim size Y (of less than 10 CHF) have a recovery. This also comprises claims whose recovery is almost equal to the total gross claim amount, leading to a small net claim size. In general, the higher the total individual claim size, the less likely are recovery payments.

Figure A10.

Number of recovery payments w.r.t. the features (a) LoB; (b) number of payments K and (c) total individual claim size Y.

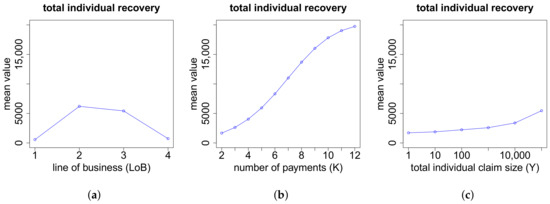

According to Figure A11, the total individual recovery is substantially higher for claims in lines of business two and three, compared to claims in lines of business one and four. Furthermore, if we have a recovery, then the higher the number of payments K and the total individual claim size Y, the higher also the recovery, where the increase w.r.t. the number of payments is markedly more pronounced.

Figure A11.

Total individual recovery w.r.t. the features (a) LoB; (b) number of payments K and (c) total individual claim size Y.

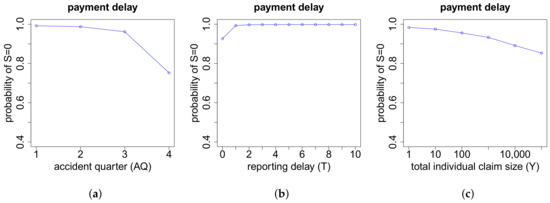

In determining the payment delay S for claims with exactly one payment, we use two neural networks. In the first one, we model whether $S = 0$ or $S > 0$, and in the second one, we consider the conditional distribution of S, given $S > 0$. Here we only present sensitivities for the first neural network. We observe, see Figure A12, that the probability of a payment delay equal to zero decreases with increasing accident quarter AQ and increasing total individual claim size Y. In particular, claims that occur in the last quarter of a year have a considerably higher probability of having a payment delay. This might be explained by claims for which the short time lag between the accident date and the end of the year only suffices for claims reporting but not for claims payments, leading to a payment delay. Finally, claims with a reporting delay almost never have an additional payment delay.

Figure A12.

Indicator whether we have payment delay $S = 0$ or $S > 0$ in the case of $K = 1$ payment w.r.t. the features (a) AQ; (b) reporting delay T and (c) total individual claim size Y.

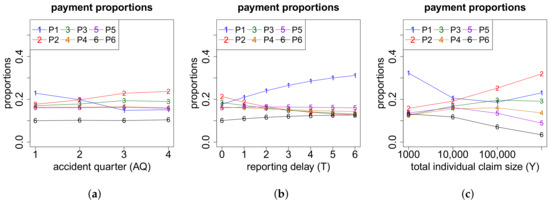

As a representative of the neural networks that calculate the proportions with which the total gross claim amount is distributed among the positive payments, we choose the one for . According to Figure A13, we see some monotonicity, but apart from that these proportions do not vary considerably. For claims which occur early during a year or have a high reporting delay T or a comparably small total individual claim size Y, the biggest proportion of the total gross claim amount is paid in the first (positive) payment.

Figure A13.

Proportions of the total gross claim amount paid in the positive payments w.r.t. the features (a) AQ; (b) reporting delay T and (c) total individual claim size Y.

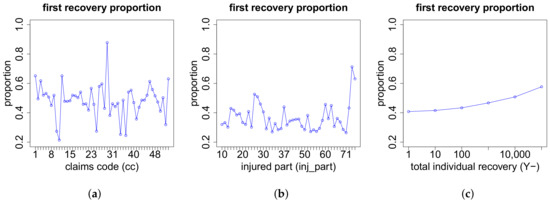

According to Figure A14, in the case of recovery payments, the proportion of the total individual recovery that is paid in the first recovery payment varies substantially for the different values of the features cc and inj_part. We also observe that the higher the total individual recovery, the higher the proportion paid in the first recovery.

Figure A14.

Proportion of the total individual recovery paid in the first recovery payment in the case of recovery payments w.r.t. the features (a) cc; (b) inj_part and (c) total individual recovery .

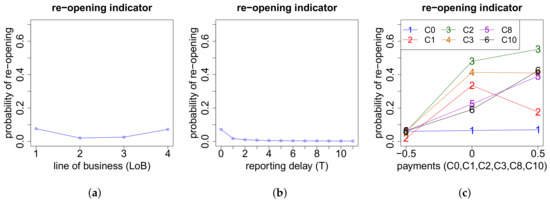

In Figure A15 we see that claims in lines of business one and four have a higher re-opening probability than claims in lines of business two and three. Moreover, the higher the reporting delay T of a claim, the lower the rate of reopening. Finally, the probability of re-opening heavily depends on the cash flow. In order to not overload the plot, we only show sensitivities w.r.t. the payments . Recall that for this neural network, the yearly payments are coded with the values and , see (22). Summarizing, one can say that if we have a payment after the first development year, then the probability of re-opening is quite high.

Figure A15.

Re-opening indicator V w.r.t. the features (a) LoB; (b) reporting delay T and (c) yearly payments .

References

- Antonio, Katrien, and Richard Plat. 2014. Micro-Level Stochastic Loss Reserving for General Insurance. Scandinavian Actuarial Journal 7: 649–69.

- Cybenko, George. 1989. Approximation by Superpositions of a Sigmoidal Function. Mathematics of Control, Signals, and Systems (MCSS) 2: 303–14.

- Hiabu, Munir, Carolin Margraff, Maria D. Martínez-Miranda, and Jens P. Nielsen. 2016. The Link between Classical Reserving and Granular Reserving through Double Chain-Ladder and its Extensions. British Actuarial Journal 21: 97–116.

- Hornik, Kurt, Maxwell Stinchcombe, and Halbert White. 1989. Multilayer Feedforward Networks are Universal Approximators. Neural Networks 2: 359–66.

- Jessen, Anders H., Thomas Mikosch, and Gennady Samorodnitsky. 2011. Prediction of Outstanding Payments in a Poisson Cluster Model. Scandinavian Actuarial Journal 3: 214–37.

- Mack, Thomas. 1993. Distribution-Free Calculation of the Standard Error of Chain Ladder Reserve Estimates. ASTIN Bulletin 23: 213–25.

- Martínez-Miranda, Maria D., Jens P. Nielsen, Richard J. Verrall, and Mario V. Wüthrich. 2015. Double Chain Ladder, Claims Development Inflation and Zero-Claims. Scandinavian Actuarial Journal 5: 383–405.

- Pigeon, Mathieu, Katrien Antonio, and Michel Denuit. 2013. Individual Loss Reserving with the Multivariate Skew Normal Framework. ASTIN Bulletin 43: 399–428.

- Rumelhart, David E., Geoffrey E. Hinton, and Ronald J. Williams. 1986. Learning Representations by Back-Propagating Errors. Nature 323: 533–36.

- Taylor, Greg, Gráinne McGuire, and James Sullivan. 2008. Individual Claim Loss Reserving Conditioned by Case Estimates. Annals of Actuarial Science 3: 215–56.

- Verrall, Richard J., and Mario V. Wüthrich. 2016. Understanding Reporting Delay in General Insurance. Risks 4: 25.

- Werbos, Paul J. 1982. Applications of Advances in Nonlinear Sensitivity Analysis. In System Modeling and Optimization. Paper Presented at the 10th IFIP Conference, New York City, NY, USA, 31 August–4 September 1981. Edited by Rudolf F. Drenick and Frank Kozin. Berlin and Heidelberg: Springer, pp. 762–70.

- Wüthrich, Mario V. 2018a. Machine Learning in Individual Claims Reserving. To appear in Scandinavian Actuarial Journal 25: 1–16.

- Wüthrich, Mario V. 2018b. Neural Networks Applied to Chain-Ladder Reserving. SSRN Manuscript.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).