Deep-Learning-Based AI-Model for Predicting Dental Plaque in the Young Permanent Teeth of Children Aged 8–13 Years

Abstract

1. Introduction

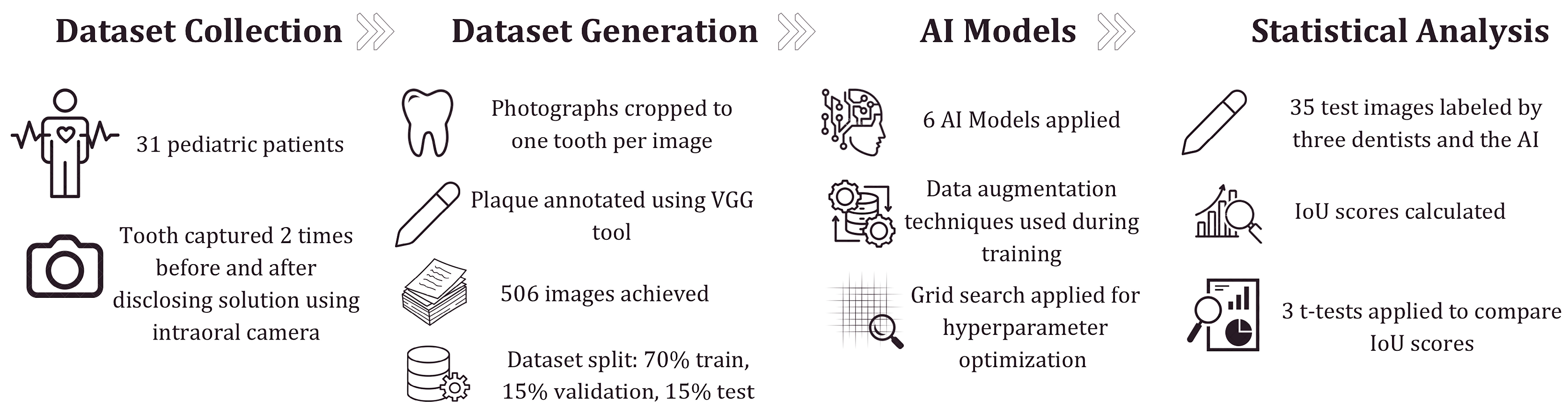

2. Materials and Methods

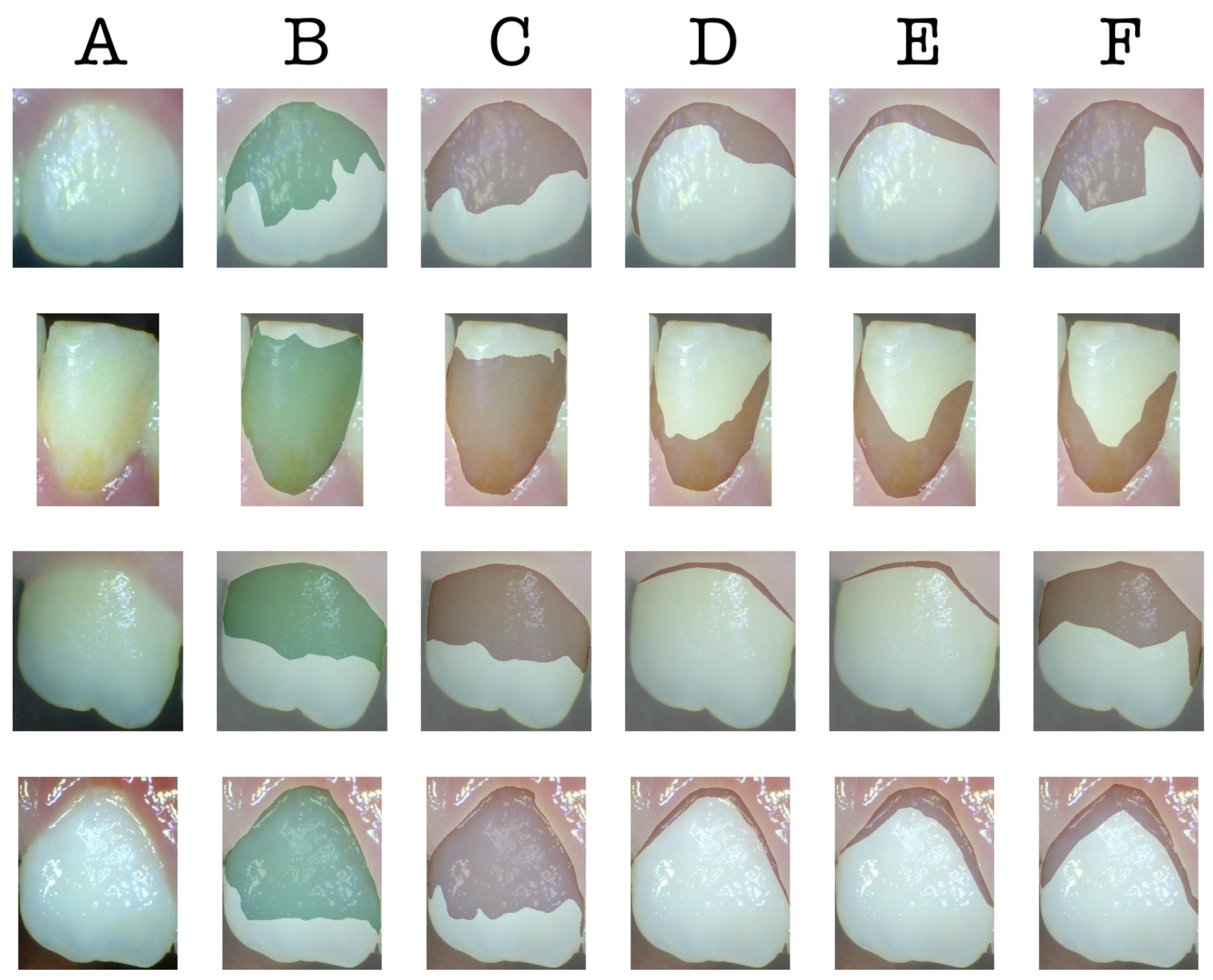

2.1. Dataset Collection

2.2. The Architecture of Deep Learning Models

2.3. Evaluation Metrics for Image Segmentation
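The four metrics reported for the models and the dentists have standard pixel-level definitions. The block below assumes that each predicted mask P is compared with a binary reference mask G. TP counts plaque pixels that are correctly predicted. FP counts pixels that are predicted but absent from the reference. FN counts pixels that are in the reference but missed. This text does not state whether scores were computed per image and then averaged, or pooled over all pixels.

```latex
\begin{aligned}
\text{Recall} &= \frac{TP}{TP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP},\\[4pt]
\text{Dice} &= \frac{2\,TP}{2\,TP + FP + FN}, &
\text{IoU} &= \frac{TP}{TP + FP + FN} = \frac{|P \cap G|}{|P \cup G|},\\[4pt]
\text{Dice} &= \frac{2\,\text{IoU}}{1 + \text{IoU}} \quad \text{(for a single mask pair)}
\end{aligned}
```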

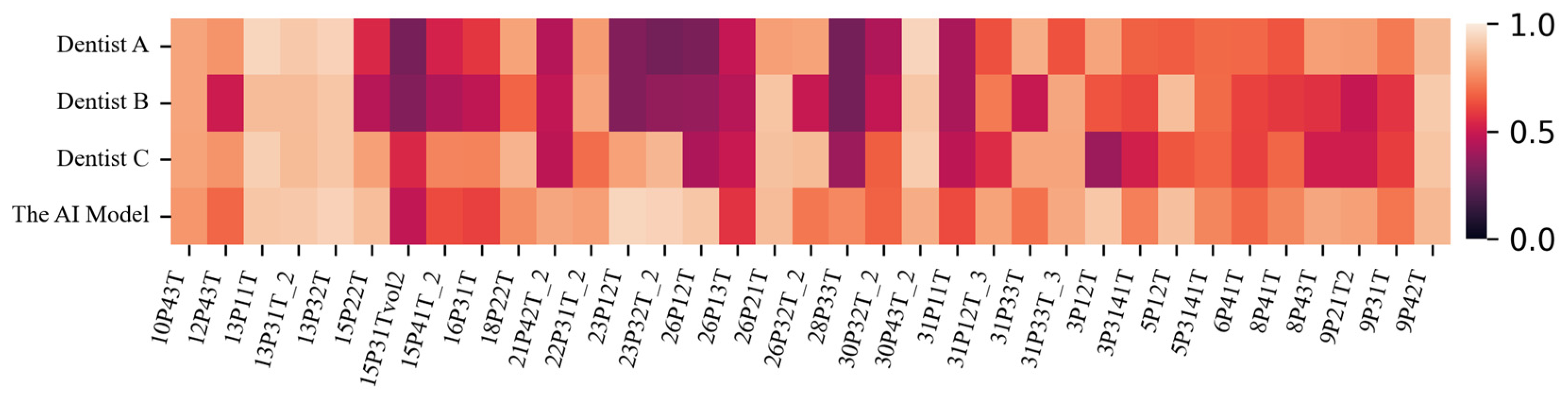
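As a minimal sketch, the standard pixel-wise definitions of recall, precision, Dice coefficient and IoU can be computed with NumPy from a predicted mask and a reference mask. The function name `segmentation_metrics` and the small `eps` guard against empty masks are illustrative assumptions. They are not taken from the study's code.

```python
from typing import Dict

import numpy as np


def segmentation_metrics(pred, truth, eps: float = 1e-7) -> Dict[str, float]:
    """Pixel-wise recall, precision, Dice and IoU for one pair of binary masks.

    `pred` and `truth` are same-shaped arrays whose nonzero (True) pixels mark
    plaque. `eps` is a hypothetical guard against division by zero.
    """
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    if pred.shape != truth.shape:
        raise ValueError("pred and truth must have the same shape")

    tp = int(np.logical_and(pred, truth).sum())   # predicted and annotated
    fp = int(np.logical_and(pred, ~truth).sum())  # predicted but not annotated
    fn = int(np.logical_and(~pred, truth).sum())  # annotated but missed

    return {
        "recall": tp / (tp + fn + eps),
        "precision": tp / (tp + fp + eps),
        "dice": 2 * tp / (2 * tp + fp + fn + eps),
        "iou": tp / (tp + fp + fn + eps),
    }


# Toy 1x4 masks: one true positive, one false positive, one false negative.
pred = np.array([[1, 1, 0, 0]])
truth = np.array([[1, 0, 1, 0]])
metrics = segmentation_metrics(pred, truth)
print(metrics)  # dice is about 0.5, iou about 0.333
```

Averaging the per-image dictionaries over a test set gives dataset-level figures. Pooling the TP, FP and FN counts over all images instead can give different numbers, so the aggregation choice should be reported alongside the scores.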

2.4. Statistical Analysis of the Difference Between the AI Model and Dentists
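The AI-versus-dentist comparisons are reported with non-integer degrees of freedom, for example 53.742 for the AI model against Dentist A. Non-integer values are what Welch's unequal-variances t-test produces. The formulas below assume that test was used, which is an inference because this text does not name it. Here x̄_i, s_i^2 and n_i are the mean, sample variance and number of observations (for example, per-image IoU scores) in group i.

```latex
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{s_1^2/n_1 + s_2^2/n_2}},
\qquad
\nu = \frac{\left(s_1^2/n_1 + s_2^2/n_2\right)^2}
           {\dfrac{\left(s_1^2/n_1\right)^2}{n_1 - 1} + \dfrac{\left(s_2^2/n_2\right)^2}{n_2 - 1}}
```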

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| VGG | Visual Geometry Group |
| IoU | Intersection Over Union |


| Type | Criteria |
|---|---|
| Inclusion Criteria | Children aged 8–13 years |
| | Individuals in the mixed dentition phase |
| | Patients presenting at the Pediatric Dentistry Clinic, Hamidiye Faculty of Dental Medicine |
| | Patients randomly selected from those attending routine dental check-ups at a public hospital |
| | Individuals who were unaware of the study beforehand (i.e., had not received any prior oral health education or motivation) |
| Exclusion Criteria | Anterior young permanent teeth with enamel defects such as caries, hypoplasia, or hypomineralization |
| | Teeth with restorations or prosthetic treatments |
| | Young permanent teeth located in the posterior region |
| | Primary teeth |

| Model Name | Recall | Precision | Dice Coefficient | IoU |
|---|---|---|---|---|
| DeepLabV3+ | 0.7606 | 0.6664 | 0.7081 | 0.6575 |
| Mask R-CNN (Detectron2) | 0.8027 | 0.7471 | 0.7395 | 0.7229 |
| Super Vision UNet | 0.8277 | 0.8203 | 0.8240 | 0.7793 |
| UNet | 0.8095 | 0.8006 | 0.8037 | 0.7607 |
| UNet Transformer | 0.7718 | 0.8782 | 0.8215 | 0.7845 |
| YOLOv8 | 0.5409 | 0.6600 | 0.5799 | 0.6157 |
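To compare the six models on all four metrics at once, the values in the table above can be loaded into a short script. This is a sketch only. `RESULTS`, `best_model_per_metric` and `ranking` are hypothetical names, and the numbers are copied directly from the table.

```python
# Recall, precision, Dice coefficient and IoU as printed in the results table.
METRICS = ("recall", "precision", "dice", "iou")
RESULTS = {
    "DeepLabV3+": (0.7606, 0.6664, 0.7081, 0.6575),
    "Mask R-CNN (Detectron2)": (0.8027, 0.7471, 0.7395, 0.7229),
    "Super Vision UNet": (0.8277, 0.8203, 0.8240, 0.7793),
    "UNet": (0.8095, 0.8006, 0.8037, 0.7607),
    "UNet Transformer": (0.7718, 0.8782, 0.8215, 0.7845),
    "YOLOv8": (0.5409, 0.6600, 0.5799, 0.6157),
}


def best_model_per_metric(results):
    """Return {metric: name of the model with the highest value on it}."""
    best = {}
    for i, metric in enumerate(METRICS):
        best[metric] = max(results, key=lambda name: results[name][i])
    return best


def ranking(results, metric):
    """Model names sorted from highest to lowest on a single metric."""
    i = METRICS.index(metric)
    return sorted(results, key=lambda name: results[name][i], reverse=True)


best = best_model_per_metric(RESULTS)
print(best)
print(ranking(RESULTS, "dice"))
```

On these values, Super Vision UNet scores highest on recall and Dice, while UNet Transformer scores highest on precision and IoU. Which model counts as "best" therefore depends on which metric is prioritized.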

| Model Name | Image Size | Batch Size | Optimizer | Learning Rate |
|---|---|---|---|---|
| DeepLabV3+ | 192 | 2 | Adam | |
| Mask R-CNN (Detectron2 with R50-DC5) | 256 | 8 | SGD | |
| Super Vision UNet | 128 | 2 | RMSProp | |
| UNet | 192 | 4 | Adam | |
| UNet Transformer | 256 | 4 | RMSProp | |
| YOLOv8 | 128 | 4 | Adam | |
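Anyone setting up comparable training runs might capture the hyperparameter table above as a typed configuration. The sketch below is a minimal version under stated assumptions. `TrainingConfig`, `CONFIGS` and `by_optimizer` are hypothetical names. Learning rates are left as `None` because the table gives no values. "Image Size" is assumed to be the side length in pixels of a square input.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class TrainingConfig:
    """One row of the hyperparameter table (hypothetical container)."""
    model: str
    image_size: int  # assumption: side length in pixels of a square input
    batch_size: int
    optimizer: str
    learning_rate: Optional[float] = None  # no value given in the table


CONFIGS = [
    TrainingConfig("DeepLabV3+", 192, 2, "Adam"),
    TrainingConfig("Mask R-CNN (Detectron2 with R50-DC5)", 256, 8, "SGD"),
    TrainingConfig("Super Vision UNet", 128, 2, "RMSProp"),
    TrainingConfig("UNet", 192, 4, "Adam"),
    TrainingConfig("UNet Transformer", 256, 4, "RMSProp"),
    TrainingConfig("YOLOv8", 128, 4, "Adam"),
]


def by_optimizer(configs: List[TrainingConfig]) -> Dict[str, List[str]]:
    """Group model names by the optimizer listed for them."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for cfg in configs:
        groups[cfg.optimizer].append(cfg.model)
    return dict(groups)


print(by_optimizer(CONFIGS))
```

A frozen dataclass keeps each row immutable, and the explicit `None` marks the missing learning rates rather than silently substituting a default.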

| | Recall | Precision | Dice Coefficient | IoU |
|---|---|---|---|---|
| Dentist A | 0.5324 | 0.8661 | 0.6122 | 0.6565 ± 0.204 |
| Dentist B | 0.4405 | 0.8652 | 0.5304 | 0.6065 ± 0.196 |
| Dentist C | 0.6352 | 0.8494 | 0.6785 | 0.6898 ± 0.170 |
| The AI model | 0.7796 | 0.8398 | 0.7942 | 0.7783 ± 0.115 |

| Comparison | t | df | p |
|---|---|---|---|
| AI model & Dentist A | −3.077 | 53.742 | 0.003 |
| AI model & Dentist B | −4.467 | 55.009 | < 0.001 |
| AI model & Dentist C | −2.549 | 59.799 | 0.013 |
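Comparisons like these can be computed from two lists of per-image scores with standard-library Python. The sketch assumes Welch's unequal-variances t-test. That is an inference from the fractional degrees of freedom in the table, not something stated in this text. `welch_t_test` is a hypothetical helper, and the inputs below are toy numbers, not study data.

```python
import math
import statistics
from typing import Sequence, Tuple


def welch_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    The sign of t follows mean(a) - mean(b), so swapping the groups flips it.
    """
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    # Squared standard error of each mean, using the sample (n - 1) variance.
    se1 = statistics.variance(a) / n1
    se2 = statistics.variance(b) / n2
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Toy numbers only, chosen so the result is easy to verify by hand.
t_stat, dof = welch_t_test([1, 2, 3, 4], [2, 4, 6, 8])
print(round(t_stat, 4), round(dof, 4))  # -1.7321 4.4118
```

To get a two-sided p-value, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same Welch test. The sign of t depends only on which group is passed first, which is worth keeping in mind when reading the negative t values above.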

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tez, B.Ç.; Güzel, Y.; Kızıltan Eliaçık, B.B.; Aydın, Z. Deep-Learning-Based AI-Model for Predicting Dental Plaque in the Young Permanent Teeth of Children Aged 8–13 Years. Children 2025, 12, 475. https://doi.org/10.3390/children12040475
