Can Functional Cognitive Assessments for Children/Adolescents Be Transformed into Digital Platforms? A Conceptual Review

Highlights

- Most of the functional cognitive assessments for children and adolescents reviewed here (n = 13) that have high ecological validity remain unavailable in digital formats, highlighting a significant gap between traditional tools and technology-based solutions.

- Digitization of these assessments offers potential benefits, such as improved accessibility, precision in data collection, and scalability; however, replicating real-life contexts and capturing strategy use digitally remain challenging.

- Innovative digital tools are needed that achieve high ecological validity by preserving real-world context and the observation of strategy use, while maximizing digital availability through advanced technologies (e.g., VR, mobile platforms).

- The proposed conceptual framework can guide clinicians, researchers, and developers in prioritizing features for future technology-enhanced assessments, promoting evidence-based, context-sensitive evaluation practices.

Abstract

1. Introduction

- Examine the current extent and nature of adaptations of traditional performance-based cognitive assessments into digital platforms.

- Compare the ecological validity and scoring metrics of traditional tools versus digital platforms.

- Identify opportunities and propose evidence-informed recommendations for the future development of digital platforms for functional cognition assessment in children and adolescents.

2. Materials and Methods

- Structured search query: The following prompt was entered into Elicit’s “Find Papers” feature, which retrieves relevant academic articles: “Find papers related to the following topic—transforming traditional functional cognitive assessments into digital platforms: feasibility, effectiveness, and potential advantages for children and youth.”

- Filtering and refining results: The built-in filters were used to restrict results by publication year (e.g., the last 10 years, to focus on recent advancements) and to English-language publications.

- Extracting key information in the following categories: (a) title, authors, publication year, link to full text, and number of citations; (b) abstract summary; (c) performance-based (yes/no); (d) transformation to digital platform (yes/no); (e) remote administration (yes/no); and (f) advantages and disadvantages.

- Based on this selection, a second thorough manual analysis was applied, focusing on identifying manuscripts that discussed performance-based cognitive assessments on either traditional or digital platforms. This step reduced the initial 240 papers to 45, which were exported into a spreadsheet for further in-depth manual analysis by all the researchers.

- To mitigate potential biases from the AI-assisted search, such as under- or over-retrieval of certain literature, all results were manually reviewed. This screening excluded papers that discussed only intervention tools (e.g., computer programs, mobile applications, web and video conferencing platforms, computerized cognitive behavioral therapy interventions, cognitive training, and neurofeedback) rather than assessment tools. Papers on non-performance-based cognitive assessments, such as interviews, were also excluded, as were papers involving participants other than children or adolescents and items the researchers did not identify as academic manuscripts. Thirteen papers remained, covering various assessment methods, including traditional paper-and-pencil tests, tablet-based assessments, computerized neuropsychological tests, game-based assessments, and remote teleassessments.
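The extraction and exclusion steps above can be expressed as a simple record-and-filter procedure. The sketch below is illustrative only: the `PaperRecord` fields loosely mirror extraction categories (a) to (f), and the screening flags (`assesses_rather_than_intervenes`, `child_or_adolescent_sample`, `academic_manuscript`) are hypothetical names standing in for the reviewers' manual judgments. It is not an Elicit export format, and the example records are invented, not papers from this review.

```python
from dataclasses import dataclass


@dataclass
class PaperRecord:
    """One extracted paper; fields loosely follow extraction categories (a)-(f).

    All field names are hypothetical and are not an Elicit export schema.
    """
    title: str                           # (a) authors/year/link/citations omitted here
    performance_based: bool              # (c)
    digital_platform: bool               # (d) recorded, not an exclusion criterion
    remote_administration: bool          # (e) recorded, not an exclusion criterion
    abstract_summary: str = ""           # (b)
    advantages_disadvantages: str = ""   # (f)
    # Flags a reviewer would set during manual screening (hypothetical names).
    assesses_rather_than_intervenes: bool = True
    child_or_adolescent_sample: bool = True
    academic_manuscript: bool = True


def passes_screening(paper: PaperRecord) -> bool:
    """Return True only if the paper survives every manual exclusion criterion."""
    return (
        paper.assesses_rather_than_intervenes  # drop intervention-only tools
        and paper.performance_based            # drop e.g. interview-based measures
        and paper.child_or_adolescent_sample   # drop other populations
        and paper.academic_manuscript          # drop non-academic items
    )


def screen(papers: list[PaperRecord]) -> list[PaperRecord]:
    """Keep the papers that pass screening, preserving input order."""
    return [p for p in papers if passes_screening(p)]


# Hypothetical example records (not papers from the review).
sample = [
    PaperRecord("Tablet-based EF test", True, True, False),
    PaperRecord("Parent interview study", False, False, False),
    PaperRecord("Cognitive training app", True, True, True,
                assesses_rather_than_intervenes=False),
]
print([p.title for p in screen(sample)])  # ['Tablet-based EF test']
```

Keeping the digital-platform and remote-administration fields separate from the exclusion flags reflects the procedure described: those categories were extracted for analysis, whereas only the assessment-versus-intervention, performance-based, population, and manuscript-type criteria removed papers.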

3. Results

3.1. Trends in Adapting Traditional Performance-Based Cognitive Assessments into Digital Platforms

| Assessment | Purpose: To … | Description | Population: Developed for … | Scoring Metric | Age Range (Years) | Psychometric Properties: Reliability and Validity Types Assessed | Digital Platform |
|---|---|---|---|---|---|---|---|
| Weekly Calendar Planning Activity (WCPA) [33] | examine the impact of EF difficulties on the ability to perform daily activities involving multiple steps | Participants enter a list of appointments into a weekly schedule according to specified rules. There are three difficulty levels. | adults and adapted for children and adolescents | Number of accurate appointments, rules followed, number of strategies, planning time, total time, efficiency score | 6–21 | Interrater reliability [34], test–retest reliability among college students [35], discriminant validity [31,35,36]. Normative data are available for adolescents aged 12–18 years [37]. | No digital version available |
| Test of Everyday Attention for Children (TEA-Ch) [38] | measure multiple aspects of attention. The second edition (TEA-Ch2) [39] provides a simplified arrangement for ages 5–7 years and an extended arrangement for ages 8–15 years. | A battery of game-like assessments comprising nine distinct tasks | adults and adapted for children and adolescents | Sustained attention, selective attention, and attentional control | 6–16 | Test–retest reliability [40], convergent validity [40], discriminant validity [41,42], construct validity [41,42,43] | TEA-Ch2 includes a computer program that records reaction times, accuracy, and scores |
| Behavioral Assessment of the Dysexecutive Syndrome for Children (BADS-C) [44] | evaluate EF through tasks that simulate real-life scenarios and problem-solving demands | A battery of tasks, including the Playing Cards, Water, and Key Search tests and three versions of the Zoo Map Test | adults and adapted for children and adolescents | Total time, planning time, and number of errors | 8–16 | Interrater reliability [44], ecological validity, construct validity [45,46], discriminant validity (e.g., [47]), concurrent validity [47]. Norms are available from 7 years of age [44]. | No digital version available |
| Children’s Cooking Task [48] | examine EF, problem-solving, and sequencing skills through a cooking task simulation | The child prepares a chocolate cake and juice using the recipe provided. | adults and adapted for children and adolescents | Goal accomplishment, dangerous behavior, need for adult assistance, total time, total number of errors (additions, omissions, comments/questions, estimation errors, substitutions, sequence errors, control errors, context neglect, environmental adherence, purposeless actions and displacements, dependency, inappropriate behavior) | 8–20 | Internal consistency, test–retest reliability, discriminant validity, concurrent validity [32,48] | No digital version available |
| Do-Eat performance-based assessment [49] | evaluate areas of strength and difficulty in activities of daily living and instrumental activities of daily living among children with various disorders, and help define therapeutic goals for occupational therapy intervention focusing on motor, EF, sensory, and emotional skills | Conducted in a natural setting and involving three tasks: making a sandwich, preparing chocolate milk, and completing a certificate of achievement | children with neurodevelopmental disorders | Total time, total score, cue scores, sensorimotor skills, EF skills (attention, initiation, sequencing, shifting, spatial organization, temporal organization, inhibition, problem-solving, remembering instructions), and task performance | 5–8 | Internal consistency, interrater reliability, construct validity, concurrent validity [49,50] | No digital version available |
| Children’s Kitchen Task Assessment [51] | assess EFs and process skills during cooking activities, focusing on problem-solving and error detection | A Play-Doh task accompanied by written and pictorial instructions; the child receives examiner-provided cues as needed to complete the activity successfully | adults and adapted for children and adolescents | Total time, total score, number of cues, organization score | 8–12 | Interrater reliability, internal consistency [51,52] | No digital version available |
| Preschool Executive Task Assessment [53] | assess EFs among young children and determine the level of assistance they need to accomplish the task | The child is instructed to draw a caterpillar picture and receives a box containing the necessary materials and a comprehensive illustrated instruction book. | preschool children | Total time, total cues, total score, performance measures (working memory, organization, emotional ability, distractibility) | 3–6 | Interrater reliability [53], concurrent validity [52,54] | No digital version available |
| Children’s Memory Test (CMT) [55] | measure aspects of memory such as immediate and delayed recall and meta-memory abilities in children (a second version, CMT-2, is available) | A memory task involving four scenes of everyday living situations, each containing 20 pictures of objects | adults and adapted for children and adolescents | Immediate recall, delayed recall, meta-memory (performance, prediction, performance estimation), and strategy use | 5–16 | Internal consistency, content validity, construct validity, concurrent validity [56] | Transferred to digital format |
| Cambridge Neuropsychological Test Automated Battery (CANTAB) [57] | assess cognitive abilities such as visual memory, visual attention, and working memory/planning | Computerized neuropsychological tests that assess various cognitive functions; in this flexible battery, the researcher can select subtasks based on the participant’s interests | adults and adapted for children and adolescents | Attention and psychomotor speed (mean reaction time, correct responses, false alarms, omission errors, sensitivity index, response variability, movement time); EF (number of problems solved, total errors, mean initial thinking time, between/within errors, total stages completed, pre-extradimensional shift errors, total trials completed, strategy score); emotional/social cognition (number of correctly identified emotions, accuracy per emotion, e.g., happiness, fear); memory (total correct responses, trials to success/trials to criterion, mean correct latency (reaction time), % correct, delayed recall accuracy) | 4–90 | Internal consistency, construct validity [58], discriminant validity [59] | Developed as digital |
| Tower of London Test [60] | measure planning and problem-solving abilities | The participant solves a problem using two wooden towers and balls, rearranging the balls to match the examiner’s tower configuration within a specified time and number of moves. Different difficulty levels and versions (with different numbers of balls) exist. | children with neurodevelopmental disorders | Total score, planning time, task level achieved, execution time | 6–80 (digital version intended for 5–53) | Cronbach’s alpha, convergent validity, discriminant validity [61] | Transferred to digital format |
| Wisconsin Card Sorting Test for children (WCST) [62] | assess abstract reasoning, cognitive flexibility, and EFs by evaluating the ability to adapt to changing sorting rules | Four stimulus cards and 128 response cards with printed objects that differ in number, color, and shape. The child matches the response cards to the stimulus cards and receives feedback (correct or incorrect). A short form with 64 cards is available. | adults and adapted for children and adolescents | Total number of correct responses, total errors, perseverative responses, perseverative errors, non-perseverative errors, categories completed, number of trials to complete the first category, % conceptual level responses, failure to maintain set | 6.5–89.0 | Norms are available from 6 years, 6 months of age [62] | Transferred to digital format |
| The Birthday Task Assessment [63] | assess performance in a complex, multistep task requiring EF abilities | A role-playing scenario related to a birthday party in which the child must complete three tasks of varying difficulty according to specific rules: prepare two peanut butter and jelly sandwiches, wrap two birthday presents, and prepare a card for the birthday party | children with neurodevelopmental disorders | Total time, broken rules, errors (omission, object substitution, action addition, total errors) | 8–16 | Interrater reliability [63] | No digital version available |
| Adaptive Cognitive Evaluation Explorer (ACE-X) [64] | assess cognitive abilities, including working memory, attention, and cognitive flexibility; adapted from the Adaptive Cognitive Evaluation-Classroom (ACE-C) | A mobile EF assessment tool comprising 15 tasks in real-world settings; an incorporated algorithm allows the same tasks to be administered repeatedly without losing sensitivity to low performance levels | anyone aged 7–107 years experiencing cognitive difficulties | Processing speed, working memory, inhibitory control, and cognitive flexibility | 7–107 | Intraclass correlation coefficients, test–retest reliability, concurrent validity [64] | Developed as digital |
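To make the digital-availability pattern in the table explicit, the Digital Platform column can be tallied directly. In this minimal sketch the short instrument keys are shorthand chosen here, the status strings are transcribed from the table, and treating the TEA-Ch2 computer program as its own category (rather than as "transferred") is a classification choice made for illustration.

```python
from collections import Counter

# Digital Platform column of the Section 3.1 table, one entry per instrument.
# Keys are shorthand labels chosen for this sketch.
DIGITAL_STATUS = {
    "WCPA": "No digital version available",
    "TEA-Ch": "Computer program (TEA-Ch2)",
    "BADS-C": "No digital version available",
    "Children's Cooking Task": "No digital version available",
    "Do-Eat": "No digital version available",
    "Children's Kitchen Task Assessment": "No digital version available",
    "Preschool Executive Task Assessment": "No digital version available",
    "CMT": "Transferred to digital format",
    "CANTAB": "Developed as digital",
    "Tower of London": "Transferred to digital format",
    "WCST": "Transferred to digital format",
    "Birthday Task Assessment": "No digital version available",
    "ACE-X": "Developed as digital",
}

counts = Counter(DIGITAL_STATUS.values())
total = len(DIGITAL_STATUS)
no_digital = counts["No digital version available"]

print(f"{no_digital}/{total} instruments lack a digital version")  # 7/13 ...
for status, n in counts.most_common():
    print(f"{status}: {n}")
```

The tally shows that the instruments without digital versions are the naturalistic, task-based ones (calendar planning, cooking, ADL, and role-play tasks), while the digital entries are either computerized batteries or transfers of tabletop tests.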

3.2. Ecological Validity of Traditional Tools Versus Digital Platform Assessments

3.3. Scoring Metrics Across Cognitive Domains

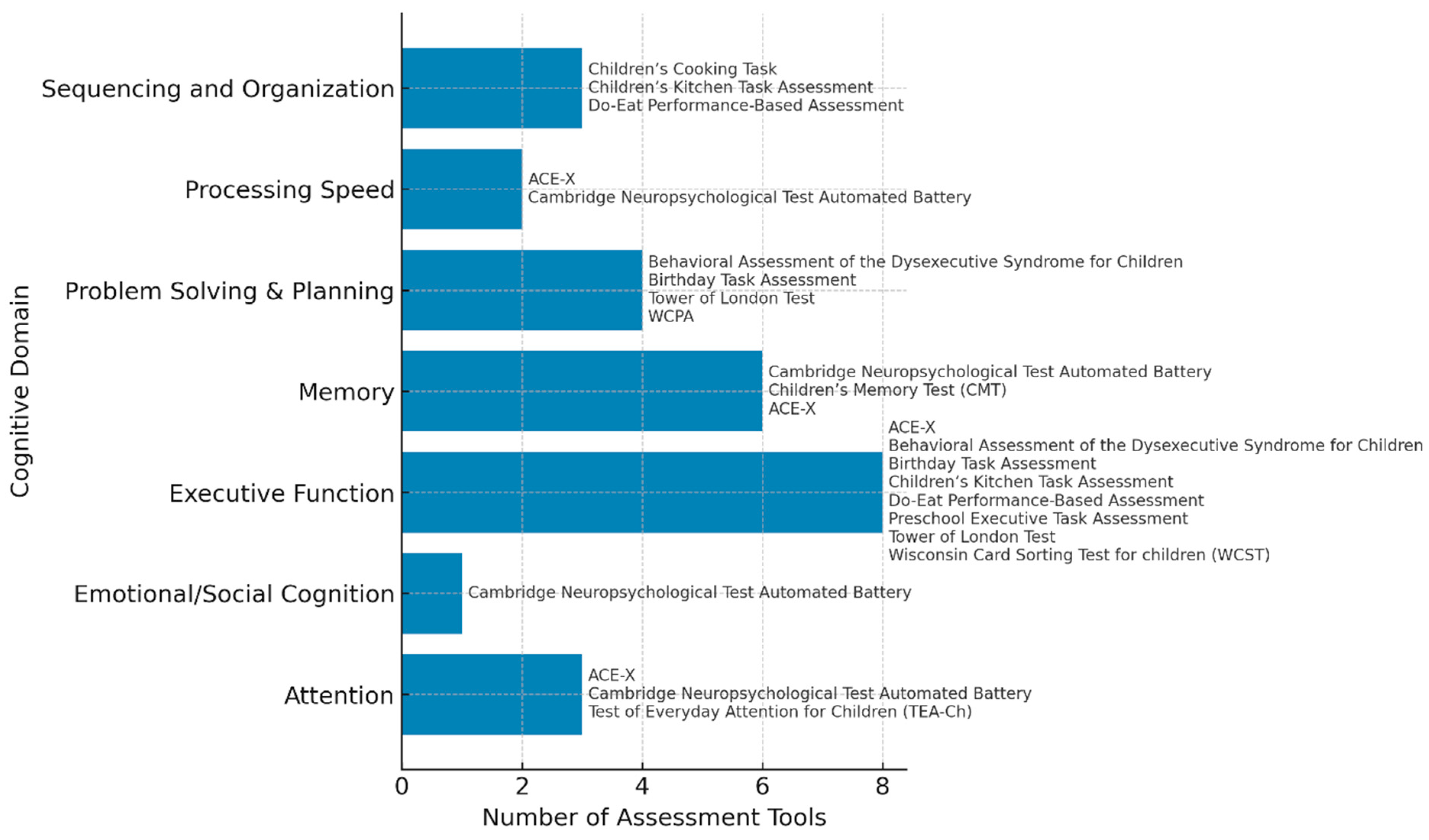

3.3.1. Rationale for the Seven-Domain Classification

3.3.2. The Seven Domains

- Executive functions: This broad category encompasses planning, inhibition, self-monitoring, cognitive flexibility, and strategy use. Most instruments reviewed assessed at least one executive component, justifying the domain’s centrality.

- Attention: Sustained, selective, and divided attention were clustered as a distinct domain, given that several assessments exclusively targeted attentional capacity independent of broader executive processes.

- Processing speed: Assessments measuring reaction time, information processing efficiency, and cognitive fluency were grouped under this domain.

- Problem-solving and planning: This domain included assessments that required multistep reasoning, hypothesis generation, and goal-directed behavior (e.g., Tower of London, WCPA).

- Sequencing and organization: Specific to everyday tasks requiring ordered steps and spatial-temporal organization, this domain emerged from assessments such as the Do-Eat and cooking tasks.

- Memory: This domain encompasses immediate recall and the ability to hold and manipulate information during task performance. It also includes the encoding, storage, and retrieval processes essential for everyday functioning. Assessment tools that capture this domain include the CMT, ACE-X, and selected CANTAB subtests.

- Emotional/social cognition: Although assessed less frequently than the other domains, we retained this domain to reflect assessments that include affect recognition and social reasoning, particularly in computerized batteries like the CANTAB.
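Because one instrument can contribute scores to several domains, the classification is essentially a many-to-many mapping that can be inverted into a domain index. The sketch below is a partial, illustrative mapping built only from instruments named in the domain descriptions and the scoring metrics of the Section 3.1 table. It is an assumption-laden reading, not the authors' full coding scheme, and `domain_index` is a hypothetical helper.

```python
from collections import defaultdict

# Partial, illustrative instrument-to-domain mapping; NOT the authors' coding.
INSTRUMENT_DOMAINS = {
    "TEA-Ch": {"Attention"},
    "WCPA": {"Executive functions", "Problem-solving and planning"},
    "Tower of London": {"Problem-solving and planning"},
    "Do-Eat": {"Executive functions", "Sequencing and organization"},
    "Children's Cooking Task": {"Executive functions", "Sequencing and organization"},
    "CMT": {"Memory"},
    "ACE-X": {"Processing speed", "Memory", "Executive functions"},
    "CANTAB": {"Attention", "Processing speed", "Executive functions",
               "Memory", "Emotional/social cognition"},
}


def domain_index(mapping: dict[str, set[str]]) -> dict[str, list[str]]:
    """Invert instrument -> domains into domain -> sorted list of instruments."""
    index: dict[str, set[str]] = defaultdict(set)
    for instrument, domains in mapping.items():
        for domain in domains:
            index[domain].add(instrument)
    return {domain: sorted(names) for domain, names in index.items()}


index = domain_index(INSTRUMENT_DOMAINS)
print(index["Memory"])  # ['ACE-X', 'CANTAB', 'CMT']
print(len(index))       # 7 domains covered by this partial mapping
```

Inverting the mapping makes uneven coverage easy to see: in this partial example, emotional/social cognition is reached only through CANTAB, consistent with the observation that this domain was assessed less frequently than the others.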

4. Discussion

4.1. Digital Transformation Trends

4.2. Ecological Validity and Digital Availability

4.3. Scoring Metrics Across Cognitive Domains

4.4. Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACE-X | Adaptive Cognitive Evaluation Explorer |
| ADL | Activity of daily living |
| BADS | Behavioral Assessment of the Dysexecutive Syndrome |
| CANTAB | Cambridge Neuropsychological Test Automated Battery |
| CCT | Children’s Cooking Task |
| CMT | Children’s Memory Test |
| EFs | Executive functions |
| TEA-Ch | Test of Everyday Attention for Children |
| VR | Virtual reality |
| WCPA | Weekly Calendar Planning Activity |
| WCST | Wisconsin Card Sorting Test |

References

- Wesson, J.; Clemson, L.; Brodaty, H.; Reppermund, S. Estimating functional cognition in older adults using observational assessments of task performance in complex everyday activities: A systematic review and evaluation of measurement properties. Neurosci. Biobehav. Rev. 2016, 68, 335–360.

- Edemekong, P.F.; Bomgaars, D.; Sukumaran, S.; Levy, S.B. Activities of Daily Living; StatPearls Publishing: Treasure Island, FL, USA, 2019.

- Lee, Y.; Randolph, S.B.; Kim, M.Y.; Foster, E.R.; Kersey, J.; Baum, C.; Connor, L.T. Performance-based assessments of functional cognition in adults, part 1—Assessment characteristics: A systematic review. Am. J. Occup. Ther. 2025, 79, 7904205130.

- Lee, Y.; Randolph, S.B.; Kim, M.Y.; Foster, E.R.; Kersey, J.; Baum, C.; Connor, L.T. Performance-based assessments of functional cognition in adults, part 2—Psychometric properties: A systematic review. Am. J. Occup. Ther. 2025, 79, 7904205140.

- Giles, G.M.; Edwards, D.F.; Morrison, M.T.; Baum, C.; Wolf, T.J. Screening for functional cognition in postacute care and the Improving Medicare Post-Acute Care Transformation (IMPACT) Act of 2014. Am. J. Occup. Ther. 2017, 71, 7105090010p1–7105090010p6.

- Wolf, T.J.; Edwards, D.F.; Giles, G.M. Functional Cognition and Occupational Therapy: A Practical Approach to Treating Individuals with Cognitive Loss; AOTA Press: Bethesda, MD, USA, 2019.

- Edwards, D.F.; Wolf, T.J.; Marks, T.; Alter, S.; Larkin, V.; Padesky, B.L.; Spiers, M.; Al-Heizan, M.O.; Giles, G.M. Functional cognition: Conceptual foundations for intervention in occupational therapy. Am. J. Occup. Ther. 2019, 73, 7303205010p1–7303205010p18.

- American Occupational Therapy Association. Occupational therapy practice framework: Domain and process, 3rd ed. Am. J. Occup. Ther. 2014, 68, S1–S48.

- American Occupational Therapy Association. Occupational therapy practice framework: Domain and process, 4th ed. Am. J. Occup. Ther. 2020, 74, 7412410010p1–7412410010p87.

- Toglia, J.; Foster, E. The Multicontext Approach: A Metacognitive Strategy-Based Intervention for Functional Cognition; MC CogRehab Resources: Hastings on Hudson, NY, USA, 2021.

- Cermak, S.A.; Toglia, J. Cognitive development across the life-span: Development of cognition and executive functioning in children and adolescents. In Cognition, Occupation, and Participation Across the Lifespan: Neuroscience, Neurorehabilitation, and Models of Intervention in Occupational Therapy, 4th ed.; Katz, N., Toglia, J., Eds.; AOTA Press: Bethesda, MD, USA, 2018; pp. 9–27.

- Wallisch, A.; Little, L.M.; Dean, E.; Dunn, W. Executive function measures for children: A scoping review of ecological validity. OTJR 2017, 38, 6–14.

- Gomez, I.N.B.; Palomo, S.A.M.; Vicuña, A.M.U.; Bustamante, J.A.D.; Eborde, J.M.E.; Regala, K.A.; Ruiz, G.M.M.; Sanchez, A.L.G. Performance-based executive function instruments used by occupational therapists for children: A systematic review of measurement properties. Occup. Ther. Int. 2021, 2021, 6008442.

- Giles, G.M.; Edwards, D.F.; Baum, C.; Furniss, J.; Skidmore, E.; Wolf, T.; Leland, N.E. Making functional cognition a professional priority. Am. J. Occup. Ther. 2020, 74, 7401090010p1–7401090010p6.

- Lalonde, G.; Henry, M.; Drouin-Germain, A.; Nolin, P.; Beauchamp, M.H. Assessment of executive function in adolescence: A comparison of traditional and virtual reality tools. J. Neurosci. Methods 2013, 219, 76–82.

- Gioia, G.A.; Isquith, P.K.; Guy, S.C.; Kenworthy, L. Behavior Rating Inventory of Executive Function; Psychological Assessment Resources: Lake Magdalene, FL, USA, 2000.

- Delis, D.C.; Kaplan, E.; Kramer, J.H. Delis–Kaplan Executive Function System Examiner’s Manual; Psychological: New York, NY, USA, 2001.

- Josman, N.; Rosenblum, S. A metacognitive model for children with atypical brain development. In Cognition, Occupation, and Participation Across the Life Span: Neuroscience, Neurorehabilitation and Models for Intervention in Occupational Therapy; Katz, N., Ed.; AOTA Press: Bethesda, MD, USA, 2018; pp. 223–248.

- Hartman-Maeir, A.; Katz, N.; Baum, C.M. Cognitive functional evaluation (CFE) process for individuals with suspected cognitive disabilities. Occup. Ther. Health Care 2009, 23, 1–23.

- Bar-Haim Erez, A.; Katz, N. Cognitive functional evaluation. In Cognition, Occupation and Participation Across the Lifespan: Neuroscience, Neurorehabilitation and Models of Intervention in Occupational Therapy, 4th ed.; Katz, N., Toglia, J., Eds.; AOTA Press: Bethesda, MD, USA, 2018; pp. 69–85.

- Paganin, G.; Simbula, S. New technologies in the workplace: Can personal and organizational variables affect the employees’ intention to use a work-stress management app? Int. J. Environ. Res. Public. Health 2021, 18, 9366.

- Asensio, D.; Duñabeitia, J.A. The necessary, albeit belated, transition to computerized cognitive assessment. Front. Psychol. 2023, 14, 1160554.

- Parsons, S. Learning to work together: Designing a multi-user virtual reality game for social collaboration and perspective-taking for children with autism. Int. J. Child. Comput. Interact. 2015, 6, 28–38.

- Cook, D.J.; Schmitter-Edgecombe, M.; Jönsson, L.; Morant, A.V. Technology-enabled assessment of functional health. IEEE Rev. Biomed. Eng. 2018, 12, 319–332.

- Ruffini, C.; Tarchi, C.; Morini, M.; Giuliano, G.; Pecini, C. Tele-assessment of cognitive functions in children: A systematic review. Child. Neuropsychol. 2022, 28, 709–745.

- Zeghari, R.; Guerchouche, R.; Tran Duc, M.; Bremond, F.; Lemoine, M.P.; Bultingaire, V.; Langel, K.; De Groote, Z.; Kuhn, F.; Martin, E.; et al. Pilot study to assess the feasibility of a mobile unit for remote cognitive screening of isolated elderly in rural areas. Int. J. Environ. Res. Public. Health 2021, 18, 6108.

- Dawson, D.R.; Marcotte, T.D. Special issue on ecological validity and cognitive assessment. Neuropsychol. Rehabil. 2017, 27, 599–602.

- Diamond, A.; Ling, D.S. Conclusions about interventions, programs, and approaches for improving executive functions that appear justified and those that, despite much hype, do not. Dev. Cogn. Neurosci. 2016, 18, 34–48.

- Guo, C.; Ashrafian, H.; Ghafur, S.; Fontana, G.; Gardner, C.; Prime, M. Challenges for the evaluation of digital health solutions: A call for innovative evidence generation approaches. NPJ Digit. Med. 2020, 3, 110.

- Jaakkola, E. Designing conceptual articles: Four approaches. AMS Rev. 2020, 10, 18–26.

- Toglia, J.; Berg, C. Performance-based measure of executive function: Comparison of community and at-risk youth. Am. J. Occup. Ther. 2013, 67, 515–523.

- Fogel, Y.; Rosenblum, S.; Hirsh, R.; Chevignard, M.; Josman, N. Daily performance of adolescents with executive function deficits: An empirical study using a complex-cooking task. Occup. Ther. Int. 2020, 2020, 3051809.

- Toglia, J. Weekly Calendar Planning Activity; AOTA Press: Bethesda, MD, USA, 2015.

- Weiner, N.W.; Toglia, J.; Berg, C. Weekly Calendar Planning Activity (WCPA): A performance-based assessment of executive function piloted with at-risk adolescents. Am. J. Occup. Ther. 2012, 66, 699–708.

- Lahav, O.; Ben-Simon, A.; Inbar-Weiss, N.; Katz, N. Weekly Calendar Planning Activity for university students: Comparison of individuals with and without ADHD by gender. J. Atten. Disord. 2018, 22, 368–378.

- Zlotnik, S.; Schiff, A.; Ravid, S.; Shahar, E.; Toglia, J. A new approach for assessing executive functions in everyday life, among adolescents with genetic generalised epilepsies. Neuropsychol. Rehabil. 2020, 30, 333–345.

- Zlotnik, S.; Toglia, J. Measuring adolescent self-awareness and accuracy using a performance-based assessment and parental report. Front. Public Health 2018, 6, 15.

- Manly, T.; Robertson, I.H.; Anderson, V.; Nimmo-Smith, I. The Test of Everyday Attention (TEA-CH); Thames Valley Test: Bury St. Edmunds, UK, 1999.

- Manly, T.; Anderson, V.; Crawford, J.; George, M.; Underbjerg, M.; Robertson, I.H. Test of Everyday Attention for Children–Second Edition [TEA-Ch2]; Pearson: London, UK, 2017.

- Manly, T.; Anderson, A.; Nimmo-Smith, I.; Turner, A.; Watson, P.; Robertson, I.H. The differential assessment of children’s attention: The Test of Everyday Attention for Children (TEA-Ch), normative sample, and ADHD performance. J. Child. Psychol. Psychiatr. 2001, 42, 1065–1081.

- Malegiannaki, A.-C.; Aretouli, E.; Metallidou, P.; Messinis, L.; Zafeiriou, D.; Kosmidis, M.H. Test of Everyday Attention for Children (TEA-Ch): Greek normative data and discriminative validity for children with combined type of attention-deficit/hyperactivity disorder. Dev. Neuropsychol. 2019, 44, 189–202.

- Fathi, N.; Mehraban, A.H.; Akbarfahimi, M.; Mirzaie, H. Validity and reliability of the test of everyday attention for children (TEACh) in Iranian 8-11 year old normal students. Iran. J. Psychiatry Behav. Sci. 2016, 11, e2854.

- Pardos, A.; Quintero, J.; Zuluaga, P.; Fernández, A. Descriptive analysis of the Test of Everyday Attention for children in a Spanish normative sample. Actas Esp. Psiquiatr. 2016, 44, 183–192. Available online: https://actaspsiquiatria.es/index.php/actas/article/view/1097 (accessed on 15 April 2025).

- Emslie, H.; Wilson, F.C.; Burden, V.; Nimmo-Smith, I.; Wilson, B.A. The Behavioural Assessment of the Dysexecutive Syndrome for Children (BADS-C); Thames Valley Test: Bury St. Edmunds, UK, 2003.

- Baron, I.S. Behavioural Assessment of the Dysexecutive Syndrome for Children (BADS-C) by Emslie, H., Wilson, FC, Burden, V., Nimmo-Smith, I., & Wilson, BA (2003). Child. Neuropsychol. 2007, 13, 539–542.

- Engel-Yeger, B.; Josman, N.; Rosenblum, S. Behavioural Assessment of the Dysexecutive Syndrome for Children (BADS-C): An examination of construct validity. Neuropsychol. Rehabil. 2009, 19, 662–676.

- Longaud-Valès, A.; Chevignard, M.; Dufour, C.; Grill, J.; Puget, S.; Sainte-Rose, C.; Valteau-Couanet, D.; Dellatolas, G. Assessment of executive functioning in children and young adults treated for frontal lobe tumours using ecologically valid tests. Neuropsychol. Rehabil. 2016, 26, 558–583.

- Chevignard, M.P.; Catroppa, C.; Galvin, J.; Anderson, V. Development and evaluation of an ecological task to assess executive functioning post childhood TBI: The Children’s Cooking Task. Brain Impair. 2010, 11, 125–143.

- Josman, N.; Goffer, A.; Rosenblum, S. Development and standardization of a Do–Eat activity of daily living performance test for children. Am. J. Occup. Ther. 2010, 64, 47–58.

- Rosenblum, S.; Frisch, C.; Deutsh-Castel, T.; Josman, N. Daily functioning profile of children with attention deficit hyperactive disorder: A pilot study using an ecological assessment. Neuropsychol. Rehabil. 2015, 25, 402–418.

- Rocke, K.; Hays, P.; Edwards, D.; Berg, C. Development of a performance assessment of executive function: The Children’s Kitchen Task Assessment. Am. J. Occup. Ther. 2008, 62, 528–537.

- Fogel, Y.; Cohen Elimelech, O.; Josman, N. Executive function in young children: Validation of the Preschool Executive Task Assessment. Children 2025, 12, 626.

- Downes, M.; Kirkham, F.J.; Berg, C.; Telfer, P.; de Haan, M. Executive performance on the preschool executive task assessment in children with sickle cell anemia and matched controls. Child. Neuropsychol. 2019, 25, 278–285.

- Downes, M.; Berg, C.; Kirkham, F.J.; Kischkel, L.; McMurray, I.; de Haan, M. Task utility and norms for the Preschool Executive Task Assessment (PETA). Child. Neuropsychol. 2018, 24, 784–798.

- Toglia, J.P. Contextual Memory Test. Multicontext. 2017. Available online: https://multicontext.net/contextual-memory-test (accessed on 10 April 2025).

- Engel-Yeger, B.; Durr, D.H.; Josman, N. Comparison of memory and meta-memory abilities of children with cochlear implant and normal hearing peers. Disabil. Rehabil. 2011, 33, 770–777.

- Sharma, A. Cambridge neuropsychological test automated battery. In Encyclopedia of Autism Spectrum Disorders; Springer: New York, NY, USA, 2013; pp. 498–515.

- Luciana, M.; Nelson, C.A. Assessment of neuropsychological function through use of the Cambridge Neuropsychological Testing Automated Battery: Performance in 4- to 12-year-old children. Dev. Neuropsychol. 2002, 22, 595–624.

- Luciana, M.; Lindeke, L.; Georgieff, M.; Mills, M.; Nelson, C.A. Neurobehavioral evidence for working-memory deficits in school-aged children with histories of prematurity. Dev. Med. Child. Neurol. 1999, 41, 521–533.

- Shallice, T. Specific impairments of planning. Philos. Trans. R. Soc. Lond. B 1982, 298, 199–209.

- Injoque-Ricle, I.; Burin, D.I. Validez y fiabilidad de la prueba de Torre de Londres para niños: Un estudio preliminar [Validity and reliability of the Tower of London test for children: A preliminary study]. Rev. Argent. Neuropsicol. 2008, 11, 21–31.

- Heaton, R.K.; Staff, P.A.R. Wisconsin Card Sorting Test: Computer Version 2; Psychological Assessment Resources: Odessa, FL, USA, 1993.

- Cook, L.G.; Chapman, S.B.; Levin, H.S. Self-regulation abilities in children with severe traumatic brain injury: A preliminary investigation of naturalistic action. NeuroRehabilitation 2008, 23, 467–475.

- Hsu, W.Y.; Rowles, W.; Anguera, J.A.; Anderson, A.; Younger, J.W.; Friedman, S.; Gazzaley, A.; Bove, R. Assessing cognitive function in multiple sclerosis with digital tools: Observational study. J. Med. Internet Res. 2021, 23, e25748.

- Toglia, J.P.; Rodger, S.A.; Polatajko, H.J. Anatomy of cognitive strategies: A therapist’s primer for enabling occupational performance. Can. J. Occup. Ther. 2012, 79, 225–236.

- Toglia, J.P. The Multicontext Approach to Cognitive Rehabilitation: A Metacognitive Strategy Intervention to Optimize Functional Cognition; Gatekeeper Press: Citrus Park, FL, USA, 2021.

- Germine, L.; Reinecke, K.; Chaytor, N.S. Digital neuropsychology: Challenges and opportunities at the intersection of science and software. Clin. Neuropsychol. 2019, 33, 271–286.

- Condy, E.; Kaat, A.J.; Becker, L.; Sullivan, N.; Soorya, L.; Berger, N.; Berry-Kravis, E.; Michalak, C.; Thurm, A. A novel measure of matching categories for early development: Item creation and pilot feasibility study. Res. Dev. Disabil. 2021, 115, 103993.

- Van Patten, R. Introduction to the Special Issue—Neuropsychology from a distance: Psychometric properties and clinical utility of remote neurocognitive tests. J. Clin. Exp. Neuropsychol. 2021, 43, 767–773.

- Lavigne, K.M.; Sauvé, G.; Raucher-Chéné, D.; Guimond, S.; Lecomte, T.; Bowie, C.R.; Menon, M.; Lal, S.; Woodward, T.S.; Bodnar, M.D.; et al. Remote cognitive assessment in severe mental illness: A scoping review. Schizophrenia 2022, 8, 14.

- Parsons, T.D. Virtual reality for enhanced ecological validity and experimental control in the clinical, affective and social neurosciences. Front. Hum. Neurosci. 2015, 9, 660.

- Dumas, C.M.; Grajo, L. Functional cognition in critically ill children: Asserting the role of occupational therapy. Open J. Occup. Ther. 2021, 9, 1–9.

- Edwards, D.; Giles, G. Special issue on functional cognition. OTJR 2022, 42, 251–252.

- Harris, C.; Tang, Y.; Birnbaum, E.; Cherian, C.; Mendhe, D.; Chen, M.H. Digital neuropsychology beyond computerized cognitive assessment: Applications of novel digital technologies. Arch. Clin. Neuropsychol. 2024, 39, 290–304.

- Walker, E.J.; Kirkham, F.J.; Stotesbury, H.; Dimitriou, D.; Hood, A.M. Tele-neuropsychological assessment of children and young people: A systematic review. J. Pediatr. Neuropsychol. 2023, 9, 113–126.

- Agha, R.A.; Mathew, G.; Rashid, R.; Kerwan, A.; Al-Jabir, A.; Sohrabi, C.; Franchi, T.; Nicola, M.; Agha, M.; The TITAN Group. Transparency in the Reporting of Artificial Intelligence—The TITAN Guideline. Prem. J. Sci. 2025, 10, 100082.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fogel, Y.; Josman, N.; Elimelech, O.C.; Zlotnik, S. Can Functional Cognitive Assessments for Children/Adolescents Be Transformed into Digital Platforms? A Conceptual Review. Children 2025, 12, 1384. https://doi.org/10.3390/children12101384
