Diagnostic Performance of Artificial Intelligence-Based Computer-Aided Detection and Diagnosis in Pediatric Radiology: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Literature Search

2.2. Article Selection

2.3. Data Extraction and Synthesis

3. Results

| Author, Year and Country | Modality | Diagnosis | AUC | Sensitivity | Specificity | PPV | NPV | Accuracy | F1 Score |
|---|---|---|---|---|---|---|---|---|---|
| Brain Imaging | | | | | | | | | |
| Dou et al. (2022)—China [30] | MRI | Bipolar disorder | 0.830 | 0.909 | 0.769 | NR | NR | 0.854 | NR |
| Kuttala et al. (2022)—Australia, India and United Arab Emirates [31] | MRI | ADHD and ASD | 0.850 (ADHD); 0.910 (ASD) | NR | NR | NR | NR | 0.854 (ADHD); 0.978 (ASD) | NR |
| Li et al. (2020)—China [32] | MRI | Posterior fossa tumors | 0.865 | 0.929 | 0.800 | NR | NR | 0.878 | NR |
| Peruzzo et al. (2016)—Italy [33] | MRI | Malformations of corpus callosum | 0.953 | 0.923 | 0.904 | 0.906 | NR | 0.914 | NR |
| Prince et al. (2020)—USA [34] | CT and MRI | ACP | 0.978 | NR | NR | NR | NR | 0.979 | NR |
| Tan et al. (2013)—USA [35] | MRI | Congenital sensori-neural hearing loss | 0.900 | 0.890 | 0.860 | NR | NR | 0.870 | NR |
| Xiao et al. (2019)—China [36] | MRI | ASD | NR | 0.980 | 0.936 | 0.959 | 0.971 | 0.963 | NR |
| Zahia et al. (2020)—Spain [37] | MRI | Dyslexia | NR | 0.750 | 0.714 | 0.600 | NR | 0.727 | 0.670 |
| Zhou et al. (2021)—China [38] | MRI | ADHD | 0.698 | 0.609 | 0.676 | NR | NR | 0.643 | 0.626 |
| Cardiac Imaging | | | | | | | | | |
| Lee et al. (2022)—South Korea [39] | US | Kawasaki disease | NR | 0.841 | 0.585 | 0.811 | 0.633 | 0.759 | 0.826 |
| Musculoskeletal Imaging | | | | | | | | | |
| Petibon et al. (2021)—Canada, Israel and USA [40] | SPECT | Low back pain | 0.830 | NR | NR | NR | NR | NR | NR |
| Sezer and Sezer (2020)—France and Turkey [41] | US | DDH | NR | 0.962 | 0.980 | NR | NR | 0.977 | NR |
| Respiratory Imaging | | | | | | | | | |
| Behzadi-Khormouji et al. (2020)—Iran and USA [42] | X-ray | Pulmonary consolidation | 0.995 | 0.987 | 0.864 | NR | NR | 0.945 | NR |
| Bodapati and Rohith (2022)—India [43] | X-ray | Pneumonia | 0.939 | NR | NR | NR | NR | 0.948 | 0.959 |
| Helm et al. (2009)—Canada, UK and USA [44] | CT | Pulmonary nodules | NR | 0.420 | 1.000 | 1.000 | 0.260 | NR | NR |
| Jiang and Chen (2022)—China [45] | X-ray | Pneumonia | NR | 0.894 | NR | 0.918 | NR | 0.912 | 0.903 |
| Liang and Zheng (2020)—China [46] | X-ray | Pneumonia | 0.953 | 0.967 | NR | 0.891 | NR | 0.905 | 0.927 |
| Mahomed et al. (2020)—Netherlands and South Africa [47] | X-ray | Primary-endpoint pneumonia | 0.850 | 0.760 | 0.800 | NR | NR | NR | NR |
| Shouman et al. (2022)—Egypt and Saudi Arabia [48] | X-ray | Bacterial and viral pneumonia | 0.999 | 0.987 | 0.987 | 0.979 | NR | 0.986 | 0.983 |
| Silva et al. (2022)—Brazil [49] | X-ray | Pneumonia | NR | 0.945 | NR | 0.957 | NR | NR | 0.951 |
| Vrbančič and Podgorelec (2022)—Slovenia [50] | X-ray | Pneumonia | 0.952 | 0.976 | 0.927 | 0.973 | NR | 0.963 | 0.974 |
| Urologic Imaging | | | | | | | | | |
| Guan et al. (2022)—China [51] | US | Hydronephrosis | NR | NR | NR | NR | NR | 0.891 | 0.895 |
| Zheng et al. (2019)—China and USA [52] | US | CAKUT | 0.920 | 0.86 | 0.880 | NR | NR | 0.870 | NR |
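
The threshold-based metrics tabulated above (sensitivity, specificity, PPV, NPV, accuracy and F1 score) can all be derived from the four cells of a confusion matrix. The following is a minimal sketch using the standard textbook definitions; the `ConfusionCounts` class, the `diagnostic_metrics` function and the example counts are hypothetical illustrations and are not taken from any of the reviewed studies.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing a classifier's output with the reference standard."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def _ratio(numerator: int, denominator: int) -> Optional[float]:
    # A metric is undefined when its denominator is zero; return None then.
    return numerator / denominator if denominator else None


def diagnostic_metrics(c: ConfusionCounts) -> Dict[str, Optional[float]]:
    """Standard definitions of the threshold-based metrics."""
    return {
        "sensitivity": _ratio(c.tp, c.tp + c.fn),
        "specificity": _ratio(c.tn, c.tn + c.fp),
        "ppv": _ratio(c.tp, c.tp + c.fp),
        "npv": _ratio(c.tn, c.tn + c.fn),
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        # F1 is the harmonic mean of PPV (precision) and sensitivity (recall).
        "f1": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


# Hypothetical counts chosen only for illustration.
example = ConfusionCounts(tp=40, fp=10, tn=45, fn=5)
for name, value in diagnostic_metrics(example).items():
    print(f"{name}: {value:.3f}")
```

Under these definitions, a specificity of 1.000 together with a PPV of 1.000 corresponds to zero false positives, and a low NPV alongside them indicates many false negatives relative to true negatives. AUC is not covered by this sketch because it requires continuous classifier scores rather than counts at a single threshold.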

| Author, Year and Country | Modality | Diagnosis | AI Type and Model | Study Design | Dataset Source | Test Set Size | Patient/Population | Sample Size Calculation | Internal Validation Type | External Validation | Reference Standard | AI vs. Clinician | Commercial Availability |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Brain Imaging | | | | | | | | | | | | | |
| Dou et al. (2022)—China [30] | MRI | Bipolar disorder | ML-LR | Prospective | Private dataset by Second Xiangya Hospital, China | 52 scans | 12–18-year-old children | No | 2-fold cross-validation | No | Clinical diagnosis | No | No |
| Kuttala et al. (2022)—Australia, India and United Arab Emirates [31] | MRI | ADHD and ASD | DL-GAN and softmax | Retrospective | Public datasets (ADHD-200 and Autism Brain Imaging Data Exchange II) | 217 scans | Children (median ages for baseline and follow-up scans: 12 and 15 years, respectively) | No | NR | No | NR | No | No |
| Li et al. (2020)—China [32] | MRI | Posterior fossa tumors | ML-SVM | Prospective | Private dataset by Affiliated Hospital of Zhengzhou University, China | 45 scans | 0–14-year-old children | No | Repeated hold-out with 70:30 random split | No | Histology | No | No |
| Peruzzo et al. (2016)—Italy [33] | MRI | Malformations of corpus callosum | ML-SVM | Retrospective | Private dataset by Scientific Institute “Eugenio Medea”, Italy | 104 scans | 2–12-year-old children | No | Leave-one-out cross-validation | No | Expert consensus | Yes | No |
| Prince et al. (2020)—USA [34] | CT and MRI | ACP | DL-CNN | Retrospective | Public dataset (ATPC Consortium) and private datasets by Children’s Hospital Colorado and St. Jude Children’s Research Hospital, USA | 86 CT-MRI scans | Children | No | 60:40 random split and 5-fold cross-validation | No | Histology | Yes | No |
| Tan et al. (2013)—USA [35] | MRI | Congenital sensori-neural hearing loss | ML-SVM | Prospective | Private dataset by Cincinnati Children’s Hospital Medical Center, USA | 39 scans | 8–24-month-old children | No | Leave-one-out cross-validation | No | Follow-up | No | No |
| Xiao et al. (2019)—China [36] | MRI | ASD | DL-SAE and softmax | Retrospective | Public dataset (Autism Brain Imaging Data Exchange II) | 198 scans | 5–12-year-old children | No | 11-, 33-, 66-, 99- and 198-fold cross-validation | No | Clinical diagnosis | No | No |
| Zahia et al. (2020)—Spain [37] | MRI | Dyslexia | DL-CNN | Prospective | Private dataset by University Hospital of Cruces, Spain | 55 scans | 9–12-year-old children | No | 4-fold cross-validation | No | Clinical diagnosis | No | No |
| Zhou et al. (2021)—China [38] | MRI | ADHD | ML-SVM | Retrospective | Public dataset (Adolescent Brain Cognitive Development Data Repository) | 232 scans | 9–10-year-old children | No | 10-fold cross-validation | No | Clinical diagnosis | No | No |
| Cardiac Imaging | | | | | | | | | | | | | |
| Lee et al. (2022)—South Korea [39] | US | Kawasaki disease | DL-CNN | Retrospective | Private dataset by Yonsei University Gangnam Severance Hospital, South Korea | 203 scans | Children | No | 10-fold cross-validation | No | Single expert reader | No | No |
| Musculoskeletal Imaging | | | | | | | | | | | | | |
| Petibon et al. (2021)—Canada, Israel and USA [40] | SPECT | Low back pain | DL-CNN | Retrospective | Private dataset by Boston Children’s Hospital, USA | 65 scans | 10–17-year-old children | No | 3-fold cross-validation | No | Other: ground truth established by artificial lesion insertion | Yes | No |
| Sezer and Sezer (2020)—France and Turkey [41] | US | DDH | DL-CNN | Prospective | Private dataset | 203 scans | 0–6-month-old children | No | 70:30 random split | No | Single expert reader | No | No |
| Respiratory Imaging | | | | | | | | | | | | | |
| Behzadi-Khormouji et al. (2020)—Iran and USA [42] | X-ray | Pulmonary consolidation | DL-CNN | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 582 images | 1–5-year-old children | No | 90:10 random split | No | Expert consensus | No | No |
| Bodapati and Rohith (2022)—India [43] | X-ray | Pneumonia | DL-CNN and CapsNet | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 640 images | 1–5-year-old children | No | NR | No | NR | No | No |
| Helm et al. (2009)—Canada, UK and USA [44] | CT | Pulmonary nodules | NR | Retrospective | Private dataset by a tertiary pediatric hospital | 29 scans | Children aged 3 years 11 months to 18 years | No | NR | Yes | Expert and reader consensus | Yes | Yes |
| Jiang and Chen (2022)—China [45] | X-ray | Pneumonia | DL-ViT | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 624 images | 1–5-year-old children | No | NR | No | NR | No | No |
| Liang and Zheng (2020)—China [46] | X-ray | Pneumonia | DL-CNN | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 624 images | 1–5-year-old children | No | 90:10 random split | No | NR | No | No |
| Mahomed et al. (2020)—Netherlands and South Africa [47] | X-ray | Primary-endpoint pneumonia | ML-SVM | Prospective | Private dataset by Chris Hani Baragwanath Academic Hospital, South Africa | 858 digitized images | 1–59-month-old children | No | 10-fold cross-validation | No | Reader consensus | Yes | No |
| Shouman et al. (2022)—Egypt and Saudi Arabia [48] | X-ray | Bacterial and viral pneumonia | DL-CNN and LSTM | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 586 images | 1–5-year-old children | No | 90:10 random split | No | NR | No | No |
| Silva et al. (2022)—Brazil [49] | X-ray | Pneumonia | DL-CNN | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 1172 images | 1–5-year-old children | No | NR | No | NR | No | No |
| Vrbančič and Podgorelec (2022)—Slovenia [50] | X-ray | Pneumonia | DL-CNN and SGD | Retrospective | Public dataset by Guangzhou Women and Children’s Medical Center, China | 5858 images | 1–5-year-old children | No | 10-fold cross-validation | No | Expert readers | No | No |
| Urologic Imaging | | | | | | | | | | | | | |
| Guan et al. (2022)—China [51] | US | Hydronephrosis | DL-CNN | Prospective | Private dataset by Beijing Children’s Hospital, China | 3257 images | Children | No | 10-fold cross-validation | No | Readers and experts without consensus | No | No |
| Zheng et al. (2019)—China and USA [52] | US | CAKUT | DL-SVM | Retrospective | Private dataset by Children’s Hospital of Philadelphia, USA | 100 scans | Children with mean age of 111 days (SD: 262) | No | 10-fold cross-validation | No | Clinical diagnosis | No | No |
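
The internal validation types listed above (k-fold cross-validation, leave-one-out cross-validation and single random splits such as 70:30 or 90:10) differ only in how sample indices are partitioned into training and test sets. The sketch below illustrates that partitioning in plain Python. The function names `k_fold_indices` and `random_split` are hypothetical, the sketch assumes a simple shuffled, unstratified split, and it does not reproduce the procedure of any particular reviewed study.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(
    n_samples: int, k: int, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists for k-fold cross-validation.

    Each sample is used for testing exactly once. Setting k equal to
    n_samples gives leave-one-out cross-validation.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


def random_split(
    n_samples: int, train_fraction: float, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Single hold-out split, e.g. train_fraction=0.7 for a 70:30 split."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    cut = round(n_samples * train_fraction)
    return indices[:cut], indices[cut:]


# Illustrative sizes only.
for train, test in k_fold_indices(n_samples=10, k=5):
    print(len(train), "train /", len(test), "test")
train, test = random_split(n_samples=10, train_fraction=0.7)
print(len(train), "train /", len(test), "test")
```

A single random split tests each model on only one held-out subset, whereas k-fold cross-validation rotates the held-out subset so that every sample is tested once. Neither replaces external validation on data from a different source.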

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lu, Y.; Zheng, N.; Ye, M.; Zhu, Y.; Zhang, G.; Nazemi, E.; He, J. Proposing intelligent approach to predicting air kerma within radiation beams of medical x-ray imaging systems. Diagnostics 2023, 13, 190.

- Sun, Z.; Ng, C.K.C. Artificial intelligence (enhanced super-resolution generative adversarial network) for calcium deblooming in coronary computed tomography angiography: A feasibility study. Diagnostics 2022, 12, 991.

- Sun, Z.; Ng, C.K.C. Finetuned super-resolution generative adversarial network (artificial intelligence) model for calcium deblooming in coronary computed tomography angiography. J. Pers. Med. 2022, 12, 1354.

- Ng, C.K.C.; Leung, V.W.S.; Hung, R.H.M. Clinical evaluation of deep learning and atlas-based auto-contouring for head and neck radiation therapy. Appl. Sci. 2022, 12, 11681.

- Choy, G.; Khalilzadeh, O.; Michalski, M.; Do, S.; Samir, A.E.; Pianykh, O.S.; Geis, J.R.; Pandharipande, P.V.; Brink, J.A.; Dreyer, K.J. Current applications and future impact of machine learning in radiology. Radiology 2018, 288, 318–328.

- Chan, H.P.; Hadjiiski, L.M.; Samala, R.K. Computer-aided diagnosis in the era of deep learning. Med. Phys. 2020, 47, e218–e227.

- Suzuki, K. A review of computer-aided diagnosis in thoracic and colonic imaging. Quant. Imaging Med. Surg. 2012, 2, 163–176.

- Wardlaw, J.M.; Mair, G.; von Kummer, R.; Williams, M.C.; Li, W.; Storkey, A.J.; Trucco, E.; Liebeskind, D.S.; Farrall, A.; Bath, P.M.; et al. Accuracy of automated computer-aided diagnosis for stroke imaging: A critical evaluation of current evidence. Stroke 2022, 53, 2393–2403.

- Harris, M.; Qi, A.; Jeagal, L.; Torabi, N.; Menzies, D.; Korobitsyn, A.; Pai, M.; Nathavitharana, R.R.; Ahmad Khan, F. A systematic review of the diagnostic accuracy of artificial intelligence-based computer programs to analyze chest x-rays for pulmonary tuberculosis. PLoS ONE 2019, 14, e0221339.

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297.

- Masud, R.; Al-Rei, M.; Lokker, C. Computer-aided detection for breast cancer screening in clinical settings: Scoping review. JMIR Med. Inform. 2019, 7, e12660.

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic accuracy of deep learning in medical imaging: A systematic review and meta-analysis. NPJ Digit. Med. 2021, 4, 65.

- Vasey, B.; Ursprung, S.; Beddoe, B.; Taylor, E.H.; Marlow, N.; Bilbro, N.; Watkinson, P.; McCulloch, P. Association of clinician diagnostic performance with machine learning-based decision support systems: A systematic review. JAMA Netw. Open 2021, 4, e211276.

- Ng, C.K.C. Artificial intelligence for radiation dose optimization in pediatric radiology: A systematic review. Children 2022, 9, 1044.

- Al Mahrooqi, K.M.S.; Ng, C.K.C.; Sun, Z. Pediatric computed tomography dose optimization strategies: A literature review. J. Med. Imaging Radiat. Sci. 2015, 46, 241–249.

- Schalekamp, S.; Klein, W.M.; van Leeuwen, K.G. Current and emerging artificial intelligence applications in chest imaging: A pediatric perspective. Pediatr. Radiol. 2022, 52, 2120–2130.

- Davendralingam, N.; Sebire, N.J.; Arthurs, O.J.; Shelmerdine, S.C. Artificial intelligence in paediatric radiology: Future opportunities. Br. J. Radiol. 2021, 94, 20200975.

- Kim, K.W.; Lee, J.; Choi, S.H.; Huh, J.; Park, S.H. Systematic review and meta-analysis of studies evaluating diagnostic test accuracy: A practical review for clinical researchers-Part I. general guidance and tips. Korean J. Radiol. 2015, 16, 1175–1187.

- Zhao, W.J.; Fu, L.R.; Huang, Z.M.; Zhu, J.Q.; Ma, B.Y. Effectiveness evaluation of computer-aided diagnosis system for the diagnosis of thyroid nodules on ultrasound: A systematic review and meta-analysis. Medicine 2019, 98, e16379.

- Devnath, L.; Summons, P.; Luo, S.; Wang, D.; Shaukat, K.; Hameed, I.A.; Aljuaid, H. Computer-aided diagnosis of coal workers’ pneumoconiosis in chest x-ray radiographs using machine learning: A systematic literature review. Int. J. Environ. Res. Public Health 2022, 19, 6439.

- Groen, A.M.; Kraan, R.; Amirkhan, S.F.; Daams, J.G.; Maas, M. A systematic review on the use of explainability in deep learning systems for computer aided diagnosis in radiology: Limited use of explainable AI? Eur. J. Radiol. 2022, 157, 110592.

- Henriksen, E.L.; Carlsen, J.F.; Vejborg, I.M.; Nielsen, M.B.; Lauridsen, C.A. The efficacy of using computer-aided detection (CAD) for detection of breast cancer in mammography screening: A systematic review. Acta Radiol. 2019, 60, 13–18.

- Gundry, M.; Knapp, K.; Meertens, R.; Meakin, J.R. Computer-aided detection in musculoskeletal projection radiography: A systematic review. Radiography 2018, 24, 165–174.

- Waffenschmidt, S.; Knelangen, M.; Sieben, W.; Bühn, S.; Pieper, D. Single screening versus conventional double screening for study selection in systematic reviews: A methodological systematic review. BMC Med. Res. Methodol. 2019, 19, 132.

- Ng, C.K.C. A review of the impact of the COVID-19 pandemic on pre-registration medical radiation science education. Radiography 2022, 28, 222–231.

- PRISMA: Transparent Reporting of Systematic Reviews and Meta-Analyses. Available online: https://www.prisma-statement.org (accessed on 25 January 2023).

- Xu, L.; Gao, J.; Wang, Q.; Yin, J.; Yu, P.; Bai, B.; Pei, R.; Chen, D.; Yang, G.; Wang, S.; et al. Computer-aided diagnosis systems in diagnosing malignant thyroid nodules on ultrasonography: A systematic review and meta-analysis. Eur. Thyroid J. 2020, 9, 186–193.

- Imrey, P.B. Limitations of meta-analyses of studies with high heterogeneity. JAMA Netw. Open 2020, 3, e1919325.

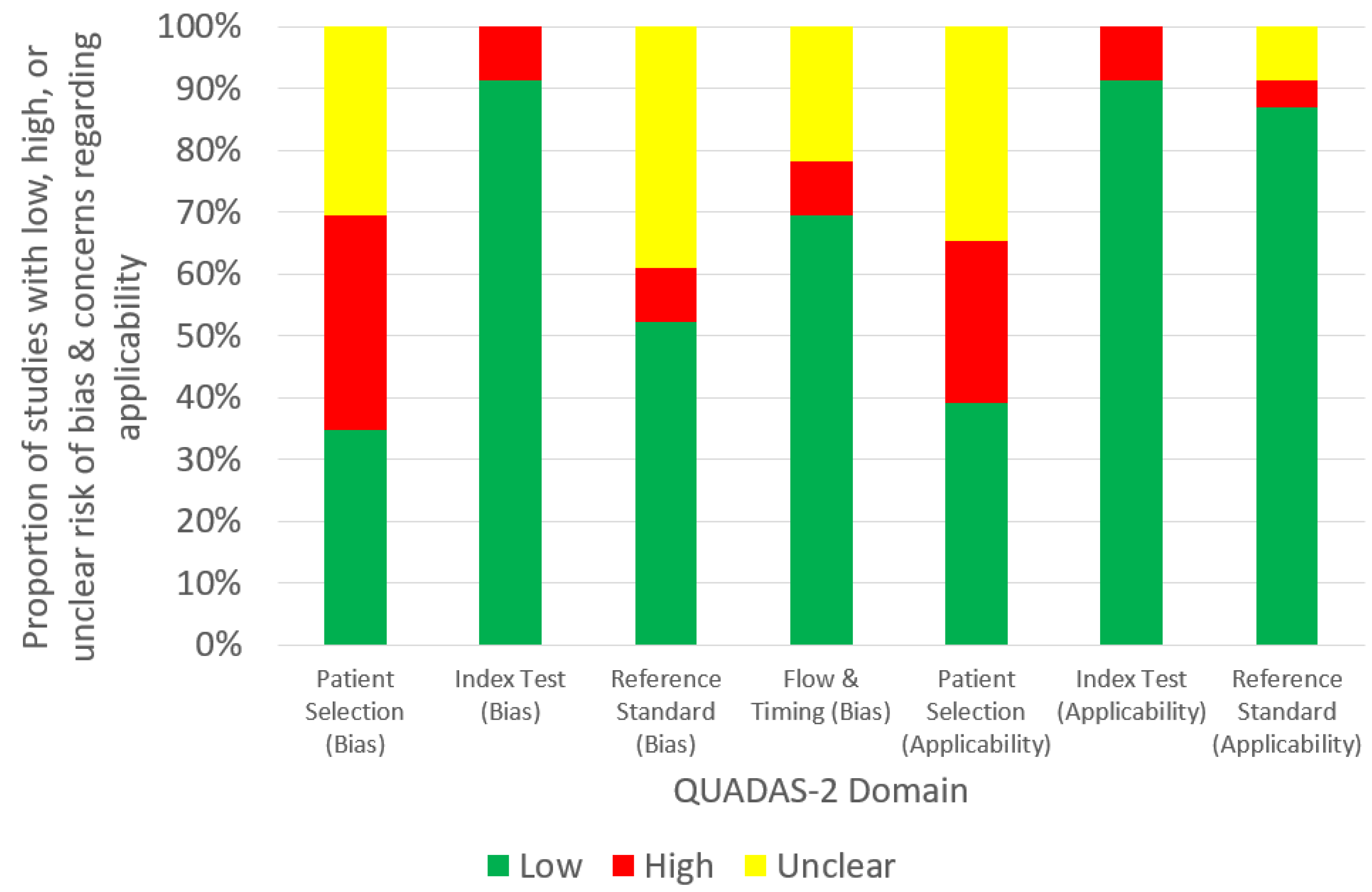

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

- Dou, R.; Gao, W.; Meng, Q.; Zhang, X.; Cao, W.; Kuang, L.; Niu, J.; Guo, Y.; Cui, D.; Jiao, Q.; et al. Machine learning algorithm performance evaluation in structural magnetic resonance imaging-based classification of pediatric bipolar disorders type I patients. Front. Comput. Neurosci. 2022, 16, 915477.

- Kuttala, D.; Mahapatra, D.; Subramanian, R.; Oruganti, V.R.M. Dense attentive GAN-based one-class model for detection of autism and ADHD. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 10444–10458.

- Li, M.; Wang, H.; Shang, Z.; Yang, Z.; Zhang, Y.; Wan, H. Ependymoma and pilocytic astrocytoma: Differentiation using radiomics approach based on machine learning. J. Clin. Neurosci. 2020, 78, 175–180.

- Peruzzo, D.; Arrigoni, F.; Triulzi, F.; Righini, A.; Parazzini, C.; Castellani, U. A framework for the automatic detection and characterization of brain malformations: Validation on the corpus callosum. Med. Image Anal. 2016, 32, 233–242.

- Prince, E.W.; Whelan, R.; Mirsky, D.M.; Stence, N.; Staulcup, S.; Klimo, P.; Anderson, R.C.E.; Niazi, T.N.; Grant, G.; Souweidane, M.; et al. Robust deep learning classification of adamantinomatous craniopharyngioma from limited preoperative radiographic images. Sci. Rep. 2020, 10, 16885.

- Tan, L.; Chen, Y.; Maloney, T.C.; Caré, M.M.; Holland, S.K.; Lu, L.J. Combined analysis of sMRI and fMRI imaging data provides accurate disease markers for hearing impairment. Neuroimage Clin. 2013, 3, 416–428.

- Xiao, Z.; Wu, J.; Wang, C.; Jia, N.; Yang, X. Computer-aided diagnosis of school-aged children with ASD using full frequency bands and enhanced SAE: A multi-institution study. Exp. Ther. Med. 2019, 17, 4055–4063.

- Zahia, S.; Garcia-Zapirain, B.; Saralegui, I.; Fernandez-Ruanova, B. Dyslexia detection using 3D convolutional neural networks and functional magnetic resonance imaging. Comput. Methods Programs Biomed. 2020, 197, 105726.

- Zhou, X.; Lin, Q.; Gui, Y.; Wang, Z.; Liu, M.; Lu, H. Multimodal MR images-based diagnosis of early adolescent attention-deficit/hyperactivity disorder using multiple kernel learning. Front. Neurosci. 2021, 15, 710133.

- Lee, H.; Eun, Y.; Hwang, J.Y.; Eun, L.Y. Explainable deep learning algorithm for distinguishing incomplete Kawasaki disease by coronary artery lesions on echocardiographic imaging. Comput. Methods Programs Biomed. 2022, 223, 106970.

- Petibon, Y.; Fahey, F.; Cao, X.; Levin, Z.; Sexton-Stallone, B.; Falone, A.; Zukotynski, K.; Kwatra, N.; Lim, R.; Bar-Sever, Z.; et al. Detecting lumbar lesions in 99mTc-MDP SPECT by deep learning: Comparison with physicians. Med. Phys. 2021, 48, 4249–4261.

- Sezer, A.; Sezer, H.B. Deep convolutional neural network-based automatic classification of neonatal hip ultrasound images: A novel data augmentation approach with speckle noise reduction. Ultrasound Med. Biol. 2020, 46, 735–749.

- Behzadi-Khormouji, H.; Rostami, H.; Salehi, S.; Derakhshande-Rishehri, T.; Masoumi, M.; Salemi, S.; Keshavarz, A.; Gholamrezanezhad, A.; Assadi, M.; Batouli, A. Deep learning, reusable and problem-based architectures for detection of consolidation on chest x-ray images. Comput. Methods Programs Biomed. 2020, 185, 105162.

- Bodapati, J.D.; Rohith, V.N. ChxCapsNet: Deep capsule network with transfer learning for evaluating pneumonia in paediatric chest radiographs. Measurement 2022, 188, 110491.

- Helm, E.J.; Silva, C.T.; Roberts, H.C.; Manson, D.; Seed, M.T.; Amaral, J.G.; Babyn, P.S. Computer-aided detection for the identification of pulmonary nodules in pediatric oncology patients: Initial experience. Pediatr. Radiol. 2009, 39, 685–693.

- Jiang, Z.; Chen, L. Multisemantic level patch merger vision transformer for diagnosis of pneumonia. Comput. Math. Methods Med. 2022, 2022, 7852958.

- Liang, G.; Zheng, L. A transfer learning method with deep residual network for pediatric pneumonia diagnosis. Comput. Methods Programs Biomed. 2020, 187, 104964.

- Mahomed, N.; van Ginneken, B.; Philipsen, R.H.H.M.; Melendez, J.; Moore, D.P.; Moodley, H.; Sewchuran, T.; Mathew, D.; Madhi, S.A. Computer-aided diagnosis for World Health Organization-defined chest radiograph primary-endpoint pneumonia in children. Pediatr. Radiol. 2020, 50, 482–491.

- Shouman, M.A.; El-Fiky, A.; Hamada, S.; El-Sayed, A.; Karar, M.E. Computer-assisted lung diseases detection from pediatric chest radiography using long short-term memory networks. Comput. Electr. Eng. 2022, 103, 108402.

- Silva, L.; Araújo, L.; Ferreira, V.; Neto, R.; Santos, A. Convolutional neural networks applied in the detection of pneumonia by x-ray images. Int. J. Innov. Comput. Appl. 2022, 13, 187–197.

- Vrbančič, G.; Podgorelec, V. Efficient ensemble for image-based identification of pneumonia utilizing deep CNN and SGD with warm restarts. Expert Syst. Appl. 2022, 187, 115834.

- Guan, Y.; Peng, H.; Li, J.; Wang, Q. A mutual promotion encoder-decoder method for ultrasonic hydronephrosis diagnosis. Methods 2022, 203, 78–89.

- Zheng, Q.; Furth, S.L.; Tasian, G.E.; Fan, Y. Computer-aided diagnosis of congenital abnormalities of the kidney and urinary tract in children based on ultrasound imaging data by integrating texture image features and deep transfer learning image features. J. Pediatr. Urol. 2019, 15, 75.e1–75.e7.

- Kleinfelder, T.R.; Ng, C.K.C. Effects of image postprocessing in digital radiography to detect wooden, soft tissue foreign bodies. Radiol. Technol. 2022, 93, 544–554. Available online: https://pubmed.ncbi.nlm.nih.gov/35790309/ (accessed on 30 January 2023).

- Sun, Z.; Ng, C.K.C.; Wong, Y.H.; Yeong, C.H. 3D-printed coronary plaques to simulate high calcification in the coronary arteries for investigation of blooming artifacts. Biomolecules 2021, 11, 1307.

- Ng, C.K.C.; Sun, Z. Development of an online automatic computed radiography dose data mining program: A preliminary study. Comput. Methods Programs Biomed. 2010, 97, 48–52.

- Christe, A.; Peters, A.A.; Drakopoulos, D.; Heverhagen, J.T.; Geiser, T.; Stathopoulou, T.; Christodoulidis, S.; Anthimopoulos, M.; Mougiakakou, S.G.; Ebner, L. Computer-aided diagnosis of pulmonary fibrosis using deep learning and CT images. Investig. Radiol. 2019, 54, 627–632.

- Tanaka, H.; Chiu, S.W.; Watanabe, T.; Kaoku, S.; Yamaguchi, T. Computer-aided diagnosis system for breast ultrasound images using deep learning. Phys. Med. Biol. 2019, 64, 235013.

- Kim, H.L.; Ha, E.J.; Han, M. Real-world performance of computer-aided diagnosis system for thyroid nodules using ultrasonography. Ultrasound Med. Biol. 2019, 45, 2672–2678.

- Jeong, E.Y.; Kim, H.L.; Ha, E.J.; Park, S.Y.; Cho, Y.J.; Han, M. Computer-aided diagnosis system for thyroid nodules on ultrasonography: Diagnostic performance and reproducibility based on the experience level of operators. Eur. Radiol. 2019, 29, 1978–1985.

- Park, H.J.; Kim, S.M.; La Yun, B.; Jang, M.; Kim, B.; Jang, J.Y.; Lee, J.Y.; Lee, S.H. A computer-aided diagnosis system using artificial intelligence for the diagnosis and characterization of breast masses on ultrasound: Added value for the inexperienced breast radiologist. Medicine 2019, 98, e14146.

- Zhang, S.; Sun, F.; Wang, N.; Zhang, C.; Yu, Q.; Zhang, M.; Babyn, P.; Zhong, H. Computer-aided diagnosis (CAD) of pulmonary nodule of thoracic CT image using transfer learning. J. Digit. Imaging 2019, 32, 995–1007.

- Wei, R.; Lin, K.; Yan, W.; Guo, Y.; Wang, Y.; Li, J.; Zhu, J. Computer-aided diagnosis of pancreas serous cystic neoplasms: A radiomics method on preoperative MDCT images. Technol. Cancer Res. Treat. 2019, 18, 1533033818824339.

- Chen, C.H.; Lee, Y.W.; Huang, Y.S.; Lan, W.R.; Chang, R.F.; Tu, C.Y.; Chen, C.Y.; Liao, W.C. Computer-aided diagnosis of endobronchial ultrasound images using convolutional neural network. Comput. Methods Programs Biomed. 2019, 177, 175–182.

- Gong, J.; Liu, J.; Hao, W.; Nie, S.; Wang, S.; Peng, W. Computer-aided diagnosis of ground-glass opacity pulmonary nodules using radiomic features analysis. Phys. Med. Biol. 2019, 64, 135015.

- Li, L.; Liu, Z.; Huang, H.; Lin, M.; Luo, D. Evaluating the performance of a deep learning-based computer-aided diagnosis (DL-CAD) system for detecting and characterizing lung nodules: Comparison with the performance of double reading by radiologists. Thorac. Cancer 2019, 10, 183–192.

- Bajaj, V.; Pawar, M.; Meena, V.K.; Kumar, M.; Sengur, A.; Guo, Y. Computer-aided diagnosis of breast cancer using bi-dimensional empirical mode decomposition. Neural Comput. Appl. 2019, 31, 3307–3315.

- Nayak, A.; Baidya Kayal, E.; Arya, M.; Culli, J.; Krishan, S.; Agarwal, S.; Mehndiratta, A. Computer-aided diagnosis of cirrhosis and hepatocellular carcinoma using multi-phase abdomen CT. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1341–1352.

- Greer, M.D.; Lay, N.; Shih, J.H.; Barrett, T.; Bittencourt, L.K.; Borofsky, S.; Kabakus, I.; Law, Y.M.; Marko, J.; Shebel, H.; et al. Computer-aided diagnosis prior to conventional interpretation of prostate mpMRI: An international multi-reader study. Eur. Radiol. 2018, 28, 4407–4417.

- Ishioka, J.; Matsuoka, Y.; Uehara, S.; Yasuda, Y.; Kijima, T.; Yoshida, S.; Yokoyama, M.; Saito, K.; Kihara, K.; Numao, N.; et al. Computer-aided diagnosis of prostate cancer on magnetic resonance imaging using a convolutional neural network algorithm. BJU Int. 2018, 122, 411–417.

- Song, Y.; Zhang, Y.D.; Yan, X.; Liu, H.; Zhou, M.; Hu, B.; Yang, G. Computer-aided diagnosis of prostate cancer using a deep convolutional neural network from multiparametric MRI. J. Magn. Reson. Imaging 2018, 48, 1570–1577.

- Yoo, Y.J.; Ha, E.J.; Cho, Y.J.; Kim, H.L.; Han, M.; Kang, S.Y. Computer-aided diagnosis of thyroid nodules via ultrasonography: Initial clinical experience. Korean J. Radiol. 2018, 19, 665–672.

- Al-Antari, M.A.; Al-Masni, M.A.; Choi, M.T.; Han, S.M.; Kim, T.S. A fully integrated computer-aided diagnosis system for digital x-ray mammograms via deep learning detection, segmentation, and classification. Int. J. Med. Inform. 2018, 117, 44–54.

- Choi, Y.J.; Baek, J.H.; Park, H.S.; Shim, W.H.; Kim, T.Y.; Shong, Y.K.; Lee, J.H. A computer-aided diagnosis system using artificial intelligence for the diagnosis and characterization of thyroid nodules on ultrasound: Initial clinical assessment. Thyroid 2017, 27, 546–552.

- Li, S.; Jiang, H.; Wang, Z.; Zhang, G.; Yao, Y.D. An effective computer aided diagnosis model for pancreas cancer on PET/CT images. Comput. Methods Programs Biomed. 2018, 165, 205–214.

- Chang, C.C.; Chen, H.H.; Chang, Y.C.; Yang, M.Y.; Lo, C.M.; Ko, W.C.; Lee, Y.F.; Liu, K.L.; Chang, R.F. Computer-aided diagnosis of liver tumors on computed tomography images. Comput. Methods Programs Biomed. 2017, 145, 45–51.

- Cho, E.; Kim, E.K.; Song, M.K.; Yoon, J.H. Application of computer-aided diagnosis on breast ultrasonography: Evaluation of diagnostic performances and agreement of radiologists according to different levels of experience. J. Ultrasound Med. 2018, 37, 209–216.

- Sun, Z.; Ng, C.K.C.; Sá Dos Reis, C. Synchrotron radiation computed tomography versus conventional computed tomography for assessment of four types of stent grafts used for endovascular treatment of thoracic and abdominal aortic aneurysms. Quant. Imaging Med. Surg. 2018, 8, 609–620.

- Almutairi, A.M.; Sun, Z.; Ng, C.; Al-Safran, Z.A.; Al-Mulla, A.A.; Al-Jamaan, A.I. Optimal scanning protocols of 64-slice CT angiography in coronary artery stents: An in vitro phantom study. Eur. J. Radiol. 2010, 74, 156–160.

- Sun, Z.; Ng, C.K.C. Use of synchrotron radiation to accurately assess cross-sectional area reduction of the aortic branch ostia caused by suprarenal stent wires. J. Endovasc. Ther. 2017, 24, 870–879.

- Zebari, D.A.; Ibrahim, D.A.; Zeebaree, D.Q.; Haron, H.; Salih, M.S.; Damaševičius, R.; Mohammed, M.A. Systematic review of computing approaches for breast cancer detection based computer aided diagnosis using mammogram images. Appl. Artif. Intell. 2021, 35, 2157–2203.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ng, C.K.C. Diagnostic Performance of Artificial Intelligence-Based Computer-Aided Detection and Diagnosis in Pediatric Radiology: A Systematic Review. Children 2023, 10, 525. https://doi.org/10.3390/children10030525
