Multi-Attribute Online Decision-Making Driven by Opinion Mining

Abstract

1. Introduction

- (a)

- Scheme for the selection of high-quality reviews by incorporating users’ preferences.

- (b)

- Feature ranking scheme based on multiple parameters for a deeper understanding of consumers’ opinions.

- (c)

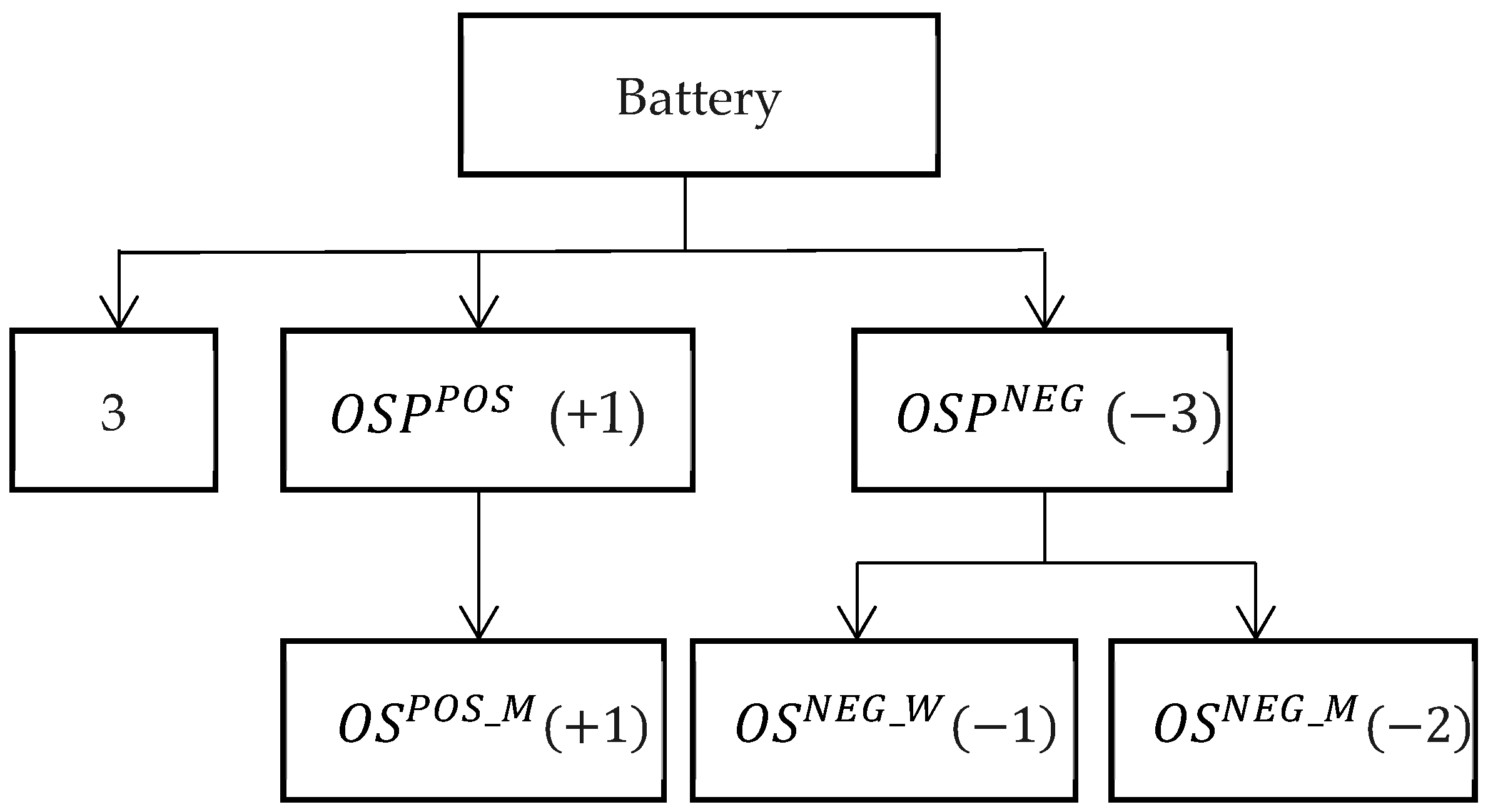

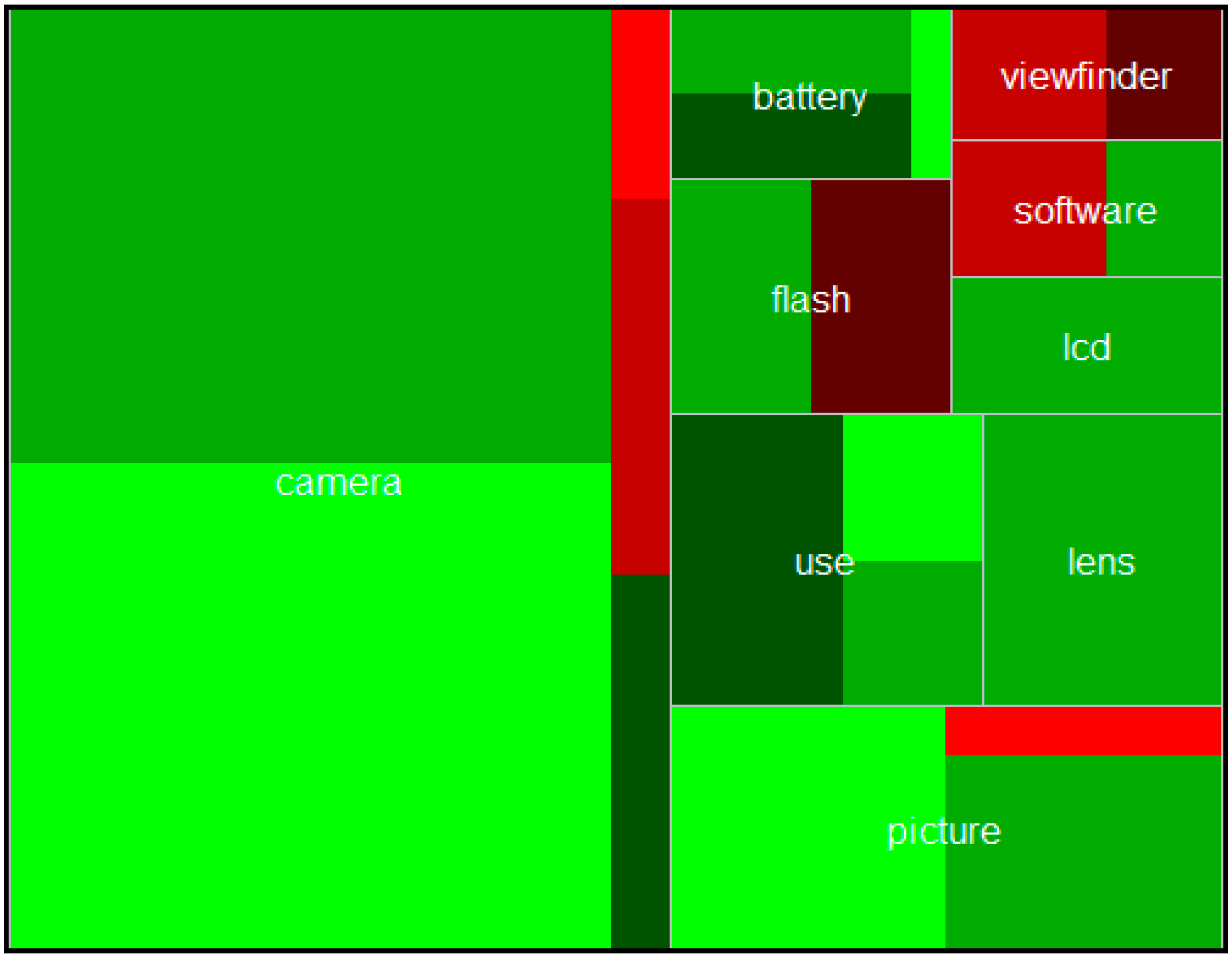

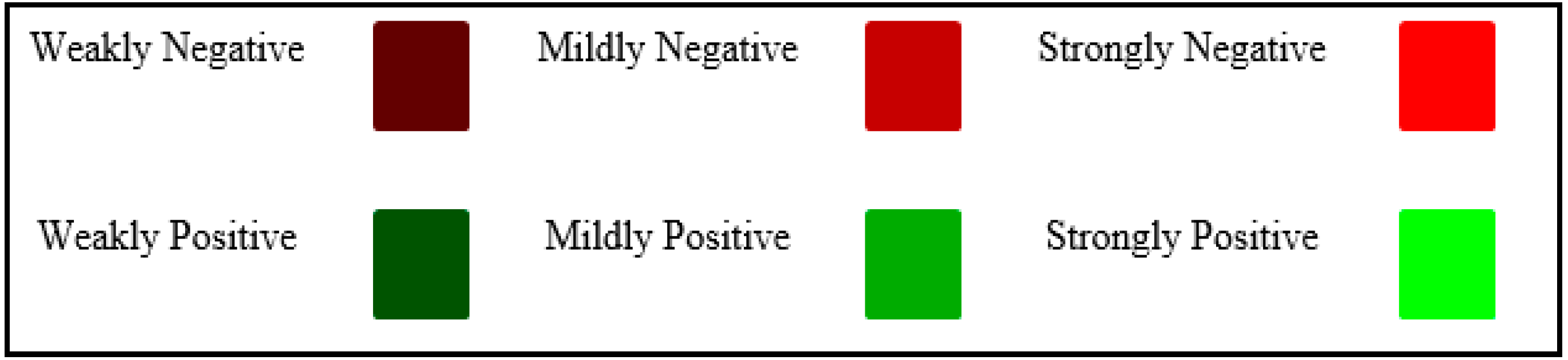

- Opinion-strength-based visualization based on high-quality reviews to provide high-quality information for decision-making. The proposed visualization provides a multi-level detail of consumers’ opinions (ranging from −3 to +3) on critical products features at a glance, which allows entrepreneurs and consumers to highlight decisive product features having a key impact on the sale, product choice and adoption.

- (d)

- Reputation system is evaluated on a real dataset

- (e)

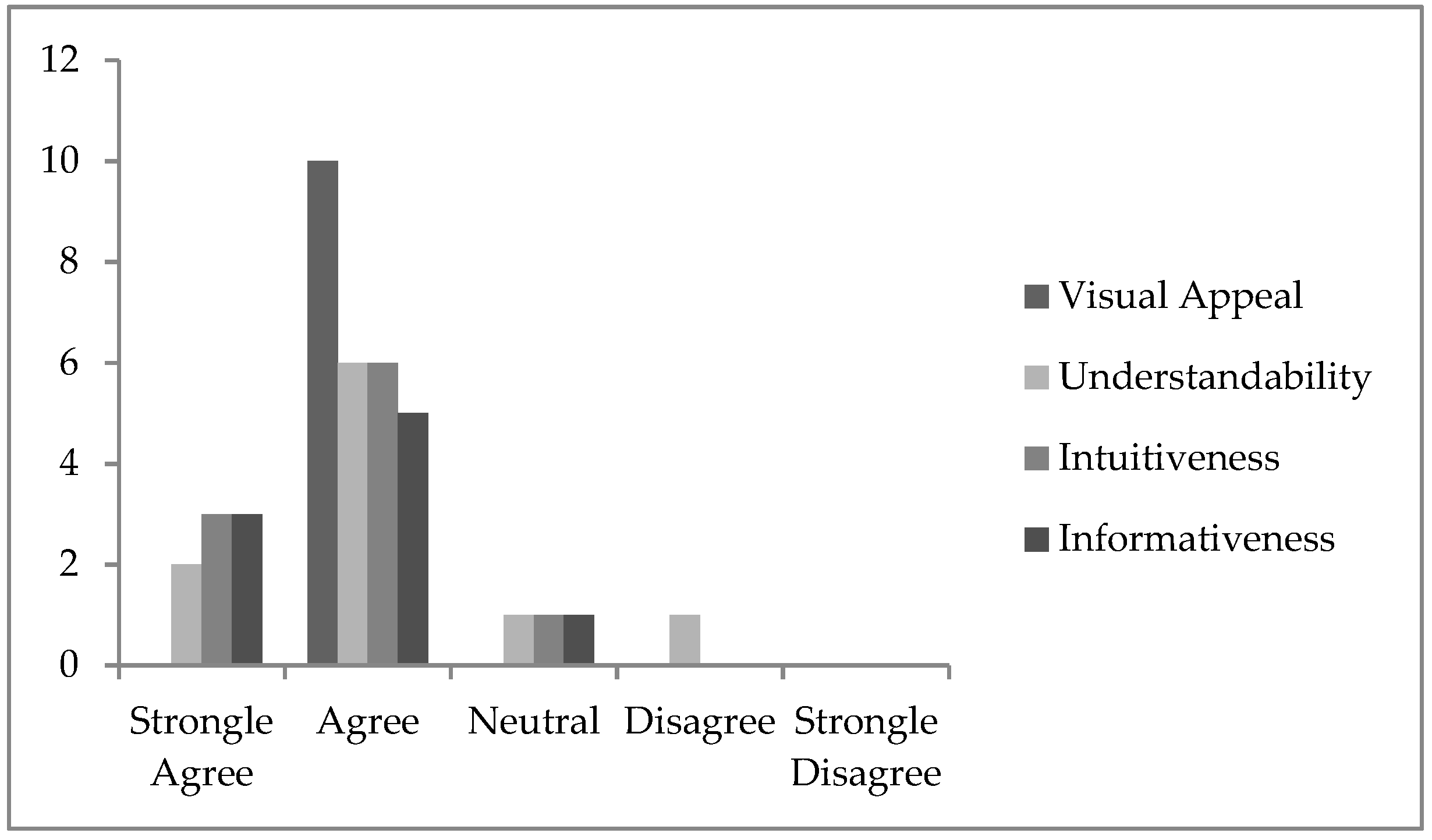

- Usability study for the evaluation of the proposed visualization

2. Related Work

2.1. Review Quality Evaluation and Review Ranking

2.2. Feature Ranking

2.3. Opinion Visualizations

3. Proposed System

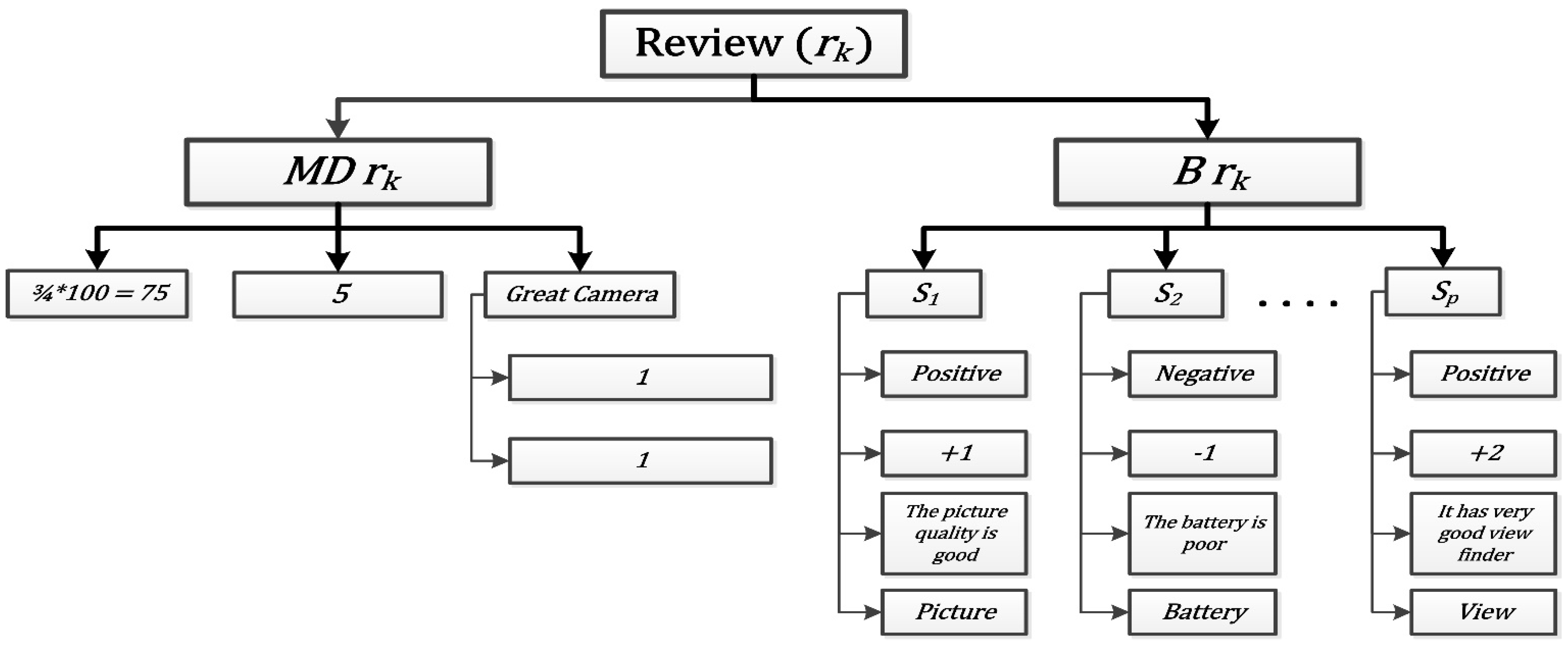

3.1. Theoretical Framework

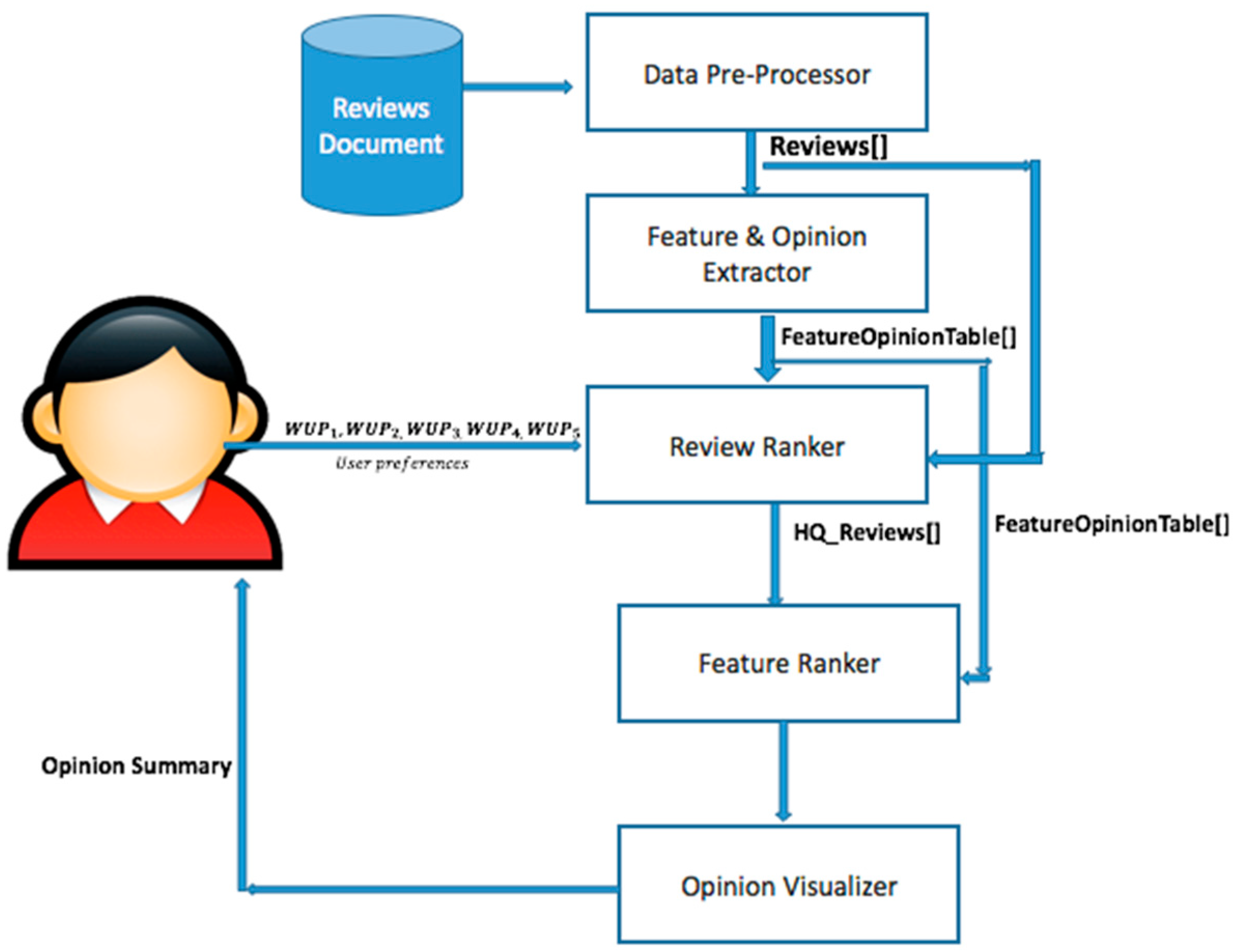

3.2. Architecture of the System

3.2.1. Pre-Processor

3.2.2. Feature and Opinion Extractor

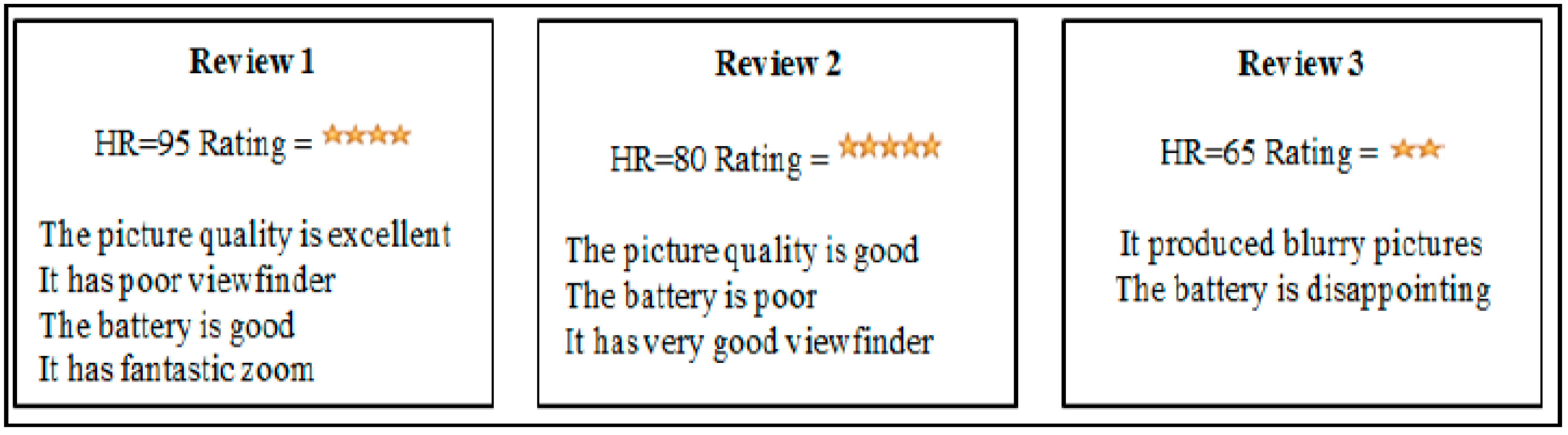

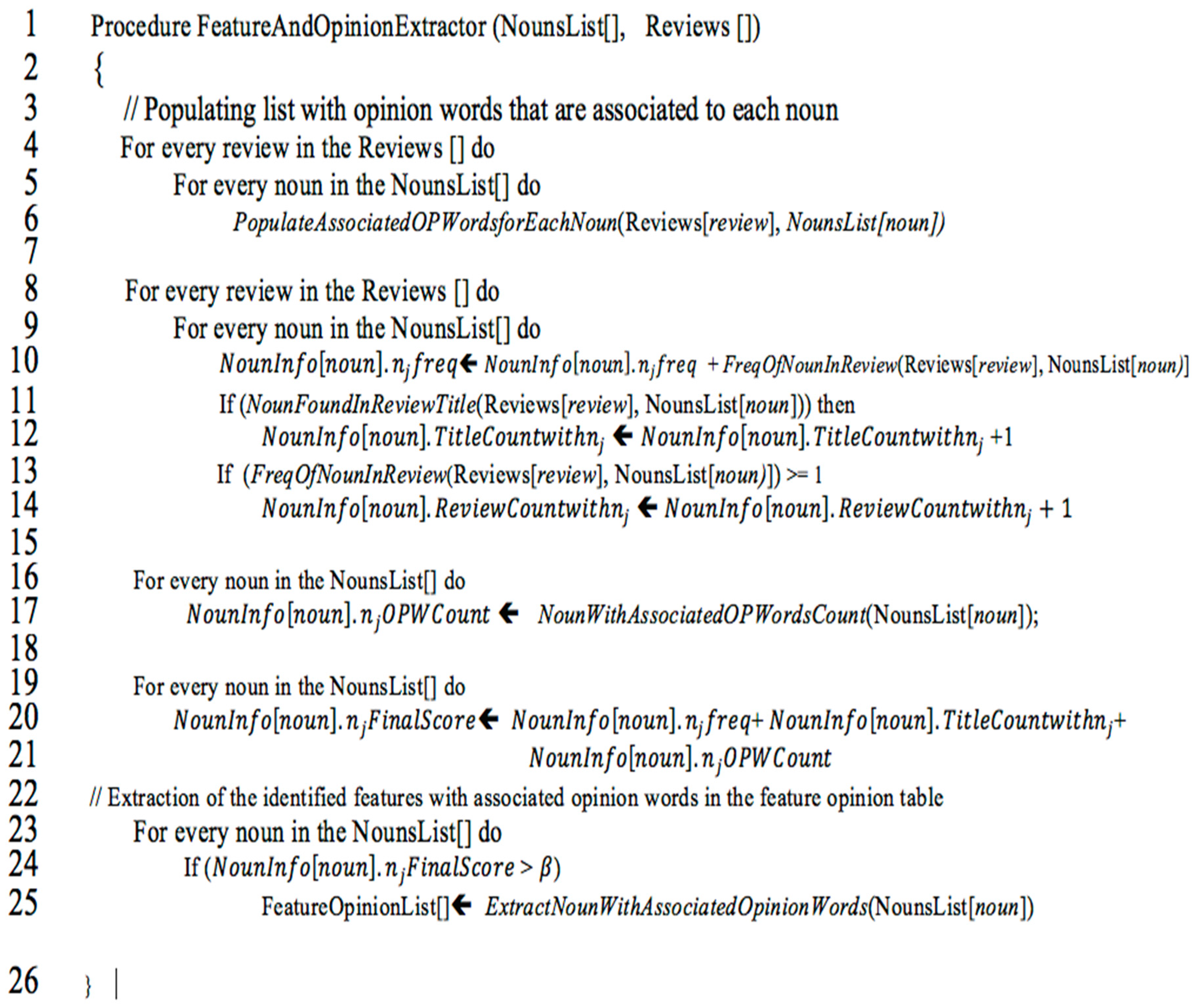

3.2.3. Review Ranker

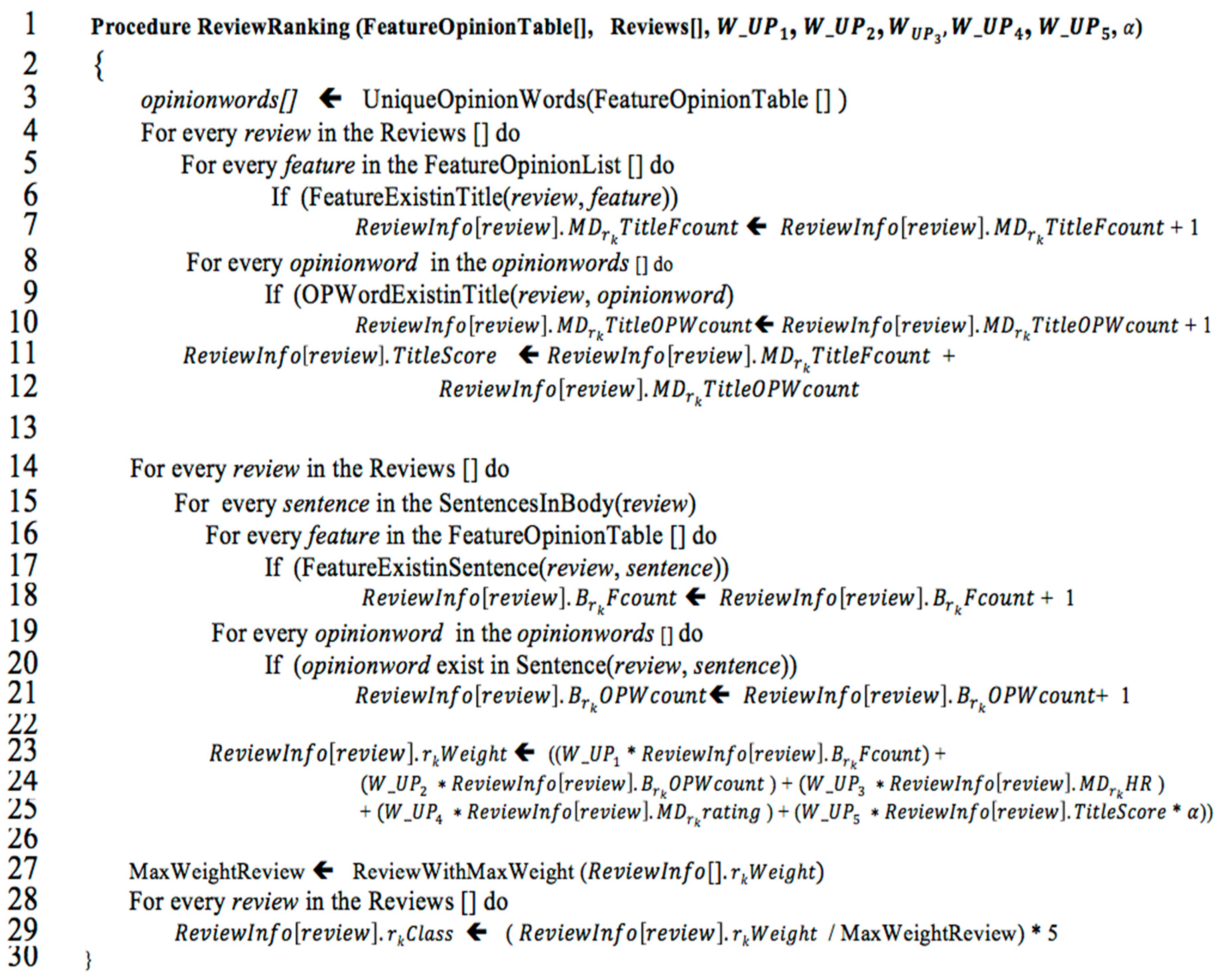

3.2.4. Feature Ranker

3.2.5. Opinion Visualizer

4. Evaluation of Proposed System

4.1. Dataset

4.2. Results and Discussion

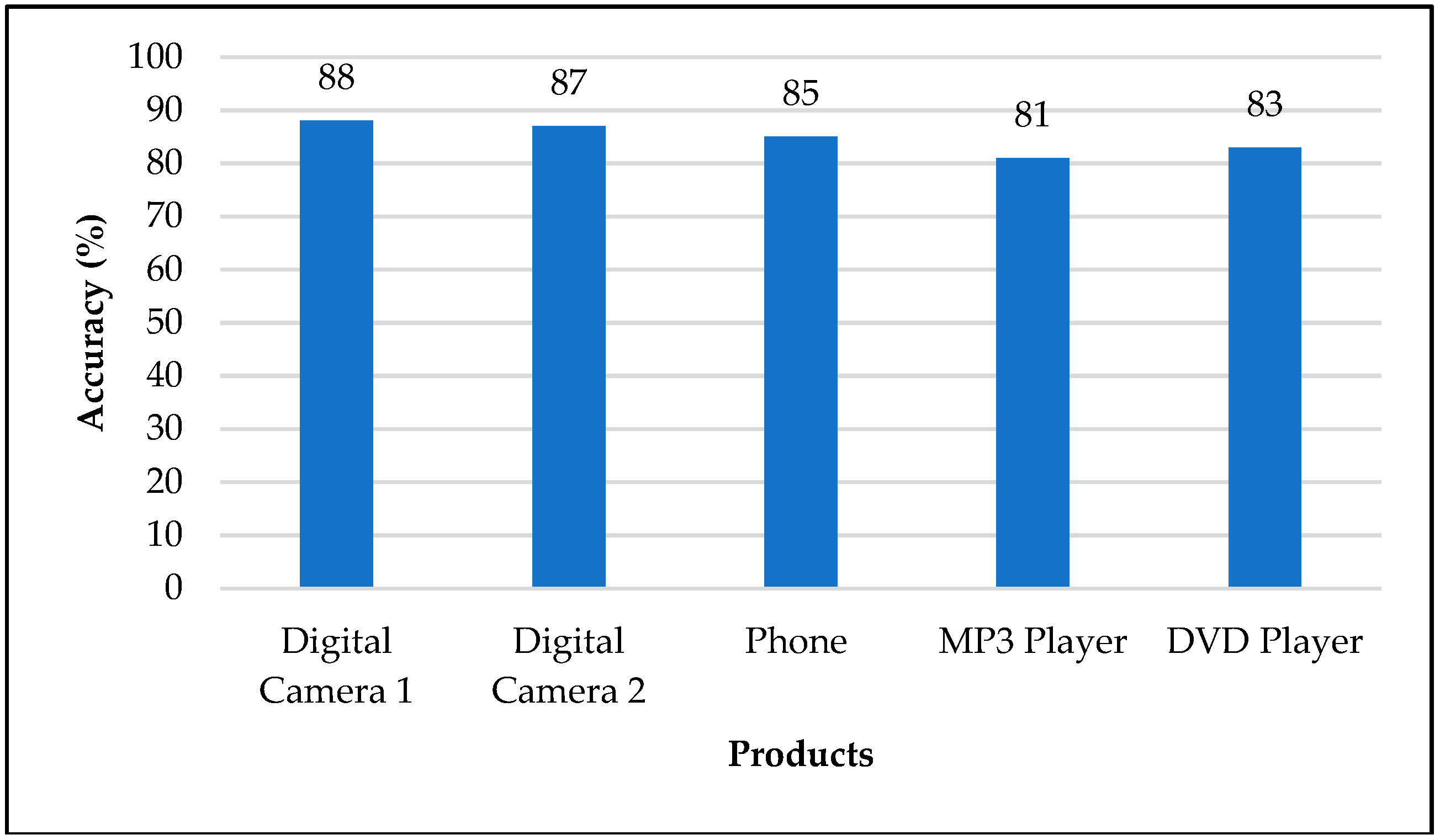

4.2.1. Review Quality Classification

4.2.2. Feature Ranking

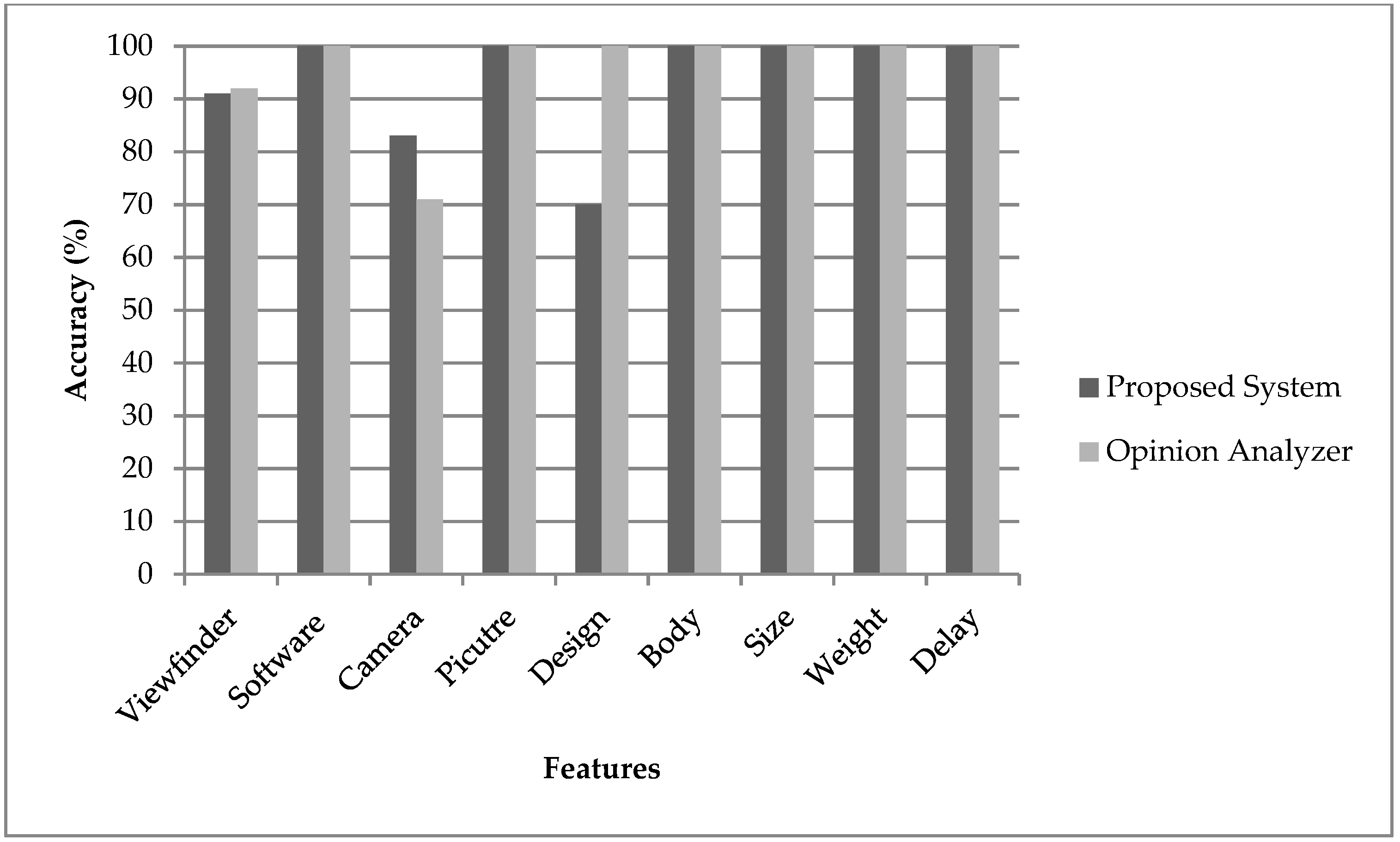

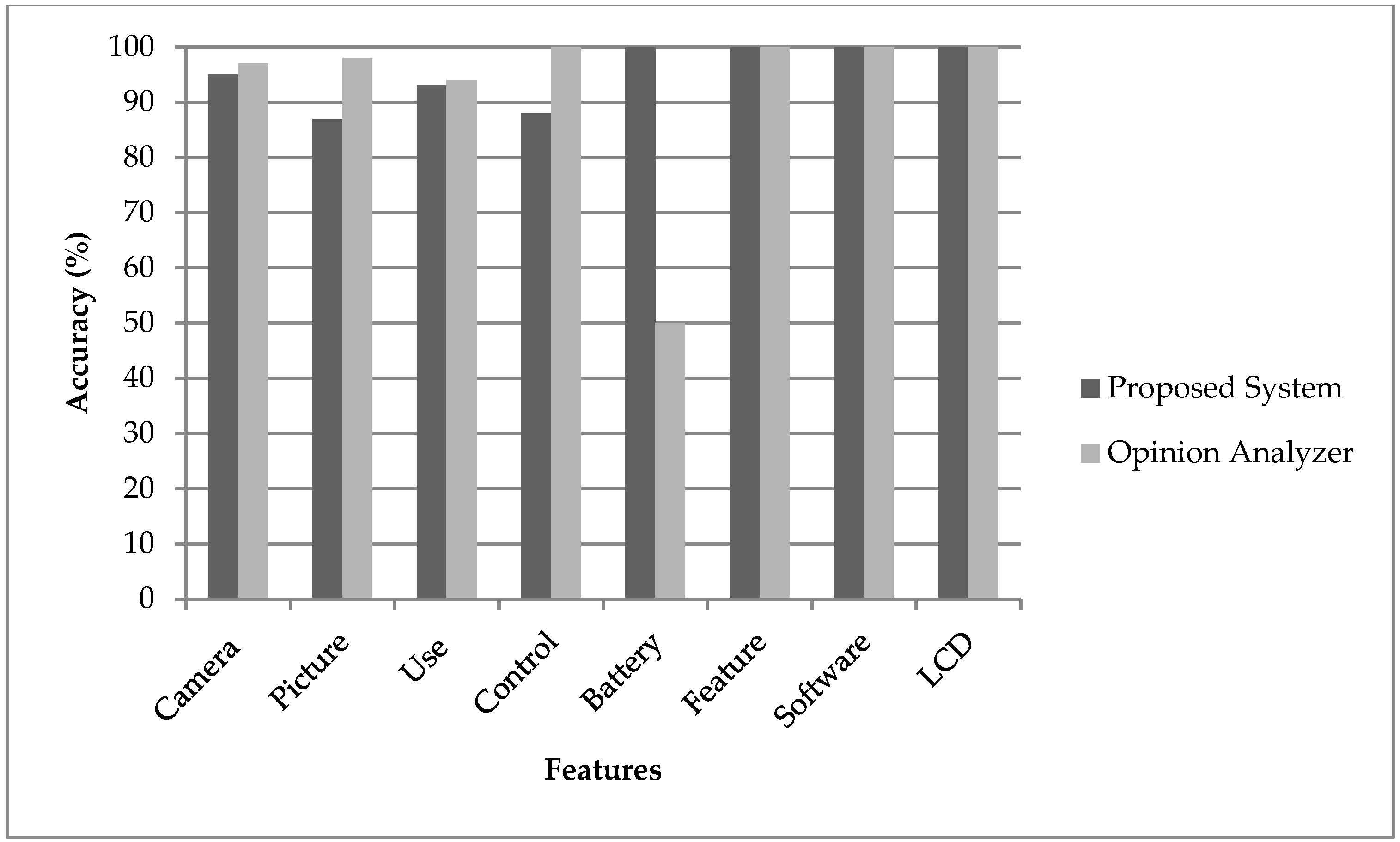

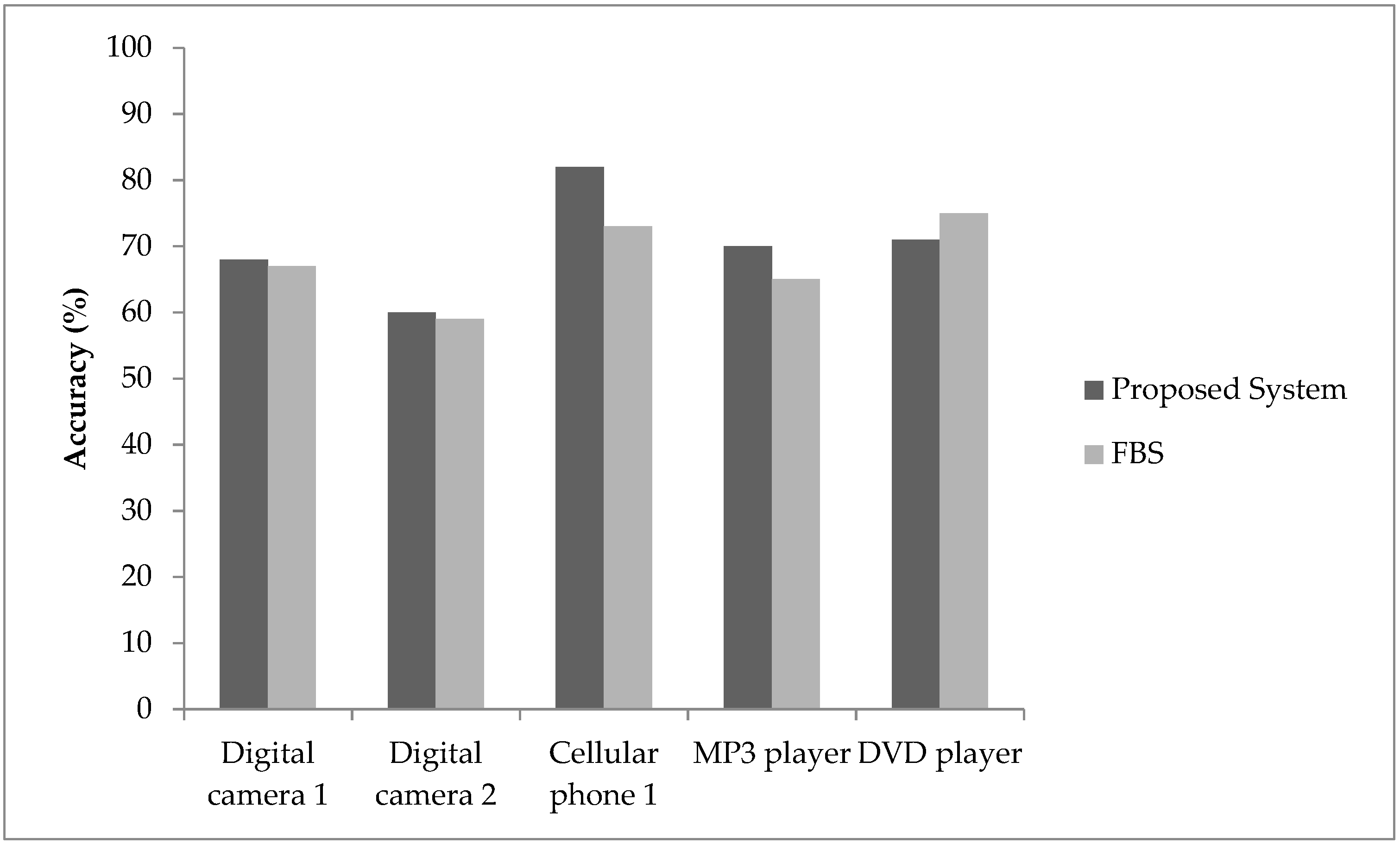

4.3. Comparison of Proposed System with FBS System and Opinion Analyzer

4.4. Opinion Visualizer

Case Study

5. Conclusion, Limitations, and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Notation | Description |
|---|---|
| D | Document with n product reviews |
| | Review k |
| | Metadata of review k |
| | Body of review k |
| | Helpfulness Ratio of review k (HR is an MD element) |
| | Title of review k |
| | Rating of review k (Rating is an MD element) |
| | Number of features in the review title |
| | Number of opinion words in the review title |
| | Number of features in the body of the review |
| | Product feature in a sentence |
| | Semantic polarity (SP) of the feature in a sentence |
| | Opinion strength (OS) of the feature in a sentence |
| | Frequency of the feature |
| ontent | Content of the sentence |
| | The semantic polarity of the opinion is positive |
| | The semantic polarity of the opinion is negative |
| | The opinion strength is strong positive |
| | The opinion strength is mild positive |
| | The opinion strength is weak positive |
| | The opinion strength is strong negative |
| | The opinion strength is mild negative |
| | The opinion strength is weak negative |
| | Weight of strong positive opinion strength (i.e., +3) |
| | Weight of mild positive opinion strength (i.e., +2) |
| | Weight of weak positive opinion strength (i.e., +1) |
| | Weight of strong negative opinion strength (i.e., −3) |
| | Weight of mild negative opinion strength (i.e., −2) |
| | Weight of weak negative opinion strength (i.e., −1) |
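
The opinion-strength weights in the notation table (+3 to −3) lend themselves to a small worked example. The sketch below is only an illustration under stated assumptions: it sums the weight of every mention of a feature and sorts features by that total. The paper's feature ranker is described as using multiple parameters, and its exact formula is not given in this section, so the plain weighted sum, the label strings, the function name `rank_features`, and the sample mentions are all hypothetical.

```python
from collections import defaultdict

# Opinion-strength weights as listed in the notation table.
# The string labels are hypothetical names chosen for this sketch.
STRENGTH_WEIGHTS = {
    "strong_positive": 3,
    "mild_positive": 2,
    "weak_positive": 1,
    "weak_negative": -1,
    "mild_negative": -2,
    "strong_negative": -3,
}


def rank_features(mentions):
    """Sum opinion-strength weights per feature and sort by total weight.

    `mentions` is an iterable of (feature, strength_label) pairs.
    This is an assumed aggregation, not the paper's ranking formula.
    """
    totals = defaultdict(int)
    for feature, strength in mentions:
        totals[feature] += STRENGTH_WEIGHTS[strength]
    # Highest total first; ties are broken alphabetically for determinism.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical (feature, opinion strength) pairs, not taken from the dataset.
mentions = [
    ("picture", "strong_positive"),
    ("picture", "mild_positive"),
    ("price", "weak_positive"),
    ("service", "strong_negative"),
    ("service", "mild_positive"),
]

ranking = rank_features(mentions)
print(ranking)
```

With these sample mentions, "picture" accumulates 3 + 2, "price" 1, and "service" −3 + 2, so features with mostly negative opinions sink to the bottom of the ranking.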

| No. | Product Type | Product Name | Number of Reviews | Number of Sentences | Length in Words | Length in Characters |
|---|---|---|---|---|---|---|
| 1. | Digital Camera 1 | Canon G3 | 45 | 597 | 11,280 | 48,714 |
| 2. | Digital Camera 2 | Nikon Coolpix 4300 | 34 | 346 | 6749 | 29,763 |
| 3. | Cellular Phone | Nokia 6610 | 44 | 546 | 9681 | 42,795 |
| 4. | MP3 Player | Creative Labs Nomad Jukebox Zen Xtra 40 GB | 95 | 1716 | 12,719 | 54,872 |
| 5. | DVD Player | Apex AD2600 Progressive-scan DVD player | 100 | 740 | 32,553 | 138,301 |
| Total | | | 318 | 3945 | 72,982 | 314,445 |

| Metrics | Picture Quality |
|---|---|
| | 15 |
| | 12 |
| | 12/15 × 100 = 80% |
| | 10 |
| | 9 |
| | 9/10 × 100 = 90% |
| | 5 |
| | 3 |
| | 3/5 × 100 = 60% |
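
The three percentage rows in the table above follow the same ratio arithmetic. A minimal sketch of that calculation is given below. The metric labels did not survive in the table, so the function name `accuracy_percent` and the generic "numerator out of denominator" reading are assumptions; only the number pairs are taken from the table.

```python
def accuracy_percent(numerator, denominator):
    """Return numerator / denominator expressed as a percentage."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    # Multiply first so that exact cases such as 12/15 stay exact in floating point.
    return 100 * numerator / denominator


# (numerator, denominator) pairs as they appear in the table rows.
pairs = [(12, 15), (9, 10), (3, 5)]

percentages = [round(accuracy_percent(n, d)) for n, d in pairs]
print(percentages)  # [80, 90, 60]
```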

| Review Classes | Digital Camera 1 | DVD Player |
|---|---|---|
| Excellent | 3 | 6 |
| Good | 17 | 37 |
| Average | 11 | 14 |
| Fair | 5 | 6 |
| Poor | 9 | 36 |

| Features | Weight | Accuracy | Features | Weight | Accuracy | Features | Weight | Accuracy |
|---|---|---|---|---|---|---|---|---|
| Player | 144 | 87 | Player | 196 | 91 | Feature | 23 | 100 |
| Play | 31 | 90 | Play | 35 | 81 | Price | 17 | 61 |
| Price | 28 | 61 | Picture | 27 | 69 | Work | 7 | 71 |
| Feature | 23 | 100 | Apex | 22 | 100 | Product | 3 | 67 |
| Apex | 14 | 93 | Quality | 11 | 58 | Unit | −3 | 100 |
| Picture | 13 | 77 | Video | 9 | 64 | Service | −4 | 100 |
| Work | 8 | 88 | Disc | 8 | 67 | Play | −7 | 58 |
| Product | 7 | 100 | Button | 7 | 100 | Button | −7 | 100 |
| Unit | 4 | 100 | Unit | 7 | 100 | Disc | −8 | 67 |
| Service | 0 | 100 | Product | 4 | 80 | Apex | −9 | 89 |

| | Proposed System | Opinion Analyzer |
|---|---|---|
| Average Accuracy for Positive Rank | 95 | 93 |
| Average Accuracy for Negative Rank | 94 | 96 |
| Average Accuracy for Negative and Positive Rankings | 95 | 95 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shamim, A.; Qureshi, M.A.; Jabeen, F.; Liaqat, M.; Bilal, M.; Jembre, Y.Z.; Attique, M. Multi-Attribute Online Decision-Making Driven by Opinion Mining. Mathematics 2021, 9, 833. https://doi.org/10.3390/math9080833
