Abstract

We propose a framework where the Fer and Wilcox expansions for the solution of differential equations are derived from two particular choices for the initial transformation that seeds the product expansion. Intermediate expansions can also be envisaged within this scheme. Recurrence formulas are developed. A new lower bound for the convergence domain of the Wilcox expansion is provided, together with some applications of the results. In particular, two examples are worked out up to a high order of approximation to illustrate the behavior of the Wilcox expansion.

1. Introduction

Linear differential equations of the form

$$U'(t) = A(t)\,U(t), \qquad U(0) = I, \tag{1}$$

with $A(t)$ a matrix whose entries are integrable functions of t, are ubiquitous in many branches of science, the fundamental evolution equation of Quantum Mechanics, the Schrödinger equation, being a particular case. Consequently, solving Equation (1) is of the greatest importance. In spite of their apparent simplicity, however, such equations are seldom solvable in terms of elementary functions, and so different procedures have been proposed over the years to render approximate solutions. These are especially useful in the analytical treatment of perturbative problems, such as those arising in the time evolution of quantum systems [1], control theory, or problems where time-ordered products are involved [2]. Among them, exponential perturbative expansions have received a great deal of attention, due to some remarkable properties they possess. In particular, if Equation (1) is defined in a Lie group, the approximations they furnish also evolve in the same Lie group. As a consequence, important qualitative properties of the exact solution are also preserved by the approximations. Thus, if Equation (1) represents the time-dependent Schrödinger equation, then the approximate evolution operator is still unitary, and as a consequence, the (approximate) transition probabilities still add up to one, no matter where the expansion is truncated. There are, in fact, many physical problems (in non-linear mechanics, optical spectroscopy, magnetic resonance, etc.) involving periodic fast-oscillating external fields that are also modeled by Equation (1), with $A(t)$ periodic. In that case, especially tailored expansions incorporating the well-known Floquet theorem [3], such as the average Hamiltonian theory [4] and the Floquet–Magnus expansion [5,6], have also been proposed.

When dealing with the general problem (1), one of the most widely used exponential approximations is the Magnus expansion [7]

$$U(t) = \exp\bigl(\Omega(t)\bigr), \tag{2}$$

where $\Omega(t)$ is an infinite series

$$\Omega(t) = \sum_{k=1}^{\infty} \Omega_k(t), \tag{3}$$

whose terms are linear combinations of time-ordered integrals of nested commutators of A evaluated at different times (see [8] for a review, including applications to several physical and mathematical problems). What is more interesting for our purposes here is that this expansion can be related to a coordinate transformation rendering the original system (1) into the trivial equation

$$Y'(t) = 0, \tag{4}$$

with the static solution $Y(t) = I$, and that the transformation is given precisely by $\exp(\Omega(t))$, i.e., $U(t) = \exp(\Omega(t))\,Y(t)$ [9].

Whereas the Magnus expansion relies on a single exponential transformation, and the Floquet–Magnus expansion on two of them when $A(t)$ is periodic, other exponential perturbative expansions are based on infinite product factorizations of the solution of Equation (1),

such as those proposed by Fer and Wilcox. In fact, as pointed out in [10], both expansions have a curious history, which is worth describing. It was Fer who proposed the expansion that bears his name [11], although he never applied it to any specific problem. Bellman reviewed this paper in Mathematical Reviews (MR0104009) and even proposed the expansion as an exercise in [12]. Wilcox, however, identified it in [1] with an alternative factorization, Equation (5), which was in fact a different and new type of expansion. From then on, their historical trajectories diverge. Thus, the Fer expansion was rediscovered by Iserles [13] as a tool for the numerical integration of linear differential equations and later used in Quantum Mechanics [14] and solid-state nuclear magnetic resonance [15], as well as a Lie-group integrator [16,17,18]. The Wilcox expansion, on the other hand, has been rediscovered several times in the literature, in particular in [19] in the context of nonlinear control systems and in [20] as a general tool for approximating the time-evolution operator in Quantum Mechanics.

The first goal of this work is to recast both infinite product expansions within a unifying framework. This is done by considering, instead of a single exponential transformation as in the Magnus expansion, a sequence of such transformations chosen to satisfy certain requirements. To be more specific, suppose one replaces A by $\varepsilon A$ in Equation (1), where $\varepsilon$ is a parameter. Then, if the transformations are chosen so that the generator of the k-th one is proportional to $\varepsilon^k$, we recover the Wilcox expansion, whereas we end up with the Fer expansion when each generator is an infinite series in $\varepsilon$ whose first term is proportional to $\varepsilon^{2^{k-1}}$.

We also show that further alternative choices yield new factorizations. This additional degree of freedom can indeed be used to better exploit the features of the matrix $A(t)$, as the Floquet–Magnus expansion does when $A(t)$ is periodic.

One might then consider this sequence of linear transformations as a generalization of the concept of picture in Quantum Mechanics when Equation (1) refers to the Schrödinger equation.

Our second goal is to obtain, on the basis of this framework, new results concerning the Wilcox expansion. Thus, we develop a recursive procedure to obtain every order of approximation in terms of nested commutators, as well as a lower bound on its radius of convergence. We also establish a formal connection between the Wilcox expansion and the Zassenhaus formula [7,21].

Finally, we address the important problem of expanding the exponential $e^{A+\varepsilon B}$, with A and B two generic non-commuting operators, and work out two applications of the results obtained.

2. A Sequence of Transformations: The General Case

Given the initial value problem (1), let us consider a linear change of variables of the form

transforming the original system into

For the time being, the generator of the transformation is not specified. Then, the new coefficient matrix can be expressed in terms of A and the generator as follows. First, by inserting Equation (6) into Equation (1) and taking Equation (7) into account, one obtains

whence

The derivative of the matrix exponential can be written as [8]

where the symbol stands for the (everywhere convergent) power series

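Here $\mathrm{ad}_{\Omega}^{0} C = C$, $\mathrm{ad}_{\Omega}^{k} C = [\Omega, \mathrm{ad}_{\Omega}^{k-1} C]$, and $[\cdot,\cdot]$ denotes the usual commutator. Therefore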

where

and

Of course, nothing prevents us from repeating the whole procedure above and introducing a second transformation to Equation (7) of the form

so that the new variables verify

In general, for the n-th such linear transformation

with

one has

so that the solution of Equation (1) is expressed as

Alternatively, the coefficient matrix in Equation (19) can be written as follows. Since it is also true that [8]

we have

The important point is, of course, how to choose the generator of each transformation (or, alternatively, each new coefficient matrix), i.e., the specific requirements each transformation has to satisfy in order to be useful for approximately solving Equation (1). There are obviously many possibilities, and in the following we analyze two of them, leading to two different and well-known exponential perturbative factorizations mentioned in the Introduction, namely the Wilcox [1] and Fer [11] expansions.
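The change of variables above can be checked numerically. The sketch below is a minimal illustration under our own assumptions: the convention $U = e^{\Omega}U_1$ with $U_1' = A_1U_1$ (so that $A_1 = e^{-\Omega}(Ae^{\Omega} - (e^{\Omega})')$), the definition $\mathrm{dexp}_{\Omega}(C) = \sum_{k\ge 0}\mathrm{ad}_{\Omega}^k C/(k+1)!$, and arbitrary test functions $\Omega(t)$ and $A(t)$. It compares the direct expression, with the time derivative approximated by a central difference, against the commutator series $e^{-\mathrm{ad}_{\Omega}}A - \mathrm{dexp}_{-\Omega}(\Omega')$. The helper `expm` (scaling and squaring plus a truncated Taylor series) is ours and is not meant as a production routine.

```python
import math
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

def ad_series(Om, C, coeff, kmax=30):
    """Return sum_{k=0}^{kmax} coeff(k) * ad_Om^k(C), where ad_Om(C) = Om C - C Om."""
    total, term = np.zeros_like(C), C.copy()
    for k in range(kmax + 1):
        total = total + coeff(k) * term
        term = Om @ term - term @ Om
    return total

# Hypothetical smooth generator Omega(t), its derivative, and coefficient matrix A(t).
a = np.array([[0.0, 1.0], [-1.0, 0.3]])
b = np.array([[0.2, 0.0], [0.5, -0.2]])
Omega = lambda t: t * a + 0.5 * t**2 * b
dOmega = lambda t: a + t * b
A = lambda t: np.array([[np.sin(t), 1.0], [0.0, np.cos(t)]])

t, h = 0.7, 1e-5
E = expm(Omega(t))
dE = (expm(Omega(t + h)) - expm(Omega(t - h))) / (2 * h)   # central difference of e^Omega
A1_direct = np.linalg.solve(E, A(t) @ E - dE)              # e^{-Omega}(A e^Omega - (e^Omega)')

# Commutator form: e^{-ad_Omega} A - dexp_{-Omega}(Omega')
A1_series = (ad_series(Omega(t), A(t), lambda k: (-1)**k / math.factorial(k))
             - ad_series(Omega(t), dOmega(t), lambda k: (-1)**k / math.factorial(k + 1)))
print(np.max(np.abs(A1_direct - A1_series)))
```

The two results agree up to the finite-difference error; choosing the generator so that the order-$\varepsilon$ contributions of the two series cancel is precisely what the Wilcox and Fer choices below exploit.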

3. Wilcox Expansion

3.1. Recurrences

Let us introduce the (dummy) parameter $\varepsilon$ in Equation (1) by replacing A with $\varepsilon A$. This is helpful when collecting coefficients, and at the end we can always take $\varepsilon = 1$.

Since the solution of Equation (1) when A is constant, or more generally when $A(t_1)A(t_2) = A(t_2)A(t_1)$ for all $t_1, t_2$, is $\exp\bigl(\varepsilon\int_0^t A(s)\,ds\bigr)$, it makes sense to take the generator for the first transformation as

Then, according to Equations (12) and (13), we have

where

With this choice, it turns out that the new coefficient matrix is a power series in $\varepsilon$ starting with $\varepsilon^2$,

where

We can analogously choose the second transformation proportional to $\varepsilon^2$, i.e., with a generator equal to $\varepsilon^2$ times a matrix function to be determined. Then, a straightforward calculation shows that

with

The generator is then obtained by imposing that the term proportional to $\varepsilon^2$ vanishes in Equation (28), i.e.,

In this way, the new coefficient matrix is a power series in $\varepsilon$ starting with $\varepsilon^3$,

with

where $\lfloor\cdot\rfloor$ stands for the integer part of the argument. In general, the n-th transformation is determined in such a way that the power series of the new coefficient matrix starts with $\varepsilon^{n+1}$. This can be done as follows: from the previous coefficient matrix, we compute

with

Then, is obtained by taking , i.e.,

and, finally, is determined as

with

Notice that, in view of Equations (34) and (37), Equation (35) simplifies to

The solution of Equation (1) is expressed, after n such transformations, as

An approximation to the exact solution containing all the dependence on $\varepsilon$ up to $\varepsilon^n$ is obtained by setting the last coefficient matrix equal to zero in Equation (39).

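Although it is only an approximation, the truncated factorization still shares relevant qualitative properties with the exact evolution operator, such as orthogonality or unitarity, since each factor is the exponential of an element of the corresponding Lie algebra.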
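Both properties can be observed in a second-order truncation. The sketch below assumes a hypothetical coefficient $A(t) = a + t\,b$ with skew-symmetric $a, b$, for which a short calculation of ours from the transformation formula gives $W_1 = \int_0^t A = t\,a + \tfrac12 t^2 b$ and $W_2(t) = -\tfrac12\int_0^t [W_1(s), A(s)]\,ds = -\tfrac{t^3}{12}[a,b]$ (the labels $W_1, W_2$ are ours). The product $e^{\varepsilon W_1}e^{\varepsilon^2 W_2}$ is compared with a fine Runge–Kutta solution of $U' = \varepsilon A(t)U$: the error should drop by roughly a factor of 8 when $\varepsilon$ is halved, while the truncated product stays orthogonal to rounding error.

```python
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

def rk4(f, U0, T, n):
    """Classical fourth-order Runge-Kutta integration of the matrix ODE U' = f(t, U)."""
    h, U, t = T / n, U0.copy(), 0.0
    for _ in range(n):
        k1 = f(t, U)
        k2 = f(t + h / 2, U + h / 2 * k1)
        k3 = f(t + h / 2, U + h / 2 * k2)
        k4 = f(t + h, U + h * k3)
        U = U + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return U

# Hypothetical test data: A(t) = a + t b with a, b skew-symmetric (elements of so(3)).
a = np.array([[0.0, 1.0, 0.0], [-1.0, 0.0, 0.5], [0.0, -0.5, 0.0]])
b = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [-1.0, 0.0, 0.0]])
T = 1.0

def wilcox2(eps, t=T):
    """Second-order truncation exp(eps W1) exp(eps^2 W2) for this particular A(t)."""
    W1 = t * a + 0.5 * t**2 * b               # integral of A from 0 to t
    W2 = -(t**3 / 12.0) * (a @ b - b @ a)     # -(1/2) int_0^t [W1(s), A(s)] ds
    return expm(eps * W1) @ expm(eps**2 * W2)

def error(eps):
    exact = rk4(lambda s, U: eps * (a + s * b) @ U, np.eye(3), T, 2000)
    return np.linalg.norm(wilcox2(eps) - exact)

e1, e2 = error(0.05), error(0.025)
Q = wilcox2(0.5)
print(e1 / e2, np.linalg.norm(Q.T @ Q - np.eye(3)))
```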

3.2. Explicit Expressions for

Although in practice the recursive procedure (33)–(38) is computationally very efficient for constructing the exponents up to a given order, much insight about the expansion can be gained if an explicit expression for every exponent can be constructed, thus generalizing the treatment originally carried out by Wilcox for the first terms [1].

Such an expression could be obtained, in principle, by working out the recurrence (33)–(38), but a more direct approach consists of comparing the Dyson perturbation series [22] for the associated initial value problem

i.e.,

with the expansion in $\varepsilon$ of the factorization

Thus, for the first terms, one has

In general, we can write

where the sum extends over all the partitions of the integer n. We recall that a partition of the integer n is an n-tuple , such that , with ordering . Thus, the seven partitions of with the chosen ordering are , , , , , and . In Equation (45), is the number of repeated indices in the partition considered.

By working out Equation (45), one can invert the relations and express in terms of for any . Thus, one obtains
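The bookkeeping behind Equation (45) and its inversion can be sketched as follows. We assume the factorization is ordered as $e^{\varepsilon W_1}e^{\varepsilon^2 W_2}\cdots$ (leftmost factor first) and write $P_n$ for the coefficient of $\varepsilon^n$ in the Dyson series; both labels are ours. Each partition of n with multiplicities $m_k$ then contributes $\prod_k W_k^{m_k}/m_k!$, with factors taken in increasing k. Since the only partition involving $W_n$ is $(n)$ itself, the relation can be solved for $W_n$ order by order. The code checks the partition count $p(5) = 7$, compares the partition sum with a direct expansion of the truncated product, and performs a round trip on random matrices.

```python
import math
import numpy as np

def partitions(n, max_part=None):
    """Yield the partitions of n as non-increasing tuples, e.g. (3, 1, 1)."""
    max_part = n if max_part is None else max_part
    if n == 0:
        yield ()
        return
    for k in range(min(n, max_part), 0, -1):
        for rest in partitions(n - k, k):
            yield (k,) + rest

def partition_term(W, part):
    """prod_k W_k^{m_k} / m_k!, factors ordered by increasing k (m_k = multiplicity)."""
    term = np.eye(W[1].shape[0])
    for k in sorted(set(part)):
        m = part.count(k)
        term = term @ np.linalg.matrix_power(W[k], m) / math.factorial(m)
    return term

def dyson_from_wilcox(W, N):
    return {n: sum(partition_term(W, p) for p in partitions(n)) for n in range(1, N + 1)}

def wilcox_from_dyson(P, N):
    W = {}
    for n in range(1, N + 1):
        # every partition other than (n,) involves only W_1, ..., W_{n-1}
        others = (partition_term(W, p) for p in partitions(n) if p != (n,))
        W[n] = P[n] - sum(others, np.zeros_like(P[n]))
    return W

def truncated_product(W, N):
    """Coefficients of eps^0..eps^N in exp(eps W_1) exp(eps^2 W_2) ... exp(eps^N W_N)."""
    d = W[1].shape[0]
    coeffs = [np.eye(d)] + [np.zeros((d, d)) for _ in range(N)]
    for k in range(1, N + 1):
        # exp(eps^k W_k) = sum_m eps^{k m} W_k^m / m!, multiplied on the right
        factor = {k * m: np.linalg.matrix_power(W[k], m) / math.factorial(m)
                  for m in range(N // k + 1)}
        coeffs = [sum(coeffs[i] @ factor[j - i] for i in range(j + 1) if j - i in factor)
                  for j in range(N + 1)]
    return coeffs

rng = np.random.default_rng(0)
N = 5
W = {k: rng.standard_normal((3, 3)) for k in range(1, N + 1)}
P = dyson_from_wilcox(W, N)
direct = truncated_product(W, N)
W_back = wilcox_from_dyson(P, N)
print(len(list(partitions(5))))
```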

Notice that , , is expressed in terms of products of iterated integrals . Interestingly, it is possible to express these products as proper time-ordered integrals by using a procedure developed in [23]. If we denote

so that , then

etc. Taking into account Fubini’s theorem,

it is clear that , and thus

We can proceed analogously with the following products

so that

Carrying out this argument to any order, we can expand all the products of integrals appearing in . As a result, each product is replaced by the sum of all possible permutations of time ordering consistent with the time ordering in the factors of this product [24].
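The Fubini argument above is an instance of the shuffle identity for iterated integrals: the product of two iterated integrals equals the sum of the iterated integrals over all interleavings (shuffles) of their time orderings that preserve the internal order of each factor. The sketch below checks this numerically for scalar test functions (the identity only concerns the integration domains, so scalars suffice), using a cumulative trapezoidal rule on a fine grid. The functions, grid, and tolerance are illustrative choices of ours; the labels 1, 2, 3 play the role of the time indices in the products discussed here.

```python
import math
import numpy as np

def shuffles(u, v):
    """All interleavings of the tuples u and v preserving the order within each."""
    if not u:
        yield v
        return
    if not v:
        yield u
        return
    for w in shuffles(u[1:], v):
        yield (u[0],) + w
    for w in shuffles(u, v[1:]):
        yield (v[0],) + w

def cumtrapz(y, x):
    """Cumulative trapezoidal integral of y over the grid x, starting at 0."""
    out = np.zeros_like(y)
    out[1:] = np.cumsum(0.5 * (y[1:] + y[:-1]) * np.diff(x))
    return out

def iterated(word, funcs, x):
    """int_{T > t1 > ... > tk > 0} f_{w1}(t1) ... f_{wk}(tk) dt, with T = x[-1]."""
    g = np.ones_like(x)
    for label in reversed(word):
        g = cumtrapz(funcs[label](x) * g, x)
    return g[-1]

x = np.linspace(0.0, 1.0, 4001)
funcs = {1: np.cos, 2: lambda s: 1.0 + s, 3: np.exp, 4: lambda s: s**2}
u, v = (1, 2), (3, 4)
lhs = iterated(u, funcs, x) * iterated(v, funcs, x)
terms = list(shuffles(u, v))
rhs = sum(iterated(w, funcs, x) for w in terms)
print(len(terms), math.comb(4, 2), lhs, rhs)
```

For instance, `shuffles((1,), (2, 3))` returns the three orderings in which 2 precedes 3, which is the kind of constraint described below for the products appearing in the Wilcox exponents.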

At this point, it is illustrative to consider some examples in detail. Thus, the product gives the sum of all permutations of three elements such that the second index is less than the third one. As for , since there is no special ordering, all possible permutations have to be taken into account. Finally, for the product appearing in one has

Proceeding in a similar way, one can show that any product of iterated integrals can be expressed as a sum of iterated integrals. This property is, in fact, related to a much deeper characterization of the group of permutations [23]. If denotes the graded -vector space with the fundamental basis given by the disjoint union of the symmetric groups for all , then it is possible to define a product ∗ of permutations and a coproduct in , so that there is a one-to-one correspondence between iterated integrals and permutations

The product ∗ was introduced in [25], and, together with the coproduct , endows with a structure of Hopf algebra [26], the so-called Malvenuto–Reutenauer Hopf algebra of permutations [27].

In sum, the terms of the Wilcox expansion have the general structure

where the summation extends over all the permutations of . The weights are rational numbers that can be determined algorithmically for any n, although a general expression for them is not obvious, in contrast with the Magnus expansion, for which such a closed formula exists [28]. Finding such an expression can therefore be considered an open problem.

Moreover, if one is interested in obtaining a compact expression for in terms of independent nested commutators of , as done in [1] for the first terms, one can use the class of bases proposed by Dragt and Forest [24] for the Lie algebra generated by the operators . The same procedure carried out in [23] for the Magnus expansion can be applied here, so that one obtains the general formula

Here, the sum extends over the permutations of the elements and is a rational number that depends on the particular permutation. For a given permutation, say , its value coincides with the prefactor in Equation (55) of the particular term , corresponding to the permutation , such that

Thus, if we denote

we obtain for the first terms

In sum, the general structure of the Wilcox expansion terms via commutators uses the same weights as in Equation (55) and reads

where the primed sum requires the rightmost element in the permutation to remain fixed (as in Equation (56)). This element may be chosen at will and, whatever its value, the permutations are built up with the remaining elements. Different, but equivalent, expressions for in terms of commutators are obtained depending on the value fixed at the rightmost position. We stress once again that, although only the first terms have been collected here for simplicity, the whole procedure is algorithmic in nature and has been implemented in a computer algebra system that evaluates them explicitly for any n [29]. Note that involves a linear combination of iterated integrals.
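As a worked instance of the whole procedure (Dyson comparison, Fubini, commutators) at n = 2, assume the notation $W_k$ for the Wilcox exponents of $U' = \varepsilon A(t)U$ and $P_n$ for its Dyson coefficients, with $e^{\varepsilon W_1}$ as the leftmost factor (these labels are ours). Comparing the $\varepsilon$ and $\varepsilon^2$ terms of $e^{\varepsilon W_1}e^{\varepsilon^2 W_2}\cdots = I + \varepsilon P_1 + \varepsilon^2 P_2 + \cdots$ gives:

```latex
W_1 = P_1 = \int_0^t A(t_1)\,dt_1, \qquad
W_2 = P_2 - \tfrac{1}{2} W_1^2, \qquad
P_2 = \int_0^t dt_1 \int_0^{t_1} dt_2 \, A(t_1) A(t_2),

W_1^2 = \int_0^t dt_1 \int_0^{t_1} dt_2 \, \bigl( A(t_1) A(t_2) + A(t_2) A(t_1) \bigr)
\quad \text{(Fubini)},

W_2 = \frac{1}{2} \int_0^t dt_1 \int_0^{t_1} dt_2 \, \bigl[ A(t_1), A(t_2) \bigr].
```

At this order the two time orderings enter with weights $\pm\tfrac12$, and $W_2$ coincides with the second Magnus term; by the BCH formula the two expansions first differ at n = 3, where $W_3 = \Omega_3 - \tfrac12 [W_1, W_2]$, with $\Omega_3$ the third Magnus term.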

3.3. Convergence of Wilcox Expansion

Recursion (33)–(38) is also very useful for estimating the radius of convergence of the Wilcox expansion when Equation (1) is defined in a Banach algebra, i.e., an algebra that is also a complete normed linear space with a sub-multiplicative norm,

$$\|XY\| \le \|X\|\,\|Y\|.$$

If this is the case, then $\|[X,Y]\| \le 2\|X\|\,\|Y\|$ and, in general, $\|\mathrm{ad}_X^k Y\| \le 2^k \|X\|^k \|Y\|$.
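In a Banach algebra, sub-multiplicativity and the triangle inequality give $\|[X,Y]\| \le \|XY\| + \|YX\| \le 2\|X\|\,\|Y\|$, and iterating yields $\|\mathrm{ad}_X^k Y\| \le 2^k\|X\|^k\|Y\|$, the kind of estimate on which the bounds below rest. A quick numerical illustration with the spectral norm (which is sub-multiplicative) on random matrices, purely as a sanity check:

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.standard_normal((4, 4))
Y = rng.standard_normal((4, 4))
norm = lambda M: np.linalg.norm(M, 2)   # spectral norm, sub-multiplicative

submultiplicative = norm(X @ Y) <= norm(X) * norm(Y) * (1 + 1e-12)

ok = True
term = Y.copy()
for k in range(1, 7):
    term = X @ term - term @ X          # ad_X^k(Y)
    ok = ok and norm(term) <= 2**k * norm(X)**k * norm(Y) * (1 + 1e-12)
print(submultiplicative, ok)
```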

As shown in [30,31], if the series

has a certain radius of convergence for a given t, then, for , the sequence of functions

converges uniformly on any compact subset of the ball . Thus, studying the convergence of the Wilcox expansion reduces to analyzing the series and, in particular, its radius of convergence .

Let be a function such that and denote . Then, clearly

and

In general, the following bounds can be established by induction

where

It is clear that if the series converges, so does . Therefore, a sufficient condition for convergence of the Wilcox expansion is obtained by imposing

where

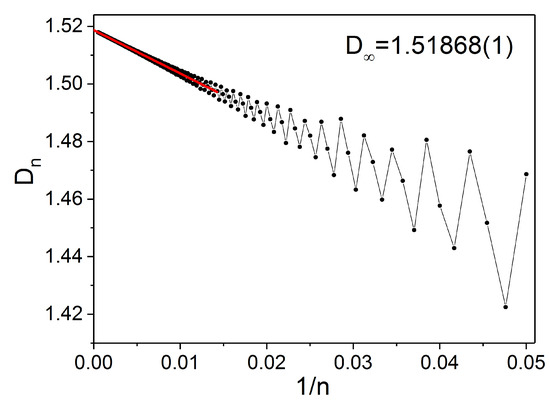

We have computed this quantity up to and then extrapolated to the limit . Then , as seen in Figure 1, and thus the convergence of the Wilcox expansion is ensured at least for values of time t such that

Figure 1.

as a function of , and linear extrapolation (red line).

This type of extrapolation has also been used to estimate the convergence radius of the Magnus expansion [32]. Although the estimate is not completely analytic, the same type of computation has provided accurate results in other settings. In particular, for the Magnus expansion, such an estimate fully agrees with a purely theoretically deduced bound [8,10,32].
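The linear extrapolation used for Figure 1 can be illustrated generically. The sketch below assumes (our choice, since the plotted variable is not reproduced here) that a quantity computed up to a finite order n is fitted as $c_0 + c_1/n$ by least squares and that the intercept $c_0$ is taken as the $n \to \infty$ estimate. It is tested on two synthetic sequences: one exactly linear in 1/n, and the ratios $c_n/c_{n+1}$ of the Taylor coefficients of $(1 - x/R)^{-3/2}$, which tend to the radius of convergence R. It is not the authors' computation.

```python
import numpy as np

def extrapolate_in_inverse_n(ns, values):
    """Least-squares fit values ~ c0 + c1/n; return c0, the estimated n -> infinity limit."""
    x = 1.0 / np.asarray(ns, dtype=float)
    slope, intercept = np.polyfit(x, np.asarray(values, dtype=float), 1)
    return intercept

ns = np.arange(20, 61)

# Synthetic check 1: a sequence exactly linear in 1/n is extrapolated exactly.
linear_limit = extrapolate_in_inverse_n(ns, 0.7 + 2.0 / ns)

# Synthetic check 2: ratios c_n / c_{n+1} of the Taylor coefficients of (1 - x/R)^(-3/2)
# equal R (n + 1) / (n + 3/2) and tend to the radius of convergence R.
R = 2.0
ratio_limit = extrapolate_in_inverse_n(ns, R * (ns + 1.0) / (ns + 1.5))
print(linear_limit, ratio_limit)
```

As with any extrapolation of this type, the estimate is only as reliable as the assumed $1/n$ behavior over the range of orders actually computed.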

4. Fer–Like Expansions

4.1. Standard Fer Expansion

In forming the Wilcox expansion, the first transformation is chosen in such a way that , whereas for . It makes sense, then, to analyze what happens if we impose this condition at each step of the procedure

for all . In that way, expression (22) for clearly simplifies to

In doing so, we recover precisely the Fer expansion; see [10,11]. Again, after n transformations, we get

so that, if we impose , we are left with another approximation to the exact solution. Notice that this approximation clearly differs from the previous Wilcox expansion for , as can be seen by analyzing the dependence on of each transformation. Whereas for the Wilcox expansion, now contains terms of order and higher. This can be easily shown by induction: is proportional to , so that , according to Equation (72) contains terms of order (coming from ) and higher. In general, and contain terms of order and higher, so the first term in the series (72) for , i.e., the commutator , produces a term of order in .
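The order counting above can be checked on the first Fer step. Assuming the standard form of the Fer recursion (see, e.g., [10]) for $U = e^{F_1}U_1$ with $F_1 = \varepsilon\int_0^t A$, the new coefficient is $A_1 = \sum_{k\ge 1}(-1)^k \frac{k}{(k+1)!}\,\mathrm{ad}_{F_1}^k(\varepsilon A(t))$, whose leading term $-\tfrac12[F_1, \varepsilon A]$ is $O(\varepsilon^2)$. The sketch evaluates this series for a hypothetical $A(t) = a + t\,b$ at $t = 1$ and confirms that halving $\varepsilon$ divides $\|A_1\|$ by about 4, and that $A_1$ vanishes when the data commute.

```python
import math
import numpy as np

def fer_series(X, C, kmax=25):
    """sum_{k>=1} (-1)^k k/(k+1)! ad_X^k(C), the Fer transformed coefficient."""
    total = np.zeros_like(C)
    term = C.copy()
    for k in range(1, kmax + 1):
        term = X @ term - term @ X            # ad_X^k(C)
        total = total + (-1)**k * k / math.factorial(k + 1) * term
    return total

# Hypothetical coefficient A(t) = a + t b and its integral, evaluated at t = 1.
a = np.array([[0.0, 1.0], [-1.0, 0.0]])
b = np.array([[1.0, 0.0], [0.0, -1.0]])
t = 1.0
A_t = a + t * b
intA = t * a + 0.5 * t**2 * b

def fer_A1(eps):
    return fer_series(eps * intA, eps * A_t)

ratio = np.linalg.norm(fer_A1(1e-3)) / np.linalg.norm(fer_A1(5e-4))

# Commuting data (here diagonal): F1 commutes with A and the new coefficient vanishes.
d = np.diag([1.0, 2.0])
commuting = np.linalg.norm(fer_series(0.3 * d, d))
print(ratio, commuting)
```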

Alternatively, expressing Equation (72) as

and taking norms, one can show that the Fer expansion converges for values of t such that [10]

$$\int_0^t \|A(s)\|\,ds < 0.8604065.$$

4.2. Intermediate Fer-Like Expansions

Notice that the $\varepsilon$-power series of in the Fer expansion contains infinitely many terms starting with , but the corresponding truncated factorization obtained from Equation (73) by taking is correct only up to terms of order . One might then consider yet another sequence of transformations so that each contains only the relevant terms leading to a correct approximation up to this order. Of course, both factorizations would be different, but nevertheless they would produce the correct power series up to order . The corresponding factorization can properly be called a modified Fer expansion.

Our starting point is, once again, Equation (22). Clearly, the first transformation is the same as in Fer (and Wilcox), i.e.,

and thus

where the rightmost term indicates the lowest-order contribution in the sum.

Next, to reproduce the same dependence on $\varepsilon$ as the Fer expansion, we need to enforce that , and the question is how to choose the second generator so as to guarantee this feature. An analysis of Equation (22) with reveals that this is achieved by taking as the sum of terms in in Equation (77) contributing to and , i.e.,

since the next term appearing in the expression of involves the computation of . Thus

Likewise, is to be designed so that

and this is guaranteed by taking as the sum of all the terms in contributing to powers from up to . From Equation (79) it is clear that

where only the relevant terms in the expansion in have to be taken into account. In this way, we can take

with

Notice that, since the second term in in Equation (78) is , the expression (82) does contain some contributions in and that could in principle be removed. We prefer, however, to keep them in order to have a more compact expression.

For this modified Fer expansion, is generally chosen so that is precisely the sum of all terms of containing powers from up to , with the series of then appropriately truncated.

Other possibilities for choosing at the successive stages clearly exist and, depending on the particular choice, different intermediate Fer-like expansions result. In practice, some of these combinations of commutators may be easier to compute for a specific problem.

5. Applications

5.1. Wilcox Expansion as the Continuous Analogue of Zassenhaus Formula

The Zassenhaus formula may be considered as the dual of the Baker–Campbell–Hausdorff (BCH) formula [33] in the sense that it relates the exponential of the sum of two non-commuting operators X and Y with an infinite product of exponentials of these operators and their nested commutators. More specifically,

where is a homogeneous Lie polynomial in X and Y of degree k [1,7,21,34,35]. A very efficient procedure to generate all the terms in Equation (84) is presented in [21]; it allows one to construct up to a prescribed value of n directly, in terms of the minimum number of independent commutators involving n operators X and Y.
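For reference, in the convention $e^{X+Y} = e^X e^Y e^{C_2} e^{C_3}\cdots$ the first Zassenhaus exponents are the standard $C_2 = -\tfrac12[X,Y]$ and $C_3 = \tfrac13[Y,[X,Y]] + \tfrac16[X,[X,Y]]$. The sketch below is a numerical check of these two terms, not the procedure of [21]: it scales X and Y by a small t and verifies that each additional factor removes roughly one more power of t from the error. The matrices (a shift operator and its transpose) are an arbitrary illustrative choice.

```python
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

def comm(P, Q):
    return P @ Q - Q @ P

# Illustrative non-commuting matrices: a nilpotent shift and its transpose, scaled by t.
X0 = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]])
Y0 = X0.T
t = 0.1
X, Y = t * X0, t * Y0
exact = expm(X + Y)

C2 = -0.5 * comm(X, Y)
C3 = comm(Y, comm(X, Y)) / 3.0 + comm(X, comm(X, Y)) / 6.0
approx1 = expm(X) @ expm(Y)
approx2 = approx1 @ expm(C2)
approx3 = approx2 @ expm(C3)
errors = [np.linalg.norm(exact - M) for M in (approx1, approx2, approx3)]
print(errors)
```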

In view of the formal similarity between Equations (39) and (84), the Wilcox expansion has also been described as the “continuous analogue of the Zassenhaus formula” [10], just as the Magnus expansion is sometimes called the continuous version of the BCH formula. To substantiate this claim, we next reproduce the Zassenhaus formula (84) by applying the procedure of Section 3 to a particular initial value problem, namely, the abstract equation

where X and Y are two non-commuting constant operators.
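To see why the coefficient matrix becomes an infinite series once the flow of X is factored out, assume for illustration that the equation reads $U' = (X+Y)U$, $U(0) = I$. Writing $U = e^{tX}V$ gives $V' = (e^{-tX}Ye^{tX})V$, and Hadamard's lemma expands the new coefficient as $e^{-tX}Ye^{tX} = \sum_{k\ge 0}\frac{(-t)^k}{k!}\,\mathrm{ad}_X^k(Y)$. The sketch checks the truncated series against direct matrix exponentials; the matrices and truncation order are arbitrary choices of ours.

```python
import math
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

def conjugated_series(X, Y, t, kmax=30):
    """sum_{k=0}^{kmax} (-t)^k / k! ad_X^k(Y), which should equal e^{-tX} Y e^{tX}."""
    total, term = np.zeros_like(Y), Y.copy()
    for k in range(kmax + 1):
        total = total + (-t)**k / math.factorial(k) * term
        term = X @ term - term @ X
    return total

X = np.array([[0.0, 1.0], [-1.0, 0.5]])
Y = np.array([[1.0, 0.0], [0.3, -1.0]])
t = 0.8
direct = expm(-t * X) @ Y @ expm(t * X)
print(np.max(np.abs(direct - conjugated_series(X, Y, t))))
```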

The formal solution is, of course, , but we can also solve Equation (85) by first integrating and factorizing as , where obeys the equation

and finally applying to Equation (86) the sequence of transformations leading to the Wilcox expansion. Notice, however, that now the coefficient matrix is an infinite series in

so that, when applying the recursion (33)–(38), is no longer , but the term in which is proportional to . In other words,

After some computation, one arrives at

Since , then clearly for all and

By imposing , we get

5.2. Expanding the Exponential

Bellman, in his classic book [36], states that “one of the great challenges of modern physics is that of obtaining useful approximate relations for in the case where ”. One such approximation was proposed as an exercise, without a solution, in ([12], p. 175). Assuming that can be written in the form

Bellman proposed to determine the first three terms , , , and pointed out that, contrary to other expansions, the product expansion (95) is unitary if A and B are skew-Hermitian.

It turns out that the Wilcox expansion can be used to provide explicit expressions for for any two indeterminates A and B, as we will see in the sequel.

Before proceeding, it is important to remark that this problem differs from the Zassenhaus formula in that the expansion parameter affects only one of the operators in the exponential. The solution goes as follows. We write

and solve the differential equation satisfied by V

with the Wilcox expansion, so that

The operators in Equation (95) are then obtained by taking .
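A numerical sketch of the first step, under assumptions of ours: we write $e^{t(A+\varepsilon B)} = e^{tA}V(t)$, so that $V' = \varepsilon B(t)V$ with $B(t) = e^{-tA}Be^{tA}$, and at $t = 1$ the first Wilcox exponent is $W_1 = \int_0^1 e^{-sA}Be^{sA}\,ds$, computed here by Gauss–Legendre quadrature. Then $e^{A}e^{\varepsilon W_1}$ should reproduce $e^{A+\varepsilon B}$ up to $O(\varepsilon^2)$, so halving $\varepsilon$ should divide the error by about 4. The matrices A and B are arbitrary non-commuting examples.

```python
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

A = np.array([[1.0, 0.0], [0.0, -1.0]])
B = np.array([[0.0, 1.0], [1.0, 0.0]])

# Gauss-Legendre nodes and weights mapped from [-1, 1] to [0, 1].
nodes, weights = np.polynomial.legendre.leggauss(20)
s_nodes, s_weights = 0.5 * (nodes + 1.0), 0.5 * weights
W1 = sum(w * expm(-s * A) @ B @ expm(s * A) for s, w in zip(s_nodes, s_weights))

def bellman_error(eps):
    """Distance between exp(A + eps B) and the first-order product exp(A) exp(eps W1)."""
    return np.linalg.norm(expm(A + eps * B) - expm(A) @ expm(eps * W1))

ratio = bellman_error(1e-2) / bellman_error(5e-3)
print(ratio)
```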

5.3. Illustrative Examples

We next particularize the Bellman problem (95) to matrices for which closed expressions for can be obtained. The idea is to illustrate the behaviour of the product expansion by explicitly computing high-order terms with matrices in the $\mathfrak{su}(2)$ and $\mathfrak{so}(3)$ Lie algebras.

5.3.1. Matrices X and Y in $\mathfrak{su}(2)$

In the first example, we chose and , where , a is a real parameter and

are the Pauli matrices. This instance is borrowed from Quantum Mechanics, where such a matrix exponential transforms the spin-1/2 wave function in a Hilbert space.

Using the scalar product notation for a linear combination of Pauli matrices, the matrix exponential reads
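The closed form used for this kind of exponential is the standard identity $\exp(i\,\mathbf{a}\cdot\boldsymbol{\sigma}) = \cos|\mathbf{a}|\,I + i\sin|\mathbf{a}|\,(\mathbf{a}\cdot\boldsymbol{\sigma})/|\mathbf{a}|$, which follows from $(\mathbf{a}\cdot\boldsymbol{\sigma})^2 = |\mathbf{a}|^2 I$. The sketch verifies it against a series evaluation for an arbitrary real vector $\mathbf{a}$ of our choosing; the sign convention in the exponent used in the paper may differ.

```python
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

sigma = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]

def dot_sigma(vec):
    """Scalar-product notation: vec[0] sigma_1 + vec[1] sigma_2 + vec[2] sigma_3."""
    return sum(v * s for v, s in zip(vec, sigma))

a = np.array([0.3, -1.1, 0.7])
theta = np.linalg.norm(a)
closed = np.cos(theta) * np.eye(2) + 1j * np.sin(theta) * dot_sigma(a) / theta
series = expm(1j * dot_sigma(a))
print(np.max(np.abs(closed - series)))
```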

In the sequel, we work out the expansion

up to order eleven in and analyze the increasing accuracy of the product expansion as more terms are included. The lhs in Equation (103) may be thought of as a transformation involving and . In turn, the rhs is a pure transformation, i.e., , followed by an infinite succession of transformations, , whose effect should decrease with k. The truncated product expansion is expected to be accurate as far as .

In Table 1 we write down the first five contributions for a generic t (expressions for are too involved to be collected here). The Wilcox–Bellman formula (103) then corresponds to . All the terms have been obtained with the recurrences of Section 3, starting from

where and .

Table 1.

First five orders in Bellman problem for generic t. The operators in Equation (95) are obtained by taking , i.e., . We have defined and .

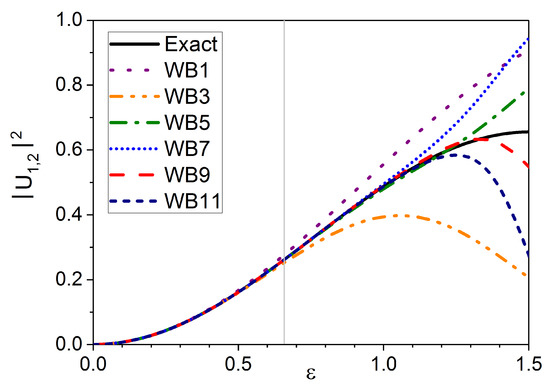

The formulas in Table 1 show that may be considered as an effective expansion parameter. In Figure 2, we illustrate, for , the accuracy of the Wilcox–Bellman product expansion in the example at hand as a function of . We plot the squared modulus of the non-diagonal matrix element, say , of Equation (103) for every analytic approximation up to order eleven in , as well as the exact result. Even orders do not contribute in this test, because is always proportional to , and therefore is a diagonal matrix.

Figure 2.

Accuracy of Wilcox–Bellman product expansion up to order eleven as a function of the ratio , with . The quantity plotted is the squared modulus of the non-diagonal element of the matrix. The vertical grey line stands for the convergence lower bound .

As regards convergence of the product expansion, the lower bound of Equation (70) leads to

In turn, the behaviour of the curves in Figure 2 points out that convergence of the product expansion extends well beyond that lower bound for this particular example.

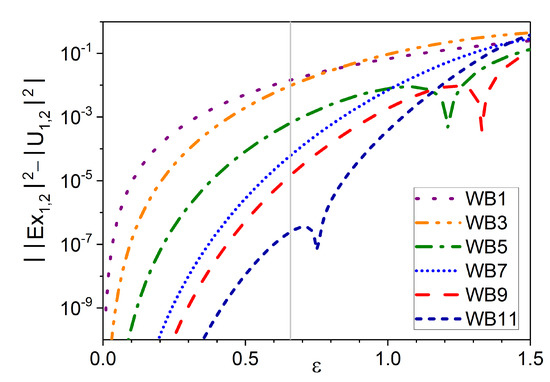

Finally, Figure 3 shows the logarithm of the absolute error in the approximations given by the curves in Figure 2.

Figure 3.

Absolute error of the approximations given by curves in Figure 2 with . The vertical grey line is located at the value of the convergence lower bound .

5.3.2. Matrices X and Y in $\mathfrak{so}(3)$

The second example refers to the matrix that describes a rotation in three dimensions defined by the vector . Here, stands for the rotation angle around the axis given by the unit vector . A generic 3D rotation matrix can be written as , where the components of are the three fundamental rotation matrices

We study the particular case , and compare the rotation of angle around the unit vector

with the sequence of transformations

In other words, the question we address is how the pure z-axis rotation in Equation (108), , has to be corrected by an infinite composition of further rotations to reproduce the one defined by . When is small enough, the approach is expected to converge, since the expansion convergence lower bound reads, in this case, .

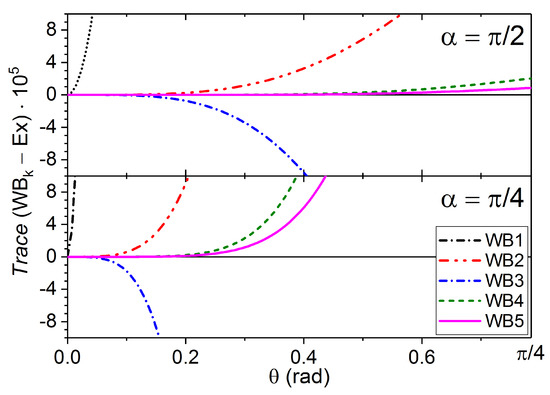

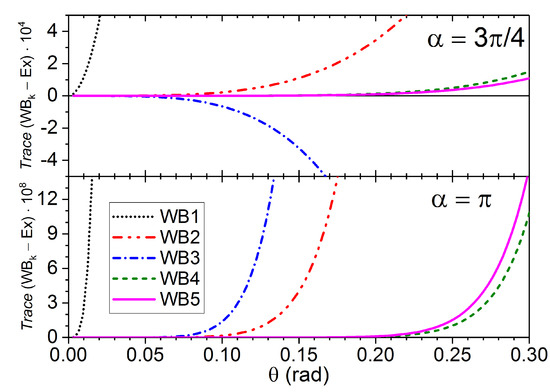

Here, the accuracy of the product expansion will depend on both the rotation angle and the relative orientation of the rotation axis, determined by the angle . This is illustrated in Figure 4 and Figure 5 for the first five orders of approximation, with and .

Figure 4.

Error in the approximations to the matrix trace as a function of the rotation angle , for two values and .

Figure 5.

Error in the approximations to the matrix trace as a function of the rotation angle , for two values and .

In order to test the expansion, we have computed the matrix trace of the successive approximants and compared them with the exact result

The first two approximants are simple enough to be written down

Interestingly, the third approximation order is worse than the second one in all four cases. In the case of , the fourth order is better than the fifth one.
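The exact trace used as the reference in this test follows from Rodrigues' formula: for a unit vector $\mathbf{n}$ and $K = \mathbf{n}\cdot\mathbf{L}$, one has $\exp(\theta K) = I + \sin\theta\,K + (1-\cos\theta)K^2$, so that $\mathrm{tr}\,\exp(\theta\,\mathbf{n}\cdot\mathbf{L}) = 1 + 2\cos\theta$ independently of the axis. The sketch checks this with one common choice of generators, $(L_i)_{jk} = -\epsilon_{ijk}$, which is an assumption on our part; the sign convention does not affect the trace.

```python
import numpy as np

def expm(M, terms=20):
    """Matrix exponential: scaling and squaring with a truncated Taylor series."""
    M = np.asarray(M)
    dtype = np.result_type(M.dtype, float)
    nrm = np.linalg.norm(M, 1)
    s = max(0, int(np.ceil(np.log2(nrm))) + 1) if nrm > 0 else 0
    X = M.astype(dtype) / 2.0**s
    E = term = np.eye(M.shape[0], dtype=dtype)
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

# Generators of so(3) with (L_i)_{jk} = -epsilon_{ijk}.
L = np.zeros((3, 3, 3))
for i, j, k in [(0, 1, 2), (1, 2, 0), (2, 0, 1)]:
    L[i, j, k], L[i, k, j] = -1.0, 1.0

theta = 1.3
n = np.array([0.2, -0.5, 0.8])
n = n / np.linalg.norm(n)
K = np.einsum('i,ijk->jk', n, L)      # K v equals the cross product n x v
R = expm(theta * K)
rodrigues = np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * K @ K
print(np.trace(R), 1 + 2 * np.cos(theta))
```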

6. Conclusions

When a linear system of differential equations, defined by the coefficient matrix , is transformed under , the coefficient matrix in the new representation becomes an infinite power series in , say . That is the first step of all matrix exponential methods to approximate the time-evolution operator. In the framework that we have introduced, it is the first move in a sequence of exponential transformations that change the linear system from one representation to another, with the goal of making the dynamics less and less relevant. Choosing the transformation as the second move and iterating this procedure afterwards yields the Fer expansion. Instead, choosing the transformation , i.e., the leading term of the new coefficient matrix, leads to the Wilcox expansion. The framework also allows for intermediate expansions, taking as an initiator, as well as switching between schemes, in accordance with the particular requirements of the problem at hand.

We have seen that the theory of linear transformations (or changes of picture in the language of Quantum Mechanics) provides a unified framework to deal with many different exponential perturbative expansions. Whereas a single linear transformation reducing the dynamics to the trivial Equation (4) or to a system with a constant matrix renders the Magnus [9] and the Floquet–Magnus [37] expansions, respectively, a sequence of such transformations with different choices of the new matrices leads to the Wilcox and Fer factorizations. From this perspective, other factorizations are possible depending on the particular problem at hand: one only has to select the successive transformations appropriately.

In the case of the Wilcox expansion, we have provided an efficient recursive procedure to compute its exponents. In addition, we have developed a method to build up an explicit expression for any of them in terms of commutators. This is possible by using tools similar to those used for the Magnus expansion, namely by relating products of iterated integrals to the structure of the Hopf algebra of permutations, and by using special bases of nested commutators. A sufficient condition for the convergence of the expansion has also been obtained.

We have presented some applications of the results about the Wilcox expansion. Firstly, we have shown how to obtain the Zassenhaus formula from the Wilcox expansion, which may therefore be interpreted as its continuous analogue. Secondly, we have pointed out that the Wilcox expansion solves the problem of expanding the exponential $e^{A+\varepsilon B}$ when A and B are non-commuting operators. We refer to this as the Wilcox–Bellman expansion. Two practical cases have been analyzed in this respect up to high order. Interestingly, in one of them the convergence does not seem to be uniform. For convenience, the interested reader can find in [29] Mathematica code generating general explicit expressions and recurrences for the Wilcox expansion.

While a full assessment of the Wilcox expansion in comparison with the Fer expansion is not the main purpose of this work, we can still mention some of their most distinctive features. Both types of expansion construct the solution of Equation (1) as an infinite exponential factorization, but in Wilcox, the exponent of each factor is proportional to successive powers of the expansion parameter , whereas, in Fer, each exponent contains an infinite sum of powers of . This means that, when truncated after a given number of transformations, say n, the Wilcox expansion differs from the exact solution in the power . In other words, each term in the Wilcox expansion collects the effect of the perturbation at order k. On the other hand, the Fer expansion, when truncated after n transformations, provides a much more accurate approximation. This is true, of course, only if the infinite sums involved in each transformation are computed exactly, an almost impossible task unless the time dependence of is simple enough. By contrast, we have explicit expressions for each exponent in the Wilcox expansion for a generic and, by using the same techniques as in the Magnus expansion, we can construct appropriate approximations of the iterated integrals if necessary. As the examples collected here and in some other studies show [20], the Wilcox expansion can provide accurate results after only a few such transformations.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

The first three authors have been funded by Ministerio de Ciencia e Innovación (Spain) through projects MTM2016-77660-P and PID2019-104927GB-C21 (AEI/FEDER, UE), and by Universitat Jaume I (grants UJI-B2019-17 and GACUJI/2020/05). The work of J.A.O. has been partially supported by the Spanish MINECO (grant numbers AYA2016-81065-C2-2 and PID2019-109592GB-100).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All the data that support the findings of this study are available from the corresponding author upon request. Codes generating explicit expressions and recurrences for the Wilcox expansion are openly available in http://www.gicas.uji.es/Research/Wilcox.html (accessed on 16 March 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wilcox, R. Exponential operators and parameter differentiation in quantum physics. J. Math. Phys. 1967, 8, 962–982.

- Oteo, J.; Ros, J. From time-ordered products to Magnus expansion. J. Math. Phys. 2000, 41, 3268–3277.

- Hale, J. Ordinary Differential Equations; Krieger Publishing Company: Malabar, FL, USA, 1980.

- Maricq, M.M. Application of average Hamiltonian theory to the NMR of solids. Phys. Rev. B 1982, 25, 6622–6632.

- Casas, F.; Oteo, J.A.; Ros, J. Floquet theory: Exponential perturbative treatment. J. Phys. A Math. Gen. 2001, 34, 3379–3388.

- Mananga, E.; Charpentier, T. On the Floquet–Magnus expansion: Applications in solid-state nuclear magnetic resonance and physics. Phys. Rep. 2016, 609, 1–50.

- Magnus, W. On the Exponential Solution of Differential Equations for a Linear Operator. Comm. Pure Appl. Math. 1954, 7, 649–673.

- Blanes, S.; Casas, F.; Oteo, J.; Ros, J. The Magnus expansion and some of its applications. Phys. Rep. 2009, 470, 151–238.

- Casas, F.; Chartier, P.; Murua, A. Continuous changes of variables and the Magnus expansion. J. Phys. Commun. 2019, 3, 095014.

- Blanes, S.; Casas, F.; Oteo, J.; Ros, J. Magnus and Fer expansions for matrix differential equations: The convergence problem. J. Phys. A Math. Gen. 1998, 31, 259–268.

- Fer, F. Résolution de l’équation matricielle U̇ = pU par produit infini d’exponentielles matricielles. Bull. Classe Sci. Acad. R. Bel. 1958, 44, 818–829.

- Bellman, R. Introduction to Matrix Analysis, 2nd ed.; McGraw-Hill: New York, NY, USA, 1970.

- Iserles, A. Solving Linear Ordinary Differential Equations by Exponentials of Iterated Commutators. Numer. Math. 1984, 45, 183–199.

- Klarsfeld, S.; Oteo, J. Exponential infinite-product representations of the time displacement operator. J. Phys. A Math. Gen. 1989, 22, 2687–2694.

- Mananga, E. On the Fer expansion: Applications in solid-state nuclear magnetic resonance and physics. Phys. Rep. 2016, 608, 1–41.

- Casas, F. Fer’s Factorization as a Symplectic Integrator. Numer. Math. 1996, 74, 283–303.

- Zanna, A. Collocation and Relaxed Collocation for the Fer and the Magnus Expansions. SIAM J. Numer. Anal. 1999, 36, 1145–1182.

- Iserles, A.; Munthe-Kaas, H.; Nørsett, S.; Zanna, A. Lie-Group Methods. Acta Numer. 2000, 9, 215–365.

- Huillet, T.; Monin, A.; Salut, G. Lie algebraic canonical representation in nonlinear control systems. Math. Syst. Theory 1987, 20, 193–213.

- Zagury, N.; Aragão, A.; Casanova, J.; Solano, E. Unitary expansion of the time evolution operator. Phys. Rev. A 2010, 82, 042110.

- Casas, F.; Murua, A.; Nadinic, M. Efficient computation of the Zassenhaus formula. Comput. Phys. Commun. 2012, 183, 2386–2391.

- Galindo, A.; Pascual, P. Quantum Mechanics; Springer: Berlin/Heidelberg, Germany, 1990.

- Arnal, A.; Casas, F.; Chiralt, C. A general formula for the Magnus expansion in terms of iterated integrals of right-nested commutators. J. Phys. Commun. 2018, 2, 035024.

- Dragt, A.; Forest, E. Computation of nonlinear behavior of Hamiltonian systems using Lie algebraic methods. J. Math. Phys. 1983, 24, 2734–2744.

- Agrachev, A.; Gamkrelidze, R. The shuffle product and symmetric groups. In Differential Equations, Dynamical Systems, and Control Science; Elworthy, K., Everitt, W., Lee, E., Eds.; Marcel Dekker: New York, NY, USA, 1994; pp. 365–382.

- Hazewinkel, M.; Gubareni, N.; Kirichenko, V. Algebras, Rings and Modules. Lie Algebras and Hopf Algebras; AMS: Providence, RI, USA, 2010.

- Malvenuto, C.; Reutenauer, C. Duality between quasi-symmetric functions and the Solomon descent algebra. J. Algebra 1995, 177, 967–982.

- Strichartz, R.S. The Campbell–Baker–Hausdorff–Dynkin Formula and Solutions of Differential Equations. J. Funct. Anal. 1987, 72, 320–345.

- Geometric Integration Research Group. 2021. Available online: http://www.gicas.uji.es/Research/Wilcox.html (accessed on 18 February 2021).

- Bayen, F. On the convergence of the Zassenhaus formula. Lett. Math. Phys. 1979, 3, 161–167.

- Arnal, A.; Casas, F.; Chiralt, C. On the structure and convergence of the symmetric Zassenhaus formula. Comput. Phys. Commun. 2017, 217, 58–65.

- Moan, P.; Oteo, J. Convergence of the exponential Lie series. J. Math. Phys. 2001, 42, 501–508.

- Casas, F.; Murua, A. An efficient algorithm for computing the Baker–Campbell–Hausdorff series and some of its applications. J. Math. Phys. 2009, 50, 033513.

- Suzuki, M. On the convergence of exponential operators—The Zassenhaus formula, BCH formula and systematic approximants. Commun. Math. Phys. 1977, 57, 193–200.

- Weyrauch, M.; Scholz, D. Computing the Baker–Campbell–Hausdorff series and the Zassenhaus product. Comput. Phys. Commun. 2009, 180, 1558–1565.

- Bellman, R. Perturbation Techniques in Mathematics, Engineering & Physics; Dover Publications: Mineola, NY, USA, 1972.

- Arnal, A.; Casas, F.; Chiralt, C. Exponential perturbative expansions and coordinate transformations. Math. Comput. Appl. 2020, 25, 50.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).