Abstract

Over the last decade, regularized regression methods have offered alternatives for performing multi-marker analysis and feature selection in a whole genome context. The process of defining a list of genes that will characterize an expression profile remains unclear. It currently relies upon advanced statistics and can use an agnostic point of view or include some a priori knowledge, but overfitting remains a problem. This paper introduces a methodology to deal with the variable selection and model estimation problems in the high-dimensional set-up, which can be particularly useful in the whole genome context. Results are validated using simulated data and a real dataset from a triple-negative breast cancer study.

1. Introduction

Breast cancer (BC) is the most frequent cancer among women, representing around 25% of all new cancer diagnoses in women [1]. One in eight women in developed countries will be diagnosed with BC over the course of a lifetime.

The prognosis of this disease has progressively improved over the past three decades, due to the implementation of population-based screening campaigns and, above all, the introduction of new effective targeted medical therapies, e.g., aromatase inhibitors (effective in hormone receptor-positive tumors) and trastuzumab (effective in HER2-positive tumors). Breast cancer is, however, a heterogeneous disease. The worst outcomes are associated with the so-called triple-negative breast cancer subtype (TNBC), diagnosed in 15–20% of BC patients. TNBC is defined by a lack of immunohistochemical expression of the estrogen and progesterone receptors and a lack of expression/amplification of HER2 [2]. The absence of expression of these receptors makes chemotherapy the only available systemic therapy for TNBC.

TNBC is usually diagnosed at an operable (early) stage. Surgery, chemotherapy and radiation therapy are the critical components of the treatment of early TNBC. Many early TNBC patients are treated with upfront chemotherapy (neoadjuvant chemotherapy, NACT) and then operated on and, if needed, irradiated. The rationale for this sequence is the ability to predict the long-term outcome of patients by looking at the pathological response achieved with initial NACT [3].

With the currently available neoadjuvant chemotherapy regimens, nearly 50% of TNBC patients achieve a good pathological response to this therapy, whereas the remaining patients have an insufficient response. TNBC patients achieving a complete or almost complete disappearance of the tumor in the breast and axilla after NACT have an excellent outcome (less than 10% of relapses at five years), in contrast with those with significant residual disease (more than 50% of relapses at five years) [4,5].

The identification of these two different populations is therefore of utmost relevance, in order to test new experimental therapies in the population unlikely to achieve a good pathological response.

Several tumor multigene predictors of pathological response of operable BC to NACT have been proposed over the past few years, taking advantage of the recent decrease in the cost of obtaining an individual’s full transcriptome [6,7,8]. Most of them have been tested in unselected populations of BC patients and have shown insufficient positive predictive value and sensitivity.

The process of defining a list of genes that will define a characteristic expression profile is still ambiguous. This process relies upon advanced statistics and can use an agnostic point of view or include some a priori knowledge, but overfitting remains a problem. RNA-Seq has become one of the most appealing tools of modern whole transcriptome analyses because it combines a relatively low cost with a comprehensive approach to transcript quantification. Some approaches to complex disease biomarker discovery have already pointed to the need for a whole genome perspective, using joint information to predict complex traits instead of selecting individual features a priori [9,10]. This strategy would lead to high predictive accuracy without the need to know the precise biological associations in the genome background, because of the high correlation among the biomarkers [11]. This approach is challenging from the statistical point of view because of the large number of biomarkers that must be tested along the genome relative to the rather small sample sizes of clinical studies. On the other hand, daily clinical practice requires cheaper and faster quantification platforms than whole-genome RNA-Seq analysis. Thus, the number of biomarkers must be reduced in order to define a practical gene expression signature for the clinical community.

Regularized regression methods provide alternatives for performing multi-marker analysis and feature selection in a whole genome context [12]. Specifically, we focus on the sparse-group lasso (SGL) regularization method [13], which generalizes lasso [14], group lasso [15] and elastic-net [16], merging lasso and group lasso penalties. The solution provided by SGL usually involves a small number of predictor variables, given that many coefficients in the solution are exactly zero. It has an advantage over lasso when the predictor variables are grouped, as many groups are entirely zeroed out, but unlike group lasso, the solution is also sparse within those groups that are not completely eliminated from the model. However, as explained in the next sections, SGL on its own is not appropriate for the problem we are dealing with unless it is embedded in a broader methodology that controls the regularization hyper-parameters, the groups, and the high dimensionality. From a methodological point of view, this paper provides an original contribution for performing variable selection and model fitting in high-dimensional problems, allowing a priori selection of the final number of variables and addressing the problem of overfitting with the introduction of the importance index. Furthermore, the results presented in this paper are the first attempt in a translational oncology scenario at building a predictive model for the response to treatment based entirely on whole genome RNA-Seq data and conventional clinical variables.

This paper is organized as follows. Section 2 ties together the various theoretical concepts that support our approach. Section 2.1 introduces the mathematical formulation of the SGL as an optimization problem. Section 2.2 discusses the iterative sparse-group lasso, a coordinate descent algorithm used to automatically select the regularization parameters of the SGL. Section 2.3 describes a clustering strategy for the variables, based on principal component analysis, which makes it possible to work with an arbitrarily large number of variables without specifying the groups a priori. Section 2.4 presents a cross-validation approach to fit and evaluate many models on the same data. Section 2.5 highlights our main methodological contributions: the importance and the power indexes, used to weight variables and models, respectively. In Section 3, a simulation study is presented with several synthetic matrix designs, varying the number of variables from 40 to 4000. Section 4 illustrates our methodology on a TNBC cohort that had undergone neoadjuvant docetaxel/carboplatin chemotherapy. Some conclusions and outlines for future work are drawn in the final section.

2. Methodology and Algorithms

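Consider the usual logistic regression framework, with N observations of the form $(\mathbf{x}_i, y_i)$, $i = 1, \dots, N$, where $\mathbf{x}_i \in \mathbb{R}^p$, p is the number of features or predictor variables, and $y_i \in \{0, 1\}$ is the binary response. We assume that the response comes from a random variable $Y$ with conditional distribution

$$Y \mid \mathbf{X} = \mathbf{x} \sim \mathrm{Bernoulli}\big(\pi(\mathbf{x})\big),$$

where

$$\pi(\mathbf{x}) = P(Y = 1 \mid \mathbf{X} = \mathbf{x}) = \frac{1}{1 + e^{-\eta(\mathbf{x})}},$$

and $\eta$ is the linear predictor,

$$\eta(\mathbf{x}) = \mathbf{x}^\top \boldsymbol{\beta}.$$

The objective is to predict the response Y for future observations of $\mathbf{X}$, using an estimate of the unknown parameter $\boldsymbol{\beta} \in \mathbb{R}^p$, given by

$$\hat{\boldsymbol{\beta}} = \arg\min_{\boldsymbol{\beta} \in \mathbb{R}^p} \ell(\boldsymbol{\beta}), \tag{1}$$

where $\ell$ is the average negative log-likelihood,

$$\ell(\boldsymbol{\beta}) = -\frac{1}{N} \sum_{i=1}^{N} \Big[ y_i\, \mathbf{x}_i^\top \boldsymbol{\beta} - \log\big(1 + e^{\mathbf{x}_i^\top \boldsymbol{\beta}}\big) \Big]. \tag{2}$$

The problem with this approach is that for $p > N$, the minimization (1) does not have a unique solution (and, when the two classes are linearly separable, the infimum of (2) is not even attained). When the features represent gene expression levels, this problem of predicting Y becomes more extreme, since N is often several orders of magnitude smaller than p.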

As a solution, variable selection techniques are proposed, in order to tackle the analytical intractability of this problem.
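To make the estimation problem concrete, the following sketch evaluates the average logistic negative log-likelihood that the unpenalized estimator minimizes. It assumes a model without intercept and plain Python lists for the data; the function names `log1p_exp` and `logistic_nll` are illustrative and not part of the authors' software. The usage lines build a tiny p = N example whose classes are separable.

```python
import math
from typing import Sequence


def log1p_exp(t: float) -> float:
    """Numerically stable evaluation of log(1 + exp(t))."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def logistic_nll(beta: Sequence[float],
                 X: Sequence[Sequence[float]],
                 y: Sequence[int]) -> float:
    """Average negative log-likelihood of a logistic model (no intercept).

    Each observation contributes -[y_i * eta_i - log(1 + exp(eta_i))],
    with eta_i = x_i^T beta and y_i in {0, 1}.
    """
    total = 0.0
    for x_i, y_i in zip(X, y):
        eta = sum(b * x for b, x in zip(beta, x_i))
        # The contribution equals log(1 + e^-eta) if y = 1 and log(1 + e^eta) if y = 0.
        total += log1p_exp(-eta) if y_i == 1 else log1p_exp(eta)
    return total / len(y)


# Two observations, two features: the classes are separable, so scaling
# beta along (1, -1) keeps lowering the loss without ever reaching zero.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1, 0]
for c in (1.0, 10.0, 100.0):
    print(c, logistic_nll([c, -c], X, y))
```

Because the loss keeps decreasing as the coefficients grow, no finite minimizer exists in this example, which is the kind of ill-posedness that motivates the penalized formulation of the next section.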

2.1. The Sparse-Group Lasso

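It has been shown that SGL can play an important role in addressing the issue of variable selection in genetic models, where genes are grouped following different pathways. The mathematical formulation of this problem is

$$\hat{\boldsymbol{\beta}}(\boldsymbol{\lambda}) = \arg\min_{\boldsymbol{\beta} \in \mathbb{R}^p} \Big\{ \ell(\boldsymbol{\beta}) + \lambda_1 \sum_{j=1}^{J} \sqrt{p_j}\, \big\|\boldsymbol{\beta}^{(j)}\big\|_2 + \lambda_2 \|\boldsymbol{\beta}\|_1 \Big\}. \tag{3}$$

Here J is the number of groups, $\boldsymbol{\beta}^{(j)}$ are vectors with the components of $\boldsymbol{\beta}$ corresponding to the j-th group (of size $p_j$), and $\lambda_1 \geq 0$, $\lambda_2 \geq 0$. The regularization parameter is $\boldsymbol{\lambda} = (\lambda_1, \lambda_2)$.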
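The SGL penalty and its proximal operator, the building block of most first-order SGL solvers, can be sketched as follows. This assumes the two-parameter penalty $\lambda_1 \sum_j \sqrt{p_j}\|\boldsymbol{\beta}^{(j)}\|_2 + \lambda_2\|\boldsymbol{\beta}\|_1$ with groups given as lists of column indices; the proximal step (coordinate-wise soft-thresholding followed by group-wise shrinkage) is the standard one for SGL, not necessarily the exact solver used in [17]. The function names are illustrative.

```python
import math
from typing import List, Sequence


def sgl_penalty(beta: Sequence[float], groups: Sequence[Sequence[int]],
                lam1: float, lam2: float) -> float:
    """lam1 * sum_j sqrt(p_j) * ||beta^(j)||_2 + lam2 * ||beta||_1."""
    group_term = sum(
        math.sqrt(len(g)) * math.sqrt(sum(beta[i] ** 2 for i in g))
        for g in groups
    )
    l1_term = sum(abs(b) for b in beta)
    return lam1 * group_term + lam2 * l1_term


def soft_threshold(z: float, t: float) -> float:
    """Scalar soft-thresholding: sign(z) * max(|z| - t, 0)."""
    return math.copysign(max(abs(z) - t, 0.0), z)


def sgl_prox(z: Sequence[float], groups: Sequence[Sequence[int]],
             lam1: float, lam2: float, step: float) -> List[float]:
    """Proximal operator of step * (SGL penalty), evaluated at z.

    Each group is soft-thresholded coordinate-wise (lasso part) and then
    shrunk as a block (group-lasso part); a group whose thresholded norm
    is small enough is set entirely to zero.
    """
    out = [0.0] * len(z)
    for g in groups:
        u = [soft_threshold(z[i], step * lam2) for i in g]
        norm = math.sqrt(sum(v * v for v in u))
        if norm == 0.0:
            continue  # the whole group is zeroed out
        scale = max(0.0, 1.0 - step * lam1 * math.sqrt(len(g)) / norm)
        for i, v in zip(g, u):
            out[i] = scale * v
    return out


groups = [[0, 1], [2, 3]]
print(sgl_penalty([3.0, 4.0, 0.0, 0.0], groups, lam1=1.0, lam2=1.0))
# The second group is removed entirely; the first keeps both coordinates.
print(sgl_prox([3.0, 4.0, 0.5, -0.5], groups, lam1=1.0, lam2=1.0, step=1.0))
# A retained group can still be sparse inside.
print(sgl_prox([3.0, 0.5], [[0, 1]], lam1=1.0, lam2=1.0, step=1.0))
```

The last two calls show the two levels of sparsity discussed in the Introduction: a whole group set to zero, and a zero coefficient inside a group that stays in the model.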

The problem with (3) is that the vector of estimated coefficients depends on the choice of the vector of regularization parameters $\boldsymbol{\lambda}$, which must be chosen before estimating $\boldsymbol{\beta}$. The selection of $\boldsymbol{\lambda}$ is partly an open problem: although there are several practical strategies for choosing these parameters, there is no established theoretical criterion to follow. In most cases, the regularization parameters are set a priori, based on some additional information about the data or the characteristics of the desired solution; e.g., larger values of $\lambda_1$ or $\lambda_2$ tend to produce more components of $\hat{\boldsymbol{\beta}}$ that are exactly zero. The most commonly used methodology to select $\boldsymbol{\lambda}$ consists of moving the regularization parameters along a fixed grid, which is usually coarse. However, a coarse grid may miss good parameter values, while a fine two-dimensional grid requires solving (3) many times. By contrast, we propose the iterative sparse-group lasso, a coordinate descent algorithm recently introduced in [17].

2.2. Selection of the Optimal Regularization Parameter

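Traditionally, the data set $\mathcal{D}$ is partitioned into three disjoint data sets, $\mathcal{D}_{\text{train}}$, $\mathcal{D}_{\text{val}}$ and $\mathcal{D}_{\text{test}}$. The data in $\mathcal{D}_{\text{train}}$ are used for training the model, i.e., solving (3). $\mathcal{D}_{\text{val}}$ is used for validation, i.e., finding the optimal parameter $\boldsymbol{\lambda}$. The remaining observations in $\mathcal{D}_{\text{test}}$ are used for testing the prediction ability of the model on future observations. Specifically, the selection of the optimal parameter is based on the minimization of the validation error, defined as

$$\mathrm{err}(\boldsymbol{\lambda}) = \frac{1}{\#\mathcal{D}_{\text{val}}} \sum_{i \in \mathcal{D}_{\text{val}}} \ell_i\big(\hat{\boldsymbol{\beta}}(\boldsymbol{\lambda})\big), \tag{4}$$

where

$$\ell_i(\boldsymbol{\beta}) = -\Big[ y_i\, \mathbf{x}_i^\top \boldsymbol{\beta} - \log\big(1 + e^{\mathbf{x}_i^\top \boldsymbol{\beta}}\big) \Big] \tag{5}$$

is the negative log-likelihood of observation i, and

$$\hat{\boldsymbol{\beta}}(\boldsymbol{\lambda}) = \arg\min_{\boldsymbol{\beta} \in \mathbb{R}^p} \Big\{ \frac{1}{\#\mathcal{D}_{\text{train}}} \sum_{i \in \mathcal{D}_{\text{train}}} \ell_i(\boldsymbol{\beta}) + \lambda_1 \sum_{j=1}^{J} \sqrt{p_j}\, \big\|\boldsymbol{\beta}^{(j)}\big\|_2 + \lambda_2 \|\boldsymbol{\beta}\|_1 \Big\} \tag{6}$$

is the SGL estimate (3) computed on the training set, with # denoting the cardinality of a set. Therefore, the problem of finding the optimal parameter can be formulated as

$$\hat{\boldsymbol{\lambda}} = \arg\min_{\boldsymbol{\lambda} \in \mathbb{R}_{+}^{2}} \mathrm{err}(\boldsymbol{\lambda}). \tag{7}$$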
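As an illustration of the three-way partition and of the validation error in (4), the sketch below draws a random split of the observation indices and evaluates the average logistic loss of a given coefficient vector on the validation indices. Taking the logistic negative log-likelihood as the error measure, the split proportions and the helper names are assumptions made for the example.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple


def three_way_split(n: int, frac_train: float = 0.5, frac_val: float = 0.25,
                    seed: Optional[int] = None
                    ) -> Tuple[List[int], List[int], List[int]]:
    """Randomly split indices 0..n-1 into disjoint train/validation/test sets."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = int(frac_train * n)
    n_val = int(frac_val * n)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def validation_error(beta: Sequence[float], X: Sequence[Sequence[float]],
                     y: Sequence[int], val_idx: Sequence[int]) -> float:
    """Average logistic negative log-likelihood over the validation indices."""
    total = 0.0
    for i in val_idx:
        eta = sum(b * x for b, x in zip(beta, X[i]))
        # log(1 + exp(t)) with t = -eta if y = 1 and t = eta if y = 0, computed stably
        t = -eta if y[i] == 1 else eta
        total += max(t, 0.0) + math.log1p(math.exp(-abs(t)))
    return total / len(val_idx)


train, val, test = three_way_split(10, seed=1)
print(len(train), len(val), len(test))  # 5 2 3
```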

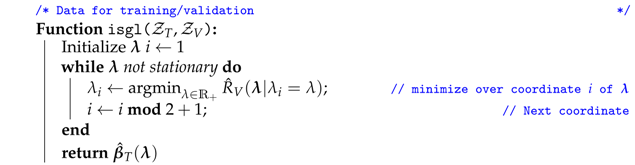

Algorithm 1 describes the two-parameter iterative sparse-group lasso (isgl), a gradient-free coordinate descent method to tune the parameter $\boldsymbol{\lambda}$ of the sparse-group lasso (3). It performs well under different scenarios while drastically reducing the number of operations required to find optimal penalty weight parameters that minimize the validation error in (4). The isgl iteratively performs a univariate minimization over one of the coordinates of $\boldsymbol{\lambda} = (\lambda_1, \lambda_2)$, while the other coordinate is fixed.

Algorithm 1: Two-parameter iterative sparse-group lasso (isgl).
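The coordinate-wise idea behind Algorithm 1 can be sketched as an alternating search over $\log_{10}\lambda_1$ and $\log_{10}\lambda_2$: each sweep minimizes the validation error along one coordinate with a golden-section search while the other coordinate is held fixed. This is a simplified stand-in written for illustration; the search bounds, the golden-section line search, the stopping rule and the function names are assumptions, and the published isgl [17] may differ in these details. In practice `val_error` would solve (3) on the training set and evaluate (4) on the validation set; here a smooth toy function stands in for it.

```python
import math
from typing import Callable, Tuple

INV_PHI = (math.sqrt(5.0) - 1.0) / 2.0  # 1 / golden ratio, about 0.618


def golden_section(f: Callable[[float], float], lo: float, hi: float,
                   tol: float = 1e-6, max_iter: int = 200) -> float:
    """Minimize a univariate function on [lo, hi] (exact for unimodal f)."""
    a, b = lo, hi
    c, d = b - INV_PHI * (b - a), a + INV_PHI * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(max_iter):
        if b - a < tol:
            break
        if fc < fd:  # the minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:        # the minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2.0


def coordinate_search(val_error: Callable[[float, float], float],
                      start: Tuple[float, float] = (0.0, 0.0),
                      bounds: Tuple[float, float] = (-4.0, 2.0),
                      n_sweeps: int = 20, tol: float = 1e-10):
    """Alternate 1-D minimizations over log10(lambda1) and log10(lambda2)."""
    u = list(start)
    best = val_error(10 ** u[0], 10 ** u[1])
    for _ in range(n_sweeps):
        previous = best
        for k in (0, 1):
            def along_k(t: float, k: int = k) -> float:
                v = list(u)
                v[k] = t
                return val_error(10 ** v[0], 10 ** v[1])
            t_new = golden_section(along_k, *bounds)
            f_new = along_k(t_new)
            if f_new <= best:  # accept only non-worsening moves
                u[k], best = t_new, f_new
        if previous - best < tol:
            break
    return 10 ** u[0], 10 ** u[1], best


def toy(l1: float, l2: float) -> float:
    """Toy validation error, minimal at lambda1 = 0.1 and lambda2 = 10**0.5."""
    return (math.log10(l1) + 1.0) ** 2 + (math.log10(l2) - 0.5) ** 2


lam1, lam2, err = coordinate_search(toy)
print(lam1, lam2, err)
```

Searching on a logarithmic scale is a common choice for penalty weights, since their useful values span several orders of magnitude.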

As mentioned before, a very useful property of the sparse-group lasso as a variable selection method is the ability to remove entire groups from the model (setting to zero the components of $\hat{\boldsymbol{\beta}}$ that correspond to those groups), as is the case with group lasso. However, this requires a grouping of the variables under consideration to be specified. This is not a challenge when there are natural groupings among the variables, e.g., if the variables are dummies related to different levels of the same original categorical variable. However, in our study most of the variables are gene transcripts, for which there are no established groupings in the literature. To overcome this problem, we suggest an empirical variable grouping approach, based on the principal component analysis of the data matrix.

2.3. Grouping Variables Using Principal Component Analysis

Principal component analysis (PCA) is a dimension reduction technique that is very effective at reducing a large number of interrelated variables to a few latent variables while losing as little information as possible. The new latent variables (the principal components) are linear transformations of the original variables; they are uncorrelated and ordered so that the first components capture most of the variation present in all of the original variables.

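Given the (centered) data matrix $\mathbf{X} \in \mathbb{R}^{N \times p}$, PCA computes the rotation matrix $\mathbf{V} = (v_{jg}) \in \mathbb{R}^{p \times G}$, where G is the number of principal components to retain. The transformed data matrix (the principal component matrix) is $\mathbf{Z} = \mathbf{X}\mathbf{V}$. This rotation matrix suggests a natural grouping on the columns of $\mathbf{X}$, given by

$$\mathcal{G}_g = \Big\{ j \in \{1, \dots, p\} : |v_{jg}| = \max_{1 \leq h \leq G} |v_{jh}| \Big\}, \qquad g = 1, \dots, G, \tag{8}$$

that is, each variable is assigned to the principal component on which it has the largest absolute loading.

This strategy will provide at most G groups on the columns of $\mathbf{X}$.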
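A dependency-free sketch of this grouping is given below. It assumes that the variables are standardized (PCA on the correlation matrix), that the leading G eigenvectors are obtained by power iteration with deflation, and that each variable joins the component with its largest absolute loading; these choices and the function name `pca_groups` are illustrative assumptions, and for large p a library SVD routine would be the practical choice. The usage lines mimic the three-variable example that follows, with two strongly correlated variables and one independent variable.

```python
import math
import random
from typing import List, Sequence


def pca_groups(X: Sequence[Sequence[float]], G: int, n_iter: int = 1000) -> List[int]:
    """Assign each column of X to one of G principal components (0-based)."""
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    sds = [math.sqrt(sum((row[j] - means[j]) ** 2 for row in X) / (n - 1))
           for j in range(p)]
    Z = [[(row[j] - means[j]) / sds[j] for j in range(p)] for row in X]
    # Correlation matrix of the (standardized) columns.
    C = [[sum(r[a] * r[b] for r in Z) / (n - 1) for b in range(p)] for a in range(p)]
    loadings = []  # loadings[g][j] is the loading v_{jg}
    for _ in range(G):
        v = [1.0 + 0.1 * j for j in range(p)]  # arbitrary non-degenerate start
        norm0 = math.sqrt(sum(x * x for x in v))
        v = [x / norm0 for x in v]
        for _ in range(n_iter):  # power iteration for the leading eigenvector
            w = [sum(C[a][b] * v[b] for b in range(p)) for a in range(p)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        eigval = sum(v[a] * sum(C[a][b] * v[b] for b in range(p)) for a in range(p))
        # Deflate so that the next power iteration finds the next component.
        C = [[C[a][b] - eigval * v[a] * v[b] for b in range(p)] for a in range(p)]
        loadings.append(v)
    # Each variable goes to the component with its largest absolute loading.
    return [max(range(G), key=lambda g: abs(loadings[g][j])) for j in range(p)]


rng = random.Random(0)
data = []
for _ in range(300):
    z = rng.gauss(0.0, 1.0)
    data.append([z, z + 0.1 * rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)])
print(pca_groups(data, G=2))  # X1 and X2 share a group; X3 gets its own
```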

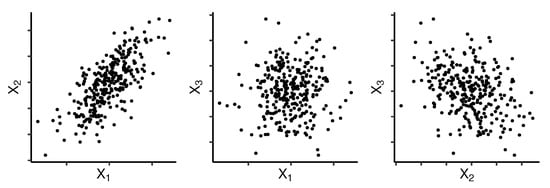

The following example illustrates our approach on a simulated data set. Suppose that we want to cluster variables $X_1$, $X_2$ and $X_3$ using two groups. There are 300 simulated observations (Figure 1). The principal components rotation matrix is displayed in Table 1.

Figure 1.

Simulated sample from three random variables, that illustrate the grouping based on principal component analysis (PCA).

Table 1.

Principal components rotation matrix $\mathbf{V}$.

In this example, the two most highly correlated variables would be grouped together, whereas the remaining variable would be in the other group. This method places highly correlated variables in the same group.

2.4. Mining Influential Variables under a Cross-Validation Approach

In this section, we focus on the problem of variable selection in models where the ratio $p/N$ is very large. In these scenarios, even state-of-the-art methods such as SGL find it hard to select an appropriate set of variables related to the response. We propose a cross-validation approach to fit and evaluate many different models using only the N observations initially available.

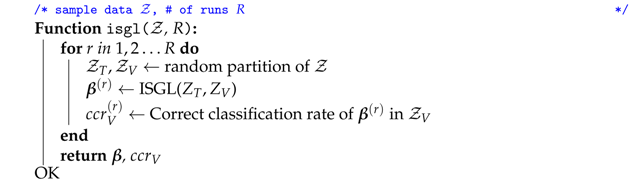

The solution $\hat{\boldsymbol{\beta}}$ provided by Algorithm 1 strongly depends on the partition $(\mathcal{D}_{\text{train}}, \mathcal{D}_{\text{val}})$. As a consequence, if we run Algorithm 1 for different partitions of the same data $\mathcal{D}$, it will probably result in different coefficient estimates $\hat{\boldsymbol{\beta}}$. Therefore, the indicator of whether variable $X_j$ is included in the model, $\mathbb{1}(\hat{\beta}_j \neq 0)$, will take different values depending on the partition. In order to avoid this dependence on the sample data partition, we propose Algorithm 2, which computes many different solutions of Algorithm 1 for different partitions of the original data sample $\mathcal{D}$. The goal of this algorithm is to fit and evaluate many models using the same data. Since the sample size is small compared to the number of covariates, the variable selection will greatly depend on the train–validate partition. We denote by R the total number of models that will be fitted using different partitions of the original sample. Algorithm 2 stores the fitted coefficients $\hat{\boldsymbol{\beta}}_r$ of each model and its correct classification rate in the validation sample, $\text{ccr}_r$, for $r = 1, \dots, R$.

Algorithm 2.
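The bookkeeping of Algorithm 2 can be sketched as below: for each of R random train–validation partitions, a user-supplied fitting routine returns the set of selected variables and a classifier, and the validation correct classification rate is recorded. The `fit` callback stands in for Algorithm 1, which is not reimplemented here; the partition proportion, the seed handling, the hypothetical `toy_fit` and all other names are assumptions made for illustration.

```python
import random
from typing import Callable, List, Sequence, Set, Tuple

Predictor = Callable[[Sequence[float]], int]
FitFn = Callable[[Sequence[Sequence[float]], Sequence[int], List[int]],
                 Tuple[Set[int], Predictor]]


def correct_classification_rate(predict: Predictor, X, y, idx: Sequence[int]) -> float:
    """Fraction of observations in idx whose class is predicted correctly."""
    return sum(1 for i in idx if predict(X[i]) == y[i]) / len(idx)


def repeated_partitions(X, y, fit: FitFn, R: int, frac_train: float = 0.7,
                        seed: int = 0) -> List[Tuple[Set[int], float]]:
    """Fit one model per random train/validation split; keep (selected set, ccr_r)."""
    rng = random.Random(seed)
    n = len(y)
    n_train = int(frac_train * n)
    results = []
    for _ in range(R):
        idx = list(range(n))
        rng.shuffle(idx)
        train, val = idx[:n_train], idx[n_train:]
        selected, predict = fit(X, y, train)
        results.append((selected, correct_classification_rate(predict, X, y, val)))
    return results


def toy_fit(X, y, train):
    """Hypothetical fitter: always selects variable 0 and thresholds it at zero."""
    return {0}, lambda x: int(x[0] > 0)


X = [[i - 5.0, float(i % 3)] for i in range(10)]
y = [int(row[0] > 0) for row in X]
for selected, ccr in repeated_partitions(X, y, toy_fit, R=3):
    print(sorted(selected), ccr)
```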

2.5. Selection of the Best Model

Our objective is to select one of the R models computed in Algorithm 2 as our final model. We believe that a selection based only on the maximization of $\text{ccr}_r$ could lead to overfitting the sample data $\mathcal{D}$. To overcome this problem, we define two indexes: the importance index of a variable and the power of a model. These indexes are fundamental to choosing a final model that does not overfit the data.

We consider the importance index $I_j$ of variable $X_j$, $j = 1, \dots, p$, defined as:

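where $\hat{\boldsymbol{\beta}}_r$ and $\text{ccr}_r$, $r = 1, \dots, R$, are those returned by Algorithm 2 on data $\mathcal{D}$. With the objective of penalizing those models that performed poorly on the validation set, the term $\text{ccr}_0 = \max(\bar{y}, 1 - \bar{y})$ has been introduced, where $\bar{y}$ is the proportion of observations with $y_i = 1$; i.e., $\text{ccr}_0$ is the correct classification rate of the null model.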

The importance index weights each variable according to the correct classification rates of the models in which that variable was present. The larger $I_j$, the greater the chance that $X_j$ is present in the underlying model that generated the data $\mathcal{D}$.
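The sketch below implements one simple weighting consistent with this description; it is an assumed form for illustration, not necessarily the exact definition (9). Each model adds, to the importance of every variable it selects, the amount by which its validation correct classification rate exceeds the null rate $\text{ccr}_0$, with non-positive excesses truncated to zero. The truncation and the function names are assumptions.

```python
from typing import List, Sequence, Set, Tuple


def null_ccr(y: Sequence[int]) -> float:
    """Correct classification rate of the null model: the majority class share."""
    share_ones = sum(y) / len(y)
    return max(share_ones, 1.0 - share_ones)


def importance_index(results: Sequence[Tuple[Set[int], float]], p: int,
                     ccr0: float) -> List[float]:
    """Hypothetical importance: sum over models of max(ccr_r - ccr0, 0) for each selected j."""
    importance = [0.0] * p
    for selected, ccr in results:
        weight = max(ccr - ccr0, 0.0)  # models no better than the null model add nothing
        for j in selected:
            importance[j] += weight
    return importance


y = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
results = [({0, 1}, 0.9), ({0}, 0.8), ({2}, 0.5)]
print(importance_index(results, p=3, ccr0=null_ccr(y)))
```

Under this assumed form, the third model (validation rate below the null rate) contributes nothing, so the variable it alone selects ends with zero importance.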

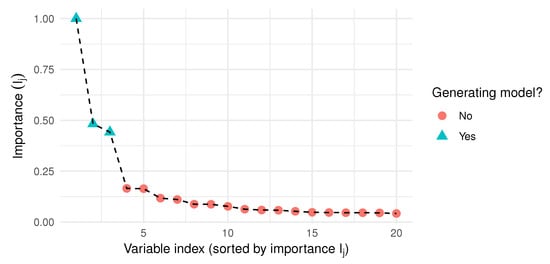

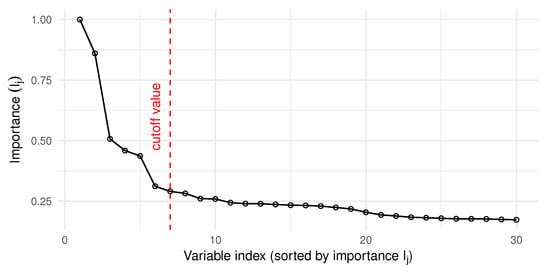

Figure 2 illustrates the importance index computed on a simulated data set. Notice that the variables with the highest importance are actually in the generating model, and there is a clear gap in Figure 2 between them and the rest of the variables.

Figure 2.

Sorted importance index obtained from Algorithm 2 on a simulated data sample.

Based on the maximization of the importance index, an appropriate subset is selected from the original p variables. Although the true number of variables involved in the model is unknown, we can focus our attention on a predefined number K of important variables, which depends only on the sample data $\mathcal{D}$; our empirical choice of K achieved good results. Using the importance indexes of the best K variables, we define the power of a model as:

where $I_{(k)}$ denotes the k-th greatest importance index, e.g., $I_{(1)} = \max_{j} I_j$. The power index P weights each model depending on the importance of its included variables.

The selection of the final model is based on the criterion:

Equation (11), Algorithm 2, and the framework that supports them are the main contributions of this paper from a methodological point of view. Equation (11) is based on the correct classification rates of R different fitted models, on the two indexes defined in this paper, and on the iterative sparse-group lasso, a recently introduced algorithm [17].
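Similarly, the following sketch assumes that the power (10) of model r adds up the importance indexes $I_{(1)}, \dots, I_{(K)}$ of those top-K variables that model r includes, and that (11) selects the model with the largest power, breaking ties by the validation correct classification rate. Both forms and the function names are assumptions made for illustration, consistent with the verbal description but not guaranteed to match the paper's equations.

```python
from typing import List, Sequence, Set, Tuple


def power_indexes(results: Sequence[Tuple[Set[int], float]],
                  importance: Sequence[float], K: int) -> List[float]:
    """Hypothetical power: total importance of the top-K variables each model includes."""
    top_k = sorted(range(len(importance)), key=lambda j: importance[j], reverse=True)[:K]
    return [sum(importance[j] for j in top_k if j in selected) for selected, _ in results]


def select_model(results: Sequence[Tuple[Set[int], float]],
                 importance: Sequence[float], K: int) -> int:
    """Index of the model with maximal power (ties broken by validation ccr)."""
    powers = power_indexes(results, importance, K)
    return max(range(len(results)), key=lambda r: (powers[r], results[r][1]))


importance = [0.5, 0.3, 0.0, 0.1]
results = [({0, 1}, 0.7), ({0, 3}, 0.9), ({1, 2}, 0.95)]
print(power_indexes(results, importance, K=2), select_model(results, importance, K=2))
```

In this toy case, the model with the highest validation rate is not the one selected, which mirrors the argument that the largest $\text{ccr}_r$ alone is not a safe criterion.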

3. A Simulation Study

In this section, we illustrate the performance of Algorithm 2 using synthetic data. To generate observations, we have followed simulation designs from [13] (uncorrelated features) and [14,18,19] (correlated features). Since our objective was to evaluate Algorithm 2 in binary classification problems, we used a logistic regression model for the response term using the simulated design matrices in each case. We simulated data from the true model:

with the logistic response given by:

Five scenarios for the design matrix $\mathbf{X}$ and the coefficient vector $\boldsymbol{\beta}$ were simulated. In each example, our simulated data consisted of a training set of N observations and p variables, and an independent test set of 5000 observations and p variables. Models were fitted using training data only. The details of the five scenarios are given below.

- (SFHT_1)

- and are i.i.d , for .

- (SFHT_2)

- In this example, is generated as in SFHT_1, but the rows of the model matrix are i.i.d. generated from a multivariate Gaussian distribution with .

- (Tibs_1)

- and the rows of are i.i.d. generated from a multivariate Gaussian distribution with .

- (Tibs_4)

- and the rows of are i.i.d. generated from a multivariate Gaussian distribution with , and .

- (ZH_d)

- and the rows of were generated as follows:

- , i.i.d. for

where are i.i.d. , for .
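For readers who want to reproduce a correlated design of this kind, the sketch below generates data in the spirit of the grouped design of Zou and Hastie [18]: fifteen informative variables in three blocks of five, each block sharing a latent factor $Z \sim N(0, 1)$ plus small independent noise, followed by $p - 15$ independent $N(0, 1)$ noise variables, and a logistic response. The coefficient value, the noise standard deviation and the function names are assumptions; they are not the exact settings used in Table 2.

```python
import math
import random
from typing import List, Tuple


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def simulate_grouped_design(n: int, p: int, beta_value: float = 1.0,
                            noise_sd: float = 0.1, seed: int = 0
                            ) -> Tuple[List[List[float]], List[int], List[float]]:
    """Three correlated blocks of five informative columns plus p - 15 noise columns."""
    if p < 15:
        raise ValueError("p must be at least 15")
    rng = random.Random(seed)
    beta = [beta_value] * 15 + [0.0] * (p - 15)
    X, y = [], []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(3)]  # latent factors Z1, Z2, Z3
        row = [z[j // 5] + rng.gauss(0.0, noise_sd) for j in range(15)]
        row += [rng.gauss(0.0, 1.0) for _ in range(p - 15)]
        eta = sum(b * x for b, x in zip(beta, row))
        X.append(row)
        y.append(1 if rng.random() < sigmoid(eta) else 0)  # logistic response
    return X, y, beta


X, y, beta = simulate_grouped_design(n=200, p=40, seed=1)
print(len(X), len(X[0]), sum(y))
```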

We aimed to investigate the robustness of our methodology in each example, measured using the test accuracy, as the number of noisy variables (not in the generating model) increased. Table 2 describes the performance of the final model (Algorithm 2 with importance index) selected under our methodology in the scenarios described above. We conducted 30 experiments in each case, as we varied the number of variables in the model (p). Mean standard errors are given in parentheses. To establish a baseline comparison, we also included lasso with grid search, and our methodology with known groups. Table 2 reveals that for all the configurations (except, perhaps, Tibs_1 and SFHT_1) the methodology is very robust with respect to an increase in the number of variables p. In fact, for most of them, the correct classification rate does not vary much as p increases. Intuitively, the grouping strategy introduced in Section 2.3 places highly correlated variables in the same groups, producing better results when there is correlation between the variables in the model. That is why the simulation scheme SFHT_1 produces the poorest results: in SFHT_1, all the simulated variables are independent and, therefore, there is no clear way to group the variables.

Table 2.

Average correct classification rate (ccr, %) of Algorithm 2 (Alg. 2) in the test data (5000 observations), in 30 experiments for each configuration, with N observations in the training sample. Mean standard errors are given in parentheses. To establish a baseline comparison, we also included lasso with grid search (Lasso-GS), and our methodology with known groups.

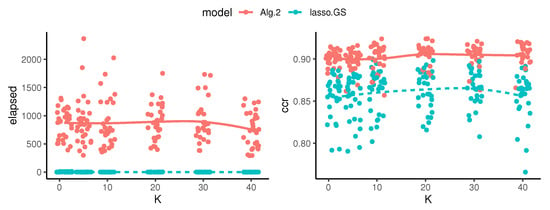

From a computational point of view, however, Algorithm 2 is very expensive compared with lasso. In fact, its computational burden is expected to increase linearly with the parameter R, because Algorithm 2 loops through R independent runs of Algorithm 1, which multiplies the cost of running one instance of Algorithm 1 by a factor of R. However, since the R runs are independent, the problem is embarrassingly parallel, and with enough parallel workers the time needed to obtain a solution of Algorithm 2 can be reduced to that of the slowest single instance of Algorithm 1. The remaining computations performed to select the best model can be ignored because they are of the same order as a matrix–vector product. Therefore, the parameter K does not affect the computational performance of our method. However, it should affect the predictive performance, since K is directly related to the model selection. Figure 3 compares the computational and predictive performance of our method and lasso in a numerical experiment. Figure 3 confirms that K does not affect the computational burden, and its impact on the predictive performance is also negligible in this case, although the accuracy peaks when K equals the true number of variables in the generating model.
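The independence of the R runs is what makes Algorithm 2 parallelizable. The sketch below maps a per-partition routine over R seeds with Python's `concurrent.futures`; seeding each run by its index keeps the results identical to a sequential loop regardless of scheduling. The `run_one` routine is a hypothetical placeholder for one call of Algorithm 1, and a thread pool is used only so that the example runs anywhere; CPU-bound fits written in Python would normally use `ProcessPoolExecutor`, which offers the same `map` interface, to obtain real speed-ups.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def run_one(seed: int, n: int = 20, frac_train: float = 0.7) -> Tuple[int, List[int]]:
    """Hypothetical placeholder for one run of Algorithm 1 on partition `seed`.

    It only draws the training indices of a reproducible random partition.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return seed, sorted(idx[: int(frac_train * n)])


def run_all(run: Callable[[int], Tuple[int, List[int]]], R: int,
            max_workers: int = 4) -> List[Tuple[int, List[int]]]:
    """Run R independent partitions concurrently; results keep seed order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run, range(R)))


parallel = run_all(run_one, R=8)
sequential = [run_one(r) for r in range(8)]
print(parallel == sequential)  # True: each run depends only on its seed
```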

Figure 3.

Elapsed time (in seconds) to obtain a solution (left panel) and accuracy (right panel) of Algorithm 2 (Alg. 2) and lasso with grid search (lasso-GS), on 30 independent runs for each K. The simulation design was ZH_d, with K varying between 1 and 40. These experiments were run sequentially.

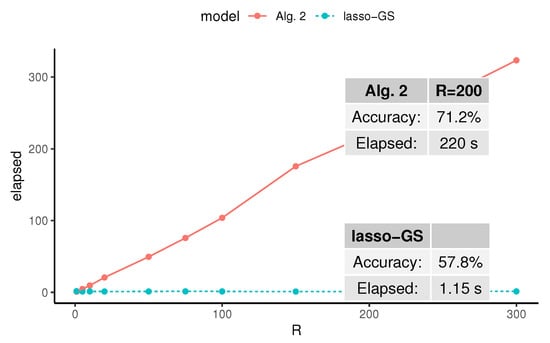

To empirically evaluate the impact of R, we performed a numerical simulation. Figure 4 displays the average elapsed time needed to obtain a solution of Algorithm 2 (Alg. 2) and lasso with grid search (lasso-GS), varying the parameter R; lasso, which does not depend on R, is included as a baseline. The illustrated simulation design was ZH_d, with the remaining parameters fixed and R varying between 1 and 300. These experiments show that the relationship between R and the elapsed time is linear. In addition, Figure 4 confirms that we are trading computational cost for an increase in accuracy, which we believe is a reasonable trade for most applications.

Figure 4.

Average elapsed time (in seconds) needed to obtain a solution of Algorithm 2 (Alg. 2) and lasso with grid search (lasso-GS), on 30 independent runs for each R. The simulation design was ZH_d, with the remaining parameters fixed and R varying between 1 and 300. These experiments were run sequentially.

4. Application to Biomedical Data

In this section, we evaluate the methodology described in Algorithm 2, with the model selection criterion given by (11), on a real case study. A sample of TNBC patients from a previously published clinical trial [20] was used to analyze relations between the cancer cells’ transcriptome and the response of patients to the given treatment (docetaxel plus carboplatin). The dataset was composed of 93 observations (patients) and p variables (genetic transcripts and clinical variables).

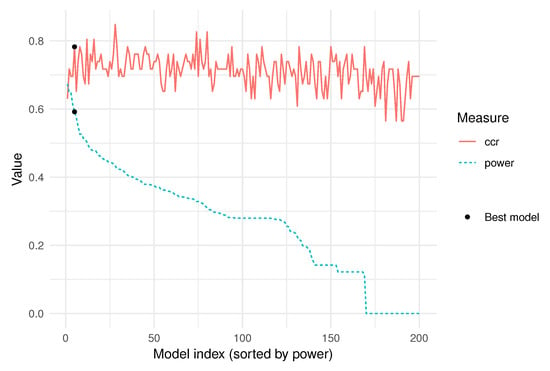

Figure 5 shows the 30 highest importance indexes out of a total of p variables. The criterion used to measure the importance of the variables is given in (9). Algorithm 2 was run for R train–validation partitions, and the cutoff value K was set as described in Section 2.5. With this importance index, the power of each model was computed using (10), and the best model was chosen according to (11), as highlighted in Figure 6.

Figure 5.

Sorted importance indexes, according to the criterion given in (9), after running Algorithm 2. The cutoff value K was set as described in Section 2.5.

Figure 6.

Power index (10), computed for each of the R models and sorted in decreasing order, with the corresponding correct classification rate (ccr) of each model in the validation sample.

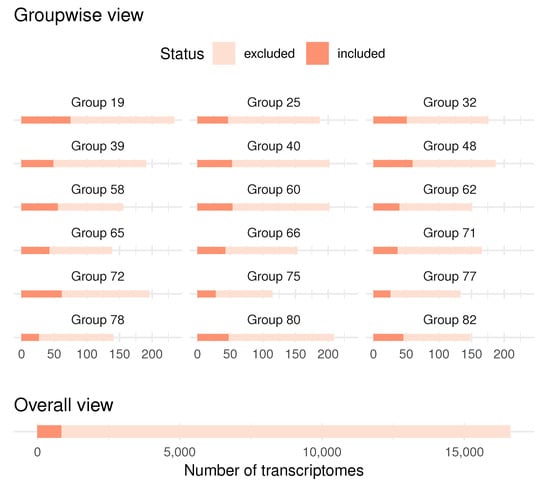

The selected model included 843 out of p variables. The grouping strategy described in Section 2.3 resulted in a total of 82 groups, 18 of which were included in the final model.

Figure 7 displays the distribution of the number of non-zero coefficients in each group included in the final model, which is revealing in several ways. First, it indicates that PCA finds groups of similar sizes; second, the selected model is sparse at both the group and the variable levels.
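The two levels of sparsity summarized in Figure 7 can be computed from a fitted coefficient vector and the PCA groups with a few lines of code. The function below is a hypothetical helper, not part of the authors' software, that counts non-zero coefficients per group and lists the groups that remain in the model; the toy coefficients are made up for the example.

```python
from typing import Dict, Sequence


def group_sparsity_summary(beta: Sequence[float], groups: Sequence[Sequence[int]],
                           tol: float = 0.0) -> Dict[str, object]:
    """Count non-zero coefficients per group and report which groups are kept."""
    per_group = [sum(1 for i in g if abs(beta[i]) > tol) for g in groups]
    return {
        "nonzero_per_group": per_group,
        "groups_included": [k for k, c in enumerate(per_group) if c > 0],
        "total_nonzero": sum(per_group),
    }


beta = [0.0, 1.2, 0.0, 0.0, 0.0, 0.0, -0.3, 0.7]
groups = [[0, 1, 2], [3, 4], [5, 6, 7]]
print(group_sparsity_summary(beta, groups))
# {'nonzero_per_group': [1, 0, 2], 'groups_included': [0, 2], 'total_nonzero': 3}
```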

Figure 7.

Number of included variables in the final model, by groups (top) and total (bottom). Eighteen out of 82 groups were included.

In an attempt to discover the biological and genetic meaning of the model selected by our methodology, we ran DAVID [21,22] to detect enriched functionally related gene groups. The clustering and functional annotation were performed using the default analysis options, and multiple testing was accounted for using the false discovery rate (FDR). The results are detailed in Supplementary Table S1.

Only two notable families of pathways emerged from the gene enrichment analysis: the homeobox-related and the oxidative phosphorylation pathways. Both are involved in the mechanisms of action of docetaxel and carboplatin, the drugs of the treatment under study.

Homeobox genes have been proposed to be involved in mechanisms of resistance to taxane-based oncologic treatments in ovarian and prostate cancer [23,24,25,26]. Docetaxel hyper-stabilizes the microtubule structure, irreversibly blocking the cytoskeleton function in the mitotic process and intracellular transport. In addition, this drug induces programmed cell death.

On the other hand, carboplatin binds covalently to DNA bases, resulting in fragmentation by repair enzymes when they try to repair the damaged bases. It also induces mutations through nucleotide mispairing and generates DNA cross-links that affect the transcription process [27]. The development of resistance to platinum-based chemotherapy regimens is common. Several studies demonstrate that dysfunctions in mitochondrial processes, in conjunction with the mechanism of action mentioned above, can contribute to the development of phenotypes associated with resistance [28,29,30,31,32,33].

5. Conclusions

The present study introduced a methodology to deal with the variable selection problem in a high-dimensional set-up. It can be seen as an extension of the sparse-group lasso regularization method that removes the dependence on both the hyper-parameters and a predefined grouping of the variables. There are several critical components in this approach:

- A clustering of the variables, based on PCA, makes it possible to work with an arbitrarily large number of variables without specifying groups a priori.

- The iterative sparse-group lasso removes the dependence on the hyper-parameters of the sparse-group lasso, but is sensitive to the train–validate sample partition. This problem has been addressed by running the algorithm for a large number of different train–validate sample partitions (Algorithm 2).

- The correct classification rate of each model in its respective validation sample is stored. Notice that this is an overestimation of the true correct classification rate on future observations, and the highest validation rate does not imply the best model.

- The importance index weighs the variables, based on the correct classification rate of the models that include them.

- The power index weighs the models, based on the importance of the variables they include.

This methodology was tested on a sample of TNBC patients, trying to reveal the genetic profile associated with resistance to the treatment of interest. The literature studies mentioned in Section 4 provide a rationale supporting the potential predictive value of the two gene pathways identified in our study (the homeobox-related and the oxidative phosphorylation pathways). In order to validate these results, we are testing the model in a new cohort of TNBC patients from the same clinical trial.

Future studies should examine other strategies for grouping variables, as discussed in Section 2.3, based on supervised as well as unsupervised algorithms.

Supplementary Materials

The following are available online at https://www.mdpi.com/2227-7390/9/3/222/s1: Table S1: DAVID functional analysis of selected genes, the enriched pathways and their sources. Each pathway underwent a modified Fisher’s exact test (EASE score) in order to determine whether the sparse-group lasso model genes were over-represented in those gene sets. The Fold Enrichment measures the magnitude of enrichment, and the PValue its statistical significance. In addition, Bonferroni, Benjamini, and FDR corrections are provided to adjust the enrichment p-values for multiple testing, controlling the family-wise error rate (Bonferroni) or the false discovery rate (Benjamini and FDR). Some basic metrics regarding the number and percentage of genes in the studied pathways are shown in the Count and % columns.

Author Contributions

Data curation, E.Á., S.L.-T., M.d.M.-M. and A.C.P.; Investigation, M.C.A.-M., E.Á., R.E.L. and A.C.P.; Methodology, J.C.L., M.C.A.-M. and R.E.L.; Software, J.C.L., M.C.A.-M. and R.E.L.; Supervision, M.C.A.-M., R.E.L., A.C.P., M.M. and J.R.; Writing—original draft, J.C.L., A.C.P. and M.M.; Writing—review & editing, J.C.L., E.Á., M.C.A.-M., R.E.L. and J.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by a research grant from Instituto de Salud Carlos III (PI 15/00117), co-funded by FEDER, to M. Martín.

Acknowledgments

Simulations in Section 3 and Section 4 have been carried out in Uranus, a supercomputer cluster located at Universidad Carlos III de Madrid and funded jointly by EU-FEDER funds and by the Spanish Government via the National Projects No. UNC313-4E-2361, No. ENE2009-12213-C03-03, No. ENE2012-33219 and No. ENE2015-68265-P.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ferlay, J.; Soerjomataram, I.; Ervik, M.; Dikshit, R.; Eser, S.; Mathers, C.; Rebelo, M.; Parkin, D.; Forman, D.; Bray, F. GLOBOCAN 2012 v1. 0, Cancer Incidence and Mortality Worldwide: IARC CancerBase No. 11; International Agency for Research on Cancer: Lyon, France, 2013. [Google Scholar]

- Dent, R.; Trudeau, M.; Pritchard, K.I.; Hanna, W.M.; Kahn, H.K.; Sawka, C.A.; Lickley, L.A.; Rawlinson, E.; Sun, P.; Narod, S.A. Triple-negative breast cancer: clinical features and patterns of recurrence. Clin. Cancer Res. 2007, 13, 4429–4434. [Google Scholar] [CrossRef]

- Cortazar, P.; Zhang, L.; Untch, M.; Mehta, K.; Costantino, J.P.; Wolmark, N.; Bonnefoi, H.; Cameron, D.; Gianni, L.; Valagussa, P.; et al. Pathological complete response and long-term clinical benefit in breast cancer: the CTNeoBC pooled analysis. Lancet 2014, 384, 164–172. [Google Scholar] [CrossRef]

- Symmans, W.F.; Wei, C.; Gould, R.; Yu, X.; Zhang, Y.; Liu, M.; Walls, A.; Bousamra, A.; Ramineni, M.; Sinn, B.; et al. Long-Term Prognostic Risk After Neoadjuvant Chemotherapy Associated With Residual Cancer Burden and Breast Cancer Subtype. J. Clin. Oncol. Off. J. Am. Soc. Clin. Oncol. 2017, 35, 1049–1060. [Google Scholar] [CrossRef]

- Sharma, P.; López-Tarruella, S.; Garcia-Saenz, J.A.; Khan, Q.J.; Gomez, H.; Prat, A.; Moreno, F.; Jerez-Gilarranz, Y.; Barnadas, A.; Picornell, A.C.; et al. Pathological response and survival in triple-negative breast cancer following neoadjuvant carboplatin plus docetaxel. Clin. Cancer Res. 2018, 24, 5820–5829. [Google Scholar] [CrossRef]

- Tabchy, A.; Valero, V.; Vidaurre, T.; Lluch, A.; Gomez, H.L.; Martin, M.; Qi, Y.; Barajas-Figueroa, L.J.; Souchon, E.A.; Coutant, C.; et al. Evaluation of a 30-gene paclitaxel, fluorouracil, doxorubicin and cyclophosphamide chemotherapy response predictor in a multicenter randomized trial in breast cancer. Clin. Cancer Res. 2010, 16, 5351–5361. [Google Scholar] [CrossRef]

- Hatzis, C.; Pusztai, L.; Valero, V.; Booser, D.J.; Esserman, L.; Lluch, A.; Vidaurre, T.; Holmes, F.; Souchon, E.; Wang, H.; et al. A genomic predictor of response and survival following taxane-anthracycline chemotherapy for invasive breast cancer. JAMA 2011, 305, 1873–1881. [Google Scholar] [CrossRef]

- Chang, J.C.; Wooten, E.C.; Tsimelzon, A.; Hilsenbeck, S.G.; Gutierrez, M.C.; Elledge, R.; Mohsin, S.; Osborne, C.K.; Chamness, G.C.; Allred, D.C.; et al. Gene expression profiling for the prediction of therapeutic response to docetaxel in patients with breast cancer. Lancet 2003, 362, 362–369.

- De Los Campos, G.; Gianola, D.; Allison, D.B. Predicting genetic predisposition in humans: the promise of whole-genome markers. Nat. Rev. Genet. 2010, 11, 880.

- Lupski, J.R.; Belmont, J.W.; Boerwinkle, E.; Gibbs, R.A. Clan genomics and the complex architecture of human disease. Cell 2011, 147, 32–43.

- Offit, K. Personalized medicine: New genomics, old lessons. Hum. Genet. 2011, 130, 3–14.

- Szymczak, S.; Biernacka, J.M.; Cordell, H.J.; González-Recio, O.; König, I.R.; Zhang, H.; Sun, Y.V. Machine learning in genome-wide association studies. Genet. Epidemiol. 2009, 33.

- Simon, N.; Friedman, J.; Hastie, T.; Tibshirani, R. A sparse-group lasso. J. Comput. Graph. Stat. 2013, 22, 231–245.

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 1996, 58, 267–288.

- Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2006, 68, 49–67.

- Zou, H.; Hastie, T. Regression shrinkage and selection via the elastic net, with applications to microarrays. J. R. Stat. Soc. Ser. B 2003, 67, 301–320.

- Laria, J.C.; Aguilera-Morillo, M.C.; Lillo, R.E. An iterative sparse-group lasso. J. Comput. Graph. Stat. 2019, 28, 722–731.

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2005, 67, 301–320.

- Azevedo Costa, M.; de Souza Rodrigues, T.; da Costa, A.G.F.; Natowicz, R.; Pádua Braga, A. Sequential selection of variables using short permutation procedures and multiple adjustments: An application to genomic data. Stat. Methods Med. Res. 2017, 26, 997–1020.

- Sharma, P.; López-Tarruella, S.; García-Saenz, J.A.; Ward, C.; Connor, C.; Gómez, H.L.; Prat, A.; Moreno, F.; Jerez-Gilarranz, Y.; Barnadas, A.; et al. Efficacy of neoadjuvant carboplatin plus docetaxel in triple negative breast cancer: Combined analysis of two cohorts. Clin. Cancer Res. 2017, 23, 649–657.

- Huang, D.W.; Sherman, B.T.; Lempicki, R.A. Systematic and integrative analysis of large gene lists using DAVID bioinformatics resources. Nat. Protoc. 2009, 4, 44–57.

- Huang, D.W.; Sherman, B.T.; Lempicki, R.A. Bioinformatics enrichment tools: paths toward the comprehensive functional analysis of large gene lists. Nucleic Acids Res. 2008, 37, 1–13.

- Li, J.; Zhang, Y.; Gao, Y.; Cui, Y.; Liu, H.; Li, M.; Tian, Y. Downregulation of HNF1 homeobox B is associated with drug resistance in ovarian cancer. Oncol. Rep. 2014, 32, 979–988.

- Hanrahan, K.; O’Neill, A.; Prencipe, M.; Bugler, J.; Murphy, L.; Fabre, A.; Puhr, M.; Culig, Z.; Murphy, K.; Watson, R.W. The role of epithelial–mesenchymal transition drivers ZEB1 and ZEB2 in mediating docetaxel-resistant prostate cancer. Mol. Oncol. 2017, 11, 251–265.

- Marín-Aguilera, M.; Codony-Servat, J.; Reig, Ò.; Lozano, J.J.; Fernández, P.L.; Pereira, M.V.; Jiménez, N.; Donovan, M.; Puig, P.; Mengual, L.; et al. Epithelial-to-mesenchymal transition mediates docetaxel resistance and high risk of relapse in prostate cancer. Mol. Cancer Ther. 2014, 13, 1270–1284.

- Puhr, M.; Hoefer, J.; Schäfer, G.; Erb, H.H.; Oh, S.J.; Klocker, H.; Heidegger, I.; Neuwirt, H.; Culig, Z. Epithelial-to-mesenchymal transition leads to docetaxel resistance in prostate cancer and is mediated by reduced expression of miR-200c and miR-205. Am. J. Pathol. 2012, 181, 2188–2201.

- Wishart, D.S.; Feunang, Y.D.; Guo, A.C.; Lo, E.J.; Marcu, A.; Grant, J.R.; Sajed, T.; Johnson, D.; Li, C.; Sayeeda, Z.; et al. DrugBank 5.0: A major update to the DrugBank database for 2018. Nucleic Acids Res. 2017, 46, D1074–D1082.

- Matassa, D.; Amoroso, M.; Lu, H.; Avolio, R.; Arzeni, D.; Procaccini, C.; Faicchia, D.; Maddalena, F.; Simeon, V.; Agliarulo, I.; et al. Oxidative metabolism drives inflammation-induced platinum resistance in human ovarian cancer. Cell Death Differ. 2016, 23, 1542.

- Dai, Z.; Yin, J.; He, H.; Li, W.; Hou, C.; Qian, X.; Mao, N.; Pan, L. Mitochondrial comparative proteomics of human ovarian cancer cells and their platinum-resistant sublines. Proteomics 2010, 10, 3789–3799.

- Chappell, N.P.; Teng, P.N.; Hood, B.L.; Wang, G.; Darcy, K.M.; Hamilton, C.A.; Maxwell, G.L.; Conrads, T.P. Mitochondrial proteomic analysis of cisplatin resistance in ovarian cancer. J. Proteome Res. 2012, 11, 4605–4614.

- Marrache, S.; Pathak, R.K.; Dhar, S. Detouring of cisplatin to access mitochondrial genome for overcoming resistance. Proc. Natl. Acad. Sci. USA 2014, 111, 10444–10449.

- Belotte, J.; Fletcher, N.M.; Awonuga, A.O.; Alexis, M.; Abu-Soud, H.M.; Saed, M.G.; Diamond, M.P.; Saed, G.M. The role of oxidative stress in the development of cisplatin resistance in epithelial ovarian cancer. Reprod. Sci. 2014, 21, 503–508.

- McAdam, E.; Brem, R.; Karran, P. Oxidative Stress–Induced Protein Damage Inhibits DNA Repair and Determines Mutation Risk and Therapeutic Efficacy. Mol. Cancer Res. 2016, 14, 612–622.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).