Abstract

This study investigates finite-time passivity analysis of neutral-type neural networks with mixed time-varying delays. The delays are of distributed, discrete and neutral type, and upper bounds for the delays are available. We derive sufficient conditions for finite-time boundedness, finite-time stability and finite-time passivity, which, to the best of our knowledge, have not been reported before for this class of systems. The analysis combines a new Lyapunov–Krasovskii functional with Peng–Park’s integral inequality, a descriptor model transformation, zero equations and Wirtinger’s integral inequality. The resulting sufficient conditions are expressed as linear matrix inequalities that guarantee finite-time stability of the system. Finally, numerical examples demonstrate the effectiveness of the results and show that the proposed criteria are less conservative than prior studies in the sense that they admit larger time-delay bounds.

1. Introduction

Neural networks have been studied intensively in recent decades because of their wide range of applications in fields such as signal processing, associative memory and learning [1,2,3,4,5,6,7,8,9,10]. In the study of real systems, time-delay phenomena are unavoidable. Many neural networks of interest, such as Hopfield neural networks, cellular neural networks, Cohen-Grossberg neural networks and bidirectional associative memory (BAM) neural networks, frequently exhibit time delays. In addition, time delays are well recognized as a source of instability and poor performance [11]. Accordingly, stability analysis of delayed neural networks has become a topic of significant theoretical and practical relevance (see [12,13,14,15]), and many important results have been reported on this subject. For example, state estimation of T-S fuzzy delayed neural networks with Markovian jumping parameters using sampled-data control was presented by Syed Ali et al. [16], and the global stability of fractional-order fuzzy BAM neural networks with time delay and impulsive effects was analyzed in [17].

Furthermore, because of the complex dynamics of real neural cells, conventional neural network models often cannot accurately represent a neural reaction process. It is natural for such systems to include information about the derivative of a past state in order to better characterize and analyze the dynamics of these complicated neural responses. Networks of this kind are called neutral neural networks or neutral-type neural networks. Neutral-type neural networks with time-varying delays have been studied by several authors in recent years [18,19,20,21,22,23]. In 2018, the authors of [24] reported improved results on passivity analysis of neutral-type neural networks with mixed time-varying delays. In particular, distributed delay, a type of time-varying delay that occurs in network-based systems, has received considerable attention because of its significance in digital control systems [25]. A system of this kind therefore has all three types of delay: discrete, neutral and distributed. Accordingly, some stability analysis results for neutral-type neural networks with mixed time-varying delays have recently been reported.

Passivity theory [26] is a useful tool for analyzing system stability, and it can treat systems using only the general features of their input–output dynamics. It has been used in engineering applications such as high-integrity and safety-critical systems. Krasovskii and Lidskii proposed this family of linear systems in 1961 [27], and researchers have studied the passivity of neural networks with delays since then. Many recent studies address stability notions such as Lyapunov stability, asymptotic stability, uniform stability, eventually uniformly bounded stability and exponential stability, all of which concern the behavior of systems over an infinite time span. Most actual neural systems, on the other hand, operate only over finite time intervals. Finite-time passivity is therefore an important tool for investigating the finite-time stabilization of neural networks.

This topic has attracted the interest of researchers [28,29,30,31,32,33,34,35]. The finite-time stability of continuous time-delay systems was treated with Jensen’s and Coppel’s inequalities in [28]. In [29], the authors used a novel control protocol based on Lyapunov theory and inequality techniques to examine the finite-time stabilization of delayed neural networks. Rajavel et al. [30] solved the problem of finite-time non-fragile passivity control for neural networks with time-varying delay using the Lyapunov–Krasovskii functional technique. In [31], a new Lyapunov–Krasovskii functional with triple and quadruple integral terms was used to examine finite-time passive filtering for a class of neutral time-delayed systems. The free-weighting matrix approach and Wirtinger’s double integral inequality were used to establish finite-time stability of neutral-type neural networks with random time-varying delays in [32]. Syed Ali et al. [33] studied finite-time passivity for neutral-type neural networks with time-varying delays using an auxiliary integral inequality. Ali et al. [34] explored the finite-time boundedness of discrete-time neural networks with norm-bounded disturbances and time-varying delay. In 2021, Phanlert et al. [35] studied finite-time mixed H∞/passivity for non-neutral neural networks with mixed interval time-varying delays. These works show that many different methods are available for stability analysis, and the present work aims to strengthen these results. However, to the best of the authors’ knowledge, no results on finite-time passivity analysis of neutral-type neural networks with mixed time-varying delays have been reported. This is the motivation for the present investigation.

Motivated by the above, we investigate finite-time boundedness, finite-time stability and finite-time passivity of neural networks and provide corresponding criteria using Lyapunov functional theory and inequality techniques. The primary contributions of this paper are as follows:

- (i) We examine a system with mixed time-varying delays. The delays are of distributed, discrete and neutral type, and their upper bounds are known.

- (ii) We derive conditions for finite-time boundedness, finite-time stability and finite-time passivity.

- (iii) By using Peng–Park’s integral inequality, a model transformation, zero equations and the Wirtinger-based integral inequality, simple LMI-based criteria are developed.

- (iv) Several examples are examined to verify the main theorem and its corollaries.

The paper is organized as follows. Section 2 introduces the network under consideration and provides some definitions, propositions and lemmas. In Section 3, finite-time boundedness, finite-time stability and finite-time passivity of the neural network are analyzed. In Section 4, five numerical examples are presented to demonstrate the usefulness of the proposed results. Finally, Section 5 concludes the study.

2. Preliminaries

We begin by explaining the notation and lemmas that will be used throughout the study. $\mathbb{R}$ denotes the set of all real numbers; $\mathbb{R}^{n}$ denotes the n-dimensional space; $\mathbb{R}^{m\times n}$ denotes the set of all $m\times n$ real matrices; $A^{T}$ denotes the transpose of the matrix A; A is symmetric if $A = A^{T}$; $\lambda(A)$ denotes the set of all eigenvalues of A; and $\lambda_{\max}(A)$ and $\lambda_{\min}(A)$ represent the maximum and minimum eigenvalues of the matrix A, respectively. ∗ represents the elements below the main diagonal of the symmetric matrices; $\mathrm{diag}\{\cdots\}$ stands for the diagonal matrix.

Consider the study of finite-time passivity analysis of neutral-type neural networks with mixed time-varying delays of the following form:

where is the neural state vector, is the output vector of the neural network, and is the exogenous disturbance input vector, which belongs to . is a diagonal matrix with . Matrices and are the interconnection matrices representing the weight coefficients of the neurons. Matrices and are known real constant matrices with appropriate dimensions. is the neuron activation function, and denotes the initial function. is the discrete time-varying delay, is the distributed time-varying delay, is the neutral delay and .

The variables and represent the mixed delays of the model in (1) and satisfy the following:

where and are positive real constants.

Assumption 1.

The activation function f is continuous and there exist real constants and such that the following is the case:

for all , and for any satisfies For the sake of presentation convenience, in the following, we denote and .
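As an illustration of Assumption 1, the sketch below numerically checks a sector-type bound on the difference quotient of an activation function. It assumes the common form of the condition, in which the quotient (f(a) - f(b))/(a - b) lies between two constants. The names `sector_bounds_hold`, `l_minus` and `l_plus` are hypothetical, and tanh is used only as a familiar example for which the bounds 0 and 1 are known to hold.

```python
import math
import random

def sector_bounds_hold(f, l_minus, l_plus, samples=10_000, span=5.0, seed=0):
    """Sample pairs a != b and test l_minus <= (f(a)-f(b))/(a-b) <= l_plus."""
    rng = random.Random(seed)
    tol = 1e-9  # tolerance for floating-point rounding
    for _ in range(samples):
        a = rng.uniform(-span, span)
        b = rng.uniform(-span, span)
        if abs(a - b) < 1e-6:
            continue  # skip nearly equal points to avoid dividing by ~0
        quotient = (f(a) - f(b)) / (a - b)
        if not (l_minus - tol <= quotient <= l_plus + tol):
            return False
    return True

# tanh satisfies the sector [0, 1]; the tighter sector [0, 0.5] is violated near 0.
tanh_ok = sector_bounds_hold(math.tanh, 0.0, 1.0)
too_tight = sector_bounds_hold(math.tanh, 0.0, 0.5)
```

A sampled check of this kind can only falsify a candidate pair of bounds; it does not prove that the bounds hold everywhere.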

Assumption 2.

In the case of a positive parameter δ, is a time-varying external disturbance that satisfies the following.

Definition 1

(Finite-time boundedness [36,37]). For a positive constant T, system (1) is finite-time bounded with respect to if there exist constants such that the following is the case:

for a given positive constant , and is a positive definite matrix.
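The idea behind Definition 1 can be illustrated on sampled data. The sketch below assumes the form of finite-time boundedness commonly used in the literature: if the weighted energy of the initial function is at most c1, then the weighted energy x^T R x of the state stays below c2 on [0, T]. The function names and the sample data are hypothetical, and any additional terms in the paper's definition (for example, on the derivative of the initial function) are not modelled.

```python
def quad_form(x, R):
    """Return x^T R x for a vector x and a square matrix R (nested lists)."""
    n = len(x)
    return sum(x[i] * R[i][j] * x[j] for i in range(n) for j in range(n))

def is_finite_time_bounded(initial_samples, trajectory, R, c1, c2):
    """Check the sampled implication: initial energy <= c1  =>  x^T R x < c2 on [0, T].

    initial_samples: states of the initial function on the delay interval
    trajectory:      states x(t_k) sampled on [0, T]
    """
    if max(quad_form(p, R) for p in initial_samples) > c1:
        return True  # premise fails, so the implication holds vacuously
    return all(quad_form(x, R) < c2 for x in trajectory)

R = [[1.0, 0.0], [0.0, 1.0]]
initial = [[0.5, 0.5]]
decaying = [[0.5 * 0.9 ** k, 0.5 * 0.9 ** k] for k in range(50)]
growing = [[0.5 * 1.1 ** k, 0.5 * 1.1 ** k] for k in range(50)]

bounded_ok = is_finite_time_bounded(initial, decaying, R, c1=1.0, c2=2.0)
bounded_bad = is_finite_time_bounded(initial, growing, R, c1=1.0, c2=2.0)
```

Note that the property concerns a fixed horizon only: a trajectory may pass the check on [0, T] and still grow without bound afterwards.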

Definition 2

(Finite-time stability [36,37]). System (1) with is said to be finite-time stable with respect to if there exist constants such that the following is the case:

for a given positive constant , and is a positive definite matrix.

Definition 3

(Finite-time passivity [37]). System (1) is said to be finite-time passive with respect to with a prescribed dissipation performance level , if the following relations hold:

- (a)

- For any external disturbances , system (1) is finite-time bounded;

- (b)

- For a given positive scalar , the following relationship holds under a zero initial condition.
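Condition (b) of Definition 3 can be checked on sampled input and output signals. The sketch below assumes the passivity inequality in its commonly used form, 2∫z^T w dt ≥ -γ∫w^T w dt over [0, T], and approximates both integrals with a Riemann sum. The function name `passivity_margin` and the test signals are hypothetical.

```python
import math

def passivity_margin(z, w, dt, gamma):
    """Return 2*int(z^T w) + gamma*int(w^T w) over the sampled horizon.

    z, w: lists of equally spaced samples (each sample is a list of floats)
    A nonnegative value means the sampled passivity inequality holds.
    """
    supply = sum(sum(zi * wi for zi, wi in zip(zk, wk)) for zk, wk in zip(z, w)) * dt
    energy = sum(sum(wi * wi for wi in wk) for wk in w) * dt
    return 2.0 * supply + gamma * energy

dt = 0.01
w = [[math.sin(k * dt), math.cos(2 * k * dt)] for k in range(500)]
z_passive = [list(wk) for wk in w]            # output equal to the input
z_active = [[-wi for wi in wk] for wk in w]   # output opposing the input

margin_passive = passivity_margin(z_passive, w, dt, gamma=1.0)
margin_active = passivity_margin(z_active, w, dt, gamma=1.0)
```

With γ = 1, the first margin is positive and the second is negative, so only the first signal pair satisfies the sampled inequality.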

Lemma 1

(Jensen’s inequality [38]). For each positive definite symmetric matrix , positive real constant and vector function such that the following integral is well defined, the following holds.
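Jensen's inequality can be checked numerically. The sketch below uses the standard statement h∫x^T Q x ds ≥ (∫x ds)^T Q (∫x ds) over an interval of length h, with both integrals replaced by Riemann sums. The matrix `Q`, the sample function and the helper names are hypothetical choices made for illustration.

```python
import math

def quad_form(v, Q):
    """Return v^T Q v for a vector v and a square matrix Q (nested lists)."""
    n = len(v)
    return sum(v[i] * Q[i][j] * v[j] for i in range(n) for j in range(n))

def jensen_sides(samples, Q, ds):
    """Return (lhs, rhs) of h*int(x^T Q x) >= (int x)^T Q (int x) using Riemann sums."""
    h = ds * len(samples)
    integral_quad = sum(quad_form(x, Q) for x in samples) * ds
    integral_x = [sum(x[i] for x in samples) * ds for i in range(len(samples[0]))]
    return h * integral_quad, quad_form(integral_x, Q)

Q = [[2.0, 0.5], [0.5, 1.0]]   # symmetric positive definite (hypothetical)
ds = 0.001
samples = [[math.sin(3 * k * ds), math.exp(-k * ds)] for k in range(1000)]  # h = 1
lhs, rhs = jensen_sides(samples, Q, ds)
```

The gap `lhs - rhs` is the conservatism introduced whenever an integral term of the functional derivative is bounded with this lemma.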

Lemma 2

(Wirtinger-based integral inequality [39]). For any matrix , the following inequality holds for all continuously differentiable functions

where .
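The benefit of the Wirtinger-based bound over Jensen's inequality can be seen in a scalar example. The sketch below assumes the form usually quoted from [39]: ∫ω̇^T R ω̇ du ≥ (1/(b-a))(ω(b)-ω(a))^T R (ω(b)-ω(a)) + (3/(b-a))Ω^T R Ω, with Ω = ω(b) + ω(a) - (2/(b-a))∫ω du. The test function sin(2u), the scalar weight `r` and the function name are hypothetical.

```python
import math

def wirtinger_check(omega, d_omega, a, b, r=1.0, n=2000):
    """Compare int_a^b r*d_omega(u)^2 du with the Jensen and Wirtinger-based lower bounds."""
    du = (b - a) / n
    mids = [a + (k + 0.5) * du for k in range(n)]        # midpoint rule nodes
    lhs = sum(r * d_omega(u) ** 2 for u in mids) * du
    integral = sum(omega(u) for u in mids) * du           # int_a^b omega(u) du
    diff = omega(b) - omega(a)
    big_omega = omega(b) + omega(a) - 2.0 / (b - a) * integral
    jensen = r * diff ** 2 / (b - a)
    wirtinger = jensen + 3.0 * r * big_omega ** 2 / (b - a)
    return lhs, jensen, wirtinger

lhs, jensen, wirtinger = wirtinger_check(
    lambda u: math.sin(2 * u), lambda u: 2 * math.cos(2 * u), 0.0, 1.0
)
```

For this function the Wirtinger-based bound lies much closer to the integral than the Jensen bound does, which is the reason it leads to less conservative criteria.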

Lemma 3

(Peng–Park’s integral inequality [40,41]). For any matrix satisfying the following: , positive constants and , and a vector function such that the integrations concerned are well defined, we have the following:

where and .

Lemma 4

([42]). The following inequality applies to a positive matrix

Lemma 5

([43]). is a constant symmetric positive definite matrix. For any constant symmetric positive definite matrix , is a discrete time-varying delay with (2), vector function such that the integrations concerned are well defined, then the following is the case.

Lemma 6

([43]). For any constant matrices , , is a discrete time-varying delay with (2) and vector function such that the following integration is well defined:

where

and the following is the case Π = .

Lemma 7

([43]). Let be a vector-valued function with first-order continuous-derivative entries. For any constant matrices , then the following integral inequality holds, and is a discrete time-varying delay with (2):

where , .

Lemma 8

([44]). For a positive definite matrix and any continuously differentiable function , the following inequality holds:

where the following is the case.

3. Main Results

3.1. Finite-Time Boundedness Analysis

This subsection discusses the finite-time boundedness analysis of neutral-type neural networks with mixed time-varying delays.

We consider system (5) with delays satisfying (2) and derive new criteria via the LMI approach.

For later reference, the notation used in the following results is collected in Appendix A.

Theorem 1.

For < 1, system (5) is finite-time bounded if there exist positive definite matrices any appropriate matrices and where , positive diagonal matrices and positive real constants such that the following symmetric linear matrix inequality holds:

Then, in condition (9) is defined in Remark 1.

Proof.

First, we show that system (5) is finite-time bounded. To this end, we consider system (5), which satisfies the following.

We can rewrite system (10) to the following system:

by using the model transformation approach. Construct a Lyapunov–Krasovskii functional candidate for system (10)–(12) of the following form:

where the following is the case:

where

The time derivative of is then computed as the following.

Taking the derivative of along any system solution trajectory, we have the following.

For and , we now have the following.

It is from Lemma 6 that we have the following.

where

Using Lemmas 5 and 7, is computed as follows:

where the following is the case.

Using Lemma 1 (Jensen’s Inequality), we have the following.

Applying Lemma 8 to , we can obtain the following:

where the following is the case.

According to Lemma 4, we can obtain by performing the following.

Using Lemmas 2 and 3, an upper bound of can be obtained as follows.

Taking the time derivative of , we have the following.

Calculating yields the following.

Furthermore, for any real matrices and of compatible dimensions, we obtain

Based on (14)–(19), it is clear that the following is observed:

where the following is the case.

Then, and we are able to obtain the following.

By multiplying the above inequality by , we can obtain the following.

Integrating the two sides of the inequality (22) from 0 to t, with , we have obtained the following.

They include the following.

Note that and the following relationship can be found.

We have the following:

where the following is the case.

On the other hand, the following condition holds.

Condition indicates that for . From Definition 1, system (5) is finite-time bounded with respect to . This completes the proof. □
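The weighting step used in the proof of Theorem 1 (multiplying by an exponential factor and integrating from 0 to t) follows a standard pattern, sketched here in generic form. The symbols $V$, $\alpha > 0$, $\beta > 0$ and $w$ are placeholders chosen for illustration, since the exact terms of the functional are defined in the proof itself, and the disturbance bound of Assumption 2 is assumed here to take the form $\int_0^T w^T(t) w(t)\,dt \le \delta$.

```latex
\begin{aligned}
&\dot V(t) - \alpha V(t) \le \beta\, w^{T}(t) w(t)
\;\Longrightarrow\;
\frac{d}{dt}\bigl(e^{-\alpha t} V(t)\bigr) \le \beta\, e^{-\alpha t} w^{T}(t) w(t), \\
&e^{-\alpha t} V(t) - V(0) \le \beta \int_{0}^{t} e^{-\alpha s} w^{T}(s) w(s)\, ds \le \beta\,\delta, \\
&V(t) \le e^{\alpha t}\bigl(V(0) + \beta\,\delta\bigr) \le e^{\alpha T}\bigl(V(0) + \beta\,\delta\bigr), \qquad t \in [0, T].
\end{aligned}
```

Bounding $V(0)$ from above in terms of the initial data and $V(t)$ from below in terms of the weighted state norm then yields a condition of the finite-time boundedness type.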

Remark 1.

Condition (9) is not in standard LMI form. To express this condition as LMIs, let be positive scalars satisfying the following.

Consider the following.

3.2. Finite-Time Stability Analysis

Remark 2.

When the external disturbance is absent, system (5) reduces to the following.

By (8), we provide additional notation for finite-time stability analysis for (28).

We obtain that is the same as in Theorem 1. Then, we define the following:

and state the result as Corollary 1.

Corollary 1.

For < 1, system (28) with is finite-time stable if there exist positive symmetric matrices any appropriate matrices and , where , positive diagonal matrices and positive real constants such that the following symmetric linear matrix inequality holds:

where as described in Theorem 1.

Proof.

The proof follows the same lines as that of Theorem 1 and is therefore omitted. □

3.3. Finite-Time Passivity Analysis

This section discusses the topic of finite-time passivity analysis investigated for the following system.

Theorem 2.

For < 1, system (33) is finite-time passive if there exist positive symmetric matrices any appropriate matrices and , where , positive diagonal matrices and positive real constants such that the following symmetric linear matrix inequality holds:

where , except

Proof.

The following function is defined using the same Lyapunov–Krasovskii functional as in Theorem 1.

is shown in (35), and then the following is the case.

Then, multiplying (38) by and integrating it between 0 and T, we can obtain the following:

which implies the following.

Due to it is reasonable to obtain it from (39) and the following:

where As a result, we may infer that system (33) is finite-time passive. This completes the proof. □

Remark 3.

When and system (5) reduces to a delayed neural network, the following is the case.

By (8), we consider system (41) without the finite-time stability condition and follow the same line of proof as in Theorem 1. The system is then asymptotically stable if the following holds:

where and the parameters are as defined in Theorem 1. Then, we define the following.

4. Numerical Examples

Simulation examples are provided in this section to show the feasibility and efficiency of the theoretical results. Five examples are given to illustrate the main theoretical conclusions.

Example 1.

Consider the following matrix parameters for the neutral-type neural networks:

with the following.

Let the following be the case:

and . Using the MATLAB tools to solve LMIs (8) and (9), we obtain , indicating that the neutral system under consideration is finite-time bounded. The activation function is described by , and we allow discrete time-varying delays to satisfy and .

Example 2.

Consider the following matrix parameters for the neutral-type neural networks:

with the following.

Let the following be the case:

then . Using the MATLAB tools to solve LMIs (35) and (36), we obtain , indicating that the neutral system under consideration is finite-time passive. The activation function is described by , and we allow discrete time-varying delays to satisfy and .

Example 3.

Consider the following matrix parameters for the neutral-type neural networks:

with the following.

Let the following be the case:

and , . Using the MATLAB tools to solve LMIs (8) and (9), we obtain , indicating that the neutral system under consideration is finite-time stable. The activation function is described by , and we allow discrete time-varying delays to satisfy and .

Example 4.

Consider the following matrix parameters for the neural networks:

with the following:

then . Using the MATLAB tools to solve LMIs (35) and (36), we find that the system under consideration is finite-time passive. In addition, the results are compared with previously published studies, and the comparison shows that the stability conditions presented in this paper are less conservative. By solving Example 4 with the LMI in Remark 3, we obtain the maximum permissible upper bound for different , as shown in Table 1.

Table 1.

Allowable upper bound for various of Example 4.

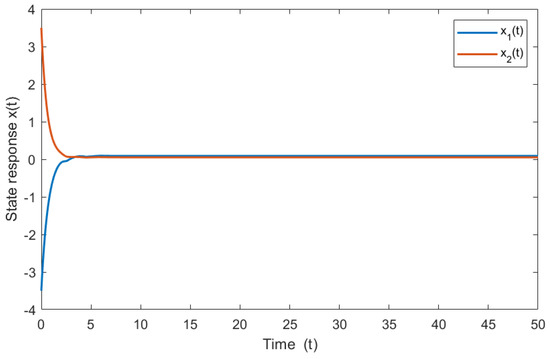

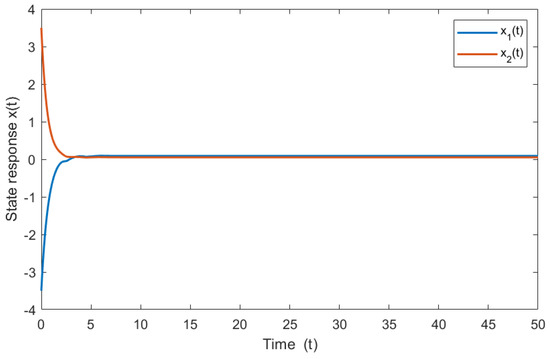

Figure 1 provides the state response of system (4) under zero input and the initial condition . The interval time-varying delays are chosen as , and the activation function is set as .

Figure 1. State response of system (4) under zero input and the initial condition . The interval time-varying delays are chosen as , and the activation function is set as .

Table 1 lists the allowable upper bounds of for various . It shows that the conclusions of Remark 3 in this study are less conservative than those in [45,46,47,48], demonstrating the effectiveness of our approach. Figure 1 shows the temporal responses of the state variables.
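State responses such as the one in Figure 1 are typically produced by numerically integrating the delayed network. The following sketch applies a forward Euler scheme to a generic delayed neural network of the form $\dot x(t) = -Ax(t) + Bf(x(t)) + Cf(x(t-h(t)))$ with a tanh activation. The model form, the matrices, the delay function and the initial condition are all hypothetical and are not the parameters of Example 4.

```python
import math

def simulate_delayed_nn(A, B, C, phi, delay, T=15.0, dt=0.01):
    """Euler integration of x'(t) = -A x + B f(x(t)) + C f(x(t - h(t))) with f = tanh.

    A is given by its diagonal; phi is the constant initial state on [-h_max, 0].
    """
    n = len(phi)
    steps = int(round(T / dt))
    history = [list(phi)]                      # history[k] approximates x(k*dt)
    for k in range(steps):
        x = history[k]
        lag = int(round(delay(k * dt) / dt))   # delay measured in time steps
        x_del = history[k - lag] if k >= lag else phi   # initial function before t = 0
        fx = [math.tanh(v) for v in x]
        fd = [math.tanh(v) for v in x_del]
        dx = [
            -A[i] * x[i]
            + sum(B[i][j] * fx[j] for j in range(n))
            + sum(C[i][j] * fd[j] for j in range(n))
            for i in range(n)
        ]
        history.append([x[i] + dt * dx[i] for i in range(n)])
    return history

# Hypothetical parameters, chosen only so that the sketch is stable.
A = [2.0, 2.0]
B = [[0.3, -0.2], [0.1, 0.4]]
C = [[0.2, 0.1], [-0.1, 0.3]]
traj = simulate_delayed_nn(A, B, C, phi=[0.5, -0.4],
                           delay=lambda t: 0.5 + 0.3 * math.sin(t))
final_state = traj[-1]
```

Forward Euler with a rounded delay index is the simplest possible choice; a smaller step size or a dedicated delay-differential-equation solver would be preferable when accuracy matters.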

Example 5.

Consider the following matrix parameters for the neural networks:

with the following:

then . The maximum delay bounds for various μ, calculated by Remark 3 and compared with existing criteria, are presented in Table 2.

Table 2.

Allowable upper bound for various of Example 5.

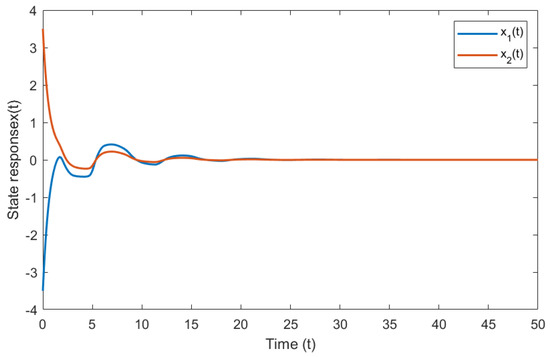

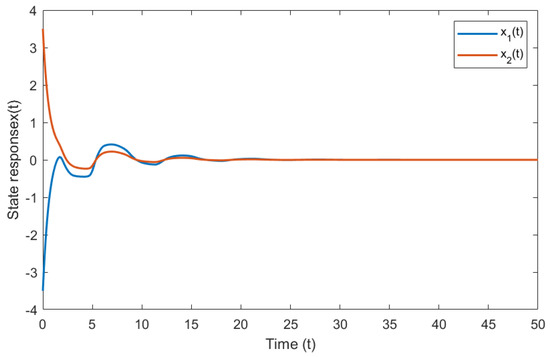

Figure 2 provides the state response of system (4) under zero input and the initial condition . The interval time-varying delays are chosen as , and the activation function is set as .

Figure 2. State response of system (4) under zero input and the initial condition . The interval time-varying delays are chosen as , and the activation function is set as .

From Table 2, it follows that Remark 3 provides significantly better results than [49,50,51,52] in the case of = 0.4 and = 0.45. However, in cases where = 0.5 and = 0.55, the results are slightly worse than in [21]. Overall, the comparison with previously published studies shows that the stability conditions presented in this paper are less conservative in most of the cases considered.

5. Conclusions

This study presented new results on finite-time passivity analysis of neutral-type neural networks with mixed time-varying delays. The delays are of distributed, discrete and neutral type, and upper bounds for the delays are available. We derived sufficient conditions for finite-time boundedness, finite-time stability and finite-time passivity, which, to the best of our knowledge, have not been reported before for this class of systems. The analysis combines a new Lyapunov–Krasovskii functional with Peng–Park’s integral inequality, a descriptor model transformation, zero equations and Wirtinger’s integral inequality. The resulting sufficient conditions are expressed as linear matrix inequalities that guarantee finite-time stability of the system. Numerical examples demonstrate the effectiveness of the results. By combining several integral terms in the Lyapunov–Krasovskii functional with integral inequalities, our criteria admit larger time-delay bounds than the previous literature in most of the cases considered (see Table 1 and Table 2). Constructing LMI variables based on integral inequalities yields less conservative stability criteria for interval time-delay systems. In future work, we expect to extend these results to other areas of application.

Author Contributions

Conceptualization, I.K., N.S. and K.M.; methodology, I.K. and N.S.; software, N.S. and I.K.; validation, N.S., T.B. and K.M.; formal analysis, K.M.; investigation, N.S., I.K., T.B. and K.M.; writing—original draft preparation, N.S., I.K. and K.M.; writing—review and editing, N.S., I.K., T.B. and K.M.; visualization, I.K. and K.M.; supervision, N.S. and K.M.; project administration, I.K. and K.M.; funding acquisition, K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Faculty of Engineering, Rajamangala University of Technology Isan Khon Kaen Campus and Research and Graduate Studies, Khon Kaen University (Research Grant RP64-8-002).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank the reviewers for their valuable comments and suggestions, which resulted in the improvement of the content of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

For where the following is the case:

References

- Wu, A.; Zeng, Z. Exponential passivity of memristive neural networks with time delays. Neural Netw. 2014, 49, 11–18.

- Gunasekaran, N.; Zhai, G.; Yu, Q. Sampled-data synchronization of delayed multi-agent networks and its application to coupled circuit. Neurocomputing 2020, 413, 499–511.

- Gunasekaran, N.; Thoiyab, N.M.; Muruganantham, P.; Rajchakit, G.; Unyong, B. Novel results on global robust stability analysis for dynamical delayed neural networks under parameter uncertainties. IEEE Access 2020, 8, 178108–178116.

- Wang, H.T.; Liu, Z.T.; He, Y. Exponential stability criterion of the switched neural networks with time-varying delay. Neurocomputing 2019, 331, 1–9.

- Syed Ali, M.; Saravanan, S. Finite-time stability for memristor-based switched neural networks with time-varying delays via average dwell time approach. Neurocomputing 2018, 275, 1637–1649.

- Pratap, A.; Raja, R.; Cao, J.; Alzabut, J.; Huang, C. Finite-time synchronization criterion of graph theory perspective fractional-order coupled discontinuous neural networks. Adv. Differ. Equ. 2020, 2020, 97.

- Pratap, A.; Raja, R.; Alzabut, J.; Dianavinnarasi, J.; Cao, J.; Rajchakit, G. Finite-time Mittag-Leffler stability of fractional-order quaternion-valued memristive neural networks with impulses. Neural Process. Lett. 2020, 51, 1485–1526.

- Li, J.; Jiang, H.; Hu, C.; Yu, J. Analysis and discontinuous control for finite-time synchronization of delayed complex dynamical networks. Chaos Solitons Fractals 2018, 114, 219–305.

- Wang, H.; Liu, P.X.; Bao, J.; Xie, X.J.; Li, S. Adaptive neural output-feedback decentralized control for large-scale nonlinear systems with stochastic disturbances. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 972–983.

- Zheng, M.; Li, L.; Peng, H.; Xiao, J.; Yang, Y.; Zhang, Y.; Zhao, H. Finite-time stability and synchronization of memristor-based fractional-order fuzzy cellular neural networks. Commun. Nonlinear Sci. Numer. Simul. 2018, 59, 272–297.

- Wu, A.; Zeng, Z. Lagrange stability of memristive neural networks with discrete and distributed delays. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 690–703.

- Bai, C.Z. Global stability of almost periodic solutions of Hopfield neural networks with neutral time-varying delays. Appl. Math. Comput. 2008, 203, 72–79.

- Syed Ali, M.; Zhu, Q.; Pavithra, S.; Gunasekaran, N. A study on <(Q,S,R),γ>-dissipative synchronisation of coupled reaction-diffusion neural networks with time-varying delays. Int. J. Syst. Sci. 2017, 49, 755–765.

- Chen, Z.; Ruan, J. Global stability analysis of impulsive Cohen-Grossberg neural networks with delay. Phys. Lett. A 2005, 345, 101–111.

- Li, W.J.; Lee, T. Hopfield neural networks for affine invariant matching. IEEE Trans. Neural Netw. Learn. Syst. 2001, 12, 1400–1410.

- Syed Ali, M.; Gunasekaran, N.; Zhu, Q. State estimation of T-S fuzzy delayed neural networks with Markovian jumping parameters using sampled-data control. Fuzzy Sets Syst. 2017, 306, 87–104.

- Syed Ali, M.; Narayanan, G.; Sevgen, S.; Shekher, V.; Arik, S. Global stability analysis of fractional-order fuzzy BAM neural networks with time delay and impulsive effects. Commun. Nonlinear Sci. Numer. Simul. 2019, 78, 104853.

- Samorn, N.; Yotha, N.; Srisilp, P.; Mukdasai, K. LMI-based results on robust exponential passivity of uncertain neutral-type neural networks with mixed interval time-varying delays via the reciprocally convex combination technique. Computation 2021, 9, 70.

- Meesuptong, B.; Mukdasai, K.; Khonchaiyaphum, I. New exponential stability criterion for neutral system with interval time-varying mixed delays and nonlinear uncertainties. Thai J. Math. 2020, 18, 333–349.

- Jian, J.; Wang, J. Stability analysis in Lagrange sense for a class of BAM neural networks of neutral type with multiple time-varying delays. Neurocomputing 2015, 149, 930–939.

- Lakshmanan, S.; Park, J.H.; Jung, H.Y.; Kwon, O.M.; Rakkiyappan, R. A delay partitioning approach to delay-dependent stability analysis for neutral type neural networks with discrete and distributed delays. Neurocomputing 2013, 111, 81–89.

- Peng, W.; Wu, Q.; Zhang, Z. LMI-based global exponential stability of equilibrium point for neutral delayed BAM neural networks with delays in leakage terms via new inequality technique. Neurocomputing 2016, 199, 103–113.

- Syed Ali, M.; Saravanakumar, R.; Cao, J. New passivity criteria for memristor-based neutral-type stochastic BAM neural networks with mixed time-varying delays. Neurocomputing 2016, 171, 1533–1547.

- Klamnoi, A.; Yotha, N.; Weera, W.; Botmart, T. Improved results on passivity analysis of neutral-type neural networks with mixed time-varying delays. J. Res. Appl. Mech. Eng. 2018, 6, 71–81.

- Ding, D.; Wang, Z.; Dong, H.; Shu, H. Distributed H∞ state estimation with stochastic parameters and nonlinearities through sensor networks: The finite-horizon case. Automatica 2012, 48, 1575–1585.

- Carrasco, J.; Baños, A.; Schaft, A.V. A passivity-based approach to reset control systems stability. Syst. Control Lett. 2010, 59, 18–24.

- Krasovskii, N.N.; Lidskii, E.A. Analytical design of controllers in systems with random attributes. Autom. Remote Control 1961, 22, 1021–1025.

- Debeljković, D.; Stojanović, S.; Jovanović, A. Finite-time stability of continuous time delay systems: Lyapunov-like approach with Jensen’s and Coppel’s inequality. Acta Polytech. Hung. 2013, 10, 135–150.

- Wang, L.; Shen, Y.; Ding, Z. Finite time stabilization of delayed neural networks. Neural Netw. 2015, 70, 74–80.

- Rajavel, S.; Samidurai, R.; Cao, J.; Alsaedi, A.; Ahmad, B. Finite-time non-fragile passivity control for neural networks with time-varying delay. Appl. Math. Comput. 2017, 297, 145–158.

- Chen, X.; He, S. Finite-time passive filtering for a class of neutral time-delayed systems. Trans. Inst. Meas. Control 2016, 1139–1145.

- Syed Ali, M.; Saravanan, S.; Zhu, Q. Finite-time stability of neutral-type neural networks with random time-varying delays. Int. J. Syst. Sci. 2017, 48, 3279–3295.

- Saravanan, S.; Syed Ali, M.; Alsaedi, A.; Ahmad, B. Finite-time passivity for neutral-type neural networks with time-varying delays. Nonlinear Anal. Model. Control 2020, 25, 206–224.

- Syed Ali, M.; Meenakshi, K.; Gunasekaran, N. Finite-time H∞ boundedness of discrete-time neural networks normbounded disturbances with time-varying delay. Int. J. Control Autom. Syst. 2017, 15, 2681–2689.

- Phanlert, C.; Botmart, T.; Weera, W.; Junsawang, P. Finite-Time Mixed H∞/passivity for neural networks with mixed interval time-varying delays using the multiple integral Lyapunov-Krasovskii functional. IEEE Access 2021, 9, 89461–89475.

- Amato, F.; Ariola, M.; Dorato, P. Finite-time control of linear systems subject to parametric uncertainties and disturbances. Automatica 2001, 37, 1459–1463.

- Song, J.; He, S. Finite-time robust passive control for a class of uncertain Lipschitz nonlinear systems with time-delays. Neurocomputing 2015, 159, 275–281.

- Kwon, O.M.; Park, M.J.; Park, J.H.; Lee, S.M.; Cha, E.J. New delay-partitioning approaches to stability criteria for uncertain neutral systems with time-varying delays. J. Frankl. Inst. 2012, 349, 2799–2823.

- Seuret, A.; Gouaisbaut, F. Wirtinger-based integral inequality: Application to time-delay system. Automatica 2013, 49, 2860–2866.

- Peng, C.; Fei, M.R. An improved result on the stability of uncertain T-S fuzzy systems with interval time-varying delay. Fuzzy Sets Syst. 2013, 212, 97–109.

- Park, P.G.; Ko, J.W.; Jeong, C.K. Reciprocally convex approach to stability of systems with time-varying delays. Automatica 2011, 47, 235–238.

- Kwon, O.M.; Park, M.J.; Park, J.H.; Lee, S.M.; Cha, E.J. Analysis on robust H∞ performance and stability for linear systems with interval time-varying state delays via some new augmented Lyapunov Krasovskii functional. Appl. Math. Comput. 2013, 224, 108–122.

- Singkibud, P.; Niamsup, P.; Mukdasai, K. Improved results on delay-range-dependent robust stability criteria of uncertain neutral systems with mixed interval time-varying delays. IAENG Int. J. Appl. Math. 2017, 47, 209–222.

- Tian, J.; Ren, Z.; Zhong, S. A new integral inequality and application to stability of time-delay systems. Appl. Math. Lett. 2020, 101, 106058.

- Kwon, O.M.; Park, J.H.; Lee, S.M.; Cha, E.J. New augmented Lyapunov-Krasovskii functional approach to stability analysis of neural networks with time-varying delays. Nonlinear Dyn. 2014, 76, 221–236.

- Zeng, H.B.; He, Y.; Wu, M.; Xiao, S.P. Stability analysis of generalized neural networks with time-varying delays via a new integral inequality. Neurocomputing 2015, 161, 148–154.

- Yang, B.; Wang, J. Stability analysis of delayed neural networks via a new integral inequality. Neural Netw. 2017, 88, 49–57.

- Hua, C.; Wang, Y.; Wu, S. Stability analysis of neural networks with time-varying delay using a new augmented Lyapunov-Krasovskii functional. Neurocomputing 2019, 332, 1–9.

- Zhu, X.L.; Yue, D.; Wang, Y. Delay-dependent stability analysis for neural networks with additive time-varying delay components. IET Control Theory Appl. 2013, 7, 354–362.

- Li, T.; Wang, T.; Song, A.; Fei, S. Combined convex technique on delay-dependent stability for delayed neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 1459–1466.

- Zhang, C.K.; He, Y.; Jiang, L.; Wu, M. Stability analysis for delayed neural networks considering both conservativeness and complexity. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 1486–1501.

- Zhang, C.K.; He, Y.; Jiang, L.; Lin, W.J.; Wu, M. Delay-dependent stability analysis of neural networks with time-varying delay: A generalized free-weighting-matrix approach. Appl. Math. Comput. 2017, 294, 102–120.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).