ANA: Ant Nesting Algorithm for Optimizing Real-World Problems

Abstract

1. Introduction

- (1)

- Proposing a novel metaheuristic algorithm for solving SOPs.

- (2)

- Integrating Pythagorean theorem into the ant nesting model for generating convenient weights that assist the algorithm in both exploration and exploitation phases.

- (3)

- Utilizing a quite different approach from PSO for updating search agent positions and testing the algorithm on several optimization benchmark functions and comparing it to the most well-known and outstanding metaheuristic algorithms like a genetic algorithm (GA), particle swarm optimization (PSO), five modified versions of PSO, dragonfly algorithm (DA), whale optimization algorithm (WOA), salp swarm algorithm (SSA), and fitness dependent optimization (FDO).

- (4)

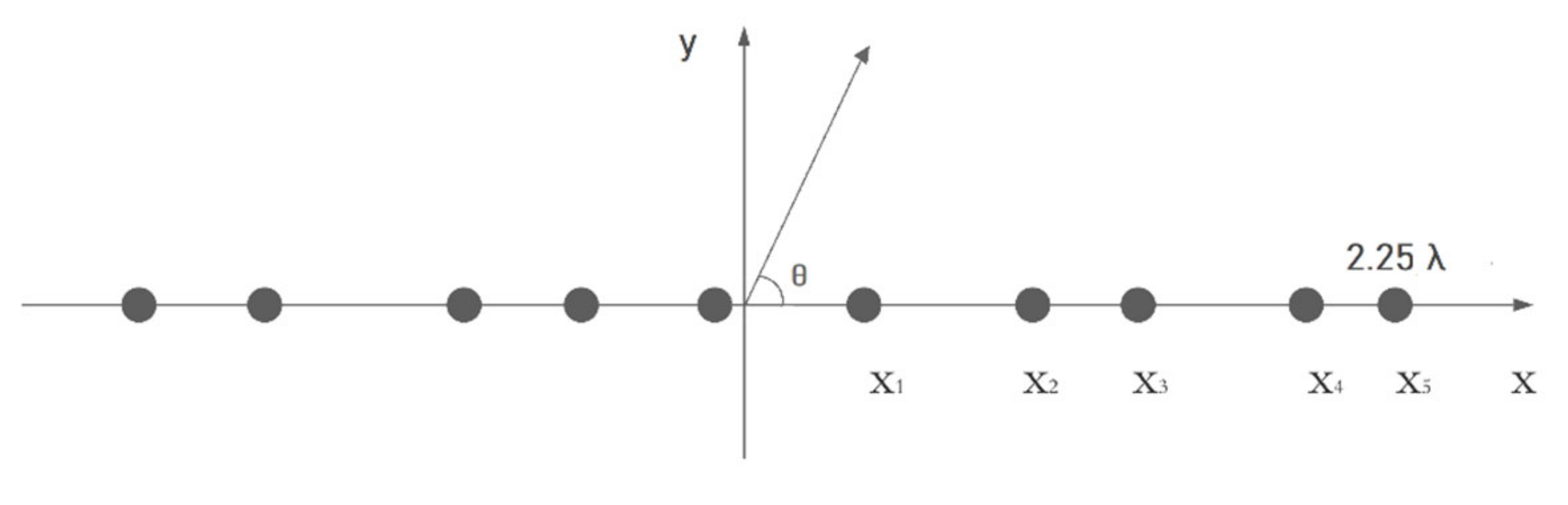

- Applying ANA algorithm for optimizing two real-world engineering problems that are antenna array design and frequency-modulated synthesis.

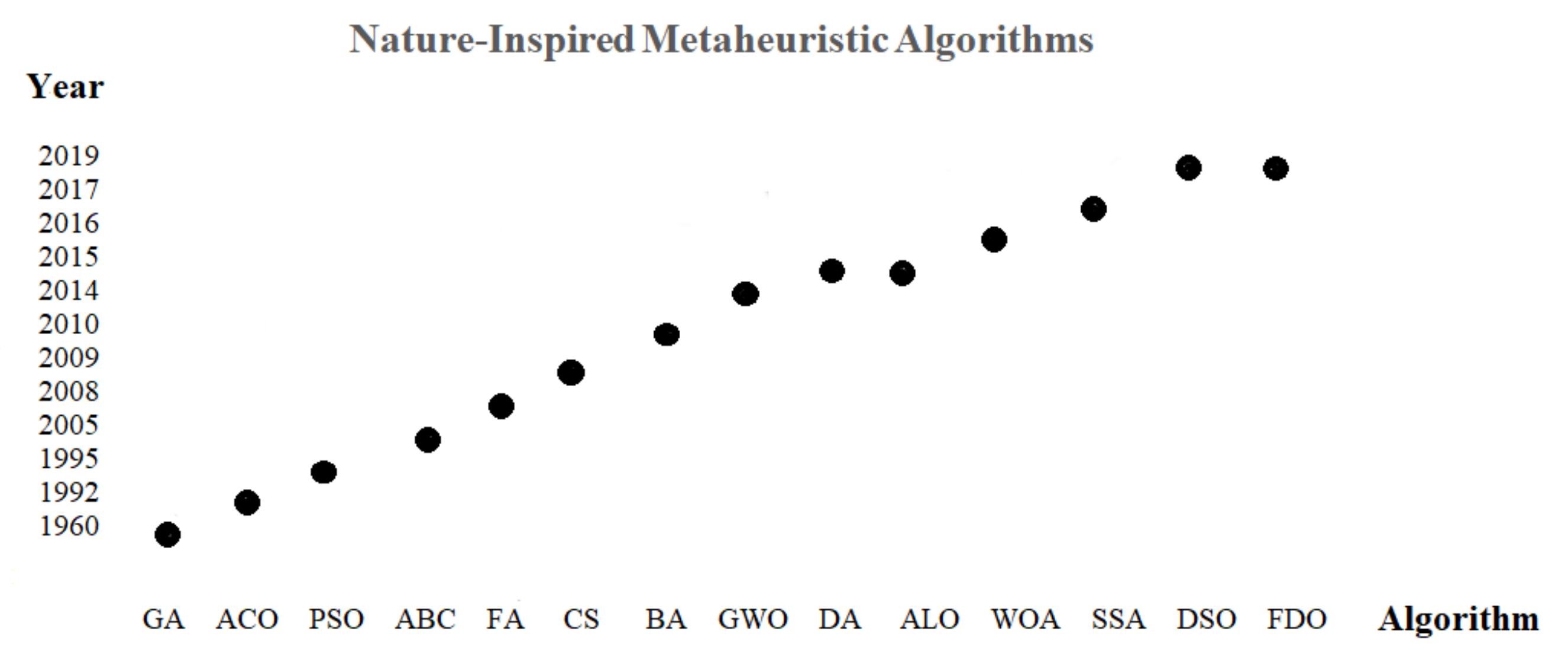

2. Nature-Inspired Metaheuristic Algorithms in Literature

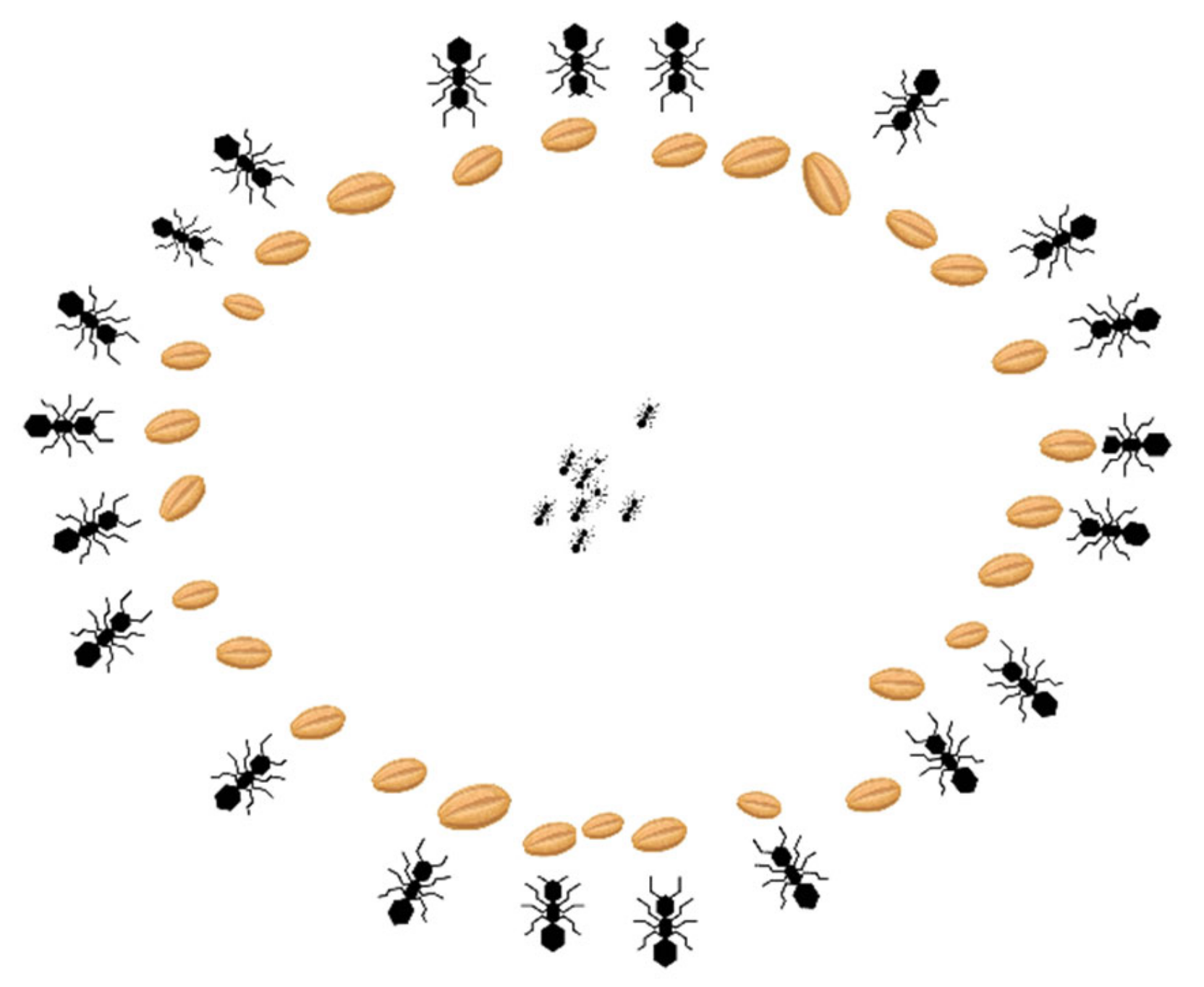

3. Ant Swarming

- Worker ants walk randomly within the nest until they encounter nestmates or stationary building material, at which point they deposit; stationary material is the major cue for depositing further building material [58].

- Each worker ant makes an independent decision about which direction to take around the queen ant for depositing [59].

- Worker ants lean towards the area with the most dropped building material to deposit [58].

- Each worker ant selects an area around the queen ant to start the bulldozing process. A decision is made when all the worker ants in the colony are bulldozing at a potential area, i.e., depositing grains in that area [58].

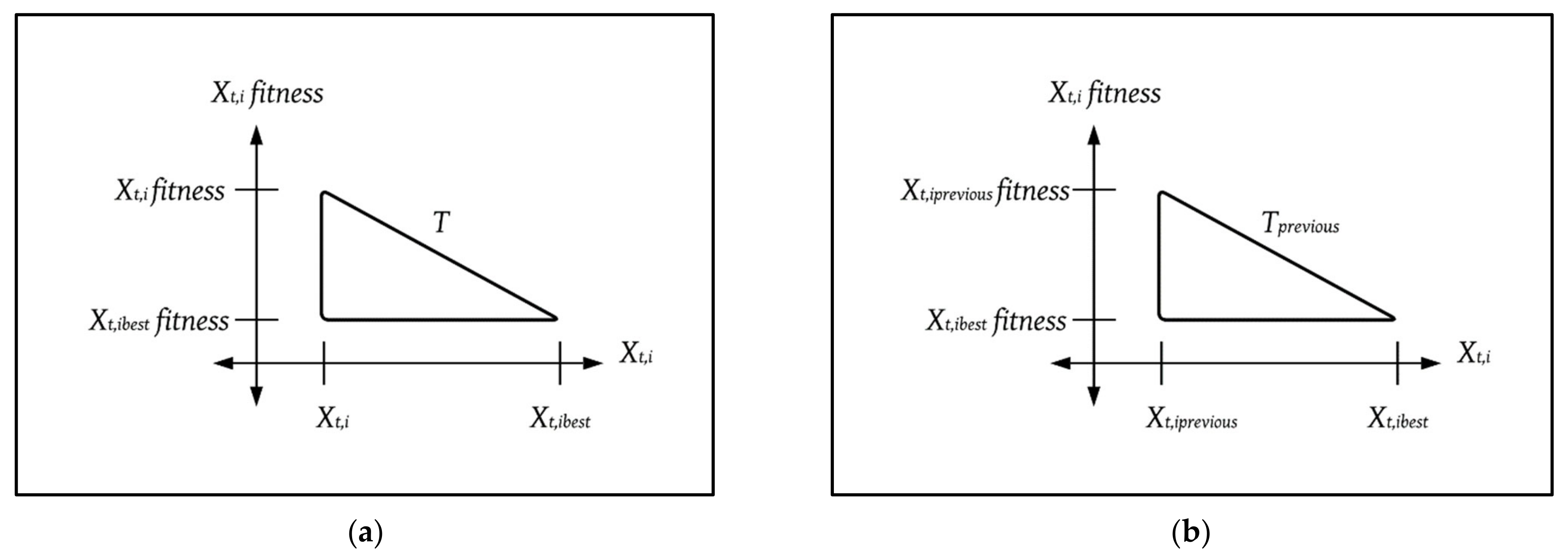

4. Ant Nesting Algorithm

4.1. Entities

4.2. Mathematical Modelling

4.3. Working Mechanism

Algorithm 1. Pseudocode of ANA for a minimization problem, without loss of generality.

    Initialize worker ant population X_i (i = 1, 2, 3, …, n)
    Initialize worker ant previous position X_{iprevious}
    while iteration (t) limit not reached
        for each artificial worker ant X_{t,i}
            find best artificial worker ant X_{t,ibest}
            generate random walk r in [−1, 1] range
            if (X_{t,i} == X_{t,ibest})
                calculate ΔX_{t+1,i} using Equation (3)
            else if (X_{t,i} == X_{t,iprevious})
                calculate ΔX_{t+1,i} using Equation (4)
            else
                calculate T using Equation (6)
                calculate T_previous using Equation (7)
                calculate dw using Equation (5) //for minimization
                calculate ΔX_{t+1,i} using Equation (2)
            end if
            calculate X_{t+1,i} using Equation (1)
            if (X_{t+1,i} fitness < X_{t,i} fitness) //for minimization
                move accepted and X_{t,i} assigned to X_{t,iprevious}
            else
                maintain current position
            end if
        end for
    end while
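The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch and not the authors' implementation: Equations (1) to (7) are not reproduced in this section, so every update rule below is an assumed stand-in, marked as such in the comments. The names `ana_minimize` and `sphere`, the per-dimension Pythagorean form of `T`, the bound clamping, and the guard against a zero `T` are all illustrative assumptions. Only the branching structure and the greedy acceptance step follow the pseudocode.

```python
import math
import random


def sphere(x):
    """Stand-in test function (assumption); minimum 0 at the origin."""
    return sum(v * v for v in x)


def ana_minimize(fitness, dim, n_ants, iterations, lower, upper, seed=0):
    rng = random.Random(seed)
    ants = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_ants)]
    previous = [list(a) for a in ants]      # previous deposition positions
    fit = [fitness(a) for a in ants]
    prev_fit = list(fit)
    initial_best = min(fit)

    for _ in range(iterations):
        for i in range(n_ants):
            b = min(range(n_ants), key=fit.__getitem__)   # index of best ant
            best, best_fit = ants[b], fit[b]
            r = rng.uniform(-1.0, 1.0)                    # random walk in [-1, 1]

            if i == b:
                # Assumed stand-in for Equation (3): random step around itself.
                delta = [r * x for x in ants[i]]
            elif ants[i] == previous[i]:
                # Assumed stand-in for Equation (4): random step towards the best.
                delta = [r * (bx - x) for bx, x in zip(best, ants[i])]
            else:
                delta = []
                for bx, x, px in zip(best, ants[i], previous[i]):
                    # Assumed stand-ins for Equations (6) and (7): hypotenuse of
                    # the position gap and the fitness gap (Pythagorean theorem).
                    t = math.hypot(bx - x, best_fit - fit[i])
                    t_prev = math.hypot(bx - px, best_fit - prev_fit[i])
                    # Assumed stand-in for Equation (5): deposition weight.
                    dw = r * (t_prev / t) if t > 0 else r
                    # Assumed stand-in for Equation (2).
                    delta.append(dw * (bx - x))

            # Assumed stand-in for Equation (1), clamped to the search range.
            candidate = [min(max(x + d, lower), upper) for x, d in zip(ants[i], delta)]
            cand_fit = fitness(candidate)

            if cand_fit < fit[i]:           # greedy acceptance, minimization
                previous[i], prev_fit[i] = ants[i], fit[i]
                ants[i], fit[i] = candidate, cand_fit
            # otherwise the ant keeps its current position

    b = min(range(n_ants), key=fit.__getitem__)
    return ants[b], fit[b], initial_best


best, best_fit, initial_best = ana_minimize(
    sphere, dim=3, n_ants=10, iterations=50, lower=-10.0, upper=10.0
)
```

Because a move is accepted only when it improves the ant's fitness, the best fitness in the population can never get worse from one iteration to the next, whatever form the update equations take.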

5. Testing and Evaluation

5.1. Standard Benchmark Functions

5.1.1. DA, PSO, and GA

5.1.2. PSO, GPSO, EPSO, LNPSO, VC-PSO, and SO-PSO

5.2. CEC-C06 2019 Benchmark Functions

5.3. Comparative Study

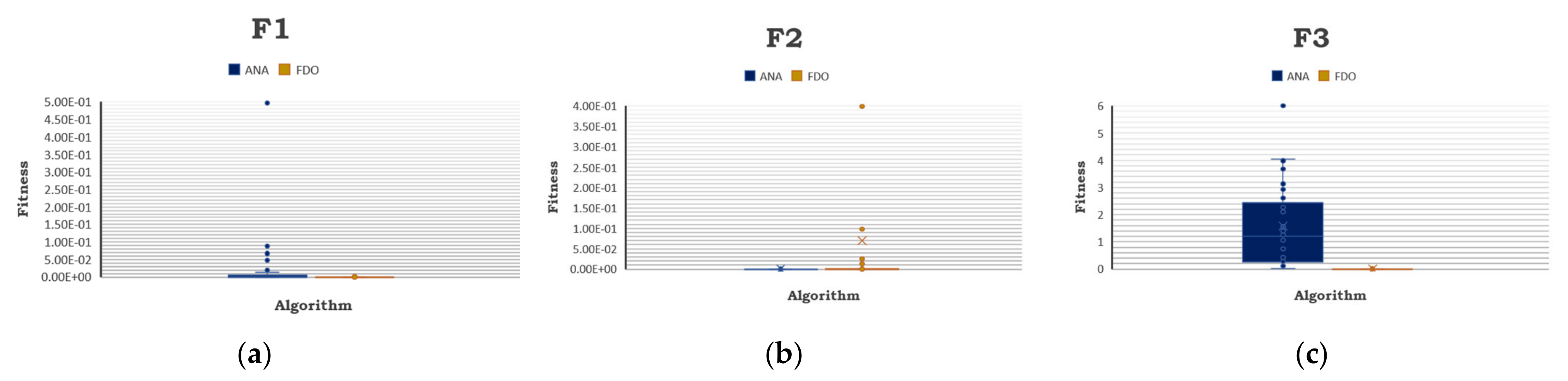

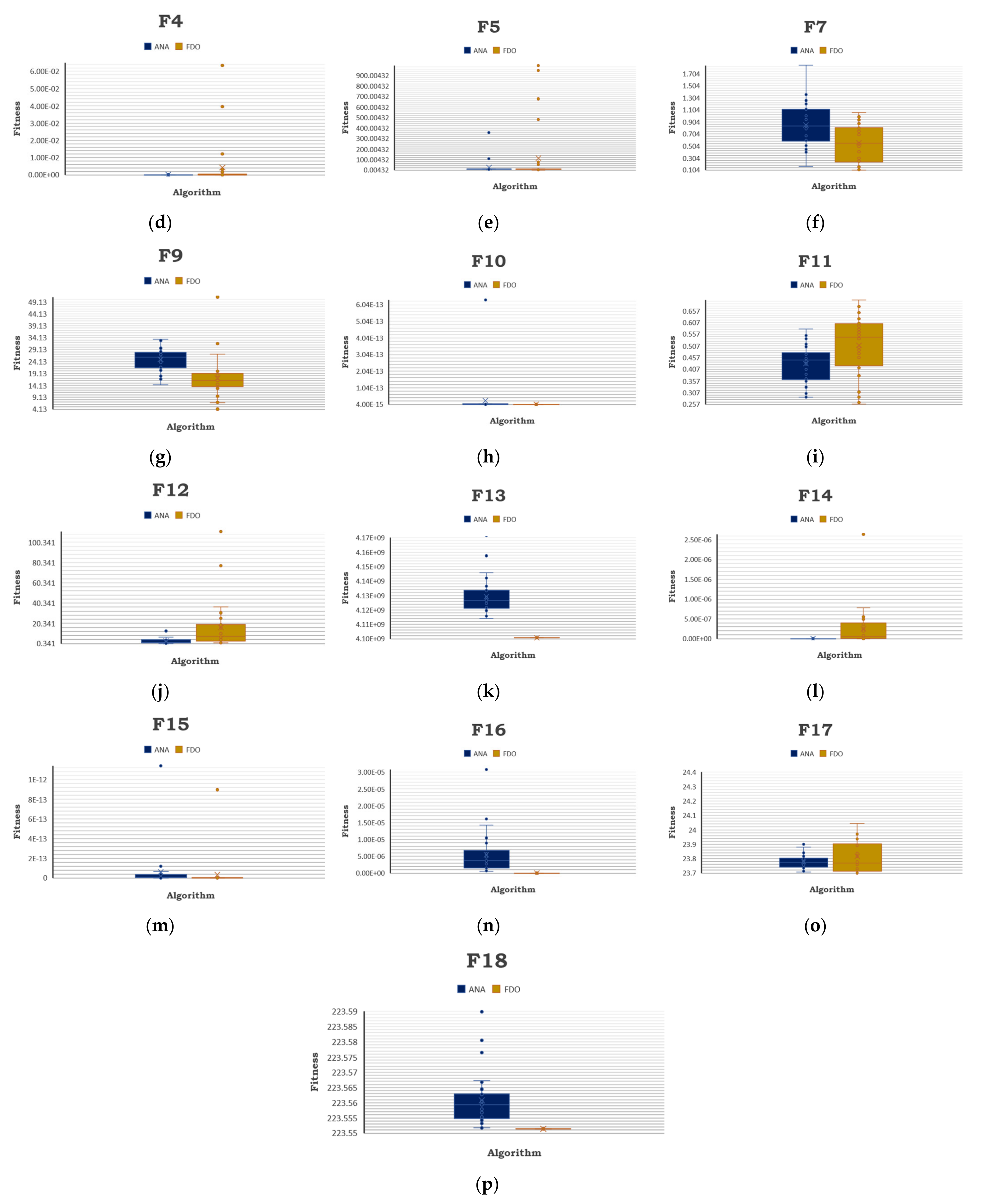

5.4. ANA versus FDO

5.5. Statistical Test

5.6. Real-World Applications of ANA

5.6.1. ANA on Aperiodic Antenna Array Design

5.6.2. ANA on Frequency-Modulated Synthesis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A

| Function | Dimension | Range | Shift Position | fmin |
|---|---|---|---|---|
| F1 | 10 | [−100, 100] | [−30, −30, … −30] | 0 |
| F2 | 10 | [−10, 10] | [−3, −3, … −3] | 0 |
| F3 | 10 | [−100, 100] | [−30, −30, … −30] | 0 |
| F4 | 10 | [−100, 100] | [−30, −30, … −30] | 0 |
| F5 | 10 | [−30, 30] | [−15, −15, … −15] | 0 |
| F6 | 10 | [−100, 100] | [−750, … −750] | 0 |
| F7 | 10 | [−1.28, 1.28] | [−0.25, … −0.25] | 0 |
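To illustrate how the Range and Shift Position columns might be applied, the following minimal sketch wraps a base function so that its optimum moves to the shift position. The function definitions are not reproduced in this table, so `sphere` is only a stand-in, and `make_shifted`, the subtraction convention for the shift, and the range check are assumptions made for illustration.

```python
def sphere(x):
    """Stand-in base function (assumption); minimum 0 at the origin."""
    return sum(v * v for v in x)


def make_shifted(base, shift, lower, upper):
    """Return a function whose optimum sits at `shift` instead of the origin."""
    def shifted(x):
        if len(x) != len(shift):
            raise ValueError("dimension mismatch")
        if any(v < lower or v > upper for v in x):
            raise ValueError("point lies outside the search range")
        # Assumed convention: evaluate the base function at x - shift.
        return base([xi - si for xi, si in zip(x, shift)])
    return shifted


dim = 10
f = make_shifted(sphere, [-30.0] * dim, lower=-100.0, upper=100.0)

at_shift = f([-30.0] * dim)   # value at the shifted optimum
at_origin = f([0.0] * dim)    # the origin is no longer the optimum
```

Under this convention the value at the shift position equals the base function's minimum, which matches an fmin of 0 for a sphere-like function.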

| Function | Dimension | Range | Shift Position | fmin |
|---|---|---|---|---|
| F8 | 10 | [−500, 500] | [−300, … −300] | −418.9829 |
| F9 | 10 | [−5.12, 5.12] | [−2, −2, … −2] | 0 |
| F10 | 10 | [−32, 32] | [0, 0, … 0] | 0 |
| F11 | 10 | [−600, 600] | [−400, … −400] | 0 |
| F12 | 10 | [−50, 50] | [−30, 30, … 30] | 0 |

| Function | Dimension | Range | fmin |
|---|---|---|---|
| F13 (CF1) | 10 | [−5, 5] | 0 |
| F14 (CF2) | 10 | [−5, 5] | 0 |
| F15 (CF3) | 10 | [−5, 5] | 0 |
| F16 (CF4) | 10 | [−5, 5] | 0 |
| F17 (CF5) | 10 | [−5, 5] | 0 |
| F18 (CF6) | 10 | [−5, 5] | 0 |

| Function No. | Function Name | Dimension | Range | fmin |
|---|---|---|---|---|
| CEC01 | Storn’s Chebyshev Polynomial Fitting Problem | 9 | [−8192, 8192] | 1 |
| CEC02 | Inverse Hilbert Matrix Problem | 16 | [−16,384, 16,384] | 1 |
| CEC03 | Lennard-Jones Minimum Energy Cluster | 18 | [−4, 4] | 1 |
| CEC04 | Rastrigin’s Function | 10 | [−100, 100] | 1 |
| CEC05 | Griewangk’s Function | 10 | [−100, 100] | 1 |
| CEC06 | Weierstrass Function | 10 | [−100, 100] | 1 |
| CEC07 | Modified Schwefel’s Function | 10 | [−100, 100] | 1 |
| CEC08 | Expanded Schaffer’s F6 Function | 10 | [−100, 100] | 1 |
| CEC09 | Happy Cat Function | 10 | [−100, 100] | 10 |
| CEC10 | Ackley Function | 10 | [−100, 100] | 10 |

| Turn | F1 ANA | F1 FDO | F2 ANA | F2 FDO | F3 ANA | F3 FDO | F4 ANA | F4 FDO | F5 ANA | F5 FDO | F7 ANA | F7 FDO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1.11 × 10⁻⁴ | 3.79 × 10⁻²⁹ | 8.86 × 10⁻⁵ | 1.95 × 10⁻⁸ | 2.391747144 | 1.69 × 10⁻¹¹ | 7.11 × 10⁻¹⁵ | 1.64 × 10⁻⁸ | 7.432984162 | 5.389371864 | 0.866804261 | 0.251164251 |
| 2 | 7.23 × 10⁻⁸ | 8.58 × 10⁻²⁸ | 3.54 × 10⁻⁵ | 0.025049009 | 3.676873939 | 2.43 × 10⁻¹³ | 0 | 1.27 × 10⁻¹² | 8.133632758 | 5.212061321 | 0.165221295 | 0.154350971 |
| 3 | 0.048192575 | 1.14 × 10⁻²⁸ | 1.50 × 10⁻⁴ | 4.08 × 10⁻⁶ | 2.60079385 | 2.85 × 10⁻⁹ | 0 | 0.063337923 | 7.689508303 | 480.5348286 | 1.202136818 | 0.186249902 |
| 4 | 2.33 × 10⁻⁵ | 8.84 × 10⁻²⁸ | 2.28 × 10⁻⁵ | 0.031798849 | 3.977493658 | 5.92 × 10⁻¹³ | 0 | 0.004086299 | 8.652707669 | 6.963351041 | 1.026315594 | 0.986077172 |
| 5 | 3.52 × 10⁻⁶ | 3.27 × 10⁻²⁴ | 4.25 × 10⁻⁵ | 2.22 × 10⁻¹⁵ | 0.153875296 | 4.75 × 10⁻⁹ | 0 | 6.39 × 10⁻⁷ | 6.566481502 | 7.547774391 | 0.617639517 | 0.69199572 |
| 6 | 0.066742805 | 4.92 × 10⁻²⁸ | 2.41 × 10⁻⁵ | 2.34 × 10⁻¹² | 0.023104804 | 5.44 × 10⁻¹¹ | 0 | 0.01211212 | 7.703774666 | 946.2636218 | 0.790594357 | 0.108666552 |
| 7 | 0.003550881 | 4.92 × 10⁻²⁸ | 1.64 × 10⁻⁵ | 8.33 × 10⁻⁶ | 1.065088764 | 1.55 × 10⁻¹¹ | 0 | 5.52 × 10⁻⁹ | 18.36487837 | 4.151222988 | 1.357209894 | 0.174620628 |
| 8 | 1.01 × 10⁻⁵ | 2.52 × 10⁻²⁸ | 1.66 × 10⁻⁵ | 2.17 × 10⁻⁵ | 1.568160542 | 6.87 × 10⁻¹⁴ | 0 | 7.77 × 10⁻⁸ | 8.018400623 | 5.380822727 | 0.517424969 | 0.797722139 |
| 9 | 3.12 × 10⁻⁷ | 1.72 × 10⁻²⁷ | 1.45 × 10⁻⁴ | 1.48 × 10⁻¹¹ | 0.102410755 | 6.46 × 10⁻¹³ | 0 | 1.06 × 10⁻⁹ | 5.536287826 | 4.778696694 | 0.840359419 | 0.284824671 |
| 10 | 5.26 × 10⁻⁶ | 6.31 × 10⁻²⁸ | 3.86 × 10⁻⁵ | 2.01 × 10⁻⁸ | 0.483393911 | 9.41 × 10⁻¹² | 0 | 0.001673928 | 8.546610565 | 3.215025725 | 0.810047666 | 0.405013575 |
| 11 | 2.78 × 10⁻⁶ | 1.14 × 10⁻²⁸ | 3.67 × 10⁻⁵ | 3.64 × 10⁻¹⁴ | 0.864879054 | 1.42 × 10⁻¹¹ | 0 | 2.51 × 10⁻¹⁰ | 7.874299055 | 0.004319718 | 1.117170129 | 0.935591962 |
| 12 | 0.019792811 | 3.45 × 10⁻²⁵ | 8.81 × 10⁻⁶ | 2.22 × 10⁻¹² | 0.431912446 | 4.05 × 10⁻¹² | 0 | 0 | 7.893741339 | 7.583576095 | 0.953578507 | 0.104218667 |
| 13 | 0.013921899 | 1.77 × 10⁻²⁸ | 2.11 × 10⁻⁶ | 3.29 × 10⁻¹⁰ | 1.196097344 | 6.57 × 10⁻¹³ | 9.24 × 10⁻¹⁴ | 9.50 × 10⁻⁹ | 8.207516834 | 6.766245837 | 0.832446794 | 0.873904075 |
| 14 | 2.47 × 10⁻⁴ | 1.01 × 10⁻²⁵ | 1.04 × 10⁻⁵ | 3.31 × 10⁻¹⁰ | 1.215643383 | 6.47 × 10⁻¹¹ | 0 | 3.71 × 10⁻⁶ | 8.370475137 | 5.806708272 | 0.417010569 | 0.445590624 |
| 15 | 1.14 × 10⁻⁵ | 2.26 × 10⁻²⁵ | 4.63 × 10⁻⁵ | 1.511854839 | 0.307561465 | 2.83 × 10⁻¹² | 0 | 8.99 × 10⁻⁶ | 7.848728655 | 76.72611459 | 1.11353184 | 0.779961586 |
| 16 | 0.496882545 | 2.08 × 10⁻²⁵ | 5.05 × 10⁻⁶ | 9.10 × 10⁻¹⁴ | 4.031404303 | 3.80 × 10⁻¹¹ | 3.55 × 10⁻¹⁵ | 1.41 × 10⁻⁴ | 24.03502558 | 4.969049398 | 1.258567091 | 0.729519408 |
| 17 | 2.26 × 10⁻⁵ | 8.84 × 10⁻²⁹ | 4.43 × 10⁻⁵ | 2.68 × 10⁻¹² | 0.222310655 | 1.18 × 10⁻¹⁰ | 0 | 9.79 × 10⁻⁴ | 105.6739992 | 4.118419197 | 0.670713808 | 0.722250032 |
| 18 | 9.52 × 10⁻⁵ | 7.83 × 10⁻²⁸ | 1.05 × 10⁻⁴ | 1.16 × 10⁻⁴ | 0.235561892 | 1.67 × 10⁻⁹ | 0 | 1.32 × 10⁻⁴ | 7.950295796 | 676.1226102 | 1.025029377 | 0.297330263 |
| 19 | 6.08 × 10⁻⁶ | 8.64 × 10⁻²⁵ | 4.88 × 10⁻⁶ | 0.398966573 | 2.271872396 | 2.33 × 10⁻¹³ | 0 | 2.28 × 10⁻⁸ | 355.4219987 | 6.054911494 | 1.214174323 | 0.171875021 |
| 20 | 3.59 × 10⁻⁴ | 3.79 × 10⁻²⁸ | 6.07 × 10⁻⁶ | 4.98 × 10⁻⁹ | 2.107150179 | 5.82 × 10⁻¹⁴ | 1.42 × 10⁻¹⁴ | 6.19 × 10⁻¹² | 8.141655326 | 9.598117721 | 0.60764254 | 0.518382895 |
| 21 | 1.39 × 10⁻⁶ | 1.64 × 10⁻²⁸ | 1.49 × 10⁻⁵ | 1.74 × 10⁻⁵ | 1.245802434 | 4.86 × 10⁻¹⁴ | 0 | 6.26 × 10⁻¹¹ | 8.085746671 | 8.081052732 | 0.944769536 | 0.838040939 |
| 22 | 9.09 × 10⁻⁶ | 1.07 × 10⁻²⁵ | 7.92 × 10⁻⁶ | 1.54 × 10⁻⁵ | 0.187935469 | 5.75 × 10⁻¹⁴ | 0 | 7.33 × 10⁻¹⁰ | 5.964614538 | 994.04003 | 0.536293549 | 0.539834308 |
| 23 | 0.027121243 | 9.72 × 10⁻²⁸ | 3.43 × 10⁻⁵ | 5.24 × 10⁻¹⁰ | 3.129001513 | 1.26 × 10⁻¹⁰ | 0 | 2.13 × 10⁻¹⁴ | 9.549734175 | 5.179255928 | 0.680443422 | 0.784514643 |
| 24 | 2.24 × 10⁻⁵ | 1.06 × 10⁻²⁴ | 1.06 × 10⁻⁵ | 5.98 × 10⁻⁴ | 2.318721348 | 5.56 × 10⁻⁹ | 1.07 × 10⁻¹⁴ | 7.72 × 10⁻⁵ | 8.193949903 | 3.072962087 | 0.447296236 | 0.887949921 |
| 25 | 4.07 × 10⁻⁵ | 6.73 × 10⁻²⁴ | 1.07 × 10⁻⁵ | 3.33 × 10⁻¹⁴ | 0.121714672 | 3.85 × 10⁻⁸ | 8.88 × 10⁻¹² | 0.039483383 | 14.59412606 | 5.38125078 | 1.142057744 | 0.494719509 |
| 26 | 1.02 × 10⁻⁵ | 7.32 × 10⁻²⁸ | 7.53 × 10⁻⁶ | 0.098319044 | 2.918241761 | 1.27 × 10⁻¹¹ | 1.07 × 10⁻¹⁴ | 5.05 × 10⁻⁸ | 8.276483401 | 9.063486988 | 0.399833358 | 0.986881941 |
| 27 | 0.088125129 | 5.05 × 10⁻²⁹ | 1.75 × 10⁻⁵ | 1.16 × 10⁻⁹ | 6.004686234 | 1.13 × 10⁻¹¹ | 0 | 3.55 × 10⁻¹⁵ | 9.092436574 | 6.344508738 | 0.594489623 | 0.106760494 |
| 28 | 2.28 × 10⁻⁴ | 3.79 × 10⁻²⁹ | 1.32 × 10⁻⁵ | 0.013051787 | 1.397954863 | 2.31 × 10⁻¹⁰ | 0 | 0.003278919 | 7.820602607 | 51.20002849 | 0.509288229 | 0.745339406 |
| 29 | 7.52 × 10⁻⁸ | 2.57 × 10⁻²³ | 1.78 × 10⁻⁵ | 2.64 × 10⁻¹³ | 0.739765656 | 6.68 × 10⁻¹¹ | 0 | 4.82 × 10⁻⁷ | 4.912117549 | 7.903719687 | 1.844587 | 0.56002937 |
| 30 | 1.19 × 10⁻⁵ | 3.22 × 10⁻²¹ | 1.72 × 10⁻⁵ | 7.28 × 10⁻¹³ | 0.271081438 | 7.99 × 10⁻⁸ | 0 | 4.64 × 10⁻⁵ | 8.730117271 | 2.699807344 | 1.003968997 | 1.057408352 |

| Turn | F9 ANA | F9 FDO | F10 ANA | F10 FDO | F11 ANA | F11 FDO | F12 ANA | F12 FDO | F13 ANA | F13 FDO | F14 ANA | F14 FDO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 29.64993919 | 12.79952836 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.536669496 | 3.08 × 10⁻³⁵ | 3.08 × 10⁻³⁵ | 2.256208702 | 4.13 × 10⁹ | 4.10 × 10⁹ | 3.08 × 10⁻³⁵ | 1.05 × 10⁻⁹ |
| 2 | 23.80324887 | 27.2700813 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.47016086 | 2.47 × 10⁻³³ | 2.47 × 10⁻³³ | 15.82823498 | 4.12 × 10⁹ | 4.10 × 10⁹ | 2.47 × 10⁻³³ | 9.27 × 10⁻⁸ |
| 3 | 25.58357807 | 21.10470211 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.288038193 | 3.59 × 10⁻²⁶ | 3.59 × 10⁻²⁶ | 30.62229993 | 4.12 × 10⁹ | 4.10 × 10⁹ | 3.59 × 10⁻²⁶ | 1.76 × 10⁻⁹ |
| 4 | 24.45921514 | 6.868915548 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.453501876 | 8.94 × 10⁻³⁴ | 8.94 × 10⁻³⁴ | 2.937099088 | 4.13 × 10⁹ | 4.10 × 10⁹ | 8.94 × 10⁻³⁴ | 4.98 × 10⁻⁸ |
| 5 | 27.33640913 | 16.91917734 | 4.00 × 10⁻¹⁵ | 7.55 × 10⁻¹⁵ | 0.477649285 | 0 | 0 | 18.26717959 | 4.14 × 10⁹ | 4.10 × 10⁹ | 0 | 3.63 × 10⁻⁷ |
| 6 | 26.14245075 | 13.7293011 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.429930625 | 0 | 0 | 20.72761646 | 4.13 × 10⁹ | 4.10 × 10⁹ | 0 | 4.88 × 10⁻⁷ |
| 7 | 27.16351739 | 13.64136498 | 6.33 × 10⁻¹³ | 4.00 × 10⁻¹⁵ | 0.45735448 | 0 | 0 | 3.707549452 | 4.13 × 10⁹ | 4.10 × 10⁹ | 0 | 6.45 × 10⁻¹⁰ |
| 8 | 21.24192442 | 18.45987669 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.578880732 | 2.47 × 10⁻³⁴ | 2.47 × 10⁻³⁴ | 7.537494555 | 4.12 × 10⁹ | 4.10 × 10⁹ | 2.47 × 10⁻³⁴ | 7.47 × 10⁻²³ |
| 9 | 30.65835276 | 13.92959125 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.514372619 | 0 | 0 | 36.38507552 | 4.13 × 10⁹ | 4.10 × 10⁹ | 0 | 7.75 × 10⁻⁷ |
| 10 | 24.77578868 | 18.32683402 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.330318389 | 8.49 × 10⁻³² | 8.49 × 10⁻³² | 25.16311633 | 4.12 × 10⁹ | 4.10 × 10⁹ | 8.49 × 10⁻³² | 1.34 × 10⁻⁷ |
| 11 | 18.03815799 | 13.92941673 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.519984074 | 3.08 × 10⁻³⁵ | 3.08 × 10⁻³⁵ | 1.053823092 | 4.12 × 10⁹ | 4.10 × 10⁹ | 3.08 × 10⁻³⁵ | 1.89 × 10⁻⁷ |
| 12 | 17.42487553 | 16.33601874 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.340353647 | 0 | 0 | 15.64424504 | 4.13 × 10⁹ | 4.10 × 10⁹ | 0 | 5.56 × 10⁻⁷ |
| 13 | 27.78577148 | 20.43700871 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.503012545 | 0 | 0 | 1.005512098 | 4.12 × 10⁹ | 4.10 × 10⁹ | 0 | 6.07 × 10⁻⁷ |
| 14 | 30.55497212 | 19.95926201 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.362357712 | 4.13 × 10⁻³² | 4.13 × 10⁻³² | 110.9137614 | 4.16 × 10⁹ | 4.10 × 10⁹ | 4.13 × 10⁻³² | 5.12 × 10⁻⁹ |
| 15 | 33.47370848 | 18.94197091 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.405573309 | 1.23 × 10⁻³⁴ | 1.23 × 10⁻³⁴ | 3.343699564 | 4.13 × 10⁹ | 4.10 × 10⁹ | 1.23 × 10⁻³⁴ | 2.66 × 10⁻¹⁰ |
| 16 | 27.57864812 | 7.016369273 | 7.55 × 10⁻¹⁵ | 7.55 × 10⁻¹⁵ | 0.550530947 | 6.97 × 10⁻²¹ | 6.97 × 10⁻²¹ | 18.58481895 | 4.12 × 10⁹ | 4.10 × 10⁹ | 6.97 × 10⁻²¹ | 1.69 × 10⁻¹¹ |
| 17 | 22.07756327 | 16.91428894 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.452340051 | 7.70 × 10⁻³⁴ | 7.70 × 10⁻³⁴ | 77.1872071 | 4.12 × 10⁹ | 4.10 × 10⁹ | 7.70 × 10⁻³⁴ | 5.02 × 10⁻⁷ |
| 18 | 28.19367857 | 16.14777042 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.355658197 | 4.01 × 10⁻³⁴ | 4.01 × 10⁻³⁴ | 3.79085804 | 4.13 × 10⁹ | 4.10 × 10⁹ | 4.01 × 10⁻³⁴ | 2.04 × 10⁻⁸ |
| 19 | 27.12853068 | 51.1308107 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.303665389 | 1.23 × 10⁻³⁴ | 1.23 × 10⁻³⁴ | 11.27948275 | 4.13 × 10⁹ | 4.10 × 10⁹ | 1.23 × 10⁻³⁴ | 2.58 × 10⁻⁷ |
| 20 | 27.03229743 | 13.73732476 | 1.47 × 10⁻¹⁴ | 4.00 × 10⁻¹⁵ | 0.434220836 | 2.45 × 10⁻²⁸ | 2.45 × 10⁻²⁸ | 3.201621381 | 4.12 × 10⁹ | 4.10 × 10⁹ | 2.45 × 10⁻²⁸ | 6.04 × 10⁻³³ |
| 21 | 23.98052652 | 12.93481639 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.453990137 | 2.37 × 10⁻²⁹ | 2.37 × 10⁻²⁹ | 2.24235298 | 4.17 × 10⁹ | 4.10 × 10⁹ | 2.37 × 10⁻²⁹ | 5.78 × 10⁻⁸ |
| 22 | 30.48681497 | 16.18708655 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.448005501 | 1.43 × 10⁻³⁰ | 1.43 × 10⁻³⁰ | 18.02894669 | 4.12 × 10⁹ | 4.10 × 10⁹ | 1.43 × 10⁻³⁰ | 5.55 × 10⁻⁷ |
| 23 | 20.61803459 | 18.90882319 | 7.55 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.366715922 | 0 | 0 | 7.29987667 | 4.13 × 10⁹ | 4.10 × 10⁹ | 0 | 4.66 × 10⁻²⁷ |
| 24 | 21.55669391 | 13.93157013 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.443166004 | 3.08 × 10⁻³⁵ | 3.08 × 10⁻³⁵ | 2.820756579 | 4.13 × 10⁹ | 4.10 × 10⁹ | 3.08 × 10⁻³⁵ | 7.95 × 10⁻⁸ |
| 25 | 20.45554822 | 4.13272208 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.36216298 | 0 | 0 | 4.134924531 | 4.15 × 10⁹ | 4.10 × 10⁹ | 0 | 6.67 × 10⁻⁹ |
| 26 | 16.73090762 | 9.523122913 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.477300194 | 9.00 × 10⁻³³ | 9.00 × 10⁻³³ | 1.897965144 | 4.13 × 10⁹ | 4.10 × 10⁹ | 9.00 × 10⁻³³ | 8.84 × 10⁻¹⁰ |
| 27 | 21.69321924 | 20.66968085 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.385521001 | 8.35 × 10⁻³⁰ | 8.35 × 10⁻³⁰ | 20.48011935 | 4.11 × 10⁹ | 4.10 × 10⁹ | 8.35 × 10⁻³⁰ | 2.64 × 10⁻⁶ |
| 28 | 27.08886055 | 14.50815015 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.441443826 | 1.14 × 10⁻²⁶ | 1.14 × 10⁻²⁶ | 6.724962686 | 4.14 × 10⁹ | 4.10 × 10⁹ | 1.14 × 10⁻²⁶ | 6.40 × 10⁻⁸ |
| 29 | 32.81824491 | 31.63298352 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.474952227 | 6.16 × 10⁻³⁴ | 6.16 × 10⁻³⁴ | 9.99826971 | 4.12 × 10⁹ | 4.10 × 10⁹ | 6.16 × 10⁻³⁴ | 2.92 × 10⁻¹⁰ |
| 30 | 14.31758698 | 13.14186244 | 4.00 × 10⁻¹⁵ | 4.00 × 10⁻¹⁵ | 0.288049224 | 3.08 × 10⁻³⁵ | 3.08 × 10⁻³⁵ | 2.066754282 | 4.13 × 10⁹ | 4.10 × 10⁹ | 3.08 × 10⁻³⁵ | 6.01 × 10⁻¹⁴ |

| Turn | F15 ANA | F15 FDO | F16 ANA | F16 FDO | F17 ANA | F17 FDO | F18 ANA | F18 FDO |
|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 2.22 × 10⁻¹⁶ | 4.82 × 10⁻⁶ | 9.99 × 10⁻¹⁶ | 23.78881433 | 23.68277601 | 223.5535953 | 223.5513726 |
| 2 | 1.15 × 10⁻¹⁴ | 0 | 1.43 × 10⁻⁵ | 1.11 × 10⁻¹⁵ | 23.80232686 | 23.93678471 | 223.5667589 | 223.5513726 |
| 3 | 9.99 × 10⁻¹⁶ | 9.99 × 10⁻¹⁶ | 4.20 × 10⁻⁶ | 9.99 × 10⁻¹⁶ | 23.77803764 | 23.69035899 | 223.5553026 | 223.5513726 |
| 4 | 6.28 × 10⁻¹⁴ | 1.11 × 10⁻¹⁶ | 6.74 × 10⁻⁷ | 1.33 × 10⁻¹⁵ | 23.74335581 | 23.73681148 | 223.5581154 | 223.5513726 |
| 5 | 1.82 × 10⁻¹⁴ | 8.96 × 10⁻¹³ | 1.34 × 10⁻⁶ | 1.33 × 10⁻¹⁵ | 23.77743535 | 23.7435614 | 223.5626506 | 223.5513726 |
| 6 | 6.22 × 10⁻¹⁵ | 1.11 × 10⁻¹⁶ | 1.61 × 10⁻⁵ | 9.99 × 10⁻¹⁶ | 23.79436261 | 23.71556709 | 223.5598048 | 223.5513726 |
| 7 | 8.22 × 10⁻¹⁵ | 1.11 × 10⁻¹⁶ | 1.03 × 10⁻⁶ | 9.99 × 10⁻¹⁶ | 23.71566546 | 23.68432366 | 223.5578919 | 223.5513726 |
| 8 | 1.44 × 10⁻¹³ | 5.55 × 10⁻¹⁶ | 3.48 × 10⁻⁶ | 8.88 × 10⁻¹⁶ | 23.87966915 | 23.70108012 | 223.5612475 | 223.5513726 |
| 9 | 1.11 × 10⁻¹⁶ | 1.11 × 10⁻¹⁶ | 1.69 × 10⁻⁶ | 7.77 × 10⁻¹⁶ | 23.70607016 | 23.93939471 | 223.5542233 | 223.5513726 |
| 10 | 4.44 × 10⁻¹⁶ | 1.33 × 10⁻¹⁵ | 6.06 × 10⁻⁶ | 1.22 × 10⁻¹⁵ | 23.71951226 | 23.83709351 | 223.5604607 | 223.5513726 |
| 11 | 1.22 × 10⁻¹⁵ | 9.99 × 10⁻¹⁶ | 3.76 × 10⁻⁶ | 7.77 × 10⁻¹⁶ | 23.7136252 | 23.93896182 | 223.5635372 | 223.5513726 |
| 12 | 3.75 × 10⁻¹⁴ | 1.11 × 10⁻¹⁶ | 5.76 × 10⁻⁶ | 6.66 × 10⁻¹⁶ | 23.89952076 | 23.69320027 | 223.5517005 | 223.5513726 |
| 13 | 1.26 × 10⁻¹³ | 0 | 9.02 × 10⁻⁶ | 5.55 × 10⁻¹⁶ | 23.83970207 | 23.94535677 | 223.5764026 | 223.5513726 |
| 14 | 3.00 × 10⁻¹⁵ | 3.33 × 10⁻¹⁶ | 1.58 × 10⁻⁶ | 7.77 × 10⁻¹⁶ | 23.79218864 | 23.76833148 | 223.5804875 | 223.5513726 |
| 15 | 3.11 × 10⁻¹⁵ | 4.44 × 10⁻¹⁶ | 1.51 × 10⁻⁶ | 1.22 × 10⁻¹⁵ | 23.74107639 | 23.68135525 | 223.5578101 | 223.5513726 |
| 16 | 3.30 × 10⁻¹⁴ | 3.33 × 10⁻¹⁵ | 8.91 × 10⁻⁶ | 1.33 × 10⁻¹⁵ | 23.75687297 | 23.6900798 | 223.5595445 | 223.5513726 |
| 17 | 1.19 × 10⁻¹³ | 0 | 1.04 × 10⁻⁵ | 1.11 × 10⁻¹⁵ | 23.85584942 | 23.81447635 | 223.551743 | 223.5513726 |
| 18 | 1.14 × 10⁻¹² | 2.22 × 10⁻¹⁶ | 3.24 × 10⁻⁶ | 5.55 × 10⁻¹⁶ | 23.71853807 | 23.73052044 | 223.5573346 | 223.5513726 |
| 19 | 5.55 × 10⁻¹⁵ | 0 | 1.04 × 10⁻⁶ | 8.88 × 10⁻¹⁶ | 23.74365598 | 23.97056993 | 223.5600951 | 223.5513726 |
| 20 | 3.76 × 10⁻¹⁴ | 6.66 × 10⁻¹⁶ | 5.37 × 10⁻⁷ | 8.88 × 10⁻¹⁶ | 23.79912171 | 23.84108883 | 223.5606669 | 223.5513726 |
| 21 | 1.78 × 10⁻¹⁴ | 2.55 × 10⁻¹⁵ | 5.21 × 10⁻⁶ | 9.99 × 10⁻¹⁶ | 23.73634589 | 24.43502039 | 223.5672378 | 223.5513726 |
| 22 | 8.88 × 10⁻¹⁶ | 1.78 × 10⁻¹⁵ | 3.08 × 10⁻⁵ | 5.55 × 10⁻¹⁶ | 23.74978395 | 23.76997022 | 223.5547583 | 223.5513726 |
| 23 | 3.77 × 10⁻¹⁵ | 1.11 × 10⁻¹⁶ | 3.72 × 10⁻⁶ | 1.22 × 10⁻¹⁵ | 23.81821264 | 23.7822576 | 223.5545055 | 223.5513726 |
| 24 | 1.71 × 10⁻¹⁴ | 4.77 × 10⁻¹⁵ | 1.88 × 10⁻⁶ | 1.33 × 10⁻¹⁵ | 23.77440552 | 23.75868399 | 223.5644214 | 223.5513726 |
| 25 | 2.22 × 10⁻¹⁶ | 2.11 × 10⁻¹⁵ | 3.64 × 10⁻⁶ | 8.88 × 10⁻¹⁶ | 23.80311496 | 23.77651152 | 223.5593722 | 223.5513726 |
| 26 | 7.77 × 10⁻¹⁶ | 1.11 × 10⁻¹⁶ | 1.40 × 10⁻⁶ | 5.55 × 10⁻¹⁶ | 23.7715509 | 24.04397935 | 223.553155 | 223.5513726 |
| 27 | 6.66 × 10⁻¹⁶ | 3.33 × 10⁻¹⁶ | 5.15 × 10⁻⁶ | 4.44 × 10⁻¹⁶ | 23.72388179 | 23.89049499 | 223.5593623 | 223.5513726 |
| 28 | 9.44 × 10⁻¹⁵ | 4.44 × 10⁻¹⁶ | 1.29 × 10⁻⁶ | 7.77 × 10⁻¹⁶ | 23.76062509 | 23.7717154 | 223.5567037 | 223.5513726 |
| 29 | 9.21 × 10⁻¹⁵ | 2.22 × 10⁻¹⁶ | 2.75 × 10⁻⁶ | 9.99 × 10⁻¹⁶ | 23.79965306 | 23.76744563 | 223.5547438 | 223.5513726 |
| 30 | 5.55 × 10⁻¹⁶ | 2.22 × 10⁻¹⁶ | 9.19 × 10⁻⁶ | 1.33 × 10⁻¹⁵ | 23.84596893 | 23.76024475 | 223.589931 | 223.5513726 |

| Algorithm | F1 | F2 | F3 | F4 | F5 | F7 | F9 | F10 |
|---|---|---|---|---|---|---|---|---|
| ANA | 8.75 × 10⁻¹¹ | 1.65376 × 10⁻⁶ | 0.00229666 | 9.48 × 10⁻¹² | 5.40 × 10⁻¹¹ | 0.659017 | 0.581344 | 1.10 × 10⁻¹¹ |
| FDO | 9.34 × 10⁻¹² | 4.45 × 10⁻¹¹ | 1.09 × 10⁻¹⁰ | 2.47 × 10⁻¹⁰ | 1.58 × 10⁻⁹ | 0.0223175 | 4.79981 × 10⁻⁵ | 4.59 × 10⁻¹¹ |

| Algorithm | F11 | F12 | F13 | F14 | F15 | F16 | F17 | F18 |
|---|---|---|---|---|---|---|---|---|
| ANA | 0.384774 | 7.40037 × 10⁻⁶ | 4.32195 × 10⁻⁵ | 8.86 × 10⁻¹² | 7.55 × 10⁻¹¹ | 2.19701 × 10⁻⁶ | 0.149867 | 6.46068 × 10⁻⁵ |
| FDO | 0.0515488 | 1.39 × 10⁻⁷ | NaN | 9.95 × 10⁻⁹ | 9.53 × 10⁻¹² | 0.0560245 | 0.000011896 | 4.27 × 10⁻¹³ |

| F1 | F2 | F3 | F4 | F5 | F7 | F9 | F10 |
|---|---|---|---|---|---|---|---|
| 1.33 × 10⁻¹ | 0.18407295 | 5.73 × 10⁻⁸ | 9.35 × 10⁻² | 8.25 × 10⁻² | 0.89784614 | 0.36480241 | 2.91 × 10⁻¹ |

| F11 | F12 | F13 | F14 | F15 | F16 | F17 | F18 |
|---|---|---|---|---|---|---|---|
| 0.03998253 | 0.03998253 | 2.7128 × 10⁻⁵ | 8.29 × 10⁻³ | 5.58 × 10⁻¹ | 0.00033967 | 0.0225408 | 4.3528 × 10⁻⁵ |

References

- Russell, S.J.; Norvig, P.; Davis, E. Artificial Intelligence: A Modern Approach, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2010. [Google Scholar]

- Cooper, D. Heuristics for Scheduling Resource-Constrained Projects: An Experimental Investigation. Manag. Sci. 1976, 22, 1186–1194. [Google Scholar] [CrossRef]

- Yang, X.-S. Nature-Inspired Optimization Algorithms, 1st ed.; Elsevier: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Lin, M.-H.; Tsai, J.-F.; Yu, C.-S. A Review of Deterministic Optimization Methods in Engineering and Management. Math. Probl. Eng. 2012, 2012, 756023. [Google Scholar] [CrossRef]

- Blake, A. Comparison of the Efficiency of Deterministic and Stochastic Alizorithms for Visual Reconstruction. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 2–12. [Google Scholar] [CrossRef]

- Pierre, C.; Rennard, J.-P. Stochastic Optimization Algorithms. In Handbook of Research on Nature Inspired Computing for Economics and Management; IGI Global: Hershey, PA, USA, 2006; pp. 28–44. [Google Scholar]

- Francisco, M.; Revollar, S.; Vega, P.; Lamanna, R. A comparative study of deterministic and stochastic optimization methods for integrated design of processes. IFAC Proc. Vol. 2005, 38, 335–340. [Google Scholar] [CrossRef]

- Valadi, J.; Siarry, P. Applications of Metaheuristics in Process Engineering, 1st ed.; Springer International Publishing: Cham, Switzerland, 2014. [Google Scholar]

- Tzanetos, A.; Dounias, G. An Application-Based Taxonomy of Nature Inspired INTELLIGENT Algorithms. Chios. 2019. Available online: http://mde-lab.aegean.gr/images/stories/docs/reportnii2019.pdf (accessed on 22 January 2020).

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef]

- Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence, 1st ed.; University of Michigan Press: Ann Arbor, MI, USA, 1975. [Google Scholar]

- Younas, I. Using Genetic Algorithms for Large Scale Optimization of Assignment, Planning and Rescheduling Problems; KTH Royal Institute of Technology: Stockholm, Sweden, 2014. [Google Scholar]

- Dorigo, M. Optimization, Learning and Natural Algorithms; Politecnico di Milano: Milano, Italy, 1992. [Google Scholar]

- Gambardella, L.M.; Dorigo, M. An Ant Colony System Hybridized with a New Local Search for the Sequential Ordering Problem. INFORMS J. Comput. 2000, 12, 237–255. [Google Scholar] [CrossRef]

- Gambardella, L.M.; Taillard, É.; Agazzi, G. MACS-VRPTW: A Multiple Ant Colony System for Vehicle Routing Problems with Time Windows. In New Ideas in Optimization; McGraw-Hill: London, UK, 1999; pp. 63–76. [Google Scholar]

- Liang, Y.-C.; Smith, A. An Ant Colony Optimization Algorithm for the Redundancy Allocation Problem (RAP). IEEE Trans. Reliab. 2004, 53, 417–423. [Google Scholar] [CrossRef]

- De A Silva, R.; Ramalho, G. Ant system for the set covering problem. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics. e-Systems and e-Man for Cybernetics in Cyberspace (Cat.No.01CH37236), Tucson, AZ, USA, 7–10 October 2001. [Google Scholar]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948. [Google Scholar]

- Poli, R. Analysis of the Publications on the Applications of Particle Swarm Optimisation. J. Artif. Evol. Appl. 2008, 2008, 685175. [Google Scholar] [CrossRef]

- Karaboğa, D. An Idea Based on Honey Bee Swarm for Numerical Optimization. 2005. Available online: https://abc.erciyes.edu.tr/pub/tr06_2005.pdf (accessed on 22 January 2020).

- Singh, A. An artificial bee colony algorithm for the leaf-constrained minimum spanning tree problem. Appl. Soft Comput. 2009, 9, 625–631. [Google Scholar] [CrossRef]

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471. [Google Scholar] [CrossRef]

- Zhang, Y.; Wu, L. Artificial Bee Colony for Two Dimensional Protein Folding. Adv. Electr. Eng. Syst. 2012, 1, 19–23. [Google Scholar]

- Crawford, B.; Soto, R.; Cuesta, R.; Paredes, F. Application of the Artificial Bee Colony Algorithm for Solving the Set Covering Problem. Sci. World J. 2014, 2014, 189164. [Google Scholar] [CrossRef]

- Hossain, M.; El-Shafie, A. Application of artificial bee colony (ABC) algorithm in search of optimal release of Aswan High Dam. J. Phys. Conf. Ser. 2013, 423, 012001. [Google Scholar] [CrossRef]

- Yang, X.-S. Nature-Inspired Metaheuristic Algorithms; Luniver Press: London, UK, 2010. [Google Scholar]

- Almasi, O.N.; Rouhani, M. A new fuzzy membership assignment and model selection approach based on dynamic class centers for fuzzy SVM family using the firefly algorithm. Turk. J. Electr. Eng. Comput. Sci. 2016, 24, 1797–1814. [Google Scholar] [CrossRef]

- Lones, M.A. Metaheuristics in nature-inspired algorithms. In Proceedings of the Companion Publication of the 2014 Annual Conference on Genetic and Evolutionary Computation, Vancouver, BC, Canada, 12–16 July 2014; pp. 1419–1422. [Google Scholar]

- Weyland, D. A critical analysis of the harmony search algorithm—How not to solve sudoku. Oper. Res. Perspect. 2015, 2, 97–105. [Google Scholar] [CrossRef]

- Yang, X.-S.; Deb, S. Cuckoo Search via Lévy Flights; IEEE: Piscataway, NJ, USA, 2009; pp. 210–214. [Google Scholar]

- Tian, S.; Cheng, H.; Zhang, L.; Hong, S.; Sun, T.; Liu, L.; Zeng, P. Application of Cuckoo Search algorithm in power network planning. In Proceedings of the 2015 5th International Conference on Electric Utility Deregulation and Restructuring and Power Technologies (DRPT), Changsha, China, 26–29 November 2015; pp. 604–608. [Google Scholar]

- Sopa, M.; Angkawisittpan, N. An Application of Cuckoo Search Algorithm for Series System with Cost and Multiple Choices Constraints. Procedia Comput. Sci. 2016, 86, 453–456. [Google Scholar] [CrossRef][Green Version]

- Yang, X.-S.; Deb, S. Engineering optimisation by cuckoo search. Int. J. Math. Model. Numer. Optim. 2010, 1, 330–343. [Google Scholar] [CrossRef]

- Yang, X.-S. A New Metaheuristic Bat-Inspired Algorithm. Nat. Inspired Coop. Strateg. Optim. 2010, 284, 65–74. [Google Scholar]

- Alihodzic, A.; Tuba, M. Bat Algorithm (BA) for Image Thresholding. Baltimore. 2013. Available online: http://www.wseas.us/e-library/conferences/2013/Baltimore/TESIMI/TESIMI-50.pdf (accessed on 22 January 2020).

- Raghavan, S.; Sarwesh, P.; Marimuthu, C.; Chandrasekaran, K. Bat algorithm for scheduling workflow applications in cloud. In Proceedings of the 2015 International Conference on Electronic Design, Computer Networks & Automated Verification (EDCAV), Shillong, India, 29–30 January 2015; pp. 139–144. [Google Scholar]

- Mirjalili, S.; Mirjalili, S.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 2015, 27, 1053–1073. [Google Scholar] [CrossRef]

- Mirjalili, S. The Ant Lion Optimizer. Adv. Eng. Softw. 2015, 83, 80–98. [Google Scholar] [CrossRef]

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67. [Google Scholar] [CrossRef]

- Touma, H.J. Study of The Economic Dispatch Problem on IEEE 30-Bus System using Whale Optimization Algorithm. Int. J. Eng. Technol. Sci. 2016, 3, 11–18. [Google Scholar] [CrossRef]

- Sayed, G.I.; Darwish, A.; Hassanien, A.E.; Pan, J.-S. Breast Cancer Diagnosis Approach Based on Meta-Heuristic Optimization Algorithm Inspired by the Bubble-Net Hunting Strategy of Whales. In International Conference on Genetic and Evolutionary Computing; Springer: Cham, Switzerland, 2016; Volume 536, pp. 306–313. [Google Scholar]

- Kumar, C.S.; Rao, R.S.; Cherukuri, S.K.; Rayapudi, S.R. A Novel Global MPP Tracking of Photovoltaic System based on Whale Optimization Algorithm. Int. J. Renew. Energy Dev. 2016, 5, 225. [Google Scholar] [CrossRef]

- Aljarah, I.; Faris, H.; Mirjalili, S. Optimizing connection weights in neural networks using the whale optimization algorithm. Soft Comput. 2016, 22, 1–15. [Google Scholar] [CrossRef]

- Hassanien, A.E.; Abd Elfattah, M.; Aboulenin, S.; Schaefer, G.; Zhu, S.Y.; Korovin, I. Historic handwritten manuscript binarisation using whale optimization. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016. [Google Scholar]

- Dao, T.-K.; Pan, T.-S.; Pan, J.-S. A multi-objective optimal mobile robot path planning based on whale optimization algorithm. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 337–342. [Google Scholar]

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191. [Google Scholar] [CrossRef]

- Shamsaldin, A.S.; AlRashid, R.; Agha, R.A.A.-R.; Al-Salihi, N.K.; Mohammadi, M. Donkey and smuggler optimization algorithm: A collaborative working approach to path finding. J. Comput. Des. Eng. 2019, 6, 562–583. [Google Scholar] [CrossRef]

- Abdullah, J.M.; Rashid, T.A. Fitness Dependent Optimizer: Inspired by the Bee Swarming Reproductive Process. IEEE Access 2019, 6, 43473–43486. [Google Scholar] [CrossRef]

- Muhammed, D.A.; Saeed, S.A.M.; Rashid, T.A. Improved Fitness-Dependent Optimizer Algorithm. IEEE Access 2020, 8, 19074–19088. [Google Scholar] [CrossRef]

- Pant, M.; Thangaraj, R.; Abraham, A. Particle Swarm Optimization: Performance Tuning and Empirical Analysis. In Foundations of Computational Intelligence; Springer: Berlin, Germany, 2009; Volume 3, pp. 101–128.

- Ahmed, A.M.; Rashid, T.A.; Saeed, S.A.M. Dynamic Cat Swarm Optimization algorithm for backboard wiring problem. Neural Comput. Appl. 2021, 33, 13981–13997.

- Mohammed, H.; Rashid, T. A novel hybrid GWO with WOA for global numerical optimization and solving pressure vessel design. Neural Comput. Appl. 2020, 32, 14701–14718.

- Maeterlinck, M. The Life of the White Ant; Dodd, Mead & Company: London, UK, 1939.

- Werber, B. Empire of the Ants; Le Livre de Poche: Paris, France, 1991.

- Hölldobler, B.; Wilson, E. The Ants; Belknap Press: Berlin, Germany, 1990.

- Hölldobler, B.; Wilson, E. Journey to the Ants: A Story of Scientific Exploration, 1st ed.; Harvard University Press: Cambridge, MA, USA, 1994.

- Franks, N.R.; Deneubourg, J.-L. Self-organizing nest construction in ants: Individual worker behavior and the nest’s dynamics. Anim. Behav. 1997, 54, 779–796.

- Sumpter, D.J.T. Structures. In Collective Animal Behavior; Princeton University Press: Princeton, NJ, USA, 2010; pp. 151–172.

- Katoch, S.; Singh Chauhan, S.; Kumar, V. A review on genetic algorithm: Past, present, and future. Multimed. Tools Appl. 2021, 80, 8091–8126.

- Meraihi, Y.; Ramdane-Cherif, A.; Acheli, D.; Mahseur, M. Dragonfly algorithm: A comprehensive review and applications. Neural Comput. Appl. 2020, 32, 16625–16646.

- Price, K.; Awad, N.; Suganthan, P. The 100-Digit Challenge: Problem Definitions and Evaluation Criteria for the 100-Digit Challenge Special Session and Competition on Single Objective Numerical Optimization; Nanyang Technological University: Singapore, 2018.

- Cortés-Toro, E.M.; Crawford, B.; Gómez-Pulido, J.A.; Soto, R.; Lanza-Gutiérrez, J.M. A New Metaheuristic Inspired by the Vapour-Liquid Equilibrium for Continuous Optimization. Appl. Sci. 2018, 8, 2080.

- LaTorre, A.; Molina, D.; Osaba, E.; Poyatos, J.; Del Ser, J.; Herrera, F. A prescription of methodological guidelines for comparing bio-inspired optimization algorithms. Swarm Evol. Comput. 2021, 67, 100973.

- Baggett, B.M. Optimization of Aperiodically Spaced Phased Arrays for Wideband Applications. 3 May 2011. Available online: https://vtechworks.lib.vt.edu/bitstream/handle/10919/32532/Baggett_BMW_T_2011_2.pdf?sequence=1&isAllowed=y (accessed on 12 May 2020).

- Diao, J.; Kunzler, J.W.; Warnick, K.F. Sidelobe Level and Aperture Efficiency Optimization for Tiled Aperiodic Array Antennas. IEEE Trans. Antennas Propag. 2017, 65, 7083–7090.

- Lebret, H.; Boyd, S. Antenna array pattern synthesis via convex optimization. IEEE Trans. Signal Process. 1997, 45, 526–532.

- Jin, N.; Rahmat-Samii, Y. Advances in Particle Swarm Optimization for Antenna Designs: Real-Number, Binary, Single-Objective and Multiobjective Implementations. IEEE Trans. Antennas Propag. 2007, 55, 556–567.

- Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems. Available online: https://sci2s.ugr.es/sites/default/files/files/TematicWebSites/EAMHCO/contributionsCEC11/RealProblemsTech-Rep.pdf (accessed on 30 June 2020).

| Nature | Algorithm |
|---|---|
| Worker ant | Search agent |
| Deposition position | Potential solution |
| Deposition position specification | Fitness function |
| Worker ant’s decision factor | Deposition weight |
| Fittest deposition position | Optimum solution |
| Stationary stone and/or nestmate | Previous deposition position |

| Notation | Description |
|---|---|
| $t$ | Current iteration |
| $i$ | Current worker ant |
| $m$ | Number of iterations |
| $n$ | Number of worker ants |
| $X_{t,i}$ | Worker ant’s current deposition position |
| $X_{t,i\,\mathrm{best}}$ | Worker ant’s local best deposition position |
| $X_{t,i\,\mathrm{previous}}$ | Worker ant’s previous deposition position |
| $X_{t,i\,\mathrm{fitness}}$ | Worker ant’s current deposition position’s fitness |
| $X_{t,i\,\mathrm{best\,fitness}}$ | Worker ant’s local best deposition position fitness |
| $X_{t,i\,\mathrm{previous\,fitness}}$ | Worker ant’s previous deposition position fitness |
| $X_{t+1,i}$ | Worker ant’s new deposition position |
| $\Delta X_{t+1,i}$ | Worker ant’s deposition position’s rate of change |
| $T$ | Worker ant’s current deposition tendency rate |
| $T_{\mathrm{previous}}$ | Worker ant’s previous deposition tendency rate |
| $dw$ | Deposition weight |
| $r$ | Random number in the [−1, 1] range |
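
Using the notation above, the greedy acceptance step at the end of Algorithm 1 can be sketched as follows. This is a minimal illustration for a minimization problem, not the authors' implementation: the `WorkerAnt` class, the `accept_move` helper, and the `sphere` objective are hypothetical names introduced here, and the candidate position is assumed to have already been computed with Equation (1).

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class WorkerAnt:
    """Hypothetical container for one search agent."""
    position: List[float]  # X_{t,i}: current deposition position
    previous: List[float]  # X_{t,i previous}: previous deposition position


def accept_move(ant: WorkerAnt, candidate: List[float],
                fitness: Callable[[List[float]], float]) -> bool:
    """Accept the candidate X_{t+1,i} only if it improves the fitness (minimization)."""
    if fitness(candidate) < fitness(ant.position):
        ant.previous = ant.position  # the old position becomes the previous one
        ant.position = candidate     # the move is accepted
        return True
    return False                     # otherwise the current position is maintained


def sphere(x: List[float]) -> float:
    """Illustrative objective (an assumption, not one of the paper's benchmarks)."""
    return sum(v * v for v in x)


ant = WorkerAnt(position=[1.0, -2.0], previous=[1.5, -2.5])
accepted = accept_move(ant, [0.5, -1.0], sphere)  # better fitness: accepted
rejected = accept_move(ant, [3.0, 3.0], sphere)   # worse fitness: position kept
```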

| Test Function | ANA Mean | ANA Standard Deviation | DA [38] Mean | DA [38] Standard Deviation | PSO [38] Mean | PSO [38] Standard Deviation | GA [38] Mean | GA [38] Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| F1 | 0.016299162 | 0.062457134 | 2.85 × 10^−18 | 7.16 × 10^−18 | 4.20 × 10^−18 | 1.31 × 10^−17 | 748.5972 | 324.9262 |
| F2 | 2.36 × 10^−5 | 2.15 × 10^−5 | 1.49 × 10^−5 | 3.76 × 10^−5 | 0.003154 | 0.009811 | 5.971358 | 1.533102 |
| F3 | 1.239172335 | 1.035406371 | 1.29 × 10^−6 | 2.10 × 10^−6 | 0.001891 | 0.003311 | 1949.003 | 994.2733 |
| F4 | 2.37 × 10^−6 | 8.86 × 10^−16 | 0.000988 | 0.002776 | 0.001748 | 0.002515 | 21.16304 | 2.605406 |
| F5 | 14.95306041 | 30.63521072 | 7.600558 | 6.786473 | 63.45331 | 80.12726 | 133307.1 | 85007.62 |
| F7 | 0.770696384 | 0.366042632 | 0.010293 | 0.004691 | 0.005973 | 0.003583 | 0.166872 | 0.072571 |
| F9 | 25.46221873 | 4.313900987 | 16.01883 | 9.479113 | 10.44724 | 7.879807 | 25.51886 | 6.66936 |
| F10 | 5.54 × 10^−15 | 2.37 × 10^−15 | 0.23103 | 0.487053 | 0.280137 | 0.601817 | 9.498785 | 1.271393 |
| F11 | 0.411189712 | 0.073239096 | 0.193354 | 0.073495 | 0.083463 | 0.035067 | 7.719959 | 3.62607 |
| F12 | 3.219956841 | 2.52657309 | 0.031101 | 0.098349 | 8.57 × 10^−11 | 2.71 × 10^−10 | 1858.502 | 5820.215 |
| F13 | 1.76 × 10^−23 | 9.47 × 10^−23 | 103.742 | 91.24364 | 150 | 135.4006 | 130.0991 | 21.32037 |
| F14 | 4.26 × 10^−14 | 1.54 × 10^−13 | 193.0171 | 80.6332 | 188.1951 | 157.2834 | 116.0554 | 19.19351 |
| F15 | 4.89 × 10^−6 | 3.31 × 10^−6 | 458.2962 | 165.3724 | 263.0948 | 187.1352 | 383.9184 | 36.60532 |
| F16 | 23.76092355 | 0.048390796 | 596.6629 | 171.0631 | 466.5429 | 180.9493 | 503.0485 | 35.79406 |
| F17 | 223.5622125 | 0.008813889 | 229.9515 | 184.6095 | 136.1759 | 160.0187 | 118.438 | 51.00183 |
| F18 | 31.51015225 | 0.020777872 | 679.588 | 199.4014 | 741.6341 | 206.7296 | 544.1018 | 13.30161 |
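
Because all of these benchmarks are minimization problems, the per-function ranking of the four algorithms follows directly from the mean values in the table above: the smallest mean ranks first. The sketch below shows this for F1 and F13. The `rank_ascending` helper is a hypothetical name, and sharing a rank between tied means (competition ranking) is an assumption about how ties are handled.

```python
from typing import Dict


def rank_ascending(means: Dict[str, float]) -> Dict[str, int]:
    """Rank algorithms by mean result, 1 = smallest (best for minimization).

    Tied means share the same rank (competition ranking: 1, 2, 2, 4).
    """
    ordered = sorted(means.values())
    return {name: ordered.index(value) + 1 for name, value in means.items()}


# Mean values for F1 and F13, copied from the table above.
f1_means = {"ANA": 0.016299162, "DA": 2.85e-18, "PSO": 4.20e-18, "GA": 748.5972}
f13_means = {"ANA": 1.76e-23, "DA": 103.742, "PSO": 150.0, "GA": 130.0991}

f1_ranks = rank_ascending(f1_means)
f13_ranks = rank_ascending(f13_means)
print(f1_ranks)   # {'ANA': 3, 'DA': 1, 'PSO': 2, 'GA': 4}
print(f13_ranks)  # {'ANA': 1, 'DA': 2, 'PSO': 4, 'GA': 3}
```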

| Test Function | Statistic | ANA (This Work) | PSO [51] | GPSO [51] | EPSO [51] | LNPSO [51] | VC-PSO [51] | SO-PSO [51] |
|---|---|---|---|---|---|---|---|---|
| F1 | Mean | 0 | 1.17 × 10^−45 | 1.11 × 10^−45 | 1.17 × 10^−45 | 1.11 × 10^−45 | 1.17 × 10^−108 | 1.51 × 10^−108 |
| F1 | Standard deviation | 0 | 5.22 × 10^−46 | 4.76 × 10^−46 | 5.22 × 10^−46 | 4.76 × 10^−46 | 4.36 × 10^−108 | 4.46 × 10^−108 |
| F5 | Mean | 14.7067849 | 22.19173 | 9.99284 | 8.995165 | 4.405738 | 6.30326 | 6.81079 |
| F5 | Standard deviation | 0.17792416 | 1.62 × 10^4 | 3.16891 | 3.959364 | 4.121244 | 3.99428 | 3.76973 |
| F7 | Mean | 0.84120263 | 8.681602 | 0.63602 | 0.380297 | 0.537461 | 0.410042 | 0.806175 |
| F7 | Standard deviation | 0.55592343 | 9.001534 | 0.29658 | 0.281234 | 0.285361 | 0.294763 | 0.868211 |
| F9 | Mean | 1.12 × 10^−7 | 22.33916 | 9.75054 | 12.17397 | 23.50713 | 9.99929 | 8.95459 |
| F9 | Standard deviation | 3.32 × 10^−7 | 15.93204 | 5.43379 | 9.274301 | 15.30457 | 4.08386 | 2.65114 |
| F10 | Mean | 5.42 × 10^−15 | 3.48 × 10^−18 | 3.14 × 10^−18 | 3.37 × 10^−18 | 3.37 × 10^−18 | 5.47 × 10^−19 | 4.59 × 10^−19 |
| F10 | Standard deviation | 1.74 × 10^−15 | 8.36 × 10^−19 | 8.60 × 10^−19 | 8.60 × 10^−19 | 8.60 × 10^−19 | 1.78 × 10^−18 | 1.54 × 10^−18 |
| F11 | Mean | 0.92650251 | 0.031646 | 0.00475 | 0.011611 | 0.011009 | 0.00147 | 0.001847 |
| F11 | Standard deviation | 0.02222257 | 0.025322 | 0.01267 | 0.019728 | 0.019186 | 0.00469 | 0.004855 |

| Test Function | ANA Mean | ANA Standard Deviation | DA [49] Mean | DA [49] Standard Deviation | WOA [49] Mean | WOA [49] Standard Deviation | SSA [49] Mean | SSA [49] Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| CEC01 | - | - | 5.43 × 10^10 | 6.69 × 10^10 | 4.11 × 10^10 | 5.42 × 10^10 | 6.05 × 10^9 | 4.75 × 10^9 |
| CEC02 | 4 | 2.87 × 10^−14 | 78.0368 | 87.7888 | 17.3495 | 0.0045 | 18.3434 | 0.0005 |
| CEC03 | 13.70240422 | 2.01 × 10^−11 | 13.7026 | 0.0007 | 13.7024 | 0 | 13.7025 | 0.0003 |
| CEC04 | 38.50887822 | 10.07245727 | 344.356 | 414.098 | 394.675 | 248.563 | 41.6936 | 22.2191 |
| CEC05 | 1.224598709 | 0.114632394 | 2.5572 | 0.3245 | 2.7342 | 0.2917 | 2.2084 | 0.1064 |
| CEC06 | - | - | 9.8955 | 1.6404 | 10.7085 | 1.0325 | 6.0798 | 1.4873 |
| CEC07 | 116.5962143 | 8.825046006 | 578.953 | 329.398 | 490.684 | 194.832 | 410.396 | 290.556 |
| CEC08 | 5.472814997 | 0.429461877 | 6.8734 | 0.5015 | 6.909 | 0.4269 | 6.3723 | 0.5862 |
| CEC09 | 2.000963996 | 0.00341781 | 6.0467 | 2.871 | 5.9371 | 1.6566 | 3.6704 | 0.2362 |
| CEC10 | 2.718281828 | 4.44 × 10^−16 | 21.2604 | 0.1715 | 21.2761 | 0.1111 | 21.04 | 0.078 |

| Test Function | ANA | DA | PSO | GA |
|---|---|---|---|---|
| F1 | 3 | 1 | 2 | 4 |
| F2 | 2 | 1 | 3 | 4 |
| F3 | 3 | 1 | 2 | 4 |
| F4 | 1 | 2 | 3 | 4 |
| F5 | 2 | 1 | 3 | 4 |
| F7 | 4 | 2 | 1 | 3 |
| F9 | 3 | 2 | 1 | 4 |
| F10 | 1 | 2 | 3 | 4 |
| F11 | 3 | 2 | 1 | 4 |
| F12 | 3 | 2 | 1 | 4 |
| F13 | 1 | 2 | 4 | 3 |
| F14 | 1 | 4 | 3 | 2 |
| F15 | 1 | 4 | 2 | 3 |
| F16 | 1 | 4 | 2 | 3 |
| F17 | 3 | 4 | 2 | 1 |
| F18 | 1 | 3 | 4 | 2 |

| Rank | ANA | DA | PSO | GA |
|---|---|---|---|---|
| First | 7 | 4 | 4 | 1 |
| Second | 2 | 7 | 5 | 2 |
| Third | 6 | 1 | 5 | 4 |
| Fourth | 1 | 4 | 2 | 9 |

| Test Function | ANA | PSO | GPSO | EPSO | LNPSO | VC-PSO | SO-PSO |
|---|---|---|---|---|---|---|---|
| F1 | 1 | 6 | 4 | 6 | 4 | 2 | 3 |
| F5 | 6 | 7 | 5 | 4 | 1 | 2 | 3 |
| F7 | 6 | 7 | 4 | 1 | 3 | 2 | 5 |
| F9 | 1 | 6 | 3 | 5 | 7 | 4 | 2 |
| F10 | 7 | 6 | 3 | 4 | 4 | 2 | 1 |
| F11 | 7 | 6 | 3 | 5 | 4 | 1 | 2 |

| Rank | ANA | PSO | GPSO | EPSO | LNPSO | VC-PSO | SO-PSO |
|---|---|---|---|---|---|---|---|
| First | 2 | 0 | 0 | 1 | 1 | 1 | 1 |
| Second | 0 | 0 | 0 | 0 | 0 | 4 | 2 |
| Third | 0 | 0 | 3 | 0 | 1 | 0 | 2 |
| Fourth | 0 | 0 | 2 | 2 | 3 | 1 | 0 |
| Fifth | 0 | 0 | 1 | 2 | 0 | 0 | 1 |
| Sixth | 2 | 4 | 0 | 1 | 0 | 0 | 0 |
| Seventh | 2 | 2 | 0 | 0 | 1 | 0 | 0 |

| Test Function Type | Total Ranking | Total Ranking/No. of Functions | Average Ranking (1–4) |
|---|---|---|---|
| Unimodal | 15 | 15/6 | 2.50 |
| Multimodal | 10 | 10/4 | 2.50 |
| Composite | 8 | 8/6 | 1.33 |
| Total | 33 | 33/16 | 2.06 |
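
The averages in the table above can be reproduced from ANA's per-function ranks against DA, PSO, and GA. The sketch below assumes that F1–F5 and F7 are the unimodal functions, F9–F12 the multimodal ones, and F13–F18 the composite ones. This grouping is inferred from the function counts and totals in the table and is not stated in this section. The names `ana_ranks` and `average_rank` are introduced here for illustration only.

```python
from typing import Dict, List

# ANA's rank on each function, grouped by (assumed) function type.
ana_ranks: Dict[str, List[int]] = {
    "Unimodal": [3, 2, 3, 1, 2, 4],   # F1, F2, F3, F4, F5, F7
    "Multimodal": [3, 1, 3, 3],       # F9, F10, F11, F12
    "Composite": [1, 1, 1, 1, 3, 1],  # F13, F14, F15, F16, F17, F18
}


def average_rank(ranks: List[int]) -> float:
    """Total ranking divided by the number of functions."""
    return sum(ranks) / len(ranks)


totals = {kind: sum(ranks) for kind, ranks in ana_ranks.items()}
averages = {kind: round(average_rank(ranks), 2) for kind, ranks in ana_ranks.items()}

all_ranks = [rank for ranks in ana_ranks.values() for rank in ranks]
overall_total = sum(all_ranks)                       # 33 over 16 functions
overall_average = round(average_rank(all_ranks), 2)  # 2.06

print(totals, averages, overall_total, overall_average)
```

A lower average means a better overall position among the four algorithms, so under this grouping ANA's strongest results are on the composite functions.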

| Test Function | Student’s t-Test | Welch’s t-Test | Wilcoxon Signed-Rank Test |
|---|---|---|---|
| F1 | 0.066202 | 0.137803 | 1.86 × 10^−9 |
| F2 | 0.092068 | 0.189324 | 0.685047 |
| F3 | 0.00001 | 3.0678 × 10^−6 | 1.86 × 10^−9 |
| F4 | 0.046755 | 0.0988506 | 3.73 × 10^−9 |
| F5 | 0.04671 | 0.0976895 | 0.404495 |
| F7 | 0.000517 | 0.00104329 | 0.00761214 |
| F9 | 0.000021 | 5.827 × 10^−5 | 1.061 × 10^−5 |
| F10 | 0.5 | 0.295841 | 0.00559672 |
| F11 | 0.001898 | 0.00403645 | 0.00322299 |
| F12 | 0.001298 | 0.00372609 | 5.1446 × 10^−6 |
| F13 | 0.00001 | 1.95 × 10^−13 | 1.2508 × 10^−6 |
| F14 | - | 0.0120538 | 3.73 × 10^−9 |
| F15 | 0.5 | 0.536272 | 0.00021761 |
| F16 | 0.00001 | 4.0245 × 10^−5 | 1.86 × 10^−9 |
| F17 | 0.100256 | 0.203807 | 0.761065 |
| F18 | 0.00001 | 1.3746 × 10^−6 | 1.86 × 10^−9 |
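
For readers who want to see what a Welch's t-test computes, the sketch below derives the test statistic and the Welch–Satterthwaite degrees of freedom from summary statistics alone. It is an illustration under stated assumptions, not a reproduction of the values above: this section does not say which pair of algorithms was compared or how many runs were used, so the ANA and DA results on F1 serve only as example inputs and the sample size of 30 runs per algorithm is hypothetical. The helper name `welch_t` is also introduced here. Converting the statistic to a p-value additionally requires the CDF of Student's t distribution, which is omitted.

```python
import math
from typing import Tuple


def welch_t(mean1: float, sd1: float, n1: int,
            mean2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Unlike Student's t-test, the two samples are not assumed to have
    equal variances.
    """
    var_of_mean1 = sd1 ** 2 / n1  # squared standard error of sample 1
    var_of_mean2 = sd2 ** 2 / n2  # squared standard error of sample 2
    t = (mean1 - mean2) / math.sqrt(var_of_mean1 + var_of_mean2)
    df = (var_of_mean1 + var_of_mean2) ** 2 / (
        var_of_mean1 ** 2 / (n1 - 1) + var_of_mean2 ** 2 / (n2 - 1)
    )
    return t, df


RUNS = 30  # hypothetical number of independent runs per algorithm

# Example inputs: ANA and DA mean and standard deviation on F1.
t_stat, dof = welch_t(0.016299162, 0.062457134, RUNS,
                      2.85e-18, 7.16e-18, RUNS)
print(f"t = {t_stat:.3f}, degrees of freedom = {dof:.1f}")
```

Because the second sample's variance is negligible here, the degrees of freedom collapse to roughly one less than the size of the first sample, which is the case in which Welch's test differs most from the pooled-variance Student's test.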

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hama Rashid, D.N.; Rashid, T.A.; Mirjalili, S. ANA: Ant Nesting Algorithm for Optimizing Real-World Problems. Mathematics 2021, 9, 3111. https://doi.org/10.3390/math9233111
