Automatic Convexity Deduction for Efficient Function’s Range Bounding

Abstract

1. Introduction

- $\mathbb{R}$: the set of real numbers;

- $\mathbb{Z}$: the set of integers;

- $\mathbb{N}$: the set of positive integers (natural numbers);

- $\mathbb{I}(\mathbb{R})$: the set of all intervals in $\mathbb{R}$;

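- $\boldsymbol{x} = [\underline{x}, \overline{x}]$: intervals are denoted in bold font;

- $R_f(\boldsymbol{x}) = \{f(x) : x \in \boldsymbol{x}\}$: the range of the function $f$ over the interval $\boldsymbol{x}$;

- $\boldsymbol{f}(\boldsymbol{x})$: an interval extension of a function $f$, i.e., a mapping $\boldsymbol{f}: \mathbb{I}(\mathbb{R}) \to \mathbb{I}(\mathbb{R})$ such that $R_f(\boldsymbol{x}) \subseteq \boldsymbol{f}(\boldsymbol{x})$ for any $\boldsymbol{x} \in \mathbb{I}(\mathbb{R})$. Note that a function $f$ may have many different interval extensions;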

- $f \nearrow$: $f$ is non-decreasing on $\mathbb{R}$, or on an interval if one is additionally specified;

- $f \searrow$: $f$ is non-increasing on $\mathbb{R}$, or on an interval if one is additionally specified.
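To make the notion of an interval extension concrete, the following minimal Python sketch implements a few interval operations and the natural interval extension of a sample function. The class `Interval`, the sample function `f(x) = x*x - x`, and the helper `sampled_range` are illustrative assumptions that do not come from the paper, and outward rounding is ignored.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi]; outward rounding is ignored in this sketch."""
    lo: float
    hi: float

    def __add__(self, other: "Interval") -> "Interval":
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other: "Interval") -> "Interval":
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other: "Interval") -> "Interval":
        products = [self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi]
        return Interval(min(products), max(products))

    def contains(self, other: "Interval") -> bool:
        return self.lo <= other.lo and other.hi <= self.hi


def f(x: float) -> float:
    """Sample real function (an assumption chosen for illustration)."""
    return x * x - x


def f_natural(x: Interval) -> Interval:
    """Natural interval extension: each real operation becomes its interval counterpart."""
    return x * x - x


def sampled_range(func: Callable[[float], float], x: Interval, n: int = 1001) -> Interval:
    """Inner approximation of the range obtained by sampling n equally spaced points."""
    values = [func(x.lo + (x.hi - x.lo) * i / (n - 1)) for i in range(n)]
    return Interval(min(values), max(values))


box = Interval(0.0, 1.0)
enclosure = f_natural(box)     # Interval(lo=-1.0, hi=1.0)
inner = sampled_range(f, box)  # close to the true range [-0.25, 0]
print(enclosure, inner, enclosure.contains(inner))
```

Here the enclosure $[-1, 1]$ contains the true range $[-0.25, 0]$ but overestimates it, which illustrates why interval extensions of different tightness can exist for the same function.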

2. Automatic Deduction of the Convexity and Concavity of a Function

2.1. Deducing Monotonicity

- if on then on ;

- if on then on ;

- if and on then on ;

- if , and , on then on ;

- if and on then on ;

- if and on then on .

- If on , on and then on .

- If on , on and then on .

- If on , on , then on .

- If on , on , then on .

2.2. Deducing Convexity

- is convex on ,

- is convex on if ,

- is convex on .

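- if $g$ is convex and non-decreasing on an interval containing $h([a, b])$ and $h$ is convex on $[a, b]$, then $f(x) = g(h(x))$ is convex on $[a, b]$,

- if $g$ is convex and non-increasing on an interval containing $h([a, b])$ and $h$ is concave on $[a, b]$, then $f$ is convex on $[a, b]$,

- if $g$ is concave and non-decreasing on an interval containing $h([a, b])$ and $h$ is concave on $[a, b]$, then $f$ is concave on $[a, b]$,

- if $g$ is concave and non-increasing on an interval containing $h([a, b])$ and $h$ is convex on $[a, b]$, then $f$ is concave on $[a, b]$.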
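The four composition rules lend themselves to a table lookup. The sketch below is one possible encoding, not the authors' implementation: the names `Curvature`, `Monotonicity`, and `compose_curvature` are hypothetical, and the function returns `None` whenever none of the four rules applies.

```python
from enum import Enum
from typing import Optional


class Curvature(Enum):
    CONVEX = "convex"
    CONCAVE = "concave"


class Monotonicity(Enum):
    NONDECREASING = "non-decreasing"
    NONINCREASING = "non-increasing"


# (curvature of g, monotonicity of g, curvature of h) -> curvature of f(x) = g(h(x))
_COMPOSITION_RULES = {
    (Curvature.CONVEX, Monotonicity.NONDECREASING, Curvature.CONVEX): Curvature.CONVEX,
    (Curvature.CONVEX, Monotonicity.NONINCREASING, Curvature.CONCAVE): Curvature.CONVEX,
    (Curvature.CONCAVE, Monotonicity.NONDECREASING, Curvature.CONCAVE): Curvature.CONCAVE,
    (Curvature.CONCAVE, Monotonicity.NONINCREASING, Curvature.CONVEX): Curvature.CONCAVE,
}


def compose_curvature(g_curv: Curvature,
                      g_mono: Monotonicity,
                      h_curv: Curvature) -> Optional[Curvature]:
    """Return the deduced curvature of g(h(x)), or None if no rule applies."""
    return _COMPOSITION_RULES.get((g_curv, g_mono, h_curv))


# exp(x^2): g = exp is convex and non-decreasing, h = x^2 is convex.
exp_of_square = compose_curvature(
    Curvature.CONVEX, Monotonicity.NONDECREASING, Curvature.CONVEX)

# exp(-sqrt(x)) on [1, 4]: g(y) = exp(-y) is convex and non-increasing, h = sqrt is concave.
exp_of_neg_sqrt = compose_curvature(
    Curvature.CONVEX, Monotonicity.NONINCREASING, Curvature.CONCAVE)

# exp(sqrt(x)): g is convex and non-decreasing but h is concave, so nothing is deduced.
undecided = compose_curvature(
    Curvature.CONVEX, Monotonicity.NONDECREASING, Curvature.CONCAVE)

print(exp_of_square, exp_of_neg_sqrt, undecided)
```

A `None` result only means that these rules are inconclusive; the composite function may still be convex or concave.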

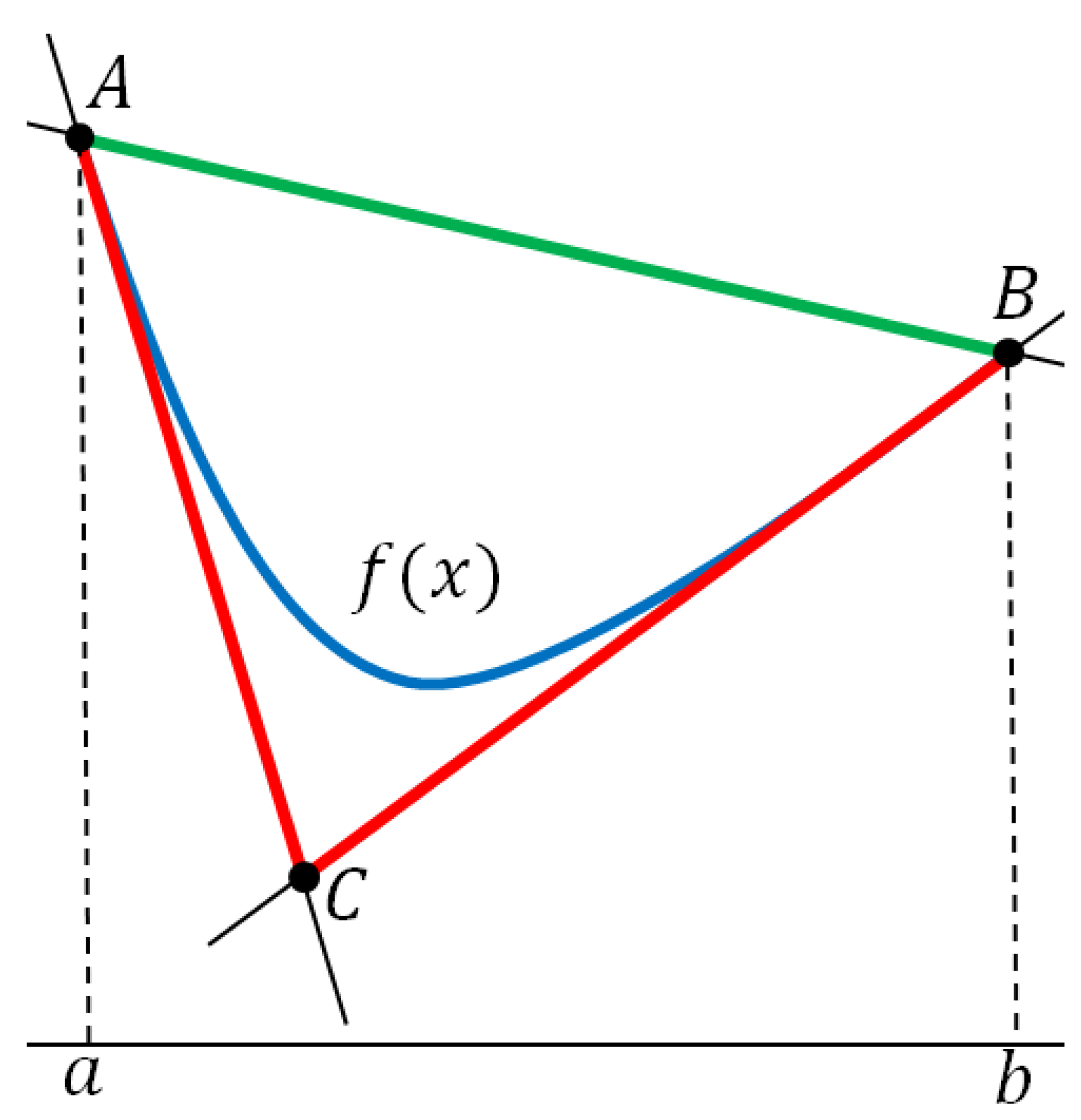

3. Application to Bounding the Function’s Range

4. Numerical Experiments

4.1. Comparison with Interval Bounds

- is concave on ,

- is convex on (by definition),

- x is concave on ,

- is convex on (by definition),

- is convex on (by Proposition 6),

- is convex on (by Proposition 4).

- Natural: a bound computed by the natural interval extension,

- Taylor: a bound computed by the first-order Taylor expansion,

- Convex: a bound computed according to Propositions 9 and 10.
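As an illustration of how convexity can tighten a range bound, the sketch below uses two standard facts about a differentiable convex function on $[a, b]$: its maximum is attained at an endpoint, and it lies above its tangents at $a$ and $b$. This is an assumed construction for illustration and may differ from the exact statements of Propositions 9 and 10; the function name `convex_range_bound` and the sample function are hypothetical.

```python
import math
from typing import Callable, Tuple


def convex_range_bound(f: Callable[[float], float],
                       df: Callable[[float], float],
                       a: float, b: float) -> Tuple[float, float]:
    """Enclose the range of a differentiable convex f on [a, b]."""
    fa, fb = f(a), f(b)
    da, db = df(a), df(b)
    upper = max(fa, fb)  # a convex function attains its maximum at an endpoint
    if da >= 0.0:
        lower = fa       # derivative is non-decreasing, so f is non-decreasing on [a, b]
    elif db <= 0.0:
        lower = fb       # f is non-increasing on [a, b]
    else:
        # Tangents fa + da*(x - a) and fb + db*(x - b) intersect at c in [a, b];
        # f lies above both, so their common value at c is a lower bound.
        c = (fb - fa + da * a - db * b) / (da - db)
        lower = fa + da * (c - a)
    return lower, upper


# Sample convex function (an assumption): f(x) = x^2 - x with true range [-0.25, 0] on [0, 1].
bounds = convex_range_bound(lambda x: x * x - x, lambda x: 2.0 * x - 1.0, 0.0, 1.0)
print(bounds)  # (-0.5, 0.0)

# Monotone case: exp on [0, 1] is bounded exactly by its endpoint values.
exp_bounds = convex_range_bound(math.exp, math.exp, 0.0, 1.0)
print(exp_bounds)
```

For this sample function the natural interval extension of `x*x - x` on $[0, 1]$ gives $[-1, 1]$, so the convexity-based enclosure $[-0.5, 0]$ is noticeably tighter.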

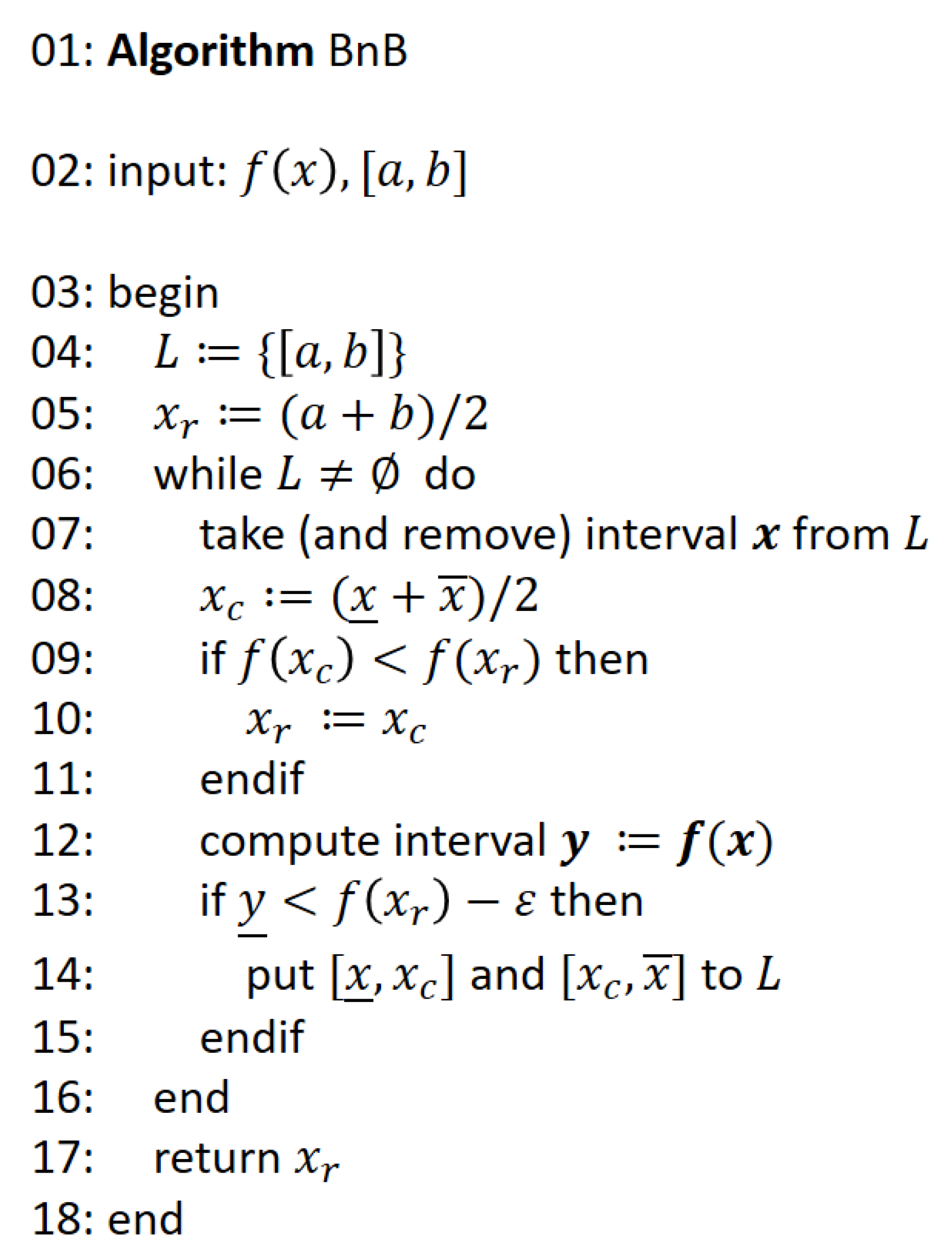

4.2. Impact on the Performance of Global Search

- Natural: the natural interval extension,

- Taylor: the first-order Taylor expansion,

- Convex: the range is computed according to Propositions 3, 9 and 10.
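For reference, a first-order Taylor bound is commonly computed in the mean value form $f(c) + \boldsymbol{f'}(\boldsymbol{x})(\boldsymbol{x} - c)$ with $c$ the midpoint of $\boldsymbol{x}$. The sketch below assumes this form; the helper name `taylor_bound` and the sample function are hypothetical, and the enclosure of the derivative is supplied by the caller.

```python
from typing import Callable, Tuple


def taylor_bound(f: Callable[[float], float],
                 df_enclosure: Callable[[float, float], Tuple[float, float]],
                 a: float, b: float) -> Tuple[float, float]:
    """Mean value form f(c) + F'([a, b]) * ([a, b] - c) with c the midpoint."""
    c = 0.5 * (a + b)
    radius = 0.5 * (b - a)              # [a, b] - c = [-radius, radius]
    d_lo, d_hi = df_enclosure(a, b)     # enclosure of f' over [a, b]
    # [d_lo, d_hi] * [-radius, radius] = [-m * radius, m * radius]
    m = max(abs(d_lo), abs(d_hi))
    fc = f(c)
    return fc - m * radius, fc + m * radius


def sample_f(x: float) -> float:
    return x * x - x


def sample_df_enclosure(a: float, b: float) -> Tuple[float, float]:
    """f'(x) = 2x - 1 is increasing, so its range over [a, b] is [2a - 1, 2b - 1]."""
    return 2.0 * a - 1.0, 2.0 * b - 1.0


taylor = taylor_bound(sample_f, sample_df_enclosure, 0.0, 1.0)
print(taylor)  # (-0.75, 0.25)
```

On $[0, 1]$ this encloses the true range $[-0.25, 0]$ of the sample function more tightly than the natural extension $[-1, 1]$, at the cost of needing an enclosure of the derivative.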

- Natural: the natural interval extension alone;

- Natural + Convex: the natural interval extension combined with the proposed techniques;

- Natural + Taylor: the natural interval extension combined with the first-order Taylor expansion;

- Natural + Taylor + Convex: the natural interval extension combined with the first-order Taylor expansion and the proposed techniques.
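The effect of a tighter lower bound on a global search can be seen in a generic bisection-based branch-and-bound loop. The sketch below is an assumed textbook scheme, not the authors' solver: the function names, the sample objective `f(x) = x*x - x`, and the tolerance `eps` are all illustrative choices.

```python
from typing import Callable, Tuple

Bound = Callable[[float, float], float]


def f(x: float) -> float:
    return x * x - x


def df(x: float) -> float:
    return 2.0 * x - 1.0


def natural_lower(lo: float, hi: float) -> float:
    """Lower end of the natural interval evaluation of x*x - x on [lo, hi]."""
    square_lo = min(lo * lo, lo * hi, hi * hi)  # lower end of the interval product x*x
    return square_lo - hi


def convex_lower(lo: float, hi: float) -> float:
    """Lower bound for the convex f from its tangents at both endpoints."""
    d_lo, d_hi = df(lo), df(hi)
    if d_lo >= 0.0:
        return f(lo)
    if d_hi <= 0.0:
        return f(hi)
    c = (f(hi) - f(lo) + d_lo * lo - d_hi * hi) / (d_lo - d_hi)
    return f(lo) + d_lo * (c - lo)


def branch_and_bound(lower: Bound, a: float, b: float,
                     eps: float = 1e-3) -> Tuple[float, int]:
    """Minimize f on [a, b]; return the best value found and the number of steps."""
    record = min(f(a), f(b))
    pending = [(a, b)]
    steps = 0
    while pending:
        lo, hi = pending.pop()
        steps += 1
        mid = 0.5 * (lo + hi)
        record = min(record, f(mid))
        if lower(lo, hi) >= record - eps:
            continue  # this interval cannot improve the record by more than eps
        pending.append((lo, mid))
        pending.append((mid, hi))
    return record, steps


record_natural, steps_natural = branch_and_bound(natural_lower, 0.0, 1.0)
record_convex, steps_convex = branch_and_bound(convex_lower, 0.0, 1.0)
print(record_natural, steps_natural)
print(record_convex, steps_convex)
```

In this toy run both variants find the minimum value $-0.25$, but the convexity-based bound stops after three steps while the natural bound needs many more subdivisions. The step counts reported for the paper's test problems come from the authors' own implementation and are not reproduced by this sketch.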

5. Discussion

| Type | Smooth | Non-Smooth |
|---|---|---|
| One variable | , , , , | |
| , , | | |
| Two variables | , | , |

| Function | Increase | Decrease |
|---|---|---|
| , , , | - | |
| , | | |
| - | | |
| - | | |
| - | | |
| , | , | |
| - | | |
| - | | |

| Function | Convex | Concave |
|---|---|---|
| , , , | - | |
| , , | | |
| - | | |
| - | | |
| , | , | |

| No | Natural | Taylor | Convex | | |
|---|---|---|---|---|---|
| 1 | | | | | |
| 2 | | | | | |
| 3 | | | | | |
| 4 | | | | | |
| 5 | - | | | | |

| No | | | |
|---|---|---|---|
| 1 | 0 | | |
| 2 | 1 | | |
| 3 | | | |
| 4 | | | |
| 5 | | | |
| 6 | | | |
| 7 | | | |
| 8 | | | |
| 9 | | | |
| 10 | | | |
| 11 | 8 | | |
| 12 | 33 | | |
| 13 | 1 | | |
| 14 | 1 | | |

| No | Natural | Natural + Convex | Natural + Taylor | Natural + Taylor + Convex |
|---|---|---|---|---|
| 1 | 35 | 15 | 29 | 15 |
| 2 | 135,043 | 199 | 267 | 81 |
| 3 | 98,995 | 107 | 269 | 79 |
| 4 | 72,953 | 151 | 311 | 91 |
| 5 | 443 | 39 | 83 | 39 |
| 6 | 187 | 19 | 47 | 19 |
| 7 | 183 | 39 | 69 | 39 |
| 8 | 189 | 49 | 91 | 49 |
| 9 | 857 | 31 | 75 | 31 |
| 10 | 51 | 19 | 27 | 19 |
| 11 | 55 | 5 | - | - |
| 12 | 579 | 23 | - | - |
| 13 | 35 | 27 | - | - |
| 14 | 125 | 125 | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Posypkin, M.; Khamisov, O. Automatic Convexity Deduction for Efficient Function’s Range Bounding. Mathematics 2021, 9, 134. https://doi.org/10.3390/math9020134
