Abstract

In this work, we develop a new class of methods for the efficient numerical solution of non-linear second-order in time problems. These methods are of the Rosenbrock type and can be seen as a generalization of Rosenbrock methods applied to second-order in time problems that have previously been transformed into first-order in time problems. As they also follow the ideas of Runge–Kutta–Nyström methods for second-order in time problems, we call them Rosenbrock–Nyström methods. For non-linear problems, Rosenbrock–Nyström methods have a lower computational cost than implicit Runge–Kutta–Nyström methods, because the non-linear systems that arise at every intermediate stage of a Runge–Kutta–Nyström method are replaced with sequences of linear ones.

1. Introduction

In the literature, there are many non-linear second-order in time problems that must be solved numerically. A common approach is to transform the problem into a first-order one and then apply methods designed for first-order in time problems. Several options are then available, such as Runge–Kutta methods (RK methods), fractional step Runge–Kutta methods (FSRK methods) or exponential integrators. These methods are a good option for linear problems, but they are computationally expensive for non-linear ones, as non-linear problems must be solved at every intermediate stage (see [1,2,3,4]). In this case, a good option is to use, for example, Rosenbrock methods or exponential Rosenbrock-type methods [5,6,7]. However, converting the original problem to a first-order one doubles the dimension of the problem, so the computational cost increases.
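The doubling of the dimension mentioned above can be made concrete with a small sketch. It assumes a generic problem of the form u'' = f(t, u, u'); the helper name `as_first_order` and the pendulum test problem are hypothetical and are not taken from this paper.

```python
import numpy as np

def as_first_order(f):
    """Wrap u'' = f(t, u, u') as U' = F(t, U) with U = (u, v) and v = u'."""
    def F(t, U):
        n = U.size // 2
        u, v = U[:n], U[n:]
        # First half of U' is v (= u'), second half is f (= u'').
        return np.concatenate([v, f(t, u, v)])
    return F

# Hypothetical test problem (an assumption): undamped pendulum, u'' = -sin(u).
f = lambda t, u, v: -np.sin(u)
F = as_first_order(f)

u0 = np.array([0.5])
v0 = np.array([0.0])
U0 = np.concatenate([u0, v0])   # the first-order unknown has twice the size of u0

print(U0.size, F(0.0, U0))
```

Any first-order integrator applied to `F` works with vectors of length 2n, so every linear system built from the Jacobian of `F` is 2n × 2n instead of n × n.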

Another possibility is to use numerical integrators specially designed for second-order in time problems, for example, Runge–Kutta–Nyström methods (RKN methods) or fractional step Runge–Kutta–Nyström methods (FSRKN methods) [2,8,9]. With this type of method, we must choose between explicit and implicit schemes. Explicit methods may suffer from stability problems when the problem is stiff. Implicit methods can be chosen with an infinite stability interval, but at a high computational cost [8] when the problem is non-linear and/or multidimensional in space. To avoid the high computational cost of implicit RKN methods for problems that are multidimensional in space, FSRKN methods were developed and studied in [9]. The idea of these methods is to split the spatial operator in a suitable way, so that the problem to be solved at every intermediate stage is simpler than the original one.

In order to avoid all the previous drawbacks when solving a non-linear second-order in time problem, in this paper, we present a new class of methods, which we call Rosenbrock–Nyström methods. These methods avoid the non-linear systems which arise when RKN methods are used by replacing them with sequences of linear ones. Rosenbrock–Nyström methods arise in a natural way as a generalization of Rosenbrock ones when they are applied to second-order in time equations that have been previously transformed into first-order in time problems.

In the literature, the construction of methods of the Rosenbrock type to numerically solve second-order non-linear systems of ordinary differential equations has already been studied [10]. However, the methods presented in that paper differ from the ones presented here. We remark here on three of the most important differences between the methods in [10] and our methods when solving a problem such as , , , with . The first one is that to use the methods presented in [10], we have to define , with and then we have to convert the problem to a first-order one in the following way: , , while with our methods we do not need to convert the problem to a first-order one. The second difference is that in [10], we have to solve two linear systems at each intermediate step, instead of the one linear system we have to solve at each intermediate step when using the methods presented here. The last one is that in the mentioned paper, operator and its Jacobian have to be evaluated at every intermediate stage. When using our method, we evaluate operator at every intermediate stage, but we use fixed values of and at every time step, so the number of function evaluations per intermediate stage is smaller with our methods.

In this article, we present the development of Rosenbrock–Nyström methods, the conditions that must be satisfied to obtain the desired classical order (up to order four), and the main ideas needed for stability when linear problems are solved. In addition, we show some numerical experiments that illustrate the good behavior of these new methods.

This paper is structured as follows: in the next section, we give a brief description of Rosenbrock–Nyström methods, together with their development. In Section 3, we describe the stability requirements that these methods should satisfy when integrating linear problems. In Section 4, we deal with the conditions that the coefficients of the method should satisfy in order to have up to classical order four. The construction of such methods is studied in [11]. Finally, in Section 5, we present some numerical experiments in order to test such methods.

2. Development of Rosenbrock–Nyström Methods

Non-linear second-order in time problems can be written in an abstract form as follows:

“Find solution of

where, typically, is a Hilbert space of functions defined in a certain bounded domain , integer with smooth boundary . This formulation covers many different problems, such as partial differential equations and ordinary differential equations.

Example 1.

Let us show here two problems that will be solved in the numerical experiments (Section 5) and can be solved by using Rosenbrock–Nyström methods.

The first problem is a modification of the non-linear wave propagation suggested in [12]

with

is such that the exact solution is given by . Parameter λ controls the stiffness of the problem.

Example 2.

The second example is the following non-linear Euler–Bernoulli equation:

with such that the exact solution is . Operator A is such that . This problem can be discretized in space by taking, for example, a pseudo-spectral discretization.

When this problem is solved with an explicit Runge–Kutta–Nyström method, very restrictive conditions must be imposed on the time step size in order to guarantee stability.

When we solve a problem such as (1) with a Rosenbrock–Nyström method, the numerical approximation to the exact solution and its derivative, is given by , where , with as the time step size. The numerical approximation proposed by us is calculated as

where , are the intermediate stages and . These intermediate stages are given by

We use notation and to refer to and , respectively. When the problem we are solving is autonomous, that is, of the form

the equations which determine the method are

Notice that in this case, we have a simplification of the main problem (1) and the equations can be obtained by considering that .

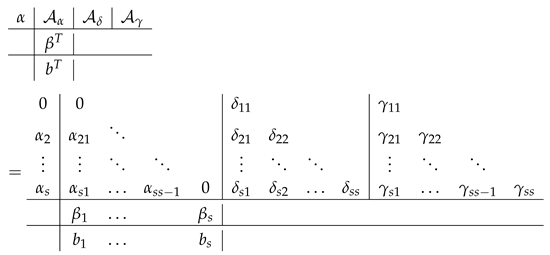

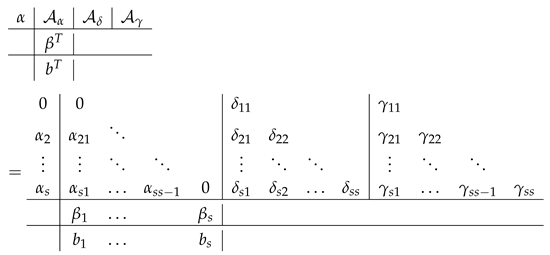

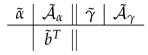

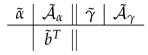

As with other classical methods such as RK methods, RKN methods, Rosenbrock methods, etc., the coefficients of these methods can be written in a tableau as follows:

where we will assume that .

Notice that at every intermediate stage, the problem to be solved is a linear one, so the computational cost is reduced compared with the equations that implicit RKN methods provide when solving this type of problem. When we select the values , , then, at every intermediate stage , we have to solve a linear problem such as

where I denotes the identity operator and is the term in (4) that does not depend on , . Therefore, the computational cost reduces as remains constant for all the intermediate stages.

In order to guarantee the solvability of the intermediate stages, we will assume that, for every , is such that exists and is bounded for every with .

Remark 1.

When we have that for every , is self-adjoint and negative semi-definite, the solvability and boundedness of the intermediate stages are guaranteed because of the following:

- In the case of being a space differential operator, is the infinitesimal generator of a -semigroup of type , so exists and is bounded for every μ with [13].

- In the case of being a regular function, then, for every , we have that is a symmetric negative semi-definite matrix, so exists for every .

Let us now see how these methods have been developed. Their definition is a natural one, since they can be obtained from Rosenbrock methods applied to problem (1) once it has been transformed into a first-order in time problem. Let us recall that the coefficients of Rosenbrock methods are given by an array of the form

and the equations that these methods give when solving a problem such as

are

where , with as the time step size and as the numerical approximation to . , , are the intermediate stages.

To solve problem (1) with a Rosenbrock method, we first write it as a first-order one, by defining . In this way, we define and, therefore, we have a problem like (7), being . The initial condition is with . We apply a Rosenbrock method to this problem, by using notation .

We operate in the equations that give the intermediate stages, replacing with its expression in the equations for . From this, we obtain

Now, let us assume that the Rosenbrock method satisfies

The first two conditions are usual restrictions satisfied by many Rosenbrock methods, and the third one is the condition to have classical order 1. Then, if we denote , and if we define

what we obtain is precisely Equation (4). Furthermore, we can construct Rosenbrock–Nyström methods that do not come from Rosenbrock ones. This fact gives much more freedom to obtain the coefficients of the desired methods. The proposed methods with classical order 3 and 4 that are used in the numerical experiments have been obtained without using existing Rosenbrock ones.
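The elimination described above can be checked numerically in the simplest setting. The following is a minimal sketch, assuming an autonomous problem u'' = f(u) and a one-stage Rosenbrock scheme with weight 1 and parameter γ; it is not the tableau of this paper. Writing the stage as (k_u, k_v), the first-order equations k_u = v + τγ k_v and k_v = f(u) + τγ J k_u combine into (I − τ²γ² J) k_v = f(u) + τγ J v. The force `f`, the matrix `K`, and the function names are hypothetical.

```python
import numpy as np

n = 4
K = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # hypothetical stiffness matrix

def f(u):
    # Hypothetical non-linear force (an assumption, not a problem from the paper).
    return -K @ u - np.sin(u)

def jac(u):
    # Jacobian of f with respect to u.
    return -K - np.diag(np.cos(u))

def step_first_order(u, v, tau, gamma=0.5):
    """One-stage Rosenbrock step on U' = (v, f(u)); solves a 2n x 2n linear system."""
    m = u.size
    I, Z = np.eye(m), np.zeros((m, m))
    J_big = np.block([[Z, I], [jac(u), Z]])
    rhs = np.concatenate([v, f(u)])
    k = np.linalg.solve(np.eye(2 * m) - tau * gamma * J_big, rhs)
    return u + tau * k[:m], v + tau * k[m:]

def step_reduced(u, v, tau, gamma=0.5):
    """Same step after eliminating k_u; solves a single n x n linear system."""
    J = jac(u)
    A = np.eye(u.size) - (tau * gamma) ** 2 * J
    k_v = np.linalg.solve(A, f(u) + tau * gamma * (J @ v))
    k_u = v + tau * gamma * k_v
    return u + tau * k_u, v + tau * k_v

u0 = np.linspace(0.1, 0.4, n)
v0 = np.linspace(-0.2, 0.2, n)
tau = 0.1
u_a, v_a = step_first_order(u0, v0, tau)
u_b, v_b = step_reduced(u0, v0, tau)
print(np.max(np.abs(u_a - u_b)), np.max(np.abs(v_a - v_b)))
```

Both functions return the same step up to round-off, but the reduced form only needs a linear solve of half the size, which is the saving that motivates working directly with the second-order formulation.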

3. Stability When Solving Linear Ordinary Differential Equations

Following the ideas given for Rosenbrock methods in [6], in this part we deal with the stability of Rosenbrock–Nyström methods when they are applied to a simplified problem such as

where B is a given symmetric positive definite matrix of order and , and .

Here, we study the stability in the energy norm, which is the natural norm for the study of the well-posedness of problem (9). This norm is given by

with being the Euclidean norm in .

When solving problem (9) with a Rosenbrock–Nyström method, we obtain

where terms , are given, in tensorial form, by

with . These elements form matrix ,

By bounding (10) in the energy norm, we obtain that the proof of stability is related to the boundedness of the powers of matrix . As matrix B is assumed to be symmetric and positive definite, then B is normal and we can use the following spectral result:

with being the spectrum of . Then, the boundedness of the powers of matrix is reduced to the study of the boundedness of matrix . (Note: if we assume that B is not normal, we can use a similar result to (11), but considering the numerical range instead of the spectrum [14].)
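The kind of spectral argument used here can be illustrated with a standard fact: for a normal matrix B and a scalar rational function r, the 2-norm of r(B) equals the maximum of |r(λ)| over the spectrum of B. The sketch below checks this numerically. The matrix `B`, the function `r`, and all variable names are illustrative assumptions and are not taken from this paper, whose stability matrix is a 2 × 2 block of such functions rather than a single scalar function.

```python
import numpy as np

rng = np.random.default_rng(0)
Q = rng.standard_normal((5, 5))
B = Q @ Q.T + np.eye(5)          # symmetric positive definite, hence normal

def r(z):
    # Hypothetical scalar rational function, chosen only for illustration.
    return (1.0 - 0.25 * z) / (1.0 + 0.25 * z)

I = np.eye(5)
# r(B) = (I + B/4)^{-1} (I - B/4), computed without using eigenvalues.
r_of_B = np.linalg.solve(I + 0.25 * B, I - 0.25 * B)

norm_r_of_B = np.linalg.norm(r_of_B, 2)                      # spectral norm of r(B)
max_on_spectrum = np.max(np.abs(r(np.linalg.eigvalsh(B))))   # max of |r| over the spectrum
print(norm_r_of_B, max_on_spectrum)
```

The two printed numbers agree up to round-off, so bounding the matrix function reduces to bounding a scalar function on the spectrum, independently of the size of B.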

Following the results in [15], the following definitions and theorem can be stated.

Definition 1.

The interval is the interval of stability of the Rosenbrock–Nyström method if is the highest value, such that

The Rosenbrock–Nyström method is said to be R-stable if .

Definition 2.

The interval is the interval of periodicity of the Rosenbrock–Nyström method if is the highest value, such that

We can say that the Rosenbrock–Nyström method is P-stable if .

Theorem 1.

Under the assumptions that

- (i) The method is R-stable.

- (ii) .

- (iii) There exists a value such that does not have double eigenvalues.

- (iv) .

Then,

where C is independent of the size of .

This result cannot be obtained if assumption (iv) is not satisfied.

Proof.

The proof of this theorem is straightforward by using the results of Theorems 5 and 7 in [15]. □

Corollary 1.

When the Rosenbrock–Nyström method comes from a Rosenbrock one with classical order greater than or equal to one, the condition

is always satisfied.

Proof.

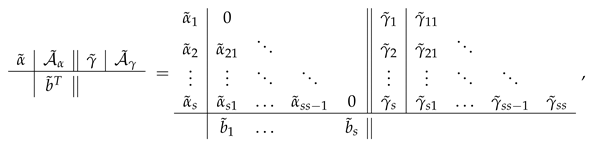

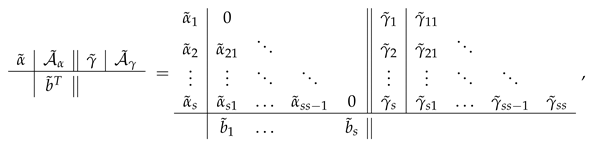

Let us assume that the Rosenbrock–Nyström method comes from a Rosenbrock one with Butcher array

and that the coefficients of the Rosenbrock–Nyström method satisfy relations (8). Then,

□

4. Order Conditions for Rosenbrock–Nyström Methods

Let us see the conditions that Rosenbrock–Nyström methods should satisfy to obtain the highest possible order when integrating a problem such as (1). In a similar way as with Runge–Kutta–Nyström methods, a Rosenbrock–Nyström method is said to have classical order p if

where is the numerical solution obtained from the exact solution with time step size .

In order to study the order conditions, it is useful to write the equations as in the autonomous case, given by (5), in the following way,

where the superscript indices in capital letters indicate the component of the vector we are using (in this part, the notation is similar to that used in [6]). In the following, will be denoted by , by , etc.

To obtain the order conditions, we compare the Taylor series of and obtained from the exact solution with the Taylor series of the exact solution.

In this part, the following formulae are used:

These formulae, which can be proved in a recursive manner, are obtained by using that

We differentiate by using the notation together with the previous formulae. Then, we obtain

where we have used that

together with

Then, by using the expressions obtained for , , we have

where we have used that

and therefore

For , , we have

Now, we calculate the derivatives of the exact solution, by taking into account that ,

To obtain the order conditions up to order four, we compare the results in (12) and (13) with the results in (14). Then, these order conditions are:

Order 1: We compare with and with

Order 2: We compare with and with

Order 3: We compare with and with

Order 4: We compare with and with

By using notation and , these order conditions can be written as follows:

Order 1:

Order 2:

Order 3:

Order 4:

5. Numerical Experiments

This section is devoted to the numerical experiments we have carried out in order to illustrate the advantages of these methods when solving a non-linear equation.

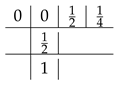

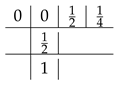

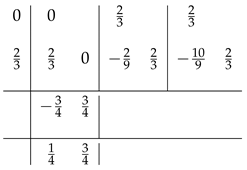

The Rosenbrock–Nyström methods we have chosen are the ones that are developed and studied in [11]. The first one is the one-stage method given by:

This method can be obtained from the well-known Rosenbrock method with

Therefore, the equations that this Rosenbrock–Nyström method provides are the same as those obtained with the Rosenbrock method when the second-order problem is converted into a first-order one.

This Rosenbrock method is A-stable, but not L-stable [6]. The method presented here is the only Rosenbrock–Nyström method with one stage and classical order 2 that is stable. In fact, it can be proved that this method is P-stable as the eigenvalues of its stability matrix are , for . They are complex conjugate with modulus 1 except for and , where they are double and equal to 1. There are no one-stage Rosenbrock–Nyström methods with classical order 2 that are just R-stable but not P-stable, so in order to obtain methods of this type, we should use at least two stages. Another possibility is to construct one-stage Rosenbrock–Nyström methods with complex coefficients, following the ideas given in [16] for Rosenbrock methods. This will be the subject of future work.
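The unit-modulus property can be checked numerically under a stated assumption: that the one-stage scheme acts on the linear test equation y'' = −ω²y like a one-stage Rosenbrock method with γ = 1/2, which for linear problems coincides with the implicit midpoint rule. The sketch below uses the scaled variables (ωy, y') and θ = τω. The function name `stability_matrix` and the sampled values of θ are hypothetical, and the closed-form eigenvalues of the paper are not reproduced here.

```python
import numpy as np

def stability_matrix(theta):
    """Amplification matrix for y'' = -omega^2 y in the scaled variables
    (omega*y, y'), with theta = tau*omega (one-stage scheme, gamma = 1/2 assumed)."""
    M = theta * np.array([[0.0, 1.0], [-1.0, 0.0]])    # tau times the first-order generator
    I = np.eye(2)
    return np.linalg.solve(I - 0.5 * M, I + 0.5 * M)   # (I - M/2)^{-1} (I + M/2)

thetas = [0.1, 1.0, 10.0, 1000.0]
moduli = np.array([np.abs(np.linalg.eigvals(stability_matrix(t))) for t in thetas])
print(moduli)
```

Under this assumption, the matrix is the Cayley transform of a skew-symmetric matrix and is therefore orthogonal, so all its powers have norm 1 in the scaled variables for every θ. This is consistent with an unbounded interval of periodicity.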

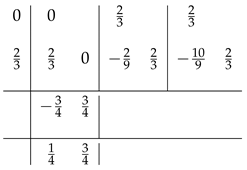

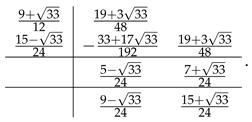

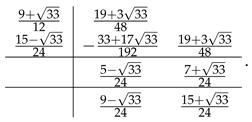

The second one is an R-stable method with two stages and classical order 3, which we will call . The coefficients of this method are given by the following array:

The third one is a method with three stages and classical order 4. This method is called . The coefficients of this method are given by the following array:

In the tables, the local and global orders are given. The global error has been calculated as the difference between the exact solution at and the numerical one obtained with our method.
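A common way to obtain such orders from measured errors is to compare the errors at consecutive step sizes. The sketch below shows this computation; it is an assumption about the procedure, not a description of the code used for the tables. The function name `observed_orders` is hypothetical, and the data are synthetic values that behave like C·τ³.

```python
import numpy as np

def observed_orders(taus, errors):
    """Estimate the order p from consecutive pairs, assuming error ~ C * tau**p."""
    taus = np.asarray(taus, dtype=float)
    errors = np.asarray(errors, dtype=float)
    return np.log(errors[:-1] / errors[1:]) / np.log(taus[:-1] / taus[1:])

# Synthetic data (not from the paper): errors decaying exactly like 2 * tau**3.
taus = np.array([0.1, 0.05, 0.025, 0.0125])
errors = 2.0 * taus ** 3
orders = observed_orders(taus, errors)
print(orders)
```

When the step size is halved each time, the formula reduces to the base-2 logarithm of the ratio of consecutive errors.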

Example 3.

The first problem we have solved is the first problem presented in Example 1, that is, problem (2). Here, the parameters we have selected are

We present the results obtained for the method with classical order 2 in Table 1, where we can see that the expected orders are obtained for the solution as well as for the derivative. For the local error in the solution, we obtain one order more than the one expected since the odd derivatives vanish.

Table 1.

Local and global errors and orders for problem (2) with RN2.

The results for the method with classical order 3 are given in Table 2 where we can see that the expected orders are obtained for the solution as well as for the derivative. For the local error in the derivative, we obtain one order more than the one expected since the odd derivatives vanish.

Table 2.

Local and global errors and orders for problem (2) with RN3.

The results for the method with classical order 4 are presented in Table 3, where again we can see that the expected results are obtained. The local order in the solution is one unit higher than the expected one as the odd derivatives vanish.

Table 3.

Local and global errors and orders for problem (2) with RN4.

Example 4.

The second problem is the equation of motion of a soliton in an exponential lattice. This problem was first proposed in [17] and it is a highly non-linear system.

with , and . The solution of this problem is .

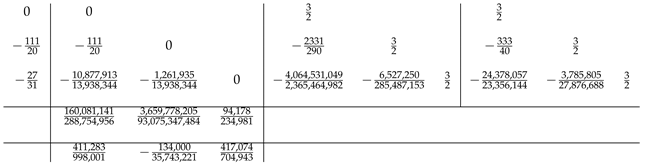

The results for method RN2 can be seen in Table 4. There, we can observe that we also obtain the expected local and global order for the solution and the derivative.

Table 4.

Local and global errors and orders for problem (15) with RN2.

The results for the second method, RN3, can be seen in Table 5, for which the expected results have been obtained.

Table 5.

Local and global errors and orders for problem (15) with RN3.

Finally, the results for method RN4 are presented in Table 6.

Table 6.

Local and global errors and orders for problem (15) with RN4.

Example 5.

The last problem we have solved is the non-linear Euler–Bernoulli Equation (3) presented in Example 2.

We first discretize in space by using a spectral method suggested in [18] and studied in depth in [8]. This spectral method has been derived to discretize in space a problem such as

with , , , and , with X being a Hilbert space of functions and Y being a suitable space of functions defined on the boundary. In particular, for the problem we have, we take with boundary and boundary conditions , , . For the spatial discretization of this problem, we consider a grid , associated with a natural parameter J (related to the number of nodes on it). Then, we take as the space of polynomials of a degree less than or equal to J, integer , and as the subspace of such that they and their derivatives vanish on the boundary. We will consider in an approximation of the -norm in , which will be denoted by . Then, the spatial discretization is given by

with being the matrix that discretizes and and being the operators associated with the discretization of A which take into account the information on the boundary of u and F, respectively. The interior nodes are denoted by , . They are the zeros of the second derivative of the Legendre polynomial of degree .

In this way, after the spatial discretization, our problem reads

with , and .

In the numerical experiments, we have taken .

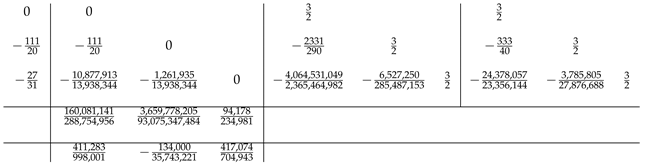

The results obtained with methods RN2, RN3, and RN4 are presented in Tables 7, 8, and 9, respectively. With RN4, we do not obtain order 4 in the global error in the derivative because of the order reduction phenomenon, which has been studied in depth for other numerical integrators such as Runge–Kutta methods, Rosenbrock methods, or RKN methods [19,20,21]. How to avoid this order reduction is the subject of a forthcoming paper.

Table 7.

Local and global errors and orders for problem (3) with RN2.

Table 8.

Local and global errors and orders for problem (3) with RN3.

Table 9.

Local and global errors and orders for problem (3) with RN4.

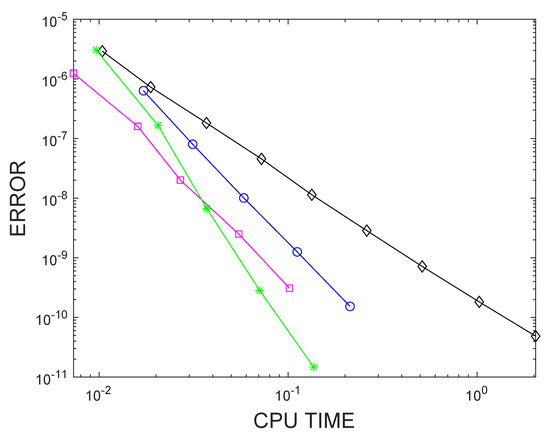

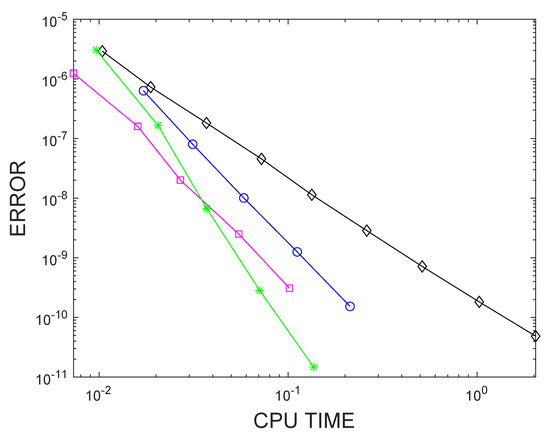

Finally, we present the comparison in terms of computational cost between RN2, RN3, RN4, and the RKN method with two stages and classical order 3 which is given in [22]:

We will denote this method by the abbreviation RKN3.

The results can be seen in Figure 1 and Table 10, where we report the values of τ and the computational cost needed to obtain global errors in the solution in the range . In the graph, the error has been plotted as a function of τ in double logarithmic scale. The graph and the table show that the method with the highest computational cost to achieve the desired error is RN2. For a fixed value of τ, this method has a lower computational cost than the other three but, as its classical order is only two, it needs a smaller value of τ to obtain the same error as the other three methods and, therefore, more time steps. For example, if we take , we can see that RN2 takes seconds to obtain the result, but RN4 needs seconds, nearly double. However, for this value, the error that is found with RN2 is , but with RN4 it is . To obtain this error with the RN2 method, we need a value of τ between and , so the number of steps is much greater and so is the computational cost.

Figure 1.

Graph for the global error in the solution with RN2 (⋄, black), RN3 (□, magenta), RN4 (*, green), and RKN3 (o, blue).

Table 10.

Computational cost for problem (3) with RN2, RN3, RN4, and RKN3.

As we can also see, both RN3 and RN4 are better in terms of the computational cost than RKN3. As RN4 has more stages than RN3, for bigger values of τ, RN3 needs fewer steps to obtain the desired error, but when τ is smaller, because of the classical order of the RN4 method, it requires fewer time steps and the computational cost of this method is lower.

6. Conclusions and Future Work

As we have seen in this work, these new methods are a good choice when we have a second-order in time problem to solve. By using these methods, we avoid the drawback that other integrators, such as Runge–Kutta–Nyström methods, present when the problem to be solved is non-linear.

This is an introductory work on these methods, and there is more to be done in the near future. For example, there is the problem of the order reduction that this type of method presents when we solve a second-order in time PDE with non-vanishing boundary conditions. This problem has already been studied for other existing methods such as Runge–Kutta, Rosenbrock, and Runge–Kutta–Nyström methods.

Another piece of work to be done is the construction and study of Rosenbrock–Nyström methods with complex coefficients. As we have seen, the only stable method that exists with only one stage is a P-stable one. To obtain R-stable methods (not P-stable) with classical order two, we should have two intermediate stages. Rosenbrock methods with complex coefficients have been proven to be very efficient when solving stiff problems [16,23]. In this way, one piece of future work is to use this idea of complex coefficients to obtain efficient Rosenbrock–Nyström methods to solve second-order in time problems.

Finally, we are also interested in comparing different Rosenbrock–Nyström methods in order to identify the best method to use in practice. A scheme with many stages could be worse in practice than a method with fewer stages because of machine round-off errors.

Funding

This research was funded by the Ministerio de Ciencia e Innovación through project PGC2018-101443-B-I00.

Acknowledgments

The author acknowledges the useful comments given by the referees.

Conflicts of Interest

The author declares no conflict of interest.

References

- Bujanda, B.; Jorge, J.C. Fractional Step Runge-Kutta methods for time dependent coefficient parabolic problems. Appl. Numer. Math. 2003, 45, 99–122.

- Hairer, E.; Nørsett, S.P.; Wanner, G. Solving Ordinary Differential Equations I, Nonstiff Problems, 2nd ed.; Springer: Berlin, Germany, 2000.

- Hochbruck, M.; Ostermann, A. Exponential integrators. Acta Numer. 2010, 19, 209–286.

- Peaceman, D.W.; Rachford, H.H. The numerical solution of parabolic and elliptic differential equations. J. SIAM 1955, 3, 28–42.

- Rosenbrock, H.H. Some general implicit processes for the numerical solution of differential equations. Comput. J. 1963, 5, 329–330.

- Hairer, E.; Wanner, G. Solving Ordinary Differential Equations II, Stiff and Differential-Algebraic Problems, 2nd ed.; Springer: Berlin, Germany, 1996.

- Hochbruck, M.; Ostermann, A.; Schweitzer, J. Exponential Rosenbrock-type methods. SIAM J. Numer. Anal. 2008, 47, 786–803.

- Moreta, M.J. Discretization of Second-Order in Time Partial Differential Equations by Means of Runge-Kutta-Nyström Methods. Ph.D. Thesis, Department of Applied Mathematics, University of Valladolid, Valladolid, Spain, 2005.

- Moreta, M.J.; Bujanda, B.; Jorge, J.C. Numerical resolution of linear evolution multidimensional problems of second order in time. Numer. Methods Partial. Differ. Equ. 2012, 28, 597–620.

- Goyal, S.; Serbin, S.M. A class of Rosenbrock-type schemes for second-order nonlinear systems of ordinary differential equations. Comput. Math. Appl. 1987, 13, 351–362.

- Moreta, M.J. Construction of Rosenbrock-Nyström methods up to order four. 2021; in preparation.

- Fermi, E.; Pasta, J.; Ulam, S. Studies of nonlinear problems I. Lect. Appl. Math. 1974, 15, 143–156.

- Goldstein, J.A. Semigroups of Linear Operators and Applications; Oxford University Press: New York, NY, USA, 1985.

- Crouzeix, M. Numerical Range and Hilbertian Functional Calculus; Institute of Mathematical Research of Rennes, Université de Rennes: Rennes, France, 2003.

- Alonso-Mallo, I.; Cano, B.; Moreta, M.J. Stability of Runge-Kutta-Nyström methods. J. Comp. Appl. Math. 2006, 189, 120–131.

- Al’shin, A.B.; Al’shina, E.A.; Kalitkin, N.N.; Koryagina, A.B. Rosenbrock schemes with complex coefficients for stiff and differential-algebraic systems. Comput. Math. Math. Phys. 2006, 46, 1320–1340.

- Toda, M. Waves in nonlinear lattice. Suppl. Prog. Theor. Phys. 1970, 45, 174–200.

- Bernardi, C.; Maday, Y. Approximations Spectrales de Problèmes aux Limites Elliptiques; Springer: Berlin, Germany, 1992.

- Alonso-Mallo, I.; Cano, B. Spectral/Rosenbrock discretizations without order reduction for linear parabolic problems. Appl. Num. Math. 2002, 47, 247–268.

- Alonso-Mallo, I.; Cano, B. Avoiding order reduction of Runge-Kutta discretizations for linear time-dependent parabolic problems. BIT 2004, 44, 1–20.

- Alonso-Mallo, I.; Cano, B.; Moreta, M.J. Optimal time order when implicit Runge-Kutta-Nyström methods solve linear partial differential equations. Appl. Numer. Math. 2008, 58, 539–562.

- Alonso-Mallo, I.; Cano, B.; Moreta, M.J. Stable Runge-Kutta-Nyström methods for dissipative problems. Numer. Algorithms 2006, 42, 193–203.

- Al’shin, A.B.; Al’shina, E.A.; Limonov, A.G. Two-stage complex Rosenbrock schemes for stiff systems. Comput. Math. Math. Phys. 2009, 49, 261–278.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).