Abstract

We avoid as as much as possible the order reduction of Rosenbrock methods when they are applied to nonlinear partial differential equations by means of a similar technique to the one used previously by us for the linear case. For this we use a suitable choice of boundary values for the internal stages. The main difference from the linear case comes from the difficulty to calculate those boundary values exactly in terms of data. In any case, the implementation is cheap and simple since, at each stage, just some additional terms concerning those boundary values and not the whole grid must be added to what would be the standard method of lines.

MSC:

65M12; 65M20

1. Introduction

Many physical phenomena are modeled by means of nonlinear time evolutionary partial differential equations. These mathematical models are usually very complex and the numerical analysis is essential in order to obtain quantitative and qualitative information of the solution. A well-known procedure to carry out the numerical approximations is the so-called method of lines which is based on separating the spatial approximation, carried out by finite elements or other classical methods, from the time integration which is made with schemes for ordinary differential schemes [1,2].

In this work we are mainly interested in the time integration taking into account two basic properties of the ordinary differential system obtained after the spatial discretization: the stiffness and the nonlinearity. A good choice is given by the Rosenbrock methods which have been previously considered to time discretize partial differential equations, mainly when they are semilinear [3,4,5,6,7,8,9]. In such a case, the implicitness of the step is given through linear systems and not nonlinear ones, as would happen with Runge-Kutta methods.

Our main goal is to avoid the order reduction phenomenon which appears when Rosenbrock methods are used. This order reduction is well-known for all types of time integrators with internal stages and has been widely studied, although there are few references where the order reduction is avoided for the nonlinear case [4,8,10,11]. In [11], the author uses an extrapolation technique for Runge-Kutta methods, converting the usual scheme into a multistep algorithm. In [10], the authors use, for certain linearly implicit methods, an extension of the technique developed in [12], based on the abstract theory for linear initial boundary values problems introduced in [13,14]. The implementation of the proposed algorithm requires substantial changes of the usual time integration schemes which are also computationally expensive when the problem is not one dimensional. Moreover, the problem studied in [10] is not general since the authors assume that the solution and the source term vanish at the boundary. Finally, in [4], the authors partially avoid the order reduction by imposing additional conditions to increase the order observed in practice for Rosenbrock methods. For this, it is necessary to increase the number of internal stages and, as a consequence, the computational cost grows. The Rosenbrock method ROS3P constructed in [4] has been successfully used in [15] for the numerical approximation of mathematical models in electrocardiology. The same idea has been considered in [8,16] to obtain other Rosenbrock methods with stiff order three. In any case, up to our knowledge, up to now no Rosenbrock methods have been constructed with stiff order greater than 3 for general semilinear initial boundary value problems. Just order 4 has been obtained with the Scholz4-7B method in [17], but for the particular case of Prothero-Robinson problem and only when the scalar value is very big.

In this paper we propose to use a technique similar to the one used in [3,18], also based on the theory developed in [13,14]. The idea is to make the time integration before the spatial one, and choose carefully the boundary values assigned to the internal stages of the Rosenbrock method. In the linear case, those boundary values can be calculated exactly in terms of data independently of the searched order. However, in the nonlinear case, that is more difficult. In any case, our algorithms are computationally cheaper than the methods in [10,11] because they just require modification on the boundary values, which are negligible compared to modifications on the whole domain. On the other hand, our technique is valid for any Rosenbrock method. We do not need to impose to the Rosenbrock method any additional order condition. We also remark that we consider more general problems than the ones in [10,11]; for example, the boundary values of (1) are nonvanishing. Finally, we consider both reaction-diffusion problems and reaction-convection-diffusion problems like the Burger’s equation. There are recent results in the literature concerning the avoidance of order reduction with exponential methods like Lawson methods [19] or more general Runge-Kutta ones [20]. However, only the case of reaction-diffusion problems in which the nonlinear part is very smooth is treated there. Moreover, numerical differentiation is required to calculate the suitable boundary values for the intermediate problems, even to achieve local order 2 with Robin or Neumann boundary conditions and to get local order 3 with Dirichlet and Robin or Neumann boundary conditions. In contrast, in this paper, for those problems and with standard Rosenbrock methods, we just require numerical differentiation in order to achieve local order greater than or equal to 4 with Robin or Neumann boundary conditions, but not with Dirichlet ones. With more stiff equations, like Burger’s equation, we will have to use numerical differentiation to approximate some values on the boundary, in order to achieve local order greater than or equal to 4 with both Dirichlet and Robin or Neumann boundary conditions. In any case, as said before, that has not yet been achieved in the literature with any Rosenbrock method for these problems and the technique, through a summation-by-parts argument, leads to global order 4 for any Rosenbrock method of classical order greater than or equal to 4.

2. Abstract Formulation of the Problem

We need an abstract formulation of time evolutionary partial differential equation to apply our method in order to avoid the order reduction. A crucial factor is that the order reduction appears when the problem is formulated in a spatially bounded domain and the solution does not vanish at the boundary. The linear case has been studied in [13,14]. On the other hand, semilinear problems, but with vanishing boundary conditions, have been studied in [21].

We now state the abstract formulation for a semilinear initial boundary value problem. This approach is useful to obtain a problem similar to a differential ordinary system.

Let X and Y be two complex Banach spaces, be a dense subspace of X, , be a pair of linear operators. Moreover, we take a nonlinear operator . We consider the problem

We are interested in the case of an unbounded operator A because in the applications this operator is the spatial differential operator of a partial differential evolutionary problem. On the other hand, ∂ represents a boundary operator which values are certain traces on the boundary of the solution of (1).

- (A1)

- The boundary operator is onto.

- (A2)

- is dense and , the restriction of A to , is the infinitesimal generator of an analytical semigroup in X.

- (A3)

- If z is a complex number with greater than the type of , then the eigenvalue problempossesses, for each , a unique solution . Moreover, this solution satisfiesfor some constant independent of z, for z in a half-plane of the form .

When , the problem (1) is linear and the results in [13,14] prove that, with the previous hypotheses, it is well-posed and the solution u depends continuously on regular enough data , f, and g. On the other hand, the fact that the semigroup generated by is analytical implies that (1) is a parabolic problem.

From hypothesis (A2) we deduce that, taking , we can define the fractional powers for each . Then, we can also define the Banach spaces with norm .

We now state another hypothesis of the nonlinear problem (1).

- (A4)

- For some , and , the nonlinearitysatisfies the Hölder-Lipschitz conditionfor all .

With this new hypothesis, problem (1) is well-posed when the boundary data satisfies , see for example [21]. In the general case, we can assume that the boundary function satisfies and we can look for a solution of (1) given by:

for some fixed . Then v is solution of an IBVP with vanishing boundary values similar to the one studied in [21] and the well-posedness is a direct consequence if we take the abstract theory for initial boundary value problems in [13,14] into account.

We remark that in [10], a similar problem is used, but it supposes that it is autonomous and the vanishing boundary conditions and . We do not need these assumptions in our work.

Finally, we remark that in this paper we are interested in solutions of (1) which are regular enough. Then, we suppose in practice that the coefficients of the spatial differential operator associated to A and the data are also regular enough and satisfy suitable conditions of compatibility at the boundary.

2.1. Examples

Several well-known evolutionary problems of Applied Mathematics can be written in the previous abstract way. For example, we show two examples which have been studied in [21]. They will be used in our numerical experiments. We remark that other relevant equations are also included, for example the Navier Stokes equation ([21]).

2.1.1. Reaction-Diffusion Equation

Let , be open and bounded with a smooth enough boundary , . We consider the following equation, along with initial and Dirichlet boundary data,

Here u is the temperature, the density, c the specific heat, the conductivity, q is the rate of production of heat per unit of mass (depending on temperature) and v is a field of convection. Since is a symmetric and positive definite matrix for all , the operator

with and satisfies our hypotheses with .

2.1.2. Generalized Burgers Equation

We consider the equation

where c is a constant. The solution is completely determined by the initial value and the Dirichlet boundary conditions. The case of vanishing boundary values (with ) is considered in page 57 of [21]. We suppose that

is measurable in x, locally Hölder continuous in t and Locally Lipschitz continuous in u, uniformly in x, with , , and s a continuous and increasing in its second argument function.

The abstract version of this problem is as follows. We take , and , . We also denote , then and . When the problem is linear, i.e., when and , the problem is well-posed under suitable hypotheses of regularity of the data. On the other hand, the nonlinear case with vanishing boundary values is well-posed with a similar argument to the one used in [21].

3. The Time Semidiscretization

3.1. Rosenbrock Methods for Abstract Initial Boundary Value Problems

Rosenbrock methods are well-known to be determined by the following matrices of coefficients [2]

In this paper, we assume certain usual restrictions on the coefficients of the Rosenbrock method. We suppose that

which simplifies the derivation of the order conditions, and

which implies that only one matrix decomposition is needed per step and permits to obtain desirable stability properties of the Rosenbrock method. We also use the notation

and . Finally, we define the vectors , , and , , by

and the s-dimensional identity matrix is denoted by .

We suppose that the Rosenbrock method has classical order p. The necessary and sufficient order conditions for that can be found in [2].

If is the time step and , for , is the initial value and the values , obtained recursively, are approximations of . The Rosenbrock method applied to (1), without taking into account the boundary values, leads to the following linear equations for the internal stages:

where is the vector of the internal stages and denotes the vector whose i-th component equals

Notice that, for fixed , these are stationary problems which need some given boundary to be completely determined. A suitable choice of the boundary for these stages will lead to avoid order reduction in a similar way as the one used in our previous work [3] for the linear case.

Therefore, we denote as to the vector formed by the boundary values assigned to the stages and we obtain the following equations for the time discretization of (1) by means of a Rosenbrock method:

3.2. The Choice of the Boundary Values for the Stages

Our idea is to use a trick similar to the one in [3], but instead of considering an iterative procedure to find the appropiate boundary values for the stages, we directly look for a truncation of a series expansion on the the timestepsize which satisfies the stage equation until a given order. More precisely, we are looking for the s-dimensional vector such that

and satisfying

for , where . Then we take as boundary values

To illustrate this, notice that for and assuming enough regularity, the corresponding values would be given by the following: As all the terms in the right hand side of (6) have a factor , in order to match with the left-hand side, it follows that

Using this, the term on on the right-hand side of (6) is , from which the following equality applies

Proceeding inductively, it follows that

Here and · is the componentwise product.

3.3. Local Error of the Semidiscretization

In order to study how the local error behaves with this strategy, let us denote by and , the stages and values obtained when the Rosenbrock method is used with in (4a) and (4c) and the boundary values (7) are used in (4b), i.e.,

Let us study the local error when integrating a reaction-diffusion problem, i.e., when f is such that is a bounded operator whenever w is bounded. More precisely,

Theorem 1.

Let us assume that there exists a constant C such that

and that, for some j satisfying ,

- (i)

- with for and every .

- (ii)

- such that, for every ,for and .

Proof.

Let us first decompose

where

By construction of and considering the assumed regularity (i)–(ii), (6) holds for several terms (), whose expression coincides with that of the coefficient of the corresponding power of in the stages which would turn up if A were a bounded operator. Because of that, behaves in those powers of as when integrating a non-stiff problem and, as the classical order of the method is p and ,

Therefore, to end the proof, it suffices to notice that

This comes from the fact that, by computing the difference between (6) and (8a) and taking into account that the boundary values coincide, we have

Looking at the first stage and considering the form of and , this means that

Taking then (9) into account,

Now, for the second stage, it follows that

Notice that because of (12) once it has been proved that because is a bounded operator. On the other hand,

and therefore the norm of this term is using that and the fact that is bounded because of the assumed regularity and that the same happens with due to (13). Because of all this, using again (9), and proceeding inductively with the rest of stages, the result follows. □

Although Theorem 1 holds only when f is a bounded operator, we show in the numerical experiments that our technique is also useful when f is an unbounded operator.

3.4. How to Calculate the Boundary Values for the Stages

The following aim of our work is to see how the boundary values (7) can be obtained from the data of problem (1). In some cases, it will possible to calculate them exactly from the known data. In other cases, in order to achieve a certain order, it will be necessary to resort to numerical differentiation. Let us thoroughly study the different situations:

- We can always takewhich is easily calculable in terms of the data of the problem. In such a way, we get local order 3 if the classical order p satisfies . To compare with what was suggested in [3] for linear problems, if we considerthe result on the order of the local error would be the same as considering boundary (16). As it was already also studied in [3], the classical method of lines, which discretizes firstly in space and then in time, leads to local order 2 for time-dependent boundary value problems, even though the problems are just linear. Therefore, we are gaining at least one order of accuracy with the technique which is suggested in this paper, even without resorting to numerical differentiation.

- In the case that is a bounded operator so that hypotheses (ii) of Theorem 1 are satisfied for , the boundary operator is Dirichlet, and more precisely, f and its derivatives have also sense over , so thatwhere □ denotes any partial derivative, it happens that can also be calculated exactly in terms of data considering thatThen,Therefore, in such a case, local order 4 can also be achieved without resorting to numerical differentiation if the classical order p satisfies .In the case of hypotheses of Theorem 1 are satisfied for , in order to calculate on the boundary so as to achieve local order 5, apart from terms which can be directly calculated in terms of derivatives of f evaluated at and , , , the following terms turn up:The second of those can be calculated as above using (18). As for the first one, notice that, using (1),Therefore, all the terms are easily calculable as above (using also ), except for the last one, which coincides with the third term in (19). In the case that, for example, for some real compact interval I, and for , in order to calculate that term on the boundary, we need , , , . As, again from (1),everything can be calculated in terms of data except for , . The first term is either given by the space discretization itself of (4a)-(4b)-(4c) or must be approximated through numerical space differentiation using the exact values at the boundary and the approximation of the solution at the interior nodal values. As for the second term , it has to be approximated by numerical differentiation in time from the previous approximation values on the boundary (), and for the first value , it suffices to consider the Equation (1) and that is known. Therefore,In such a way, local order 5 can be achieved applying numerical differentiation to approximate first-derivatives if the classical order satisfies .In order to obtain higher local orders, the corresponding expressions to consider at the boundary get more and more complicated. Arguing as before, it is always possible to approximate them through numerical differentiation if the classical order of the method is high enough. However, as the order of the derivatives to approximate at the boundary increases, the numerical differentiation problem can become more unstable.

- In the case that is a bounded operator so that hypotheses of Theorem 1 are satisfied for and (17) and the boundary operator is Robin/Neumann, i.e., for some constants and and ,where is the normal derivative on , can also be calculated using (18), but just in an approximated way. For on the boundary, we will have to take the approximation which is given by the method after the space discretization of (4a)-(4b)-(4c) and then, for , we will take into account thatOn the other hand, will have to be approximated by numerical differentiation in time from the previous approximated values on the boundary , and for the first values , Taylor approximations can be used considering the equation in (1) and successive derivatives with respect to time at , which can be calculated because the initial condition is known. Finally, can be approximated again using the Robin boundary condition, i.e., byTherefore, again local order 4 can be achieved if , although now resorting to numerical differentiation to approximate a derivative in time.As for the calculation of , the same remarks of the previous point 2 apply with the additional difficulties that more derivatives of f will be required since the boundary condition includes the normal derivative, not only (as with ) must be approximated through numerical differentiation in time from the previous values , but also (). Notice that , and will also be required, but they can be directly calculated from the previous values, at least in this case, through (20), (21) and differentiating again (21). That is to say, not only the first time derivative must be approximated numerically but also derivatives until order 4, with the possible instabilities which are caused because of being a badly-posed problem.Considering even higher orders would imply approximating even higher time derivatives of the solution at the boundary, with its possible instability problems.

- In the case in which f is an unbounded operator which can contain derivatives, in order to calculate on the boundary with the aim of obtaining local order 4, some numerical differentiation will be required, which will depend on the particular form of f.For example, for the Equation (2), with and ,Therefore, when considering Dirichlet boundary conditions, must be calculated through numerical differentiation in space from the exact values on the boundary of and those which come from numerical differentiation in time of the approximated values of the solution at the interior nodes.For Neumann/Robin boundary conditions, what must be calculated isknowing thatAs, from the equation itself,it suffices to approximate , and on ( would then be solved by differentiating (22)). Then, from the numerical approximation of the solution which the method gives, numerical differentiation in space may have to be applied to approximate on the boundary, and then numerical differentiation in time must be used to approximate and .Trying to get even higher local order would imply considering numerical differentiation of an order greater than 2.In any case, even without resorting to numerical differentiation, can always be calculated in terms of the data for time-dependent boundary conditions, obtaining local (and by summation by parts global) error of order 3. (Compare with [10] where order 3 was also obtained for this equation for linearly implicit methods, but assuming nonnatural vanishing boundary conditions and with [4] where order three was obtained, but assuming additional conditions on the coefficients of the method).

We end with some remarks on other time integration methods. First, the same conclusions than before can be drawn for implicit Runge-Kutta methods, following similar proofs (cf. [11]). Of course, Rosenbrock methods are more suitable for nonlinear problems.

On the other hand, it is also possible to use W-methods, where the exact Jacobian of the differential system is substituted by an approximation of it, more easy to calculate. Many times, this approximation in our semilinear problems is just taken as the operator A (see [9]). However, in that case, the boundaries for the stages which would lead to order 3 would be

and therefore, in the end, to achieve that order, we would need to evaluate the Jacobian of f. On the one hand, this is not reasonable because W-methods are used precisely to avoid that. On the other hand, when is an operator which contains spatial partial derivatives, the above boundary is not calculable in an exact way in terms of the data. That is the reason why we have preferred to describe our analysis just for Rosenbrock methods. On the other hand, the boundaries which would lead to a greater order under the assumptions of Theorem 1 and assuming that the boundary operator is Dirichlet would be much more difficult to calculate for W-methods because even to achieve local order 4, would be required.

4. Numerical Experiments

To show the previous results in the numerical experiments, we have first integrated the following problem for the nonlinear heat equation

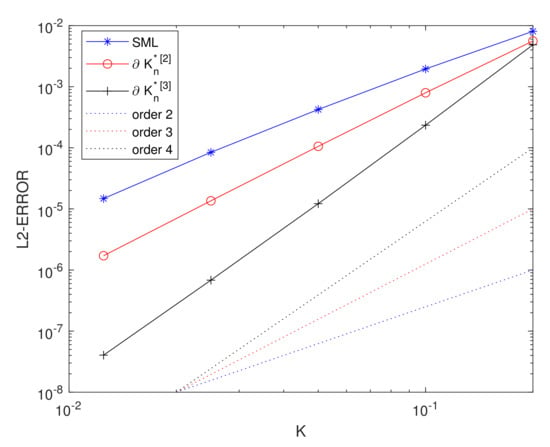

with h such that the exact solution of the above equation is . For the time integration, we have considered the Rosenbrock method GRK4T [22], which has four stages and does not satisfy condition (3.11) (or its equivalent (3.11)’) in [6]. Therefore, the standard method of lines leads to order 2 in time for this method [5]. For the space discretization, we have considered a Gauss-Lobatto collocation spectral method based on 41 nodes, which leads to negligible errors in space. We have implemented the method of lines, considering first the time discretization and then the space one. We have also considered the boundaries and . We have measured the error committed when integrating until time in the discrete -norm which is associated to the Gauss-Lobatto nodes. Figure 1 shows this error in terms of the chosen timestep. More precisely, asterisks (’*’) correspond to the standard method of lines, which makes first the space discretization and then the temporal one, circles (’o’) to the suggested method of lines with and crosses (’+’) to As observed in the figure, the slope of the corresponding lines increases with the last two modifications, in such a way that the errors also become smaller, and the more the more the stepsize decreases. (Notice also that the cost of the last modifications is negligible compared with the total cost of the method because we are just acting on the boundary). In such a way, the classical order 4 of the method is recovered, as Table 1 shows more precisely.

Figure 1.

Semilinear equation with and GRK4T integrator, with Dirichlet boundary conditions.

Table 1.

Orders for global error, GRK4T and with Dirichlet boundary conditions. SML stands for ‘standard method of lines’ and , for the different variations of the technique suggested in this paper.

On the other hand, we have also considered a differential equation like that in (23), but with Dirichlet boundary condition just at , and at , the Robin one

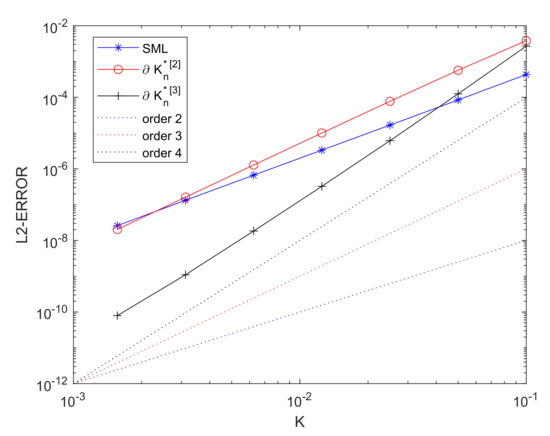

so that the exact solution of the problem continues to be . The implementation of the Robin boundary condition leads now to a Galerkin Gauss-Lobatto spectral discretization for the Lagrange polynomial associated to the last node (), so that the numerical value at is now an additional unknown for the space discretization. In order to get now local order 4 with the suggested technique, we have had to resort to numerical differentiation in time to approximate the time derivative at that last node. We have done so through a Taylor method of order 2 for the first steps and through a 3-BDF formula for the consecutive ones. As Table 2 shows, the orders of the error with the different strategies considered in this paper are the same as those with Dirichlet boundary conditions although, as it can be observed in Figure 2, smaller stepsizes have to be considered so that the errors are smaller than those committed with the standard method of lines. This last remark is more obvious for . In any case, for stepsizes smaller than , the suggested technique with is clearly the one which leads to smaller errors.

Table 2.

Orders for global error, GRK4T and , with Robin boundary condition. SML stands for ‘standard method of lines’ and , for the different variations of the technique suggested in this paper.

Figure 2.

Semilinear equation with and GRK4T integrator, with Robin boundary condition.

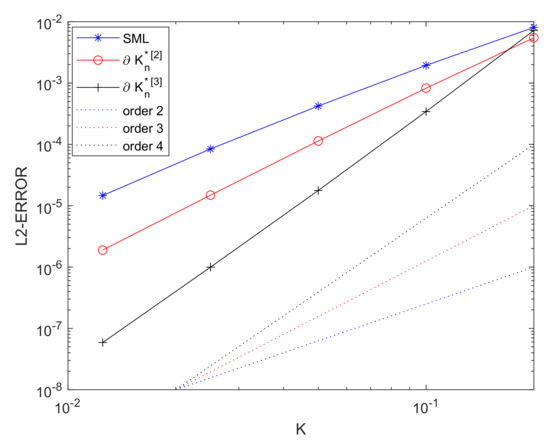

We have also considered a problem where the differential operator has variable coefficients, which is a case also included in the abstract formulation (1). More precisely, we have integrated

with Dirichlet boundary conditions so that again the exact solution is . The results are shown in Figure 3 and Table 3, where it is observed that the technique suggested with both and improves the order of the standard method of lines in the expected way.

Figure 3.

Semilinear equation with and GRK4T integrator, with Dirichlet boundary conditions.

Table 3.

Orders for global error, GRK4T and with Dirichlet boundary conditions. SML stands for ‘standard method of lines’ and , for the different variations of the technique suggested in this paper.

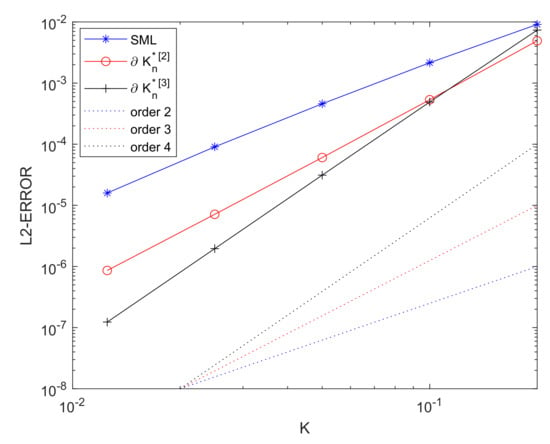

Let us now consider the equation,

with h such that the exact solution of the above equation is again . We have integrated it with the same numerical methods than before and also considering Dirichlet boundary conditions. First, we have used the standard method of lines and then the suggested method of lines with . Finally, as is not exactly calculable in terms of data as in problem (23) with Dirichlet boundary conditions, we have approximated those values through numerical differentiation following item 4 in Section 3.4. More explicitly, we have considered the 3-BDF formula to differentiate in time except for the first steps where Taylor series of order 2 is used taking Burger’s equation into account and the fact that the initial condition is known. Then, we have used differentiation in space of those values through Gauss-Lobatto collocation space discretization and its evaluation on the boundary. As expected, we have obtained order near 2 with the standard method of lines, order near 3 with and order near 4 with . This can be observed in Figure 4 and Table 4.

Figure 4.

Semilinear equation with and GRK4T integrator, with Dirichlet boundary conditions.

Table 4.

Orders for global error, GRK4T and . SML stands for ‘standard method of lines’ and , for the different variations of the technique suggested in this paper.

5. Conclusions

The paper thus provides a technique to avoid order reduction when integrating nonlinear problems with Rosenbrock methods, which is the kind of problems for which these methods were constructed. This technique is cheap when compared with the classic method of lines because additional terms must be added in the calculation of the stages, which imply the calculations of certain suitable boundary values for them. As the boundary grid values are negligible compared with those in the interior of the domain, the cost can also be considered negligible with respect to that of the whole method. We remark that numerical differentiation must be used to achieve a high enough order. That threshold order depends on the problem at hand and it happens that the higher the order to be pursued, the higher the order of the derivatives to approximate numerically, and therefore, the more probable that instability issues due to roundoff turn up. In any case, in the numerical experiments of the previous section, in which we managed to achieve order 4, we could not observe those instabilities. What’s more, to our knowledge, there is no other way to achieve that order for those type of problems when using Rosenbrock methods.

Author Contributions

Conceptualization, I.A.-M. and B.C.; methodology, I.A.-M. and B.C.; software, B.C.; validation, I.A.-M. and B.C.; formal analysis, I.A.-M. and B.C.; investigation, B.C.; resources, I.A.-M. and B.C.; data curation, I.A.-M. and B.C.; writing—original draft preparation, I.A.-M. and B.C.; writing—review and editing, I.A.-M. and B.C.; visualization, I.A.-M.; supervision, I.A.-M. and B.C.; project administration, I.A.-M. and B.C.; funding acquisition, I.A.-M. and B.C. Both authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Ministerio de Ciencia e Innovación and Regional Development European Funds through project PGC2018-101443-B-I00 and by Junta de Castilla y León and Feder through projects VA169P20 and VA193P20.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hairer, E.; Norsett, S.; Wanner, G. Solving Ordinary Differential Equations. I: Nonstiff Problems, 2nd ed.; Springer Series in Computational Mathematics, 8; Springer: Berlin, Germany, 1993. [Google Scholar]

- Hairer, E.; Wanner, G. Solving Ordinary Differential Equations. II: Stiff and Differential-Algebraic Problems, 2nd ed.; Springer Series in Computational Mathematics, 14; Springer: Berlin, Germany, 1996. [Google Scholar]

- Alonso–Mallo, I.; Cano, B. Spectral/Rosenbrock discretizations without order reduction for linear parabolic problems. Appl. Num. Math. 2002, 47, 247–268. [Google Scholar] [CrossRef]

- Lang, J.; Verwer, J.G. ROS3P—An accurate third-order Rosenbrock solver designed for parabolic problems. BIT 2001, 41, 731–738. [Google Scholar] [CrossRef]

- Lubich, C.; Ostermann, A. Linearly implicit time discretization of non-linear parabolic equations. IMA J. Numer. Anal. 1995, 15, 555–583. [Google Scholar] [CrossRef]

- Ostermann, A.; Roche, M. Rosenbrock methods for partial differential equations and fractional orders of convergence. SIAM J. Numer. Anal. 1993, 30, 1084–1098. [Google Scholar] [CrossRef]

- Ostermann, A.; Thalhammer, M. Non-smooth data error estimates for linearly implicit Runge-Kutta methods. IMA J. Numer. Anal. 2000, 20, 167–184. [Google Scholar] [CrossRef]

- Rang, J.; Angermann, L. New Rosenbrock W-methods of order 3 for partial differential algebraic equations of index 1. BIT 2005, 45, 761–787. [Google Scholar] [CrossRef]

- Schwitzer, F. W-methods for semilinear parabolic problems. Appl. Num. Math. 1995, 18, 351–366. [Google Scholar] [CrossRef]

- Calvo, M.P.; Frutos, J.; Novo, J. An efficient way to avoid the order reduction of linearly implicit Runge-Kutta methods for nonlinear IBVP’s. In Mathematical Modelling, Simulation and Optimization of Integrated Circuits; Antreich, K., Bulirsch, R., Gilg, A., Rentrop, P., Eds.; International Series of Numerical Mathematics Vol. 146; Birkhauser Verlag: Basel, Switzerland, 2003; pp. 321–332. [Google Scholar]

- Keeling, S.L. Galerkin/Runge-Kutta discretizations for semilinear parabolic equations. SIAM J. Numer. Anal. 1990, 27, 394–418. [Google Scholar] [CrossRef][Green Version]

- Calvo, M.P.; Palencia, C. Avoiding the order reduction of Runge–Kutta methods for linear initial boundary value problems. Math. Comput. 2002, 71, 1529–1543. [Google Scholar] [CrossRef][Green Version]

- Alonso–Mallo, I.; Palencia, C. On the convolutions operators arising in the study of abstract initial boundary value problems. Proc. R. Soc. Edinb. 1996, 126A, 515–539. [Google Scholar] [CrossRef]

- Palencia, C.; Alonso–Mallo, I. Abstract initial boundary values problems. Proc. R. Soc. Edinb. 1994, 124A, 879–908. [Google Scholar] [CrossRef]

- Colli Franzone, P.; Deuflhard, P.; Erdmann, B.; Lang, J.; Pavarino, L.F. Adaptivity in space and time for reaction-diffusion systems in electrocardiology. SIAM J. Sci. Comput. 2006, 28, 942–962. [Google Scholar] [CrossRef]

- Rang, J. Improved traditional Rosenbrock-Wanner methods for stiff ODEs and DAEs. J. Comp. Appl. Math. 2015, 286, 128–144. [Google Scholar] [CrossRef]

- Rang, J. The Prothero and Robinson example: Convergence studies for Runge-Kutta and Rosenbrock-Wanner methods. Appl. Num. Math. 2016, 108, 37–56. [Google Scholar] [CrossRef]

- Alonso–Mallo, I. Runge–Kutta methods without order reduction for initial boundary value problems. Numer. Math. 2002, 91, 577–603. [Google Scholar] [CrossRef]

- Cano, B.; Reguera, N. How to avoid order reduction when Lawson methods integrate reaction-diffusion initial boundary value problems. BIT Num. Math. 2021. [Google Scholar] [CrossRef]

- Cano, B.; Moreta, M.J. How to avoid order reduction when explicit Runge-Kutta exponential methods integrate reaction-diffusion initial boundary value problems. 2021; Submitted for Publication. [Google Scholar]

- Henry, D. Geometric Theory of Semilinear Parabolic Problems; Springer Lectures Notes in Mathematics, 840; Springer: Berlin, Germany, 1981. [Google Scholar]

- Kaps, P.; Rentrop, P. Generalized Runge-Kutta methods of order four with stepsize control for stiff ordinary differential equations. Numer. Math. 1979, 33, 55–68. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).