Abstract

We used a Kurchatov-type accelerator to construct an iterative method with memory for solving nonlinear systems, with sixth-order convergence. It was developed from an initial scheme without memory, with order of convergence four. There exist few multidimensional schemes using more than one previous iterate in the very recent literature, mostly with low orders of convergence. The proposed scheme showed its efficiency and robustness in several numerical tests, where it was also compared with the existing procedures with high orders of convergence. These numerical tests included large nonlinear systems. In addition, we show that the proposed scheme has very stable qualitative behavior, by means of the analysis of an associated multidimensional, real rational function and also by means of a comparison of its basin of attraction with those of comparison methods.

1. Introduction

New and efficient iterative techniques are needed for obtaining the solution of a system of nonlinear equations of the form

$$F(x) = 0, \tag{1}$$

where $F: \Omega \subseteq \mathbb{R}^n \rightarrow \mathbb{R}^n$, $\Omega$ being an open convex set. Such systems arise in scientific, engineering and various other models (details can be found in [1,2,3,4,5]).

Finding the solution of the system $F(x) = 0$ is a much more complex problem than finding a solution of a scalar equation $f(x) = 0$. As in the scalar case, we can transform the original system into an equivalent system of the form

$$x = G(x),$$

for a given vector function $G: \mathbb{R}^n \rightarrow \mathbb{R}^n$, whose coordinate functions we denote by $g_i$, $i = 1, 2, \ldots, n$. Starting from an initial approximation $x^{(0)}$, we can generate a sequence of vectors of $\mathbb{R}^n$ by means of the iterative formula

$$x^{(j+1)} = G(x^{(j)}), \tag{2}$$

where $j = 0, 1, 2, \ldots$

We say that the process is convergent if $x^{(j)} \rightarrow \xi$ when $j \rightarrow \infty$; then, $\xi$ will be, under certain conditions on the function G, a solution of the system $x = G(x)$. The vector $\xi$ is called a fixed point of the function G, and the algorithm described by Equation (2) is a fixed point method.

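A very important concept for iterative methods is their order of convergence, which provides a measure of the speed of convergence. Let $\{x^{(j)}\}_{j \geq 0}$ be a sequence of vectors of $\mathbb{R}^n$ that tends to the solution $\xi$ of the nonlinear system when j tends to infinity. The convergence of this sequence is said to be

- (i) linear, if there exist M, $0 < M < 1$, and $j_0$ such that $\|x^{(j+1)} - \xi\| \leq M \|x^{(j)} - \xi\|$ for all $j \geq j_0$.

- (ii) of order p, if there exist $M > 0$ and $j_0$ such that $\|x^{(j+1)} - \xi\| \leq M \|x^{(j)} - \xi\|^p$ for all $j \geq j_0$.

This definition is independent of the norm of $\mathbb{R}^n$ used.

We denote by $e^{(j)} = x^{(j)} - \xi$ the error in the j-th iteration and call the expression $e^{(j+1)} = L (e^{(j)})^p + O((e^{(j)})^{p+1})$ the error equation, where L is a p-linear function, $L \in \mathcal{L}(\mathbb{R}^n \times \cdots \times \mathbb{R}^n, \mathbb{R}^n)$, and p is the order of convergence of the method.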

The best-known fixed-point iterative method is Newton’s scheme:

$$x^{(j+1)} = x^{(j)} - [F'(x^{(j)})]^{-1} F(x^{(j)}), \quad j = 0, 1, 2, \ldots,$$

where $F'(x^{(j)})$ denotes the Jacobian matrix of operator F evaluated at $x^{(j)}$. However, there are many practical situations where the Jacobian matrix is computationally expensive to evaluate, or where it takes a great deal of time to provide or calculate it. Therefore, derivative-free methods are quite popular for finding the roots of nonlinear equations and systems of nonlinear equations.

The first multidimensional derivative-free method was proposed by Samanskii in [6], by replacing the Jacobian matrix with the divided difference operator:

$$x^{(j+1)} = x^{(j)} - [x^{(j)} + F(x^{(j)}), x^{(j)}; F]^{-1} F(x^{(j)}), \quad j = 0, 1, 2, \ldots$$

This scheme keeps the quadratic order of convergence of Newton’s procedure. It is the vectorial extension of the scalar Steffensen’s method.

Later on, Traub defined a class of iterative methods (known as Traub-Steffensen’s family) [7], given by

$$x^{(j+1)} = x^{(j)} - [w^{(j)}, x^{(j)}; F]^{-1} F(x^{(j)}), \quad j = 0, 1, 2, \ldots, \tag{3}$$

where $w^{(j)} = x^{(j)} + \beta F(x^{(j)})$ and $\beta$ is a nonzero real parameter. The class (3) can be easily recovered from Newton’s well-known method [7] by replacing the Jacobian matrix with the operator $[w^{(j)}, x^{(j)}; F]$. Let us remark that, for the particular value $\beta = 1$ in expression (3), the deduced scheme is Samanskii’s one.
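As an illustration of the derivative-free idea described above, the following minimal Python sketch builds a first-order divided difference matrix and uses it in a Traub-Steffensen-type iteration. The componentwise formula for the divided difference, the function names, the stopping rule and the test system `F_demo` are assumptions made for this example; they are not taken from the article.

```python
import numpy as np

def divided_difference(F, x, y):
    """First-order divided difference [x, y; F], built column by column.

    Assumed componentwise formula; it satisfies [x, y; F](x - y) = F(x) - F(y).
    It requires x[j] != y[j] for every component j.
    """
    n = x.size
    M = np.empty((n, n))
    for j in range(n):
        upper = np.concatenate((x[:j + 1], y[j + 1:]))  # (x_1..x_j, y_{j+1}..y_n)
        lower = np.concatenate((x[:j], y[j:]))          # (x_1..x_{j-1}, y_j..y_n)
        M[:, j] = (F(upper) - F(lower)) / (x[j] - y[j])
    return M

def traub_steffensen(F, x0, beta=1.0, tol=1e-10, max_iter=50):
    """Traub-Steffensen-type iteration: the Jacobian is replaced by [w, x; F]."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        Fx = F(x)
        if np.linalg.norm(Fx) < tol:  # stop before w and x become indistinguishable
            break
        w = x + beta * Fx
        x = x - np.linalg.solve(divided_difference(F, w, x), Fx)
    return x

def F_demo(v):
    """Hypothetical test system with a solution at (2, 1)."""
    return np.array([v[0] ** 2 + v[1] ** 2 - 5.0, v[0] * v[1] - 2.0])

root = traub_steffensen(F_demo, [2.2, 0.8], beta=0.1)
```

No Jacobian is evaluated at any point; each step costs the function evaluations needed to fill the divided difference matrix plus one linear solve.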

In recent years, different scalar iterative schemes with memory have been designed (a good overview can be found in [8]), mostly derivative-free ones. These have been constructed with increasing orders of convergence and, therefore, with increasing computational complexity. In terms of stability, some researchers compared the width of the sets of initial points converging to the same attractor, using complex discrete dynamics techniques. In [9], the authors observed that seventh-order iterative schemes with memory showed better stability properties than many optimal eighth-order procedures without memory. This graphical comparison was subsequently used by different authors; see, for example, the work of Wang et al. [10] and Cordero et al. [11] in 2016, or the investigations of Bakhtiari et al. [12] and Howk et al. [13] in the following years.

Regarding nonlinear vectorial problems, some methods with memory have been developed which improve the convergence rate of Steffensen’s method or Steffensen-type methods at the expense of additional evaluations of vector functions, divided differences or changes in the points of iteration. In recent years, a few high-order multi-point extensions of Steffensen’s method or Steffensen-type methods have been proposed and analyzed in the available literature [14,15,16,17] for solving nonlinear systems of equations. All these modifications aim at increasing the local order of convergence, with a view to increasing the efficiency index, as they usually do not involve new functional evaluations. Therefore, these constructions often possess a better order of convergence and efficiency index, but there are very few iterative schemes of this kind in the literature, due in part to their recent development and also due to the difficulty of their design and convergence analysis.

In 2020, Chicharro et al. [18] proposed such an extension, which is given by

and its higher-order version is

The versions have third and fifth order of convergence, respectively. An extension of this type was first reported by Chicharro et al. [18] based on Kurchatov’s divided difference operator.

The authors developed in [19] a technique that, using multidimensional real discrete dynamics tools, is able to analyze the stability of iterative schemes with memory, not only in graphical terms, but essentially in analytical terms. Using this technique, the stability of the fixed and critical points of the secant, Steffensen’s and Kurchatov’s methods (among others) was studied in [19]. It was also used to analyze other procedures, such as those described in [20], the one defined by Choubey et al. in [21] and those by Chicharro et al. in [22,23,24].

The aim of this work was to produce two new schemes, without and with memory, of orders four and six, respectively. Our scheme with memory is also based on Kurchatov’s divided difference operator. Compared with the recent scheme (5), it not only has a higher order of convergence, but also achieves it without any additional functional evaluation of F or $F'$ (the Jacobian matrix of F) and without any further iterative substep. We also provide a deep analysis of the suggested scheme regarding its order of convergence (Sections 2 and 3) and its stability properties, constructing an associated multidimensional discrete dynamical system. In this way, its good performance in terms of convergence to the searched roots and wideness of their basins of attraction is shown in Section 4, on both polynomial and non-polynomial functions. In addition, in Section 5 we compare our methods with recent existing methods of similar iterative structure on several numerical problems. On the basis of the results, we found that our methods perform better than the existing ones in terms of residual errors, the difference between two consecutive iterations and the stability of the computational order of convergence. Finally, some conclusions and the references used bring this manuscript to an end.

2. Construction and Convergence of New Iterative Schemes

Combining the Traub-Steffensen family of methods and a second step with different divided-difference operators, we propose the class of iterative schemes described as

where . In order to analyze the convergence of schemes (6), we need the definition of the divided difference operator as well as its Taylor’s expansion (more details can be found in [5]).

Lemma 1.

Suppose is k-times Fréchet differentiable in an open convex set Ω. In addition, we assume that for any , the following expression holds:

Now, we can obtain the following Taylor series expansion of the divided difference operator, by adopting the Genocchi–Hermite formula [5]:

Then, we have

Now, we are in a position to analyze the convergence order of proposed schemes (6), as we can see in the following result. In it, I denotes the identity matrix of size and can be considered as a k-linear operator:

For a deeper understanding of Taylor expansions in several variables, we suggest references [5,25].

Theorem 1.

Let $F: \Omega \subseteq \mathbb{R}^n \rightarrow \mathbb{R}^n$ be a sufficiently differentiable function in an open convex neighborhood Ω of a zero ξ. Suppose that $F'(x)$ is continuous and nonsingular at ξ and that the initial guess $x^{(0)}$ is close enough to ξ. Then, the iterative schemes defined by (6) have fourth order of convergence for every admissible value of β. They satisfy the following error equation:

where ,

Proof.

Let be the error of the jth-iteration and be a solution of . Then, developing in the neighborhood of , we have

and

Inversion of is given by

where

In a similar fashion as we did in expression (13), we can develop and its derivatives in the neighborhood of , given by

3. Extension to a Higher-Order Scheme with Memory

In this section, we construct a new scheme with memory based on our scheme (6), without using any new functional evaluation. It is straightforward to say from error Equation (20) that we can obtain a higher order of convergence by choosing . However, we also know that the required solution is unknown. We want to increase the order of convergence without additional values of vector function or Jacobian matrix. Therefore, we use one of the most efficient Kurchatov’s divided difference operators, , to approximate the value of , which is given by

Then, we define

Hence, from our scheme (6) we can deduce the following iterative method with memory:

where

In the following result, we analyze the convergence order of scheme (22) with memory, denoted by .

Theorem 2.

Let be a sufficiently differentiable function in an open convex neighborhood Ω of a zero of F, ξ. Suppose that is continuous and non-singular in ξ. In addition, the initial guesses and are close enough to the required solution ξ. Then, the iterative scheme defined by (22) has sixth order of convergence.
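To make the role of the accelerator more concrete, the sketch below replaces the scalar parameter β of a one-step Traub-Steffensen iteration by a matrix built from Kurchatov’s divided difference of the two most recent iterates. It is only an illustration of the memory mechanism under stated assumptions: the form $B^{(j)} = -[2x^{(j)} - x^{(j-1)}, x^{(j-1)}; F]^{-1}$, the componentwise divided difference, the function names and the test system are assumed here, and the second step of scheme (22) is not reproduced.

```python
import numpy as np

def divided_difference(F, x, y):
    """Assumed componentwise first-order divided difference [x, y; F]."""
    n = x.size
    M = np.empty((n, n))
    for j in range(n):
        upper = np.concatenate((x[:j + 1], y[j + 1:]))
        lower = np.concatenate((x[:j], y[j:]))
        M[:, j] = (F(upper) - F(lower)) / (x[j] - y[j])
    return M

def kurchatov_accelerator(F, x_curr, x_prev):
    """Assumed accelerator: B = -[2 x_curr - x_prev, x_prev; F]^{-1}."""
    K = divided_difference(F, 2.0 * x_curr - x_prev, x_prev)
    return -np.linalg.inv(K)

def steffensen_with_memory(F, x_prev, x_curr, tol=1e-10, max_iter=50):
    """One-step Steffensen-type iteration whose parameter is updated from memory."""
    x_prev = np.asarray(x_prev, dtype=float)
    x_curr = np.asarray(x_curr, dtype=float)
    for _ in range(max_iter):
        Fx = F(x_curr)
        if np.linalg.norm(Fx) < tol:
            break
        B = kurchatov_accelerator(F, x_curr, x_prev)  # reuses only stored iterates
        w = x_curr + B @ Fx
        x_next = x_curr - np.linalg.solve(divided_difference(F, w, x_curr), Fx)
        x_prev, x_curr = x_curr, x_next
    return x_curr

def F_demo(v):
    """Hypothetical test system with a solution at (2, 1)."""
    return np.array([v[0] ** 2 + v[1] ** 2 - 5.0, v[0] * v[1] - 2.0])

root = steffensen_with_memory(F_demo, [2.3, 0.7], [2.2, 0.8])
```

The method needs two starting points, as stated in Theorem 2, because the accelerator is computed from the current and the previous iterate.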

4. A Qualitative Study of Iterative Methods with Memory: New and Known

In this section, we analyze the stability of the proposed scheme with memory in its scalar form, since this kind of study already leads to multidimensional operators in the scalar case and cannot be applied directly to vectorial schemes. Its performance on systems of nonlinear equations is checked in Section 5, where it is compared with other known schemes with memory.

The expression of a scalar fixed-point iterative method with memory, using two previous iterations to calculate the following estimation, is

and being the starting estimations. We use the technique presented in [19,20] to describe any method with memory as a discrete real vectorial dynamical system, in order to analyze its qualitative behavior.

In order to calculate the fixed points of an iterative method with iteration function , an auxiliary fixed point multidimensional function can be defined, related to by means of:

and being, again, the initial estimations. Therefore, a fixed point of this operator will be obtained when not only , but also .

From function , a discrete dynamical system in can be defined by , where is the operator of the iterative method with memory. The fixed points of satisfy and . This notation implies and . In the following, we recall some basic dynamical concepts that are direct extensions of those used in complex discrete dynamics analysis (see [26]).

Let us consider the vectorial rational function , usually obtained by applying an iterative method on a scalar polynomial . Then, if a fixed point of operator is different from , where r is a zero of , it is called a strange fixed point. On the other hand, the orbit of a point is defined as the set of successive images from by the vector function—that is, . Indeed, a point is called k-periodic if and , for .
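The construction above can be illustrated with the secant method, one of the schemes studied in [19], instead of the proposed scheme, whose rational operator is more involved. The sketch assumes the quadratic polynomial $p(x) = x^2 - c$ and the variable names z (previous iterate) and x (current iterate); on this polynomial the secant step simplifies to $(zx + c)/(z + x)$.

```python
def gamma_secant(z, x, c):
    """Two-dimensional operator (z, x) -> (x, g(z, x)) of the secant method
    applied to the assumed polynomial p(x) = x**2 - c."""
    return x, (z * x + c) / (z + x)

def orbit(z, x, c, steps):
    """Successive images of the point (z, x) under gamma_secant."""
    points = [(z, x)]
    for _ in range(steps):
        z, x = gamma_secant(z, x, c)
        points.append((z, x))
    return points

# The orbit of (2.0, 1.5) for c = 1 approaches the fixed point (1, 1),
# whose two components are equal to the root of p.
final_z, final_x = orbit(2.0, 1.5, 1.0, 20)[-1]
```

A point with equal components (r, r) is fixed exactly when $r^2 = c$, so for this example the only fixed points come from the roots of the polynomial.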

The qualitative performance of a point of is classified depending on its asymptotic behavior. Thus, the stability of fixed points for vectorial operators satisfies the statements appearing in the following result (see, for instance, [27]).

Theorem 3.

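Let Γ from $\mathbb{R}^n$ to $\mathbb{R}^n$ be of class $\mathcal{C}^2$. Assume $x^*$ is a k-periodic point. Let $\lambda_1, \lambda_2, \ldots, \lambda_n$ be the eigenvalues of the Jacobian matrix $\Gamma'(x^*)$. Then, it holds that

- (a) If all the eigenvalues verify $|\lambda_j| < 1$, then $x^*$ is attracting.

- (b) If one eigenvalue verifies $|\lambda_{j_0}| > 1$, then $x^*$ is unstable, that is, repelling or saddle.

- (c) If all the eigenvalues verify $|\lambda_j| > 1$, then $x^*$ is repelling.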

Moreover, a fixed point is said to be not hyperbolic if all the eigenvalues of satisfy . Specifically, if there exist an eigenvalue satisfying and another one such that , then it is called saddle point.
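The classification in Theorem 3 can be checked numerically once the Jacobian matrix at a fixed point is available. The following sketch assumes that the matrix is supplied by the user; the function name and the returned labels are illustrative choices.

```python
import numpy as np

def classify_fixed_point(jacobian):
    """Classify a fixed point from the moduli of the Jacobian eigenvalues."""
    moduli = np.abs(np.linalg.eigvals(np.asarray(jacobian, dtype=float)))
    if np.all(moduli < 1.0):
        return "attracting"
    if np.all(moduli > 1.0):
        return "repelling"
    if np.any(moduli > 1.0) and np.any(moduli < 1.0):
        return "saddle"
    if np.any(moduli > 1.0):
        return "unstable"
    # No eigenvalue outside the unit circle and at least one on it:
    # the eigenvalue test alone is inconclusive.
    return "undetermined"

# Jacobian of the secant operator on x**2 - c at a root (both partial
# derivatives of the second component vanish there): eigenvalues 0 and 0.
label = classify_fixed_point([[0.0, 1.0], [0.0, 0.0]])
```

For the example matrix both eigenvalues are zero, so the fixed point is attracting.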

There is a key difference between the study of the stability of a fixed point in scalar and vectorial dynamics. In the first case, the fixed point is attracting if the absolute value of the derivative of the scalar rational function at that point is lower than one; in particular, it is superattracting if this derivative vanishes, and it is repelling when its absolute value is greater than one. Here, the scalar rational function is the one obtained by applying the iterative scheme to a low-degree polynomial. In the vectorial case, the character of the fixed points is calculated by means of the eigenvalues of the Jacobian matrix (see Theorem 3). Nevertheless, sometimes the Jacobian is not well-defined at the fixed points. In these cases, we impose on the rational operator the condition that its two arguments coincide, so that it is reduced to a real-valued function. Therefore, the stability of the fixed point can be inferred from the absolute value of its first derivative at the fixed point.

By considering an attracting fixed point of function , we define its basin of attraction as the set of preimages of any order:

A key element in the stability analysis of an iterative method is the set of critical points of its associated rational function: a point at which the derivative of the scalar rational function vanishes is called a critical point. A critical point c such that c is not a root of the polynomial is called a free critical point. Another way to get critical points is to find those points that make the eigenvalues of the Jacobian matrix of the vectorial operator vanish. As an extension of the scalar case, if they are not composed of the roots of the polynomial, they are named free critical points. Indeed, Julia and Fatou [26] proved that there is at least one critical point associated with each basin of attraction. Therefore, by studying the orbits of the free critical points, all the attracting elements can be found. This result is valid for both complex and real iterative functions.

In this section, we analyze the performance of three different schemes with memory—our proposed scheme (22) and two known similar schemes, which are defined in (5), taken from Chicharro et al. [18]—on quadratic polynomials that we denote by , which use Kurchatov’s divided difference in order to introduce memory. We also analyze scheme

where , and = . It was from Petkovic et al. in [15]. We denote it by and use it with .

These schemes have several similarities: they are vectorial schemes with memory, and they include divided difference operators in their iterative expressions, which are mainly used to define their respective accelerating parameters. However, we are going to see that the use of these elements does not determine the wideness of the sets of initial estimations converging to the roots, when real multidimensional discrete dynamics tools are used.

In order to extend the results to any quadratic polynomial, the first analysis is shown for on , so that the value of c yields a situation with real, complex or multiple roots depending on , or , respectively. The multidimensional rational function in this particular case will be denoted in what follows by . This analysis can be summarized in the following result.

Theorem 4.

The multidimensional rational operator associated with the proposed scheme , when it is applied on polynomial , is

and it is

for . Moreover, satisfies:

- (a) There are no strange fixed points.

- (b) If , there are eight different components of the free critical points, which are defined as , , being the (real) roots of polynomial . If , there are no free critical points.

Proof.

Let us remark that operator can be obtained by directly applying method to polynomial . Moreover, we know that fixed points of will have equal components. This is the reason why, when we force the three consecutive iterates to be equal (), then the only fixed points are composed by the roots . That is, and .

Regarding the critical points, the Jacobian matrix is

with eigenvalues .

By definition, the components of critical points are those values that make the eigenvalues of the Jacobian matrix vanish. By using the change of variables on the second factor of the numerator of the nonzero eigenvalue, we get . This polynomial only has real roots for , denoted by , . Then, if , there exist eight different components of free critical points , . □

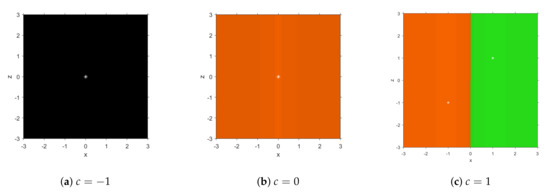

A very useful tool to visualize the analytical results is the dynamical plane of the system, composed by the set of the different basins of attraction. It can be drawn by means of the programs presented in [28], after some changes to adapt them to schemes with memory. The dynamical plane of a method is built by calculating the orbit of a mesh of starting points (although z does not appear in the rational function ) and painting each of them in a different color (orange and green in this case) depending on the attractor they converge to (marked as a white star), with a tolerance of . Additionally, they appear in black if the orbit has not reached any attracting fixed point in a maximum of 80 iterations. In Figure 1, we show the dynamical planes of this method for selected values of c, in order to show its performance.
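The procedure for drawing a dynamical plane can be sketched as follows. This is not the code of [28]: the secant method on $p(x) = x^2 - c$ is used as a simple stand-in for a scheme with memory, the tolerance `1e-3` and the mesh limits are assumptions (the values used in the article are not recoverable here), and only the limit of 80 iterations is taken from the text. Each mesh point (z, x) is labelled with the index of the root it converges to, or with -1 when no root is reached (the black points).

```python
import numpy as np

def basin_index(z, x, c, roots, tol=1e-3, max_iter=80):
    """Index of the root reached from the starting pair (z, x), or -1."""
    z, x = float(z), float(x)
    for _ in range(max_iter):
        denom = z + x
        if denom == 0.0:           # secant step undefined at this pair
            return -1
        z, x = x, (z * x + c) / denom
        for k, r in enumerate(roots):
            if abs(x - r) < tol and abs(z - r) < tol:
                return k
    return -1

def dynamical_plane(c, lo=-5.0, hi=5.0, n=41):
    """Matrix of basin labels on an n-by-n mesh of starting pairs (z, x), c > 0."""
    roots = (np.sqrt(c), -np.sqrt(c))
    grid = np.linspace(lo, hi, n)
    plane = np.array([[basin_index(z, x, c, roots) for z in grid] for x in grid])
    return grid, plane

grid, plane = dynamical_plane(1.0, n=11)
```

The integer matrix `plane` can then be passed to any image routine to assign one color per label.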

Figure 1.

Dynamical planes of method on , for different values of c.

Let us remark that, as by definition all the fixed points have equal components, they will always appear in the bisector of the first and third quadrants of the dynamical plane. It can be observed that, when there are no real roots (, Figure 1a), no other attracting element appears; when , the only root is multiple and the convergence is linear, so there is global convergence to , as can be seen in Figure 1b. In Figure 1c, the convergence to the roots is also observed to be global, their basins of attraction being two symmetrical half-planes, which is exactly the same behavior as Newton’s method on quadratic polynomials.

Moreover, let us remark that in the case of real simple roots there are no free critical points, so the only possible behavior of the method is convergence to the roots. The reason is that each basin of attraction must contain a critical point; if the only critical points are the roots themselves, then no other attracting behavior is possible.

Comparisons with Other Methods with Memory for Nonlinear Problems

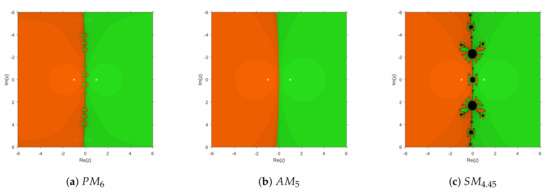

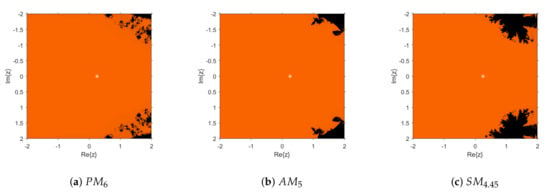

Here we compare the stability of the proposed method with that of known and schemes. Firstly, we show their performances on quadratic polynomials: . The dynamical planes are plotted in the complex plane, by starting the iterative methods with memory with an initial value of the accelerating parameter of in each case, for any initial guess , defined in a mesh of points and with a maximum of 80 iterations.

As shown in Figure 2 and Figure 3, all three methods have been used to estimate the complex roots of the unity of second and third degrees. It can be observed that the performances with and were very similar for quadratic polynomials, showing global convergence, similar to that of Newton’s scheme.

Figure 2.

Complex dynamical planes of new and known methods on .

Figure 3.

Complex dynamical planes of new and known methods on .

However, this global convergence is held on cubic polynomials in the case of , but in case of , black areas of no convergence to the roots appear. Iterative method shows these black regions both for quadratic and cubic polynomials, which are bigger in the cubic case.

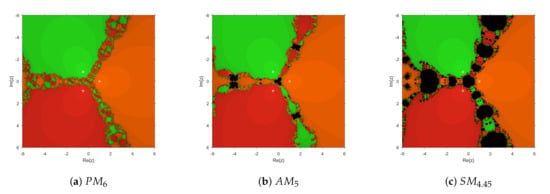

This good performance of the set of converging initial estimations of proposed method is also shown in the case of non-polynomial equations: let us notice the performances of all known and new methods on the complex rational function , with a simple zero at . The basins of attraction of the root appearing in Figure 4, being similar, are wider in case of than for or .

Figure 4.

Complex dynamical planes of new and known methods on with .

Additionally, in Figure 5 the basin of attraction of , the simple root of , is very wide in the case of , with small areas of no convergence to the root, in comparison with those of known methods and .

Figure 5.

Complex dynamical planes of new and known methods on , with .

Thus, it can be concluded that the proposed method has very stable performance in both real and complex spaces, for polynomial as well as non-polynomial functions, in the scalar case. In spite of having the same accelerating parameter as the method from [18], the new scheme has proven to be better than the known ones in terms of order of convergence and also in terms of stability. In the next section, its numerical performance on nonlinear systems of different sizes is demonstrated.

5. Numerical Experiments

This section presents the validity of the proposed scheme with memory on some numerical problems. The proposed method (22) was applied to numerical problems and compared with other existing techniques with memory [15,18], presented as and , respectively. In all numerical problems, initially the parameter , where I is the identity matrix, and for method , another parameter was considered. All the numerical tests were conducted by using Mathematica 10, with 400 multiple precision digits of mantissa. For all the examples, we have included in the respective tables the following information: ; ; the residual at ; approximated computational order of convergence (ACOC) denoted as [29]

and .

Further on, the iterative procedure was stopped after three iterations and problems were tested on three different initial values. Notice that the meaning of is in all the tables.
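The approximated computational order of convergence can be estimated from the stored iterates alone. The sketch below assumes the usual definition from [29], in which the ratio of logarithms of consecutive differences $\|x^{(j+1)} - x^{(j)}\|$ is used; the function name and the Newton test sequence are illustrative choices.

```python
import numpy as np

def acoc(iterates):
    """ACOC estimates from a list of iterates (at least four are needed).

    Assumed formula:
    ln(d_{j+1} / d_j) / ln(d_j / d_{j-1}), with d_j = ||x^(j+1) - x^(j)||.
    """
    x = [np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates]
    d = [np.linalg.norm(x[i + 1] - x[i]) for i in range(len(x) - 1)]
    return [np.log(d[i + 1] / d[i]) / np.log(d[i] / d[i - 1])
            for i in range(1, len(d) - 1)]

# Illustration with Newton's method on x**2 - 2, whose order is two.
seq = [1.5]
for _ in range(4):
    seq.append(seq[-1] - (seq[-1] ** 2 - 2.0) / (2.0 * seq[-1]))
estimates = acoc(seq)
```

In double precision only a few iterations can be used before the differences reach rounding level, which is one reason why high-order methods are usually tested with multiple precision arithmetic.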

Example 1.

Consider the following system of nonlinear equations in four unknown variables:

The approximate solution is . Table 1 depicts that the proposed scheme with all different initial guesses converged to a solution much faster than the methods and . Clearly, the residual error, functional error and computational time of proposed method were superior to those of and .

Table 1.

Convergence behavior of the schemes for Example 1.

Example 2.

Another system of nonlinear equations defined as:

The exact solution of this problem is This system of nonlinear equations was examined on initial guesses , and ; and results are shown in Table 2. The proposed technique performed better than existing scheme , whereas scheme diverged for initial guess . In the case of a large nonlinear system of equations, the proposed methods demonstrated an efficient order of convergence and short CPU time when compared with other schemes.

Table 2.

Convergence behavior of schemes for Example 2.

Example 3.

Next, we consider the transcendental system of equations shown below

This problem has an approximated solution of . The performance of the proposed method was better than that of the other methods, as shown in Table 3.

Table 3.

Convergence behavior of schemes for Example 3.

Example 4.

We have tested the proposed method on another well-known nonlinear problem, Fisher’s equation [30], which has many applications in chemistry, heat and mass transfer, biology and ecology. Basically, it describes the interaction between diffusion and reaction. This nonlinear problem with homogeneous Neumann boundary conditions and diffusion coefficient D can be defined as:

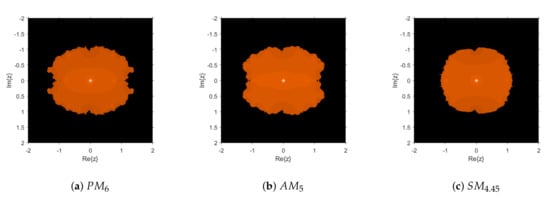

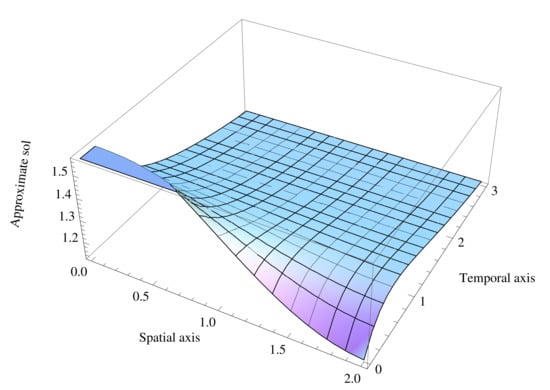

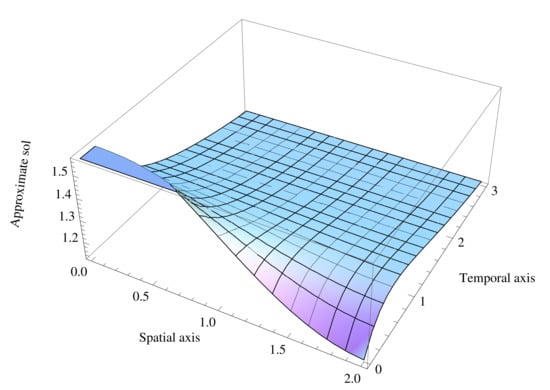

Applying a finite difference discretization to Equation (31) leads to a system of nonlinear equations. Suppose is its approximate solution at the grid points of the mesh. Let M and N be the numbers of steps in the x and t directions, and h and k be the respective step sizes. We use a central difference to approximate the second-order partial derivative ; a backward difference for the first-order derivative with respect to t, as ; and a forward difference for the first-order derivative with respect to x, as . The solution of the system is obtained by taking steps along the x-axis, , and the t-axis, , which form a nonlinear system of size 400, with the initial vector . The results have been computed by the different methods and are shown in Table 4. It can be noticed that the lowest execution time and residual error at the third iteration correspond to the proposed method . Moreover, the approximate solution has been plotted in Figure 6.
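For reference, the three difference quotients named above take the following standard form. The notation $w_{i,j} \approx u(x_i, t_j)$, with $x_i = ih$ and $t_j = jk$, is an assumption made for this sketch and may differ from the symbols used in the article.

```latex
% Assumed notation: w_{i,j} \approx u(x_i, t_j), x_i = i h, t_j = j k
u_{xx}(x_i, t_j) \approx \frac{w_{i+1,j} - 2 w_{i,j} + w_{i-1,j}}{h^2}, \qquad
u_t(x_i, t_j) \approx \frac{w_{i,j} - w_{i,j-1}}{k}, \qquad
u_x(x_i, t_j) \approx \frac{w_{i+1,j} - w_{i,j}}{h}.
```

Substituting the first two quotients into the differential equation at every interior grid point, and the third one into the Neumann boundary conditions, gives one nonlinear algebraic equation per unknown grid value.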

Table 4.

Convergence behavior of schemes for Example 4.

Figure 6.

Approximated Solution for Fisher’s Equation .

6. Conclusions

The number of iterative schemes with memory for solving multidimensional problems is low, in part due to the difficulty of the task and in part due to the lack of efficiency of the resulting methods when the usual techniques employed in the design of scalar methods with memory, such as high-degree interpolation polynomials, are applied. With the procedure used, the iterative expression of the scheme with memory remains simple, and the order of the original method is increased from four to six. Thus, the efficiency is highly improved. Moreover, it has been proven, by means of the associated multidimensional discrete dynamical system, that it is a very stable scheme with wide basins of attraction and global convergence on quadratic polynomials. Its performance on other nonlinear functions was also found to be very stable in comparison with other known schemes. The numerical tests confirmed these results, even for large nonlinear systems and applied problems, such as Fisher’s partial differential equation.

Author Contributions

Conceptualization, R.B.; methodology, A.C.; software, S.B.; validation, J.R.T.; formal analysis, R.B.; investigation, J.R.T.; writing—original draft preparation, S.B.; writing—review and editing, A.C.; supervision, J.R.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by PGC2018-095896-B-C22 (MCIU/AEI/FEDER, UE).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Burden, R.L.; Faires, J.D. Numerical Analysis; PWS Publishing Company: Boston, MA, USA, 2001.

- Grosan, C.; Abraham, A. A new approach for solving nonlinear equations systems. IEEE Trans. Syst. Man Cybernet Part A Syst. Hum. 2008, 38, 698–714.

- Moré, J.J. A collection of nonlinear model problems. In Computational Solution of Nonlinear Systems of Equations, Lectures in Applied Mathematics; Allgower, E.L., Georg, K., Eds.; American Mathematical Society: Providence, RI, USA, 1990; Volume 26, pp. 723–762.

- Tsoulos, I.G.; Stavrakoudis, A. On locating all roots of systems of nonlinear equations inside bounded domain using global optimization methods. Nonlinear Anal. Real World Appl. 2010, 11, 2465–2471.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- Samanskii, V. On a modification of the Newton method. Ukrain. Math. 1967, 19, 133–138.

- Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

- Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint Methods for the Solution of Nonlinear Equations; Elsevier: Amsterdam, The Netherlands, 2012.

- Cordero, A.; Lotfi, T.; Bakhtiari, P.; Torregrosa, J.R. An efficient two-parametric family with memory for nonlinear equations. Numer. Algorithms 2015, 68, 323–335.

- Wang, X.; Zhang, T.; Qin, Y. Efficient two-step derivative-free iterative methods with memory and their dynamics. Int. J. Comput. Math. 2016, 93, 1423–1446.

- Cordero, A.; Lotfi, T.; Torregrosa, J.R.; Assari, P.; Taher-Khani, S. Some new bi-accelerator two-point method for solving nonlinear equations. J. Comput. Appl. Math. 2016, 35, 251–267.

- Bakhtiari, P.; Cordero, A.; Lotfi, T.; Mahdiani, K.; Torregrosa, J.R. Widening basins of attraction of optimal iterative methods for solving nonlinear equations. Nonlinear Dyn. 2017, 87, 913–938.

- Howk, C.L.; Hueso, J.L.; Martínez, E.; Teruel, C. A class of efficient high-order iterative methods with memory for nonlinear equations and their dynamics. Math. Meth. Appl. Sci. 2018, 41, 7263–7282.

- Sharma, J.R.; Arora, H. Efficient higher order derivative-free multipoint methods with and without memory for systems of nonlinear equations. Int. J. Comput. Math. 2018, 95, 920–938.

- Petković, M.S.; Sharma, J.R. On some efficient derivative-free iterative methods with memory for solving systems of nonlinear equations. Numer. Algor. 2016, 71, 457–474.

- Narang, M.; Bathia, S.; Alshomrani, A.S.; Kanwar, V. General efficient class of Steffensen type methods with memory for solving systems on nonlinear equations. Comput. Appl. Math. 2019, 352, 23–39.

- Cordero, A.; Maimó, J.G.; Torregrosa, J.R.; Vassileva, M.P. Iterative methods with memory for solving systems of nonlinear equations using a second order approximation. Mathematics 2019, 7, 1069.

- Chicharro, F.I.; Cordero, A.; Garrido, N.; Torregrosa, J.R. On the improvement of the order of convergence of iterative methods for solving nonlinear systems by means of memory. Appl. Math. Lett. 2020, 104.

- Campos, B.; Cordero, A.; Torregrosa, J.R.; Vindel, P. A multidimensional dynamical approach to iterative methods with memory. Appl. Math. Comput. 2015, 271, 701–715.

- Campos, B.; Cordero, A.; Torregrosa, J.R.; Vindel, P. Stability of King’s family of iterative methods with memory. Comput. Appl. Math. 2017, 318, 504–514.

- Choubey, N.; Cordero, A.; Jaiswal, J.P.; Torregrosa, J.R. Dynamical techniques for analyzing iterative schemes with memory. Complexity 2018, 2018.

- Chicharro, F.I.; Cordero, A.; Garrido, N.; Torregrosa, J.R. Stability and applicability of iterative methods with memory. J. Math. Chem. 2019, 57, 1282–1300.

- Chicharro, F.I.; Cordero, A.; Garrido, N.; Torregrosa, J.R. On the choice of the best members of the Kim family and the improvement of its convergence. Math. Meth. Appl. Sci. 2020, 43, 8051–8066.

- Chicharro, F.I.; Cordero, A.; Garrido, N.; Torregrosa, J.R. Impact on stability by the use of memory in Traub-type schemes. Mathematics 2020, 8, 274.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. A modified Newton-Jarratt’s composition. Numer. Alg. 2010, 5, 87–99.

- Blanchard, P. Complex Analytic Dynamics on the Riemann Sphere. Bull. AMS 1984, 11, 85–141.

- Robinson, R.C. An Introduction to Dynamical Systems, Continuous and Discrete; American Mathematical Society: Providence, RI, USA, 2012.

- Chicharro, F.I.; Cordero, A.; Torregrosa, J.R. Drawing dynamical and parameters planes of iterative families and methods. Sci. World 2013, 2013.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Sauer, T. Numerical Analysis, 2nd ed.; Pearson: Boston, MA, USA, 2012.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).