On the Classification of MR Images Using “ELM-SSA” Coated Hybrid Model

Abstract

1. Introduction

- (i) To develop an automatic biomedical image classification model that offers satisfactory and reliable performance on large MR image datasets.

- (ii) To further improve performance by proposing hybridized models whose parameters are tuned with bioinspired optimization techniques.

- (iii) To address the lack of salp-inspired algorithms in the literature, which is a main motivation of this paper.

- (i) Which activation function of the ELM network yields faster convergence during training?

- (ii) Which bioinspired technique optimizes the ELM parameters best?

- (iii) How much performance improvement is achieved using proper classification models?

- (iv) ELM-SSA exhibits superior performance over FLANN, RBFN, and BPNN models hybridized with PSO, DE, and SSA schemes.

- (v) On average, the ELM-SSA model has a lower execution time than the other models.

- (vi) In general, the proposed ELM-SSA model outperforms other hybridized classification models such as FLANN-SSA, with an improvement in accuracy of 5.31% and 1.02% for the Alzheimer’s and Hemorrhage datasets, respectively.

- (vii) The ELM-SSA model produces 8.79% and 2.06% better accuracy than RBFN-SSA for the two datasets, respectively.

- (viii) ELM-SSA also shows 7.6% and 1.02% higher accuracy than BPNN-SSA for the two datasets, respectively.

2. Materials and Methods

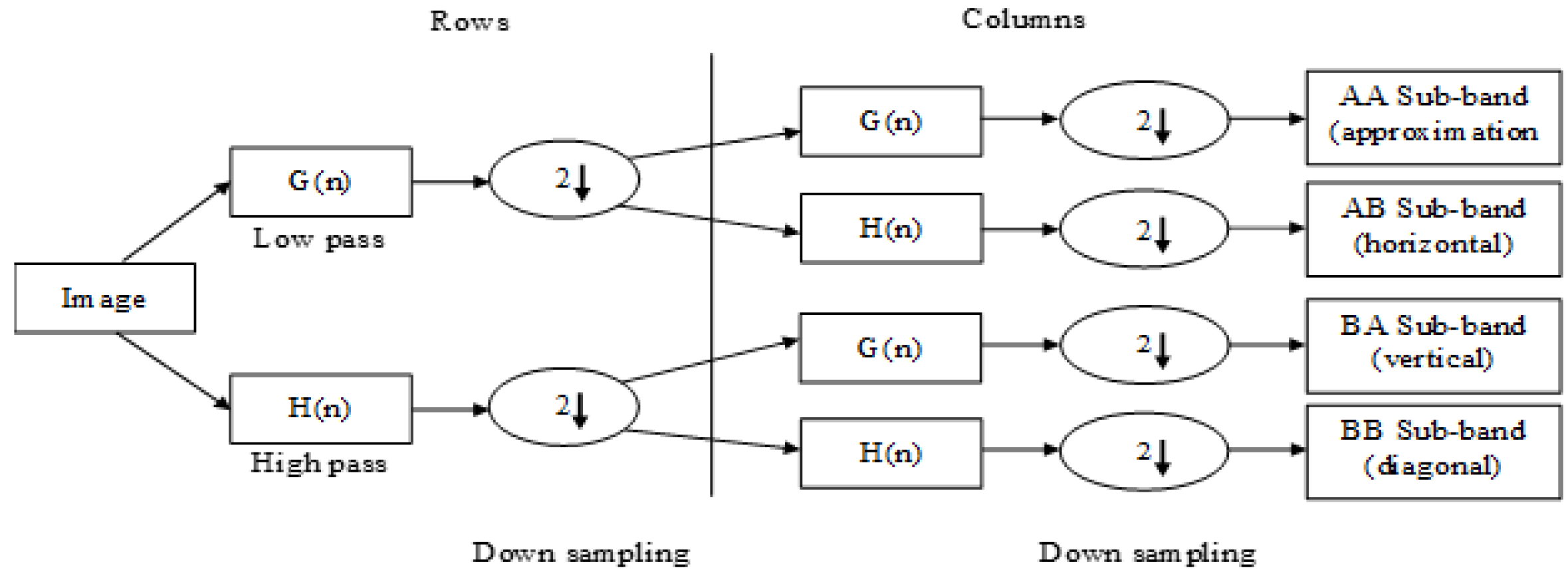

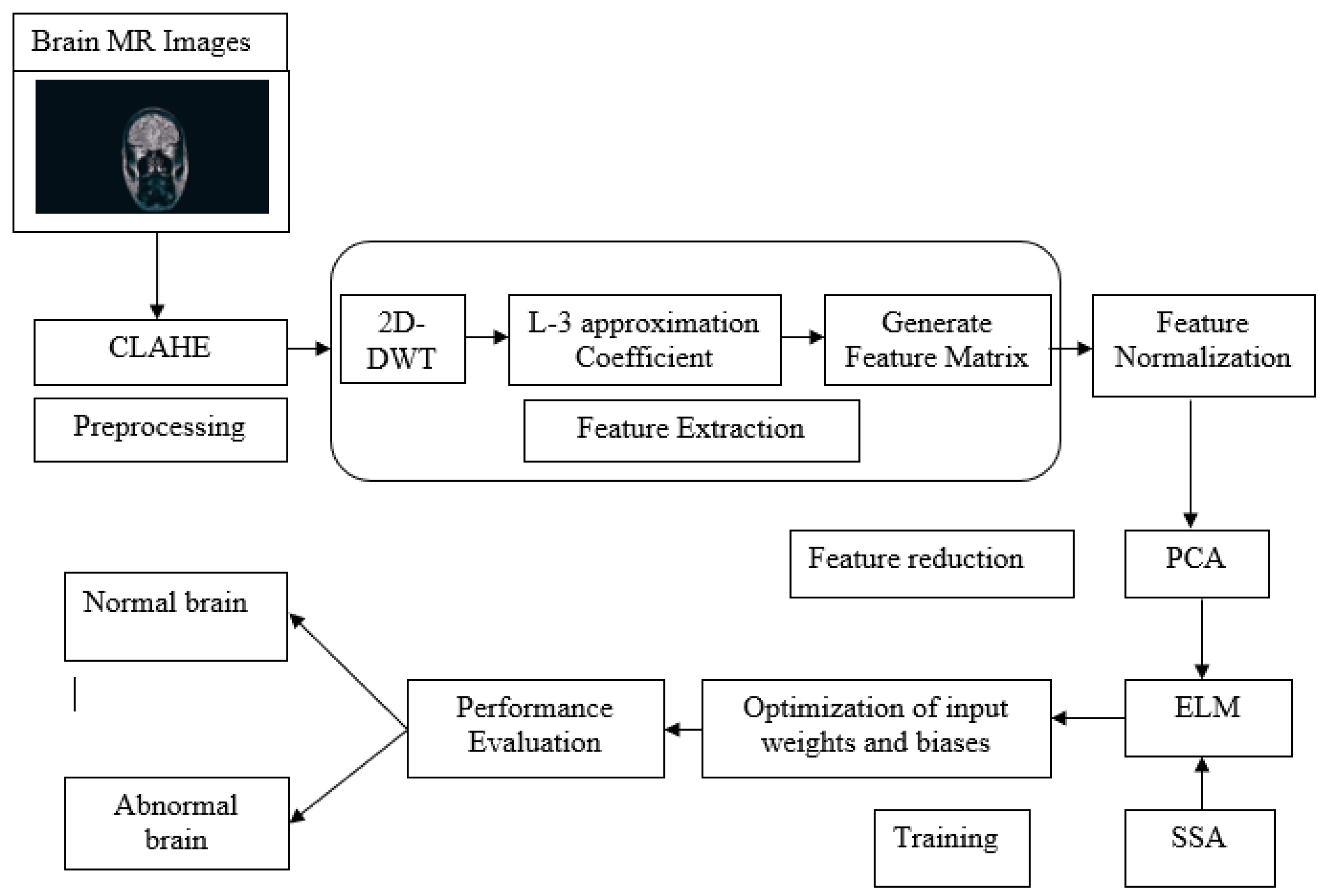

2.1. 2D-DWT for Feature Extraction

Algorithm 1 Extraction of Feature Matrix (FM)

- Input: M: Total number of pictures having size .
- Output: FM having size : The coefficients of the L-3 Haar wavelet are calculated by .
- Step 1: Initialize, , (Amount of extracted features)
- Step 2: Generate an empty matrix and empty vector
- Step 3: for to M do
  - Step 4: Obtain th MR image
  - Step 5:
  - Step 6: while do
    - Step 7: for do
      - Step 8: for do
        - Step 9:
        - Step 10:
      - Step 11: end for
    - Step 12: end for
  - Step 13: end while
  - Step 14:
- Step 15: end for
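The idea behind Algorithm 1 can be illustrated with a minimal sketch, assuming only the level-3 approximation (LL) sub-band of the Haar transform is kept as the feature vector. The function names `haar_approx` and `extract_features` are hypothetical, and the 288 × 288 image size in the usage lines is an assumption chosen so that the 36 × 36 = 1296 coefficients match the attribute count listed in the dataset table; the authors' exact procedure may differ.

```python
import numpy as np

def haar_approx(img):
    """One level of the 2D Haar DWT, keeping only the approximation (LL) sub-band.

    Assumes both image dimensions are even.
    """
    a = img[0::2, 0::2]
    b = img[0::2, 1::2]
    c = img[1::2, 0::2]
    d = img[1::2, 1::2]
    # Orthonormal Haar: low-pass along rows and columns gives (a+b+c+d)/2.
    return (a + b + c + d) / 2.0

def extract_features(images, levels=3):
    """Build a feature matrix with one flattened level-`levels` LL band per image."""
    rows = []
    for img in images:
        coeffs = np.asarray(img, dtype=float)
        for _ in range(levels):
            coeffs = haar_approx(coeffs)
        rows.append(coeffs.ravel())
    return np.vstack(rows)

# Hypothetical usage: 4 synthetic 288 x 288 images (size is an assumption).
images = np.ones((4, 288, 288))
fm = extract_features(images)
print(fm.shape)
```

Each level halves both image dimensions, so three levels reduce the pixel count by a factor of 64 before PCA is applied.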

2.2. Principal Component Analysis (PCA) for Reducing Features

Algorithm 2 Feature reduction using PCA [34]

- Input: Primary feature vector.
- Output: Reduced feature vector.
- Let X be an input data set of N points, each having p dimensions, as represented by Equation (4).
- Step 1: Compute the mean of X, as given by Equation (5).
- Step 2: Find the deviation of each point from the mean.
- Step 3: Calculate the covariance matrix, as given by Equation (6). A positive covariance between dimensions i and j means the two vary together, a zero covariance means they are uncorrelated, and a negative covariance means they vary in opposite directions.
- Step 4: Compute the eigenvectors and eigenvalues of the covariance matrix.
- Step 5: Rearrange the eigenvectors in order of decreasing eigenvalue.
- Step 6: The eigenvectors with the largest eigenvalues span a new space that holds the essential coefficients, as represented by Equation (8).
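The steps of Algorithm 2 can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' code: the function name `pca_reduce` is hypothetical, and normalizing the covariance by N − 1 is an assumption, since Equation (6) is not reproduced here.

```python
import numpy as np

def pca_reduce(X, k):
    """Project the N x p matrix X onto its k leading principal components."""
    mean = X.mean(axis=0)                   # Step 1: mean of each dimension
    D = X - mean                            # Step 2: deviation from the mean
    C = D.T @ D / (X.shape[0] - 1)          # Step 3: covariance (N - 1 is an assumption)
    vals, vecs = np.linalg.eigh(C)          # Step 4: eigen-decomposition (C is symmetric)
    order = np.argsort(vals)[::-1]          # Step 5: sort by decreasing eigenvalue
    W = vecs[:, order[:k]]                  # Step 6: keep the k leading eigenvectors
    return D @ W, vals[order]

# Hypothetical usage on synthetic data: reduce 1296 features to 39.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 1296))
Z, eigenvalues = pca_reduce(X, 39)
print(Z.shape)
```

`np.linalg.eigh` is used rather than `np.linalg.eig` because the covariance matrix is symmetric, which guarantees real eigenvalues.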

2.3. k-Fold Cross-Validation

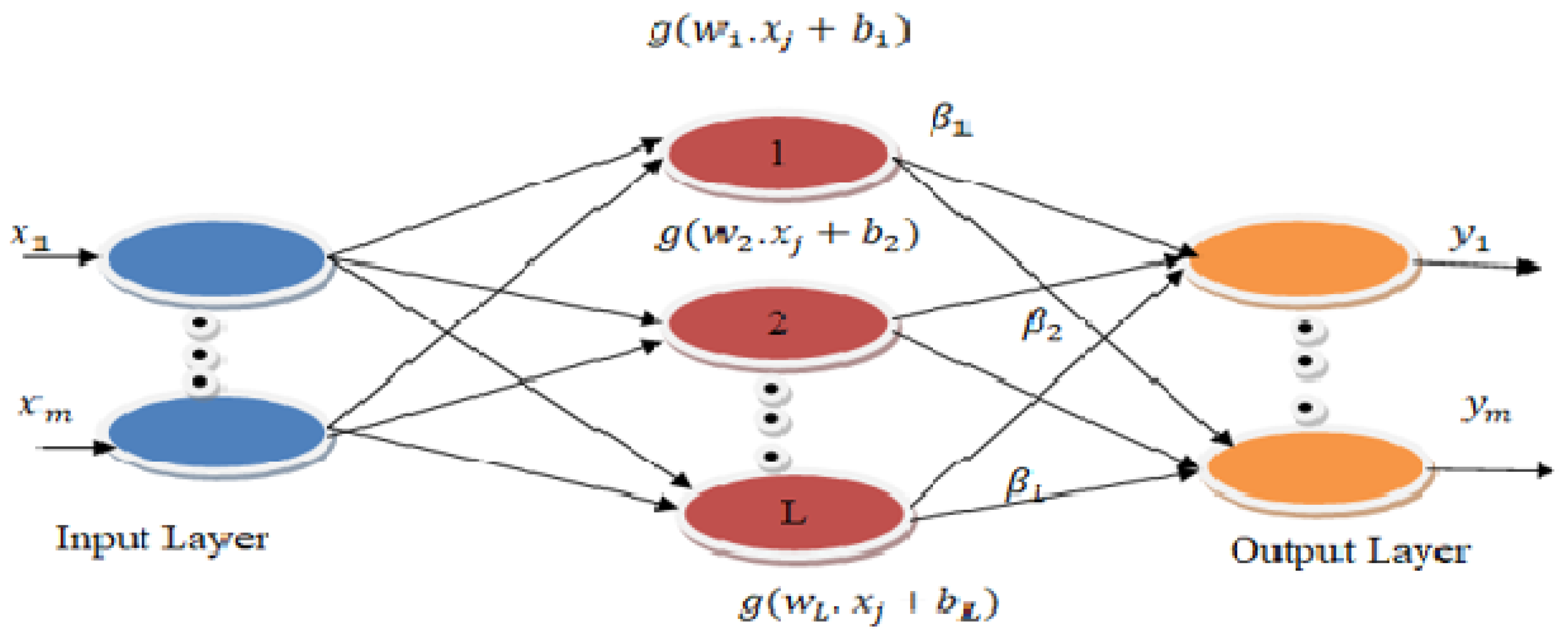

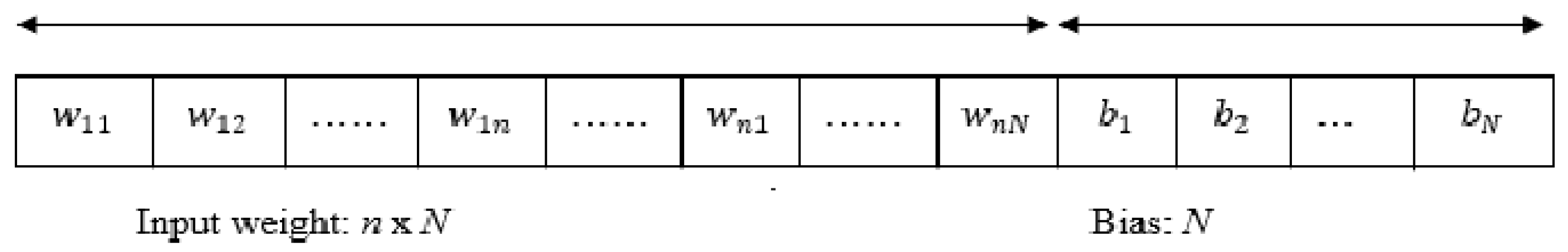

2.4. Classification Using ELM

2.5. Salp Swarm Algorithm (SSA)

- : position vector of food source in dimension.

- U and L: upper and lower bounds, respectively.

- : are random numbers between 0 and 1.

Algorithm 3 Salp Swarm Algorithm pseudocode

- Initialize the salp population and its upper and lower bounds.
- While the termination condition is not met, do:
  - Calculate the RMSE for every salp.
  - Take the salp with the lowest RMSE as the leader.
  - Randomly initialize the random coefficients in [0, 1].
  - Update the remaining coefficient by Equation (17).
  - For each salp, do:
    - If the salp is the leader, update its location by Equation (16).
    - Otherwise, update the follower salp location by Equation (18).
  - End for.
  - Use the upper and lower limits of the variables to bring the population back within bounds.
- End while.
- Return F.
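A minimal sketch of the loop in Algorithm 3 follows. It uses the standard SSA update rules from Mirjalili et al. (listed in the references), which are assumed to correspond to Equations (16) to (18) but may differ in detail. The function name `ssa_minimize`, the symbols `c1`, `c2`, `c3`, and the sphere objective standing in for the RMSE are all illustrative assumptions.

```python
import numpy as np

def ssa_minimize(objective, lb, ub, n_salps=30, n_iter=100, seed=0):
    """Minimize `objective` over the box [lb, ub] with a basic salp swarm."""
    rng = np.random.default_rng(seed)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    dim = lb.size
    X = lb + rng.random((n_salps, dim)) * (ub - lb)   # initial population
    fit = np.array([objective(x) for x in X])
    best = X[fit.argmin()].copy()                     # food source F
    best_f = float(fit.min())
    history = [best_f]
    for l in range(1, n_iter + 1):
        # c1 shrinks over time, moving from exploration to exploitation.
        c1 = 2.0 * np.exp(-(4.0 * l / n_iter) ** 2)
        for i in range(n_salps):
            if i == 0:
                # Leader moves around the food source.
                c2 = rng.random(dim)
                c3 = rng.random(dim)
                step = c1 * ((ub - lb) * c2 + lb)
                X[i] = np.where(c3 >= 0.5, best + step, best - step)
            else:
                # Follower moves to the midpoint with its predecessor.
                X[i] = 0.5 * (X[i] + X[i - 1])
        X = np.clip(X, lb, ub)                        # enforce the bounds
        fit = np.array([objective(x) for x in X])
        if fit.min() < best_f:
            best_f = float(fit.min())
            best = X[fit.argmin()].copy()
        history.append(best_f)
    return best, best_f, history

# Hypothetical usage: the sphere function stands in for the RMSE objective.
best, best_f, history = ssa_minimize(lambda x: float(np.sum(x ** 2)),
                                     [-5.0] * 3, [5.0] * 3)
print(best_f)
```

In a hybrid such as ELM-SSA, the objective would instead evaluate the classifier's error for the parameter vector encoded by each salp.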

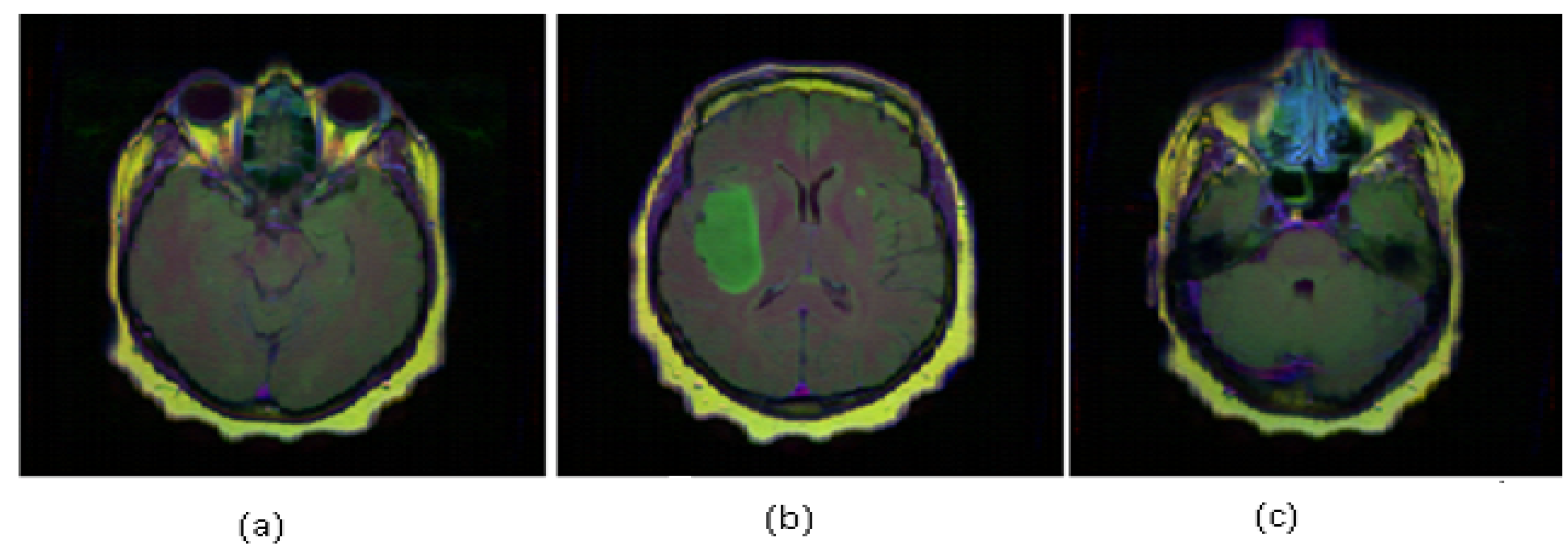

2.6. Data Set Description

3. Proposed Methodology

4. Experimental Results and Discussion

4.1. System Configuration

4.2. Performance Evaluation

- Accuracy: It measures how many brain images are classified correctly out of the total image set tested:

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

  where $TP$ = True Positive, $TN$ = True Negative, $FP$ = False Positive, and $FN$ = False Negative.

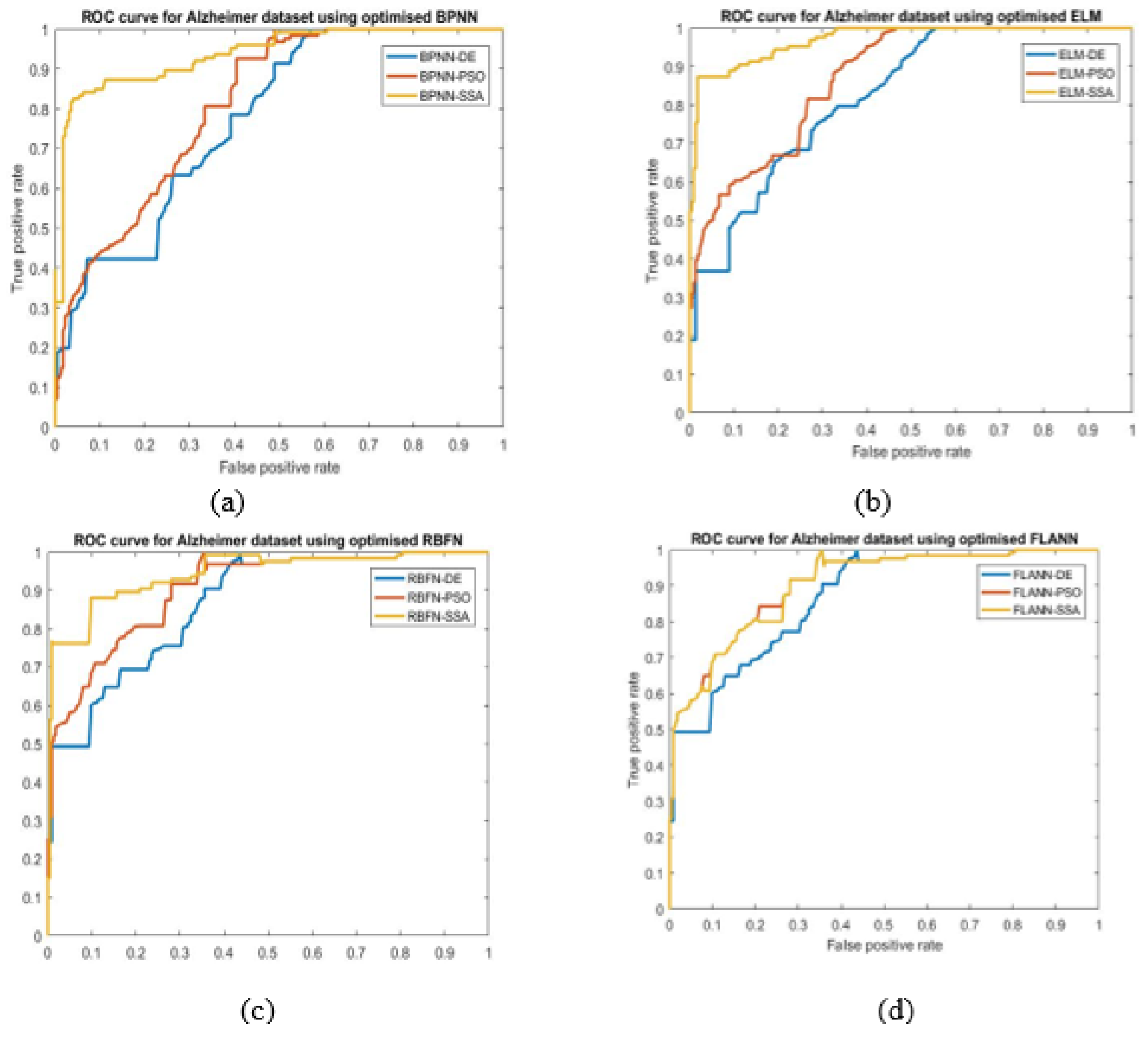

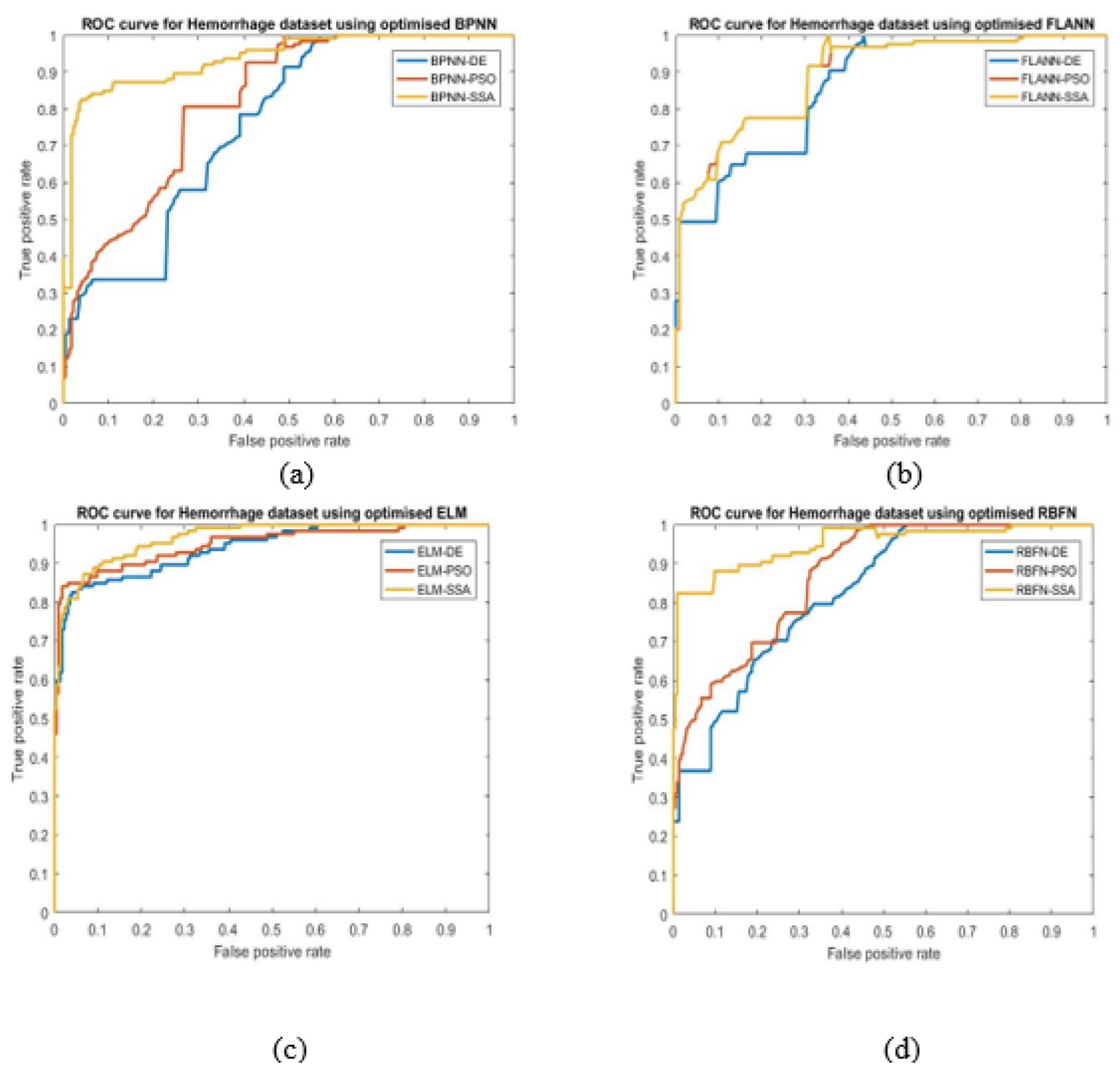

- ROC: It is an evaluation measure for binary classification that indicates the diagnostic ability of a classifier. It plots the true positive rate (TPR) against the false positive rate (FPR).

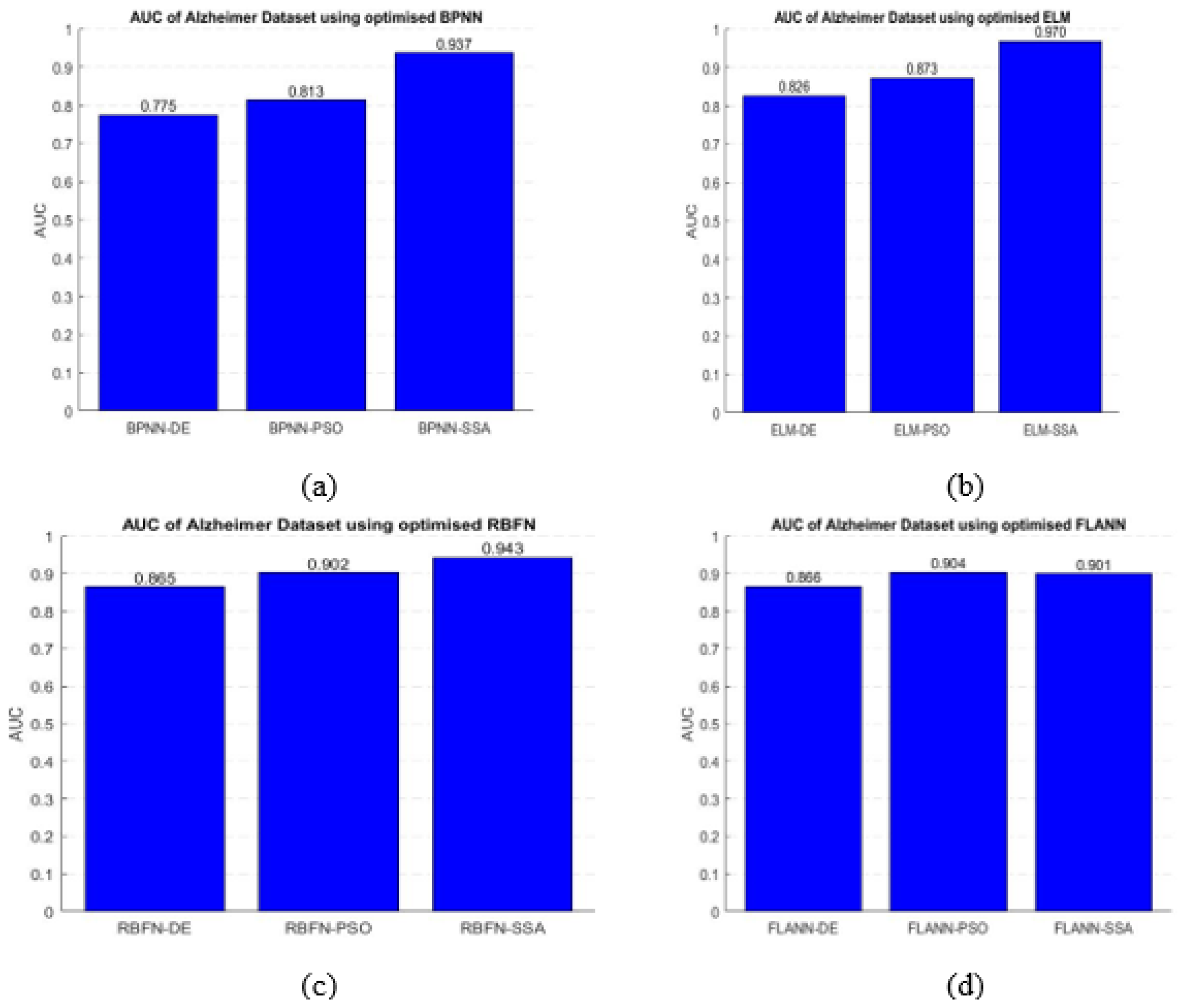

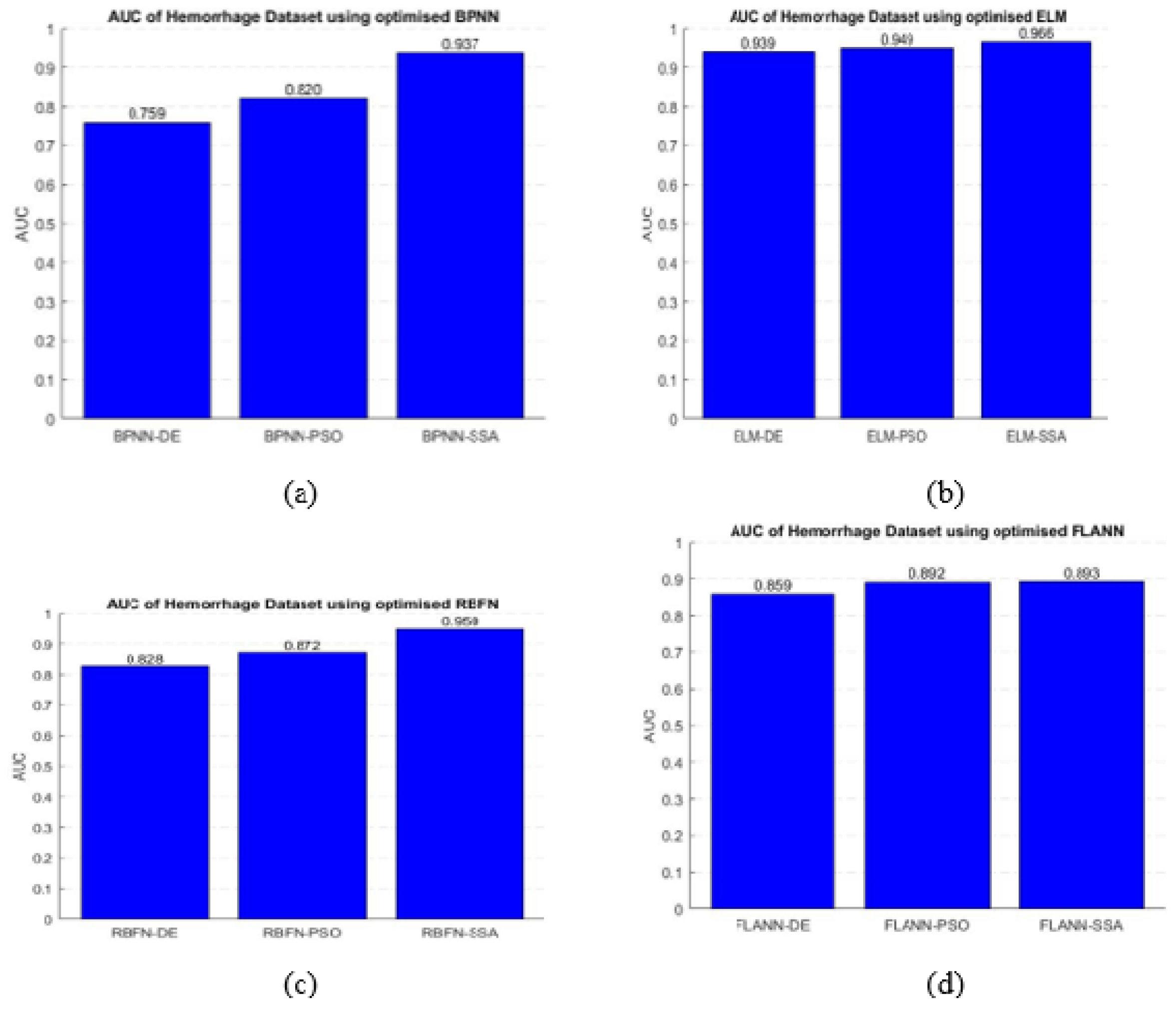

- AUC: It measures the ability of a classifier to differentiate between classes and summarizes the ROC curve. A higher AUC indicates better separation between the two classes.

- Overall improvement: It represents the percentage improvement of the proposed classifier over other classification models in terms of accuracy, AUC, and ROC.

- Speedup: It compares the execution time of a standard classifier with that of the proposed hybrid classifier:

  $$\text{Speedup} = \frac{T_{\text{standard}}}{T_{\text{proposed}}}$$

  where $T_{\text{standard}}$ is the execution time of the standard classifier and $T_{\text{proposed}}$ is the execution time of the proposed hybrid classifier.
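The measures listed above reduce to simple arithmetic, sketched below. The function names are hypothetical, and the relative-improvement formula (difference divided by the baseline value) is inferred from the values in the improvement table rather than stated in the text.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correctly classified images."""
    return (tp + tn) / (tp + tn + fp + fn)

def tpr_fpr(tp, tn, fp, fn):
    """True positive rate and false positive rate, the two axes of a ROC plot."""
    return tp / (tp + fn), fp / (fp + tn)

def improvement_pct(proposed, baseline):
    """Relative improvement of the proposed model over a baseline, in percent."""
    return 100.0 * (proposed - baseline) / baseline

def speedup(t_standard, t_proposed):
    """Ratio of the standard model's execution time to the proposed model's."""
    return t_standard / t_proposed

# Values taken from the result tables: ELM-SSA versus plain ELM, Alzheimer's dataset.
print(improvement_pct(0.99, 0.87))   # accuracy 0.99 vs 0.87
print(speedup(700, 660))             # 700 s vs 660 s
```

These calls give roughly 13.79% and 1.06×, in line with the corresponding table entries.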

4.3. Parameters Setting

4.4. Features Extraction and Reduction

4.5. Performance Comparison

4.6. Analysis of Computational Time

- In this work, the Alzheimer’s and Hemorrhage brain MRI datasets have been considered and are used for classification with RBFN, FLANN, BPNN, and ELM models.

- The training process of ELM is very simple, but it needs more hidden units than the RBFN, FLANN, and BPNN models.

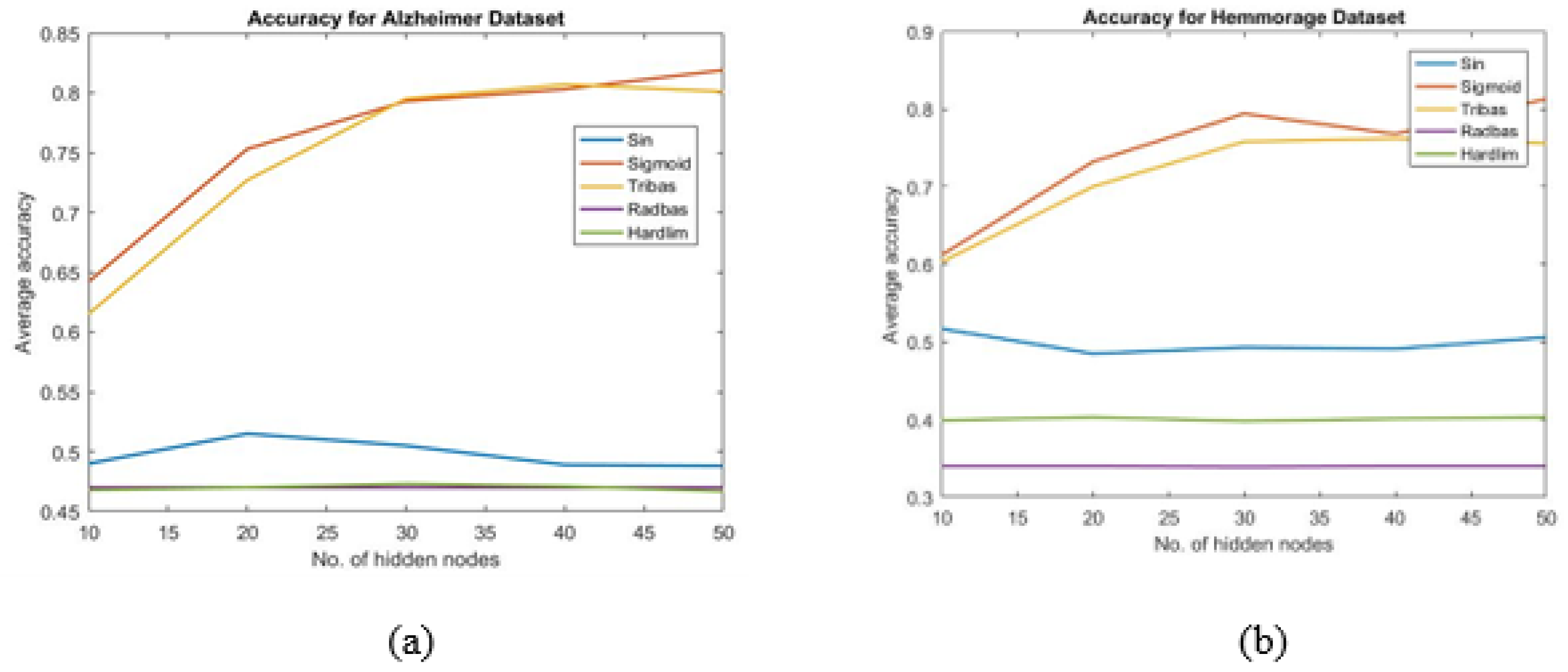

- A suitable activation function and an appropriate number of hidden nodes for ELM are chosen by trial and error. In this work, the sigmoid activation function with 50 hidden units is found to provide better accuracy than the other combinations tried.

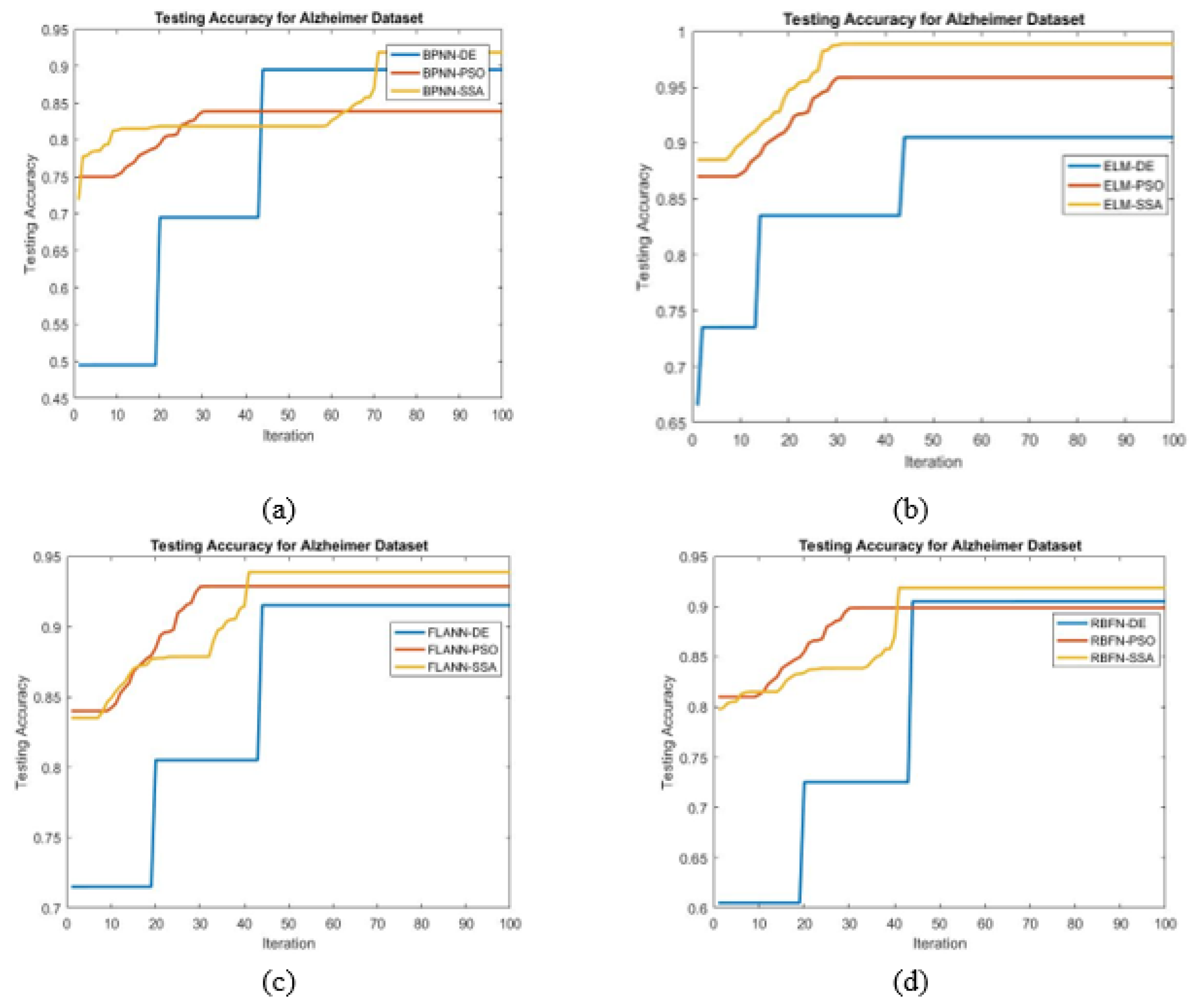

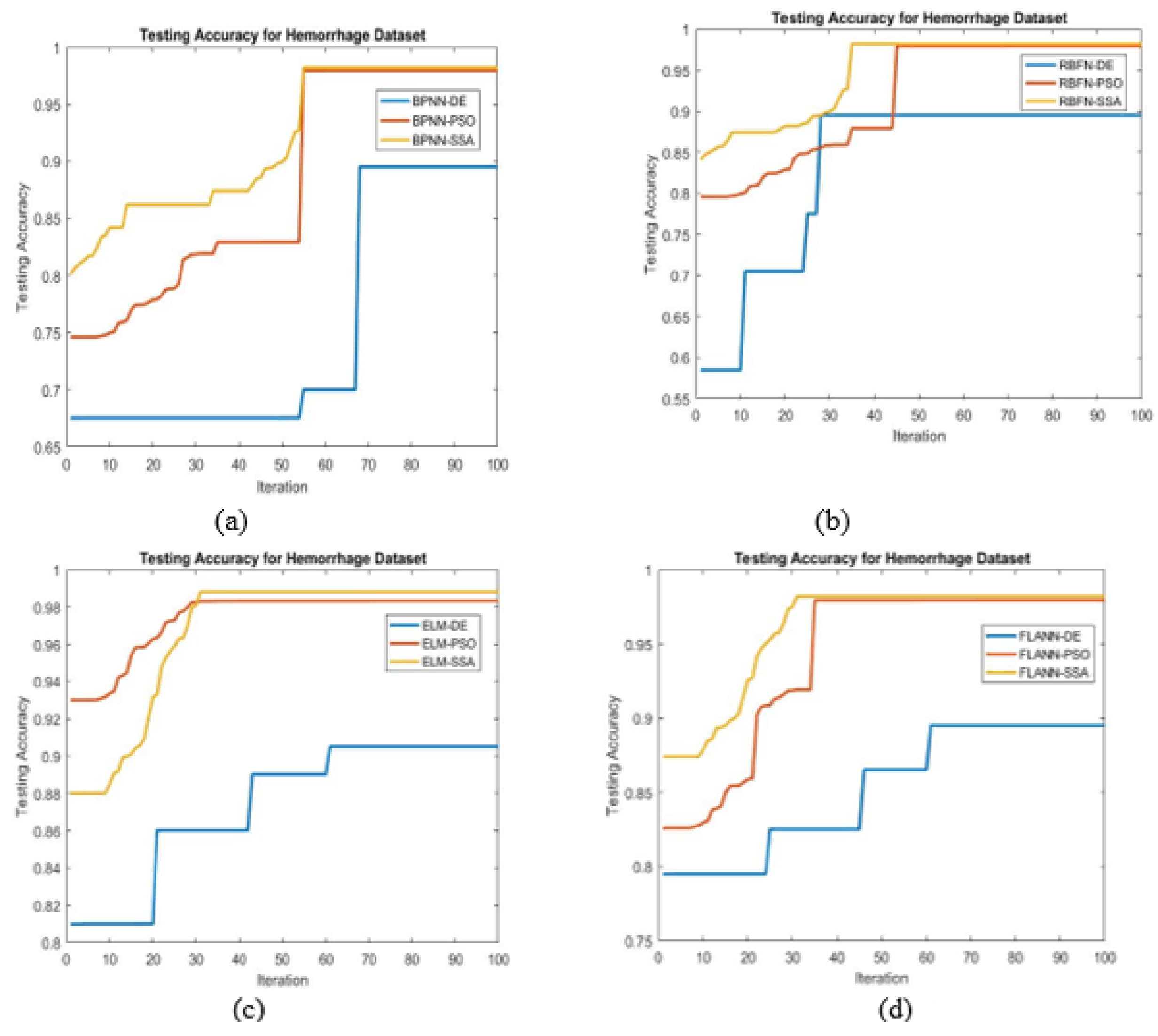

- To achieve the best possible classification, different bioinspired techniques (PSO, DE, and SSA) have been chosen.

- The SSA-tuned ELM classifier is found to give the highest accuracy.

- For a fair comparison, the same population size and number of iterations are used in all the evolutionary algorithms.

- The proposed ELM-SSA approach demonstrates higher classification accuracy for the two datasets.

- The ELM-SSA model achieves the highest AUC value among the hybridized classifiers for both datasets.

- The ELM-SSA model exhibits the best ROC plots for the two datasets used.

- Table 6 shows that the ELM-SSA combined model produces better classification accuracy than the basic ELM as well as other classification schemes using FLANN, RBFN, and BPNN models.

- It is also observed that the feature extraction phase consumes more computational time than either the feature reduction or the classification stage.

- In general, the proposed ELM-SSA model outperforms other hybridized classification models such as FLANN-SSA, with an improvement in accuracy of 5.31% and 1.02% for the Alzheimer’s and Hemorrhage datasets, respectively. Similarly, the ELM-SSA model produces 8.79% and 2.06% better accuracy than RBFN-SSA for the two datasets. ELM-SSA also shows 7.6% and 1.02% higher accuracy than BPNN-SSA for the Alzheimer’s and Hemorrhage datasets, respectively.

- In general, it is found that the proposed ELM-SSA model executes faster than the other models on both datasets.
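The observation above that ELM training is very simple can be illustrated with a minimal sketch of the standard ELM rule (Huang et al., listed in the references): input weights and biases are drawn at random and only the output weights are solved for, in closed form. The 50 sigmoid hidden units follow the parameter table; the function names, the weight range of [-1, 1], and the 0.5 decision threshold are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def elm_train(X, y, n_hidden=50, seed=0):
    """Train a single-hidden-layer ELM for binary labels y in {0, 1}."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, (X.shape[1], n_hidden))  # random input weights (never trained)
    b = rng.uniform(-1.0, 1.0, n_hidden)                # random hidden biases (never trained)
    H = sigmoid(X @ W + b)                              # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ y                        # least-squares output weights
    return W, b, beta

def elm_predict(X, W, b, beta):
    """Threshold the network output at 0.5 to obtain class labels."""
    return (sigmoid(X @ W + b) @ beta >= 0.5).astype(int)

# Hypothetical usage on two synthetic, well-separated classes.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 0.5, (40, 2)), rng.normal(2.0, 0.5, (40, 2))])
y = np.array([0] * 40 + [1] * 40)
W, b, beta = elm_train(X, y)
print((elm_predict(X, W, b, beta) == y).mean())
```

Because no iterative weight updates are needed, a bioinspired optimizer such as SSA can be wrapped around this routine to search over the randomly drawn parameters instead.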

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mohsin, S.A.; Sheikh, N.M.; Saeed, U. MRI induced heating of deep brain stimulation leads: Effect of the air-tissue interface. Prog. Electromagn. Res. 2008, 83, 81–91.

- Zhang, Y.; Dong, Z.; Wu, L.; Wang, S. A hybrid method for MRI brain image classification. Expert Syst. Appl. 2011, 38, 10049–10053.

- Chaplot, S.; Patnaik, L.M.; Jagannathan, N.R. Classification of magnetic resonance brain images using wavelets as input to support vector machine and neural network. Biomed. Signal Process. Control 2006, 1, 86–92.

- Maitra, M.; Chatterjee, A. A Slantlet transform based intelligent system for magnetic resonance brain image classification. Biomed. Signal Process. Control 2006, 1, 299–306.

- Das, S.; Chowdhury, M.; Kundu, M.K. Brain MR image classification using multiscale geometric analysis of ripplet. Prog. Electromagn. Res. 2013, 137, 1–17.

- Eshtay, M.; Faris, H.; Obeid, N. Metaheuristic-based extreme learning machines: A review of design formulations and applications. Int. J. Mach. Learn. Cybern. 2019, 10, 1543–1561.

- Mirjalili, S.; Gomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Aljarah, I.; Faris, H.; Mirjalili, S.; Al-Madi, N. Training radial basis function networks using a biogeography-based optimizer. Neural Comput. Appl. 2018, 29, 529–553.

- Faris, H.; Aljarah, I.; Mirjalili, S. Training feedforward neural networks using multi-verse optimizer for binary classification problems. Appl. Intell. 2016, 45, 322–332.

- Mansour, R.F.; Escorcia-Gutierrez, J.; Gamarra, M.; Díaz, V.G.; Gupta, D.; Kumar, S. Artificial intelligence with big data analytics-based brain intracranial hemorrhage e-diagnosis using CT images. Neural Comput. Appl. 2021, 1–13.

- Ding, S.; Su, C.; Yu, J.S. An optimizing BP neural network algorithm based on genetic algorithm. Artif. Intell. Rev. 2011, 36, 153–162.

- Gori, M.; Tesi, A. On the problem of local minima in backpropagation. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 1, 76–86.

- Gupta, J.N.; Sexton, R.S. Comparing backpropagation with a genetic algorithm for neural network training. Omega 1999, 27, 679–684.

- Sexton, R.S.; Dorsey, R.E.; Johnson, J.D. Optimization of neural networks: A comparative analysis of the genetic algorithm and simulated annealing. Eur. J. Oper. Res. 1999, 114, 589–601.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: A new learning scheme of feedforward neural networks. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541), Budapest, Hungary, 25–29 July 2004; pp. 985–990.

- El-Dahshan, E.S.A.; Hosny, T.; Salem, A.B.M. Hybrid intelligent techniques for MRI brain images classification. Digit. Signal Process. 2010, 20, 433–441.

- Aljarah, I.; Ala’M, A.Z.; Faris, H.; Hassonah, M.A.; Mirjalili, S.; Saadeh, H. Simultaneous feature selection and support vector machine optimization using the grasshopper optimization algorithm. Cogn. Comput. 2018, 10, 478–441.

- Mafarja, M.; Aljarah, I.; Heidari, A.A.; Hammouri, A.I.; Faris, H.; Ala’M, A.Z.; Mirjalili, S. Evolutionary population dynamics and grasshopper optimization approaches for feature selection problems. Knowl.-Based Syst. 2018, 145, 25–45.

- Xu, Y.; Shu, Y. Evolutionary extreme learning machine–based on particle swarm optimization. In International Symposium on Neural Networks; Springer: Berlin/Heidelberg, Germany, 2006; pp. 644–652.

- Yang, Z.; Wen, X.; Wang, Z. QPSO-ELM: An evolutionary extreme learning machine based on quantum-behaved particle swarm optimization. In Proceedings of the 2015 Seventh International Conference on Advanced Computational Intelligence (ICACI), Wuyi, China, 27–29 March 2015; pp. 69–72.

- El-Dahshan, E.S.A.; Mohsen, H.M.; Revett, K.; Salem, A.B.M. Computer-aided diagnosis of human brain tumor through MRI: A survey and a new algorithm. Expert Syst. Appl. 2014, 41, 5526–5545.

- Dehuri, S.; Roy, R.; Cho, S.B.; Ghosh, A. An improved swarm optimized functional link artificial neural network (ISO-FLANN) for classification. J. Syst. Softw. 2012, 85, 1333–1345.

- Ma, C. An Efficient Optimization Method for Extreme Learning Machine Using Artificial Bee Colony. J. Digit. Inf. Manag. 2017, 15, 135–147.

- Eusuff, M.M.; Lansey, K.E. Optimization of water distribution network design using the shuffled frog leaping algorithm. J. Water Resour. Plan. Manag. 2003, 129, 210–225.

- Eusuff, M.; Lansey, K.; Pasha, F. Shuffled frog-leaping algorithm: A memetic meta-heuristic for discrete optimization. Eng. Optim. 2006, 38, 129–154.

- Luo, J.P.; Li, X.; Chen, M.R. Hybrid shuffled frog leaping algorithm for energy-efficient dynamic consolidation of virtual machines in cloud data centers. Expert Syst. Appl. 2014, 41, 5804–5816.

- Nejad, M.B.; Ahmadabadi, M.E.S. A novel image categorization strategy based on salp swarm algorithm to enhance efficiency of MRI images. Comput. Model. Eng. Sci. 2019, 119, 185–205.

- Huang, Y.W.; Lai, D.H. Hidden node optimization for extreme learning machine. Aasri Procedia 2012, 3, 375–380.

- Xiao, D.; Li, B.; Mao, Y. A multiple hidden layers extreme learning machine method and its application. Math. Probl. Eng. 2017, 2017, 4670187.

- Yang, Y.; Wu, Q.J.; Wang, Y.; Mukherjee, D.; Chen, Y. ELM Feature Mappings Learning: Single-Hidden-Layer Feedforward Network without Output Weight. In Proceedings of ELM-2014 Volume 1; Springer: Berlin/Heidelberg, Germany, 2015; pp. 311–324.

- Setiawan, A.W.; Mengko, T.R.; Santoso, O.S.; Suksmono, A.B. Color retinal image enhancement using CLAHE. In Proceedings of the International Conference on ICT for Smart Society, Jakarta, Indonesia, 13–14 June 2013; pp. 1–3.

- Reza, A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

- Reddy, A.V.N.; Krishna, C.P.; Mallick, P.K.; Satapathy, S.K.; Tiwari, P.; Zymbler, M.; Kumar, S. Analyzing MRI scans to detect glioblastoma tumor using hybrid deep belief networks. J. Big Data 2020, 7, 1–17.

- Ma, J.; Yuan, Y. Dimension reduction of image deep feature using PCA. J. Vis. Commun. Image Represent. 2019, 63, 102578.

- Faris, H.; Mirjalili, S.; Aljarah, I.; Mafarja, M.; Heidari, A.A. Salp swarm algorithm: Theory, literature review, and application in extreme learning machines. Nat.-Inspired Optim. 2020, 185–199.

- Islam, J.; Zhang, Y. Brain MRI analysis for Alzheimer’s disease diagnosis using an ensemble system of deep convolutional neural networks. Brain Inform. 2018, 5, 1–14.

- Goceri, E. Diagnosis of Alzheimer’s disease with Sobolev gradient-based optimization and 3D convolutional neural network. Int. J. Numer. Methods Biomed. Eng. 2019, 35, e3225.

- Khan, N.M.; Abraham, N.; Hon, M. Transfer learning with intelligent training data selection for prediction of Alzheimer’s disease. IEEE Access 2019, 7, 72726–72735.

- Farid, A.A.; Selim, G.I.; Khater, H.A.A. Applying artificial intelligence techniques to improve clinical diagnosis of Alzheimer’s disease. Eur. J. Eng. Sci. Technol. 2020, 3, 58–79.

- Anupama, C.S.S.; Sivaram, M.; Lydia, E.L.; Gupta, D.; Shankar, K. Synergic deep learning model–based automated detection and classification of brain intracranial hemorrhage images in wearable networks. Pers. Ubiquitous Comput. 2020, 1–10.

- Kirithika, R.A.; Sathiya, S.; Balasubramanian, M.; Sivaraj, P. Brain Tumor And Intracranial Haemorrhage Feature Extraction And Classification Using Conventional and Deep Learning Methods. Eur. J. Mol. Clin. Med. 2020, 7, 237–258.

- Nawresh, A.A.; Sasikala, S. An Approach for Efficient Classification of CT Scan Brain Haemorrhage Types Using GLCM Features with Multilayer Perceptron; ICDSMLA 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 400–412.

| Dataset | No. of Instances | Image Format | Image Size | Classes | Sample Type | No. of Attributes | Attributes after PCA |
|---|---|---|---|---|---|---|---|
| Alzheimer’s | 100 | BMP | | 2 | T2-weighted | 1296 | 39 |
| Hemorrhage | 200 | JPEG | | 2 | T1-weighted | 1296 | 70 |

| Dataset | Total Number of Images | Number of Normal Training Images | Number of Abnormal Training Images | Number of Normal Testing Images | Number of Abnormal Testing Images |

|---|---|---|---|---|---|

| Alzheimer’s | 100 | 40 | 40 | 10 | 10 |

| Hemorrhage | 200 | 80 | 80 | 20 | 20 |

| Name (Hardware/Software) | Setting |
|---|---|
| CPU | Intel(R) Core(TM) i3-6006U |
| Frequency | 2.0 GHz |
| RAM | 8 GB |
| Hard Drive | 500 GB |
| Operating System | Windows 7 |
| Simulation software | MATLAB R2015a |

| ELM | RBFN | FLANN | BPNN | PSO | DE | SSA |
|---|---|---|---|---|---|---|
| Hidden layer size: 50, Activation function: sigmoid | Basis function: Gaussian, Hidden layer size: 10, Learning rate: 0.5 | Size of expansion: 8 | Learning rate: 0.9, Momentum: 0.3, Hidden layer size: 10, Activation function: Gaussian | Iteration: 100, Population Size: 50, Inertia Weight (IW): 0.7, Inertia Weight Damping Ratio: 0.1, Acceleration coefficients: 1.5 and 2 | Iteration: 100, Population Size: 50, Crossover: 0.2, Mutation: 0.4, LB: 0.25, UB: 0.75 | Iteration: 100, Population Size: 50, Inertia weight: 0.7 |

| Methods | Accuracy (Alzheimer’s) | AUC (Alzheimer’s) | Accuracy (Hemorrhage) | AUC (Hemorrhage) |

|---|---|---|---|---|

| ELM-SSA | 0.99 | 0.9695 | 0.99 | 0.9659 |

| ELM-PSO | 0.96 | 0.8731 | 0.96 | 0.9489 |

| ELM-DE | 0.90 | 0.8261 | 0.91 | 0.9393 |

| FLANN-SSA | 0.94 | 0.9007 | 0.98 | 0.8929 |

| FLANN-PSO | 0.93 | 0.9039 | 0.97 | 0.8924 |

| FLANN-DE | 0.91 | 0.8658 | 0.89 | 0.8591 |

| RBFN-SSA | 0.91 | 0.9433 | 0.97 | 0.9501 |

| RBFN-PSO | 0.89 | 0.9019 | 0.96 | 0.8722 |

| RBFN-DE | 0.90 | 0.8650 | 0.89 | 0.8275 |

| BPNN-SSA | 0.92 | 0.9373 | 0.98 | 0.9373 |

| BPNN-PSO | 0.83 | 0.8134 | 0.97 | 0.8204 |

| BPNN-DE | 0.89 | 0.7753 | 0.96 | 0.7590 |

| Methods | Classification Accuracy (Alzheimer’s Dataset) | Performance Improvement of ELM-SSA (%) | Classification Accuracy (Hemorrhage Dataset) | Performance Improvement of ELM-SSA (%) |

|---|---|---|---|---|

| ELM | 0.87 | 13.79 | 0.88 | 12.5 |

| ELM-PSO | 0.96 | 3.12 | 0.96 | 3.12 |

| ELM-DE | 0.90 | 10 | 0.91 | 8.79 |

| FLANN | 0.81 | 22.22 | 0.81 | 22.22 |

| FLANN-SSA | 0.94 | 5.31 | 0.98 | 1.02 |

| FLANN-PSO | 0.93 | 6.4 | 0.97 | 2.06 |

| FLANN-DE | 0.91 | 8.79 | 0.89 | 11.23 |

| RBFN | 0.76 | 30.26 | 0.80 | 23.75 |

| RBFN-SSA | 0.91 | 8.79 | 0.97 | 2.06 |

| RBFN-PSO | 0.89 | 11.23 | 0.96 | 3.12 |

| RBFN-DE | 0.90 | 10 | 0.89 | 11.23 |

| BPNN | 0.78 | 26.92 | 0.79 | 25.31 |

| BPNN-SSA | 0.92 | 7.6 | 0.98 | 1.02 |

| BPNN-PSO | 0.83 | 19.27 | 0.97 | 2.06 |

| BPNN-DE | 0.89 | 11.23 | 0.96 | 3.12 |

| Paper | Classifier (Techniques) | Accuracy (%) of Alzheimer’s Dataset | Accuracy (%) of Hemorrhage Dataset |

|---|---|---|---|

| [36] | Res-Net | 93.18 | - |

| [37] | 3D-CNN | 98.01 | - |

| [38] | VGG | 96.36 | - |

| [39] | SVM(CHFS) | 96.50 | - |

| [40] | CNN(GC-SDL) | - | 95.73 |

| [41] | Alex Net(CLAHE+SITF) | - | 94.26 |

| [42] | K-NN(GLCM) | - | 95.5 |

| [42] | Multilayer Perceptron (GLCM) | - | 95.5 |

| Proposed model | ELM-SSA (DWT+PCA) | 99 | 99 |

| Models | Total Execution Time (Second) (Alzheimer’s Dataset) | Speedup of ELM-SSA | Total Execution Time (Second) (Hemorrhage Dataset) | Speedup of ELM-SSA |

|---|---|---|---|---|

| ELM | 700 | 1.06× | 780 | 1.03× |

| ELM-SSA | 660 | - | 755 | - |

| ELM-PSO | 710 | 1.07× | 790 | 1.04× |

| ELM-DE | 725 | 1.09× | 800 | 1.05× |

| FLANN | 720 | 1.09× | 810 | 1.07× |

| FLANN-SSA | 745 | 1.12× | 850 | 1.12× |

| FLANN-PSO | 680 | 1.03× | 844 | 1.11× |

| FLANN-DE | 755 | 1.14× | 890 | 1.17× |

| RBFN | 758 | 1.14× | 840 | 1.10× |

| RBFN-SSA | 765 | 1.15× | 910 | 1.20× |

| RBFN-PSO | 790 | 1.19× | 940 | 1.24× |

| RBFN-DE | 777 | 1.17× | 955 | 1.26× |

| BPNN | 765 | 1.15× | 880 | 1.16× |

| BPNN-SSA | 810 | 1.22× | 910 | 1.20× |

| BPNN-PSO | 885 | 1.34× | 905 | 1.19× |

| BPNN-DE | 880 | 1.33× | 910 | 1.20× |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pradhan, A.; Mishra, D.; Das, K.; Panda, G.; Kumar, S.; Zymbler, M. On the Classification of MR Images Using “ELM-SSA” Coated Hybrid Model. Mathematics 2021, 9, 2095. https://doi.org/10.3390/math9172095
