Abstract

Metaheuristics are smart problem solvers devoted to tackling particularly large optimization problems. Over the last 20 years, they have been widely used to solve both academic and real-world problems. However, most of them were originally designed to operate over real-domain variables, so their internal core must be tailored to be effective, for instance, in a binary solution space. Various works have demonstrated that this internal modification, known as binarization, is not a simple task, since the many existing binarization schemes may lead to very different results. This forces the user to implement and analyze a large list of binarization schemes to reach good results. In this paper, we explore two efficient clustering methods, namely KMeans and DBscan, to drive the binarization of a metaheuristic, so that no expert knowledge is required to identify which binarization strategy works better during the run. Both techniques have been widely applied to solve clustering problems, allowing us to exploit useful information gathered during the search to efficiently control and improve the binarization process. We integrate those techniques into a recent metaheuristic called Crow Search, and we conduct experiments where KMeans and DBscan are contrasted with 32 different binarization methods. The results show that the proposed approaches outperform most of the binarization strategies for a large list of well-known optimization instances.

1. Introduction

Optimization problems can be seen in different areas of the modern world, such as bridge reinforcement [1], load dispatch [2], location of emergency facilities [3], marketing [4], and social networks [5], among others. To solve them, we must identify and understand to which model the problem belongs. There are three types of models: the first has discrete variables, the second has continuous variables, and the third has both types of variables. Discrete optimization has been widely used to define a large number of problems belonging to the NP-hard class [6]. It must be emphasized that the time needed to solve this type of problem increases exponentially with its size. For this reason, we consider it prudent to solve these problems through metaheuristics [7,8], which deliver acceptable results in a limited period of time.

There are many metaheuristics, and a complete collection of nature-inspired algorithms can be found in [9], but most of them work on continuous spaces. Some researchers have worked in depth on developing binary versions that make these metaheuristics operate in binary spaces. A study on putting continuous metaheuristics to work in binary search spaces [10] clearly describes the two-step technique. This technique requires testing a large number of combinations and tuning parameters in order to find the best binarization, which is very time-consuming.

Despite successful published work, binarization techniques require offline analysis and an expert user to adjust the metaheuristic to the problem domain, in addition to working through the various available combinations of the two-step technique. Building on gained expertise, our work focuses on two clustering-based binarization methods that smartly transform a set of real solutions into binary solutions by grouping decision variables, without the need for combinations as in the two-step technique, saving time and algorithm manipulation. The first one uses the KMeans [11,12] clustering algorithm, one of the most common unsupervised machine learning algorithms, to assign each variable to one of a number of groups defined by the user. A transition value must be assigned to each cluster in order to binarize each variable. The second one integrates DBscan [13,14] as a powerful clustering method that arranges values in a number of clusters that is not determined a priori. Unlike KMeans, this technique creates the clusters by itself, knowing only the minimum number of points required to form a cluster and the distance between points in the data set. Furthermore, the transition value used to binarize is calculated online and depends on each cluster. To test our approaches, we include these clustering methods in the Crow Search Algorithm (CSA) [15], a population-based metaheuristic inspired by the behavior of crows that is easy to implement, works very fast, and has few parameter settings. In this method, each individual follows others, trying to discover the places where they hide their food in order to steal it.

Following [16,17], which we used as gold standards, we perform an experimental design led by a quantitative methodology. We apply different criteria, such as quality of solutions and robustness in terms of the instances, when solving large-scale instances of well-known binary optimization problems: the Set Covering Problem (SCP) [18] and the 0/1 Knapsack Problem (KP) [19]. These two problems are very popular benchmarks for trying new strategies because they have known results to compare against.

The remainder of this manuscript is organized as follows: Section 2 presents the related work. Section 3 exposes binarization strategies. CSA is described in Section 4. Section 5 explains how to integrate binarizations on CSA. Next, experimental results including a research methodology are explained in Section 6. Discussions are shown in Section 7. At the end of the manuscript, conclusions are presented in Section 8.

2. Related Work

As previously mentioned, binarization is mandatory for continuous metaheuristics when the problem ranges over a binary domain. This task is not simple, since experimentation with different binarization schemes is needed to reach good results. The transfer function is the most used binarization method and was introduced in [20]. The transfer function is a very cheap operator; its range provides probability values and tries to model the transition of the particle positions. This function is responsible for the first step of the binarization method, which maps real-valued solutions to solutions in the interval [0, 1]. Two types of functions have been used in the literature, the S-shaped [21] and the V-shaped [22]. The second step applies a binarization rule to the result of the transfer function. Examples of binarization rules are complement, roulette, static probability, and elitist [22]. The term “Two-step” binarization refers to the use of a transfer function and a binarization rule together. A detailed description of binarization techniques can be found in [10].

Our goal here is to reduce user involvement in binarization testing and to provide a single alternative that works better on average when contrasted with a large set of “Two-step” binarization techniques. In this context, machine learning techniques appear as good candidates to support the binarization process and, as a consequence, to obtain better search processes. Machine learning has already been used to improve search in metaheuristics [23]. For instance, a first trend is to employ machine learning for parameter tuning; in [24], the authors use machine learning for parameter control in metaheuristics. The techniques reported in this context can be organized in four groups: parameter control strategies [25], parameter tuning strategies [26], instance-specific parameter tuning strategies [27], and reactive search [28]. The general idea is to exploit search information in order to find good parameter configurations, which may lead to better-quality solutions. An example is [29], where the author uses a metaheuristic to determine the optimal number of hidden nodes and their proper initial locations in neural networks. Machine learning has also been used to build approximation models when the objective function and constraints are computationally expensive [30]. Population management can also be improved via machine learning algorithms, by obtaining information from already visited solutions and using it to build new ones [31].

Machine learning has also been used to reduce the search space, for instance by using clustering [32] and neural networks [33]. In these works, two main areas of machine learning were used: forecasting and classification. It is worth mentioning the uses of machine learning with metaheuristics in these areas. It has been used for forecasting in different kinds of problems, such as the low-carbon flexible job shop scheduling problem [34], electric load and economic recessions in Italy [35,36], and other industrial problems as in [37]. Machine learning can also be used to classify different groups of data sets; in this scenario, it has been applied to problems such as image classification [38], electronic nose classification of bacterial foodborne pathogens [39], and urban management [40], to mention some recent studies.

Although machine learning is widely used in the metaheuristic field, its specific use in binarization is still very limited. To the best of our knowledge, only a few experiments with the clustering techniques DBscan and KMeans have been reported [13,41]. We therefore believe that binarization has a lot of room for exploration beyond the improvement of metaheuristics: using just one machine learning technique for binarization, instead of the 32 combinations of the two-step technique, lets the user gain simplicity, speed, and reduced effort when applying binarization to metaheuristics, which is the aim pursued in this work.

3. Binarization

A study on putting continuous metaheuristics to work in binary search spaces [10] presents two main groups of binarization techniques. The first group, called two-step binarization, allows continuous metaheuristics to be used without operator modifications; the second group, called continuous-binary operator transformation, redefines the algebra of the search space, thereby reformulating the operators.

In this work, we use the first group in our experiments. Its two steps rely on transfer functions, which map solutions in the real domain to values between 0 and 1, followed by binarization rules that turn those values into binary values. We then use a machine learning clustering strategy called KMeans to cluster the columns of a solution, and another strategy called DBscan that clusters all the solutions at once (the whole matrix). Both strategies are used to map real solutions to the binary values 0 and 1.

3.1. Two Step Binarization

Two-step binarization consists of applying, as its name says, two stages in order to bring the variables of the real search space to the world of binary variables. The first step uses eight transfer functions and the second step uses four discretization functions, therefore generating 32 possible strategies to apply.

3.1.1. First Step—Transfer Functions

These operators are very cheap to implement, and their range provides probability values that attempt to model the transition of the particle positions; in other words, these functions transform a solution in the real domain into another real solution restricted to the interval between 0 and 1. Two classic types exist in the literature [42,43], called S-Shape and V-Shape, and both are used here.

3.1.2. Second Step—Binarization

A set of rules can be applied to transform a solution in the real domain to a solution in the binary domain. We use Standard, Complement, Static probability, and Elitist. In particle swarm optimization, this approach was first used in [20].
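To make the two steps concrete, the following Python sketch pairs one S-shaped and one V-shaped transfer function with the four rules named above. It is only an illustration under stated assumptions: the sigmoid and |tanh| are common representatives of each family (the eight functions used in the experiments are not listed in this section), the rule definitions follow forms commonly found in the binarization literature, and all function names as well as the threshold `alpha` are hypothetical.

```python
import math
import random


def s_shape(x):
    """Sigmoid, a common S-shaped transfer function (numerically stable form)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def v_shape(x):
    """|tanh(x)|, a common V-shaped transfer function."""
    return abs(math.tanh(x))


# Second step: each rule turns a probability in [0, 1] into a bit.
def standard(prob, old_bit, best_bit, rng):
    return 1 if rng.random() <= prob else 0


def complement(prob, old_bit, best_bit, rng):
    return 1 - old_bit if rng.random() <= prob else 0


def static_probability(prob, old_bit, best_bit, rng, alpha=0.25):
    # alpha is a hypothetical threshold chosen only for this example.
    if prob <= alpha:
        return 0
    if prob <= 0.5 * (1.0 + alpha):
        return old_bit
    return 1


def elitist(prob, old_bit, best_bit, rng):
    return best_bit if rng.random() < prob else 0


def two_step(real_solution, old_bits, best_bits, transfer, rule, rng):
    """Binarize a real vector column by column: transfer function, then rule."""
    return [rule(transfer(x), o, b, rng)
            for x, o, b in zip(real_solution, old_bits, best_bits)]


rng = random.Random(1)
bits = two_step([-2.0, 0.3, 4.1], [0, 1, 0], [1, 1, 0], v_shape, elitist, rng)
print(bits)
```

Choosing one of two transfer families and one of four rules is what produces the combinatorial testing effort discussed in this section: every pair behaves differently and has to be evaluated separately.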

3.2. KMeans

KMeans is one of the simplest and most popular unsupervised machine learning algorithms for clustering. The main goal of KMeans [44,45] is to group similar data points. To achieve this objective, the number of clusters in the data set must be set in advance. Then, every data point is allocated to one of the clusters by minimizing the within-cluster sum of squared distances; see Equation (1):

$$J = \sum_{l=1}^{k} \sum_{j=1}^{n} \left\| x_{i}^{j} - c_{l} \right\|^{2} \qquad (1)$$

where k represents the number of clusters, n depicts the number of cases, $x_{i}^{j}$ defines the jth case of the ith solution, and $c_{l}$ exposes the lth centroid; the inner sum is taken over the cases assigned to cluster l (see pseudo-code in Algorithm 1).

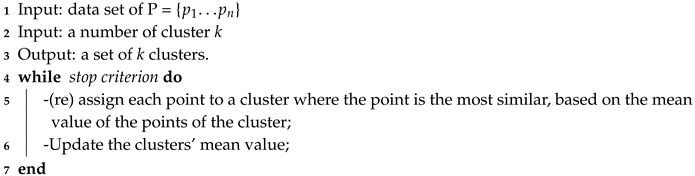

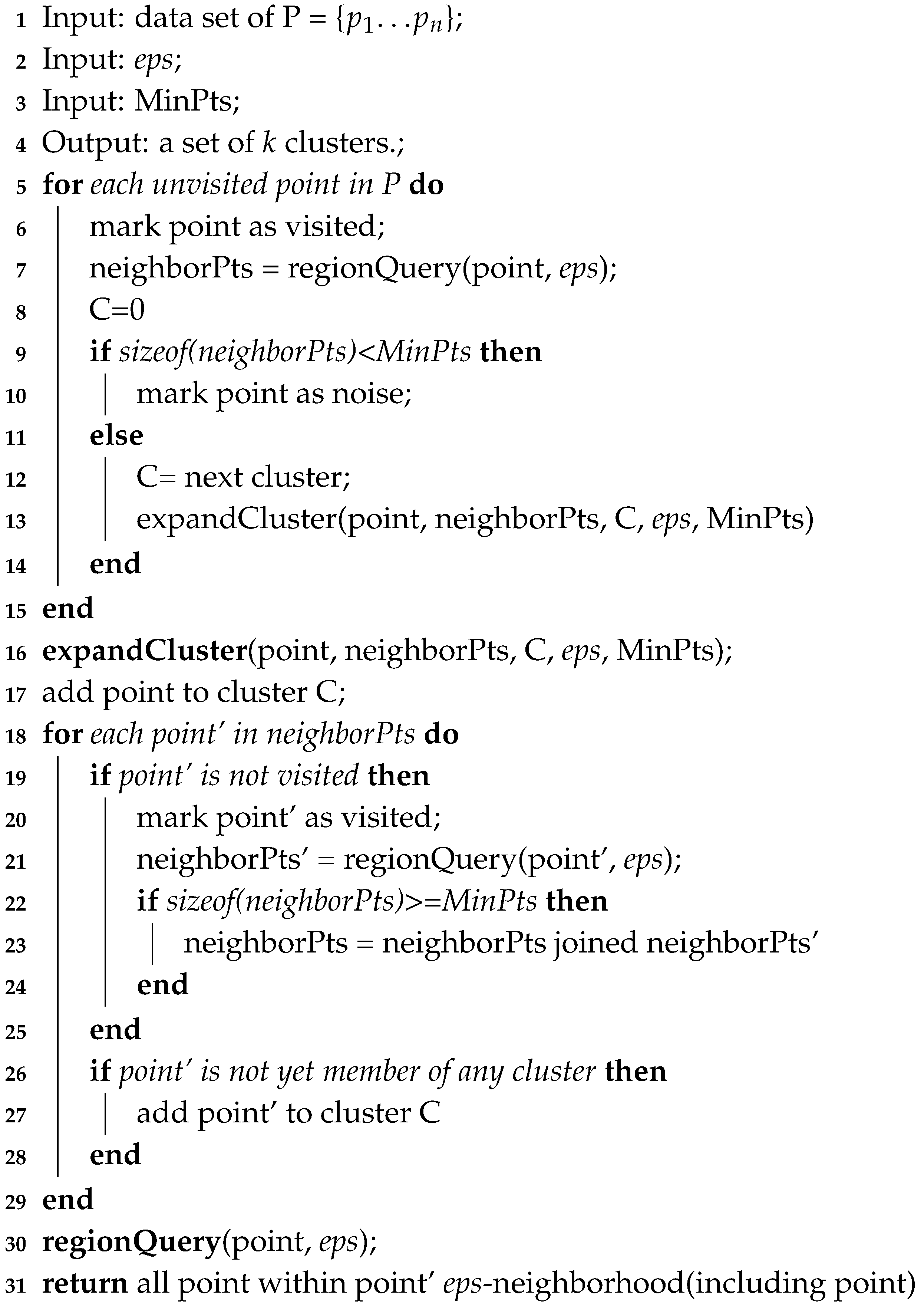

Algorithm 1: KMeans pseudo-code

The mechanism initially requires the value k as the number of clusters and the best solution vector of dimension d, composed of the decision variables. This vector is passed as input data to be grouped (see the left side of Figure 1). Iteratively, KMeans assigns each decision variable to the group with the closest centroid, minimizing Euclidean distances. Afterwards, KMeans recalculates each centroid as the average of all decision variables allocated to its cluster, thus decreasing the total intracluster variance with respect to the previous phase.

Figure 1.

Clustering process of the KMeans algorithm applied to the decision variables.

The right side of Figure 1 illustrates the computed groups. Positions are adjusted in each iteration of the process until the algorithm converges.
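The assignment and update phases described above can be sketched in a few lines of Python for the one-dimensional case, where each decision variable is a single real value. This is a minimal illustration and not the implementation used in the experiments; the function name `kmeans_1d`, the random initialization, and the stopping rule (labels no longer change) are assumptions.

```python
import random


def kmeans_1d(values, k, max_iter=100, seed=0):
    """Group scalar decision variables into k clusters (Lloyd-style iterations)."""
    rng = random.Random(seed)
    centroids = rng.sample(values, k)  # initial centroids drawn from the data
    labels = None
    for _ in range(max_iter):
        # Assignment phase: each value goes to its closest centroid.
        new_labels = [min(range(k), key=lambda l: (v - centroids[l]) ** 2)
                      for v in values]
        if new_labels == labels:  # no change means the algorithm converged
            break
        labels = new_labels
        # Update phase: each centroid becomes the mean of its members.
        for l in range(k):
            members = [v for v, lab in zip(values, labels) if lab == l]
            if members:
                centroids[l] = sum(members) / len(members)
    return labels, centroids


solution = [0.10, 0.20, 0.15, 5.00, 5.20, 4.90]
labels, centroids = kmeans_1d(solution, k=2)
print(labels, centroids)
```

Because the input is a single solution vector, the cost of each iteration grows only with the dimension d and the number of clusters k.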

3.3. DBscan

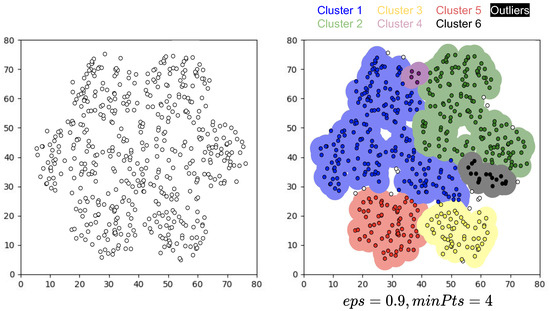

Density-Based Spatial Clustering of Applications with Noise (DBscan) [46] is a well-known data clustering algorithm commonly used in data mining and machine learning. It can identify clusters of any shape in a data set containing noise and outliers. Clusters are dense regions of the data space separated by regions with a lower density of points.

The goal is to identify dense regions, which can be measured by the number of objects close to a given point. DBscan requires two important parameters to work.

- Epsilon (ε): Sets how close points must be to each other to be regarded as part of the same cluster.

- Minimum points (MinPts): The minimum number of points to form a dense region.

The main idea is that, for each group, there must be at least a minimum number of points in the neighborhood of a given radius. The parameter ε defines the neighborhood radius around a point x. Any point x in the data set with a number of neighbors greater than or equal to MinPts is marked as a core point, and x is a border point if the number of its neighbors is less than MinPts but it lies in the neighborhood of a core point. Finally, if a point is neither a core point nor a border point, it is called a noise point or outlier.

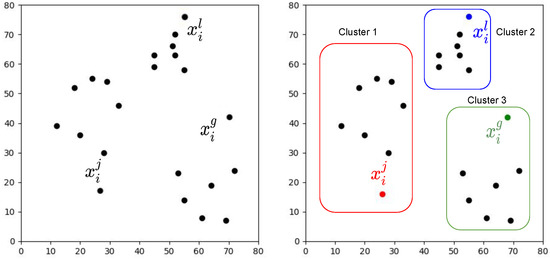

In summary, this technique helps when little is known about the data; see the pseudo-code in Algorithm 2.

The right side of Figure 2 illustrates the computed groups. Positions are adjusted in each iteration of the process until the algorithm converges.

Algorithm 2: DBscan pseudo-code

Figure 2.

Clustering process of the DBscan algorithm applied to the best solution.
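As an illustration of the core, border, and noise point definitions, the following Python sketch runs the usual DBscan expansion over scalar values. It is a simplified example and not the implementation used in this work: the function name `dbscan_1d`, the brute-force neighbor search, and the convention that a point counts as its own neighbor are assumptions.

```python
def dbscan_1d(values, eps, min_pts):
    """Label scalar values with cluster ids (0, 1, ...) or -1 for noise."""
    noise = -1
    n = len(values)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Brute-force epsilon-neighborhood; the point itself is included.
        return [j for j in range(n) if abs(values[i] - values[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = noise  # may later be relabeled as a border point
            continue
        cluster += 1  # i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == noise:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # j is also a core point: keep expanding
                queue.extend(reachable)
    return labels


print(dbscan_1d([1.0, 1.1, 1.2, 5.0, 5.1, 5.2, 9.0], eps=0.15, min_pts=2))
```

Note that the number of clusters is an output of the procedure rather than an input, which is the main practical difference from KMeans.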

4. Crow Search Algorithm

Crows (the crow family, or corvids) are considered the most intelligent birds. They have the largest brain relative to their body size; based on the brain-to-body ratio, their brain is only slightly smaller than a human brain. Evidence of the cleverness of crows is plentiful. They can remember faces and warn each other when an unfriendly one approaches. Moreover, they can use tools, communicate in sophisticated ways, and recall their food hiding places up to several months later.

CSA tries to simulate the intelligent behavior of crows to find the solution of optimization problems [15]. From an optimization point of view, the crows are searchers, the environment is the search space, each position of a crow corresponds to a feasible solution, the quality of a food source is the objective function, and the best food source of the environment is the global solution of the problem. The parameters of CSA are shown below.

- N: Population.

- AP: Awareness probability.

- fl: Flight length.

- Maximum number of iterations.

When CSA is running, two things can happen. Let Crow 1 and Crow 2 be different possible solutions belonging to the search space. In the first case, Crow 1 does not know that Crow 2 is following it. As a result, Crow 2 will approach the hiding place of Crow 1. This action is called State 1. In the second case, Crow 1 knows that Crow 2 is following it. To protect its hiding place from being robbed, Crow 1 will fool Crow 2 by going to another position of the search space. This action is called State 2. Both states are represented by Equation (2):

$$x_{i}^{t+1} = \begin{cases} x_{i}^{t} + r_{i} \times fl \times \left( m_{j}^{t} - x_{i}^{t} \right) & \text{if } r_{j} \geq AP \\ \text{a random position} & \text{otherwise} \end{cases} \qquad (2)$$

where:

- $x_{i}^{t}$ is the position of crow i at iteration t, and $m_{j}^{t}$ is the hiding place memorized by crow j.

- $r_{i}$ and $r_{j}$ are random numbers with uniform distribution between 0 and 1.

- $fl$ denotes the flight length of crows.

- $AP$ denotes the awareness probability of crows (intensification and diversification).

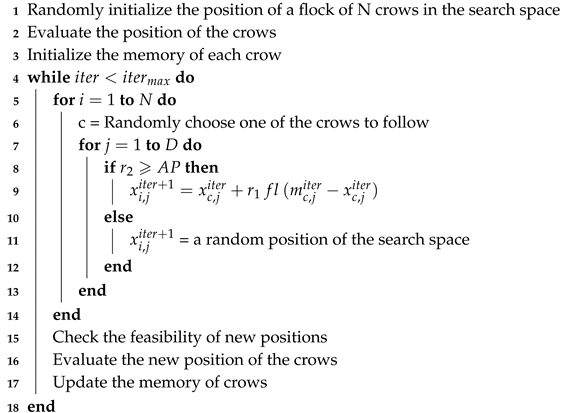

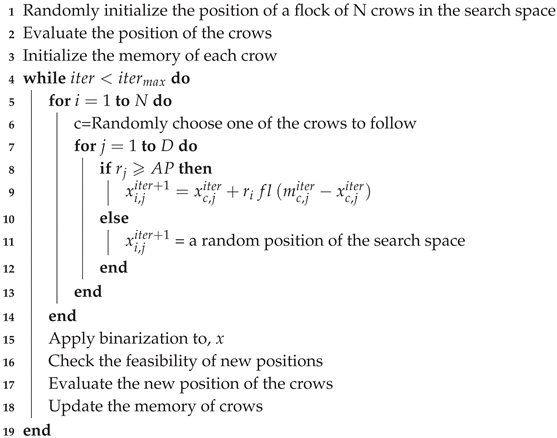

The pseudo-code of CSA is shown in Algorithm 3.

Algorithm 3: CSA pseudo-code
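For readers who prefer code to pseudo-code, the two states can be sketched as a small continuous CSA in Python. This is an illustration under assumptions and not the Java implementation used in the experiments: it follows the usual CSA description (a crow moves toward the memory of a randomly chosen crow when a random number is at least AP, and jumps to a random position otherwise), minimizes the objective, and uses hypothetical names and default parameter values.

```python
import random


def crow_search(objective, dim, lower, upper,
                n_crows=20, ap=0.1, fl=2.0, max_iter=200, seed=0):
    """Minimize `objective` over [lower, upper]^dim with a basic CSA loop."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_crows)]
    mem = [p[:] for p in pos]               # hiding places (best positions so far)
    mem_fit = [objective(p) for p in pos]
    for _ in range(max_iter):
        for i in range(n_crows):
            j = rng.randrange(n_crows)      # crow i follows a random crow j
            if rng.random() >= ap:
                # State 1: crow j is unaware, so crow i approaches its hiding place.
                r = rng.random()
                cand = [x + r * fl * (m - x) for x, m in zip(pos[i], mem[j])]
            else:
                # State 2: crow j is aware and fools crow i into a random position.
                cand = [rng.uniform(lower, upper) for _ in range(dim)]
            # Only feasible positions are accepted.
            if all(lower <= c <= upper for c in cand):
                pos[i] = cand
                fit = objective(cand)
                if fit < mem_fit[i]:        # update memory only on improvement
                    mem[i], mem_fit[i] = cand[:], fit
    best = min(range(n_crows), key=mem_fit.__getitem__)
    return mem[best], mem_fit[best]


def sphere(x):
    return sum(v * v for v in x)


best_x, best_f = crow_search(sphere, dim=3, lower=-5.0, upper=5.0)
print(best_x, best_f)
```

Small AP values favor intensification around remembered hiding places, while larger values trigger more random jumps and therefore more diversification.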

5. Integration: Binarizations on CSA

As shown in Section 3, binarization techniques must be applied for CSA [15] to work in a binary search space. First, we solve the problem using the Two-steps technique; then we apply the KMeans technique.

At a high level, the steps are described below:

When CSA computes a new position, a real-valued solution is generated; then:

- Two-steps: the solution at t + 1 is binarized column by column. The first step yields a continuous solution restricted to values between 0 and 1, which is the input of the second step; the second step produces the binary solution, which is then evaluated in the objective function.

- KMeans: in this case, the solution at t + 1 is clustered. Each cluster is then assigned a cluster transition value already defined as a parameter: clusters with small centroids get small transition values, and clusters with large centroids get large transition values. Every point in the clusters is evaluated by checking whether a random value between 0 and 1 is equal to or greater than its cluster transition value. If so, we take its position and apply the complement to the corresponding value of the solution at t; then the objective function is evaluated.
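The KMeans step can be sketched in Python as follows. The sketch assumes that the cluster labels and centroids have already been computed, that the flip condition is exactly the one stated above (a random value equal to or greater than the transition value), and that a variable keeps its previous bit when the condition does not hold; the last point is an assumption, since the text does not state it. The function name and the example values are hypothetical.

```python
import random


def binarize_by_clusters(labels, centroids, transition_values, old_bits, rng):
    """Flip bits of the previous binary solution according to cluster transition values."""
    # Rank clusters by centroid: the smallest centroid gets the smallest value.
    order = sorted(range(len(centroids)), key=lambda l: centroids[l])
    ranked = sorted(transition_values)
    transition_of = {cluster: ranked[rank] for rank, cluster in enumerate(order)}

    new_bits = []
    for label, old in zip(labels, old_bits):
        if rng.random() >= transition_of[label]:
            new_bits.append(1 - old)   # apply the complement to the bit at t
        else:
            new_bits.append(old)       # assumption: otherwise keep the previous bit
    return new_bits


rng = random.Random(0)
labels = [0, 1, 1, 0, 2]               # cluster of each decision variable
centroids = [4.8, 0.2, 2.1]            # one centroid per cluster
print(binarize_by_clusters(labels, centroids, [0.1, 0.5, 0.9], [1, 1, 0, 0, 1], rng))
```

Only k transition values have to be set, which replaces the choice among transfer functions and binarization rules required by the Two-steps technique.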

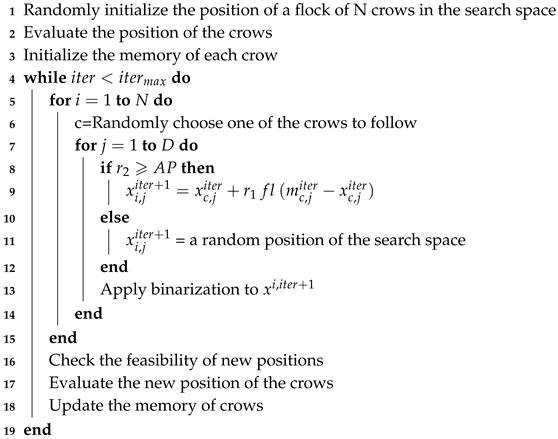

For these two techniques, we follow the same strategy, which is illustrated in Algorithm 4.

Algorithm 4: CSA + Two-steps and KMeans binarization techniques pseudo-code

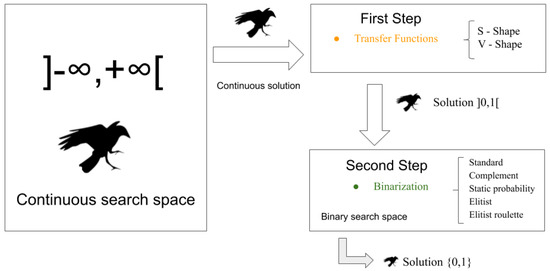

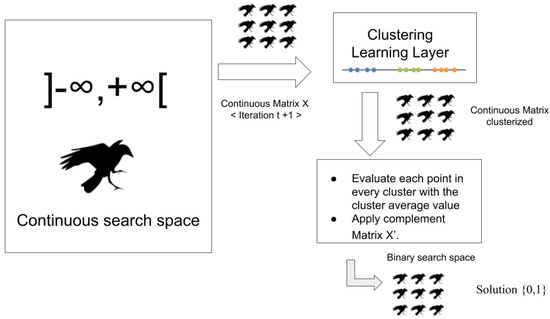

A graphic representation can be seen in Figure 3. Applying the first step, a continuous solution from the metaheuristic is transformed from the real domain into a real solution restricted to values between 0 and 1. Then, using the second step, the solution is transformed again into a solution in the binary domain. Figure 4 shows how the real solution is clustered and then transformed into a binary solution by applying the cluster transition values using KMeans.

Figure 3.

Continuous to Binary Two-steps.

Figure 4.

Continuous to Binary KMeans.


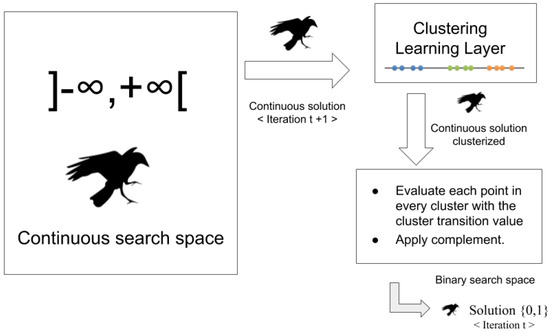

The third technique used is DBscan. This strategy is slightly different from the previous ones because it binarizes the complete matrix instead of a single solution vector. To do so, we create a replica of the matrix in its initial state; the metaheuristic then works as usual, performing its movements in the real search space. Once each iteration is complete, the entire matrix is clustered. As seen before, DBscan receives a distance (ε) and a minimum number of points (MinPts) as input parameters; with these data, the algorithm delivers a number of clusters that depends on the behavior of the data. The following steps are performed after the clustering has been completed:

- Calculate the mean of the points in every cluster.

- Sort the clusters in ascending order of their mean value.

- Evaluate each point in the clusters by checking whether a random value between 0 and 1 is equal to or greater than the mean of its cluster; if it is, we take its position and apply the complement to the corresponding entry in the replica of the matrix. Then the objective function is evaluated.
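The three post-clustering steps can be sketched in Python as shown below. The sketch takes the cluster labels as given (one label per matrix entry, produced by any DBscan implementation) and follows the steps literally. Treating noise points (label -1) as one more group and leaving an entry unchanged when the condition does not hold are assumptions, as are the function and variable names.

```python
import random


def binarize_matrix(real, labels, replica, rng):
    """Flip entries of the binary replica using per-cluster means as thresholds."""
    # Step 1: mean of the real values in every cluster.
    sums, counts = {}, {}
    for row_values, row_labels in zip(real, labels):
        for value, label in zip(row_values, row_labels):
            sums[label] = sums.get(label, 0.0) + value
            counts[label] = counts.get(label, 0) + 1
    means = {label: sums[label] / counts[label] for label in sums}

    # Step 2: clusters sorted from the smallest to the largest mean.
    ordered = sorted(means, key=means.get)

    # Step 3: complement the replica entry when the random draw reaches the mean.
    result = [row[:] for row in replica]
    for r, row_labels in enumerate(labels):
        for c, label in enumerate(row_labels):
            if rng.random() >= means[label]:
                result[r][c] = 1 - result[r][c]
    return result, ordered


rng = random.Random(0)
real = [[0.05, 0.10, 0.80], [0.90, 0.07, 0.85]]
labels = [[0, 0, 1], [1, 0, 1]]
replica = [[0, 1, 0], [1, 1, 0]]
print(binarize_matrix(real, labels, replica, rng))
```

Since the whole population matrix is clustered at every iteration, the clustering cost grows with both the number of crows and the number of decision variables.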

For this technique, we follow the strategy illustrated in Algorithm 5. Finally, Figure 5 shows how the matrix x is clustered and then transformed into a binary matrix by applying DBscan.

Algorithm 5: CSA + DBscan binarization technique pseudo-code

Figure 5.

Continuous to Binary DBscan.

6. Experimental Results

We propose to test our approach by solving the instances of the SCP [47] and the 0/1 Knapsack Problem [48].

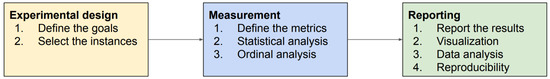

6.1. Methodology

A performance analysis is necessary to properly evaluate metaheuristics [49]. In this study, we use well-known benchmarks taken from the OR-Library [50] in order to compare the best solution given by CSA against the best known result of the benchmark. Figure 6 illustrates the steps for an exhaustive analysis of the improved metaheuristic. For the experimental design, we define aims and guidelines to demonstrate that the proposed approach is a real alternative to binarization systems. Next, we establish the best value as the critical metric for evaluating results. In this context, we apply an ordinal analysis and employ statistical tests to determine which approach is significantly better. Finally, we describe the hardware and software used so that the computational experiments can be reproduced, and then we report all results in tables and distribution charts.

Figure 6.

Steps in performance analysis of a metaheuristic.

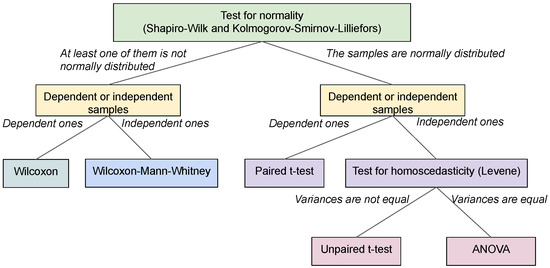

Moreover, it is very important to show how significant the differences between the three strategies are for this type of problem. For this reason, we perform a contrast statistical test for each instance, using the Kolmogorov-Smirnov-Lilliefors test to check whether the samples follow a normal distribution [51] and the Mann–Whitney–Wilcoxon test [52] to compare the results statistically (see Figure 7).

Figure 7.

Statistical significance test.

The Kolmogorov-Smirnov-Lilliefors test allows us to analyze the normality of the samples formed by the minimum or maximum values (depending on whether the problem is a minimization or a maximization one) obtained from the 31 executions of each instance.

The results are evaluated using the relative percentage deviation (RPD). The RPD value quantifies the deviation of the objective value $Z$ from $Z_{opt}$, which is the minimal best-known value for each instance in our experiment, and it is calculated as follows:

$$RPD = \frac{Z - Z_{opt}}{Z_{opt}} \times 100 \qquad (3)$$
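As a small worked example, the RPD can be computed with the Python helper below. It assumes the common definition of the measure, namely the difference between the obtained value and the best-known value, divided by the best-known value and multiplied by 100; the function name and the sample values are hypothetical.

```python
def rpd(value, best_known):
    """Relative percentage deviation of `value` from the best-known value."""
    return 100.0 * (value - best_known) / best_known


print(rpd(520, 512))  # a solution 8 units above a best-known value of 512
print(rpd(512, 512))  # reaching the best-known value gives 0.0
```

An RPD of 0 therefore means that the best-known solution was reached, and larger values indicate solutions further away from it.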

6.2. Set Covering Problem (SCP)

The Set Covering Problem [47] is a classic combinatorial optimization problem, belonging to the NP-hard class [53], that has been applied in several real-world problems like the location of emergency facilities [3], airline and bus crew scheduling [54], vehicle scheduling [55], steel production [56], among others.

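Formally, the SCP is defined as follows: let $A = (a_{ij})$ be a binary matrix with M rows ($i \in I = \{1, \dots, M\}$) and N columns ($j \in J = \{1, \dots, N\}$), and let $C = (c_{j})$ be a vector representing the cost of each column j, assuming that $c_{j} > 0$ for all $j \in J$. A column j covers a row i if $a_{ij} = 1$. Therefore:

$$a_{ij} = \begin{cases} 1 & \text{if row } i \text{ can be covered by column } j \\ 0 & \text{otherwise} \end{cases}$$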

The SCP consists of finding a set of elements that cover a range of needs at the lowest cost. In its matrix form, a feasible solution corresponds to a subset of columns and the needs are associated with the rows and treated as constraints. The problem aims at selecting the columns that optimally cover all the rows.

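The Set Covering Problem seeks a minimum-cost subset S of columns such that each row is covered by at least one column of S. An integer programming formulation of the SCP is as follows:

$$\min \sum_{j=1}^{N} c_{j} x_{j}$$

$$\text{subject to} \quad \sum_{j=1}^{N} a_{ij} x_{j} \geq 1 \quad \forall i \in I, \qquad x_{j} \in \{0, 1\} \quad \forall j \in J$$

where $x_{j} = 1$ if column j belongs to S and $x_{j} = 0$ otherwise.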
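To illustrate how a binary solution is evaluated against this formulation, the following Python sketch checks the covering constraints and computes the cost of a candidate solution. The data layout (the matrix as a list of 0/1 rows) and the function names are assumptions made for this example, and the small instance is invented.

```python
def is_cover(a, x):
    """True if every row has at least one selected column that covers it."""
    return all(any(a_ij and x_j for a_ij, x_j in zip(row, x)) for row in a)


def cover_cost(costs, x):
    """Total cost of the selected columns."""
    return sum(c for c, x_j in zip(costs, x) if x_j)


# Toy instance: 3 rows, 3 columns.
a = [[1, 0, 1],
     [0, 1, 1],
     [1, 1, 0]]
costs = [2, 3, 4]

print(is_cover(a, [1, 1, 0]), cover_cost(costs, [1, 1, 0]))
print(is_cover(a, [0, 0, 1]), cover_cost(costs, [0, 0, 1]))
```

A binarized solution that fails the feasibility check has at least one uncovered row, so it has to be repaired or penalized before its cost can be compared with the best known value.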

Instances: In order to evaluate the algorithm performance when solving the SCP, we use 65 instances taken from Beasley’s OR-Library [50], which are organized in 11 sets.

Table 1 describes the instance group, number of rows M, number of columns N, range of costs, and density (percentage of nonzeroes in the matrix).

Table 1.

Instances taken from the Beasley’s OR-Library.

Reducing the instance size of SCP: In [57], different preprocessing methods have been proposed to reduce the size of the SCP. Two of them are regarded as the most effective ones, namely Column Domination and Column Inclusion; these methods are used to accelerate the processing of the algorithm.

Column Domination: It consists of eliminating the redundant columns of the problem in such a way that it does not affect the final solution.

Steps:

- All columns are sorted by cost in ascending order.

- Columns with equal cost are sorted in descending order by the number of rows that they cover.

- For each column j, verify whether its rows can be covered by a set of other columns with a total cost less than $c_{j}$ (the cost of column j).

- If so, column j is said to be dominated and can be eliminated from the problem.

Column Inclusion: If a row is covered by only one column after the domination process, there is no other column that can cover that row; consequently, this column must be included in the optimal solution.

The reduced instances are used as input data.
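A possible reading of both reductions is sketched below in Python. It should be taken as an illustration rather than as the preprocessing of [57]: the domination check uses a simple sufficient condition (for every row of column j, pick the cheapest other remaining column that covers it, and compare the total cost of the picked columns with the cost of j), and the function name and data layout are assumptions.

```python
def reduce_columns(a, costs):
    """Return (kept columns, mandatory columns) after domination and inclusion."""
    n_rows, n_cols = len(a), len(costs)
    rows_of = [[i for i in range(n_rows) if a[i][j]] for j in range(n_cols)]

    # Ascending cost; ties broken by descending number of covered rows.
    order = sorted(range(n_cols), key=lambda j: (costs[j], -len(rows_of[j])))

    kept = set(range(n_cols))
    for j in order:
        chosen = set()
        coverable = bool(rows_of[j])
        for i in rows_of[j]:
            others = [k for k in kept if k != j and a[i][k]]
            if not others:              # row i can only be covered by column j
                coverable = False
                break
            chosen.add(min(others, key=lambda k: costs[k]))
        # Column j is dominated if cheaper columns already cover all of its rows.
        if coverable and sum(costs[k] for k in chosen) < costs[j]:
            kept.discard(j)

    # Column inclusion: a row covered by exactly one remaining column forces it.
    mandatory = set()
    for i in range(n_rows):
        covering = [j for j in kept if a[i][j]]
        if len(covering) == 1:
            mandatory.add(covering[0])
    return sorted(kept), sorted(mandatory)


a = [[1, 0, 1, 0],
     [0, 1, 1, 0],
     [0, 0, 0, 1]]
costs = [1, 1, 5, 4]
print(reduce_columns(a, costs))
```

In this toy instance, the third column covers the first two rows at cost 5, while the first two columns cover the same rows at a total cost of 2, so the third column is removed.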

6.3. Knapsack (KP)

The 0–1 Knapsack Problem, a typical NP-hard problem in operations research, is still a topical issue [58]. Many studies use this problem to test their algorithms, such as [59,60]. The problem may be defined as follows: given a set of N objects, each object has a certain weight and value. The items to be included in the collection must be chosen so that the total weight is less than or equal to the capacity limit and the total value is as large as possible.

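The problem is to maximize the objective function:

$$\max \sum_{j=1}^{N} p_{j} x_{j}$$

Subject to:

$$\sum_{j=1}^{N} w_{j} x_{j} \leq b, \qquad x_{j} \in \{0, 1\}, \quad j = 1, \dots, N$$

Binary decision variables $x_{j}$ are used to indicate whether item j is included in the knapsack or not. It can be assumed that all benefits and weights are positive and that all weights are smaller than the capacity b.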
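The evaluation of a binary solution for the KP can be sketched in Python as follows. Returning `None` for solutions that exceed the capacity is an assumption made for this example (a repair or penalty scheme could be used instead), and the function name and the toy data are hypothetical.

```python
def knapsack_value(profits, weights, capacity, x):
    """Total profit of the selected items, or None if the capacity is exceeded."""
    total_weight = sum(w for w, x_j in zip(weights, x) if x_j)
    if total_weight > capacity:
        return None  # infeasible: the selected items do not fit in the knapsack
    return sum(p for p, x_j in zip(profits, x) if x_j)


profits = [10, 40, 30, 50]
weights = [5, 4, 6, 3]
capacity = 10

print(knapsack_value(profits, weights, capacity, [0, 1, 0, 1]))
print(knapsack_value(profits, weights, capacity, [1, 1, 1, 0]))
```

Unlike the SCP, the KP is a maximization problem with a single constraint, so feasibility can be checked in one pass over the items.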

Instances: In order to evaluate the algorithm performance of solving the KP, we used 10 instances [61].

Table 2 describes instance, dimension, and parameters: weight w, profit p, and capacity of the bag b.

Table 2.

0/1 Knapsack Problem instances.

As Table 2 shows, different kinds of problems are solved, each with specific sizes and values, to get a broad view of the algorithm’s behavior.

Software and Hardware: CSA was implemented in Java 1.8. The characteristics of the Personal Computer (PC) were: Windows 10 with an Intel Core i7-6700 CPU (3.4 GHz), 16 GB of RAM.

Parameter settings: Below, Table 3 shows the setup for Two-steps strategy, Table 4 shows the setup for KMeans strategy, and finally Table 5 shows setup for DBscan strategy.

Table 3.

Crow Search Algorithm (CSA) Parameters for Set Covering Problem (SCP) and Knapsack Problem (KP)—Two-steps.

Table 4.

CSA Parameters for SCP and KP—KMeans.

Table 5.

CSA Parameters for SCP and KP—DBscan.

Sixty-five instances of the SCP and ten instances of the 0/1 Knapsack Problem have been considered, which were executed 31 times each.

6.4. Comparison Results and Strategies

The results obtained can be found in [62] and in the Appendix (Appendix A.1 and Appendix A.2); the information is detailed and indicates each of the transfer functions and binarization methods that were used. The data are grouped as follows: instance: name of the instance; MIN: the minimum value reached; MAX: the maximum value reached; AVG: the average value; BKS: the best known solution; RPD: defined by Equation (3); and TIME: execution time in seconds.

As we mentioned, the first strategy implemented was Two-steps, the second strategy implemented was KMeans, and the last strategy implemented was DBscan.

Set Covering Problem Results

Comparison strategy: Table 6 shows a summary of the instances grouped by their complexity; we define three groups: G1 (easy), G2 (medium), and G3 (hard). The first group includes instances 4.1 to 6.5, the second group includes instances a.1 to d.5, and the third group includes instances nre.1 to nrh.5.

Table 6.

CSA/SCP—Two-steps vs. KMeans vs. DBscan summary (t.o = time out).

For more information on the comparison between strategies, see [62]. It makes clear how complex it is to develop all the combinations of the two-step technique in order to get good results, compared with the clustering techniques.

Summary: Next, we rank the strategies in order of BKS obtained. In addition, the instances that obtained BKS in the 3 strategies are shown.

- Score

- Two-steps got 51/65 BKS.

- DBscan got 25/65 BKS.

- KMeans got 16/65 BKS.

- The three strategies got BKS in the same instances: 4.1-4.3-4.6-4.7-5.1-5.4-5.5-5.6-5.7-5.8-5.9-6.2-6.4-a.5

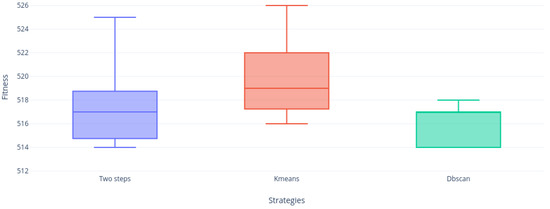

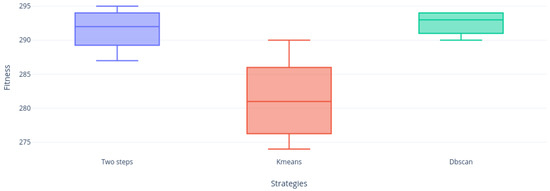

Instance distribution: Now that we have all the results of all the strategies that solved SCP, we compare the distribution of the samples of each instance through a box plot that shows the full distribution of the data. In order to make a summary of all the instances below, we present and describe the hardest instances of each group (4.10, 5.10, 6.5, a.5, b.5, c.5, d.5, nre.5, nrf.5, nrg.5 and nrh.5):

We can see that Figure 8 and Figure 9 are alike. The Two-steps and DBscan strategies obtained BKS but KMeans did not. However, the distribution is quite homogeneous. If we see the instance 4.10 illustrated in Figure 8, DBscan’s behavior is remarkable.

Figure 8.

Instance 4.10 distribution.

Figure 9.

Instance 5.10 distribution.

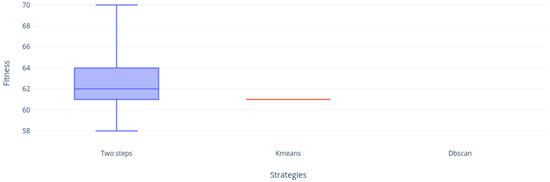

Figure 10 shows again that the Two-steps and DBscan strategies obtained BKS and the KMeans strategy did not. However, DBscan is again far more compact than Two-steps, which indicates that the clustering process is accurate in this instance. Then, Figure 11 shows for the first time a solidly compact distribution; it should be emphasized that the three strategies obtained BKS. Finally, Figure 12 shows that the Two-steps and KMeans strategies obtained BKS while DBscan did not, although it came very close; even so, the most compact distributions were those of KMeans and DBscan.

Figure 10.

Instance 6.5 distribution.

Figure 11.

Instance a.5 distribution.

Figure 12.

Instance b.5 distribution.

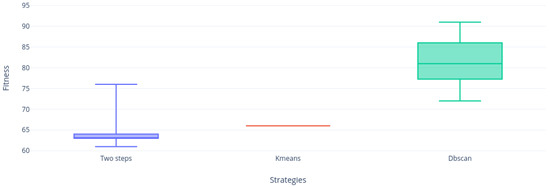

If we continue analyzing the samples, we can see that Figure 13, Figure 14, Figure 15, Figure 16 and Figure 17 are very similar: the Two-steps and KMeans strategies far exceeded DBscan. This suggests that DBscan works better when solving small instances with this metaheuristic, perhaps because of the large number of calculations needed to cluster the complete set of solutions, whereas KMeans clusters a single solution and Two-steps performs binarization directly on each column of the solution vector.

Figure 13.

Instance c.5 distribution.

Figure 14.

Instance d.5 distribution.

Figure 15.

Instance nre.5 distribution.

Figure 16.

Instance nrf.5 distribution.

Figure 17.

Instance nrg.5 distribution.

Finally, Figure 18 shows a null contribution from DBscan, which could not complete the executions due to the excessive delay of the strategy when clustering the most difficult instances; we can also observe KMeans completely trapped in a local optimum. However, the Two-steps approach continues to show good results, being the most robust in terms of BKS, as mentioned earlier in the strategy comparison.

Figure 18.

Instance nrh.5 distribution.

6.5. Knapsack Problem Results

Unlike the previous problem (SCP), the Knapsack problem has fewer instances, so it was not necessary to group by complexity.

Comparison strategy: Table 7 shows a summary of the instances.

Table 7.

CSA/KP—Two-steps vs. KMeans vs. DBscan summary.

For more information on the comparison between strategies, see [62].

Summary: Next, we rank the strategies in order of BKS obtained. In addition, the instances that obtained BKS in the three strategies are shown.

- Score

- Two-steps got 6/10 BKS.

- DBscan got 5/10 BKS.

- KMeans got 4/10 BKS.

- The three strategies got BKS in the same instances: f3-f4-f7-f9

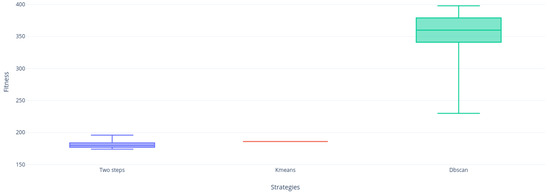

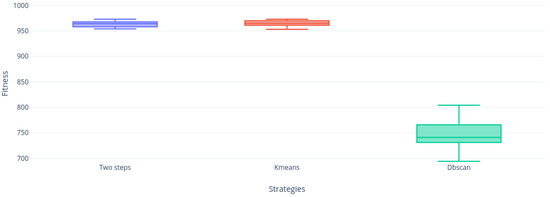

Instance distribution: Now that we have the results of all the strategies applied to KP, we compare the distribution of the samples of each instance through box plots, which show the complete distribution of the data. Instances f3, f4, f7, and f9 reach the BKS in every execution with all three strategies, so it is not necessary to plot them; below, we present and describe only the instances where the optimum was not always reached.
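The notion of a "compact" distribution used when reading the box plots can be made quantitative with the five-number summary and the interquartile range (IQR). The sketch below is only illustrative: `box_summary` is a hypothetical helper, the two samples are made-up values rather than results from the experiments, and the quartile convention (`method="inclusive"`) is an assumption that may differ from the one used by the plotting tool.

```python
import statistics
from typing import Dict, Sequence


def box_summary(sample: Sequence[float]) -> Dict[str, float]:
    """Return the values a box plot draws: min, quartiles, max, and the IQR."""
    q1, median, q3 = statistics.quantiles(sample, n=4, method="inclusive")
    return {
        "min": min(sample),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(sample),
        "iqr": q3 - q1,  # a smaller IQR means a more compact box
    }


# Hypothetical fitness samples for two strategies on the same instance.
compact_sample = [10, 10, 11, 11, 12]
spread_sample = [8, 10, 12, 14, 16]

for label, sample in (("compact", compact_sample), ("spread", spread_sample)):
    summary = box_summary(sample)
    print(label, "median:", summary["median"], "IQR:", summary["iqr"])
```

Comparing the IQR of two strategies on the same instance gives a single number for the visual impression of compactness, while the median and extremes indicate how close the runs came to the BKS.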

Figures 19 to 24 show that the Two-steps strategy achieved better results, but that the DBscan distribution is far more compact than that of KMeans. Figure 24 shows the only instance where DBscan did not outperform KMeans.

Figure 19.

Instance f1 distribution.

Figure 20.

Instance f2 distribution.

Figure 21.

Instance f5 distribution.

Figure 22.

Instance f6 distribution.

Figure 23.

Instance f8 distribution.

Figure 24.

Instance f10 distribution.

7. Statistical Test

As we mentioned, in order to determine independence, we propose the following hypotheses:

- $H_0$: states that the sample follows a normal distribution.
- $H_1$: states the opposite.

The test performed yielded a $p$-value lower than 0.05; therefore, $H_0$ cannot be assumed. Since the samples are independent and cannot be assumed to follow a normal distribution, it is not feasible to use the central limit theorem. Therefore, to evaluate the heterogeneity of the samples we use a nonparametric test, the Mann–Whitney–Wilcoxon test. To compare all the results of the hardest instances, we propose the following hypotheses for each pair of strategies:

- Two-steps vs. KMeans
  - $H_0$: Two-steps is better than KMeans.
  - $H_1$: states the opposite.
- Two-steps vs. DBscan
  - $H_0$: Two-steps is better than DBscan.
  - $H_1$: states the opposite.
- KMeans vs. DBscan
  - $H_0$: KMeans is better than DBscan.
  - $H_1$: states the opposite.

Finally, the contrast statistical test demonstrates which technique is significantly better.
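To make the pairwise comparison concrete, here is a minimal sketch of a one-sided Mann–Whitney–Wilcoxon test using the normal approximation with tie correction. It is not the PISA implementation used in the study; the function names and the sample values are hypothetical, and no continuity correction is applied. The test is written for minimization (as in SCP), so a small p-value supports the claim that the first sample tends to take lower values; for a maximization problem such as KP the two arguments would be swapped.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney_u_less(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U statistic of x, one-sided p-value that x tends to be smaller than y)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    tie_counts = {}
    for value in combined:
        tie_counts[value] = tie_counts.get(value, 0) + 1
    tie_term = sum(t**3 - t for t in tie_counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # every observation is identical: no evidence either way
        return u_x, 1.0
    z = (u_x - n1 * n2 / 2) / math.sqrt(variance)
    p_value = 0.5 * (1 + math.erf(z / math.sqrt(2)))  # lower-tail normal probability
    return u_x, p_value


# Hypothetical fitness values of two strategies on one minimization instance.
strategy_a = [1, 2, 3, 4, 5]
strategy_b = [6, 7, 8, 9, 10]
u, p = mann_whitney_u_less(strategy_a, strategy_b)
print(f"U = {u}, p = {p:.4f}")
```

With samples as small as the ones in this example the normal approximation is rough; the 31 executions per experiment reported in this paper are a more comfortable size for it.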

Tables 8 to 24 compare the SCP and 0/1 KP binarization strategies for the hardest instances tested via the Wilcoxon’s signed rank test. As the significance level is also set to 0.05, $p$-values smaller than 0.05 mean that the null hypothesis of the corresponding comparison cannot be assumed.

Table 8.

p-values for instance 4.10.

Table 9.

p-values for instance 5.10.

Table 10.

p-values for instance 6.5.

Table 11.

p-values for instance a.5.

Table 12.

p-values for instance b.5.

Table 13.

p-values for instance c.5.

Table 14.

p-values for instance d.5.

Table 15.

p-values for instance nre.5.

Table 16.

p-values for instance nrf.5.

Table 17.

p-values for instance nrg.5.

Table 18.

p-values for instance nrh.5.

Table 19.

p-values for instance f1.

Table 20.

p-values for instance f2.

Table 21.

p-values for instance f5.

Table 22.

p-values for instance f6.

Table 23.

p-values for instance f8.

Table 24.

p-values for instance f10.

To run the tests that support the study, we use a method belonging to the PISA system. We provide this method with all the data distributions (one file per distribution, one value per line), and the algorithm returns a p-value for the hypotheses.
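The input layout described above (one file per distribution, one value per line) is simple to read back for inspection or for an independent check. The sketch below only illustrates that layout; `parse_sample`, `load_sample`, and the file name in the usage note are hypothetical and are not part of PISA.

```python
from pathlib import Path
from typing import Iterable, List


def parse_sample(lines: Iterable[str]) -> List[float]:
    """Convert text lines holding one number each into floats, skipping blank lines."""
    return [float(line.strip()) for line in lines if line.strip()]


def load_sample(path: str) -> List[float]:
    """Read one distribution from a file that stores one value per line."""
    return parse_sample(Path(path).read_text().splitlines())


# In-memory stand-in for the content of one sample file (hypothetical values).
example_lines = ["429", "430.5", "", "431"]
print(parse_sample(example_lines))
```

A call such as `load_sample("kmeans_4.10.txt")` would then return the list of fitness values for one strategy on one instance, assuming such a file exists.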

The following tables show the result of the Mann-Whitney-Wilcoxon test. To understand them, it is necessary to have knowledge of the following acronyms:

- SWS = Statistically without significance.

- D/A = Does not apply.

SCP—p-value

KP—p-value

All reported p-values are less than 0.05; as indicated above, SWS means that the comparison has no statistical significance, and D/A means that the comparison cannot be made because one of the strategies has no data. With this information, we can clearly see which of the three strategies was better in each reported instance. In summary, for the SCP, the number of p-values lower than 0.05 was 10 for Two-steps, 10 for KMeans, and 5 for DBscan. For the 0/1 KP, the number of p-values lower than 0.05 was 0 for Two-steps, 10 for KMeans, and 5 for DBscan.
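The reporting convention of the p-value tables can be summarized in a few lines. This is a hedged sketch: `label_p_value` is a hypothetical helper, the three-decimal formatting is an assumption, and `None` is used here to represent a comparison in which one strategy produced no data (for example, a timed-out DBscan run).

```python
from typing import Optional


def label_p_value(p_value: Optional[float], alpha: float = 0.05) -> str:
    """Map a p-value to the cell text used in the tables."""
    if p_value is None:
        return "D/A"  # does not apply: one of the strategies has no data
    if p_value < alpha:
        return f"{p_value:.3f}"  # significant values are reported numerically
    return "SWS"  # statistically without significance


for value in (0.003, 0.4, None):
    print(value, "->", label_p_value(value))
```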

8. Conclusions

After implementing and analyzing binarization strategies based on clustering, we observe that DBscan achieved better results than KMeans at the BKS level. However, the execution times of DBscan were longer than those of KMeans, and we believe this has a clear explanation: the way the experiment applies clustering is crucial, since KMeans clusters a vector while DBscan clusters the complete matrix, which makes the latter take longer. Moreover, we do not report solving times because this measure is known to depend mainly on the characteristics of the machine used. Finally, our work focuses on enhancing the binarization process for metaheuristics in order to find better results. In doing so, we have certainly sacrificed solving time, but we think that DBscan is very promising: although clustering the complete matrix with this technique took more time to execute, it still obtained good results.

When solving SCP, we tested 65 nonunique instances from the Beasley OR Library, and we tested 10 well-known instances to solve KP; the classical technique obtained better results than the clustering techniques in both problems. We believe that the number of combinations tested (992 experiments for Two-steps) allows a better performance with this metaheuristic, and that adjustments, perhaps through autonomous search, are needed for the clustering techniques to perform better.

The best results were obtained with the S-Shape transfer functions (S-Shape 4 predominates over the others) and the Elitist binarization method. The worst results came from combining the V-Shape transfer function with the Complement binarization. It is worth noting that KMeans did not obtain better results than the Two-steps strategy. On the other hand, DBscan obtained good results, better than KMeans and worse than Two-steps. The number of Two-steps combinations is, however, a very influential factor: with 32 different ways of binarization, the approach is far from easy to implement and requires time and the knowledge of an expert user. With DBscan, in contrast, we reduce the combinatorial Two-steps strategies to a single experiment (31 executions) while still getting good results. Statistically, DBscan achieved better performance.

As future work, we plan to reverse the strategies of KMeans and DBscan by solving the grouping problem as a matrix with KMeans and as a unique solution with DBscan. In addition, we will implement a self-adaptive approach to get better results. Finally, we will apply the online control parameters in the Two-steps strategy to reach BKS in instances that did not reach optimal values.

Author Contributions

Formal analysis, S.V.; investigation, R.S., B.C., S.V., N.C. and R.O.; methodology, R.S. and B.C.; resources, R.S. and B.C.; software, R.O., S.V. and N.C.; validation, R.O., S.V., F.P., C.C. and N.C.; writing—original draft, S.V.; writing—review and editing, R.S., R.O. and S.V. All the authors of this paper hold responsibility for every part of this manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

Ricardo Soto is supported by Grant CONICYT/FONDECYT/REGULAR/1190129. B.C. is supported by Grant CONICYT/FONDECYT/REGULAR/1171243. Nicolás Caselli is supported by PUCV-INF 2019.

Conflicts of Interest

The authors declare no conflict of interest. The founding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, and in the decision to publish the results.

Appendix A

Appendix A.1. Set Covering Problem Results

Appendix A.1.1. SCP—Crow Search Algorithm

Table A1.

CSA/SCP—SShape 1—Two-steps vs. KMeans vs. DBscan (t.o = time out).

| Instance | BKS | S1+Stand. MIN | S1+Stand. AVG | S1+Comp. MIN | S1+Comp. AVG | S1+Static MIN | S1+Static AVG | S1+Elitist MIN | S1+Elitist AVG | KMeans MIN | KMeans AVG | DBscan MIN | DBscan AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4.1 | 429 | 438 | 444.81 | 459 | 463.32 | 465 | 494.87 | 432 | 443.74 | 429 | 430 | 429 | 430.38 |

| 4.2 | 512 | 522 | 550.35 | 579 | 586.39 | 612 | 698.06 | 528 | 551.10 | 513 | 515.84 | 512 | 514.77 |

| 4.3 | 516 | 520 | 532.58 | 552 | 564.19 | 587 | 620.47 | 564 | 578.36 | 516 | 520.61 | 516 | 518.87 |

| 4.4 | 494 | 513 | 524.58 | 544 | 555.26 | 566 | 620.13 | 496 | 515.84 | 495 | 498.87 | 494 | 496.10 |

| 4.5 | 512 | 518 | 529.23 | 554 | 570.45 | 601 | 680.39 | 518 | 529.55 | 514 | 514.90 | 512 | 513.23 |

| 4.6 | 560 | 577 | 590.23 | 614 | 626.23 | 661 | 798.55 | 566 | 590 | 560 | 562.35 | 560 | 560.19 |

| 4.7 | 430 | 436 | 445.90 | 485 | 492.81 | 498 | 558.90 | 438 | 451.68 | 430 | 432.26 | 430 | 430.81 |

| 4.8 | 492 | 501 | 513.74 | 532 | 540.29 | 585 | 680.61 | 499 | 508.52 | 493 | 496.13 | 492 | 494.10 |

| 4.9 | 641 | 675 | 690.42 | 722 | 745.06 | 807 | 911.65 | 657 | 687.94 | 645 | 657.90 | 641 | 647.50 |

| 4.10 | 514 | 534 | 554.74 | 569 | 578.90 | 595 | 678.27 | 528 | 550.23 | 516 | 519.9 | 514 | 515.81 |

| 5.1 | 253 | 265 | 275.29 | 291 | 296.26 | 309 | 352.61 | 258 | 271.32 | 253 | 255.74 | 253 | 255.52 |

| 5.2 | 302 | 310 | 327.55 | 352 | 363.68 | 421 | 471.19 | 317 | 326.74 | 308 | 309.81 | 302 | 306.68 |

| 5.3 | 226 | 230 | 240.19 | 249 | 252.65 | 278 | 310.35 | 229 | 239.74 | 228 | 228.68 | 226 | 227.32 |

| 5.4 | 242 | 242 | 249.43 | 263 | 264.90 | 301 | 354.12 | 254 | 269.84 | 242 | 242.23 | 242 | 243.29 |

| 5.5 | 211 | 211 | 223.13 | 242 | 245.97 | 247 | 301.87 | 245 | 268.21 | 211 | 212.03 | 211 | 213.90 |

| 5.6 | 213 | 222 | 235.71 | 251 | 254.39 | 267 | 309.87 | 226 | 237.87 | 213 | 215.10 | 213 | 213.04 |

| 5.7 | 293 | 305 | 314.61 | 327 | 335.29 | 360 | 408.94 | 305 | 315.10 | 293 | 297.68 | 293 | 295.35 |

| 5.8 | 288 | 302 | 310.06 | 342 | 348.48 | 370 | 424.65 | 299 | 310.84 | 288 | 290.35 | 288 | 290.39 |

| 5.9 | 279 | 283 | 303.23 | 321 | 331.29 | 357 | 381.28 | 287 | 305.68 | 279 | 280.52 | 279 | 279.90 |

| 5.10 | 265 | 271 | 277.45 | 294 | 298.45 | 335 | 376.16 | 273 | 277.10 | 267 | 268.77 | 265 | 267.97 |

| 6.1 | 138 | 146 | 152.03 | 159 | 166 | 210 | 287.13 | 145 | 151.71 | 140 | 142.90 | 140 | 143.42 |

| 6.2 | 146 | 152 | 157.29 | 163 | 169 | 308 | 401.10 | 150 | 156.10 | 146 | 148.87 | 146 | 149.71 |

| 6.3 | 154 | 148 | 156.52 | 162 | 167.23 | 268 | 379.39 | 148 | 156.65 | 147 | 148.16 | 145 | 149.65 |

| 6.4 | 131 | 131 | 138.26 | 137 | 141.94 | 247 | 359.87 | 138 | 147.64 | 131 | 131.68 | 131 | 133.03 |

| 6.5 | 161 | 171 | 181.35 | 186 | 194.50 | 267 | 419.94 | 173 | 181.42 | 163 | 164.68 | 162 | 165.39 |

| AVG | 336.08 | 344.92 | 356.75 | 373.96 | 382.11 | 420.88 | 491.21 | 346.92 | 360.91 | 336.80 | 339.43 | 335.84 | 346.80 |

| a.1 | 253 | 257 | 263.74 | 269 | 272.48 | 474 | 584.81 | 256 | 261.81 | 254 | 255.55 | 254 | 256 |

| a.2 | 252 | 262 | 274.42 | 300 | 305.23 | 439 | 565.55 | 267 | 274.74 | 257 | 259.48 | 256 | 260.65 |

| a.3 | 232 | 242 | 251 | 256 | 258.16 | 410 | 525.81 | 242 | 250.35 | 235 | 237.10 | 233 | 237.35 |

| a.4 | 234 | 239 | 250.71 | 264 | 268.35 | 409 | 523.32 | 236 | 250.97 | 235 | 237.39 | 236 | 239.97 |

| a.5 | 236 | 239 | 243.32 | 258 | 263.03 | 421 | 540.65 | 238 | 241.84 | 236 | 237.06 | 236 | 237.52 |

| b.1 | 69 | 75 | 81.61 | 82 | 86.10 | 347 | 518.13 | 72 | 81.58 | 74 | 79.61 | 69 | 76.48 |

| b.2 | 76 | 84 | 90.26 | 92 | 94.19 | 411 | 549.61 | 81 | 88.35 | 83 | 90.03 | 81 | 86.81 |

| b.3 | 80 | 80 | 85.94 | 91 | 91.90 | 507 | 535.9 | 85 | 87.65 | 84 | 88.55 | 82 | 88.81 |

| b.4 | 79 | 83 | 89.10 | 96 | 100.26 | 517 | 660.52 | 84 | 88.55 | 84 | 87.90 | 83 | 88.16 |

| b.5 | 72 | 72 | 78.92 | 82 | 84.55 | 498 | 587.58 | 76 | 80.35 | 72 | 77.68 | 78 | 76.03 |

| c.1 | 227 | 232 | 237.65 | 263 | 264.65 | 512 | 718.52 | 233 | 236.71 | 228 | 230.29 | 233 | 238.77 |

| c.2 | 219 | 228 | 236.61 | 259 | 263.13 | 617 | 782.81 | 226 | 235.58 | 226 | 222.71 | 226 | 232.74 |

| c.3 | 243 | 257 | 270.55 | 297 | 302.32 | 738 | 939.94 | 261 | 271 | 254 | 256.68 | 253 | 264 |

| c.4 | 219 | 231 | 243.45 | 256 | 260.17 | 618 | 783.23 | 233 | 243.58 | 225 | 227.97 | 224 | 233.10 |

| c.5 | 215 | 219 | 226.81 | 245 | 250.29 | 529 | 713.77 | 218 | 226.06 | 215 | 216.35 | 222 | 230.42 |

| d.1 | 60 | 61 | 68.29 | 70 | 70.87 | 600 | 889.42 | 63 | 67.23 | 66 | 66 | 68 | 79.65 |

| d.2 | 66 | 70 | 73.52 | 77 | 78.68 | 686 | 1001.55 | 68 | 74.19 | 71 | 71 | 73 | 89.71 |

| d.3 | 72 | 77 | 81.68 | 83 | 86.74 | 881 | 1114.29 | 78 | 82.13 | 82 | 82 | 82 | 100.84 |

| d.4 | 66 | 65 | 68.29 | 71 | 71.81 | 644 | 945 | 65 | 68.23 | 67 | 67 | 70 | 81.74 |

| d.5 | 61 | 64 | 67.74 | 69 | 69.97 | 631 | 909.13 | 63 | 67.39 | 66 | 66 | 72 | 81.29 |

| AVG | 151.55 | 156.85 | 164.18 | 174 | 177.14 | 544.45 | 719.47 | 157.25 | 163.91 | 155.70 | 157.81 | 156.55 | 164 |

| nre.1 | 29 | 30 | 33.23 | 32 | 33 | 1354 | 1643.54 | 30 | 32.68 | 30 | 30 | 70 | 80.03 |

| nre.2 | 30 | 33 | 37.45 | 36 | 37.61 | 1405 | 1732.55 | 33 | 36.19 | 34 | 34 | 83 | 128.32 |

| nre.3 | 27 | 28 | 33.61 | 32 | 34.61 | 1137 | 1416.77 | 30 | 33.61 | 34 | 34 | 74 | 112.35 |

| nre.4 | 28 | 31 | 34 | 34 | 34 | 1210 | 1712.48 | 30 | 33.90 | 33 | 34 | 83 | 177.45 |

| nre.5 | 28 | 30 | 32.94 | 33 | 33.87 | 1292 | 1860.23 | 30 | 32.77 | 30 | 30 | 92 | 150.52 |

| nrf.1 | 14 | 17 | 19 | 17 | 17.03 | 667 | 903.48 | 15 | 18.42 | 17 | 17 | 372 | 444.19 |

| nrf.2 | 15 | 16 | 18.71 | 18 | 18 | 607 | 836.29 | 16 | 18.61 | 18 | 18 | 74 | 404.58 |

| nrf.3 | 14 | 17 | 19.39 | 19 | 19.90 | 858 | 1034.90 | 17 | 19.29 | 19 | 19 | 335 | 487.25 |

| nrf.4 | 14 | 15 | 18.45 | 17 | 18.61 | 667 | 902.97 | 16 | 18.29 | 18 | 18 | 52 | 420.52 |

| nrf.5 | 13 | 15 | 18.29 | 16 | 16.32 | 653 | 844.23 | 15 | 17.61 | 16 | 16 | 65 | 371.03 |

| nrg.1 | 176 | 201 | 208.26 | 230 | 233.48 | 4473 | 5588.65 | 192 | 203.39 | 197 | 197 | 287 | 385.13 |

| nrg.2 | 154 | 172 | 181.06 | 187 | 190.48 | 3848 | 4648.48 | 166 | 174.16 | 168 | 168 | 208 | 284.29 |

| nrg.3 | 166 | 180 | 190.55 | 196 | 197.84 | 4319 | 5026.55 | 180 | 185.19 | 183 | 183 | 211 | 347.23 |

| nrg.4 | 168 | 187 | 200.03 | 216 | 219.26 | 4142 | 4935.29 | 180 | 192.10 | 186 | 186 | 250 | 345.77 |

| nrg.5 | 168 | 191 | 201.10 | 213 | 217.32 | 4198 | 5155 | 181 | 193.84 | 186 | 186 | 230 | 353.97 |

| nrh.1 | 63 | 102 | 124.68 | 82 | 83.65 | 9080 | 10,028.42 | 72 | 77.42 | 77 | 77 | t.o. | t.o. |

| nrh.2 | 63 | 92 | 120.10 | 81 | 81.81 | 9085 | 10,332.71 | 71 | 76.48 | 71 | 71 | t.o. | t.o. |

| nrh.3 | 59 | 89 | 120.23 | 74 | 75 | 8101 | 9886.68 | 67 | 73.61 | 69 | 69 | t.o. | t.o. |

| nrh.4 | 58 | 91 | 116.52 | 73 | 74.97 | 8592 | 9899.26 | 67 | 70.94 | 68 | 68 | t.o. | t.o. |

| nrh.5 | 55 | 88 | 105.13 | 68 | 68.74 | 7786 | 9318.55 | 62 | 67.16 | 61 | 61 | t.o. | t.o. |

| AVG | 67.10 | 81.25 | 91.63 | 83.70 | 85.27 | 3673.70 | 4385.35 | 73.50 | 78.78 | 75.75 | 75.80 | t.o. | t.o. |

Table A2.

CSA/SCP—SShape 2—Two-steps vs. KMeans vs. DBscan (t.o = time out).

| Instance | BKS | S2+Stand. MIN | S2+Stand. AVG | S2+Comp. MIN | S2+Comp. AVG | S2+Static MIN | S2+Static AVG | S2+Elitist MIN | S2+Elitist AVG | KMeans MIN | KMeans AVG | DBscan MIN | DBscan AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4.1 | 429 | 432 | 443.48 | 457 | 462.81 | 622 | 686.58 | 431 | 439.73 | 429 | 430 | 429 | 430.38 |

| 4.2 | 512 | 517 | 549.26 | 578 | 587.26 | 929 | 1084.16 | 522 | 549.50 | 513 | 515.84 | 512 | 514.77 |

| 4.3 | 516 | 519 | 532 | 555 | 564.23 | 789 | 987.25 | 578 | 687.47 | 516 | 520.61 | 516 | 518.87 |

| 4.4 | 494 | 500 | 520.55 | 542 | 555.81 | 861 | 963.39 | 501 | 517.03 | 495 | 498.87 | 494 | 496.10 |

| 4.5 | 512 | 518 | 531.84 | 557 | 573.48 | 901 | 1101.97 | 514 | 529.40 | 514 | 514.90 | 512 | 513.23 |

| 4.6 | 560 | 566 | 588.48 | 610 | 624.81 | 1194 | 1320.35 | 568 | 588.70 | 560 | 562.35 | 560 | 560.19 |

| 4.7 | 430 | 436 | 447.13 | 479 | 491.35 | 821 | 892.06 | 437 | 448.60 | 430 | 432.26 | 430 | 430.81 |

| 4.8 | 492 | 499 | 514.74 | 525 | 539.52 | 946 | 1095.10 | 493 | 506.97 | 493 | 496.13 | 492 | 494.10 |

| 4.9 | 641 | 641 | 652.16 | 722 | 743.39 | 1368 | 1499.48 | 662 | 682.20 | 645 | 657.90 | 641 | 647.50 |

| 4.10 | 514 | 523 | 550.52 | 560 | 575.35 | 873 | 1064.16 | 518 | 546.53 | 516 | 519.9 | 514 | 515.81 |

| 5.1 | 253 | 257 | 273.52 | 287 | 295.26 | 490 | 556.81 | 260 | 270.27 | 253 | 255.74 | 253 | 255.52 |

| 5.2 | 302 | 312 | 325.23 | 352 | 361.77 | 717 | 800.55 | 308 | 321.53 | 308 | 309.81 | 302 | 306.68 |

| 5.3 | 226 | 231 | 240.77 | 250 | 253.23 | 466 | 522.19 | 229 | 237.13 | 228 | 228.68 | 226 | 227.32 |

| 5.4 | 242 | 244 | 249.13 | 261 | 264.68 | 357 | 487.25 | 247 | 278.64 | 242 | 242.23 | 242 | 243.29 |

| 5.5 | 211 | 215 | 223.77 | 242 | 245.87 | 287 | 359.25 | 287 | 301.07 | 211 | 212.03 | 211 | 213.90 |

| 5.6 | 213 | 217 | 235.61 | 245 | 253.68 | 422 | 498.10 | 224 | 234 | 213 | 215.10 | 213 | 213.04 |

| 5.7 | 293 | 307 | 312.90 | 331 | 334.16 | 563 | 650.16 | 299 | 311.40 | 293 | 297.68 | 293 | 295.35 |

| 5.8 | 288 | 291 | 310.71 | 339 | 346.74 | 575 | 654.52 | 298 | 308.57 | 288 | 290.35 | 288 | 290.39 |

| 5.9 | 279 | 288 | 306.16 | 320 | 331.23 | 487 | 587.37 | 297 | 357.26 | 279 | 280.52 | 279 | 279.90 |

| 5.10 | 265 | 269 | 276.58 | 290 | 297.55 | 553 | 609.87 | 272 | 278 | 267 | 268.77 | 265 | 267.97 |

| 6.1 | 138 | 148 | 151.67 | 161 | 165.26 | 569 | 732.77 | 143 | 150.77 | 140 | 142.90 | 140 | 143.42 |

| 6.2 | 146 | 150 | 158.58 | 166 | 169 | 774 | 995.52 | 151 | 157.90 | 146 | 148.87 | 146 | 149.71 |

| 6.3 | 154 | 150 | 157.65 | 162 | 167.77 | 766 | 949.35 | 150 | 155.27 | 147 | 148.16 | 145 | 149.65 |

| 6.4 | 131 | 134 | 138.65 | 139 | 142.06 | 778 | 954.21 | 147 | 157.28 | 131 | 131.68 | 131 | 133.03 |

| 6.5 | 161 | 175 | 181.94 | 186 | 192.58 | 792 | 1000.87 | 170 | 179.47 | 163 | 164.68 | 162 | 165.39 |

| AVG | 336.08 | 341.56 | 354.92 | 372.64 | 381.55 | 716 | 842.13 | 348.24 | 367.78 | 336.80 | 339.43 | 335.84 | 338.25 |

| a.1 | 253 | 258 | 264.39 | 270 | 273.16 | 981 | 1126.26 | 257 | 262.60 | 254 | 255.55 | 254 | 256 |

| a.2 | 252 | 263 | 275.06 | 301 | 305.32 | 943 | 1066.48 | 266 | 274.33 | 257 | 259.48 | 256 | 260.65 |

| a.3 | 232 | 247 | 250.35 | 256 | 257.87 | 812 | 998.84 | 241 | 250.03 | 235 | 237.10 | 233 | 237.35 |

| a.4 | 234 | 234 | 240.06 | 265 | 268.90 | 848 | 977.29 | 241 | 251.03 | 235 | 237.39 | 236 | 239.97 |

| a.5 | 236 | 238 | 244.26 | 259 | 264.29 | 834 | 971.19 | 239 | 242.90 | 236 | 237.06 | 236 | 237.52 |

| b.1 | 69 | 72 | 80.58 | 82 | 85.26 | 1068 | 1196.61 | 76 | 80.83 | 74 | 79.61 | 69 | 76.48 |

| b.2 | 76 | 76 | 81.45 | 93 | 94 | 1039 | 1179.84 | 80 | 88.30 | 83 | 90.03 | 81 | 86.81 |

| b.3 | 80 | 82 | 86.55 | 91 | 91.84 | 1054 | 1178.89 | 86 | 91.04 | 84 | 88.55 | 82 | 88.81 |

| b.4 | 79 | 84 | 88.68 | 95 | 100.23 | 1153 | 1353.97 | 83 | 87.57 | 84 | 87.90 | 83 | 88.16 |

| b.5 | 72 | 73 | 79.55 | 82 | 84.52 | 1127 | 1201.31 | 78 | 84.89 | 72 | 77.68 | 78 | 76.03 |

| c.1 | 227 | 233 | 240.23 | 262 | 264.68 | 1128 | 1308.87 | 230 | 236.47 | 228 | 230.29 | 233 | 238.77 |

| c.2 | 219 | 219 | 2225.10 | 254 | 262.68 | 1359 | 1470.74 | 230 | 237.47 | 226 | 222.71 | 226 | 232.74 |

| c.3 | 243 | 260 | 272.03 | 295 | 301.52 | 1447 | 1734.35 | 259 | 269.80 | 254 | 256.68 | 253 | 264 |

| c.4 | 219 | 235 | 243.74 | 254 | 259.42 | 1365 | 1463.19 | 233 | 243.40 | 225 | 227.97 | 224 | 233.10 |

| c.5 | 215 | 219 | 225.65 | 246 | 249.77 | 1253 | 1364.74 | 219 | 225.50 | 215 | 216.35 | 222 | 230.42 |

| d.1 | 60 | 63 | 68.77 | 70 | 70.87 | 1483 | 1682.68 | 62 | 67.67 | 66 | 66 | 68 | 79.65 |

| d.2 | 66 | 69 | 74.45 | 77 | 78.23 | 1608 | 1893.90 | 69 | 73.57 | 71 | 71 | 73 | 89.71 |

| d.3 | 72 | 78 | 82.06 | 83 | 86.68 | 1900 | 2102.94 | 77 | 80.40 | 82 | 82 | 82 | 100.84 |

| d.4 | 66 | 64 | 68.65 | 70 | 71.81 | 1531 | 1698.81 | 64 | 68.27 | 67 | 67 | 70 | 81.74 |

| d.5 | 61 | 62 | 67.94 | 69 | 69.90 | 1521 | 1699.13 | 64 | 66.87 | 66 | 66 | 72 | 81.29 |

| AVG | 151.55 | 156.45 | 262.97 | 173.70 | 177.05 | 1222.70 | 1383.50 | 157.70 | 164.15 | 155.70 | 157.81 | 156.55 | 164 |

| nre.1 | 29 | 29 | 33.14 | 32 | 33 | 2147 | 2147.65 | 30 | 33.85 | 30 | 30 | 70 | 80.03 |

| nre.2 | 30 | 32 | 37.29 | 36 | 37.61 | 2652 | 2897.61 | 33 | 37 | 34 | 34 | 83 | 128.32 |

| nre.3 | 27 | 31 | 34.23 | 33 | 34.52 | 2242 | 2510.84 | 30 | 33.20 | 34 | 34 | 74 | 112.35 |

| nre.4 | 28 | 29 | 34.32 | 33 | 33.97 | 2519 | 2804.13 | 31 | 33.97 | 33 | 34 | 83 | 177.45 |

| nre.5 | 28 | 30 | 33.58 | 33 | 33.94 | 2697 | 2965.90 | 29 | 32.67 | 30 | 30 | 92 | 150.52 |

| nrf.1 | 14 | 16 | 18.16 | 17 | 17 | 1438 | 1688.13 | 16 | 18.53 | 17 | 17 | 372 | 444.19 |

| nrf.2 | 15 | 16 | 19.23 | 17 | 17.97 | 1335 | 1516.13 | 17 | 18.47 | 18 | 18 | 74 | 404.58 |

| nrf.3 | 14 | 17 | 19.77 | 19 | 19.87 | 1540 | 1825.87 | 17 | 19.50 | 19 | 19 | 335 | 487.25 |

| nrf.4 | 14 | 16 | 18.68 | 17 | 18.71 | 1524 | 1664.94 | 16 | 18.37 | 18 | 18 | 52 | 420.52 |

| nrf.5 | 13 | 16 | 18.10 | 16 | 16.32 | 1313 | 1445.74 | 15 | 17.83 | 16 | 16 | 65 | 371.03 |

| nrg.1 | 176 | 327 | 371.45 | 230 | 233.13 | 7127 | 7506.23 | 192 | 203.50 | 197 | 197 | 287 | 385.13 |

| nrg.2 | 154 | 233 | 279.13 | 185 | 189.77 | 5994 | 6340.61 | 167 | 175.87 | 168 | 168 | 208 | 284.29 |

| nrg.3 | 166 | 281 | 315.81 | 195 | 197.71 | 6580 | 6997.29 | 178 | 186.17 | 183 | 183 | 211 | 347.23 |

| nrg.4 | 168 | 272 | 303.65 | 215 | 218.90 | 6601 | 7007.48 | 183 | 192.27 | 186 | 186 | 250 | 345.77 |

| nrg.5 | 168 | 276 | 329 | 216 | 217.55 | 6778 | 7198.52 | 184 | 194.33 | 186 | 186 | 230 | 353.97 |

| nrh.1 | 63 | 1165 | 1405.97 | 83 | 83.77 | 12,353 | 12,926.58 | 72 | 77.97 | 71 | 71 | t.o. | t.o. |

| nrh.2 | 63 | 1153 | 1326.81 | 79 | 81.77 | 11,778 | 12,818.74 | 73 | 78.57 | 71 | 71 | t.o. | t.o. |

| nrh.3 | 59 | 1199 | 1361.74 | 74 | 74.87 | 11,992 | 12,604.52 | 69 | 73.53 | 69 | 69 | t.o. | t.o. |

| nrh.4 | 58 | 1187 | 1334.77 | 73 | 74.84 | 12,295 | 12,797.39 | 66 | 71.03 | 68 | 68 | t.o. | t.o. |

| nrh.5 | 55 | 1011 | 1181.94 | 67 | 68.71 | 11,477 | 12,064.84 | 63 | 68.87 | 61 | 61 | t.o. | t.o. |

| AVG | 67.10 | 366.80 | 423.83 | 83.50 | 85.19 | 5619.10 | 5986.45 | 74.05 | 79.27 | 75.45 | 75.50 | t.o. | t.o. |

Table A3.

CSA/SCP—SShape 3—Two-steps vs. KMeans vs. DBscan (t.o = time out).

| Instance | BKS | S3+Stand. MIN | S3+Stand. AVG | S3+Comp. MIN | S3+Comp. AVG | S3+Static MIN | S3+Static AVG | S3+Elitist MIN | S3+Elitist AVG | KMeans MIN | KMeans AVG | DBscan MIN | DBscan AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4.1 | 429 | 431 | 442.52 | 452 | 461.10 | 587 | 667.32 | 432 | 439.45 | 429 | 430 | 429 | 430.38 |

| 4.2 | 512 | 515 | 542.26 | 576 | 585.35 | 906 | 1028.52 | 515 | 545.26 | 513 | 515.84 | 512 | 514.77 |

| 4.3 | 516 | 516 | 529.13 | 554 | 564.97 | 798 | 869.54 | 579 | 602.32 | 516 | 520.61 | 516 | 518.87 |

| 4.4 | 494 | 495 | 518.71 | 544 | 553.81 | 828 | 914.39 | 501 | 513.42 | 495 | 498.87 | 494 | 496.10 |

| 4.5 | 512 | 517 | 527.61 | 559 | 568.74 | 863 | 1036 | 514 | 525.35 | 514 | 514.90 | 512 | 513.23 |

| 4.6 | 560 | 565 | 585.53 | 618 | 624.13 | 1082 | 1239.10 | 566 | 587.68 | 560 | 562.35 | 560 | 560.19 |

| 4.7 | 430 | 434 | 445.52 | 484 | 491.16 | 728 | 830.16 | 438 | 447 | 430 | 432.26 | 430 | 430.81 |

| 4.8 | 492 | 498 | 508.68 | 529 | 539.26 | 942 | 1075.97 | 497 | 506.26 | 493 | 496.13 | 492 | 494.10 |

| 4.9 | 641 | 654 | 684.03 | 726 | 741.90 | 1145 | 1399.77 | 656 | 677.03 | 645 | 657.90 | 641 | 647.50 |

| 4.10 | 514 | 518 | 540.97 | 568 | 578.26 | 852 | 1017.61 | 517 | 537.58 | 516 | 519.9 | 514 | 515.81 |

| 5.1 | 253 | 260 | 270.84 | 289 | 294.16 | 471 | 519.52 | 257 | 268.13 | 253 | 255.74 | 253 | 255.52 |

| 5.2 | 302 | 313 | 325.77 | 349 | 360.81 | 694 | 761.77 | 314 | 323.06 | 308 | 309.81 | 302 | 306.68 |

| 5.3 | 226 | 229 | 237 | 249 | 252.81 | 419 | 476.16 | 228 | 234.52 | 228 | 228.68 | 226 | 227.32 |

| 5.4 | 242 | 243 | 248.53 | 261 | 264.45 | 368 | 589.12 | 302 | 317.22 | 242 | 242.23 | 242 | 243.29 |

| 5.5 | 211 | 214 | 221.10 | 241 | 244.97 | 358 | 475.74 | 235 | 247.54 | 211 | 212.03 | 211 | 213.90 |

| 5.6 | 213 | 221 | 233.32 | 250 | 253.84 | 383 | 469.61 | 222 | 234 | 213 | 215.10 | 213 | 213.04 |

| 5.7 | 293 | 301 | 311.84 | 327 | 332.84 | 558 | 622.35 | 302 | 311.52 | 293 | 297.68 | 293 | 295.35 |

| 5.8 | 288 | 298 | 307.87 | 338 | 345.74 | 545 | 631.48 | 294 | 307.29 | 288 | 290.35 | 288 | 290.39 |

| 5.9 | 279 | 281 | 303.13 | 315 | 329.26 | 4412 | 506.06 | 301 | 387.31 | 279 | 280.52 | 279 | 279.90 |

| 5.10 | 265 | 271 | 276.39 | 294 | 297.97 | 497 | 573.84 | 268 | 275.19 | 267 | 268.77 | 265 | 267.97 |

| 6.1 | 138 | 145 | 151.55 | 159 | 164.97 | 701 | 874.98 | 144 | 148.90 | 140 | 142.90 | 140 | 143.42 |

| 6.2 | 146 | 151 | 157.61 | 166 | 169.19 | 803 | 941.94 | 148 | 156.29 | 146 | 148.87 | 146 | 149.71 |

| 6.3 | 154 | 148 | 155.68 | 160 | 166.71 | 788 | 892.10 | 148 | 152.84 | 147 | 148.16 | 145 | 149.65 |

| 6.4 | 131 | 132 | 137 | 139 | 141.81 | 814 | 912.32 | 139 | 150.01 | 131 | 131.68 | 131 | 133.03 |

| 6.5 | 161 | 170 | 180.42 | 183 | 192 | 816 | 916.68 | 165 | 177.97 | 163 | 164.68 | 162 | 165.39 |

| AVG | 336.08 | 340.80 | 353.72 | 373.20 | 380.81 | 854.32 | 809.68 | 347.28 | 362.93 | 336.80 | 339.44 | 335.84 | 338.25 |

| a.1 | 253 | 257 | 262.13 | 270 | 273.10 | 903 | 1039.42 | 256 | 262.06 | 254 | 255.55 | 254 | 256 |

| a.2 | 252 | 261 | 274.94 | 301 | 305.19 | 861 | 980.68 | 264 | 271.61 | 257 | 259.48 | 256 | 260.65 |

| a.3 | 232 | 241 | 249.94 | 255 | 257.55 | 812 | 924.23 | 242 | 249.35 | 235 | 237.10 | 233 | 237.35 |

| a.4 | 234 | 243 | 251.97 | 246 | 268.48 | 828 | 921.84 | 240 | 249.87 | 235 | 237.39 | 236 | 239.97 |

| a.5 | 236 | 238 | 242.10 | 260 | 264.74 | 846 | 926.26 | 237 | 241.97 | 236 | 237.06 | 236 | 237.52 |

| b.1 | 69 | 74 | 80.68 | 82 | 84.90 | 962 | 1110.10 | 73 | 80.58 | 74 | 79.61 | 69 | 76.48 |

| b.2 | 76 | 83 | 88.68 | 90 | 93.71 | 961 | 1083.71 | 81 | 88.68 | 83 | 90.03 | 81 | 86.81 |

| b.3 | 80 | 84 | 87.13 | 90 | 91.71 | 987 | 1087.36 | 86 | 89.78 | 84 | 88.55 | 82 | 88.81 |

| b.4 | 79 | 84 | 89.32 | 95 | 99 | 1105 | 1265.16 | 81 | 88.03 | 84 | 87.90 | 83 | 88.16 |

| b.5 | 72 | 74 | 79.61 | 80 | 84.13 | 1187 | 1398.65 | 80 | 81.27 | 72 | 77.68 | 78 | 76.03 |

| c.1 | 227 | 232 | 237.48 | 262 | 264.52 | 1098 | 1230.23 | 233 | 236.48 | 228 | 230.29 | 233 | 238.77 |

| c.2 | 219 | 225 | 236.58 | 256 | 262.45 | 1193 | 1351.26 | 225 | 232.90 | 226 | 222.71 | 226 | 232.74 |

| c.3 | 243 | 257 | 270.03 | 298 | 301.10 | 1466 | 1621.74 | 262 | 270.23 | 254 | 256.68 | 253 | 264 |

| c.4 | 219 | 230 | 242.74 | 256 | 259.45 | 1220 | 1346.16 | 233 | 243.19 | 225 | 227.97 | 224 | 233.10 |

| c.5 | 215 | 218 | 225.13 | 243 | 249.65 | 1118 | 1289.65 | 219 | 225.03 | 215 | 216.35 | 222 | 230.42 |

| d.1 | 60 | 63 | 68.61 | 79 | 70.90 | 1367 | 1563.39 | 61 | 66.58 | 66 | 66 | 68 | 79.65 |

| d.2 | 66 | 70 | 74.26 | 77 | 78.35 | 1415 | 1757.35 | 69 | 73.39 | 71 | 71 | 73 | 89.71 |

| d.3 | 72 | 78 | 81 | 85 | 86.71 | 1665 | 1942.03 | 78 | 81.13 | 82 | 82 | 82 | 100.84 |

| d.4 | 66 | 67 | 68.68 | 71 | 71.90 | 1458 | 1610 | 65 | 67.84 | 67 | 67 | 70 | 81.74 |

| d.5 | 61 | 64 | 67.94 | 69 | 69.84 | 1443 | 1599.94 | 63 | 66.87 | 66 | 66 | 72 | 81.29 |

| AVG | 151.55 | 126.86 | 133.19 | 142.20 | 144.55 | 1243 | 1417.11 | 127.26 | 132.79 | 126.46 | 128.65 | 127.73 | 136.57 |

| nre.1 | 29 | 30 | 33.45 | 32 | 33 | 2224 | 2478.47 | 35 | 36.21 | 30 | 30 | 70 | 80.03 |

| nre.2 | 30 | 33 | 37.26 | 35 | 37.10 | 2535 | 2724.68 | 32 | 36 | 34 | 34 | 83 | 128.32 |

| nre.3 | 27 | 30 | 33.65 | 33 | 34.29 | 2016 | 2332.48 | 30 | 32.87 | 34 | 34 | 74 | 112.35 |

| nre.4 | 28 | 32 | 34.58 | 34 | 34 | 2386 | 2632.77 | 31 | 34.29 | 33 | 34 | 83 | 177.45 |

| nre.5 | 28 | 30 | 33.16 | 33 | 33.94 | 2573 | 2762.35 | 28 | 32.03 | 30 | 30 | 92 | 150.52 |

| nrf.1 | 14 | 15 | 19.35 | 16 | 17 | 1348 | 1565.77 | 15 | 17.87 | 17 | 17 | 372 | 444.19 |

| nrf.2 | 15 | 16 | 18.77 | 18 | 18 | 1265 | 1442.90 | 16 | 18.10 | 18 | 18 | 74 | 404.58 |

| nrf.3 | 14 | 17 | 20.29 | 19 | 19.68 | 1578 | 1739.68 | 16 | 19.52 | 19 | 19 | 335 | 487.25 |

| nrf.4 | 14 | 17 | 19.06 | 17 | 18.68 | 1386 | 1546.10 | 15 | 17.97 | 18 | 18 | 52 | 420.52 |

| nrf.5 | 13 | 16 | 18.74 | 16 | 16.26 | 1229 | 1367.84 | 15 | 17.61 | 16 | 16 | 65 | 371.03 |

| nrg.1 | 176 | 585 | 702 | 231 | 233.42 | 6483 | 7083.65 | 200 | 210.65 | 197 | 197 | 287 | 385.13 |

| nrg.2 | 154 | 441 | 506.13 | 186 | 190.19 | 5619 | 5953.74 | 166 | 177 | 168 | 168 | 208 | 284.29 |

| nrg.3 | 166 | 484 | 570.19 | 196 | 198.16 | 5920 | 6475.87 | 180 | 188.23 | 183 | 183 | 211 | 347.23 |

| nrg.4 | 168 | 506 | 571.71 | 217 | 219.74 | 5971 | 6549.42 | 185 | 196.81 | 186 | 186 | 250 | 345.77 |

| nrg.5 | 168 | 515 | 620.45 | 213 | 218.13 | 6572 | 6799.97 | 186 | 198.19 | 186 | 186 | 230 | 353.97 |

| nrh.1 | 63 | 2043 | 2305.06 | 83 | 83.97 | 11,391 | 12,133.23 | 119 | 156.13 | 77 | 77 | t.o. | t.o. |

| nrh.2 | 63 | 1942 | 2188.55 | 81 | 81.77 | 11,644 | 12,162.52 | 112 | 141.13 | 71 | 71 | t.o. | t.o. |

| nrh.3 | 59 | 1870 | 2244.55 | 75 | 75 | 11,169 | 11,969.55 | 100 | 141.81 | 69 | 69 | t.o. | t.o. |

| nrh.4 | 58 | 2102 | 2259.94 | 74 | 75.10 | 11,577 | 12,029.13 | 109 | 143.13 | 68 | 68 | t.o. | t.o. |

| nrh.5 | 55 | 1778 | 2010.26 | 67 | 68.90 | 10,952 | 11,382.81 | 93 | 118.71 | 61 | 61 | t.o. | t.o. |

| AVG | 67.10 | 625.10 | 712.35 | 83.80 | 85.31 | 5291.90 | 5656.64 | 84.15 | 96.71 | 75.75 | 75.80 | t.o. | t.o. |

Table A4.

CSA/SCP—SShape 4—Two-steps vs. KMeans vs. DBscan (t.o = time out).

| Instance | BKS | S4+Stand. MIN | S4+Stand. AVG | S4+Comp. MIN | S4+Comp. AVG | S4+Static MIN | S4+Static AVG | S4+Elitist MIN | S4+Elitist AVG | KMeans MIN | KMeans AVG | DBscan MIN | DBscan AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4.1 | 429 | 432 | 440.45 | 456 | 461.06 | 440 | 455.10 | 429 | 430.61 | 429 | 430 | 429 | 430.38 |

| 4.2 | 512 | 515 | 542.77 | 571 | 583.74 | 561 | 598.77 | 512 | 515.74 | 513 | 515.84 | 512 | 514.77 |

| 4.3 | 516 | 516 | 530.25 | 554 | 564.32 | 523 | 587.21 | 516 | 518.87 | 516 | 520.61 | 516 | 518.87 |

| 4.4 | 494 | 507 | 517.45 | 541 | 553.48 | 518 | 551.81 | 494 | 500.10 | 495 | 498.87 | 494 | 496.10 |

| 4.5 | 512 | 516 | 527.71 | 551 | 568.74 | 547 | 578.55 | 512 | 514.61 | 514 | 514.90 | 512 | 513.23 |

| 4.6 | 560 | 569 | 586.65 | 612 | 624.77 | 607 | 643.74 | 560 | 563.61 | 560 | 562.35 | 560 | 560.19 |

| 4.7 | 430 | 434 | 444.84 | 483 | 491.55 | 451 | 487.13 | 430 | 432.26 | 430 | 432.26 | 430 | 430.81 |

| 4.8 | 492 | 493 | 508.06 | 529 | 539 | 516 | 558.61 | 492 | 494.19 | 493 | 496.13 | 492 | 494.10 |

| 4.9 | 641 | 668 | 682.74 | 728 | 743.71 | 705 | 766.61 | 647 | 654.68 | 645 | 657.90 | 641 | 647.50 |

| 4.10 | 514 | 522 | 541.81 | 564 | 576.52 | 558 | 589.06 | 514 | 517.19 | 516 | 519.9 | 514 | 515.81 |

| 5.1 | 253 | 254 | 268.74 | 288 | 294.55 | 279 | 298.19 | 253 | 256.74 | 253 | 255.74 | 253 | 255.52 |

| 5.2 | 302 | 315 | 326.68 | 349 | 360.74 | 335 | 368.16 | 302 | 308.39 | 308 | 309.81 | 302 | 306.68 |

| 5.3 | 226 | 228 | 235.97 | 246 | 252.32 | 244 | 261.71 | 226 | 227.32 | 228 | 228.68 | 226 | 227.32 |

| 5.4 | 242 | 242 | 247.74 | 262 | 264.87 | 268 | 278.36 | 243 | 246.23 | 242 | 242.23 | 242 | 243.29 |

| 5.5 | 211 | 212 | 220.29 | 241 | 245.32 | 250 | 238.07 | 212 | 214.32 | 211 | 212.03 | 211 | 213.90 |

| 5.6 | 213 | 223 | 232.84 | 247 | 253.65 | 236 | 260.35 | 213 | 213.90 | 213 | 215.10 | 213 | 213.04 |

| 5.7 | 293 | 301 | 311.48 | 327 | 331.61 | 322 | 344.13 | 293 | 295.03 | 293 | 297.68 | 293 | 295.35 |

| 5.8 | 288 | 296 | 309.32 | 336 | 344.90 | 323 | 348.68 | 288 | 289.90 | 288 | 290.35 | 288 | 290.39 |

| 5.9 | 279 | 279 | 300.32 | 314 | 329.32 | 280 | 301.24 | 280 | 281.34 | 279 | 280.52 | 279 | 279.90 |

| 5.10 | 265 | 272 | 276.23 | 292 | 297.48 | 288 | 306.77 | 265 | 267.87 | 267 | 268.77 | 265 | 267.97 |

| 6.1 | 138 | 145 | 150.90 | 158 | 164.29 | 157 | 183.84 | 138 | 143.13 | 140 | 142.90 | 140 | 143.42 |

| 6.2 | 146 | 150 | 155.58 | 165 | 168.71 | 180 | 225.68 | 146 | 150.61 | 146 | 148.87 | 146 | 149.71 |

| 6.3 | 154 | 148 | 154.19 | 162 | 166.77 | 170 | 213.39 | 145 | 150.32 | 147 | 148.16 | 145 | 149.65 |

| 6.4 | 131 | 133 | 136.55 | 138 | 141.32 | 148 | 154.24 | 132 | 134.23 | 131 | 131.68 | 131 | 133.03 |

| 6.5 | 161 | 171 | 179.71 | 187 | 191.94 | 208 | 239.29 | 161 | 167 | 163 | 164.68 | 162 | 165.39 |

| AVG | 336.08 | 341.64 | 353.17 | 372.04 | 380.58 | 364.56 | 393.54 | 336.12 | 339.52 | 336.80 | 339.43 | 335.84 | 338.25 |

| a.1 | 253 | 253 | 257.65 | 270 | 273.13 | 322 | 367.97 | 254 | 257.26 | 254 | 255.55 | 254 | 256 |

| a.2 | 252 | 262 | 272.58 | 299 | 304.87 | 326 | 381.03 | 252 | 260.13 | 257 | 259.48 | 256 | 260.65 |

| a.3 | 232 | 242 | 249.81 | 255 | 257.81 | 310 | 348.26 | 232 | 236.52 | 235 | 237.10 | 233 | 237.35 |

| a.4 | 234 | 241 | 250.10 | 264 | 267.94 | 299 | 348.39 | 235 | 240.65 | 235 | 237.39 | 236 | 239.97 |

| a.5 | 236 | 239 | 243.87 | 261 | 266.13 | 295 | 350.74 | 236 | 237.74 | 236 | 237.06 | 236 | 237.52 |

| b.1 | 69 | 74 | 80.16 | 82 | 85 | 176 | 246.52 | 69 | 72.97 | 74 | 79.61 | 69 | 76.48 |

| b.2 | 76 | 79 | 87.81 | 92 | 93.74 | 183 | 261.26 | 77 | 81.45 | 83 | 90.03 | 81 | 86.81 |

| b.3 | 80 | 81 | 86.06 | 90 | 91.58 | 174 | 257.03 | 81 | 82.34 | 84 | 88.55 | 82 | 88.81 |

| b.4 | 79 | 85 | 89.68 | 95 | 99.13 | 161 | 297.45 | 79 | 82.55 | 84 | 87.90 | 83 | 88.16 |

| b.5 | 72 | 72 | 78.58 | 82 | 83.90 | 189 | 247.98 | 73 | 74.32 | 72 | 77.68 | 78 | 76.03 |

| c.1 | 227 | 231 | 237.90 | 262 | 264.55 | 371 | 424.48 | 227 | 232.77 | 228 | 230.29 | 233 | 238.77 |

| c.2 | 219 | 224 | 234.55 | 258 | 262.90 | 372 | 461.16 | 220 | 225.10 | 226 | 222.71 | 226 | 232.74 |

| c.3 | 243 | 258 | 270.42 | 294 | 300.68 | 434 | 560.23 | 243 | 252.81 | 254 | 256.68 | 253 | 264 |

| c.4 | 219 | 233 | 241.48 | 256 | 259.55 | 353 | 445.23 | 219 | 225.74 | 225 | 227.97 | 224 | 233.10 |

| c.5 | 215 | 219 | 225.55 | 245 | 250.42 | 374 | 444.32 | 216 | 220.55 | 215 | 216.35 | 222 | 230.42 |

| d.1 | 60 | 63 | 67.97 | 69 | 70.74 | 376 | 440.77 | 60 | 63 | 66 | 66 | 68 | 79.65 |

| d.2 | 66 | 70 | 74.32 | 77 | 78.45 | 397 | 493.06 | 66 | 68.39 | 71 | 71 | 73 | 89.71 |

| d.3 | 72 | 76 | 81.32 | 85 | 86.58 | 439 | 560.29 | 72 | 75.52 | 82 | 82 | 82 | 100.84 |

| d.4 | 66 | 67 | 68.42 | 71 | 71.81 | 319 | 450.90 | 62 | 65.58 | 67 | 67 | 70 | 81.74 |

| d.5 | 61 | 62 | 68 | 69 | 69.94 | 317 | 422.81 | 61 | 63.84 | 66 | 66 | 72 | 81.29 |

| AVG | 151.55 | 156.55 | 163.31 | 173.80 | 176.94 | 309.35 | 390.49 | 151.70 | 155.96 | 155.70 | 157.81 | 156.55 | 164 |

| nre.1 | 29 | 30 | 34.23 | 32 | 32.84 | 687 | 748.32 | 31 | 31.65 | 30 | 30 | 70 | 80.03 |

| nre.2 | 30 | 36 | 39.94 | 35 | 37.26 | 741 | 904.42 | 31 | 33.55 | 34 | 34 | 83 | 128.32 |

| nre.3 | 27 | 31 | 34.45 | 33 | 34.58 | 622 | 751.81 | 27 | 29.77 | 34 | 34 | 74 | 112.35 |

| nre.4 | 28 | 33 | 35.52 | 34 | 34 | 688 | 860.13 | 28 | 30.81 | 33 | 34 | 83 | 177.45 |

| nre.5 | 28 | 31 | 36.13 | 33 | 33.94 | 781 | 936.06 | 28 | 29.74 | 30 | 30 | 92 | 150.52 |

| nrf.1 | 14 | 15 | 18.58 | 17 | 17.03 | 362 | 444 | 14 | 15.65 | 17 | 17 | 372 | 444.19 |

| nrf.2 | 15 | 16 | 19.26 | 17 | 17.97 | 268 | 394.61 | 15 | 16.19 | 18 | 18 | 74 | 404.58 |

| nrf.3 | 14 | 17 | 19.61 | 19 | 19.68 | 355 | 500 | 15 | 16.61 | 19 | 19 | 335 | 487.25 |

| nrf.4 | 14 | 15 | 18.19 | 18 | 18.61 | 334 | 429.77 | 15 | 15.71 | 18 | 18 | 52 | 420.52 |

| nrf.5 | 13 | 16 | 18.35 | 16 | 16.10 | 272 | 377.81 | 14 | 15 | 16 | 16 | 65 | 371.03 |

| nrg.1 | 176 | 1071 | 1186.32 | 229 | 234.16 | 2757 | 3176.94 | 179 | 189.55 | 197 | 197 | 287 | 385.13 |

| nrg.2 | 154 | 758 | 861.84 | 189 | 190.58 | 2226 | 2669.29 | 160 | 166.23 | 168 | 168 | 208 | 284.29 |

| nrg.3 | 166 | 850 | 1008.58 | 197 | 198.39 | 2574 | 2898.26 | 168 | 177.65 | 183 | 183 | 211 | 347.23 |

| nrg.4 | 168 | 860 | 1008.48 | 217 | 219.29 | 2569 | 2951.26 | 175 | 180.74 | 186 | 186 | 250 | 345.77 |

| nrg.5 | 168 | 974 | 1078.77 | 216 | 219.39 | 2732 | 3081.35 | 174 | 181.13 | 186 | 186 | 230 | 353.97 |

| nrh.1 | 63 | 3065 | 3318.32 | 82 | 84.10 | 5294 | 6065.26 | 71 | 74.19 | 77 | 77 | t.o. | t.o. |

| nrh.2 | 63 | 2962 | 3254.03 | 81 | 81.97 | 5486 | 5997.68 | 67 | 73.48 | 71 | 71 | t.o. | t.o. |

| nrh.3 | 59 | 3034 | 3253.35 | 75 | 75.39 | 4853 | 5984.23 | 64 | 69.03 | 69 | 69 | t.o. | t.o. |

| nrh.4 | 58 | 3010 | 3255.65 | 73 | 75.55 | 5002 | 6163.90 | 62 | 67.71 | 68 | 68 | t.o. | t.o. |

| nrh.5 | 55 | 2655 | 2934.16 | 68 | 69.10 | 4773 | 5589.06 | 58 | 62.45 | 61 | 61 | t.o. | t.o. |

| AVG | 67.10 | 973.95 | 1071.68 | 84.05 | 85.49 | 2168.80 | 2546.21 | 69.80 | 73.84 | 75.75 | 75.80 | t.o. | t.o. |
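
As a reading aid, the AVG summary rows in these tables appear to be plain column means over the instances of each group, which should not be confused with the per-instance AVG columns. The following minimal Python sketch, which is not part of the original experiments, reproduces two entries of the last AVG row of Table A4 from the nre.1 to nrh.5 rows. The helper names `column_mean` and `relative_gap` are illustrative, and the percentage gap to the BKS is an assumed convenience measure that is not reported in the table itself.

```python
from statistics import mean

# Values copied from the nre.1 ... nrh.5 rows of Table A4 (20 instances).
bks = [29, 30, 27, 28, 28, 14, 15, 14, 14, 13,
       176, 154, 166, 168, 168, 63, 63, 59, 58, 55]
kmeans_min = [30, 34, 34, 33, 30, 17, 18, 19, 18, 16,
              197, 168, 183, 186, 186, 77, 71, 69, 68, 61]


def column_mean(values):
    """Arithmetic mean of one table column, rounded to two decimals."""
    return round(mean(values), 2)


def relative_gap(found, best_known):
    """Percentage gap of a found value with respect to the best known one
    (hypothetical helper; this measure is an assumption, not a table column)."""
    return 100.0 * (found - best_known) / best_known


avg_bks = column_mean(bks)            # compare with the BKS entry of the AVG row
avg_kmeans = column_mean(kmeans_min)  # compare with the KMeans MIN entry of the AVG row
gap = round(relative_gap(avg_kmeans, avg_bks), 2)

print(avg_bks, avg_kmeans, gap)
```

Under these assumptions, the two means coincide with the tabulated values 67.10 and 75.75, which supports reading each AVG row as a group-wise column mean.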

Table A5.

CSA/SCP—VShape 1—Two-steps vs. KMeans vs. DBscan (t.o = time out).

| Instance | BKS | V1+Stand. MIN | V1+Stand. AVG | V1+Comp. MIN | V1+Comp. AVG | V1+Static MIN | V1+Static AVG | V1+Elitist MIN | V1+Elitist AVG | KMeans MIN | KMeans AVG | DBscan MIN | DBscan AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 4.1 | 429 | 639 | 699.94 | 454 | 463.13 | 647 | 723.19 | 540 | 600.03 | 429 | 430 | 429 | 430.38 |
| 4.2 | 512 | 959 | 1086.65 | 573 | 584.39 | 953 | 1127.10 | 742 | 881.45 | 513 | 515.84 | 512 | 514.77 |
| 4.3 | 516 | 1040 | 1172.58 | 665 | 754.21 | 754 | 845.32 | 701 | 732.21 | 516 | 520.61 | 516 | 518.87 |
| 4.4 | 494 | 869 | 985.39 | 539 | 553.61 | 817 | 986.74 | 723 | 792.35 | 495 | 498.87 | 494 | 496.10 |
| 4.5 | 512 | 925 | 1102.23 | 554 | 561.52 | 964 | 1143.55 | 824 | 904.32 | 514 | 514.90 | 512 | 513.23 |
| 4.6 | 560 | 1259 | 1357.42 | 614 | 624.45 | 1119 | 1371.90 | 939 | 1038.55 | 560 | 562.35 | 560 | 560.19 |
| 4.7 | 430 | 751 | 875.52 | 481 | 493.45 | 800 | 913.26 | 590 | 710.81 | 430 | 432.26 | 430 | 430.81 |
| 4.8 | 492 | 1010 | 1144.26 | 530 | 539.45 | 1028 | 1175.71 | 796 | 902.58 | 493 | 496.13 | 492 | 494.10 |
| 4.9 | 641 | 1315 | 1513.10 | 720 | 740.29 | 1192 | 1616.90 | 1051 | 1193.26 | 645 | 657.90 | 641 | 647.50 |
| 4.10 | 514 | 975 | 1108.10 | 566 | 572.26 | 987 | 1131.74 | 748 | 863.19 | 516 | 519.9 | 514 | 515.81 |
| 5.1 | 253 | 506 | 566.03 | 288 | 296.23 | 477 | 557.65 | 385 | 446.97 | 253 | 255.74 | 253 | 255.52 |
| 5.2 | 302 | 730 | 821.42 | 350 | 359.68 | 726 | 849.23 | 545 | 622.29 | 308 | 309.81 | 302 | 306.68 |
| 5.3 | 226 | 454 | 502.19 | 246 | 251.19 | 450 | 537.45 | 366 | 407.32 | 228 | 228.68 | 226 | 227.32 |
| 5.4 | 242 | 438 | 519.26 | 321 | 354.78 | 451 | 534.21 | 369 | 409.99 | 242 | 242.23 | 242 | 243.29 |
| 5.5 | 211 | 363 | 402.84 | 254 | 2987.54 | 458 | 587.32 | 370 | 411.21 | 211 | 212.03 | 211 | 213.90 |
| 5.6 | 213 | 435 | 490.16 | 248 | 254.16 | 454 | 516.84 | 354 | 397.32 | 213 | 215.10 | 213 | 213.04 |
| 5.7 | 293 | 565 | 658.52 | 329 | 336.03 | 518 | 666.68 | 449 | 526.74 | 293 | 297.68 | 293 | 295.35 |
| 5.8 | 288 | 598 | 668.61 | 337 | 345.42 | 585 | 676.74 | 483 | 527.29 | 288 | 290.35 | 288 | 290.39 |
| 5.9 | 279 | 580 | 673.10 | 398 | 401.05 | 547 | 654.21 | 489 | 531.07 | 279 | 280.52 | 279 | 279.90 |
| 5.10 | 265 | 539 | 608.13 | 291 | 296.48 | 555 | 615.87 | 398 | 472.52 | 267 | 268.77 | 265 | 267.97 |
| 6.1 | 138 | 625 | 702.68 | 161 | 165 | 628 | 744.58 | 413 | 504.45 | 140 | 142.90 | 140 | 143.42 |
| 6.2 | 146 | 708 | 1013.94 | 161 | 167.13 | 923 | 1047.35 | 576 | 694.87 | 146 | 148.87 | 146 | 149.71 |
| 6.3 | 154 | 783 | 946.39 | 198 | 209.19 | 849 | 1021.68 | 529 | 641.48 | 147 | 148.16 | 145 | 149.65 |
| 6.4 | 131 | 534 | 618.55 | 180 | 185.98 | 847 | 1035.65 | 584 | 601.25 | 131 | 131.68 | 131 | 133.03 |
| 6.5 | 161 | 860 | 1012.23 | 189 | 194.06 | 840 | 1009.48 | 548 | 682.58 | 163 | 164.68 | 162 | 165.39 |
| AVG | 336.08 | 738.40 | 849.97 | 385.88 | 507.63 | 742.76 | 883.61 | 580.48 | 659.84 | 336.80 | 339.44 | 335.84 | 338.25 |
| a.1 | 253 | 1030 | 1148.10 | 269 | 271.06 | 992 | 1181.42 | 703 | 810.94 | 254 | 255.55 | 254 | 256 |
| a.2 | 252 | 903 | 1068.74 | 294 | 303.16 | 950 | 1085.06 | 612 | 766.45 | 257 | 259.48 | 256 | 260.65 |
| a.3 | 232 | 838 | 998.48 | 254 | 258.10 | 951 | 1019.65 | 598 | 714.74 | 235 | 237.10 | 233 | 237.35 |
| a.4 | 234 | 908 | 995.32 | 263 | 266.90 | 925 | 1026.77 | 635 | 706.06 | 235 | 237.39 | 236 | 239.97 |
| a.5 | 236 | 930 | 1017.94 | 252 | 259.16 | 848 | 1043.42 | 653 | 732.81 | 236 | 237.06 | 236 | 237.52 |
| b.1 | 69 | 1092 | 1205.55 | 82 | 85.42 | 1091 | 1235.58 | 656 | 781.48 | 74 | 79.61 | 69 | 76.48 |
| b.2 | 76 | 1093 | 1215.06 | 92 | 93.65 | 1108 | 1225.10 | 639 | 798.13 | 83 | 90.03 | 81 | 86.81 |
| b.3 | 80 | 1375 | 1546.65 | 96 | 100.28 | 1153 | 1482.72 | 689 | 799.25 | 84 | 88.55 | 82 | 88.81 |
| b.4 | 79 | 1250 | 1380.39 | 95 | 100.19 | 1194 | 1402.32 | 713 | 914.65 | 84 | 87.90 | 83 | 88.16 |
| b.5 | 72 | 1108 | 1209.35 | 87 | 98.21 | 1201 | 1403.21 | 721 | 915.41 | 72 | 77.68 | 78 | 76.03 |
| c.1 | 227 | 1163 | 1349.45 | 258 | 262.32 | 1209 | 1358.77 | 804 | 925.77 | 228 | 230.29 | 233 | 238.77 |
| c.2 | 219 | 1356 | 1502.10 | 258 | 261.97 | 1311 | 1534.29 | 837 | 1018.81 | 226 | 222.71 | 226 | 232.74 |
| c.3 | 243 | 1660 | 1769.61 | 295 | 301.03 | 1675 | 1832.58 | 1069 | 1218.94 | 254 | 256.68 | 253 | 264 |
| c.4 | 219 | 1354 | 1476.10 | 255 | 258.23 | 1331 | 1500.71 | 854 | 1014 | 225 | 227.97 | 224 | 233.10 |
| c.5 | 215 | 1283 | 1403.81 | 240 | 246.81 | 1265 | 1448.26 | 757 | 980.29 | 215 | 216.35 | 222 | 230.42 |
| d.1 | 60 | 1494 | 1743.97 | 69 | 70.58 | 1602 | 1790.90 | 942 | 1139.16 | 66 | 66 | 68 | 79.65 |
| d.2 | 66 | 1832 | 1982.32 | 76 | 77.90 | 1784 | 2016.32 | 1121 | 1287.32 | 71 | 71 | 73 | 89.71 |
| d.3 | 72 | 1955 | 2154.87 | 83 | 86.45 | 1985 | 2192.74 | 1187 | 1421.10 | 82 | 82 | 82 | 100.84 |
| d.4 | 66 | 1549 | 1779.29 | 68 | 76.26 | 1634 | 1786.94 | 1045 | 1184.48 | 67 | 67 | 70 | 81.74 |
| d.5 | 61 | 1628 | 1772.13 | 69 | 69.55 | 1542 | 1781.55 | 963 | 1165.39 | 66 | 66 | 72 | 81.29 |
| AVG | 151.55 | 1290.05 | 1435.96 | 172.75 | 177.36 | 1287.55 | 1467.41 | 809.90 | 964.76 | 155.70 | 157.82 | 156.55 | 164 |
| nre.1 | 29 | 2335 | 2506.26 | 31 | 39.36 | 2501 | 2975.21 | 1625 | 1825.21 | 30 | 30 | 70 | 80.03 |
| nre.2 | 30 | 2782 | 3022.13 | 35 | 37.77 | 2687 | 3002.68 | 1716 | 1929.81 | 34 | 34 | 83 | 128.32 |
| nre.3 | 27 | 2244 | 2547.81 | 32 | 34.61 | 2263 | 2628.39 | 1507 | 1700.26 | 34 | 34 | 74 | 112.35 |
| nre.4 | 28 | 2763 | 2912 | 34 | 34 | 2681 | 2944.87 | 1663 | 1917.81 | 33 | 34 | 83 | 177.45 |
| nre.5 | 28 | 2850 | 3078.26 | 33 | 33.97 | 2728 | 3036.26 | 1674 | 2017.84 | 30 | 30 | 92 | 150.52 |
| nrf.1 | 14 | 1570 | 1722.10 | 17 | 17 | 1566 | 1782.81 | 986 | 1139.03 | 17 | 17 | 372 | 444.19 |
| nrf.2 | 15 | 1376 | 1566 | 18 | 18 | 1450 | 1578.03 | 931 | 1063.00 | 18 | 18 | 74 | 404.58 |
| nrf.3 | 14 | 1733 | 1933.13 | 19 | 19.84 | 1716 | 1903.77 | 1126 | 1275.97 | 19 | 19 | 335 | 487.25 |
| nrf.4 | 14 | 1543 | 1729.35 | 18 | 18.90 | 1489 | 1718.84 | 1019 | 1134.26 | 18 | 18 | 52 | 420.52 |
| nrf.5 | 13 | 1334 | 1488.71 | 16 | 16.29 | 1332 | 1500.13 | 820 | 988.39 | 16 | 16 | 65 | 371.03 |
| nrg.1 | 176 | 7156 | 7696.32 | 230 | 232.68 | 7405 | 7914.90 | 4673 | 5041.81 | 197 | 197 | 287 | 385.13 |
| nrg.2 | 154 | 6109 | 6442.68 | 185 | 189.29 | 6155 | 6470.32 | 3935 | 4351.13 | 168 | 168 | 208 | 284.29 |
| nrg.3 | 166 | 6507 | 7098.19 | 195 | 197.42 | 6542 | 7166.68 | 4375 | 4740.77 | 183 | 183 | 211 | 347.23 |
| nrg.4 | 168 | 6460 | 7072.45 | 215 | 218.19 | 6792 | 7302.13 | 4086 | 4713.61 | 186 | 186 | 250 | 345.77 |
| nrg.5 | 168 | 6837 | 7458.77 | 214 | 216.81 | 6712 | 7387.65 | 4416 | 4919.71 | 186 | 186 | 230 | 353.97 |
| nrh.1 | 63 | 12,515 | 13,137.26 | 82 | 83.71 | 12,574 | 13,310.87 | 8001 | 8869 | 77 | 77 | t.o. | t.o. |
| nrh.2 | 63 | 12,381 | 13,125.45 | 81 | 81.94 | 12,227 | 13,093.87 | 7802 | 8797.23 | 71 | 71 | t.o. | t.o. |
| nrh.3 | 59 | 12,241 | 13,122.81 | 74 | 74.97 | 12,180 | 13,132.84 | 7957 | 8662.29 | 69 | 69 | t.o. | t.o. |
| nrh.4 | 58 | 12,464 | 13,209.45 | 73 | 74.97 | 12,097 | 12,974.87 | 7934 | 8595.19 | 68 | 68 | t.o. | t.o. |
| nrh.5 | 55 | 11,626 | 12,348.52 | 67 | 68.55 | 11,558 | 12,356.23 | 7339 | 8103.61 | 61 | 61 | t.o. | t.o. |
| AVG | 67.10 | 5741.30 | 6160.88 | 83.45 | 85.41 | 5732.75 | 6209.07 | 3679.25 | 4089.29 | 75.75 | 75.80 | t.o. | t.o. |

Table A6.

CSA/SCP—VShape 2—Two-steps vs. KMeans vs. DBscan (t.o = time out).

| Instance | BKS | V2+Stand. | V2+Comp. | V2+Static | V2+Elitist | KMeans | DBscan | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|