Abstract

Two inertial subgradient extragradient algorithms for solving variational inequality problems involving pseudomonotone operators are proposed in this article. The iterative schemes use self-adaptive step sizes that do not require prior knowledge of the Lipschitz constant of the underlying operator. Furthermore, under mild assumptions, we show the weak and strong convergence of the sequences generated by the proposed algorithms. The strong convergence of the second algorithm follows from the use of the viscosity method. Numerical experiments in both finite- and infinite-dimensional spaces are reported to illustrate the inertial effect and the computational performance of the proposed algorithms in comparison with existing state-of-the-art algorithms.

Keywords:

variational inequality problem; Lipschitz-type conditions; viscosity method; subgradient extragradient method; pseudomonotone operator

MSC:

47H05; 47J20; 47J25; 65K15

1. Introduction

This paper considers the problem of finding a point such that

where is a nonempty closed convex subset of a real Hilbert space and is an operator on . The variational inequality (VI) problem, Equation (1), is a fundamental problem in optimization theory with applications in many areas, such as transportation problems, equilibrium, economics and engineering (Refs. [1,2,3,4,5,6,7,8,9,10,11,12,13,14]).
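As a small illustration of what solving a VI means, the sketch below uses the standard fact that a point solves a VI over a closed convex set exactly when it is a fixed point of the map x ↦ P(x − λA(x)) for any λ > 0, where P is the metric projection onto the feasible set. The feasible set [0, 1], the operator A(x) = x − 2, and all function names are assumptions chosen for illustration; they are not taken from the article.

```python
def project_interval(x, lo=0.0, hi=1.0):
    """Metric projection of a real number onto the interval [lo, hi]."""
    return min(max(x, lo), hi)


def A(x):
    """Illustrative operator (an assumption, not from the article)."""
    return x - 2.0


def natural_residual(x, lam=0.5):
    """|x - P(x - lam*A(x))|, which vanishes exactly at solutions of the VI."""
    return abs(x - project_interval(x - lam * A(x)))


candidates = [0.0, 0.5, 1.0]
residuals = {x: natural_residual(x) for x in candidates}

# Direct check of the inequality A(x*) * (v - x*) >= 0 on a grid of [0, 1].
x_star = 1.0
grid = [i / 100.0 for i in range(101)]
vi_holds = all(A(x_star) * (v - x_star) >= 0.0 for v in grid)

print(residuals, vi_holds)
```

In this toy problem only the boundary point 1 has zero residual, which matches the direct check of the inequality on the grid.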

There are many approaches to the VI problem, the basic ones being the regularization and projection methods. Many studies have been carried out and several algorithms have been proposed (Refs. [5,15,16,17,18,19,20,21,22,23,24]). In this study, we are interested in the projection method.

The main iterative scheme in this study is given by

where is the inertial parameter with two different updating rules. The step size is defined to be updated self-adaptively according to a new step size rule.

We now discuss the relationship between our scheme and the existing algorithms. The iterative scheme Equation (2) with a fixed step size is exactly the scheme proposed in [25]; without the inertial step, it reduces to the original subgradient extragradient scheme proposed by Censor et al. [26], where the underlying operator is monotone. The subgradient extragradient method has been studied, modified and improved by several researchers to produce variant methods. Most of these modifications use a fixed step size that depends on constants of the underlying operator, such as the (inverse) strong monotonicity modulus and the Lipschitz constant. Therefore, algorithms with a fixed step size require prior knowledge of such constants to be implemented. When these constants are difficult to compute or do not exist, such algorithms may be impossible to implement.
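To make the structure of such schemes concrete, the following is a minimal sketch of an inertial subgradient extragradient iteration with a fixed step size, written in the form commonly found in the literature: extrapolate, project onto the feasible set, then project onto a half-space containing it. It is not a transcription of Equation (2). The box feasible set, the affine operator, the parameter values `lam` and `alpha`, and all helper names are assumptions made for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def add_scaled(u, t, v):
    """Return u + t*v."""
    return [a + t * b for a, b in zip(u, v)]


def project_box(x, lo=-1.0, hi=1.0):
    """Projection onto the box [lo, hi]^n (the assumed feasible set)."""
    return [min(max(xi, lo), hi) for xi in x]


def project_halfspace(x, a, y):
    """Projection of x onto the half-space {z : <a, z - y> <= 0}."""
    violation = dot(a, sub(x, y))
    aa = dot(a, a)
    if violation <= 0.0 or aa == 0.0:
        return list(x)
    return add_scaled(x, -violation / aa, a)


def A(x):
    # Assumed affine operator M x + q with M = [[1, 2], [-2, 1]];
    # M^T M = 5 I, so the Lipschitz constant is sqrt(5).
    return [x[0] + 2.0 * x[1] + 0.5, -2.0 * x[0] + x[1] + 1.5]


def inertial_seg(x0, lam=0.2, alpha=0.1, iters=300):
    x_prev, x = list(x0), list(x0)
    for _ in range(iters):
        w = add_scaled(x, alpha, sub(x, x_prev))    # inertial extrapolation
        u = add_scaled(w, -lam, A(w))
        y = project_box(u)                          # projection onto the feasible set
        a = sub(u, y)                               # normal vector of the half-space
        v = add_scaled(w, -lam, A(y))
        x_prev, x = x, project_halfspace(v, a, y)   # projection onto the half-space
    return x


x_star = [0.5, -0.5]   # A(x_star) = 0 and x_star lies inside the box
x_final = inertial_seg([-1.0, 1.0])
error = math.sqrt(dot(sub(x_final, x_star), sub(x_final, x_star)))
print(x_final, error)
```

Note that the fixed step `lam = 0.2` was chosen below 1/sqrt(5), which requires knowing the Lipschitz constant in advance; this is the dependence that the self-adaptive rule is meant to remove.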

Recently, Yang et al. [27] proposed a modified subgradient algorithm with a variable step size, which does not require knowledge of the Lipschitz constant, for solving Equation (1). However, they assumed the underlying operator to be monotone, and the generated sequence converges only weakly. Interested readers may refer to some recent articles that propose algorithms with variable step sizes that are independent of such constants of the underlying operator, including the Lipschitz constant (Refs. [28,29,30,31]). The question now is: can we have an iterative scheme that involves a more general class of operators, converges strongly and uses a self-adaptive step size? We provide a positive answer to this question.

Inspired and motivated by [26,27], we propose a self-adaptive subgradient extragradient algorithm by incorporating the inertial extrapolation step into the subgradient extragradient scheme. The aim of this modification is to obtain a self-adaptive scheme with fast convergence properties involving a more general class of operators. Moreover, we present a strongly convergent version of the proposed algorithm by incorporating a viscosity method. The proposed schemes do not require prior knowledge of the Lipschitz constant of the operator. Furthermore, we present numerical experiments in finite- and infinite-dimensional spaces to illustrate the performance and the effect of the inertial step in comparison with existing algorithms in the literature.

The outline of this work is as follows. In the next section, we give some definitions and lemmas that are used in our convergence analysis. We present the convergence analysis of the proposed schemes in Section 3. Lastly, in Section 4, we illustrate the inertial effect and the computational performance of our algorithms with some examples.

2. Preliminaries

This section recalls some known facts and necessary tools that we need for the convergence analysis of our method. Throughout this article, is a real Hilbert space with inner product and norm denoted respectively as and , is a nonempty closed and convex subset of . The notation is used to indicate that, respectively, the sequence converges weakly (strongly) to u. The following is known to hold in a Hilbert space:

for every [32].

Definition 1.

Let be a mapping defined on a real Hilbert space . is said to be:

- (1) pseudomonotone if

- (2) sequentially weakly continuous on if then
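For intuition about item (1), recall the standard definitions: an operator A is monotone if ⟨A(x) − A(y), x − y⟩ ≥ 0 for all x, y, and pseudomonotone if ⟨A(x), y − x⟩ ≥ 0 implies ⟨A(y), y − x⟩ ≥ 0. The sketch below checks both conditions numerically on a grid for the scalar function A(x) = 1/(1 + x²), which is an assumed textbook-style example and not one of the article's test problems. Because this function is strictly positive, the pseudomonotone implication always holds, while monotonicity fails wherever the function decreases.

```python
def A(x):
    """Assumed scalar example: positive everywhere, decreasing for x > 0."""
    return 1.0 / (1.0 + x * x)


grid = [i / 4.0 for i in range(-20, 21)]
pairs = [(x, y) for x in grid for y in grid]

# Pairs violating monotonicity: (A(x) - A(y)) * (x - y) < 0.
monotone_violations = [(x, y) for x, y in pairs
                       if (A(x) - A(y)) * (x - y) < 0.0]

# Pairs violating pseudomonotonicity: premise holds but conclusion fails.
pseudo_violations = [(x, y) for x, y in pairs
                     if A(x) * (y - x) >= 0.0 and A(y) * (y - x) < 0.0]

print(len(monotone_violations), len(pseudo_violations))
```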

Lemma 1.

[32] For any closed and convex and a metric projection from onto . for any , ⇔

Lemma 2.

[33] For any closed and convex, for every u in , the following holds

- i. for all ,

- ii. for all .

For more properties of the projection, the interested reader may consult [33].
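Two standard properties of the metric projection P onto a closed convex set, of the kind used in such lemmas, are ⟨x − P(x), y − P(x)⟩ ≤ 0 and ‖P(x) − y‖² ≤ ‖x − y‖² − ‖x − P(x)‖² for every y in the set. The sketch below checks both numerically for the closed unit ball in three dimensions. The choice of set, the sampling ranges and the function names are assumptions for illustration; the statements in the lemmas above may be phrased differently.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def project_ball(x, radius=1.0):
    """Projection onto the closed ball of the given radius centred at 0."""
    n = math.sqrt(dot(x, x))
    return list(x) if n <= radius else [radius * xi / n for xi in x]


rng = random.Random(0)
worst_angle = float("-inf")   # largest value of <x - P(x), y - P(x)>
worst_gap = float("-inf")     # largest value of ||P(x)-y||^2 - ||x-y||^2 + ||x-P(x)||^2
for _ in range(1000):
    x = [rng.uniform(-3.0, 3.0) for _ in range(3)]
    y = project_ball([rng.uniform(-3.0, 3.0) for _ in range(3)])  # a point of the ball
    p = project_ball(x)
    worst_angle = max(worst_angle, dot(sub(x, p), sub(y, p)))
    gap = (dot(sub(p, y), sub(p, y)) - dot(sub(x, y), sub(x, y))
           + dot(sub(x, p), sub(x, p)))
    worst_gap = max(worst_gap, gap)

print(worst_angle, worst_gap)
```

Both reported quantities should be nonpositive up to rounding error.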

Lemma 3.

[34] Let be a continuous and pseudomonotone mapping. Then if and only if is a solution of the problem of finding such that

Lemma 4.

[35] Suppose , and be sequences in such that for all ,

and there exists with for all Then the following are satisfied:

- (i) , where ;

- (ii) there exists with .

Lemma 5.

[36] Let be a nonempty set and a sequence in such that the following are satisfied:

- (a) for every exists;

- (b) every weak sequential cluster point of is in E.

Then converges weakly in

Lemma 6.

Let be a nonnegative real number sequence, be a sequence of real numbers in with and be a sequence of real numbers satisfying

If for every subsequence of satisfying then

3. A Self-Adaptive Subgradient Extragradient Scheme for Variational Inequality Problem

In this section, we give a detailed description of our proposed algorithms. First, we present the weak convergence analysis of the iterates generated by the algorithm to a solution of the VI problem Equation (1) involving a pseudomonotone operator. We make the following assumptions for the analysis of our method.

Assumption 1.

Remark 1.

For it can be observed from Equation (5) that for all This implies that

Algorithm 1. Adaptive Subgradient Extragradient Algorithm for Pseudomonotone Operator.

Lemma 7.

The sequence generated by Equation (6) is monotonically decreasing and bounded from below by

Proof.

It can be observed that the sequence is monotonically decreasing. Since is a Lipschitz function with Lipschitz constant for , we have

□

Remark 2.

By Lemma 7, Equation (6) is well-defined and
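The behaviour described in Lemma 7 can be illustrated with a step size rule of the kind widely used in self-adaptive extragradient methods: the next step is the minimum of the current step and μ‖w − y‖/‖A(w) − A(y)‖, and it is left unchanged when A(w) = A(y). This rule is an assumption standing in for Equation (6), whose exact form may differ. The operator, the parameter values and the random test pairs are likewise illustrative.

```python
import math
import random


def norm(u):
    return math.sqrt(sum(a * a for a in u))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def A(x):
    # Assumed operator A = I + G with G(x) = (sin x1, -sin x0);
    # G is 1-Lipschitz, so L = 2 is a valid Lipschitz constant for A.
    return [x[0] + math.sin(x[1]), x[1] - math.sin(x[0])]


def next_step(lam, mu, w, y):
    """Assumed self-adaptive update: never increases the step size."""
    denom = norm(sub(A(w), A(y)))
    if denom == 0.0:
        return lam
    return min(lam, mu * norm(sub(w, y)) / denom)


rng = random.Random(1)
lam0, mu, L = 1.0, 0.5, 2.0
steps = [lam0]
for _ in range(200):
    w = [rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)]
    y = [rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)]
    steps.append(next_step(steps[-1], mu, w, y))

lower_bound = min(lam0, mu / L)
print(steps[-1], lower_bound)
```

Under this assumed rule, the step sizes form a nonincreasing sequence that stays above min(λ₀, μ/L), so the sequence has a positive limit even though L is never used by the update itself.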

Next, the following lemma and its proof are crucial for the convergence analysis of the sequence generated by Algorithm 1.

Lemma 8.

Let be an operator satisfying the Assumption 1 (). Then, for all we have

Proof.

By Lemma 2 and the definition of we get

Using the definition of and the fact that , we have

Now, from the definition of , we have,

Lemma 9.

Let be a sequence generated by Algorithm 1 and suppose that Assumption 1 is satisfied. If there exists a subsequence weakly convergent to with Then

Proof.

Since,

this implies that

Furthermore,

By hypothesis, is bounded; consequently, is also bounded and, by the Lipschitz continuity of , is bounded as well. Passing to the limit as in Equation (10), we obtain

Furthermore, we have

The last inequality follows from Equations (11) and (12) and (this follows from the fact that is Lipschitz on and ). Let be a decreasing sequence of positive numbers tending to 0 and for each a smallest positive integer such that

It can be observed that the sequence is increasing. Moreover, assume (otherwise is a solution), set

therefore, for each i, we get

By the pseudomonotonicity of we have

This implies that

To show that , we first show that . By the sequential weak lower semicontinuity of the norm, we get

Since, and as we get

Therefore, Now, from the fact that is uniformly bounded, are bounded and we obtain

Therefore, for all we have

By Lemma 3, and hence the proof is complete. □

Remark 3.

As noted in [37,38], when the operator is monotone, the sequential weak-to-weak continuity assumption is not needed in Lemma 9.

Theorem 1.

Let A be an operator satisfying the Assumption 1 (). Then, for all the sequence generated by Algorithm 1, converges weakly to

Proof.

By Lemma 8, we have

Moreover, from Equation (4), we have

Substituting the last equation in Equation (15), we have

Observe that with , thus, there exists , such that for all . Consequently, we have

Now, comparing the last equation with Lemma 4, we have

Therefore, we get

This implies that

Since exists, the sequence is bounded. Let be a subsequence of such that , then from Equation (18) we have Now, since by Lemma 9 we get Consequently, by Lemma 5, the sequence converges weakly to a solution of the VI problem. □

Notice that when in Algorithm 1, we obtain the following corollary.

Corollary 1.

Suppose that satisfies in Assumption 1. Let and be the sequences generated as follows:

- i. Choose and where Moreover, the step size sequence is updated as follows:

Then, the sequences and weakly converge to the solution of the VI problem.
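A non-inertial scheme of the kind covered by the corollary can be sketched as follows: a subgradient extragradient step whose step size is updated by the commonly used rule λ ← min{λ, μ‖x − y‖/‖A(x) − A(y)‖}. Both the update rule and the stopping test ‖x − y‖ < tol are assumptions standing in for the article's formulas, and the test problem (a box with an affine operator) is illustrative only.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def norm(u):
    return math.sqrt(dot(u, u))


def add_scaled(u, t, v):
    """Return u + t*v."""
    return [a + t * b for a, b in zip(u, v)]


def project_box(x, lo=-1.0, hi=1.0):
    return [min(max(xi, lo), hi) for xi in x]


def project_halfspace(x, a, y):
    """Projection of x onto the half-space {z : <a, z - y> <= 0}."""
    violation = dot(a, sub(x, y))
    aa = dot(a, a)
    if violation <= 0.0 or aa == 0.0:
        return list(x)
    return add_scaled(x, -violation / aa, a)


def A(x):
    # Assumed affine operator with Lipschitz constant sqrt(5) and zero at (0.5, -0.5).
    return [x[0] + 2.0 * x[1] + 0.5, -2.0 * x[0] + x[1] + 1.5]


def adaptive_seg(x0, lam0=1.0, mu=0.5, tol=1e-10, max_iters=500):
    x, lam = list(x0), lam0
    for _ in range(max_iters):
        Ax = A(x)
        u = add_scaled(x, -lam, Ax)
        y = project_box(u)
        if norm(sub(x, y)) < tol:            # x is (numerically) a solution
            break
        Ay = A(y)
        x_next = project_halfspace(add_scaled(x, -lam, Ay), sub(u, y), y)
        denom = norm(sub(Ax, Ay))
        if denom > 0.0:                      # assumed self-adaptive update
            lam = min(lam, mu * norm(sub(x, y)) / denom)
        x = x_next
    return x, lam


x_star = [0.5, -0.5]
x_final, lam_final = adaptive_seg([-1.0, 1.0])
error = norm(sub(x_final, x_star))
print(x_final, lam_final, error)
```

The initial step `lam0 = 1.0` is deliberately larger than 1/sqrt(5); the update shrinks it after the first iteration without any knowledge of the Lipschitz constant.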

Strong Convergence Analysis

In this subsection, we present a modified version of Algorithm 1 that uses the viscosity method to establish strong convergence. Like Algorithm 1, the modified algorithm has an adaptive step size; that is, the step size does not depend on the Lipschitz constant. Moreover, the Lipschitz constant of the operator is not required for the convergence analysis of the modified algorithm.

For the convergence analysis, we suppose to be a contraction mapping with as the contraction parameter. is a positive sequence such that where with:

The modified algorithm is as follows:

Algorithm 2. Adaptive Subgradient Extragradient Algorithm for Pseudomonotone Operator.
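The viscosity idea can be sketched generically: after computing a subgradient extragradient point z, the next iterate is the convex combination β f(x) + (1 − β) z, where f is a contraction and β tends to zero while its sum diverges. The sketch below shows only this pattern, with a fixed step size and no inertial term, so it is a simplification and not Algorithm 2 itself. The contraction f(x) = x/2, the choice β = 1/(n + 2), and the test problem are all assumptions.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def add_scaled(u, t, v):
    """Return u + t*v."""
    return [a + t * b for a, b in zip(u, v)]


def project_box(x, lo=-1.0, hi=1.0):
    return [min(max(xi, lo), hi) for xi in x]


def project_halfspace(x, a, y):
    """Projection of x onto the half-space {z : <a, z - y> <= 0}."""
    violation = dot(a, sub(x, y))
    aa = dot(a, a)
    if violation <= 0.0 or aa == 0.0:
        return list(x)
    return add_scaled(x, -violation / aa, a)


def A(x):
    # Assumed affine operator with Lipschitz constant sqrt(5) and zero at (0.5, -0.5).
    return [x[0] + 2.0 * x[1] + 0.5, -2.0 * x[0] + x[1] + 1.5]


def f(x):
    """Assumed contraction with contraction parameter 1/2."""
    return [0.5 * xi for xi in x]


def viscosity_seg(x0, lam=0.2, iters=2000):
    x = list(x0)
    for n in range(iters):
        beta = 1.0 / (n + 2)                 # beta -> 0 and sum(beta) diverges
        u = add_scaled(x, -lam, A(x))
        y = project_box(u)
        z = project_halfspace(add_scaled(x, -lam, A(y)), sub(u, y), y)
        fx = f(x)
        x = [beta * fi + (1.0 - beta) * zi for fi, zi in zip(fx, z)]
    return x


x_star = [0.5, -0.5]
x_final = viscosity_seg([-1.0, 1.0])
error = math.sqrt(dot(sub(x_final, x_star), sub(x_final, x_star)))
print(x_final, error)
```

With this slowly vanishing β the iterates approach the solution at a rate tied to β, which is the usual price paid for the strong convergence guarantee of viscosity-type schemes.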

Remark 4.

It can be observed that from Equation (20) and that is we have for all

Theorem 2.

Let be an operator satisfying the Assumption 1 (A3). Then, for all the sequence generated by Algorithm 2, converges strongly to

Proof.

To show that the iterates generated by Algorithm 2 converge strongly to we consider four claims.

Claim I: The sequence generated by Algorithm 2 is bounded.

In fact, from Lemma 9,

Recall that there exists , such that for all . This implies that for all Thus, from Equation (22) we get

On the other hand,

It follows from Remark 4 that for all there exists a constant such that

On the other hand, we have

Therefore, is bounded and thus, and are bounded.

Claim II:

In fact, from Equation (26), we have

From Equation (25), we get

Thus,

where

Claim III:

In fact, we have

Using the inequality we get

where the last inequality follows from Equation (23). Substituting Equation (32) into Equation (33), we get

where

Claim IV: The sequence converges to zero. From Lemma 6 it suffices to show that for every subsequence of such that

To this end, let be a subsequence of such that Equation (34) is satisfied, then by Claim II we get

Therefore,

and

Next, we show that

In fact, we have

and

It now follows that, there exists a subsequence of such that, for some and

From Equation (37), we have and it then follows from Equation (35) and Lemma 9 that From Equation (38) and the fact that we have

Putting Equations (36) and (39) together, we get

Thus, the desired result follows from Equation (40), Claim III and Lemma 6. □

4. Computational Experiments

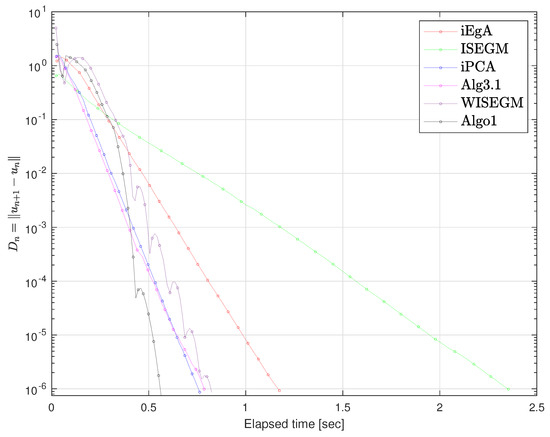

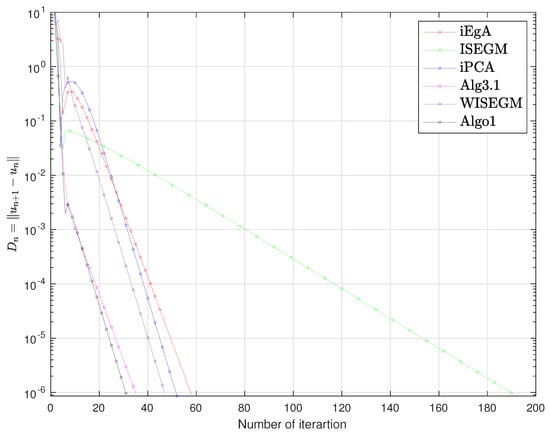

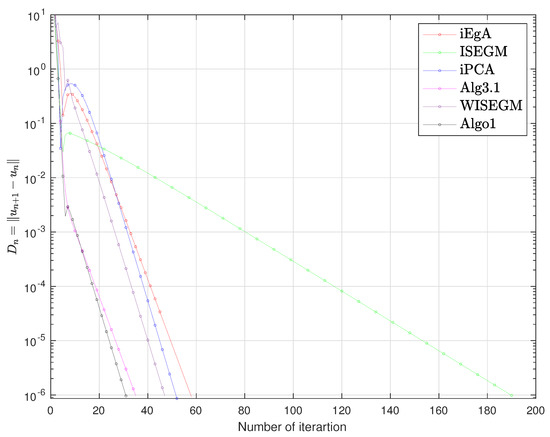

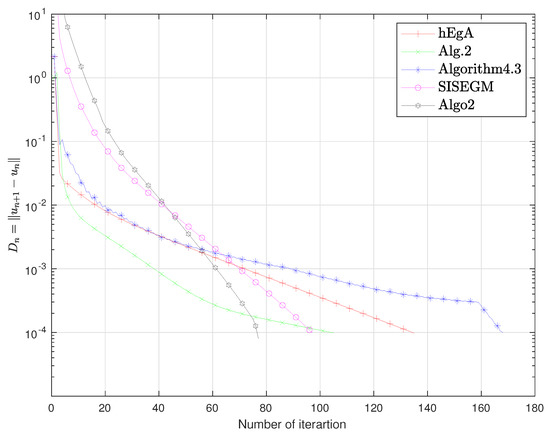

Numerical experiments are presented in this section to demonstrate the performance of our proposed methods. The codes were run on a PC with an Intel(R) Core(TM) i5-6200 CPU @ 2.30 GHz 2.40 GHz and 8.00 GB RAM, using MATLAB version 9.5 (R2018b). Throughout these examples, the y-axis represents and the x-axis represents the number of iterations or the execution time (in seconds). The following examples were considered for the numerical experiments of the two proposed algorithms.

Example 1.

Let an operator be defined on as follows

where B is an matrix, S is an skew-symmetric matrix, D is an diagonal matrix, whose diagonal entries are nonnegative. These matrices and the vector q are randomly generated in The set is closed and convex and defined as

where C is an matrix and d is a nonnegative vector. It is clear that is monotone and L-Lipschitz continuous with
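A test operator of this type is often built as A(x) = Mx + q with M = BBᵀ + S + D, which is monotone because BBᵀ is positive semidefinite, S contributes nothing to ⟨Mx, x⟩, and D has nonnegative diagonal entries. The sketch below assumes this construction, since the displayed formula is not reproduced here, and checks monotonicity numerically. The dimension, the sampling intervals and the seeds are hypothetical choices.

```python
import random


def random_matrix(m, rng, lo, hi):
    return [[rng.uniform(lo, hi) for _ in range(m)] for _ in range(m)]


def build_operator(m, seed=0):
    """Return A(x) = M x + q with M = B B^T + S + D (assumed construction)."""
    rng = random.Random(seed)
    B = random_matrix(m, rng, -2.0, 2.0)
    R = random_matrix(m, rng, -2.0, 2.0)
    S = [[R[i][j] - R[j][i] for j in range(m)] for i in range(m)]  # skew-symmetric
    d = [rng.uniform(0.0, 2.0) for _ in range(m)]                  # nonnegative diagonal
    q = [rng.uniform(-2.0, 2.0) for _ in range(m)]
    M = [[sum(B[i][k] * B[j][k] for k in range(m)) + S[i][j]
          + (d[i] if i == j else 0.0)
          for j in range(m)] for i in range(m)]

    def A(x):
        return [sum(M[i][j] * x[j] for j in range(m)) + q[i] for i in range(m)]

    return A


def monotonicity_gap(A, x, y):
    """<A(x) - A(y), x - y>, which is nonnegative for a monotone operator."""
    return sum((ax - ay) * (xi - yi)
               for ax, ay, xi, yi in zip(A(x), A(y), x, y))


m = 5
A = build_operator(m)
rng = random.Random(42)
gaps = []
for _ in range(500):
    x = [rng.uniform(-5.0, 5.0) for _ in range(m)]
    y = [rng.uniform(-5.0, 5.0) for _ in range(m)]
    gaps.append(monotonicity_gap(A, x, y))
smallest_gap = min(gaps)
print(smallest_gap)
```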

Example 2.

The following problem was considered by Sun [39] (page 9, Example 5), with an operator defined as

where

Here E is an square matrix with entries defined as

while

Example 3.

Let and Define an operator as follows:

where Observe that the solution set and the operator is pseudomonotone but not monotone with Lipschitz constant The projection onto is explicitly computed as

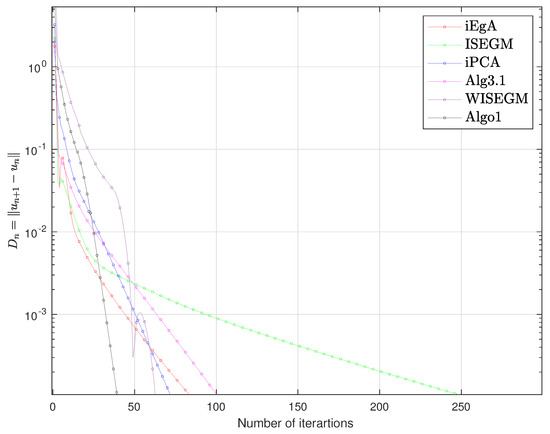

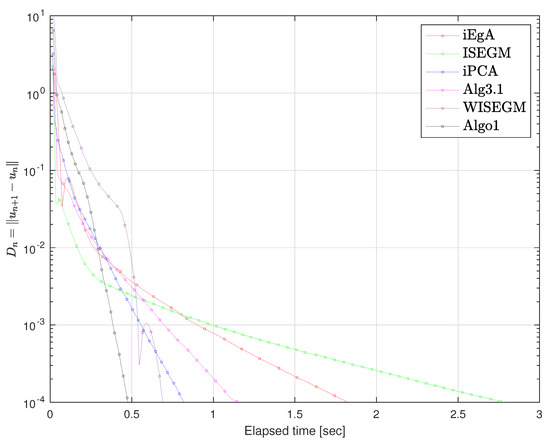

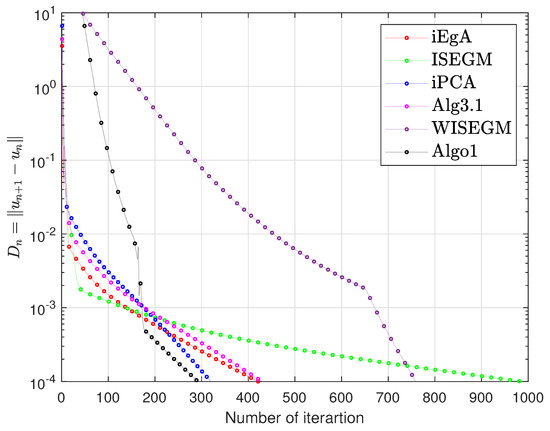

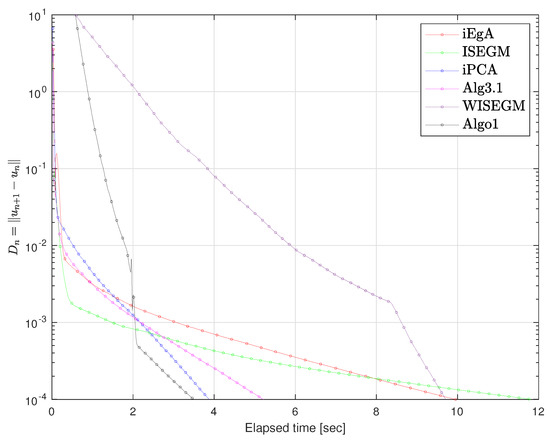

4.1. Comparative Analysis for Algorithm 1

Here, we report the numerical experiments for Algorithm 1 in comparison with some existing algorithms in the literature. We use the following algorithms with their corresponding control parameters.

- Dong et al. [19] on page 3 (iEgA for short), with and

- Thong et al. [25] on page 5 (ISEGM for short), with and

- Dong et al. [17] Algorithm 3.1 (iPCA for short), with and

- Yang et al. [40] Algorithm 3.1 (Alg3.1 for short), with and

- Thong et al. [41] Algorithm 3.1 (WISEGM for short), and

For Algorithm 1 (Algo1 for short) we chose and The results are shown in Figures 1–8.

Figure 1.

Example 1 in : Numbers of iterations are 85, 254, 73, 102, 63, 40 respectively.

Figure 2.

Example 1 in : Elapsed time 1.83, 2.78, 0.82, 1.25, 0.69, 0.48 respectively.

Figure 3.

Example 1 in : Numbers of iterations 421, 985, 320, 430, 759, 292 respectively.

Figure 4.

Example 1 in : Elapsed time 9.95, 11.79, 3.87, 5.19, 9.70, 3.41 respectively.

Figure 5.

Example 2: Numbers of iterations are 45, 169, 155, 52, 60, 38 respectively.

Figure 6.

Example 2: Elapsed times are 1.17, 2.35, 0.76, 0.78, 0.82, 0.56 respectively.

Figure 7.

Example 3: Numbers of iterations are 58, 190, 52, 35, 47, 31 respectively.

Figure 8.

Example 3: Elapsed times are 2.94, 19.59, 2.73, 3.83, 4.76, 3.04 respectively.

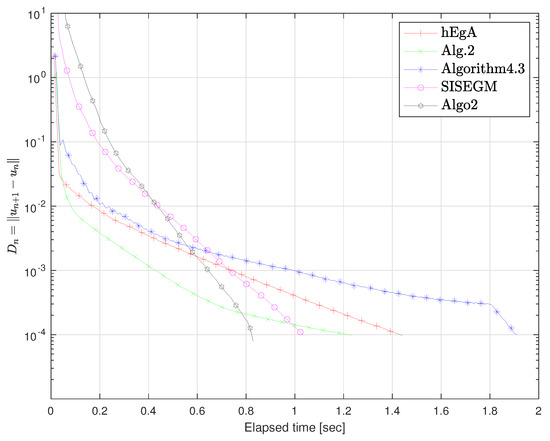

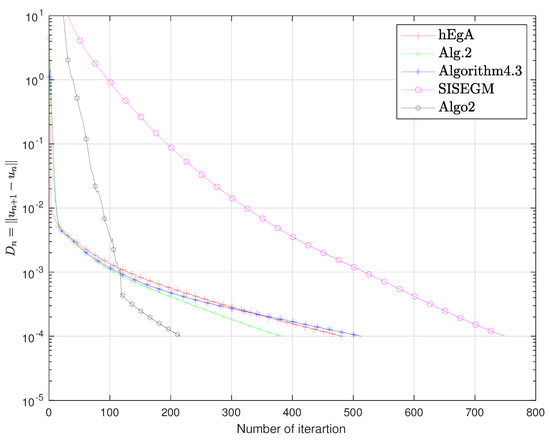

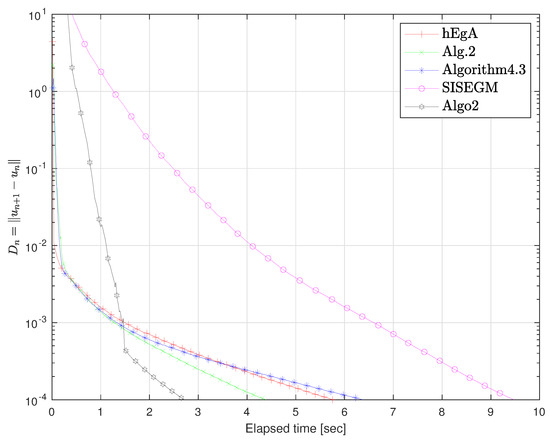

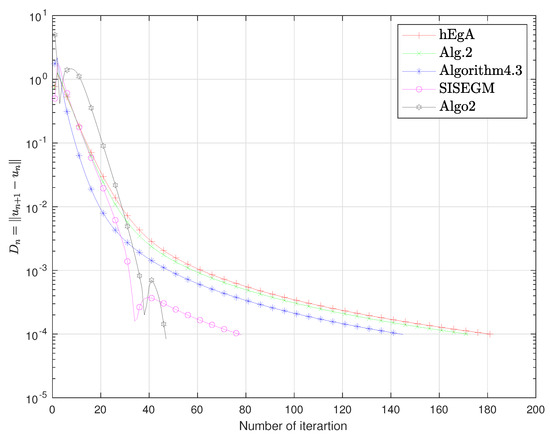

4.2. Comparative Results of Algorithm 2

For the numerical results of Algorithm 2, we used the following algorithms with strong convergence.

- Kraikaew et al. [42] on page 6 (hEgA for short), with and

- Yang et al. [27] Algorithm 2 (Alg.2 for short), with and

- Shehu et al. [29] Algorithm 4.3 (Algorithm4.3 for short), with and

- Thong et al. [41] Algorithm 3.2 (SISEGM for short), with and

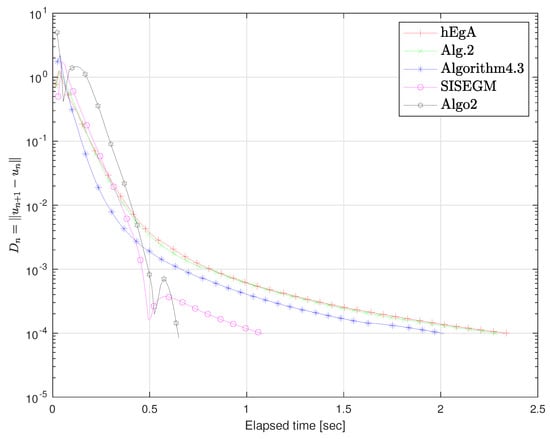

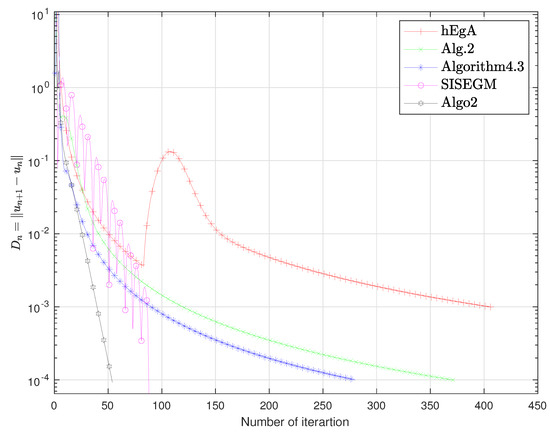

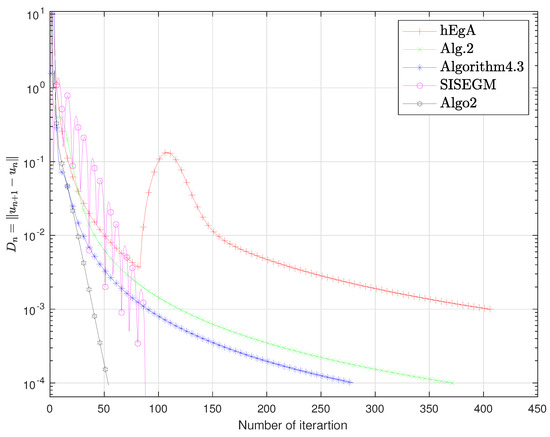

For Algorithm 2 (Algo2 for short), we take and The results are shown in Figures 9–16.

Figure 9.

Example 1 in : Numbers of iterations are 135, 105, 168, 97, 77 respectively.

Figure 10.

Example 1 in : Elapsed time 1.44, 1.23, 1.97, 1.03, 0.84 respectively.

Figure 11.

Example 1 in : Numbers of iterations are 482, 382, 513, 749, 216 respectively.

Figure 12.

Example 1 in : Elapsed time 5.76, 4.38, 6.37, 9.47, 2.71 respectively.

Figure 13.

Example 2: Numbers of iterations are 181, 172, 145, 78, 47 respectively.

Figure 14.

Example 2: Elapsed time 2.33, 2.29, 2.01, 1.08, 0.64 respectively.

Figure 15.

Example 3: Numbers of iterations are 406, 372, 280, 88, 54 respectively.

Figure 16.

Example 3: Elapsed time 2.28, 2.40, 1.35, 0.58, 0.37 respectively.

For the strongly convergent algorithm, Figures 9–12 (Example 1), Figures 13 and 14 (Example 2) and Figures 15 and 16 (Example 3) show that the proposed algorithm is faster, more efficient and more robust than the compared existing algorithms, requiring fewer iterations and less elapsed time in every reported case.
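The size of the improvement can be read off the figure captions with a short calculation. The sketch below computes, for each of Figures 9–16, the relative reduction achieved by the last listed value with respect to the best of the other four. It assumes that the values in each caption follow the order in which the algorithms are listed above, so that the last value belongs to Algo2; the labels "setting 1" and "setting 2" are placeholders for the two problem sizes of Example 1.

```python
# Values copied from the captions of Figures 9-16; the last entry of each
# list is assumed to be the proposed Algorithm 2 (Algo2).
results = {
    "Fig. 9 (Example 1, setting 1, iterations)": [135, 105, 168, 97, 77],
    "Fig. 10 (Example 1, setting 1, time in s)": [1.44, 1.23, 1.97, 1.03, 0.84],
    "Fig. 11 (Example 1, setting 2, iterations)": [482, 382, 513, 749, 216],
    "Fig. 12 (Example 1, setting 2, time in s)": [5.76, 4.38, 6.37, 9.47, 2.71],
    "Fig. 13 (Example 2, iterations)": [181, 172, 145, 78, 47],
    "Fig. 14 (Example 2, time in s)": [2.33, 2.29, 2.01, 1.08, 0.64],
    "Fig. 15 (Example 3, iterations)": [406, 372, 280, 88, 54],
    "Fig. 16 (Example 3, time in s)": [2.28, 2.40, 1.35, 0.58, 0.37],
}

reduction_percent = {}
for label, values in results.items():
    *others, proposed = values
    best_other = min(others)
    # Relative reduction with respect to the best competing algorithm.
    reduction_percent[label] = 100.0 * (best_other - proposed) / best_other

for label, pct in reduction_percent.items():
    print(f"{label}: {pct:.1f}% below the best competitor")
```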

5. Conclusions

Self-adaptive subgradient extragradient algorithms with an inertial extrapolation step are presented in this work. The proposed algorithms involve a more general class of operators, and the iterates generated by the first scheme converge weakly to a solution of the variational inequality problem. Furthermore, a modified version of the proposed algorithm, based on the viscosity method, is given to obtain a strongly convergent algorithm. The main advantage of the proposed algorithms is that they do not require prior knowledge of the Lipschitz constant of the cost operator, and the iterates generated converge faster to a solution of the problem owing to the inertial extrapolation step. Numerical comparison of the proposed algorithms with some existing state-of-the-art algorithms shows that the proposed algorithms are fast, robust and efficient.

Author Contributions

Conceptualization, J.A. and A.H.I.; methodology, J.A.; software, H.u.R.; validation, J.A., P.K. and A.H.I.; formal analysis, J.A.; investigation, P.K.; resources, P.K.; data curation, H.u.R.; writing—original draft preparation, J.A.; writing—review and editing, J.A. and A.H.I.; visualization, H.u.R.; supervision, P.K.; project administration, P.K.; funding acquisition, P.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), Faculty of Science, KMUTT. The first, third and fourth authors were supported by the “Petchra Pra Jom Klao Ph.D. Research Scholarship” from King Mongkut’s University of Technology Thonburi (Grant No. 38/2018, 35/2017 and 16/2018, respectively).

Acknowledgments

The authors acknowledge the financial support provided by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), Faculty of Science, KMUTT.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Noor, M.A. Some developments in general variational inequalities. Appl. Math. Comput. 2004, 152, 199–277.

- Aubin, J.; Ekeland, I. Applied Nonlinear Analysis; John Wiley and Sons: New York, NY, USA, 1984.

- Marcotte, P. Application of Khobotov’s algorithm to variational inequalities and network equilibrium problems. INFOR Inf. Syst. Oper. Res. 1991, 29, 258–270.

- Khobotov, E.N. Modification of the extra-gradient method for solving variational inequalities and certain optimization problems. USSR Comput. Math. Math. Phys. 1987, 27, 120–127.

- Abbas, M.; Ibrahim, Y.; Khan, A.R.; de la Sen, M. Strong Convergence of a System of Generalized Mixed Equilibrium Problem, Split Variational Inclusion Problem and Fixed Point Problem in Banach Spaces. Symmetry 2019, 11, 722.

- Kinderlehrer, D.; Stampacchia, G. An Introduction to Variational Inequalities and Their Applications; SIAM: Philadelphia, PA, USA, 1980; Volume 31.

- Baiocchi, C. Variational and quasivariational inequalities. Appl. Free Bound. Probl. 1984.

- Konnov, I. Combined Relaxation Methods for Variational Inequalities; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001; Volume 495.

- Jaiboon, C.; Kumam, P. An extragradient approximation method for system of equilibrium problems and variational inequality problems. Thai J. Math. 2012, 7, 77–104.

- Kumam, W.; Piri, H.; Kumam, P. Solutions of system of equilibrium and variational inequality problems on fixed points of infinite family of nonexpansive mappings. Appl. Math. Comput. 2014, 248, 441–455.

- Chamnarnpan, T.; Phiangsungnoen, S.; Kumam, P. A new hybrid extragradient algorithm for solving the equilibrium and variational inequality problems. Afr. Mat. 2015, 26, 87–98.

- Deepho, J.; Kumam, W.; Kumam, P. A new hybrid projection algorithm for solving the split generalized equilibrium problems and the system of variational inequality problems. J. Math. Model. Algorithms Oper. Res. 2014, 13, 405–423.

- ur Rehman, H.; Kumam, P.; Cho, Y.J.; Yordsorn, P. Weak convergence of explicit extragradient algorithms for solving equilibrium problems. J. Inequalities Appl. 2019, 2019, 1–25.

- Kazmi, K.R.; Rizvi, S. An iterative method for split variational inclusion problem and fixed point problem for a nonexpansive mapping. Optim. Lett. 2014, 8, 1113–1124.

- Facchinei, F.; Pang, J.S. Finite-Dimensional Variational Inequalities and Complementarity Problems; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.

- Solodov, M.V.; Svaiter, B.F. A new projection method for variational inequality problems. SIAM J. Control. Optim. 1999, 37, 765–776.

- Dong, Q.; Cho, Y.; Zhong, L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2018, 70, 687–704.

- Denisov, S.; Semenov, V.; Chabak, L. Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern. Syst. Anal. 2015, 51, 757–765.

- Dong, Q.L.; Lu, Y.Y.; Yang, J. The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 2016, 65, 2217–2226.

- Malitsky, Y.V. Projected reflected gradient methods for variational inequalities. SIAM J. Optim. 2015, 25, 502–520.

- Abubakar, J.; Sombut, K.; Ibrahim, A.H.; Rehman, H.R. An Accelerated Subgradient Extragradient Algorithm for Strongly Pseudomonotone Variational Inequality Problems. Thai J. Math. 2019, 18, 166–187.

- Sombut, K.; Kitkuan, D.; Padcharoen, A.; Kumam, P. Weak Convergence Theorems for a Class of Split Variational Inequality Problems. In Proceedings of the 2018 International Conference on Control, Artificial Intelligence, Robotics & Optimization (ICCAIRO), Prague, Czech Republic, 19–21 May 2018; pp. 277–282.

- Chamnarnpan, T.; Wairojjana, N.; Kumam, P. Hierarchical fixed points of strictly pseudo contractive mappings for variational inequality problems. SpringerPlus 2013, 2, 540.

- Crombez, G. A geometrical look at iterative methods for operators with fixed points. Numer. Funct. Anal. Optim. 2005, 26, 157–175.

- Thong, D.V.; Van Hieu, D. Modified subgradient extragradient method for variational inequality problems. Numer. Algorithms 2018, 79, 597–610.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Yang, J.; Liu, H.; Liu, Z. Modified subgradient extragradient algorithms for solving monotone variational inequalities. Optimization 2018, 67, 2247–2258.

- Van Hieu, D.; Thong, D.V. New extragradient-like algorithms for strongly pseudomonotone variational inequalities. J. Glob. Optim. 2018, 70, 385–399.

- Shehu, Y.; Dong, Q.L.; Jiang, D. Single projection method for pseudo-monotone variational inequality in Hilbert spaces. Optimization 2019, 68, 385–409.

- Khanh, P.D.; Vuong, P.T. Modified projection method for strongly pseudomonotone variational inequalities. J. Glob. Optim. 2014, 58, 341–350.

- Thong, D.V.; Van Hieu, D. Inertial extragradient algorithms for strongly pseudomonotone variational inequalities. J. Comput. Appl. Math. 2018, 341, 80–98.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: Berlin/Heidelberg, Germany, 2011; Volume 408.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings; Marcel Dekker Inc.: New York, NY, USA, 1984.

- Alvarez, F. Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert space. SIAM J. Optim. 2004, 14, 773–782.

- Ofoedu, E. Strong convergence theorem for uniformly L-Lipschitzian asymptotically pseudocontractive mapping in real Banach space. J. Math. Anal. Appl. 2006, 321, 722–728.

- Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Thong, D.V.; Vuong, P.T. Modified Tseng’s extragradient methods for solving pseudo-monotone variational inequalities. Optimization 2019, 68, 2207–2226.

- Vuong, P.T. On the weak convergence of the extragradient method for solving pseudo-monotone variational inequalities. J. Optim. Theory Appl. 2018, 176, 399–409.

- Sun, D. A projection and contraction method for the nonlinear complementarity problem and its extensions. Math. Numer. Sin. 1994, 16, 183–194.

- Yang, J. Self-adaptive inertial subgradient extragradient algorithm for solving pseudomonotone variational inequalities. Appl. Anal. 2019.

- Thong, D.V.; Van Hieu, D.; Rassias, T.M. Self adaptive inertial subgradient extragradient algorithms for solving pseudomonotone variational inequality problems. Optim. Lett. 2020, 14, 115–144.

- Kraikaew, R.; Saejung, S. Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 2014, 163, 399–412.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).