Abstract

In this paper, we focus on studying the split feasibility problem (SFP), which has many applications in signal processing and image reconstruction. A popular technique is to employ the iterative method which is so called the relaxed CQ algorithm. However, the speed of convergence usually depends on the way of selecting the step size of such algorithms. We aim to suggest a new hybrid CQ algorithm for the SFP by using the self adaptive and the line-search techniques. There is no computation on the inverse and the spectral radius of a matrix. We then prove the weak convergence theorem under mild conditions. Numerical experiments are included to illustrate its performance in compressed sensing. Some comparisons are also given to show the efficiency with other CQ methods in the literature.

1. Introduction

In the present work, we aim to study the split feasibility problem (SFP), which is to find a point

where C and Q are nonempty closed and convex subsets of real Hilbert spaces and , respectively, and a bounded linear operator. In 1994, the SFP was first investigated by Censor and Elfving [1] in finite dimensional Hilbert spaces. There have been applications in real world such as image processing and signal recovery (see [2,3]). Byrne [4,5] introduced the following recursive procedure for solving SFP:

where , and are the projections onto C and Q, respectively, and is the adjoint of A. This projection algorithm is usually called the CQ algorithm. Subsequently, Yang [6] introduced the relaxed CQ algorithm. In this case, the projections and are, respectively, replaced by and , where

where is convex and lower semicontinuous, and , and

where is convex and lower semicontinuous, and In what follows, we define

and

Precisely, Yang [6] proposed the relaxed CQ algorithm in a finite-dimensional Hilbert space as follows:

Algorithm 1.

Let. For, define

where

It is seen that, since the sets and are half spaces, the projections are easily to be computed. However, the step size still depends on the norm of A.

To eliminate this difficulty, in 2012, López et al. [7] suggested a new way to select the step size as follows:

where is a sequence in such that for some . They established the weak convergence of the CQ algorithm (Equation (2)) and the relaxed CQ algorithm (Equation (7)) with the step size defined by Equation (8) in real Hilbert spaces.

Qu and Xiu [8] adopted the line-search technique to construct the step size in Euclidean spaces as follows:

Algorithm 2.

Choose, , . Letbe a point in. For, let

whereandis the smallest nonnegative integer such that

Set

In 2012, Bnouhachem et al. [9] proposed the following projection method for solving the SFP.

Algorithm 3.

For a given, let

wheresatisfies

Define

where

and

Recently, many authors establish weak and strong convergence theorems for the SFP (see also [10,11]).

In this work, combining the work of Bnouhachem et al. [9] and López et al. [7], we suggest a new hybrid CQ algorithm for solving the split feasibility problem and establish weak convergence theorem in Hilbert spaces. Finally, numerical results are given for supporting our main results. The comparison is also given to algorithms of Qu and Xiu [8] and Bnouhachem et al. [9]. It is shown that our method has a better convergence behavior than these CQ algorithms through numerical examples.

2. Preliminaries

We next recall some useful basic concepts that will be used in our proof. Let H be a real Hilbert space equipped with the inner product and the norm . Let be a nonlinear mapping. Then, T is called firmly nonexpansive if, for all ,

In a real Hilbert space H, we have the following equality:

A function is convex if and only if

for all .

A function is said to be weakly lower semi-continuous (w-lsc) at x if implies

The projection of a nonempty, closed and convex set C onto H is defined by

We note that and are firmly nonexpansive. From [5], we know that, if

then is -Lipschitz continuous. Moreover, in real Hilbert spaces, we know that [12]

- (i)

- for all ;

- (ii)

- for all ; and

- (iii)

- for all .

Lemma 1.

[12] Let S be a nonempty, closed and convex subset of a real Hilbert space H and be a sequence in H that satisfies the following assumptions:

- (i)

- exists for each ; and

- (ii)

- .

Then, converges weakly to a point in S.

3. Main Results

Throughout this paper, let S be the set of solution of SFP and suppose that S is nonempty. Let C and Q be nonempty that satisfy the following assumptions:

(A1) The set C is defined by

where is convex, subdifferentiable on C and bounded on bounded sets, and the set Q is defined by

where is convex, subdifferentiable on Q and bounded on bounded sets.

(A2) For each , at least one subgradient can be computed, where

(A3) For each , at least one subgradient can be computed, where

Next, we propose our new relaxed CQ algorithm in real Hilbert spaces.

Algorithm 4.

Let, for any, . Assumeandhave been constructed. Computevia the formula

whereandis the smallest nonnegative integer such that

Define

where

Lemma 2.

[8] The line-search in Equation (27) terminates after a finite number of steps. In addition, we have the following:

for all , where .

Next, we state our main theorem in this paper.

Theorem 1.

Assume that and satisfy the assumptions:

- (a1)

- ; and

- (a2)

- .

Then, defined by Algorithm 4 converges weakly to a solution of the SFP.

Proof.

Let . Then, we have and . It follows that . We see that

Since is firmly nonexpansive and , we get

It also follows that

From Equation (19), we see that

Combining Equations (31), (32) and (35), we get

where the last inequality follows from Lemma 2. Since and , it follows that

Thus, exists and hence is bounded.

From Equation (36) and Assumption (A2), it also follows that

By Assumption (A1), we have

It follows that

and

From Equation (36), we have

Let be a cluster point of with converging to . From Equation (42), we see that also converges to . We next show that is in S. Since , by the definition of , we have

where . By the assumption that is bounded and Equation (42), we get

which implies . Hence . Since , we obtain

where . By the boundedness of and Equation (43), it follows that

We conclude that . Thus, . Thus, is a solution of the SFP.

Hence, by Lemma 1, we conclude that the sequence converges to a point in S. This completes the proof. □

4. Numerical Experiments

In this section, we provide numerical experiments in compressed sensing. We illustrate the performance of Algorithms 4 and 1 of Yang [6], Algorithm 2 of Qu and Xiu [8], and Algorithm 3 of Bnouhuchem et al. [9]. In signal processing, compressed sensing can be modeled as the following linear equation:

where is a recovered vector with m nonzero components, is the observed data, is the noisy and A is an matrix with . The problem in Equation (48) can be seen as the LASSO problem:

where is a given constant. In particular, if and , then the LASSO problem can be considered as the SFP. From this connection, we can apply the CQ algorithm to solve Equation (49).

In this example, the sparse vector is generated by the uniform distribution in with m nonzero elements. The matrix A is generated by the normal distribution with mean zero and invariance one. The observation y is generated by the white Gaussian noise with SNR=40. The process is started with and initial point .

The stopping criterion is defined by the mean square error (MSE):

where is an estimated signal of and is a tolerance.

In what follows, let , , , and . The numerical results are reported as follows.

In Table 1, we observe that the performance of Algorithm 4 is better than other algorithms in terms of CPU time and number of iterations as the spikes of sparse vector is varied from 10 to 30. In this example, it is shown that Algorithm 4 of Yang [6], for which the step size depends on the norm of A, converges more slowly than other algorithms in terms of CPU time.

Table 1.

Numerical results ( and ).

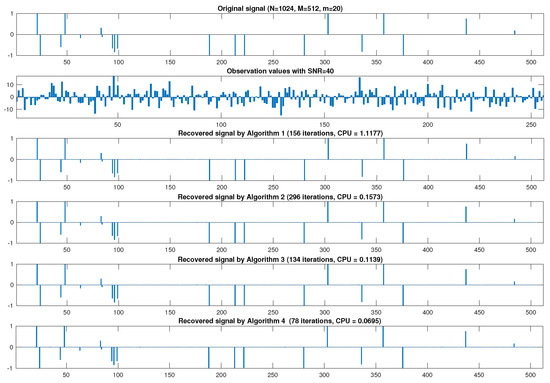

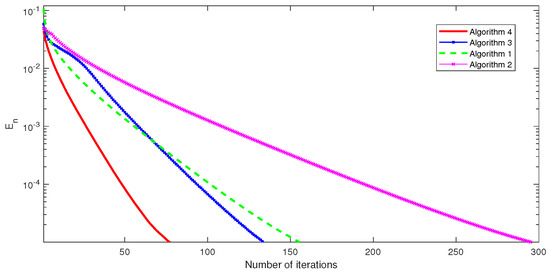

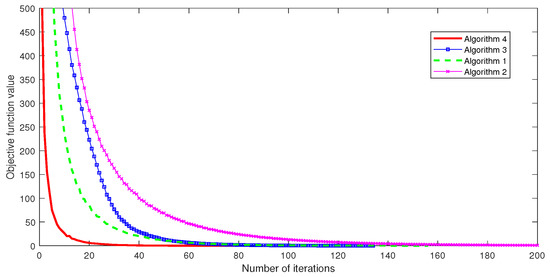

Next, we provide Figure 1, Figure 2 and Figure 3 to illustrate the convergence behavior, MSE, number of iterations and objective function values when , , and .

Figure 1.

From top to bottom: original signal, observation data and recovered signal by Algorithms 1–4, respectively.

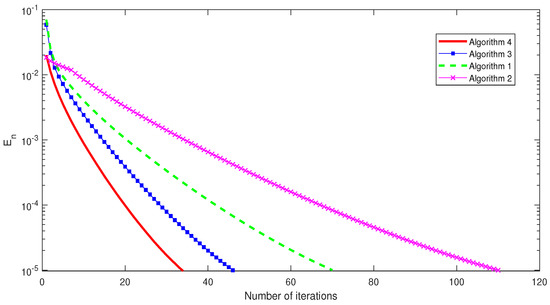

Figure 2.

MSE versus number of iterations when , and .

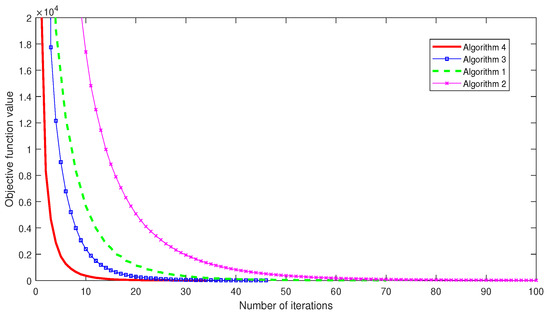

Figure 3.

The objective function value versus number of iterations when , and .

In Figure 1, Figure 2 and Figure 3, we can summarize that our proposed algorithm is really more efficient and faster than algorithms of Yang [6], Qu and Xiu [8] and Bnouhachem et al. [9].

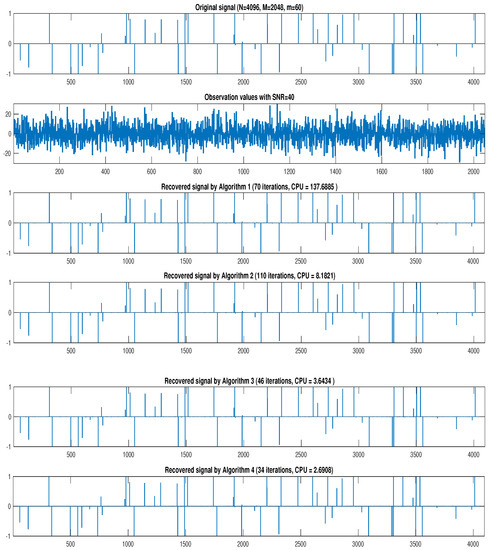

In Table 2, we observe that Algorithm 4 is effective and also converges more quickly than Algorithm 1 of Yang [6], Algorithm 2 of Qu and Xiu [8] and Algorithm 3 of Bnouhuchem et al. [9]. Moreover, it is seen that Algorithm 1 of Yang [6] has the highest CPU time in computation. In this case, Algorithm 1 takes more CPU time than it does in the first case (see Table 1). Therefore, we can conclude that our proposed method has the advantage in comparison to other methods, especially Algorithm 1, which requires the spectral computation.

Table 2.

Numerical results ( and ).

We next provide Figure 4, Figure 5 and Figure 6 to illustrate the convergence behavior, MSE, number of iterations and objective function values when , , and .

Figure 4.

From top to bottom: original signal, observation data and recovered signal by Algorithms 1–4, respectively.

Figure 5.

MSE versus number of iterations when , and .

Figure 6.

The objective function value versus number of iterations when , and .

5. Comparative Analysis

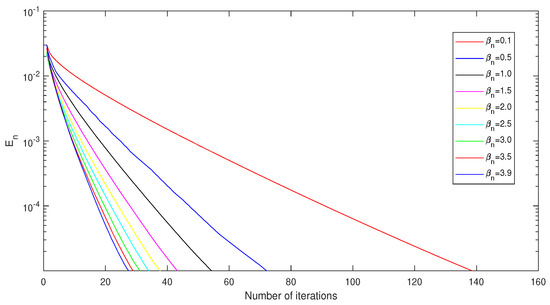

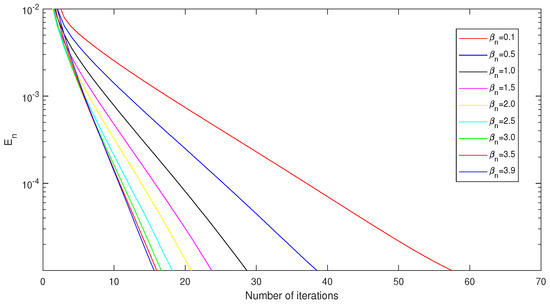

In this section, we discuss the comparative analysis to show the effects of the step sizes and in Algorithm 4.

We begin this section by studying the effect of the step size in Algorithm 4 in terms of the number of iterations and the CPU time with the varied cases.

Choose , , and . Let and A be as in the previous example. The stopping criterion is defined by Equation (50) with .

In Table 3, it is observed that the number of iterations and the CPU time have small reduction when the step size tends to 4. The numerical experiments for each cases of are shown in Figure 7 and Figure 8, respectively.

Table 3.

The convergence behavior of Algorithm 4 with different cases of .

Figure 7.

Graph of number of iterations versus in case and .

Figure 8.

Graph of number of iterations versus in case and .

Next, we discuss the effect of the step size in Algorithm 4. We note that the step size depends on the parameters and . Thus, we aim to vary these parameters and study its convergence behavior.

Choose , , and . Let and A be as in the previous example. The stopping criterion is defined by Equation (50) with . The numerical results are reported in Table 4.

Table 4.

The convergence behavior of Algorithm 4 with different cases of .

In Table 4, we see that the CPU time decreases significantly when the parameter is also decreased. However, the choice of has no effect in terms of number of iterations.

Next, we discuss the effect of in Algorithm 4. In this experiment, choose , , and . The error is defined by Equation (50) with . The numerical results are reported in Table 5.

Table 5.

The convergence behavior Algorithm 4 with different cases of .

In Table 5, we observe that the choices of have a small effect in both terms of the CPU time and the number of iterations.

Finally, we discuss the convergence of Algorithm 4 with different cases of M and N. In this case, we set , , , and . The stopping criterion is defined by Equation (50).

In Table 6, it is shown that, if M and N have a high value, then the number of iteration decreases. However, in this case, the CPU time increases.

Table 6.

The convergence behavior Algorithm 4 with different cases of M and N.

6. Conclusions

In this work, we introduce a new hybrid CQ algorithm by using the self adaptive and the line-search techniques for the split feasibility problem in Hilbert spaces. This method can be viewed as a refinement and improvement of other CQ algorithms. Convergence analysis of the proposed method is proved under some suitable conditions. The numerical results show that our algorithm has a better convergence behavior than the algorithms of Yang [6], Qu and Xiu [8] and Bnouhachem et al. [9]. A comparative analysis was also performed to show the effects of the step sizes in our algorithm.

Author Contributions

S.S.; supervision and investigation, S.K.; writing original draft and P.C.; formal analysis and methodology.

Funding

This research was funded by Chiang Mai University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Censor, Y.; Elfving, T. A multiprojection algorithms using Bregman projection in a product space. Numer. Algor. 1994, 8, 221–239. [Google Scholar] [CrossRef]

- Bauschke, H.H.; Borwein, J.M. On projection algorithms for solving convex feasibility problems. SIAM Rev. 1996, 38, 367–426. [Google Scholar] [CrossRef]

- Stark, H. Image Recovery: Theory and Application; Elsevier: Amsterdam, The Netherlands, 2013. [Google Scholar]

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453. [Google Scholar] [CrossRef]

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120. [Google Scholar] [CrossRef]

- Yang, Q. The relaxed CQ algorithm for solving the split feasibility problem. Inverse Prob. 2004, 20, 1261–1266. [Google Scholar] [CrossRef]

- López, G.; Martín-Márquez, V.; Wang, F.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004. [Google Scholar] [CrossRef]

- Qu, B.; Xiu, N. A note on the CQ algorithm for the split feasibility problem. Inverse Prob. 2005, 21, 1655–1665. [Google Scholar] [CrossRef]

- Bnouhachem, A.; Noor, M.A.; Khalfaoui, M.; Zhaohan, S. On descent-projection method for solving the split feasibility problems. J. Glob. Optim. 2012, 54, 627–639. [Google Scholar] [CrossRef]

- Dong, Q.L.; Tang, Y.C.; Cho, Y.J.; Rassias, T.M. “Optimal” choice of the step length of the projection and contraction methods for solving the split feasibility problem. J. Glob. Optim. 2018, 71, 341–360. [Google Scholar] [CrossRef]

- Gibali, A.; Liu, L.W.; Tang, Y.C. Note on the modified relaxation CQ algorithm for the split feasibility problem. Optim. Lett. 2017, 12, 1–14. [Google Scholar] [CrossRef]

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: London, UK, 2011. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).