Three-Step Projective Methods for Solving the Split Feasibility Problems

Abstract

1. Introduction

2. Basic Concepts

- (i) nonexpansive if

- (ii) firmly nonexpansive if, for all ,
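The defining inequalities for these two notions did not survive in this copy. The sketch below therefore assumes the standard textbook forms: a mapping T is nonexpansive if ‖Tx − Ty‖ ≤ ‖x − y‖, and firmly nonexpansive if ⟨Tx − Ty, x − y⟩ ≥ ‖Tx − Ty‖². It samples random pairs to illustrate that the metric projection onto a closed ball passes both tests, while the map x ↦ −x passes only the first. All function names are hypothetical, and a sampling test is an illustration, not a proof.

```python
import math
import random


def project_ball(x, radius=1.0):
    """Metric projection of x onto the closed Euclidean ball centred at 0."""
    norm = math.sqrt(sum(v * v for v in x))
    if norm <= radius:
        return list(x)
    return [radius * v / norm for v in x]


def negate(x):
    """The map x -> -x: nonexpansive, but not firmly nonexpansive."""
    return [-v for v in x]


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _sub(u, v):
    return [a - b for a, b in zip(u, v)]


def _random_pairs(dim, trials, seed):
    """Yield reproducible random pairs (x, y) in [-5, 5]^dim."""
    rng = random.Random(seed)
    for _ in range(trials):
        yield ([rng.uniform(-5, 5) for _ in range(dim)],
               [rng.uniform(-5, 5) for _ in range(dim)])


def looks_nonexpansive(T, dim=3, trials=2000, seed=0, tol=1e-9):
    """Sample test of ||Tx - Ty||^2 <= ||x - y||^2 (assumed definition)."""
    for x, y in _random_pairs(dim, trials, seed):
        d_img, d = _sub(T(x), T(y)), _sub(x, y)
        if _dot(d_img, d_img) > _dot(d, d) + tol:
            return False
    return True


def looks_firmly_nonexpansive(T, dim=3, trials=2000, seed=0, tol=1e-9):
    """Sample test of <Tx - Ty, x - y> >= ||Tx - Ty||^2 (assumed definition)."""
    for x, y in _random_pairs(dim, trials, seed):
        d_img, d = _sub(T(x), T(y)), _sub(x, y)
        if _dot(d_img, d) < _dot(d_img, d_img) - tol:
            return False
    return True
```

Calling `looks_firmly_nonexpansive(project_ball)` and `looks_firmly_nonexpansive(negate)` contrasts the two cases; the projection is the operator that the algorithms in the later sections are built from.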

- (i) for all ;

- (ii) for all ;

- (iii) for all .

- (i) For each , exists;

- (ii) .

- (i) ;

- (ii) ;

- (iii) implies for any subsequence of .

3. Weak Convergence Result

| Algorithm 1: The proposed algorithm for weak convergence. |

| Choose . Let be iteratively generated by |
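The iteration formulas of Algorithm 1 are missing from this copy, so the authors' three-step scheme cannot be reproduced here. For orientation only, the sketch below implements the classical CQ algorithm of Byrne (listed in the References), x_{k+1} = P_C(x_k − γ Aᵀ(I − P_Q)A x_k) with γ in (0, 2/‖A‖²), which is the baseline that projection methods for the split feasibility problem (find x in C with Ax in Q) build on. The sets, the matrix, the starting point, and every function name are hypothetical choices for a toy instance; the step size uses the Frobenius norm as a safe upper bound on the spectral norm.

```python
import math


def matvec(A, x):
    """Product of a matrix (given as a list of rows) with a vector."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def project_ball(x, radius=1.0):
    """Projection onto C = closed Euclidean ball (assumed example set)."""
    norm = math.sqrt(sum(v * v for v in x))
    return list(x) if norm <= radius else [radius * v / norm for v in x]


def project_box(y, lo, hi):
    """Projection onto Q = box [lo, hi] (assumed example set)."""
    return [min(max(v, l), h) for v, l, h in zip(y, lo, hi)]


def cq_algorithm(A, proj_C, proj_Q, x0, max_iter=10000, tol=1e-10):
    """Classical CQ iteration; returns (approximate solution, iterations)."""
    At = transpose(A)
    # ||A||_2 <= ||A||_F, so gamma = 1/||A||_F^2 lies in (0, 2/||A||_2^2).
    gamma = 1.0 / sum(a * a for row in A for a in row)
    x = list(x0)
    for k in range(1, max_iter + 1):
        Ax = matvec(A, x)
        residual = [a - q for a, q in zip(Ax, proj_Q(Ax))]  # (I - P_Q) A x
        grad = matvec(At, residual)                          # A^T (I - P_Q) A x
        x_new = proj_C([xi - gamma * g for xi, g in zip(x, grad)])
        step = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_new, x)))
        x = x_new
        if step < tol:
            return x, k
    return x, max_iter


# Toy instance (hypothetical data): C = unit ball, Q = [0.5, 1] x [0.5, 1].
A = [[2.0, 1.0], [1.0, 3.0]]
proj_Q = lambda y: project_box(y, [0.5, 0.5], [1.0, 1.0])
x_star, iters = cq_algorithm(A, project_ball, proj_Q, x0=[5.0, -3.0])
```

On this instance the problem is consistent (for example, x = (0.2, 0.2) is feasible), so the returned `x_star` should satisfy both constraints up to the stopping tolerance. A fixed γ requires a bound on ‖A‖; self-adaptive step sizes, as in López et al. (see References), avoid that requirement.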

4. Strong Convergence Result

| Algorithm 2: The proposed algorithm for strong convergence. |

| Choose . Assume , and have been constructed. Compute the sequence by |
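The update rule of Algorithm 2 is also missing from this copy. As a rough illustration of how strong convergence is usually obtained in this setting, the sketch below combines a Halpern-type anchor with the self-adaptive step size τ_n = ρ f(x_n)/‖∇f(x_n)‖², where f(x) = ½‖(I − P_Q)Ax‖², in the spirit of López et al. (see References). It is not the authors' Algorithm 2. The anchor `u` (assumed to lie in C), the choices ρ = 1 and α_n = 1/(n + 1), the toy data, and all function names are assumptions made for this example.

```python
import math


def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def project_ball(x, radius=1.0):
    """Projection onto C = closed Euclidean ball (assumed example set)."""
    norm = math.sqrt(sum(v * v for v in x))
    return list(x) if norm <= radius else [radius * v / norm for v in x]


def project_box(y, lo, hi):
    """Projection onto Q = box [lo, hi] (assumed example set)."""
    return [min(max(v, l), h) for v, l, h in zip(y, lo, hi)]


def halpern_self_adaptive(A, proj_C, proj_Q, u, x0, rho=1.0, max_iter=5000):
    """Halpern-type iteration with a norm-free step size (rho in (0, 4))."""
    At = transpose(A)
    x = list(x0)
    for n in range(1, max_iter + 1):
        Ax = matvec(A, x)
        r = [a - q for a, q in zip(Ax, proj_Q(Ax))]  # (I - P_Q) A x
        f = 0.5 * sum(v * v for v in r)               # f(x) = 0.5 * ||r||^2
        grad = matvec(At, r)                          # gradient of f at x
        g2 = sum(g * g for g in grad)
        if g2 == 0.0:
            y = proj_C(x)                             # no gradient step needed
        else:
            tau = rho * f / g2                        # self-adaptive step size
            y = proj_C([xi - tau * g for xi, g in zip(x, grad)])
        alpha = 1.0 / (n + 1)                         # alpha_n -> 0, sum = inf
        x = [alpha * ui + (1.0 - alpha) * yi for ui, yi in zip(u, y)]
    return x


# Toy instance (hypothetical data): C = unit ball, Q = [0.5, 1] x [0.5, 1].
A = [[2.0, 1.0], [1.0, 3.0]]
proj_Q = lambda y: project_box(y, [0.5, 0.5], [1.0, 1.0])
x_lim = halpern_self_adaptive(A, project_ball, proj_Q,
                              u=[0.0, 0.0], x0=[5.0, -3.0])
```

With the anchor at the origin, iterations of this type are expected to approach the feasible point closest to `u`, which for this toy instance is (0.2, 0.1). The step size needs no estimate of ‖A‖, at the price of the slow decay imposed by α_n.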

- (a) and ;

- (b)

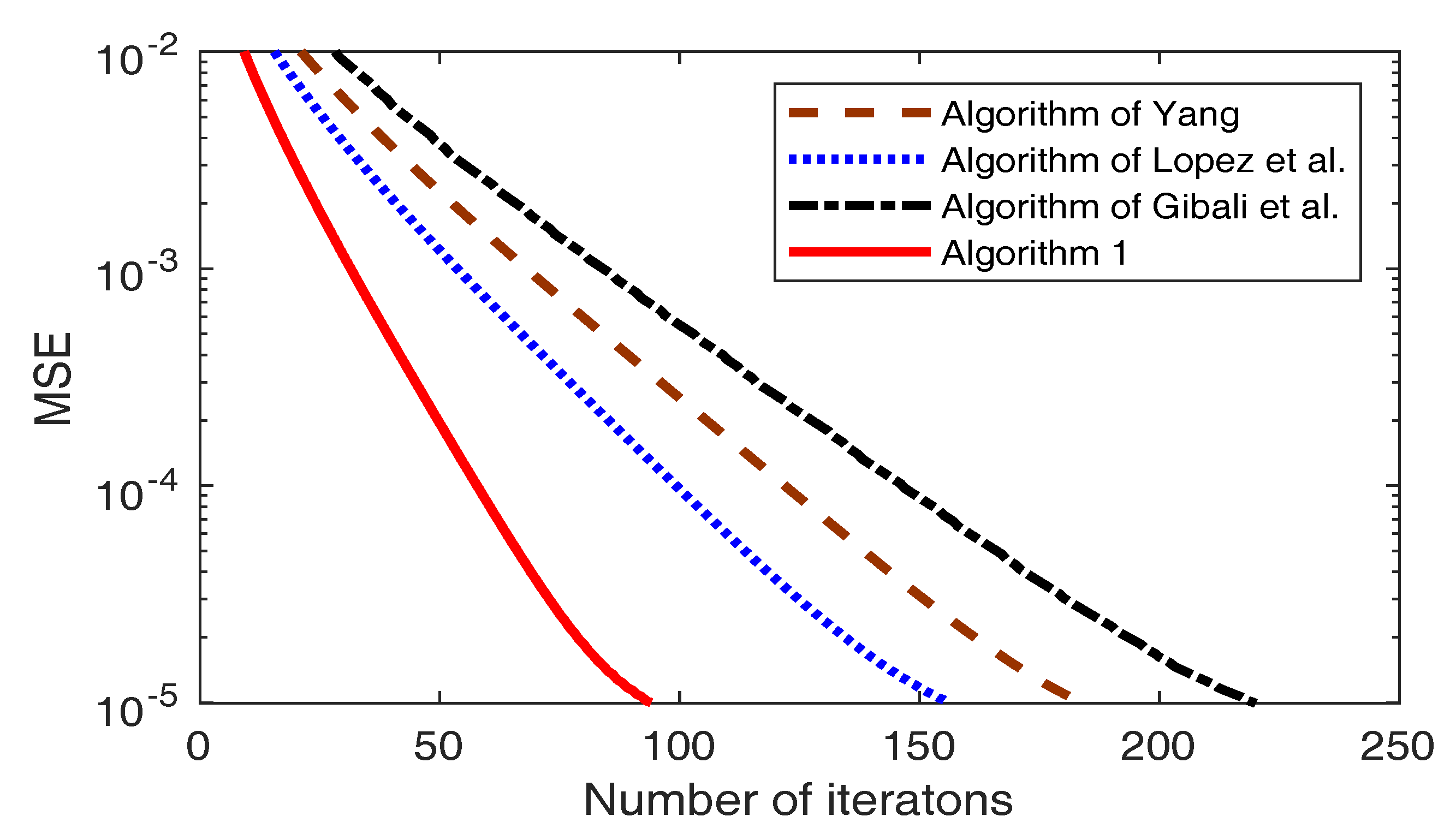

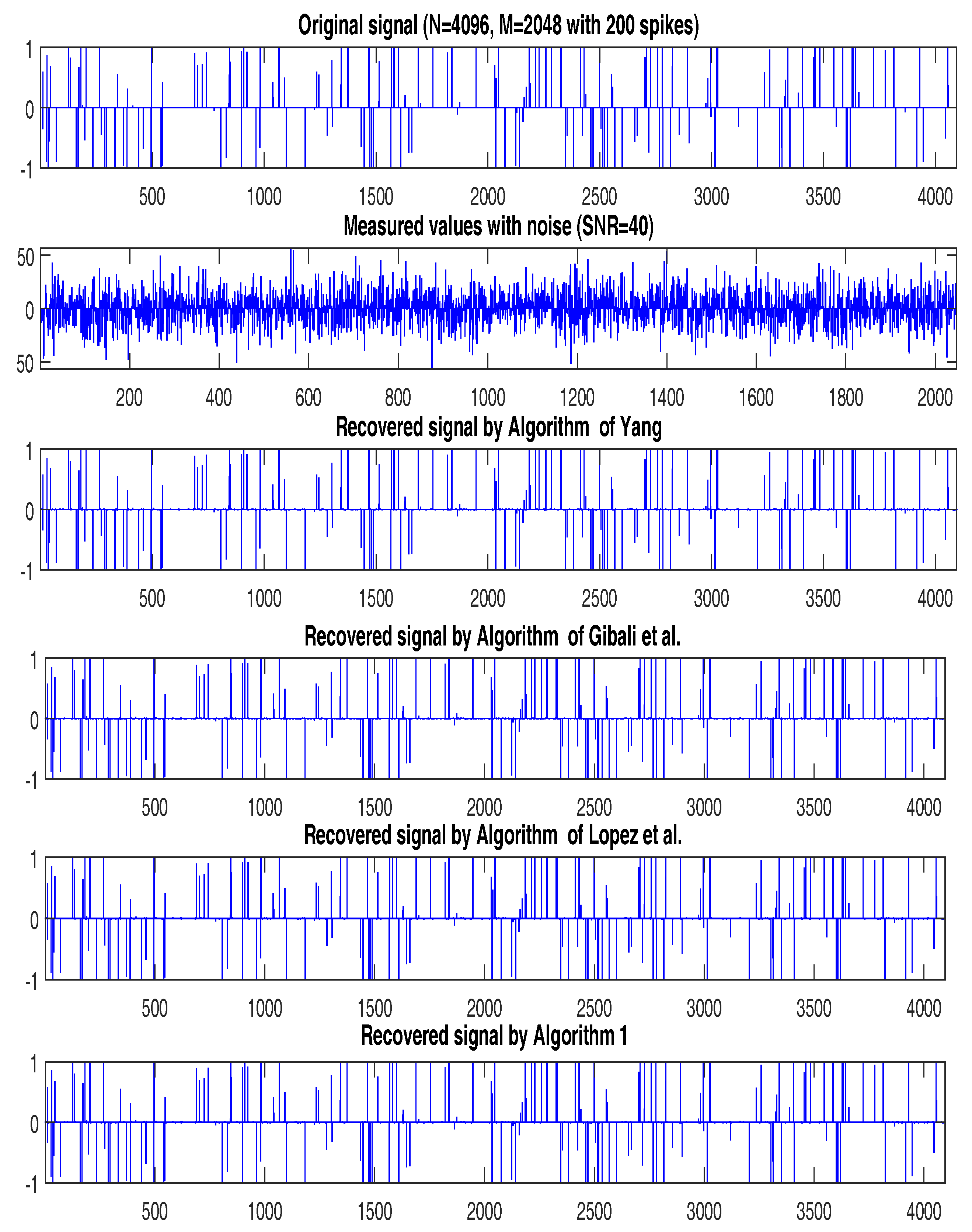

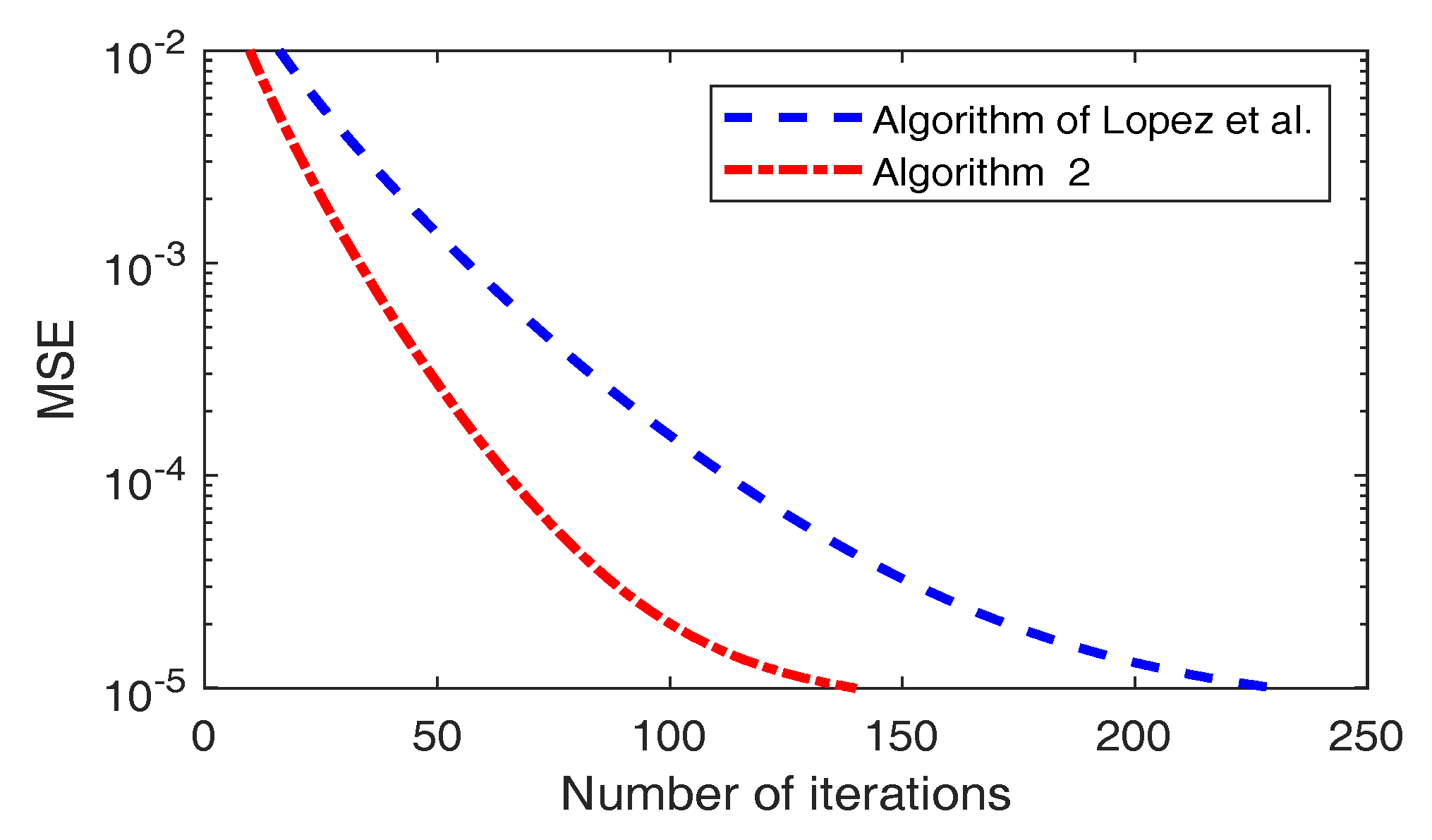

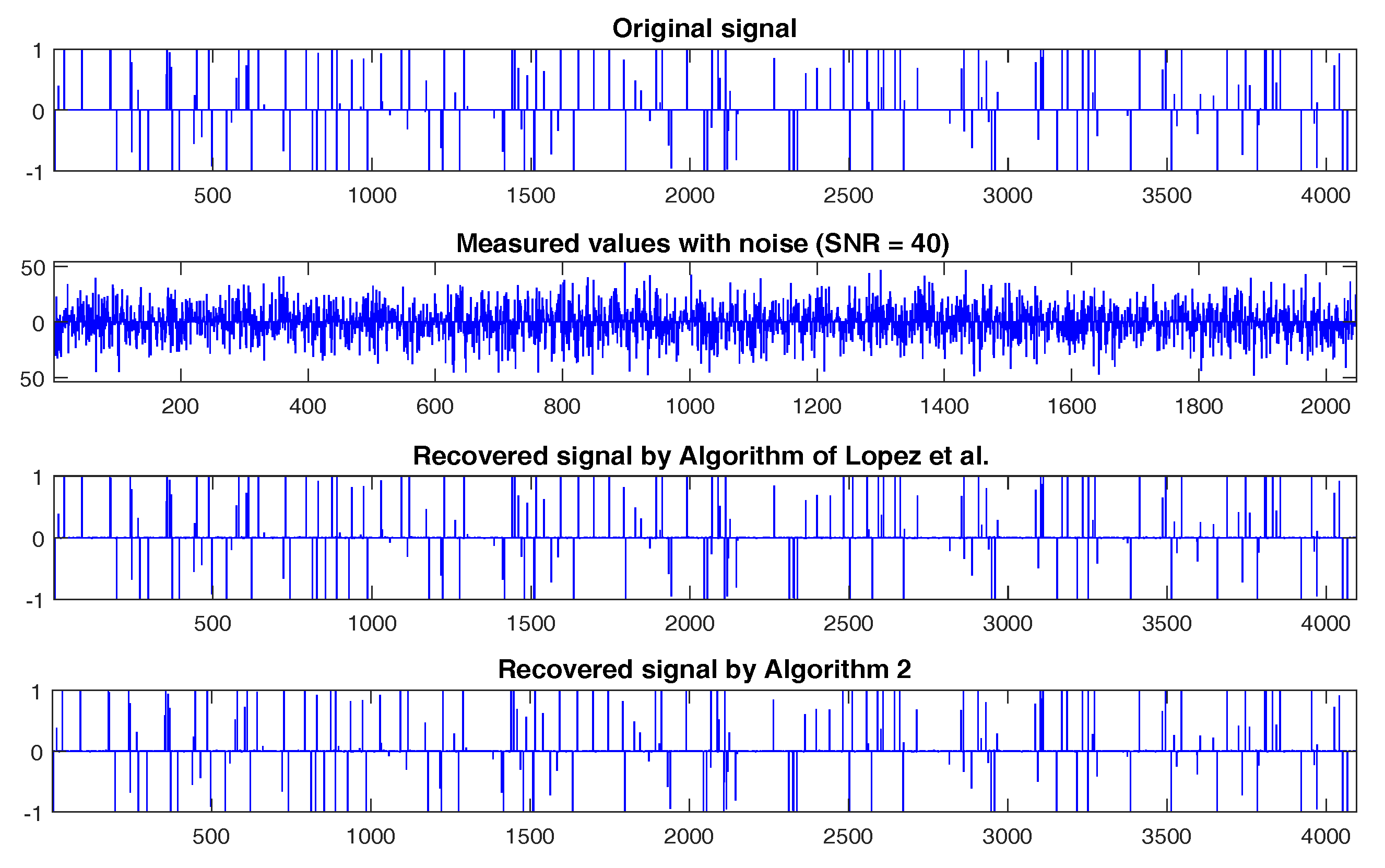

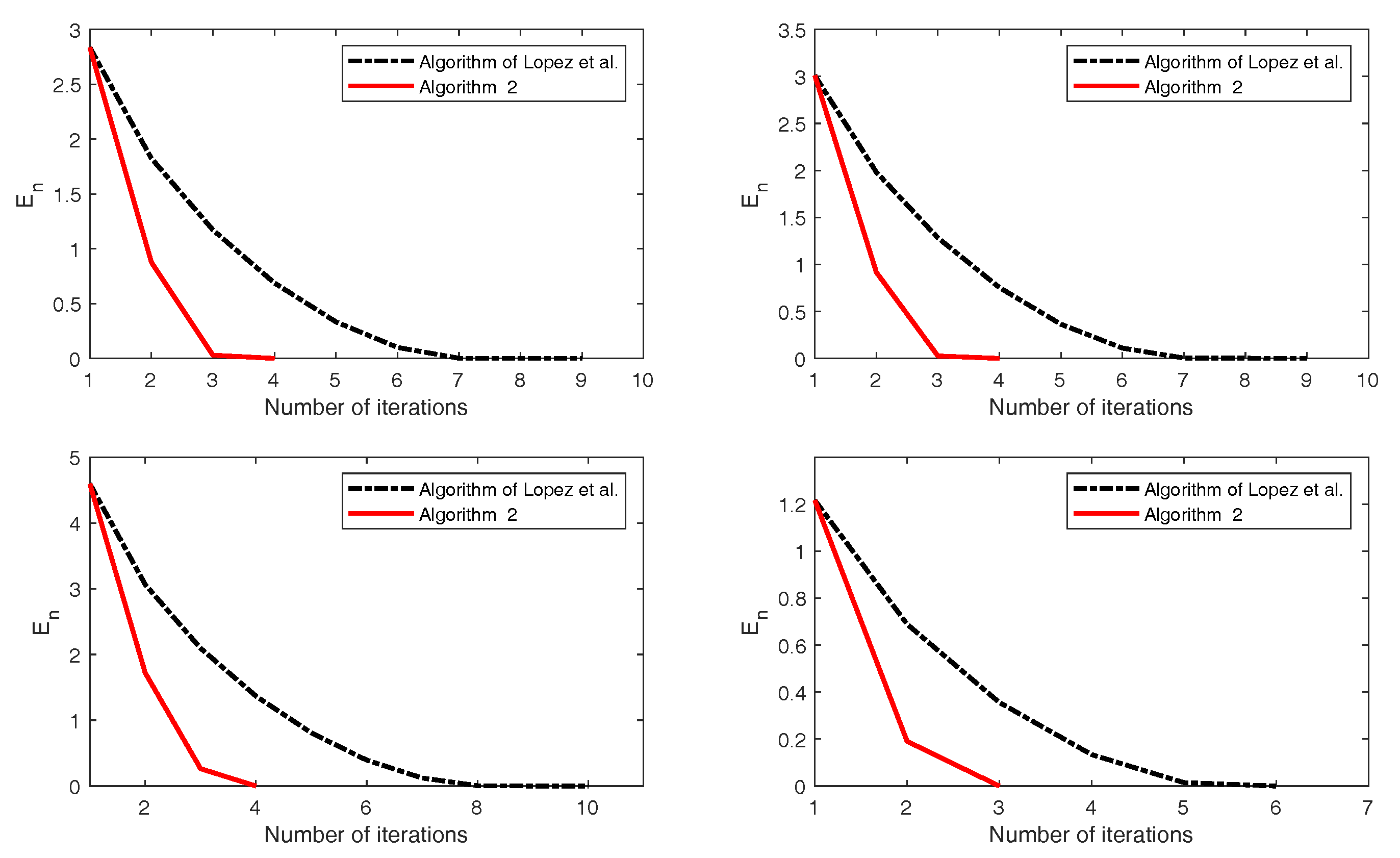

5. Numerical Examples

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Wang, F.; Xu, H.K. Approximating curve and strong convergence of the CQ algorithm for the split feasibility problem. J. Inequal. Appl. 2010, 2010, 102085.

- Xu, H.K. Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 2010, 26, 105018.

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323.

- Gibali, A. A new split inverse problem and application to least intensity feasible solutions. Pure Appl. Funct. Anal. 2017, 2, 243–258.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120.

- Yang, Q. The relaxed CQ algorithm for solving the split feasibility problem. Inverse Probl. 2004, 20, 1261–1266.

- Fukushima, M. A relaxed projection method for variational inequalities. Math. Program. 1986, 35, 58–70.

- López, G.; Martín-Márquez, V.; Wang, F.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004.

- Qu, B.; Xiu, N. A note on the CQ algorithm for the split feasibility problem. Inverse Probl. 2005, 21, 1655–1665.

- Gibali, A.; Liu, L.W.; Tang, Y.C. Note on the modified relaxation CQ algorithm for the split feasibility problem. Optim. Lett. 2017, 12, 1–14.

- Ćirić, L.; Rafiq, A.; Radenović, S.; Rajović, M.; Ume, J.S. On Mann implicit iterations for strongly accretive and strongly pseudo-contractive mappings. Appl. Math. Comput. 2008, 198, 128–137.

- Ćirić, L. Some Recent Results in Metrical Fixed Point Theory; University of Belgrade: Belgrade, Serbia, 2003.

- Dang, Y.; Gao, Y. The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 2011, 27, 015007.

- Yang, Q. On variable-step relaxed projection algorithm for variational inequalities. J. Math. Anal. Appl. 2005, 302, 166–179.

- Zhao, J.; Zhang, Y.; Yang, Q. Modified projection methods for the split feasibility problem and multiple-sets feasibility problem. Appl. Math. Comput. 2012, 219, 1644–1653.

- Bnouhachem, A.; Noor, M.A. Three-step projection method for general variational inequalities. Int. J. Mod. Phys. B 2012, 26, 1250066.

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. Efficient three-step iterative methods with sixth order convergence for nonlinear equations. Numer. Algorithms 2010, 53, 485–495.

- Noor, M.A.; Noor, K.I. Three-step iterative methods for nonlinear equations. Appl. Math. Comput. 2006, 183, 322–327.

- Noor, M.A.; Yao, Y. Three-step iterations for variational inequalities and nonexpansive mappings. Appl. Math. Comput. 2007, 190, 1312–1321.

- Phuengrattana, W.; Suantai, S. On the rate of convergence of Mann, Ishikawa, Noor and SP-iterations for continuous functions on an arbitrary interval. J. Comput. Appl. Math. 2011, 235, 3006–3014.

- Rafiq, A.; Hussain, S.; Ahmad, F.; Awais, M.; Zafar, F. An efficient three-step iterative method with sixth-order convergence for solving nonlinear equations. Int. J. Comput. Math. 2007, 84, 369–375.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: London, UK, 2011.

- Bauschke, H.H.; Combettes, P.L. A weak-to-strong convergence principle for Fejér-monotone methods in Hilbert spaces. Math. Oper. Res. 2001, 26, 248–264.

- He, S.; Yang, C. Solving the variational inequality problem defined on intersection of finite level sets. Abstr. Appl. Anal. 2013.

| Case 1: , | Yang (10) | López et al. (11) | Gibali et al. (13) | Algorithm 1 |
|---|---|---|---|---|
| | 74 | 65 | 106 | 39 |
| | 217 | 184 | 246 | 111 |

| Case 2: , | Yang (10) | López et al. (11) | Gibali et al. (13) | Algorithm 1 |
|---|---|---|---|---|
| | 87 | 77 | 117 | 48 |
| | 184 | 156 | 220 | 94 |

| Case 1: , | López et al. (12) | Algorithm 2 |
|---|---|---|
| | 85 | 43 |
| | 119 | 64 |

| Case 2: , | López et al. (12) | Algorithm 2 |
|---|---|---|
| | 85 | 48 |
| | 230 | 140 |

| | | López et al. (12) | Algorithm 2 |
|---|---|---|---|
| | No. of Iter. | 9 | 4 |
| | CPU time | 6.3707 | 4.0171 |
| | No. of Iter. | 9 | 4 |
| | CPU time | 6.5169 | 4.1789 |
| | No. of Iter. | 10 | 4 |
| | CPU time | 9.4818 | 5.5274 |
| | No. of Iter. | 6 | 3 |
| | CPU time | 3.7478 | 2.9404 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Suantai, S.; Eiamniran, N.; Pholasa, N.; Cholamjiak, P. Three-Step Projective Methods for Solving the Split Feasibility Problems. Mathematics 2019, 7, 712. https://doi.org/10.3390/math7080712
