A Meta-Model-Based Multi-Objective Evolutionary Approach to Robust Job Shop Scheduling

Abstract

1. Introduction

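- Schedule robustness, defined as the expected makespan delay (EMD), is optimized jointly with the makespan, and a meta-model of the EMD is built with a data-driven response surface method.

- A meta-model-based MOEA is proposed that estimates the EMD with this meta-model instead of running Monte Carlo simulation for every individual, which improves computational efficiency while retaining good optimization performance.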

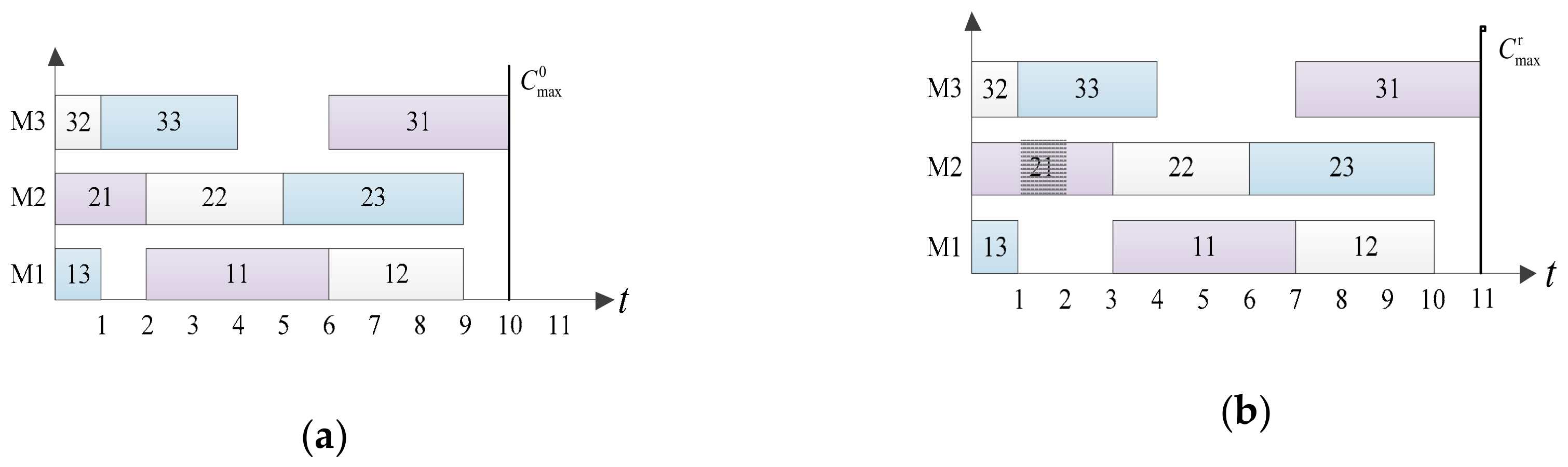

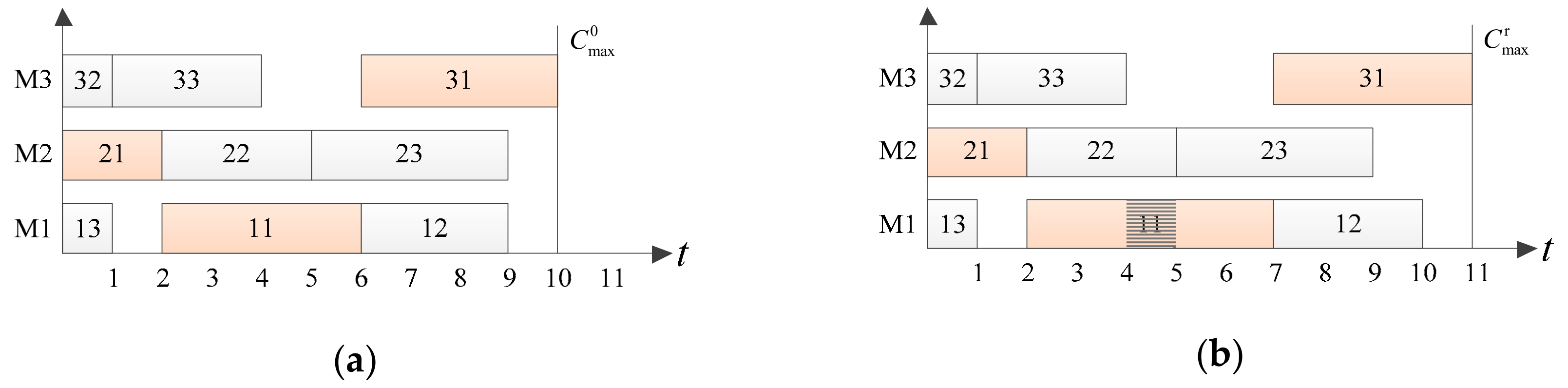

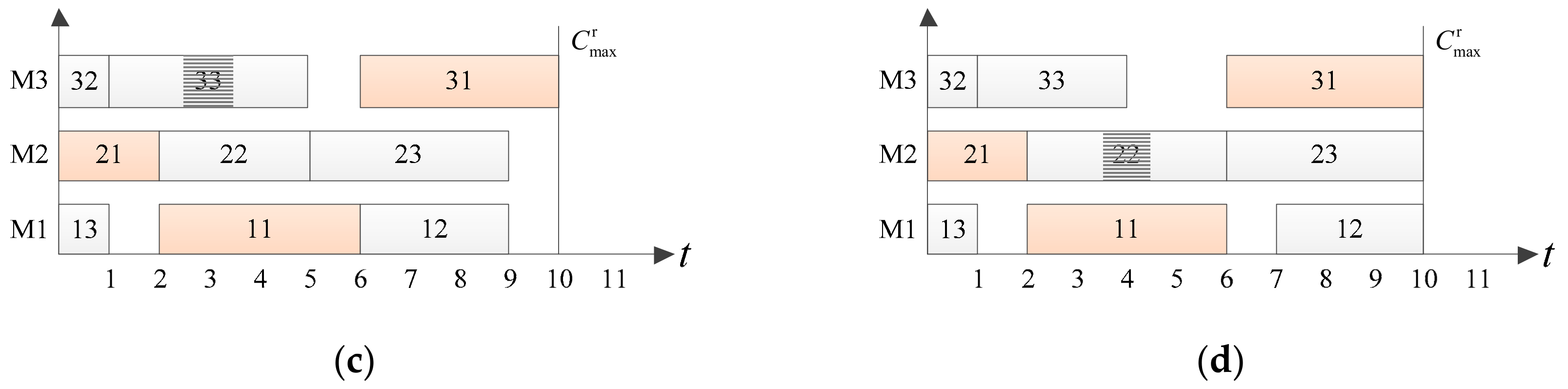

2. Problem Definition
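The Notations list names a makespan before execution, an actual makespan, a makespan delay, the expected makespan delay (EMD) and a Monte Carlo approximation of the EMD. Read together, these suggest the relations below. The symbols are illustrative labels, not necessarily the paper's own: $\pi$ is a schedule, $\xi$ a random machine breakdown scenario, $C_{\max}(\pi)$ the makespan before execution, $\tilde{C}_{\max}(\pi,\xi)$ the actual makespan under $\xi$, and $N_s$ the number of sampled scenarios (as in Algorithm 1). The paper's Equation (17) may differ in detail.

```latex
\Delta(\pi,\xi) = \tilde{C}_{\max}(\pi,\xi) - C_{\max}(\pi), \qquad
\mathrm{EMD}(\pi) = \mathbb{E}_{\xi}\!\left[\Delta(\pi,\xi)\right]
\approx \frac{1}{N_s}\sum_{s=1}^{N_s} \Delta(\pi,\xi_s)
```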

3. The Meta-Model Based MOEA

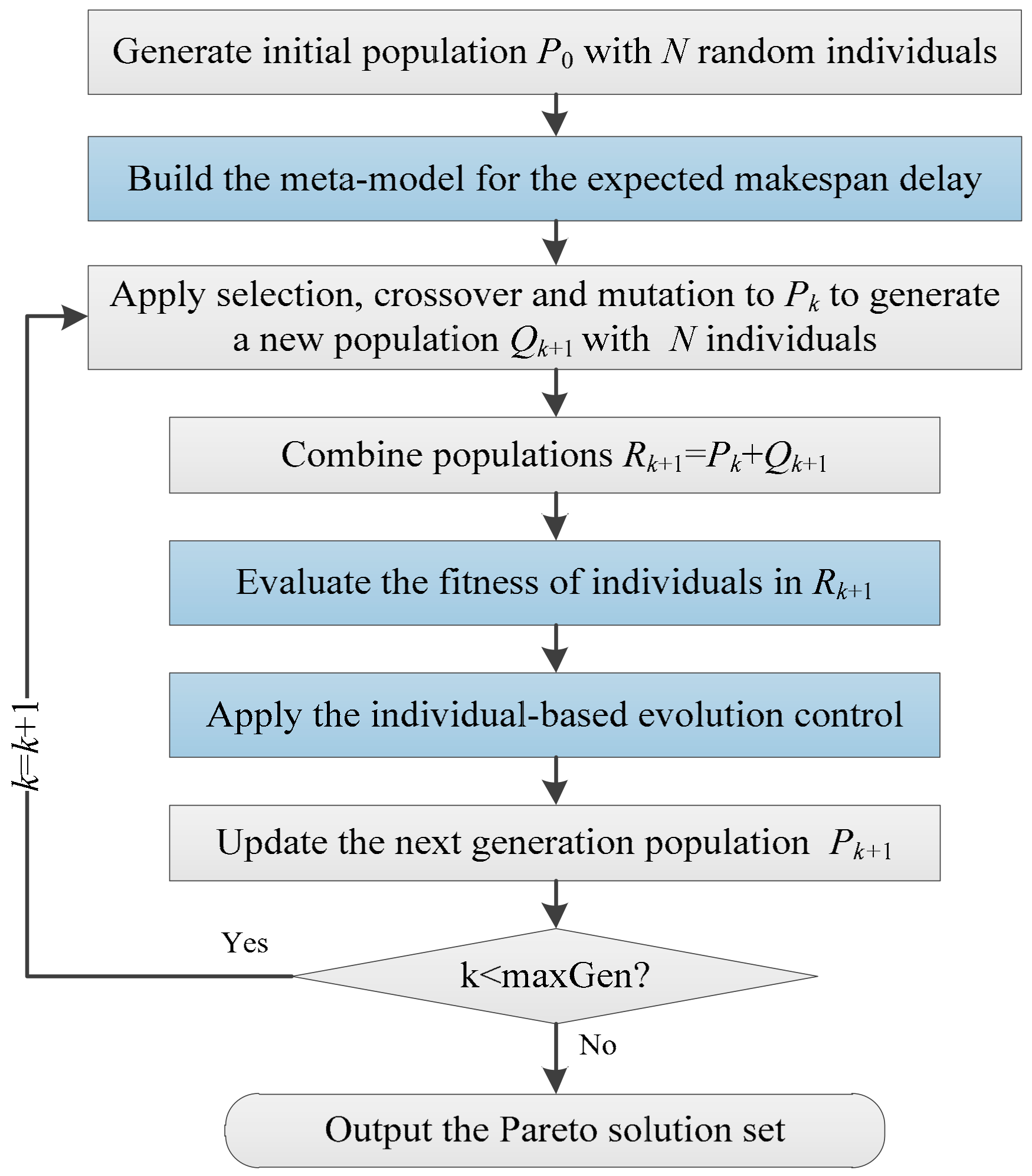

3.1. Framework of The Algorithm
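The framework evolves a combined population $R_{k+1}$, and steps 2 and 3 of Algorithm 3 (Section 3.4) rank it by fast non-dominated sorting, the procedure introduced with NSGA-II, before ordering it lexicographically by rank, makespan and EMD. The sketch below is a minimal illustration assuming both objectives are minimized and each individual is represented only by its (makespan, EMD) pair; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Objectives = Tuple[float, float]  # (makespan, EMD), both minimized


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objs: Sequence[Objectives]) -> List[int]:
    """Return the Pareto rank (1 = first front) of every individual."""
    n = len(objs)
    dominated: List[List[int]] = [[] for _ in range(n)]  # individuals that p dominates
    count = [0] * n  # number of individuals that dominate p
    rank = [0] * n
    front = []
    for p in range(n):
        for q in range(n):
            if dominates(objs[p], objs[q]):
                dominated[p].append(q)
            elif dominates(objs[q], objs[p]):
                count[p] += 1
        if count[p] == 0:
            rank[p] = 1
            front.append(p)
    current = 1
    while front:
        next_front = []
        for p in front:
            for q in dominated[p]:
                count[q] -= 1
                if count[q] == 0:  # every dominator of q lies in an earlier front
                    rank[q] = current + 1
                    next_front.append(q)
        current += 1
        front = next_front
    return rank


def lexicographic_order(objs: Sequence[Objectives]) -> List[int]:
    """Indices sorted in ascending order of (rank, makespan, EMD)."""
    rank = fast_non_dominated_sort(objs)
    return sorted(range(len(objs)), key=lambda i: (rank[i], objs[i][0], objs[i][1]))
```

For example, with objective pairs (10, 2.0), (12, 1.0), (11, 3.0), (13, 2.5) and (10, 2.0), the ranks are [1, 1, 2, 2, 1] and the lexicographic order is [0, 4, 1, 2, 3]. The pairwise comparison costs O(N²) per objective pair, as in the original fast non-dominated sorting.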

3.2. Meta-Model of The EMD

| Algorithm 1. The pseudo-code to finalize the meta-model. |
|---|
| **Inputs:** the initial population $P_0$ and the input vector $x$ |
| **Outputs:** the meta-model with the determined coefficients |
| 1: Initialize the training data set as empty; |
| 2: Generate $N_s$ machine breakdown scenarios with the machine failure rate and the expected downtime; |
| 3: **for** each individual $i$ in $P_0$ **do** |
| 4: Generate the schedule based on the $i$th individual in $P_0$; |
| 5: Determine the makespan of the schedule by Equation (3); |
| 6: Calculate the free slack time and the total slack time of each operation in the schedule; |
| 7: Calculate the sums of processing times over the sets of operations without and with slack time; |
| 8: Calculate the average free slack time and the average total slack time; |
| 9: Determine the values of the input vector $x$; |
| 10: Determine the EMD by Equation (17); |
| 11: Generate the $i$th data instance; |
| 12: Add the instance to the training data set; |
| 13: **end for** |
| 14: Based on the training data set, apply multiple linear regression to determine the coefficients of the meta-model in Equation (16); |
| 15: Return the finalized meta-model. |
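Step 14 of Algorithm 1 fits the meta-model of Equation (16) by multiple linear regression. Equation (16) is not reproduced here, so the sketch below assumes a model that is linear in its coefficients, EMD ≈ b0 + Σ b_j x_j, and solves the least-squares normal equations in pure Python. The names `fit_linear_meta_model` and `predict` are hypothetical.

```python
from typing import List, Sequence, Tuple

Instance = Tuple[Sequence[float], float]  # (input vector x, EMD label)


def fit_linear_meta_model(data: Sequence[Instance]) -> List[float]:
    """Least-squares coefficients [b0, b1, ..., bd] of EMD ~ b0 + sum_j b_j * x_j."""
    rows = [[1.0, *x] for x, _ in data]  # design matrix with an intercept column
    y = [label for _, label in data]
    k = len(rows[0])
    # Augmented normal equations [X^T X | X^T y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(k)]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("singular system: too few instances or collinear inputs")
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, k):
            f = a[r][col] / a[col][col]
            for c in range(col, k + 1):
                a[r][c] -= f * a[col][c]
    # Back substitution.
    coef = [0.0] * k
    for i in range(k - 1, -1, -1):
        coef[i] = (a[i][k] - sum(a[i][j] * coef[j] for j in range(i + 1, k))) / a[i][i]
    return coef


def predict(coef: Sequence[float], x: Sequence[float]) -> float:
    """Evaluate the fitted linear meta-model at input vector x."""
    return coef[0] + sum(b * v for b, v in zip(coef[1:], x))
```

A response surface with squared or cross terms can be fitted the same way by appending those terms to each input vector before calling `fit_linear_meta_model`. For large or ill-conditioned training sets, a QR- or SVD-based least-squares solver is numerically safer than the normal equations.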

3.3. Fitness Evaluation

| Algorithm 2. Fitness evaluation for the combined population |
|---|
| **Inputs:** the combined population $R_{k+1}$ |
| **Outputs:** the fitness set of the individuals in $R_{k+1}$ |
| 1: Initialize the fitness set as empty; |
| 2: **for** each individual $i$ in $R_{k+1}$ **do** |
| 3: Select the $i$th individual from the population $R_{k+1}$; |
| 4: Generate the schedule based on the individual; |
| 5: Evaluate the makespan of the schedule by Equation (3); |
| 6: Get the machine failure rate and the expected downtime; |
| 7: Calculate the free slack time and the total slack time of each operation in the schedule; |
| 8: Calculate the sums of processing times over the sets of operations without and with slack time; |
| 9: Calculate the average free slack time and the average total slack time; |
| 10: Determine the values of the input vector $x$; |
| 11: Evaluate the EMD by the meta-model in Equation (16) with the values of $x$; |
| 12: Update the fitness set with the fitness of the $i$th individual; |
| 13: **end for** |
| 14: Return the fitness set. |
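Steps 7 to 11 of Algorithm 2 turn a schedule into the input vector of the meta-model. The sketch below assumes standard definitions: the total slack of an operation is how long it can be delayed without increasing the makespan, and its free slack is how long it can be delayed without delaying its job or machine successor (or, for an operation with no successor, the makespan). It also assumes that "with slack time" means positive total slack. The `Op` record, the order of entries in `x` and the linear form of `meta_model_emd` are hypothetical stand-ins for the paper's definitions and Equation (16).

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Op:
    """One scheduled operation (hypothetical representation)."""
    job: int
    machine: int
    start: float
    proc: float

    @property
    def end(self) -> float:
        return self.start + self.proc


def successors(ops: Sequence[Op]) -> Dict[int, List[int]]:
    """Immediate job successor and machine successor of each operation, by start time."""
    succ: Dict[int, List[int]] = {i: [] for i in range(len(ops))}
    for key in ("job", "machine"):
        groups: Dict[int, List[int]] = {}
        for i, op in enumerate(ops):
            groups.setdefault(getattr(op, key), []).append(i)
        for idx in groups.values():
            idx.sort(key=lambda i: ops[i].start)
            for a, b in zip(idx, idx[1:]):
                succ[a].append(b)
    return succ


def slacks(ops: Sequence[Op]) -> Tuple[float, List[float], List[float]]:
    """Makespan, free slack and total slack of every operation (assumes proc > 0)."""
    succ = successors(ops)
    cmax = max(op.end for op in ops)
    latest_start: Dict[int, float] = {}
    free = [0.0] * len(ops)
    total = [0.0] * len(ops)
    # Successors start later, so a reverse pass by start time visits them first.
    for i in sorted(range(len(ops)), key=lambda i: ops[i].start, reverse=True):
        latest_end = min([cmax] + [latest_start[j] for j in succ[i]])
        latest_start[i] = latest_end - ops[i].proc
        total[i] = latest_start[i] - ops[i].start  # delay tolerated without raising makespan
        # Delay tolerated without pushing back any successor (or the makespan).
        free[i] = min([cmax] + [ops[j].start for j in succ[i]]) - ops[i].end
    return cmax, free, total


def input_vector(ops: Sequence[Op], failure_rate: float, mean_downtime: float,
                 tol: float = 1e-9) -> Tuple[float, List[float]]:
    """Makespan and a hypothetical input vector x for the meta-model."""
    cmax, free, total = slacks(ops)
    slack = [i for i in range(len(ops)) if total[i] > tol]
    tight = [i for i in range(len(ops)) if total[i] <= tol]
    n = len(slack)
    x = [failure_rate, mean_downtime,
         sum(ops[i].proc for i in tight),   # processing time without slack
         sum(ops[i].proc for i in slack),   # processing time with slack
         sum(free[i] for i in slack) / n if n else 0.0,
         sum(total[i] for i in slack) / n if n else 0.0]
    return cmax, x


def meta_model_emd(coef: Sequence[float], x: Sequence[float]) -> float:
    """Hypothetical linear meta-model: b0 + sum_j b_j * x_j."""
    return coef[0] + sum(b * v for b, v in zip(coef[1:], x))
```

For the two-job, two-machine schedule `[Op(0, 0, 0, 3), Op(0, 1, 3, 2), Op(1, 1, 0, 2), Op(1, 0, 3, 4)]`, the makespan is 7, the total slacks are [0, 2, 1, 0], and with failure rate 0.01 and expected downtime 5 the input vector is [0.01, 5.0, 7, 4, 1.5, 1.5].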

3.4. Individual-Based Evolution Control

| Algorithm 3. Individual-based evolution control framework |
|---|
| **Inputs:** the fitness set of the combined population $R_{k+1}$ |
| **Outputs:** the modified fitness set |
| 1: Generate $N_s$ scenarios with the machine failure rate and the expected downtime; |
| 2: Rank the fitness set by fast non-dominated sorting; |
| 3: Sort the fitness set in ascending lexicographic order of rank, makespan and EMD; |
| 4: Set …; |
| 5: **for** … **to** … **do** |
| 6: **if** … **then** |
| 7: Break; |
| 8: **else if** … **and** … **then** |
| 9: Update the fitness by …; |
| 10: **else** |
| 11: Generate the schedule of the individual in $R_{k+1}$ associated with …; |
| 12: Evaluate the EMD by the Monte Carlo method in Equation (17); |
| 13: Update the fitness by …; |
| 14: **end if** |
| 15: **end for** |
| 16: Return the modified fitness set. |
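Step 12 of Algorithm 3 (like step 10 of Algorithm 1) evaluates the EMD by Monte Carlo simulation. The paper's breakdown model and repair policy are not reproduced here, so the sketch below makes its assumptions explicit: a breakdown hits an operation with probability 1 − exp(−λp), where λ is the machine failure rate and p the processing time; the downtime is exponentially distributed with the expected downtime as its mean; and the disrupted schedule is repaired by right-shifting (job and machine sequences kept, no operation starting earlier than planned). The `Op` record and function names are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class Op:
    job: int
    machine: int
    start: float  # planned start time
    proc: float   # planned processing time


def realized_makespan(ops: Sequence[Op], failure_rate: float, mean_downtime: float,
                      rng: random.Random) -> float:
    """Simulate one breakdown scenario and repair the schedule by right-shifting."""
    job_ready: Dict[int, float] = {}
    machine_ready: Dict[int, float] = {}
    cmax = 0.0
    # Visiting operations in planned start order preserves every job and machine sequence.
    for op in sorted(ops, key=lambda o: o.start):
        start = max(op.start, job_ready.get(op.job, 0.0), machine_ready.get(op.machine, 0.0))
        duration = op.proc
        # Assumed model: exponential time to failure, so P(breakdown) = 1 - exp(-rate * proc).
        if rng.random() < 1.0 - math.exp(-failure_rate * op.proc):
            duration += rng.expovariate(1.0 / mean_downtime)  # assumed exponential downtime
        end = start + duration
        job_ready[op.job] = end
        machine_ready[op.machine] = end
        cmax = max(cmax, end)
    return cmax


def monte_carlo_emd(ops: Sequence[Op], failure_rate: float, mean_downtime: float,
                    n_scenarios: int = 1000, seed: int = 0) -> float:
    """Average makespan delay over n_scenarios sampled breakdown scenarios."""
    rng = random.Random(seed)
    planned = max(op.start + op.proc for op in ops)
    total_delay = sum(realized_makespan(ops, failure_rate, mean_downtime, rng) - planned
                      for _ in range(n_scenarios))
    return total_delay / n_scenarios
```

Because right-shifting never moves an operation earlier, every sampled delay is non-negative, and with λ = 0 the estimate is exactly 0. Fixing `seed` makes runs reproducible, which helps when comparing meta-model predictions against Monte Carlo estimates.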

3.5. Evolutionary Operators

4. Experimental Analysis

4.1. Experiment Setting

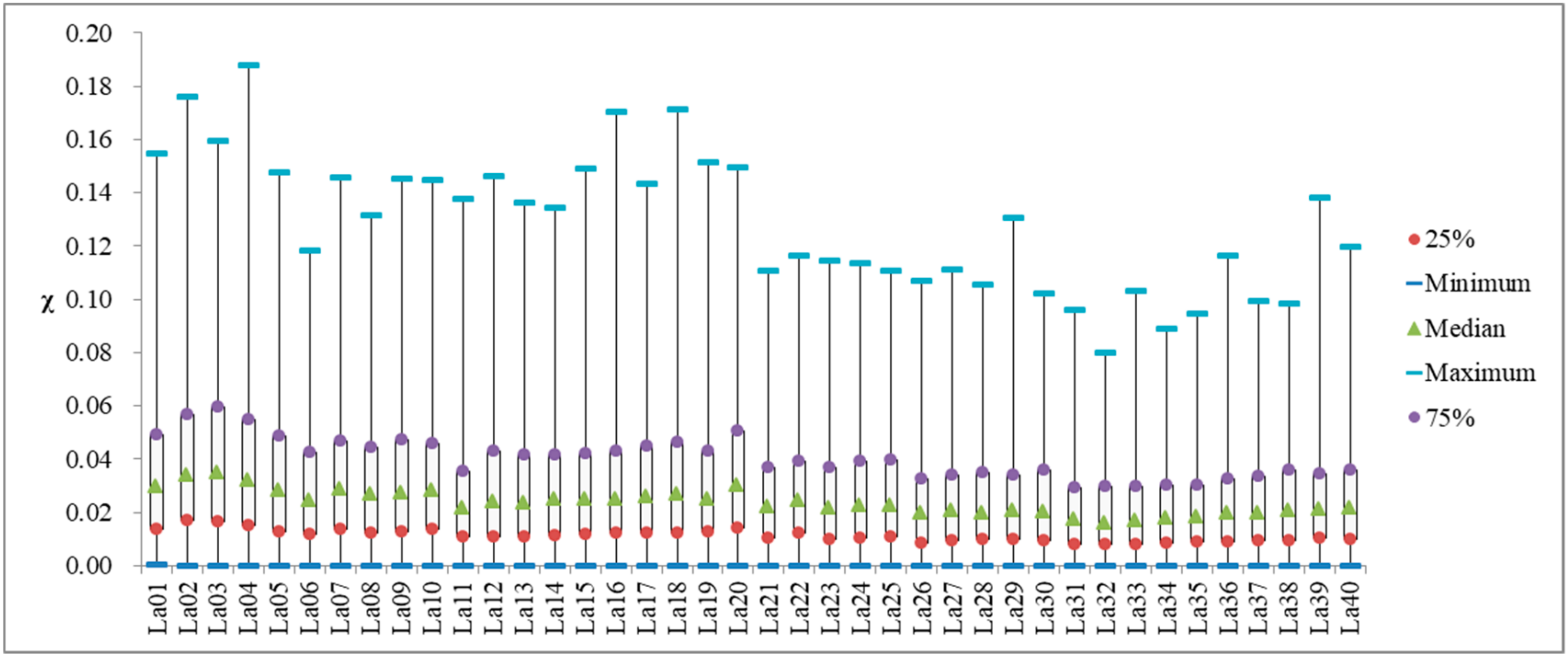

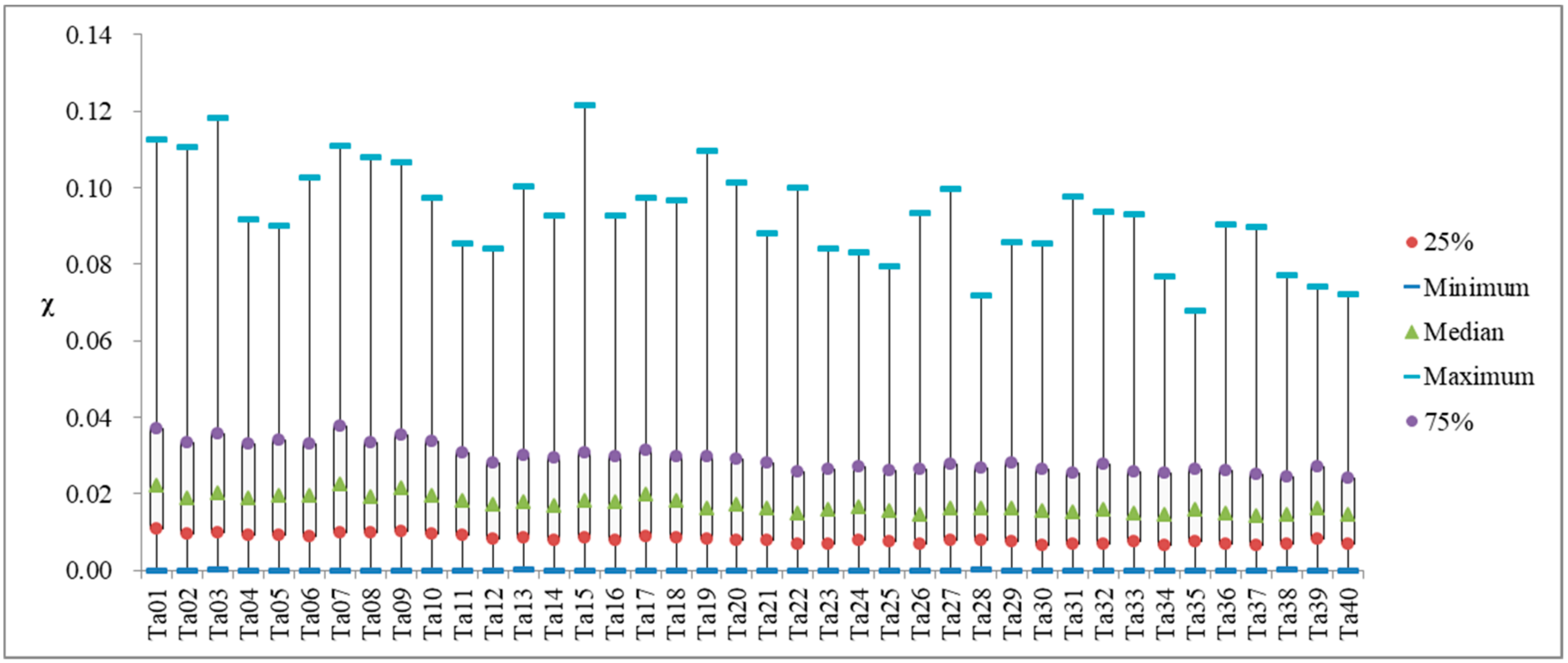

4.2. Evaluation Performance of The Proposed Meta-Model

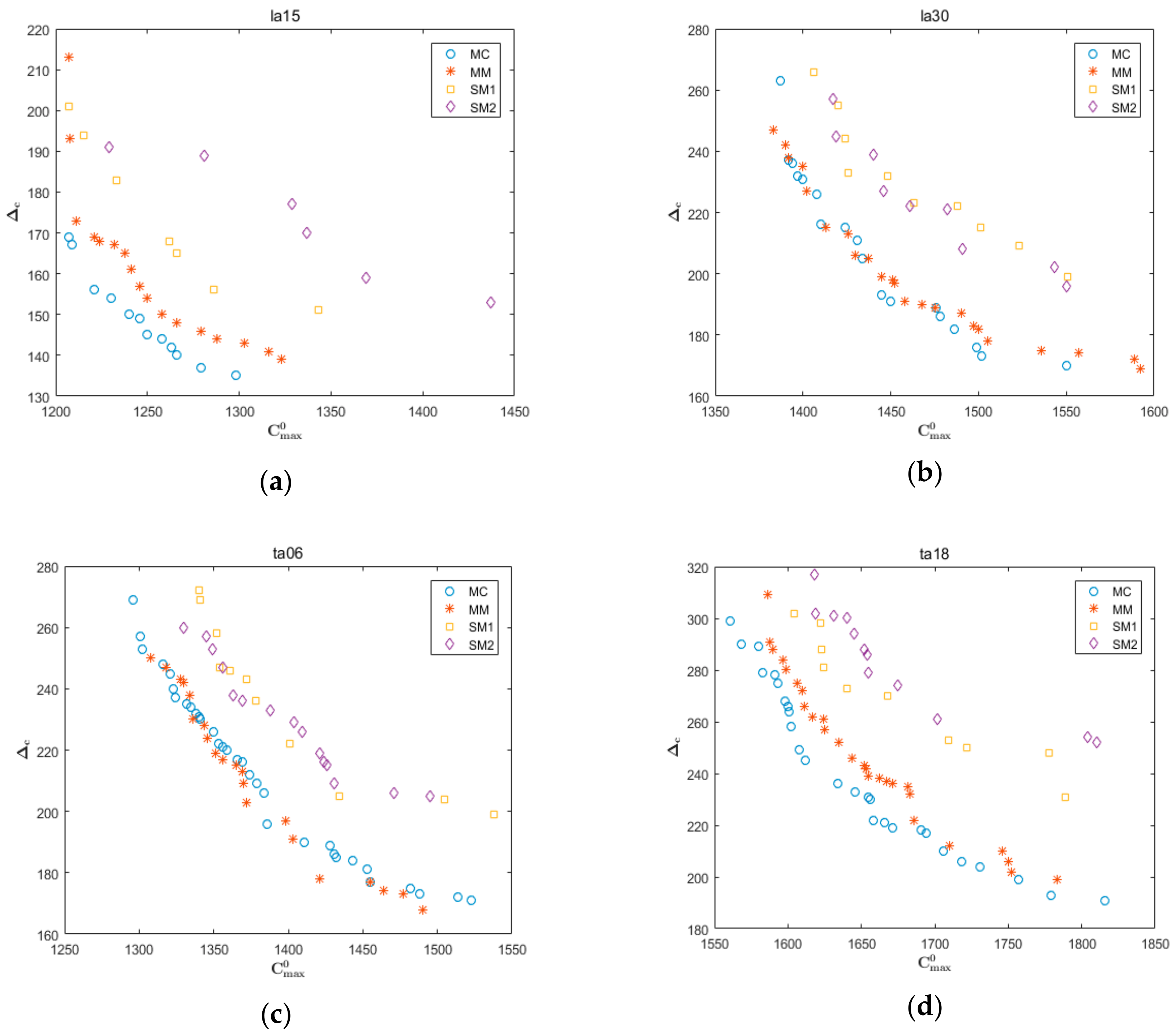

4.3. Optimization Performance of The Proposed Algorithm

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Notations:

| Symbol | Description | Symbol | Description |
|---|---|---|---|
| JSS | Job shop scheduling problem | RMD | Random machine breakdowns |
| EMD | Expected makespan delay | MOEA | Multi-objective evolutionary algorithm |
| n | Number of jobs | m | Number of machines |
|  | Job j, j = 1, 2, …, n |  | Machine i, i = 1, 2, …, m |
|  | Set of jobs |  | Set of machines |
|  | Operation of job j on machine i |  | Set of operations of job j |
|  | Processing time of an operation |  | Starting time of an operation |
|  | Completion time of an operation |  | Free slack time of an operation |
|  | Total slack time of an operation |  | Machine breakdown probability of … |
|  | Downtime when processing … |  | Makespan of a schedule before execution |
|  | Actual makespan of a schedule |  | Makespan delay of a schedule |
|  | Expression of the expected makespan delay |  | Meta-model of the EMD |
|  | Monte Carlo approximation of the EMD |  | Set of operations without slack time |
|  | Set of operations with slack time |  | Sum of processing times in the set |
|  | Sum of processing times in the set |  | Average free slack time in the set |
|  | Average total slack time in the set |  | Machine failure rate of each machine |
|  | Expected downtime | $P_0$ | Initial population |
|  | Current population in generation k |  | Combined population in generation k |
| $x$ | Input vector |  | A data instance |
|  | Training data set |  | Schedule of the ith individual in … |
|  | Fitness of the ith individual in … |  | Fitness set of the population |


| Cases | n × m |  |  |  | Sig. | Cases | n × m |  |  |  | Sig. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| La01 | 10 × 5 | 0.035 | 0.027 | 0.74 | <0.01 | La21 | 15 × 10 | 0.026 | 0.019 | 0.77 | <0.01 |
| La02 | 10 × 5 | 0.040 | 0.029 | 0.59 | <0.01 | La22 | 15 × 10 | 0.028 | 0.021 | 0.74 | <0.01 |
| La03 | 10 × 5 | 0.041 | 0.031 | 0.53 | <0.01 | La23 | 15 × 10 | 0.026 | 0.020 | 0.78 | <0.01 |
| La04 | 10 × 5 | 0.038 | 0.028 | 0.71 | <0.01 | La24 | 15 × 10 | 0.027 | 0.021 | 0.76 | <0.01 |
| La05 | 10 × 5 | 0.034 | 0.026 | 0.78 | <0.01 | La25 | 15 × 10 | 0.028 | 0.021 | 0.75 | <0.01 |
| La06 | 15 × 5 | 0.029 | 0.022 | 0.80 | <0.01 | La26 | 20 × 10 | 0.023 | 0.018 | 0.76 | <0.01 |
| La07 | 15 × 5 | 0.033 | 0.024 | 0.71 | <0.01 | La27 | 20 × 10 | 0.024 | 0.018 | 0.74 | <0.01 |
| La08 | 15 × 5 | 0.032 | 0.024 | 0.72 | <0.01 | La28 | 20 × 10 | 0.024 | 0.019 | 0.73 | <0.01 |
| La09 | 15 × 5 | 0.033 | 0.026 | 0.68 | <0.01 | La29 | 20 × 10 | 0.024 | 0.018 | 0.75 | <0.01 |
| La10 | 15 × 5 | 0.033 | 0.024 | 0.76 | <0.01 | La30 | 20 × 10 | 0.025 | 0.019 | 0.74 | <0.01 |
| La11 | 20 × 5 | 0.026 | 0.020 | 0.76 | <0.01 | La31 | 30 × 10 | 0.020 | 0.016 | 0.75 | <0.01 |
| La12 | 20 × 5 | 0.029 | 0.023 | 0.76 | <0.01 | La32 | 30 × 10 | 0.020 | 0.015 | 0.75 | <0.01 |
| La13 | 20 × 5 | 0.029 | 0.023 | 0.70 | <0.01 | La33 | 30 × 10 | 0.020 | 0.015 | 0.76 | <0.01 |
| La14 | 20 × 5 | 0.029 | 0.023 | 0.76 | <0.01 | La34 | 30 × 10 | 0.021 | 0.016 | 0.76 | <0.01 |
| La15 | 20 × 5 | 0.029 | 0.023 | 0.71 | <0.01 | La35 | 30 × 10 | 0.022 | 0.017 | 0.82 | <0.01 |
| La16 | 10 × 10 | 0.031 | 0.024 | 0.74 | <0.01 | La36 | 15 × 15 | 0.023 | 0.018 | 0.80 | <0.01 |
| La17 | 10 × 10 | 0.031 | 0.024 | 0.74 | <0.01 | La37 | 15 × 15 | 0.023 | 0.018 | 0.75 | <0.01 |
| La18 | 10 × 10 | 0.032 | 0.025 | 0.72 | <0.01 | La38 | 15 × 15 | 0.025 | 0.019 | 0.74 | <0.01 |
| La19 | 10 × 10 | 0.030 | 0.023 | 0.72 | <0.01 | La39 | 15 × 15 | 0.025 | 0.018 | 0.75 | <0.01 |
| La20 | 10 × 10 | 0.035 | 0.026 | 0.65 | <0.01 | La40 | 15 × 15 | 0.025 | 0.019 | 0.71 | <0.01 |
| Aver. | / | 0.032 | 0.025 | 0.71 | <0.01 | Aver. | / | 0.024 | 0.018 | 0.76 | <0.01 |

| Cases | n × m |  |  |  | Sig. | Cases | n × m |  |  |  | Sig. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ta01 | 15 × 15 | 0.026 | 0.019 | 0.73 | <0.01 | Ta21 | 20 × 20 | 0.019 | 0.015 | 0.78 | <0.01 |
| Ta02 | 15 × 15 | 0.023 | 0.018 | 0.76 | <0.01 | Ta22 | 20 × 20 | 0.018 | 0.014 | 0.79 | <0.01 |
| Ta03 | 15 × 15 | 0.025 | 0.019 | 0.74 | <0.01 | Ta23 | 20 × 20 | 0.018 | 0.014 | 0.76 | <0.01 |
| Ta04 | 15 × 15 | 0.023 | 0.018 | 0.77 | <0.01 | Ta24 | 20 × 20 | 0.019 | 0.014 | 0.77 | <0.01 |
| Ta05 | 15 × 15 | 0.023 | 0.018 | 0.78 | <0.01 | Ta25 | 20 × 20 | 0.018 | 0.014 | 0.79 | <0.01 |
| Ta06 | 15 × 15 | 0.023 | 0.017 | 0.76 | <0.01 | Ta26 | 20 × 20 | 0.018 | 0.014 | 0.78 | <0.01 |
| Ta07 | 15 × 15 | 0.026 | 0.020 | 0.73 | <0.01 | Ta27 | 20 × 20 | 0.019 | 0.015 | 0.75 | <0.01 |
| Ta08 | 15 × 15 | 0.024 | 0.018 | 0.76 | <0.01 | Ta28 | 20 × 20 | 0.019 | 0.014 | 0.77 | <0.01 |
| Ta09 | 15 × 15 | 0.025 | 0.018 | 0.74 | <0.01 | Ta29 | 20 × 20 | 0.019 | 0.015 | 0.76 | <0.01 |
| Ta10 | 15 × 15 | 0.023 | 0.018 | 0.77 | <0.01 | Ta30 | 20 × 20 | 0.018 | 0.014 | 0.79 | <0.01 |
| Ta11 | 20 × 15 | 0.022 | 0.016 | 0.76 | <0.01 | Ta31 | 30 × 15 | 0.018 | 0.014 | 0.77 | <0.01 |
| Ta12 | 20 × 15 | 0.020 | 0.016 | 0.78 | <0.01 | Ta32 | 30 × 15 | 0.019 | 0.015 | 0.77 | <0.01 |
| Ta13 | 20 × 15 | 0.021 | 0.016 | 0.78 | <0.01 | Ta33 | 30 × 15 | 0.018 | 0.014 | 0.74 | <0.01 |
| Ta14 | 20 × 15 | 0.020 | 0.016 | 0.77 | <0.01 | Ta34 | 30 × 15 | 0.017 | 0.014 | 0.78 | <0.01 |
| Ta15 | 20 × 15 | 0.021 | 0.016 | 0.73 | <0.01 | Ta35 | 30 × 15 | 0.018 | 0.014 | 0.80 | <0.01 |
| Ta16 | 20 × 15 | 0.021 | 0.015 | 0.78 | <0.01 | Ta36 | 30 × 15 | 0.018 | 0.014 | 0.76 | <0.01 |
| Ta17 | 20 × 15 | 0.023 | 0.017 | 0.74 | <0.01 | Ta37 | 30 × 15 | 0.018 | 0.014 | 0.76 | <0.01 |
| Ta18 | 20 × 15 | 0.021 | 0.016 | 0.75 | <0.01 | Ta38 | 30 × 15 | 0.018 | 0.014 | 0.79 | <0.01 |
| Ta19 | 20 × 15 | 0.021 | 0.017 | 0.76 | <0.01 | Ta39 | 30 × 15 | 0.019 | 0.013 | 0.81 | <0.01 |
| Ta20 | 20 × 15 | 0.021 | 0.016 | 0.79 | <0.01 | Ta40 | 30 × 15 | 0.017 | 0.013 | 0.78 | <0.01 |
| Aver. | / | 0.023 | 0.017 | 0.76 | <0.01 | Aver. | / | 0.018 | 0.014 | 0.77 | <0.01 |

| Cases | n × m | MC | MM | SM1 | SM2 | Cases | n × m | MC | MM | SM1 | SM2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| La01 | 10 × 5 | 0.01 | 0.07 | 0.99 | 1.57 | La21 | 15 × 10 | 0.00 | 0.10 | 0.31 | 0.34 |
| La02 | 10 × 5 | 0.01 | 0.04 | 0.26 | 0.50 | La22 | 15 × 10 | 0.03 | 0.01 | 0.19 | 0.13 |
| La03 | 10 × 5 | 0.01 | 0.05 | 0.08 | 0.10 | La23 | 15 × 10 | 0.00 | 0.14 | 0.19 | 0.31 |
| La04 | 10 × 5 | 0.07 | 0.00 | 0.16 | 0.36 | La24 | 15 × 10 | 0.04 | 0.03 | 0.20 | 0.12 |
| La05 | 10 × 5 | 0.00 | 0.00 | 0.00 | 0.00 | La25 | 15 × 10 | 0.00 | 0.07 | 0.19 | 0.30 |
| La06 | 15 × 5 | 0.00 | 0.92 | 3.96 | 7.19 | La26 | 20 × 10 | 0.02 | 0.02 | 0.23 | 0.17 |
| La07 | 15 × 5 | 0.52 | 0.00 | 1.55 | 1.22 | La27 | 20 × 10 | 0.06 | 0.02 | 0.26 | 0.06 |
| La08 | 15 × 5 | 0.00 | 0.33 | 3.70 | 2.95 | La28 | 20 × 10 | 0.00 | 0.11 | 0.18 | 0.15 |
| La09 | 15 × 5 | 0.00 | 0.35 | 2.89 | 1.45 | La29 | 20 × 10 | 0.01 | 0.07 | 0.17 | 0.08 |
| La10 | 15 × 5 | 0.00 | 0.00 | 0.00 | 0.00 | La30 | 20 × 10 | 0.02 | 0.04 | 0.26 | 0.24 |
| La11 | 20 × 5 | 0.00 | 0.66 | 6.06 | 4.84 | La31 | 30 × 10 | 0.15 | 0.00 | 0.21 | 0.25 |
| La12 | 20 × 5 | 0.04 | 0.03 | 1.38 | 0.92 | La32 | 30 × 10 | 0.00 | 0.18 | 0.24 | 0.24 |
| La13 | 20 × 5 | 0.06 | 0.05 | 1.34 | 1.22 | La33 | 30 × 10 | 0.00 | 0.17 | 0.24 | 0.09 |
| La14 | 20 × 5 | 0.00 | 0.00 | 0.00 | 0.00 | La34 | 30 × 10 | 0.00 | 0.10 | 0.27 | 0.08 |
| La15 | 20 × 5 | 0.00 | 0.30 | 0.63 | 1.12 | La35 | 30 × 10 | 0.01 | 0.16 | 0.18 | 0.26 |
| La16 | 10 × 10 | 0.09 | 0.14 | 1.09 | 0.36 | La36 | 15 × 15 | 0.00 | 0.12 | 0.19 | 0.38 |
| La17 | 10 × 10 | 0.00 | 0.08 | 0.20 | 0.16 | La37 | 15 × 15 | 0.00 | 0.12 | 0.22 | 0.25 |
| La18 | 10 × 10 | 0.00 | 0.15 | 0.30 | 0.46 | La38 | 15 × 15 | 0.00 | 0.04 | 0.18 | 0.27 |
| La19 | 10 × 10 | 0.01 | 0.02 | 0.34 | 0.13 | La39 | 15 × 15 | 0.00 | 0.13 | 0.04 | 0.20 |
| La20 | 10 × 10 | 0.02 | 0.09 | 0.40 | 0.55 | La40 | 15 × 15 | 0.00 | 0.05 | 0.24 | 0.31 |
| Aver. | / | 0.04 | 0.16 | 1.27 | 1.26 | Aver. | / | 0.02 | 0.08 | 0.21 | 0.21 |

| Cases | n × m | MC | MM | SM1 | SM2 | Cases | n × m | MC | MM | SM1 | SM2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ta01 | 15 × 15 | 0.00 | 0.08 | 0.19 | 0.20 | Ta21 | 20 × 20 | 0.02 | 0.05 | 0.23 | 0.31 |
| Ta02 | 15 × 15 | 0.00 | 0.08 | 0.22 | 0.33 | Ta22 | 20 × 20 | 0.00 | 0.17 | 0.28 | 0.34 |
| Ta03 | 15 × 15 | 0.00 | 0.04 | 0.17 | 0.09 | Ta23 | 20 × 20 | 0.09 | 0.01 | 0.14 | 0.19 |
| Ta04 | 15 × 15 | 0.01 | 0.12 | 0.21 | 0.17 | Ta24 | 20 × 20 | 0.02 | 0.01 | 0.17 | 0.16 |
| Ta05 | 15 × 15 | 0.00 | 0.15 | 0.15 | 0.24 | Ta25 | 20 × 20 | 0.00 | 0.22 | 0.29 | 0.24 |
| Ta06 | 15 × 15 | 0.04 | 0.01 | 0.23 | 0.22 | Ta26 | 20 × 20 | 0.00 | 0.07 | 0.30 | 0.22 |
| Ta07 | 15 × 15 | 0.00 | 0.14 | 0.24 | 0.26 | Ta27 | 20 × 20 | 0.00 | 0.09 | 0.18 | 0.38 |
| Ta08 | 15 × 15 | 0.01 | 0.02 | 0.08 | 0.11 | Ta28 | 20 × 20 | 0.03 | 0.05 | 0.30 | 0.21 |
| Ta09 | 15 × 15 | 0.06 | 0.03 | 0.27 | 0.30 | Ta29 | 20 × 20 | 0.02 | 0.06 | 0.31 | 0.34 |
| Ta10 | 15 × 15 | 0.06 | 0.10 | 0.29 | 0.22 | Ta30 | 20 × 20 | 0.02 | 0.05 | 0.24 | 0.24 |
| Ta11 | 20 × 15 | 0.00 | 0.22 | 0.29 | 0.30 | Ta31 | 30 × 15 | 0.02 | 0.04 | 0.16 | 0.17 |
| Ta12 | 20 × 15 | 0.01 | 0.04 | 0.24 | 0.19 | Ta32 | 30 × 15 | 0.05 | 0.05 | 0.13 | 0.14 |
| Ta13 | 20 × 15 | 0.00 | 0.08 | 0.14 | 0.29 | Ta33 | 30 × 15 | 0.03 | 0.05 | 0.34 | 0.26 |
| Ta14 | 20 × 15 | 0.01 | 0.12 | 0.10 | 0.17 | Ta34 | 30 × 15 | 0.00 | 0.13 | 0.33 | 0.39 |
| Ta15 | 20 × 15 | 0.00 | 0.21 | 0.26 | 0.37 | Ta35 | 30 × 15 | 0.00 | 0.09 | 0.21 | 0.14 |
| Ta16 | 20 × 15 | 0.00 | 0.06 | 0.23 | 0.21 | Ta36 | 30 × 15 | 0.02 | 0.03 | 0.15 | 0.18 |
| Ta17 | 20 × 15 | 0.03 | 0.06 | 0.14 | 0.33 | Ta37 | 30 × 15 | 0.03 | 0.02 | 0.17 | 0.08 |
| Ta18 | 20 × 15 | 0.00 | 0.07 | 0.24 | 0.30 | Ta38 | 30 × 15 | 0.00 | 0.27 | 0.24 | 0.35 |
| Ta19 | 20 × 15 | 0.09 | 0.00 | 0.30 | 0.34 | Ta39 | 30 × 15 | 0.07 | 0.16 | 0.01 | 0.13 |
| Ta20 | 20 × 15 | 0.00 | 0.14 | 0.17 | 0.19 | Ta40 | 30 × 15 | 0.00 | 0.13 | 0.19 | 0.18 |
| Aver. | / | 0.02 | 0.09 | 0.21 | 0.24 | Aver. | / | 0.02 | 0.09 | 0.22 | 0.23 |

| Cases | n × m | MC | MM | SM1 | SM2 | Cases | n × m | MC | MM | SM1 | SM2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| La01 | 10 × 5 | 11 | 12 | 2 | 4 | La21 | 15 × 10 | 15 | 15 | 8 | 13 |
| La02 | 10 × 5 | 12 | 9 | 5 | 4 | La22 | 15 × 10 | 16 | 17 | 10 | 9 |
| La03 | 10 × 5 | 11 | 8 | 2 | 6 | La23 | 15 × 10 | 13 | 18 | 6 | 12 |
| La04 | 10 × 5 | 9 | 8 | 4 | 4 | La24 | 15 × 10 | 12 | 12 | 10 | 15 |
| La05 | 10 × 5 | 1 | 1 | 2 | 3 | La25 | 15 × 10 | 14 | 15 | 11 | 9 |
| La06 | 15 × 5 | 3 | 3 | 3 | 6 | La26 | 20 × 10 | 15 | 15 | 10 | 10 |
| La07 | 15 × 5 | 11 | 6 | 4 | 8 | La27 | 20 × 10 | 12 | 14 | 10 | 13 |
| La08 | 15 × 5 | 5 | 7 | 3 | 9 | La28 | 20 × 10 | 14 | 18 | 8 | 7 |
| La09 | 15 × 5 | 7 | 9 | 9 | 7 | La29 | 20 × 10 | 13 | 14 | 7 | 11 |
| La10 | 15 × 5 | 1 | 2 | 4 | 2 | La30 | 20 × 10 | 13 | 16 | 10 | 9 |
| La11 | 20 × 5 | 4 | 5 | 6 | 5 | La31 | 30 × 10 | 15 | 12 | 9 | 8 |
| La12 | 20 × 5 | 11 | 8 | 5 | 7 | La32 | 30 × 10 | 13 | 12 | 7 | 12 |
| La13 | 20 × 5 | 12 | 7 | 7 | 6 | La33 | 30 × 10 | 11 | 13 | 8 | 9 |
| La14 | 20 × 5 | 1 | 1 | 3 | 1 | La34 | 30 × 10 | 13 | 16 | 11 | 10 |
| La15 | 20 × 5 | 12 | 16 | 7 | 6 | La35 | 30 × 10 | 11 | 16 | 10 | 7 |
| La16 | 10 × 10 | 9 | 8 | 2 | 2 | La36 | 15 × 15 | 12 | 16 | 11 | 11 |
| La17 | 10 × 10 | 10 | 11 | 7 | 7 | La37 | 15 × 15 | 14 | 17 | 8 | 9 |
| La18 | 10 × 10 | 12 | 14 | 8 | 4 | La38 | 15 × 15 | 15 | 18 | 14 | 13 |
| La19 | 10 × 10 | 12 | 12 | 6 | 6 | La39 | 15 × 15 | 18 | 14 | 8 | 12 |
| La20 | 10 × 10 | 12 | 14 | 4 | 7 | La40 | 15 × 15 | 14 | 17 | 10 | 11 |
| Aver. | / | 8.3 | 8.1 | 4.7 | 5.2 | Aver. | / | 13.7 | 15.3 | 9.3 | 10.5 |

| Cases | n × m | MC | MM | SM1 | SM2 | Cases | n × m | MC | MM | SM1 | SM2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ta01 | 15 × 15 | 16 | 18 | 9 | 9 | Ta21 | 20 × 20 | 17 | 14 | 10 | 9 |
| Ta02 | 15 × 15 | 14 | 10 | 10 | 12 | Ta22 | 20 × 20 | 13 | 15 | 6 | 8 |
| Ta03 | 15 × 15 | 15 | 19 | 12 | 10 | Ta23 | 20 × 20 | 15 | 11 | 11 | 11 |
| Ta04 | 15 × 15 | 14 | 19 | 11 | 11 | Ta24 | 20 × 20 | 13 | 13 | 11 | 9 |
| Ta05 | 15 × 15 | 17 | 15 | 9 | 11 | Ta25 | 20 × 20 | 14 | 12 | 11 | 9 |
| Ta06 | 15 × 15 | 16 | 14 | 9 | 10 | Ta26 | 20 × 20 | 16 | 12 | 10 | 10 |
| Ta07 | 15 × 15 | 15 | 17 | 12 | 10 | Ta27 | 20 × 20 | 14 | 8 | 12 | 9 |
| Ta08 | 15 × 15 | 17 | 11 | 13 | 9 | Ta28 | 20 × 20 | 15 | 17 | 7 | 9 |
| Ta09 | 15 × 15 | 15 | 14 | 10 | 8 | Ta29 | 20 × 20 | 17 | 12 | 12 | 10 |
| Ta10 | 15 × 15 | 15 | 14 | 11 | 12 | Ta30 | 20 × 20 | 17 | 10 | 7 | 7 |
| Ta11 | 20 × 15 | 13 | 15 | 10 | 10 | Ta31 | 30 × 15 | 13 | 13 | 9 | 10 |
| Ta12 | 20 × 15 | 12 | 14 | 8 | 7 | Ta32 | 30 × 15 | 13 | 12 | 7 | 8 |
| Ta13 | 20 × 15 | 16 | 13 | 8 | 10 | Ta33 | 30 × 15 | 13 | 11 | 6 | 5 |
| Ta14 | 20 × 15 | 13 | 14 | 9 | 10 | Ta34 | 30 × 15 | 14 | 13 | 10 | 9 |
| Ta15 | 20 × 15 | 17 | 18 | 12 | 11 | Ta35 | 30 × 15 | 10 | 14 | 9 | 7 |
| Ta16 | 20 × 15 | 19 | 13 | 8 | 8 | Ta36 | 30 × 15 | 15 | 10 | 9 | 6 |
| Ta17 | 20 × 15 | 15 | 11 | 10 | 9 | Ta37 | 30 × 15 | 13 | 11 | 6 | 8 |
| Ta18 | 20 × 15 | 16 | 15 | 10 | 9 | Ta38 | 30 × 15 | 9 | 15 | 8 | 10 |
| Ta19 | 20 × 15 | 10 | 13 | 12 | 8 | Ta39 | 30 × 15 | 11 | 14 | 7 | 6 |
| Ta20 | 20 × 15 | 16 | 17 | 11 | 10 | Ta40 | 30 × 15 | 13 | 13 | 9 | 7 |
| Aver. | / | 15.1 | 14.7 | 10.2 | 9.7 | Aver. | / | 13.8 | 12.5 | 8.9 | 8.4 |

| Cases | n × m | MC | Ratio to MC | MM | Ratio to MC | SM1 | Ratio to MC | SM2 | Ratio to MC |
|---|---|---|---|---|---|---|---|---|---|
| La01–La05 | 10 × 5 | 66 | 1.00 | 53 | 0.80 | 50 | 0.76 | 50 | 0.76 |
| La06–La10 | 15 × 5 | 83 | 1.00 | 54 | 0.65 | 52 | 0.63 | 50 | 0.60 |
| La11–La15 | 20 × 5 | 113 | 1.00 | 55 | 0.49 | 55 | 0.49 | 52 | 0.46 |
| La16–La20 | 10 × 10 | 102 | 1.00 | 54 | 0.53 | 54 | 0.53 | 51 | 0.50 |
| La21–La25 | 15 × 10 | 153 | 1.00 | 56 | 0.37 | 55 | 0.36 | 54 | 0.35 |
| La26–La30 | 20 × 10 | 220 | 1.00 | 60 | 0.27 | 59 | 0.27 | 57 | 0.26 |
| La31–La35 | 30 × 10 | 398 | 1.00 | 73 | 0.18 | 70 | 0.18 | 68 | 0.17 |
| La36–La40 | 15 × 15 | 238 | 1.00 | 65 | 0.27 | 62 | 0.26 | 60 | 0.25 |
| Ta01–Ta10 | 15 × 15 | 231 | 1.00 | 63 | 0.27 | 61 | 0.26 | 59 | 0.26 |
| Ta11–Ta20 | 20 × 15 | 351 | 1.00 | 75 | 0.21 | 70 | 0.20 | 68 | 0.19 |
| Ta21–Ta30 | 20 × 20 | 543 | 1.00 | 85 | 0.16 | 82 | 0.15 | 83 | 0.15 |
| Ta31–Ta40 | 30 × 15 | 733 | 1.00 | 95 | 0.13 | 92 | 0.13 | 89 | 0.12 |
| Aver. | / | 269.3 | 1.00 | 65.7 | 0.24 | 63.5 | 0.24 | 61.8 | 0.23 |
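In the last table, the second number under each method appears to be that method's value divided by the MC value (for La01–La05, 53/66 ≈ 0.80). The short check below recomputes two rows under that reading; `ratio_to_mc` is a hypothetical helper, and the unit of the underlying values is not stated here.

```python
def ratio_to_mc(mc: float, **others: float) -> dict:
    """Divide each method's value by the MC value, rounded to two decimals."""
    return {name: round(value / mc, 2) for name, value in others.items()}


la01_05 = ratio_to_mc(66, MM=53, SM1=50, SM2=50)
average = ratio_to_mc(269.3, MM=65.7, SM1=63.5, SM2=61.8)
print(la01_05)  # {'MM': 0.8, 'SM1': 0.76, 'SM2': 0.76}
print(average)  # {'MM': 0.24, 'SM1': 0.24, 'SM2': 0.23}
```

Both rows match the tabulated ratios, which supports reading them as values relative to MC.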

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, Z.; Yu, S.; Li, T. A Meta-Model-Based Multi-Objective Evolutionary Approach to Robust Job Shop Scheduling. Mathematics 2019, 7, 529. https://doi.org/10.3390/math7060529

Authors: Zigao Wu, Shaohua Yu, and Tiancheng Li.