Abstract

Large language models (LLMs) are increasingly adopted in medical question answering (QA) scenarios. However, LLMs are known to generate hallucinations and nonfactual information, undermining their trustworthiness in high-stakes medical tasks. Conformal Prediction (CP) is now recognized as a robust framework within the broader domain of machine learning, offering statistically rigorous guarantees of marginal (average) coverage for prediction sets. However, the applicability of CP in medical QA remains to be explored. To address this gap, this study proposes an enhanced CP framework for medical multiple-choice question answering (MCQA) tasks. The enhanced CP framework associates the non-conformity score with the frequency score of the correct option. The framework generates multiple outputs for the same medical query by leveraging self-consistency theory and calculates the frequency score of each option, which addresses the issue of limited access to the model’s internal information. Furthermore, a risk control framework is incorporated into the enhanced CP framework to manage task-specific metrics through a monotonically decreasing loss function. The enhanced CP framework is evaluated on three popular MCQA datasets using off-the-shelf LLMs. Empirical results demonstrate that the enhanced CP framework achieves user-specified average (or marginal) error rates on the test set. Moreover, the results show that the test set’s average prediction set size (APSS) decreases as the risk level increases, which suggests that APSS is a promising evaluation metric for the uncertainty of LLMs.

Keywords: large language models; conformal prediction; medical multiple-choice question answering; average prediction set size

MSC: 68T35

1. Introduction

Recently, large language models (LLMs) [1] have gained prominence as revolutionary instruments in real-world medical question answering (QA) applications [2], offering significant potential to help both patients and healthcare professionals effectively address medical inquiries [3,4,5]. In contemporary healthcare practices, many people rely on online search engines to obtain health-related information [6]. Despite the promising capabilities of LLMs, concerns persist about the reliability of their generated responses [7,8], particularly in light of the potential hazards associated with inaccurate or contradictory information. Such risks are especially critical in clinical settings, where misinformation can lead to adverse outcomes and potentially endanger patient safety [9]. Unlike general-domain QA tasks, medical QA often involves domain-specific terminology, context-sensitive reasoning, and high-stakes decision making, where even minor inaccuracies may have significant consequences. Therefore, ensuring the reliability and trustworthiness of model outputs is not merely a performance issue, but a fundamental requirement for real-world applicability. These challenges highlight the need for rigorous uncertainty quantification frameworks with formal guarantees, and they strongly motivate the adaptation and extension of conformal prediction methods, specifically for the medical QA domain.

Uncertainty quantification (UQ) has emerged as a critical methodology for assessing the reliability of LLM outputs [10,11,12,13,14]. Existing UQ techniques can be broadly categorized into white-box and black-box approaches. White-box methods utilize internal model information, such as logarithmic summation, to quantify semantic uncertainty [15,16,17], including metrics such as semantic entropy [18]. In contrast, black-box uncertainty measures, rooted in self-consistency theory, evaluate uncertainty based on the semantic diversity of multiple outputs generated for the same input without requiring access to the model’s internal states [19,20,21]. Despite their utility, existing methods rely on heuristic definitions of uncertainty and lack rigorous statistical guarantees for task-specific performance metrics [22].

Conformal prediction (CP) is now recognized as a robust framework within the broader domain of machine learning, offering statistically rigorous guarantees of marginal (average) coverage for prediction sets [23,24]. Previous research has demonstrated the successful adaptation of CP to classification tasks [25,26,27], achieving user-specified correctness miscoverage rates. However, extending CP to medical question answering (QA) tasks presents substantial challenges.

To address these limitations, this study proposes an enhanced CP framework in which the non-conformity score (NS) is closely aligned with the correct option in medical multiple-choice question answering (MCQA) tasks. Given that access to internal model information may not be feasible in practical medical MCQA scenarios, the enhanced CP framework generates multiple outputs for the same medical query, computes the frequency score for each option, and defines the NS as one minus the probability of the correct option for each calibration data point. Using self-consistency theory [28], the enhanced CP framework utilizes the frequency score within the sampling set as a proxy for the probability of the option. Furthermore, because this construction only provides rigorous control over the coverage of the correct answer, this study presents a risk control framework to manage task-specific metrics; equivalent results are obtained when the loss function is designed to reflect correctness miscoverage.

The proposed enhanced CP framework was evaluated on three medical MCQA datasets, namely MedMCQA [29], MedQA [29], and MMLU [30], using four popular pre-trained LLMs: Llama-3.2-1B-Instruct, Llama-3.2-3B-Instruct, Qwen2.5-1.5B-Instruct, and Qwen2.5-3B-Instruct. Empirical results demonstrate that the enhanced CP framework achieves strict control over the marginal error rate (average correctness miscoverage rate) on test MCQA samples at various risk levels. Moreover, the average prediction set size (APSS) within the test set is also estimated in this study. Experimental findings show that the APSS metric decreases as the risk level increases, so it can complement indicators such as accuracy in a comprehensive evaluation of LLM performance. The main contributions of this study are summarized as follows. (i) For the first time, conformal prediction is applied to medical multiple-choice question answering tasks, strictly guaranteeing the coverage of correct options. (ii) A promising metric is revealed to assess the uncertainty of LLMs by estimating the average prediction set size on the test MCQA samples. (iii) Extensive evaluation and ablation studies on three popular medical benchmarks are conducted using four pre-trained LLMs, demonstrating the effectiveness and robustness of the enhanced CP framework.

2. Related Work

Medical question answering (QA) presents distinct challenges compared to general-domain QA tasks, owing to its reliance on domain-specific terminology, the necessity for precise multi-hop reasoning across heterogeneous medical knowledge sources, and the potentially severe consequences of erroneous outputs. Furthermore, many medical queries are inherently ambiguous or highly context-dependent, and the corresponding data are often incomplete or uncertain.

These complexities hinder the ability of conventional QA models to produce reliable responses, particularly in open-ended or generative settings. To mitigate these limitations, recent research has introduced self-consistency-based approaches, which enhance model robustness by measuring the agreement across multiple independently generated responses. Building upon this paradigm, the present study integrates self-consistency mechanisms within a conformal prediction framework, enabling the construction of uncertainty-aware prediction sets that offer formal statistical coverage guarantees, while maintaining task-specific reliability in high-stakes medical QA scenarios.

2.1. Natural Language Generation Tasks in the Medical Domain

The application of Natural Language Generation (NLG) in the medical domain has become a critical area of research, driven by the growing reliance on advanced technologies to optimize patient care and clinical communication. NLG systems are increasingly employed to automate clinical documentation, address medical inquiries, and provide diagnostic suggestions. These systems utilize state-of-the-art large language models (LLMs) such as GPT-3 [3] and BERT [31] to produce responses that are both contextually relevant and medically accurate. By delivering timely and precise information, these technologies aim to alleviate healthcare professionals’ workload and enhance patient outcomes [9].

While NLG models present considerable potential within medical applications, they also encounter substantial challenges. A primary concern is the reliability of the generated content, especially in high-stakes scenarios such as medical diagnosis and patient care. Despite their ability to develop contextually appropriate and persuasive responses, these models may also produce factually incorrect or misleading information, posing significant risks in clinical environments. Recent research efforts have focused on implementing domain-specific fine-tuning strategies and integrating uncertainty quantification (UQ) techniques to assess and enhance the reliability of NLG systems in medical contexts [19]. Nonetheless, further advancements are needed to ensure that NLG systems consistently meet the rigorous safety and accuracy standards of healthcare settings.

2.2. Uncertainty Quantification in Medical Question Answering Tasks

Uncertainty Quantification (UQ) plays a pivotal role in medical question answering (QA) tasks, where the consequences of inaccurate or unreliable responses can lead to severe clinical implications. In such high-stakes applications, it is imperative to rigorously assess the confidence of the system’s predictions to determine when a generated answer can be considered trustworthy. Traditional UQ methodologies, predominantly designed for classification tasks, are not readily applicable to the inherently open-ended nature of medical QA, where responses often involve complex, nuanced, and context-dependent information.

Recent advancements in the field have introduced specialized UQ techniques tailored to the unique demands of medical QA tasks. Confidence-based metrics [10] and entropy-based approaches [32] have been employed to evaluate the uncertainty associated with medical responses. These methods typically involve analyzing the model’s output distribution or assessing the consistency of predictions across multiple generated responses. Additionally, self-consistency frameworks and ensemble methods have been proposed to compute confidence scores by aggregating the outputs from diverse model iterations [19]. Despite their potential, many existing approaches lack formal statistical guarantees, representing a significant limitation in clinical settings where precision and reliability are critical. Consequently, developing robust UQ methodologies is necessary to effectively manage the complexities of medical data while delivering reliable and interpretable uncertainty estimates.

2.3. Conformal Prediction for Uncertainty Quantification in Medical Question Answering Tasks

Conformal Prediction (CP) has emerged as a robust methodology for uncertainty quantification (UQ) in medical question answering (QA) tasks, providing a rigorous framework for constructing prediction sets with statistically guaranteed coverage [23]. Unlike traditional UQ techniques that produce single-point predictions, CP generates a set of potential outcomes alongside confidence measures, offering a quantifiable assessment of the reliability of a generated response. This capability is particularly critical in medical QA applications, where the implications of erroneous predictions can be severe, and clinicians must often rely on automated responses to inform clinical decision making.

The utility of CP is further enhanced in the context of large language models (LLMs) [20,25,33,34,35], which are frequently characterized as “black-box” models due to their inherent opacity [19]. A key advantage of CP is its model-agnostic nature, as it does not require assumptions about the underlying data distribution, allowing its application across a broad spectrum of machine learning models, including LLMs. Recent studies have explored the adaptation of CP methodologies to open-ended natural language generation (NLG) tasks, such as medical QA, demonstrating the potential of CP to balance prediction accuracy with coverage. This controlled trade-off is particularly valuable in high-stakes domains like healthcare, where maintaining a balance between model performance and reliability is essential. Nevertheless, significant challenges remain in scaling CP approaches and managing the complexities of diverse and heterogeneous medical data.

3. Method

The methods presented in this section introduce a novel framework based on Conformal Prediction (CP) for medical multiple-choice question answering tasks. This framework provides an advanced approach for uncertainty quantification (UQ) and task-specific risk management. By leveraging the framework, prediction sets are generated that are statistically guaranteed to contain the true label with a predefined confidence level, which is essential for applications in high-stakes fields such as healthcare.

3.1. Adaptation of Conformal Prediction to Medical MCQA Tasks

Conformal Prediction (CP) is a statistical framework that generates prediction sets with robust uncertainty quantification for machine learning models. Unlike traditional methods that provide single-point predictions, CP constructs a set of potential outcomes for each test instance, ensuring that the true label is included with probability at least $1-\alpha$, where $\alpha$ is the user-specified risk level. This characteristic is particularly valuable for high-stakes applications such as medical MCQA tasks.

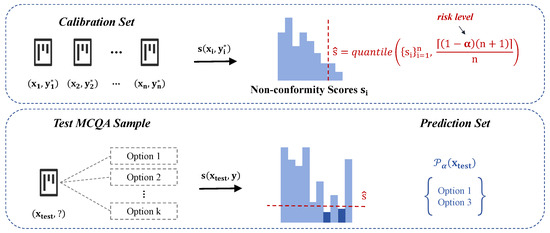

As illustrated in Figure 1, the framework first measures the disagreement between the input and its ground-truth label by computing a non-conformity score for each calibration sample. A quantile threshold is then determined based on these scores to establish the statistical risk level. Given a test MCQA sample, the model evaluates multiple candidate answers, computing their respective non-conformity scores. The prediction set is subsequently constructed by retaining only those answer choices whose scores fall below the calibrated threshold, ensuring that the final prediction set adheres to the desired miscoverage rate guarantees.
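The calibration and prediction steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names `calibrate_threshold` and `prediction_set` are hypothetical, the non-conformity score is assumed to be one minus an option's frequency score, and each question's frequency scores are assumed to be stored in a dictionary such as `{"A": 0.6, "B": 0.3, "C": 0.1, "D": 0.0}`.

```python
import math


def calibrate_threshold(cal_scores, alpha):
    """Return the conformal quantile of the calibration non-conformity scores.

    cal_scores: one score per calibration question, computed on the TRUE option.
    alpha: user-specified risk level (target miscoverage rate).
    """
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))  # 1-based rank of the quantile
    if k > n:
        # Too few calibration points for this alpha: keep every option.
        return float("inf")
    return sorted(cal_scores)[k - 1]


def prediction_set(freqs, q_hat):
    """Keep options whose non-conformity score (1 - frequency) is <= q_hat."""
    return {opt for opt, f in freqs.items() if 1.0 - f <= q_hat}


if __name__ == "__main__":
    # Toy calibration scores (assumed values, for illustration only).
    cal = [0.9, 0.1, 0.5, 0.3, 0.7, 0.2, 0.4, 0.6, 0.8]
    q_hat = calibrate_threshold(cal, alpha=0.5)
    print(q_hat, prediction_set({"A": 0.6, "B": 0.3, "C": 0.1, "D": 0.0}, q_hat))
```

Returning an infinite threshold when the calibration set is too small is one conservative convention: it yields the full option set instead of an unsupported guarantee.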

Figure 1. Adaptation of conformal prediction to medical MCQA tasks.

In medical MCQA, CP calculates a non-conformity score for each candidate answer of a test instance and compares it with the empirical distribution of non-conformity scores from the calibration data. A threshold is then derived to define the prediction set that meets the desired coverage probability, as follows:
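In the standard split-conformal construction, the threshold and prediction set can be written as below. The notation is assumed here for illustration: $s_i$ is the non-conformity score of the true answer for the $i$-th of $n$ calibration points, $s(x_{\text{test}}, y)$ is the score of option $y$ for the test question, and $\alpha$ is the risk level.

```latex
\hat{q} = \inf\left\{ q : \frac{\left|\{ i : s_i \le q \}\right|}{n} \ge \frac{\lceil (n+1)(1-\alpha) \rceil}{n} \right\},
\qquad
\mathcal{C}(x_{\text{test}}) = \left\{ y : s(x_{\text{test}}, y) \le \hat{q} \right\}.
```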

The method ensures a statistically rigorous coverage guarantee, expressed as follows:
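When calibration and test points are exchangeable, the marginal guarantee of split conformal prediction takes the following standard form. The symbols are assumptions for illustration: $y^{*}_{\text{test}}$ is the correct option of the test question $x_{\text{test}}$, $\mathcal{C}(x_{\text{test}})$ is its prediction set, and $\alpha$ is the risk level.

```latex
\mathbb{P}\left( y^{*}_{\text{test}} \in \mathcal{C}(x_{\text{test}}) \right) \ge 1 - \alpha.
```

The probability is taken jointly over the calibration set and the test point, so the guarantee holds on average and not for each individual question.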

The calibration process further enhances this reliability by fine-tuning the threshold using a dedicated calibration set. However, while CP provides a robust approach to managing uncertainty in predictive models and offers well-calibrated prediction sets suitable for the stringent requirements of medical MCQA tasks, its limitation lies in the inability to manage task-specific metrics beyond mere coverage control. This shortcoming underscores the necessity of the proposed risk control framework, which extends the capabilities of CP by introducing a monotonic decreasing loss function to manage task-specific risks effectively and enhance the reliability of large language models in high-stakes medical applications.

3.2. Guarantee of Task-Specific Metrics

This section introduces a method for constructing prediction sets with statistically rigorous guarantees on miscoverage rates via conformal calibration, specifically tailored for the medical multiple-choice question answering (MCQA) context. Unlike general-purpose QA tasks, medical MCQA involves domain-specific terminology that is often rare and compound in nature, which is typically segmented into multiple sub-tokens during language model processing. This characteristic introduces inconsistency in token-level semantic evaluations, leading to unstable and biased uncertainty estimates. To address this challenge, the proposed framework operates at the answer option level, treating each candidate response as a discrete class and computing non-conformity scores to quantify its deviation from correctness. By leveraging a calibration set and a test data point, the method enables precise task-specific risk control through the design of customized loss functions, facilitating robust uncertainty quantification in high-stakes medical scenarios.

The proposed method extends traditional classification tasks into the Question Answering (QA) domain by leveraging the flexibility of the non-conformity score. In a typical classification task, a model predicts a discrete label from a predefined set of classes. The classification task is transformed into a QA task by mapping each class label to a potential answer in a natural language format. This mapping is particularly effective when the model generates candidate answers requiring more contextual understanding and natural language generation.

For example, a classification task that categorizes images into “cat”, “dog”, or “bird” can be rephrased as a QA task by asking, “What animal is in the image?”. The candidate answers become the possible labels, and the model evaluates each candidate’s reliability through the non-conformity score, ensuring that the prediction set adheres to the desired coverage guarantees.

Given a pre-trained language model f, the input query is utilized to generate a candidate set. For each candidate set, the prediction set is defined as follows:
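One form consistent with the surrounding description is given below. The symbols are assumptions for illustration: $\{\hat{y}_j\}_{j=1}^{M}$ denotes the candidate set generated for the query $x$, $F(y)$ the reliability score of candidate $y$, and $t$ the threshold parameter.

```latex
\mathcal{C}_t(x) = \left\{ y \in \{\hat{y}_j\}_{j=1}^{M} : F(y) \ge 1 - t \right\}.
```

Under this form, raising $t$ can only add candidates to the set, which matches the requirement that the loss does not increase with $t$.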

Here, the reliability score is a quantitative measure of how trustworthy a candidate answer y is within the set. The parameter t functions as a threshold, systematically regulating the inclusion of predictions based on their reliability scores.

To enable task-specific risk management, we introduce the non-conformity score, which quantifies the degree of deviation of a candidate prediction y from the expected true answer given the input query. The non-conformity score is defined as follows:
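Following the definition of the NS given in the Introduction (one minus the frequency-based probability of an option), the score can be written as below. The symbols $s(x, y)$ for the non-conformity score and $F(y)$ for the reliability score are assumed here for illustration.

```latex
s(x, y) = 1 - F(y).
```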

Here, the reliability score of the candidate answer enters the definition directly, so a more reliable candidate receives a lower non-conformity score. The non-conformity score is critical in bridging classification and QA tasks by providing a unified metric for evaluating prediction quality across different task types.

In the classification setting, the non-conformity score can be interpreted as a measure of how likely a predicted label is to be incorrect. When extended to QA tasks, this score helps determine the most suitable candidate answers by evaluating their conformity with the context of the question. By setting appropriate thresholds, the method ensures that only highly reliable answers are included in the prediction set, effectively managing task-specific risks.

For each calibration data point, the loss function is defined as follows:
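For the correctness-miscoverage case mentioned in the Introduction, a natural choice is the indicator loss below. The symbols are assumptions for illustration: $(x_i, y_i^{*})$ is the $i$-th calibration question with its ground-truth answer, and $\mathcal{C}_t(x_i)$ is the prediction set built with threshold $t$.

```latex
\ell\left(\mathcal{C}_t(x_i), y_i^{*}\right) = \mathbb{1}\left\{ y_i^{*} \notin \mathcal{C}_t(x_i) \right\}.
```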

Here, the loss compares the prediction set of a calibration point with its ground-truth answer, and it is designed as a non-increasing function of the tuning parameter t, ensuring that a higher parameter value does not increase the loss. This property is critical for maintaining the theoretical monotonicity required for robust empirical risk management.

The empirical loss over the calibration set is defined as follows:
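Written out, the empirical loss is the calibration-set average of the per-point losses. The notation is assumed for illustration: $n$ is the number of calibration points, $(x_i, y_i^{*})$ the $i$-th point, and $\ell$ the per-point loss at threshold $t$.

```latex
\hat{L}_n(t) = \frac{1}{n} \sum_{i=1}^{n} \ell\left(\mathcal{C}_t(x_i), y_i^{*}\right).
```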

The primary objective of the proposed method is to control the expected loss of new test data by ensuring the following:
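In conformal risk control, this objective is usually stated as a bound on the expected test loss. The symbols are assumed for illustration: $\hat{t}$ is the calibrated threshold, $(x_{\text{test}}, y^{*}_{\text{test}})$ the test point, and $\alpha$ the risk level.

```latex
\mathbb{E}\left[ \ell\left(\mathcal{C}_{\hat{t}}(x_{\text{test}}), y^{*}_{\text{test}}\right) \right] \le \alpha.
```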

Under the exchangeability assumption of the calibration and test data, the optimal parameter is derived by solving the following optimization problem:
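For a loss bounded by 1, the conformal risk control literature commonly states this problem in the form below. The notation is assumed for illustration: $\hat{L}_n(t)$ is the empirical calibration loss over $n$ points and $\alpha$ is the risk level.

```latex
\hat{t} = \inf\left\{ t : \frac{n}{n+1}\,\hat{L}_n(t) + \frac{1}{n+1} \le \alpha \right\}.
```

The extra $1/(n+1)$ term accounts for the unseen test point in the worst case, so the selected threshold is slightly more conservative than one obtained by requiring $\hat{L}_n(t) \le \alpha$ directly.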

This formulation guarantees a statistically rigorous control of the miscoverage rate, thereby offering robust theoretical assurances for predictive accuracy and reliability. Beyond ensuring marginal coverage, the proposed method provides flexible support for task-specific metric control through a customized loss function design. By tailoring the loss function to the characteristics of classification and question answering (QA) tasks, this framework presents a highly adaptable risk management strategy. Integrating the non-conformity score further enhances the model’s ability to maintain stable uncertainty control across various prediction scenarios, which is particularly beneficial in high-stakes applications requiring strong statistical guarantees.
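The threshold selection described in this section can be sketched as a simple grid search. The sketch rests on several assumptions that are not taken from the original text: the loss is the 0/1 miscoverage indicator, the prediction set keeps options whose frequency score is at least `1 - t`, the finite-sample correction follows the common conformal risk control form `(n * L_n(t) + 1) / (n + 1) <= alpha`, and the names `miscoverage_loss` and `select_threshold` are hypothetical.

```python
EPS = 1e-9  # tolerance for floating-point comparisons at the set boundary


def miscoverage_loss(freqs, truth, t):
    """Return 1.0 if the true option is excluded from the set, else 0.0.

    The set is assumed to be {y : F(y) >= 1 - t}, so the loss is
    non-increasing in t (a larger t never removes an option).
    """
    return 0.0 if 1.0 - freqs.get(truth, 0.0) <= t + EPS else 1.0


def select_threshold(cal_freqs, cal_truths, alpha, grid_size=101):
    """Smallest grid value t whose corrected empirical risk is <= alpha."""
    n = len(cal_freqs)
    for step in range(grid_size):
        t = step / (grid_size - 1)
        total = sum(
            miscoverage_loss(f, y, t) for f, y in zip(cal_freqs, cal_truths)
        )
        if (total + 1.0) / (n + 1) <= alpha:
            return t
    return 1.0  # no grid value qualifies: fall back to the full option set


if __name__ == "__main__":
    # Toy calibration data (assumed values, for illustration only).
    cal_freqs = [{"A": 0.9}, {"B": 0.6}, {"C": 0.5}, {"D": 0.2}]
    cal_truths = ["A", "B", "C", "D"]
    print(select_threshold(cal_freqs, cal_truths, alpha=0.4))
```

Because the loss is monotone in `t`, the linear scan could be replaced by a binary search; the scan is kept here for readability.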

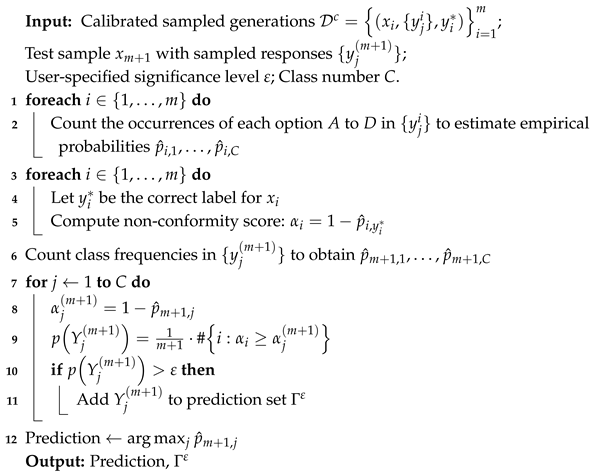

The complete procedure is summarized in Algorithm 1, which details each step in constructing prediction sets with conformal guarantees for medical MCQA tasks and demonstrates how statistical rigor is operationalized into a practical inference framework.

Algorithm 1: Frequency-based implementation of conformal prediction for medical MCQA

4. Experiments

The experiments presented in this section aim to evaluate the performance of the proposed framework, utilizing a set of widely recognized medical datasets and state-of-the-art large language models (LLMs). These experiments focus on assessing the framework’s ability to manage uncertainty quantification (UQ) and task-specific risks across various medical question answering (QA) tasks. Additionally, the performance of the framework is measured using several standard metrics, providing a comprehensive analysis of its effectiveness in real-world applications.

4.1. Experimental Settings

4.1.1. Datasets

The study utilizes three widely recognized benchmark datasets—MedMCQA, MedQA, and the multi-task MMLU dataset—to rigorously assess the performance of the proposed approach across diverse medical question answering (QA) scenarios. To ensure a robust and unbiased evaluation, we uniformly sampled 2000 representative instances from each dataset to maintain consistency and comparability throughout the experimental design.

The selected datasets provide a broad and diverse benchmark for evaluating medical and general knowledge QA systems. The MedMCQA dataset is curated from real-world medical entrance examinations, encompassing over 194,000 high-quality multiple-choice questions [29]. It spans 2400 healthcare topics across 21 medical subjects, requiring advanced reasoning and analytical skills. The MedQA dataset is a multilingual multiple-choice question collection. It includes 12,723 questions in English, 34,251 in simplified Chinese, and 14,123 in traditional Chinese, along with a large-scale corpus of medical textbook content to support reading comprehension models.

The MMLU dataset evaluates multi-task language understanding across 57 diverse tasks, including the humanities, social sciences, hard sciences, and law. It challenges models with both general knowledge queries and advanced problem-solving scenarios. Together, these datasets serve as robust and diverse evaluation tools, enabling a thorough assessment of model performance in specialized medical and broad general knowledge domains, and facilitating the advancement of more reliable and adaptable QA systems.

4.1.2. Base LLMs

This work evaluates the proposed framework using four large language models (LLMs), each with distinct architectures and model sizes. Specifically, the models employed include Llama-3.2-1B-Instruct [36], Llama-3.2-3B-Instruct [36], Qwen2.5-1.5B-Instruct [37], and Qwen2.5-3B-Instruct [37]. These models span a parameter range from roughly 1 billion to 3 billion, enabling a comprehensive analysis of how model size influences performance across diverse tasks.

The Llama-3.2-1B-Instruct [36] and Llama-3.2-3B-Instruct [36] models are built upon the Llama architecture, which is explicitly designed to excel in instruction-following scenarios. These models are particularly effective in tasks requiring a nuanced understanding of natural language instructions and generating coherent and contextually appropriate responses. Conversely, the Qwen2.5-1.5B-Instruct [37] and Qwen2.5-3B-Instruct [37] models are optimized for multi-task learning, performing effectively in various NLP applications, including classification, reasoning, and responding to questions. By incorporating LLMs with diverse architectures and parameter scales, the proposed experimental design enhances the robustness and generalizability of the evaluation. This approach ensures a thorough and nuanced assessment of the proposed framework’s effectiveness across models of varying sizes and functional capabilities, contributing to a more holistic understanding of its performance dynamics.

4.1.3. Hyperparameters

In the experiments, the split ratio between the calibration and test sets is fixed at 0.5, ensuring an equal data distribution across both sets. This strategy provides a balanced and reliable evaluation of model performance. Consistent with prior studies, multinomial sampling is utilized to generate M candidate responses for each data point. For the MMLU and MedMCQA datasets, each comprising four answer options per question, the number of candidate responses M is set to 20, aligning with established practices [15]. For the MMLU-Pro dataset, where each sample includes ten multiple-choice options, M is increased to 50 to approximate the model output distribution more accurately.
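The sampling-based frequency score and the calibration/test split can be illustrated as follows. This is a hedged sketch: the helper names `frequency_scores` and `split_calibration_test` are hypothetical, the sampled answers are assumed to be already reduced to option letters, and the model call that produces the M samples is outside the scope of the example.

```python
import random
from collections import Counter


def frequency_scores(sampled_answers, options):
    """Fraction of the M sampled answers that selected each option.

    Answers that match no option still count in the denominator, so the
    scores may sum to less than 1 when the model emits invalid outputs.
    """
    counts = Counter(a for a in sampled_answers if a in options)
    m = len(sampled_answers)
    return {opt: counts[opt] / m for opt in options}


def split_calibration_test(items, ratio=0.5, seed=0):
    """Shuffle the items and split them into calibration and test parts."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * ratio)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    # M = 20 sampled option letters for one four-option question (toy data).
    samples = ["A"] * 15 + ["B"] * 5
    print(frequency_scores(samples, ["A", "B", "C", "D"]))
    cal, test = split_calibration_test(range(10), ratio=0.5)
    print(len(cal), len(test))
```

A random shuffle before splitting is one simple way to make the exchangeability assumption between calibration and test points plausible.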

To optimize computational efficiency in multiple-choice question answering (MCQA) tasks, the maximum generation length is set to 1, and the generation temperature is fixed at 1.0 for sampling. A broader set of hyperparameters is explored for open-domain QA tasks, with further performance refinement achieved through risk calibration techniques as detailed in prior research [22,24,26]. The parameter input_length refers to the embedding length of the input prompt after being encoded by the tokenizer of the current language model. Additionally, the maximum generation length for both tasks is set to 36 tokens, ensuring that generated responses are sufficiently detailed while avoiding excessive constraints imposed by model limitations.

4.1.4. Evaluation Metrics

The performance is rigorously assessed using two principal metrics as follows: the Empirical Miscoverage Rate (EMR) and the Average Prediction Set Size (APSS). The EMR assesses how well the proposed framework maintains the intended marginal coverage within the test set. It measures the frequency at which the true label of a test sample falls outside the predicted coverage set, offering a quantitative assessment of prediction reliability. A thorough analysis of the EMR verifies that the model consistently achieves the desired coverage level under various test conditions, demonstrating its robustness and generalization capabilities.

In addition to EMR, the APSS metric is utilized to assess prediction efficiency and uncertainty for each LLM. The APSS represents the average number of answer options retained in the prediction set per test sample, indicating the model’s handling of uncertainty in the prediction process. A lower APSS reflects a more efficient model that produces smaller yet accurate prediction sets, while a higher APSS may indicate increased uncertainty or a more conservative predictive approach. By combining EMR and APSS metrics, the proposed framework offers a comprehensive analysis of model performance, balancing predictive reliability with efficiency and providing valuable insights into its behavior in real-world applications.
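Both metrics reduce to simple averages over the test set, as the following sketch shows. The function names are hypothetical, and prediction sets are assumed to be Python sets of option letters.

```python
def empirical_miscoverage_rate(pred_sets, truths):
    """Fraction of test samples whose true option is missing from the set."""
    misses = sum(1 for s, y in zip(pred_sets, truths) if y not in s)
    return misses / len(truths)


def average_prediction_set_size(pred_sets):
    """Mean number of options retained per test sample."""
    return sum(len(s) for s in pred_sets) / len(pred_sets)


if __name__ == "__main__":
    # Four toy test questions (assumed values, for illustration only).
    pred_sets = [{"A"}, {"A", "B"}, set(), {"C", "D"}]
    truths = ["A", "C", "B", "D"]
    print(empirical_miscoverage_rate(pred_sets, truths))
    print(average_prediction_set_size(pred_sets))
```

Empty prediction sets count as size zero and as misses, which is why an APSS below 1 is possible at high risk levels.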

4.2. Empirical Evaluations

4.2.1. Statistical Guarantees of the EMR Metric

This section rigorously validates the calibrated prediction sets constructed according to Equation (2), showing that they reliably achieve the desired coverage levels across different user-defined error rates. Furthermore, the practical effectiveness is investigated by applying selective prediction guided by the proposed uncertainty metric.

4.2.2. Empirical Coverage Guarantees

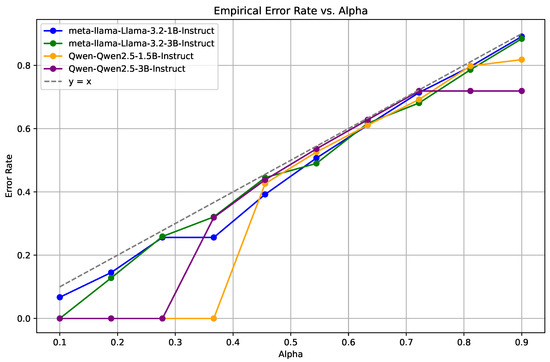

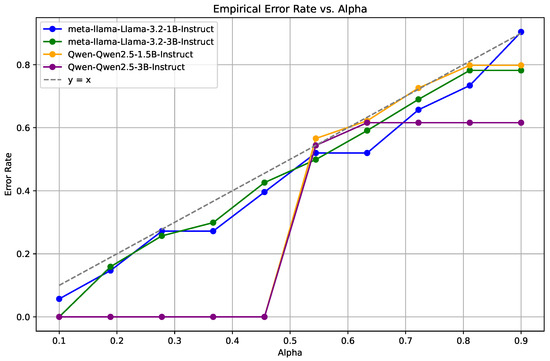

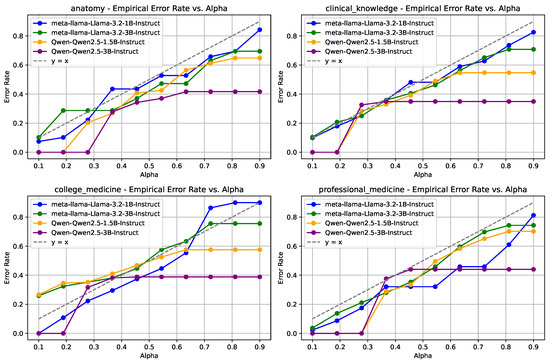

To verify the minimum correctness coverage level empirically, each dataset is partitioned with a 1:10 ratio into calibration and testing subsets. The calibration subset determines the conformal uncertainty threshold based on the maximum allowable error rate. Subsequently, coverage performance is assessed using the testing subset, and corresponding results for MedMCQA, MedQA, and MMLU are shown in Figure 2, Figure 3 and Figure 4.

Figure 2. Empirical Miscoverage Rate (EMR) for the MedMCQA dataset across different risk levels ($\alpha$). The Llama models exhibit superior stability and lower error rates compared to the Qwen2.5 models.

Figure 3. EMR performance on the MedQA dataset, demonstrating the consistent reliability of Llama models over a range of error rates, with the Qwen2.5 models showing considerable variability.

Figure 4. EMR results for the MMLU dataset across categories including high school biology, anatomy, clinical knowledge, and college medicine. The Llama models maintain better stability and accuracy, particularly in clinical knowledge tasks.

In Figure 2, which displays the MedMCQA dataset results, a consistent trend of increasing error rates is observed as the risk level rises. Notably, the Llama-3.2-1B-Instruct [36] and Llama-3.2-3B-Instruct [36] models consistently maintain lower and more stable error rates compared to the Qwen2.5-1.5B-Instruct [37] and Qwen2.5-3B-Instruct [37] models, which exhibit more significant variability.

A similar pattern is evident in Figure 3, representing the MedQA dataset. The Llama models again demonstrate superior stability and lower error rates, whereas the Qwen2.5 models show an increase in error rates at higher risk levels. The findings indicate that the Llama models offer more reliable predictive performance across a broad range of risk levels.

For the MMLU dataset, as shown in Figure 4, four distinct categories are analyzed as follows: high school biology, anatomy, clinical knowledge, and college medicine. Across all categories, the Llama models consistently exhibit enhanced stability and lower error rates relative to the Qwen2.5 models. The performance discrepancy is most pronounced in the clinical knowledge category, where the Qwen2.5 models show significant fluctuations in error rates.

These empirical results validate the stringent control over the correctness coverage rate under varying error rate conditions across all datasets. The statistical guarantees provided by these findings align closely with the theoretical framework established in Equations (2) and (7), demonstrating that the calibrated prediction sets constructed by the proposed method ensure robust and statistically rigorous correctness coverage under diverse calibration scenarios.

4.2.3. Uncertainty Estimation of LLMs

The Average Prediction Set Size (APSS) metric is a critical indicator of model uncertainty, demonstrating a clear inverse relationship with the risk level $\alpha$. As illustrated in Table 1, the APSS consistently decreases as the risk level increases, because a larger permitted error rate requires fewer options to be retained in each prediction set. This trend is evident across all evaluated models, underscoring the robustness of the APSS metric as a measure of predictive uncertainty.

Table 1. Results of the APSS metric at various risk levels.

For each dataset, an increase in the risk level generally reduces the APSS metric. The Llama models typically exhibit smaller average prediction set sizes than the Qwen2.5 series models. For instance, in the MedMCQA dataset, the Llama-3.2-1B-Instruct [36] model reduces its APSS from 3.569 at a low risk level to 0.214 at a high one. Similarly, in the MedQA dataset, the Llama-3.2-3B-Instruct model demonstrates a pronounced decrease in APSS from 4.000 at a low risk level to 0.137 at a high one, highlighting a consistent narrowing of the prediction sets as the uncertainty threshold tightens.

In contrast, the Qwen2.5 models exhibit a more gradual reduction in APSS as the risk level increases. Specifically, the Qwen2.5-1.5B-Instruct model [37] on the MMLU dataset maintains an APSS of 4.000 at the lower risk levels, after which a gradual decrease is observed (Table 1). This behavior suggests that the Qwen2.5 models tend to generate larger and more stable prediction sets across varying risk levels, potentially indicating higher uncertainty in their predictions.

These empirical findings validate that the APSS metric effectively captures model uncertainty under different error thresholds. A lower APSS correlates with reduced uncertainty and a more precise prediction set, aligning well with the theoretical expectations of selective prediction. As the model's confidence increases, the prediction set size contracts accordingly, demonstrating the model's capability to manage uncertainty. The results also highlight differences in efficiency: the Llama models narrow their prediction sets more quickly than the Qwen2.5 models, which maintain larger prediction sets even at higher risk levels.

Overall, the APSS metric serves as a reliable measure of model uncertainty and offers critical insights into model optimization strategies for achieving more precise and selective predictions under varying confidence thresholds. It reinforces the utility of APSS as a tool for evaluating model performance in high-stakes applications where prediction certainty is paramount.
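The relationship between the risk level and the APSS can be illustrated with a minimal sketch. It assumes a standard split conformal procedure in which the non-conformity score of an option is one minus its frequency score; the function names, the calibration frequencies, and the two test questions are hypothetical and do not reproduce the experimental setup or the risk control loss of the proposed framework.

```python
import math


def conformal_threshold(cal_scores, alpha):
    """Split-conformal quantile: the ceil((n + 1) * (1 - alpha))-th smallest score."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))  # 1-based rank
    if k > n:
        return float("inf")  # too few calibration points: keep every option
    return sorted(cal_scores)[k - 1]


def prediction_set(option_freqs, threshold):
    """Keep options whose non-conformity score (1 - frequency) is within the threshold."""
    return {opt for opt, freq in option_freqs.items() if 1.0 - freq <= threshold}


def apss(pred_sets):
    """Average number of options per prediction set."""
    return sum(len(s) for s in pred_sets) / len(pred_sets)


def emr(pred_sets, correct_options):
    """Fraction of questions whose correct option is missing from its set."""
    misses = sum(1 for s, y in zip(pred_sets, correct_options) if y not in s)
    return misses / len(pred_sets)


# Hypothetical calibration data: frequency score of the correct option per question.
cal_correct_freqs = [1.0, 1.0, 0.75, 0.75, 0.5, 0.5, 0.25, 0.25, 0.0]
cal_scores = [1.0 - f for f in cal_correct_freqs]

# Hypothetical test questions: per-option frequency scores and the correct option.
test_freqs = [
    {"A": 0.75, "B": 0.25, "C": 0.0, "D": 0.0},
    {"A": 0.5, "B": 0.5, "C": 0.0, "D": 0.0},
]
test_correct = ["A", "B"]

for alpha in (0.25, 0.5, 0.75):
    tau = conformal_threshold(cal_scores, alpha)
    sets = [prediction_set(f, tau) for f in test_freqs]
    print(f"alpha={alpha}: threshold={tau}, APSS={apss(sets)}, EMR={emr(sets, test_correct)}")
```

In this toy example the threshold shrinks as the risk level grows, so fewer options pass the inclusion test and the APSS falls, which mirrors the trend reported in Table 1. An APSS below 1 arises when some prediction sets are empty.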

4.3. Sensitivity Analysis

4.3.1. EMR at Various Split Ratios

To assess the sensitivity of the proposed method to different data partitioning strategies, a comprehensive analysis of the Empirical Miscoverage Rate (EMR) was conducted on the MedMCQA dataset under a fixed parameter setting. The evaluation was performed across a range of split ratios (0.1, 0.3, 0.5, 0.7), as detailed in Table 2. The results demonstrate that the Llama-3.2-1B-Instruct [36] and Llama-3.2-3B-Instruct [36] models maintained consistently low and stable EMR values, ranging from 0.13 to 0.20, irrespective of the split ratio. In contrast, the Qwen2.5-1.5B-Instruct [37] and Qwen2.5-3B-Instruct [37] models exhibited an EMR of 0.00 across all tested scenarios, indicating robust performance independent of data partitioning.

Table 2.

Empirical Miscoverage Rate (EMR) at various split ratios.

These findings underscore the robustness of the proposed method, demonstrating its capacity to maintain stable performance across different split ratios and, consequently, across calibration sets of varying sizes. This stability indicates that the results do not hinge on one particular partition of the data, which supports the method's generalization across different experimental conditions.
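A split-ratio sweep of this kind can be sketched as follows. This is an illustrative outline only: the helper names, the random seed, the risk level of 0.2, and the synthetic correct-option frequencies are assumptions, and the calibration step is plain split conformal prediction rather than the full proposed framework.

```python
import math
import random


def split_by_ratio(items, ratio, seed=0):
    """Shuffle a copy of items; the first round(ratio * n) form the calibration set."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_cal = round(ratio * len(shuffled))
    return shuffled[:n_cal], shuffled[n_cal:]


def emr_at_ratio(correct_freqs, ratio, alpha, seed=0):
    """Calibrate on one part of the data and measure miscoverage on the rest.

    correct_freqs holds the frequency score of the correct option for each
    question; coverage depends only on that score, so other options are omitted.
    Assumes 0 < alpha < 1 and a non-empty test part.
    """
    cal, test = split_by_ratio(correct_freqs, ratio, seed)
    scores = sorted(1.0 - f for f in cal)
    k = math.ceil((len(scores) + 1) * (1 - alpha))  # 1-based conformal rank
    threshold = scores[k - 1] if k <= len(scores) else float("inf")
    misses = sum(1 for f in test if 1.0 - f > threshold)
    return misses / len(test)


# Synthetic stand-in for a dataset (hypothetical values, not MedMCQA results).
rng = random.Random(0)
correct_freqs = [rng.choice([0.0, 0.25, 0.5, 0.75, 1.0]) for _ in range(200)]

for ratio in (0.1, 0.3, 0.5, 0.7):
    print(f"split ratio {ratio}: EMR = {emr_at_ratio(correct_freqs, ratio, alpha=0.2):.3f}")
```

Because the conformal guarantee is marginal over random splits, the EMR of a single split may land slightly above or below the target; averaging over several seeds gives a steadier estimate.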

4.3.2. AUROC Analysis

As presented in Table 3, the Area Under the Receiver Operating Characteristic Curve (AUROC) results for the MedMCQA, MedQA, and MMLU datasets indicate a high degree of alignment between the frequency-based method and the logit-based approach. The AUROC values for both methods remain closely matched across all tested models and datasets, consistently achieving relatively high performance. This close agreement suggests that the frequency-based method serves as a viable approximation of the logit-based approach, particularly in scenarios where logits are unavailable.

Table 3.

Results of the AUROC metric.

The minimal performance discrepancy between the two methods highlights the robustness and practicality of employing the frequency method as a substitute for logit-based evaluations. This is particularly advantageous in applications with restricted access to logit data, enabling a reliable and effective alternative for model performance assessment under such constraints.
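For readers who wish to reproduce this kind of comparison, the AUROC of a confidence score can be computed directly from its rank-based definition. The sketch below is a generic implementation under the assumption that each answer carries a confidence value and a correct/incorrect label; the function name and the example numbers are hypothetical and are not taken from Table 3.

```python
def auroc(confidences, is_correct):
    """Probability that a correct answer receives a higher confidence than an
    incorrect one, counting ties as one half (Mann-Whitney formulation)."""
    pos = [c for c, y in zip(confidences, is_correct) if y]
    neg = [c for c, y in zip(confidences, is_correct) if not y]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one correct and one incorrect answer")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical confidences for the same five answers under both scoring schemes.
is_correct = [True, True, False, True, False]
freq_conf = [1.0, 0.75, 0.5, 0.5, 0.25]      # frequency of the chosen option
logit_conf = [0.95, 0.70, 0.55, 0.60, 0.20]  # softmax probability of the chosen option

print("frequency-based AUROC:", auroc(freq_conf, is_correct))
print("logit-based AUROC:", auroc(logit_conf, is_correct))
```

Frequency scores take only a few distinct values when the number of sampled outputs is small, so ties are common; the half-credit rule for ties keeps the two scoring schemes comparable.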

4.3.3. Reliability Measurement

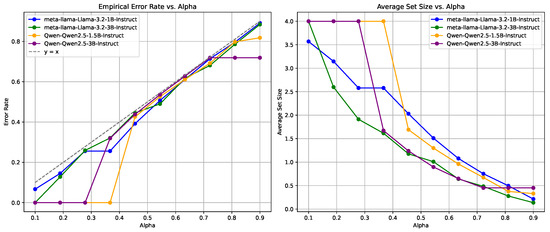

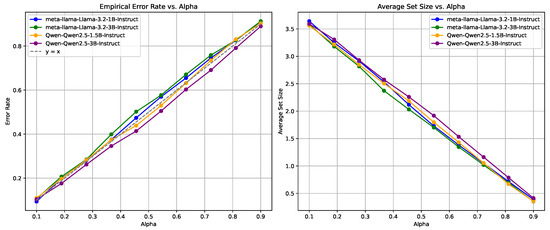

A comparative analysis was conducted on the MedMCQA dataset to evaluate model reliability using two measurement strategies: frequency-based metrics and logit-based metrics. The assessment centered on two performance indicators: the Empirical Miscoverage Rate (EMR) and the Average Prediction Set Size (APSS).

Figure 5 presents the performance using frequency-based measurements, while Figure 6 illustrates the results with logit-based metrics. The logit-based approach demonstrates a consistent and gradual increase in EMR and APSS at varying risk level values. The models evaluated with logit-based metrics exhibit more stable performance, with error rates and prediction set sizes increasing smoothly and predictably as the risk level rises.

Figure 5.

Reliability measurement using frequency-based metrics on the MedMCQA dataset, showing variability in EMR and APSS across different risk levels.

Figure 6.

Reliability measurement using logit-based metrics on the MedMCQA dataset, demonstrating stable performance with a smooth increase in EMR and APSS as the risk level increases.

Conversely, the frequency-based approach, as shown in Figure 5, results in more pronounced fluctuations, particularly in EMR. The frequency-based method shows a steeper decline in average set size; however, the associated increase in EMR is more erratic than with the logit-based approach. This indicates that frequency-based metrics may lead to greater variability in model performance as the confidence threshold changes.

The analysis suggests that the logit-based approach is more effective and reliable in maintaining consistent performance, particularly in managing error rates and prediction set sizes. It provides a more predictable balance between error rate and average set size, making it a preferred choice for high-stakes applications where reliability and stability are critical.

Nevertheless, frequency-based metrics offer a practical alternative in scenarios where access to the model's internal logits is not feasible. While these metrics may not achieve the same level of precision and stability as the logit-based approach, they still provide a reasonable approximation of performance and reliability. This adaptability is advantageous in real-world applications where the internal model structure or logit data are inaccessible, enabling robust reliability assessments using only observable model outputs.
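The difference between the two kinds of score can be made concrete with a short sketch. It assumes that the frequency score of an option is the share of sampled outputs that select it and that the logit-based score is a softmax over the option logits; the function names and the sample values are hypothetical.

```python
import math
from collections import Counter


def frequency_scores(sampled_answers, options):
    """Share of sampled outputs that chose each option (black-box access only)."""
    counts = Counter(sampled_answers)
    total = len(sampled_answers)
    return {opt: counts[opt] / total for opt in options}


def softmax_scores(option_logits):
    """Softmax over option logits (requires access to model internals)."""
    peak = max(option_logits.values())  # subtract the maximum for numerical stability
    exps = {opt: math.exp(v - peak) for opt, v in option_logits.items()}
    norm = sum(exps.values())
    return {opt: e / norm for opt, e in exps.items()}


# Hypothetical example: four sampled answers to one question, and made-up logits.
samples = ["A", "A", "B", "A"]
logits = {"A": 2.0, "B": 1.0, "C": -1.0, "D": -1.0}

print(frequency_scores(samples, "ABCD"))
print(softmax_scores(logits))
```

With only a handful of samples the frequency scores are coarse (multiples of 0.25 here), which is one plausible reason for the less smooth EMR curve in Figure 5 compared with the logit-based results in Figure 6.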

5. Conclusions

This study presents an enhanced CP framework to achieve risk control in medical MCQA tasks. By integrating self-consistency theory, the enhanced CP framework provides a statistically rigorous and interpretable method to improve the trustworthiness of LLMs in high-stakes medical applications by mitigating risks linked to model hallucinations. Comparative experiments demonstrated that the enhanced CP framework not only preserves CP’s model-agnostic and distribution-free advantages but also extends its functionality beyond simple coverage control by introducing a monotonically decreasing loss function that effectively manages task-specific metrics. Additionally, the enhanced CP framework consistently achieved user-specified miscoverage rates. The result analysis also indicated a clear inverse relationship between the APSS and the associated risk level, highlighting the potential of APSS as a robust metric for quantifying LLM uncertainty. Future research will investigate the adaptation of this framework to other domain-specific QA tasks and its integration with advanced LLM architectures to improve reliability and transparency further.

Author Contributions

Conceptualization, Y.K. and H.L.; methodology, Y.K.; software, Y.K.; validation, Y.K., H.L. and Y.R.; formal analysis, Y.K.; investigation, Y.K.; resources, Y.K.; data curation, Y.K.; writing—original draft preparation, Y.K.; writing—review and editing, J.T. and L.L.; visualization, Y.K.; supervision, J.T.; project administration, L.L.; mathematical derivations and performance verification, Y.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 72171172 and 92367101; the Aeronautical Science Foundation of China under Grant 2023Z066038001; the National Natural Science Foundation of China Basic Science Research Center Program under Grant 62088101; and the Shanghai Municipal Science and Technology Major Project under Grant 2021SHZDZX0100.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| LLMs | Large Language Models |
| QA | Question Answering |
| MCQA | Multiple-Choice Question Answering |
| UQ | Uncertainty Quantification |
| CP | Conformal Prediction |
| EMR | Empirical Miscoverage Rate |
| APSS | Average Prediction Set Size |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| NS | Non-Conformity Score |
| MMLU | Massive Multitask Language Understanding |
| MedMCQA | Medical Multiple-Choice Question Answering Dataset |
| MedQA | Medical Question Answering Dataset |

References

1. Faria, F.T.J.; Baniata, L.H.; Kang, S. Investigating the predominance of large language models in low-resource Bangla language over transformer models for hate speech detection: A comparative analysis. Mathematics 2024, 12, 3687.
2. Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Amin, M.; Hou, L.; Clark, K.; Pfohl, S.R.; Cole-Lewis, H.; et al. Toward expert-level medical question answering with large language models. Nat. Med. 2025, 31, 943–950.
3. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.
4. Hager, P.; Jungmann, F.; Holland, R.; Bhagat, K.; Hubrecht, I.; Knauer, M.; Vielhauer, J.; Makowski, M.; Braren, R.; Kaissis, G.; et al. Evaluation and mitigation of the limitations of large language models in clinical decision-making. Nat. Med. 2024, 30, 2613–2622.
5. Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F.; Ting, D.S.W. Large language models in medicine. Nat. Med. 2023, 29, 1930–1940.
6. He, K.; Mao, R.; Lin, Q.; Ruan, Y.; Lan, X.; Feng, M.; Cambria, E. A survey of large language models for healthcare: From data, technology, and applications to accountability and ethics. Inf. Fusion 2025, 118, 102963.
7. Das, B.C.; Amini, M.H.; Wu, Y. Security and privacy challenges of large language models: A survey. ACM Comput. Surv. 2025, 57, 1–39.
8. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. 2025, 43, 1–55.
9. Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.
10. Kendall, A.; Gal, Y. What uncertainties do we need in Bayesian deep learning for computer vision? Adv. Neural Inf. Process. Syst. 2017, 30.
11. Shorinwa, O.; Mei, Z.; Lidard, J.; Ren, A.Z.; Majumdar, A. A survey on uncertainty quantification of large language models: Taxonomy, open research challenges, and future directions. arXiv 2024, arXiv:2412.05563.
12. Kadavath, S.; Conerly, T.; Askell, A.; Henighan, T.; Drain, D.; Perez, E.; Schiefer, N.; Hatfield-Dodds, Z.; DasSarma, N.; Tran-Johnson, E.; et al. Language models (mostly) know what they know. arXiv 2022, arXiv:2207.05221.
13. Xiong, M.; Hu, Z.; Lu, X.; Li, Y.; Fu, J.; He, J.; Hooi, B. Can LLMs express their uncertainty? An empirical evaluation of confidence elicitation in LLMs. arXiv 2023, arXiv:2306.13063.
14. Chen, J.; Mueller, J. Quantifying uncertainty in answers from any language model and enhancing their trustworthiness. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), St. Paul, MN, USA, 8–12 July 2024.
15. Kuhn, L.; Gal, Y.; Farquhar, S. Semantic uncertainty: Linguistic invariances for uncertainty estimation in natural language generation. In Proceedings of the Eleventh International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.
16. Wang, Z.; Duan, J.; Yuan, C.; Chen, Q.; Chen, T.; Zhang, Y.; Wang, R.; Shi, X.; Xu, K. Word-sequence entropy: Towards uncertainty estimation in free-form medical question answering applications and beyond. Eng. Appl. Artif. Intell. 2025, 139, 109553.
17. Duan, J.; Cheng, H.; Wang, S.; Zavalny, A.; Wang, C.; Xu, R.; Kailkhura, B.; Xu, K. Shifting attention to relevance: Towards the predictive uncertainty quantification of free-form large language models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), St. Paul, MN, USA, 8–12 July 2024.
18. Farquhar, S.; Kossen, J.; Kuhn, L.; Gal, Y. Detecting hallucinations in large language models using semantic entropy. Nature 2024, 630, 625–630.
19. Lin, Z.; Trivedi, S.; Sun, J. Generating with confidence: Uncertainty quantification for black-box large language models. arXiv 2024, arXiv:2305.19187.
20. Wang, Z.; Duan, J.; Cheng, L.; Zhang, Y.; Wang, Q.; Shi, X.; Xu, K.; Shen, H.T.; Zhu, X. ConU: Conformal uncertainty in large language models with correctness coverage guarantees. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Online, 6–10 December 2024.
21. Qiu, X.; Miikkulainen, R. Semantic Density: Uncertainty quantification in semantic space for large language models. arXiv 2024, arXiv:2405.13845.
22. Jin, Y.; Candès, E.J. Selection by prediction with conformal p-values. J. Mach. Learn. Res. 2023, 24, 1–41.
23. Campos, M.; Farinhas, A.; Zerva, C.; Figueiredo, M.A.T.; Martins, A.F.T. Conformal prediction for natural language processing: A survey. Trans. Assoc. Comput. Linguist. 2024, 12, 1497–1516.
24. Angelopoulos, A.N.; Bates, S. A gentle introduction to conformal prediction and distribution-free uncertainty quantification. arXiv 2021, arXiv:2107.07511.
25. Angelopoulos, A.N.; Barber, R.F.; Bates, S. Theoretical foundations of conformal prediction. arXiv 2024, arXiv:2411.11824.
26. Huang, L.; Lala, S.; Jha, N.K. CONFINE: Conformal prediction for interpretable neural networks. arXiv 2024, arXiv:2406.00539.
27. Angelopoulos, A.N.; Bates, S.; Jordan, M.; Malik, J. Uncertainty sets for image classifiers using conformal prediction. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.
28. Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.; Chi, E.; Narang, S.; Chowdhery, A.; Zhou, D. Self-consistency improves chain of thought reasoning in language models. arXiv 2022, arXiv:2203.11171.
29. Jin, D.; Pan, E.; Oufattole, N.; Weng, W.H.; Fang, H.; Szolovits, P. What disease does this patient have? A large-scale open domain question answering dataset from medical exams. Appl. Sci. 2021, 11, 6421.
30. Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring massive multitask language understanding. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.
31. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.
32. Malinin, A.; Gales, M. Uncertainty estimation in autoregressive structured prediction. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.
33. Gui, Y.; Jin, Y.; Ren, Z. Conformal Alignment: Knowing When to Trust Foundation Models with Guarantees. arXiv 2024, arXiv:2401.12345.
34. Ye, F.; Yang, M.; Pang, J.; Wang, L.; Wong, D.; Yilmaz, E.; Shi, S.; Tu, Z. Benchmarking LLMs via Uncertainty Quantification. Adv. Neural Inf. Process. Syst. 2024, 37, 15356–15385.
35. Wang, Q.; Geng, T.; Wang, Z.; Wang, T.; Fu, B.; Zheng, F. Sample then Identify: A General Framework for Risk Control and Assessment in Multimodal Large Language Models. In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025.
36. Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288.
37. Yang, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; Wei, H.; et al. Qwen2.5 technical report. arXiv 2024, arXiv:2412.15115.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).