Abstract

In recent years, multi-source navigation data fusion has advanced substantially, driven by the rapid development of multi-sensor technologies, Artificial Intelligence (AI) algorithms, and computational capabilities. On the one hand, fusion methods based on filtering theory, such as Kalman Filtering (KF), Particle Filtering (PF), and Federated Filtering (FF), have been continuously optimized, enabling effective handling of nonlinear systems and non-Gaussian noise. On the other hand, AI technologies such as deep learning and reinforcement learning have provided new solutions for multi-source data fusion, particularly by enhancing adaptability in complex and dynamic environments. Methods based on Factor Graph Optimization (FGO) have also demonstrated advantages in multi-source data fusion, offering better handling of global consistency problems. At present, fusion methods mainly include optimal estimation methods, filtering methods, uncertain reasoning methods, Multiple Model Estimation (MME), and AI methods. In the future, with the widespread adoption of technologies such as 5G, the Internet of Things, and edge computing, multi-source navigation data fusion is expected to evolve towards real-time, intelligent, and distributed processing. To analyze the performance of these methods and provide a reliable theoretical reference for the design and development of multi-source data fusion systems, this paper summarizes the characteristics of these fusion methods and their corresponding application scenarios. The results can serve as a reference for theoretical research, system development, and application in autonomous driving, unmanned vehicle navigation, and intelligent navigation.

MSC: 93B27

1. Introduction

Individually, Global Navigation Satellite Systems (GNSS), Inertial Navigation Systems (INS), Ultra-Wideband (UWB) technology, Bluetooth, Wireless Local Area Networks (WLAN), visual sensors, Pseudolites (PL), and various other sensors struggle to meet demanding navigation performance requirements. GNSS, for instance, as a non-autonomous navigation system, is particularly limited in specific complex environments such as urban canyons and tunnels, where its signals are highly susceptible to blockage, interference, and shielding. To significantly enhance the overall performance of navigation systems, integrated navigation technology emerges as an effective solution. This technology entails the collaborative use of two or more distinct types of navigation systems to measure and calculate the same navigation information, thereby generating quantitative measurements. These measurements are subsequently utilized to compute and correct the errors inherent in each navigation system. By leveraging a diverse array of technical means and methods, this approach ensures high accuracy and reliability of navigation and positioning services across a wide range of scenarios. These scenarios encompass seamless indoor and outdoor positioning, environments with electromagnetic interference, as well as underwater and underground environments. Therefore, multi-source data fusion positioning, which is founded on the collaboration of multiple technology sources and adheres to specific optimization criteria, becomes the linchpin for achieving optimal fusion positioning. The fusion method not only serves as the prerequisite and foundation for all-source navigation but also acts as the key and core of integrated navigation systems.

The concept of data fusion was first introduced by the American systems scientist Bar-Shalom in his seminal article ‘Extension of the Probabilistic Data Association Filter in Multi-Target Tracking’. In this work, he proposed the probabilistic data association filter, which has since become a hallmark of multi-source information fusion technology. Over the years, multi-source data fusion methods have evolved and diversified. Currently, the primary approaches in this field are the switching method, the average weighted fusion method, and the adaptive weighted fusion method. Each addresses specific challenges in data fusion and contributes to the effectiveness and reliability of integrated navigation and positioning systems.

The switching method selects the single best-performing positioning source as the positioning means, based on the performance of the available sources [1]. However, discarding the other positioning sources wastes information and is therefore not the best choice. The average weighted fusion method ignores the differing performance of the positioning sources and assigns the same weight to all of them for fusion localization [2], so it cannot achieve the optimal fusion effect. The adaptive weighted fusion method assigns different weights according to the characteristics of each fusion source to achieve the best fusion positioning [3]. The algorithms corresponding to these three methods mainly include optimal estimation algorithms, weighted and adaptive weighted fusion algorithms [4,5,6], Bayesian Filters (BF), variational Bayesian adaptive estimation [7,8], the Particle Filter (PF), statistical decision theory [9], evidence theory [10], and fuzzy logic [11]. However, each of these algorithms has specific preconditions and application scenarios, and each requires a mathematical model relating the observation information of the navigation source to the system state parameters.
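The difference between the three fusion strategies described above can be illustrated with a minimal sketch. It assumes that each positioning source reports a position estimate together with a known scalar error variance, and that the source errors are independent; inverse-variance weighting stands in here for the adaptive weighted method, which is only one of several possible weighting rules. The function names and the numerical values are hypothetical and are not taken from the cited works.

```python
import numpy as np

def switching_fusion(estimates, variances):
    """Switching method: keep only the source with the smallest error variance."""
    estimates = np.asarray(estimates, dtype=float)
    variances = np.asarray(variances, dtype=float)
    return estimates[np.argmin(variances)]

def average_fusion(estimates):
    """Average weighted method: every source receives the same weight."""
    return np.asarray(estimates, dtype=float).mean(axis=0)

def inverse_variance_fusion(estimates, variances):
    """Weighted fusion with weights proportional to 1/variance.

    Assumes independent source errors; returns the fused estimate,
    its variance, and the normalized weights.
    """
    estimates = np.asarray(estimates, dtype=float)
    variances = np.asarray(variances, dtype=float)
    information = 1.0 / variances
    weights = information / information.sum()
    fused = weights @ estimates
    fused_variance = 1.0 / information.sum()
    return fused, fused_variance, weights

# Hypothetical 2-D position fixes (metres) from three sources and their variances.
estimates = [[10.0, 20.0], [12.0, 22.0], [11.0, 21.0]]
variances = [1.0, 4.0, 4.0]

selected = switching_fusion(estimates, variances)
averaged = average_fusion(estimates)
fused, fused_variance, weights = inverse_variance_fusion(estimates, variances)
```

Under these assumptions the fused variance is smaller than the variance of the best single source, which is the sense in which discarding the other sources wastes information.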

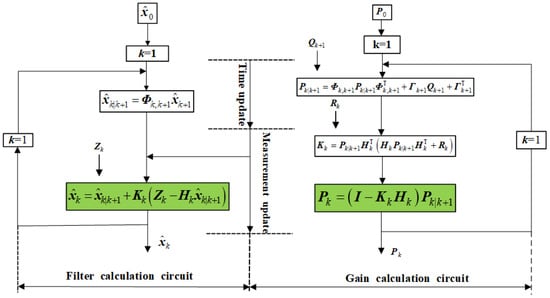

In dynamic positioning applications such as autonomous driving and vehicle navigation, the Kalman Filter (KF) has been widely used because it incorporates a physical motion model. However, the KF is designed primarily for linear systems. For nonlinear systems, such as inertial navigation, the Extended KF (EKF) linearizes the model and discards the second-order and higher terms of the Taylor expansion, so it is suitable only for weakly nonlinear systems. To address the large data storage required by batch processing of the EKF stochastic model, a recursive method for estimating the stochastic model has been proposed [12], and temporal non-local filtering data fusion algorithms have also been introduced [13]. The Unscented KF (UKF) retains accuracy up to the third-order term of the Taylor series, making it suitable for nonlinear estimation, although at a relatively high computational cost. When both the state model and the measurement model are nonlinear, or when the noise is non-Gaussian, the PF can be used; it also applies to systems with uncertain error models. However, the PF requires a proposal (importance) density that closely approximates the true density; otherwise, the filtering performance may be poor or the filter may even diverge. To address this, the Unscented Particle Filter (UPF) algorithm has been developed [14]. However, in both the PF and the UPF, the computational load increases rapidly as the number of particles grows.
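To make the role of the motion model concrete, the following minimal sketch implements the standard predict and update steps of the linear KF and runs them on a one-dimensional constant-velocity model with position-only measurements. The model matrices, noise levels, and the demo function name are illustrative assumptions, not values from the cited literature. An EKF would use the same two steps with the matrices F and H replaced by Jacobians evaluated at the current estimate.

```python
import numpy as np

def kf_predict(x, P, F, Q):
    """Time update: propagate state and covariance through the linear motion model."""
    return F @ x, F @ P @ F.T + Q

def kf_update(x_pred, P_pred, z, H, R):
    """Measurement update: correct the prediction with the observation z."""
    innovation = z - H @ x_pred
    S = H @ P_pred @ H.T + R                # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)     # Kalman gain
    x = x_pred + K @ innovation
    P = (np.eye(x_pred.size) - K @ H) @ P_pred
    return x, P

def run_constant_velocity_demo(n_steps=20, dt=1.0):
    """Track a target moving at 1 m/s from noise-free position measurements."""
    F = np.array([[1.0, dt], [0.0, 1.0]])   # state: [position, velocity]
    H = np.array([[1.0, 0.0]])              # only position is observed
    Q = 1e-4 * np.eye(2)                    # assumed process noise
    R = np.array([[0.25]])                  # assumed measurement noise variance
    x = np.zeros(2)                         # deliberately poor initial guess
    P = 10.0 * np.eye(2)
    for k in range(1, n_steps + 1):
        z = np.array([k * dt])              # true position at step k
        x, P = kf_predict(x, P, F, Q)
        x, P = kf_update(x, P, z, H, R)
    return x, P

x_final, P_final = run_constant_velocity_demo()
```

Although velocity is never measured directly, the filter recovers it through the motion model, which illustrates why the KF suits dynamic positioning.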

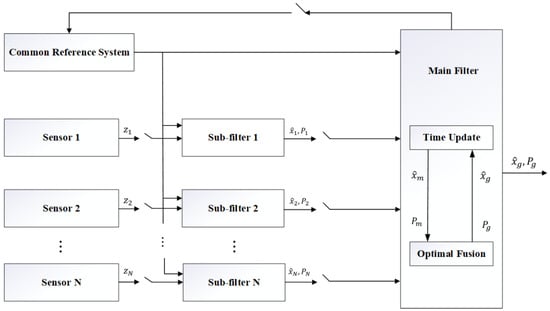

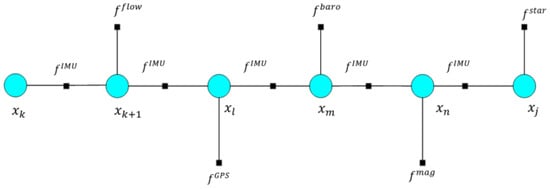

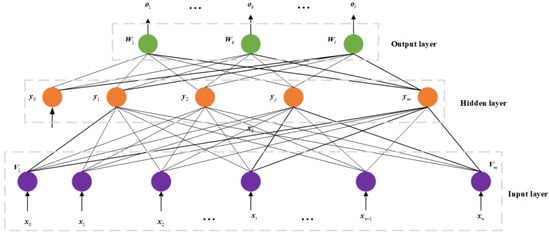

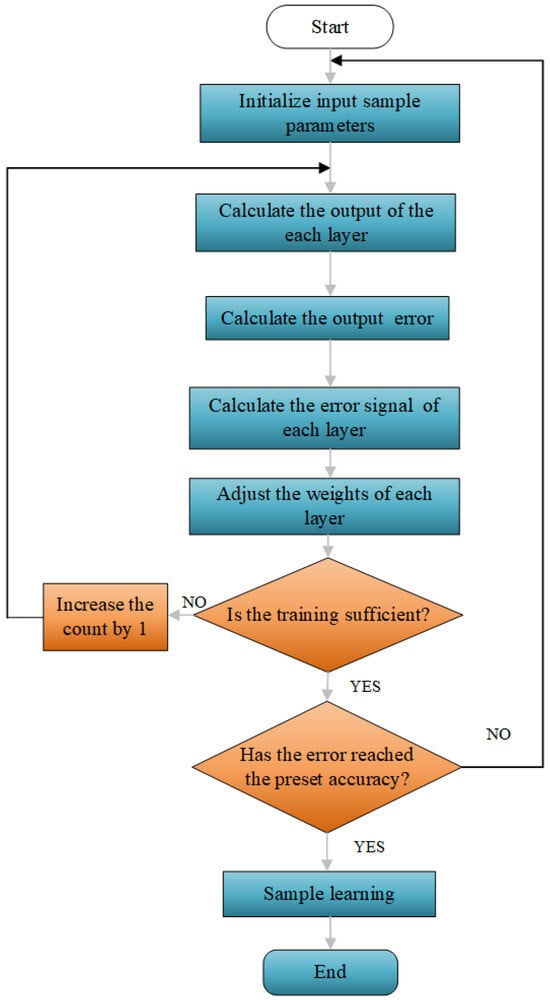

With growing user demand for more comprehensive and intelligent navigation and positioning performance, fusion methods such as Factor Graphs (FG) and neural networks have been introduced. For example, FG algorithms have been extensively applied in single GNSS positioning, GNSS/INS integrated positioning, ambiguity resolution, and robust estimation [15,16,17,18]. To enhance positioning accuracy in urban environments, FG algorithms have been optimized and improved [19,20]. These studies have demonstrated that, under certain conditions, FG algorithms exhibit higher computational accuracy and robustness than the EKF. In 1965, Magill proposed the Multiple Model Estimation (MME) method [21], which enhances the adaptability of system models to real systems and to changes in external environments under complex conditions, thereby improving the accuracy and stability of filtering estimates. In MME, the design of the model set, the selection of filters, estimation fusion, and the reset of filter inputs are all important aspects. To improve the fault tolerance of integrated navigation systems, Carlson introduced the Federated Filtering (FF) theory in 1988 [22]. This theory has been applied in indoor navigation, robotic navigation, and vision–language tasks. Existing Artificial Intelligence (AI) algorithms mainly include fuzzy control adaptive algorithms and neural network adaptive algorithms [23,24]. For example, to address the impact of random disturbances on systems in underwater environments, Radial Basis Function (RBF) neural network-assisted FF has been employed for information fusion [25]. By establishing a black box model through offline training on sufficiently accurate samples, the positioning accuracy and adaptability of the algorithm have been improved. To tackle the high cost and weather susceptibility of existing high-precision satellite navigation for agricultural machinery, Yu et al. (2021) proposed a multi-sensor fusion automatic navigation method for farmland based on D-S-CNN [26]. However, these AI algorithms require extensive training data, comprehensive pre-training of the system, and significant computational resources. They often struggle to ensure real-time performance and are therefore typically used for post-processing.
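As an illustration of how a federated filter combines its local filters, the sketch below implements the commonly used information-weighted fusion in the master filter and the subsequent reset of the local filters with information-sharing coefficients that sum to one. It assumes that the local estimates are uncorrelated and share the same state vector; the function names and test values are hypothetical, and the sketch omits the time updates and fault detection of a complete federated filter.

```python
import numpy as np

def federated_fuse(local_states, local_covs):
    """Master-filter fusion: sum the local information matrices and vectors."""
    infos = [np.linalg.inv(P) for P in local_covs]
    P_global = np.linalg.inv(sum(infos))
    x_global = P_global @ sum(info @ x for info, x in zip(infos, local_states))
    return x_global, P_global

def federated_reset(x_global, P_global, betas):
    """Feed the global solution back to the local filters.

    Each local filter i receives the global state and the covariance
    P_global / beta_i, where the information-sharing coefficients beta_i
    sum to one so that the total information is conserved.
    """
    betas = np.asarray(betas, dtype=float)
    if not np.isclose(betas.sum(), 1.0):
        raise ValueError("information-sharing coefficients must sum to 1")
    return [(x_global.copy(), P_global / beta) for beta in betas]

# Two hypothetical local filters estimating the same 2-D state.
x_g, P_g = federated_fuse(
    [np.array([1.0, 2.0]), np.array([3.0, 4.0])],
    [np.eye(2), np.eye(2)],
)
local_filters = federated_reset(x_g, P_g, [0.5, 0.5])
```

Because the coefficients sum to one, fusing the reset local filters again reproduces the same global estimate, so no information is counted twice.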

Recently, researchers in many countries have studied integrated navigation systems extensively. For instance, researchers at Linköping University in Sweden proposed an integrated navigation system that combines GPS, INS, and visible-light vision assistance [27]; when GPS fails, the system relies on the vision system and INS for positioning. Locata Corporation in Australia has integrated the Locata system with GPS, INS, vision systems, and Simultaneous Localization and Mapping (SLAM), achieving high-precision applications of the Locata system in both indoor and outdoor environments [28]. A communication and navigation fusion system has been applied for seamless positioning across wide-area indoor and outdoor spaces [29]. A multi-frequency ground-penetrating radar data fusion system has been used to combine data from antennas working in different frequency ranges [30], while multi-sensor data fusion has been employed for airspeed measurement fault detection in drones [3]. Additionally, an indoor mobile robot localization system based on the fusion of dead reckoning data and Quick Response (QR) code detection [31] and an IoT-based multi-sensor fusion strategy for occupancy sensing in smart buildings [32] have been developed. Systems that integrate vision, inertial navigation, and asynchronous optical tracking with Inertial Measurement Units (IMU) have also been implemented. Furthermore, several research teams have developed open-source integrated navigation systems for use by academic and industrial technical personnel [33,34,35].

Although the aforementioned studies include extensive research and testing on multi-source data fusion methods, fusion systems, and their applications, the theories and models of these methods have their specific applicable scenarios and conditions. Therefore, this paper summarizes the fundamental principles and mathematical models of multi-source data fusion methods, analyzes the advantages and disadvantages of different fusion approaches, and provides theoretical support and reference for the design, development, and application of fusion systems.

4. Summary of Features and Application Scenarios for Multi-Source Fusion Methods

The introduced methods for multi-source data fusion processing show differences in terms of optimization criteria, fundamental principles, mathematical models, prior information, number of observations, and application scenarios. To enable users to make targeted selections of different fusion methods when developing integrated systems, we have summarized the main characteristics and applicable scenarios of the fusion methods introduced, as shown in Table 9.

Table 9. Main characteristics and applicable scenarios of fusion methods.

5. Conclusions

This paper provides a detailed overview of the algorithms corresponding to multi-source fusion processing methods. It summarizes the fundamental principles of these algorithms and briefly introduces their mathematical models, key characteristics, and application scenarios, offering theoretical and technical support for intelligent navigation, driverless vehicles, autonomous navigation, and related fields. Due to limitations in theoretical understanding and technical conditions, all existing fusion algorithms exhibit shortcomings. Currently, no single fusion algorithm can fully meet the requirements of multi-source integrated navigation systems. Therefore, appropriate fusion algorithms must be selected based on practical needs and application contexts. The historical development of these fusion algorithms reveals their interdisciplinary nature, combining theories and methodologies from integrated navigation, GNSS data processing, satellite geodesy, probability theory and mathematical statistics, computer science, and artificial intelligence. Consequently, multi-source integrated navigation algorithms should not be confined to traditional positioning and navigation approaches. Instead, they should continuously incorporate insights from other disciplines, foster mutual learning and advancement across fields, and generate innovative theories and methods through interdisciplinary integration. This evolution aims to deliver high-precision, high-reliability positioning, navigation, and timing services across all temporal and spatial domains, which represents the future development trend of multi-source integrated navigation systems.

Author Contributions

Conceptualization, X.M. and X.H.; methodology, X.M.; software, P.Z.; validation, X.H. and P.Z.; formal analysis, P.Z.; investigation, P.Z.; resources, X.M.; data curation, P.Z.; writing—original draft preparation, X.M.; writing—review and editing, X.H.; visualization, P.Z.; supervision, X.H.; project administration, X.H.; funding acquisition, X.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (42364002, 42274039), the Major Discipline Academic and Technical Leaders Training Program of Jiangxi Province (20225BCJ23014), the Key Research and Development Program Project of Jiangxi Province (20243BBI91033), the Xi'an Science and Technology Plan Project (24ZDCYJSGG0015), the State Key Laboratory of Satellite Navigation System and Equipment Technology (CEPNT2023B02), and the Chongqing Municipal Education Commission Science and Technology Research Project (KJQN202403241).

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zou, D.; Meng, W.; Han, S. Euclidean Distance Based Handoff Algorithm for Fingerprint Positioning of Wlan System. In Proceedings of the 2013 IEEE Wireless Communications and Networking Conference (WCNC), Shanghai, China, 7–10 April 2013; pp. 1564–1568.

- Bhujle, H. Weighted-average Fusion Method for Multiband Images. In Proceedings of the 2016 International Conference on Signal Processing and Communications (SPCOM), Bangalore, India, 12–15 June 2016; pp. 1–5.

- Guo, X.; Li, L.; Ansari, N.; Liao, B. Knowledge Aided Adaptive Localization via Global Fusion Profile. IEEE Internet Things J. 2018, 5, 1081–1089.

- Bar-Shalom, Y.; Li, X. Multitarget-Multisensor Tracking: Principles and Techniques. Aerosp. Electron. Syst. Mag. IEEE 1996, 16, 93.

- Hoang, M.; Denis, B.; Harri, J.; Slock, M. Robust and Low Complexity Bayesian Data Fusion for Hybrid Cooperative Vehicular Localization. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–6.

- Zhang, D.; Liu, J.; Lv, Y.; Wei, X. A Weighted Fusion Algorithm for Multi-sensors. In Proceedings of the 2016 Sixth International Conference on Instrumentation Measurement, Computer, Communication and Control (IMCCC), Harbin, China, 21–23 July 2016; pp. 808–811.

- Sun, T.; Yu, M. Research on Multi-source Data Fusion Method Based on Bayesian Estimation. In Proceedings of the 2016 9th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 10–11 December 2016; Volume 2, pp. 321–324.

- Li, X.; Li, J.; Wang, A. A review of integrated navigation technology based on visual/inertial/UWB fusion. Sci. Surv. Mapp. 2023, 48, 49–58.

- Berger, J. Statistical Decision Theory and Bayesian Analysis; Springer: Berlin/Heidelberg, Germany, 2002; Volume 83, p. 266.

- Zhang, Y.; Liu, Y.; Zhang, Z.; Chao, H.; Zhang, J.; Liu, Q. A Weighted Evidence Combination Approach for Target Identification in Wireless Sensor Networks. IEEE Access 2017, 5, 21585–21596.

- Arikumar, K.; Natarajan, V.; Clarence, L.; Priyanka, M. Efficient Fuzzy Logic Based Data Fusion in Wireless Sensor Networks. In Proceedings of the 2016 Online International Conference on Green Engineering and Technologies (IC-GET), Coimbatore, India, 19 November 2016; pp. 1–6.

- Zhang, X.; Lu, X. Recursive estimation of the stochastic model based on the Kalman filter formulation. GPS Solut. 2021, 25, 24.

- Cheng, Q.; Liu, H.; Shen, H.; Wu, P.; Zhang, L. A Spatial and Temporal Nonlocal Filter-Based Data Fusion Method. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4476–4488.

- Merwe, R.; Doucet, A.; Freitas, N.; Wan, E. The Unscented Particle Filter. In Proceedings of the 14th International Conference on Neural Information Processing Systems, Denver, CO, USA, 1 January 2001; Volume 13.

- Watson, R.; Gross, J. Robust Navigation in GNSS Degraded Environment Using Graph Optimization. In Proceedings of the 30th International Technical Meeting of the Satellite Division of the Institute of Navigation (ION GNSS+ 2017), Portland, OR, USA, 25–29 September 2017; pp. 2906–2918.

- Wen, W.; Pfeifer, T.; Bai, X.; Hsu, L. It is time for Factor Graph Optimization for GNSS/INS Integration: Comparison between FGO and EKF. arXiv 2020, arXiv:2004.10572.

- Gao, H.; Li, H.; Huo, H.; Yang, C. Robust GNSS Real-Time Kinematic with Ambiguity Resolution in Factor Graph Optimization. In Proceedings of the 2022 International Technical Meeting of The Institute of Navigation, Long Beach, CA, USA, 25–27 January 2022; pp. 835–843.

- Zhang, T.; Wang, G.; Chen, Q.; Tang, H.; Wang, L.; Niu, X. Influence Analysis of IMU Scale Factor Error in GNSS/MEMS IMU vehicle integrated navigation. J. Geod. Geodyn. 2024, 44, 134–137.

- Liao, J.; Li, X.; Feng, S. GVIL: Tightly-Coupled GNSS PPP/Visual/INS/LiDAR SLAM Based on Graph Optimization. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1204–1215.

- Zhang, X.; Zhang, Y.; Zhu, F. Factor Graph Optimization for Urban Environment GNSS Positioning and Robust Performance Analysis. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1050–1057.

- Magill, D. Optimal Adaptive Estimation of Sampled Stochastic Processes. IEEE Trans. Autom. Control 1965, 10, 434–439.

- Carlson, N. Federated filter for fault-tolerant integrated navigation systems. In Proceedings of the IEEE PLANS 88, Orlando, FL, USA, 29 November–2 December 1988; pp. 110–119.

- Bian, H.; Jin, Z.; Tian, W. Analysis of Adaptive Kalman Filter Based on Intelligent Information Fusion Techniques in Integrated Navigation System. Syst. Eng. Electron. 2004, 26, 1449–1452.

- Ghamisi, P.; Hofle, B.; Zhu, X. Hyperspectral and Lidar Data Fusion Using Extinction Profiles and Deep Convolutional Neural Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3011–3024.

- Li, P.; Xu, X.; Zhang, X. Application of intelligent Kalman Filter to Underwater Terrain Integrated Navigation System. J. Chin. Inert. Technol. 2011, 19, 579–589.

- Yu, J.; Lu, W.; Zeng, M.; Zhao, S. Low-cost Agricultural Machinery Intelligent Navigation Method Based on Multi-Sensor Information Fusion. China Meas. Test 2021, 47, 106–119.

- Conte, G.; Doherty, P. Vision-Based Unmanned Aerial Vehicle Navigation Using Geo-Referenced Information. EURASIP J. Adv. Signal Process. 2009, 2009, 387308.

- Montillet, J.; Bonenberg, L.; Hancock, C.; Roberts, G. On the Improvements of the Single Point Positioning Accuracy with Locata Technology. GPS Solut. 2014, 18, 273–282.

- Deng, Z.; Yu, Y.; Yuan, X.; Wan, N.; Yang, L. Situation and Development Tendency of Indoor Positioning. China Commun. 2013, 10, 42–55.

- Coster, D.; Lambot, S. Fusion of Multifrequency GPR Data Freed from Antenna Effects. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 2, 1–11.

- Nazemzadeh, P.; Fontanelli, D.; Macii, D. Indoor Localization of Mobile Robots through QR Code Detection and Dead Reckoning Data Fusion. IEEE/ASME Trans. Mechatron. 2017, 22, 2588–2599.

- Nesa, N.; Banerjee, I. IoT-Based Sensor Data Fusion for Occupancy Sensing Using Dempster–Shafer Evidence Theory for Smart Buildings. Internet Things J. 2017, 4, 1563–1570.

- Chen, K.; Chang, G.; Chen, C. GINav: A MATLAB-based Software for the Data Processing and Analysis of A GNSS/INS Integrated Navigation System. GPS Solut. 2021, 25, 108.

- Chi, C.; Zhang, X.; Liu, J.; Sun, Y.; Zhang, Z.; Zhan, X. GICI-LIB: A GNSS/INS/Camera Integrated Navigation Library. arXiv 2023, arXiv:2306.13268.

- Li, X.; Huang, J.; Li, X.; Yuan, Y.; Zhang, K.; Zheng, H.; Zhang, W. GREAT: A Scientific Software Platform for Satellite Geodesy and Multi-Source Fusion Navigation. Adv. Space Res. 2024, 74, 1751–1769.

- Koch, K. Least Squares Adjustment and Collocation. Bull. Geod. 1977, 51, 127–135.

- Cui, X.; Yu, Z.; Tao, B.; Liu, D.; Yu, Z.; Sun, H.; Wang, X. Generalized Surveying Adjustment; Wuhan University Press: Wuhan, China, 2001.

- He, X.; Wang, T.; Liu, W.; Luo, T. Measurement Data Fusion Based on Optimized Weighted Least-Squares Algorithm for Multi-Target Tracking. IEEE Access 2019, 7, 13901–13916.

- Zhang, Q.; Zhang, P.; Li, T. Information Fusion for Large-Scale Multi-Source Data Based on the Dempster-Shafer Evidence Theory. Inf. Fusion 2025, 115, 102754.

- Huang, W. Modern Adjustment Theory and Its Applications; PLA Press: Beijing, China, 1992.

- Kalman, R. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Jazwinski, A. Stochastic Processes and Filtering Theory; Academic Press: New York, NY, USA, 1970.

- Simon, D. Optimal State Estimation-Kalman, H∞ and Nonlinear Approaches; John Wiley & Sons: Hoboken, NJ, USA, 2006.

- Bierman, G.; Belzer, M. A Decentralized Square Root Information Filter/Smoother. In Proceedings of the NAECON, Dayton, OH, USA, 18–22 May 1987; pp. 1448–1456.

- Li, J.; Hao, S.; Huang, G. Modified Strong Tracking Filter based on UD Decomposition. Syst. Eng. Electron. 2009, 31, 1953–1957.

- Mehra, R. Approaches to adaptive filtering. IEEE Trans. Autom. Control 1972, 17, 693–698.

- Athans, M.; Wisher, R.; Bertolini, A. Suboptimal State Estimation Algorithm for Continuous-Time Nonlinear Systems from Discrete Measurements. IEEE Trans. Autom. Control 1968, AC-13, 504–515.

- Wang, G.; Fan, X.; Zhao, J.; Yang, C.; Ma, L.; Dai, W. Iterated Maximum Mixture Correntropy Kalman Filter and Its Applications in Tracking and Navigation. IEEE Sens. J. 2024, 24, 27790–27802.

- Wang, G.; Zhang, Z.; Yang, C.; Ma, L.; Dai, W. Robust EKF Based on Shape Parameter Mixture Distribution for Wireless Localization with Time-Varying Skewness Measurement Noise. IEEE Trans. Instrum. Meas. 2025, 74, 1–10.

- Julier, S.; Uhlmann, J.; Durrant-Whyte, H. A new Approach for Filtering Nonlinear Systems. In Proceedings of the 1995 American Control Conference-ACC ’95, Seattle, WA, USA, 21–23 June 1995; Volume 3, pp. 1628–1632.

- Wan, E.; Van Der Merwe, R. The Unscented Kalman Filter for Nonlinear Estimation. In Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, And Control Symposium (Cat. No. 00EX373), Lake Louise, AB, Canada, 4 October 2000; pp. 153–158.

- Ye, L.; Anxi, Y.; Amp, J. Unscented Kalman Filtering in the Additive Noise Case. Sci. China Technol. Sci. 2010, 53, 929–941.

- Shi, C.; Teng, W.; Zhang, Y.; Yu, Y.; Chen, L.; Chen, R.; Li, Q. Autonomous Multi-Floor Localization Based on Smartphone-Integrated Sensors and Pedestrian Indoor Network. Remote Sens. 2023, 15, 2933.

- Cahyadi, N.; Asfihani, T.; Suhandri, F.; Erfianti, R. Unscented Kalman Filter for a Low-Cost GNSS/IMU-based Mobile Mapping Application Under Demanding Conditions. Geod. Geodyn. 2024, 15, 166–176.

- Wiener, N. The theory of prediction. Mod. Math. Eng. 1956, 165, 6.

- Doucet, A.; De Freitas, N.; Gordon, N. Sequential Monte Carlo Methods in Practice; Springer: New York, NY, USA, 2001.

- Gray, J.; Murray, W. A Derivation of An Analytical Expression for the Tracking Index for the Alpha-Beta-Gamma Filtering. IEEE Trans. Aerosp. Electron. Syst. 1993, 29, 1064–1065.

- MacCormick, J.; Blake, A. A probabilistic Exclusion Principle for Tracking Multiple Objects. In Proceedings of the International Conference on Computer Vision, Corfu, Greece, 20–25 September 1999; pp. 572–578.

- Moral, P. Measure Valued Process and Interacting Particle Systems: Application to Non-Linear Filtering Problems. Ann. Appl. Probab. 1998, 8, 438–495.

- Kitagawa, G. Monte Carlo Filter and Smoother for Non-Gaussian Nonlinear State Space Models. J. Comput. Graph. Stat. 1996, 5, 1–25.

- Crisan, D.; Doucet, A. A Survey of Convergence Results on Particle Filtering Methods for Practitioners. IEEE Trans. Signal Process. 2002, 50, 736–746.

- Andrieu, C.; Doucet, A.; Singh, S.; Tadic, V. Particle Methods for Change Detection, System Identification and Control. Proc. IEEE 2004, 92, 428–438.

- Wang, E.; Pang, T.; Qu, P.; Cai, M.; Zhang, Z. GPS receiver Autonomous Integrity Monitoring Algorithm Based on Improved Particle Filter. Tele-Commun. Eng. 2014, 54, 437–441.

- Wang, E.; Qu, P.; Pang, T.; Qu, P.; Cai, M.; Zhang, Z. Receiver Autonomous Integrity Monitoring Based on Particle Swarm Optimization Particle Filter. J. Beijing Univ. Aeronaut. Astronaut. 2016, 42, 2572–2578.

- Yun, L.; Shu, S.; Gang, H. A Weighted Measurement Fusion Particle Filter for Nonlinear Multisensory Systems Based on Gauss–Hermite Approximation. Sensors 2017, 17, 2222.

- Bi, X. HUS Firefly Algorithm with High Precision Mixed Strategy Optimized Particle Filter. J. Shanghai Jiaotong Univ. 2019, 53, 232–238.

- Pearson, J. Dynamic Decomposition Techniques in Optimization Methods for Large-Scale System; McGraw-Hill: New York, NY, USA, 1971; p. 67.

- Speyer, J. Computation and Transmission Requirements for A Decentralized Linear-Quadratic-Gaussian Control Problem. IEEE Trans. Autom. Control 1979, AC-24, 266–269.

- Willsky, A.; Bello, M.; Castanon, D.; Levy, B.; Verghese, G. Combining and Updating of Local Estimates and Regional Maps Along Sets of One-Dimensional Tracks. IEEE Trans. Autom. Control 1982, AC-27, 799–813.

- Kerr, T. Decentralized filtering and Redundancy Management for Multisensor Navigation. IEEE Trans. Aerosp. Electron. Syst. 1987, AES-23, 83–119.

- Loomis, P.; Carlson, N.; Berarducci, M. Common Kalman filter: Fault-Tolerant Navigation for Next Generation Aircraft. In Proceedings of the 1988 National Technical Meeting of The Institute of Navigation, Santa Barbara, CA, USA, 26–29 January 1988; pp. 38–45.

- Li, X. Hybrid Estimation Techniques in Control and Dynamic Systems: Advances in Theory and Applications; Academic Press: New York, NY, USA, 1996; Volume 76, pp. 213–287.

- Blom, H.; Bar-Shalom, Y. The Interacting Multiple Model Algorithm for Systems with Markovian Switching Coefficients. IEEE Trans. Autom. Control 1988, 33, 780–783.

- Luan, Z.; Yu, C.; Gu, B.; Zhao, X. A Time-varying IMM Fusion Target Tracking Method. Radar Navig. 2021, 47, 111–116.

- Tian, Y.; Yan, Y.; Zhong, Y.; Li, J.; Meng, Z. Data Fusion Method Based on IMM-Kalman for An Integrated Navigation System. J. Harbin Eng. Univ. 2022, 43, 973–978.

- Yang, H.; Wang, M.; Wang, Y.; Wu, Y. Multiple-Mode Self-Calibration Unscented Kalman Filter Method. J. Aerosp. Power 2024, 1–7.

- Kschischang, F.; Frey, B.; Loeliger, H. Factor Graphs and the Sum-Product Algorithm. IEEE Trans. Inf. Theory 2002, 47, 498–519.

- Zhou, Z. Machine Learning; Tsinghua University Press: Beijing, China, 2016. (In Chinese)

- McClelland, J.; Rumelhart, D.; PDP Research Group. Parallel Distributed Processing; MIT Press: Cambridge, MA, USA, 1987.

- Vapnik, V. An Overview of Statistical Learning Theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

- Cubuk, E.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q. AutoAugment: Learning Augmentation Strategies from Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How Transferable Are Features in Deep Neural Networks. Adv. Neural Inf. Process. Syst. 2014, 27, 3320–3328.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

- Radford, A.; Kim, J.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, PMLR, Ft. Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged Consistency Targets Improve Semi-Supervised Deep Learning Results. Adv. Neural Inf. Process. Syst. 2017, 30, 1195–1204.

- Settles, B. Active Learning Literature Survey; University of Wisconsin-Madison: Madison, WI, USA, 2009.

- Côté, P.; Nikanjam, A.; Ahmed, N.; Humeniuk, D.; Khomh, F. Data Cleaning and Machine Learning: A Systematic Literature Review. Autom. Softw. Eng. 2024, 31, 54.

- Zhang, N.; Poole, D. Exploiting Causal Independence in Bayesian Network Inference. J. Artif. Intell. Res. 1996, 5, 301–328.

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976.

- Wang, X.; Li, X.; Liao, J.; Feng, S.; Li, S.; Zhou, Y. Tightly Coupled Stereo Visual-Inertial-LiDAR SLAM based on graph optimization. Acta Geod. Cartogr. Sin. 2022, 51, 1744–1756.

- Zhu, H.; Wang, F.; Zhang, W.; Luan, M.; Cheng, Y. Real-Time Precise Positioning Method for Vehicle-Borne GNSS/MEMS IMU Integration in Urban Environment. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1232–1240.

- Li, Y.; Yang, Y.; He, H. Effects Analysis of Constraints on GNSS/INS Integrated Navigation. Geomat. Inf. Sci. Wuhan Univ. 2017, 42, 1249–1255.

- Gao, Z. Research on The Methodology and Application of the Integration Between the Multi-Constellation GNSS PPP and Inertial Navigation System. Ph.D. Thesis, Wuhan University, Wuhan, China, 2016.

- Li, W.; Li, W.; Cui, X.; Zhao, S.; Lu, M. A tightly coupled RTK/INS Algorithm with Ambiguity Resolution in the Position Domain for Ground Vehicles in Harsh Urban Environments. Sensors 2018, 18, 2160.

- Liu, F. Research on High-Precision Seamless Positioning Model and Method Based on Multi-Sensor Fusion. Acta Geod. Cartogr. Sin. 2021, 50, 1780.

- Yu, H.; Li, Z.; Wang, J.; Han, H. Data Fusion for GPS/INS Tightly-Coupled Positioning System with Equality and Inequality Constraints Using an Aggregate Constraint Unscented Kalman filter. J. Spat. Sci. 2020, 65, 377–399.

- Cao, Z. Indoor and Outdoor Seamless Positioning Based on the Integration of GNSS/INS/UWB. Master’s Thesis, Beijing Jiaotong University, Beijing, China, 2021.

- Yuan, L.; Zhang, S.; Wang, J.; Guo, H. GNSS/INS integrated navigation aided by sky images in urban occlusion environment. Sci. Surv. Mapp. 2023, 48, 1–8.

- Wang, J.; Ling, H.; Jiang, W.; Cai, B. Integrated Train Navigation system based on Full state fusion of Multi-constellation satellite positioning and inertial navigation. J. China Railw. Soc. 2022, 44, 45–52.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).