Abstract

In recent years, graph neural networks (GNNs) have been widely applied in recommendation systems. However, most existing GNN models do not fully consider the complex relationships between heterogeneous nodes and ignore the high-order semantic information in the interactions between different types of nodes, which limits recommendation performance. To address these issues, this paper proposes a heterogeneous graph neural network recommendation model based on high-order semantics and node attention (HAS-HGNN). First, HAS-HGNN aggregates the features of directly neighboring nodes through an interest aggregation layer to capture information about the items that users are interested in. Capturing the features of directly interacting nodes in this way can effectively uncover users’ potential interests. Second, considering that users with multiple interactions may share similar interests, the common interest feature capture layer uses semantic relationships to capture the features of users with the same interests, generating common interest features among these users. Finally, a feature fusion layer combines each user’s direct interest features with the common interest features shared with other users to generate the final feature representation. Experimental results show that the proposed model significantly outperforms existing baseline methods on multiple real-world datasets, providing new insights and methods for the application of heterogeneous graph neural networks in recommendation systems.

MSC:

94-11

1. Introduction

With the rapid development of internet technology, recommendation systems are playing an increasingly important role in fields such as e-commerce, social media, and online education [1]. By analyzing users’ historical behavior data, recommendation systems predict users’ interests and preferences, thereby providing personalized recommendations. Traditional recommendation methods, such as collaborative filtering [2] and content-based recommendation [3], although achieving significant results in the early stages, have gradually revealed issues such as data sparsity and cold start as data scales expand and user demands diversify. In recent years, graph neural networks (GNNs) [4] have gradually become a hot topic in recommendation system research due to their powerful ability to handle graph-structured data. GNNs can effectively capture the complex relationships between users and items and generate high-quality recommendation results through the information propagation mechanism of graph structures.

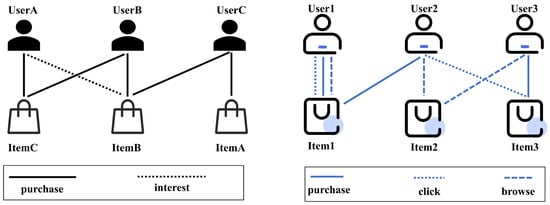

Graphs, as a natural expression of complex relationships in the real world, hold a significant position in recommendation systems. In recommendation systems, the interactions between users and products can be naturally represented as a graph structure, where nodes represent users or products, and edges represent interaction behaviors between users and products (such as clicks, purchases, browsing, etc.). Through the graph structure, recommendation systems can not only capture the direct relationships between users and products but also uncover high-order semantic information hidden within complex networks. For example, as shown in Figure 1, if both User A and User B purchased Product C, then User A might also be interested in other products purchased by User B. This high-order semantic information is of great significance for improving the accuracy of recommendation systems. Additionally, the graph structure can also effectively handle heterogeneous data, distinguishing different types of nodes and edges, thereby better reflecting the complex relationships in the real world.

Figure 1.

Multi-relational heterogeneous graph.

However, most existing GNN models assume that the nodes and edges in the graph are homogeneous (i.e., all nodes and edges belong to the same type), an assumption that often does not hold in practical applications. For example, as shown in Figure 1, in an e-commerce platform, users and products are two different types of nodes, and the interaction relationships between them (such as clicks, purchases, and browsing) also have different semantic meanings. This heterogeneity makes it difficult to apply traditional GNN models directly to recommendation on heterogeneous graphs. To address this heterogeneity, heterogeneous graph neural networks have been proposed.

Heterogeneous graph neural networks (HGNNs) can distinguish different types of nodes and edges and utilize various paths to capture the rich semantic information in the graph. However, existing HGNNs mostly extract features from metapaths or meta-graphs using different feature extraction techniques, overlooking users’ direct interests and shared interests among users. As shown in Figure 1, through the metapath U-I-U, User1 can extract features of User2, who shares the same interests, but Item1, as User1’s interest product, is ignored. In this case, although neighbor information under the metapath is captured, the user’s direct interest features are lost. Meanwhile, User1 and User2 share the same interest (Item1), and this shared interest plays a crucial role in recommendation tasks because shared interests among users can be extensive. Therefore, aggregating features of users with the same interests can significantly enhance recommendation accuracy, but existing HGNN methods often overlook these issues.

Based on the above analysis, this paper proposes HAS-HGNN, a heterogeneous graph neural network recommendation model based on high-order semantics and node attention. The model consists of three parts: the interest aggregation layer, the common interest feature capture layer, and the feature fusion layer. To capture the direct interest features of users, the interest aggregation layer uses an attention mechanism to learn the features of directly connected items, thereby generating user interest information. Considering the widespread interest similarity among users, the common interest feature capture layer creates direct connections between users who share the same interests and aggregates the features of these users through convolutional attention. Finally, the feature fusion layer fuses the embeddings from the two preceding layers using an attention mechanism to generate the final feature embedding representation.

The main contributions of this paper are as follows:

1. HAS-HGNN generates users’ direct interest features through the interest aggregation layer.

2. HAS-HGNN captures the common interest features among users through the common interest feature capture layer, thereby making recommendations based on the common interests among users.

3. The effectiveness of the model is verified through experiments on real-world datasets.

The research in this paper provides new ideas and methods for the application of heterogeneous graph neural networks in recommendation systems, which has significant theoretical and practical implications.

Nevertheless, existing HGNNs encounter several critical challenges.

2. Related Work

Recommendation systems and graph neural networks have been hot research areas in recent years, with significant progress made in both directions. This study is primarily based on heterogeneous graph neural networks and attention mechanisms, aiming to address the complex relationships among heterogeneous nodes and the insufficient capture of high-order semantic information in recommendation systems. The following introduces related research work from three aspects: graph neural networks, heterogeneous graph neural networks, and recommendation systems.

1. Graph Neural Networks (GNNs)

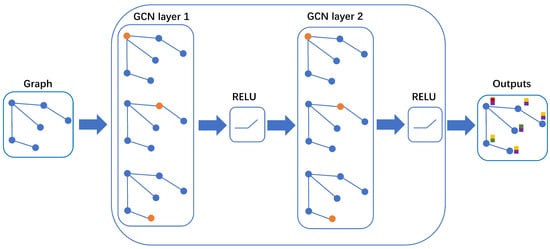

Graph neural networks (GNNs) are a class of deep learning models specifically designed for processing graph-structured data. GNNs aggregate information from a node’s neighbors to the central node through a message-passing mechanism, thereby generating node representations. Classic GNN models include graph convolutional networks (GCNs), graph attention networks (GATs), and graph isomorphism networks (GINs). GCNs [5] aggregate the features of neighboring nodes through convolutional operations to generate node representations. They perform well on homogeneous graphs (where all nodes and edges are of the same type) but have limitations when dealing with heterogeneous graphs. The GCN is the most fundamental graph neural network model, and its structure is shown in Figure 2. GATs [6] dynamically adjust the importance of neighboring nodes by introducing an attention mechanism, thereby enhancing the model’s expressive power. GATs have certain advantages when processing heterogeneous graphs but still do not fully consider the heterogeneity of nodes and edges. GINs [7] analyze the local connectivity of nodes and the structural features of graphs by combining sum aggregation over neighbors with multi-layer perceptrons, making them suitable for graph classification and node classification tasks.

Figure 2.

Structure diagram of a GCN model.

Although GNNs have achieved significant results on homogeneous graphs, they still face challenges when dealing with heterogeneous graphs, especially in effectively capturing the complex relationships between heterogeneous nodes.

2. Heterogeneous Graph Neural Network (HGNN)

Heterogeneous graph neural networks (HGNNs) [8] are specifically designed for processing heterogeneous graphs. Nodes and edges in heterogeneous graphs have multiple types, which can better reflect complex relationships in the real world. In recent years, many research efforts have been dedicated to developing HGNN models. HAN (heterogeneous graph attention network) [9] captures node and semantic information in heterogeneous graphs through a hierarchical attention mechanism. HAN introduces attention at both the node level and the semantic level, effectively handling complex relationships in heterogeneous graphs. MAGNN (metapath aggregated graph neural network) [10] learns the importance of nodes through metapath aggregation and designs corresponding attention mechanisms. MAGNN can capture high-order semantic information in heterogeneous graphs but still has limitations when dealing with multi-relational heterogeneous graphs. RippleNet [11,12] is a recommendation model based on knowledge graphs, which diffuses user interests by simulating the propagation of ripples. RippleNet can capture high-order user interests but shows limited performance when handling heterogeneous node relationships. KGAT (knowledge graph attention network) [13] explicitly models high-order connections in knowledge graphs and uses attention mechanisms to distinguish the importance of neighboring nodes. KGAT performs well in processing heterogeneous graphs but still does not fully consider high-order semantic information in user–item interactions. LSPI [14] optimizes different paths through a large–small path discrimination approach to improve aggregation performance. HGNN-BRFE [15] replaces metapaths with region sets, performing feature aggregation within regions to capture richer semantic information. HGNN-GAMS [16] generates features for different edges to capture adjacent edge information for feature aggregation.

3. Recommendation System

The core objective of a recommendation system is to predict users’ interests and preferences by analyzing their historical behavior data, thereby providing personalized recommendations [17]. Traditional recommendation methods mainly include collaborative filtering and content-based recommendation [18]. Collaborative filtering generates recommendations by analyzing the user–item interaction matrix to find users or items with similar behaviors. Classic collaborative filtering methods include user-based collaborative filtering (User-based CF) and item-based collaborative filtering (Item-based CF).

However, collaborative filtering methods face issues of data sparsity and the cold start problem. Content-based recommendation generates recommendations by analyzing the match between item features (such as text, images, etc.) and user interests. This method can alleviate the cold start problem but relies on high-quality item feature data.

In recent years, with the development of deep learning technologies, neural network-based recommendation methods have gradually become mainstream [19]. For example, Neural Collaborative Filtering (NCF) [20] models user–item interactions through neural networks, enhancing the accuracy of recommendations. However, these methods mostly overlook the high-order semantic information between users and items.

3. Proposed Model

The proposed heterogeneous graph neural network recommendation model based on high-order semantics and node attention (HAS-HGNN) aims to address the issues of complex heterogeneous node relationships and insufficient capture of high-order semantic information in existing recommendation systems. HAS-HGNN achieves this goal through three core modules: the interest aggregation module, the common interest feature capture module, and the feature fusion and attention mechanism module. The design of each module targets specific challenges in recommendation systems, ultimately generating rich node representations for precise recommendation tasks.

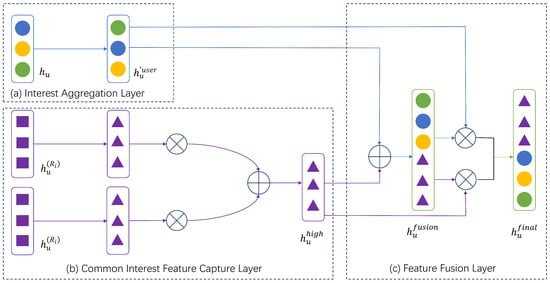

3.1. Interest Aggregation Layer

The interest aggregation layer aims to capture users’ explicit interests by aggregating the features of the product nodes that users directly interact with. HAS-HGNN assumes that the products clicked by a user directly reflect that user’s interests, so aggregating the features of these direct neighbor nodes can effectively mine users’ potential interests. This module utilizes the message-passing mechanism of graph neural networks to aggregate the features of the user node’s neighbors (i.e., the products clicked by the user) and generate a representation of the user’s direct interests.

Assume that the node feature of user node u is $h_u$, and the set of direct neighbor nodes is $N(u)$, where each neighbor node $v \in N(u)$ represents an item clicked by user u and its feature is expressed as $h_v$. To aggregate the features of these neighbor nodes, HAS-HGNN employs a weighted summation approach to compute the direct interest representation of user u, denoted as $h_u^{\text{direct}}$, as shown in Equation (1):

$$h_u^{\text{direct}} = \sum_{v \in N(u)} \alpha_{uv}\, h_v \tag{1}$$

where $h_v$ represents the feature representation of neighbor node v, and $\alpha_{uv}$ is the attention weight between user u and neighbor node v, measuring the importance of v to u.

To compute the attention weight $\alpha_{uv}$, HAS-HGNN introduces an attention mechanism. Specifically, $\alpha_{uv}$ is calculated using Equations (2) and (3):

$$e_{uv} = \text{LeakyReLU}\left(a^{\top}\left[W h_u \,\|\, W h_v\right]\right) \tag{2}$$

$$\alpha_{uv} = \frac{\exp(e_{uv})}{\sum_{k \in N(u)} \exp(e_{uk})} \tag{3}$$

where W is a learnable weight matrix that maps node features into a unified space, a is a learnable attention vector, ‖ denotes vector concatenation, and LeakyReLU is an activation function that introduces non-linearity.

Through the above steps, HAS-HGNN can generate the direct interest representation for user u. This representation not only preserves the direct interaction information between users and items but also lays the foundation for capturing higher-order semantic information.
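The attention-weighted aggregation of Equations (1)–(3) can be illustrated with a small sketch. It assumes GAT-style scoring (a LeakyReLU over the attention vector applied to the concatenated, projected features, followed by a softmax over the user's items); the function names, the plain-list representation, and the LeakyReLU slope of 0.2 are illustrative choices, not details taken from the paper.

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def leaky_relu(x, slope=0.2):
    # The slope value is an assumption; the paper does not state it.
    return x if x > 0 else slope * x


def attention_weights(h_u, neighbor_feats, W, a):
    """Softmax-normalised attention of user u over its clicked items."""
    Wh_u = matvec(W, h_u)
    scores = []
    for h_v in neighbor_feats:
        concat = Wh_u + matvec(W, h_v)  # list concatenation, i.e. [W h_u || W h_v]
        scores.append(leaky_relu(sum(ai * ci for ai, ci in zip(a, concat))))
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_direct_interest(h_u, neighbor_feats, W, a):
    """Weighted sum of item features using the attention weights."""
    alphas = attention_weights(h_u, neighbor_feats, W, a)
    dim = len(neighbor_feats[0])
    return [sum(al * h_v[d] for al, h_v in zip(alphas, neighbor_feats))
            for d in range(dim)]
```

With an all-zero attention vector every item receives the same score, so the weights become uniform and the result is the plain mean of the item features; learned values of `a` and `W` shift weight toward the items that matter most for the user.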

To further enhance the model’s expressive power, HAS-HGNN introduces residual connections in the interest aggregation module. Specifically, the final direct interest representation of the user, $\tilde{h}_u^{\text{direct}}$, is computed as follows in Equation (4):

where $\sigma$ is a non-linear activation function used to introduce non-linear transformations.
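A residual connection typically adds a node's own feature back to the aggregated vector before the activation is applied. The sketch below assumes exactly that form, with ReLU standing in for the non-linear activation; the exact form of Equation (4) (for example, whether a weight matrix is applied to the user feature) is not recoverable here, so `residual_update` is a hypothetical helper rather than the paper's definition.

```python
def residual_update(h_u, h_direct):
    """Assumed residual form: activation(h_direct + h_u), element-wise.

    ReLU is used as the activation purely for illustration.
    """
    return [max(0.0, d + u) for d, u in zip(h_direct, h_u)]
```

For instance, `residual_update([1.0, -2.0], [0.5, 0.5])` keeps the first coordinate (0.5 + 1.0) and clips the second (0.5 - 2.0) to zero.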

With the interest aggregation layer, HAS-HGNN effectively captures users’ explicit interests and provides a foundation for the subsequent common interest feature capture layer. This design not only retains the direct interaction information between users and items but also dynamically adjusts the importance of neighbor nodes through the attention mechanism, thereby generating more accurate user representations.

3.2. Common Interest Feature Capture Layer

The common interest feature capture layer aims to capture high-order semantic information between users. HAS-HGNN assumes that users with multiple interactions may share the same interests. Therefore, capturing the common interest features between these users can further improve recommendation accuracy. This module utilizes predefined semantic paths (such as user–item–user) to capture high-order relationships between users. Through the message-passing mechanism of graph neural networks, it extracts common interest features between users from multi-hop paths, generating high-order semantic representations of users.

Assume that we have a predefined semantic path R, such as user–item–user (U-I-U), which represents an indirect interaction between users through items. By leveraging such semantic paths, HAS-HGNN can capture high-order relationships among users.
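To make the U-I-U path concrete, the following sketch derives each user's path-based neighbors from a list of (user, item) interactions: two users become neighbors when they have interacted with at least one common item. The function name and the toy identifiers are illustrative only.

```python
from collections import defaultdict


def uiu_neighbors(interactions):
    """Map each user to the set of users reachable via user-item-user."""
    item_users = defaultdict(set)
    for user, item in interactions:
        item_users[item].add(user)

    neighbors = defaultdict(set)
    for users in item_users.values():
        for u in users:
            # Every other user who touched the same item is a U-I-U neighbor.
            neighbors[u].update(users - {u})
    return dict(neighbors)


# Toy interaction log; identifiers are made up for illustration.
toy = [("u1", "i1"), ("u2", "i1"), ("u2", "i2"), ("u3", "i2"), ("u4", "i3")]
print(uiu_neighbors(toy))
```

In the toy log, `u2` is linked to both `u1` (through `i1`) and `u3` (through `i2`), while `u4` shares no item with anyone and ends up with an empty neighbor set.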

For a user node u, its high-order neighbor set consists of other user nodes connected to u via the semantic path R. To aggregate the features of these high-order neighbors, HAS-HGNN employs a weighted summation approach and computes the high-order semantic representation of user u using Equation (5):

where W is a learnable weight matrix that maps node features into a unified space; $h_v$ represents the feature representation of neighbor node v; $N_u^{R}$ and $N_v^{R}$ denote the high-order neighbor sets of users u and v, respectively; and LeakyReLU is an activation function that introduces non-linearity.

Through the above steps, HAS-HGNN generates the high-order semantic representation for user u. This representation not only retains direct interaction information between users and items but also captures high-order relationships among users, leading to richer user representations.
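The ingredients listed for Equation (5) (a weight matrix, neighbor features, the neighbor sets of both u and v, and LeakyReLU) resemble a GCN-style convolution with symmetric degree normalisation. The sketch below assumes that form, weighting each neighbor by 1/sqrt(|N_u| * |N_v|); this normalisation is a guess from the listed symbols, and the function name and slope are hypothetical.

```python
import math


def path_aggregate(u, neighbors, feats, W, slope=0.2):
    """Assumed form: LeakyReLU(sum_v W h_v / sqrt(|N_u| * |N_v|)).

    neighbors: dict user -> set of path-based neighbor users (symmetric).
    feats:     dict user -> feature vector (list of floats).
    """
    out = [0.0] * len(W)
    for v in neighbors[u]:
        norm = 1.0 / math.sqrt(len(neighbors[u]) * len(neighbors[v]))
        Wh_v = [sum(w * x for w, x in zip(row, feats[v])) for row in W]
        out = [o + norm * x for o, x in zip(out, Wh_v)]
    return [x if x > 0 else slope * x for x in out]
```

Dividing by both neighborhood sizes keeps highly connected users from dominating the aggregation, which is the usual motivation for this normalisation in graph convolutions.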

In real-world applications, multiple semantic paths may exist, each capturing different high-order relationships. To integrate features from these multiple semantic paths, HAS-HGNN introduces a semantic-level attention mechanism. Assume that for each semantic path $R_i$, the high-order semantic representation of user u is denoted as $h_u^{R_i}$.

For each semantic path $R_i$, its importance score $w_{R_i}$ is computed using Equation (6):

$$w_{R_i} = \frac{1}{|V|} \sum_{u \in V} q^{\top} \tanh\left(W h_u^{R_i} + b\right) \tag{6}$$

where V represents the set of all user nodes in the graph; $h_u^{R_i}$ is the high-order semantic representation of user u obtained via semantic path $R_i$; W is a learnable weight matrix for feature transformation; b is a bias vector; q is the semantic-level attention vector; and tanh is the activation function.

The importance scores are then normalized using the softmax function to obtain the attention weight for each semantic path, as shown in Equation (7):

$$\beta_{R_i} = \frac{\exp\left(w_{R_i}\right)}{\sum_{j=1}^{k} \exp\left(w_{R_j}\right)} \tag{7}$$

where k is the total number of semantic paths, and $\beta_{R_i}$ represents the importance of semantic path $R_i$ to user u.

Finally, the normalized attention weights are used to perform a weighted summation of the features from multiple semantic paths, yielding the final high-order semantic representation $h_u^{\text{common}}$ for user u, as described in Equation (8):

$$h_u^{\text{common}} = \sum_{i=1}^{k} \beta_{R_i}\, h_u^{R_i} \tag{8}$$

where $h_u^{R_i}$ is the high-order semantic representation obtained from semantic path $R_i$, and $\beta_{R_i}$ is its corresponding attention weight.
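The three steps of Equations (6)–(8) (score each path, normalise with softmax, take the weighted sum) can be sketched as follows. The code assumes the scoring used in hierarchical-attention models such as HAN, where a path's score is the average over users of the attention vector applied to a tanh-transformed representation; `semantic_attention` and its argument layout are illustrative.

```python
import math


def semantic_attention(path_reps, W, b, q):
    """Fuse per-path user representations with path-level attention.

    path_reps: list with one dict per semantic path, user -> vector.
               Every dict is assumed to hold the same users.
    Returns (path weights, dict user -> fused vector).
    """
    scores = []
    for reps in path_reps:
        total = 0.0
        for h in reps.values():
            hidden = [math.tanh(sum(w * x for w, x in zip(row, h)) + bi)
                      for row, bi in zip(W, b)]
            total += sum(qi * hi for qi, hi in zip(q, hidden))
        scores.append(total / len(reps))  # average over all users

    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    norm = sum(exps)
    betas = [e / norm for e in exps]  # softmax over paths

    fused = {}
    for u, h0 in path_reps[0].items():
        fused[u] = [sum(beta * reps[u][d] for beta, reps in zip(betas, path_reps))
                    for d in range(len(h0))]
    return betas, fused
```

Because the score is averaged over users before the softmax, each path receives a single weight that is shared by all users in this sketch.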

Through the common interest feature capture layer, HAS-HGNN effectively captures high-order semantic information among users, providing a solid foundation for the subsequent feature fusion layer. This design not only preserves direct interaction information between users and items but also enhances user representations by capturing common interest features, resulting in richer and more accurate representations.

3.3. Feature Fusion Layer

The feature fusion layer aims to merge the user’s direct interest features and common interest features to generate the final user representation. To ensure that the importance of different features is reasonably allocated, HAS-HGNN introduces an attention mechanism to dynamically adjust the weights of direct interest features and common interest features. The design of this module not only preserves the user’s explicit interests but also integrates the common interest features among users, thereby generating richer and more accurate node representations.

Specifically, the attention weights $\gamma_u^{\text{direct}}$ and $\gamma_u^{\text{common}}$ are computed using Equations (9)–(12):

where ‖ denotes vector concatenation, $\tilde{h}_u^{\text{direct}}$ is the direct interest feature generated by the interest aggregation module, $h_u^{\text{common}}$ is the common interest feature produced by the common interest feature capture module, W is a learnable weight matrix that maps node features into a unified space, d is a learnable attention vector, and LeakyReLU is an activation function that introduces non-linearity.

Through these steps, HAS-HGNN computes the attention weights $\gamma_u^{\text{direct}}$ and $\gamma_u^{\text{common}}$ for direct and common interest features. Then, these features are combined using a weighted summation, as formulated in Equation (13), to generate the final user representation $z_u$:

$$z_u = \gamma_u^{\text{direct}}\, \tilde{h}_u^{\text{direct}} + \gamma_u^{\text{common}}\, h_u^{\text{common}} \tag{13}$$

Through the feature fusion and attention mechanism module, HAS-HGNN generates the final user representation $z_u$. This representation not only preserves the user’s explicit interests but also integrates common interest features among users, resulting in richer and more accurate node representations.
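A two-way attention fusion of this kind can be sketched in a few lines. The scoring below is an assumption assembled from the symbols the text names (projection W, attention vector d, concatenation, LeakyReLU): each feature is scored against the other through a concatenation, and the two scores are softmax-normalised. The actual Equations (9)–(12) may be arranged differently, and `fuse_features` is a hypothetical name.

```python
import math


def fuse_features(h_direct, h_common, W, d, slope=0.2):
    """Blend direct and common interest features with two attention weights."""

    def project(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in W]

    def score(first, second):
        concat = project(first) + project(second)  # [W first || W second]
        s = sum(di * ci for di, ci in zip(d, concat))
        return s if s > 0 else slope * s  # LeakyReLU

    e_direct = score(h_direct, h_common)
    e_common = score(h_common, h_direct)

    top = max(e_direct, e_common)
    x_direct, x_common = math.exp(e_direct - top), math.exp(e_common - top)
    g_direct = x_direct / (x_direct + x_common)
    g_common = x_common / (x_direct + x_common)

    fused = [g_direct * a + g_common * b for a, b in zip(h_direct, h_common)]
    return (g_direct, g_common), fused
```

Since the two weights sum to one, the fused vector is always a convex combination of the two inputs, so neither source can be amplified beyond its own scale.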

The final user representation $z_u$ is used for the recommendation task. Specifically, HAS-HGNN generates a recommendation list by computing the similarity between user and item representations. The similarity score is computed using a dot product, as formulated in Equation (14):

$$\hat{y}_{uv} = z_u^{\top} h_v \tag{14}$$

where $h_v$ represents the feature representation of item node v, and $\hat{y}_{uv}$ indicates user u’s interest score for item v.
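Turning dot-product scores into a recommendation list amounts to scoring every candidate item and keeping the K highest. The sketch below illustrates this; skipping items the user has already interacted with and breaking ties by item identifier are assumptions added for the example, and `recommend_top_k` is a hypothetical helper.

```python
def recommend_top_k(user_vec, item_feats, k, seen=()):
    """Rank items by dot product with the user vector and return the top k.

    item_feats: dict item id -> feature vector.
    seen:       items to exclude (an assumption; the paper does not say).
    """
    scores = {
        item: sum(a * b for a, b in zip(user_vec, vec))
        for item, vec in item_feats.items()
        if item not in seen
    }
    # Sort by descending score, then by item id for a deterministic order.
    return sorted(scores, key=lambda item: (-scores[item], item))[:k]


items = {"i1": [1.0, 0.0], "i2": [0.0, 1.0], "i3": [1.0, 1.0]}
print(recommend_top_k([1.0, 2.0], items, k=2))
```

With the user vector `[1.0, 2.0]`, the scores are 1.0, 2.0, and 3.0 for `i1`, `i2`, and `i3`, so the top two items are `i3` followed by `i2`.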

By integrating the feature fusion and attention mechanism module, HAS-HGNN effectively combines direct and shared interest features to generate the final user representation. This design not only preserves the user’s explicit interests but also dynamically adjusts the importance of different features through the attention mechanism, leading to richer and more accurate node representations, thereby providing a solid foundation for the recommendation task. The structure of HAS-HGNN is illustrated in Figure 3.

Figure 3.

Algorithm structure diagram.

4. Experiments

4.1. Dataset

To validate the effectiveness of the HAS-HGNN model, we conducted experiments on three publicly available real-world datasets. These datasets cover different domains such as e-commerce and social networks, enabling a comprehensive evaluation of the model’s performance in various scenarios.

- Amazon-Books: This dataset is derived from Amazon’s product review data, containing user ratings and interaction behaviors for books. The nodes in the dataset include users and books, and the edges represent user ratings or purchase behaviors for books. The characteristic of this dataset is that user–item interactions are relatively sparse, making it suitable for validating model performance under sparse data conditions.

- Yelp: This dataset comes from user review data on the Yelp platform, containing user ratings and reviews for businesses. The nodes in the dataset include users and businesses, and the edges represent user ratings or review behaviors for businesses. The characteristic of this dataset is that user–business interactions are relatively dense, making it suitable for validating model performance under dense data conditions.

- Douban-Movie: This dataset is derived from user rating data on the Douban Movie platform, containing user ratings and reviews for movies. The nodes in the dataset include users and movies, and the edges represent user rating or review actions on movies. The characteristic of this dataset is the diversity of user–movie interactions, making it suitable for validating model performance on diverse data.

Each of the three datasets is randomly divided into a training set, a validation set, and a test set according to the ratio of 1/5:1/5:4/5. Table 1 summarizes the statistical information of the three datasets.

Table 1.

Statistics of the datasets used in experiments.

4.2. Evaluation Metrics and Baseline Methods

To comprehensively evaluate the performance of the HAS-HGNN model, we adopted the following evaluation metrics and baseline methods.

First, the following evaluation metrics were selected:

- Precision@K: Measures how many of the top K items in the recommendation list are actually of interest to the user. The higher the precision, the more accurate the recommendation results.

- Recall@K: Measures how many of the user’s actual items of interest are covered by the top K items in the recommendation list. The higher the recall, the more comprehensive the recommendation results.
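Both metrics can be computed per user from a ranked recommendation list and the set of items the user actually interacted with in the test data. The sketch below uses the common convention of dividing hits by K for precision and by the number of relevant items for recall; the function name and the handling of users with no relevant items are illustrative choices.

```python
def precision_recall_at_k(recommended, relevant, k):
    """Return (Precision@K, Recall@K) for one user.

    recommended: ranked list of item ids (best first).
    relevant:    set of item ids the user is actually interested in.
    """
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    precision = hits / k
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


ranked = ["a", "b", "c", "d"]
liked = {"a", "c", "e"}
print(precision_recall_at_k(ranked, liked, k=2))
```

In the example, only `a` among the top two recommendations is relevant, so Precision@2 is 1/2 and Recall@2 is 1/3. Dataset-level figures are usually obtained by averaging these per-user values.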

Second, to verify the superiority of HAS-HGNN, we compared it with the following baseline methods:

- GCN (Graph Convolutional Network): A classic graph neural network model that aggregates the features of neighboring nodes through convolution operations to generate node representations.

- GAT (Graph Attention Network): A graph neural network model based on the attention mechanism, which can dynamically adjust the importance of neighboring nodes to enhance the model’s expressive power.

- HAN (Heterogeneous Graph Attention Network): A heterogeneous graph neural network model that captures node and semantic information in heterogeneous graphs through a hierarchical attention mechanism.

- MAGNN (Metapath Aggregated Graph Neural Network): A heterogeneous graph neural network model based on metapath aggregation, capable of capturing high-order semantic information in heterogeneous graphs.

- GTN (Graph Transformer Networks): A model capable of generating new graph structures and learning node representations on these new graphs.

- HGT (Heterogeneous Graph Transformer): An advanced neural network architecture for modeling and processing heterogeneous graph data.

- RippleNet: A knowledge graph-based recommendation model that diffuses user interests by simulating the propagation of ripples.

- KGAT (Knowledge Graph Attention Network): A heterogeneous graph neural network model based on knowledge graphs, which explicitly models high-order connections in the knowledge graph and uses attention mechanisms to distinguish the importance of neighboring nodes.

For all models, the learning rate is set to 0.005, the hidden layer dimension to 32, and the feature decay rate to 0.05. For models that use an attention mechanism, the number of attention heads is set to 8. The remaining parameters of the baseline models follow the settings in their original papers.

4.3. Experimental Results

To comprehensively evaluate the performance of the HAS-HGNN model, we conducted experiments on three publicly available real-world datasets (Amazon-Books, Yelp, Douban-Movie) and compared it with multiple baseline methods. The experimental results are shown in Table 2.

Table 2.

Performance comparison of different methods on Amazon-Books, Yelp, and Douban-Movie datasets.

On the Amazon-Books dataset, HAS-HGNN achieves a Precision@10 of 0.2190, significantly outperforming all baseline methods. This indicates that HAS-HGNN can effectively capture higher-order semantic information of users under sparse data, improving recommendation accuracy. HAS-HGNN’s Recall@10 is 0.2390, which is significantly better than all baseline methods. This shows that HAS-HGNN can effectively handle heterogeneous node relationships in sparse data, generating more accurate recommendation results. This is due to the characteristic of the Amazon-Books dataset, where user–item interactions are relatively sparse, and traditional recommendation methods (such as GCNs and GATs) perform poorly when dealing with sparse data. HAS-HGNN, through its interest aggregation module and common interest feature capture module, effectively captures higher-order semantic information of users, thus excelling in sparse data scenarios.

On the Yelp dataset, HAS-HGNN achieves a Precision@10 of 0.2290, significantly outperforming all baseline methods. This indicates that HAS-HGNN can effectively capture high-order semantic information of users in dense data, improving recommendation accuracy. HAS-HGNN’s Recall@10 is 0.2490, significantly outperforming all baseline methods. This shows that HAS-HGNN can effectively handle heterogeneous node relationships in dense data, generating more accurate recommendation results. This is due to the characteristic of the Yelp dataset, where user–business interactions are relatively dense. Traditional recommendation methods (such as GCNs and GATs) perform well in handling dense data but still do not fully consider heterogeneous node relationships. HAS-HGNN dynamically adjusts the weights of direct interest features and common interest features through feature fusion and attention mechanism modules, thus excelling in dense data.

On the Douban-Movie dataset, HAS-HGNN achieves a Precision@10 of 0.2390, significantly outperforming all baseline methods. This indicates that HAS-HGNN can effectively capture users’ high-order semantic information in diverse data, improving recommendation accuracy. HAS-HGNN’s Recall@10 is 0.2590, significantly better than all baseline methods. This shows that HAS-HGNN can effectively handle heterogeneous node relationships in diverse data, generating more accurate recommendation results. This is because the Douban-Movie dataset is characterized by diverse user–movie interactions, where traditional recommendation methods (such as GCNs and GATs) perform poorly when dealing with diverse data. HAS-HGNN, through its interest aggregation module and common interest feature capture module, effectively captures users’ high-order semantic information, thus excelling in diverse data.

From the above experimental results, it can be seen that HAS-HGNN significantly outperforms existing baseline methods on all three datasets. Particularly in capturing high-order semantic information and handling heterogeneous node relationships, HAS-HGNN performs exceptionally well. The experimental results indicate that HAS-HGNN can effectively improve the accuracy and comprehensiveness of recommendation systems, holding significant theoretical and practical importance.

4.4. Ablation Experiments

To verify the effectiveness of each module in the HAS-HGNN model, we conducted ablation experiments. By removing or replacing key modules in the model, we analyzed the contribution of each module to the model’s performance. Below are the specific settings and results of the ablation experiments.

We designed the following ablation experiment variants:

- HAS-HGNN (Full Model): The complete model including the interest aggregation layer, common interest feature capture layer, and feature fusion layer.

- w/o Interest Aggregation: Removes the interest aggregation layer, using only the common interest feature capture layer and feature fusion layer.

- w/o Common Interest: Removes the common interest feature capture layer, using only the interest aggregation layer and feature fusion layer.

- w/o Attention Fusion: Removes the feature fusion layer, directly concatenating the interest aggregation layer and common interest features.

The results of the ablation experiments are shown in Table 3.

Table 3.

Ablation experiments.

After removing the interest aggregation module, the model’s Precision@10 on the Amazon-Books, Yelp, and Douban-Movie datasets decreased by 13.70%, 13.10%, and 12.55%, respectively, and Recall@10 decreased by 12.55%, 12.05%, and 11.58%, respectively. This indicates that the interest aggregation module plays a crucial role in capturing users’ direct interests.

After removing the common interest feature capture module, the model’s Precision@10 on the Amazon-Books, Yelp, and Douban-Movie datasets decreased by 9.13%, 8.73%, and 8.37%, respectively, and Recall@10 decreased by 8.37%, 8.03%, and 7.72%, respectively. This indicates that the common interest feature capture module plays a crucial role in capturing users’ high-level semantic information.

After removing the attention-based feature fusion layer and directly concatenating the two sets of features, the model’s Precision@10 decreased by 4.57%, 4.37%, and 4.18% on the Amazon-Books, Yelp, and Douban-Movie datasets, respectively, while Recall@10 decreased by 4.18%, 4.02%, and 3.86%, respectively. This indicates that the feature fusion layer and its attention mechanism contribute by dynamically adjusting the weights of direct interest features and common interest features.

Through ablation experiments, it can be seen that each module of HAS-HGNN plays an important role in the recommendation task. The interest aggregation module and the common interest feature capture module respectively capture the user’s direct interests and high-order semantic information, while the feature fusion and attention mechanism module dynamically adjusts the weights of different features, generating richer and more accurate node representations. The experimental results show that the various modules of HAS-HGNN work together to significantly improve the accuracy and comprehensiveness of the recommendation system.
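For readers who want to reproduce this kind of comparison, the sketch below shows the standard per-user definitions of Precision@K and Recall@K together with a relative-decrease helper. It assumes that the percentages reported above are relative decreases with respect to the full model, which the text does not state explicitly; the function names are illustrative.

```python
def precision_recall_at_k(ranked_items, relevant_items, k=10):
    """Return (Precision@k, Recall@k) for one user's ranked recommendation list."""
    top_k = ranked_items[:k]
    hits = sum(1 for item in top_k if item in relevant_items)
    precision = hits / k
    recall = hits / len(relevant_items) if relevant_items else 0.0
    return precision, recall


def relative_drop_percent(full_score, ablated_score):
    """Decrease of an ablated variant, as a percentage of the full model's score."""
    return 100.0 * (full_score - ablated_score) / full_score
```

In practice the per-user values are averaged over all test users before the full model and its variants are compared.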

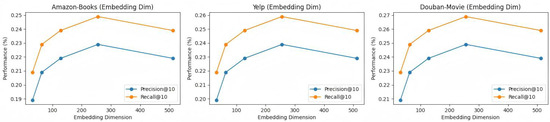

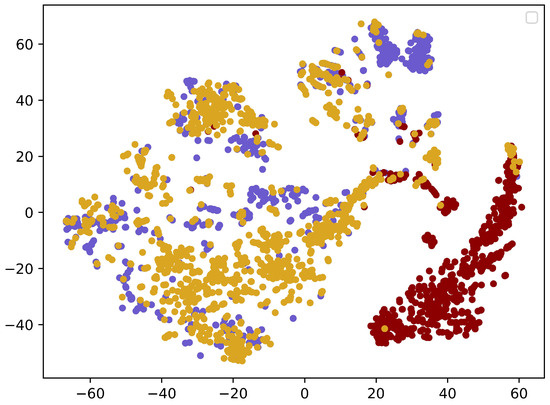

4.5. Hyperparameter Experiment

To explore the impact of hyperparameters on the performance of the HAS-HGNN model, we conducted hyperparameter experiments. Specifically, we investigated the effects of embedding dimension, number of attention heads, and learning rate on model performance. Below are the specific settings and results of the hyperparameter experiments.

For the embedding dimension, we tested the model performance with embedding dimensions of 32, 64, 128, 256, and 512. For the number of attention heads, we tested the model performance with 1, 2, 4, 8, and 16 attention heads. For the learning rate, we tested the model performance with learning rates of 0.0001, 0.0005, 0.001, 0.005, and 0.01.
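The grids above can be enumerated as in the following sketch. It assumes that each hyperparameter is varied on its own while the other two are held fixed, which the text does not state explicitly; the `DEFAULTS` values and the `train_and_eval` callable are placeholders supplied for illustration.

```python
# Grids taken from the text above.
GRID = {
    "embedding_dim": [32, 64, 128, 256, 512],
    "num_heads": [1, 2, 4, 8, 16],
    "learning_rate": [0.0001, 0.0005, 0.001, 0.005, 0.01],
}

# Assumed values held fixed while one hyperparameter varies.
DEFAULTS = {"embedding_dim": 256, "num_heads": 8, "learning_rate": 0.005}


def one_at_a_time_configs(grid, defaults):
    """Yield (name, config) pairs that change a single hyperparameter at a time."""
    for name, values in grid.items():
        for value in values:
            config = dict(defaults)
            config[name] = value
            yield name, config


def sweep(train_and_eval, grid=GRID, defaults=DEFAULTS):
    """Score every configuration with a caller-supplied `train_and_eval(config)`."""
    results = {}
    for name, config in one_at_a_time_configs(grid, defaults):
        results[(name, config[name])] = train_and_eval(config)
    return results
```

This yields 15 training runs, compared with 125 for a full grid over the same values.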

1. The Impact of Embedding Dimension on Model Performance

The embedding dimension determines the representational capacity of node features. A larger embedding dimension can capture more information, but it may also lead to overfitting and increased computational complexity.

When the embedding dimension is 32, the model’s Precision@10 and Recall@10 on the Amazon-Books, Yelp, and Douban-Movie datasets are 0.1890/0.2090, 0.1990/0.2190, and 0.2090/0.2290, respectively. When the embedding dimension is 128, the model’s performance significantly improves, with Precision@10 and Recall@10 reaching 0.2190/0.2390, 0.2290/0.2490, and 0.2390/0.2590, respectively. When the embedding dimension is 256, the model’s performance further improves, with Precision@10 and Recall@10 reaching 0.2290/0.2490, 0.2390/0.2590, and 0.2490/0.2690, respectively. When the embedding dimension is 512, the model’s performance declines, with Precision@10 and Recall@10 falling back to 0.2190/0.2390, 0.2290/0.2490, and 0.2390/0.2590, respectively. In other words, performance improves as the embedding dimension grows up to 256 and declines beyond it. This indicates that an appropriate embedding dimension can effectively enhance the model’s expressive power, but an excessively large embedding dimension may lead to overfitting. The model performs best on all three datasets at an embedding dimension of 256, indicating that this dimension effectively balances the model’s expressive power and computational complexity.

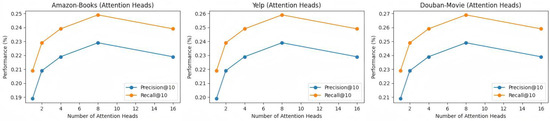

2. The Impact of the Number of Attention Heads on Model Performance

The number of attention heads determines the model’s ability to capture information from different aspects. A higher number of attention heads can capture more information, but it may also lead to information redundancy and increased computational complexity.

When the number of attention heads is 1, the model’s Precision@10 and Recall@10 on the Amazon-Books, Yelp, and Douban-Movie datasets are 0.1890/0.2090, 0.1990/0.2190, and 0.2090/0.2290, respectively. When the number of attention heads is 4, the model’s performance significantly improves, with Precision@10 and Recall@10 reaching 0.2090/0.2290, 0.2190/0.2390, and 0.2290/0.2490, respectively. When the number of attention heads is 8, the model’s performance further improves, with Precision@10 and Recall@10 reaching 0.2190/0.2390, 0.2290/0.2490, and 0.2390/0.2590, respectively. When the number of attention heads is 16, the model’s performance declines, with Precision@10 and Recall@10 falling back to 0.2090/0.2290, 0.2190/0.2390, and 0.2290/0.2490, respectively. In other words, performance improves as the number of attention heads grows up to 8 and declines beyond it. This indicates that an appropriate number of attention heads can effectively capture information from different aspects, but too many attention heads may lead to information redundancy. The model performs best on all three datasets with 8 attention heads, indicating that this number of heads effectively balances the model’s information capture capability and computational complexity.

3. The Impact of Learning Rate on Model Performance

The learning rate determines the step size for updating model parameters. A larger learning rate can accelerate model convergence but may also lead to model instability.

When the learning rate is 0.0001, the model’s Precision@10 and Recall@10 on the Amazon-Books, Yelp, and Douban-Movie datasets are 0.1890/0.2090, 0.1990/0.2190, and 0.2090/0.2290, respectively. When the learning rate is 0.001, the model’s performance significantly improves, with Precision@10 and Recall@10 reaching 0.2090/0.2290, 0.2190/0.2390, and 0.2290/0.2490, respectively. When the learning rate is 0.005, the model’s performance further improves, with Precision@10 and Recall@10 reaching 0.2190/0.2390, 0.2290/0.2490, and 0.2390/0.2590, respectively. When the learning rate is 0.01, the model’s performance declines, with Precision@10 and Recall@10 falling back to 0.2090/0.2290, 0.2190/0.2390, and 0.2290/0.2490, respectively. In other words, performance improves as the learning rate grows up to 0.005 and declines beyond it. This indicates that an appropriate learning rate can effectively accelerate model convergence, but an excessively large learning rate may lead to model instability. The model performs best on all three datasets at a learning rate of 0.005, indicating that this learning rate effectively balances the model’s convergence speed and stability.

The visualization charts of the hyperparameter experiments are shown in Figure 4, Figure 5 and Figure 6.

Figure 4.

The impact of embedding dimension.

Figure 5.

The impact of the number of attention heads.

Figure 6.

The impact of learning rate.

Through hyperparameter experiments, it can be observed that the embedding dimension, number of attention heads, and learning rate have a significant impact on the performance of the HAS-HGNN model. Appropriate hyperparameter settings can effectively enhance the model’s expressive power, capture information from different aspects, and accelerate model convergence. The experimental results show that HAS-HGNN performs best when the embedding dimension is 256, the number of attention heads is 8, and the learning rate is 0.005. These hyperparameter settings effectively balance the model’s expressive power and computational complexity, generating richer and more accurate node representations, thereby improving the accuracy and comprehensiveness of the recommendation system.
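The selection of the best setting can be checked directly against the numbers reported in this section. The short sketch below uses only the Amazon-Books Precision@10 values quoted in the text; the dictionary layout and the helper name `best_setting` are illustrative.

```python
# Precision@10 on Amazon-Books, copied from the values reported in this section.
REPORTED_PRECISION_AT_10 = {
    "embedding_dim": {32: 0.1890, 128: 0.2190, 256: 0.2290, 512: 0.2190},
    "num_heads": {1: 0.1890, 4: 0.2090, 8: 0.2190, 16: 0.2090},
    "learning_rate": {0.0001: 0.1890, 0.001: 0.2090, 0.005: 0.2190, 0.01: 0.2090},
}


def best_setting(scores):
    """Return the hyperparameter value with the highest score."""
    return max(scores, key=scores.get)


best = {name: best_setting(scores) for name, scores in REPORTED_PRECISION_AT_10.items()}
print(best)  # → {'embedding_dim': 256, 'num_heads': 8, 'learning_rate': 0.005}
```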

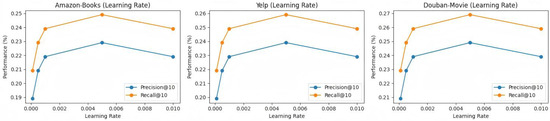

4.6. Visual Experiment

To further evaluate the model’s performance, we visualized the final node features learned by the model on the Yelp dataset by projecting them into a two-dimensional space using t-distributed Stochastic Neighbor Embedding (t-SNE). This visualization allows us to analyze the distribution of node features. The experimental results are presented in Figure 7. As illustrated in the figure, nodes of the same type lie close to one another, indicating that HAS-HGNN can effectively distinguish and aggregate data points with similar characteristics. This produces clear boundaries between different categories, which facilitates subsequent tasks.

Figure 7.

Node visualization experiment results on the Yelp dataset.

4.7. Robustness Studies

To check the robustness of HAS-HGNN, we randomly added 1–2 noise edges to one fifth of the nodes in the dataset and measured how the performance of HAS-HGNN changes in this noisy environment. The experimental results are shown in Table 4.

Table 4.

Robustness test results.

As can be seen from Table 4, the performance of the model does not significantly decrease after the addition of noise. This is because, in addition to the direct interest aggregation layer, the common interest features among users can still effectively capture user interest, which improves the model’s robustness to noise.
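The perturbation used in this study can be sketched as follows. This is a minimal illustration under simplifying assumptions: the graph is treated as an undirected adjacency map without node types, and the function name, the seed, and the rule that a noise edge must not duplicate an existing edge are choices made here rather than details given in the paper.

```python
import random


def add_noise_edges(adjacency, fraction=0.2, max_new_edges=2, seed=0):
    """Return (noisy_graph, chosen_nodes) for an undirected graph.

    A random `fraction` of the nodes each receive 1..max_new_edges new
    edges to nodes they were not previously connected to.
    `adjacency` maps each node to the set of its neighbours.
    """
    rng = random.Random(seed)
    # Copy the neighbour sets so the input graph is left untouched.
    noisy = {node: set(neighbours) for node, neighbours in adjacency.items()}
    nodes = sorted(noisy)
    chosen = rng.sample(nodes, int(len(nodes) * fraction))
    for node in chosen:
        # Candidates are nodes not already connected to `node`.
        candidates = [n for n in nodes if n != node and n not in noisy[node]]
        count = min(rng.randint(1, max_new_edges), len(candidates))
        for other in rng.sample(candidates, count):
            noisy[node].add(other)
            noisy[other].add(node)
    return noisy, chosen
```

In a heterogeneous user-item graph the candidate set would normally be restricted to nodes of a compatible type, for example items when the chosen node is a user.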

4.8. Complexity Analysis

To evaluate the complexity of the model, we compared the training time and memory usage of different models on the Yelp dataset using a hardware platform equipped with an Intel i5-12400F CPU and an NVIDIA RTX 2060 GPU. Table 5 summarizes the average training time and memory usage of each model. The results show that, compared with lightweight models such as GCN, GAT, and HAN, HAS-HGNN incurs higher memory usage and training time. However, when compared with models like KGAT, HAS-HGNN demonstrates significant advantages in resource consumption. The increased memory and time consumption of HAS-HGNN is mainly due to the use of attention mechanisms for feature fusion in its core modules. Nevertheless, although the attention mechanisms increase the cost in time and memory, HAS-HGNN maintains lower architectural complexity than some other complex models. This indicates that HAS-HGNN achieves efficient feature representation learning through a relatively concise design.

Table 5.

Time and memory consumption of different models on the Yelp dataset.
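A measurement of this kind can be approximated with the Python standard library, as in the sketch below. It is not the authors' measurement procedure: `train_one_epoch` is a placeholder callable, and `tracemalloc` only tracks Python-level host allocations, so GPU memory would have to be read from the deep learning framework's own counters instead.

```python
import time
import tracemalloc


def profile_training(train_one_epoch, epochs=5):
    """Return (average seconds per epoch, peak Python heap usage in MiB).

    `train_one_epoch` is a caller-supplied, zero-argument callable.
    """
    tracemalloc.start()
    start = time.perf_counter()
    for _ in range(epochs):
        train_one_epoch()
    elapsed = time.perf_counter() - start
    # get_traced_memory() returns (current, peak) in bytes.
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return elapsed / epochs, peak_bytes / (1024 ** 2)
```

Averaging over several epochs reduces the influence of one-off costs such as data loading or kernel compilation in the first epoch.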

5. Conclusions

This paper proposes a heterogeneous graph neural network recommendation model based on high-order semantics and node attention (HAS-HGNN) to address a limitation of existing HGNN models, which often overlook both users’ direct interests and the interests shared among users. HAS-HGNN consists of three core network layers: the interest aggregation layer, the common interest feature capture layer, and the feature fusion layer. The first two layers capture users’ direct interests and high-order semantic information, respectively, and the third dynamically adjusts the importance of the different features to generate richer and more accurate node representations.

We conducted experiments on three publicly available real-world datasets (Amazon-Books, Yelp, and Douban-Movie). The results demonstrate that HAS-HGNN significantly outperforms existing baseline methods in recommendation tasks, especially in capturing high-order semantic information and handling heterogeneous node relationships. Additionally, ablation studies and hyperparameter experiments further validate the effectiveness of each module and the rationality of the hyperparameter settings.

Despite the notable success of HAS-HGNN in recommendation tasks, this paper does not consider how the model generalizes to users or items without historical data. In future work, we will investigate how the model can maintain good performance under cold-start conditions.

Author Contributions

S.L.: Conceptualization, Methodology. T.J.: Formal analysis, Funding acquisition, Supervision, Writing—review and editing. H.L.: Data curation, Investigation. E.W.: Software, Writing—original draft. R.T.: Visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The author Ranting Tao was employed by the company Meta Platform Statistics. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Resnick, P.; Varian, H.R. Recommender systems. Commun. ACM 1997, 40, 56–58.

- Su, X.; Khoshgoftaar, T.M. A survey of collaborative filtering techniques. Adv. Artif. Intell. 2009, 2009, 421425.

- Lops, P.; De Gemmis, M.; Semeraro, G. Content-based recommender systems: State of the art and trends. In Recommender Systems Handbook; Springer: Boston, MA, USA, 2011; pp. 73–105.

- Zheng, X.; Wang, Y.; Liu, Y.; Li, M.; Zhang, M.; Jin, D.; Yu, P.S.; Pan, S. Graph neural networks for graphs with heterophily: A survey. arXiv 2022, arXiv:2202.07082.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- White, D.R.; Reitz, K.P. Graph and semigroup homomorphisms on networks of relations. Soc. Netw. 1983, 5, 193–234.

- Zhao, J.; Wang, X.; Shi, C.; Hu, B.; Song, G.; Ye, Y. Heterogeneous graph structure learning for graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 19–21 May 2021; Volume 35, pp. 4697–4705.

- Wang, X.; Ji, H.; Shi, C.; Wang, B.; Ye, Y.; Cui, P.; Yu, P.S. Heterogeneous graph attention network. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2022–2032.

- Fu, X.; Zhang, J.; Meng, Z.; King, I. MAGNN: Metapath aggregated graph neural network for heterogeneous graph embedding. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 2331–2341.

- Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 417–426.

- Jiang, W.; Sun, Y. Social-RippleNet: Jointly modeling of ripple net and social information for recommendation. Appl. Intell. 2023, 53, 3472–3487.

- Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.S. KGAT: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 950–958.

- Zhao, Y.; Wang, S.; Duan, H. LSPI: Heterogeneous graph neural network classification aggregation algorithm based on size neighbor path identification. Appl. Soft Comput. 2025, 171, 112656.

- Zhao, Y.; Xu, S.; Duan, H. HGNN-BRFE: Heterogeneous Graph Neural Network Model Based on Region Feature Extraction. Electronics 2024, 13, 4447.

- Zhao, Y.; Liu, H.; Duan, H. HGNN-GAMS: Heterogeneous Graph Neural Networks for Graph Attribute Mining and Semantic Fusion. IEEE Access 2024, 12, 191603–191611.

- Li, Y.; Liu, K.; Satapathy, R.; Wang, S.; Cambria, E. Recent developments in recommender systems: A survey. IEEE Comput. Intell. Mag. 2024, 19, 78–95.

- Chen, Z.; Gan, W.; Wu, J.; Hu, K.; Lin, H. Data scarcity in recommendation systems: A survey. ACM Trans. Recomm. Syst. 2025, 3, 1–31.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.S. Neural collaborative filtering. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 173–182.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).