1. Introduction

Sentiment analysis (SA), also known as opinion mining, is an active field within natural language processing (NLP). It employs computational methods to analyze and evaluate subjective opinions, sentiments, emotions, and attitudes expressed in textual data. SA seeks to categorize user-generated reviews or comments into positive and negative classes in order to draw useful insights from online opinions and customer feedback. These insights improve the user experience and support decision-making in areas such as marketing, customer support, and public opinion tracking.

Two main approaches dominate SA: lexicon-based and machine learning-based [1]. Lexicon-based methods rely on predefined lists of sentiment words. The sentiment of a text is determined by counting these words and aggregating their assigned values. These methods are fast and need no training data, but they are rigid. Machine learning methods are trained on labeled data to learn the complex relationships between words and sentiments. They are more versatile but require extensive data and computation. In both cases, this word-level judgment is what determines the sentiment orientation of a given text.
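To make the lexicon-based idea concrete, the following minimal sketch sums word polarities and maps the total to a label. The lexicon entries and the function names `lexicon_score` and `lexicon_label` are hypothetical and chosen only for illustration; they are not taken from any published lexicon.

```python
# Toy sentiment lexicon (hypothetical entries, for illustration only).
TOY_LEXICON = {"good": 1, "great": 1, "excellent": 1,
               "bad": -1, "boring": -1, "terrible": -1}

def lexicon_score(text, lexicon=TOY_LEXICON):
    """Sum the polarity values of every lexicon word found in the text."""
    tokens = text.lower().split()
    # Strip trailing/leading punctuation so "plot!" matches "plot".
    return sum(lexicon.get(tok.strip(".,!?;:"), 0) for tok in tokens)

def lexicon_label(text, lexicon=TOY_LEXICON):
    """Map the summed score to a coarse sentiment label."""
    score = lexicon_score(text, lexicon)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

A sentence with one positive and one negative word scores zero here, which illustrates the rigidity noted above: the counter has no way to decide which clause matters more.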

Deep neural networks (DNNs) have shown remarkable performance in textual SA. Recent research, however, highlights shortcomings in DNN-based methods: despite their effectiveness, these models can extract irrelevant or redundant features while missing essential sentiment cues in words, which reduces classification accuracy [2]. DNNs lead in SA performance, but traditional methods remain strong in interpretability and efficiency. Can we build a hybrid model that leverages both strengths? Our research explores a hybrid model that combines DNNs with classic feature-based methods to improve SA performance. To address the issues above, we propose an SA model designed for semantic and sentiment awareness. The goal of this model is to improve the accuracy of sentiment analysis. We introduce a fusion approach that combines conventional feature construction with DNN-based techniques, improving text SA performance while reducing the high-dimensional feature space.

The review text is first parsed, which includes tokenization and the systematic application of linguistic rules. The parsing process flags sentences that contain contradictory positive and negative clauses or phrases within the same structure as expressing mixed views. Linguistic rules (LR) select the most representative sentences or clauses from mixed opinions for the training set, with the primary goal of preserving (or enhancing) the effectiveness of the classification model [1,3]. We then apply part-of-speech (POS) tags to specific words, including adjectives, adverbs, verbs, and nouns, using the Stanford POS tagger [4]. This tagging step lays the foundation for incorporating the wide coverage integrated sentiment lexicon (WCISL) [1].

We use the WCISL to acquire semantic and sentiment information. This helps in extracting sentiment-related words and features from textual data, including verbs, nouns, adjectives, and adverbs. Next, we employ the RoBERTa transformer model as an encoder to tokenize the input sequence and encode it into a discriminative sentiment-enhanced word embedding. To enrich the word embeddings with further contextual information and highlight the most significant features, we employ a bidirectional GRU model augmented by an attention mechanism.

The dimensionality of the feature space is then reduced by applying a statistical feature reduction approach, namely Principal Component Analysis (PCA). PCA is preferred for its speed, ease of use, and ability to preserve most of the variance in the original data. This reduction step is essential for selecting features effectively and increasing the overall effectiveness of our method. After the feature reduction step, the sigmoid function is applied to produce the probability distribution over the classes in the sentiment analysis dataset. In particular, our integrated model fulfills three primary purposes. First, it reveals the underlying connections in the data. Second, it effectively extracts sentiment words and gives the important sentiment words the appropriate weight. Third, it lowers the dimensionality of the feature space and eliminates superfluous features.

The following is a summary of our primary contributions:

We propose DK-HDNN, a hybrid deep neural network-based sentiment analysis model that combines semantic and sentiment awareness. The proposed model finds and extracts significant contextual sentiment features from the review text by combining traditional DNNs with contextual semantics, linguistic, and sentiment information. In particular, incorporating linguistic semantics and sentiment knowledge into standard deep neural networks (RoBERTa, BiGRU, attention mechanism), together with PCA, has a statistically significant impact on the performance of the proposed hybrid model.

We use linguistic semantic rules to classify review texts with mixed opinions composed of positive and negative words.

We employ WCISL and the Stanford POS tagger to tap into semantic and sentiment knowledge, which facilitates the identification and extraction of sentiment features in the review text for SA.

We utilize the RoBERTa transformer model as the encoder to tokenize the input sequence and encode it into a distinct sentiment-enhanced word embedding.

We weight the important features and explore the deeper internal relationships in the data using BiGRU with the attention mechanism.

We employ PCA to efficiently reduce the dimensionality of features in our data while preserving the most important information.

Compared with a number of earlier baseline techniques in sentiment analysis, our proposed model shows a notable boost in performance across four real-world benchmark datasets.

The remainder of the paper is structured as follows:

Section 2 describes related work,

Section 3 presents the detailed architecture and methodology of the proposed system,

Section 4 shows experimental results,

Section 5 concludes the paper, and

Section 6 discusses challenges and future directions.

2. Related Work

This section reviews the state-of-the-art methods for text sentiment analysis, grouped into feature extraction and selection, pre-trained large language models, and deep neural network models.

2.1. Feature Extraction and Selection

Numerous studies have explored diverse feature representation schemes to enhance sentiment classification performance in textual sentiment analysis (SA). For instance, Mohammed et al. [5] developed a sentiment analysis framework that utilizes Count Vectorizer for feature extraction, combining five machine learning models and three deep learning models, including LSTM, MLP, and CNN. When tested on Facebook and Twitter datasets, the LSTM model achieved the highest accuracy of 0.99, particularly excelling with Facebook data. The proposed method outperformed existing models, improving accuracy by up to 20.9% and showing significant gains in precision, recall, and F1-score. Their study demonstrates that integrating diverse algorithms can significantly enhance sentiment detection on social media platforms.

Chang et al. [6] introduced two feature selection techniques, Modified Categorical Proportional Difference (MCPD) and Balanced Category Feature (BCF), designed to ensure equal representation of attributes from text reviews. Their experiments demonstrated that integrating MCPD and BCF not only significantly reduces the dimensionality of the feature space but also enhances the accuracy of sentiment classification.

Khan et al. [7] introduced an ensemble approach for text SA named EnSWF, which leverages POS tagging and n-gram patterns. This method incorporates semantic context, sentiment cues, and word order to enhance performance. Relevant features for SA were extracted and selected using POS patterns and POS-based n-gram structures, combined through ensemble learning techniques to improve overall accuracy.

Noura et al. [8] highlighted the importance of feature extraction in SA by evaluating several methods (BoW, Word2Vec, N-gram, TF-IDF, HV, and GloVe) on the Twitter US Airlines and Amazon Musical Instruments datasets. Using a Random Forest classifier, their results showed that TF-IDF delivered the best performance, achieving 99% accuracy on Amazon reviews and 96% on Twitter data. This study emphasizes how selecting the right feature extraction technique can significantly enhance SA outcomes.

Sen et al. [9] proposed a method to improve text classification by combining traditional n-gram features with graph-based deep learning. They transformed text into graphs using discriminative n-gram sequences to capture long-range word dependencies and trained a graph convolutional network (GCN) to produce enriched word embeddings. When these embeddings were integrated into an LSTM model, they achieved a 2% performance gain across various datasets, demonstrating the effectiveness of merging n-gram features with deep learning techniques.

The extraction and selection of relevant attributes conveying sentiment information are pivotal in SA. In this procedure, irrelevant and noisy attributes are removed from the feature space, resulting in a leaner, more accurate representation of sentiment-carrying elements. This refined set of features contributes to improved classification accuracy in SA and related tasks. In text categorization and SA, diverse feature selection techniques have been explored, with Document Frequency (DF), Chi-square (CHI), Mutual Information (MI), and Information Gain (IG) emerging as prominent approaches for refining feature sets and optimizing classification performance [7].

Principal Component Analysis (PCA) [10] is a statistical technique that streamlines complex datasets by identifying a compact set of principal components that capture the majority of the original data’s variance, enabling effective dimensionality reduction without sacrificing significant information.

Despite the application of traditional feature selection techniques for dimensionality reduction, classifiers continue to face significant challenges related to data sparsity. This issue arises because traditional feature selection methods, such as filter-based, wrapper-based, or embedded approaches, primarily focus on reducing the number of features by selecting the most relevant ones. However, they do not necessarily enhance the quality of data representation.

To address these challenges, advanced representation learning techniques like deep learning-based feature extraction, word embeddings (e.g., Word2Vec, GloVe, transformer models), and autoencoders can be used.

2.2. Pre-Trained Large Language Models

Pre-trained large language models (PLLMs) have substantially advanced the field of SA, bringing greater accuracy and contextual understanding to this domain. These models, such as BERT, GPT, XLNet, T5, and RoBERTa [11,12,13,14], have been trained on vast amounts of textual data, enabling them to model language in a nuanced manner. Unlike traditional machine learning models that rely on handcrafted features and shallow text representations, PLLMs can capture complex linguistic structures, contextual dependencies, and sentiment nuances.

The transformer-based architectures of these models allow them to process text bidirectionally (e.g., BERT, RoBERTa, and XLNet) or autoregressively (e.g., GPT). This bidirectional processing is particularly beneficial for sentiment analysis, as it enables models to consider both past and future words in a sentence when determining sentiment. For instance, the meaning of a word like “great” in “This movie is great!” versus “This movie is not great.” depends heavily on context, which these models effectively capture.

Advantages of LLMs in Sentiment Analysis:

Contextual Understanding—Unlike traditional word embedding techniques (e.g., Word2Vec, GloVe), PLLMs generate dynamic word representations that change based on the surrounding words, allowing for context-sensitive sentiment classification.

Handling Ambiguity and Sarcasm—Sentiment in text is often complex, especially in informal settings like social media. LLMs improve the detection of subtle linguistic cues such as sarcasm, negations, and implicit sentiments.

Transfer Learning Capabilities—Since these models are pre-trained on massive datasets, they require less labeled training data and can be fine-tuned for domain-specific sentiment tasks with improved generalization.

Multilingual Capabilities—Many LLMs, including XLM-R and mBERT, are multilingual, enabling SA across different languages without the need for separate models.

Why RoBERTa for Sentiment Analysis?

In this research, we employ RoBERTa for dynamic word embedding and vectorization, leveraging its optimized training strategies and enhanced performance over BERT. RoBERTa, short for Robustly Optimized BERT Pretraining Approach, retains the original transformer-based bidirectional architecture of BERT but incorporates key modifications that improve its efficiency and accuracy in NLP tasks.

Improved Training Strategies—RoBERTa removes the Next Sentence Prediction (NSP) objective from BERT, focusing entirely on masked language modeling (MLM), which enhances its contextual learning capabilities.

Larger Training Data and Batch Sizes—RoBERTa is trained on much larger datasets with increased batch sizes, enabling it to capture richer linguistic patterns.

Dynamic Masking—Unlike BERT’s static masking approach, RoBERTa uses dynamic masking, meaning the masked words in training change across epochs, leading to better generalization.

Faster and More Efficient Fine-Tuning—With no NSP and an optimized training procedure, RoBERTa achieves comparable or superior performance to BERT while requiring less training time and hyperparameter tuning.
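The difference between static and dynamic masking listed above can be sketched in a few lines. This is a simplified illustration under stated assumptions: each token is masked independently with a fixed probability, and the 80/10/10 replacement scheme used in actual masked language modeling is omitted. The function names are hypothetical.

```python
import random

MASK = "<mask>"

def mask_tokens(tokens, mask_prob, rng):
    """Replace each token with MASK independently with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]

def static_masking(tokens, epochs, mask_prob=0.15, seed=0):
    """Mask once during preprocessing and reuse the same pattern every epoch."""
    fixed = mask_tokens(tokens, mask_prob, random.Random(seed))
    return [fixed for _ in range(epochs)]

def dynamic_masking(tokens, epochs, mask_prob=0.15, seed=0):
    """Draw a fresh mask pattern each time the sequence is fed to the model."""
    rng = random.Random(seed)
    return [mask_tokens(tokens, mask_prob, rng) for _ in range(epochs)]
```

With static masking the model sees the same corrupted sequence in every epoch, whereas dynamic masking exposes it to different prediction targets for the same sentence.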

Due to these advantages, RoBERTa excels in sentiment analysis tasks, providing better contextual embeddings and improved sentiment classification accuracy. By leveraging RoBERTa in our study, we aim to capture fine-grained sentiment variations and enhance sentiment classification performance in complex textual datasets.

2.3. DNN Paradigms

The development of architectures such as convolutional neural networks (CNNs), bidirectional long short-term memory (BiLSTM), and bidirectional gated recurrent unit (BiGRU) networks, alongside word embeddings and attention mechanisms, has had a major impact on SA and related areas, sparking significant research interest [4,15,16,17,18,19,20,21].

Kim [22] developed a CNN model with multiple filters and max-pooling to extract key features, which were then classified using a fully connected layer. Rezaeinia et al. [23] enhanced word embedding in a CNN-based SA model by incorporating lexical, positional, and syntactical features, followed by sequential CNN modules for feature selection. Yang et al. [24] introduced a dual-channel DNN using pre-trained Word2Vec for text feature extraction and intent classification, achieving strong results in multi-intent tasks. However, CNNs primarily capture local features and neglect sequence information in text analysis.

LSTM and its variants are effective for sequential modeling tasks and capturing long-range dependencies between words in a sentence [25,26,27,28,29]. Wang et al. [30] utilized the Word2Vec model for word embedding and introduced an LSTM-based sentiment classification method for short social media texts. Yang et al. [31] proposed an attention-enhanced bidirectional LSTM to improve target-dependent sentiment classification.

The integration of deep learning architectures, word embeddings, and attention mechanisms demonstrates promising predictive potential in text classification and SA [4,19,32,33].

Li et al. [34] employed the CBOW model for word embedding in an LSTM-CNN hybrid framework for Chinese news text classification, achieving promising results. Similarly, Zhang et al. [35] developed an LSTM-CNN hybrid model for sentiment classification of movie reviews.

Additionally, several studies have explored hybrid models that integrate deep neural networks (DNNs) with attention mechanisms to enhance SA [32,36,37]. Liu et al. [36] introduced AC-BiLSTM, a hybrid model combining bidirectional LSTM and CNN with an attention mechanism for SA and question answering. Their approach leveraged BiLSTM for capturing contextual dependencies while using attention to focus on the most relevant text segments.

Basiri et al. [37] proposed ABCDM, an SA model integrating BiLSTM, BiGRU, and CNN with an attention mechanism. The model was evaluated on five English comment datasets and three Twitter datasets, achieving state-of-the-art performance across multiple benchmarks.

However, recent studies suggest that DNN-based methods tend to select irrelevant and redundant features [38], overlooking the sentiment cues associated with each sentiment word. This can reduce their classification accuracy.

Although conventional feature extraction methods offer interpretability and efficiency, recent studies show that Deep Neural Networks (DNNs) consistently outperform them in SA tasks. This paper proposes DK-HDNN, a novel model that leverages both approaches. DK-HDNN combines conventional feature extraction with deep learning techniques that incorporate linguistic semantics and sentiment information.

DK-HDNN differs from existing models in several ways. First, it integrates linguistic semantics and sentiment information from sources such as sentiment lexicons and linguistic rules. This allows context-rich sentiment-bearing words to be extracted and opinions to be classified accurately. Second, DK-HDNN utilizes RoBERTa to generate sentiment-enhanced word embeddings. These embeddings are then processed by a BiGRU with an attention mechanism, which captures further sentiment features. Finally, DK-HDNN employs PCA for dimensionality reduction, which keeps the model efficient.

3. Proposed Technical Approach

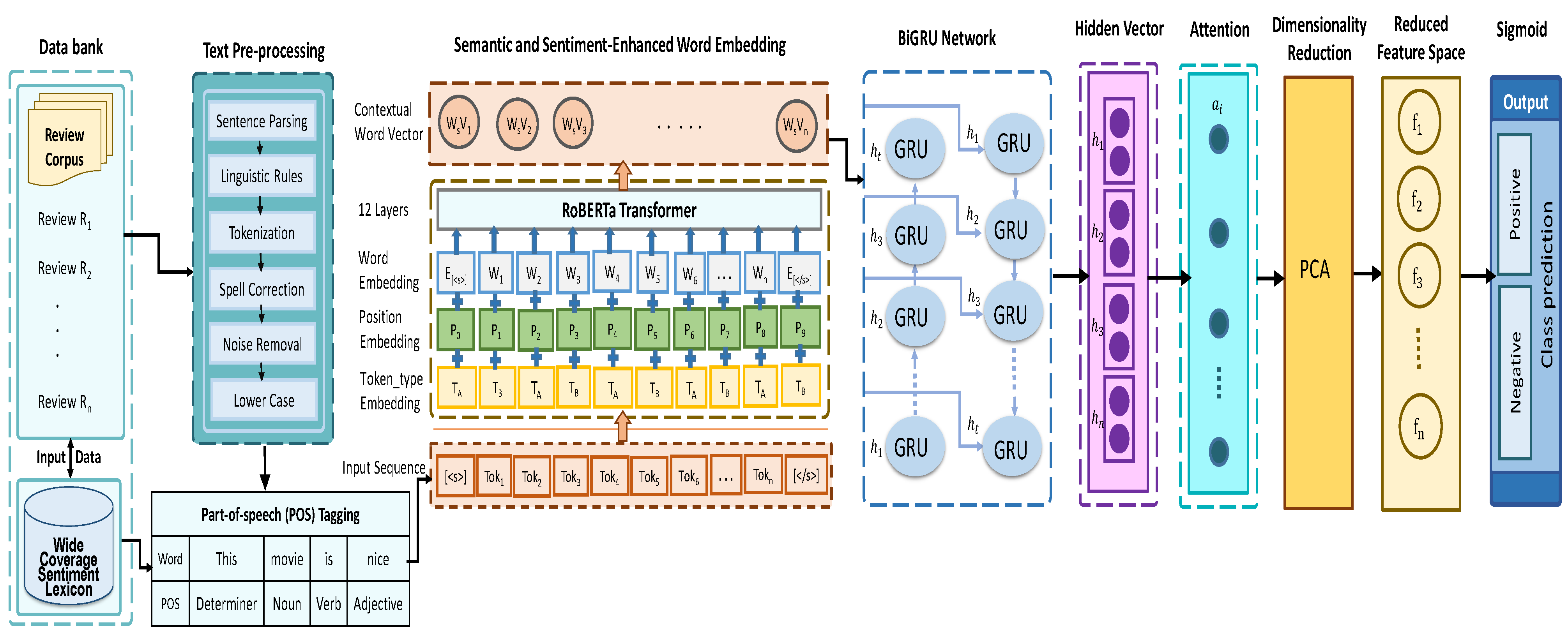

Conventional SA methods often struggle with sparse data and the intricate nature of natural language. DK-HDNN, our hybrid approach, addresses this by combining the interpretability of feature engineering with the capacity of DNNs to capture language nuances. The approach starts with text preprocessing, which cleans the review text and creates the initial sentiment feature space. Subsequently, the RoBERTa pre-trained language model extracts dynamic semantic and sentiment feature representations from the input review sequence. The extracted word features are then fed into the BiGRU network, which models dependencies across the sequence. An attention mechanism highlights key features, and PCA is applied to reduce dimensionality and feature redundancy. Finally, the reduced feature vector is fed into the sigmoid layer for classification, completing the SA of the review text. The detailed framework is shown in Figure 1.

3.1. Preprocessing the Text and Extracting Features

We systematically generate the initial feature space by preprocessing the text. It involves loading the review dataset, employing a sentence parser to segment text into manageable units, and applying a tokenizer to break down sentences into individual tokens, preparing the data for feature extraction.

To enhance the signal-to-noise ratio in the dataset, we employ a noise reduction and text modification module to eliminate unnecessary elements such as stop words, URLs, and numerical symbols. Additionally, we incorporate spell correction using the spellchecker library, handle contractions with regular expressions, and improve irrelevant content filtering by removing special characters and redundant text.
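The cleaning steps described above can be sketched with regular expressions. This is a minimal illustration, not the authors' pipeline: the stop-word list and contraction map are small hypothetical samples, spell correction is omitted, and the function name `clean_review` is an assumption. Negation words such as "not" are deliberately kept out of the stop-word list because they carry sentiment.

```python
import re

# Small illustrative resources (assumptions, not the paper's actual lists).
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "to", "of", "and", "it"}
CONTRACTIONS = {r"can't": "cannot", r"won't": "will not",
                r"n't": " not", r"'re": " are", r"'s": " is"}

def clean_review(text):
    """Lowercase, strip URLs/numbers/special characters, expand contractions,
    and drop stop words. Returns a list of tokens."""
    text = text.lower()
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)   # drop URLs
    for pattern, expansion in CONTRACTIONS.items():       # expand contractions
        text = re.sub(pattern, expansion, text)
    text = re.sub(r"\d+", " ", text)                      # drop numbers
    text = re.sub(r"[^a-z\s]", " ", text)                 # drop special characters
    return [tok for tok in text.split() if tok not in STOP_WORDS]
```

Note that expanding "'s" to " is" is a simplification, since the same suffix also marks possessives.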

Next, the POS tagger assigns POS tags to words, with particular attention to verbs, nouns, adjectives, and adverbs. The sentiment orientation of these words is then looked up in the WCISL to find and extract sentiment features.

Linguistic Semantic Rules

To handle sentences whose sentiment shifts partway through, we rely on linguistic semantic rules drawn from prior work [39,40,41]. These rules help account for context and differentiate between synonyms and antonyms, so that the part of a sentence carrying its overall sentiment can be identified. Consider this example: “The movie maker is well-known but the movie is uninteresting”. Without linguistic guidance, the overall sentiment could be misinterpreted, because the sentence contains both a positive and a negative clause. The rules direct attention to the pivotal clause following “but”, which expresses the actual opinion: the movie’s disappointing quality. Words such as “but”, “despite”, “while”, and “unless” signal potential shifts in sentiment and can change a sentence’s polarity. Building on earlier studies [3,39,40], we adopt a curated set of linguistic rules, as shown in Table 1, to support a comprehensive and context-sensitive SA.
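As an illustration of how such a rule can be applied, the sketch below implements only the "but" case from the example sentence: when the conjunction is present, the clause after it is kept as the representative clause. The function name `apply_but_rule` is hypothetical, and the full rule set in Table 1 is not reproduced here.

```python
import re

def apply_but_rule(sentence):
    """If the sentence contains 'but', keep only the clause after the last 'but';
    otherwise return the sentence unchanged (apart from surrounding whitespace)."""
    parts = re.split(r"\bbut\b", sentence, flags=re.IGNORECASE)
    if len(parts) > 1:
        return parts[-1].strip(" ,.;")
    return sentence.strip()
```

Applied to the example above, the function returns the clause about the movie being uninteresting, which is the part a downstream classifier should see.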

3.2. Enhanced Sentiment and Semantic Word Embedding

We draw on wide coverage integrated sentiment lexicons (WCISLs), which include sentiment scores and related words, in conjunction with refined linguistic rules. This combination enables the extraction and curation of highly relevant sentiment features. These serve as inputs for both word embedding, which enriches the representation of individual words in context, and sentiment classification, which determines the overall emotional stance of the text. This phase consists of the steps detailed below.

3.3. Sentiment Lexicons with Integrated Wide Coverage

A sentiment lexicon is a collection of words used to express either positive or negative sentiments. These lexicons play a crucial role in recognizing sentiment features and phrases. In the literature, numerous sentiment lexicons have been created at different scales, including AFINN, OL, SO-CAL, WordNet-Affect, GI, SentiSense, MPQA Subjectivity Lexicon, NRC Hashtag Sentiment Lexicon, SenticNet5, and SentiWordNet, among others [42].

We standardize various sentiment lexicons by assigning scores of +1 (positive), −1 (negative), and 0 (neutral) to words. This allows us to seamlessly integrate information from various sources, culminating in the creation of an extensive merged lexicon, denoted as WCISL. This lexicon encompasses a significantly larger repertoire of sentiment-bearing words compared to individual sources.
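The standardization and merging step can be sketched as follows. The sign-based mapping to +1, −1, and 0 follows the description above; how conflicts between source lexicons are resolved is not stated in the text, so the majority-by-sum rule used here is an assumption, as are the function names.

```python
def normalize(score):
    """Map any numeric score to +1, -1, or 0 according to its sign."""
    return (score > 0) - (score < 0)

def merge_lexicons(*lexicons):
    """Merge several {word: score} lexicons after sign normalization.
    Assumption: when sources disagree, the sign of the summed votes wins."""
    votes = {}
    for lexicon in lexicons:
        for word, score in lexicon.items():
            key = word.lower()
            votes[key] = votes.get(key, 0) + normalize(score)
    return {word: normalize(total) for word, total in votes.items()}
```

For example, a word scored 3.0 in one source and 0.8 in another ends up with polarity +1, while a word with one positive and one negative vote ends up neutral under this assumed tie rule.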

In this research, we leverage the matching process between the review sentence words and those present in the WCISL to conduct accurate SA. Formally, $L = \{w_1, w_2, \ldots, w_m\}$ denotes the wide coverage integrated sentiment lexicon ($m$ words) and $S = \{f_1, f_2, \ldots, f_n\}$ an input sentence ($n$ features). $\mathrm{pol}(w_i)$ represents the sentiment polarity of word $w_i$ in $L$, and $I(w_i) = 1$ indicates the presence of $w_i$ in $S$.

Table 2 offers comprehensive details on the dimensions and composition of these cutting-edge sentiment lexicons, highlighting their individual word coverage and contributing to the richness of the consolidated WCISL.

The main difficulty with sentiment lexicons is their limited vocabulary coverage. If a sentiment word is not present in the existing sentiment lexicons, it is typically omitted, which can impact the sentiment of the review text. To address this issue, we utilize semantic knowledge from WordNet to identify synonyms of out-of-vocabulary sentiment words and determine their sentiment orientation. Specifically, for a given word W, we retrieve its synonym set from WordNet. Each synonym is then checked in the WCISL. If a synonym exists in WCISL, its sentiment score is used.

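The sentiment score of $W$ is computed as follows:

$$\mathrm{Score}(W) = \sum_{s_j \in \mathrm{Syn}(W) \cap L} \mathrm{pol}(s_j)$$

where $\mathrm{Syn}(W)$ is the WordNet synonym set of $W$, $L$ is the WCISL, and $\mathrm{pol}(s_j)$ represents the sentiment polarity of synonym $s_j$ in WCISL. If $\mathrm{Score}(W) > 0$, the sentiment is classified as positive; otherwise, it is negative. Furthermore, the WCISL is utilized for sentiment-enhanced word embedding and classification. Given an input review sentence $S = \{f_1, f_2, \ldots, f_n\}$, our method applies WCISL to extract sentiment words from $S$ and form a sentiment-enhanced word vector:

$$V_S = \{\, f_i \in S : f_i \in L \,\}$$

which is then used for improved SA.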
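The synonym fallback for out-of-vocabulary words can be sketched with toy data. Everything named here is hypothetical: `TOY_WCISL` stands in for the merged lexicon, `TOY_SYNONYMS` stands in for WordNet synonym sets, and the score is assumed to be the sum of the polarities of the synonyms found in the lexicon. Unlike the binary rule in the text, the sketch returns 0 when no synonym is found, so that unknown words are not forced to a polarity.

```python
# Toy stand-ins for the WCISL and for WordNet synonym sets (illustrative only).
TOY_WCISL = {"good": 1, "pleasant": 1, "bad": -1, "dull": -1}
TOY_SYNONYMS = {"agreeable": ["pleasant", "good", "suitable"],
                "tedious": ["dull", "tiresome"]}

def word_polarity(word, lexicon=TOY_WCISL, synonyms=TOY_SYNONYMS):
    """Return the lexicon polarity; for out-of-vocabulary words, fall back to
    the sign of the summed polarities of synonyms present in the lexicon."""
    if word in lexicon:
        return lexicon[word]
    score = sum(lexicon[s] for s in synonyms.get(word, []) if s in lexicon)
    return 1 if score > 0 else -1 if score < 0 else 0

def sentiment_words(tokens, lexicon=TOY_WCISL, synonyms=TOY_SYNONYMS):
    """Keep the tokens that carry a non-zero polarity, paired with that polarity."""
    scored = [(t, word_polarity(t, lexicon, synonyms)) for t in tokens]
    return [(t, p) for t, p in scored if p != 0]
```

In practice the synonym table would be replaced by a WordNet lookup; the toy dictionary keeps the example self-contained.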

3.4. Word Vector Representation Based on RoBERTA

The proposed model leverages RoBERTa, a pre-trained transformer-based large language model (PLLM), to generate numerical feature vectors that capture the semantic and syntactic nuances of input text. RoBERTa builds on the same transformer foundation as BERT and offers enhanced language understanding capabilities. It uses byte-level BPE (Byte Pair Encoding) for tokenization, dynamic masking, and training on massive datasets (BookCorpus+Wikipedia, CC-News, OpenWebText, Stories) to achieve superior accuracy and efficiency compared to BERT’s character-level BPE and static masking. The RoBERTa base model consists of 12 layers, a hidden size of 768, and 125 million parameters. These base layers are designed to generate a meaningful word embedding as the feature representation, which the subsequent layers use to capture valuable information.

To effectively process an input sequence/sentence, we use special boundary tokens: `<s>` to indicate the beginning of a sentence and `</s>` to indicate the end of a sentence. These tokens help the model recognize sentence boundaries and structure, enabling it to interpret the input as a coherent sentence rather than a disordered collection of words. This is crucial for preserving the contextual integrity of the sentence and for allowing the model to accurately learn and represent the relationships between words.

For each training instance $S$, we first locate all sentiment words within $S$ using the WCISL dictionary. Next, we tokenize $S$ into a sequence of subwords and retrieve the corresponding embedding for each subword. This sequence of embeddings is then passed into the PLLM. From the final layer of the PLLM, we take the representation of the first token as the context representation for $S$.

At the same time, we also extract the embeddings of the sentiment words present in $S$. As illustrated in Figure 1, the pre-trained RoBERTa model generates embeddings based on individual words $W$, positional information $P$ indicating each word’s position within the sentence, and token type IDs $T$, which reflect the segment type within the training input.

Word Embedding (W): This is the representation of individual words in the input sequence. Each word in the sequence ($w_1$, $w_2$, …, $w_n$) is mapped to a high-dimensional vector ($e_1$, $e_2$, …, $e_n$) based on pre-trained or learned word vectors. These word vectors capture semantic meaning and the inherent relationships between words.

Position Embedding (P): Position embeddings encode the relative or absolute position of a word in the sequence. Since transformers such as RoBERTa do not inherently encode token order (unlike recurrent neural networks), position embeddings are added to the word embeddings to inform the model about the order of words in the sentence.

Token-Type Embedding (T): Token-type embeddings identify the segment of the input to which each token belongs. When the input is a single review sentence, every token shares the same segment type, so this term acts as a constant offset added to each token’s representation.

Combined Input Representation: These embeddings (W, P, T) are combined through superimposition (element-wise addition), forming the input for the next network layer, with the formula being

$$E = W + P + T$$

RoBERTa then transforms the input into a low-dimensional vector $x_t$, capturing contextual information at time $t$.

Key Process Flow:

Raw Text to Tokenization: The sentence is tokenized into subword tokens, and special tokens are added for the sentence boundaries.

Embedding Combination: Word embeddings (W), position embeddings (P), and token-type embeddings (T) are added together for each token.

Transformer Layers: The combined input representation is passed through 12 transformer layers, where each word’s contextual meaning is refined and updated based on its interaction with other words in the sequence.

This process ultimately generates rich vector representations that encode both semantic (word meanings) and sentiment (contextual sentiment based on word interactions) information, enabling effective sentiment classification.
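The embedding combination step in the flow above amounts to three table lookups followed by an element-wise sum. The NumPy sketch below uses randomly initialized toy tables and hypothetical names (`word_table`, `pos_table`, `type_table`, `embed`); it omits the layer normalization and dropout that a real implementation applies after the sum.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, max_len, hidden = 10, 8, 4   # toy sizes; RoBERTa-base uses hidden size 768

word_table = rng.normal(size=(vocab_size, hidden))   # W: one row per token id
pos_table = rng.normal(size=(max_len, hidden))       # P: one row per position
type_table = rng.normal(size=(1, hidden))            # T: a single segment type

def embed(token_ids):
    """Return E = W + P + T for a sequence of token ids, shape (len, hidden)."""
    ids = np.asarray(token_ids)
    positions = np.arange(len(ids))
    segments = np.zeros(len(ids), dtype=int)   # single-sentence input: one segment
    return word_table[ids] + pos_table[positions] + type_table[segments]
```

Because the position term differs per index, the same token id receives a different input vector at different positions, which is how word order reaches the transformer layers.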

To enhance the RoBERTa model’s output, a BiGRU is utilized as a feature extractor. This incorporation allows the model to benefit from both the contextual information captured by RoBERTa and the long-range dependencies between tokens, leading to more precise predictions.

3.5. BiGRU Deep Neural Network

Bidirectional GRUs (BiGRUs) are recurrent neural networks that extend traditional GRUs by processing a sequence in both directions. BiGRUs often achieve performance comparable to BiLSTMs while using fewer parameters, leading to faster training, reduced memory requirements, and lower computational costs. Unlike simpler RNNs, BiGRUs can effectively learn from both past and future information within lengthy sequences while mitigating the vanishing gradient problem. This allows BiGRUs to capture subtle contextual nuances in the text.

In a standard GRU, input sequences are processed sequentially. At each time step, the GRU unit takes the current input and the previous hidden state to generate a new hidden state and output. The GRU incorporates a reset gate $r_t$ and an update gate $z_t$ that regulate information flow. The update gate ensures the network holds onto valuable information, while the reset gate prevents it from being dominated by outdated information. Furthermore, $\tilde{h}_t$ and $h_t$ bear the current and updated information, respectively. The calculation of $r_t$, $z_t$, $\tilde{h}_t$, and $h_t$ at time step $t$ is summarized as follows.

$$
\begin{aligned}
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
\tilde{h}_t &= \tanh\big(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h\big) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

where the symbol ⊙ represents element-wise multiplication and $\sigma$ is a sigmoid function. $W_z$, $W_r$, $W_h$ and $U_z$, $U_r$, $U_h$ are all weights to be learned. The biases of the update gate, reset gate, and memory cell are denoted as ($b_z$, $b_r$, $b_h$).
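A single GRU time step can be written directly in NumPy. The sketch below is illustrative only: parameters are randomly initialized rather than trained, the helper names are hypothetical, and it assumes the convention in which the update gate weights the candidate state, $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$ (some libraries swap the roles of $z_t$ and $1 - z_t$).

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(input_dim, hidden_dim, seed=0):
    """Random toy parameters for the reset (r), update (z), and candidate (h) parts."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("r", "z", "h"):
        p["W_" + gate] = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
        p["U_" + gate] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        p["b_" + gate] = np.zeros(hidden_dim)
    return p

def gru_step(x_t, h_prev, p):
    """Compute h_t from the current input x_t and the previous hidden state h_prev."""
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])   # reset gate
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])   # update gate
    h_tilde = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r_t * h_prev) + p["b_h"])
    return (1.0 - z_t) * h_prev + z_t * h_tilde
```

Since the new state is a gate-weighted mix of the previous state and a tanh-bounded candidate, every component of $h_t$ stays within $(-1, 1)$ when the initial state is zero.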

In BiGRU, features are extracted using both a forward GRU and a backward GRU. To comprehensively capture information from different directions, we concatenate the two extracted features using the following equation.

$$h_t = \overrightarrow{h_t} \oplus \overleftarrow{h_t}$$

where $\overrightarrow{h_t}$ represents the forward sequence, $\overleftarrow{h_t}$ represents the backward sequence, and ⊕ denotes the concatenation operation. BiGRU processes the text matrix, producing output $H$, which encapsulates deep semantic features. Its expression is as follows:

$$H = [h_1, h_2, \ldots, h_n]$$

In our model, the BiGRU operates on RoBERTa’s encoded text and models relationships between distant words and concepts that might otherwise be missed. This long-range modeling helps the model capture the overall coherence of the text.
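The bidirectional pass can be sketched by running one GRU left to right, a second GRU right to left, and concatenating the two hidden states at each position. The code below is a simplified illustration with hypothetical names: the gates are bias-free, each gate uses a single weight matrix applied to the concatenated input and state, and the parameters are random rather than trained.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def make_gru(input_dim, hidden_dim, rng):
    """Random toy parameters: one matrix per gate, acting on [x_t, h]."""
    return {g: rng.normal(scale=0.1, size=(hidden_dim, input_dim + hidden_dim))
            for g in "rzh"}

def run_gru(X, params, hidden_dim):
    """Run a bias-free GRU over the rows of X and return all hidden states."""
    h = np.zeros(hidden_dim)
    states = []
    for x_t in X:
        xh = np.concatenate([x_t, h])
        r = sigmoid(params["r"] @ xh)
        z = sigmoid(params["z"] @ xh)
        h_tilde = np.tanh(params["h"] @ np.concatenate([x_t, r * h]))
        h = (1.0 - z) * h + z * h_tilde
        states.append(h)
    return np.stack(states)

def bigru(X, fwd, bwd, hidden_dim):
    """Concatenate forward states with re-aligned backward states per position."""
    h_fwd = run_gru(X, fwd, hidden_dim)
    h_bwd = run_gru(X[::-1], bwd, hidden_dim)[::-1]   # reverse back to input order
    return np.concatenate([h_fwd, h_bwd], axis=1)
```

Each row of the result has twice the hidden size: the first half summarizes the tokens up to that position, and the second half summarizes the tokens from that position to the end.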

3.6. Enhancing BiGRU with Attention

Although BiGRU networks excel in capturing contextual relationships within text, they sometimes struggle to pinpoint the most crucial words for accurate classification. Additionally, their hidden layers might inadvertently discard valuable information from earlier stages. To overcome these challenges, attention mechanisms can be integrated to selectively focus on keywords and preserve important information throughout the network, enhancing overall performance.

Derivation of the Attention Weight Formula:

Contextualizing the Hidden States: The attention mechanism is incorporated into the hidden layer of BiGRU. The attention mechanism gives each word a weight, represented as $\alpha_t$, using a softmax function. Higher attention weights are given to words with stronger sentiment signals, highlighting their importance in the analysis. It is anticipated that contextually rich features, which are essential for enhancing the text’s contextual meaning for sentiment prediction, will receive the majority of this attention weight. This process is used to calculate the attention weights for the hidden state output $h_t$ from the BiGRU, as shown in Figure 1. Attention weights are computed as follows.

Calculating Attention Scores: Each hidden state $h_t$ is assigned an attention score, denoted as $e_t$, using the following formula:

$$e_t = \tanh(W h_t + b)$$

Here, $h_t$ is formed by mixing the representations from the forward and backward GRU, $W$ (weight matrix) is a learnt parameter applied to the hidden state, and $b$ is a bias term. The hyperbolic tangent function tanh is used to introduce non-linearity and ensure that the attention scores are within a suitable range for further calculation.

Applying the Softmax Function: The attention scores $e_t$ are passed through a softmax function to normalize them and produce attention weights $\alpha_t$:

$$\alpha_t = \frac{\exp(e_t)}{\sum_{j=1}^{n} \exp(e_j)}$$

This ensures that the attention weights sum to 1, making them interpretable as probabilities. Words that are more important for sentiment analysis will receive higher attention weights.

Computing the Context Vector: Each word is given an attention weight $\alpha_t$ that indicates its importance in the text.

$$D = \sum_{t=1}^{n} \alpha_t h_t$$

The whole input text vector, including sentiment data collected word-by-word, is represented by the variable $D$. This context vector $D$ captures the most relevant features of the text, weighted by the attention mechanism.
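As an illustration of the three steps (score, softmax, weighted sum), the following sketch pools a list of hidden states into one context vector. It assumes a scalar score per hidden state computed as `tanh(w . h + b)` with a weight vector `w`; the function and parameter names are hypothetical and the exact scoring form used in the model may differ.

```python
import math


def attention_pool(hidden_states, w, b):
    """Return (attention weights, context vector) for a list of hidden states.

    hidden_states: list of equal-length vectors, one per token.
    w, b: assumed learnable weight vector and scalar bias of the scorer.
    """
    # 1) Score each hidden state; tanh keeps the scores in (-1, 1).
    scores = [math.tanh(sum(wi * hi for wi, hi in zip(w, h)) + b)
              for h in hidden_states]
    # 2) Softmax (shifted by the max score for numerical stability).
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # 3) Context vector: attention-weighted sum of the hidden states.
    dim = len(hidden_states[0])
    context = [sum(a * h[k] for a, h in zip(alphas, hidden_states))
               for k in range(dim)]
    return alphas, context
```

When every score is equal (for instance with `w` set to zeros), the weights are uniform and the context vector reduces to the plain average of the hidden states; learning `w` and `b` is what lets the weights depart from that average.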

Before feeding the key feature vector into the sigmoid classifier for final decision-making, we first apply PCA, a dimensionality reduction technique. This process effectively condenses the feature vector into its most informative components, removing irrelevant and redundant features and enhancing the classifier’s accuracy.

3.7. Optimizing Feature Representation with Reduced Dimensionality

Traditional attention mechanisms attempt to determine importance by averaging weights across all input information. However, this can lead to unnecessary attention being given to less relevant words, potentially hindering performance. To address this, this paper employs PCA [43], a technique that strategically reduces dimensionality, ensuring focus on the most meaningful aspects of the data for SA. PCA is a widely used statistical method for dimensionality reduction. It employs an orthogonal transformation to convert potentially correlated input attributes into a set of uncorrelated variables called principal components. The first principal component captures the largest amount of variance in the data, and subsequent components capture progressively smaller amounts of the remaining variance. PCA reduces the number of dimensions while retaining most of the original data’s information, as the number of principal components is always less than or equal to the original number of attributes.
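To make the idea of a principal component concrete, the sketch below extracts only the first component of a small dataset using power iteration on the sample covariance matrix. This is a simplified, dependency-free illustration under stated assumptions (pure Python, one component, fixed iteration count); a practical pipeline would normally rely on a library PCA routine, and the function name is hypothetical.

```python
import math


def first_principal_component(rows, iterations=200):
    """Return (unit direction, projections) for the top principal component."""
    n, d = len(rows), len(rows[0])
    # Center the data so the covariance is measured around the mean.
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeated multiplication converges to the dominant
    # eigenvector, provided the start vector is not orthogonal to it.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # degenerate case: no variance along the current direction
        v = [x / norm for x in w]
    # Coordinates of each sample along the principal direction.
    projections = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, projections
```

For points lying exactly on the line y = 2x, the recovered direction is proportional to (1, 2), and a single coordinate per sample is enough to describe the data without loss.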

3.8. Sentiment Classification with Sigmoid

To determine the final output of the binary class, we use the sigmoid function shown in Equation (11) to predict sentiment polarity. This function maps its input to the range [0, 1], so the output can be read as the probability of the positive label. The goal of the two-class SA problem is to find the sentiment label of the input text. An output $y$ close to 0 indicates a sentiment class that leans toward the negative polarity, whereas an output $y$ close to 1 indicates a sentiment class that leans toward the positive polarity.

$$y = \sigma(wx + b) = \frac{1}{1 + e^{-(wx + b)}} \qquad (11)$$

The equation includes learned parameters $w$ (the parameter matrix) and $b$ (the bias), with $x$ representing the sentiment feature representation passed to the classifier.
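A short sketch of the sigmoid decision rule follows. The 0.5 decision threshold and the function name are assumptions made for illustration; the text only states that outputs near 0 indicate negative polarity and outputs near 1 indicate positive polarity.

```python
import math


def predict_sentiment(x, w, b, threshold=0.5):
    """Return (probability, label) for feature vector x under a sigmoid unit."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    # Numerically stable sigmoid: avoid exp() overflow for large |z|.
    if z >= 0:
        y = 1.0 / (1.0 + math.exp(-z))
    else:
        e = math.exp(z)
        y = e / (1.0 + e)
    label = "positive" if y >= threshold else "negative"
    return y, label
```

For example, a pre-activation of 2.0 gives a probability of about 0.88 and the label `positive`, while a strongly negative pre-activation drives the probability toward 0.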

3.9. Model Compilation

The model compilation utilizes categorical cross entropy as the loss function, the Adam optimization algorithm, and accuracy as the evaluation metric. The objective of the model is to predict the correct class for each input sequence.
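For a two-class problem, categorical cross-entropy over one-hot labels coincides with binary cross-entropy on the positive-class probability. The sketch below shows this equivalence in plain Python; it is an illustration of the loss only, with hypothetical function names, and does not reproduce the authors' Keras configuration.

```python
import math


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Cross-entropy between a one-hot label and a predicted distribution."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


def binary_cross_entropy(y_true, p_positive, eps=1e-12):
    """Cross-entropy for a single sigmoid output p_positive in (0, 1)."""
    p = min(max(p_positive, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


p = 0.8  # assumed sigmoid output for one review
loss_two_class = categorical_cross_entropy([0, 1], [1.0 - p, p])
loss_binary = binary_cross_entropy(1, p)
```

Both values equal `-log(0.8)`, so for binary sentiment labels the two formulations penalize a prediction identically.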

Algorithm 1 presents step-by-step pseudocode for the hybrid deep learning model, which integrates the Wide Coverage Integrated Sentiment Lexicon (WCISL), RoBERTa, BiGRU, an attention mechanism, and PCA for sentiment classification tasks. The architecture combines traditional feature engineering with deep learning methods to enhance SA performance, and it employs linguistic semantic rules to classify mixed opinions. The pseudocode starts with preprocessing the input text and extracting features using WCISL and pre-trained word embeddings. It then leverages RoBERTa to capture contextual representations and applies BiGRU to model sequential dependencies in the text. The attention mechanism identifies the most relevant features, emphasizing critical parts of the input, while PCA reduces dimensionality and eliminates redundant features. Finally, a sigmoid layer performs the classification. The pseudocode provides a high-level overview of the DK-HDNN model, covering the key steps in its construction, compilation, training, and evaluation.

The comprehensive design ensures the extraction of both semantic and sentiment features, making the model suitable for various SA tasks on benchmark datasets.

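Algorithm 1 DK-HDNN for Text Sentiment Analysis

Input: $\mathcal{D}$ /* review text documents */; $E$ /* pre-trained embedding model (e.g., RoBERTa) */; WCISL /* wide coverage integrated sentiment lexicon */; $\alpha$ /* attention weights */

Output: $\hat{Y}$ /* sentiment labels for documents */

- 0: Begin
- 1: for each document $d \in \mathcal{D}$ do
- 2: /* Preprocessing */
- 3: Tokenize $d$ into words $w_1, \ldots, w_n$;
- 4: Remove stop words, punctuation, and apply part-of-speech tagging and spell correction;
- 5: /* Feature Extraction */
- 6: Obtain word embeddings $e_i = E(w_i)$;
- 7: Extract sentiment scores $s_i = \mathrm{WCISL}(w_i)$;
- 8: Concatenate embeddings and sentiment features $X = [x_1, \ldots, x_n]$, where $x_i = e_i \oplus s_i$
- 9: /* Deep Learning */
- 10: Pass $X$ through RoBERTa to obtain contextual representations $C = [c_1, \ldots, c_n]$
- 11: Use BiGRU to process $C$:
- 12: $\overrightarrow{h_t} = \overrightarrow{\mathrm{GRU}}(c_t, \overrightarrow{h}_{t-1})$;
- 13: $\overleftarrow{h_t} = \overleftarrow{\mathrm{GRU}}(c_t, \overleftarrow{h}_{t+1})$;
- 14: $h_t = \overrightarrow{h_t} \oplus \overleftarrow{h_t}$.
- 15: Apply attention mechanism to compute attention weights $\alpha_t$:
- 16: $\alpha_t = \mathrm{softmax}(\tanh(W h_t + b))$.
- 17: Compute weighted document representation:
- 18: $r = \sum_{t} \alpha_t h_t$.
- 19: Reduce dimensionality of $r$ using PCA: $r' = \mathrm{PCA}(r)$.
- 20: Predict sentiment class:
- 21: $\hat{y} = \sigma(w r' + b)$.
- 22: Store $\hat{y}$ in $\hat{Y}$.
- 23: end for
- 24: return $\hat{Y}$; /* predicted sentiment labels */
- 25: End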

4. Experimental Results and Discussion

4.1. Evaluation

We evaluated our system’s performance using four real-world benchmark datasets: (1) Movie Reviews (MR) dataset [44]; (2) Customer Review (CR) dataset [45]; (3) Large Movie Review (IMDB) dataset [46]; (4) SemEval 2013 dataset [47]. There are 5331 positive and 5331 negative review samples in the MR dataset. The CR dataset is composed of customer reviews of 14 products collected from Amazon, as detailed in [45]. The IMDB dataset features a rich collection of 50,000 movie review texts. SemEval 2013 has a traditional training-test split; however, MR, CR, and IMDB do not have comparable splits. Following prior work [48], we use 10-fold cross-validation for evaluation. For MR, CR, and SemEval 2013, we also set aside 10% of the training data for development purposes, such as early stopping.
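The evaluation protocol (10-fold cross-validation with 10% of each training portion held out for development) can be sketched as an index generator. This is an assumption-laden illustration: the shuffling seed, the unstratified folds, and the function name are choices made here, not details given in the text.

```python
import random


def k_fold_splits(n_samples, k=10, dev_fraction=0.1, seed=0):
    """Yield (train, dev, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        # Hold out a slice of the training portion, e.g. for early stopping.
        n_dev = int(len(train) * dev_fraction)
        yield train[n_dev:], train[:n_dev], test
```

Every sample appears in exactly one test fold, and within each split the train, development, and test indices are disjoint. A stratified variant that preserves the class balance per fold would be a natural refinement.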

We used 300-dimensional word vectors for word embedding, implemented with Keras v2.11.0 and TensorFlow v2.11.0, and utilized RapidMiner Studio v10.1 for visual workflow design. We used the RoBERTA-BASE model for word vectorization, employing an Adam optimizer with a learning rate of . The RoBERTA-BASE model’s architecture consisted of 12 layers, a hidden layer dimension of 768, 12 attention heads, and over 110 million parameters. Additionally, we used the wide coverage integrated sentiment lexicon (WCISL) to extract sentiment information from the review texts. In BiGRU, 128 hidden neurons are allocated to each layer. In the output layer, the sigmoid function is used to calculate the probabilities related to class labels.

The total number of training epochs in this architecture was set at 10. We employed PCA to reduce the dimensionality of each embedding vector individually, projecting them into a 300-dimensional vector space. We assessed the effectiveness of our proposed hybrid method by analyzing its predictive performance using metrics such as Accuracy (ACC), Precision (PRE), Recall (REC), and F-measure. We used a paired t-test to assess the statistical significance of the results for our proposed model, with a significance level of 0.05 (p < 0.05).
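The four metrics named above follow directly from the confusion counts of a binary classifier. The sketch below computes them for 0/1 labels; the function name and the convention of returning 0.0 when a denominator is zero are assumptions made for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F-measure for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F-measure: harmonic mean of precision and recall.
    f_measure = (2 * precision * recall / (precision + recall)
                 if (precision + recall) else 0.0)
    return {"ACC": accuracy, "PRE": precision, "REC": recall, "F": f_measure}


scores = classification_metrics([1, 1, 1, 0, 0, 0, 0, 0],
                                [1, 1, 0, 1, 0, 0, 0, 0])
```

In this toy example there are two true positives, one false positive, one false negative, and four true negatives, giving an accuracy of 0.75 and precision, recall, and F-measure of 2/3 each.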

4.2. Model Variants

We evaluate the effectiveness of the proposed method using four distinct model variants (one baseline and three proposed models). First, we employed the SOTA baseline DNN model (RoBERTa+BiGRU), which we call HDNN-1. Then, for the second model variant (DK-HDNN-2), we included SSK (linguistic semantics and sentiment knowledge) with RoBERTa and BiGRU. Similarly, for the third and fourth model variants (DK-HDNN-3, DK-HDNN), we added the attention mechanism and PCA, respectively, in order to determine which component contributes most to performance.

HDNN-1 (RoBERTa+BiGRU): The model utilizes RoBERTa’s pre-trained word embedding in tandem with BiGRU, excluding linguistic semantics and sentiment knowledge (SSK), PCA, and the attention mechanism.

DK-HDNN-2 (SSK+RoBERTa+BiGRU): The model leverages linguistic semantics and sentiment knowledge, employing RoBERTa’s pre-trained word embedding in conjunction with BiGRU. It does not incorporate PCA or the attention mechanism.

DK-HDNN-3 (SSK+RoBERTa+BiGRU+Attention): The model incorporates linguistic semantics and sentiment knowledge and utilizes RoBERTa’s pre-trained word embedding in conjunction with BiGRU and an attention mechanism, while PCA is excluded from the model’s configuration.

DK-HDNN (SSK+RoBERTa+BiGRU+Attention+PCA): The model integrates linguistic semantics and sentiment knowledge with RoBERTa’s pre-trained word embedding, BiGRU, the attention mechanism, and PCA.

4.3. Influence of Sentiment Knowledge, Attention Mechanism, and Dimensionality Reduction on DK-HDNN Performance

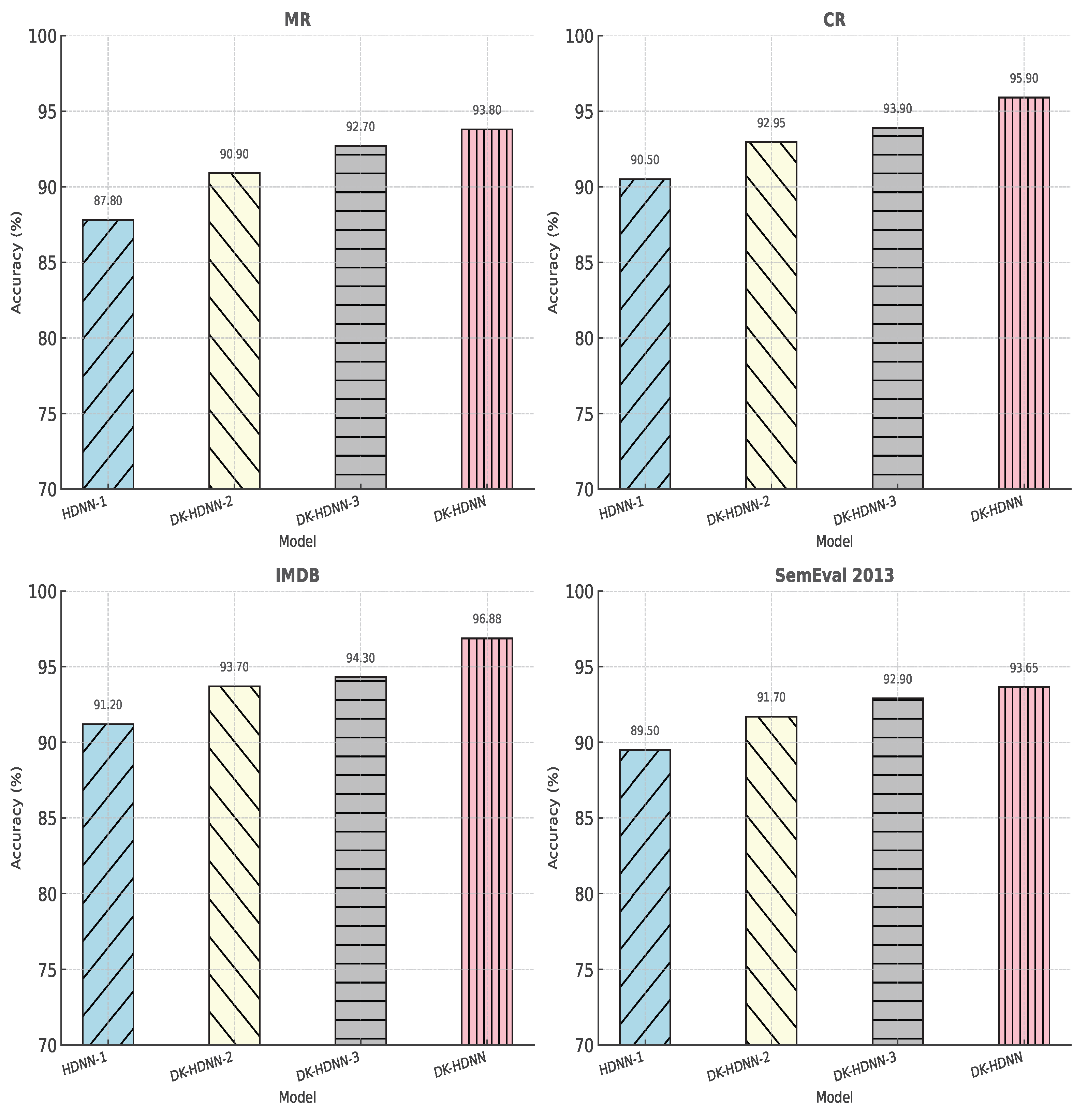

The accuracy of the ablation results for each variant model and the proposed model on the different datasets is shown in Figure 2 and Table 3. As shown in Figure 2, DK-HDNN outperforms all variant models on the four multi-domain datasets. The results indicate that the integration of linguistic semantics, sentiment knowledge, the attention mechanism, and dimensionality reduction (PCA) plays a pivotal role in enhancing model performance. The sentiment knowledge utilized in DK-HDNN, including meaningful words obtained through word conversion and typo correction, linguistic rules with POS tagging, and the wide coverage integrated sentiment lexicon (WCISL) (see Figure 1), significantly contributes to its effectiveness in sentiment classification. Additionally, the attention mechanism enables the model to focus on the most relevant features within the data, while PCA reduces feature dimensionality, preserving critical information and mitigating overfitting. Each of these components contributes to the model’s overall performance, with the full DK-HDNN configuration achieving the highest accuracy.

4.4. Cross Model Comparison

We compare our proposed approach with previously proposed DNN-based baseline models on the binary sentiment classification problem [20,48,49,50,51,52,53,54,55] using multi-domain datasets. The comparative results are presented in Table 4, with the best outcomes emphasized in bold. As shown in Table 4, DK-HDNN outperforms competing approaches on most benchmark datasets. Specifically, DK-HDNN achieves classification accuracies of 93.80%, 95.90%, 96.88%, and 93.65% on the MR, CR, IMDB, and SemEval 2013 datasets, respectively. These findings underscore the robustness and efficiency of DK-HDNN compared to conventional DNN models in addressing sentiment classification challenges.

Furthermore, the results highlight DK-HDNN (our proposed model) as a sentiment- and context-aware model that effectively integrates linguistic semantics and sentiment knowledge. By leveraging wide-coverage domain sentiment lexicons, linguistic rules, POS tagging, and sentiment clues, the model attains a deeper understanding of textual nuances. Additionally, the incorporation of RoBERTa embeddings, the attention mechanism, and PCA further enhances the model’s ability to focus on critical features while reducing dimensionality. Together, these elements, combined with hybrid DNNs, account for the higher classification accuracy of DK-HDNN for textual SA.

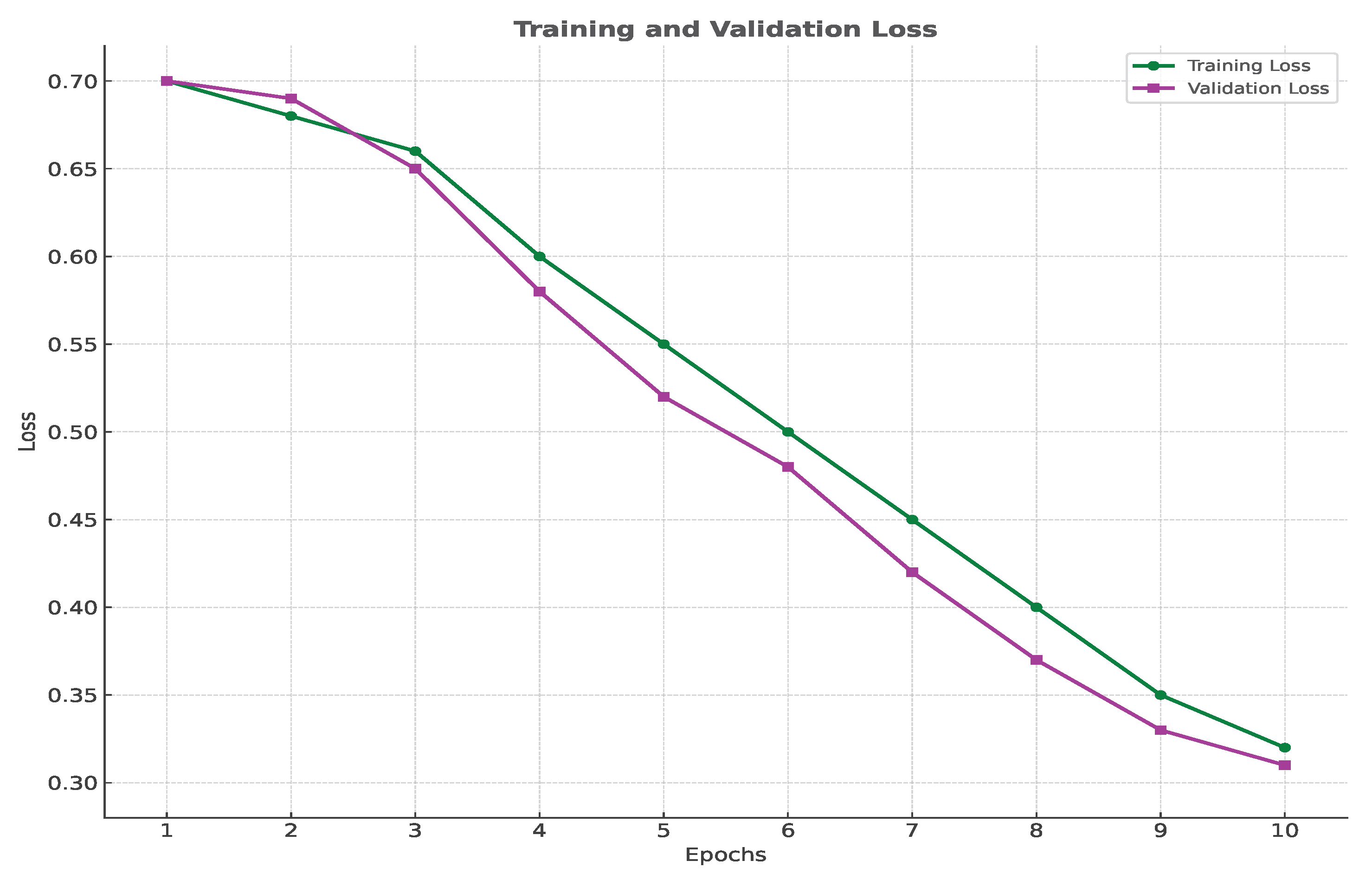

4.5. Loss Function Analysis

The lower loss values observed for both training and validation indicate that the model’s predictions are, on average, closer to the actual targets. This demonstrates that the model is effectively learning the underlying patterns in the data, as shown in Figure 3.

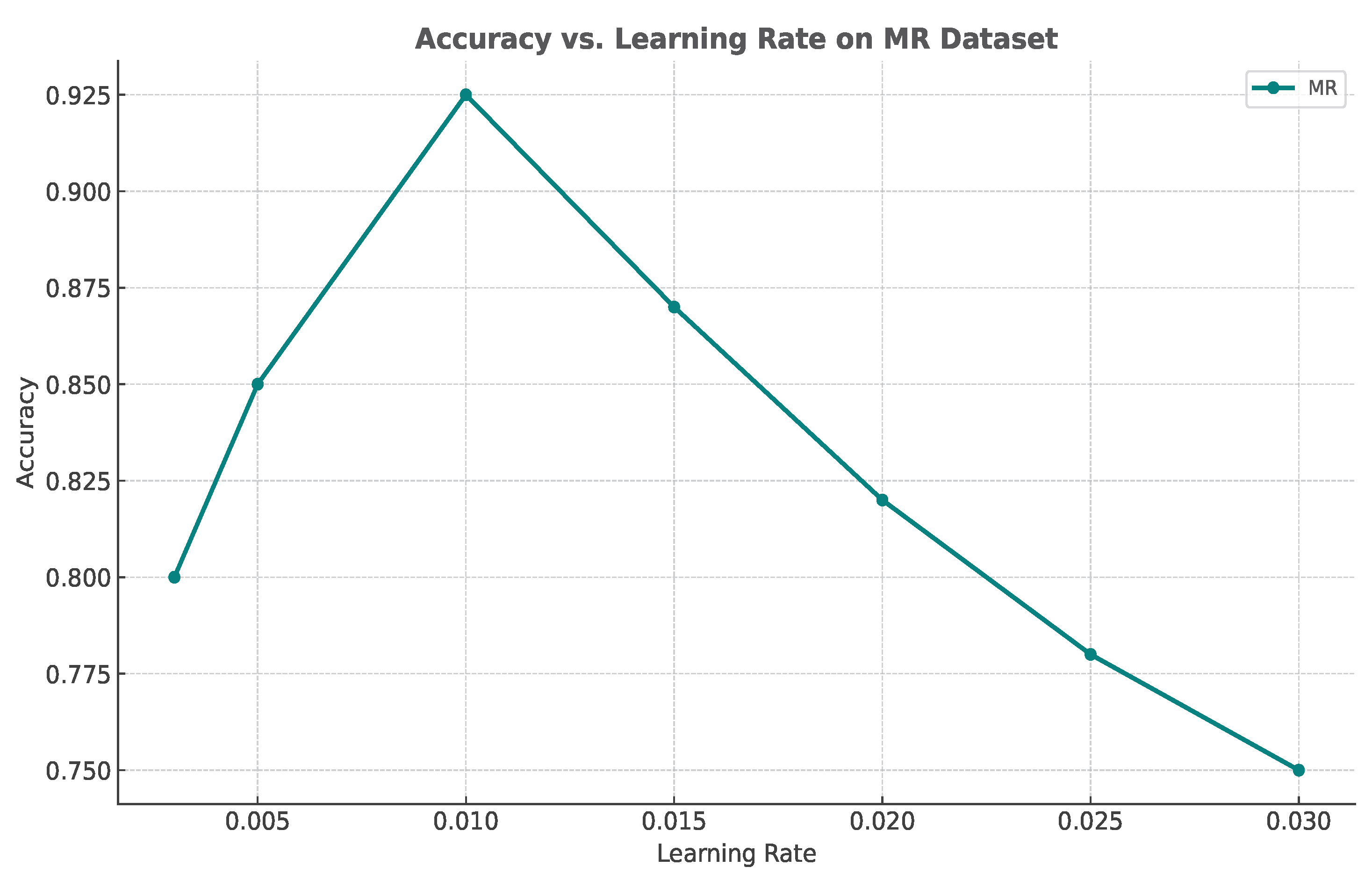

4.6. Effect of Learning Rate on DK-HDNN

Choosing the right learning rate is crucial for efficient training with gradient descent. A learning rate that is too small requires many iterations to converge and can leave the optimizer stalled in a poor local minimum or plateau; in the worst case, training may fail to reach a good solution within the available epochs. Conversely, a learning rate that is too large can make the cost function unstable, causing the updates to oscillate or diverge and hindering convergence to the global minimum. Figure 4 illustrates the sensitivity of the DK-HDNN model’s performance to the learning rate. As observed, a learning rate of 0.01 results in peak model accuracy. Further increases in the learning rate lead to a decrease in accuracy. This empirical evaluation suggests that a learning rate of 0.01 is optimal for this specific configuration.
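The general effect of the step size can be reproduced on a toy problem. The sketch below runs plain gradient descent on f(w) = w², which is an illustrative stand-in and not the DK-HDNN loss; the three learning rates are chosen only to show the slow, well-behaved, and divergent regimes and are unrelated to the values explored in Figure 4.

```python
def gradient_descent(learning_rate, steps=50, w0=5.0):
    """Minimize f(w) = w**2 from w0 and return the final w."""
    w = w0
    for _ in range(steps):
        gradient = 2.0 * w          # derivative of w**2
        w -= learning_rate * gradient
    return w


too_small = gradient_descent(0.001)  # barely moves away from the start
moderate = gradient_descent(0.1)     # approaches the minimum at w = 0
too_large = gradient_descent(1.1)    # overshoots more on every step
```

Each update multiplies `w` by `(1 - 2 * learning_rate)`, so the iterate shrinks slowly when the rate is tiny, shrinks quickly for a moderate rate, and grows in magnitude with alternating sign once the factor exceeds 1 in absolute value.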

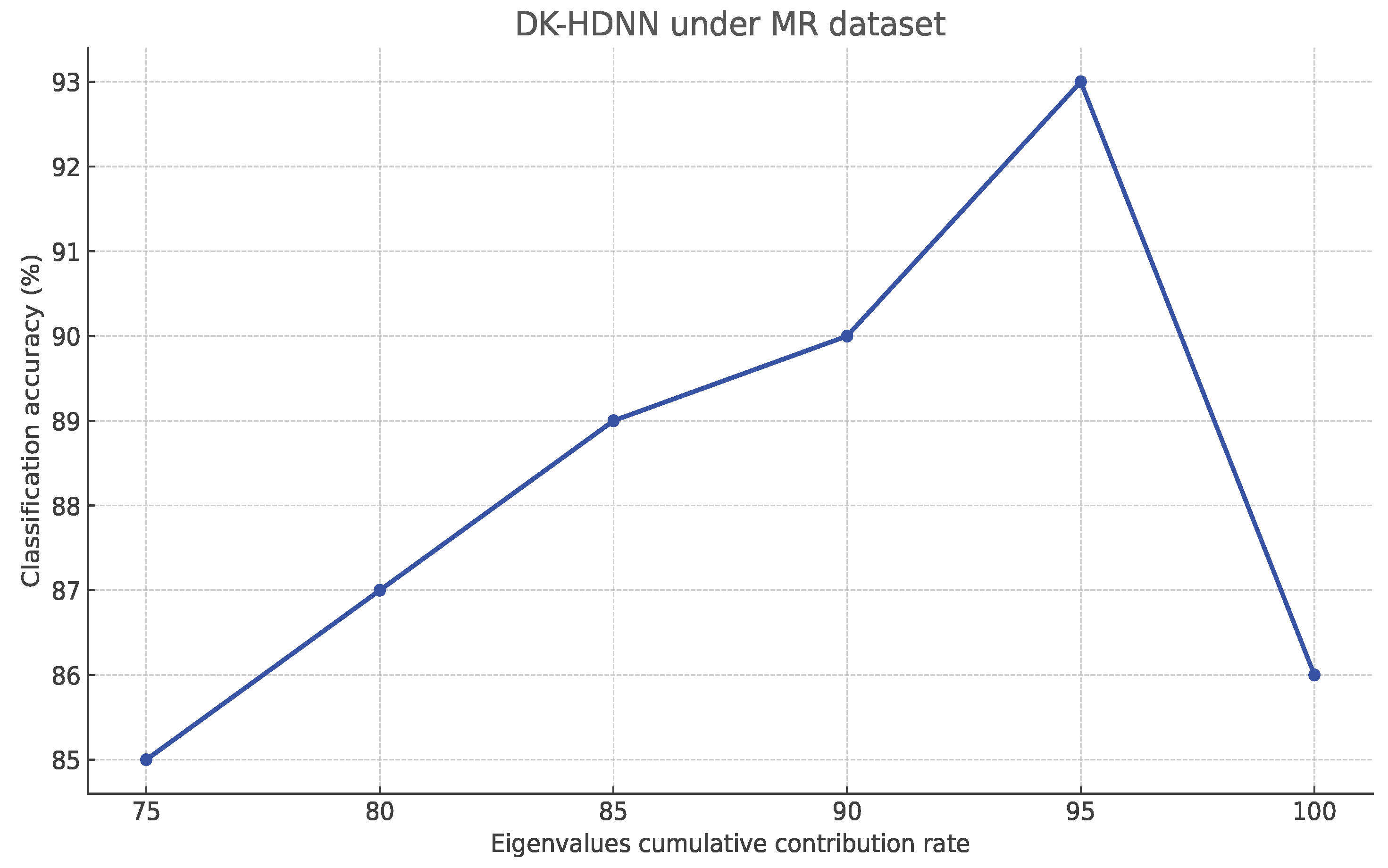

4.7. Impact of Cumulative Contribution Rate of Eigenvalues on Classification Performance

This study examines how dimensionality reduction through Principal Component Analysis (PCA) affects the classification performance of the DK-HDNN model. Specifically, it investigates the impact of the Cumulative Contribution Rate of Eigenvalues (CCRE) on classification accuracy using the MR dataset. The analysis focuses on varying the CCRE from 100% to 95%. Text features extracted by the DK-HDNN model undergo PCA to progressively eliminate redundant information. The findings indicate enhanced classification accuracy when the CCRE is set to 95%. This implies that removing a moderate amount of redundancy results in a more informative feature representation for the classification task. However, reducing dimensionality further by lowering the CCRE below 95% appears to eliminate not only redundant information but also partially relevant text features, leading to a decline in classification performance (Figure 5).
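The CCRE criterion amounts to keeping the smallest number of leading principal components whose eigenvalues account for a target share of the total variance. A minimal sketch, assuming the eigenvalues of the covariance matrix are already available (the function name and the example eigenvalues are hypothetical):

```python
def components_for_ccre(eigenvalues, target=0.95):
    """Smallest number of components whose cumulative contribution >= target."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    if total == 0:
        return 0  # no variance to explain
    cumulative = 0.0
    for k, value in enumerate(ordered, start=1):
        cumulative += value
        # Small tolerance guards against floating-point rounding at the boundary.
        if cumulative / total >= target - 1e-12:
            return k
    return len(ordered)


example_eigenvalues = [5.0, 3.0, 1.0, 0.5, 0.5]  # illustrative values only
kept = components_for_ccre(example_eigenvalues, target=0.95)
```

With these example eigenvalues the cumulative contributions are 50%, 80%, 90%, 95%, and 100%, so a 95% target keeps four of the five components, while a 100% target keeps all of them.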

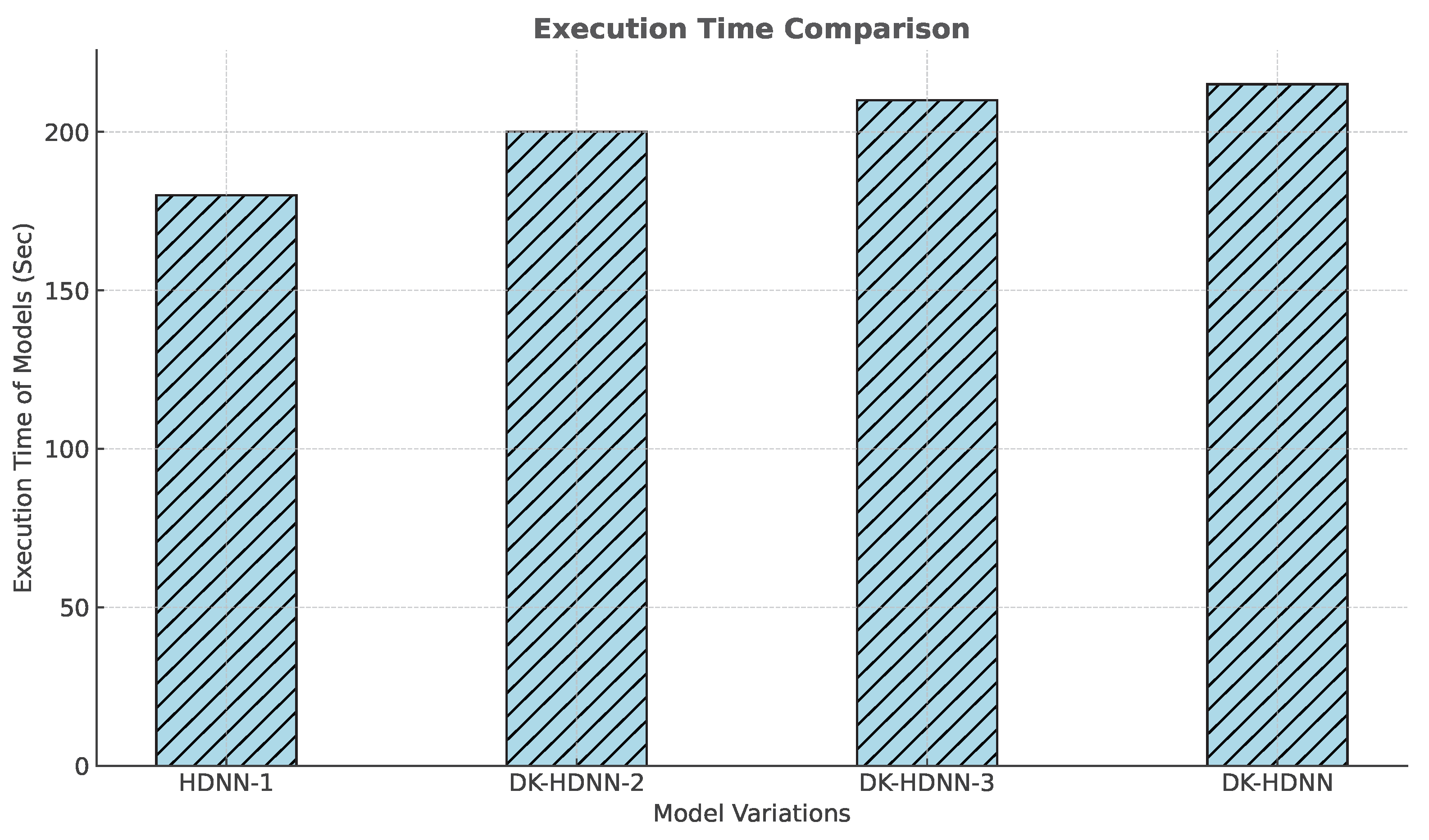

4.8. Comparative Analysis of Execution Time Across Model Variation

We evaluated the computational cost of all model variations by running experiments for up to 10 epochs. Figure 6 presents the execution time of the proposed DK-HDNN model alongside the other model variations on the large Movie Reviews (MR) dataset. The DK-HDNN model shows a slightly higher execution time than the baseline model HDNN-1, primarily due to the integration of both semantic and sentiment knowledge, as well as the attention mechanism.

4.9. Model Performance

We investigated the performance of our proposed domain knowledge-based Hybrid Deep Neural Network model, which incorporates linguistic semantics and sentiment knowledge for sentiment analysis. Our model’s increased accuracy over the most advanced baseline DNN-based models can be attributed to several factors. First, the preprocessing phase removes unnecessary and noisy elements from the review text and fixes typos. Second, the model effectively identifies and extracts sentiment elements that are pertinent to the text at hand. Third, it classifies mixed opinions by using linguistic semantic rules. Fourth, it integrates the wide-coverage sentiment lexicon for accurate recognition of sentiment-related features. Fifth, by representing sentiment features as dense real-valued vectors, it captures long-range relationships within the word sequence as well as contextual semantic information inside the text. Sixth, it combines Principal Component Analysis (PCA), an attention mechanism, a Bidirectional Gated Recurrent Unit (BiGRU), and RoBERTa. Together with linguistic semantics and sentiment knowledge, this combination extracts highly informative sentiment features, which helps the model classify sentiment accurately.

6. Challenges and Future Directions

The proposed approach faces some challenges in classifying reviews, as outlined below:

Idiomatic expressions: Culturally specific or non-literal phrases may be overlooked, limiting the model’s ability to interpret nuanced sentiments.

Figurative language: Metaphors, irony, and sarcasm often rely on contextual or tonal subtleties (e.g., exaggerated praise masking criticism) that literal textual analysis may misinterpret.

Subtle or layered sentiments: Implicit opinions, indirect critiques, or humor-laced dissatisfaction require deeper contextual inference beyond surface-level keyword detection.

To address these limitations, our upcoming work will focus on the following:

Enhanced contextual markers: Integration of punctuation-based cues (e.g., exclamation marks), laugh indicators (e.g., “haha”, “lol”), and explicit sarcasm markers (e.g., exaggerated positive terms in negative contexts) to better detect irony and mixed sentiments.

Expanded linguistic rules: Development of a dynamic rule set incorporating syntactic patterns, sentiment polarity shifts, and context-aware phrase disambiguation to decode ambiguous or contradictory expressions.

Self-learning mechanisms: Implementation of adaptive feedback loops to iteratively refine sentiment predictions based on user corrections and evolving linguistic trends, improving adaptability across diverse textual contexts (e.g., social media, product reviews).

By bridging these gaps, we aim to enhance the model’s robustness in identifying complex sentiment expressions, particularly in scenarios where literal and figurative meanings diverge.

In our upcoming work, we will explore the integration of exclamation marks, laugh indicators, sarcasm markers, and mixed sentiment indicators to enhance sentiment analysis, particularly in detecting sarcasm and irony. Additionally, we aim to expand the linguistic rule set and incorporate self-learning mechanisms to improve the model’s adaptability in handling complex sentiment expressions across diverse textual contexts.