Abstract

This paper presents a new matrix representation of ant colony optimization (ACO) for solving parametric problems. This representation allows us to perform calculations using matrix processors and single-instruction multiple-data (SIMD) calculators. To solve the problem of stagnation of the method without a priori information about the system, a new probabilistic formula for choosing the parameter value is proposed, based on the additive convolution of the number of pheromone weights and the number of visits to the vertex. The method can be performed as parallel calculations, which accelerates the process of determining the solution. However, the high speed of determining the solution should be correlated with the high speed of calculating the objective function, which can be difficult when using complex analytical and simulation models. Software has been developed in Python 3.12 and C/C++ 20 to study the proposed changes to the method. With parallel calculations, it is possible to separate the matrix modification of the method into SIMD and multiple-instruction multiple-data (MIMD) components and perform calculations on the appropriate equipment. According to the results of this research, when solving the problem of optimizing benchmark functions of various dimensions, it was possible to accelerate the method by more than 12 times on matrix SIMD central processing unit (CPU) accelerators. When calculating on the graphics processing unit (GPU), the acceleration was about six times due to the difficulties of implementing a pseudo-random number stream. The developed modifications were used to determine the optimal values of the SARIMA parameters when forecasting the volume of transportation by airlines of the Russian Federation. Mathematical dependencies of the acceleration factors on the algorithm parameters and the number of components were also determined, which allows us to estimate the possibilities of accelerating the algorithm by using a reconfigurable heterogeneous computer.

Keywords:

ant colony optimization; parallel computing; parametric problem; SIMD; MIMD; reconfigurable heterogeneous computer; optimal structure; hash table MSC:

65K10

1. Introduction

The problems of analysis and synthesis of complex systems are based on the issues of optimization of their parameters according to certain criteria. For single-criterion optimization, gradient methods are most often used, providing the fastest search for optimal parameter values. But the gradient method works well only on simple single-extreme functions, preferably of simple form. For multi-extreme functions of complex form, the algorithms determine the local extremum. To search for a global extremum, metaheuristic algorithms are considered, which allow a stochastic parallel consideration of many different solutions [1]. Due to the evolutionary development of these heuristics in nature, their improved convergence is assumed. Among the metaheuristic algorithms, the most widespread are the genetic algorithm, the particle swarm method, simulated annealing, and others. The heuristic nature of these algorithms allows for easy modification of the algorithm and its extension to other optimization problems; adding information to the algorithm allows us to take into account the features of the problem being solved, which generates many modifications and practices of its application [2]. The basis of the original algorithm remains the same. For example, the genetic algorithm is based on the principles of natural selection and evolution: it constantly changes the population of solutions through the processes of crossing and mutation of individuals. This ensures a variety of possible solutions and allows the algorithm to find more optimal options. The particle swarm method, in turn, models the behavior of a group of particles that simultaneously move in the search space. Each particle strives to achieve a common global minimum, while taking into account both its own experience and information about the best solutions found by other particles. This collective behavior contributes to the efficient exploration of the solution space. Simulated annealing is another interesting approach that borrows the concept of a thermodynamic process. This method gradually reduces the area of random oscillations of the agent’s position, allowing it to focus on finding the optimal solution. At first, the algorithm explores the solution space more freely, and it then narrows its search steps as the system “cools”, which helps to find a more accurate solution.

The development and gradual distribution of supercomputers both in government structures and in private firms allows the application of optimization algorithms not only to especially important problems, but also to private optimization problems. An optimization problem involves determining (using a computer) the values of parameters in order to optimize the value of criteria in the absence of information on the nature of the dependencies between the parameters and criteria. For parametric optimization problems, a modification of ACO is necessary, in which heuristic information may be absent. When solving the TSP, the heuristic information is the arc length; in the QAP, it is the assignment cost. The heuristic parameter is significant when searching for a solution and directly affects the efficiency of the method. In this paper, modifications of the method with and without a heuristic parameter are investigated, and the problem of algorithm stagnation with this approach, described in [1], is solved. The widespread use of individual components of processors and accelerators, allowing efficient execution of SIMD algorithms on modern personal computers, provides a sharp increase in efficiency. In this case, parametric problems can be described by discrete and continuous parameters simultaneously, which requires studying the data structure for representing information on the system parameters. For efficient execution of ACO on SIMD accelerators, it is necessary to ensure parallel execution of all operations. For this, it is necessary to develop a matrix formalization of ACO, which was omitted in previous works due to the symmetry of the implementation of algorithm modifications. Each agent, at each step, performed operations of the same dimension, for example, determining the next vertex in an array of possible paths of the same dimension. The introduction of a unique behavior of an ant agent required the synchronization of algorithms or adding a modification to all ant agents simultaneously. The formal matrix representation of ACO allows for modifications of ACO in the matrix representation, immediately taking into account the possibilities of parallel execution of the algorithm. If, in the matrix representation, it is possible to separate the agent’s behavior depending on its behavior, then such behavior can be implemented using MIMD components for heterogeneous computers, which in general are both modern GPUs and CPUs, and in the future, easier access to supercomputer architectures is expected. For such systems, it is necessary to evaluate the efficiency of individual components in order to modernize the hardware. The use of reconfigurable heterogeneous systems allows us to increase the efficiency of the algorithm not only by applying effective modifications but also an effective computer structure and an effective policy for modernizing the hardware complex. It is worth noting separately that the TSP and QAP imply a fast, possibly parallel, determination of the criterion value for the parameters selected by the ant agents. In parametric optimization, the determination of criteria values for given parameters can be carried out on the basis of complex analytical and simulation models, which can significantly increase the search time for the objective function values. If parallel operation of the model is impossible, the use of parallel modifications of ACO is ineffective. This paper studies a mechanism with intermediate storage of the results of ACO in hash tables in order to speed up the search for paths already considered and speed up the operation of ACO.

2. Literature Review

2.1. Effective Modifications of Ant Colony Optimization

This paper considers various modifications of the metaheuristic bionic method of ant colonies [3]. The ant colony optimization (ACO) method proposed by M. Dorigo solved the traveling salesman problem and was later extended to a large class of optimization problems [3,4]. In addition to the classic traveling salesman problem, the multiple traveling salesman problem (mTSP) [5] and the quality object location problem (QAP) [6,7] are solved. Although the original ACO algorithm showed encouraging results in solving the traveling salesman problem, unbalanced exploration and exploitation mechanisms lead to stagnation problems when all ant agents follow the same trajectory. When the algorithm operates, either the exploration of unoccupied areas of the search space or the exploitation of neighboring high-quality solutions occurs [8]. If exploration predominates, the algorithm may explore useless areas, and if exploitation is too strong, it may converge prematurely and give a poor result. The differences between ACO algorithms lie in the way they manage the balance between exploration and exploitation. Modifications of ACO to balance exploration and exploitation processes are usually divided into pheromone management, parameterization, and hybridization [9]. The first group includes various mechanisms used in ACO algorithms, such as elitism in elite ant systems (EASs), trail learning in ant colony systems (ACSs), ant-based Q-learning (AntQ), rank-based ant systems (RASs), trail limiting in Max–Min Ant System (MMAS), and pheromone subtraction in best–worst ant systems (BWASs), among others [9]. The second group includes methods where exploration and exploitation are supported by parameterization, in which the values of ACO algorithm parameters are changed online or offline. Offline methods are either a trial-and-error scheme or a machine learning scheme [10]. Both schemes are applied before the algorithm is run and are close to the multi-start methodology. Online methods are suggestions for changing the parameter values during the run. This may increase the complexity and workload of the algorithm development. The third group includes successful combinations of basic pheromone management techniques with local search algorithms, which significantly improve the quality of ACO solutions. The use of local search, such as 2-Opt, in combination with ACO has shown its effectiveness, and various hybrid approaches using other optimization methods have been proposed. The most widespread are combinations of the genetic method and ACO, as well as ACO and the particle swarm method [11,12].

This study considers the application of ACO to solve parametric optimization. In this problem, it is necessary to determine the parameter values that provide the optimal value of the objective function. If the parameters are set for an external model, then such a problem is called hyperparameter optimization; it is often not necessary to search for the exact optimal value, but it is still necessary to quickly find rational parameter values that provide close-to-optimal values of the objective function.

2.2. Parallel Modifications of ACO, Running on Central Processing Unit (CPU) and Graphics Processing Unit (GPU), and Using Open Multi-Processing (OpenMP)

In ACO, ant agents move in groups of ant agents. During this movement, the graph does not change, which allows for parallel movement. Parallel algorithms imply division into fine-grained parallelism when each agent ant is executed in its own flow and coarse-grained parallelism when separate ant colonies work in one flow, interacting with each other through various mechanisms. Most often, fine-grained algorithms are inferior to coarse-grained ones due to the simplicity of the algorithm in terms of the movement of a single ant agent and the overhead associated with starting parallel processes. In order to reduce communications between processors, a partially asynchronous parallel implementation (PAPI) method was developed, in which information is exchanged between colonies after a fixed number of iterations [13]. The master/slave approach to the parallel ACO for solving the QAP and travelling salesman problem (TSP) uses the concept of a master thread. The master thread collects solutions from parallel ant colonies and broadcasts information about updating each colony for their pheromone matrices. This requires that each thread, a separate ant colony, has its own data and its own algorithms [14]. Coarse-grained parallel modifications of ACO have been successfully implemented using OpenMP technology [15]. Modern research allows the use of multicriteria modifications of ACO (MOACO) in combination with local search algorithms when solving the knapsack problem [16]. But due to the complexity of simultaneous access to memory in different threads, the OpenMP model is effective only for complex modifications of algorithms in which the parallel execution of identical commands is difficult. In a comparative analysis of the implementations of ACO—an implementation based on OpenMP and an implementation based on the parallel message passing interface (MPI)—the OpenMP-based implementation showed an acceleration of 24 times for the TSP, while the one based on message passing showed an acceleration of only 16 times [17].

Further research into ACO was aided by the development of computing technology and the emergence of compute unified device architecture (CUDA) parallel computing cores on NVIDIA GPUs. Since GPUs can provide higher peak computing performance than multi-core CPUs and are inexpensive, researchers are more interested in parallelizing the ACO algorithm on GPUs than on multi-core CPUs [18]. This type of parallel ACO is based on the deep parallel attention model (DPAM). The basic concept of DPAM is to associate each ant with a group of threads with a shared memory, such as a CUDA thread block. In addition, by assigning each thread to one or more cities, the threads in the block can compute the state transition rule jointly, thereby improving data-level parallelism. In addition, since the shared memory on the chip is capable of storing ant data structures, the latency of the uncoalesced memory access method in tour construction is reduced [19]. In this formulation, researchers consider the GPU computer as an SIMD (single-instruction multiple-data) accelerator, assuming that each action of the algorithm is performed on different data. The results obtained from the modification of ACO for solving the TSP on CUDA for modern computing power were excellent; for example, for min–max modification [20,21], which is usually used together with a taboo search [22], the acceleration can be more than 20 times. Close to the parametric problem is the QAP, in which the transport graph is represented by the assignment graph. With parallel implementation on the GPU, the following stages are sequentially performed: pheromone updates, solution construction, and calculation of the solution cost [23]. Separately, it is worth noting the study of automatic optimization of code implemented on SIMD GPUs using additional libraries. These studies show that automatic optimization methods are close in efficiency to manual optimization methods [24].

The possibility of parallelization on GPUs for solving the TSP and QAP usually depends on the need to interact with common data, which has been the subject of many works [23,25,26]. Another important problem in implementing modifications on GPUs is the difficulty of implementing SIMD logic on GPUs due to the possibility of executing MIMD (multiple-instruction multiple-data) calculations on GPUs with a delay in the warp of individual threads [23]. At the same time, various modifications of ACO were considered to solve problems in the parallel execution of instructions [27]. Separately, it is worth noting the study of ACOs for solving the TSP on Xeon Phi [28,29].

2.3. Modifications of the Ant Colony for Parametric Optimization

The efficiency of ACO in solving the QAP allowed us to expand the application of ACO to optimization problems, where the most widespread problem is the optimization of hyperparameters, for example, the optimization of the parameters of Long Short-Term Memory (LSTM) recurrent neural networks [30]. ACO determines the optimal number of neurons in one layer of the network or the values of discrete parameters of the system. It is worth noting the small number of iterations of ACO and the small number of ant agents. This is due to the fact that the task of training an LSTM network is a long operation and it is desirable to spend the resources of parallel computers on accelerating the training of the network and not on a parallel search for parameters using ACO [31]. In this case, the model uses heuristic information on the efficiency of choosing a value [32]. For LSTM models, such information can be obtained as a result of statistical research methods [33]. For example, in the parametric optimization of LSTM models, correlograms are used to forecast time series.

2.4. Review of Metaheuristic Algorithms Applied to Optimization Problems

Among the metaheuristic algorithms, the genetic algorithm has gained the greatest popularity. The genetic algorithm was considered in detail and modified for solving optimization problems [34,35]. The particle swarm method was also studied and showed high efficiency in searching for the optimum of benchmark functions [36,37]. The ant colony method was also considered in the context of application to the problems of searching for the optimum of a function. In [38], continuous-domain-based ACO (ACOR) was studied for solving various optimization parametric problems, and results for the Rosenbrock function were included. The proposed algorithm is based on continuous optimization based on Gaussian distributions. In this algorithm, an ant agent selects a distribution, and then, a point is generated. By gradually reducing the variance in Gaussian distributions, it is possible to reduce the search area for one ant agent. The improved method using the ant agent pre-selection strategy was also investigated on the Rastrigin and Ackley functions [39], as well as with adaptive domain tuning [40]. Good results were demonstrated by the modification of ACOR with separation of the search and exploitation strategy [41]. The method of placing distributed generation (DG) for minimizing losses and improving the voltage profile in the distribution system was investigated, and a metaheuristic approach based on ACOR was used to determine the DG capacity [42]. However, the proposed methods cannot be easily adapted to mixed problems of high dimension, having both discrete and continuous parameter values. Discrete approaches for continuous benchmark functions, allowing the determination of parameter values with a certain accuracy, have not been studied in detail.

2.5. Features of the Current State of ACO

Based on the results of the conducted research review, two directions of ACO development can be distinguished. The original, discrete ACO demonstrates high efficiency in solving a wide class of optimization problems, including the traveling salesman problem and the object location problem. The main directions of the method’s improvement are pheromone control, parameterization, and hybridization with other optimization methods. Combinations of ACO with a genetic algorithm and a particle swarm method turned out to be especially promising. In the field of parallel computing, coarse-grained modifications using OpenMP technology turned out to be the most effective, providing a significant acceleration of computations compared to traditional approaches. The conducted literature analysis shows that the ant colony method demonstrates high efficiency when implemented on CUDA graphics processors. Also promising is the use of ACO for optimizing hyperparameters in LSTM neural networks, where the method successfully determines the optimal number of neurons and discrete parameters. The continuous version of ACOR based on Gaussian distributions has demonstrated good results in solving continuous function optimization problems.

However, the availability of heuristic information is essential for discrete ACO, without which the algorithm stagnates. This requirement does not allow us to propose universal modifications. ACOR does not use heuristic information but is often limited to the class of continuous optimization problems and is not suitable for discrete or mixed problems. It should also be noted that parallel modifications of ACO on CUDA and OpenMP investigate only discrete ACO on the TSP, QAP, and ACO-LSTM problem. In the proposed problems, the search time for the function value is commensurate (small) with the time it takes for the ant agent to find the path. No studies have been conducted on modifications of the method with intermediate storage of information to speed up computations and the use of hybrid SIMD/MIMD computers. To use strict SIMD calculators, a matrix formalization of the ACO method with the allocation of individual MIMD stages is necessary. It should also be noted that most works on parallel ACO are based on parallel multithreaded and distributed computations and computations on GPU CUDA cores and do not take into account the widespread development of SIMD cores of CPUs.

3. Materials and Methods

3.1. Statement of Parametric Problem in Matrix Form

Let there be a discrete set of parameters . Each -th parameter has a set of admissible values . The number of admissible values is determined by the value , which depends on the parameter number. Thus, different numbers of possible values can be defined for different parameters. As a result of the optimization algorithm, a vector of parameter values is determined, hereinafter called solution , where . Here, is the number of the ant agent, which obtains the solution independently of other ant agents. In total, at the iteration of ACO, the solution is set simultaneously by agents. Thus, it is possible to obtain each solution in parallel. The found vector of parameter values is sent to the computer, which returns the value of the criterion, the objective function . The solutions are considered in the discrete space of parameter values, and the continuity and differentiability requirements are not imposed on . In further development of the method, it is assumed that restrictions on the discreteness of the parameters will be removed by applying continuous modifications of ACO [43].

It is required to find the optimal solution ; if there is more than one such solution, then it is necessary to determine the set , such that . When searching for rational solutions, the set of solutions is determined, such that , where is the region of satisfactory values of the objective function. The number of solutions is not specified by the decision maker. For the minimization problem, it is sufficient to specify only the right boundary for the acceptable region , and . This determines the region of criteria values acceptable for the decision maker. This problem formally belongs to the class of constraint satisfaction problems (CSPs) [44].

3.2. Ant Colony Optimization

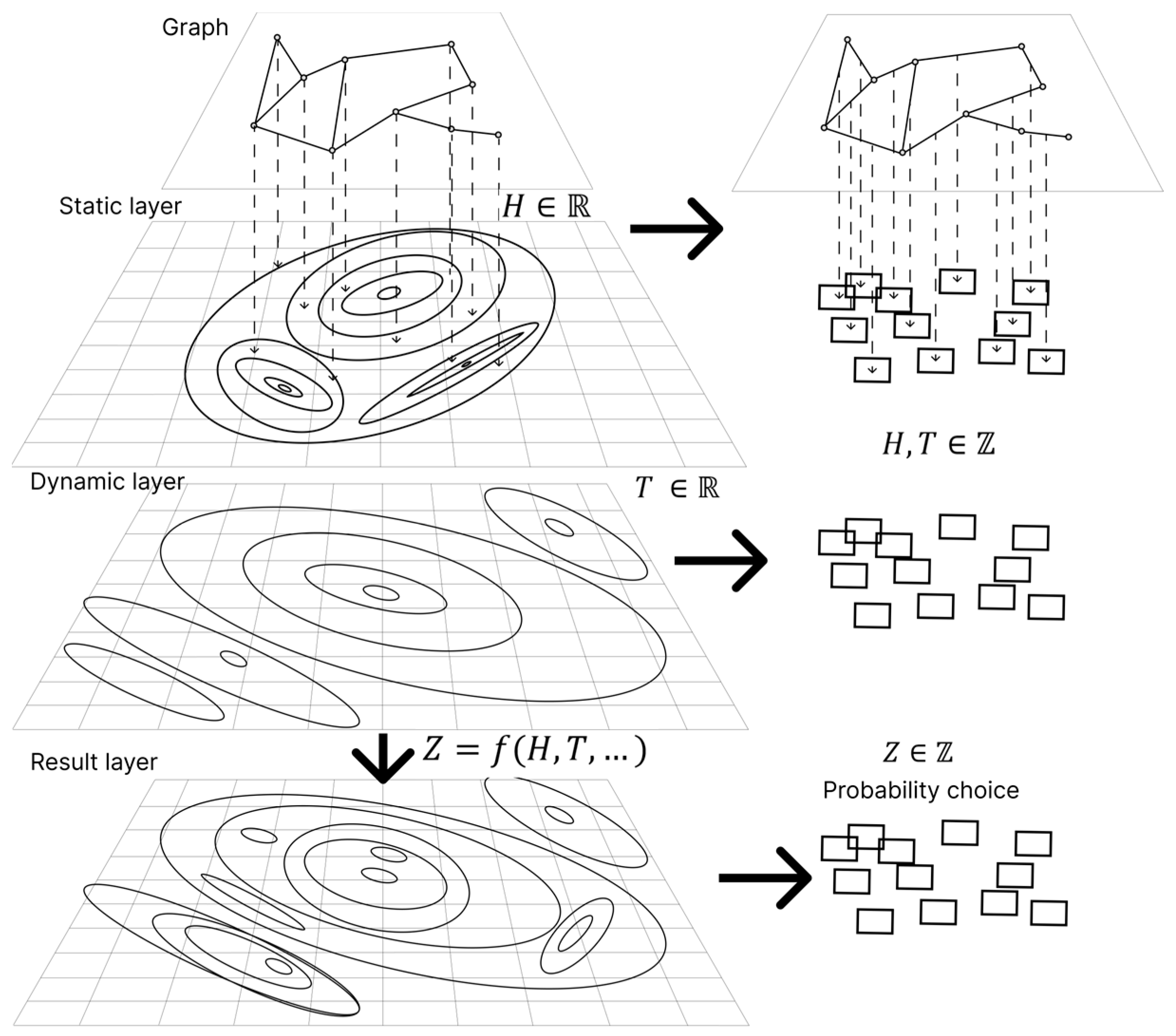

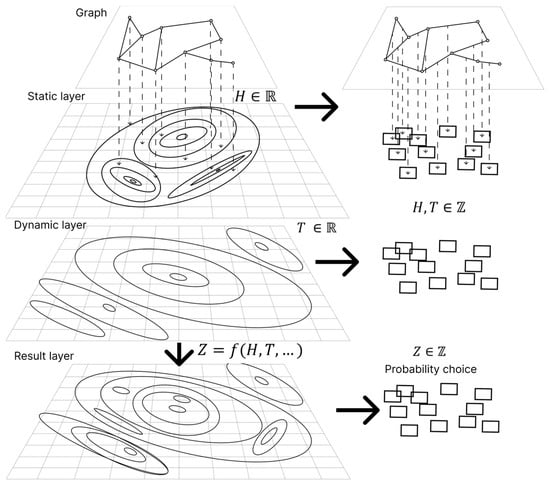

In ACO, each parameter value is defined by the sets and , where is the set of parameters; is the set of admissible values; is the amount of pheromone weight for the parameter value; and is information about the arc length. This paper proposes to represent the information required by ACO as a set of layers (Figure 1). The layers are divided into static, which do not change between iterations of ACO, and dynamic. For discrete problems, the values of each layer can be represented as matrices. Information about the arc length forms a static layer of values . does not depend on the iteration number . Based on the static layer, the problem can be solved using various algorithms for the traveling salesman problem; Dijktra’s methods, branch-and-bound methods, or dynamic programming can be used. Unique to ACO is the dynamic layer , in which the assignment of pheromone weights to each graph arc is determined. At the initial stage of the algorithm, the values of the dynamic layer are the same, and therefore, this layer does not affect the probability of the agent choosing an arc. Gradually, the value of the dynamic layer changes, and it is the dynamic layer that plays a significant role in the agent’s choice of an arc.

Figure 1.

Dividing information into layers in ACO.

The original ACO used the formula for the probability transition (1) from vertex i to vertex j, which used information about the arc length and pheromone [3,4].

where is the probability of transition along arc for ant agent k at iteration (the ant agent is at a certain vertex, i are arcs along which the agent can move, and is set); is the value of arc i from at iteration t. is the value of arc i from (the value inverse to the arc length is taken), is the value of the criterion for ant agent k at iteration t, and are the parameters of multiplicative convolution, is the parameter of “weight evaporation”, allowing a reduction in the influence of previously found solutions, and is the parameter of added weights (due to normalization in the probability formula, it has no effect on the operation of the algorithm, and it is recommended to set ). The probability of an arc being selected by an agent ant (1) is determined by the multiplicative combination of the arc length and the amount of pheromone, taken with weighting coefficients . The multiplicative convolution can be expressed as the resulting arc weight, . To calculate the probability taking into account the resulting weight , it is necessary to normalize to satisfy the condition for .

3.3. Modification of ACO in Matrix Formulation

For a parametric problem, the graph vertex is the admissible value of the parameter , and the arcs connect all the parameter values with neighboring numbers and for . Since arcs in such graphs do not carry information about the movement capabilities of the agent ant (the agent ant can move to any vertex of the next parameter) and the weight pheromone is left on the vertices, the arcs are fictitious and the data structure for the problem under consideration can be represented as a list of parameters, each parameter having a list of values [45]. Since the number of admissible values for each parameter is different, then to bring all parameters to matrix form, it is necessary to supplement the values of vectors to , obtaining a matrix . Setting the initial value of the layer or for the added parameter values equal to zero will ensure a zero probability of selecting these parameters, and the following conditions will be met for the added values: .

3.3.1. New Probability Formula Without Taking into Account the Heuristic Parameter

In this case, in parametric problems or other problems in which there is no a priori information about the efficiency of the agent’s movement (layer ), Formula (1) changes and only one normalized factor remains in it:

As a result, ACO stagnates at the early stages to the first rational solution found [1,45]. To solve the problem of stagnation at the early stages and improve the exploitation strategy, it is proposed to add information to the probabilistic formula related to the number of visits of agents to the graph vertices (layer ):

where is the parameter number; ; is the parameter value number ; t is the iteration number; are the coefficients of the additive convolution are the degrees of the terms of the additive convolution, which are not considered in this paper and are present in the formula due to their presence in (1). ; is the normalized weight for the value with number of the parameter with number for iteration ; is the number of ant agents that visited the vertex for the value with number of the parameter with number for iteration ; is the maximum possible number of ant agents that visited the vertices for the parameter with number .

The disadvantages of linear convolution and the mutual compensation of terms are an advantage for a probabilistic formula [45]. According to the results of mutual compensation at different iterations of the algorithm, different terms have a greater effect on the probability of choosing a parameter value, which allows the algorithm to maintain efficiency during operation. The first term depends only on the normalized number of weights for the parameter with number and its value with number . The second term depends on the number of visits of the parameter value by the agent, i.e., how many times the parameter value was considered in decisions. This term allows us to increase the probability of choosing the parameter values with the least number of visits. Adding this parameter makes it possible to avoid stagnation of the algorithm at the initial stage of operation. After several iterations of the algorithm, the influence of this parameter decreases due to the increased value of . The third term, on the contrary, increases when the number of solutions with a certain value of the parameter approaches the maximum number . The value of for a discrete problem can be calculated exactly by the formula The value of is static and does not change between iterations of the algorithm and can be calculated before starting work. This term helps to find the last solutions and has virtually no effect on the operation of the algorithm at early iterations.

3.3.2. Matrix Formalization of the Method

Due to the representation of all values for Formula (3) in the form of matrices, it is possible to calculate the probability matrix :

The presented matrix transformations consist of multiplying the matrix by a number, adding the matrix elements, and calculating the matrix–vector ratios, which can be easily performed on matrix calculators. It is worth noting separately the need to normalize the values of the matrix for using them in the following additive convolution: . The selection of the values of the parameters that form the solution, vector , is carried out on the basis of the probability distribution of the selection of vertices for each column of matrix using the inverse function method. For the inverse function method to work, it is necessary to calculate the matrix of distribution functions and .

At each iteration of ACO, the generation of ant agents of dimension finds solutions and calculates vector . After all ant agents from one generation have received decisions, a new state of all matrices is determined. During the movement of individual ants from one generation, matrices and do not change, which allows us to consider the movement of all ant agents as matrix transformations. To calculate the path of each ant agent, which determines the selected vertex values for each parameter, a matrix of the implementation of random variables uniformly distributed in the interval (0; 1) is generated, where is the parameter of ACO, which determines the number of ant agents (generation) at the iteration. Next, the index of the inverse function is determined for the corresponding generated number so that the following inequality is satisfied: . In this way, the index of the selected value is determined. The matrix of solutions, the paths of ant agents in the parametric graph , is determined from matrix V: . For each solution found, the value of the objective function is calculated, forming vector .

The values of matrix are updated according to Formula (1), defined in matrix form: . The discrepancy between the dimensions of and is resolved by adding the value of the element of vector only for the vertices, the values of the parameters that the ant agent selected, defined by matrix . The algorithmic record of this procedure can be represented as , with cycles over the variables and .

3.3.3. Modifications of the Method for the Parametric Optimization of a System with Negative Values of the Objective Function

In the probability Formulas (2)–(4), as in the original Formula (1), the negative value of the pheromone weights leads to errors in the probability formula and failures in the operation of ACO, i.e., Provided that ( determines the set of parameter values for which a negative value of the objective function is obtained), it is necessary to ensure the transformation .

The simplest transformation will be a shift in the value of the objective function by the largest value in absolute value. . The difficulty of the transformation in Formula (5) lies in the need to calculate the shift before the method can work.

Another option for the transformation is to use the absolute value of the objective function. . The transformation requires changing the direction of optimization and using it only for positive or negative objective functions.

In this case, Formulas (3) and (4) use the normalized value , which can be calculated as , which does not impose restrictions on the values of and can be used when .

3.4. Modification of the Ant Method Using a Hash Table

The use of modifications of ACO for parametric problems of optimizing model hyperparameters assumes high time costs for calculating the values of the objective function in an analytical or simulation model compared to the running time of the modified ACO. In the case of executing ACO on an SIMD computer, the acceleration of the algorithm obtained as a result of matrix formalization will be offset by the long time required to calculate the objective function in the model.

In the case when performing calculations on the model is possible only in sequential mode, parallel operation of ACO is not required. The proposed modifications are capable of working with a model in which asynchronous access to a model operating in parallel mode is possible. In such a case, it is usually assumed that the model is launched on a cluster or supercomputer. Another method that can be used together with parallelization of model calculations is intermediate storage of the results of calculating the objective function. This paper proposes to use a hash table as temporary storage, implementing matrix transformations of the ant agent path matrix into a vector of hash functions , with subsequent checking of the path in the hash table. If , then or else . The presented actions change the behavior of the algorithm as a result of checking the condition, which makes it impossible to perform these actions on an SIMD accelerator, but parallel execution on an MIMD computer is possible, parallelizing into threads.

With this approach, it is possible to consider various modifications of ACO that perform different actions when finding a value in a hash table. The point is that if the value is found, then there is an ant agent that has passed along this path in previous iterations, and a change in the behavior of the new agent will lead to the possibility of all agents not converging on one solution at the iteration but continuing to search for new, not yet considered solutions. As a result, ACO will feed new, not yet considered solutions to the computing cluster, ensuring a uniform load on the cluster. With such a formulation of the problem, the proposed modifications of ACO allow solving problems of reordering parameter sets, when sets of parameter values are sequentially sent to the computing cluster, and ACO determines the order of sending.

The following modifications of ACO are proposed in this paper [45]:

- ACOCN (ACO Cluster New): This is classic ACO that uses a hash table and obtains the values of the objective function without accessing the computing cluster. If , then .

- ACOCNI (ACO Cluster New Ignor): If the ant agent has found the already-considered solution, then this ant agent does not change the state of the dynamic layers described by matrices and , i.e., it is ignored. If , then .

- ACOCCyN (ACO Cluster Cycle N): If the ant agent has found the already-considered solution, then it performs a further cyclic search for a new solution. The cycle is limited to iterations; if a new solution is not found, then the solution is ignored.

- ACOCCyI (ACO Cluster Cycle Infinity): If the agent ant has found a solution that has already been considered, then it performs a further cyclic search until a new solution is found.

The ACOCCyI modification differs from ACOCNI in that the ACOCCyI modification guarantees that agent ant paths are found in one iteration, while in the ACOCNI and ACOCCyN modifications, .

3.5. Matrix Modification of the Ant Colony Method for Running on SIMD

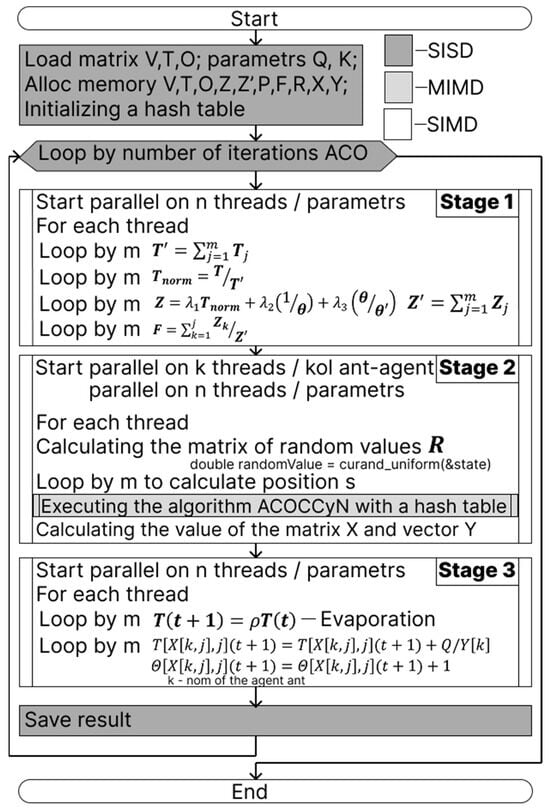

The algorithm for the matrix modification of ACO using hash tables when running on SIMD computers, using computers on the CUDA platform as an example, consists of three stages (Figure 2):

- Calculation of matrix : The following are performed sequentially: the calculation of or , to obtain the values of the normalized matrix or , respectively; calculation of matrix and then vector ; and calculation of the transition probability matrix and the distribution function matrix . Since at the first stage all matrices have the dimension and vectors have the dimension , then all actions of the algorithm are performed in parallel on threads, where each thread performs operations for one parameter. When optimizing the algorithm, one can refuse to calculate matrix and calculate vector simultaneously with matrix .

- Calculation of the ant agent decisions and : The following are performed sequentially: the generation of matrix and calculation of position ; and the determination of and the calculation of vectors and , necessary for working with the hash table. One of the modifications of ACOCN, ACOCNI, ACOCCyN, and ACOCCyI is performed, based on the results on which vector is determined. This stage can be performed in threads on SIMD and MIMD computers. In this case, the operations necessary for calculating matrix , within each thread can be performed on additional threads for each thread of the ant agent.

- Calculation of the new values of matrices and : First, all values of matrix are reduced—evaporation ,—and then, the values from ant agents are added: . The values of matrix are changed in a similar way: . This algorithm can be executed on parallel threads, each of which calculates the values of a separate parameter.

The algorithm repeats a specified number of iterations, which determine the criterion for stopping the algorithm.

Figure 2.

Algorithm for matrix implementation of ACO on SISD, SIMD, and MIMD components.

Figure 2.

Algorithm for matrix implementation of ACO on SISD, SIMD, and MIMD components.

In some cases, it may be appropriate to combine stages to reduce the number of transitions from the GPU to the CPU when using CUDA technology. It is possible to combine the third and first stages in order to perform a sequential change in the states of matrices and and calculate matrix on threads. This algorithm requires the creation of an initial matrix and vector .

It is also worth highlighting the implementation using CUDA technology, in which the number of threads and the number of blocks can be specified. In such an algorithm, all three stages are performed in parallel on blocks, where each block defines a separate behavior of the ant agent, and the update of matrices and and the calculation of matrix are performed in parallel on n threads. With this formulation, only one call to the method implemented on a GPU with CUDA technology is required.

3.6. Graph Structure for Parametric Optimization

Separately, it is worth noting the acceleration of the algorithm due to division into threads, where determines the number of parameters in the problem. At the same time, many benchmarks and parametric problems have a small number of parameters, , usually equal to two, for which parallel calculations are ineffective. For numerical parameter values obtained by discretizing continuous parameters with a certain accuracy, the number of such values for one parameter m can be very large. This paper considers an algorithm that allows the division of a set of parameter values into separate sets, forming related successive layers of values related to one parameter. The ant agent selects not one vertex of the parameter value but several values from the vertices of the set, calculating the final value of the parameter by linear convolution of the obtained values in all layers.

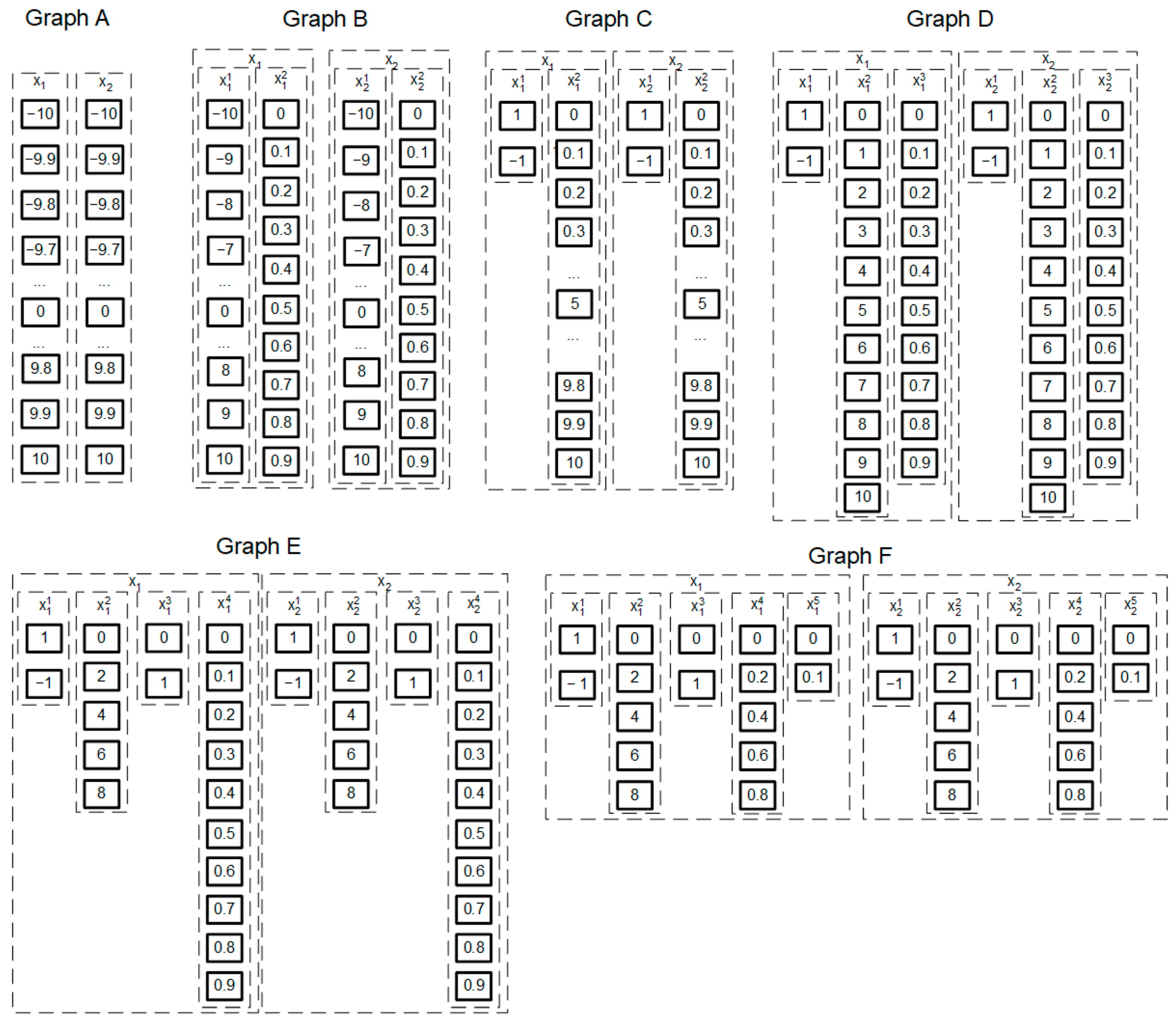

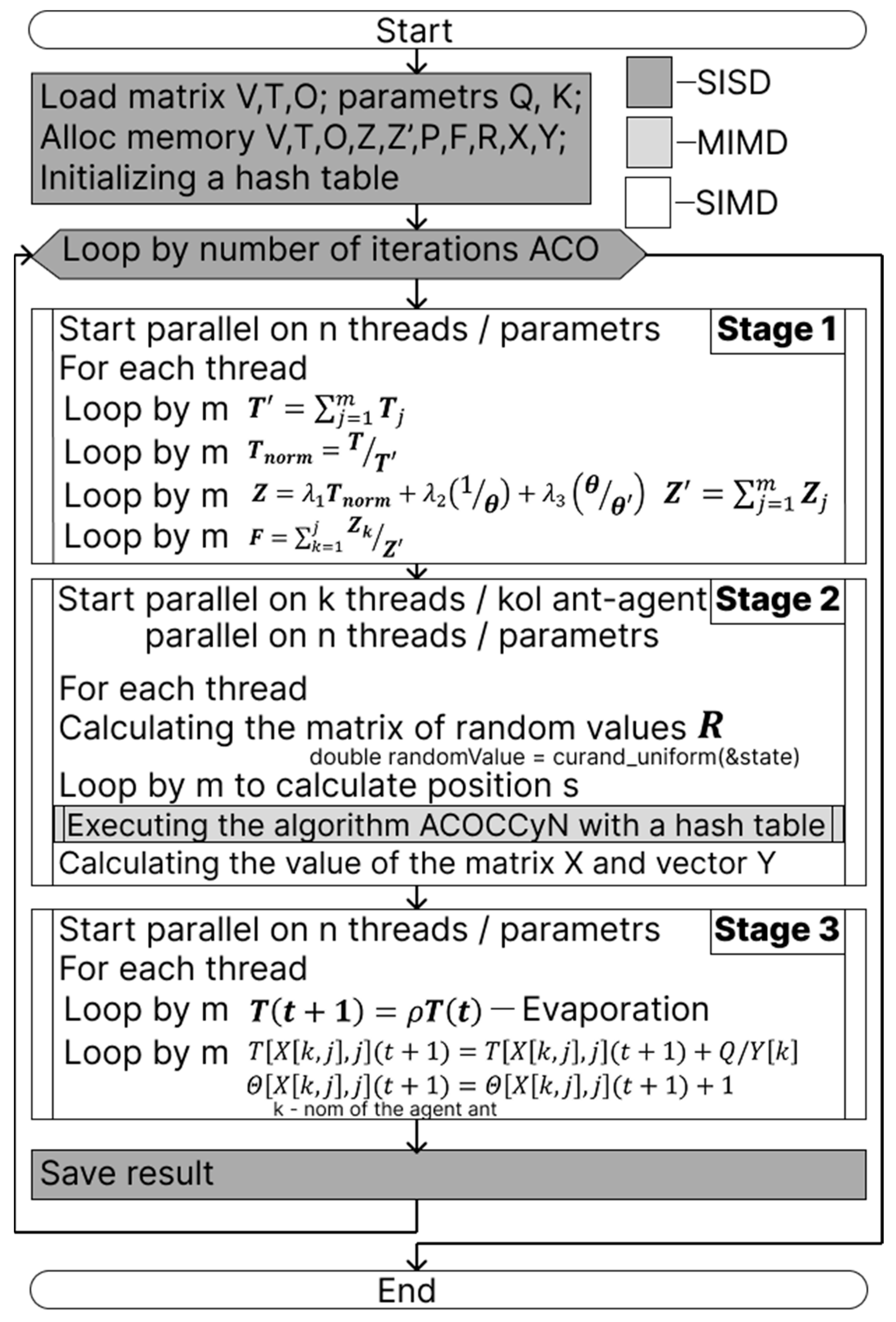

For example, when considering a two-criterion benchmark with with an accuracy of , the following layer configurations can be considered:

- Standard: The standard configuration for and uses 201 vertices. See Graph A in Figure 3.

Figure 3. Decomposition of parameter values into layers.

Figure 3. Decomposition of parameter values into layers. - Separation of the real part: Each layer is divided into the integer part in the interval [–10, 10] with a step of 1 (21 vertices) and the fractional part in the interval [0, 0.9] with a step of 0.1 (10 vertices). . In total, there will be 2 layers for each parameter in the parametric graph. The total number of solutions will increase to 44,100 due to the appearance of several zeros. See Graph B in Figure 3.

- Selection of the negative part: Each layer is divided into the sign (2 vertices) and the positive part in the interval [–10…10] with a step of 0.1 (101 vertices). . In total, the parametric graph has 2 layers for each parameter and 40,804 vertices. See Graph C in Figure 3.

- Separation of integer, real, and signed parts: Each layer is divided into the sign (2 vertices), the integer part in the interval [0, 10] with a step of 1 (11 vertices), and the fractional in the interval [0, 0.9] with a step of 0.1 (10 vertices). . In total, the parametric graph will have 3 layers for each parameter and 48,400 solutions. There are 7999 more solutions here than in the standard graph, since there are “extra” solutions, for example, . See Graph D in Figure 3. This graph is intuitive. When increasing the precision of parameter discretization, for example, to 0.01, it is necessary to simply add the corresponding layers for each parameter in the interval [0…0.09] with a step of 0.01 (10 vertices).

- In addition to selecting the integer, real, and sign parts, it is possible to decompose layers in the intervals [0, 10]. As a result, the parametric graph will have 4 layers for each parameter. ; is a sign layer (2 vertices), and corresponds to one vertex, , from Graph D: comprise even numbers from the interval [0, 8], and takes 2 values, 0 or 1. This graph is designated in Figure 3 as Graph E.

- Further decomposition of not only the integer but also up to 5 layers for each parameter of the real part. . See Graph F in Figure 3.

To decompose numerical values of parameters into separate layers, an automatic decomposition system is proposed, based on decomposing the range of values into simple factors to determine the minimum number of values for each layer . The number 10 is decomposed into two simple factors, 2 and 5, that is, into 2 layers with 2 and 5 vertices, respectively (Graph E, Figure 3). If the values change in the interval [0, 10] (11 vertices), then it will not be possible to divide this layer. But for values in the interval [0, 11] (12 vertices), it is possible to distinguish 3 layers with 2, 2, and 3 vertices. To determine the values at the vertices, one can use the formula , where is the parameter number; is the layer number for this parameter; and is the vertex number in the layer. Since the most appropriate and logical division is into integers, tenths, and hundredths, each such layer consists of 10 vertices, which can be decomposed into 2 more layers. As a result, with such a decomposition, many layers are obtained for each parameter with a maximum number of values in one layer, , which significantly reduces the number of calculations and makes the operation of the multithreaded system efficient.

4. Results

4.1. Analysis of the Efficiency of Application of the Proposed Modifications of ACO

Analyses of a new probabilistic formula, the interaction of ACO with a hash table, and the modifications of ACOCN, ACOCNI, ACOCCyN, and ACOCCyI were carried out on software developed in Python 3.12 (https://github.com/kalengul/ACO_Cluster (accessed on 9 April 2025); the entry point is the main.py file, and the task and parameters of the ant colony method are specified through the setting.ini files). This software explores a single-threaded version of ACO for the convenience of testing and research of the proposed modifications. In addition, the software has the ability to run in multi-process mode (taking into account the presence of Global Interpreter Lock—GIL) and interact with the model through a socket connection to calculate the values of the objective functions. The absence of multithreading allows us to consider the effectiveness of the method modifications in the worst case.

During this study, estimates of the mathematical expectation of the algorithm’s running time, estimates of the probability of finding an optimal solution (if the value of the objective function is known), and estimates of the mathematical expectation of the iteration number at which the optimal solution was found were calculated. Testing was carried out on various benchmarks and large-scale test graphs [46,47].

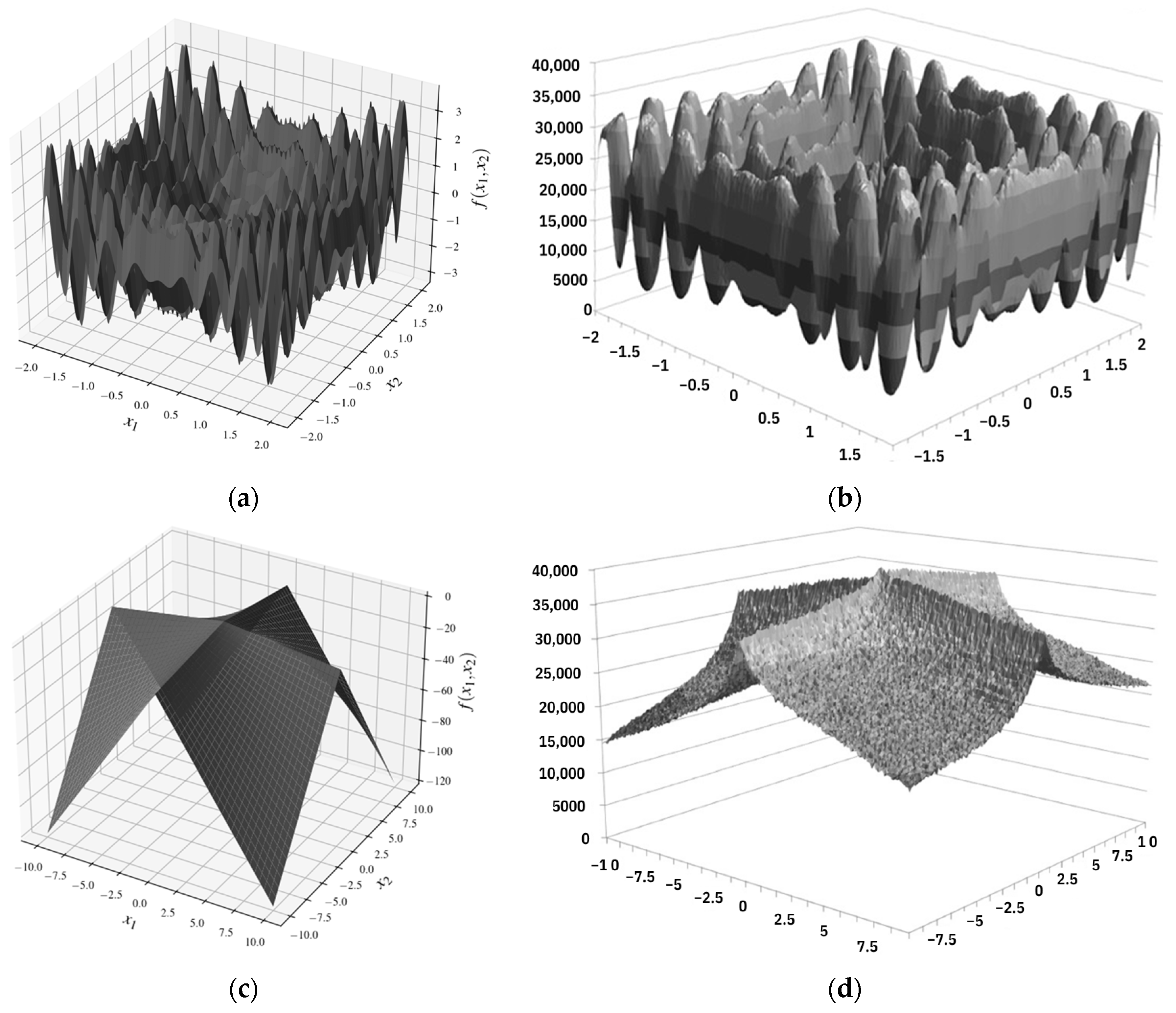

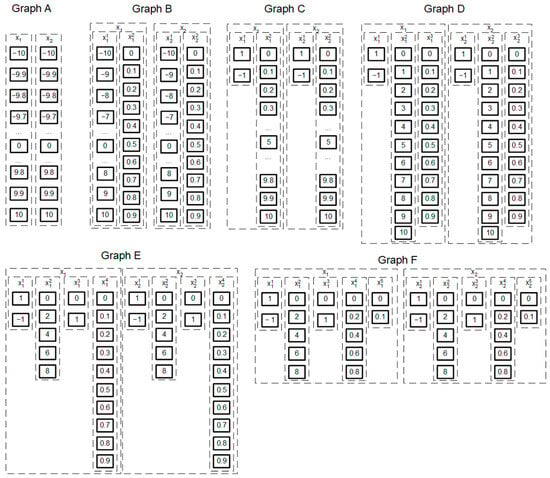

Figure 4 shows the graphs of the “multi-function” function (a) and the “Schwefel” function (c), and Graphs (b) and (d) show the estimate (based on the results of 3000 runs in the direction of minimizing the values of the objective function) of the mathematical expectation of the ordinal number of the solution, in which the given values of the parameters and were considered. The estimate of the mathematical expectation of the iteration number resembles the outlines of the function graph. As a result, statistically optimal sets of parameter values were determined at early iterations. After finding the first optimal solution in early iterations, the ACOCCyI algorithm did not stop working despite the convergence of the algorithm and still allowed the determination of all optimal solutions.

Figure 4.

Graphs of the “multi-function” function (a) and the “Scheffel” function (c). Graphs (b) and (d) show an estimate of the mathematical expectation of the ordinal number of the solution on which these values of the parameters were considered.

4.1.1. Investigation of a New Probability Formula and the Influence of Additional Terms

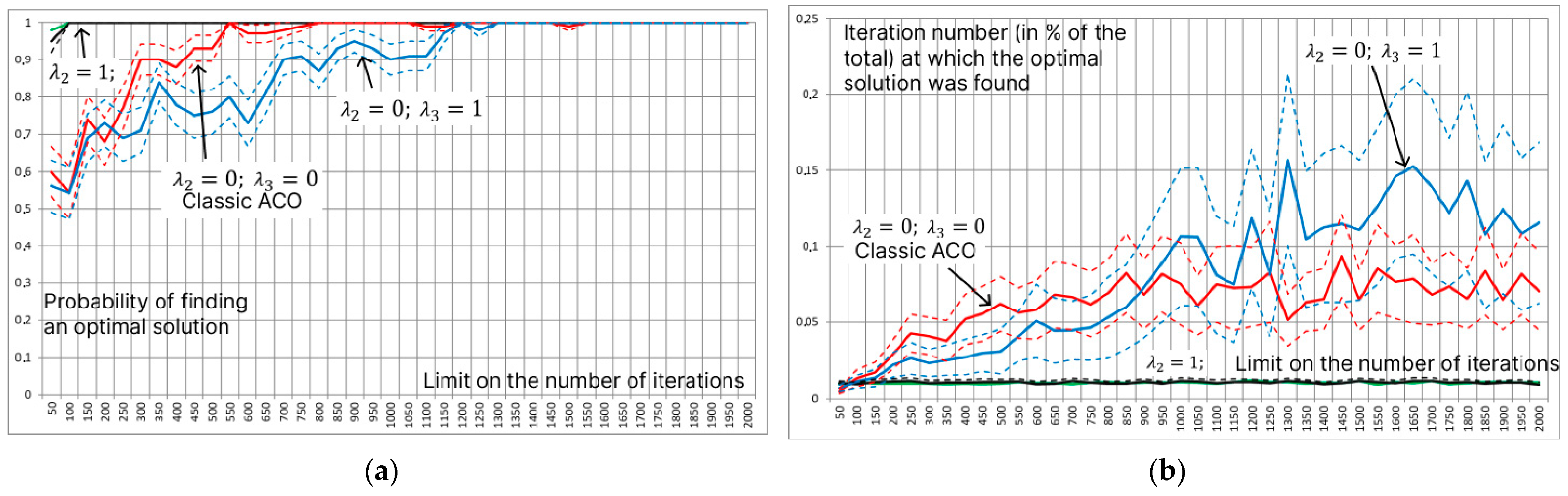

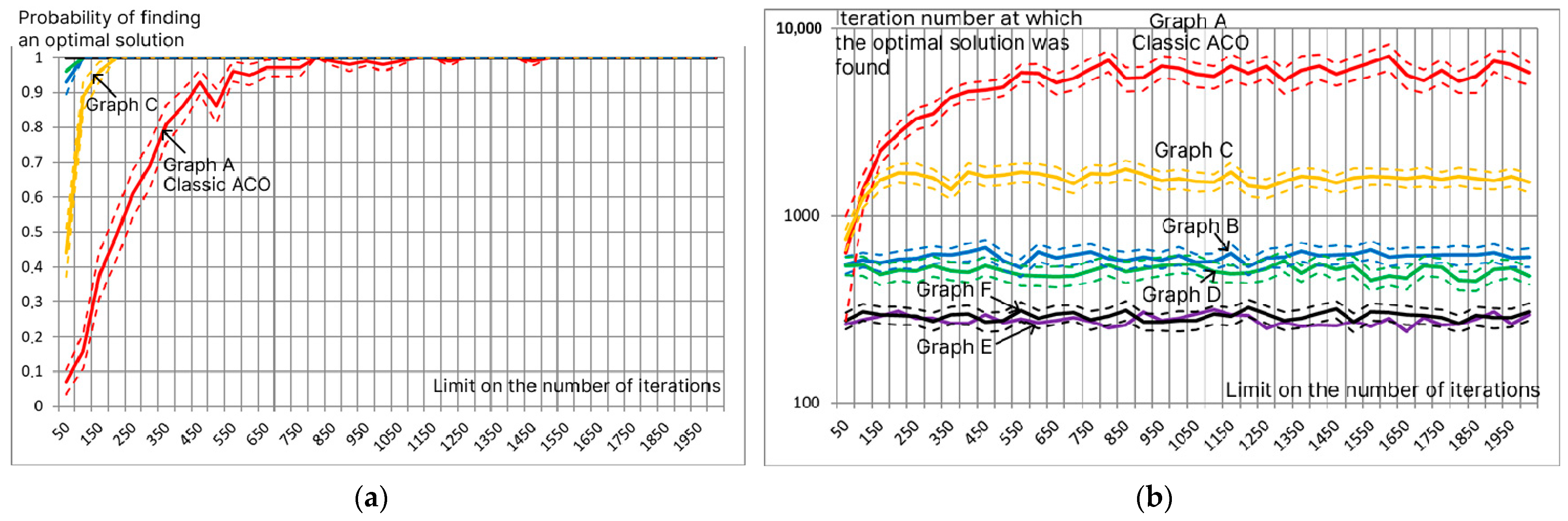

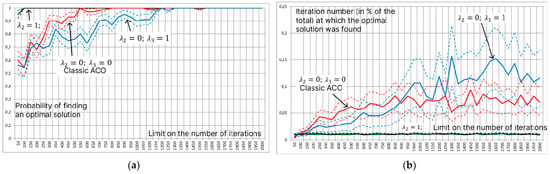

Figure 5 presents an analysis of the efficiency of applying the new probability formula, Formula (3). The influence of using different terms of the additive convolution on the estimate of the probability of finding the optimal solution for a given number of iterations (Figure 5a) and the estimate of the mathematical expectation of the iteration number at which the first optimal solution was found (Figure 5b) are studied. Testing was carried out on the benchmark “Carrom table function”:

with discretization up to (201 values of each of the parameters and ) with 25 agents per iteration (). Since ant agents perform probabilistic, stochastic route selection, estimates of the mathematical expectation were calculated as a result of 200 launches of ACO with a limited number of iterations. The dashed lines indicate confidence intervals of estimates at a significance level of 0.95. The original ant colony method has no additional terms: , , and (shown in the figures by the red lines). The results of the research revealed that the presence of the second term allows for the determination of the optimal solution at the earliest iterations, and its absence leads to stagnation of the algorithm. At the same time, the third term does not have a special effect on the efficiency of ACO in the process of searching for the optimal solution and works only when solving the problem of rearranging parameter values for sending to the computing cluster.

Figure 5.

Estimation of the probability of finding an optimal solution for a given number of iterations (a) and estimation of the mathematical expectation of the iteration number at which the first optimal solution was found (b) under various restrictions on the number of iterations. The dotted lines indicate confidence intervals of estimates at a significance level of 0.95; the color of the dotted line corresponds to the color of the estimate.

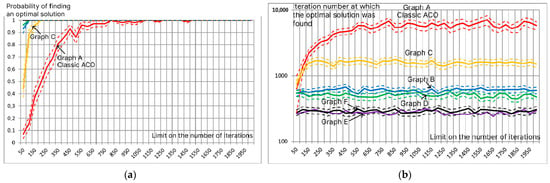

4.1.2. Analysis of Parametric Graph Decomposition

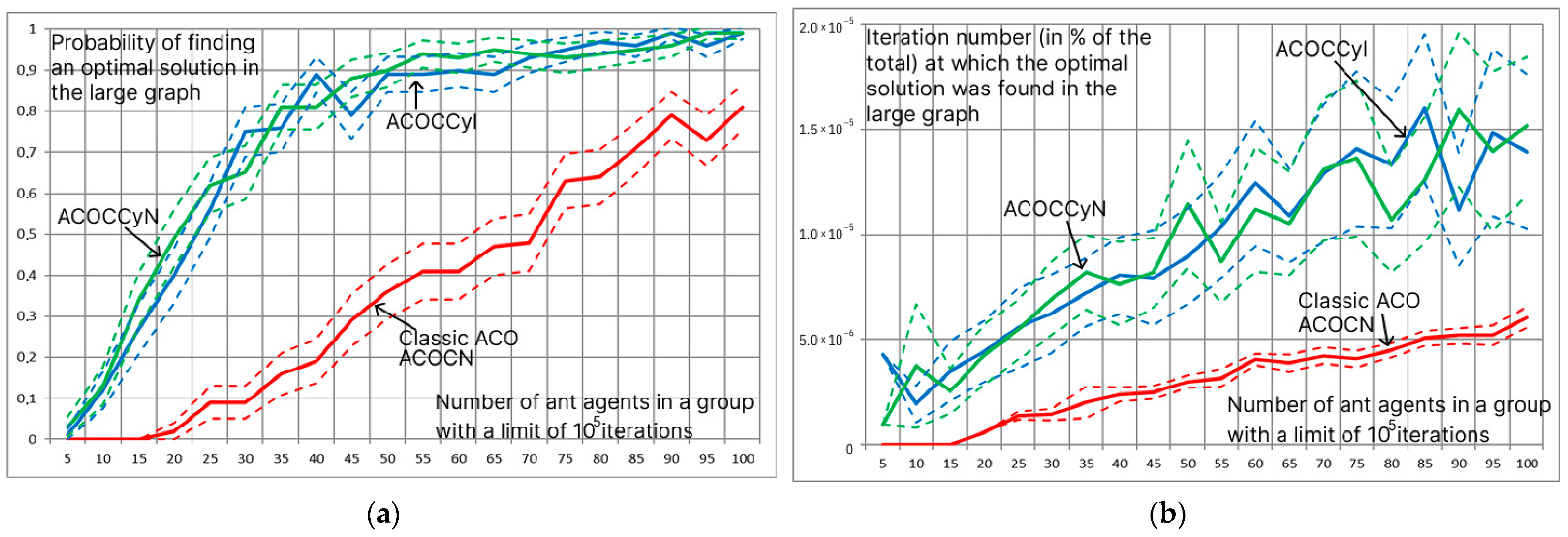

When studying the possibility of decomposing all parameter values into separate layers with subsequent linear convolution, it was found that ACO works well with low-dimensional graphs. Figure 6 shows graphs of the estimated probability of finding an optimal solution for a given number of iterations (Figure 6a) and the estimated mathematical expectation of the iteration number at which the first optimal solution was found (Figure 6b) for the structures shown in Figure 3 (). The red graph represents a graph similar to the graphs applicable in ACO-LSTM, in which each parameter forms a layer. The graphs show that the maximum decomposition to Graphs E and F not only allows us to find the optimal solution earlier but also determines it with a higher probability even at a small number of iterations. Graphs B and D also demonstrated high efficiency, in which the decomposition of a real value is carried out into tens, integers, tenths, hundredths, etc. The automatic creation of such graphs is significantly simpler than the creation of Graphs E and F.

Figure 6.

Estimation of the probability of finding the optimal solution for a given number of iterations (a) and estimation of the mathematical expectation of the iteration number at which the first optimal solution was found (b) for the structures shown in Figure 3.

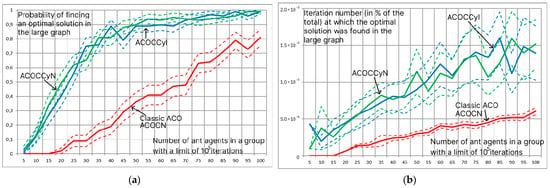

4.1.3. Analysis of Modifications of the Ant Colony Method Using a Hash Table

An analysis of the efficiency of modifications of the ant colony method associated with working with a hash table was carried out for problems of small and large dimensions [45]. The problem of small dimension is represented by the benchmark “Carrom table function” with discretization up to 10−2; for large dimension, a practical problem consisting of 13 parameters was considered, totaling more than 109 solutions. The proposed modifications ACOCN, ACOCNI, ACOCCyN, and ACOCCyI were compared with classic discrete parametric optimization close to solving the QAP, and they were used without using a hash table, i.e., if the ant agent goes along an already-explored path, the pheromone will be added. The objective of this analysis is to determine the optimal modifications of ACO for the purpose of further comparison of the best modification with other metaheuristic algorithms.

In the problem of small dimensions, the optimal values of the system parameters are already determined at early iterations, and the use of the modifications ACOCN, ACOCNI, ACOCCyN, and ACOCCyI is distinguishable only when continuing the search in order to ensure the enumeration of all parameter values or the search for all optimal solutions. In the case of the problem of large (more than solutions in total) dimensions, the ACOCN and ACOCNI algorithms are close to stagnation and begin to converge to good, rational solutions. The ACOCCyN and ACOCCyI algorithms allow one to completely avoid stagnation processes due to a repeated cyclic search for solutions and demonstrate high efficiency (Figure 7). . The dotted lines in the figure indicate confidence intervals for a significance level of 0.95. Already with 50 ant agents per iteration, the ACOCCyN and ACOCCyI algorithms, with a probability of more than 90%, determine the optimal solution in iterations. A further increase in the number of ant agents per iteration leads to an increase in the iteration number at which the optimal solution will be found.

Figure 7.

Estimate of the probability of finding the optimal solution for 105 iterations (a) and estimate of the mathematical expectation of the iteration number at which the first optimal solution was found (b) depending on the number of ant agents at the iteration. Estimates for modifications are ACOCN, ACOCCyN, and ACOCCyI.

The main disadvantage of the ACOCCyN and ACOCCyI modifications is the need to perform additional iterations. This procedure requires additional time, which increases the average time of the path search by one agent ant (Table 1). However, additional iterations do not have a significant effect, increasing the path search time by no more than 20% (the ACOCCyN modifications find the optimal solution on average two times faster).

Table 1.

Estimation of expected value of time of solution search by one agent (in seconds).

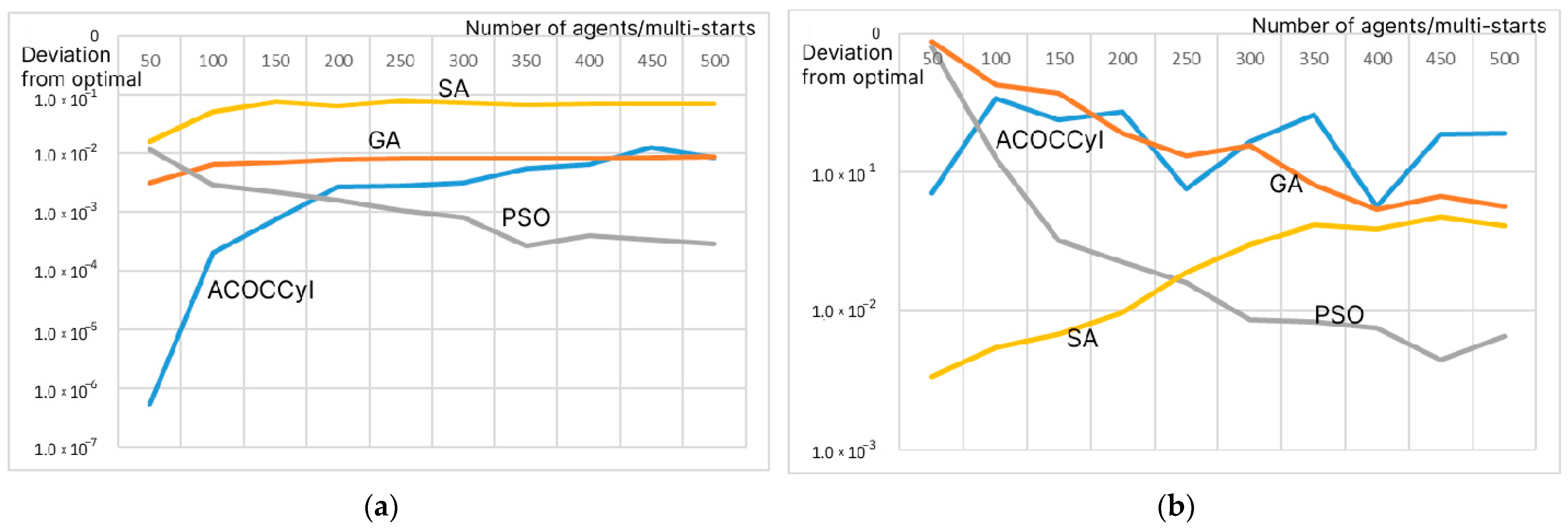

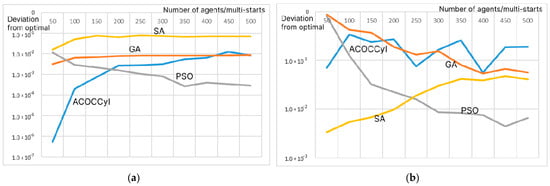

4.1.4. Comparison of Proposed Modifications of ACO with Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Simulated Annealing (SA)

To evaluate the efficiency of the proposed modifications of the ant colony method, we compare them with the genetic algorithm, particle swarm method, and simulated annealing. This analysis was conducted on five two-criterion benchmarks , with a limit of of the considered solutions. The estimate of the mathematical expectation and confidence intervals of the found optimal values of the function was determined based on the results of 500 runs.

Since the ACO, GA, and PSO algorithms specify the population size as , the number of algorithm iterations is determined as . For the SA algorithm, a multi-start approach was used, where the number of starts was set to be equal to the population size, K. The parameters of the studied algorithms (implementation of GA, PSO, and SA—https://github.com/kalengul/ACO_Cluster/tree/master/GA%2C%20PSO%2CSA, accessed on 9 April 2025) are as follows:

- ACOCCyI—. With the use of elitism, the number of elite ant agents is two times greater than the number of agents per iteration. Since the modifications under consideration require the discretization of parameters, the values of and were determined with an accuracy of , presented as Graph F with a total number of possible solutions of 1.96 × ;

- GA—An algorithm with Linear Crossover is used, in which Random Alpha is additionally determined; Rank Selection is carried out for a group of individuals, and Random Selection is carried out for each individual from the selected group, mutation with adaptive change, and the use of elitism. .

- PSO—.

- SA—.

For this analysis, the number of individuals per iteration varied from 50 to 500. Figure 8 shows the results for the benchmarks: the root function (a) and the “Bird” function (b). Table A2 in Appendix A describes all the values of the estimates of the mathematical expectation of the deviation of the found value from the optimal one, indicating the confidence intervals for a significance level of 0.95.

Figure 8.

Estimation of the mathematical expectation of the deviation of the optimal value found by the algorithm from the optimal value of the root function (a) and the “Bird” function (b) depending on the number of agents/multi-start for 105 iterations.

When analyzing the results in Figure 8 and Table A2 in Appendix A, we can conclude that the proposed ACO modifications work well for a small number of agents per iteration. For 50 agents per iteration, ACO did not show the best results only on the complex “Bird” benchmark , for which the SA and PSO algorithms turned out to be the best. At the same time, the ACO modifications did not use additional heuristic information. Moreover, among all the algorithms and all the values of the number of agents at the iteration, ACO determined the best value of the root function . For the remaining functions, the PSO method showed the best values. The results obtained both for the GA, PSO, and SA algorithms, and for the proposed modification of ACO with additive convolution and the use of a hash table, correspond to the results demonstrated in the following works: [34,36,39,40,41].

4.2. Analysis of the Parallel Method of Ant Colonies

Based on the research results, the probabilistic Formula (3) is implemented for the efficient operation of the parallel ACO without taking into account the third term for large-scale problems while taking into account the third term for small-scale problems. The absence of the third term will reduce the number of operations for calculating the formula and will eliminate the need to calculate and store matrix . Algorithms using a hash table are studied: ACOCNI and ACOCCyN with a limit of 100 additional iterations. The parametric problem is necessarily decomposed into layers up to Graph F, with a maximum number of vertices in one layer equal to five. This decomposition is required to increase the number of layers, each of which can be processed in a separate thread on an SIMD computer and to reduce the number of additional vertices required to represent Graph F as a matrix .

To study the efficiency of the parallel ACO, software was developed in C/C++ for operation on CUDA graphics cores for GPUs manufactured by NVIDIA https://github.com/kalengul/ACO_SIMD, accessed on 9 April 2025 (the entry point is the kernel.cu file, the parameters of the algorithm and the research procedure are specified in the parametrs.h file, and the result is output to the log file). Also, a matrix formalization of the ACO modification was implemented to perform calculations on the CPU. Modern processors have sets of extensions for executing matrix instructions for parallel data processing, for example, SSE (Streaming SIMD Extensions), AVX (Advanced Vector Extensions), and others. The comparison was carried out with the classic implementation of ACO (implemented as separate functions in the same software), built in C++20 using objects and optimized for efficient solutions of the same problems as matrix modifications.

It is worth noting the features of the random number generator assignment when performing calculations on CUDA. CUDA technology involves the use of independent blocks and threads with minimization of communication over shared memory. Unlike matrix processors’ CPU, for which one random number generator with a random seed value at each iteration or run is sufficient, CUDA requires setting a unique initial seed value for each thread. In the case of a match in the sequence of pseudo-random numbers, the ant agents will make the same probabilistic choice and receive the same paths. As a result, the number of solution matches in the hash table increases sharply and the operating time of the ACOCCyN modification increases sharply. To solve this problem, information about the block number (blockIdx.x), thread number (threadIdx.x), and time (clock64()) was used when calculating the seed.

The studies of acceleration of the running time of matrix modifications of ACO were carried out on the benchmark “Schaffer function”:

with an accuracy of for different numbers of parameters and solving the minimization problem. . When decomposing one parameter, 21 layers are implemented. In this case, problems with 2 (classic benchmark), 4, 8, 16, 32, and 64 parameters were considered. In practice, two-criterion problems of small dimensions are rare, and simpler algorithms are used to solve them. As a result of the development of computing systems, matrix computers, and parallel instruction processing technologies, large-scale problems are of increasing interest; for example, for a problem with 64 parameters, the number of layers reaches 1344. The optimization of ultra-large-scale problems is relevant and feasible but requires further research [48]. The proposed matrix modification depends on the computing power: the number of cores, the maximum possible number of SIMD threads, or the number of blocks and threads in one block when calculating using CUDA technology.

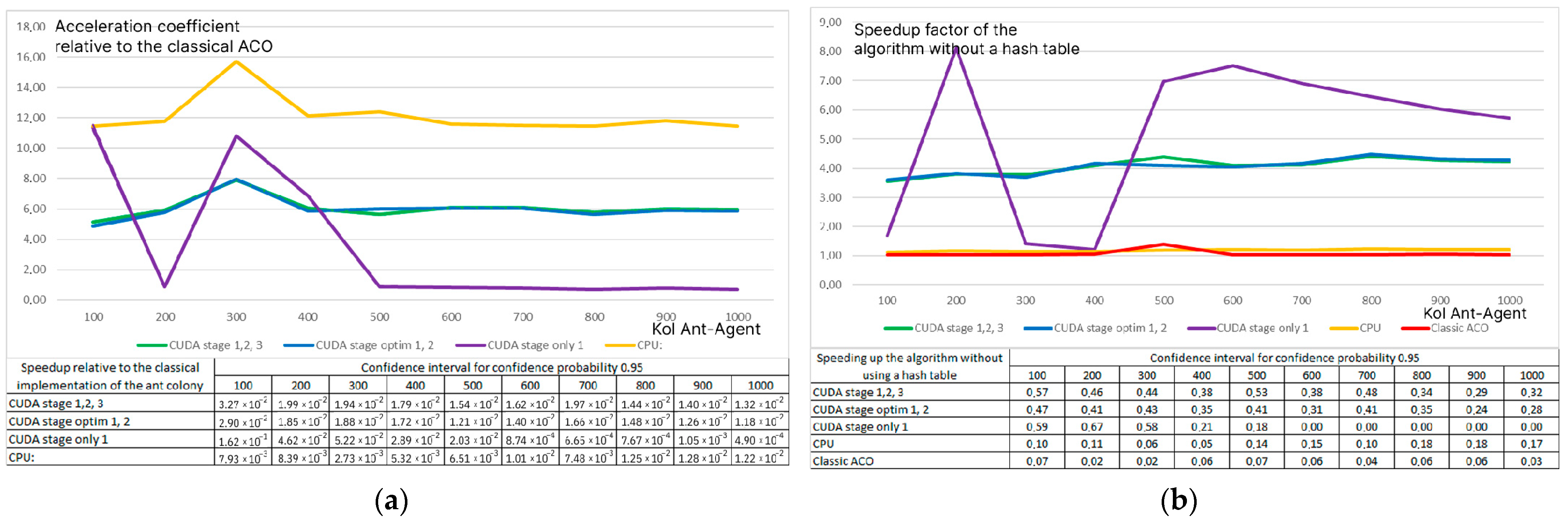

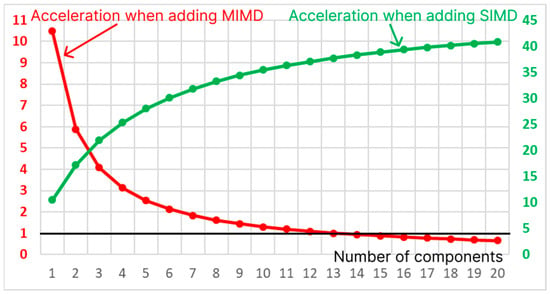

Time interval measurements were performed on an NVIDIA GeForce GTX 3060 Ti Notebook video card and an Intel Core i5-12450H processor which has 16 Gb of Random Access Memory. The mathematical expectation of the execution time of the algorithm and its individual stages was estimated when comparing the classic ACO, the matrix implementation on the CPU, and the implementation on the GPU with CUDA technology in three variants: stages 1 (obtaining matrix ), 2 (searching for solutions of ant agents using a hash table), and 3 (changing the states of matrices and ) separately; stages 1 and 3 combined; and all stages executed in one algorithm. The results of calculating the time for the ACOCCyN algorithm and confidence intervals for a confidence probability of 0.95 obtained for 100 runs are presented in Table 2. Table A1 in Appendix A contains detailed measurements of various modifications of the algorithms on different problem dimensions, broken down into individual stages. According to the research results, the matrix representation of ACO provides an acceleration of 5–6 times when using CUDA technology and 12 times when executing the algorithm on the CPU. It is worth noting separately that doubling the dimension doubles the execution time of the matrix implementation on the CPU and the classic algorithm.

Table 2.

Estimation of the mathematical expectation of the execution time (in ms) of 500 iterations by 500 agents when searching for the optimum of the Schaffer function on graphs of different dimensions using different algorithms.

The standard, classic ACO method does not interact with the hash table in any way, stagnating at good solutions but not violating the sequence of SIMD calculations. The analysis of the running times of ACO modifications using a hash table and without it showed that for classic ACO and matrix ACO, the hash table does not cause significant delays since the ant agents in these algorithms determine a unique set of values at each iteration and since interaction with the hash table is reduced to checking the existence of a solution and adding a new value (Table 3). The implementation of matrix ACO based on CUDA requires the synchronization of threads when interacting with the hash table, which increases the time for this modification by more than four times.

Table 3.

Speeding up the algorithm without using a hash table of 500 iterations by 500 agents when searching for the optimum of the Schaffer function on graphs of different dimensions using different algorithms.

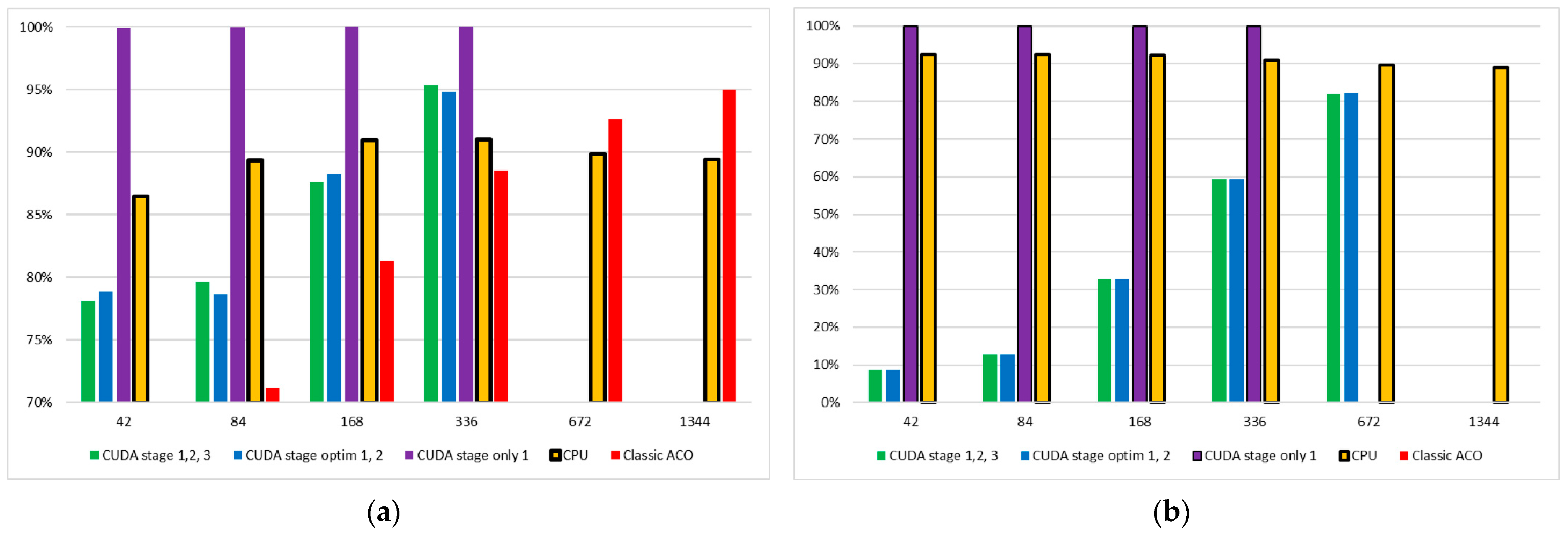

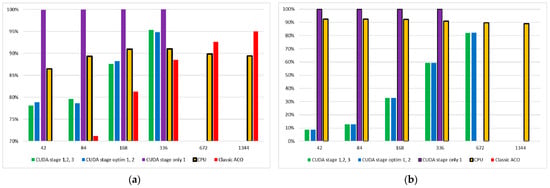

Figure 9 shows the ratio of the second stage (calculation of matrices and ; calculation of vectors , and ; and operation of modifications ACOCN, ACOCNI, ACOCCyN, and ACOCCyI) of the algorithm operation to the total operating time of the algorithms with (a) and without (b) a hash table. For algorithms without a hash table, the second stage only determines the path of the ant agent and calculates the objective function, the parallel calculation of which is performed quite quickly. As the problem dimension increases, the share of time in calculating the agent paths also increases. The presence of a hash table, even taking into account only the check for the absence of a record and the addition of a new one, significantly increases the operating time of the second stage. As a result of the conducted research, it can be concluded that for algorithms operating without a data warehouse, it is necessary to optimize the work with the T and layers, and when using a hash table, it is necessary to optimize the stage of interaction with the hash table.

Figure 9.

Histograms of the ratio of the execution time of the second stage of the matrix algorithm to the total execution time of the algorithm for modifications with the use of a hash table (a) and without it (b).

The analysis of the effect of the matrix modification of ACO on the efficiency of the method with varying numbers of ant agents per iteration showed the relative stability of the efficiency of the methods (Figure 10). Each ant agent finds a path only at the second stage and is not involved at stages 1 and 3, and the second stage in the proposed algorithms involves searching for a path by each agent in a separate SIMD/MIMD thread. Up to 512 agents, the division into threads occurs effectively, and it is possible to increase the efficiency of the modification (in terms of execution time).

Figure 10.

Speed-up factor of matrix modifications of ACO when changing the number of ant agents per iteration for a problem with 84 layers for the ACOCCyI modification (a) and without a hash table (b).

Additionally, studies were conducted on the Intel Core i5-8300H CPU, which has 8 Gb of Random Access Memory, and NVIDIA GeForce GTX 1050 Ti GPU for a 336-layer structure. . Based on the results of 100 iterations, the greatest acceleration of 12.12 times (±0.76 confidence interval for a confidence probability of 0.95) was achieved by the “CUDA stage optim 1, 2” algorithm. The CPU provided an acceleration of 10.01 times (±0.51).

4.3. Analysis of the Optimal Structure of a Heterogeneous Computer Based on SIMD and MIMD Components

Based on the calculated estimates of the duration of the individual stages of work, it is possible to estimate the acceleration coefficient of the matrix modification for individual stages. As a result of such an assessment, it is possible to calculate the time of the following stages:

- Start of the algorithm and creation of the necessary data structures (Stage Start): Since this stage is performed in a single copy, its execution is possible only on SISD systems, and the acceleration of this stage is carried out using FPGA. When analyzing the efficiency of the system, the execution time of this stage is constant and mandatory; it can be neglected when constructing a heterogeneous computer.

- Iteration overhead (Stage Delt): This overhead is associated with counter incrementing, context switching, calculating the timing of nested stages, etc.

- The first stage (stage 1), associated with matrix transformations to obtain matrix , can be performed on SIMD computers.

- The second stage (stage 2), associated with the search for paths by ant agents, can be performed on SIMD computers in the absence of interaction with the hash table. When interacting with the hash table, the result of operations, the duration of individual transformations, and the subsequent behavior of the algorithm are undefined and depend on the results of the system’s operation. As a result, this stage is best implemented using an MIMD component or an SIMD accelerator and an MIMD component together.

- The third stage (stage 3), associated with updating matrices and , consists only of matrix transformations and can be performed on an SIMD accelerator.

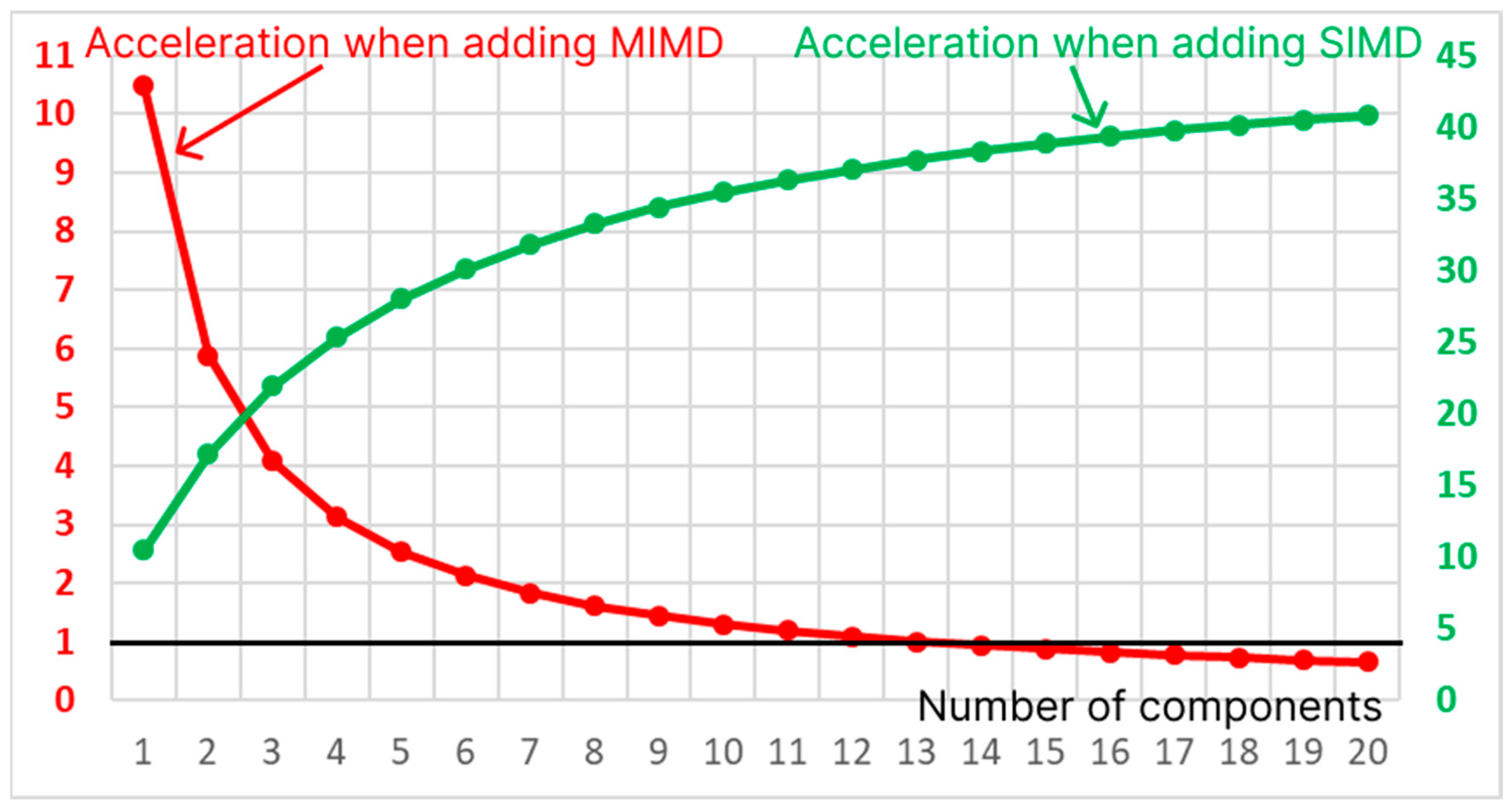

The design example of the optimal structure of the heterogeneous calculator will be based on the time characteristics and acceleration factors of matrix implementation on the CPU in comparison with the classic implementation for 500 iterations and 500 ant agents when solving the problem of calculating the optimum of the Schaffer function with 336 layers in the data structure using the ACOCCyN algorithm. The estimated mathematical expectation of the execution time of the classic modification is s, the time without taking into account the SISD component is , and the proportion of the parallelized fragment is , which indicates the inefficiency of deep optimization of the SISD component. High delay is associated with the need to initialize the hash table, which can be accelerated by a multithreaded computer, so for the classic algorithm without using a hash table, . Using a hybrid computer consisting of SIMD and MIMD components allows us to reduce the time of matrix algorithm , which is divided into stages as follows: , where and are the operating time of the SIMD component for stages 1 and 3, respectively, and is the operating time of the SIMD and MIMD components at the second stage. To further divide the operating time of the SIMD and MIMD components in the second stage, the operating time of the method modification that does not use the hash table is used, since this modification can be entirely performed only on an SIMD accelerator. The times for individual stages for different modifications are given in Table A1 of Appendix A. As a result, the total execution time of the SIMD block is , and the time of the MIMD block is , without taking into account the SISD component .

To evaluate the efficiency and determine the acceleration factor, it is necessary to calculate the acceleration obtained by using the SIMD calculator. Since the Intel Core i5-12450H central processor connects the matrix accelerator for all matrix-related calculations, it is difficult to accurately estimate the acceleration factor associated with the intentional use of the SIMD accelerator on the CPU. The estimate is used. By measuring the time taken by the classic algorithm, it is possible to determine the ratio of the execution time of the MIMD fragment to the total execution time of the parallelized block . The share of the SIMD fragment will be .

If there are processors (cores) in the computer, it is possible to run several matrix modifications of ACO in parallel with different initial data as well as run them sequentially with the possibility of parallel work with the hash table in the MIMD fragment. In the first case, the total execution time of or less tasks simultaneously will be equal to . In the second case, , where is the execution time of the matrix modification taking into account the acceleration of the MIMD fragment on threads. where is the acceleration coefficient on cores. . Therefore, , and the acceleration factor on q processors associated with the use of the matrix parallel algorithm relative to the classic parallel algorithm is . In the ideal case, when we obtain (Figure 9). When , the acceleration of the MIMD fragment remains effective.

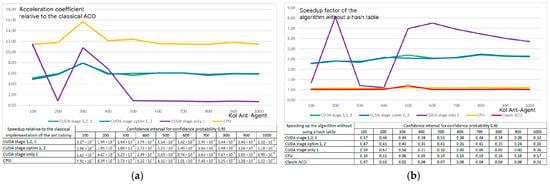

It is also possible to speed up the matrix method by increasing the number of SIMD accelerators and dividing the layers for parameters/ threads into different accelerators. With accelerators, the speed-up factor can be calculated as the ratio of the running time of the matrix modification of the algorithm to the running time of the matrix modification under the condition of acceleration of the execution process on SIMD accelerators. . In Figure 11, the additional right axis shows the change in the speed-up factor depending on the number of SIMD accelerators in the optimal case, in which . for and ; the difference from the obtained value of 12.72 (Table 2) is due to the acceleration of the SISD component, which performs the initialization of the hash table and data loading. The maximum value of the coefficient can be calculated under the condition . We obtain .

Figure 11.

Acceleration due to the increase in the number of SIMD accelerators and MIMD cores.

In general, the acceleration factor for computing using MIMD processors and SIMD accelerators can be calculated using the formula , using optimal MIMD and SIMD components, i.e., where .

4.4. Analysis of the Optimal Structure of a Heterogeneous Computer Taking into Account the Reconfiguration Mechanism

To achieve maximum acceleration, various computing structures containing different numbers of cores and accelerators are required. In practice, the number of cores and accelerators in a hybrid computing device is fixed. As a rule, due to design features, is satisfied. Starting from a certain value, , the increase in the acceleration coefficient for a given becomes insignificant. Therefore, it is advisable to form two structures in the computing device:

- A homogeneous MIMD structure of general-purpose cores without SIMD accelerators;

- A hybrid structure containing MIMD cores and SIMD accelerators, in which cores interact with one accelerator.

In this formulation, the overall acceleration coefficient is the sum of the acceleration coefficients of the homogeneous MIMD structure () and the hybrid structure . , where . To calculate the optimal number of cores in the hybrid structure, we calculate the derivative with respect to or the acceleration factor using the quotient differentiation rule for the hybrid acceleration factor.

Substituting the values for the problem studied in the previous subsection, we obtain , and . For example, in the case of one SIMD accelerator and 16 MIMD cores, it is recommended to assign 5 MIMD cores to a homogeneous MIMD structure and add 11 MIMD cores to the SIMD accelerator in a hybrid structure. In this case, If there are five SIMD accelerators in the system, then only one MIMD core can be freed from the hybrid structure .

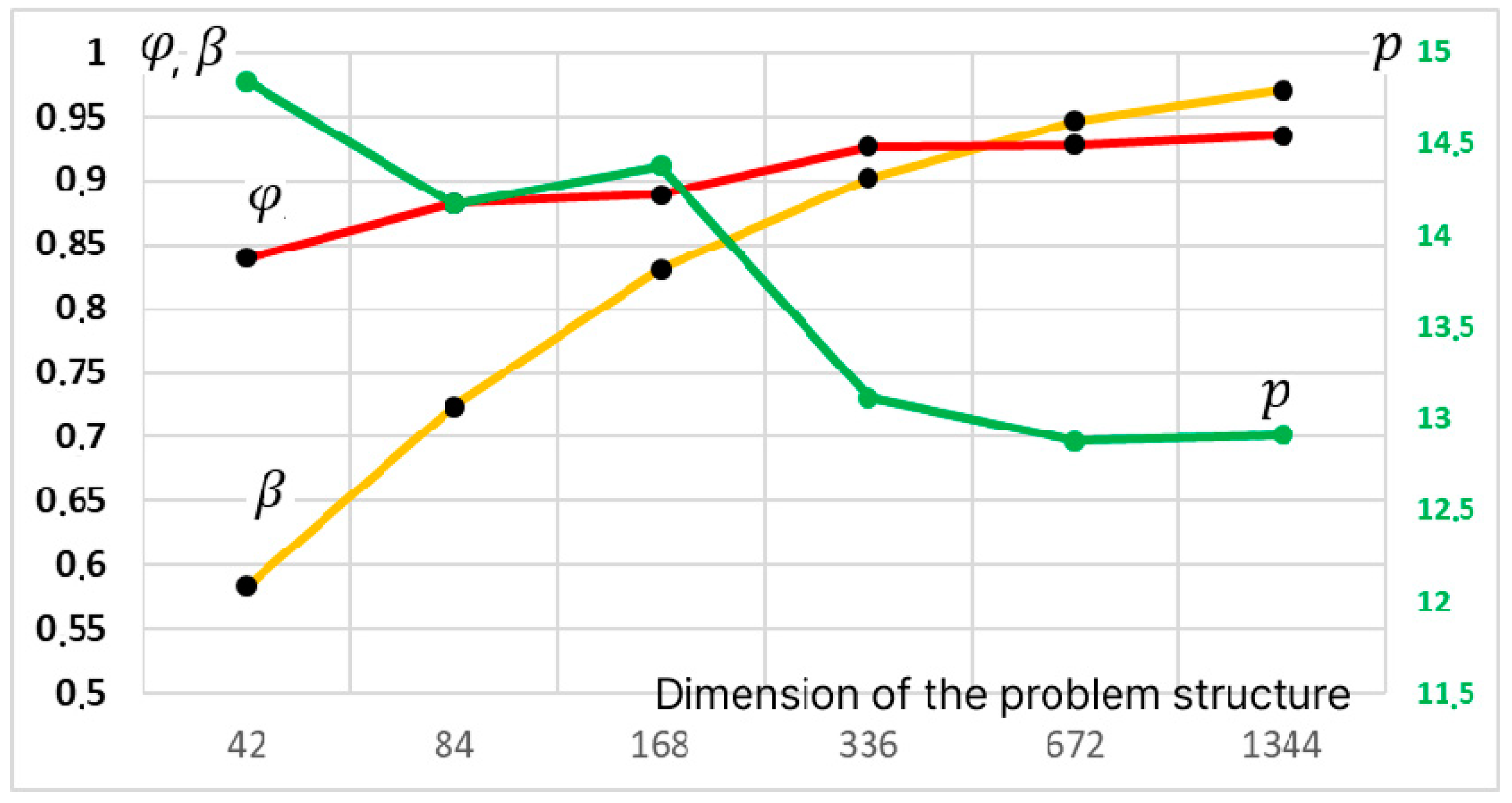

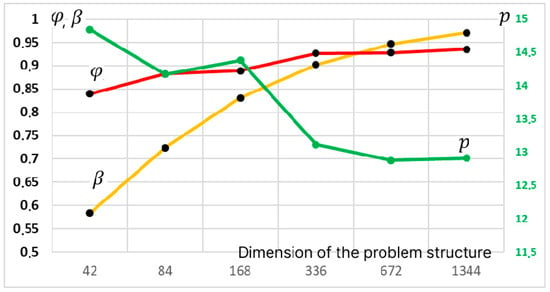

The resulting final formulas depend on the ratios of the volumes of parallelized calculations, ; the ratio of the volumes of SIMD and MIMD components, ; the acceleration coefficient of the SIMD accelerator compared to the MIMD core, ; and the planned parallelization coefficients for MIMD and SIMD components, , respectively. Figure 12 shows the dependencies of the presented characteristics for the CPU matrix modification of ACO for different problem dimensions.

Figure 12.

Changing the coefficients (yellow line, left axis) and (red line, left axis) and (green line, right axis) depending on the problem dimension for the matrix modification of the ACO running on the CPU.

Figure 12 shows that the influence of the MIMD component decreases significantly with increasing problem dimension. The coefficients and tend to unity and, for a dimension of 336 layers, are greater than 0.9, i.e., 90% of all calculations are concentrated in the SIMD accelerator.

4.5. Analysis of the Efficiency of Matrix Modification of the Algorithm on a GPU Using CUDA Technology for the Case of Repeated Searches for Solutions by the ACOCCyN Algorithm

The above example of calculating the efficiency of the implementation of the matrix method of ant colonies on the CPU provides maximum acceleration of the algorithm due to the fact that all ant agents find unique paths and interaction with the hash table. It is limited only to determining the absence of a route and entering a new record. In this form, there will be no differences in the operation of the ACOCN, ACOCNI, ACOCCyN, and ACOCCyI algorithms.

At the same time, on the GPU, due to the difficulties with generating random numbers, the same paths are repeatedly found, which greatly reduces the efficiency of the algorithm due to the need to conduct a repeated search by the ACOCCyN algorithm. But the obtained time characteristics allow us to estimate the acceleration coefficients for the ACOCCyN algorithm, in which the influence of the MIMD component is more significant.

To perform the matrix implementation on the GPU using the ACOCCyN algorithm for a problem with a dimension of 336 layers, an average of 3220.7 additional iterations were required (estimated by 100 runs). The value of is 2.7 times longer than the CPU execution time. At the same time, and . The time of the MIMD component is significantly increased, since various options for searching for a value in the hash table are possible, either by adding a new value to it or re-searching for the path. As a result, the acceleration factor and the coefficients and remained unchanged, since they depend only on the classic implementation of ACO. As a result, the acceleration factor for 1 SIMD accelerator and 16 MIMD cores of the computer (11 cores for the hybrid structure) is , and that for 5 SIMD accelerators (15 cores of the hybrid structure) is . Such an increase in the efficiency of the algorithm compared to the algorithm on the CPU is due to a significant acceleration of the SIMD component of the GPU. For and , . Theoretically, the acceleration of the SIMD component allows for the acceleration of the classic ACO by 22 times.

4.6. Application of Modifications of ACO in Searching for Optimal Values of the SARIMA Model Parameters

The proposed and studied modifications of ACO were applied to solve the practical problem of calculating the values of the parameters of the SARIMA model for forecasting the volumes of passenger and cargo transportation by airlines of the Russian Federation. Before the 2020 restrictions associated with the COVID-19 pandemic, the volumes of passenger and cargo transportation by air had a clear oscillatory component with a fixed small trend (up to 5% per year) and a weak noise component (up to 10%). The stability of such a series made it possible to quite accurately predict the values of the indicators for the next year. In this case, the following indicators of the air transport industry were analyzed: passenger flow; completed passenger turnover; seat occupancy rate; average number of hours per flight; distance per flight; completed tonne-kilometers; and the amount of transported cargo and mail and others on both domestic and international flights, divided into regular and irregular. There were more than 30 indicators in total. After the 2020 lockdown, the volumes of indicators related to passenger transportation dropped to 0 and showed a rapid recovery for domestic flights, having an unstable nature with a difficult-to-predict structure. To make a forecast, LSTM neural networks and SARIMA class models were considered. Due to the small sample (54 values), the use of the LSTM model showed less efficiency than the use of the SARIMA model. At the same time, for the SARIMA model, the parameter values were determined by modifications of ACO when optimizing the MAE and RMSE criteria (both a single-criterion problem for each criterion separately and a multi-criteria problem were solved). ACO has proven its effectiveness in determining better values of indicators than classic methods, such as pmdarima.arima.auto_arima, and the matrix implementation allows us not to lose too much time in searching for optimal values.

For the LSTM model, a comparison of the proposed modifications of the ACO algorithm was carried out; the ACO modification in which the heuristic information about the effective parameters of the LSTM model set the initial values of the weights and the ACO-LSTM modification. The mathematical expectation of the found solution was studied for 100 runs of the model with the following parameters: , and 50 elite ant agents. The confidence interval is specified for a confidence probability of 0.95. As a result, the ACO modification proposed in this article loses to ACO-LSTM by 1.23 (±0.12) times, and ACO with the initial values of the weights loses to ACO-LSTM by 1.08 (±0.03) times. At the same time, the modification proposed in this work allows one to rebuild the optimization of the hyperparameters of the SARIMA model without adding new information; only the data structure changes. The loss of the proposed modification of ACO compared to ACO-LSTM, in which the heuristic value of choosing the parameters SARIMA is determined based on the results of the analysis of corellograms, is 1.18 (±0.04) times.

5. Discussion