A Hubness Information-Based k-Nearest Neighbor Approach for Multi-Label Learning

Abstract

1. Introduction

2. Related Work

2.1. Multi-Label Classification Algorithms

2.2. Methods Addressing the Hubness Problem

3. The Proposed Method: MLHiKNN

3.1. Label Relevance Score for Query Points

3.2. Fuzzy Measure of Label Relevance for Training Points

| Algorithm 1 k-occurrence counting. |

| Input: |

| D: training set; |

| k: number of nearest neighbors to take into account. |

| Output: |

| The k-occurrence and label k-occurrence, with respect to each possible label l, of each instance x in D. |
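The counting step named in Algorithm 1 can be illustrated with a minimal brute-force sketch. It is not the authors' implementation. The function name `k_occurrence_counts`, the use of Euclidean distance, and the reading of label k-occurrence as "how often x appears in the k-nearest-neighbor lists of training instances that carry label l" are assumptions made for illustration only.

```python
import numpy as np

def k_occurrence_counts(X, Y, k):
    """Brute-force k-occurrence counting (illustrative sketch, not the paper's code).

    X: (n, d) feature matrix; Y: (n, q) binary label matrix; k: neighborhood size.
    Returns (occ, label_occ) where
      occ[j]          = number of instances that have x_j among their k nearest neighbors,
      label_occ[j, l] = the same count restricted to instances relevant to label l
                        (assumed definition of label k-occurrence).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=int)
    n = X.shape[0]

    # Pairwise squared Euclidean distances (assumed metric).
    diff = X[:, None, :] - X[None, :, :]
    dist = np.einsum("ijk,ijk->ij", diff, diff)
    np.fill_diagonal(dist, np.inf)  # an instance is never its own neighbor

    # Indices of the k nearest neighbors of every instance, shape (n, k).
    knn = np.argsort(dist, axis=1, kind="stable")[:, :k]

    occ = np.zeros(n, dtype=int)
    label_occ = np.zeros(Y.shape, dtype=int)
    for i in range(n):
        for j in knn[i]:
            occ[j] += 1           # x_j occurs in the neighbor list of x_i
            label_occ[j] += Y[i]  # credit every label that x_i carries
    return occ, label_occ

# Toy data: four 1-D points with two labels, k = 1.
X_toy = [[0.0], [1.0], [3.0], [7.0]]
Y_toy = [[1, 0], [0, 1], [1, 1], [1, 0]]
occ_toy, label_occ_toy = k_occurrence_counts(X_toy, Y_toy, k=1)
print(occ_toy)        # per-instance k-occurrence
print(label_occ_toy)  # per-instance, per-label k-occurrence
```

The k-occurrences always sum to n·k, since every instance contributes exactly k neighbor slots. The O(n²) distance matrix is only practical for small training sets; a real implementation would likely use a neighbor index.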

3.3. Distance and Hubness Weighting

3.4. Learning with Label Relevance Scores

| Algorithm 2 The training process of MLHiKNN. |

| Input: |

| D: training set; |

| k: number of nearest neighbors to take into account; |

| : threshold parameter for computing ; |

| : distance weighting parameter for computing ; |

| s: smoothing parameter for computing . |

| Output: |

| Trained MLHiKNN classifier. |

| Algorithm 3 The prediction process of MLHiKNN. |

| Input: |

| trained MLHiKNN classifier: ; |

| t: query instance. |

| Output: |

| : label set prediction of instance t. |

3.5. Complexity Analysis

4. Experimental Results and Discussions

4.1. Experimental Setup

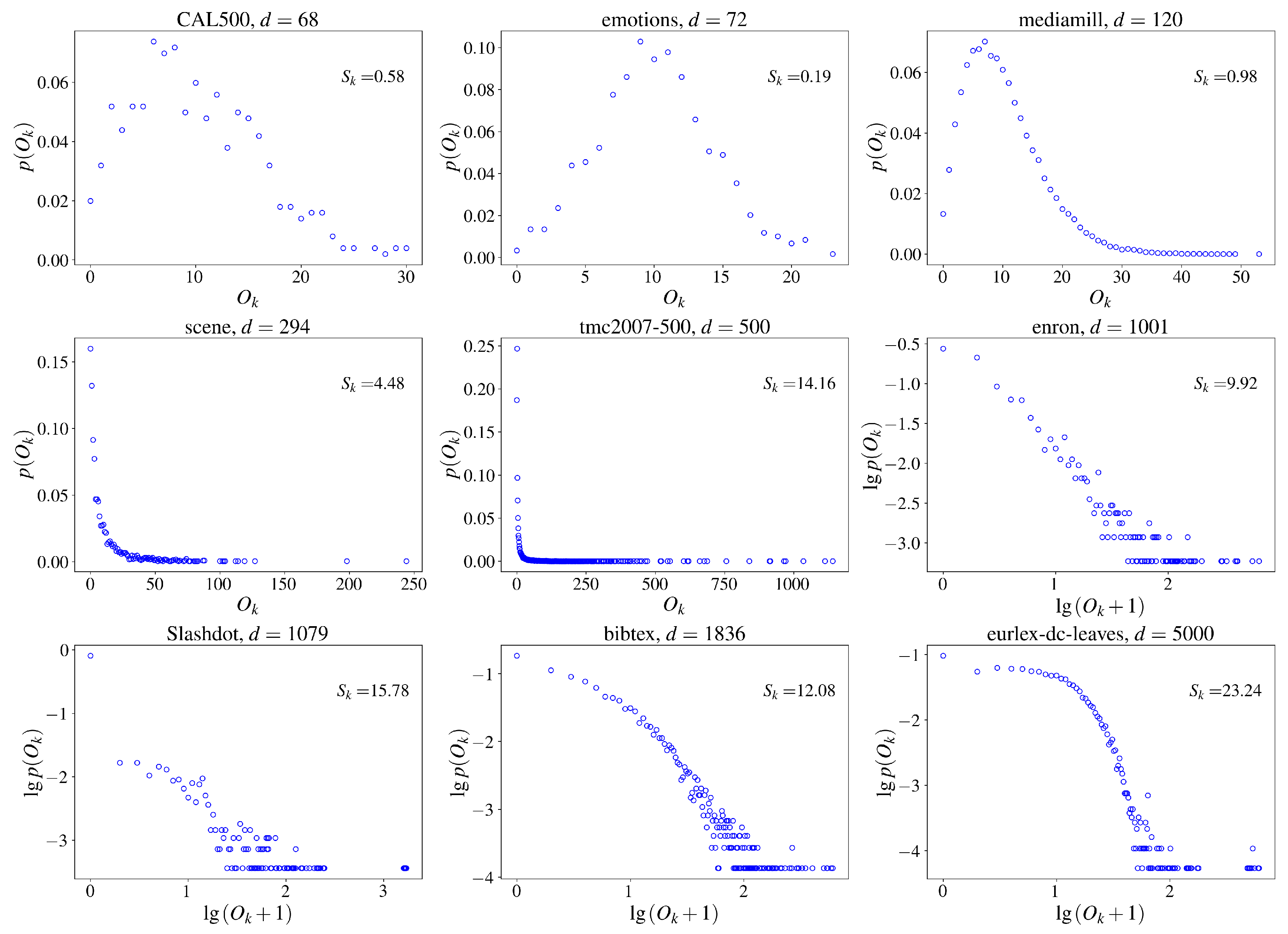

4.1.1. Datasets and Metrics

4.1.2. Compared Approaches

| Algorithm 4 Hubness-reduced BRkNNa. |

| Input: |

| D: training set; |

| : parameters needed for BRkNNa and the hubness reduction technique; |

| : testing set. |

| Output: |

| Label predictions for the testing set. |

| Algorithm 5 Hubness-reduced MLKNN. |

| Input: |

| D: training set; |

| : parameters needed for MLKNN and the hubness reduction technique; |

| : testing set. |

| Output: |

| Label predictions for the testing set. |

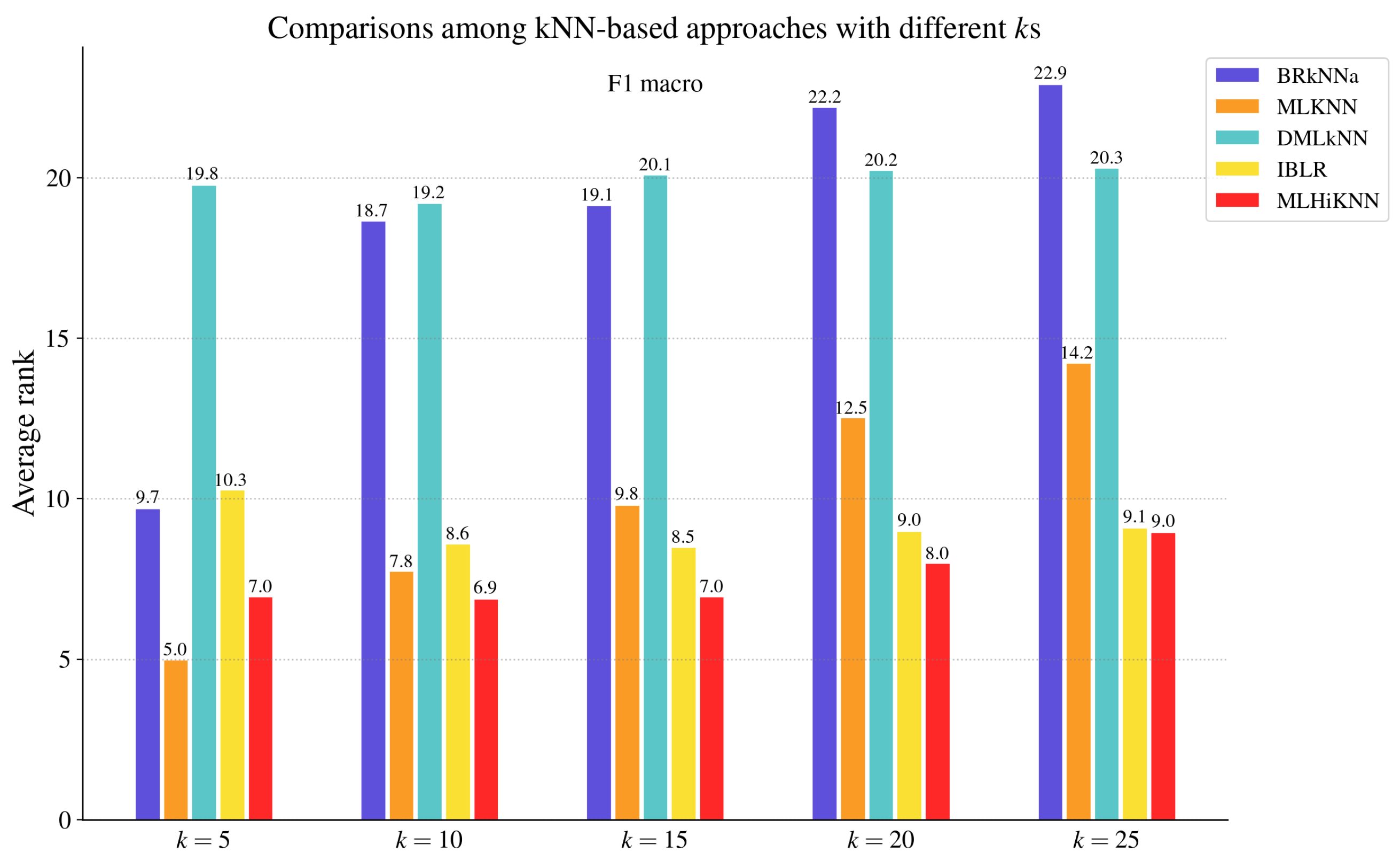

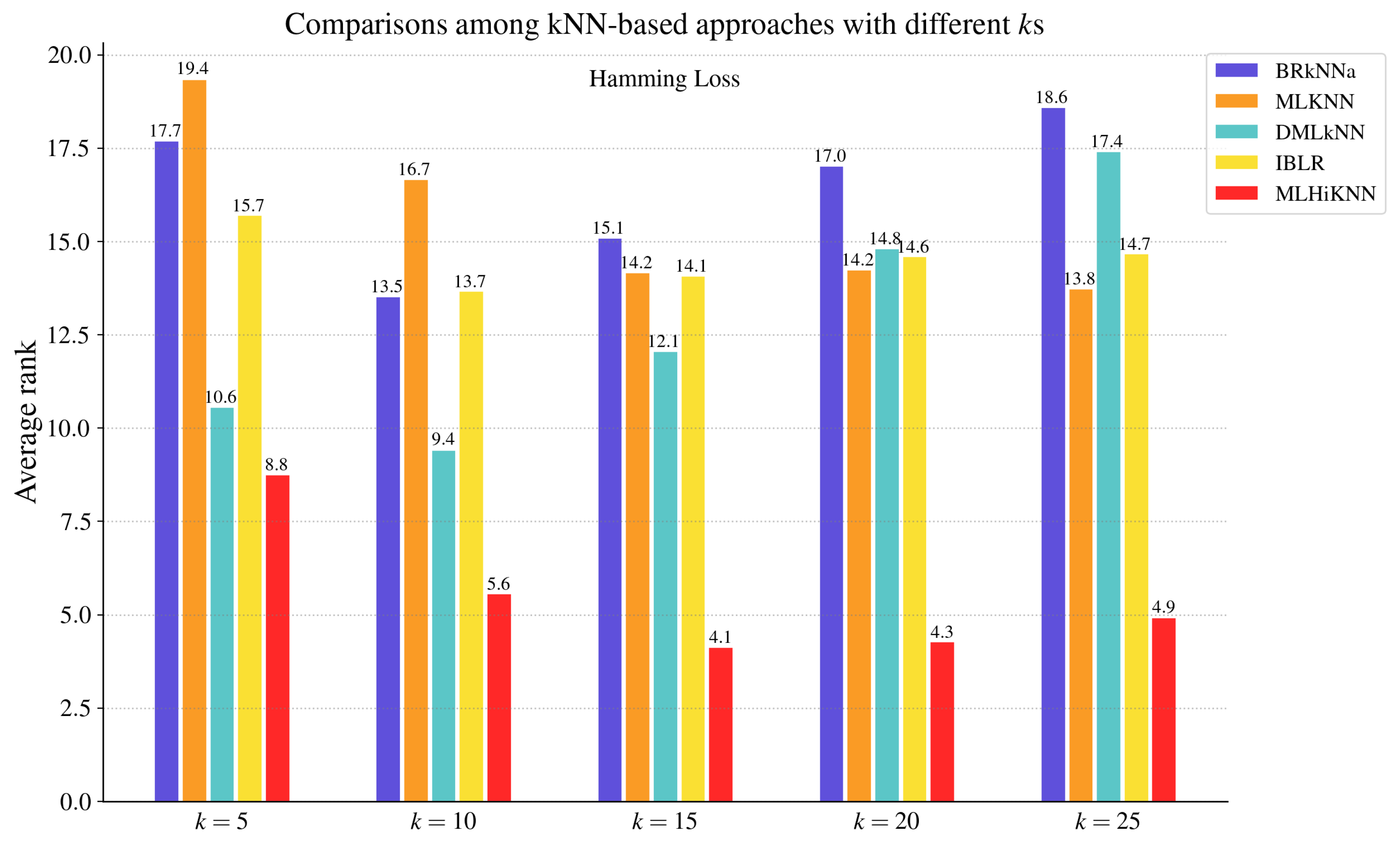

4.2. Comparisons with MLC Algorithms

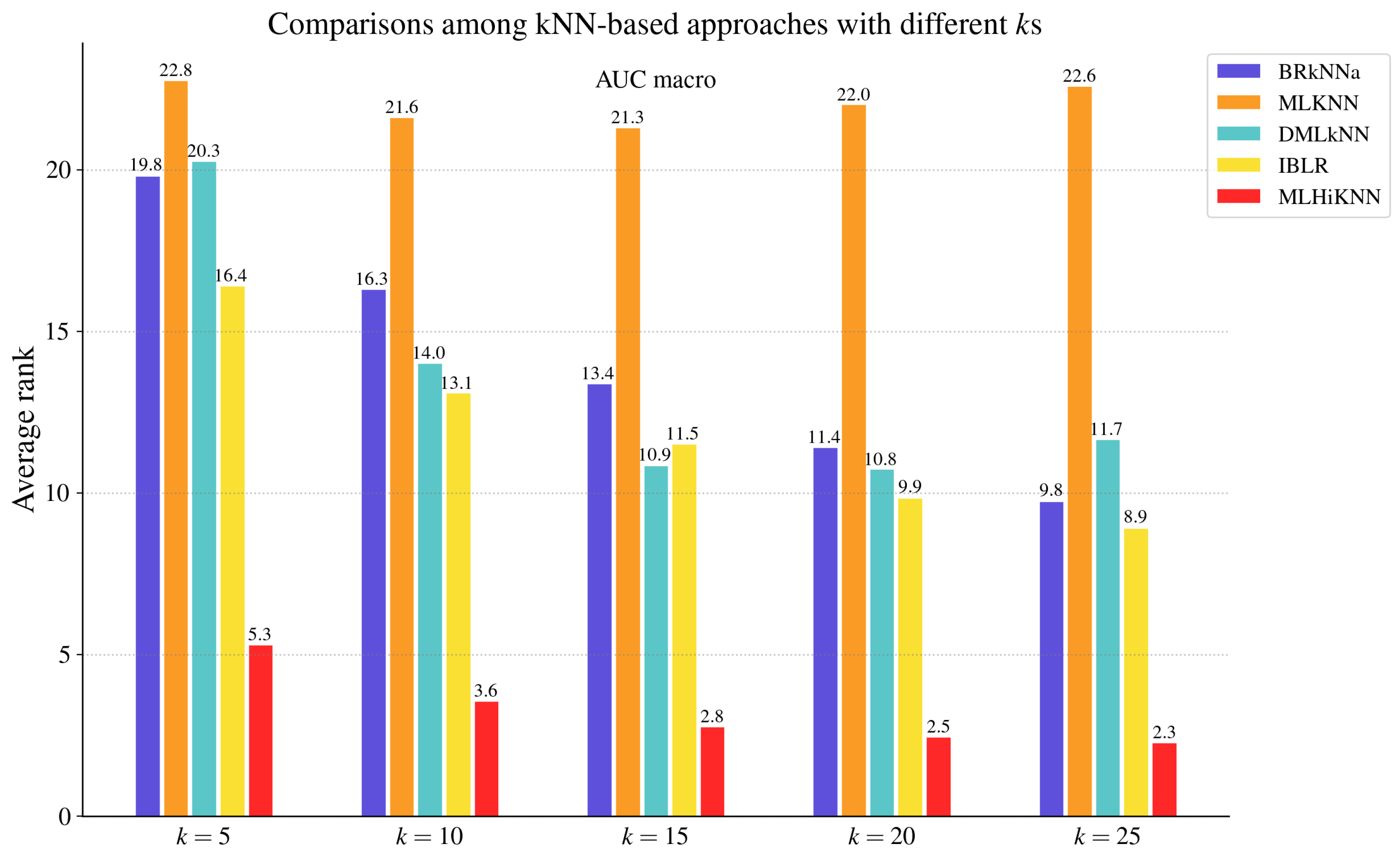

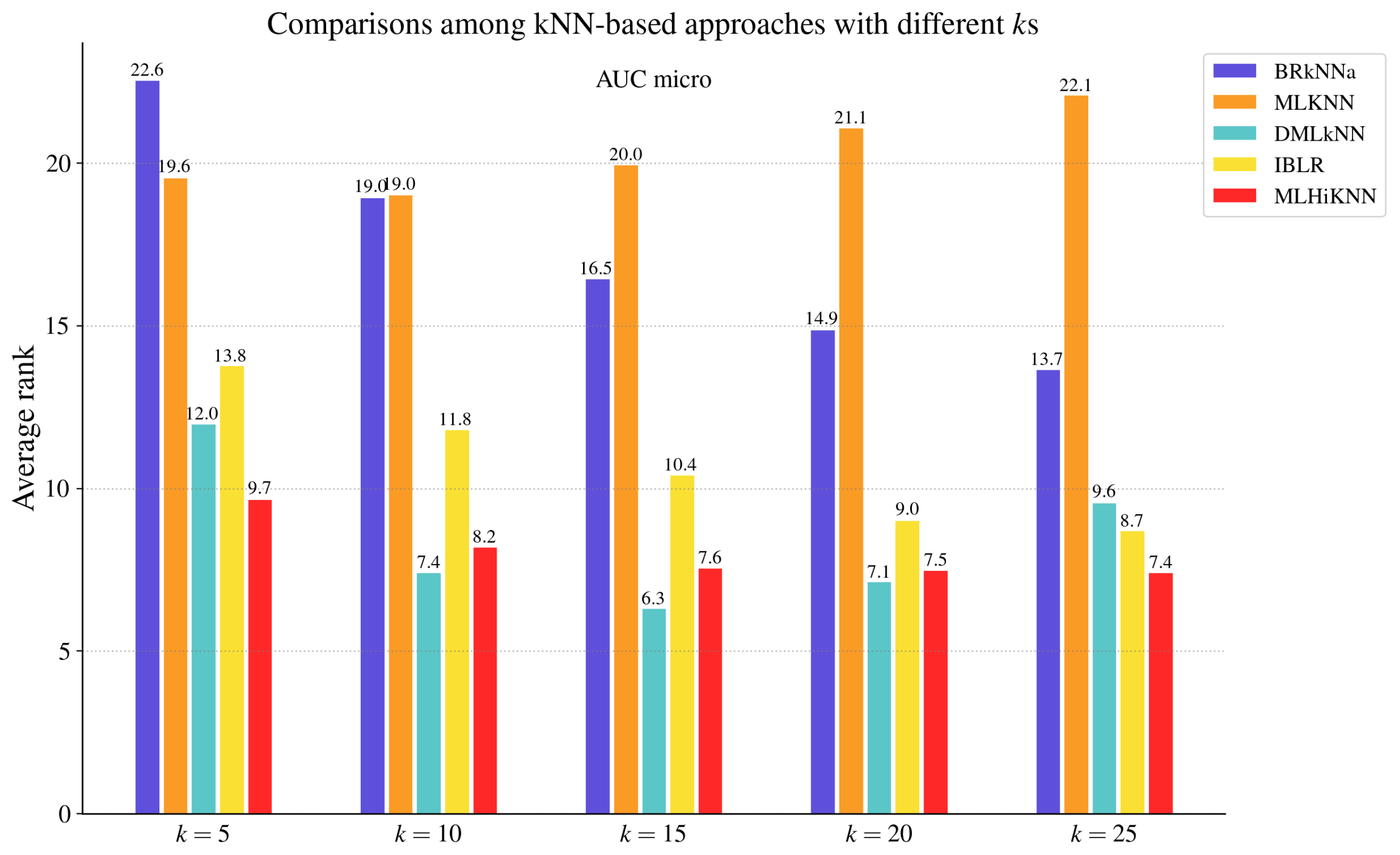

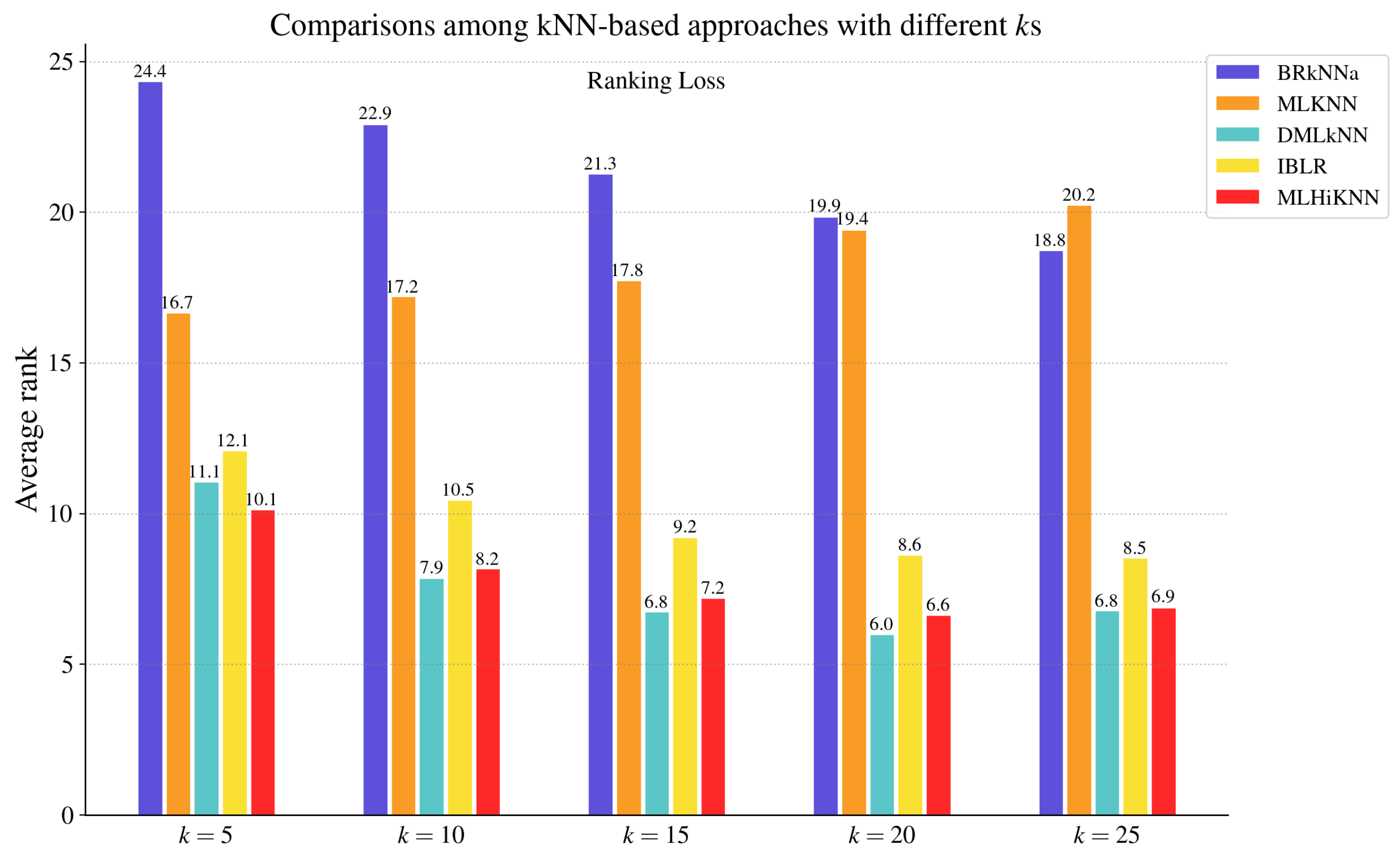

4.3. Comparisons with Hubness Reduction Techniques

4.4. Ablation Analysis for MLHiKNN

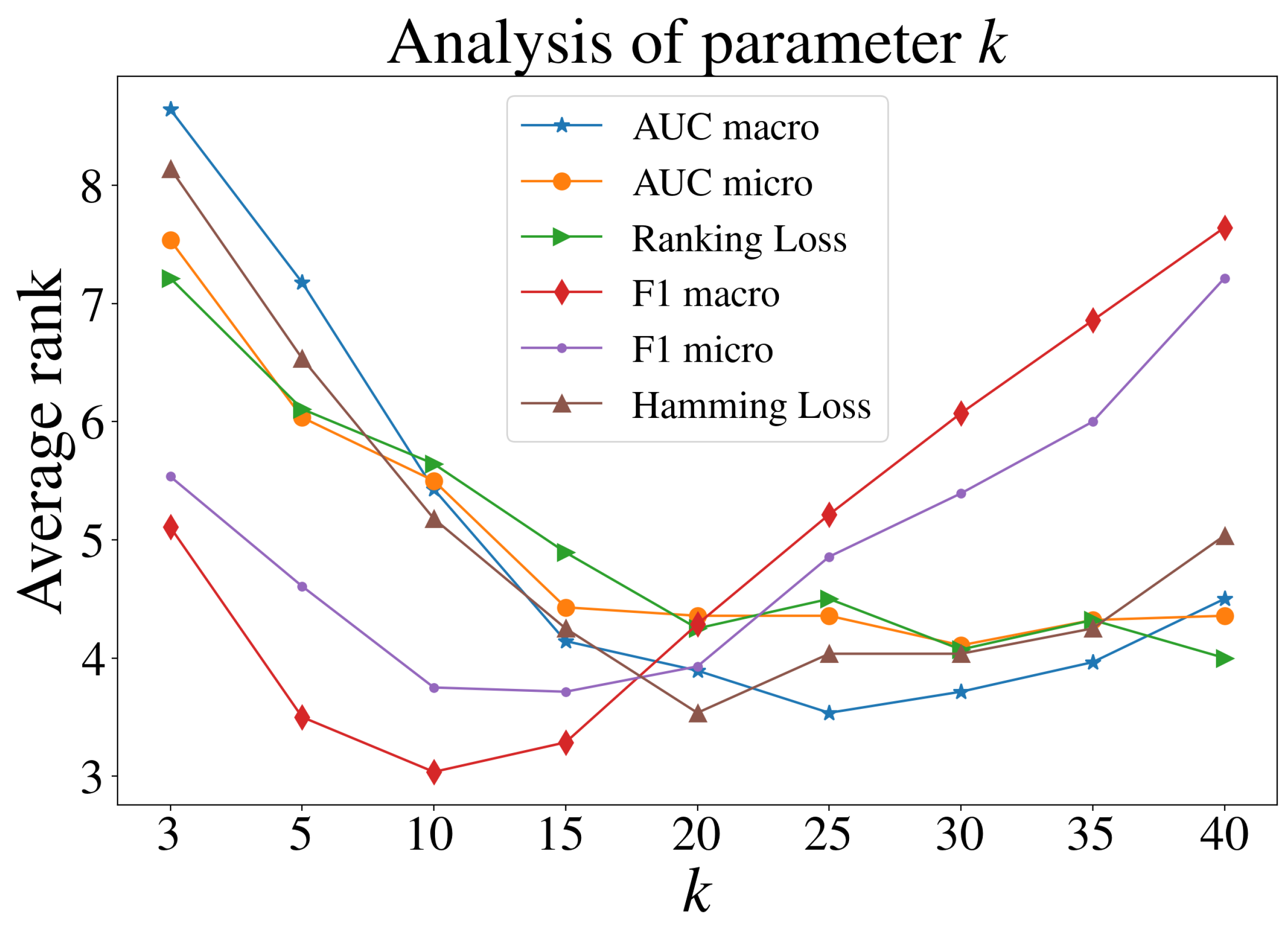

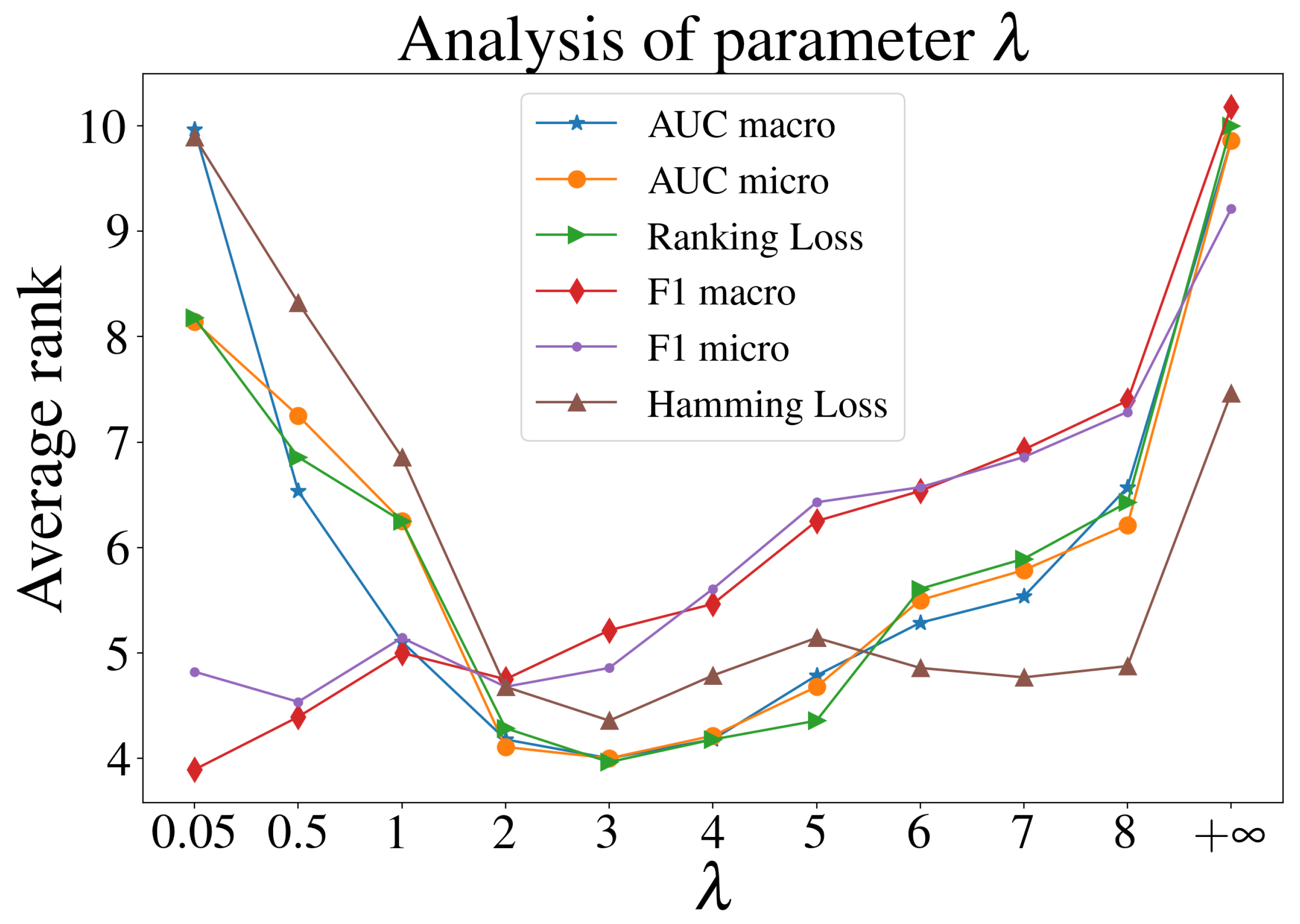

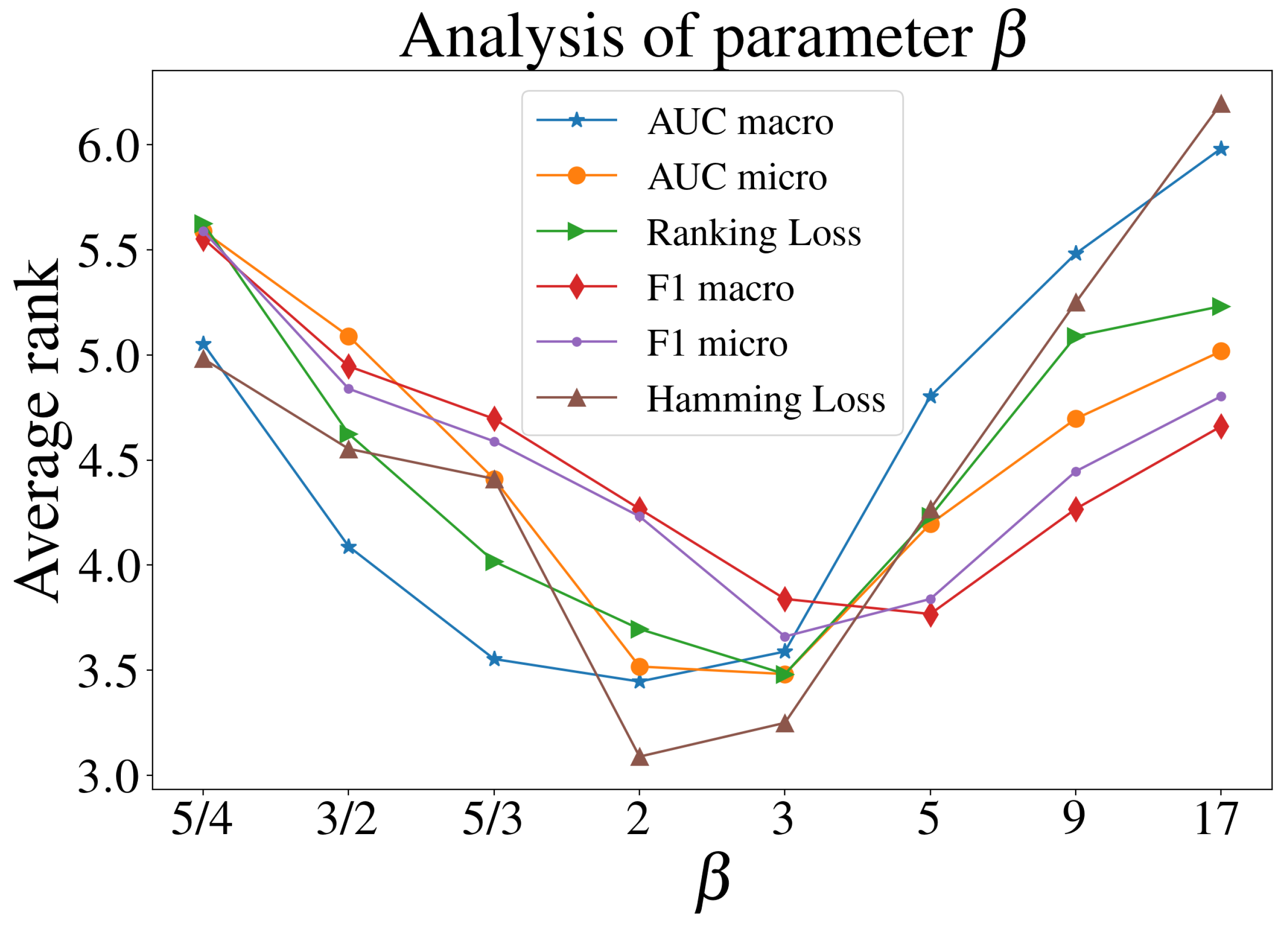

4.5. Parameter Analysis for MLHiKNN

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| MLC | Multi-label classification |

| SLC | Single-label classification |

| MLDs | Multi-label datasets |

| SLDs | Single-label datasets |

| kNN | k-nearest neighbor |

| MLHiKNN | Multi-label hubness information-based k-nearest neighbor |

| LS | Local scaling |

| MP | Mutual proximity |

| DSL | Local dissimilarity |

| A set consisting of the k-nearest neighbors of example t | |

| The number of times x appears among the k nearest neighbors of all other points in a dataset | |

| The number of instances relevant to label l in |

Appendix A. Time Costs of the Proposed Method

| Dataset | BRkNNa | MLKNN | MLHiKNN-fo | MLHiKNN | ||||

|---|---|---|---|---|---|---|---|---|

| Train | Test | Train | Test | Train | Test | Train | Test | |

| birds | 0.02 | 0.05 | 0.05 | 0.59 | 0.59 | 1.14 | 0.59 | |

| CAL500 | 0.03 | 0.60 | 0.30 | 0.11 | 0.12 | 13.01 | 0.17 | |

| emotions | 0.03 | 0.07 | 0.09 | 0.14 | 0.15 | 0.43 | 0.16 | |

| genbase | 0.04 | 0.11 | 0.12 | 0.23 | 0.24 | 0.72 | 0.26 | |

| LLOG | 0.08 | 1.04 | 0.57 | 0.04 | 0.06 | 3.82 | 0.10 | |

| enron | 0.10 | 0.56 | 0.45 | 0.91 | 0.92 | 4.01 | 0.98 | |

| scene | 0.12 | 0.25 | 0.35 | 9.39 | 9.39 | 10.02 | 9.68 | |

| yeast | 0.12 | 0.44 | 0.42 | 1.13 | 1.17 | 2.64 | 1.23 | |

| Slashdot | 0.13 | 0.41 | 0.46 | 0.02 | 0.07 | 0.95 | 0.09 | |

| corel5k | 0.29 | 5.81 | 3.37 | 0.07 | 0.16 | 57.89 | 0.71 | |

| rcv1subset1 | 0.35 | 3.89 | 2.52 | 0.11 | 0.23 | 23.56 | 0.40 | |

| rcv1subset2 | 0.35 | 3.98 | 2.57 | 0.10 | 0.22 | 23.64 | 0.36 | |

| rcv1subset3 | 0.35 | 3.99 | 2.57 | 0.10 | 0.22 | 23.59 | 0.36 | |

| rcv1subset4 | 0.37 | 3.94 | 2.55 | 0.09 | 0.22 | 22.93 | 0.35 | |

| rcv1subset5 | 0.35 | 4.02 | 2.59 | 0.09 | 0.22 | 23.77 | 0.35 | |

| bibtex | 0.42 | 8.95 | 5.16 | 0.12 | 0.27 | 63.46 | 0.81 | |

| Arts | 0.44 | 1.81 | 1.65 | 0.13 | 0.28 | 3.72 | 0.38 | |

| Health | 0.54 | 2.03 | 1.92 | 0.42 | 0.62 | 3.78 | 0.63 | |

| Business | 0.67 | 2.78 | 2.47 | 0.24 | 0.46 | 4.77 | 0.57 | |

| Education | 0.76 | 3.18 | 2.78 | 0.68 | 0.96 | 5.86 | 0.87 | |

| Computers | 0.82 | 3.33 | 2.92 | 0.75 | 1.02 | 9.09 | 1.02 | |

| Entertainment | 0.84 | 2.47 | 2.51 | 0.90 | 1.18 | 4.05 | 1.20 | |

| Recreation | 0.83 | 2.94 | 2.76 | 0.51 | 0.76 | 4.37 | 0.76 | |

| Society | 1.01 | 3.81 | 3.38 | 1.00 | 1.31 | 9.49 | 1.29 | |

| eurlex-dc-l | 1.15 | 29.21 | 16.56 | 1.26 | 1.54 | 90.64 | 2.11 | |

| eurlex-sm | 1.33 | 20.06 | 11.82 | 1.79 | 2.22 | 74.25 | 3.35 | |

| tmc2007-500 | 2.59 | 7.12 | 6.53 | 8.47 | 9.34 | 15.64 | 7.77 | |

| mediamill | 4.52 | 33.90 | 20.46 | 282.61 | 285.35 | 395.58 | 275.31 | |

Appendix B. Experimental Results of Compared MLC Algorithms

AUC Micro

| Dataset | BR | CC | ECC | RAkEL | RAkELd | BRkNNa | MLKNN | DMLkNN | IBLR | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|---|

| birds | 0.666(8) ± 0.039 | 0.660(9) ± 0.028 | 0.785(1) ± 0.015 • | 0.761(2) ± 0.011 | 0.676(7) ± 0.025 | 0.736(5) ± 0.014 | 0.659(10) ± 0.013 | 0.730(6) ± 0.016 | 0.736(4) ± 0.014 | 0.746(3) ± 0.019 |

| CAL500 | 0.628(7) ± 0.011 | 0.589(9) ± 0.010 | 0.731(4) ± 0.004 | 0.688(6) ± 0.002 | 0.601(8) ± 0.005 | 0.749(3) ± 0.003 | 0.713(5) ± 0.003 | 0.755(2) ± 0.001 | 0.558(10) ± 0.007 | 0.757(1) ± 0.004 • |

| emotions | 0.693(9) ± 0.022 | 0.688(10) ± 0.019 | 0.837(4) ± 0.008 | 0.812(6) ± 0.009 | 0.697(8) ± 0.014 | 0.852(3) ± 0.005 | 0.747(7) ± 0.011 | 0.828(5) ± 0.007 | 0.856(2) ± 0.007 | 0.862(1) ± 0.005 • |

| genbase | 0.996(7) ± 0.006 | 0.995(8) ± 0.007 | 0.997(1) ± 0.003 • | 0.997(3) ± 0.004 | 0.996(6) ± 0.006 | 0.993(9) ± 0.004 | 0.970(10) ± 0.012 | 0.997(2) ± 0.002 | 0.997(4) ± 0.003 | 0.996(5) ± 0.004 |

| LLOG | 0.771(5) ± 0.002 | 0.670(8) ± 0.021 | 0.682(7) ± 0.027 | 0.659(9) ± 0.008 | 0.618(10) ± 0.023 | 0.788(4) ± 0.005 | 0.791(3) ± 0.002 | 0.805(1) ± 0.003 • | 0.805(2) ± 0.003 | 0.767(6) ± 0.002 |

| enron | 0.764(8) ± 0.007 | 0.760(9) ± 0.011 | 0.851(4) ± 0.005 | 0.823(6) ± 0.005 | 0.751(10) ± 0.009 | 0.801(7) ± 0.012 | 0.825(5) ± 0.004 | 0.863(1) ± 0.003 • | 0.855(3) ± 0.005 | 0.860(2) ± 0.002 |

| scene | 0.747(9) ± 0.019 | 0.744(10) ± 0.020 | 0.928(5) ± 0.005 | 0.902(6) ± 0.003 | 0.755(8) ± 0.012 | 0.934(4) ± 0.003 | 0.885(7) ± 0.004 | 0.940(3) ± 0.004 | 0.945(2) ± 0.003 | 0.950(1) ± 0.002 • |

| yeast | 0.683(8) ± 0.010 | 0.652(10) ± 0.013 | 0.816(5) ± 0.004 | 0.791(6) ± 0.003 | 0.679(9) ± 0.007 | 0.837(3) ± 0.003 | 0.766(7) ± 0.004 | 0.832(4) ± 0.003 | 0.840(2) ± 0.004 | 0.842(1) ± 0.004 • |

| Slashdot | 0.937(5) ± 0.004 | 0.940(4) ± 0.003 | 0.892(10) ± 0.004 | 0.892(9) ± 0.004 | 0.918(7) ± 0.008 | 0.932(6) ± 0.008 | 0.912(8) ± 0.009 | 0.951(1) ± 0.004 • | 0.948(2) ± 0.006 | 0.945(3) ± 0.004 |

| corel5k | 0.776(3) ± 0.004 | 0.744(6) ± 0.003 | 0.573(9) ± 0.008 | 0.558(10) ± 0.003 | 0.714(7) ± 0.006 | 0.713(8) ± 0.008 | 0.772(5) ± 0.004 | 0.802(1) ± 0.003 • | 0.775(4) ± 0.005 | 0.796(2) ± 0.004 |

| rcv1subset1 | 0.788(7) ± 0.006 | 0.727(10) ± 0.008 | 0.814(6) ± 0.004 | 0.748(8) ± 0.005 | 0.746(9) ± 0.008 | 0.894(3) ± 0.004 | 0.866(5) ± 0.003 | 0.913(1) ± 0.002 • | 0.901(2) ± 0.004 | 0.874(4) ± 0.009 |

| rcv1subset2 | 0.803(6) ± 0.007 | 0.736(9) ± 0.009 | 0.800(7) ± 0.004 | 0.730(10) ± 0.005 | 0.762(8) ± 0.009 | 0.895(2) ± 0.003 | 0.874(4) ± 0.003 | 0.914(1) ± 0.003 • | 0.893(3) ± 0.005 | 0.865(5) ± 0.010 |

| rcv1subset3 | 0.797(7) ± 0.005 | 0.712(10) ± 0.009 | 0.808(6) ± 0.004 | 0.727(9) ± 0.005 | 0.763(8) ± 0.009 | 0.893(3) ± 0.003 | 0.871(4) ± 0.003 | 0.912(1) ± 0.003 • | 0.896(2) ± 0.002 | 0.861(5) ± 0.007 |

| rcv1subset4 | 0.818(7) ± 0.007 | 0.730(10) ± 0.010 | 0.819(6) ± 0.004 | 0.732(9) ± 0.005 | 0.783(8) ± 0.006 | 0.906(2) ± 0.004 | 0.884(4) ± 0.002 | 0.922(1) ± 0.002 • | 0.904(3) ± 0.003 | 0.884(5) ± 0.010 |

| rcv1subset5 | 0.809(6) ± 0.009 | 0.756(9) ± 0.009 | 0.807(7) ± 0.005 | 0.743(10) ± 0.004 | 0.768(8) ± 0.006 | 0.891(3) ± 0.003 | 0.871(4) ± 0.002 | 0.914(1) ± 0.002 • | 0.896(2) ± 0.003 | 0.868(5) ± 0.009 |

| bibtex | 0.788(6) ± 0.007 | 0.776(7) ± 0.007 | 0.709(9) ± 0.003 | 0.667(10) ± 0.004 | 0.739(8) ± 0.008 | 0.838(3) ± 0.003 | 0.802(5) ± 0.004 | 0.863(1) ± 0.003 • | 0.821(4) ± 0.007 | 0.863(2) ± 0.006 |

| Arts | 0.789(5) ± 0.006 | 0.787(6) ± 0.005 | 0.765(7) ± 0.004 | 0.689(10) ± 0.004 | 0.722(8) ± 0.023 | 0.818(4) ± 0.003 | 0.713(9) ± 0.004 | 0.840(3) ± 0.003 | 0.845(2) ± 0.004 | 0.851(1) ± 0.003 • |

| Health | 0.864(5) ± 0.005 | 0.862(6) ± 0.008 | 0.857(7) ± 0.004 | 0.819(9) ± 0.003 | 0.817(10) ± 0.010 | 0.893(4) ± 0.004 | 0.827(8) ± 0.004 | 0.910(3) ± 0.003 | 0.912(2) ± 0.003 | 0.919(1) ± 0.002 • |

| Business | 0.908(5) ± 0.004 | 0.895(7) ± 0.002 | 0.869(9) ± 0.004 | 0.853(10) ± 0.003 | 0.882(8) ± 0.006 | 0.927(4) ± 0.002 | 0.908(6) ± 0.002 | 0.945(2) ± 0.002 | 0.943(3) ± 0.001 | 0.947(1) ± 0.001 • |

| Education | 0.830(6) ± 0.004 | 0.840(5) ± 0.005 | 0.823(7) ± 0.004 | 0.746(10) ± 0.003 | 0.757(9) ± 0.022 | 0.876(4) ± 0.002 | 0.822(8) ± 0.003 | 0.900(3) ± 0.002 | 0.900(2) ± 0.002 | 0.906(1) ± 0.002 • |

| Computers | 0.827(5) ± 0.003 | 0.817(8) ± 0.007 | 0.822(6) ± 0.004 | 0.776(10) ± 0.003 | 0.779(9) ± 0.013 | 0.862(4) ± 0.003 | 0.820(7) ± 0.003 | 0.888(3) ± 0.003 | 0.888(2) ± 0.003 | 0.896(1) ± 0.003 • |

| Entertainment | 0.821(6) ± 0.005 | 0.805(7) ± 0.005 | 0.847(5) ± 0.006 | 0.775(9) ± 0.003 | 0.786(8) ± 0.010 | 0.863(4) ± 0.002 | 0.754(10) ± 0.004 | 0.879(3) ± 0.002 | 0.880(2) ± 0.001 | 0.891(1) ± 0.002 • |

| Recreation | 0.810(5) ± 0.004 | 0.796(7) ± 0.004 | 0.800(6) ± 0.004 | 0.717(9) ± 0.004 | 0.756(8) ± 0.019 | 0.839(4) ± 0.005 | 0.697(10) ± 0.004 | 0.859(3) ± 0.004 | 0.863(2) ± 0.004 | 0.874(1) ± 0.004 • |

| Society | 0.805(6) ± 0.003 | 0.812(5) ± 0.003 | 0.772(7) ± 0.004 | 0.722(10) ± 0.002 | 0.738(9) ± 0.018 | 0.829(4) ± 0.003 | 0.753(8) ± 0.004 | 0.858(3) ± 0.002 | 0.860(2) ± 0.002 | 0.869(1) ± 0.002 • |

| eurlex-dc-l | 0.837(6) ± 0.003 | 0.827(7) ± 0.004 | 0.758(9) ± 0.004 | 0.712(10) ± 0.002 | 0.799(8) ± 0.005 | 0.882(3) ± 0.004 | 0.875(4) ± 0.003 | 0.895(2) ± 0.003 | 0.863(5) ± 0.003 | 0.919(1) ± 0.005 • |

| eurlex-sm | 0.871(6) ± 0.003 | 0.866(7) ± 0.004 | 0.837(8) ± 0.002 | 0.806(10) ± 0.001 | 0.831(9) ± 0.005 | 0.919(4) ± 0.002 | 0.917(5) ± 0.002 | 0.936(2) ± 0.002 | 0.932(3) ± 0.002 | 0.952(1) ± 0.002 • |

| tmc2007-500 | 0.842(9) ± 0.003 | 0.845(8) ± 0.004 | 0.920(5) ± 0.001 | 0.907(6) ± 0.001 | 0.832(10) ± 0.004 | 0.928(4) ± 0.001 | 0.891(7) ± 0.002 | 0.942(3) ± 0.001 | 0.942(2) ± 0.001 | 0.958(1) ± 0.001 • |

| mediamill | 0.815(8) ± 0.004 | 0.780(9) ± 0.003 | 0.879(6) ± 0.002 | 0.857(7) ± 0.001 | 0.763(10) ± 0.004 | 0.930(5) ± 0.001 | 0.930(4) ± 0.000 | 0.950(2) ± 0.001 | 0.949(3) ± 0.001 | 0.957(1) ± 0.001 • |

| average rank | 6.43 | 7.86 | 6.18 | 8.18 | 8.39 | 4.14 | 6.39 | 2.29 | 2.89 | 2.25 |

| win/tie/loss | 86/24/142 | 55/22/175 | 97/14/141 | 41/16/195 | 32/22/198 | 161/9/82 | 97/13/142 | 208/14/30 | 189/18/45 | 211/14/27 |
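The "average rank" row can be checked directly against the per-dataset ranks printed in parentheses. The sketch below is a reader-side check, not part of the paper. The helper `parse_cell` and the regular expression are hypothetical names introduced here; the ranks are copied from the MLHiKNN column of the AUC Micro table above.

```python
import re
from statistics import mean

# Matches cells of the form "0.746(3) ± 0.019", optionally followed by " •".
CELL_PATTERN = re.compile(r"([\d.]+)\((\d+)\)\s*±\s*([\d.]+)")

def parse_cell(cell):
    """Split a result cell into (mean score, rank, standard deviation)."""
    match = CELL_PATTERN.match(cell.strip())
    if match is None:
        raise ValueError(f"unrecognized cell: {cell!r}")
    score, rank, std = match.groups()
    return float(score), int(rank), float(std)

# Ranks of MLHiKNN on AUC Micro, in table order (birds ... mediamill).
mlhiknn_auc_micro_ranks = [
    3, 1, 1, 5, 6, 2, 1, 1, 3, 2,   # birds .. corel5k
    4, 5, 5, 5, 5, 2,               # rcv1subset1-5, bibtex
    1, 1, 1, 1, 1, 1, 1, 1,         # Arts .. Society
    1, 1, 1, 1,                     # eurlex-dc-l, eurlex-sm, tmc2007-500, mediamill
]

print(parse_cell("0.757(1) ± 0.004 •"))
print(len(mlhiknn_auc_micro_ranks), round(mean(mlhiknn_auc_micro_ranks), 2))
```

The 28 ranks sum to 63, so their mean is 2.25, which agrees with the reported average rank for MLHiKNN. Each win/tie/loss triple in the row above sums to 252 = 9 × 28, which is consistent with pairwise comparisons against the nine other algorithms on 28 datasets, although the table itself does not state how wins and ties are decided.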

Ranking Loss

| Dataset | BR | CC | ECC | RAkEL | RAkELd | BRkNNa | MLKNN | DMLkNN | IBLR | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|---|

| birds | 0.362(7) ± 0.039 | 0.372(9) ± 0.031 | 0.317(5) ± 0.023 | 0.370(8) ± 0.026 | 0.391(10) ± 0.028 | 0.317(4) ± 0.020 | 0.345(6) ± 0.018 | 0.271(3) ± 0.018 | 0.264(2) ± 0.020 | 0.251(1) ± 0.020 • |

| CAL500 | 0.403(7) ± 0.013 | 0.450(8) ± 0.012 | 0.337(5) ± 0.004 | 0.381(6) ± 0.004 | 0.485(10) ± 0.010 | 0.282(3) ± 0.003 | 0.284(4) ± 0.003 | 0.242(2) ± 0.002 | 0.456(9) ± 0.007 | 0.240(1) ± 0.005 • |

| emotions | 0.385(9) ± 0.021 | 0.385(8) ± 0.024 | 0.213(5) ± 0.011 | 0.230(6) ± 0.010 | 0.432(10) ± 0.014 | 0.184(3) ± 0.011 | 0.241(7) ± 0.015 | 0.185(4) ± 0.011 | 0.158(2) ± 0.007 | 0.153(1) ± 0.007 • |

| genbase | 0.007(6) ± 0.011 | 0.007(5) ± 0.011 | 0.005(3) ± 0.004 | 0.007(4) ± 0.008 | 0.008(7) ± 0.011 | 0.016(9) ± 0.006 | 0.026(10) ± 0.010 | 0.010(8) ± 0.005 | 0.002(1) ± 0.002 • | 0.004(2) ± 0.003 |

| LLOG | 0.186(4) ± 0.002 | 0.283(7) ± 0.023 | 0.481(9) ± 0.060 | 0.536(10) ± 0.020 | 0.368(8) ± 0.028 | 0.229(6) ± 0.005 | 0.186(3) ± 0.002 | 0.186(1) ± 0.002 • | 0.186(2) ± 0.002 | 0.187(5) ± 0.002 |

| enron | 0.225(5) ± 0.009 | 0.231(6) ± 0.013 | 0.243(7) ± 0.010 | 0.301(10) ± 0.010 | 0.260(9) ± 0.011 | 0.260(8) ± 0.018 | 0.165(4) ± 0.005 | 0.130(1) ± 0.003 • | 0.135(3) ± 0.004 | 0.135(2) ± 0.003 |

| scene | 0.321(9) ± 0.018 | 0.302(8) ± 0.021 | 0.115(5) ± 0.007 | 0.138(7) ± 0.004 | 0.360(10) ± 0.018 | 0.102(4) ± 0.005 | 0.121(6) ± 0.004 | 0.078(3) ± 0.004 | 0.076(2) ± 0.004 | 0.071(1) ± 0.003 • |

| yeast | 0.364(8) ± 0.018 | 0.409(9) ± 0.011 | 0.225(5) ± 0.004 | 0.245(7) ± 0.003 | 0.417(10) ± 0.008 | 0.191(4) ± 0.003 | 0.234(6) ± 0.004 | 0.175(3) ± 0.003 | 0.169(2) ± 0.003 | 0.168(1) ± 0.003 • |

| Slashdot | 0.049(5) ± 0.004 | 0.047(4) ± 0.004 | 0.155(10) ± 0.009 | 0.153(9) ± 0.008 | 0.081(7) ± 0.009 | 0.084(8) ± 0.011 | 0.066(6) ± 0.008 | 0.042(1) ± 0.004 • | 0.043(2) ± 0.004 | 0.047(3) ± 0.005 |

| corel5k | 0.227(4) ± 0.004 | 0.256(6) ± 0.003 | 0.848(9) ± 0.017 | 0.879(10) ± 0.006 | 0.293(7) ± 0.006 | 0.452(8) ± 0.011 | 0.228(5) ± 0.003 | 0.199(1) ± 0.003 • | 0.221(3) ± 0.006 | 0.206(2) ± 0.004 |

| rcv1subset1 | 0.205(6) ± 0.006 | 0.265(8) ± 0.009 | 0.315(9) ± 0.009 | 0.457(10) ± 0.011 | 0.254(7) ± 0.008 | 0.143(5) ± 0.005 | 0.120(4) ± 0.003 | 0.077(1) ± 0.002 • | 0.087(2) ± 0.003 | 0.118(3) ± 0.008 |

| rcv1subset2 | 0.182(6) ± 0.009 | 0.257(8) ± 0.011 | 0.326(9) ± 0.008 | 0.479(10) ± 0.010 | 0.231(7) ± 0.012 | 0.141(5) ± 0.005 | 0.112(3) ± 0.002 | 0.072(1) ± 0.003 • | 0.085(2) ± 0.003 | 0.118(4) ± 0.009 |

| rcv1subset3 | 0.188(6) ± 0.008 | 0.277(8) ± 0.008 | 0.314(9) ± 0.007 | 0.481(10) ± 0.010 | 0.231(7) ± 0.010 | 0.147(5) ± 0.004 | 0.115(3) ± 0.001 | 0.075(1) ± 0.002 • | 0.085(2) ± 0.002 | 0.122(4) ± 0.005 |

| rcv1subset4 | 0.163(6) ± 0.008 | 0.250(8) ± 0.011 | 0.284(9) ± 0.007 | 0.469(10) ± 0.008 | 0.206(7) ± 0.007 | 0.125(5) ± 0.006 | 0.100(3) ± 0.001 | 0.064(1) ± 0.002 • | 0.077(2) ± 0.002 | 0.100(4) ± 0.009 |

| rcv1subset5 | 0.177(6) ± 0.011 | 0.239(8) ± 0.012 | 0.322(9) ± 0.010 | 0.461(10) ± 0.011 | 0.225(7) ± 0.007 | 0.150(5) ± 0.006 | 0.115(3) ± 0.002 | 0.072(1) ± 0.001 • | 0.084(2) ± 0.002 | 0.117(4) ± 0.009 |

| bibtex | 0.202(5) ± 0.006 | 0.204(6) ± 0.006 | 0.566(9) ± 0.005 | 0.638(10) ± 0.009 | 0.257(7) ± 0.009 | 0.282(8) ± 0.006 | 0.197(4) ± 0.005 | 0.122(1) ± 0.003 • | 0.182(3) ± 0.006 | 0.147(2) ± 0.004 |

| Arts | 0.189(5) ± 0.007 | 0.186(4) ± 0.004 | 0.330(9) ± 0.011 | 0.545(10) ± 0.009 | 0.285(8) ± 0.022 | 0.206(6) ± 0.004 | 0.266(7) ± 0.004 | 0.136(3) ± 0.003 | 0.135(2) ± 0.003 | 0.129(1) ± 0.003 • |

| Health | 0.119(5) ± 0.005 | 0.118(4) ± 0.007 | 0.209(9) ± 0.007 | 0.285(10) ± 0.006 | 0.185(8) ± 0.011 | 0.125(6) ± 0.004 | 0.146(7) ± 0.005 | 0.076(3) ± 0.002 | 0.076(2) ± 0.002 | 0.070(1) ± 0.002 • |

| Business | 0.066(5) ± 0.003 | 0.076(6) ± 0.002 | 0.174(9) ± 0.006 | 0.198(10) ± 0.004 | 0.098(8) ± 0.006 | 0.081(7) ± 0.003 | 0.066(4) ± 0.001 | 0.037(2) ± 0.001 | 0.038(3) ± 0.001 | 0.037(1) ± 0.001 • |

| Education | 0.167(7) ± 0.004 | 0.156(5) ± 0.004 | 0.261(8) ± 0.006 | 0.441(10) ± 0.007 | 0.265(9) ± 0.022 | 0.156(4) ± 0.003 | 0.162(6) ± 0.002 | 0.093(2) ± 0.002 | 0.094(3) ± 0.002 | 0.089(1) ± 0.002 • |

| Computers | 0.146(5) ± 0.003 | 0.148(6) ± 0.005 | 0.267(9) ± 0.005 | 0.378(10) ± 0.005 | 0.214(8) ± 0.013 | 0.166(7) ± 0.003 | 0.144(4) ± 0.004 | 0.089(2) ± 0.002 | 0.090(3) ± 0.002 | 0.086(1) ± 0.002 • |

| Entertainment | 0.169(4) ± 0.006 | 0.181(6) ± 0.004 | 0.227(8) ± 0.009 | 0.387(10) ± 0.007 | 0.244(9) ± 0.013 | 0.174(5) ± 0.003 | 0.224(7) ± 0.003 | 0.122(3) ± 0.002 | 0.121(2) ± 0.002 | 0.111(1) ± 0.002 • |

| Recreation | 0.164(4) ± 0.006 | 0.172(5) ± 0.004 | 0.283(9) ± 0.008 | 0.499(10) ± 0.009 | 0.255(7) ± 0.020 | 0.197(6) ± 0.006 | 0.277(8) ± 0.005 | 0.127(3) ± 0.003 | 0.126(2) ± 0.004 | 0.115(1) ± 0.004 • |

| Society | 0.168(5) ± 0.004 | 0.152(4) ± 0.003 | 0.326(9) ± 0.008 | 0.448(10) ± 0.007 | 0.248(8) ± 0.017 | 0.189(6) ± 0.004 | 0.216(7) ± 0.004 | 0.112(2) ± 0.002 | 0.112(3) ± 0.002 | 0.105(1) ± 0.002 • |

| eurlex-dc-l | 0.150(5) ± 0.003 | 0.156(6) ± 0.003 | 0.455(9) ± 0.008 | 0.547(10) ± 0.005 | 0.188(7) ± 0.005 | 0.206(8) ± 0.006 | 0.118(3) ± 0.003 | 0.074(1) ± 0.002 • | 0.119(4) ± 0.003 | 0.078(2) ± 0.005 |

| eurlex-sm | 0.127(5) ± 0.003 | 0.130(6) ± 0.003 | 0.307(9) ± 0.003 | 0.372(10) ± 0.003 | 0.169(8) ± 0.006 | 0.138(7) ± 0.003 | 0.084(4) ± 0.001 | 0.053(2) ± 0.001 | 0.064(3) ± 0.001 | 0.050(1) ± 0.002 • |

| tmc2007-500 | 0.148(9) ± 0.004 | 0.145(8) ± 0.003 | 0.121(6) ± 0.003 | 0.144(7) ± 0.002 | 0.179(10) ± 0.004 | 0.092(4) ± 0.001 | 0.105(5) ± 0.001 | 0.059(3) ± 0.001 | 0.057(2) ± 0.001 | 0.044(1) ± 0.001 • |

| mediamill | 0.181(6) ± 0.005 | 0.224(8) ± 0.004 | 0.187(7) ± 0.002 | 0.233(9) ± 0.002 | 0.240(10) ± 0.004 | 0.092(5) ± 0.001 | 0.059(4) ± 0.000 | 0.043(2) ± 0.000 | 0.044(3) ± 0.000 | 0.038(1) ± 0.001 • |

| average rank | 5.86 | 6.57 | 7.79 | 9.04 | 8.21 | 5.75 | 5.11 | 2.18 | 2.61 | 1.89 |

| win/tie/loss | 108/18/126 | 90/19/143 | 57/9/186 | 20/10/222 | 48/10/194 | 115/5/132 | 127/12/113 | 218/8/26 | 203/11/38 | 214/18/20 |

F1 Macro

| Dataset | BR | CC | ECC | RAkEL | RAkELd | BRkNNa | MLKNN | DMLkNN | IBLR | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|---|

| birds | 0.368(4) ± 0.040 | 0.367(5) ± 0.030 | 0.385(2) ± 0.030 | 0.409(1) ± 0.035 • | 0.382(3) ± 0.017 | 0.034(10) ± 0.019 | 0.157(8) ± 0.027 | 0.117(9) ± 0.029 | 0.242(7) ± 0.024 | 0.258(6) ± 0.069 |

| CAL500 | 0.170(5) ± 0.007 | 0.195(3) ± 0.006 | 0.141(6) ± 0.006 | 0.191(4) ± 0.005 | 0.215(2) ± 0.003 | 0.083(10) ± 0.002 | 0.103(7) ± 0.003 | 0.084(8) ± 0.002 | 0.229(1) ± 0.007 • | 0.083(9) ± 0.003 |

| emotions | 0.570(8) ± 0.011 | 0.551(10) ± 0.023 | 0.639(2) ± 0.013 | 0.614(4) ± 0.013 | 0.555(9) ± 0.018 | 0.608(5) ± 0.011 | 0.602(6) ± 0.023 | 0.595(7) ± 0.017 | 0.638(3) ± 0.014 | 0.651(1) ± 0.013 • |

| genbase | 0.980(5) ± 0.012 | 0.977(6) ± 0.018 | 0.983(3) ± 0.007 | 0.984(2) ± 0.012 | 0.984(1) ± 0.014 • | 0.639(10) ± 0.058 | 0.913(8) ± 0.030 | 0.820(9) ± 0.080 | 0.980(4) ± 0.009 | 0.954(7) ± 0.017 |

| LLOG | 0.117(2) ± 0.005 | 0.042(10) ± 0.006 | 0.049(9) ± 0.010 | 0.094(8) ± 0.005 | 0.097(7) ± 0.010 | 0.141(1) ± 0.012 • | 0.116(4) ± 0.005 | 0.113(5) ± 0.003 | 0.116(3) ± 0.005 | 0.097(6) ± 0.016 |

| enron | 0.215(5) ± 0.007 | 0.218(2) ± 0.006 | 0.217(4) ± 0.007 | 0.224(1) ± 0.005 • | 0.217(3) ± 0.011 | 0.067(10) ± 0.008 | 0.131(8) ± 0.007 | 0.103(9) ± 0.010 | 0.170(6) ± 0.010 | 0.131(7) ± 0.009 |

| scene | 0.622(8) ± 0.009 | 0.610(9) ± 0.010 | 0.723(5) ± 0.008 | 0.688(6) ± 0.009 | 0.602(10) ± 0.013 | 0.643(7) ± 0.015 | 0.726(3) ± 0.007 | 0.726(4) ± 0.007 | 0.730(2) ± 0.008 | 0.749(1) ± 0.008 • |

| yeast | 0.382(5) ± 0.015 | 0.381(7) ± 0.006 | 0.389(2) ± 0.010 | 0.388(3) ± 0.005 | 0.382(6) ± 0.006 | 0.347(10) ± 0.006 | 0.385(4) ± 0.010 | 0.352(9) ± 0.010 | 0.370(8) ± 0.006 | 0.420(1) ± 0.009 • |

| Slashdot | 0.135(6) ± 0.033 | 0.132(7) ± 0.024 | 0.129(8) ± 0.022 | 0.177(2) ± 0.024 | 0.172(3) ± 0.023 | 0.081(10) ± 0.002 | 0.149(5) ± 0.036 | 0.120(9) ± 0.021 | 0.177(1) ± 0.023 • | 0.163(4) ± 0.025 |

| corel5k | 0.029(4) ± 0.003 | 0.029(6) ± 0.004 | 0.008(8) ± 0.002 | 0.029(5) ± 0.002 | 0.031(3) ± 0.003 | 0.001(10) ± 0.001 | 0.038(2) ± 0.006 | 0.003(9) ± 0.002 | 0.055(1) ± 0.004 • | 0.026(7) ± 0.004 |

| rcv1subset1 | 0.243(3) ± 0.008 | 0.242(4) ± 0.007 | 0.216(6) ± 0.011 | 0.246(2) ± 0.009 | 0.238(5) ± 0.007 | 0.074(10) ± 0.006 | 0.181(7) ± 0.010 | 0.097(9) ± 0.004 | 0.255(1) ± 0.008 • | 0.152(8) ± 0.014 |

| rcv1subset2 | 0.231(3) ± 0.008 | 0.237(2) ± 0.013 | 0.197(6) ± 0.008 | 0.230(4) ± 0.007 | 0.227(5) ± 0.005 | 0.067(10) ± 0.003 | 0.173(7) ± 0.011 | 0.081(9) ± 0.003 | 0.246(1) ± 0.007 • | 0.133(8) ± 0.015 |

| rcv1subset3 | 0.217(3) ± 0.007 | 0.221(2) ± 0.008 | 0.191(6) ± 0.007 | 0.214(5) ± 0.009 | 0.215(4) ± 0.007 | 0.060(10) ± 0.001 | 0.164(7) ± 0.006 | 0.076(9) ± 0.006 | 0.239(1) ± 0.008 • | 0.120(8) ± 0.010 |

| rcv1subset4 | 0.231(3) ± 0.014 | 0.244(2) ± 0.008 | 0.197(6) ± 0.007 | 0.228(4) ± 0.011 | 0.227(5) ± 0.010 | 0.081(10) ± 0.005 | 0.178(7) ± 0.008 | 0.091(9) ± 0.004 | 0.253(1) ± 0.007 • | 0.149(8) ± 0.013 |

| rcv1subset5 | 0.228(2) ± 0.009 | 0.226(3) ± 0.007 | 0.192(6) ± 0.007 | 0.223(4) ± 0.008 | 0.221(5) ± 0.009 | 0.069(10) ± 0.003 | 0.163(7) ± 0.006 | 0.082(9) ± 0.008 | 0.243(1) ± 0.008 • | 0.134(8) ± 0.017 |

| bibtex | 0.213(1) ± 0.007 • | 0.202(4) ± 0.005 | 0.191(5) ± 0.006 | 0.204(3) ± 0.008 | 0.204(2) ± 0.005 | 0.060(9) ± 0.002 | 0.148(8) ± 0.006 | 0.052(10) ± 0.003 | 0.173(7) ± 0.003 | 0.187(6) ± 0.006 |

| Arts | 0.267(2) ± 0.007 | 0.256(6) ± 0.008 | 0.257(5) ± 0.011 | 0.274(1) ± 0.008 • | 0.266(3) ± 0.011 | 0.140(10) ± 0.010 | 0.223(7) ± 0.013 | 0.182(9) ± 0.014 | 0.218(8) ± 0.019 | 0.263(4) ± 0.015 |

| Health | 0.445(4) ± 0.015 | 0.446(3) ± 0.012 | 0.450(2) ± 0.010 | 0.459(1) ± 0.011 • | 0.435(5) ± 0.013 | 0.269(10) ± 0.016 | 0.360(8) ± 0.019 | 0.338(9) ± 0.017 | 0.360(7) ± 0.016 | 0.404(6) ± 0.011 |

| Business | 0.272(1) ± 0.020 • | 0.245(5) ± 0.014 | 0.237(8) ± 0.012 | 0.269(2) ± 0.019 | 0.265(4) ± 0.014 | 0.141(10) ± 0.008 | 0.239(7) ± 0.010 | 0.194(9) ± 0.009 | 0.244(6) ± 0.014 | 0.267(3) ± 0.005 |

| Education | 0.260(4) ± 0.012 | 0.264(3) ± 0.012 | 0.258(5) ± 0.009 | 0.269(2) ± 0.010 | 0.257(6) ± 0.010 | 0.145(10) ± 0.008 | 0.220(8) ± 0.009 | 0.179(9) ± 0.015 | 0.235(7) ± 0.012 | 0.278(1) ± 0.015 • |

| Computers | 0.303(2) ± 0.015 | 0.289(5) ± 0.013 | 0.275(6) ± 0.015 | 0.306(1) ± 0.014 • | 0.296(3) ± 0.014 | 0.142(10) ± 0.011 | 0.255(8) ± 0.014 | 0.211(9) ± 0.011 | 0.257(7) ± 0.011 | 0.294(4) ± 0.010 |

| Entertainment | 0.390(5) ± 0.013 | 0.378(6) ± 0.014 | 0.400(2) ± 0.012 | 0.404(1) ± 0.008 • | 0.393(3) ± 0.012 | 0.257(10) ± 0.008 | 0.347(7) ± 0.012 | 0.304(9) ± 0.012 | 0.327(8) ± 0.012 | 0.390(4) ± 0.008 |

| Recreation | 0.369(4) ± 0.010 | 0.361(6) ± 0.010 | 0.373(3) ± 0.015 | 0.385(2) ± 0.011 | 0.367(5) ± 0.010 | 0.240(10) ± 0.022 | 0.352(7) ± 0.014 | 0.316(9) ± 0.013 | 0.352(8) ± 0.015 | 0.390(1) ± 0.016 • |

| Society | 0.261(4) ± 0.010 | 0.261(5) ± 0.010 | 0.250(6) ± 0.008 | 0.271(2) ± 0.006 | 0.265(3) ± 0.009 | 0.158(10) ± 0.008 | 0.246(7) ± 0.010 | 0.220(9) ± 0.008 | 0.243(8) ± 0.012 | 0.291(1) ± 0.012 • |

| eurlex-dc-l | 0.258(2) ± 0.008 | 0.258(3) ± 0.008 | 0.237(6) ± 0.004 | 0.253(4) ± 0.007 | 0.251(5) ± 0.006 | 0.148(9) ± 0.003 | 0.177(7) ± 0.005 | 0.068(10) ± 0.003 | 0.169(8) ± 0.004 | 0.267(1) ± 0.010 • |

| eurlex-sm | 0.377(3) ± 0.004 | 0.370(6) ± 0.006 | 0.372(5) ± 0.006 | 0.377(2) ± 0.005 | 0.372(4) ± 0.007 | 0.252(9) ± 0.005 | 0.296(8) ± 0.007 | 0.200(10) ± 0.006 | 0.307(7) ± 0.007 | 0.388(1) ± 0.009 • |

| tmc2007-500 | 0.560(4) ± 0.006 | 0.558(5) ± 0.007 | 0.582(2) ± 0.005 | 0.578(3) ± 0.005 | 0.544(6) ± 0.004 | 0.239(10) ± 0.004 | 0.464(7) ± 0.010 | 0.425(9) ± 0.009 | 0.452(8) ± 0.006 | 0.603(1) ± 0.007 • |

| mediamill | 0.172(4) ± 0.006 | 0.156(5) ± 0.004 | 0.128(8) ± 0.005 | 0.196(2) ± 0.004 | 0.180(3) ± 0.005 | 0.085(10) ± 0.002 | 0.137(7) ± 0.006 | 0.088(9) ± 0.002 | 0.155(6) ± 0.005 | 0.293(1) ± 0.005 • |

| average rank | 3.79 | 5.00 | 5.07 | 2.89 | 4.39 | 9.29 | 6.64 | 8.68 | 4.86 | 4.39 |

| win/tie/loss | 140/55/57 | 124/45/83 | 116/39/97 | 175/41/36 | 135/49/68 | 19/5/228 | 80/27/145 | 33/11/208 | 134/23/95 | 143/27/82 |

F1 Micro

| Dataset | BR | CC | ECC | RAkEL | RAkELd | BRkNNa | MLKNN | DMLkNN | IBLR | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|---|

| birds | 0.452(3) ± 0.044 | 0.448(4) ± 0.035 | 0.497(1) ± 0.021 • | 0.481(2) ± 0.024 | 0.438(5) ± 0.021 | 0.074(10) ± 0.042 | 0.230(8) ± 0.050 | 0.208(9) ± 0.039 | 0.327(7) ± 0.027 | 0.358(6) ± 0.061 |

| CAL500 | 0.353(4) ± 0.011 | 0.362(3) ± 0.011 | 0.386(1) ± 0.007 • | 0.383(2) ± 0.004 | 0.351(5) ± 0.005 | 0.321(10) ± 0.006 | 0.338(8) ± 0.009 | 0.331(9) ± 0.005 | 0.338(7) ± 0.008 | 0.342(6) ± 0.014 |

| emotions | 0.579(8) ± 0.012 | 0.561(10) ± 0.022 | 0.655(3) ± 0.012 | 0.627(6) ± 0.012 | 0.564(9) ± 0.018 | 0.635(4) ± 0.013 | 0.629(5) ± 0.020 | 0.616(7) ± 0.018 | 0.662(2) ± 0.011 | 0.672(1) ± 0.011 • |

| genbase | 0.987(4) ± 0.008 | 0.986(6) ± 0.010 | 0.990(2) ± 0.005 | 0.990(1) ± 0.007 • | 0.989(3) ± 0.010 | 0.839(10) ± 0.040 | 0.949(8) ± 0.020 | 0.909(9) ± 0.036 | 0.986(5) ± 0.007 | 0.965(7) ± 0.014 |

| LLOG | 0.429(2) ± 0.013 | 0.100(10) ± 0.035 | 0.145(9) ± 0.061 | 0.367(7) ± 0.016 | 0.371(6) ± 0.033 | 0.466(1) ± 0.023 • | 0.427(3) ± 0.013 | 0.421(5) ± 0.008 | 0.427(4) ± 0.012 | 0.363(8) ± 0.059 |

| enron | 0.522(4) ± 0.009 | 0.524(3) ± 0.012 | 0.584(1) ± 0.007 • | 0.565(2) ± 0.009 | 0.514(5) ± 0.008 | 0.219(10) ± 0.022 | 0.476(6) ± 0.011 | 0.450(9) ± 0.014 | 0.454(8) ± 0.015 | 0.460(7) ± 0.013 |

| scene | 0.610(8) ± 0.009 | 0.594(9) ± 0.012 | 0.715(5) ± 0.009 | 0.677(6) ± 0.009 | 0.591(10) ± 0.012 | 0.649(7) ± 0.011 | 0.720(3) ± 0.007 | 0.720(4) ± 0.008 | 0.725(2) ± 0.008 | 0.743(1) ± 0.008 • |

| yeast | 0.573(8) ± 0.011 | 0.539(9) ± 0.010 | 0.637(3) ± 0.006 | 0.609(7) ± 0.002 | 0.538(10) ± 0.005 | 0.628(6) ± 0.004 | 0.635(4) ± 0.006 | 0.628(5) ± 0.006 | 0.641(2) ± 0.003 | 0.643(1) ± 0.006 • |

| Slashdot | 0.845(5) ± 0.007 | 0.846(4) ± 0.007 | 0.846(3) ± 0.007 | 0.849(1) ± 0.008 • | 0.849(2) ± 0.008 | 0.843(7) ± 0.007 | 0.843(6) ± 0.011 | 0.842(10) ± 0.008 | 0.843(9) ± 0.006 | 0.843(8) ± 0.008 |

| corel5k | 0.093(4) ± 0.008 | 0.094(2) ± 0.008 | 0.029(8) ± 0.006 | 0.081(5) ± 0.006 | 0.095(1) ± 0.007 • | 0.003(10) ± 0.003 | 0.094(3) ± 0.013 | 0.007(9) ± 0.004 | 0.075(6) ± 0.004 | 0.050(7) ± 0.011 |

| rcv1subset1 | 0.369(3) ± 0.009 | 0.357(6) ± 0.004 | 0.365(5) ± 0.009 | 0.387(1) ± 0.005 • | 0.366(4) ± 0.006 | 0.203(10) ± 0.007 | 0.345(7) ± 0.011 | 0.261(9) ± 0.007 | 0.374(2) ± 0.007 | 0.296(8) ± 0.021 |

| rcv1subset2 | 0.383(3) ± 0.005 | 0.369(6) ± 0.009 | 0.377(5) ± 0.011 | 0.388(1) ± 0.007 • | 0.382(4) ± 0.006 | 0.275(10) ± 0.008 | 0.367(7) ± 0.016 | 0.288(9) ± 0.007 | 0.385(2) ± 0.007 | 0.334(8) ± 0.012 |

| rcv1subset3 | 0.383(3) ± 0.012 | 0.371(6) ± 0.009 | 0.372(5) ± 0.007 | 0.387(1) ± 0.012 • | 0.381(4) ± 0.006 | 0.258(10) ± 0.007 | 0.362(7) ± 0.013 | 0.279(9) ± 0.009 | 0.386(2) ± 0.006 | 0.333(8) ± 0.009 |

| rcv1subset4 | 0.427(4) ± 0.008 | 0.423(6) ± 0.012 | 0.420(7) ± 0.008 | 0.437(1) ± 0.006 • | 0.426(5) ± 0.009 | 0.336(10) ± 0.007 | 0.430(3) ± 0.007 | 0.362(9) ± 0.005 | 0.434(2) ± 0.007 | 0.394(8) ± 0.019 |

| rcv1subset5 | 0.403(2) ± 0.004 | 0.377(6) ± 0.007 | 0.384(5) ± 0.008 | 0.411(1) ± 0.006 • | 0.398(3) ± 0.008 | 0.284(10) ± 0.010 | 0.369(7) ± 0.009 | 0.303(9) ± 0.010 | 0.397(4) ± 0.005 | 0.348(8) ± 0.014 |

| bibtex | 0.393(1) ± 0.008 • | 0.386(5) ± 0.006 | 0.387(4) ± 0.006 | 0.392(2) ± 0.007 | 0.389(3) ± 0.005 | 0.241(9) ± 0.006 | 0.328(7) ± 0.004 | 0.231(10) ± 0.006 | 0.256(8) ± 0.006 | 0.367(6) ± 0.006 |

| Arts | 0.393(5) ± 0.005 | 0.393(3) ± 0.008 | 0.419(1) ± 0.007 • | 0.410(2) ± 0.008 | 0.393(4) ± 0.010 | 0.263(10) ± 0.016 | 0.340(7) ± 0.017 | 0.296(9) ± 0.022 | 0.316(8) ± 0.018 | 0.369(6) ± 0.014 |

| Health | 0.611(4) ± 0.006 | 0.619(3) ± 0.006 | 0.654(1) ± 0.006 • | 0.634(2) ± 0.006 | 0.608(5) ± 0.008 | 0.491(10) ± 0.012 | 0.531(7) ± 0.017 | 0.519(9) ± 0.016 | 0.530(8) ± 0.013 | 0.574(6) ± 0.011 |

| Business | 0.720(4) ± 0.004 | 0.712(8) ± 0.005 | 0.732(1) ± 0.004 • | 0.730(2) ± 0.003 | 0.717(5) ± 0.005 | 0.705(10) ± 0.005 | 0.714(7) ± 0.006 | 0.707(9) ± 0.004 | 0.716(6) ± 0.004 | 0.722(3) ± 0.004 |

| Education | 0.421(4) ± 0.007 | 0.426(3) ± 0.005 | 0.460(1) ± 0.006 • | 0.440(2) ± 0.003 | 0.414(5) ± 0.005 | 0.288(10) ± 0.012 | 0.357(7) ± 0.013 | 0.307(9) ± 0.019 | 0.321(8) ± 0.014 | 0.378(6) ± 0.014 |

| Computers | 0.514(3) ± 0.004 | 0.502(6) ± 0.007 | 0.551(1) ± 0.004 • | 0.532(2) ± 0.003 | 0.504(5) ± 0.004 | 0.468(10) ± 0.004 | 0.490(7) ± 0.008 | 0.478(9) ± 0.013 | 0.487(8) ± 0.004 | 0.511(4) ± 0.005 |

| Entertainment | 0.534(4) ± 0.006 | 0.507(5) ± 0.009 | 0.572(1) ± 0.007 • | 0.562(2) ± 0.006 | 0.534(3) ± 0.006 | 0.400(10) ± 0.009 | 0.472(7) ± 0.014 | 0.441(9) ± 0.011 | 0.453(8) ± 0.011 | 0.505(6) ± 0.008 |

| Recreation | 0.468(3) ± 0.006 | 0.422(7) ± 0.007 | 0.489(1) ± 0.005 • | 0.484(2) ± 0.007 | 0.466(4) ± 0.006 | 0.345(10) ± 0.018 | 0.428(6) ± 0.017 | 0.402(9) ± 0.017 | 0.406(8) ± 0.015 | 0.454(5) ± 0.012 |

| Society | 0.444(6) ± 0.005 | 0.488(2) ± 0.005 | 0.494(1) ± 0.004 • | 0.470(3) ± 0.002 | 0.447(5) ± 0.003 | 0.385(10) ± 0.014 | 0.433(7) ± 0.010 | 0.415(9) ± 0.020 | 0.432(8) ± 0.009 | 0.468(4) ± 0.010 |

| eurlex-dc-l | 0.483(4) ± 0.005 | 0.484(3) ± 0.006 | 0.494(1) ± 0.007 • | 0.489(2) ± 0.004 | 0.478(6) ± 0.005 | 0.390(8) ± 0.003 | 0.401(7) ± 0.006 | 0.288(9) ± 0.006 | 0.278(10) ± 0.003 | 0.479(5) ± 0.007 |

| eurlex-sm | 0.599(3) ± 0.003 | 0.594(5) ± 0.004 | 0.624(1) ± 0.005 • | 0.616(2) ± 0.004 | 0.596(4) ± 0.004 | 0.504(9) ± 0.006 | 0.533(7) ± 0.006 | 0.481(10) ± 0.006 | 0.510(8) ± 0.006 | 0.589(6) ± 0.007 |

| tmc2007-500 | 0.668(4) ± 0.003 | 0.665(5) ± 0.003 | 0.716(2) ± 0.003 | 0.707(3) ± 0.002 | 0.657(6) ± 0.003 | 0.577(10) ± 0.003 | 0.634(9) ± 0.006 | 0.638(8) ± 0.002 | 0.640(7) ± 0.004 | 0.726(1) ± 0.003 • |

| mediamill | 0.553(8) ± 0.003 | 0.536(10) ± 0.002 | 0.597(3) ± 0.002 | 0.598(2) ± 0.002 | 0.541(9) ± 0.003 | 0.573(6) ± 0.002 | 0.583(4) ± 0.004 | 0.568(7) ± 0.002 | 0.580(5) ± 0.002 | 0.635(1) ± 0.001 • |

| average rank | 4.21 | 5.54 | 2.93 | 2.57 | 5.00 | 8.82 | 6.21 | 8.46 | 5.86 | 5.39 |

| win/tie/loss | 140/41/71 | 108/32/112 | 184/21/47 | 195/20/37 | 121/40/91 | 28/8/216 | 90/30/132 | 41/14/197 | 101/39/112 | 121/17/114 |

Hamming Loss

| Dataset | BR | CC | ECC | RAkEL | RAkELd | BRkNNa | MLKNN | DMLkNN | IBLR | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|---|

| birds | 0.143(4) ± 0.013 | 0.145(7) ± 0.012 | 0.119(1) ± 0.007 • | 0.134(2) ± 0.008 | 0.157(10) ± 0.011 | 0.144(5) ± 0.007 | 0.145(6) ± 0.007 | 0.146(8) ± 0.006 | 0.151(9) ± 0.006 | 0.137(3) ± 0.008 |

| CAL500 | 0.228(7) ± 0.003 | 0.244(8) ± 0.005 | 0.198(5) ± 0.002 | 0.227(6) ± 0.002 | 0.263(9) ± 0.003 | 0.187(2) ± 0.002 | 0.192(4) ± 0.001 | 0.189(3) ± 0.001 | 0.300(10) ± 0.003 | 0.187(1) ± 0.001 • |

| emotions | 0.260(8) ± 0.010 | 0.270(9) ± 0.011 | 0.209(4) ± 0.007 | 0.225(7) ± 0.008 | 0.271(10) ± 0.011 | 0.198(2) ± 0.007 | 0.211(5) ± 0.006 | 0.216(6) ± 0.007 | 0.199(3) ± 0.006 | 0.190(1) ± 0.005 • |

| genbase | 0.002(4) ± 0.001 | 0.002(5) ± 0.002 | 0.002(2) ± 0.001 | 0.002(1) ± 0.001 • | 0.002(3) ± 0.002 | 0.026(10) ± 0.007 | 0.009(8) ± 0.003 | 0.014(9) ± 0.005 | 0.002(6) ± 0.001 | 0.006(7) ± 0.002 |

| LLOG | 0.185(1) ± 0.004 • | 0.206(10) ± 0.007 | 0.202(9) ± 0.009 | 0.187(5) ± 0.004 | 0.187(6) ± 0.005 | 0.192(8) ± 0.004 | 0.185(3) ± 0.004 | 0.185(2) ± 0.004 | 0.185(4) ± 0.004 | 0.188(7) ± 0.006 |

| enron | 0.080(5) ± 0.001 | 0.080(7) ± 0.002 | 0.070(1) ± 0.001 • | 0.074(2) ± 0.001 | 0.083(9) ± 0.002 | 0.090(10) ± 0.001 | 0.080(6) ± 0.001 | 0.078(4) ± 0.001 | 0.082(8) ± 0.002 | 0.075(3) ± 0.001 |

| scene | 0.138(8) ± 0.003 | 0.147(10) ± 0.005 | 0.095(5) ± 0.003 | 0.108(7) ± 0.003 | 0.145(9) ± 0.004 | 0.103(6) ± 0.003 | 0.092(3) ± 0.002 | 0.093(4) ± 0.003 | 0.089(2) ± 0.003 | 0.084(1) ± 0.003 • |

| yeast | 0.255(8) ± 0.007 | 0.277(9) ± 0.005 | 0.212(6) ± 0.003 | 0.234(7) ± 0.003 | 0.280(10) ± 0.004 | 0.199(2) ± 0.003 | 0.204(5) ± 0.002 | 0.202(4) ± 0.002 | 0.198(1) ± 0.002 • | 0.200(3) ± 0.003 |

| Slashdot | 0.028(5) ± 0.001 | 0.028(4) ± 0.001 | 0.028(3) ± 0.001 | 0.027(1) ± 0.001 • | 0.027(2) ± 0.002 | 0.028(8) ± 0.001 | 0.028(6) ± 0.002 | 0.028(7) ± 0.001 | 0.028(9) ± 0.001 | 0.029(10) ± 0.001 |

| corel5k | 0.022(6) ± 0.000 | 0.022(7) ± 0.000 | 0.021(4) ± 0.000 | 0.021(5) ± 0.000 | 0.022(8) ± 0.000 | 0.021(3) ± 0.000 | 0.022(9) ± 0.000 | 0.021(2) ± 0.000 | 0.038(10) ± 0.001 | 0.021(1) ± 0.000 • |

| rcv1subset1 | 0.036(7) ± 0.000 | 0.039(10) ± 0.001 | 0.034(4) ± 0.000 | 0.036(6) ± 0.000 | 0.037(8) ± 0.000 | 0.034(5) ± 0.000 | 0.033(3) ± 0.000 | 0.033(2) ± 0.000 | 0.037(9) ± 0.001 | 0.032(1) ± 0.000 • |

| rcv1subset2 | 0.031(7) ± 0.000 | 0.032(9) ± 0.001 | 0.028(5) ± 0.000 | 0.030(6) ± 0.000 | 0.031(8) ± 0.000 | 0.028(3) ± 0.000 | 0.028(4) ± 0.000 | 0.028(2) ± 0.000 | 0.033(10) ± 0.001 | 0.027(1) ± 0.000 • |

| rcv1subset3 | 0.031(7) ± 0.001 | 0.033(10) ± 0.001 | 0.028(5) ± 0.000 | 0.030(6) ± 0.001 | 0.031(8) ± 0.000 | 0.028(3) ± 0.000 | 0.028(4) ± 0.000 | 0.028(2) ± 0.000 | 0.032(9) ± 0.001 | 0.027(1) ± 0.000 • |

| rcv1subset4 | 0.028(7) ± 0.000 | 0.030(10) ± 0.001 | 0.026(5) ± 0.000 | 0.027(6) ± 0.000 | 0.028(8) ± 0.000 | 0.025(4) ± 0.000 | 0.025(3) ± 0.000 | 0.025(2) ± 0.000 | 0.030(9) ± 0.000 | 0.024(1) ± 0.000 • |

| rcv1subset5 | 0.030(7) ± 0.000 | 0.033(10) ± 0.001 | 0.028(5) ± 0.000 | 0.029(6) ± 0.000 | 0.030(8) ± 0.000 | 0.027(3) ± 0.000 | 0.028(4) ± 0.000 | 0.027(2) ± 0.000 | 0.032(9) ± 0.000 | 0.027(1) ± 0.000 • |

| bibtex | 0.013(4) ± 0.000 | 0.013(7) ± 0.000 | 0.013(1) ± 0.000 • | 0.013(3) ± 0.000 | 0.013(6) ± 0.000 | 0.013(8) ± 0.000 | 0.013(5) ± 0.000 | 0.013(9) ± 0.000 | 0.026(10) ± 0.001 | 0.013(2) ± 0.000 |

| Arts | 0.061(7) ± 0.001 | 0.073(10) ± 0.001 | 0.058(1) ± 0.001 • | 0.059(2) ± 0.001 | 0.063(9) ± 0.001 | 0.061(6) ± 0.001 | 0.061(5) ± 0.000 | 0.061(8) ± 0.000 | 0.060(4) ± 0.000 | 0.059(3) ± 0.001 |

| Health | 0.053(3) ± 0.000 | 0.054(5) ± 0.001 | 0.049(1) ± 0.001 • | 0.050(2) ± 0.001 | 0.054(4) ± 0.001 | 0.061(10) ± 0.001 | 0.059(9) ± 0.001 | 0.059(8) ± 0.001 | 0.057(7) ± 0.001 | 0.055(6) ± 0.001 |

| Business | 0.032(8) ± 0.000 | 0.033(10) ± 0.001 | 0.030(1) ± 0.001 • | 0.031(2) ± 0.000 | 0.032(9) ± 0.001 | 0.032(7) ± 0.001 | 0.031(5) ± 0.001 | 0.032(6) ± 0.001 | 0.031(4) ± 0.001 | 0.031(3) ± 0.000 |

| Education | 0.049(8) ± 0.000 | 0.055(10) ± 0.001 | 0.045(1) ± 0.001 • | 0.046(2) ± 0.000 | 0.050(9) ± 0.001 | 0.047(6) ± 0.001 | 0.047(7) ± 0.001 | 0.047(5) ± 0.001 | 0.047(4) ± 0.001 | 0.046(3) ± 0.001 |

| Computers | 0.045(8) ± 0.000 | 0.049(10) ± 0.001 | 0.042(1) ± 0.000 • | 0.042(2) ± 0.000 | 0.046(9) ± 0.000 | 0.045(7) ± 0.000 | 0.044(5) ± 0.001 | 0.044(6) ± 0.000 | 0.044(4) ± 0.000 | 0.043(3) ± 0.000 |

| Entertainment | 0.062(4) ± 0.001 | 0.077(10) ± 0.001 | 0.058(1) ± 0.002 • | 0.058(2) ± 0.001 | 0.064(6) ± 0.001 | 0.064(9) ± 0.001 | 0.064(7) ± 0.001 | 0.064(8) ± 0.001 | 0.063(5) ± 0.001 | 0.061(3) ± 0.001 |

| Recreation | 0.052(5) ± 0.001 | 0.068(10) ± 0.001 | 0.049(1) ± 0.001 • | 0.050(2) ± 0.001 | 0.053(9) ± 0.001 | 0.053(8) ± 0.001 | 0.052(6) ± 0.001 | 0.052(7) ± 0.001 | 0.051(4) ± 0.001 | 0.050(3) ± 0.001 |

| Society | 0.054(8) ± 0.000 | 0.056(10) ± 0.001 | 0.052(3) ± 0.000 | 0.052(4) ± 0.000 | 0.055(9) ± 0.001 | 0.054(7) ± 0.001 | 0.053(5) ± 0.001 | 0.053(6) ± 0.001 | 0.052(2) ± 0.001 | 0.051(1) ± 0.001 • |

| eurlex-dc-l | 0.005(4) ± 0.000 | 0.005(6) ± 0.000 | 0.004(1) ± 0.000 • | 0.005(3) ± 0.000 | 0.005(5) ± 0.000 | 0.005(8) ± 0.000 | 0.005(7) ± 0.000 | 0.005(9) ± 0.000 | 0.010(10) ± 0.000 | 0.004(2) ± 0.000 |

| eurlex-sm | 0.011(4) ± 0.000 | 0.011(6) ± 0.000 | 0.010(1) ± 0.000 • | 0.010(2) ± 0.000 | 0.011(5) ± 0.000 | 0.012(8) ± 0.000 | 0.012(7) ± 0.000 | 0.012(9) ± 0.000 | 0.013(10) ± 0.000 | 0.011(3) ± 0.000 |

| tmc2007-500 | 0.064(4) ± 0.000 | 0.065(5) ± 0.001 | 0.055(2) ± 0.001 | 0.056(3) ± 0.000 | 0.066(8) ± 0.000 | 0.070(10) ± 0.001 | 0.067(9) ± 0.000 | 0.066(7) ± 0.000 | 0.065(6) ± 0.001 | 0.051(1) ± 0.000 • |

| mediamill | 0.035(8) ± 0.000 | 0.037(9) ± 0.000 | 0.030(2) ± 0.000 | 0.031(7) ± 0.000 | 0.038(10) ± 0.000 | 0.031(3) ± 0.000 | 0.031(5) ± 0.000 | 0.031(6) ± 0.000 | 0.031(4) ± 0.000 | 0.028(1) ± 0.000 • |

| average rank | 5.93 | 8.32 | 2.89 | 3.89 | 7.64 | 6.11 | 5.54 | 5.46 | 6.50 | 2.71 |

| win/tie/loss | 92/32/128 | 34/28/190 | 185/21/46 | 156/20/76 | 55/25/172 | 96/28/128 | 110/34/108 | 113/30/109 | 90/25/137 | 197/21/34 |

Appendix C. Experimental Results of Compared Hubness-Reduced MLC Approaches

| Dataset (AUC micro) | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| birds | 0.736(4) ± 0.014 | 0.724(5) ± 0.021 | 0.754(1) ± 0.011 • | 0.738(3) ± 0.009 | 0.659(6) ± 0.013 | 0.565(7) ± 0.029 | 0.545(8) ± 0.014 | 0.532(9) ± 0.024 | 0.746(2) ± 0.019 |

| CAL500 | 0.749(2) ± 0.003 | 0.746(5) ± 0.003 | 0.749(4) ± 0.002 | 0.749(3) ± 0.003 | 0.713(6) ± 0.003 | 0.703(7) ± 0.003 | 0.703(8) ± 0.003 | 0.702(9) ± 0.003 | 0.757(1) ± 0.004 • |

| emotions | 0.852(4) ± 0.005 | 0.841(5) ± 0.008 | 0.861(2) ± 0.007 | 0.859(3) ± 0.007 | 0.747(6) ± 0.011 | 0.731(9) ± 0.015 | 0.734(7) ± 0.013 | 0.731(8) ± 0.013 | 0.862(1) ± 0.005 • |

| genbase | 0.993(4) ± 0.004 | 0.993(3) ± 0.004 | 0.994(2) ± 0.004 | 0.986(5) ± 0.004 | 0.970(6) ± 0.012 | 0.832(7) ± 0.043 | 0.831(8) ± 0.053 | 0.721(9) ± 0.042 | 0.996(1) ± 0.004 • |

| LLOG | 0.788(2) ± 0.005 | 0.782(3) ± 0.006 | 0.781(5) ± 0.004 | 0.782(3) ± 0.006 | 0.791(1) ± 0.002 • | 0.592(9) ± 0.040 | 0.604(8) ± 0.041 | 0.607(7) ± 0.037 | 0.767(6) ± 0.002 |

| enron | 0.801(5) ± 0.012 | 0.828(3) ± 0.005 | 0.830(2) ± 0.005 | 0.801(6) ± 0.007 | 0.825(4) ± 0.004 | 0.714(7) ± 0.005 | 0.711(8) ± 0.005 | 0.708(9) ± 0.006 | 0.860(1) ± 0.002 • |

| scene | 0.934(5) ± 0.003 | 0.943(4) ± 0.002 | 0.948(2) ± 0.002 | 0.948(3) ± 0.003 | 0.885(6) ± 0.004 | 0.868(9) ± 0.009 | 0.871(7) ± 0.009 | 0.870(8) ± 0.009 | 0.950(1) ± 0.002 • |

| yeast | 0.837(4) ± 0.003 | 0.827(5) ± 0.004 | 0.842(2) ± 0.003 | 0.842(3) ± 0.003 | 0.766(6) ± 0.004 | 0.734(9) ± 0.004 | 0.740(8) ± 0.005 | 0.740(7) ± 0.005 | 0.842(1) ± 0.004 • |

| Slashdot | 0.932(5) ± 0.008 | 0.935(3) ± 0.008 | 0.938(2) ± 0.004 | 0.935(4) ± 0.008 | 0.912(6) ± 0.009 | 0.880(9) ± 0.009 | 0.887(8) ± 0.012 | 0.890(7) ± 0.009 | 0.945(1) ± 0.004 • |

| corel5k | 0.713(6) ± 0.008 | 0.747(5) ± 0.008 | 0.752(4) ± 0.008 | 0.752(3) ± 0.009 | 0.772(2) ± 0.004 | 0.668(8) ± 0.005 | 0.668(7) ± 0.005 | 0.666(9) ± 0.004 | 0.796(1) ± 0.004 • |

| rcv1subset1 | 0.894(4) ± 0.004 | 0.911(2) ± 0.003 | 0.912(1) ± 0.003 • | 0.899(3) ± 0.004 | 0.866(6) ± 0.003 | 0.689(7) ± 0.006 | 0.687(8) ± 0.006 | 0.656(9) ± 0.008 | 0.874(5) ± 0.009 |

| rcv1subset2 | 0.895(4) ± 0.003 | 0.912(1) ± 0.003 • | 0.909(2) ± 0.004 | 0.898(3) ± 0.003 | 0.874(5) ± 0.003 | 0.672(8) ± 0.012 | 0.683(7) ± 0.015 | 0.636(9) ± 0.012 | 0.865(6) ± 0.010 |

| rcv1subset3 | 0.893(3) ± 0.003 | 0.908(2) ± 0.003 | 0.909(1) ± 0.006 • | 0.893(4) ± 0.004 | 0.871(5) ± 0.003 | 0.671(7) ± 0.006 | 0.670(8) ± 0.015 | 0.639(9) ± 0.012 | 0.861(6) ± 0.007 |

| rcv1subset4 | 0.906(4) ± 0.004 | 0.918(1) ± 0.003 • | 0.917(2) ± 0.004 | 0.909(3) ± 0.004 | 0.884(5) ± 0.002 | 0.703(8) ± 0.006 | 0.707(7) ± 0.006 | 0.671(9) ± 0.007 | 0.884(6) ± 0.010 |

| rcv1subset5 | 0.891(4) ± 0.003 | 0.907(1) ± 0.002 • | 0.904(2) ± 0.002 | 0.893(3) ± 0.003 | 0.871(5) ± 0.002 | 0.665(8) ± 0.006 | 0.690(7) ± 0.006 | 0.635(9) ± 0.007 | 0.868(6) ± 0.009 |

| bibtex | 0.838(5) ± 0.003 | 0.853(4) ± 0.003 | 0.864(1) ± 0.003 • | 0.853(3) ± 0.003 | 0.802(6) ± 0.004 | 0.489(9) ± 0.007 | 0.524(8) ± 0.006 | 0.541(7) ± 0.005 | 0.863(2) ± 0.006 |

| Arts | 0.818(4) ± 0.003 | 0.822(2) ± 0.004 | 0.819(3) ± 0.003 | 0.810(5) ± 0.004 | 0.713(6) ± 0.004 | 0.592(8) ± 0.006 | 0.603(7) ± 0.004 | 0.511(9) ± 0.015 | 0.851(1) ± 0.003 • |

| Health | 0.893(4) ± 0.004 | 0.896(2) ± 0.003 | 0.895(3) ± 0.002 | 0.884(5) ± 0.004 | 0.827(6) ± 0.004 | 0.717(8) ± 0.007 | 0.724(7) ± 0.003 | 0.546(9) ± 0.023 | 0.919(1) ± 0.002 • |

| Business | 0.927(4) ± 0.002 | 0.929(3) ± 0.002 | 0.930(2) ± 0.002 | 0.925(5) ± 0.002 | 0.908(6) ± 0.002 | 0.824(8) ± 0.005 | 0.825(7) ± 0.005 | 0.797(9) ± 0.007 | 0.947(1) ± 0.001 • |

| Education | 0.876(3) ± 0.002 | 0.876(2) ± 0.002 | 0.876(4) ± 0.002 | 0.868(5) ± 0.002 | 0.822(6) ± 0.003 | 0.744(9) ± 0.004 | 0.747(7) ± 0.003 | 0.746(8) ± 0.004 | 0.906(1) ± 0.002 • |

| Computers | 0.862(4) ± 0.003 | 0.865(2) ± 0.002 | 0.865(3) ± 0.003 | 0.853(5) ± 0.003 | 0.820(6) ± 0.003 | 0.695(8) ± 0.005 | 0.700(7) ± 0.004 | 0.582(9) ± 0.005 | 0.896(1) ± 0.003 • |

| Entertainment | 0.863(3) ± 0.002 | 0.865(2) ± 0.002 | 0.861(4) ± 0.003 | 0.854(5) ± 0.003 | 0.754(6) ± 0.004 | 0.637(8) ± 0.005 | 0.647(7) ± 0.006 | 0.481(9) ± 0.011 | 0.891(1) ± 0.002 • |

| Recreation | 0.839(4) ± 0.005 | 0.840(2) ± 0.005 | 0.839(3) ± 0.004 | 0.824(5) ± 0.004 | 0.697(6) ± 0.004 | 0.534(8) ± 0.010 | 0.541(7) ± 0.007 | 0.443(9) ± 0.013 | 0.874(1) ± 0.004 • |

| Society | 0.829(4) ± 0.003 | 0.830(2) ± 0.003 | 0.830(3) ± 0.002 | 0.823(5) ± 0.004 | 0.753(6) ± 0.004 | 0.646(7) ± 0.004 | 0.645(8) ± 0.004 | 0.553(9) ± 0.012 | 0.869(1) ± 0.002 • |

| eurlex-dc-l | 0.882(4) ± 0.004 | 0.884(2) ± 0.004 | 0.883(3) ± 0.003 | 0.872(6) ± 0.003 | 0.875(5) ± 0.003 | 0.603(7) ± 0.006 | 0.603(8) ± 0.005 | 0.267(9) ± 0.008 | 0.919(1) ± 0.005 • |

| eurlex-sm | 0.919(4) ± 0.002 | 0.921(2) ± 0.002 | 0.920(3) ± 0.002 | 0.914(6) ± 0.002 | 0.917(5) ± 0.002 | 0.704(7) ± 0.005 | 0.699(8) ± 0.005 | 0.692(9) ± 0.004 | 0.952(1) ± 0.002 • |

| tmc2007-500 | 0.928(4) ± 0.001 | 0.939(2) ± 0.001 | 0.937(3) ± 0.001 | 0.925(5) ± 0.001 | 0.891(6) ± 0.002 | 0.783(7) ± 0.004 | 0.775(8) ± 0.004 | 0.733(9) ± 0.011 | 0.958(1) ± 0.001 • |

| mediamill | 0.930(6) ± 0.001 | 0.932(3) ± 0.001 | 0.933(2) ± 0.001 | 0.932(4) ± 0.001 | 0.930(5) ± 0.000 | 0.862(7) ± 0.001 | 0.862(8) ± 0.001 | 0.861(9) ± 0.001 | 0.957(1) ± 0.001 • |

| average rank | 4.04 | 2.80 | 2.54 | 4.16 | 5.36 | 7.86 | 7.54 | 8.61 | 2.11 |

| win/tie/loss | 132/16/76 | 162/16/46 | 171/24/29 | 129/19/76 | 99/3/122 | 25/18/181 | 29/21/174 | 4/13/207 | 187/10/27 |
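Each cell in the table above has the form `mean(rank) ± std`, and the "average rank" row appears to be the mean of the parenthesised ranks over the 28 datasets. The following is a minimal sketch of reading such a cell and reproducing the MLHiKNN average rank for AUC micro. The helper name `parse_cell` is hypothetical, and the rank list was read off the last column of the table by hand.

```python
import re
from statistics import mean

# Matches cells such as "0.746(2) ± 0.019"; a trailing "•" marker is ignored.
CELL = re.compile(r"^\s*([0-9.]+)\((\d+)\)\s*±\s*([0-9.]+)")


def parse_cell(cell):
    """Split one table cell into (mean, rank, std). Hypothetical helper."""
    m = CELL.match(cell)
    if m is None:
        raise ValueError(f"unrecognised cell: {cell!r}")
    return float(m.group(1)), int(m.group(2)), float(m.group(3))


# MLHiKNN ranks for AUC micro, in table order (birds ... mediamill).
mlhiknn_ranks = [
    2, 1, 1, 1, 6, 1, 1, 1, 1, 1,   # birds ... corel5k
    5, 6, 6, 6, 6,                  # rcv1subset1 ... rcv1subset5
    2,                              # bibtex
    1, 1, 1, 1, 1, 1, 1, 1,         # Arts ... Society
    1, 1, 1, 1,                     # eurlex-dc-l ... mediamill
]

print(parse_cell("0.746(2) ± 0.019"))   # (0.746, 2, 0.019)
print(round(mean(mlhiknn_ranks), 2))    # 2.11
```

The printed average agrees with the 2.11 reported for MLHiKNN in the "average rank" row.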

| Dataset (Ranking Loss) | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| birds | 0.317(4) ± 0.020 | 0.325(5) ± 0.022 | 0.292(2) ± 0.016 | 0.310(3) ± 0.014 | 0.345(6) ± 0.018 | 0.441(7) ± 0.030 | 0.462(8) ± 0.017 | 0.469(9) ± 0.026 | 0.251(1) ± 0.020 • |

| CAL500 | 0.282(2) ± 0.003 | 0.285(6) ± 0.004 | 0.282(4) ± 0.003 | 0.282(3) ± 0.003 | 0.284(5) ± 0.003 | 0.293(7) ± 0.003 | 0.294(8) ± 0.002 | 0.294(9) ± 0.003 | 0.240(1) ± 0.005 • |

| emotions | 0.184(4) ± 0.011 | 0.201(5) ± 0.014 | 0.175(2) ± 0.009 | 0.176(3) ± 0.009 | 0.241(6) ± 0.015 | 0.256(9) ± 0.018 | 0.252(7) ± 0.014 | 0.255(8) ± 0.018 | 0.153(1) ± 0.007 • |

| genbase | 0.016(4) ± 0.006 | 0.015(2) ± 0.005 | 0.015(3) ± 0.005 | 0.028(6) ± 0.006 | 0.026(5) ± 0.010 | 0.155(7) ± 0.045 | 0.155(8) ± 0.053 | 0.245(9) ± 0.044 | 0.004(1) ± 0.003 • |

| LLOG | 0.229(3) ± 0.005 | 0.235(4) ± 0.008 | 0.235(6) ± 0.009 | 0.235(4) ± 0.008 | 0.186(1) ± 0.002 • | 0.388(9) ± 0.045 | 0.336(7) ± 0.027 | 0.343(8) ± 0.032 | 0.187(2) ± 0.002 |

| enron | 0.260(6) ± 0.018 | 0.218(4) ± 0.007 | 0.217(3) ± 0.008 | 0.255(5) ± 0.009 | 0.165(2) ± 0.005 | 0.285(7) ± 0.003 | 0.287(8) ± 0.006 | 0.290(9) ± 0.006 | 0.135(1) ± 0.003 • |

| scene | 0.102(5) ± 0.005 | 0.091(4) ± 0.004 | 0.085(2) ± 0.004 | 0.085(3) ± 0.005 | 0.121(6) ± 0.004 | 0.138(8) ± 0.011 | 0.138(9) ± 0.011 | 0.138(7) ± 0.010 | 0.071(1) ± 0.003 • |

| yeast | 0.191(4) ± 0.003 | 0.198(5) ± 0.004 | 0.186(3) ± 0.002 | 0.186(2) ± 0.003 | 0.234(6) ± 0.004 | 0.262(8) ± 0.005 | 0.261(7) ± 0.005 | 0.262(9) ± 0.005 | 0.168(1) ± 0.003 • |

| Slashdot | 0.084(6) ± 0.011 | 0.076(4) ± 0.013 | 0.071(3) ± 0.007 | 0.077(5) ± 0.013 | 0.066(2) ± 0.008 | 0.091(9) ± 0.009 | 0.087(7) ± 0.010 | 0.087(8) ± 0.006 | 0.047(1) ± 0.005 • |

| corel5k | 0.452(9) ± 0.011 | 0.408(8) ± 0.015 | 0.400(7) ± 0.014 | 0.399(6) ± 0.017 | 0.228(2) ± 0.003 | 0.331(4) ± 0.005 | 0.330(3) ± 0.005 | 0.332(5) ± 0.004 | 0.206(1) ± 0.004 • |

| rcv1subset1 | 0.143(6) ± 0.005 | 0.119(3) ± 0.004 | 0.119(2) ± 0.004 | 0.137(5) ± 0.006 | 0.120(4) ± 0.003 | 0.301(7) ± 0.008 | 0.304(8) ± 0.007 | 0.342(9) ± 0.008 | 0.118(1) ± 0.008 • |

| rcv1subset2 | 0.141(6) ± 0.005 | 0.117(2) ± 0.004 | 0.122(4) ± 0.006 | 0.136(5) ± 0.004 | 0.112(1) ± 0.002 • | 0.314(8) ± 0.015 | 0.302(7) ± 0.018 | 0.354(9) ± 0.016 | 0.118(3) ± 0.009 |

| rcv1subset3 | 0.147(6) ± 0.004 | 0.124(4) ± 0.004 | 0.124(3) ± 0.008 | 0.145(5) ± 0.004 | 0.115(1) ± 0.001 • | 0.313(7) ± 0.007 | 0.314(8) ± 0.012 | 0.347(9) ± 0.012 | 0.122(2) ± 0.005 |

| rcv1subset4 | 0.125(6) ± 0.006 | 0.108(3) ± 0.005 | 0.110(4) ± 0.006 | 0.121(5) ± 0.006 | 0.100(1) ± 0.001 • | 0.267(8) ± 0.007 | 0.262(7) ± 0.007 | 0.299(9) ± 0.007 | 0.100(2) ± 0.009 |

| rcv1subset5 | 0.150(6) ± 0.006 | 0.127(3) ± 0.004 | 0.131(4) ± 0.004 | 0.146(5) ± 0.005 | 0.115(1) ± 0.002 • | 0.317(8) ± 0.006 | 0.291(7) ± 0.006 | 0.349(9) ± 0.007 | 0.117(2) ± 0.009 |

| bibtex | 0.282(6) ± 0.006 | 0.256(4) ± 0.007 | 0.240(3) ± 0.007 | 0.257(5) ± 0.007 | 0.197(2) ± 0.005 | 0.493(9) ± 0.007 | 0.456(8) ± 0.006 | 0.447(7) ± 0.006 | 0.147(1) ± 0.004 • |

| Arts | 0.206(4) ± 0.004 | 0.202(2) ± 0.004 | 0.204(3) ± 0.004 | 0.216(5) ± 0.004 | 0.266(6) ± 0.004 | 0.407(8) ± 0.008 | 0.396(7) ± 0.005 | 0.506(9) ± 0.017 | 0.129(1) ± 0.003 • |

| Health | 0.125(4) ± 0.004 | 0.121(2) ± 0.003 | 0.122(3) ± 0.002 | 0.137(5) ± 0.004 | 0.146(6) ± 0.005 | 0.249(8) ± 0.007 | 0.242(7) ± 0.003 | 0.460(9) ± 0.026 | 0.070(1) ± 0.002 • |

| Business | 0.081(5) ± 0.003 | 0.079(4) ± 0.003 | 0.076(3) ± 0.002 | 0.084(6) ± 0.004 | 0.066(2) ± 0.001 | 0.126(8) ± 0.004 | 0.123(7) ± 0.004 | 0.146(9) ± 0.005 | 0.037(1) ± 0.001 • |

| Education | 0.156(3) ± 0.003 | 0.156(2) ± 0.002 | 0.156(4) ± 0.002 | 0.167(6) ± 0.003 | 0.162(5) ± 0.002 | 0.251(9) ± 0.004 | 0.249(8) ± 0.003 | 0.249(7) ± 0.004 | 0.089(1) ± 0.002 • |

| Computers | 0.166(5) ± 0.003 | 0.160(3) ± 0.003 | 0.162(4) ± 0.003 | 0.174(6) ± 0.005 | 0.144(2) ± 0.004 | 0.253(8) ± 0.005 | 0.245(7) ± 0.004 | 0.390(9) ± 0.006 | 0.086(1) ± 0.002 • |

| Entertainment | 0.174(3) ± 0.003 | 0.171(2) ± 0.002 | 0.175(4) ± 0.004 | 0.183(5) ± 0.005 | 0.224(6) ± 0.003 | 0.345(8) ± 0.005 | 0.338(7) ± 0.005 | 0.530(9) ± 0.011 | 0.111(1) ± 0.002 • |

| Recreation | 0.197(4) ± 0.006 | 0.194(2) ± 0.006 | 0.194(3) ± 0.005 | 0.212(5) ± 0.005 | 0.277(6) ± 0.005 | 0.462(8) ± 0.013 | 0.453(7) ± 0.008 | 0.577(9) ± 0.014 | 0.115(1) ± 0.004 • |

| Society | 0.189(4) ± 0.004 | 0.187(2) ± 0.004 | 0.188(3) ± 0.004 | 0.196(5) ± 0.005 | 0.216(6) ± 0.004 | 0.335(8) ± 0.004 | 0.334(7) ± 0.006 | 0.457(9) ± 0.013 | 0.105(1) ± 0.002 • |

| eurlex-dc-l | 0.206(5) ± 0.006 | 0.204(3) ± 0.006 | 0.206(4) ± 0.005 | 0.224(6) ± 0.005 | 0.118(2) ± 0.003 | 0.377(7) ± 0.005 | 0.378(8) ± 0.004 | 0.735(9) ± 0.009 | 0.078(1) ± 0.005 • |

| eurlex-sm | 0.138(5) ± 0.003 | 0.136(3) ± 0.003 | 0.137(4) ± 0.003 | 0.146(6) ± 0.003 | 0.084(2) ± 0.001 | 0.317(7) ± 0.005 | 0.323(8) ± 0.004 | 0.330(9) ± 0.004 | 0.050(1) ± 0.002 • |

| tmc2007-500 | 0.092(4) ± 0.001 | 0.077(2) ± 0.001 | 0.081(3) ± 0.001 | 0.095(5) ± 0.001 | 0.105(6) ± 0.001 | 0.209(7) ± 0.003 | 0.217(8) ± 0.004 | 0.255(9) ± 0.010 | 0.044(1) ± 0.001 • |

| mediamill | 0.092(6) ± 0.001 | 0.087(3) ± 0.001 | 0.088(4) ± 0.001 | 0.088(5) ± 0.001 | 0.059(2) ± 0.000 | 0.120(7) ± 0.002 | 0.120(8) ± 0.001 | 0.121(9) ± 0.001 | 0.038(1) ± 0.001 • |

| average rank | 4.82 | 3.45 | 3.46 | 4.84 | 3.64 | 7.68 | 7.36 | 8.54 | 1.21 |

| win/tie/loss | 106/21/97 | 141/24/59 | 143/29/52 | 105/20/99 | 143/12/69 | 27/20/177 | 34/24/166 | 8/17/199 | 212/11/1 |

| Dataset (F1 macro) | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| birds | 0.034(9) ± 0.019 | 0.058(7) ± 0.024 | 0.072(6) ± 0.019 | 0.052(8) ± 0.018 | 0.157(2) ± 0.027 | 0.118(3) ± 0.022 | 0.092(4) ± 0.034 | 0.082(5) ± 0.027 | 0.258(1) ± 0.069 • |

| CAL500 | 0.083(5) ± 0.002 | 0.075(9) ± 0.004 | 0.083(2) ± 0.002 | 0.083(4) ± 0.003 | 0.103(1) ± 0.003 • | 0.080(6) ± 0.005 | 0.080(8) ± 0.005 | 0.080(7) ± 0.004 | 0.083(3) ± 0.003 |

| emotions | 0.608(4) ± 0.011 | 0.544(9) ± 0.009 | 0.626(2) ± 0.011 | 0.624(3) ± 0.013 | 0.602(7) ± 0.023 | 0.604(5) ± 0.023 | 0.595(8) ± 0.024 | 0.603(6) ± 0.020 | 0.651(1) ± 0.013 • |

| genbase | 0.639(8) ± 0.058 | 0.648(7) ± 0.062 | 0.649(6) ± 0.059 | 0.567(9) ± 0.054 | 0.913(2) ± 0.030 | 0.743(3) ± 0.063 | 0.740(4) ± 0.083 | 0.701(5) ± 0.097 | 0.954(1) ± 0.017 • |

| LLOG | 0.141(1) ± 0.012 • | 0.115(5) ± 0.024 | 0.118(3) ± 0.028 | 0.115(5) ± 0.024 | 0.116(4) ± 0.005 | 0.124(2) ± 0.013 | 0.095(9) ± 0.017 | 0.103(7) ± 0.017 | 0.097(8) ± 0.016 |

| enron | 0.067(8) ± 0.008 | 0.084(7) ± 0.008 | 0.090(6) ± 0.006 | 0.067(9) ± 0.008 | 0.131(2) ± 0.007 | 0.100(4) ± 0.009 | 0.101(3) ± 0.005 | 0.092(5) ± 0.007 | 0.131(1) ± 0.009 • |

| scene | 0.643(9) ± 0.015 | 0.664(8) ± 0.010 | 0.696(7) ± 0.010 | 0.699(6) ± 0.011 | 0.726(5) ± 0.007 | 0.738(3) ± 0.012 | 0.739(2) ± 0.012 | 0.734(4) ± 0.009 | 0.749(1) ± 0.008 • |

| yeast | 0.347(6) ± 0.006 | 0.285(9) ± 0.009 | 0.344(8) ± 0.006 | 0.344(7) ± 0.006 | 0.385(2) ± 0.010 | 0.356(3) ± 0.010 | 0.356(5) ± 0.010 | 0.356(4) ± 0.008 | 0.420(1) ± 0.009 • |

| Slashdot | 0.081(8) ± 0.002 | 0.090(7) ± 0.014 | 0.097(6) ± 0.017 | 0.080(9) ± 0.001 | 0.149(2) ± 0.036 | 0.115(5) ± 0.026 | 0.117(4) ± 0.026 | 0.126(3) ± 0.016 | 0.163(1) ± 0.025 • |

| corel5k | 0.001(9) ± 0.001 | 0.006(8) ± 0.001 | 0.007(7) ± 0.001 | 0.007(6) ± 0.001 | 0.038(1) ± 0.006 • | 0.016(4) ± 0.003 | 0.016(5) ± 0.003 | 0.017(3) ± 0.003 | 0.026(2) ± 0.004 |

| rcv1subset1 | 0.074(9) ± 0.006 | 0.091(7) ± 0.005 | 0.097(6) ± 0.006 | 0.078(8) ± 0.004 | 0.181(1) ± 0.010 • | 0.145(4) ± 0.006 | 0.145(3) ± 0.004 | 0.136(5) ± 0.006 | 0.152(2) ± 0.014 |

| rcv1subset2 | 0.067(9) ± 0.003 | 0.077(7) ± 0.003 | 0.083(6) ± 0.003 | 0.073(8) ± 0.002 | 0.173(1) ± 0.011 • | 0.125(3) ± 0.005 | 0.123(4) ± 0.008 | 0.111(5) ± 0.006 | 0.133(2) ± 0.015 |

| rcv1subset3 | 0.060(9) ± 0.001 | 0.074(7) ± 0.002 | 0.081(6) ± 0.010 | 0.067(8) ± 0.003 | 0.164(1) ± 0.006 • | 0.120(3) ± 0.008 | 0.124(2) ± 0.012 | 0.111(5) ± 0.008 | 0.120(4) ± 0.010 |

| rcv1subset4 | 0.081(9) ± 0.005 | 0.094(7) ± 0.006 | 0.099(6) ± 0.006 | 0.086(8) ± 0.005 | 0.178(1) ± 0.008 • | 0.135(3) ± 0.008 | 0.132(4) ± 0.010 | 0.128(5) ± 0.009 | 0.149(2) ± 0.013 |

| rcv1subset5 | 0.069(9) ± 0.003 | 0.075(7) ± 0.004 | 0.081(6) ± 0.003 | 0.072(8) ± 0.004 | 0.163(1) ± 0.006 • | 0.117(3) ± 0.011 | 0.107(5) ± 0.003 | 0.107(4) ± 0.008 | 0.134(2) ± 0.017 |

| bibtex | 0.060(9) ± 0.002 | 0.069(8) ± 0.004 | 0.090(6) ± 0.005 | 0.079(7) ± 0.004 | 0.148(2) ± 0.006 | 0.122(4) ± 0.006 | 0.131(3) ± 0.005 | 0.112(5) ± 0.003 | 0.187(1) ± 0.006 • |

| Arts | 0.140(7) ± 0.010 | 0.140(6) ± 0.011 | 0.148(5) ± 0.010 | 0.112(8) ± 0.011 | 0.223(2) ± 0.013 | 0.188(3) ± 0.009 | 0.178(4) ± 0.015 | 0.004(9) ± 0.006 | 0.263(1) ± 0.015 • |

| Health | 0.269(7) ± 0.016 | 0.278(5) ± 0.015 | 0.278(6) ± 0.011 | 0.216(8) ± 0.012 | 0.360(2) ± 0.019 | 0.336(3) ± 0.009 | 0.311(4) ± 0.017 | 0.026(9) ± 0.003 | 0.404(1) ± 0.011 • |

| Business | 0.141(7) ± 0.008 | 0.147(6) ± 0.010 | 0.154(5) ± 0.010 | 0.124(8) ± 0.007 | 0.239(2) ± 0.010 | 0.192(3) ± 0.010 | 0.172(4) ± 0.011 | 0.039(9) ± 0.001 | 0.267(1) ± 0.005 • |

| Education | 0.145(6) ± 0.008 | 0.146(5) ± 0.008 | 0.144(7) ± 0.008 | 0.114(9) ± 0.012 | 0.220(2) ± 0.009 | 0.167(3) ± 0.012 | 0.164(4) ± 0.014 | 0.137(8) ± 0.009 | 0.278(1) ± 0.015 • |

| Computers | 0.142(7) ± 0.011 | 0.144(6) ± 0.012 | 0.160(5) ± 0.008 | 0.121(8) ± 0.011 | 0.255(2) ± 0.014 | 0.201(3) ± 0.015 | 0.182(4) ± 0.012 | 0.023(9) ± 0.002 | 0.294(1) ± 0.010 • |

| Entertainment | 0.257(7) ± 0.008 | 0.260(6) ± 0.008 | 0.263(5) ± 0.007 | 0.215(8) ± 0.011 | 0.347(2) ± 0.012 | 0.304(3) ± 0.013 | 0.285(4) ± 0.012 | 0.006(9) ± 0.008 | 0.390(1) ± 0.008 • |

| Recreation | 0.240(7) ± 0.022 | 0.247(6) ± 0.018 | 0.254(5) ± 0.018 | 0.191(8) ± 0.016 | 0.352(2) ± 0.014 | 0.315(3) ± 0.015 | 0.297(4) ± 0.024 | 0.002(9) ± 0.003 | 0.390(1) ± 0.016 • |

| Society | 0.158(7) ± 0.008 | 0.161(6) ± 0.008 | 0.164(5) ± 0.008 | 0.117(8) ± 0.013 | 0.246(2) ± 0.010 | 0.230(3) ± 0.013 | 0.216(4) ± 0.013 | 0.021(9) ± 0.003 | 0.291(1) ± 0.012 • |

| eurlex-dc-l | 0.148(3) ± 0.003 | 0.147(4) ± 0.004 | 0.145(5) ± 0.003 | 0.118(8) ± 0.005 | 0.177(2) ± 0.005 | 0.140(6) ± 0.004 | 0.132(7) ± 0.004 | 0.001(9) ± 0.001 | 0.267(1) ± 0.010 • |

| eurlex-sm | 0.252(5) ± 0.005 | 0.253(4) ± 0.005 | 0.250(6) ± 0.005 | 0.234(8) ± 0.004 | 0.296(2) ± 0.007 | 0.259(3) ± 0.004 | 0.248(7) ± 0.006 | 0.232(9) ± 0.006 | 0.388(1) ± 0.009 • |

| tmc2007-500 | 0.239(9) ± 0.004 | 0.289(7) ± 0.002 | 0.291(6) ± 0.004 | 0.248(8) ± 0.006 | 0.464(2) ± 0.010 | 0.443(3) ± 0.009 | 0.429(4) ± 0.005 | 0.395(5) ± 0.008 | 0.603(1) ± 0.007 • |

| mediamill | 0.085(6) ± 0.002 | 0.055(9) ± 0.002 | 0.084(8) ± 0.001 | 0.085(7) ± 0.002 | 0.137(2) ± 0.006 | 0.103(3) ± 0.005 | 0.100(4) ± 0.003 | 0.095(5) ± 0.003 | 0.293(1) ± 0.005 • |

| average rank | 7.11 | 6.77 | 5.61 | 7.48 | 2.11 | 3.46 | 4.54 | 6.32 | 1.61 |

| win/tie/loss | 41/22/161 | 53/20/151 | 78/31/115 | 31/22/171 | 190/10/24 | 137/30/57 | 111/39/74 | 58/34/132 | 196/18/10 |

| Dataset (F1 micro) | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| birds | 0.074(9) ± 0.042 | 0.130(7) ± 0.058 | 0.159(5) ± 0.034 | 0.112(8) ± 0.037 | 0.230(2) ± 0.050 | 0.205(3) ± 0.035 | 0.164(4) ± 0.054 | 0.146(6) ± 0.045 | 0.358(1) ± 0.061 • |

| CAL500 | 0.321(6) ± 0.006 | 0.311(9) ± 0.007 | 0.316(8) ± 0.005 | 0.317(7) ± 0.006 | 0.338(2) ± 0.009 | 0.330(3) ± 0.009 | 0.327(5) ± 0.009 | 0.330(4) ± 0.009 | 0.342(1) ± 0.014 • |

| emotions | 0.635(6) ± 0.013 | 0.592(9) ± 0.013 | 0.654(2) ± 0.013 | 0.652(3) ± 0.014 | 0.629(8) ± 0.020 | 0.641(4) ± 0.019 | 0.629(7) ± 0.028 | 0.639(5) ± 0.015 | 0.672(1) ± 0.011 • |

| genbase | 0.839(7) ± 0.040 | 0.846(6) ± 0.040 | 0.850(5) ± 0.036 | 0.779(9) ± 0.035 | 0.949(2) ± 0.020 | 0.851(4) ± 0.037 | 0.855(3) ± 0.046 | 0.819(8) ± 0.047 | 0.965(1) ± 0.014 • |

| LLOG | 0.466(1) ± 0.023 • | 0.394(4) ± 0.062 | 0.407(3) ± 0.063 | 0.394(4) ± 0.062 | 0.427(2) ± 0.013 | 0.360(7) ± 0.028 | 0.327(9) ± 0.053 | 0.351(8) ± 0.039 | 0.363(6) ± 0.059 |

| enron | 0.219(8) ± 0.022 | 0.314(7) ± 0.016 | 0.321(6) ± 0.014 | 0.219(9) ± 0.027 | 0.476(1) ± 0.011 • | 0.458(3) ± 0.017 | 0.453(4) ± 0.013 | 0.414(5) ± 0.021 | 0.460(2) ± 0.013 |

| scene | 0.649(9) ± 0.011 | 0.663(8) ± 0.009 | 0.696(7) ± 0.009 | 0.700(6) ± 0.009 | 0.720(5) ± 0.007 | 0.734(3) ± 0.011 | 0.736(2) ± 0.011 | 0.730(4) ± 0.008 | 0.743(1) ± 0.008 • |

| yeast | 0.628(6) ± 0.004 | 0.585(9) ± 0.007 | 0.627(8) ± 0.004 | 0.627(7) ± 0.005 | 0.635(5) ± 0.006 | 0.638(2) ± 0.004 | 0.637(4) ± 0.005 | 0.637(3) ± 0.005 | 0.643(1) ± 0.006 • |

| Slashdot | 0.843(4) ± 0.007 | 0.843(3) ± 0.007 | 0.844(1) ± 0.007 • | 0.843(6) ± 0.008 | 0.843(2) ± 0.011 | 0.833(8) ± 0.014 | 0.832(9) ± 0.012 | 0.835(7) ± 0.009 | 0.843(5) ± 0.008 |

| corel5k | 0.003(9) ± 0.003 | 0.020(8) ± 0.007 | 0.026(7) ± 0.007 | 0.027(6) ± 0.010 | 0.094(1) ± 0.013 • | 0.031(3) ± 0.008 | 0.028(5) ± 0.007 | 0.030(4) ± 0.005 | 0.050(2) ± 0.011 |

| rcv1subset1 | 0.203(9) ± 0.007 | 0.240(7) ± 0.004 | 0.246(6) ± 0.005 | 0.209(8) ± 0.005 | 0.345(1) ± 0.011 • | 0.299(3) ± 0.014 | 0.306(2) ± 0.011 | 0.286(5) ± 0.010 | 0.296(4) ± 0.021 |

| rcv1subset2 | 0.275(8) ± 0.008 | 0.288(7) ± 0.007 | 0.295(6) ± 0.006 | 0.274(9) ± 0.005 | 0.367(1) ± 0.016 • | 0.329(3) ± 0.011 | 0.327(4) ± 0.012 | 0.314(5) ± 0.012 | 0.334(2) ± 0.012 |

| rcv1subset3 | 0.258(9) ± 0.007 | 0.281(7) ± 0.007 | 0.290(6) ± 0.016 | 0.263(8) ± 0.009 | 0.362(1) ± 0.013 • | 0.324(4) ± 0.010 | 0.326(3) ± 0.019 | 0.318(5) ± 0.014 | 0.333(2) ± 0.009 |

| rcv1subset4 | 0.336(9) ± 0.007 | 0.355(7) ± 0.006 | 0.359(6) ± 0.006 | 0.339(8) ± 0.005 | 0.430(1) ± 0.007 • | 0.396(2) ± 0.010 | 0.392(4) ± 0.015 | 0.387(5) ± 0.012 | 0.394(3) ± 0.019 |

| rcv1subset5 | 0.284(8) ± 0.010 | 0.297(7) ± 0.009 | 0.299(6) ± 0.008 | 0.280(9) ± 0.007 | 0.369(1) ± 0.009 • | 0.341(3) ± 0.014 | 0.329(4) ± 0.011 | 0.321(5) ± 0.011 | 0.348(2) ± 0.014 |

| bibtex | 0.241(9) ± 0.006 | 0.252(8) ± 0.006 | 0.295(5) ± 0.006 | 0.272(7) ± 0.006 | 0.328(2) ± 0.004 | 0.312(4) ± 0.004 | 0.323(3) ± 0.008 | 0.292(6) ± 0.007 | 0.367(1) ± 0.006 • |

| Arts | 0.263(7) ± 0.016 | 0.267(5) ± 0.017 | 0.267(6) ± 0.016 | 0.234(8) ± 0.020 | 0.340(2) ± 0.017 | 0.310(3) ± 0.015 | 0.299(4) ± 0.021 | 0.012(9) ± 0.020 | 0.369(1) ± 0.014 • |

| Health | 0.491(7) ± 0.012 | 0.502(5) ± 0.013 | 0.502(6) ± 0.012 | 0.463(8) ± 0.013 | 0.531(2) ± 0.017 | 0.525(3) ± 0.011 | 0.519(4) ± 0.014 | 0.258(9) ± 0.059 | 0.574(1) ± 0.011 • |

| Business | 0.705(7) ± 0.005 | 0.707(4) ± 0.005 | 0.705(6) ± 0.004 | 0.696(8) ± 0.005 | 0.714(2) ± 0.006 | 0.708(3) ± 0.004 | 0.705(5) ± 0.004 | 0.670(9) ± 0.004 | 0.722(1) ± 0.004 • |

| Education | 0.288(7) ± 0.012 | 0.290(5) ± 0.011 | 0.290(6) ± 0.011 | 0.254(9) ± 0.012 | 0.357(2) ± 0.013 | 0.310(3) ± 0.014 | 0.301(4) ± 0.016 | 0.267(8) ± 0.018 | 0.378(1) ± 0.014 • |

| Computers | 0.468(4) ± 0.004 | 0.463(7) ± 0.004 | 0.463(6) ± 0.003 | 0.446(8) ± 0.013 | 0.490(2) ± 0.008 | 0.480(3) ± 0.006 | 0.466(5) ± 0.009 | 0.362(9) ± 0.033 | 0.511(1) ± 0.005 • |

| Entertainment | 0.400(7) ± 0.009 | 0.407(5) ± 0.010 | 0.405(6) ± 0.009 | 0.349(8) ± 0.011 | 0.472(2) ± 0.014 | 0.457(3) ± 0.011 | 0.435(4) ± 0.008 | 0.021(9) ± 0.032 | 0.505(1) ± 0.008 • |

| Recreation | 0.345(7) ± 0.018 | 0.350(6) ± 0.018 | 0.357(5) ± 0.016 | 0.285(8) ± 0.018 | 0.428(2) ± 0.017 | 0.411(3) ± 0.015 | 0.399(4) ± 0.016 | 0.005(9) ± 0.009 | 0.454(1) ± 0.012 • |

| Society | 0.385(7) ± 0.014 | 0.389(5) ± 0.012 | 0.386(6) ± 0.011 | 0.354(8) ± 0.016 | 0.433(2) ± 0.010 | 0.430(3) ± 0.014 | 0.412(4) ± 0.014 | 0.236(9) ± 0.047 | 0.468(1) ± 0.010 • |

| eurlex-dc-l | 0.390(3) ± 0.003 | 0.390(4) ± 0.003 | 0.389(5) ± 0.004 | 0.333(8) ± 0.009 | 0.401(2) ± 0.006 | 0.370(6) ± 0.005 | 0.359(7) ± 0.005 | 0.026(9) ± 0.027 | 0.479(1) ± 0.007 • |

| eurlex-sm | 0.504(6) ± 0.006 | 0.506(4) ± 0.006 | 0.505(5) ± 0.005 | 0.490(8) ± 0.003 | 0.533(2) ± 0.006 | 0.516(3) ± 0.005 | 0.502(7) ± 0.007 | 0.489(9) ± 0.005 | 0.589(1) ± 0.007 • |

| tmc2007-500 | 0.577(8) ± 0.003 | 0.606(7) ± 0.003 | 0.607(6) ± 0.003 | 0.574(9) ± 0.006 | 0.634(5) ± 0.006 | 0.658(2) ± 0.004 | 0.652(3) ± 0.005 | 0.635(4) ± 0.006 | 0.726(1) ± 0.003 • |

| mediamill | 0.573(6) ± 0.002 | 0.548(9) ± 0.002 | 0.571(7) ± 0.002 | 0.571(8) ± 0.002 | 0.583(2) ± 0.004 | 0.577(3) ± 0.003 | 0.575(4) ± 0.003 | 0.574(5) ± 0.005 | 0.635(1) ± 0.001 • |

| average rank | 6.89 | 6.41 | 5.61 | 7.59 | 2.29 | 3.46 | 4.54 | 6.54 | 1.68 |

| win/tie/loss | 46/25/153 | 59/26/139 | 78/36/110 | 30/26/168 | 175/23/26 | 129/44/51 | 103/47/74 | 53/38/133 | 190/25/9 |

| Dataset (Hamming Loss) | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| birds | 0.144(7) ± 0.007 | 0.141(3) ± 0.006 | 0.139(2) ± 0.006 | 0.142(5) ± 0.006 | 0.145(8) ± 0.007 | 0.141(4) ± 0.007 | 0.144(6) ± 0.009 | 0.146(9) ± 0.009 | 0.137(1) ± 0.008 • |

| CAL500 | 0.187(3) ± 0.002 | 0.187(2) ± 0.001 | 0.188(5) ± 0.001 | 0.188(4) ± 0.001 | 0.192(9) ± 0.001 | 0.189(7) ± 0.001 | 0.189(6) ± 0.001 | 0.189(8) ± 0.002 | 0.187(1) ± 0.001 • |

| emotions | 0.198(4) ± 0.007 | 0.216(9) ± 0.007 | 0.192(2) ± 0.007 | 0.193(3) ± 0.009 | 0.211(8) ± 0.006 | 0.203(5) ± 0.010 | 0.207(7) ± 0.010 | 0.203(6) ± 0.006 | 0.190(1) ± 0.005 • |

| genbase | 0.026(7) ± 0.007 | 0.024(6) ± 0.006 | 0.024(5) ± 0.006 | 0.035(9) ± 0.006 | 0.009(2) ± 0.003 | 0.023(4) ± 0.005 | 0.022(3) ± 0.006 | 0.027(8) ± 0.006 | 0.006(1) ± 0.002 • |

| LLOG | 0.192(3) ± 0.004 | 0.195(5) ± 0.005 | 0.193(4) ± 0.006 | 0.195(5) ± 0.005 | 0.185(1) ± 0.004 • | 0.253(9) ± 0.037 | 0.215(8) ± 0.021 | 0.213(7) ± 0.021 | 0.188(2) ± 0.006 |

| enron | 0.090(8) ± 0.001 | 0.085(7) ± 0.002 | 0.085(6) ± 0.002 | 0.090(9) ± 0.001 | 0.080(4) ± 0.001 | 0.079(2) ± 0.001 | 0.079(3) ± 0.001 | 0.082(5) ± 0.001 | 0.075(1) ± 0.001 • |

| scene | 0.103(9) ± 0.003 | 0.097(8) ± 0.002 | 0.091(6) ± 0.002 | 0.090(5) ± 0.003 | 0.092(7) ± 0.002 | 0.087(3) ± 0.003 | 0.086(2) ± 0.002 | 0.087(4) ± 0.003 | 0.084(1) ± 0.003 • |

| yeast | 0.199(6) ± 0.003 | 0.208(9) ± 0.002 | 0.197(1) ± 0.002 • | 0.197(3) ± 0.003 | 0.204(8) ± 0.002 | 0.197(2) ± 0.003 | 0.198(4) ± 0.002 | 0.198(5) ± 0.002 | 0.200(7) ± 0.003 |

| Slashdot | 0.028(4) ± 0.001 | 0.028(3) ± 0.001 | 0.028(1) ± 0.001 • | 0.028(5) ± 0.001 | 0.028(2) ± 0.002 | 0.030(7) ± 0.003 | 0.030(9) ± 0.003 | 0.030(8) ± 0.002 | 0.029(6) ± 0.001 |

| corel5k | 0.021(4) ± 0.000 | 0.021(6) ± 0.000 | 0.021(8) ± 0.000 | 0.021(7) ± 0.000 | 0.022(9) ± 0.000 | 0.021(3) ± 0.000 | 0.021(2) ± 0.000 | 0.021(5) ± 0.000 | 0.021(1) ± 0.000 • |

| rcv1subset1 | 0.034(9) ± 0.000 | 0.033(2) ± 0.000 | 0.033(5) ± 0.000 | 0.034(8) ± 0.000 | 0.033(6) ± 0.000 | 0.033(3) ± 0.000 | 0.033(4) ± 0.000 | 0.033(7) ± 0.000 | 0.032(1) ± 0.000 • |

| rcv1subset2 | 0.028(7) ± 0.000 | 0.028(3) ± 0.000 | 0.028(2) ± 0.000 | 0.028(5) ± 0.000 | 0.028(9) ± 0.000 | 0.028(6) ± 0.000 | 0.028(4) ± 0.000 | 0.028(8) ± 0.000 | 0.027(1) ± 0.000 • |

| rcv1subset3 | 0.028(8) ± 0.000 | 0.027(3) ± 0.000 | 0.027(2) ± 0.000 | 0.028(4) ± 0.000 | 0.028(9) ± 0.000 | 0.028(6) ± 0.000 | 0.028(5) ± 0.000 | 0.028(7) ± 0.000 | 0.027(1) ± 0.000 • |

| rcv1subset4 | 0.025(9) ± 0.000 | 0.025(2) ± 0.000 | 0.025(3) ± 0.000 | 0.025(7) ± 0.000 | 0.025(8) ± 0.000 | 0.025(4) ± 0.000 | 0.025(6) ± 0.000 | 0.025(5) ± 0.000 | 0.024(1) ± 0.000 • |

| rcv1subset5 | 0.027(7) ± 0.000 | 0.027(2) ± 0.000 | 0.027(3) ± 0.000 | 0.027(4) ± 0.000 | 0.028(9) ± 0.000 | 0.027(5) ± 0.000 | 0.027(6) ± 0.000 | 0.027(8) ± 0.000 | 0.027(1) ± 0.000 • |

| bibtex | 0.013(9) ± 0.000 | 0.013(8) ± 0.000 | 0.013(1) ± 0.000 • | 0.013(5) ± 0.000 | 0.013(6) ± 0.000 | 0.013(4) ± 0.000 | 0.013(3) ± 0.000 | 0.013(7) ± 0.000 | 0.013(2) ± 0.000 |

| Arts | 0.061(5) ± 0.001 | 0.061(4) ± 0.001 | 0.061(7) ± 0.001 | 0.063(8) ± 0.001 | 0.061(3) ± 0.000 | 0.060(2) ± 0.001 | 0.061(5) ± 0.001 | 0.069(9) ± 0.001 | 0.059(1) ± 0.001 • |

| Health | 0.061(7) ± 0.001 | 0.060(6) ± 0.001 | 0.060(5) ± 0.001 | 0.065(8) ± 0.001 | 0.059(4) ± 0.001 | 0.058(2) ± 0.001 | 0.058(3) ± 0.001 | 0.079(9) ± 0.002 | 0.055(1) ± 0.001 • |

| Business | 0.032(7) ± 0.001 | 0.031(5) ± 0.001 | 0.031(4) ± 0.000 | 0.033(8) ± 0.001 | 0.031(2) ± 0.001 | 0.031(3) ± 0.001 | 0.032(6) ± 0.001 | 0.034(9) ± 0.001 | 0.031(1) ± 0.000 • |

| Education | 0.047(6) ± 0.001 | 0.047(4) ± 0.000 | 0.047(5) ± 0.000 | 0.049(9) ± 0.001 | 0.047(7) ± 0.001 | 0.047(2) ± 0.000 | 0.047(3) ± 0.001 | 0.048(8) ± 0.000 | 0.046(1) ± 0.001 • |

| Computers | 0.045(7) ± 0.000 | 0.045(6) ± 0.000 | 0.044(4) ± 0.000 | 0.046(8) ± 0.000 | 0.044(3) ± 0.001 | 0.044(2) ± 0.000 | 0.045(5) ± 0.000 | 0.055(9) ± 0.002 | 0.043(1) ± 0.000 • |

| Entertainment | 0.064(5) ± 0.001 | 0.064(4) ± 0.001 | 0.065(6) ± 0.001 | 0.067(8) ± 0.001 | 0.064(3) ± 0.001 | 0.063(2) ± 0.001 | 0.065(7) ± 0.001 | 0.083(9) ± 0.002 | 0.061(1) ± 0.001 • |

| Recreation | 0.053(7) ± 0.001 | 0.053(6) ± 0.001 | 0.053(5) ± 0.001 | 0.055(8) ± 0.001 | 0.052(3) ± 0.001 | 0.051(2) ± 0.001 | 0.052(4) ± 0.001 | 0.065(9) ± 0.000 | 0.050(1) ± 0.001 • |

| Society | 0.054(7) ± 0.001 | 0.053(5) ± 0.001 | 0.053(6) ± 0.000 | 0.055(8) ± 0.001 | 0.053(3) ± 0.001 | 0.052(2) ± 0.000 | 0.053(4) ± 0.001 | 0.063(9) ± 0.002 | 0.051(1) ± 0.001 • |

| eurlex-dc-l | 0.005(4) ± 0.000 | 0.005(3) ± 0.000 | 0.005(5) ± 0.000 | 0.005(8) ± 0.000 | 0.005(2) ± 0.000 | 0.005(6) ± 0.000 | 0.005(7) ± 0.000 | 0.006(9) ± 0.000 | 0.004(1) ± 0.000 • |

| eurlex-sm | 0.012(6) ± 0.000 | 0.012(4) ± 0.000 | 0.012(5) ± 0.000 | 0.012(8) ± 0.000 | 0.012(3) ± 0.000 | 0.012(2) ± 0.000 | 0.012(7) ± 0.000 | 0.012(9) ± 0.000 | 0.011(1) ± 0.000 • |

| tmc2007-500 | 0.070(8) ± 0.001 | 0.066(4) ± 0.000 | 0.067(5) ± 0.001 | 0.070(9) ± 0.001 | 0.067(7) ± 0.000 | 0.063(2) ± 0.001 | 0.064(3) ± 0.000 | 0.067(6) ± 0.001 | 0.051(1) ± 0.000 • |

| mediamill | 0.031(7) ± 0.000 | 0.031(9) ± 0.000 | 0.030(4) ± 0.000 | 0.030(6) ± 0.000 | 0.031(8) ± 0.000 | 0.030(2) ± 0.000 | 0.030(3) ± 0.000 | 0.030(5) ± 0.000 | 0.028(1) ± 0.000 • |

| average rank | 6.45 | 4.95 | 4.18 | 6.59 | 5.46 | 3.68 | 4.84 | 7.39 | 1.46 |

| win/tie/loss | 50/46/128 | 92/44/88 | 109/47/68 | 53/36/135 | 80/34/110 | 118/49/57 | 89/56/79 | 34/38/152 | 200/16/8 |

| Metric | Friedman Statistic | # Algorithms | # Datasets | Critical Value |

|---|---|---|---|---|

| AUC macro | 203.401 | 9 | 28 | 1.981 |

| AUC micro | 181.614 | 9 | 28 | 1.981 |

| Ranking Loss | 172.663 | 9 | 28 | 1.981 |

| F1 macro | 143.012 | 9 | 28 | 1.981 |

| F1 micro | 134.357 | 9 | 28 | 1.981 |

| Hamming Loss | 95.295 | 9 | 28 | 1.981 |
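As a rough cross-check of the Friedman table, the statistic can be recomputed from an average-rank row. The sketch below is an illustration, not the authors' code. It assumes the textbook Friedman chi-square formula and the Iman-Davenport F correction, and it takes as input the rounded average ranks from the table just above, which appears to belong to the Hamming Loss comparison. The function names are hypothetical. Because the inputs are rounded to two decimals, the result can only approximate the listed value.

```python
def friedman_chi2(avg_ranks, n_datasets):
    """Friedman chi-square statistic from the average ranks of k algorithms."""
    k = len(avg_ranks)
    sum_sq = sum(r * r for r in avg_ranks)
    return 12.0 * n_datasets / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4.0)


def iman_davenport(chi2, k, n_datasets):
    """F-distributed correction of the Friedman statistic (Iman-Davenport)."""
    return (n_datasets - 1) * chi2 / (n_datasets * (k - 1) - chi2)


# Rounded average ranks copied from the preceding table (assumed: Hamming Loss).
hamming_avg_ranks = [6.45, 4.95, 4.18, 6.59, 5.46, 3.68, 4.84, 7.39, 1.46]
N = 28  # number of datasets, as listed in the Friedman table

chi2 = friedman_chi2(hamming_avg_ranks, N)
f_f = iman_davenport(chi2, len(hamming_avg_ranks), N)
print(f"chi-square form: {chi2:.3f}")
print(f"Iman-Davenport form: {f_f:.3f}")
```

With these rounded inputs the chi-square form lands close to the 95.295 listed for Hamming Loss. This suggests, but does not prove, that the table reports that form. Under either form the statistic exceeds the critical value of 1.981, so the hypothesis that all nine algorithms perform equally is rejected for this metric.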

| AUC micro | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| BRkNNa | - | 0.998 | 1.000 | 0.219 | 1.83 | 3.73 | 3.73 | 3.73 | 0.998 |

| BRkNNa-dsl | 0.002 | - | 0.868 | 0.008 | 4.10 | 3.73 | 3.73 | 3.73 | 0.959 |

| BRkNNa-ls | 6.47 | 0.137 | - | 1.23 | 2.05 | 3.73 | 3.73 | 3.73 | 0.925 |

| BRkNNa-mp | 0.788 | 0.992 | 1.000 | - | 5.52 | 3.73 | 3.73 | 3.73 | 0.998 |

| MLKNN | 1.000 | 1.000 | 1.000 | 1.000 | - | 3.73 | 3.73 | 3.73 | 1.000 |

| MLKNN-dsl | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | - | 0.984 | 2.63 | 1.000 |

| MLKNN-ls | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.017 | - | 1.03 | 1.000 |

| MLKNN-mp | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | - | 1.000 |

| MLHiKNN | 0.002 | 0.043 | 0.078 | 0.002 | 1.14 | 3.73 | 3.73 | 3.73 | - |

| Ranking Loss | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| BRkNNa | - | 0.999 | 1.000 | 0.315 | 0.842 | 2.61 | 3.28 | 2.05 | 1.000 |

| BRkNNa-dsl | 0.001 | - | 0.593 | 0.002 | 0.558 | 1.23 | 1.23 | 1.23 | 1.000 |

| BRkNNa-ls | 3.37 | 0.416 | - | 5.10 | 0.478 | 7.08 | 7.08 | 3.73 | 1.000 |

| BRkNNa-mp | 0.693 | 0.998 | 1.000 | - | 0.836 | 7.08 | 7.08 | 7.08 | 1.000 |

| MLKNN | 0.164 | 0.451 | 0.531 | 0.169 | - | 3.73 | 3.73 | 3.73 | 1.000 |

| MLKNN-dsl | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | - | 0.984 | 2.63 | 1.000 |

| MLKNN-ls | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.017 | - | 1.14 | 1.000 |

| MLKNN-mp | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | - | 1.000 |

| MLHiKNN | 3.73 | 1.86 | 3.73 | 3.73 | 7.71 | 3.73 | 3.73 | 3.73 | - |

| F1 Macro | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| BRkNNa | - | 0.976 | 1.000 | 0.066 | 1.000 | 1.000 | 1.000 | 0.407 | 1.000 |

| BRkNNa-dsl | 0.025 | - | 1.000 | 0.016 | 1.000 | 1.000 | 1.000 | 0.442 | 1.000 |

| BRkNNa-ls | 4.66 | 2.00 | - | 3.28 | 1.000 | 1.000 | 1.000 | 0.284 | 1.000 |

| BRkNNa-mp | 0.937 | 0.984 | 1.000 | - | 1.000 | 1.000 | 1.000 | 0.513 | 1.000 |

| MLKNN | 2.61 | 3.73 | 2.61 | 1.86 | - | 5.22 | 1.12 | 1.86 | 0.999 |

| MLKNN-dsl | 4.10 | 1.86 | 3.28 | 7.08 | 1.000 | - | 5.29 | 6.30 | 1.000 |

| MLKNN-ls | 8.83 | 3.28 | 2.76 | 6.30 | 1.000 | 1.000 | - | 2.76 | 1.000 |

| MLKNN-mp | 0.602 | 0.567 | 0.724 | 0.496 | 1.000 | 1.000 | 1.000 | - | 1.000 |

| MLHiKNN | 2.61 | 1.12 | 2.61 | 1.12 | 0.001 | 1.60 | 1.86 | 1.12 | - |

| F1 Micro | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| BRkNNa | - | 0.972 | 1.000 | 0.037 | 1.000 | 1.000 | 1.000 | 0.299 | 1.000 |

| BRkNNa-dsl | 0.030 | - | 1.000 | 0.010 | 1.000 | 1.000 | 1.000 | 0.315 | 1.000 |

| BRkNNa-ls | 5.32 | 1.37 | - | 5.10 | 1.000 | 1.000 | 0.999 | 0.066 | 1.000 |

| BRkNNa-mp | 0.965 | 0.990 | 1.000 | - | 1.000 | 1.000 | 1.000 | 0.433 | 1.000 |

| MLKNN | 2.61 | 3.73 | 1.60 | 7.08 | - | 1.83 | 2.00 | 6.30 | 0.965 |

| MLKNN-dsl | 3.60 | 3.37 | 9.68 | 9.42 | 1.000 | - | 1.09 | 2.61 | 1.000 |

| MLKNN-ls | 2.92 | 1.09 | 9.34 | 2.11 | 1.000 | 1.000 | - | 2.76 | 1.000 |

| MLKNN-mp | 0.709 | 0.693 | 0.937 | 0.576 | 1.000 | 1.000 | 1.000 | - | 1.000 |

| MLHiKNN | 1.67 | 5.22 | 1.60 | 7.08 | 0.037 | 3.73 | 7.08 | 3.73 | - |

| Hamming Loss | BRkNNa | BRkNNa-dsl | BRkNNa-ls | BRkNNa-mp | MLKNN | MLKNN-dsl | MLKNN-ls | MLKNN-mp | MLHiKNN |

|---|---|---|---|---|---|---|---|---|---|

| BRkNNa | - | 0.994 | 1.000 | 0.105 | 0.940 | 0.997 | 0.973 | 0.014 | 1.000 |

| BRkNNa-dsl | 0.007 | - | 0.955 | 0.008 | 0.903 | 0.982 | 0.794 | 0.007 | 1.000 |

| BRkNNa-ls | 1.91 | 0.047 | - | 3.37 | 0.261 | 0.836 | 0.323 | 2.42 | 1.000 |

| BRkNNa-mp | 0.899 | 0.992 | 1.000 | - | 0.877 | 0.996 | 0.975 | 0.010 | 1.000 |

| MLKNN | 0.063 | 0.101 | 0.746 | 0.127 | - | 0.998 | 0.907 | 0.030 | 1.000 |

| MLKNN-dsl | 0.004 | 0.019 | 0.169 | 0.004 | 0.002 | - | 0.009 | 5.52 | 1.000 |

| MLKNN-ls | 0.028 | 0.212 | 0.685 | 0.027 | 0.097 | 0.992 | - | 1.09 | 1.000 |

| MLKNN-mp | 0.987 | 0.994 | 1.000 | 0.990 | 0.972 | 1.000 | 1.000 | - | 1.000 |

| MLHiKNN | 7.08 | 1.86 | 5.52 | 9.42 | 2.38 | 2.00 | 4.10 | 2.05 | - |

Appendix D. Results of Ablation Analysis Experiments

| Dataset (AUC Micro) | MLHiKNN-g1 | MLHiKNN-g0 | MLHiKNN-h1 | MLHiKNN-d1 | MLHiKNN-fo | MLHiKNN |

|---|---|---|---|---|---|---|

| birds | 0.737(5) ± 0.022 | 0.743(4) ± 0.025 | 0.744(3) ± 0.018 | 0.737(6) ± 0.015 | 0.784(1) ± 0.019 • | 0.746(2) ± 0.019 |

| CAL500 | 0.751(5) ± 0.007 | 0.722(6) ± 0.010 | 0.759(2) ± 0.004 | 0.758(3) ± 0.003 | 0.764(1) ± 0.002 • | 0.757(4) ± 0.004 |

| emotions | 0.862(1) ± 0.005 • | 0.854(6) ± 0.006 | 0.862(2) ± 0.005 | 0.859(4) ± 0.007 | 0.858(5) ± 0.006 | 0.862(3) ± 0.005 |

| genbase | 0.995(6) ± 0.004 | 0.996(5) ± 0.004 | 0.997(3) ± 0.003 | 0.997(2) ± 0.004 | 0.999(1) ± 0.001 • | 0.996(4) ± 0.004 |

| LLOG | 0.764(6) ± 0.005 | 0.767(5) ± 0.002 | 0.767(2) ± 0.002 | 0.767(4) ± 0.002 | 0.798(1) ± 0.004 • | 0.767(3) ± 0.002 |

| enron | 0.859(4) ± 0.003 | 0.847(6) ± 0.005 | 0.857(5) ± 0.002 | 0.859(3) ± 0.004 | 0.868(1) ± 0.004 • | 0.860(2) ± 0.002 |

| scene | 0.950(1) ± 0.003 • | 0.948(6) ± 0.002 | 0.948(4) ± 0.002 | 0.949(3) ± 0.002 | 0.948(5) ± 0.002 | 0.950(2) ± 0.002 |

| yeast | 0.841(4) ± 0.004 | 0.829(6) ± 0.005 | 0.842(3) ± 0.004 | 0.840(5) ± 0.003 | 0.843(1) ± 0.003 • | 0.842(2) ± 0.004 |

| Slashdot | 0.938(6) ± 0.006 | 0.944(4) ± 0.003 | 0.944(5) ± 0.004 | 0.948(2) ± 0.004 | 0.949(1) ± 0.005 • | 0.945(3) ± 0.004 |

| corel5k | 0.791(5) ± 0.004 | 0.781(6) ± 0.005 | 0.796(3) ± 0.003 | 0.795(4) ± 0.003 | 0.802(1) ± 0.004 • | 0.796(2) ± 0.004 |

| rcv1subset1 | 0.859(6) ± 0.007 | 0.905(2) ± 0.003 | 0.871(5) ± 0.008 | 0.872(4) ± 0.009 | 0.931(1) ± 0.002 • | 0.874(3) ± 0.009 |

| rcv1subset2 | 0.843(6) ± 0.008 | 0.906(2) ± 0.004 | 0.863(5) ± 0.008 | 0.870(3) ± 0.012 | 0.933(1) ± 0.004 • | 0.865(4) ± 0.010 |

| rcv1subset3 | 0.835(6) ± 0.006 | 0.897(2) ± 0.005 | 0.860(5) ± 0.009 | 0.864(3) ± 0.008 | 0.930(1) ± 0.002 • | 0.861(4) ± 0.007 |

| rcv1subset4 | 0.866(6) ± 0.007 | 0.917(2) ± 0.003 | 0.886(4) ± 0.005 | 0.889(3) ± 0.009 | 0.938(1) ± 0.002 • | 0.884(5) ± 0.010 |

| rcv1subset5 | 0.847(6) ± 0.007 | 0.902(2) ± 0.003 | 0.866(5) ± 0.008 | 0.871(3) ± 0.007 | 0.931(1) ± 0.002 • | 0.868(4) ± 0.009 |

| bibtex | 0.820(6) ± 0.008 | 0.869(2) ± 0.004 | 0.860(4) ± 0.006 | 0.859(5) ± 0.005 | 0.883(1) ± 0.004 • | 0.863(3) ± 0.006 |

| Arts | 0.838(6) ± 0.003 | 0.849(4) ± 0.003 | 0.851(3) ± 0.003 | 0.851(2) ± 0.003 | 0.849(5) ± 0.003 | 0.851(1) ± 0.003 • |

| Health | 0.912(6) ± 0.002 | 0.916(5) ± 0.002 | 0.918(3) ± 0.002 | 0.918(2) ± 0.002 | 0.917(4) ± 0.002 | 0.919(1) ± 0.002 • |

| Business | 0.939(6) ± 0.002 | 0.946(4) ± 0.002 | 0.947(2) ± 0.001 | 0.948(1) ± 0.001 • | 0.945(5) ± 0.001 | 0.947(3) ± 0.001 |

| Education | 0.899(6) ± 0.002 | 0.905(4) ± 0.002 | 0.906(3) ± 0.002 | 0.906(2) ± 0.002 | 0.903(5) ± 0.002 | 0.906(1) ± 0.002 • |

| Computers | 0.884(6) ± 0.005 | 0.892(4) ± 0.004 | 0.895(3) ± 0.003 | 0.895(2) ± 0.003 | 0.891(5) ± 0.002 | 0.896(1) ± 0.003 • |

| Entertainment | 0.880(6) ± 0.001 | 0.889(4) ± 0.002 | 0.890(3) ± 0.002 | 0.890(2) ± 0.002 | 0.886(5) ± 0.002 | 0.891(1) ± 0.002 • |

| Recreation | 0.854(6) ± 0.004 | 0.873(3) ± 0.004 | 0.873(4) ± 0.004 | 0.875(1) ± 0.004 • | 0.868(5) ± 0.004 | 0.874(2) ± 0.004 |

| Society | 0.861(5) ± 0.002 | 0.864(4) ± 0.004 | 0.868(2) ± 0.002 | 0.868(3) ± 0.002 | 0.860(6) ± 0.002 | 0.869(1) ± 0.002 • |

| eurlex-dc-l | 0.904(6) ± 0.005 | 0.915(3) ± 0.004 | 0.919(1) ± 0.005 • | 0.905(5) ± 0.006 | 0.909(4) ± 0.003 | 0.919(2) ± 0.005 |

| eurlex-sm | 0.948(3) ± 0.001 | 0.945(5) ± 0.002 | 0.952(2) ± 0.002 | 0.946(4) ± 0.002 | 0.943(6) ± 0.002 | 0.952(1) ± 0.002 • |

| tmc2007-500 | 0.956(3) ± 0.001 | 0.949(6) ± 0.001 | 0.956(4) ± 0.001 | 0.952(5) ± 0.001 | 0.956(2) ± 0.001 | 0.958(1) ± 0.001 • |

| mediamill | 0.958(1) ± 0.001 • | 0.948(6) ± 0.001 | 0.957(3) ± 0.000 | 0.951(5) ± 0.001 | 0.954(4) ± 0.001 | 0.957(2) ± 0.001 |

| average rank | 4.96 | 4.21 | 3.32 | 3.25 | 2.86 | 2.39 |

| win/tie/loss | 19/23/98 | 42/22/76 | 55/46/39 | 54/41/45 | 81/13/46 | 76/41/23 |
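Each cell in these tables has the form `mean(rank) ± std`, and the "average rank" row is the mean of the parenthesized ranks down a column. The sketch below illustrates that bookkeeping for the MLHiKNN column of the AUC Micro table. The helper name and regular expression are hypothetical, and the rank list was transcribed by hand from the rows above.

```python
import re

# Matches the parenthesized integer rank inside a cell such as "0.746(2) ± 0.019".
RANK_PATTERN = re.compile(r"\((\d+)\)")


def rank_of(cell):
    """Extract the per-dataset rank from a 'mean(rank) ± std' table cell."""
    match = RANK_PATTERN.search(cell)
    if match is None:
        raise ValueError(f"no rank found in cell: {cell!r}")
    return int(match.group(1))


# A few cells copied from the MLHiKNN column (birds, CAL500, Arts).
sample_cells = ["0.746(2) ± 0.019", "0.757(4) ± 0.004", "0.851(1) ± 0.003 •"]
print([rank_of(c) for c in sample_cells])

# Ranks of MLHiKNN on all 28 datasets, in table order (birds ... mediamill).
mlhiknn_ranks = [2, 4, 3, 4, 3, 2, 2, 2, 3, 2, 3, 4, 4, 5, 4, 3,
                 1, 1, 3, 1, 1, 1, 2, 1, 2, 1, 1, 2]
average_rank = sum(mlhiknn_ranks) / len(mlhiknn_ranks)
print(f"average rank: {average_rank:.2f}")
```

The computed mean agrees with the 2.39 reported for MLHiKNN in the "average rank" row. The win/tie/loss counts are not reproduced here because they depend on a per-dataset significance test that this appendix does not describe.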

| Dataset (Ranking Loss) | MLHiKNN-g1 | MLHiKNN-g0 | MLHiKNN-h1 | MLHiKNN-d1 | MLHiKNN-fo | MLHiKNN |

|---|---|---|---|---|---|---|

| birds | 0.269(6) ± 0.025 | 0.253(3) ± 0.029 | 0.256(4) ± 0.019 | 0.262(5) ± 0.018 | 0.217(1) ± 0.023 • | 0.251(2) ± 0.020 |

| CAL500 | 0.246(5) ± 0.008 | 0.275(6) ± 0.010 | 0.239(2) ± 0.004 | 0.239(3) ± 0.003 | 0.233(1) ± 0.002 • | 0.240(4) ± 0.005 |

| emotions | 0.154(3) ± 0.007 | 0.160(5) ± 0.007 | 0.152(1) ± 0.007 • | 0.156(4) ± 0.010 | 0.161(6) ± 0.009 | 0.153(2) ± 0.007 |

| genbase | 0.005(5) ± 0.003 | 0.006(6) ± 0.003 | 0.004(1) ± 0.002 • | 0.004(2) ± 0.003 | 0.004(3) ± 0.002 | 0.004(4) ± 0.003 |

| LLOG | 0.190(5) ± 0.005 | 0.187(4) ± 0.003 | 0.187(1) ± 0.002 • | 0.187(3) ± 0.002 | 0.195(6) ± 0.003 | 0.187(2) ± 0.002 |

| enron | 0.136(4) ± 0.004 | 0.143(6) ± 0.005 | 0.139(5) ± 0.003 | 0.135(3) ± 0.005 | 0.124(1) ± 0.003 • | 0.135(2) ± 0.003 |

| scene | 0.071(1) ± 0.004 • | 0.074(5) ± 0.002 | 0.073(4) ± 0.003 | 0.072(3) ± 0.003 | 0.074(6) ± 0.003 | 0.071(2) ± 0.003 |

| yeast | 0.168(2) ± 0.003 | 0.180(6) ± 0.004 | 0.168(4) ± 0.003 | 0.170(5) ± 0.003 | 0.167(1) ± 0.003 • | 0.168(3) ± 0.003 |

| Slashdot | 0.051(6) ± 0.005 | 0.048(4) ± 0.003 | 0.048(5) ± 0.004 | 0.044(2) ± 0.004 | 0.042(1) ± 0.005 • | 0.047(3) ± 0.005 |

| corel5k | 0.211(5) ± 0.004 | 0.218(6) ± 0.005 | 0.206(3) ± 0.004 | 0.207(4) ± 0.004 | 0.173(1) ± 0.004 • | 0.206(2) ± 0.004 |

| rcv1subset1 | 0.132(6) ± 0.007 | 0.087(2) ± 0.003 | 0.121(5) ± 0.007 | 0.119(4) ± 0.008 | 0.058(1) ± 0.001 • | 0.118(3) ± 0.008 |

| rcv1subset2 | 0.139(6) ± 0.007 | 0.081(2) ± 0.004 | 0.120(5) ± 0.008 | 0.115(3) ± 0.010 | 0.054(1) ± 0.002 • | 0.118(4) ± 0.009 |

| rcv1subset3 | 0.145(6) ± 0.004 | 0.089(2) ± 0.003 | 0.123(5) ± 0.007 | 0.120(3) ± 0.008 | 0.056(1) ± 0.001 • | 0.122(4) ± 0.005 |

| rcv1subset4 | 0.113(6) ± 0.006 | 0.071(2) ± 0.002 | 0.099(4) ± 0.005 | 0.095(3) ± 0.008 | 0.048(1) ± 0.002 • | 0.100(5) ± 0.009 |

| rcv1subset5 | 0.135(6) ± 0.008 | 0.087(2) ± 0.002 | 0.118(5) ± 0.008 | 0.114(3) ± 0.006 | 0.055(1) ± 0.001 • | 0.117(4) ± 0.009 |

| bibtex | 0.184(6) ± 0.006 | 0.136(2) ± 0.004 | 0.150(4) ± 0.004 | 0.150(5) ± 0.003 | 0.092(1) ± 0.002 • | 0.147(3) ± 0.004 |

| Arts | 0.140(6) ± 0.003 | 0.131(5) ± 0.003 | 0.130(4) ± 0.003 | 0.129(3) ± 0.003 | 0.128(1) ± 0.002 • | 0.129(2) ± 0.003 |

| Health | 0.077(6) ± 0.001 | 0.072(5) ± 0.002 | 0.071(4) ± 0.002 | 0.070(2) ± 0.002 | 0.070(3) ± 0.002 | 0.070(1) ± 0.002 • |

| Business | 0.042(6) ± 0.001 | 0.037(4) ± 0.001 | 0.037(2) ± 0.001 | 0.036(1) ± 0.001 • | 0.038(5) ± 0.001 | 0.037(3) ± 0.001 |

| Education | 0.095(6) ± 0.002 | 0.091(5) ± 0.002 | 0.090(3) ± 0.002 | 0.089(1) ± 0.002 • | 0.090(4) ± 0.002 | 0.089(2) ± 0.002 |

| Computers | 0.093(6) ± 0.003 | 0.089(5) ± 0.002 | 0.086(2) ± 0.002 | 0.087(3) ± 0.002 | 0.087(4) ± 0.002 | 0.086(1) ± 0.002 • |

| Entertainment | 0.119(6) ± 0.002 | 0.114(4) ± 0.003 | 0.113(3) ± 0.003 | 0.112(2) ± 0.002 | 0.114(5) ± 0.002 | 0.111(1) ± 0.002 • |

| Recreation | 0.129(6) ± 0.004 | 0.116(3) ± 0.004 | 0.117(4) ± 0.004 | 0.115(1) ± 0.004 • | 0.118(5) ± 0.004 | 0.115(2) ± 0.004 |

| Society | 0.112(6) ± 0.003 | 0.110(4) ± 0.004 | 0.106(2) ± 0.002 | 0.107(3) ± 0.002 | 0.110(5) ± 0.002 | 0.105(1) ± 0.002 • |

| eurlex-dc-l | 0.092(6) ± 0.004 | 0.081(3) ± 0.004 | 0.078(1) ± 0.005 • | 0.090(4) ± 0.005 | 0.090(5) ± 0.004 | 0.078(2) ± 0.005 |

| eurlex-sm | 0.055(3) ± 0.001 | 0.056(5) ± 0.002 | 0.050(2) ± 0.002 | 0.056(6) ± 0.002 | 0.056(4) ± 0.001 | 0.050(1) ± 0.002 • |

| tmc2007-500 | 0.048(5) ± 0.001 | 0.050(6) ± 0.001 | 0.046(2) ± 0.001 | 0.047(4) ± 0.001 | 0.047(3) ± 0.001 | 0.044(1) ± 0.001 • |

| mediamill | 0.037(1) ± 0.001 • | 0.044(6) ± 0.001 | 0.038(3) ± 0.001 | 0.043(5) ± 0.000 | 0.040(4) ± 0.000 | 0.038(2) ± 0.001 |

| average rank | 5.04 | 4.21 | 3.21 | 3.21 | 2.89 | 2.43 |

| win/tie/loss | 17/23/100 | 40/20/80 | 53/46/41 | 56/42/42 | 78/23/39 | 78/42/20 |

| Dataset (F1 Macro) | MLHiKNN-g1 | MLHiKNN-g0 | MLHiKNN-h1 | MLHiKNN-d1 | MLHiKNN-fo | MLHiKNN |

|---|---|---|---|---|---|---|

| birds | 0.217(5) ± 0.074 | 0.348(1) ± 0.043 • | 0.269(2) ± 0.052 | 0.236(4) ± 0.066 | 0.161(6) ± 0.018 | 0.258(3) ± 0.069 |

| CAL500 | 0.071(6) ± 0.004 | 0.129(1) ± 0.006 • | 0.081(4) ± 0.004 | 0.083(2) ± 0.004 | 0.075(5) ± 0.003 | 0.083(3) ± 0.003 |

| emotions | 0.641(5) ± 0.012 | 0.643(4) ± 0.017 | 0.646(2) ± 0.017 | 0.645(3) ± 0.018 | 0.617(6) ± 0.010 | 0.651(1) ± 0.013 • |

| genbase | 0.969(2) ± 0.014 | 0.927(6) ± 0.018 | 0.957(3) ± 0.019 | 0.970(1) ± 0.013 • | 0.950(5) ± 0.008 | 0.954(4) ± 0.017 |

| LLOG | 0.091(6) ± 0.016 | 0.094(4) ± 0.013 | 0.102(2) ± 0.013 | 0.094(5) ± 0.018 | 0.114(1) ± 0.015 • | 0.097(3) ± 0.016 |